3. Research Gaps & Objectives

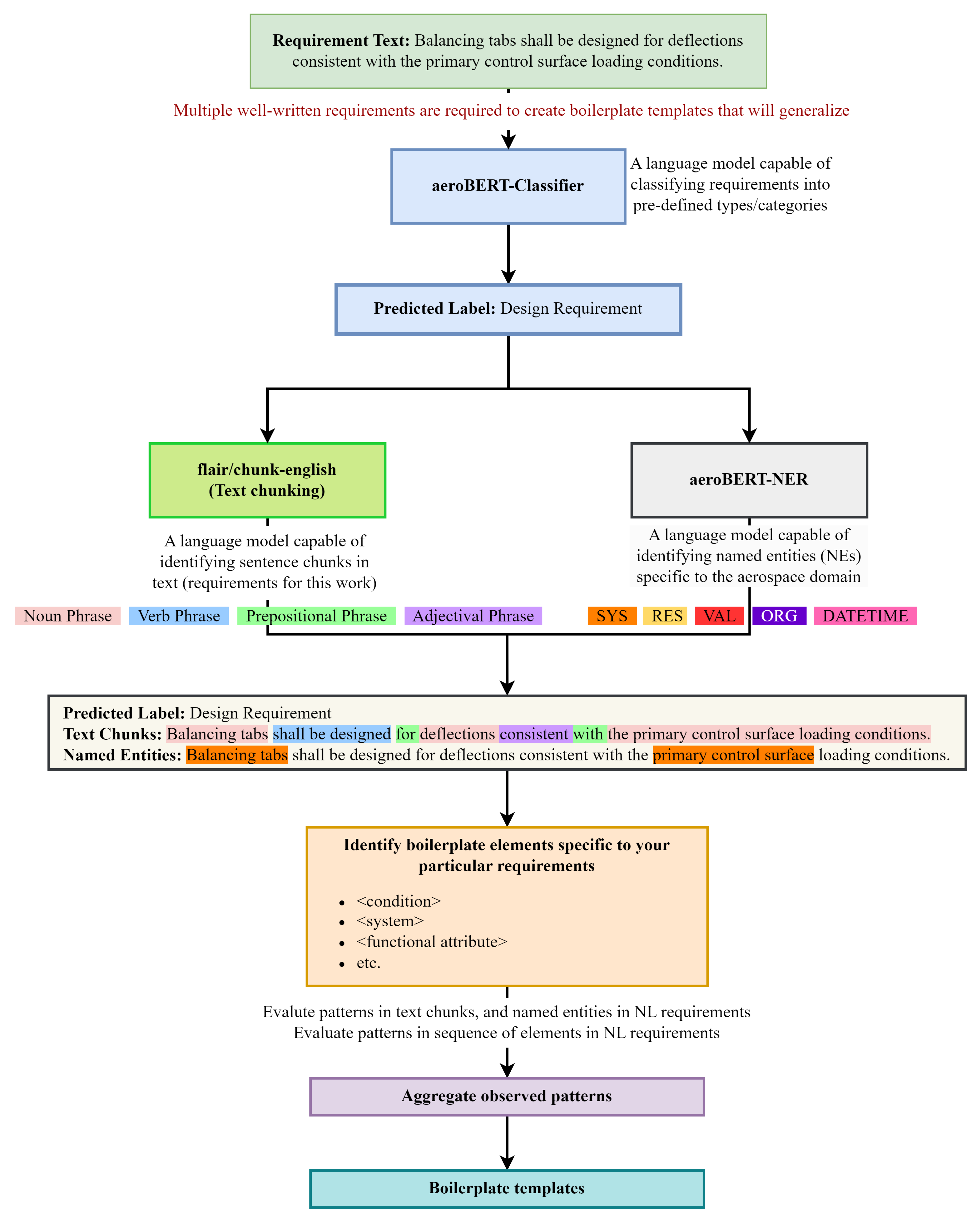

As mentioned, a divide exists between NLP4RE research and its industrial adoption, with most research in this domain limited to software engineering. This work instead focuses on the aerospace domain, specifically on applying NLP to analyze and standardize aerospace requirements in support of the shift to model-based approaches and MBSE. Two LMs with aerospace-specific domain knowledge (aeroBERT-Classifier [24] and aeroBERT-NER [23]), previously developed by the authors, were used in this work to establish a methodology (or pipeline) for standardizing requirements by first classifying them and then extracting named entities of interest. The extracted entities were used to populate a requirements table in an Excel spreadsheet, which is expected to reduce the time and cost of replicating this table in an MBSE environment compared with a completely manual process.

Lastly, a text chunking LM (flair/chunk-english [25]) along with aeroBERT-NER was used to obtain text chunks and named entities. These were used to identify patterns in each type of NL requirements considered. These patterns eventually led to the definition of boilerplates (templates) aimed at facilitating the future standardization of requirements.

Summary

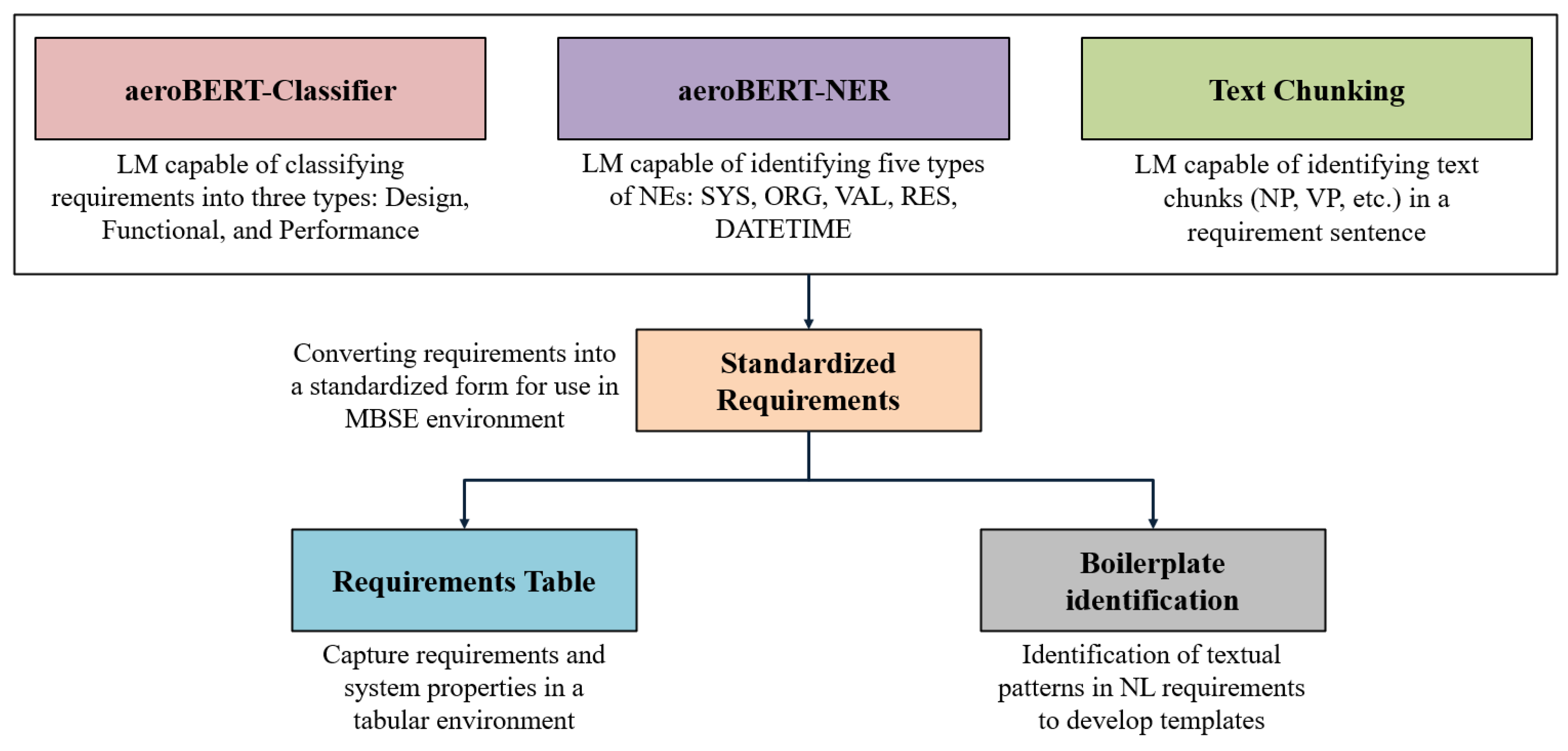

Figure 10 shows the pipeline consisting of different LMs. Merging their outputs enables the conversion of NL requirements into semi-machine-readable (or standardized) requirements. To make it clear how these refactored requirements should be read, boilerplate templates are proposed with a uniform ordering of key elements in a given requirement.

The resulting standardized requirements support the creation of system models using the principles of MBSE. This effort achieves this through the following:

- Creation of requirements tables: The proposed requirements table contains columns populated by outputs obtained from aeroBERT-Classifier [24] and aeroBERT-NER [23]. This table can further aid in the creation of model-based (e.g., SysML) requirement objects by automatically extracting relevant words (system names, resources, quantities, etc.) from free-form NL requirements.

- Identification and creation of requirements boilerplates: Distinct requirement types have unique linguistic patterns that set them apart from each other. “Patterns” in this case means the order of types of tags (sentence chunks and NEs) in sequences representing the original requirement.

To tailor the boilerplate templates to each requirement type quickly and efficiently, this work adopts an approach based on dynamically identified syntactic patterns in requirements, which is more adaptive than rule-based alternatives. This study leverages language models to detect the linguistic patterns in requirements, which in turn aid in creating bespoke boilerplates.

The aeroBERT-Classifier is used for requirement classification, aeroBERT-NER for identifying named entities relevant to the aerospace domain, and flair/chunk-english for extracting the text chunks present in each requirement type. The extracted named entities and text chunks are analyzed to identify the different elements present in a requirement sentence, and their presence and order in a requirement are used to determine distinct boilerplate templates. The templates identified might resemble the following formats:

- (a) <Each/The/All> <system/systems> shall <action>.

- (b) <Each/The/All> <system/systems> shall allow <entity> to be <state> for at least <value>.

In this study, well-crafted requirements are used to identify boilerplate templates, which can be utilized by inexperienced engineers to establish uniformity in their requirement compositions from the outset.
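As an illustration of how templates such as (a) and (b) could be used to check whether a requirement conforms to a boilerplate, the following minimal sketch encodes the two formats as regular expressions. The regular expressions, the function name `match_boilerplate`, and the example sentences in the usage note are assumptions made for illustration; they are not the boilerplates or the tooling produced in this work.

```python
import re

# Hypothetical regexes mirroring formats (a) and (b); the slot patterns are
# illustrative assumptions, not the boilerplates derived in the paper.
TEMPLATE_B = re.compile(
    r"^(Each|The|All) (?P<system>.+?) shall allow (?P<entity>.+?) "
    r"to be (?P<state>.+?) for at least (?P<value>.+?)\.$"
)
TEMPLATE_A = re.compile(
    r"^(Each|The|All) (?P<system>.+?) shall (?P<action>.+?)\.$"
)


def match_boilerplate(requirement):
    """Return (template_name, slots) for the first matching template, else None."""
    text = requirement.strip()
    # Try the more specific format (b) first, because format (a) also
    # matches every sentence that fits format (b).
    for name, pattern in (("b", TEMPLATE_B), ("a", TEMPLATE_A)):
        match = pattern.match(text)
        if match:
            return name, match.groupdict()
    return None
```

For a made-up sentence such as "The cabin door shall allow the latch to be held open for at least 10 seconds.", the function returns template "b" with the slots `system`, `entity`, `state`, and `value` filled in; a sentence without "shall" returns `None`, flagging it for manual review.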

4. Methodology

This work uses a collection of 310 requirements (the list of requirements can be found in [24]) categorized into design, functional, and performance requirements (Table 3). Since requirements texts are almost always proprietary, all the requirements used for this work were obtained from Parts 23 and 25 of Title 14 of the Code of Federal Regulations (CFR) [52]. While these requirements are mostly used for verifying compliance during certification, they have been used in this work to establish a methodology for converting NL requirements into semi-machine-readable requirements.

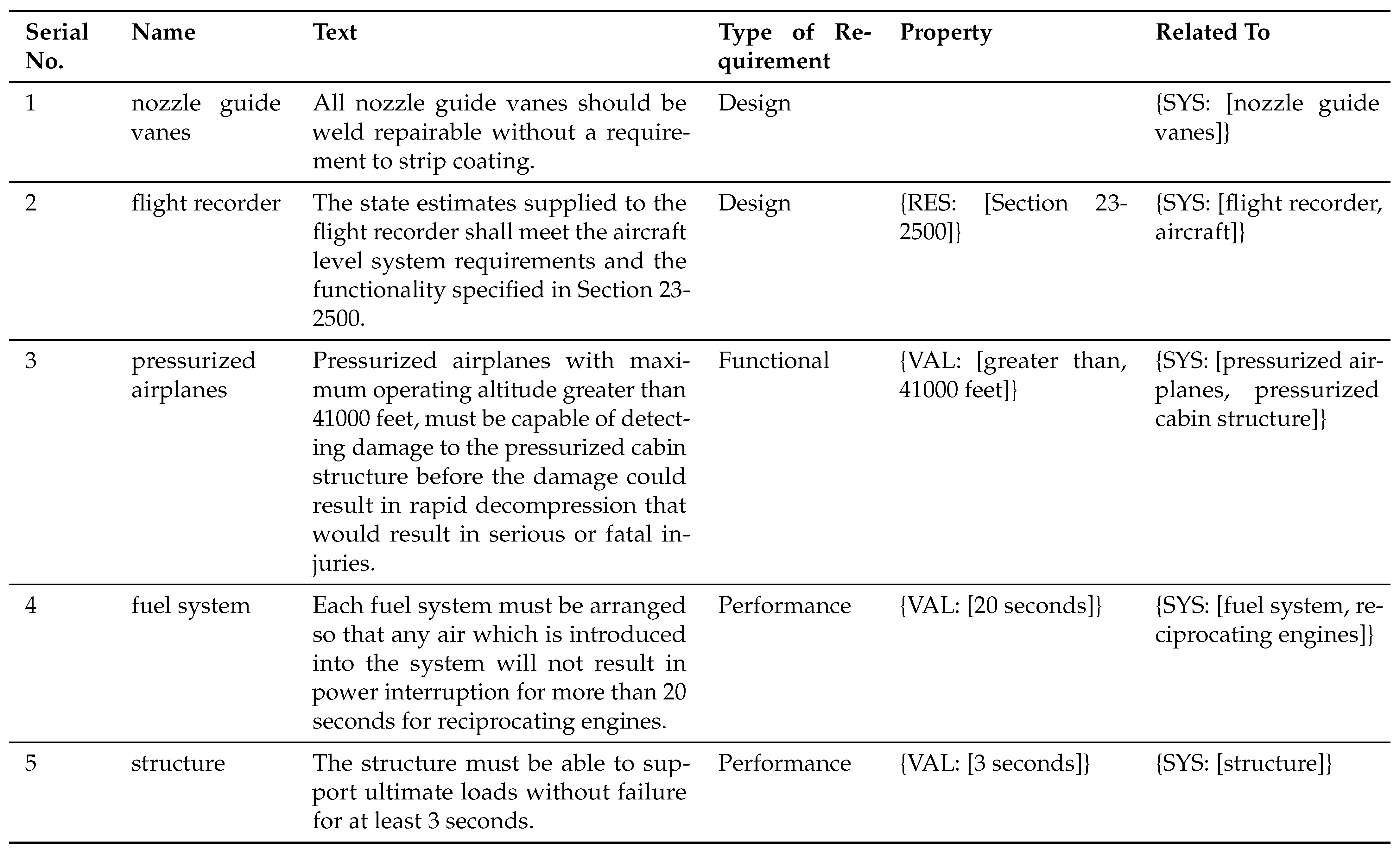

4.1. Creating Requirement Tables

Requirement tables are helpful for filtering requirements of interest and performing requirements analysis. For the purpose of this work, five columns were chosen for the requirement table, as shown in Table 4. The Name column was populated by the system name (SYS named entity) identified by aeroBERT-NER [23]. The Type of Requirement column was populated after the requirement was classified as a design, functional, or performance requirement by aeroBERT-Classifier [24]. The Property column was populated by all the named entities identified by aeroBERT-NER [23] (except for SYS). Lastly, the Related to column was populated by system names identified by aeroBERT-NER.

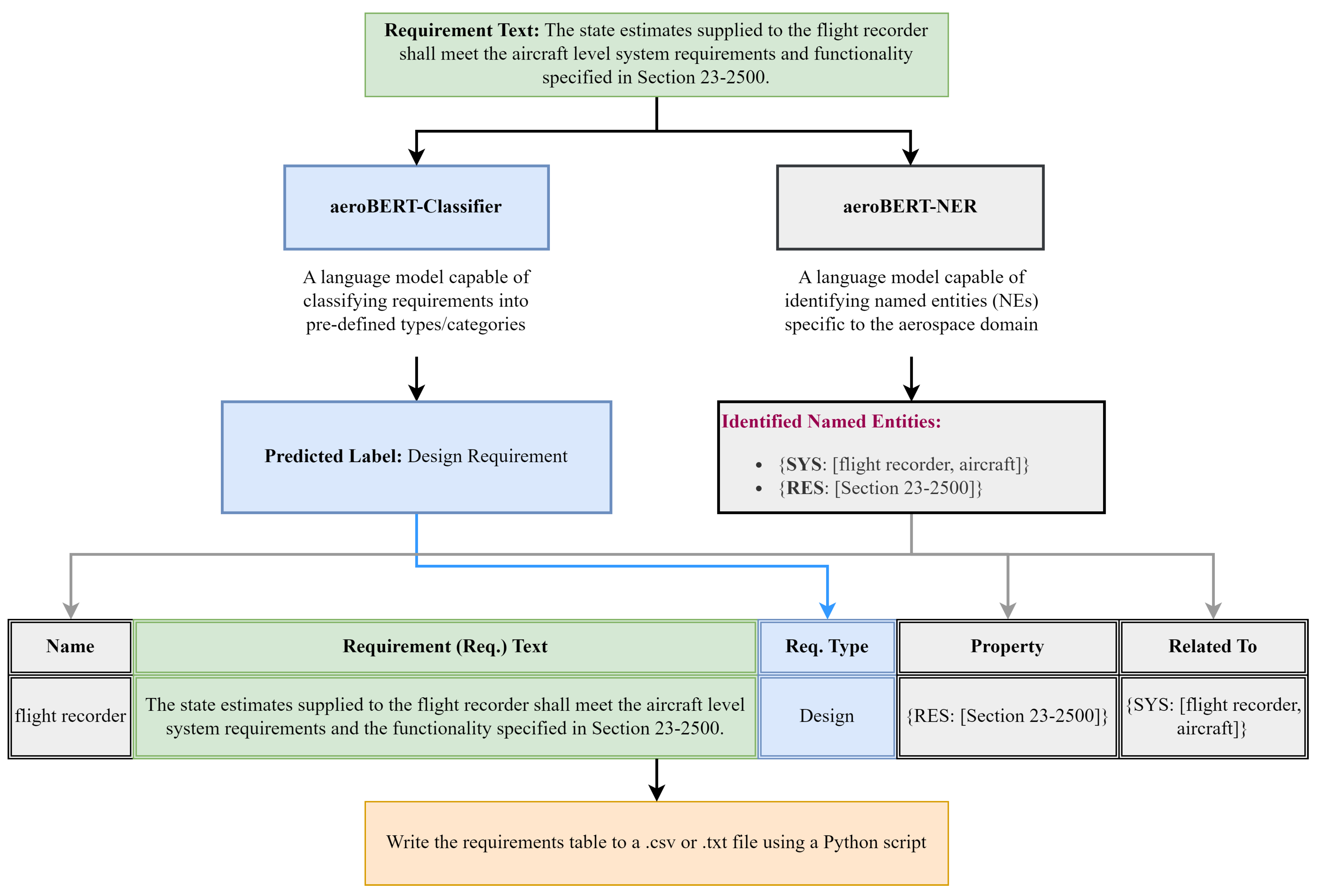

The flowchart in Figure 11 illustrates the procedure for generating a requirements table for a single requirement utilizing aeroBERT-NER and aeroBERT-Classifier. In particular, it shows how the LMs are used to extract information from the requirement and how this information is employed to populate various columns of the requirements table. While this process is demonstrated on a single requirement, it can be used on multiple requirements simultaneously.
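The table-population step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the class label and the entity list are hand-written placeholders rather than real model outputs, the fifth column is assumed to hold the requirement text, and the split between Name (first SYS entity) and Related to (remaining SYS entities) is an assumption made here. The function names `build_row` and `rows_to_csv` are hypothetical.

```python
import csv
import io

# Four column names come from the text; "Text" is an assumed fifth column.
COLUMNS = ["Name", "Text", "Type of Requirement", "Property", "Related to"]


def build_row(text, req_type, entities):
    """Build one table row from a class label and (span, tag) entity pairs."""
    systems = [span for span, tag in entities if tag == "SYS"]
    others = [f"{span} ({tag})" for span, tag in entities if tag != "SYS"]
    return {
        "Name": systems[0] if systems else "",   # assumption: first SYS entity
        "Text": text,
        "Type of Requirement": req_type,
        "Property": "; ".join(others),           # every non-SYS entity
        "Related to": "; ".join(systems[1:]),    # assumption: remaining SYS entities
    }


def rows_to_csv(rows):
    """Serialize rows to CSV text that a spreadsheet tool can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hand-written placeholders standing in for classifier and NER outputs.
requirement = (
    "The state estimates supplied to the flight recorder shall meet the aircraft "
    "level system requirements and functionality specified in Section 23-2500"
)
row = build_row(
    requirement,
    req_type="design",  # placeholder label, not a reported classification
    entities=[
        ("flight recorder", "SYS"),
        ("aircraft", "SYS"),
        ("Section 23-2500", "RES"),
    ],
)
```

Because `build_row` works on one requirement at a time, processing many requirements amounts to calling it in a loop and passing the resulting list to `rows_to_csv`.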

4.2. Observation and Analysis of Linguistic Patterns in Aerospace Requirements for Boilerplate Creation

This section discusses the process of identifying linguistic patterns in the requirements after they have been processed by flair/chunk-english and aeroBERT-NER. Discovered patterns will be discussed here as well. The next paragraphs, in particular, describe the pattern mining and standardization task for NL requirements analysis.

All the requirements were first classified using aeroBERT-Classifier [24]. This was done to examine whether different types of requirements exhibit different textual patterns in terms of sentence chunks (Table 2). The flair/chunk-english language model [25] was used for text chunking, and aeroBERT-NER [23] was used to identify named entities.
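To make the notion of a sentence chunk sequence concrete, the sketch below groups per-token tags into chunks. It assumes BIO-style tags (for example, B-NP, I-NP, O) as input; the tokens and tags in the usage note are hand-written for illustration and are not output from flair/chunk-english, and the function name `bio_to_chunks` is hypothetical.

```python
def bio_to_chunks(tokens, tags):
    """Group tokens into (chunk_type, text) pairs from BIO tags such as B-NP/I-NP/O."""
    chunks = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        prefix, _, label = tag.partition("-")
        # An I- tag that continues the open chunk extends it.
        if prefix == "I" and label == current_type:
            current_tokens.append(token)
            continue
        # Otherwise close the open chunk, if any.
        if current_tokens:
            chunks.append((current_type, " ".join(current_tokens)))
        if prefix in ("B", "I"):
            # Start a new chunk (a stray I- tag is treated leniently as a start).
            current_type, current_tokens = label, [token]
        else:
            # "O" tokens belong to no chunk.
            current_type, current_tokens = None, []
    if current_tokens:
        chunks.append((current_type, " ".join(current_tokens)))
    return chunks
```

For the made-up token list `["The", "airplane", "shall", "be", "designed", "for", "safe", "operation", "."]` with tags `["B-NP", "I-NP", "B-VP", "I-VP", "I-VP", "B-PP", "B-NP", "I-NP", "O"]`, the function yields the chunk sequence NP, VP, PP, NP, which is the kind of sequence analyzed in the remainder of this section.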

The details regarding the textual patterns identified in requirements belonging to various types are described below.

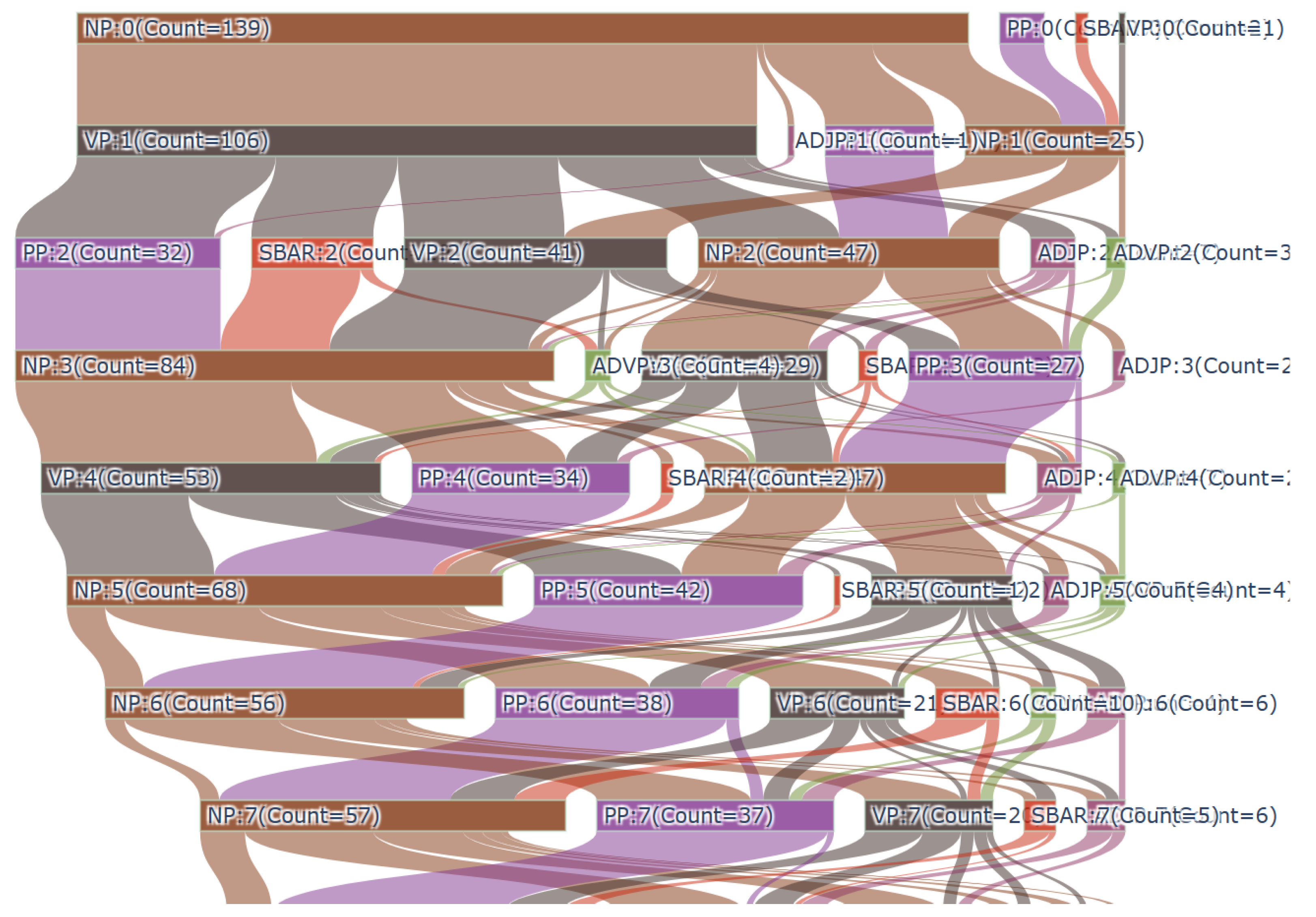

Design Requirements

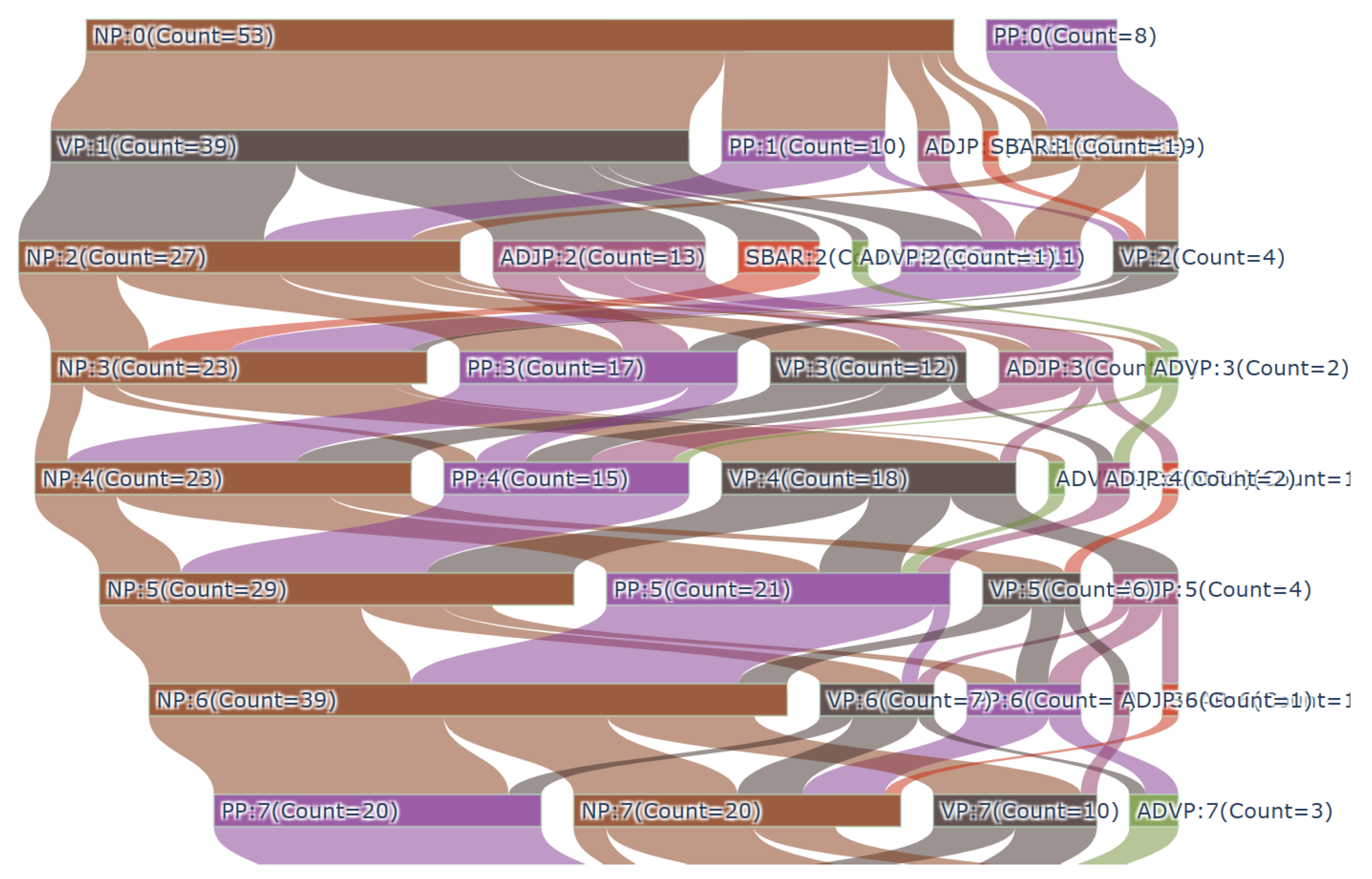

Of the 149 design requirements, 139 started with a noun phrase (NP), 7 started with a prepositional phrase (PP), 2 started with a subordinate clause (SBAR), and only 1 started with a verb phrase (VP). In 106 of the requirements, NPs were followed by a VP or another NP. The detailed sequence of patterns is shown in Figure 12.
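Counts of this kind can be obtained by tallying the first and second chunk tags of each requirement. The sketch below is a minimal illustration that assumes each requirement has already been reduced to a list of chunk tags; the function names are hypothetical, and only the reported totals in `reported_design_starts` come from the text above.

```python
from collections import Counter


def leading_chunk_counts(chunk_sequences):
    """Count how many requirements start with each chunk tag."""
    return Counter(seq[0] for seq in chunk_sequences if seq)


def second_chunk_counts(chunk_sequences, first="NP"):
    """Count the tag that follows a given leading tag."""
    return Counter(
        seq[1] for seq in chunk_sequences if len(seq) > 1 and seq[0] == first
    )


# Leading-chunk counts reported for the design requirements; they add up
# to the 149 design requirements in the dataset.
reported_design_starts = {"NP": 139, "PP": 7, "SBAR": 2, "VP": 1}
design_total = sum(reported_design_starts.values())
```

Running `leading_chunk_counts` on the tag sequences of one requirement type gives the numbers quoted at the start of each subsection, while `second_chunk_counts` gives the follow-on counts (for example, how often an initial NP is followed by a VP).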

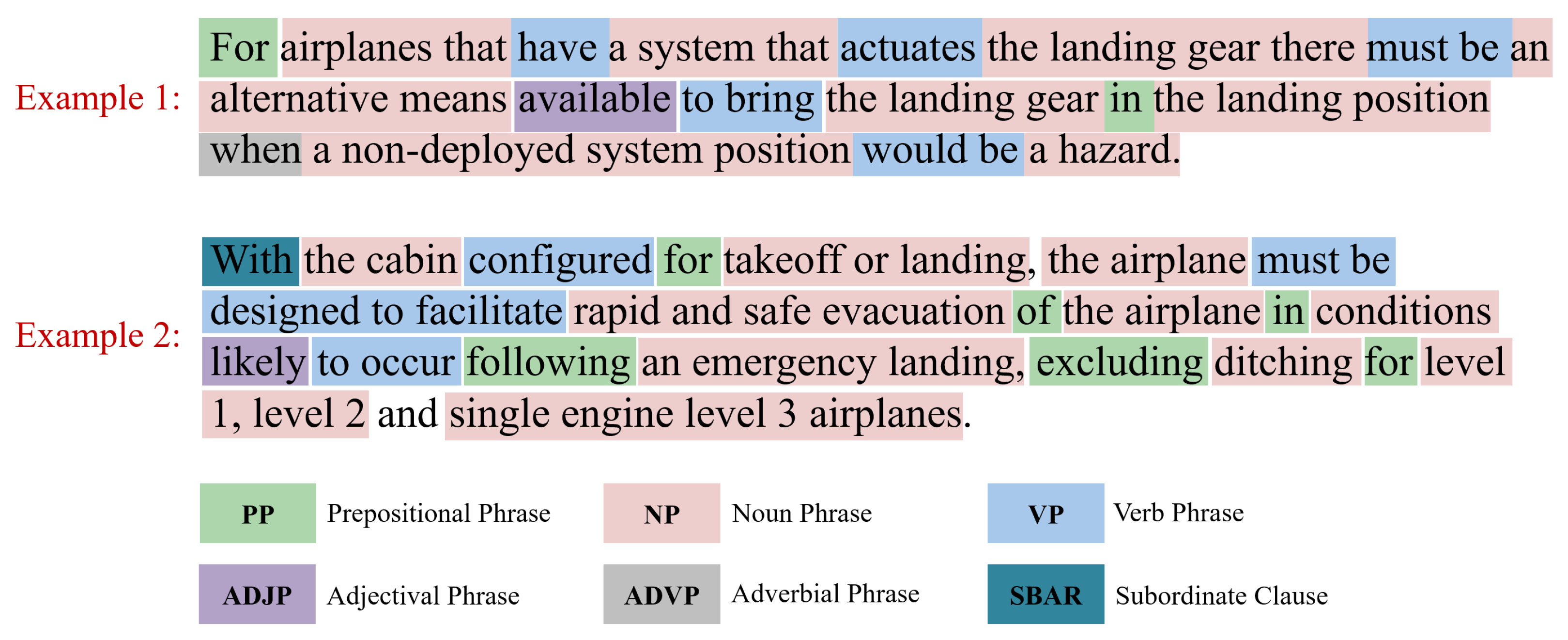

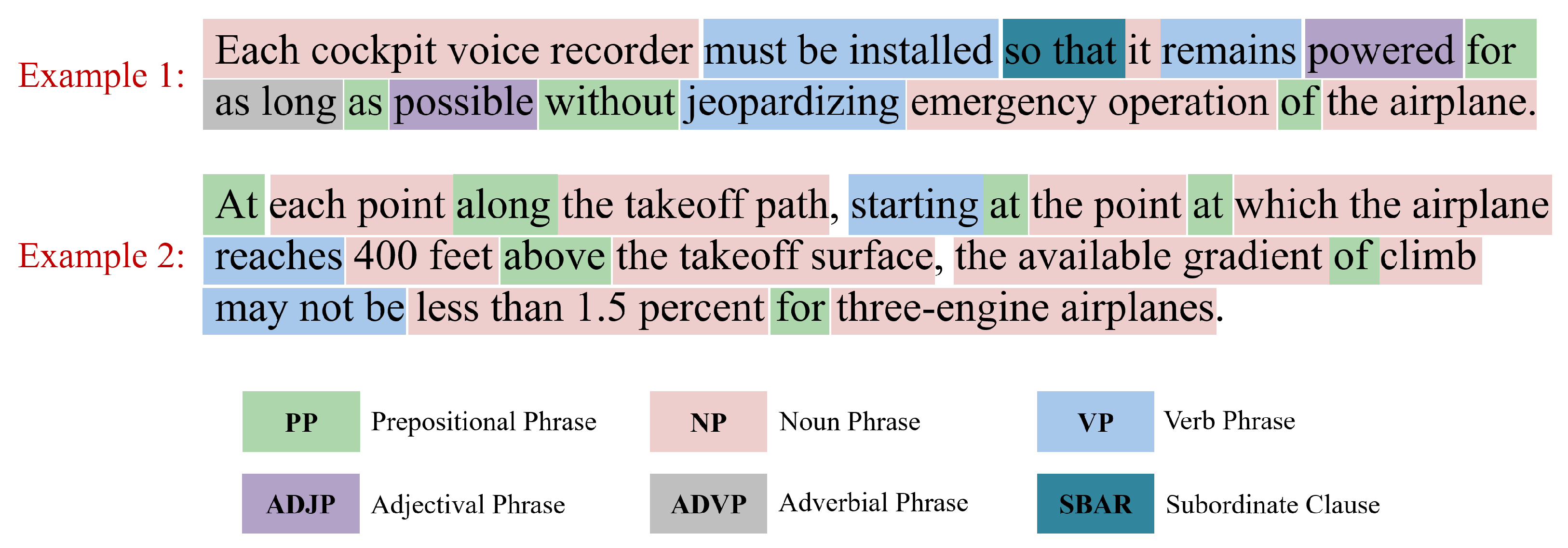

Examples showing design requirements beginning with different types of sentence chunks are shown in Figure 13 and Figure 14.

Examples 1-4 show design requirements beginning with different types of sentence chunks (PP, SBAR, VP, and NP, respectively), which gives a glimpse into the different ways these requirements can be written. In example 1, the initial prepositional phrase (PP) specifies a particular family of aircraft, namely those with retractable landing gear. This gives the requirement the feel of a sector-wide policy statement rather than a limitation on a particular component or system. Across the examples, the first NP is usually the system name and is followed by a VP.

It is important to note that in example 3 (Figure 14), the term “Balancing” is wrongly classified as a VP. The entire term “Balancing tabs” should have been identified as an NP instead. This error can be attributed to the use of an off-the-shelf sentence chunking model (flair/chunk-english), which lacks aerospace domain knowledge and hence failed to identify “Balancing tabs” as a single NP. Such discrepancies can be resolved by simultaneously accounting for the named entities identified by aeroBERT-NER for the same requirement. For this example, “Balancing tabs” was identified as a system name (SYS) by aeroBERT-NER, which should be an NP by default. Where the text chunking and NER models disagree, the results from the NER model take precedence, since it is fine-tuned on an annotated aerospace corpus and hence has more context regarding the aerospace domain.
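The precedence rule described above can be sketched as a small reconciliation step. This is a minimal sketch assuming that both models return token-index spans of the form (start, end, label) with an exclusive end; the span format, the function name `reconcile`, and the example sentence in the usage note are assumptions made for illustration.

```python
from itertools import groupby


def reconcile(tokens, chunk_spans, entity_spans, np_entities=("SYS",)):
    """Merge chunker and NER spans, letting selected entity types force an NP chunk.

    chunk_spans and entity_spans are lists of (start, end, label), end exclusive.
    Returns a list of (chunk_label, text) pairs in sentence order.
    """
    # Start from the chunker output: one (label, group id) per token.
    labels = [("O", None)] * len(tokens)
    for i, (start, end, tag) in enumerate(chunk_spans):
        for t in range(start, end):
            labels[t] = (tag, ("chunk", i))
    # NER takes precedence: tokens inside a SYS entity become a single NP.
    for j, (start, end, ent) in enumerate(entity_spans):
        if ent in np_entities:
            for t in range(start, end):
                labels[t] = ("NP", ("ner", j))
    # Consecutive tokens sharing a label and group id form one chunk.
    merged = []
    for (tag, _), group in groupby(zip(labels, tokens), key=lambda pair: pair[0]):
        merged.append((tag, " ".join(token for _, token in group)))
    return merged
```

For the made-up sentence "Balancing tabs shall be protected", a chunker output of VP ("Balancing"), NP ("tabs"), VP ("shall be protected") combined with a SYS entity covering the first two tokens is reconciled to NP ("Balancing tabs") followed by VP ("shall be protected").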

Sankey diagrams can be used to recognize and filter requirements that exhibit dominant linguistic structures. By doing so, the more prominent sequences or patterns can be removed, allowing for greater focus on the less common ones for further investigation.
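The data behind such a diagram is a table of weighted links between chunk tags at consecutive positions. The following sketch, which assumes each requirement is available as a list of chunk tags, computes those links and filters out the requirements that follow the most common leading pattern. The function names and the `depth` parameter are hypothetical, and no particular plotting library is implied.

```python
from collections import Counter


def sankey_links(chunk_sequences, depth=3):
    """Count links between chunk tags at consecutive positions, up to `depth` tags."""
    links = Counter()
    for seq in chunk_sequences:
        for i in range(min(depth, len(seq)) - 1):
            source = f"{i}:{seq[i]}"          # position-qualified node name
            target = f"{i + 1}:{seq[i + 1]}"
            links[(source, target)] += 1
    return links


def drop_dominant(chunk_sequences, depth=2):
    """Remove requirements whose first `depth` tags match the most common prefix."""
    if not chunk_sequences:
        return []
    prefixes = Counter(tuple(seq[:depth]) for seq in chunk_sequences)
    dominant, _ = prefixes.most_common(1)[0]
    return [seq for seq in chunk_sequences if tuple(seq[:depth]) != dominant]
```

The link counts can be passed to any Sankey plotting tool as source, target, and value triples, and `drop_dominant` leaves the less common sequences for closer inspection.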

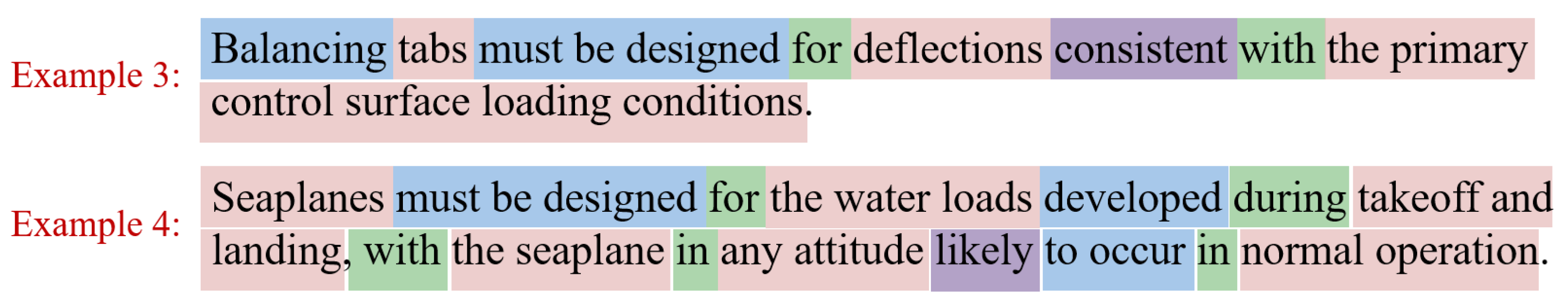

Functional Requirements

Of the 100 requirements classified as functional, 84 started with an NP, 10 started with a PP, and 6 started with an SBAR. In 69 requirements, the initial NP was followed by a VP. The detailed sequence of patterns is shown in Figure 15.

Functional requirements used for this work start with an NP, PP, or SBAR.

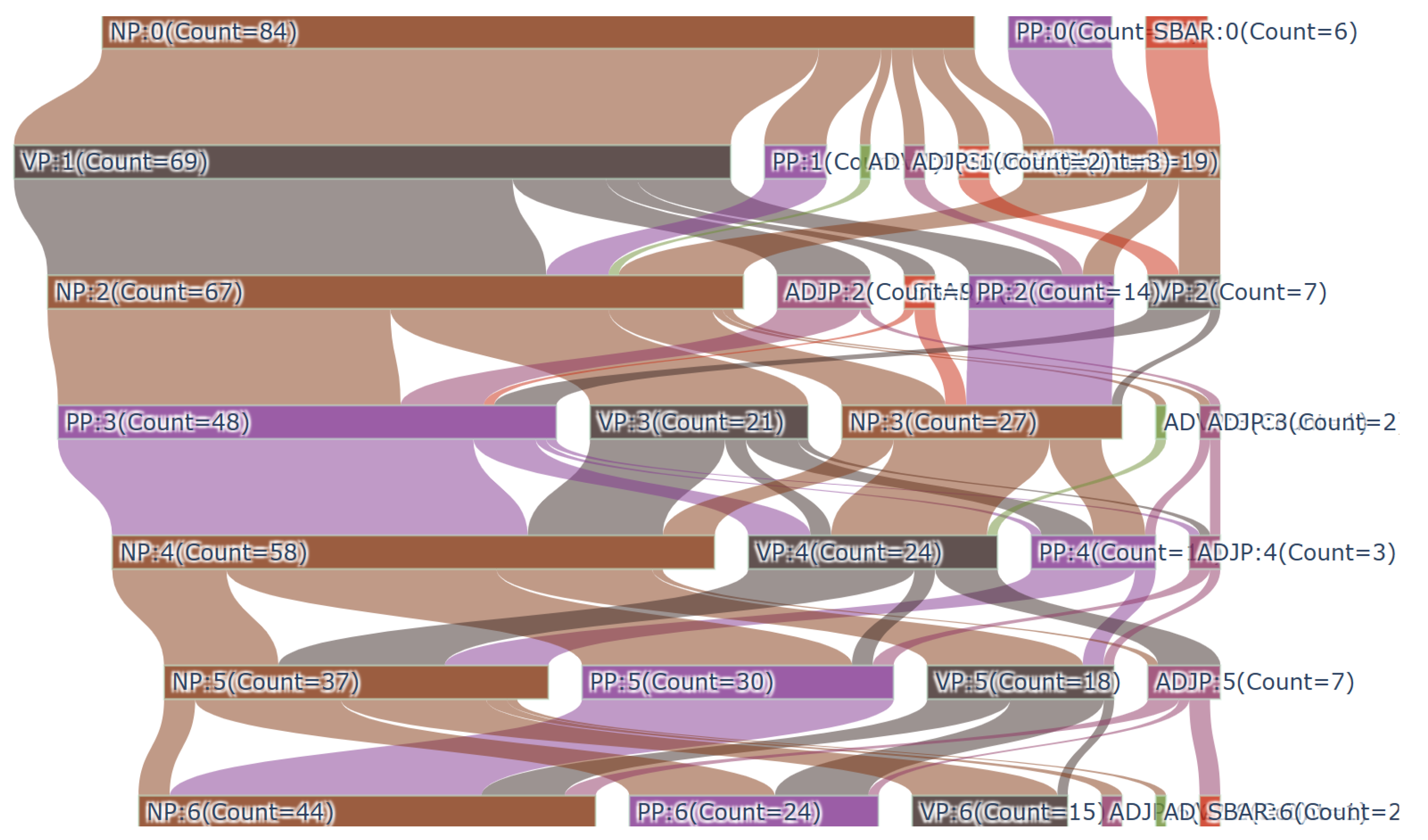

Figure 16 shows examples of functional requirements beginning with these three types of sentence chunks.

The functional requirements beginning with an NP have system names at the beginning (example 1 of Figure 16). However, this is not the case for requirements that start with a condition, as shown in example 3.

Performance Requirements

Of the 61 requirements classified as performance requirements, 53 started with an NP and 8 started with a PP. In 39 requirements, the initial NP was followed by a VP. The detailed sequence of patterns is shown in Figure 17.

Examples of performance requirements starting with an NP and a PP are shown in Figure 18. Requirements starting with an NP usually start with a system name, which is in line with the trends seen in the design and functional requirements, whereas requirements starting with a PP usually have a condition at the beginning.

In example 2 (Figure 18), quantities such as “400 feet” and “1.5 percent” are tagged as NPs; however, the chunk tags alone cannot distinguish between different types of NPs (NPs containing cardinal numbers vs. those that do not). Using aeroBERT-NER in conjunction with flair/chunk-english helps clarify the types of entities beyond their text chunk tags, which is helpful for ordering entities in a requirement text. The same idea applies to resources (RES; for example, Section 25-395).

4.2.1. General Patterns Observed in Requirements

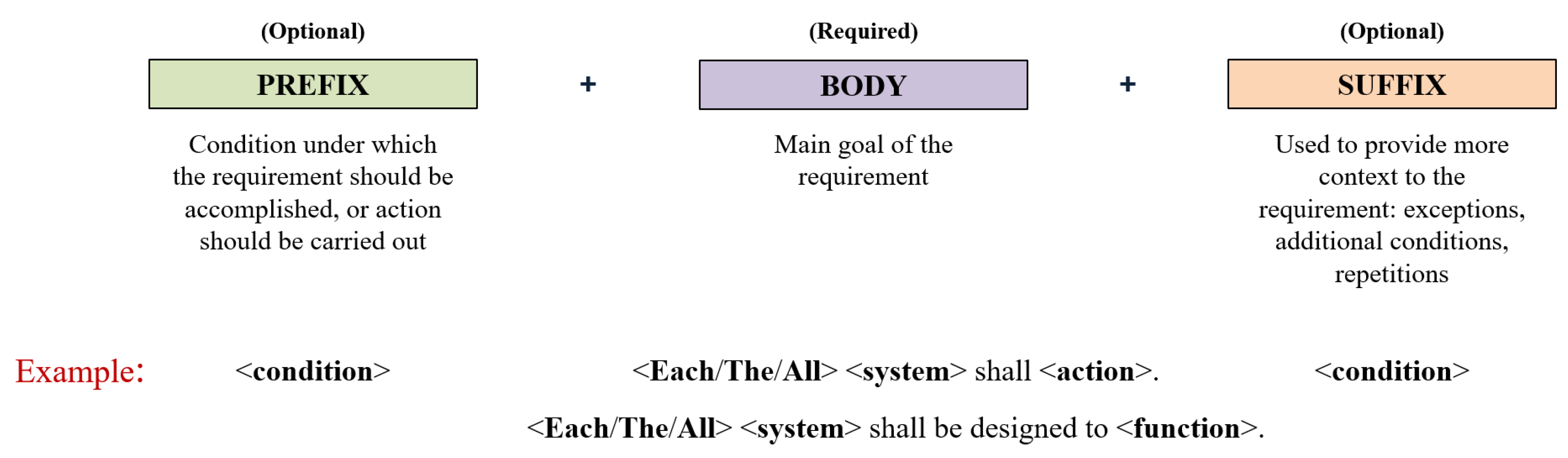

After analyzing the three types of requirements, it was discovered that there was a general pattern irrespective of the requirement type, as shown in Figure 19.

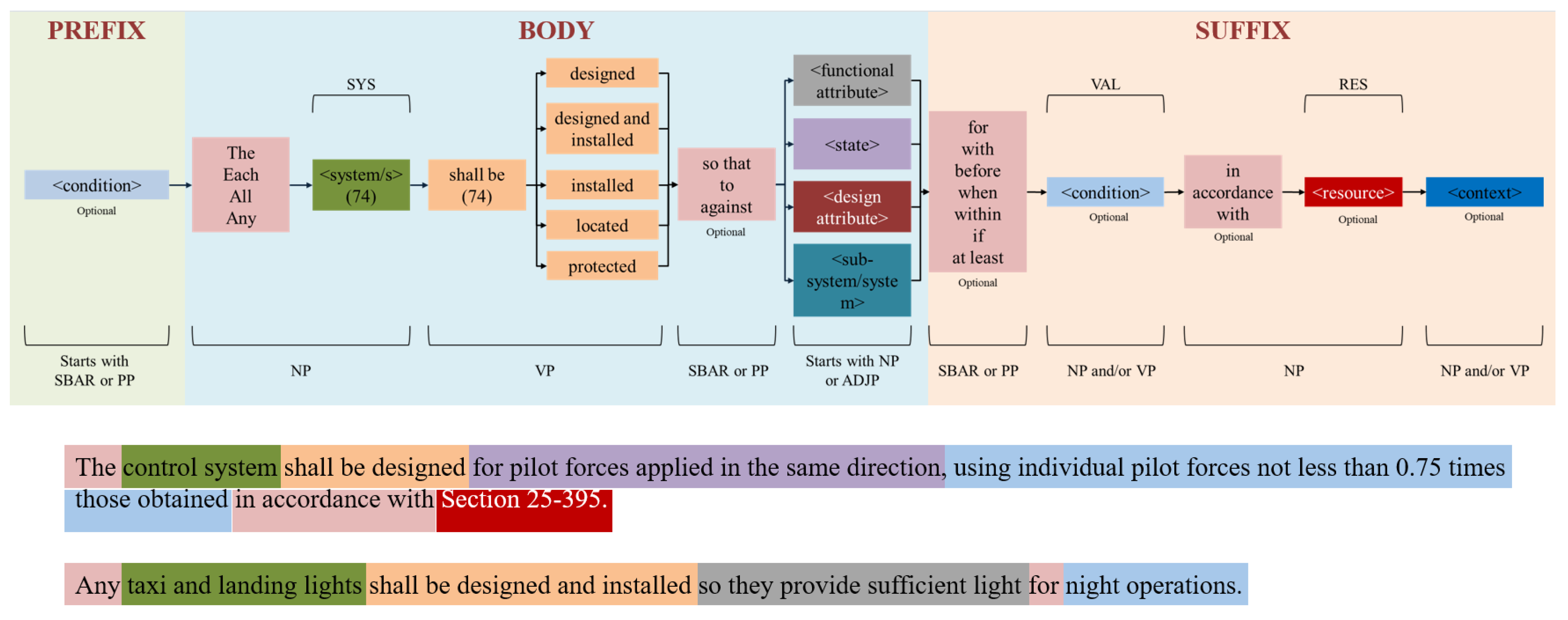

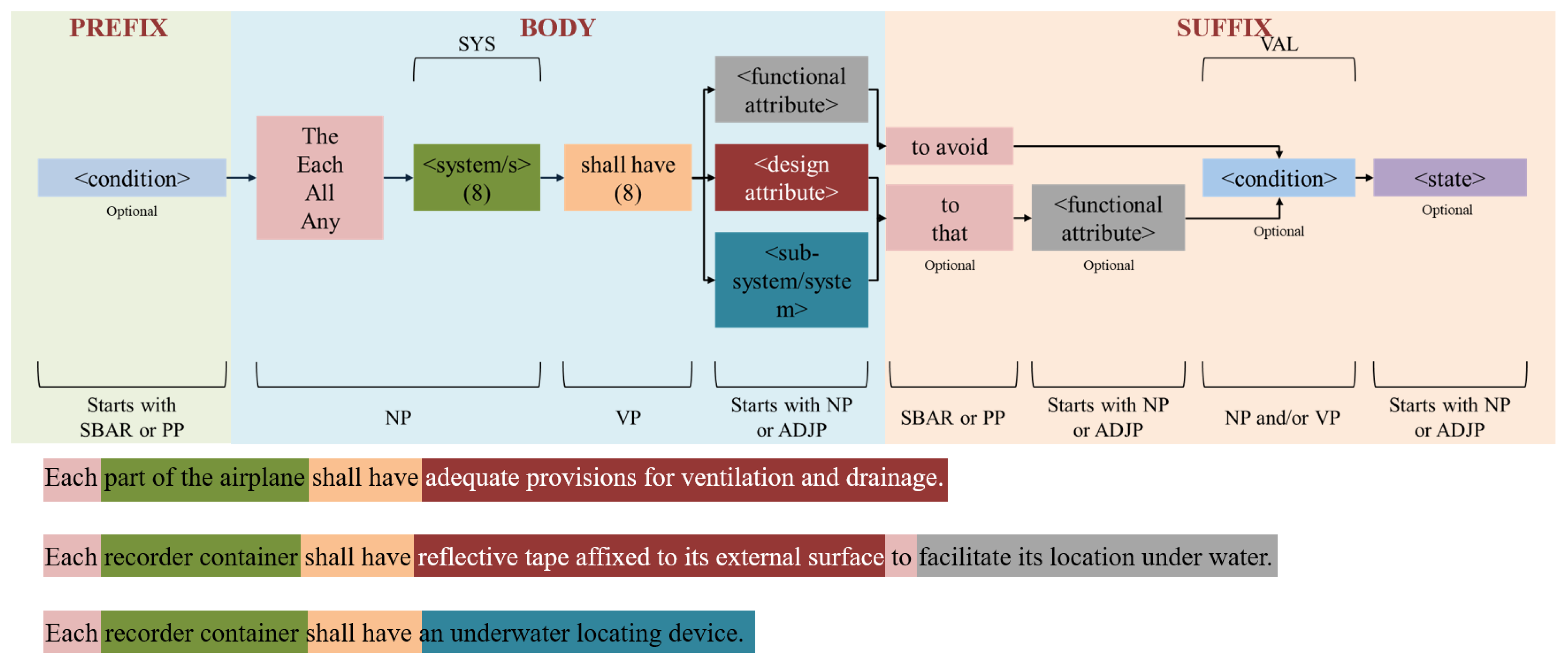

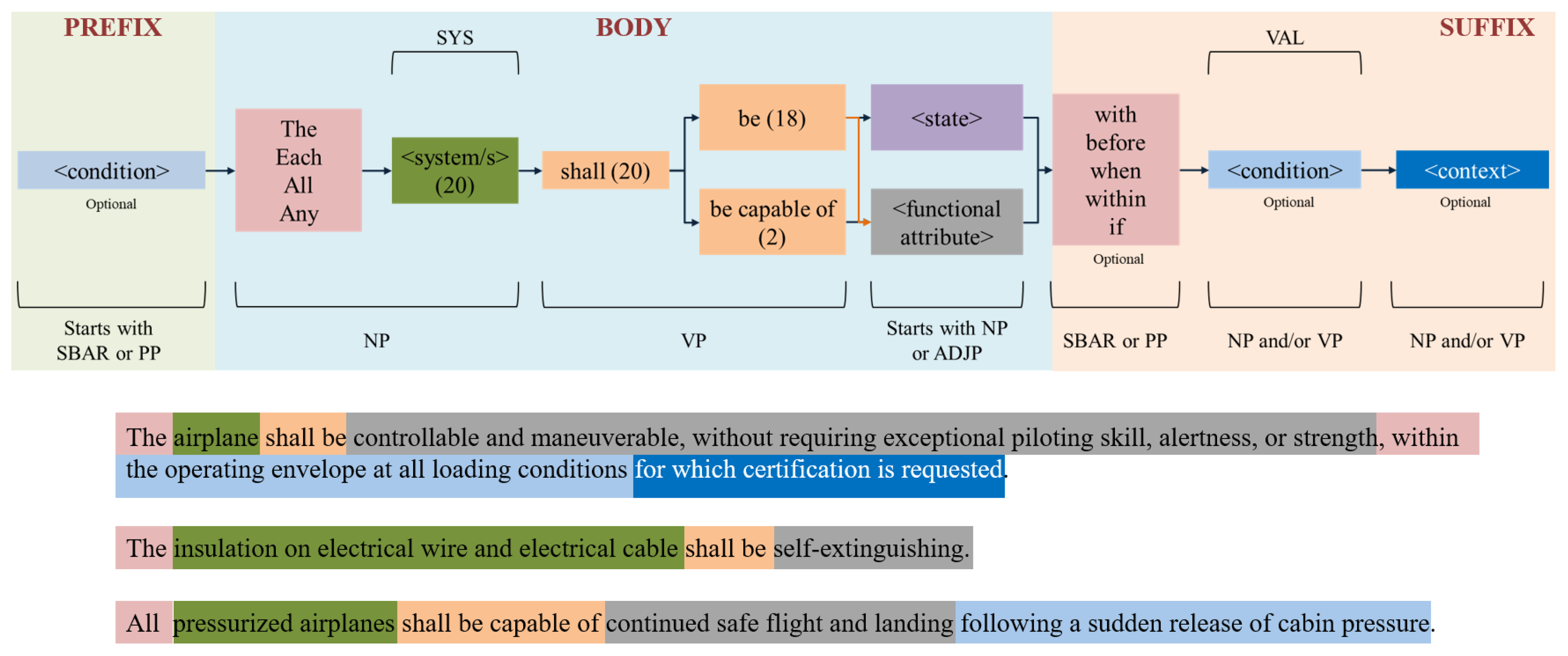

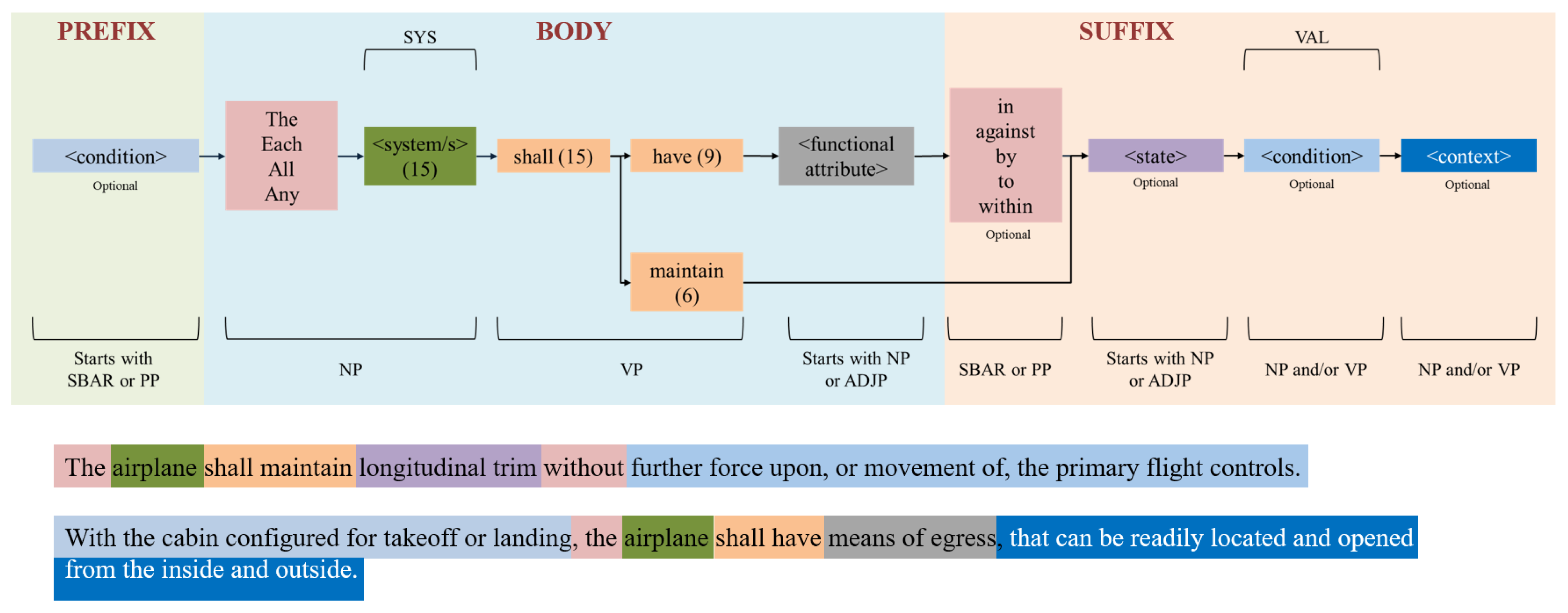

The Body section of the requirement usually starts with an NP (system name), which in most cases contains an SYS named entity, whereas the beginning of a Prefix or Suffix is usually marked by an SBAR or PP, namely, “so that”, “so as”, “unless”, “while”, “if”, “that”, etc. Both the Prefix and Suffix provide more context for a requirement and are thus likely to be conditions, exceptions, the state of the system after a function is performed, etc. Usually, the Suffix contains different types of named entities, such as names of resources (RES), values (VAL), and other system or sub-system names (SYS), which add more context to the requirement. A requirement must have a Body, while the Prefix and Suffix are optional.
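A simplified version of this Prefix, Body, and Suffix split can be sketched as follows. The heuristic is an assumption made for illustration and is deliberately cruder than the analysis described here: the Body is taken to start at the NP immediately before the VP containing "shall", and the Suffix at the first SBAR chunk after that VP (a trailing PP is left in the Body). The function name `split_requirement` and the example chunks in the usage note are hypothetical.

```python
def split_requirement(chunks):
    """Split a list of (tag, text) chunks into prefix, body, and suffix lists."""
    # Locate the verb phrase that carries "shall".
    shall_idx = next(
        (
            i
            for i, (tag, text) in enumerate(chunks)
            if tag == "VP" and "shall" in text.lower().split()
        ),
        None,
    )
    if shall_idx is None:
        # No "shall" found: treat the whole requirement as Body.
        return {"prefix": [], "body": list(chunks), "suffix": []}
    # Assumption: the Body starts at the NP right before the "shall" VP.
    has_np_before = shall_idx > 0 and chunks[shall_idx - 1][0] == "NP"
    body_start = shall_idx - 1 if has_np_before else shall_idx
    # Assumption: the Suffix starts at the first SBAR after the "shall" VP.
    suffix_start = next(
        (i for i in range(shall_idx + 1, len(chunks)) if chunks[i][0] == "SBAR"),
        len(chunks),
    )
    return {
        "prefix": chunks[:body_start],
        "body": chunks[body_start:suffix_start],
        "suffix": chunks[suffix_start:],
    }
```

For a made-up chunk sequence such as SBAR ("If"), NP ("an engine"), VP ("fails"), NP ("the system"), VP ("shall maintain"), NP ("cabin pressure"), SBAR ("so that"), NP ("occupants"), VP ("remain"), ADJP ("safe"), the first three chunks form the Prefix, the next three the Body, and the last four the Suffix.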

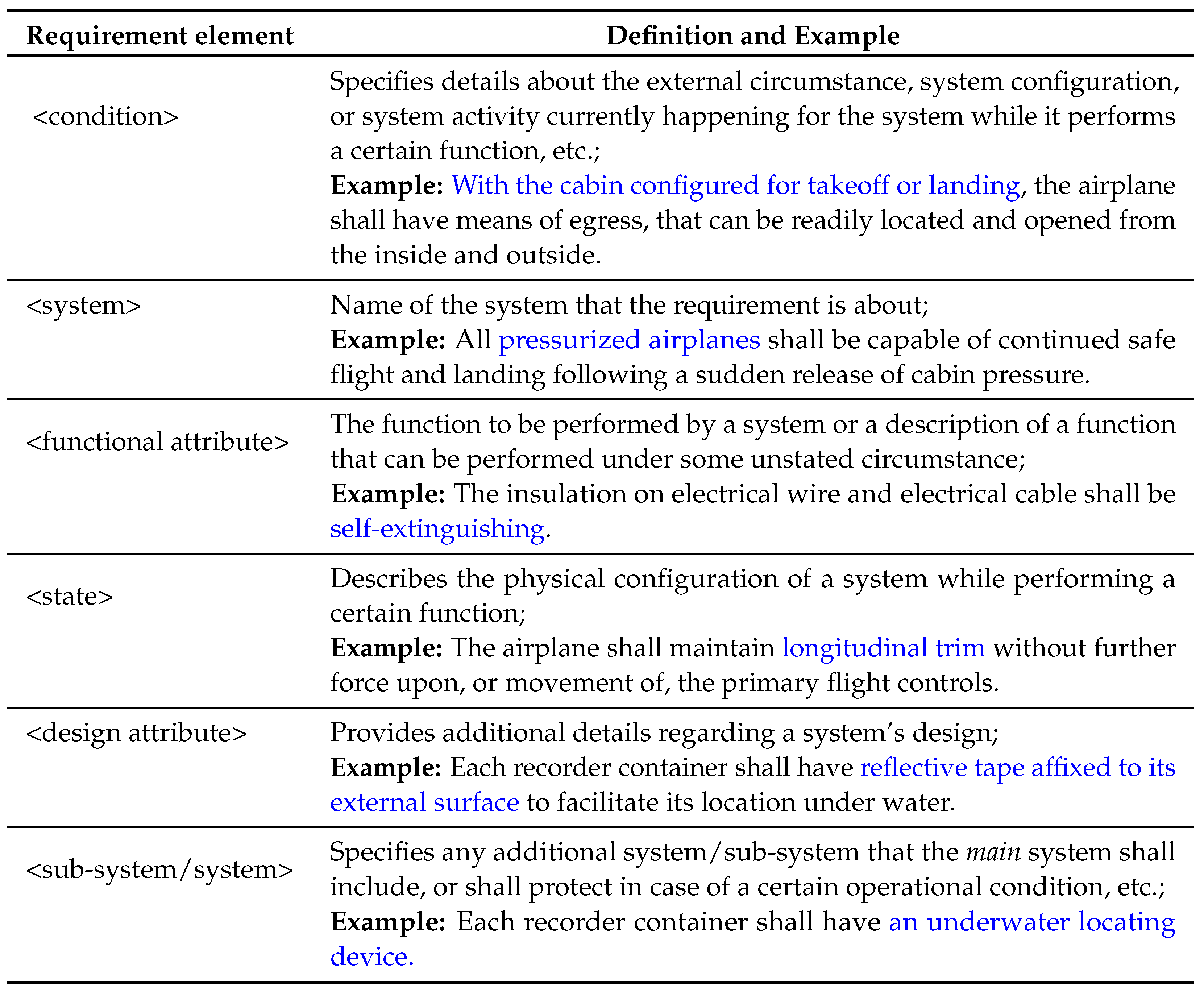

Table 5 presents the various elements of an aerospace requirement, including system, functional attribute, state, condition, and others, along with examples. The presence, absence, and order of these elements distinguish requirements of different types, as well as requirements within the same type, resulting in distinct boilerplate structures. These elements often begin or end with a specific type of sentence chunk and/or contain a particular named entity, which can aid in their identification.

Below, the methodology employed to identify patterns in requirements for the purpose of creating boilerplates is discussed, including the details regarding how this observed general pattern (Figure 19) was tailored to different types of requirements.

Methodology for the Identification of Boilerplate Templates

The requirements are first classified using the aeroBERT-Classifier. The patterns in the sentence chunk and NE tags are then examined for each requirement, and the identified patterns are used for boilerplate template creation. Based on these tags, it was observed that a requirement with a <condition> at the beginning usually starts with a PP or SBAR. Requirements beginning with a condition were, however, rare in the dataset used for this work. The initial <condition> is almost always followed by an NP, which contains a <system> name that can be distinctly identified within the NP by using the named entity tag (SYS). The initial NP is always succeeded by a VP, which contains the term “shall [variations]”. These “[variations]” (shall be designed, shall be protected, shall have, shall be capable of, shall maintain, etc.) were crucial for the identification of different types of boilerplates, since they provided information regarding the action that the <system> should be performing (to protect, to maintain, etc.). The VP is optionally followed by an SBAR or PP. The SBAR/PP is succeeded by an NP or ADJP and can contain a <functional attribute>, <state>, <design attribute>, <user>, or <sub-system/system>, depending on the type/sub-type of the requirement. This usually brings an end to the main Body of the requirement. The Suffix is optional and can contain additional information such as operating and environmental conditions (which can contain a VAL named entity), resources (RES), and context.

The process of categorizing requirements, chunking, performing NER, and identifying requirement elements is iterated for all the requirements. The elements are recognized by combining information obtained from the sentence chunker and aeroBERT-NER. The requirements are subsequently aggregated into groups based on the sequence/order of identified elements to form boilerplate structures. Optional elements are included in the structures to cover any variations that may occur, allowing more requirements to be accommodated under a single boilerplate.
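The aggregation step can be sketched as grouping requirements by their ordered element sequence while ignoring elements treated as optional. This is a minimal sketch assuming that each requirement has already been reduced to an ordered list of element names; the function name `group_by_pattern`, the requirement identifiers, and the element lists in the usage note are hypothetical.

```python
from collections import defaultdict


def group_by_pattern(requirements, optional=frozenset()):
    """Group requirement ids by their element sequence, ignoring optional elements.

    requirements: iterable of (req_id, [element, ...]) pairs.
    Returns a dict mapping each element tuple to the list of matching ids.
    """
    groups = defaultdict(list)
    for req_id, elements in requirements:
        # Dropping optional elements lets variants share one boilerplate.
        key = tuple(e for e in elements if e not in optional)
        groups[key].append(req_id)
    return dict(groups)
```

With three made-up requirements whose element sequences are (system, shall, functional attribute), (condition, system, shall, functional attribute), and (system, shall, state), treating `condition` as optional places the first two in the same group, which mirrors how optional elements allow more requirements to be accommodated under a single boilerplate.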

The flowchart in Figure 20 summarizes the process for identifying boilerplate templates from well-written requirements. The example shown in the flowchart illustrates the steps involved in using a single requirement. It is crucial to note that in order to generate boilerplate templates that can be applied more broadly, patterns observed across multiple requirements need to be identified.

6. Conclusions & Future Work

Two language models, aeroBERT-NER and aeroBERT-Classifier, fine-tuned on annotated aerospace corpora, were used to demonstrate a methodology for creating a requirements table. This table contains five columns and is intended to assist with the creation of a requirements table in SysML. Additional columns can be added to the table if needed, but this may require developing new language models to extract the necessary data. Furthermore, aeroBERT-NER and aeroBERT-Classifier can be improved by adding new named entities or requirement types to extract other relevant information.

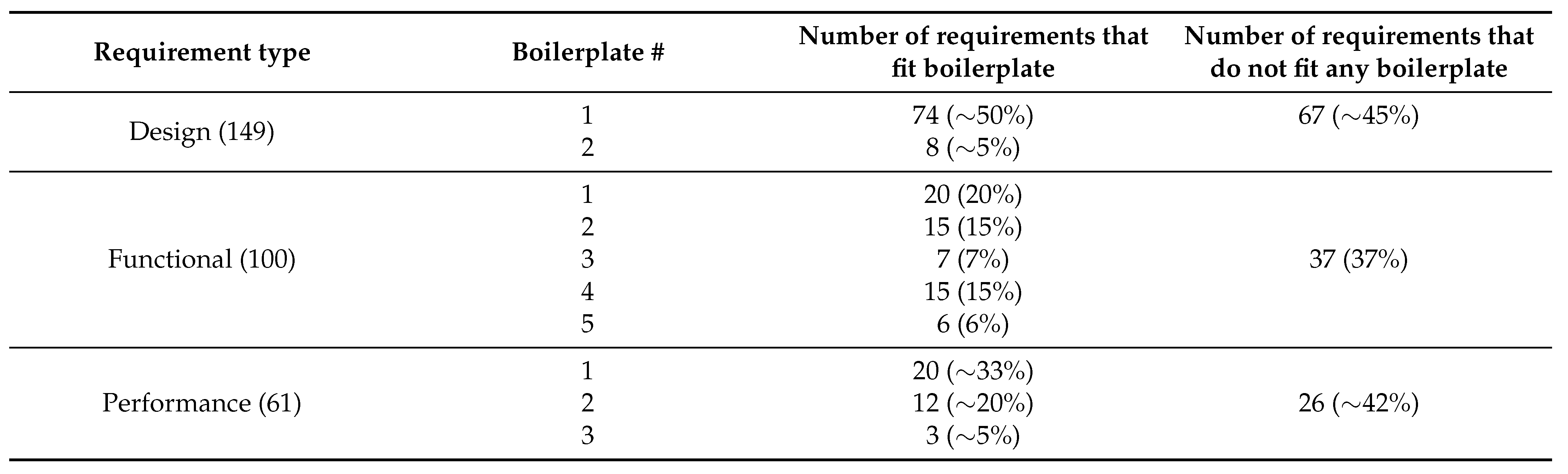

Boilerplate templates for various types of requirements were identified using the aeroBERT models for classification and NER as well as an off-the-shelf sentence chunking model (flair/chunk-english). To account for variations within requirements, multiple boilerplate templates were obtained. Two, five, and three boilerplate templates were identified for the design, functional, and performance requirements used for this work, respectively.

The use of these templates, particularly by inexperienced engineers working with requirements, will ensure that requirements are written in a standardized form from the beginning. In doing so, this work democratizes a methodology for the identification of boilerplates given a set of requirements, which can vary from one company or industry to another.

Boilerplates can be utilized to create new requirements that follow the established structure or to assess the conformity of NL requirements with the identified boilerplates. These activities are valuable for standardizing requirements on a larger scale and at a faster pace. As such, they are expected to contribute to the adoption of MBSE. Subject matter experts (SMEs) should review the identified boilerplates to ensure their accuracy and consistency.

The boilerplates were identified specifically for certification requirements outlined in Parts 23 and 25 of Title 14 CFRs. While these boilerplate templates may not directly correspond to the proprietary system requirements used by aerospace companies, the methodology presented in this paper remains applicable.

Future work could focus on exploring the creation of a data pipeline that incorporates different LMs to automatically or semi-automatically translate NL requirements into system models. This method would likely involve multiple rounds of refinement and necessitate input from experienced MBSE practitioners.

Exploring the use of generative LMs like T5, the GPT family, etc., may prove beneficial in rewriting free-form NL requirements. These models could be trained on a dataset containing NL requirements and their corresponding rewritten versions that adhere to industry standards. With this training, the model may be capable of generating well-written requirements based on the input NL requirements. Although untested, this approach presents an interesting research direction to explore.

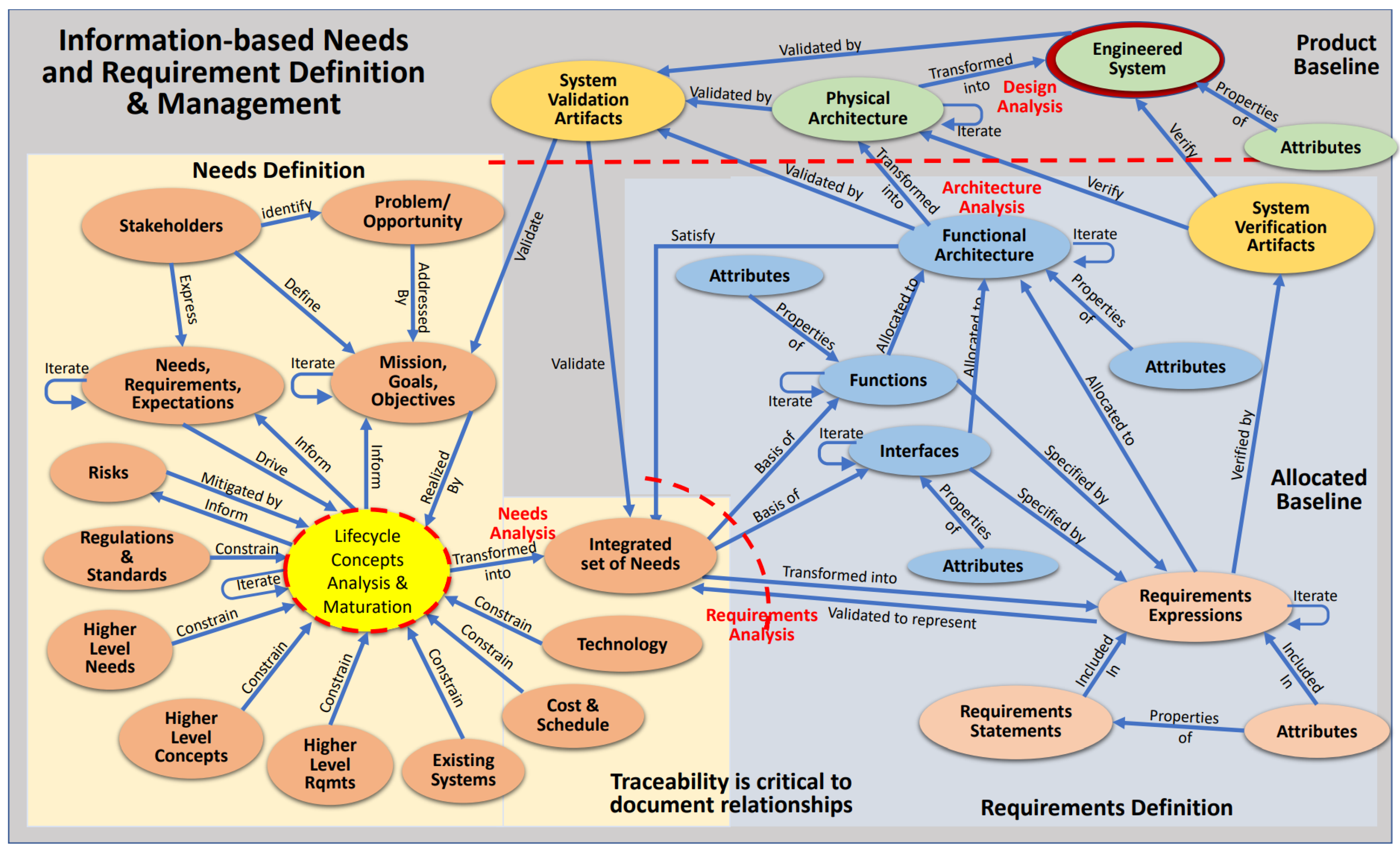

Figure 1.

Information-based requirement development and management model [15].

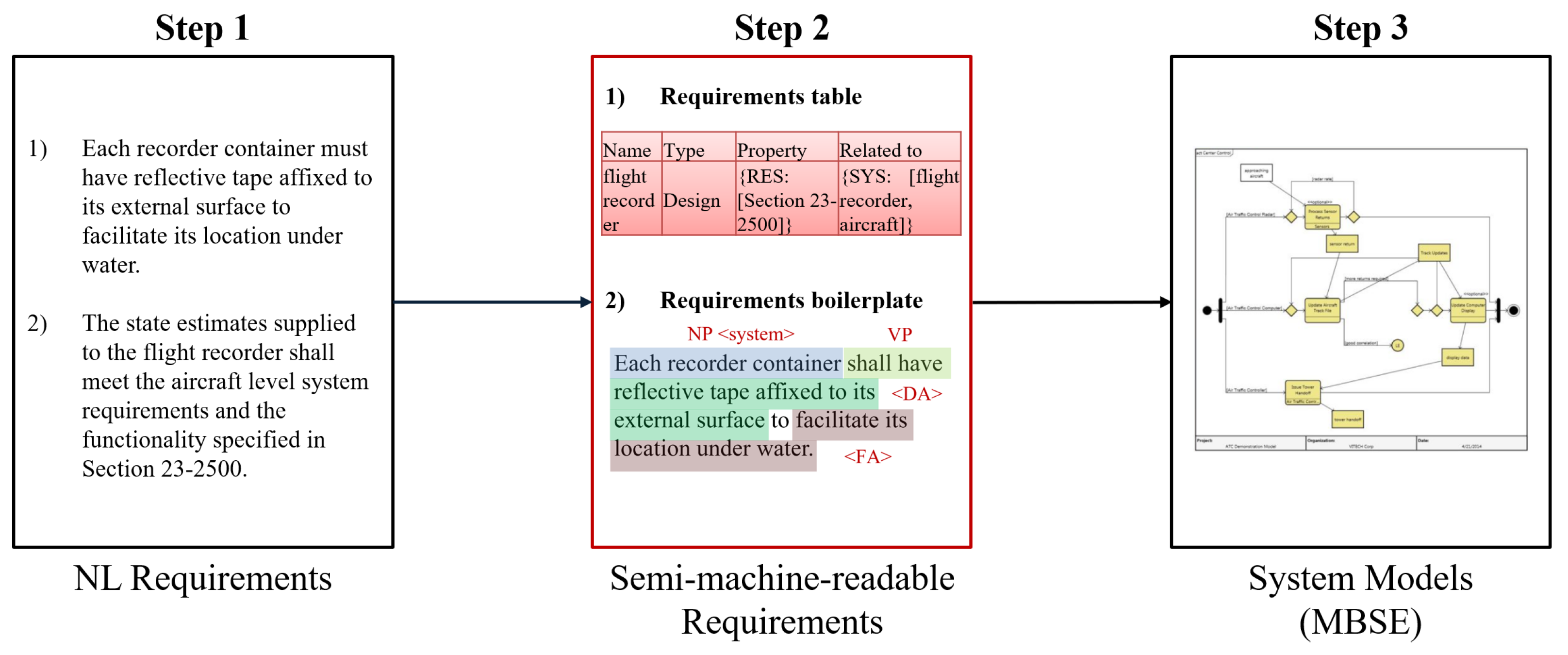

Figure 2.

Steps of requirements engineering, starting with gathering requirements from various stakeholders, followed by using NLP techniques to standardize them and lastly converting the standardized requirements into models. The main focus of this work is to convert NL requirements into semi-machine-readable requirements (where parts of the requirement become data objects) as shown in Step 2.

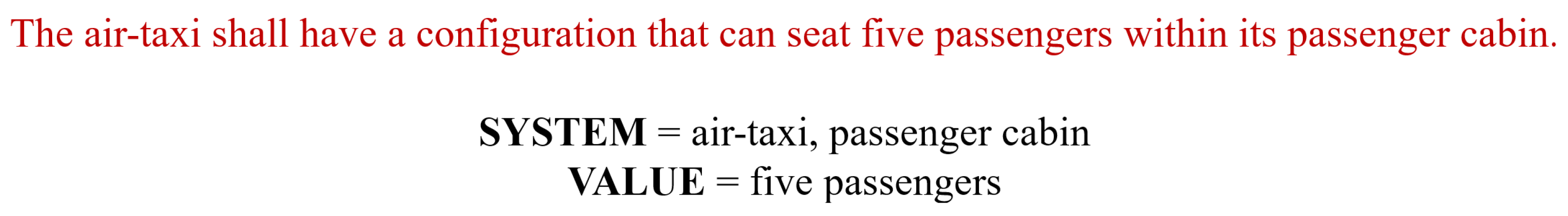

Figure 3.

An illustration of how to convert the contents of a natural language requirement into data objects can be seen in this example. In the given requirement “The air-taxi shall have a configuration that can seat five passengers within its passenger cabin”, the air-taxi and passenger cabin are considered as SYSTEM, while five passengers is classified as a VALUE.

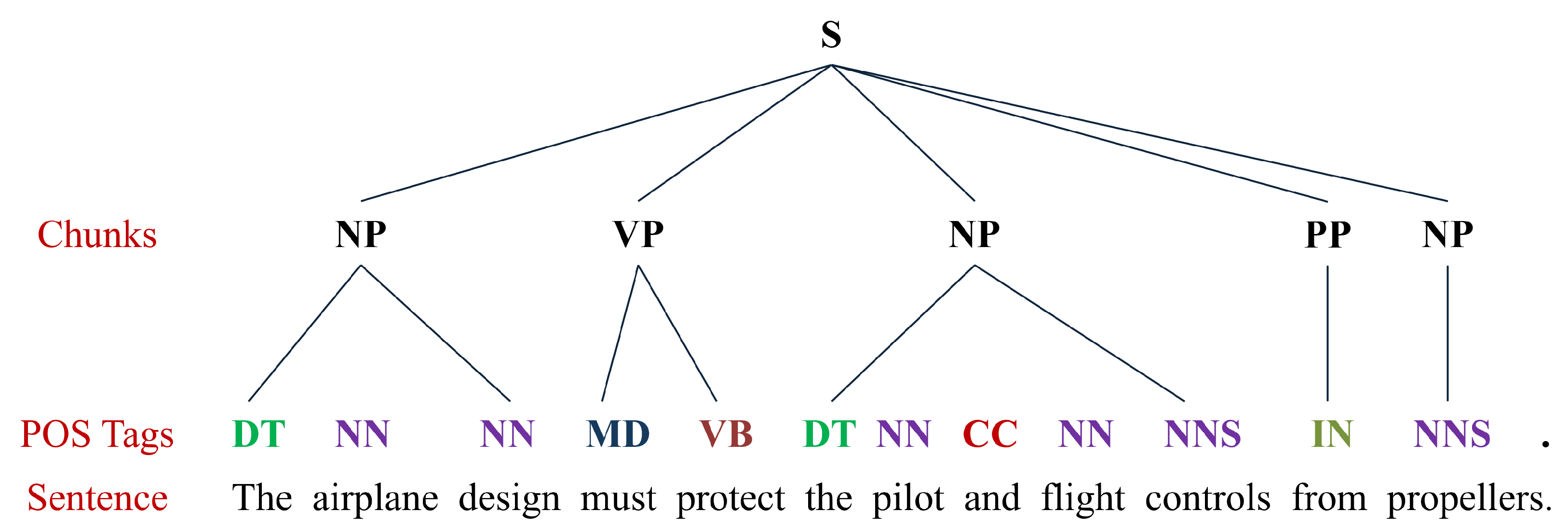

Figure 4.

An aerospace requirement along with its POS tags and sentence chunks. Each word has a POS tag associated with it which can then be combined together to obtain a higher-level representation called sentence chunks (NP: Noun Phrase; VP: Verb Phrase; PP: Prepositional Phrase).

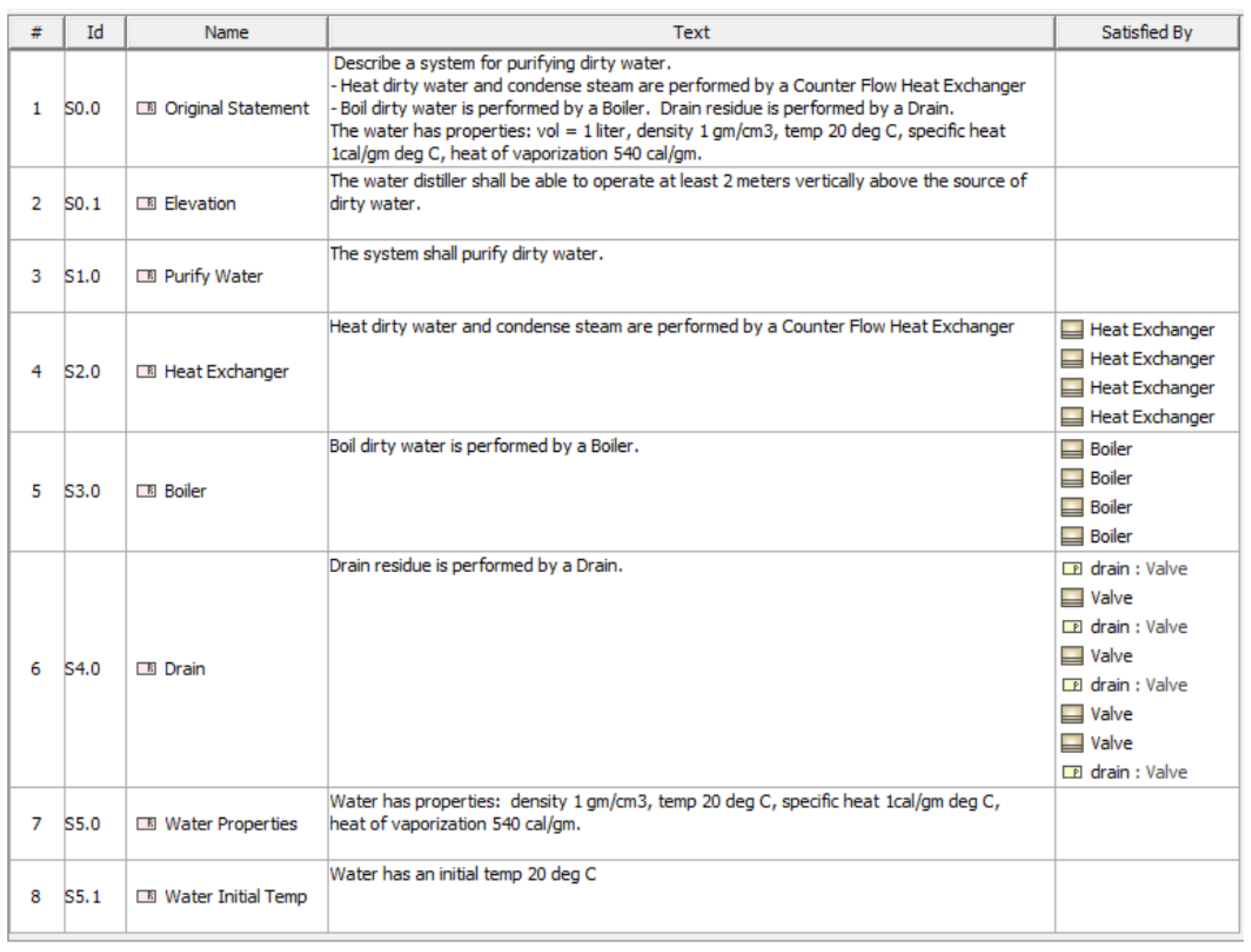

Figure 5.

A SysML requirement table with four columns, namely, Id, Name, Text, and Satisfied by. It typically contains these four columns by default; however, more columns can be added to capture other properties pertaining to the requirement.

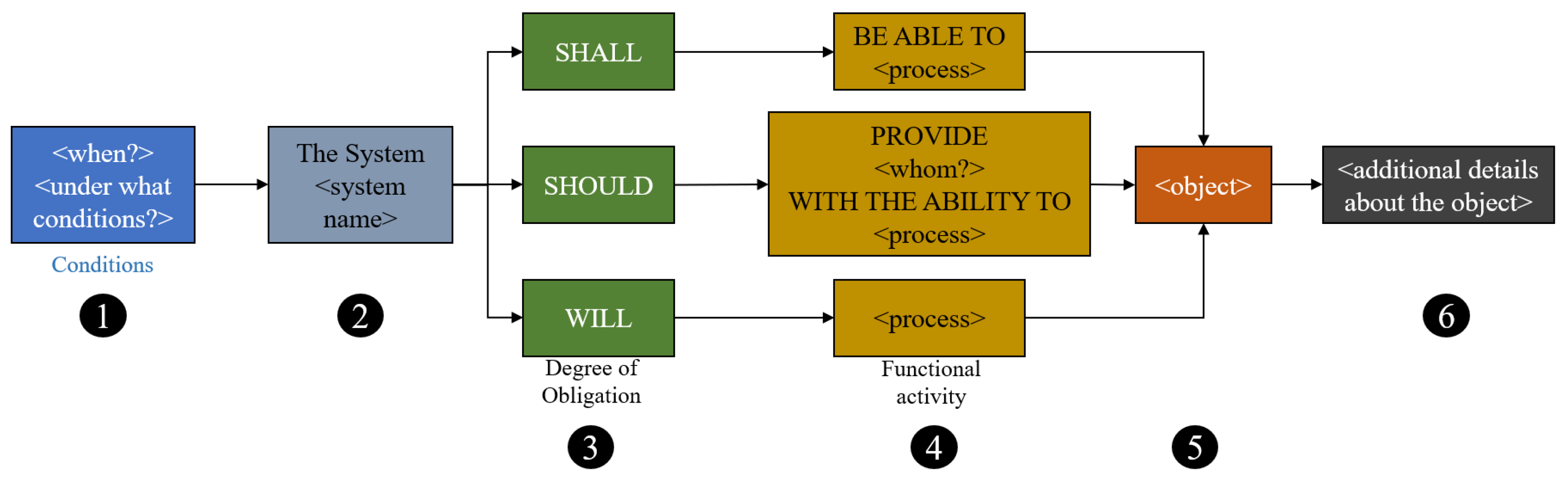

Figure 6.

Rupp’s Boilerplate structure, which can be divided into six different parts, namely, (1) Condition, (2) System, (3) Degree of Obligation, (4) Functional activity, (5) Object, (6) Additional details about the object [18, 20, 21].

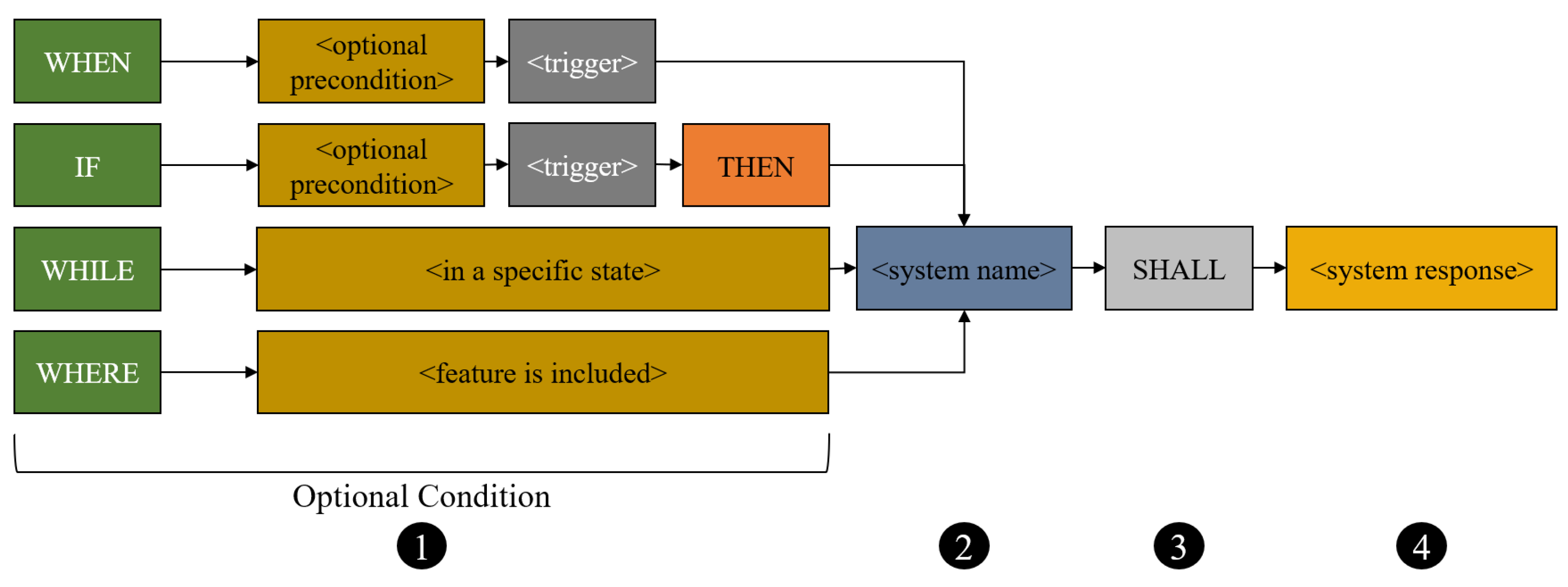

Figure 7.

EARS Boilerplate structure, which can be divided into four different parts, namely, (1) Optional condition block, (2) System/subsystem name, (3) Degree of Obligation, (4) Response of the system [20, 22].

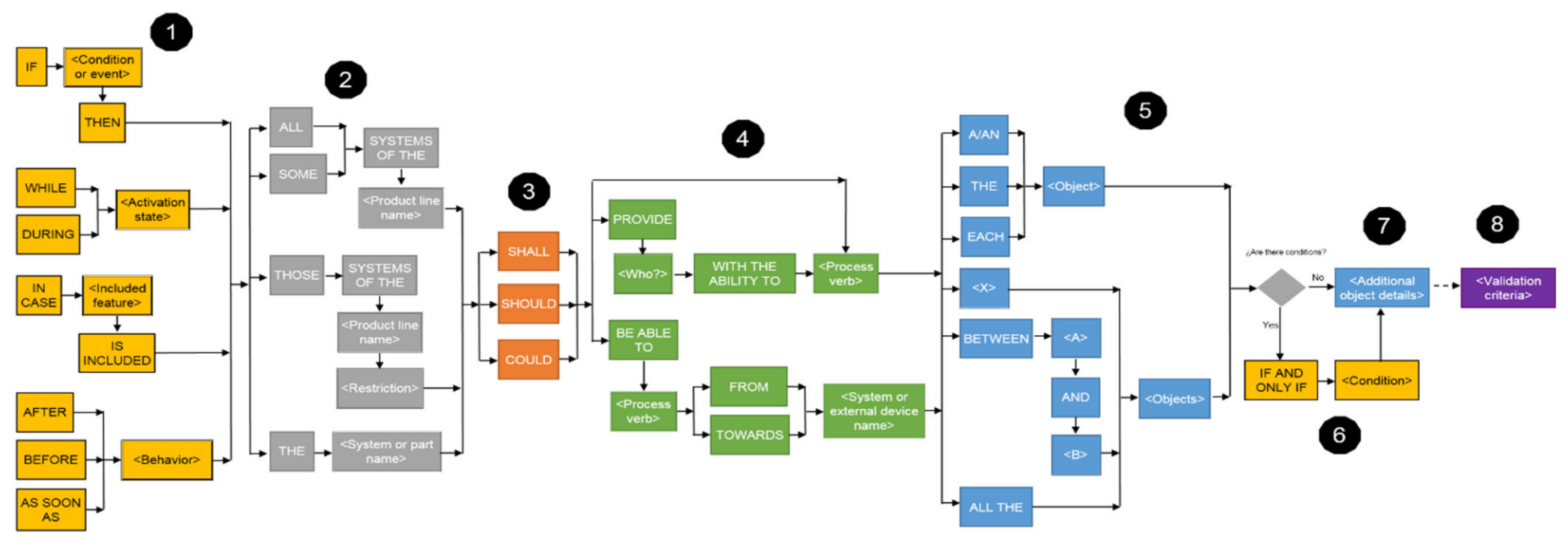

Figure 8.

Mazo and Jaramillo template [18].

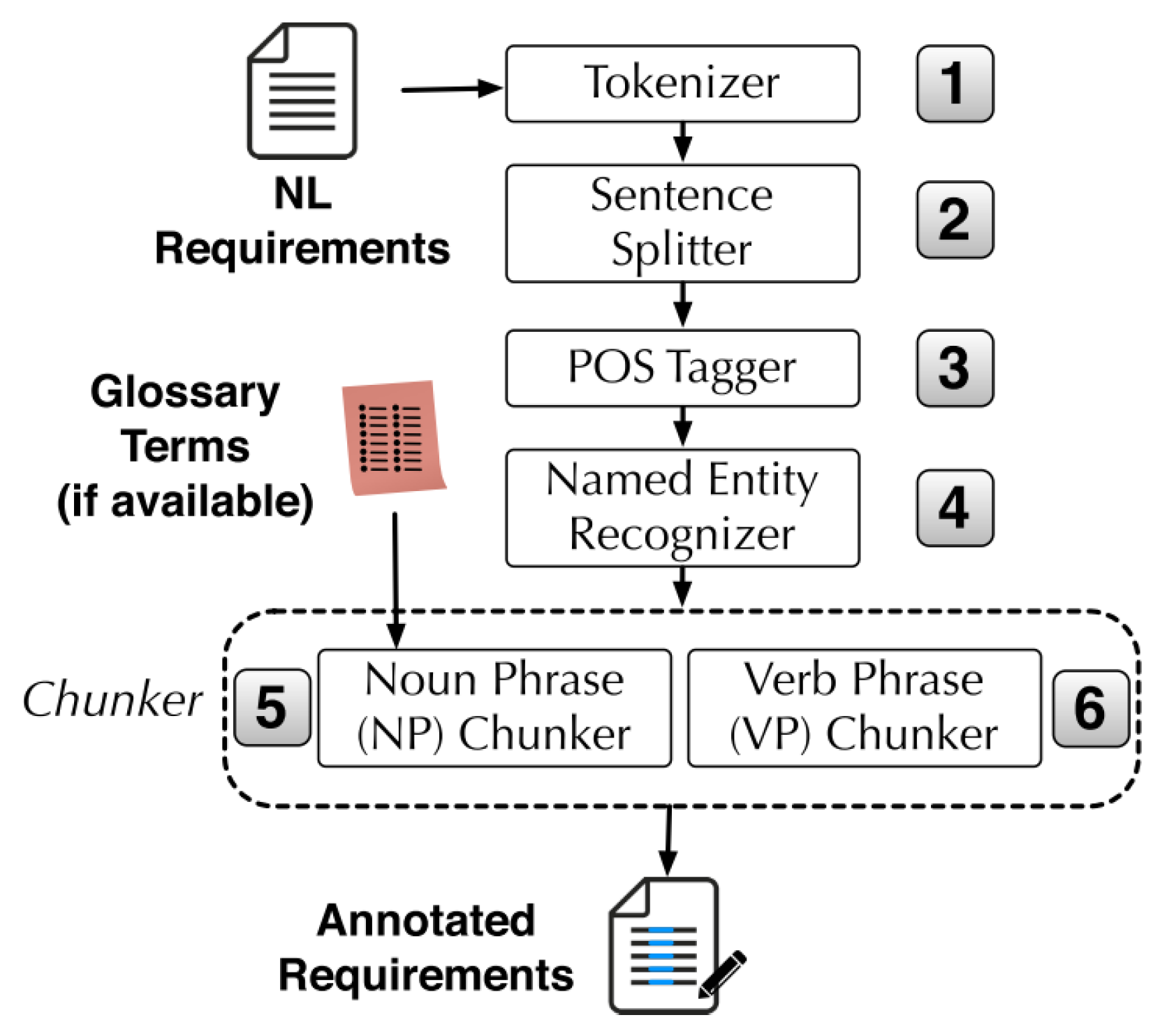

Figure 9.

NLP pipeline for text chunking and NER [51].

Figure 10.

Pipeline for converting NL requirements to standardized requirements using various LMs.

Figure 11.

Flowchart showcasing the creation of a requirements table for the requirement “The state estimates supplied to the flight recorder shall meet the aircraft level system requirements and functionality specified in Section 23-2500” using two LMs, namely, aeroBERT-Classifier [24] and aeroBERT-NER [23], to populate various columns of the table. A zoomed-in version of the figure can be found here and more context can be found in [53].

Figure 12.

Sankey diagram showing the text chunk patterns in design requirements. Only part of the figure is shown due to space constraints; the full diagram can be found in [53].

Figure 13.

Examples 1 and 2 show design requirements beginning with a prepositional phrase (PP) and a subordinate clause (SBAR), respectively, which is uncommon in the requirements dataset used for this work. The uncommon starting sentence chunks (PP, SBAR) are, however, followed by a noun phrase (NP) and a verb phrase (VP). Most of the design requirements start with an NP.

Figure 14.

Example 3 shows a design requirement starting with a verb phrase (VP). Example 4 shows the requirement starting with a noun phrase (NP) which was the most commonly observed pattern.

Figure 15.

Sankey diagram showing the text chunk patterns in functional requirements. Only part of the figure is shown due to space constraints; the full diagram can be found in [53].

Figure 16.

Examples 1, 2, and 3 show functional requirements starting with an NP, a PP, and an SBAR, respectively. Most of the functional requirements, however, start with an NP.

Figure 17.

Sankey diagram showing the text chunk patterns in performance requirements. Only part of the figure is shown due to space constraints; the full diagram can be found in [53].

Figure 18.

Examples 1 and 2 show performance requirements starting with NP and PP respectively.

Figure 19.

The general textual pattern observed in requirements was <Prefix> + <Body> + <Suffix>, of which the Prefix and Suffix are optional and can be used to provide more context about the requirement. The different variations of the requirement Body, Prefix, and Suffix are shown as well [53].

Figure 20.

Flowchart showcasing the creation of boilerplate templates using three language models, namely, aeroBERT-Classifier [24], aeroBERT-NER [23], and flair/chunk-english. A zoomed-in version of the figure can be found here and more context can be found in [53].

Figure 21.

The schematics of the first boilerplate for design requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 74 of the 149 design requirements (∼50%) used for this study and is tailored toward requirements that mandate the way a <system> should be designed and/or installed, its location, and whether it should protect another <system/sub-system> given a certain <condition> or <state>. Parts of the NL requirements shown here are matched with their corresponding boilerplate elements via the use of the same color scheme. In addition, the sentence chunks and named entity tags are displayed below and above the boilerplate structure, respectively.

Figure 22.

The schematics of the second boilerplate for design requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 8 of the 149 design requirements (∼5%) used for this study and focuses on requirements that mandate a <functional attribute>, <design attribute>, or the inclusion of a <system/sub-system> by design. Two of the example requirements highlight the <design attribute> element which emphasizes additional details regarding the system design to facilitate a certain function. The last example shows a requirement where a <sub-system> is to be included in a system by design.

Figure 23.

The schematics of the first boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 20 of the 100 functional requirements (20%) used for this study and is tailored toward requirements that describe the capability of a <system> to be in a certain <state> or have a certain <functional attribute>. The first example requirement focuses on the handling characteristics of the system (airplane in this case).

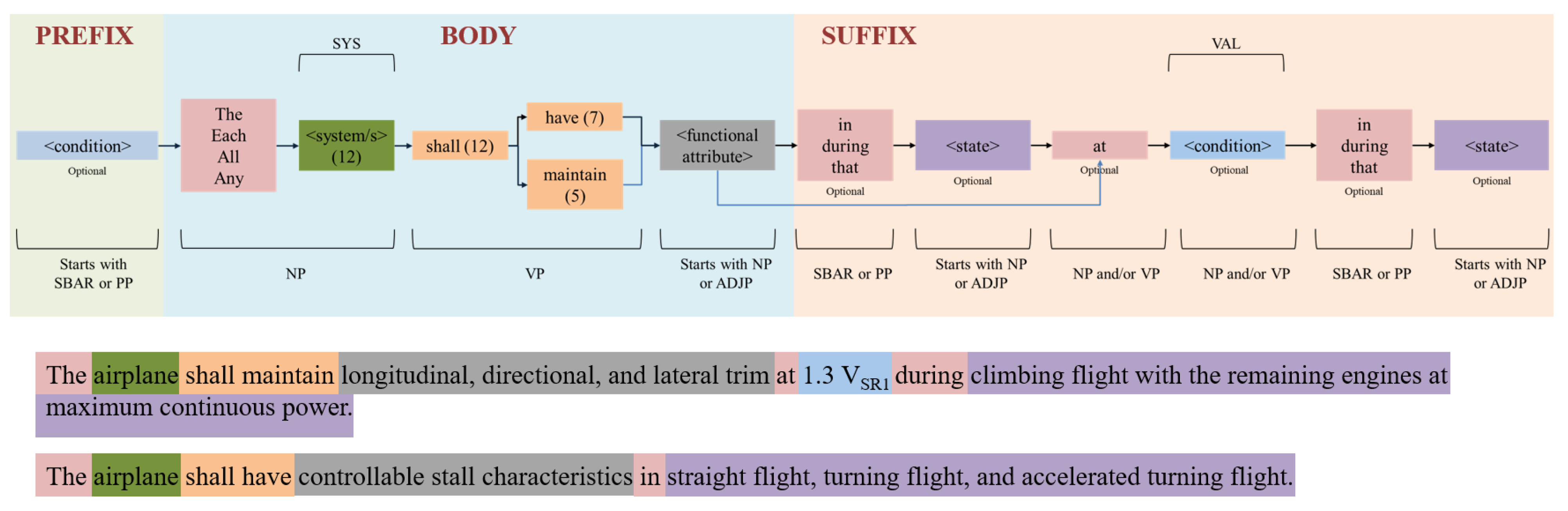

Figure 24.

The schematics of the second boilerplate for functional requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 15 of the 100 functional requirements (15%) used for this study and is tailored toward requirements that require the <system> to have a certain <functional attribute> or maintain a particular <state>.

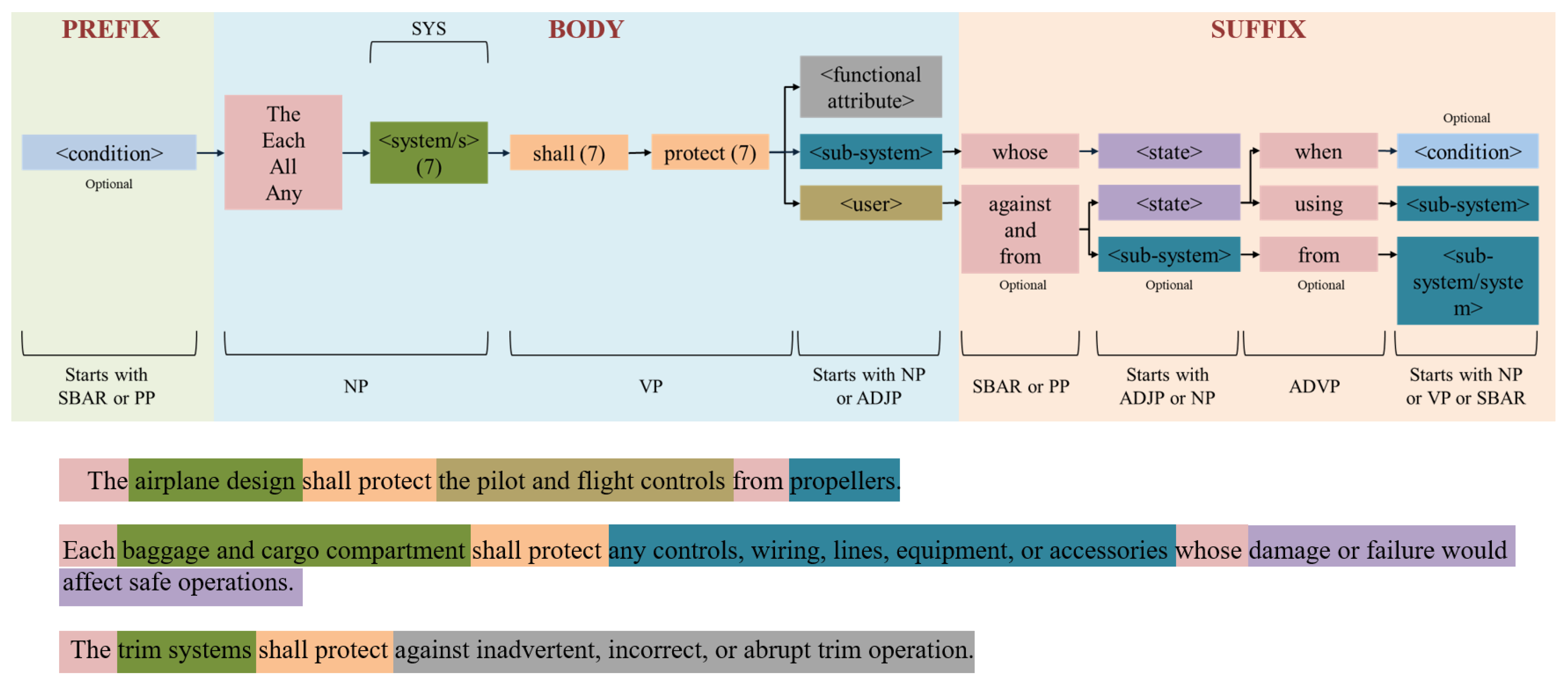

Figure 25.

The schematics of the third boilerplate for functional requirements, along with some examples that fit the boilerplate, are shown here. This boilerplate accounts for 7 of the 100 functional requirements (7%) used for this study and is tailored toward requirements that require the <system> to protect another <sub-system/system> or <user> against a certain <state> or another <sub-system/system>.

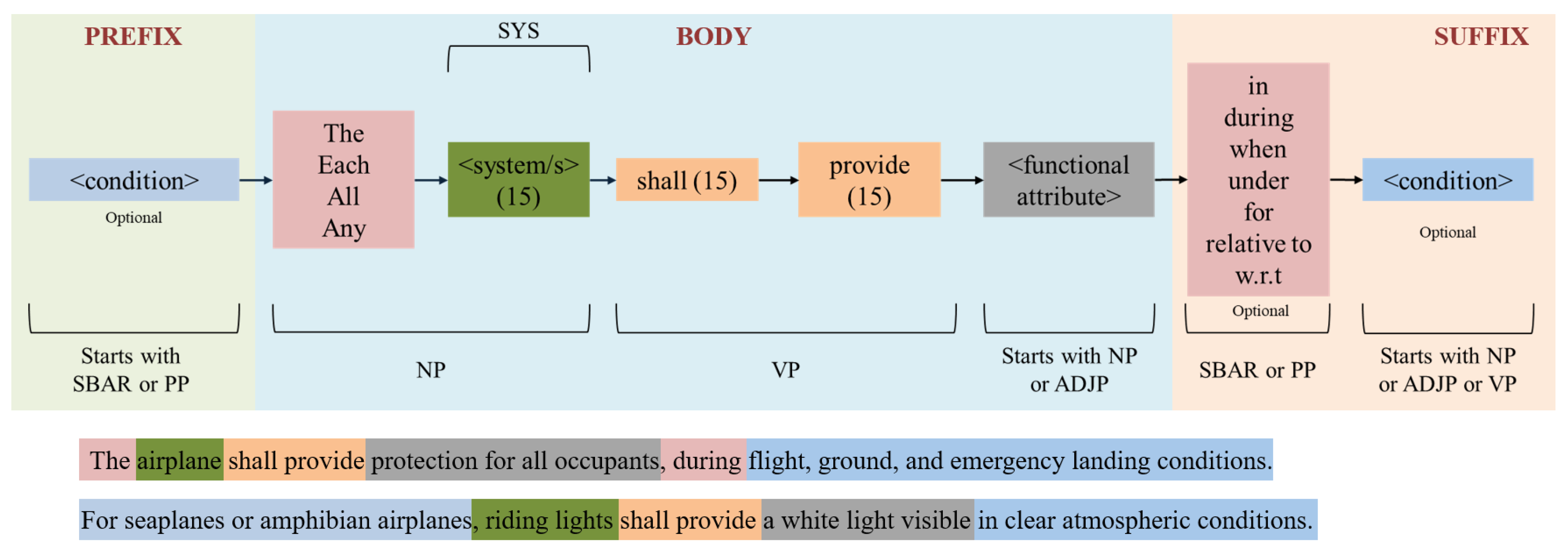

Figure 26.

The schematics of the fourth boilerplate for functional requirements, along with some examples that fit the boilerplate, are shown here. This boilerplate accounts for 15 of the 100 functional requirements (15%) used for this study and is tailored toward requirements that require the <system> to provide a certain <functional attribute> given a certain <condition>.

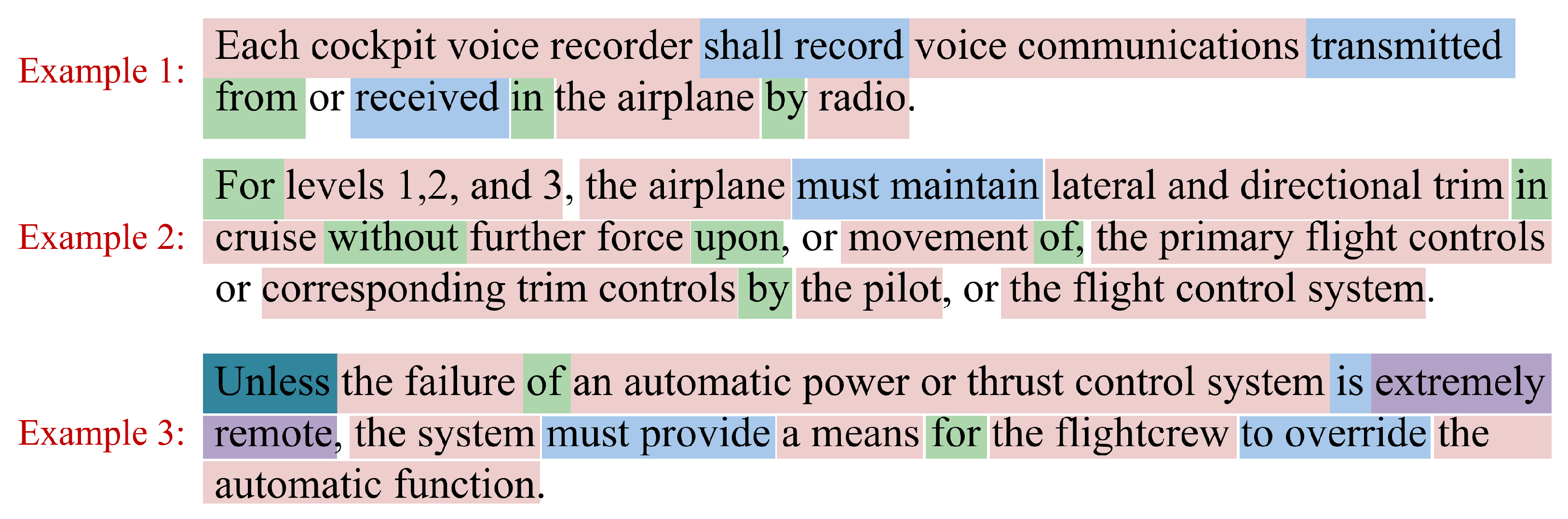

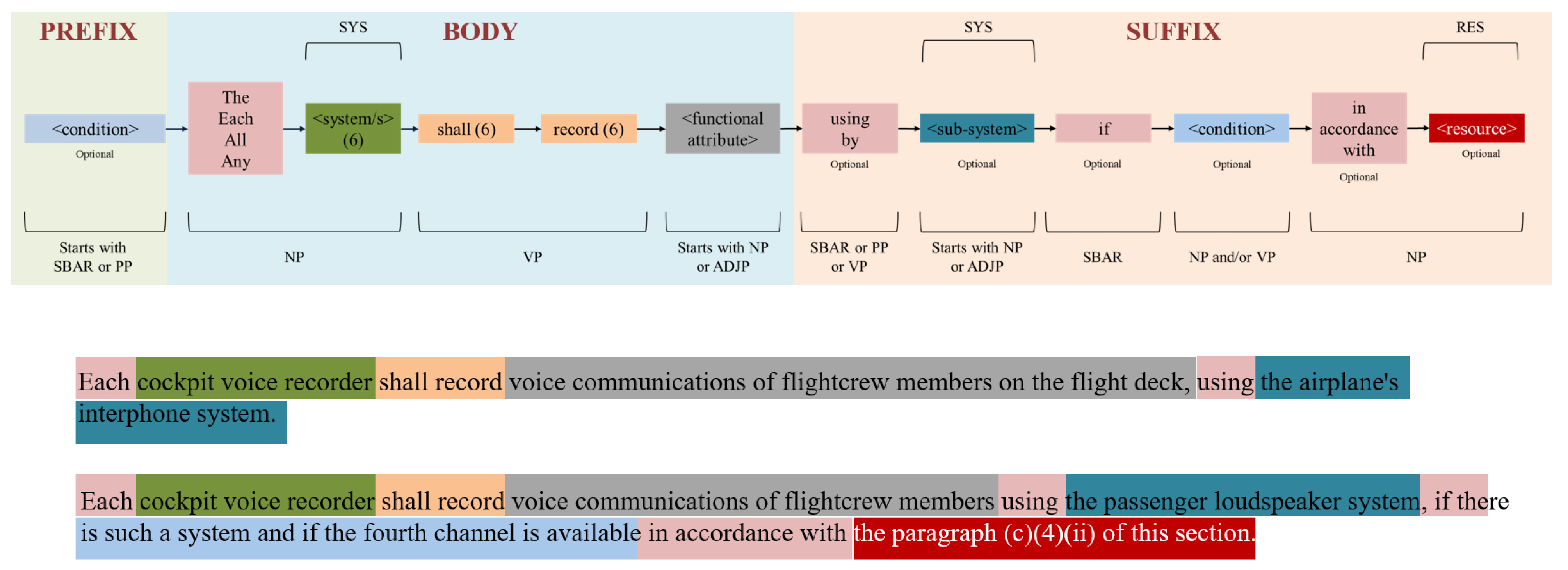

Figure 27.

The schematics of the fifth boilerplate for functional requirements, along with some examples that fit the boilerplate, are shown here. This boilerplate accounts for 6 of the 100 functional requirements (6%) used for this study and is specifically focused on requirements related to the cockpit voice recorder, since a total of six requirements in the entire dataset were about this particular system and its <functional attribute> given a certain <condition>.

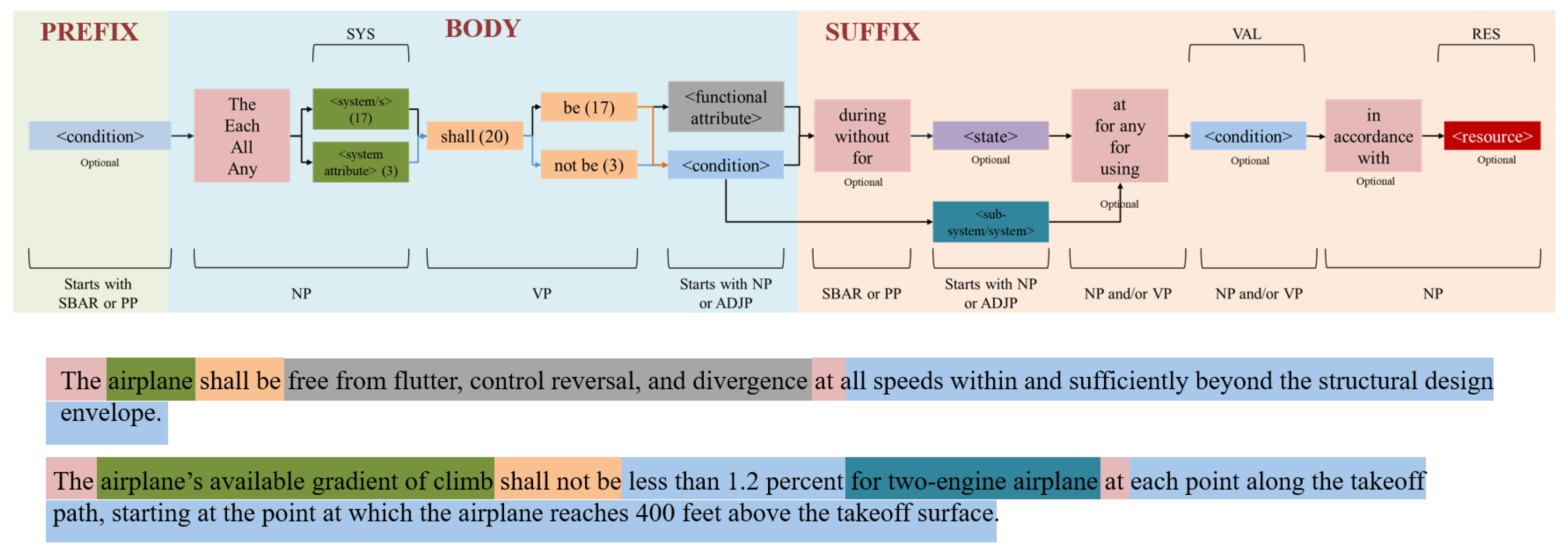

Figure 28.

The schematics of the first boilerplate for performance requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 20 of the 61 performance requirements (∼33%) used for this study. This particular boilerplate has the element <system attribute> which is unique as compared to the other boilerplate structures. In addition, this boilerplate caters to the performance requirements specifying a <system> or <system attribute> to satisfy a certain <condition> or have a certain <functional attribute>.

Figure 29.

The schematics of the second boilerplate for performance requirements along with some examples that fit the boilerplate are shown here. This boilerplate accounts for 12 of the 61 performance requirements (∼20%) used for this study. This boilerplate focuses on performance requirements that specify a <functional attribute> that a <system> should have or maintain given a certain <state> or <condition>.

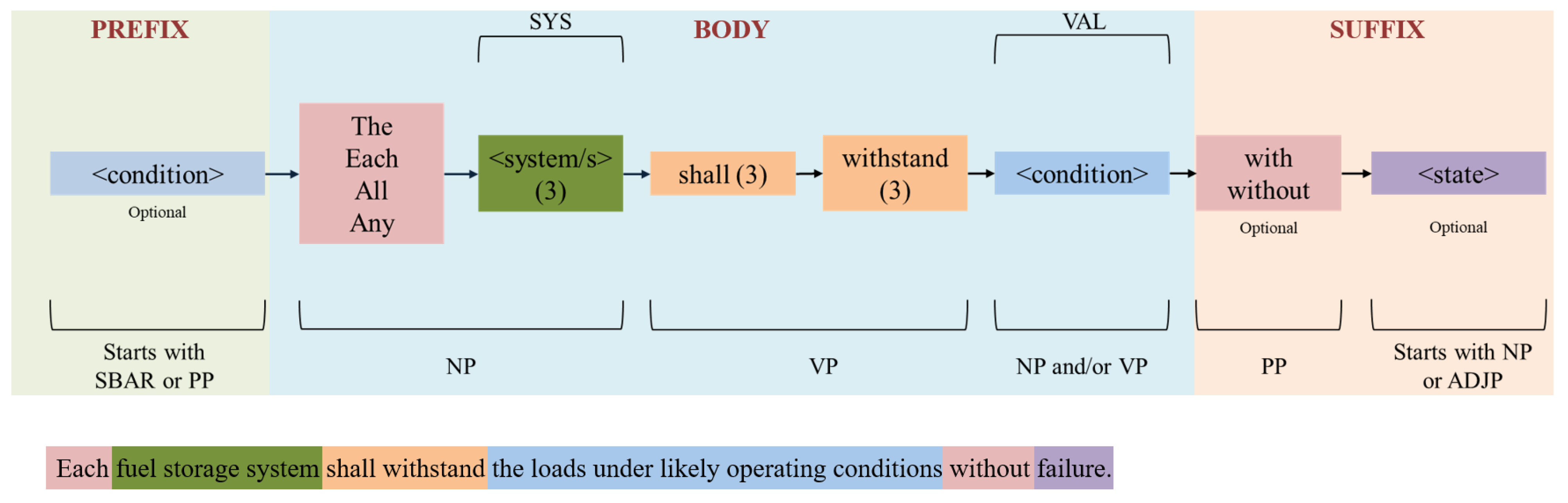

Figure 30.

The schematics of the third boilerplate for performance requirements, along with some examples that fit the boilerplate, are shown here. This boilerplate accounts for 3 of the 61 performance requirements (∼5%) used for this study and focuses on a <system> being able to withstand a certain <condition> with or without ending up in a certain <state>.

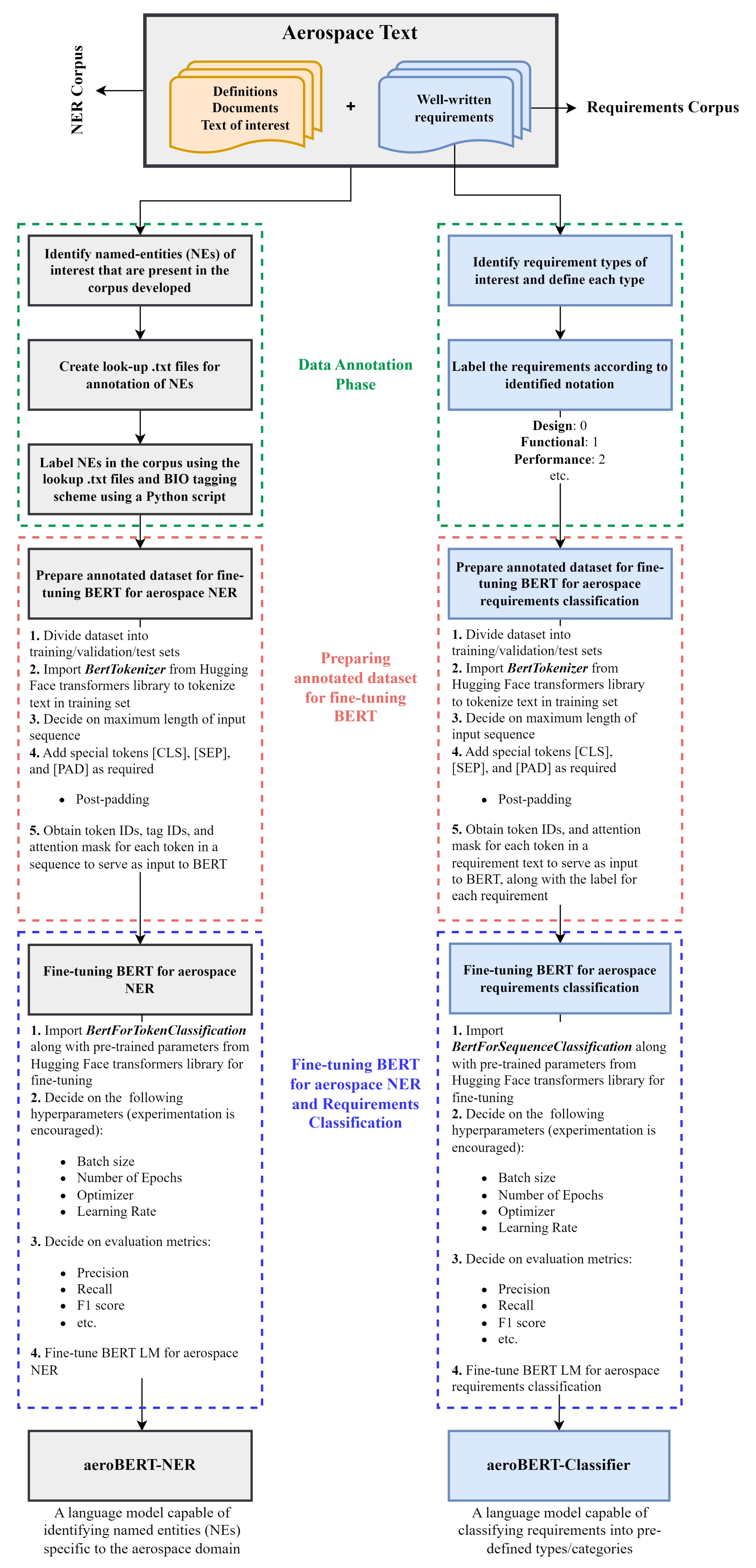

Figure 31.

Practitioner’s Guide to the creation of aeroBERT-NER and aeroBERT-Classifier. A zoomed-in version of this figure can be found in [53].

Table 1.

aeroBERT-NER is capable of identifying five types of named entities. The BIO tagging scheme was used for annotating the NER dataset [23].

| Category | NER Tags | Example |
|---|---|---|
| System | B-SYS, I-SYS | nozzle guide vanes, flight recorder, fuel system |
| Value | B-VAL, I-VAL | 5.6 percent, 41000 feet, 3 seconds |
| Date time | B-DATETIME, I-DATETIME | 2017, 2014, Sept. 19, 1994 |
| Organization | B-ORG, I-ORG | DOD, NASA, FAA |
| Resource | B-RES, I-RES | Section 25-341, Section 25-173 through 25-177, Part 25 subpart C |
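To make the BIO scheme concrete, the following minimal sketch groups token-level tags into entity spans. It is not the aeroBERT-NER implementation: the function name `decode_bio`, the example sentence, and its hand-assigned tags are illustrative assumptions that only reuse the tag names listed in Table 1.

```python
from typing import List, Optional, Tuple


def decode_bio(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BIO-tagged tokens into (entity_text, category) pairs."""
    entities: List[Tuple[str, str]] = []
    current_tokens: List[str] = []
    current_label: Optional[str] = None

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A B- tag always starts a new entity; flush any open one first.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [token], tag[2:]
        elif tag.startswith("I-") and current_label == tag[2:]:
            # An I- tag continues the open entity of the same category.
            current_tokens.append(token)
        else:
            # "O" (or an I- tag with no matching open entity) closes the
            # open entity; the current token itself is not kept.
            if current_tokens:
                entities.append((" ".join(current_tokens), current_label))
            current_tokens, current_label = [], None

    if current_tokens:
        entities.append((" ".join(current_tokens), current_label))
    return entities


# Hypothetical sentence with hand-assigned tags (not model output).
tokens = ["The", "fuel", "system", "shall", "operate", "up", "to", "41000", "feet", "."]
tags = ["O", "B-SYS", "I-SYS", "O", "O", "O", "O", "B-VAL", "I-VAL", "O"]
print(decode_bio(tokens, tags))
```

The B- prefix marks the first token of an entity and the I- prefix marks its continuation, which is what allows multi-word entities such as "fuel system" to be recovered as a single span.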

Table 2.

A subset of text chunks along with definitions and examples is shown here [25,46]. The blue text highlights the type of text chunk of interest. This is not an exhaustive list.

| Sentence Chunk | Definition |
|---|---|
| Noun Phrase (NP) | Consists of a noun and other words modifying the noun (determiners, adjectives, etc.) |
| | Example: The airplane design must protect the pilot and flight controls from propellers. |
| Verb Phrase (VP) | Consists of a verb and other words modifying the verb (adverbs, auxiliary verbs, prepositional phrases, etc.) |
| | Example: The airplane design must protect the pilot and flight controls from propellers. |
| Subordinate Clause (SBAR) | Provides more context to the main clause and is usually introduced by a subordinating conjunction (because, if, after, as, etc.) |
| | Example: There must be a means to extinguish any fire in the cabin such that the pilot, while seated, can easily access the fire extinguishing means. |
| Adverbial Clause (ADVP) | Modifies the main clause in the manner of an adverb and is typically preceded by a subordinating conjunction |
| | Example: The airplanes were grounded until the blizzard stopped. |
| Adjective Clause (ADJP) | Modifies a noun phrase and is typically preceded by a relative pronoun (that, which, why, where, when, who, etc.) |
| | Example: I can remember the time when air-taxis didn’t exist. |

Table 3.

Breakdown of the types of requirements used for this work. Three types of requirements were analyzed, namely design, functional, and performance.

| Requirement type | Count |
|---|---|
| Design | 149 |
| Functional | 99 |
| Performance | 62 |
| Total | 310 |

Table 4.

List of language models used to populate the columns of the requirement table.

| Column Name | Description | Method used to populate |
|---|---|---|
| Name | System (SYS named entity) that the requirement pertains to | aeroBERT-NER [23] |
| Text | Original requirement text | Original requirement text |
| Type of Requirement | Classification of the requirement as design, functional, or performance | aeroBERT-Classifier [24] |
| Property | Identified named entities belonging to RES, VAL, DATETIME, and ORG categories present in a requirement related to a particular system (SYS) | aeroBERT-NER [23] |
| Related to | Identified system named entity (SYS) that the requirement properties are associated with | aeroBERT-NER [23] |
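The column mapping above can be sketched as a small helper that assembles one row from a classifier label and a list of extracted entities. This is an illustrative sketch only: `build_requirement_row`, the example requirement, and its hand-assigned label and entities are hypothetical, and the choices of taking the first SYS entity as the Name and listing every SYS entity under Related to are assumptions rather than the authors' documented procedure.

```python
from typing import Dict, List, Tuple

# Entity categories that feed the "Property" column, per the table above.
PROPERTY_LABELS = ("RES", "VAL", "DATETIME", "ORG")


def build_requirement_row(
    text: str, requirement_type: str, entities: List[Tuple[str, str]]
) -> Dict[str, str]:
    """Assemble one requirement-table row from classifier and NER outputs."""
    systems = [entity for entity, label in entities if label == "SYS"]
    properties = [
        f"{entity} ({label})"
        for entity, label in entities
        if label in PROPERTY_LABELS
    ]
    return {
        # Assumption: the first SYS entity names the requirement.
        "Name": systems[0] if systems else "",
        "Text": text,
        "Type of Requirement": requirement_type,
        "Property": "; ".join(properties),
        # Assumption: every SYS entity is listed as a related system.
        "Related to": "; ".join(systems),
    }


# Hypothetical requirement; the label and entities are hand-assigned
# stand-ins for aeroBERT-Classifier and aeroBERT-NER outputs.
row = build_requirement_row(
    text="The fuel system must comply with Section 25-341.",
    requirement_type="design",
    entities=[("fuel system", "SYS"), ("Section 25-341", "RES")],
)
print(row)
```

A list of such dictionaries could then be written to a spreadsheet with any tabular export tool, which is the step that the requirement table is meant to shorten for MBSE practitioners.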

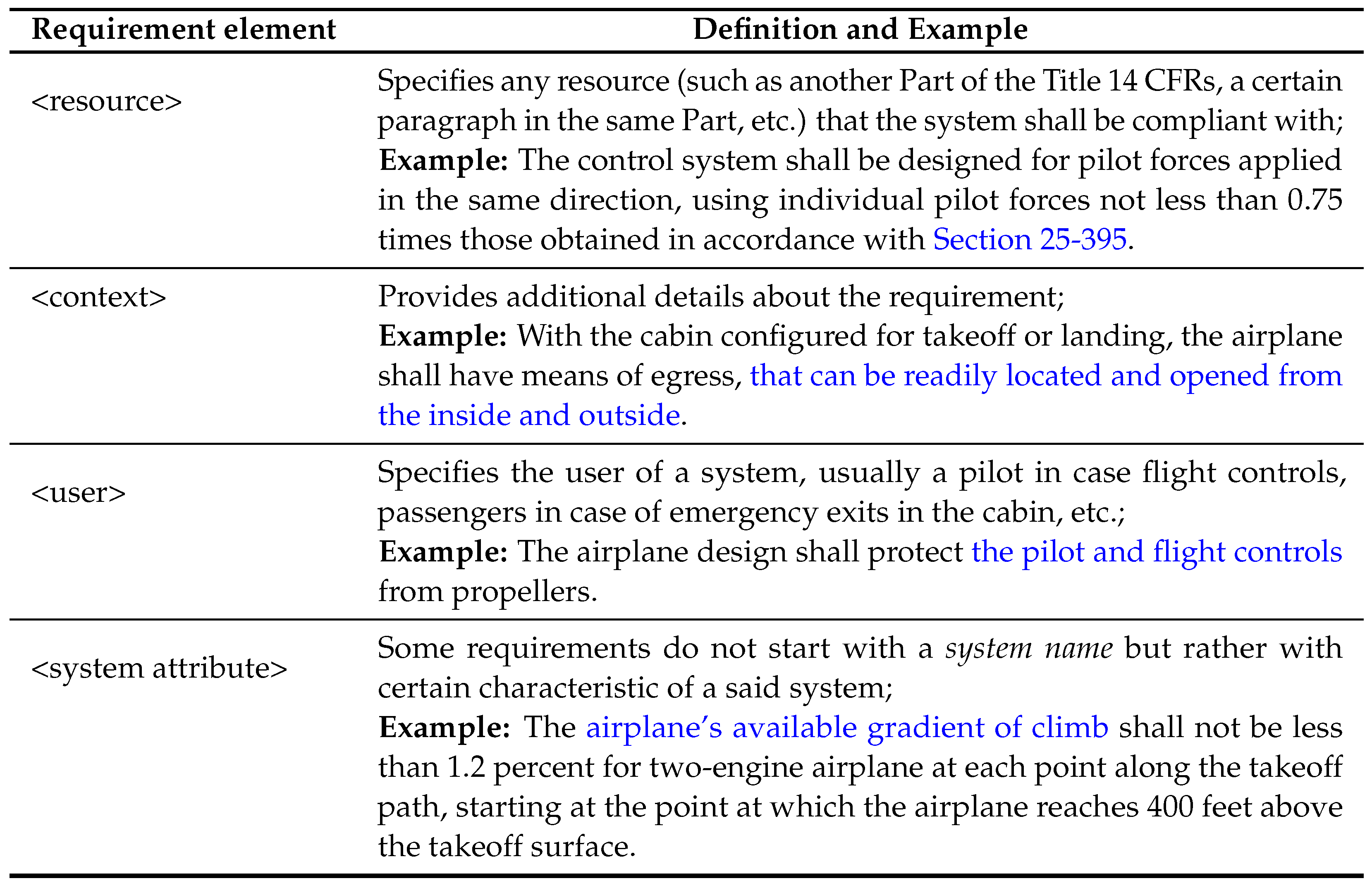

Table 5.

Different elements of an aerospace requirement. The blue text highlights a specific element of interest in a requirement [53].

Table 6.

Requirement table containing columns extracted from NL requirements using language models. This table can be used to aid the creation of a SysML requirement table [53].

Table 7.

Summary of the boilerplate template identification task. Two, five, and three boilerplate templates were identified for the design, functional, and performance requirements, respectively, that were used for this study.

| Requirement type | Count | Boilerplate Count | % of requirements covered |
|---|---|---|---|
| Design | 149 | 2 | ∼55% |
| Functional | 100 | 5 | 63% |
| Performance | 61 | 3 | ∼58% |

Table 8.

This table presents the results of a coverage analysis on the boilerplate templates that were proposed. According to the analysis, 67 design, 37 functional, and 26 performance requirements did not conform to the boilerplate templates that were developed as part of this work.
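As a quick arithmetic check, the coverage percentages can be recomputed from the per-type totals in Table 7 and the non-conforming counts quoted in the caption above. The sketch below is illustrative; the dictionary and function names are assumptions introduced here.

```python
from typing import Dict

# Requirement counts per type, as listed in Table 7.
totals = {"Design": 149, "Functional": 100, "Performance": 61}
# Requirements that did not conform to any boilerplate (Table 8 caption).
non_conforming = {"Design": 67, "Functional": 37, "Performance": 26}


def coverage(totals: Dict[str, int], non_conforming: Dict[str, int]) -> Dict[str, float]:
    """Return the percentage of requirements covered by boilerplates, per type."""
    return {
        req_type: 100.0 * (total - non_conforming[req_type]) / total
        for req_type, total in totals.items()
    }


for req_type, pct in coverage(totals, non_conforming).items():
    covered = totals[req_type] - non_conforming[req_type]
    print(f"{req_type}: {covered}/{totals[req_type]} = {pct:.1f}%")
```

With these inputs, design coverage is 82 of 149 (about 55.0%), functional coverage is 63 of 100 (63.0%), and performance coverage is 35 of 61 (about 57.4%), which is close to the ∼58% listed in Table 7.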
