Submitted: 09 May 2023

Posted: 10 May 2023

Abstract

Keywords:

1. Introduction

2. Statistical Description of Complex Networks

2.1. Fermionic graphs

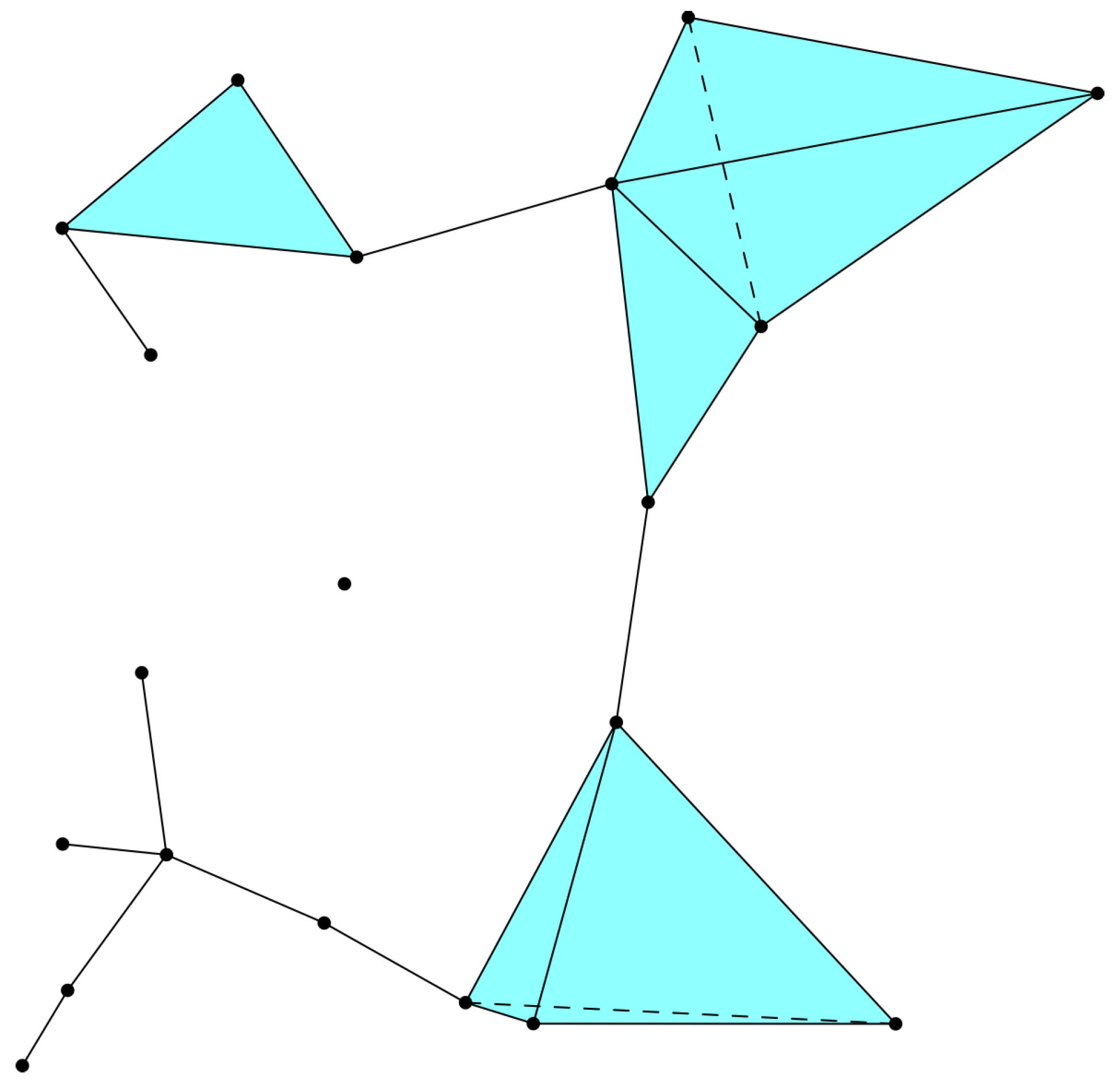

3. Building Discrete Spacetime

- Vertices or nodes of the network are "atoms" of spacetime.

- The distance between two neighboring atoms cannot be less than the fundamental length.

- Spacetime geometry is encoded in the nonassociative structure of the network.

- The interaction between atoms of spacetime, being nonlocal, defines the spacetime geometry.

- Time is quantized and the evolution of spacetime geometry is governed by a random/stochastic process.

- The spacetime dimension is a dynamical variable.
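To make the postulates above concrete, the following toy sketch treats the atoms of spacetime as labeled vertices of a simple graph and lets the links between them change in discrete time steps under a random process. It is only an illustration under stated assumptions: the function `evolve_network`, the flip probability `p_flip`, and the use of the mean degree as a tracked observable are hypothetical choices made here, not the dynamics or the dimension estimator of the model itself.

```python
import random
from itertools import combinations


def evolve_network(n_atoms, n_steps, p_flip, seed=0):
    """Toy Markov chain on simple graphs (illustrative assumption only).

    Vertices 0..n_atoms-1 play the role of spacetime atoms. At each
    discrete time step, every possible link between two atoms is
    switched (created if absent, removed if present) with probability
    p_flip. Links may join any pair of atoms, so the interaction is
    nonlocal in the label space.
    """
    rng = random.Random(seed)
    pairs = list(combinations(range(n_atoms), 2))
    links = set()          # start from a fully disconnected set of atoms
    mean_degree = []       # one entry per time step
    for _ in range(n_steps):
        for pair in pairs:
            if rng.random() < p_flip:
                if pair in links:
                    links.remove(pair)
                else:
                    links.add(pair)
        # Each link contributes to the degree of two atoms.
        mean_degree.append(2 * len(links) / n_atoms)
    return links, mean_degree


if __name__ == "__main__":
    links, mean_degree = evolve_network(n_atoms=20, n_steps=10, p_flip=0.1)
    print(len(links), mean_degree)
```

The mean degree is recorded only as a simple quantity that changes from step to step; any realistic treatment would replace the uniform flip rule with connection probabilities derived from the network's statistical description.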

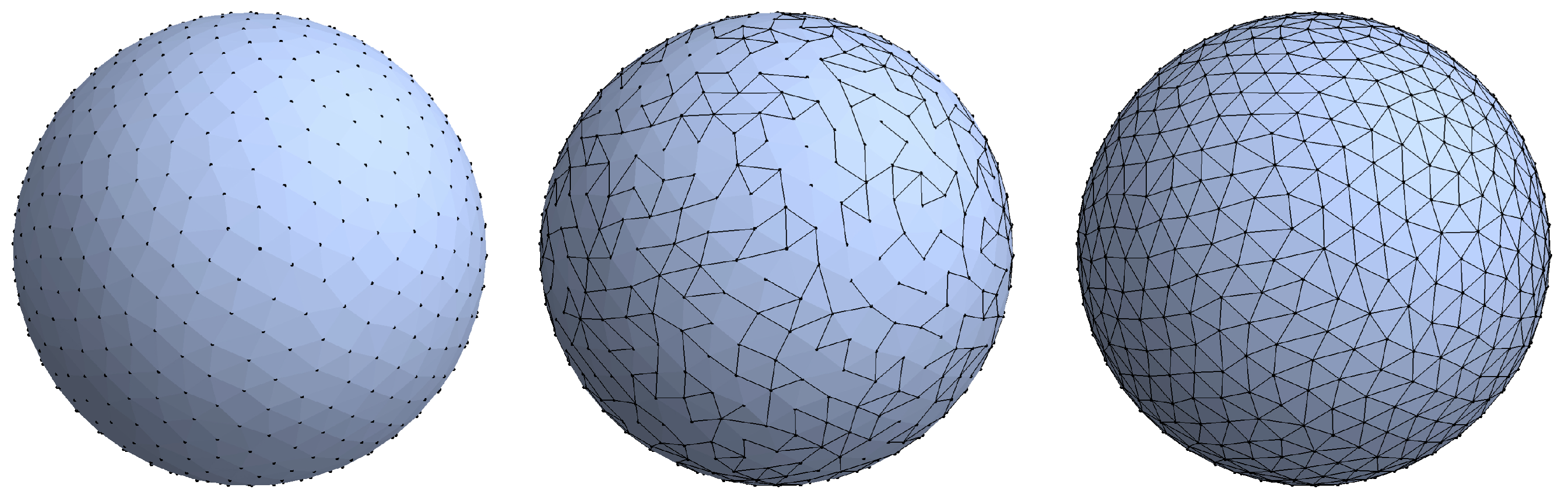

4. Spacetime as a Complex Network

4.1. Toy Homogeneous Model

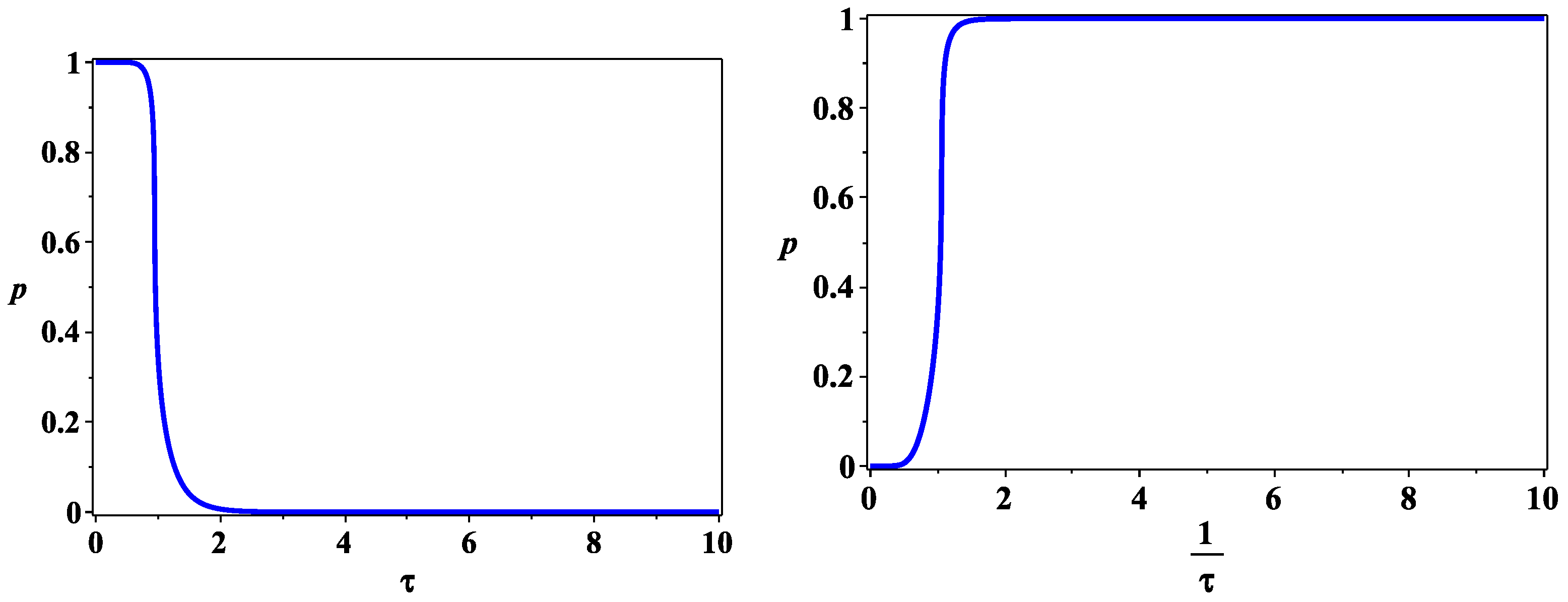

4.2. Spacetime Evolution

5. The Cosmological Constant Problem

6. Conclusion

Funding

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).