1. Introduction

Extensive research over the past decade has aimed to convert human brain signals to speech. Although experiments have shown that the excitation of the central motor cortex is elevated when visual and auditory cues are employed, the functional benefit of such a method is limited [1]. Imagined speech, sometimes called inner speech, is an excellent choice for decoding human thinking using the Brain-Computer Interface (BCI) concept. BCI systems are being developed to progressively allow paralyzed patients to interact directly with their environment. Brain signals usable with BCI systems can be recorded with a variety of common recording technologies, such as Magnetoencephalography (MEG), Electrocorticography (ECoG), functional Magnetic Resonance Imaging (fMRI), functional Near-Infrared Spectroscopy (fNIRS), and Electroencephalography (EEG). EEG headsets record the electrical activity of the human brain, and EEG-based BCI systems can convert this activity into commands. An EEG-based implementation is considered an effective way to help patients who have a high level of disability or are physically challenged control their supporting systems, such as wheelchairs, computers, or wearable devices [2], [3], [4], and [5]. Moreover, in our recent research [6] and [7], we achieved excellent accuracy in classifying EEG signals to control a drone and applied the Internet of Things (IoT) to design an Internet of Brain Controlled Things (IoBCT) system.

Applying soft-computing tools, such as Artificial Neural Networks (ANNs), genetic algorithms, and fuzzy logic, helps designers implement intelligent devices that fit the needs of physically challenged people [8]. Siswoyo et al. [9] suggested a three-layer neural network to map the input received from EEG sensors to three control commands. Fattouh et al. [10] proposed a BCI control system that distinguishes between four control commands in addition to the emotional status of the user. If the user is satisfied, the selected control command is executed; otherwise, the controller stops the execution and asks the user to choose another command. Decoding the brain waves and presenting them as an audio command is a more reliable way to avoid executing unwanted commands, particularly if the user can listen to the command translated from their brain and confirm or deny its execution. Deep learning algorithms offer a valuable solution for processing, analyzing, and classifying brainwaves [11]. Recent studies have concentrated on enabling both healthy individuals and patients to communicate their thoughts [12]. Vezard et al. [13] reached 71.6% accuracy in Binary Alertness States (BAS) estimation by applying the Common Spatial Pattern (CSP) for feature extraction. The methods in [14] and [15] reached EEG classification accuracies of merely 54.6% and 56.76%, respectively, by applying a multi-stage CSP for EEG feature extraction. In [16], researchers employed a deep learning algorithm, the Recurrent Neural Network (RNN), to process and classify the EEG dataset.

For BCI systems that are cheaper and easier to set up and maintain, it is preferable to have as few EEG channels as possible. There are two types of BCI systems: online systems, such as those described in [17] and [18], and offline systems, such as those described in [19]. In an offline system, the EEG data recorded from the participants are stored and processed later, whereas an online BCI system processes the data in real time, as in the case of a moving wheelchair. Recent research [20] revealed that inner speech differs at the phonological level. Wang et al. [21] demonstrated in their study, which was based on common spatial patterns and Event-Related Spectral Perturbation (ERSP), that the most significant EEG channels for classifying inner speech are those placed over Broca’s and Wernicke’s regions. Essentially, Wernicke’s region is responsible for ensuring that speech makes sense, while Broca’s region ensures that speech is produced fluently. Given that both Wernicke’s and Broca’s regions participate in inner speech, it is not easy to eliminate the effect of auditory activity from the EEG signal recorded during speech imagination. Indeed, some researchers have suggested that auditory and visual activities are essential in determining the brain response [22] and [23].

In most studies, the participants are directed to imagine speaking the commands only once. However, in [24] and [25], the participants had to imagine saying a specific command multiple times in the same recording. In [26], the commands “left,” “right,” “up,” and “down” were used. This choice of commands was motivated not only by their suitability for practical applications but also by their varied manners and places of articulation. Maximum classification accuracies of 49.77% and 85.57% were obtained, respectively, using the kernel Extreme Learning Machine (ELM) classification algorithm. A significant recent effort was published by Nature [27], where a 128-channel EEG headset was used to record inner speech-based brain activity. The acquired dataset consists of EEG signals from 10 participants recorded by 128 channels distributed over the scalp according to the ‘ABC’ layout of the manufacturer of the EEG headset used in that study. The participants were instructed to produce inner speech for four words, ‘up’, ‘down’, ‘left’, and ‘right’, based on a visual cue they saw in each trial. The cue was an arrow on a computer screen that rotated in the corresponding direction. This was repeated 220 times for each participant. However, since some participants reported fatigue, the final number of trials included in the dataset differed slightly between participants. The total number of trials was 2236, with an equal number of trials per class for all participants. The EEG signals included event markers and were already preprocessed. The preprocessing included a band-pass filter between 0.5 and 100 Hz, a notch filter at 50 Hz, artifact rejection using Independent Component Analysis (ICA), and down-sampling to 254 Hz. The Long Short-Term Memory (LSTM) algorithm was used in [28] and [29] to classify EEG signals. In [28], an EEG classification accuracy of 84% was achieved. In [29], an excellent accuracy of 98% was achieved in classifying EEG-based inner speech, but the researchers used an expensive EEG headset. High accuracy in classifying brain signals is considered essential in the design of future brain-controlled systems, which can be tested in real time or in simulation software such as V-Rep [30] to check for any unaccounted-for errors.

Most researchers have used high-cost EEG headsets to build BCI systems for imagined speech processing. Using an RNN on time-series input performed well in extracting features over time and achieved an 85% classification accuracy. Although innovative techniques based on conventional representations, such as Event-Related Potential (ERP) and Steady-State Visual Evoked Potential (SSVEP), have expanded the communication ability of patients with a high level of disability, these representations are restricted in their use by their dependence on a visual stimulus [31], [32]. Feasibility research on imagined speech in EEG-based BCI systems showed that imagined speech could be decoded using words with highly discriminative pronunciation [33]. Hence, BCI-based equipment can be controlled by processing brain signals and decoding the inner speech [34]. Extensive research has been conducted to develop BCI systems using inner speech and motor imagery [35]. One study investigating the feasibility of using EEG signals for imagined speech recognition reported promising results on imagined speech classification [36]. In addition, a similar study examined the feasibility of using EEG signals for inner speech recognition and of increasing the efficiency of such use [37].

In this paper, we used a low-cost, 8-channel EEG headset, the g.tec Unicorn Hybrid Black+ [38], with MATLAB 2023a to record the dataset and to decrease the computational complexity required later in the processing. Then, we decoded the identified signals into four audio commands: Up, Down, Left, and Right. These commands were performed as imagined speech by four healthy subjects (two females and two males) aged between 20 and 56 years. The EEG signals were recorded while the speech was being imagined. An imagined speech-based BCI model was designed using deep learning. Audio cues were used to stimulate the motor imagery of the participants in this study, and the participants responded with imagined speech commands. Pre-processing and filtering techniques were employed to simplify the recorded EEG dataset and speed up the learning process of the designed algorithm. Moreover, the Long Short-Term Memory (LSTM) technique was used to classify the imagined speech-based EEG dataset.

2. Materials and Methods

We considered research methodologies and equipment in order to optimize the system design, simulation, and verification.

2.1. Apparatus

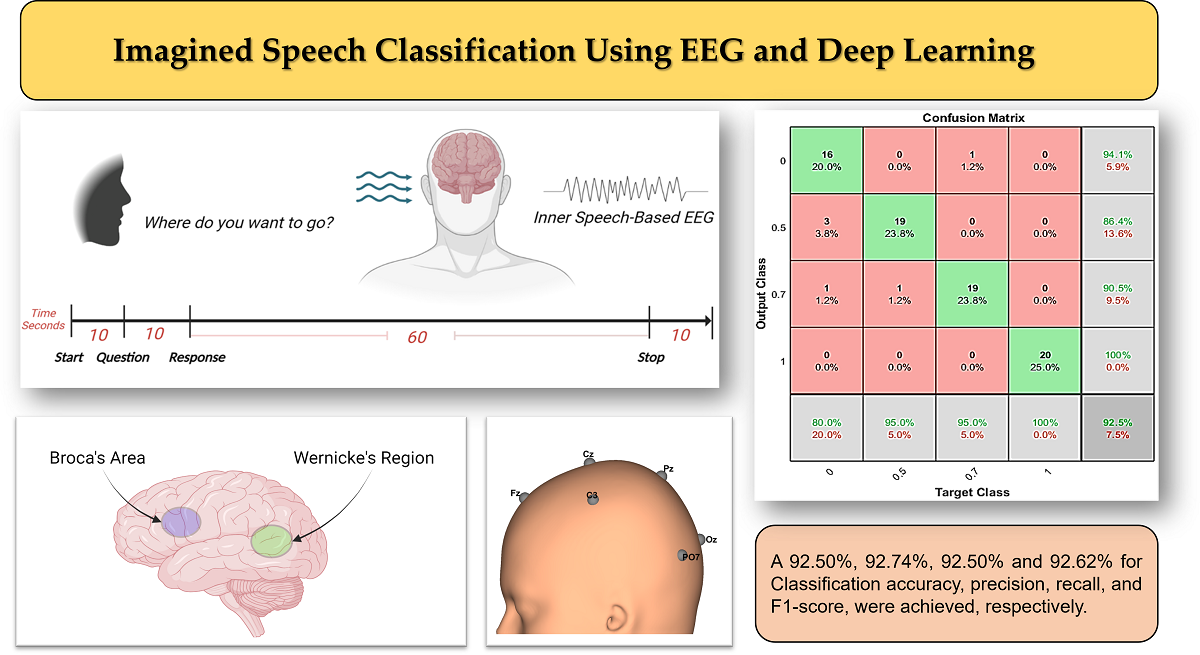

To optimize the system design, reduce its cost, and decrease the computational complexity, we used a low-cost EEG headset with a small number of channels, concentrating on placing the EEG sensors at the proper locations on the scalp to measure specific brain activities. The EEG signals were recorded using the g.tec Unicorn Hybrid Black+ headset, which has eight EEG electrodes sampled at 250 Hz. It records up to seventeen channels, including the 8-channel EEG, a 3-dimensional accelerometer, a gyroscope, a counter signal, a battery signal, and a validation signal. The EEG electrodes of this headset are made of a conductive rubber that allows recording dry or with gel. The eight EEG channels are recorded at the following positions: FZ, C3, CZ, C4, PZ, PO7, OZ, and PO8. The g.tec headset comes with standard EEG head caps of various sizes with customized electrode positions. A cap of appropriate size was chosen for each participant by measuring the head circumference with a soft measuring tape. All EEG electrodes were placed in the marked positions in the cap, and the gap between the scalp and the electrodes was filled with a conductive gel provided by the EEG headset manufacturer.
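The channel layout described above can be written down as a small data structure. The following is a minimal sketch only: the column order, the assumption that the gyroscope contributes three axes (so that 8 + 3 + 3 + 3 = 17), and the names `ALL_CHANNELS` and `eeg_only` are illustrative assumptions, not details taken from the headset documentation.

```python
# Assumed layout of one 17-value sample; the order is hypothetical.
EEG_CHANNELS = ["FZ", "C3", "CZ", "C4", "PZ", "PO7", "OZ", "PO8"]
AUX_CHANNELS = (
    ["ACC_X", "ACC_Y", "ACC_Z"]           # 3-dimensional accelerometer
    + ["GYR_X", "GYR_Y", "GYR_Z"]         # gyroscope, assumed to be 3-axis
    + ["COUNTER", "BATTERY", "VALID"]     # counter, battery, validation signals
)
ALL_CHANNELS = EEG_CHANNELS + AUX_CHANNELS
SAMPLING_RATE_HZ = 250


def eeg_only(sample):
    """Return the eight EEG values of one sample, keyed by electrode name."""
    if len(sample) != len(ALL_CHANNELS):
        raise ValueError(f"expected {len(ALL_CHANNELS)} values, got {len(sample)}")
    # Assumes the EEG values occupy the first eight columns.
    return dict(zip(EEG_CHANNELS, sample[:len(EEG_CHANNELS)]))
```

Keeping only the eight EEG columns at this stage is one way to keep the later processing small; the auxiliary signals could still be retained separately for artifact inspection.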

We followed the international 10-20 electrode placement system recommended by the American Clinical Neurophysiology Society [39]. The head cap was adjusted so that its electrodes were placed as close to Broca’s and Wernicke’s regions as possible, a placement that we assume yields good-quality imagined speech-based EEG signals.

Figure 1 shows the g.tec Unicorn Hybrid Black+ headset with the electrode map. The ground and reference electrodes were positioned behind the participant’s ears (on the mastoids) using disposable stickers.

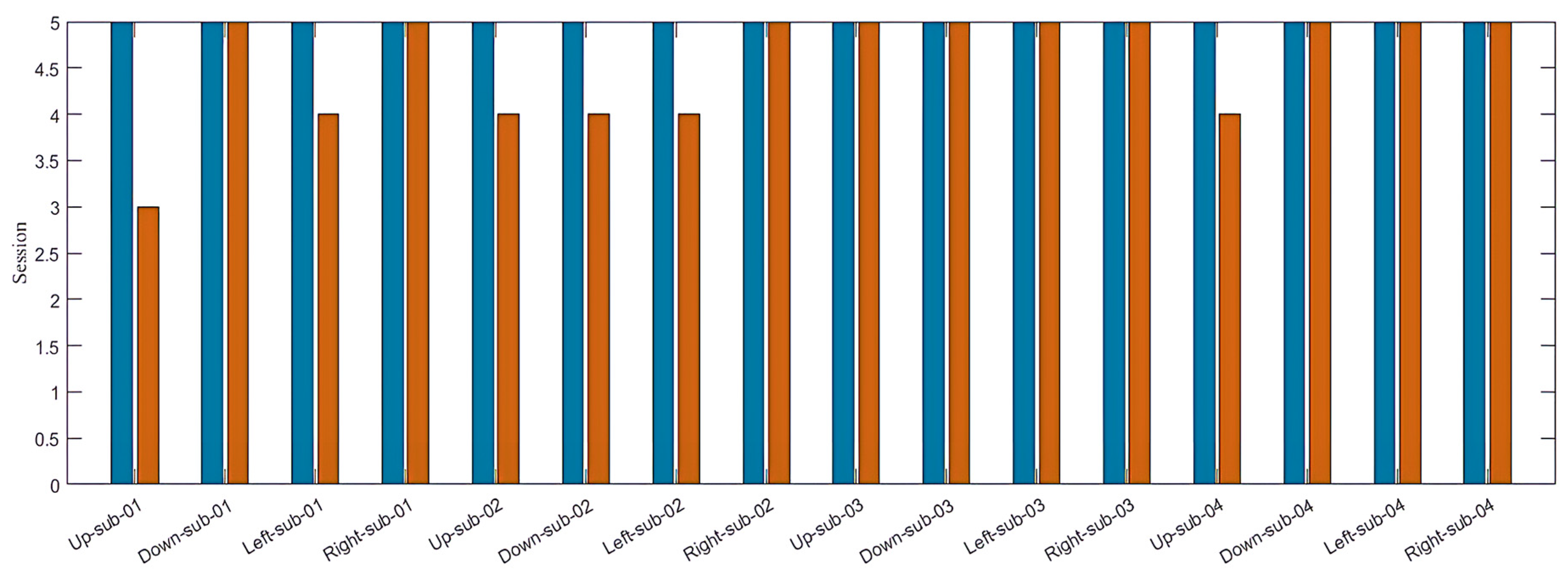

2.2. Procedure and Data Collection

The study was conducted in the Department of Electrical & Computer Engineering and Computer Science at Jackson State University. The experimental protocol was approved by the Institutional Review Board (IRB) at Jackson State University in the state of Mississippi [40]. Four healthy participants (two females and two males, aged 20 to 56) with no speech loss, no hearing loss, and no neurological or movement disorders participated in the experiment and gave their written informed consent. Each participant was a native English speaker. None of the participants had any previous BCI experience, and each contributed approximately one hour of recording. In this work, the participants are identified by the aliases “sub-01” through “sub-04”. The age, gender, and language information about the participating subjects is provided in Table 1.

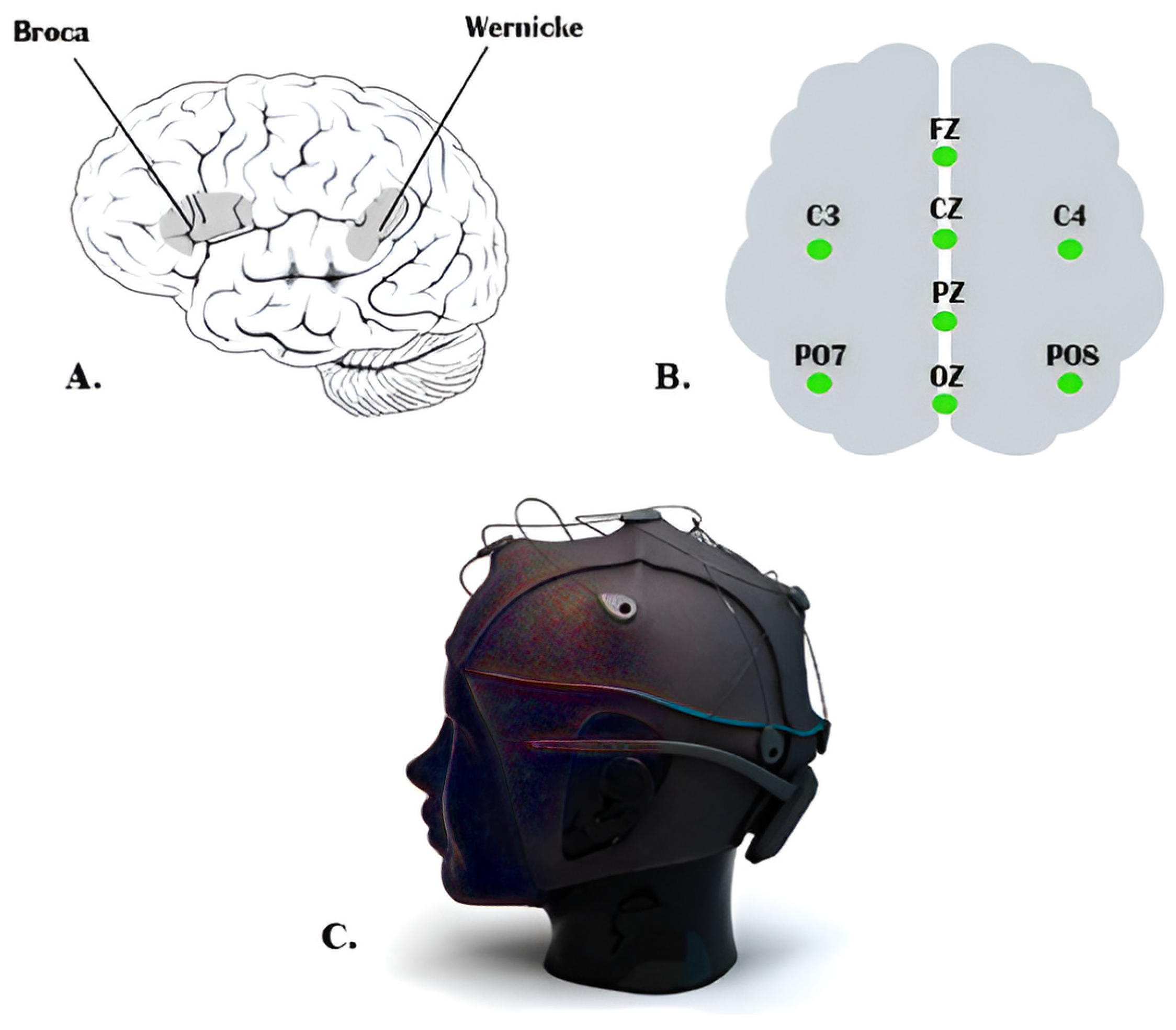

The experiment was designed to record the brain’s activity while a participant imagined speaking a specific command. When we talk to each other, our reactions are usually based on what we hear or sometimes on what we see. Therefore, we expected that the accuracy of classifying different commands could be improved by letting participants respond to an audio question. Each participant was seated in a comfortable chair facing another chair, from which a second participant announced the question as an audio cue. To familiarize the participants with the experimental procedures, all experiment steps were explained before the experiment date and before the consent form was signed. The procedures were explained again on the day of the experiment while the EEG headset and the external electrodes were being placed. The setup procedure took approximately 15 minutes. Four commands were chosen to be imagined as a response to the question: “Where do you want to go?” A hundred recordings were acquired for each command, with each participant completing 25 of them. Each recording lasted approximately 2 minutes and required two participants to be present. Unlike the procedure in [24] and [25], we did not set a specific number of repetitions for each command. After the recording began, the question was announced after 10 to 12 seconds as an audio cue by one of the other three participants. Ten seconds later, the participant began responding by repeatedly imagining saying the required command for 60 seconds, and the recording was stopped 10 seconds after that. In each recording, the participant responded by imagining saying one specified command out of the four. Since there were four commands, the complete EEG dataset comprised 400 recordings.

The 400 recordings were labeled and stored, and the EEG dataset was then imported into MATLAB for processing. The recordings from all participants were processed and classified together rather than separately per participant, so that we could evaluate how the designed algorithm performs on a dataset from different subjects. For each command, the first 25 recordings were from subject 1, the second 25 recordings were from subject 2, and so on. After the classification process, the results were labeled according to the order of the participants’ datasets.
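The ordering of recordings described above (100 per command, in blocks of 25 per subject) can be sketched as a simple index mapping. The function name `describe_recording` and the 0-based indexing are illustrative assumptions; the subject aliases and the command order follow the text.

```python
COMMANDS = ["Up", "Down", "Left", "Right"]
SUBJECTS = ["sub-01", "sub-02", "sub-03", "sub-04"]
RECORDINGS_PER_SUBJECT = 25  # recordings per subject for each command
RECORDINGS_PER_COMMAND = RECORDINGS_PER_SUBJECT * len(SUBJECTS)  # 100


def describe_recording(command, index):
    """Map a 0-based recording index within one command to its subject."""
    if command not in COMMANDS:
        raise ValueError(f"unknown command: {command}")
    if not 0 <= index < RECORDINGS_PER_COMMAND:
        raise IndexError(f"index {index} outside 0..{RECORDINGS_PER_COMMAND - 1}")
    subject = SUBJECTS[index // RECORDINGS_PER_SUBJECT]
    return {"command": command, "index": index, "subject": subject}


# One entry per recording in the pooled dataset: 4 commands x 100 recordings.
dataset_index = [
    describe_recording(command, i)
    for command in COMMANDS
    for i in range(RECORDINGS_PER_COMMAND)
]
```

A mapping of this kind makes it possible to relabel pooled classification results by participant afterwards, as described in the paragraph above.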

Figure 2 illustrates the recording and signal processing procedures.

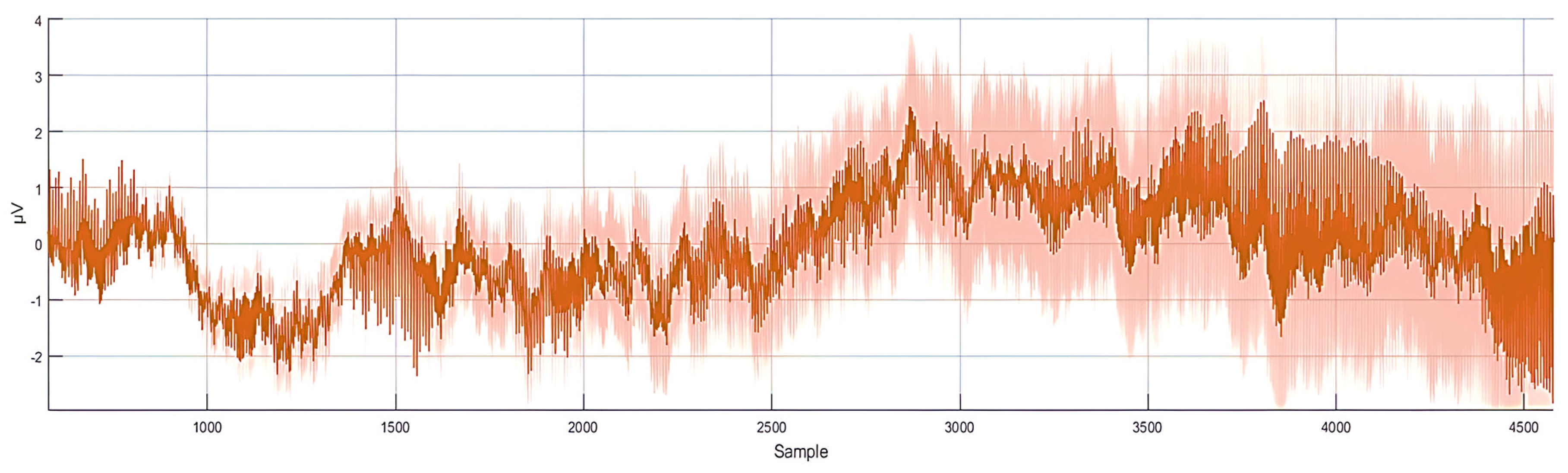

Figure 3 shows a sample of the recorded 8-channel raw EEG signals.

2.3. Data Pre-processing and Data Normalization

Preprocessing the raw EEG signals is essential to remove unwanted artifacts, such as those arising from facial muscle movement during recording, that could affect the accuracy of the classification process. The recorded EEG signals were analyzed in MATLAB, where a bandpass filter between 10 and 100 Hz was used to remove noise from the EEG. This filtering bandwidth preserves the frequency bands corresponding to the human brain EEG frequency range [41]. Then, normalization (vectorization) and feature extraction techniques were applied to simplify the dataset and reduce the computing power required to classify the four commands. The dataset was divided into 320 recordings for training and 80 recordings for testing (80% and 20%, respectively). The EEG dataset was acquired from eight EEG sensors, and it contains different frequency bands with different amplitude ranges. Thus, it was beneficial to normalize the EEG dataset to speed up the training process and obtain results that are as accurate as possible. The training and testing datasets were normalized by determining the mean and standard deviation of each of the eight input signals. The mean value was then subtracted from each signal in both the training and testing datasets, and the result was divided by the standard deviation as follows:

$$X_{\text{norm}} = \frac{X - \mu}{\sigma} \tag{1}$$

where (X) is the raw EEG signal, (µ) is the calculated mean value, and (σ) is the calculated standard deviation. After the normalization procedure, the dataset was ready for the training process.
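The per-channel normalization described above (subtract the mean, then divide by the standard deviation) can be sketched in a few lines. This is a minimal illustration rather than the MATLAB code used in the study; the function names are hypothetical, and the use of the population standard deviation (`pstdev`) is an assumption, since the text does not say which estimator was used.

```python
from statistics import fmean, pstdev


def zscore(channel):
    """Normalize one EEG channel: subtract its mean, divide by its std."""
    mu = fmean(channel)
    sigma = pstdev(channel)  # assumption: population standard deviation
    if sigma == 0:
        raise ValueError("constant channel cannot be normalized")
    return [(x - mu) / sigma for x in channel]


def normalize_recording(recording):
    """Apply z-score normalization to each channel of a recording.

    `recording` is a list of channels, each a list of samples.
    """
    return [zscore(channel) for channel in recording]


# Tiny two-channel example with very different amplitude ranges.
example = [[1.0, 2.0, 3.0, 4.0], [10.0, 10.0, 20.0, 20.0]]
normalized = normalize_recording(example)
```

After this step every channel has zero mean and unit standard deviation, which removes the amplitude differences between sensors mentioned above.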

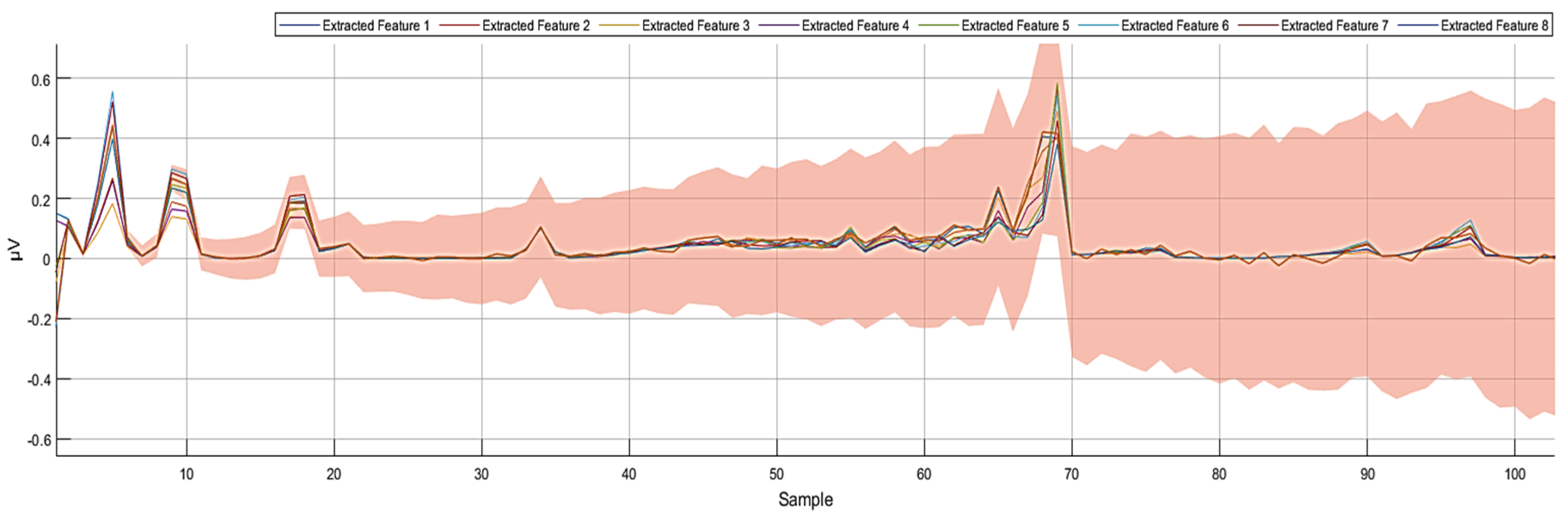

Figure 4 shows the normalized representation of the 8-channel raw EEG signals.

2.4. Feature Extraction

The wavelet scattering transform is a knowledge-based feature extraction technique that employs complex wavelets to balance the discrimination power and stability of the signal. This technique filters the signal through a cascade of wavelet decompositions, complex modulus operations, and low-pass filtering. The modulus and averaging of the wavelet coefficients yield stable features. The cascaded wavelet transformations are then used to recover the high-frequency information lost in the preceding modulus and averaging step. The obtained wavelet scattering coefficients retain translation invariance and local stability. In this feature extraction procedure, a series of signal filtering steps is applied to construct a feature vector representing the initial signal, and the process continues until the feature vector for the whole signal length is constructed. A feature matrix is constructed for the eight EEG signals. After the normalization stage, the dataset consists of one vector with many samples for each command in each of the 100 recordings. Training the deep learning algorithm on such a dataset is computationally expensive. For instance, in the first recording of the command Up, a (1×80480) vector was constructed after the normalization stage. After filtering the dataset for all 100 recordings and applying the wavelet scattering transformation, 8 features were extracted and the (1×80480) vector of normalized data was reduced to an (8×204) matrix for each recording.
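For readers unfamiliar with the cascade described above, the first three orders of scattering coefficients can be written in the standard form below. The notation is an assumption introduced here for illustration and does not appear in the original text: $x$ is one EEG signal, $\psi_{\lambda}$ is a complex wavelet at scale index $\lambda$, $\phi_J$ is the low-pass averaging filter, and $*$ denotes convolution.

```latex
\begin{aligned}
S_0 x(t) &= (x * \phi_J)(t), \\
S_1 x(t, \lambda_1) &= \big( \lvert x * \psi_{\lambda_1} \rvert * \phi_J \big)(t), \\
S_2 x(t, \lambda_1, \lambda_2) &= \big( \big\lvert \lvert x * \psi_{\lambda_1} \rvert * \psi_{\lambda_2} \big\rvert * \phi_J \big)(t).
\end{aligned}
```

Each order applies a wavelet filter, a modulus, and an averaging step; the second order re-filters the first-order modulus with a further wavelet, which is how the high-frequency content removed by averaging is recovered.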

Applying the wavelet scattering transformation to the entire recorded dataset (training and testing datasets) reduced the time spent in the learning process. Moreover, the wavelet scattering transformation provided a more organized and recognizable representation of the brain activity, which allowed the deep learning algorithm to distinguish between the four commands more accurately.

Figure 5 shows the eight extracted features after applying the wavelet scattering transformation.

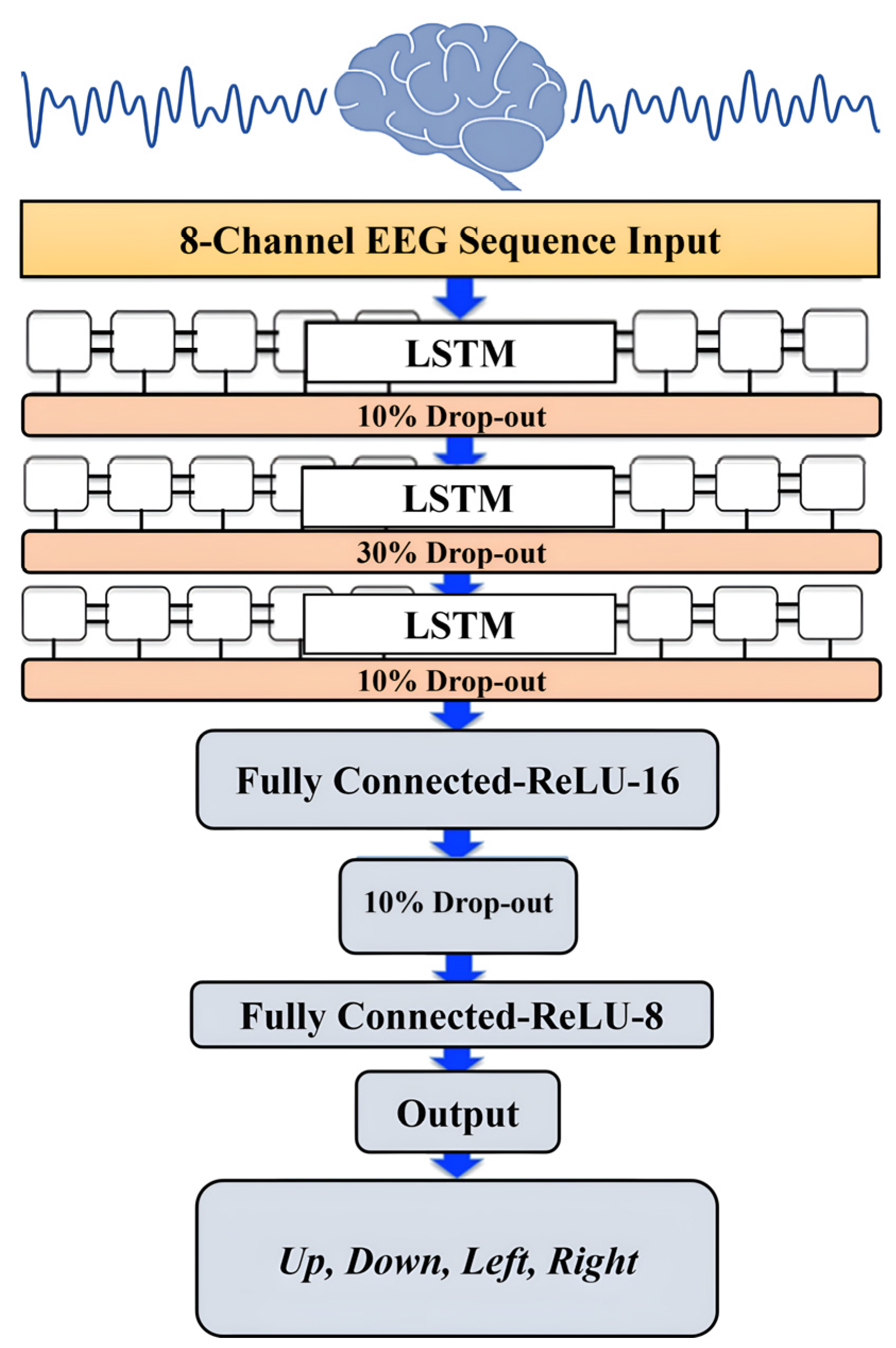

2.5. Data Classification

The normalization and feature extraction techniques were applied to both the training and testing datasets to enhance the classification accuracy of the designed BCI system. At this point, the processed datasets were ready to be used for deep learning. An LSTM is a type of RNN that can learn long-term dependencies among the time steps of a sequential dataset, which makes the LSTM architecture a good fit for classifying the sequential EEG dataset. On the input side, the LSTM was constructed with an input layer receiving sequence signals, which were eight time-series EEG signals. On the output side, the LSTM was constructed with a one-vector output layer with a Rectified Linear Unit (ReLU) activation function. The output values were set to (0, 0.5, 0.7, 1.0) for the four desired commands: Up, Down, Left, and Right, respectively. During the training of the LSTM model, we noticed that limiting the output values of the four classes to between zero and one made the learning faster and more efficient. Three LSTM layers with 80 hidden units were chosen, each followed by a dropout layer. To prevent or reduce overfitting in the training process, we used dropout ratios of 0.1, 0.3, and 0.1 for the three LSTM layers; that is, the dropout layers randomly set 10%, 30%, and 10% of the outputs of the first, second, and third LSTM layers to zero during training, respectively. L2 Regularization was also used to counter overfitting and to obtain a smoother training process. L2 Regularization is the most common regularization technique and is also known as weight decay or ridge regression.

The mathematical form of this regularization technique can be summarized in the following two equations:

$$\Omega(W) = \lVert W \rVert_2^2 = \sum_i \sum_j w_{ij}^2 \tag{2}$$

$$\hat{L}(W) = \frac{\lambda}{2}\,\Omega(W) + L(W) \tag{3}$$

During L2 Regularization, the loss function of the neural network is extended by a so-called regularization term, denoted Ω in (2). W is the weight matrix, λ is the regularization coefficient, and Ω(W) is the regularization function. The regularization term Ω is defined as the squared L2 norm of the weight matrices (W), which is the summation of all squared weight values of a weight matrix. The regularization term is weighted by the scalar λ divided by two and added to the regular loss function L(W) in (3). The scalar λ, sometimes called the regularization coefficient (its initial value was set to 0.0001), is a supplementary hyperparameter introduced into the neural network that determines how much the model is regularized.

After the output of the LSTM hidden units, two fully connected layers and one dropout layer with a 0.1 dropout ratio were added. These two fully connected layers consisted of 16 and 8 nodes and used ReLU activation functions; they computed the weighted sum of their inputs and passed the output to the final output layer. The network ended with a SoftMax output layer whose number of class labels equals the desired four outputs.
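The L2-regularized loss described above, in which the sum of squared weights is scaled by λ/2 and added to the regular loss, can be sketched as follows. This is an illustrative sketch, not the training code used in the study; the function names and the nested-list representation of the weight matrices are assumptions.

```python
def l2_penalty(weight_matrices):
    """Omega(W): sum of all squared weights over all weight matrices."""
    return sum(w * w for matrix in weight_matrices for row in matrix for w in row)


def regularized_loss(data_loss, weight_matrices, lam=1e-4):
    """Regular loss L(W) plus (lambda / 2) * Omega(W)."""
    return data_loss + 0.5 * lam * l2_penalty(weight_matrices)


# Example with a single hypothetical 2x2 weight matrix.
weights = [[[1.0, -2.0], [0.5, 0.0]]]
penalty = l2_penalty(weights)            # 1 + 4 + 0.25 + 0 = 5.25
total = regularized_loss(0.3, weights)   # 0.3 + 0.5 * 0.0001 * 5.25
```

With λ = 0.0001, as stated in the text, the penalty changes the loss only slightly, but its gradient continuously pulls large weights toward zero, which is why the technique is also called weight decay.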

Figure 6 illustrates the architecture of the designed LSTM model.
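To give a sense of the size of the described network, the sketch below counts its learnable parameters. It rests on several assumptions that the text does not confirm: 8 input features per time step, three stacked LSTM layers of 80 hidden units each, one bias vector per LSTM gate, fully connected layers of 16 and 8 nodes, and a final 4-class layer feeding the SoftMax. The helper names are hypothetical.

```python
def lstm_params(input_size, hidden_size):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)


def dense_params(input_size, output_size):
    """Weight matrix plus bias vector of a fully connected layer."""
    return input_size * output_size + output_size


# Assumed layer stack; dropout and SoftMax layers add no parameters.
LAYERS = [
    ("lstm_1", lstm_params(8, 80)),
    ("lstm_2", lstm_params(80, 80)),
    ("lstm_3", lstm_params(80, 80)),
    ("fc_16", dense_params(80, 16)),
    ("fc_8", dense_params(16, 8)),
    ("fc_out", dense_params(8, 4)),
]
total_params = sum(count for _, count in LAYERS)

for name, count in LAYERS:
    print(f"{name:8s} {count:7d}")
print(f"{'total':8s} {total_params:7d}")
```

Under these assumptions the three LSTM layers account for nearly all of the parameters, so the number of hidden units is the main lever on model size.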

3. Results

Using the eight-channel EEG headset enabled us to design a less compute-intensive algorithm to distinguish between four imagined speech commands. Moreover, the wavelet scattering transformation simplified the EEG dataset by extracting features from each channel and reducing the dimension of the EEG feature matrix. The feature matrix was calculated for each recording of the four imagined speech commands. Using the feature matrices to train the LSTM model improved the learning process and reduced its execution time. Using auditory stimuli, in the form of a question asked to the participants, showed that higher accuracy could be achieved in an offline BCI system for classifying imagined speech, and we obtained better results than those achieved in [42], where mixed visual and auditory stimuli were used. An accuracy of 92.50% was achieved when testing the resulting LSTM model on the remaining 20% of the normalized and filtered EEG dataset. The results were achieved using the Adaptive Moment Estimation (Adam) optimizer, a method that computes an adaptive learning rate for each of the learnable parameters of the LSTM-RNN model. We achieved 92.50% after training the LSTM-RNN model on 80% of the recorded EEG dataset with a maximum of 800 epochs and a mini-batch size of 40.
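The way Adam adapts the step size for each parameter can be sketched with a single update step. This is a generic illustration of the optimizer, not the configuration used in the study: the learning rate and the decay constants below are the commonly used defaults and are assumptions, as are the function and variable names.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a list of parameters; t is the 1-based step count."""
    # Exponential moving averages of the gradient and the squared gradient.
    m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]
    v = [beta2 * vi + (1 - beta2) * g * g for vi, g in zip(v, grad)]
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = [mi / (1 - beta1 ** t) for mi in m]
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    # Each parameter is scaled by its own gradient history.
    theta = [
        p - lr * mh / (math.sqrt(vh) + eps)
        for p, mh, vh in zip(theta, m_hat, v_hat)
    ]
    return theta, m, v


theta, m, v = adam_step([1.0, -1.0], [0.5, -2.0], [0.0, 0.0], [0.0, 0.0], t=1)

# With 320 training recordings and a mini-batch size of 40, one epoch is
# 8 updates, so 800 epochs correspond to at most 6400 updates.
max_updates = (320 // 40) * 800
```

On the first step both parameters move by almost exactly the learning rate, even though their gradients differ by a factor of four, which illustrates the per-parameter scaling.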

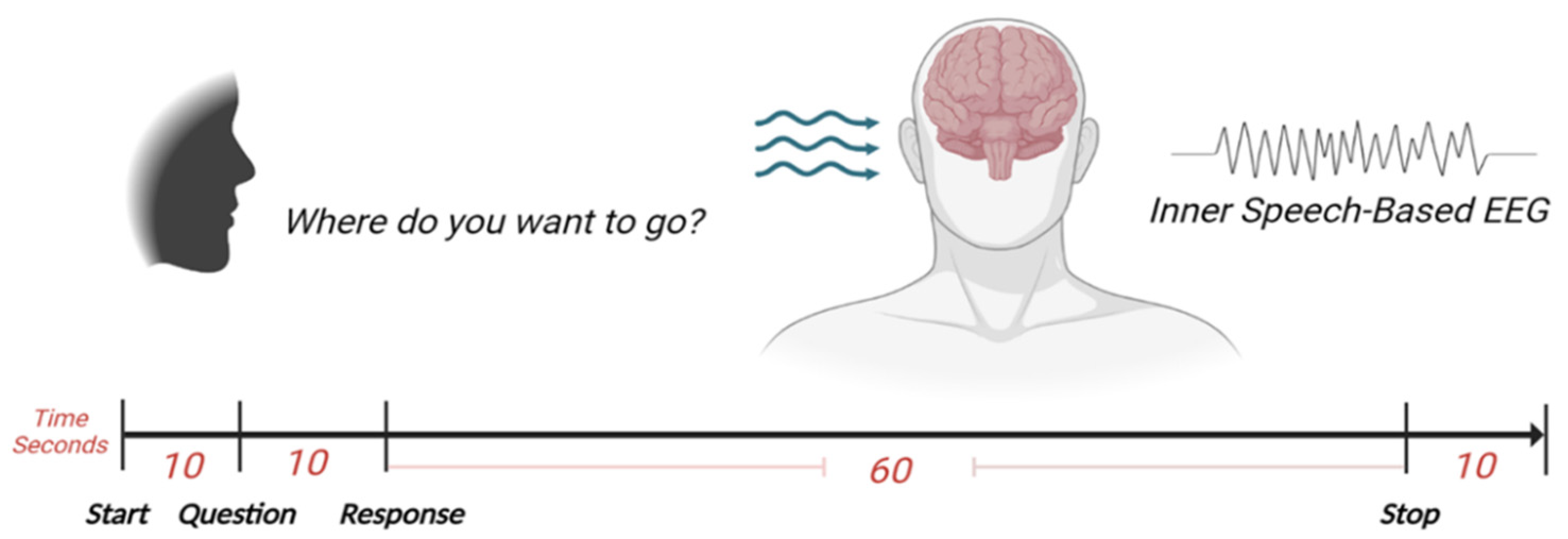

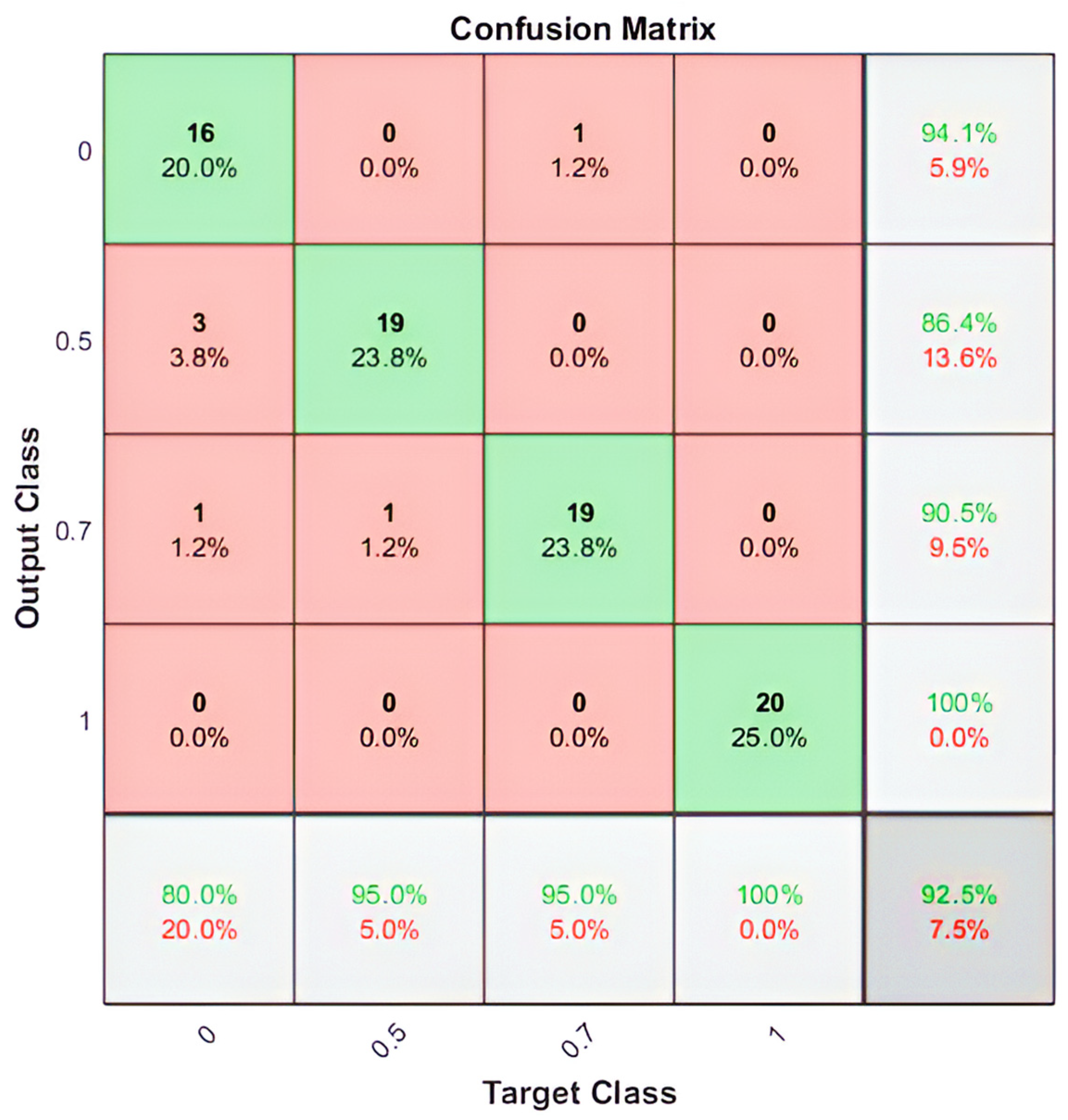

Figure 7 illustrates the classification accuracy of the designed LSTM model.

By employing the LSTM model, we could distinguish between four different imagined speech-based commands. For each command, 20 recordings were used in the testing stage, and the nominal values (0, 0.5, 0.7, and 1.0) were assigned to the commands as output values, respectively. For the output value 0, which represents the command Up, 16 of the 20 expected outputs were predicted correctly, a classification accuracy of 80%. For the output values 0.5 and 0.7, which represent the commands Down and Left, 19 of the 20 expected outputs were predicted correctly in each case, a classification accuracy of 95%. For the output value 1.0, which represents the command Right, all 20 expected outputs were predicted correctly, a classification accuracy of 100%. We calculated the 92.50% overall classification accuracy by averaging the accuracies (80%, 95%, 95%, 100%) of the four imagined speech commands.
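The arithmetic behind the overall accuracy can be checked directly from the per-command counts reported above. The variable names are illustrative.

```python
# Correct predictions out of 20 test recordings per command, as reported.
CORRECT = {"Up": 16, "Down": 19, "Left": 19, "Right": 20}
TESTS_PER_COMMAND = 20

per_command = {cmd: n / TESTS_PER_COMMAND for cmd, n in CORRECT.items()}

# Mean of the four per-command accuracies.
mean_accuracy = sum(per_command.values()) / len(per_command)

# Pooled accuracy over all 80 test recordings.
pooled_accuracy = sum(CORRECT.values()) / (TESTS_PER_COMMAND * len(CORRECT))

for cmd, acc in per_command.items():
    print(f"{cmd:5s} {acc:.2%}")
print(f"mean   {mean_accuracy:.2%}")
print(f"pooled {pooled_accuracy:.2%}")
```

Because every command has the same number of test recordings, the mean of the per-command accuracies and the pooled accuracy (74 correct out of 80) coincide at 92.50%.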

Figure 8 illustrates the number and percentage of correct classifications by the trained LSTM network.

To better evaluate the performance of the trained LSTM model, the classified dataset was categorized into true positives, true negatives, false positives, and false negatives. True positives and true negatives are the instances that were classified correctly, whereas false positives and false negatives are the instances that were misclassified. The standard metrics for classification are accuracy, precision, recall, and F-score. Recall, sometimes called sensitivity, is the ratio of true positives to the total number of true positives and false negatives. Precision is the ratio of true positives to the total number of true positives and false positives. The F-score is the harmonic mean of recall and precision. Using the above confusion matrix, we calculated these three metrics and obtained 92.74%, 92.50%, and 92.62% for precision, recall, and F1-score, respectively.
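The three metrics can be written as short functions. In the sketch below, the recall example uses the counts reported for the command Up (16 correct out of 20), and the F1 example combines the reported overall precision and recall. Computing F1 from these two overall values is an assumption about how the figure was obtained; averaging per-command F1 scores could give a slightly different number.

```python
def precision(tp, fp):
    """True positives over all predicted positives."""
    return tp / (tp + fp)


def recall(tp, fn):
    """True positives over all actual positives (sensitivity)."""
    return tp / (tp + fn)


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# Command Up: 16 of 20 test recordings recognized, so 4 false negatives.
recall_up = recall(tp=16, fn=4)

# F1 from the reported overall precision (92.74%) and recall (92.50%).
f1_overall = f1_score(0.9274, 0.9250)
print(f"recall (Up) = {recall_up:.2%}, F1 = {f1_overall:.2%}")
```

The harmonic mean of the reported precision and recall rounds to 92.62%, which agrees with the F1-score stated above.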

4. Discussion

Although the overall accuracy of classifying imagined speech with the designed BCI system is considered excellent, one of the commands, Up, showed a lower accuracy than the other three and still needs improvement. Each of the four commands was imagined in 100 recordings. Unlike the recording scenario in [24] and [25], we did not set a specific number of repetitions for each command. Rather, the participants were instructed to keep repeating each command for 60 seconds. The command Up was always the first to be imagined, which may explain its lower accuracy. One reason might be that the participant’s brain adapted to the speech-imagining process gradually: at the beginning of the recording session, a participant might not have been focused enough to produce a good EEG signal while imagining saying a command. Another reason might be that the time allowed before presenting the question was not enough to generate the best EEG signal, especially at the beginning of the session, when the question was announced shortly after the recording started. Another limitation is that all participants were healthy subjects, and none had any difficulty with normal speech or language production.

Although the recorded EEG dataset has a potential flaw, the resulting LSTM imagined speech classification model still performs excellently and can be used to decode brain thoughts. We used audio cues to stimulate the brain by asking the participants a question and letting them imagine the response, unlike [27] and [29], where visual cues were used. The resulting LSTM model can be converted to C++ or Python code using MATLAB code generation and uploaded to a microcontroller to be tested in real time.

5. Conclusions

A BCI system is particularly beneficial if it can be converted into an operational and practical real-time system. Although the offline BCI approach allows researchers to use computationally expensive algorithms for processing EEG datasets, it is applicable only in a research environment. This research provided insights into using a low-cost EEG headset with a low number of channels to develop a reliable BCI system with minimal computing requirements for the learning process. We obtained the resulting imagined speech classification model by employing the LSTM neural architecture in the learning and classification process. We placed the EEG sensors on carefully selected spots on the scalp to demonstrate that classifiable EEG data can be obtained with fewer sensors. With the wavelet scattering transformation, the classified EEG signals showed that it is possible to build a reliable BCI that translates brain thoughts to speech and helps physically challenged people improve their quality of life. All testing and training stages were implemented offline, without any online testing or execution. Future work is planned to implement and test an online BCI system using MATLAB/Simulink and the g.tec Unicorn Hybrid Black+ headset.

6. Future Work

Further deep learning and filtration techniques will be implemented on the EEG dataset to improve the classification accuracy. We obtained a promising preliminary result with the Support Vector Machine (SVM) classification model. Online testing for the resulting classification model is planned to be implemented using MATLAB Simulink for better evaluating the classification performance in real-time.

Author Contributions

Conceptualization, M.M.A.; methodology, M.M.A., K.H.A. and W.L.W.; software, M.M.A.; validation, M.M.A. and W.L.W.; formal analysis, M.M.A., K.H.A. and W.L.W.; investigation, K.H.A., M.M.A. and W.L.W.; resources, K.H.A. and M.M.A.; data curation, K.H.A. and M.M.A.; writing–original draft preparation, K.H.A., M.M.A. and W.L.W.; writing–review and editing, K.H.A., M.M.A. and W.L.W.; visualization, K.H.A., M.M.A. and W.L.W.; supervision, K.H.A.; project administration, K.H.A.; funding acquisition, K.H.A., M.M.A. and W.L.W. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of Ethics Committee of Jackson State University (Approval no.: 0067-23).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Acknowledgments

The authors acknowledge the contribution of the participants and the support of the Department of Electrical & Computer Engineering and Computer Science at Jackson State University (JSU).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Panachakel, J. T., Vinayak, N. N., Nunna, M., Ramakrishnan, A. G., and Sharma, K. (2020b). “An improved EEG acquisition protocol facilitates localized neural activation,” in Advances in Communication Systems and Networks (Springer), 267–281. [CrossRef]

- Abdulkader, S. N., Atia, A., and Mostafa, M.-S. M. (2015). Brain-computer interfacing: applications and challenges. Egypt. Inform. J. 16, 213–230. [CrossRef]

- Mokhles M. Abdulghani, Wilbur L. Walters, and Khalid H. Abed, "EEG Classifier Using Wavelet Scattering Transform-Based Features and Deep Learning for Wheelchair Steering," The 2022 International Conference on Computational Science and Computational Intelligence – Artificial Intelligence (CSCI'22–AI), IEEE Conference Publishing Services (CPS), Las Vegas, Nevada, December 14-16, 2022.

- K. M. Al-Aubidy and M. M. Abdulghani, "Wheelchair Neuro Fuzzy Control Using Brain Computer Interface", 12th International Conference on Developments in eSystems Engineering (DeSE), pp. 640-645, 2019.

- Al-Aubidy, Kasim M., and Mokhles M. Abdulghani. "Towards Intelligent Control of Electric Wheelchairs for Physically Challenged People." Advanced Systems for Biomedical Applications: 225. [CrossRef]

- Mokhles M. Abdulghani, Arthur A. Harden, and Khalid H. Abed, “A Drone Flight Control Using Brain-Computer Interface and Artificial Intelligence,” The 2022 International Conference on Computational Science and Computational Intelligence – Artificial Intelligence (CSCI'22–AI), IEEE Conference Publishing Services (CPS), Las Vegas, Nevada, December 14-16, 2022.

- Mokhles M. Abdulghani, Wilbur L. Walters, and Khalid H. Abed, "Low-Cost Brain Computer Interface Design Using Deep Learning for Internet of Brain Controlled Things Applications," The 2022 International Conference on Computational Science and Computational Intelligence – Artificial Intelligence (CSCI'22–AI), IEEE Conference Publishing Services (CPS), Las Vegas, Nevada, December 14-16, 2022.

- M. Rojas, P. Ponce, & A. Molina, “A fuzzy logic navigation controller implemented in hardware for an electric wheelchair”, International Journal of Advanced Robotic Systems, January-February 2018, pp. 1-12. [CrossRef]

- A. Siswoyo, Z. Arief, & I.A. Sulistijono, “Application of Artificial Neural Networks in Modeling Direction Wheelchairs Using Neurosky Mindset Mobile (EEG) Device”, EMITTER International Journal of Engineering Technology, Vol. 5, No. 1, June 2017, pp.170-191. [CrossRef]

- A. Fattouh, O. Horn, & G. Bourhis, “Emotional BCI Control of a Smart Wheelchair”, IJCSI International Journal of Computer Science Issues, Vol. 10, No 1, May 2013, pp. 32-36.

- Y. Roy, H. Banville, I. Albuquerque, A. Gramfort, T. H. Falk, and J. Faubert, “Deep learning-based electroencephalography analysis: a systematic review,” J. Neural Eng., vol. 16, no. 5, p. 051001, Aug. 2019. [CrossRef]

- C. H. Nguyen, G. K. Karavas, and P. Artemiadis, “Inferring imagined speech using EEG signals: A new approach using Riemannian manifold features,” J. Neural Eng., vol. 15, no. 1, pp. 016002–016018, Feb. 2018. [CrossRef]

- L. Vezard, P. Legrand, M. Chavent, F. Faïta-Aïnseba, and L. Trujillo, EEG classification for the detection of mental states, Applied Soft Computing, 2015. [CrossRef]

- H. Meisheri, N. Ramrao, and S. K. Mitra, Multiclass Common Spatial Pattern with Artifacts Removal Methodology for EEG Signals, in ISCBI, IEEE, 2016. [CrossRef]

- T. Shiratori, H. Tsubakida, A. Ishiyama, and Y. Ono, Three-class classification of motor imagery EEG data including rest state using filter-bank multi-class common spatial pattern, in BCI, IEEE, 2015.

- J. J. Bird, D. R. Faria, L. J. Manso, A. Ekárt, and C. D. Buckingham, “A Deep Evolutionary Approach to Bioinspired Classifier Optimisation for Brain-Machine Interaction,” Complexity, vol. 2019, pp. 1–14, Mar. 2019. [CrossRef]

- Wu, D. (2016). Online and offline domain adaptation for reducing BCI calibration effort. IEEE Trans. Hum. Mach. Syst. 47, 550–563. [CrossRef]

- Khan, M. J., and Hong, K.-S. (2017). Hybrid EEG–fNIRS-based eight-command decoding for BCI: application to quadcopter control. Front. Neurorobot. 11:6. [CrossRef]

- Edelman, B. J., Baxter, B., and He, B. (2015). EEG source imaging enhances the decoding of complex right-hand motor imagery tasks. IEEE Trans. Biomed. Eng. 63, 4–14. [CrossRef]

- Weitong Chen, Sen Wang, Xiang Zhang, Lina Yao, Lin Yue, Buyue Qian, and Xue Li, “EEG-based Motion Intention Recognition via Multi-task RNNs,” in Proceedings of the 2018 SIAM International Conference on Data Mining, Philadelphia, PA: Society for Industrial and Applied Mathematics, 2018, pp. 279–287. [CrossRef]

- Wang, H. E., Bénar, C. G., Quilichini, P. P., Friston, K. J., Jirsa, V. K., and Bernard, C. (2014). A systematic framework for functional connectivity measures. Front. Neurosci. 8:405. [CrossRef]

- Riccio A, Mattia D, Simione L, Olivetti M, Cincotti F (2012) Eye gaze independent brain computer interfaces for communication. J Neural Eng 9: 045001. [CrossRef]

- Höhne J, Schreuder M, Blankertz B, Tangermann M (2011) A novel 9-class auditory ERP paradigm driving a predictive text entry system. Front Neurosci 5: 99. [CrossRef]

- Nguyen, C. H., Karavas, G. K., and Artemiadis, P. (2017). Inferring imagined speech using EEG signals: a new approach using Riemannian manifold features. J. Neural Eng. 15:016002. [CrossRef]

- Koizumi, K., Ueda, K., and Nakao, M. (2018). “Development of a cognitive brain-machine interface based on a visual imagery method,” in 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Honolulu: IEEE), 1062–1065. [CrossRef]

- Pawar, D., and Dhage, S. (2020). Multiclass covert speech classification using extreme learning machine. Biomed. Eng. Lett. 10, 217–226. [CrossRef]

- Nieto, N., Peterson, V., Rufiner, H. L., et al. Thinking out loud, an open-access EEG-based BCI dataset for inner speech recognition. Sci. Data 9, 52 (2022). [CrossRef]

- P. Bashivan, I. Rish, M. Yeasin, and N. Codella, Learning Representations from EEG With Deep Recurrent-Convolutional Neural Networks, arXiv, 2015.

- Stephan, F., Saalbach, H., and Rossi, S. (2020). The brain differentially prepares inner and overt speech production: electrophysiological and vascular evidence. Brain Sci. 10:148. [CrossRef]

- Abdulghani, M. M., Al-Aubidy, K.M. “Design and evaluation of a MIMO ANFIS using MATLAB and V-REP” 11th International Conference on Advances in Computing, Control, and Telecommunication Technologies, ACT 2020; Virtual, Online; 28 August 2020 through 29 August 2020, ISBN: 978-171381851-9.

- X. Pei, D. L. Barbour, E. C. Leuthardt, and G. Schalk, “Decoding vowels and consonants in spoken and imagined words using electrocorticographic signals in humans,” J. Neural Eng., vol. 8, no. 4, pp. 046028–046039, Aug. 2011. [CrossRef]

- Y. Chen et al., “A high-security EEG-based login system with RSVP stimuli and dry electrodes,” IEEE Trans. Inf. Forensics Security, vol. 11, no. 12, pp. 2635–2647, Dec. 2016. [CrossRef]

- K. Brigham, B.V.K.V. Kumar, Subject identification from electroencephalogram (EEG) signals during imagined speech, 2010 Fourth IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS) (2010) 1–8. [CrossRef]

- K. Mohanchandra, S. Saha, G.M. Lingaraju, EEG based brain computer interface for speech communication: principles and applications, in: A.E. Hassanien, A.T. Azar (Eds.), Brain–Computer Interfaces: Current Trends and Applications, Springer International Publishing, Cham, 2015, pp. 273–293. [CrossRef]

- P. Gaur, R.B. Pachori, H. Wang, G. Prasad, An automatic subject specific intrinsic mode function selection for enhancing two-class EEG based motor imagery-brain computer interface, IEEE Sens. J. 19 (2019) 6938–6947. [CrossRef]

- M. D’Zmura, S. Deng, T. Lappas, S. Thorpe, R. Srinivasan, Toward EEG sensing of imagined speech, in: J.A. Jacko (Ed.), Human–Computer Interaction. New Trends: 13th International Conference, HCI International 2009, San Diego, CA, USA, July 19–24, 2009, Proceedings, Part I, Springer Berlin Heidelberg, Berlin, Heidelberg, 2009, pp. 40–48.

- M. Matsumoto, J. Hori, Classification of silent speech using support vector machine and relevance vector machine, Appl. Soft Comput. 20 (2014) 95–102. [CrossRef]

- g.tec Medical Engineering GmbH (2020). Unicorn Hybrid Black. Available online at: https://www.unicorn-bi.com/brain-interface-technology/ (accessed December 19, 2022).

- Acharya, J.N.; Hani, A.; Cheek, J.; Thirumala, P.; Tsuchida, T.N. American Clinical Neurophysiology Society Guideline 2: Guidelines for Standard Electrode Position Nomenclature. J. Clin. Neurophysiol. 2016, 33, 308–311. [CrossRef]

- Jackson State University, Institutional Review Board (IRB), https://www.jsums.edu/researchcompliance/irb-protocol/. 2023.

- Liu, Qing, Liangtao Yang, Zhilin Zhang, Hui Yang, Yi Zhang, and Jinglong Wu. "The Feature, Performance, and Prospect of Advanced Electrodes for Electroencephalogram." Biosensors 13, no. 1 (2023): 101. [CrossRef]

- Li, H., and Chen, F. Classify imaginary Mandarin tones with cortical EEG signals, in INTERSPEECH, 2020, pp. 4896–4900.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).