Submitted: 25 February 2023

Posted: 28 February 2023

Abstract

Keywords:

1. Introduction

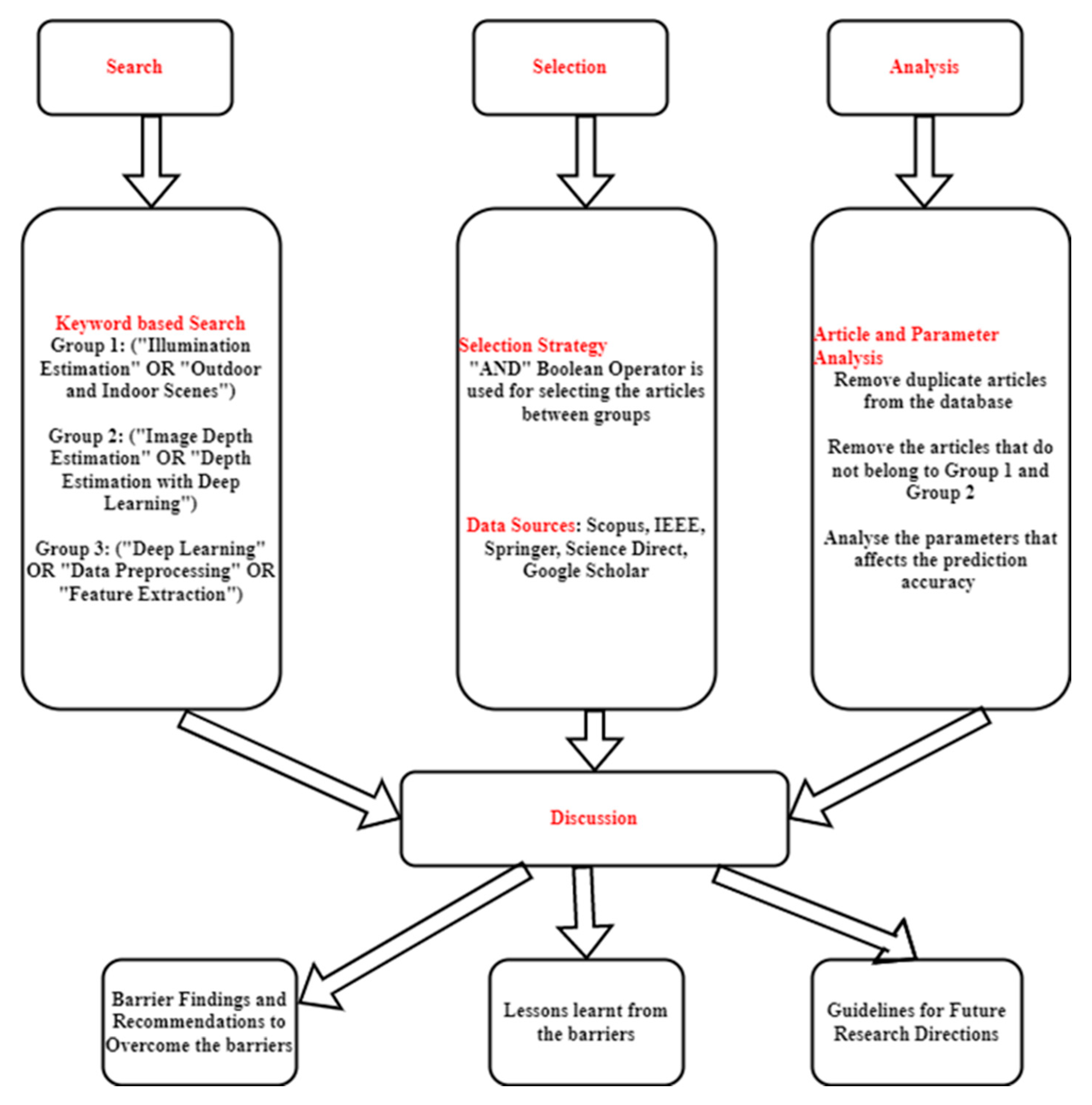

2. Review Methodology and Background

2.1. Review Scope and Strategies

2.2. Research Gap and Motivations

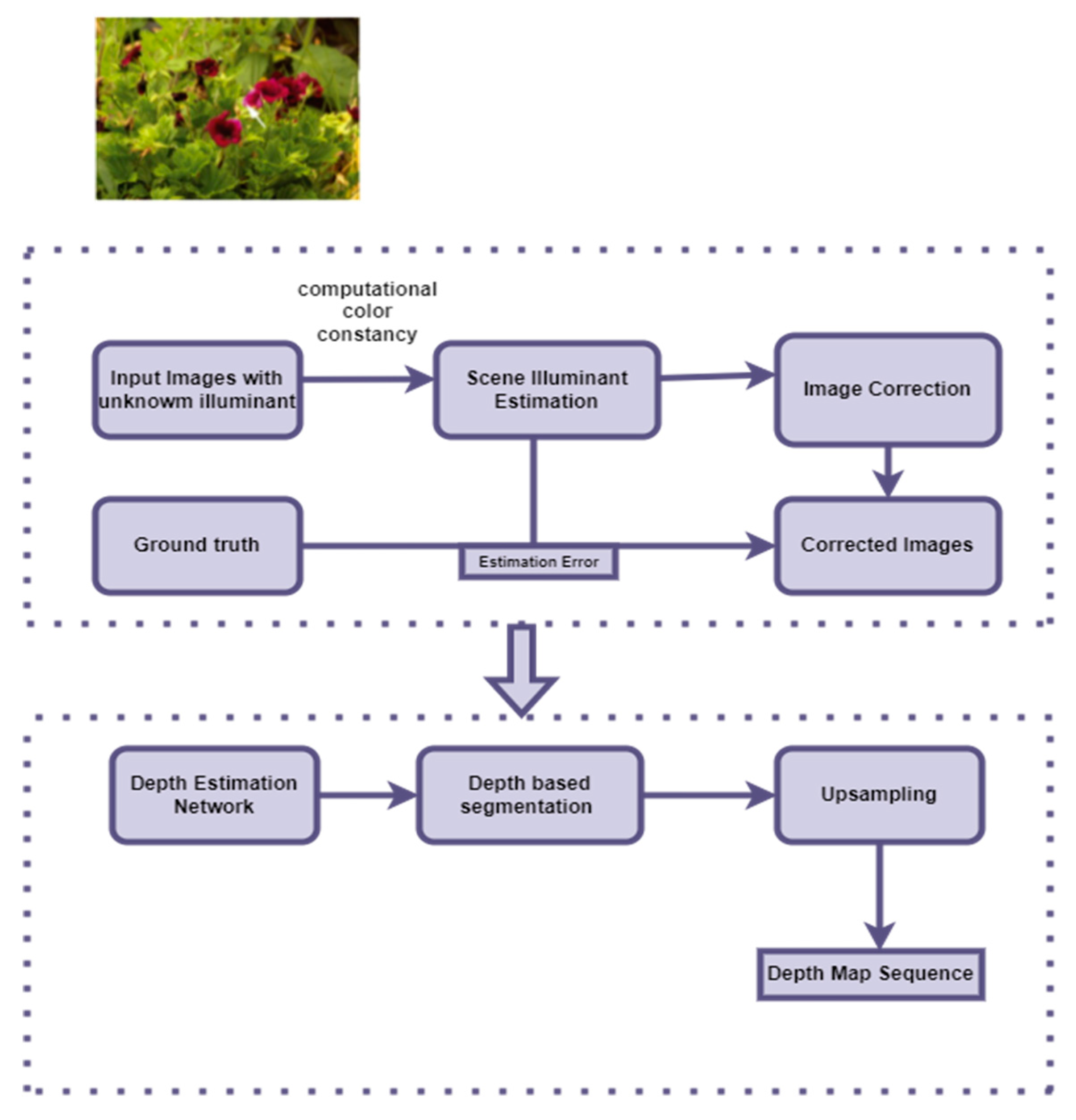

2.3. Challenges in Color Constancy

3. Deep Learning Methods and Applications in Illumination Estimation

3.1. Color Constancy Methods

3.2. Illumination Estimation Applications

4. Barriers in Illumination Estimation and Recommendations to Overcome These Barriers

5. Conclusions

Author Contributions

Funding

References

- Afifi, M., Barron, J.T., LeGendre, C., Tsai, Y.T., Bleibel, F., 2021. Cross-camera convolutional color constancy, in: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 1981–1990.

- Afifi, M., Punnappurath, A., Finlayson, G., Brown, M.S., 2019. As-projective-as-possible bias correction for illumination estimation algorithms. J. Opt. Soc. Am. A 36, 71–78. [CrossRef]

- Agarwal, V., Gribok, A.V., Abidi, M.A., 2007. Machine learning approach to color constancy. Neural Netw. 20, 559–563. [CrossRef]

- Bai, J., Guo, J., Wan, C., Chen, Z., He, Z., Yang, S., Yu, P., Zhang, Y., Guo, Y., 2022. Deep Graph Learning for Spatially-Varying Indoor Lighting Prediction.

- Barnard, K., 1998. Modeling Scene Illumination Colour for Computer Vision and Image Reproduction: A survey of computational approaches (PhD thesis). Simon Fraser University.

- Barron, J.T., 2015. Convolutional Color Constancy.

- Bianco, S., Ciocca, G., Cusano, C., Schettini, R., 2009. Improving Color Constancy Using Indoor–Outdoor Image Classification. IEEE Trans. Image Process. 17, 2381–2392. [CrossRef]

- Cardei, V.C., Funt, B., n.d. Committee-Based Color Constancy.

- Celik, T., Tjahjadi, T., 2012. Adaptive colour constancy algorithm using discrete wavelet transform. Comput. Vis. Image Underst. 116, 561–571. [CrossRef]

- Chen, J., Yang, G., Ding, X., Guo, Z., Wang, S., 2022. Robust detection of dehazed images via dual-stream CNNs with adaptive feature fusion. Comput. Vis. Image Underst. 217, 103357. [CrossRef]

- Cheng, D., Price, B., Cohen, S., Brown, M.S., 2015. Effective learning-based illuminant estimation using simple features, in: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Presented at the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Boston, MA, USA, pp. 1000–1008. [CrossRef]

- Choi, H.-H., Kang, H.-S., Yun, B.-J., 2020. CNN-Based Illumination Estimation with Semantic Information. Appl. Sci. 10. [CrossRef]

- Choi, H.-H., Yun, B.-J., 2021. Very Deep Learning-Based Illumination Estimation Approach With Cascading Residual Network Architecture (CRNA). IEEE Access 9, 133552–133560. [CrossRef]

- de Queiroz Mendes, R., Ribeiro, E.G., dos Santos Rosa, N., Grassi, V., 2021. On deep learning techniques to boost monocular depth estimation for autonomous navigation. Robot. Auton. Syst. 136, 103701. [CrossRef]

- Einabadi, F., Guillemaut, J.-Y., Hilton, A., 2021. Deep Neural Models for Illumination Estimation and Relighting: A Survey. Comput. Graph. Forum 40, 315–331. [CrossRef]

- Zhan, F., Yu, Y., Zhang, C., Wu, R., Hu, W., Lu, S., Ma, F., Xie, X., Shao, L., 2022. GMLight: Lighting Estimation via Geometric Distribution Approximation. IEEE Trans. Image Process. 31, 2268–2278. [CrossRef]

- Ferraz, C.T., Borges, T.T.N., Cavichiolli, A., Gonzaga, A., Saito, J.H., n.d. Evaluation of Convolutional Neural Networks for Raw Food Texture Classification under Variations of Lighting Conditions.

- Forsyth, D.A., 1990. A novel algorithm for color constancy. Int. J. Comput. Vis. 5, 5–35. [CrossRef]

- Foster, D.H., 2011. Color constancy. Vision Res. 51, 674–700. [CrossRef]

- Foster, D.H., Nascimento, S.M.C., Amano, K., Arend, L., Linnell, K.J., Nieves, J.L., Plet, S., Foster, J.S., 2001. Parallel detection of violations of color constancy. Proc. Natl. Acad. Sci. 98, 8151–8156. [CrossRef]

- Foster, D.H., Reeves, A., 2022. Colour constancy failures expected in colourful environments. Proc. R. Soc. B Biol. Sci. 289, 20212483. [CrossRef]

- Gardner, M.-A., Sunkavalli, K., Yumer, E., Shen, X., Gambaretto, E., Gagné, C., Lalonde, J.-F., 2017. Learning to Predict Indoor Illumination from a Single Image. ACM Trans. Graph. 36. [CrossRef]

- Garon, M., Sunkavalli, K., Hadap, S., Carr, N., Lalonde, J.-F., 2019. Fast Spatially-Varying Indoor Lighting Estimation, in: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Presented at the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Long Beach, CA, USA, pp. 6901–6910. [CrossRef]

- Gijsenij, A., Gevers, T., 2007. Color Constancy using Natural Image Statistics. [CrossRef]

- Gijsenij, A., Gevers, T., van de Weijer, J., 2011. Computational Color Constancy: Survey and Experiments. IEEE Trans. Image Process. 20, 2475–2489. [CrossRef]

- Choi, H.-H., Yun, B.-J., 2020. Deep Learning-Based Computational Color Constancy With Convoluted Mixture of Deep Experts (CMoDE) Fusion Technique. IEEE Access 8, 188309–188320. [CrossRef]

- Hold-Geoffroy, Y., Sunkavalli, K., Hadap, S., Gambaretto, E., Lalonde, J.-F., 2017. Deep outdoor illumination estimation, in: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Presented at the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Honolulu, HI, pp. 2373–2382. [CrossRef]

- Huan, L., Zheng, X., Gong, J., 2022. GeoRec: Geometry-enhanced semantic 3D reconstruction of RGB-D indoor scenes. ISPRS J. Photogramm. Remote Sens. 186, 301–314. [CrossRef]

- Park, J., Park, H., Yoon, S.-E., Woo, W., 2020. Physically-inspired Deep Light Estimation from a Homogeneous-Material Object for Mixed Reality Lighting. IEEE Trans. Vis. Comput. Graph. 26, 2002–2011. [CrossRef]

- Barron, J.T., Tsai, Y., 2017. Fast Fourier Color Constancy, in: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Presented at the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6950–6958. [CrossRef]

- Jin, X., Zhu, X., Li, Xinxin, Zhang, K., Li, Xiaodong, Zhang, X., Zhou, Q., Xie, S., Fang, X., 2021. Learning HDR illumination from LDR panorama images. Comput. Electr. Eng. 91, 107057. [CrossRef]

- Kán, P., Kaufmann, H., 2019. DeepLight: light source estimation for augmented reality using deep learning. Vis. Comput. 35, 873–883. [CrossRef]

- Laakom, F., Raitoharju, J., Iosifidis, A., Nikkanen, J., Gabbouj, M., 2020. Monte Carlo Dropout Ensembles for Robust Illumination Estimation.

- Laakom, F., Raitoharju, J., Nikkanen, J., Iosifidis, A., Gabbouj, M., 2021. Robust channel-wise illumination estimation.

- Lalonde, J.-F., Efros, A.A., Narasimhan, S.G., 2012. Estimating the Natural Illumination Conditions from a Single Outdoor Image. Int. J. Comput. Vis. 98, 123–145. [CrossRef]

- Li, M., Guo, J., Cui, X., Pan, R., Guo, Y., Wang, C., Yu, P., Pan, F., 2019. Deep Spherical Gaussian Illumination Estimation for Indoor Scene. [CrossRef]

- Li, N., Li, C., Chen, S., Kan, J., 2020. An Illumination Estimation Algorithm based on Outdoor Scene Classification. Int. J. Circuits Syst. Signal Process. 14. [CrossRef]

- Lou, Z., Gevers, T., Hu, N., Lucassen, M.P., 2015. Color Constancy by Deep Learning, in: Proceedings of the British Machine Vision Conference 2015. Presented at the British Machine Vision Conference 2015, British Machine Vision Association, Swansea, pp. 76.1–76.12. [CrossRef]

- Luo, Y., Wang, X., Wang, Q., 2022. Which Features Are More Correlated to Illuminant Estimation: A Composite Substitute. Appl. Sci. 12. [CrossRef]

- Mertan, A., Duff, D.J., Unal, G., 2022. Single image depth estimation: An overview. Digit. Signal Process. 123, 103441. [CrossRef]

- Ming, Y., Meng, X., Fan, C., Yu, H., 2021. Deep learning for monocular depth estimation: A review. Neurocomputing 438, 14–33. [CrossRef]

- Gehler, P.V., Rother, C., Blake, A., Minka, T., Sharp, T., 2008. Bayesian color constancy revisited, in: 2008 IEEE Conference on Computer Vision and Pattern Recognition. Presented at the 2008 IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8. [CrossRef]

- Park, M.-G., Yoon, K.-J., 2019. As-planar-as-possible depth map estimation. Comput. Vis. Image Underst. 181, 50–59. [CrossRef]

- Bianco, S., Cusano, C., Schettini, R., 2015. Color constancy using CNNs, in: 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). Presented at the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 81–89. [CrossRef]

- Sarker, I.H., 2021. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2, 420. [CrossRef]

- Schaefer, G., Hordley, S., Finlayson, G., 2005. A combined physical and statistical approach to colour constancy. [CrossRef]

- Shah, S.T.H., Xuezhi, X., 2021. Traditional and modern strategies for optical flow: an investigation. SN Appl. Sci. 3, 289. [CrossRef]

- Tan, R.T., Nishino, K., Ikeuchi, K., 2004. Color constancy through inverse-intensity chromaticity space. J. Opt. Soc. Am. A 21, 321–334.

- Tran, H.Q., Ha, C., 2022. Machine learning in indoor visible light positioning systems: A review. Neurocomputing 491, 117–131. [CrossRef]

- Trinh, D.-H., Daul, C., 2019. On illumination-invariant variational optical flow for weakly textured scenes. Comput. Vis. Image Underst. 179, 1–18. [CrossRef]

- van de Weijer, J., Gevers, T., Gijsenij, A., 2007. Edge-based color constancy. IEEE Trans. Image Process. 16, 2207–2214.

- Vazquez-Corral, J., Vanrell, M., Baldrich, R., Tous, F., 2012. Color Constancy by Category Correlation. IEEE Trans. Image Process. 21, 1997–2007. [CrossRef]

- Wang, X., Shen, J., 2019. Machine Learning and its Applications in Visible Light Communication Based Indoor Positioning, in: 2019 International Conference on High Performance Big Data and Intelligent Systems (HPBD&IS). Presented at the 2019 International Conference on High Performance Big Data and Intelligent Systems (HPBD&IS), IEEE, Shenzhen, China, pp. 274–277. [CrossRef]

- Zhan, F., Zhang, C., Yu, Y., Chang, Y., Lu, S., Ma, F., Xie, X., 2020. EMLight: Lighting Estimation via Spherical Distribution Approximation.

- Zhang, K., Li, Xinxin, Jin, X., Liu, B., Li, Xiaodong, Sun, H., 2021. Outdoor illumination estimation via all convolutional neural networks. Comput. Electr. Eng. 90, 106987. [CrossRef]

- Zhang, N., Nex, F., Kerle, N., Vosselman, G., 2022. LISU: Low-light indoor scene understanding with joint learning of reflectance restoration. ISPRS J. Photogramm. Remote Sens. 183, 470–481. [CrossRef]

- Zhang, W., Kavehrad, M., 2013. Comparison of VLC-based indoor positioning techniques, in: Dingel, B.B., Jain, R., Tsukamoto, K. (Eds.). Presented at the SPIE OPTO, San Francisco, California, USA, p. 86450M. [CrossRef]

| Title of Study | Keywords | Outcome/Significance |
|---|---|---|
| Modeling Scene Illumination Colour for Computer Vision and Image Reproduction: A survey of computational approaches (Barnard, 1998) | Illumination modeling; image reproduction; image enhancement | Discusses how progress in modeling scene illumination leads to progress in computer vision, image enhancement, and image reproduction. Also examines the nature of image formation and acquisition and gives an overview of the computational approaches. |
| Computational Color Constancy: Survey and Experiments (Gijsenij et al., 2011) | Statistical and learning-based color constancy methods | Various publicly available methods for computational color constancy, some of which are considered state-of-the-art, are evaluated on two data sets (the SFU (Simon Fraser University) Grey-Ball set and the Color-Checker set). |
| Machine learning in indoor visible light positioning systems: A review (Tran and Ha, 2022) | Machine learning; illumination-based positioning algorithms | In-depth discussion of articles published during the past five years in terms of their proposed algorithm, space (2D/3D), experimental/simulation method, positioning accuracy, type of collected data, type of optical receiver, and number of transmitters. |
| Deep Neural Models for Illumination Estimation and Relighting: A Survey (Einabadi et al., 2021) | Deep learning; illumination estimation and relighting | Discusses the main characteristics of current deep learning methods, datasets, and possible future trends for illumination estimation and relighting. |
| Deep learning for monocular depth estimation: A review (Ming et al., 2021) | Deep learning; monocular depth estimation | Summarizes and categorizes the deep learning models for monocular depth estimation. Publicly available datasets and the corresponding evaluation metrics are introduced. The novelties and performance of these methods are compared and discussed. |
| Single image depth estimation: An overview (Mertan et al., 2022) | Illumination-based depth estimation; single image depth estimation problem | Investigates the mechanisms, principles, and failure cases of contemporary solutions for depth estimation. |

| Title of Study | Methodology/Technique used | Performance metric | Outcome/Significance |
|---|---|---|---|
| Machine learning approach to color constancy (Agarwal et al., 2007) | Ridge regression and Support Vector Regression | Uncertainty analysis | The shorter training time and single-parameter optimization provide potential scope for real-time video tracking applications. |
| Convolutional Color Constancy (Barron, 2015) | Discriminative learning using convolutional neural networks and structured prediction | Angular error (mean, median, tri-mean, error for lowest/highest 25% of predictions) | The model can improve performance on standard benchmarks (like White-patch, grey-world, etc.) by nearly 40%. |
| CNN-Based Illumination Estimation with Semantic Information (Choi et al., 2020) | CNN with a new pooling layer that distinguishes useful data from noisy data and thus efficiently removes noisy data during learning and evaluation | Angular error (mean, median, tri-mean, error for lowest/highest 25% of predictions) | Takes computational color constancy to higher accuracy and efficiency by adopting a novel pooling method. Results show that the proposed network outperforms its conventional counterparts in estimation accuracy. |
| Color Constancy Using CNNs (Bianco et al., 2015) | CNN (max pooling, one fully connected layer, and three output nodes) | Angular error (minimum, 10th percentile, median, 90th percentile, maximum) (comparison across methods) | Integrates feature learning and regression into one optimization process, which leads to a more effective model for estimating scene illumination. Improves the stability of the method's local illuminant estimation. |
| Deep Learning-Based Computational Color Constancy with Convoluted Mixture of Deep Experts (CMoDE) Fusion Technique (Choi and Yun, 2020) | CMoDE fusion technique; multi-stream deep neural network (MSDNN) | Angular error (mean, median, tri-mean, mean of best/worst 25%) (comparison across methods) | The CMoDE-based DCNN brings significant progress in the efficient use of computing resources as well as in the accuracy of illuminant estimation. |
| Fast Fourier Color Constancy (Barron and Tsai, 2017) | Fast Fourier Color Constancy in the frequency domain | MSE (mean, median, tri-mean, best/worst 25%) | The method operates in the frequency domain and produces error rates 13–20% lower than the previous state-of-the-art while being 250–3000× faster. |
| Color Constancy by Deep Learning (Lou et al., 2015) | DNN-based regression | Angular error (mean, median, standard deviation) | The method outperforms the state-of-the-art by 9%. In cross-dataset validation, this approach reduces the median angular error by 35%. The algorithm operates at more than 100 fps during testing. |
| As-Projective-As-Possible Bias Correction for Illumination Estimation Algorithms (Afifi et al., 2019) | Improves the accuracy of fast statistical-based algorithms by applying a post-estimate bias-correction function that transforms the estimated R, G, B vector so that it lies closer to the correct solution | MSE (median, tri-mean, best/worst 25%) | Proposes an as-projective-as-possible (APAP) projective transform that locally adapts the projective transform to the input R, G, B vector and is effective compared with state-of-the-art statistical methods. |
| Robust channel-wise illumination estimation (Laakom et al., 2021) | Efficient CNN | Angular error (mean, median, tri-mean, best/worst 25%) | The method reduces the number of parameters needed to solve the task by up to 90% while achieving competitive experimental results compared to state-of-the-art methods. |
| Deep Outdoor Illumination Estimation (Hold-Geoffroy et al., 2017) | CNN for outdoor illumination estimation | MSE; scale-invariant MSE; per-color scale-invariant MSE | An extensive evaluation on both the panorama dataset and captured HDR environment maps shows significantly superior performance. |
| Fast Spatially-Varying Indoor Lighting Estimation (Garon et al., 2019) | CNN for indoor illumination estimation | MSE; mean absolute error | Achieves lower lighting estimation errors and is preferred by users over the state-of-the-art models. |
| Monte Carlo Dropout Ensembles for Robust Illumination Estimation (Laakom et al., 2020) | Monte Carlo dropout | Angular error (mean, median, tri-mean, best/worst 25%) | The proposed framework leads to state-of-the-art performance on the INTEL-TAU dataset. |
| Very Deep Learning-Based Illumination Estimation Approach with Cascading Residual Network Architecture (CRNA) (Choi and Yun, 2021) | Cascading Residual Network Architecture (CRNA), which incorporates ResNet and a cascading mechanism into the deep convolutional neural network (DCNN) | Angular error (mean, median, tri-mean, best/worst 25%) (comparison across methods) | Comparative experiments on different datasets show that the proposed approach delivers more stable and robust results and suggest generalization potential for deep learning models across different applications. |
| Effective Learning-Based Illuminant Estimation Using Simple Features (Cheng et al., 2015) | A learning-based method that uses four simple color features with an ensemble of regression trees to estimate the illumination | Angular error (mean, median, tri-mean, best/worst 25%) | Develops a learning-based illumination estimation method with the running time of statistical methods. |
| On deep learning techniques to boost monocular depth estimation for autonomous navigation (de Queiroz Mendes et al., 2021) | A lightweight and fast supervised CNN architecture combined with novel feature extraction models designed for real-world autonomous navigation | Scale-invariant error, absolute relative difference, squared relative difference, log10, mean absolute error, linear RMSE, and log RMSE, with indoor and outdoor ablation studies | Determines optimal training conditions using different deep learning techniques, as well as optimized network structures that enable high-quality predictions in reduced processing time. |
| Learning HDR illumination from LDR panorama images (Jin et al., 2021) | CNN combined with physical modelling | MSE loss function | Results show that the method can predict accurate spherical harmonic coefficients, and the recovered luminance is realistic. |
| LISU: Low-light indoor scene understanding with joint learning of reflectance restoration (Zhang et al., 2022) | Novel CNN-based cascade network for semantic segmentation in low-light indoor environments | Overall accuracy, mean accuracy, mean intersection over union (mIoU) | The approach is compared, in terms of mIoU, with other CNN-based segmentation frameworks, including the state-of-the-art DeepLab v3+, on the proposed real data set. The experimental results also show that the semantic information supports the restoration of a sharper reflectance map, thus further improving the segmentation. |
| GeoRec: Geometry-enhanced semantic 3D reconstruction of RGB-D indoor scenes (Huan et al., 2022) | Geometry-enhanced multi-task learning network | Mean chamfer distance error, mean average precision (mAP), mean intersection over union (mIoU) | With the parsed scene semantics and geometries, the proposed GeoRec reconstructs an indoor scene by placing reconstructed object mesh models with 3D object detection results in the estimated layout cuboid. |
| DeepLight: light source estimation for augmented reality using deep learning (Kán and Kaufmann, 2019) | Residual Neural Network (ResNet) | Angular error | An end-to-end AR system is presented which estimates a directional light source from a single RGB-D camera and integrates this light estimate into AR rendering. |
| Deep Spherical Gaussian Illumination Estimation for Indoor Scene (Li et al., 2019) | CNN with extra glossy loss function | Peak signal-to-noise ratio (PSNR) and structural similarity | The proposed approach outperforms the state of the art both qualitatively and quantitatively. |
| GMLight: Lighting Estimation via Geometric Distribution Approximation (Zhan et al., 2022) | Regression network with spherical convolution and a generative projector for progressive guidance in illumination generation | RMSE and scale-invariant RMSE | GMLight achieves accurate illumination estimation and superior fidelity in relighting for 3D object insertion. |
| Deep Graph Learning for Spatially-Varying Indoor Lighting Prediction (Bai et al., 2022) | A new lighting model (dubbed DSGLight) based on depth-augmented Spherical Gaussians (SG) and a Graph Convolutional Network (GCN) | PSNR and qualitative analysis | DSGLight combines both learning and physical models, and encodes both direct lighting and indirect environmental lighting more faithfully and compactly. |
| Outdoor illumination estimation via all convolutional neural networks (Zhang et al., 2021) | CNN | Angular error | Pruning and quantization are used to compress the network, resulting in a significant reduction in the number of network parameters and the storage space with only a slight loss of precision. |
| An illumination estimation algorithm based on outdoor scene classification (Li et al., 2020) | Support Vector Machine (SVM) classifiers with an optimization algorithm | Angular error (average, median) and reproduction angular error | Achieves state-of-the-art results in outdoor environments. |
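Many of the studies tabulated above report the angular error between the estimated and ground-truth illuminant, summarized by its mean, median, tri-mean, and best/worst 25%. The following is a minimal sketch of how these statistics are commonly computed, assuming NumPy and illuminants given as RGB vectors. The function names `angular_error_deg` and `summarize_errors` are illustrative and are not taken from any of the reviewed papers. The tri-mean here follows Tukey's definition, (Q1 + 2·Q2 + Q3)/4, and the best/worst 25% are taken as the mean of the lowest/highest quarter of the errors; individual papers may define the quartile boundaries slightly differently.

```python
import numpy as np


def angular_error_deg(estimated, ground_truth):
    """Angle in degrees between estimated and ground-truth illuminant RGB vectors.

    Both inputs have shape (N, 3); the result has shape (N,).
    """
    est = np.asarray(estimated, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    cos = np.sum(est * gt, axis=-1) / (
        np.linalg.norm(est, axis=-1) * np.linalg.norm(gt, axis=-1)
    )
    # Clip to guard against rounding slightly outside [-1, 1].
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


def summarize_errors(errors):
    """Summary statistics commonly reported for angular errors."""
    errors = np.sort(np.asarray(errors, dtype=float))
    q1, q2, q3 = np.percentile(errors, [25, 50, 75])
    # Number of samples in one quarter of the data (at least one).
    k = max(1, int(np.ceil(0.25 * errors.size)))
    return {
        "mean": float(errors.mean()),
        "median": float(q2),
        "trimean": float((q1 + 2.0 * q2 + q3) / 4.0),
        "best25": float(errors[:k].mean()),    # mean of the lowest 25% of errors
        "worst25": float(errors[-k:].mean()),  # mean of the highest 25% of errors
    }
```

Because the angle depends only on the direction of the RGB vectors, the error is invariant to the overall intensity of the estimate, which is why it is preferred over a plain Euclidean distance for comparing illuminant colors.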

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).