Submitted: 09 January 2026

Posted: 09 January 2026

Abstract

Keywords:

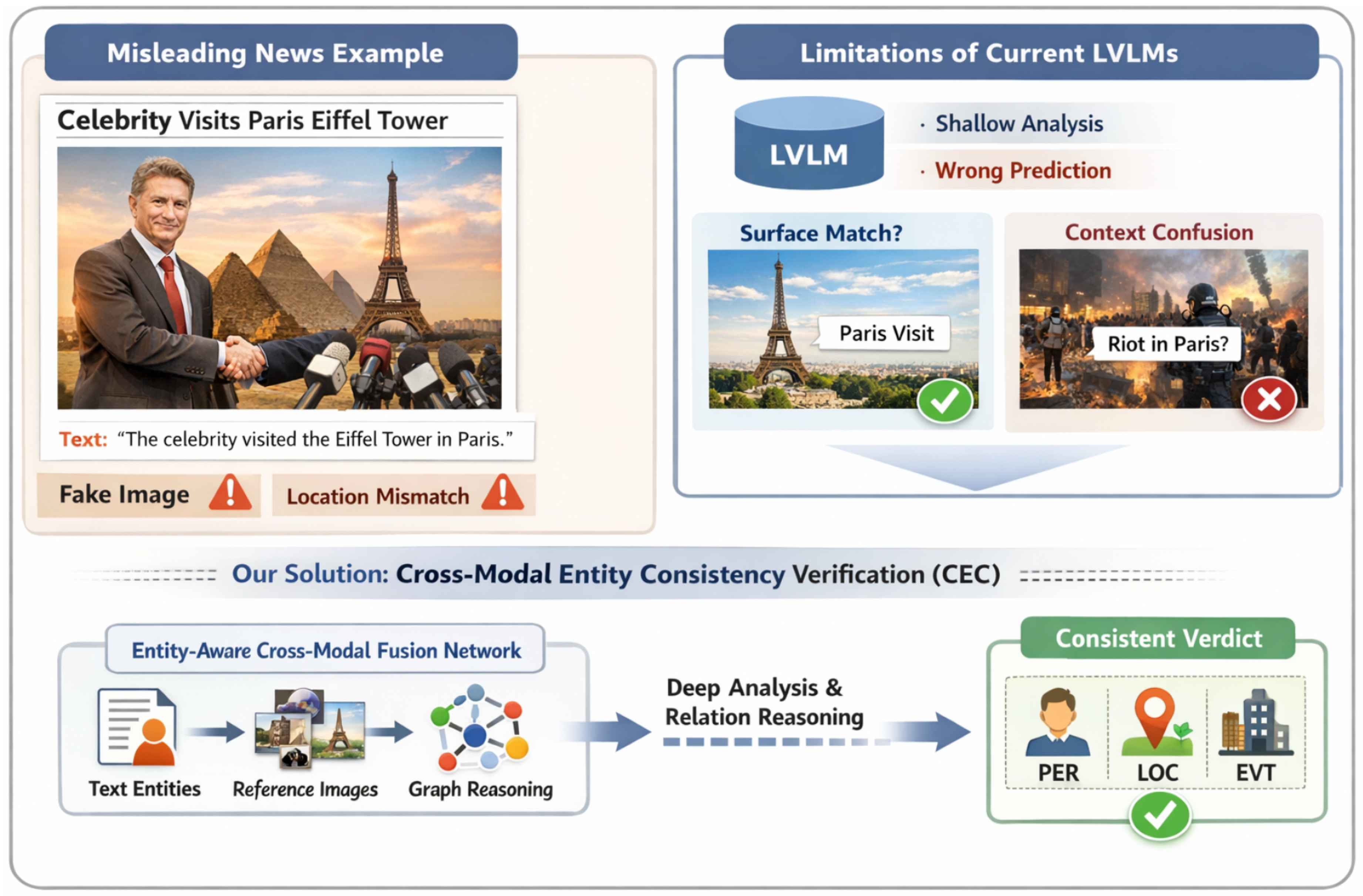

1. Introduction

- We propose the Entity-Aware Cross-Modal Fusion Network (EACFN), a novel architecture for fine-grained cross-modal entity consistency verification, specifically designed to address limitations of existing LVLMs in complex news scenarios.

- We introduce a unique Entity Alignment & Relation Reasoning (EARR) module based on Graph Neural Networks, enabling explicit modeling and inference of complex relationships between textual and visual entities, leading to more robust consistency judgments.

- Our EACFN effectively integrates reference image information through a dedicated enhancement module, significantly boosting performance in critical tasks and demonstrating superior accuracy over state-of-the-art zero-shot LVLMs across multiple entity types and real-world news datasets.

2. Related Work

2.1. Multimodal Misinformation Detection and Cross-Modal Consistency

2.2. Vision-Language Models and Graph-based Multimodal Reasoning

3. Method

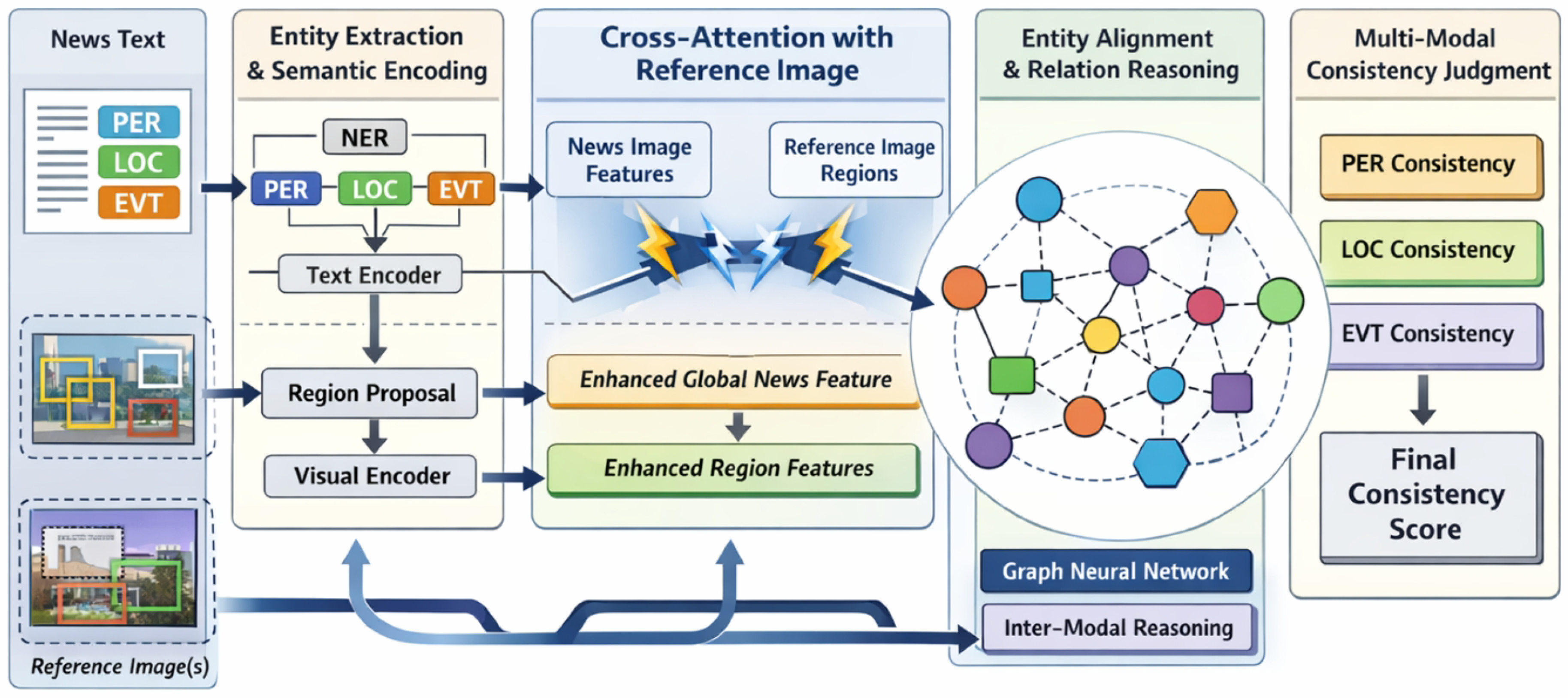

3.1. Overall Architecture

3.2. Entity Extraction & Semantic Encoding (EESE)

3.2.1. Text Entity Extraction and Encoding

3.2.2. Image Region Detection and Feature Extraction

3.3. Reference & News Image Feature Enhancement (RNIFE)

3.4. Entity Alignment & Relation Reasoning (EARR)

3.5. Multi-modal Consistency Judgment (MCJ)

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

- TamperedNews-Ent: This dataset comprises news articles where images have been intentionally manipulated or deceptively paired with text. It primarily serves to assess a model’s ability to detect subtle inconsistencies indicative of fake news.

- News400-Ent: This dataset consists of real-world news articles with naturally occurring image-text pairs. It is used to evaluate the model’s performance and robustness in authentic news contexts.

- MMG-Ent: This dataset is designed for document-level consistency verification and includes three specialized sub-tasks focusing on specific entity consistency challenges:

  - LCt (Location Consistency): Verifies consistency of location entities.

  - LCo (Comparative Consistency): Assesses whether entities are consistent when compared across similar news contexts.

  - LCn (Reference Consistency): Determines consistency with respect to provided reference images.

4.1.2. Evaluation Metrics

4.1.3. Baseline Models

- InstructBLIP: A powerful LVLM capable of following intricate instructions and performing zero-shot image-text reasoning.

- LLaVA 1.5: Another state-of-the-art LVLM leveraging large language models and visual encoders for multimodal understanding.

- w/o: In this setting, the model processes only the news image and its associated text, without any reference images.

- comp: In this setting, the model receives entity-related reference images alongside the news image and text to support verification.
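The difference between the two settings comes down to which images a model is given. The following is a minimal sketch of that selection step, not the authors' data pipeline: the `VerificationSample` class, its field names, `select_images`, and the example values are all hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VerificationSample:
    """One entity-verification instance (all field names are hypothetical)."""
    news_text: str
    news_image: str                      # path or ID of the news image
    entity: str                          # entity mention to verify
    entity_type: str                     # e.g. "PER", "LOC", or "EVT"
    reference_images: List[str] = field(default_factory=list)


def select_images(sample: VerificationSample, setting: str) -> List[str]:
    """Return the images a model sees under the given setting."""
    if setting == "w/o":
        # News image only; reference images are withheld.
        return [sample.news_image]
    if setting == "comp":
        # News image plus the entity-related reference images.
        return [sample.news_image, *sample.reference_images]
    raise ValueError(f"unknown setting: {setting!r}")


# Made-up example values, for illustration only.
sample = VerificationSample(
    news_text="The mayor opened the new bridge on Monday.",
    news_image="news.jpg",
    entity="the mayor",
    entity_type="PER",
    reference_images=["ref_1.jpg", "ref_2.jpg"],
)
print(select_images(sample, "w/o"))
print(select_images(sample, "comp"))
```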

4.1.4. Implementation Details

4.2. Overall Performance

- On the TamperedNews-Ent dataset, EACFN (comp) attains the highest accuracy for PER (82.0%) and EVT (81.0%) entity verification, surpassing the best baseline result in each case by 4.0 points (78.0% and 77.0%, both LLaVA 1.5 comp). This demonstrates EACFN’s superior capability in identifying subtle discrepancies in manipulated news scenarios.

- For the News400-Ent dataset, EACFN (comp) raises EVT accuracy to 87.0%, the highest value in the comparison and 2.0 points above both baselines in the same setting (85.0%). This highlights EACFN’s robust understanding of complex events in real-world news contexts.

- Even in the absence of reference images (the ‘w/o’ setting), EACFN exhibits strong performance. Notably, on the MMG-Ent dataset, EACFN (w/o) outperforms LLaVA 1.5 across all sub-tasks, achieving 75.0% for LCt, 52.0% for LCo, and 65.0% for LCn. This suggests that EACFN’s Entity Alignment & Relation Reasoning (EARR) module possesses enhanced generalization capabilities and a finer ability to capture intrinsic entity features, even when direct external visual evidence is not provided.

- EACFN (w/o) achieves the highest LOC accuracy on both TamperedNews-Ent (83.0%) and News400-Ent (78.0%). Adding reference images does not help here: in the ‘comp’ setting, LOC accuracy falls to 81.0% and 75.0% respectively, and the News400-Ent figure only matches the best baseline result (InstructBLIP w/o, 75.0%). This observation suggests that location entity verification might be more sensitive to the quality and diversity of reference images, presenting a promising direction for future refinement.
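The margins discussed above can be recomputed directly from the reported accuracies. The sketch below copies the TamperedNews-Ent and News400-Ent values from the results table and, for each entity type, compares the best EACFN result with the best baseline result across both settings. The column shorthands (`TN-*`, `N400-*`) and the function name are assumptions made for this example.

```python
# Accuracy (%) copied from the results table. Column shorthands are
# illustrative: TN = TamperedNews-Ent, N400 = News400-Ent.
COLUMNS = ["TN-PER", "TN-LOC", "TN-EVT", "N400-PER", "N400-LOC", "N400-EVT"]
RESULTS = {
    ("InstructBLIP", "w/o"):  [66.0, 81.0, 76.0, 68.0, 75.0, 79.0],
    ("InstructBLIP", "comp"): [73.0, 78.0, 72.0, 71.0, 67.0, 85.0],
    ("LLaVA 1.5", "w/o"):     [61.0, 79.0, 71.0, 63.0, 70.0, 57.0],
    ("LLaVA 1.5", "comp"):    [78.0, 73.0, 77.0, 77.0, 70.0, 85.0],
    ("EACFN", "w/o"):         [75.0, 83.0, 79.0, 74.0, 78.0, 82.0],
    ("EACFN", "comp"):        [82.0, 81.0, 81.0, 80.0, 75.0, 87.0],
}


def margin_over_best_baseline(column: str) -> float:
    """Best EACFN accuracy minus best baseline accuracy, in points."""
    idx = COLUMNS.index(column)
    ours = max(v[idx] for (model, _), v in RESULTS.items() if model == "EACFN")
    best = max(v[idx] for (model, _), v in RESULTS.items() if model != "EACFN")
    return ours - best


for column in COLUMNS:
    print(f"{column}: {margin_over_best_baseline(column):+.1f}")
```

Taking the maximum over both settings is a deliberately strict comparison: it credits each baseline with its better setting, so a positive margin means EACFN leads regardless of whether reference images are used.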

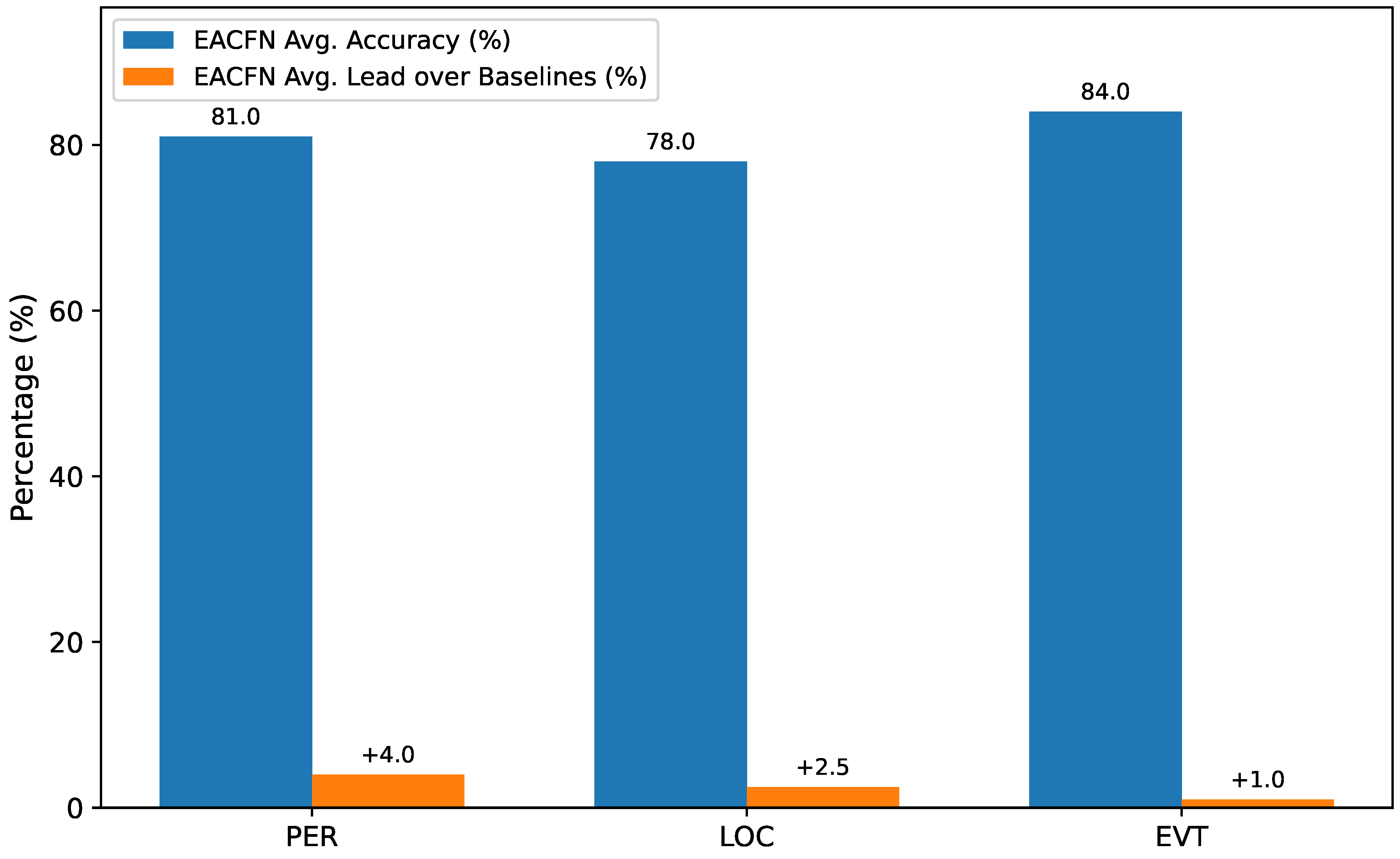

4.3. Fine-Grained Entity-Type Analysis

4.4. Ablation Study

- Effectiveness of Reference & News Image Feature Enhancement (RNIFE): The ‘EACFN w/o RNIFE’ variant simulates a scenario where the model does not explicitly fuse reference image information into news image features, effectively bypassing the enhancement mechanism while still processing both images (but without targeted cross-attention). Comparing this variant with the full EACFN model (which inherently uses RNIFE in ‘comp’ mode), we observe a notable performance drop across all entity types and datasets. For instance, on TamperedNews-Ent, the PER accuracy decreases from 82.0% to 75.0%, and EVT from 81.0% to 76.0%. This significant degradation underscores the critical role of the RNIFE module in selectively leveraging relevant visual evidence from reference images to create a more robust and entity-aware representation of the news image. The targeted cross-attention mechanism in RNIFE is crucial for disambiguating entities and confirming consistency by providing external, corroborating visual information.

- Effectiveness of Entity Alignment & Relation Reasoning (EARR): To evaluate the EARR module, we introduce ‘EACFN w/o EARR (Simple Fusion)’. In this variant, the Graph Neural Network (GNN) component of EARR is replaced by a simpler aggregation mechanism, such as direct concatenation of entity embeddings followed by an MLP for interaction, or a simple attention mechanism without explicitly modeling graph relationships. As shown in Table 2, removing the EARR module leads to a consistent decline in performance. For example, on News400-Ent, the EVT accuracy drops from 87.0% to 83.0%. This demonstrates that the GNN-based EARR module is indispensable for explicitly modeling complex, multi-modal relationships and interdependencies between textual and visual entities. Its ability to perform iterative message passing and dynamic edge weighting through multi-head attention enables a more nuanced understanding of entity consistency, which simple fusion methods cannot achieve. The GNN’s capacity to build a richer contextual representation for each entity node by considering its multimodal neighbors is paramount for robust verification.
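To make the two ablated mechanisms concrete, the following is a simplified, dependency-free sketch of (a) cross-attention from news-image regions to reference-image regions, as described for RNIFE, and (b) one attention-weighted message-passing step over an entity graph, as described for EARR. It is not the authors' implementation: it uses a single attention head, omits all learned projections, and the residual and averaging update rules, function names, and node names are assumptions chosen for brevity.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query (one head, no projections)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def enhance_news_regions(news: List[Vector], refs: List[Vector]) -> List[Vector]:
    """RNIFE-style step: each news region attends to the reference regions,
    and the attended context is added back as a residual (assumed update rule)."""
    enhanced = []
    for region in news:
        context = attend(region, refs, refs)
        enhanced.append([r + c for r, c in zip(region, context)])
    return enhanced


def message_passing_step(
    nodes: Dict[str, Vector], edges: Dict[str, List[str]]
) -> Dict[str, Vector]:
    """EARR-style step: each entity node aggregates its neighbours with
    attention weights, then averages the message with its own feature
    (assumed update rule). Nodes without neighbours are left unchanged."""
    updated = {}
    for name, feature in nodes.items():
        neighbours = [nodes[n] for n in edges.get(name, [])]
        if not neighbours:
            updated[name] = list(feature)
            continue
        message = attend(feature, neighbours, neighbours)
        updated[name] = [0.5 * (f + m) for f, m in zip(feature, message)]
    return updated


# Toy graph: one textual entity node linked to one visual region node.
nodes = {"text:entity": [1.0, 0.0], "image:region": [0.0, 1.0]}
edges = {"text:entity": ["image:region"]}
print(message_passing_step(nodes, edges))
print(enhance_news_regions([[1.0, 0.0]], [[0.0, 2.0]]))
```

Calling `message_passing_step` repeatedly corresponds to iterative message passing; the attention weights play the role of dynamic edge weights, since they are recomputed from the current node features at every step.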

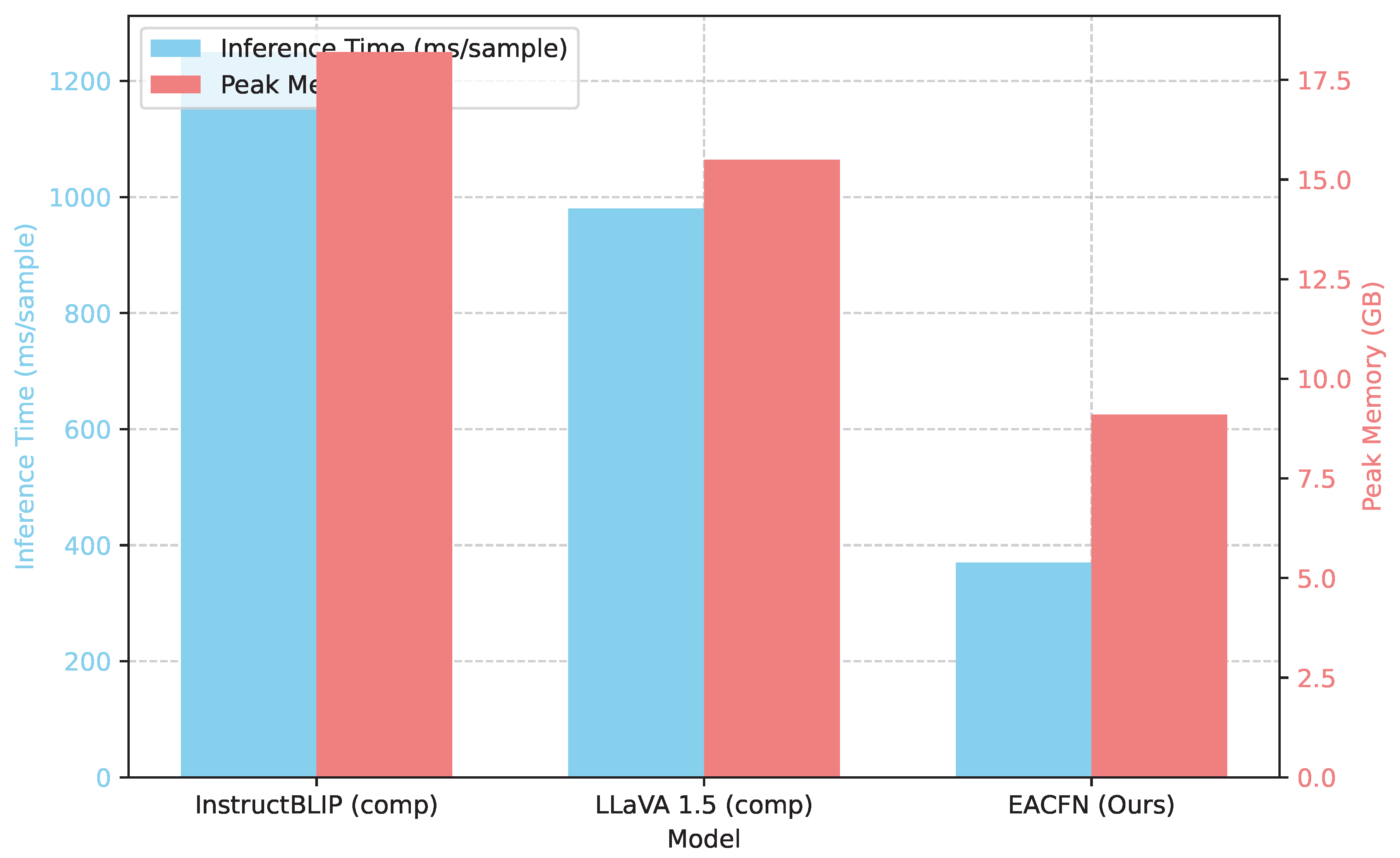

4.5. Computational Efficiency

4.6. Human Evaluation

- EACFN demonstrates significantly higher "Agreement with Human" (81.0%) compared to InstructBLIP (68.0%) and LLaVA 1.5 (71.0%). This indicates that EACFN’s judgments align more closely with human intuition, especially in nuanced cases of consistency where direct visual comparison might be ambiguous or require deeper contextual understanding.

- EACFN also shows the highest "Better than Human" rate (12.0%, versus 5.0% for InstructBLIP and 7.0% for LLaVA 1.5), suggesting that in certain complex scenarios, EACFN can identify subtle inconsistencies that even human annotators might initially overlook or find difficult to confirm. This highlights EACFN’s capability to learn intricate cross-modal patterns that may escape explicit human coding, potentially serving as a valuable pre-screening tool.

- Furthermore, human annotators reported a higher average confidence score for EACFN’s outputs when they agreed (4.1 on a 1-5 scale, versus 3.2 for InstructBLIP and 3.5 for LLaVA 1.5), implying that EACFN provides more definitive and trustworthy consistency judgments, making it a valuable tool for aiding human fact-checkers in verifying multimodal news content.

4.7. Qualitative Error Analysis

5. Conclusion


| Model | ICS | TamperedNews-Ent PER | TamperedNews-Ent LOC | TamperedNews-Ent EVT | News400-Ent PER | News400-Ent LOC | News400-Ent EVT | MMG-Ent LCt | MMG-Ent LCo | MMG-Ent LCn |
|---|---|---|---|---|---|---|---|---|---|---|
| InstructBLIP | w/o | 66.0 | 81.0 | 76.0 | 68.0 | 75.0 | 79.0 | 63.0 | 30.0 | 59.0 |
| InstructBLIP | comp | 73.0 | 78.0 | 72.0 | 71.0 | 67.0 | 85.0 | - | - | - |
| LLaVA 1.5 | w/o | 61.0 | 79.0 | 71.0 | 63.0 | 70.0 | 57.0 | 70.0 | 48.0 | 27.0 |
| LLaVA 1.5 | comp | 78.0 | 73.0 | 77.0 | 77.0 | 70.0 | 85.0 | - | - | - |
| EACFN (Ours) | w/o | 75.0 | 83.0 | 79.0 | 74.0 | 78.0 | 82.0 | 75.0 | 52.0 | 65.0 |
| EACFN (Ours) | comp | 82.0 | 81.0 | 81.0 | 80.0 | 75.0 | 87.0 | - | - | - |

| Model Variant | TamperedNews-Ent PER | TamperedNews-Ent LOC | TamperedNews-Ent EVT | News400-Ent PER | News400-Ent LOC | News400-Ent EVT |
|---|---|---|---|---|---|---|
| EACFN (Full Model) | 82.0 | 81.0 | 81.0 | 80.0 | 75.0 | 87.0 |
| w/o RNIFE | 75.0 | 77.0 | 76.0 | 74.0 | 71.0 | 81.0 |
| w/o EARR (Simple Fusion) | 79.0 | 78.0 | 77.0 | 76.0 | 72.0 | 83.0 |
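The ablation results above can be summarized as per-column accuracy drops relative to the full model. The sketch below copies the reported values and computes those drops and their mean for each variant; the function name and column ordering are assumptions for this example.

```python
# Accuracy (%) copied from the ablation table. Column order:
# TamperedNews-Ent PER, LOC, EVT, then News400-Ent PER, LOC, EVT.
ABLATION = {
    "EACFN (Full Model)":       [82.0, 81.0, 81.0, 80.0, 75.0, 87.0],
    "w/o RNIFE":                [75.0, 77.0, 76.0, 74.0, 71.0, 81.0],
    "w/o EARR (Simple Fusion)": [79.0, 78.0, 77.0, 76.0, 72.0, 83.0],
}


def drops(variant: str) -> list:
    """Per-column accuracy drop (points) of a variant versus the full model."""
    full = ABLATION["EACFN (Full Model)"]
    return [f - v for f, v in zip(full, ABLATION[variant])]


for variant in ("w/o RNIFE", "w/o EARR (Simple Fusion)"):
    d = drops(variant)
    print(variant, d, f"mean drop = {sum(d) / len(d):.2f}")
```

On these numbers, removing RNIFE costs more accuracy in every column than replacing EARR with simple fusion, which is consistent with the emphasis the ablation discussion places on reference-image fusion.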

| Model | Agreement with Human (%) | Better than Human (%) | Confidence (1-5 Scale) |
|---|---|---|---|
| InstructBLIP (comp) | 68.0 | 5.0 | 3.2 |
| LLaVA 1.5 (comp) | 71.0 | 7.0 | 3.5 |
| EACFN (Ours) | 81.0 | 12.0 | 4.1 |

| Error Type | Classification | Description |
|---|---|---|
| Subtle Visual Mismatch | FN | Model fails to detect minor inconsistencies in visual details (e.g., slight difference in attire, background objects not matching textual context) when the overall scene is similar. |
| Ambiguous Visual Context | FN | News image contains elements that could be interpreted in multiple ways, leading the model to incorrectly find consistency with text (e.g., a generic street scene misaligned with a specific textual location). |
| Complex Event Nuances | FP/FN | Difficulties in capturing the precise timing, agents, or outcomes of an event. Model might over-generalize an action or miss a crucial detail (e.g., mistaking a protest for a celebration if visual cues are subtle). |
| Entity Overlap/Confusion | FN | Multiple similar-looking persons or objects in an image make it hard for the model to correctly associate a textual entity with its unique visual counterpart. |
| Reference Image Ambiguity | FP | The provided reference image, while intended to help, contains elements that are misleading or too generic, leading EACFN to incorrectly infer consistency for the news image. |
| Lack of Visual Evidence | FP | Text describes an entity or event for which there is no discernible visual representation in the news image, but the model falsely infers presence or consistency due to strong textual context. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).