Submitted: 08 January 2026

Posted: 09 January 2026


Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Multi-Turn User-Interactive Reinforcement Learning

2.2. Progressive Horizon Scaling Methods

2.3. Domain-Adaptive Tool Orchestration

2.4. Research Gaps and Opportunities

2.5. Preliminary Concepts

3. Method

3.1. Progressive User-Interactive Training

3.2. Adaptive Horizon Management

3.3. Domain-Adaptive Tool Orchestration

3.4. Algorithm

Algorithm 1: Progressive Multi-Turn RL Framework for LLM Agents

3.5. Theoretical Analysis

4. Experiment

4.1. Experimental Settings

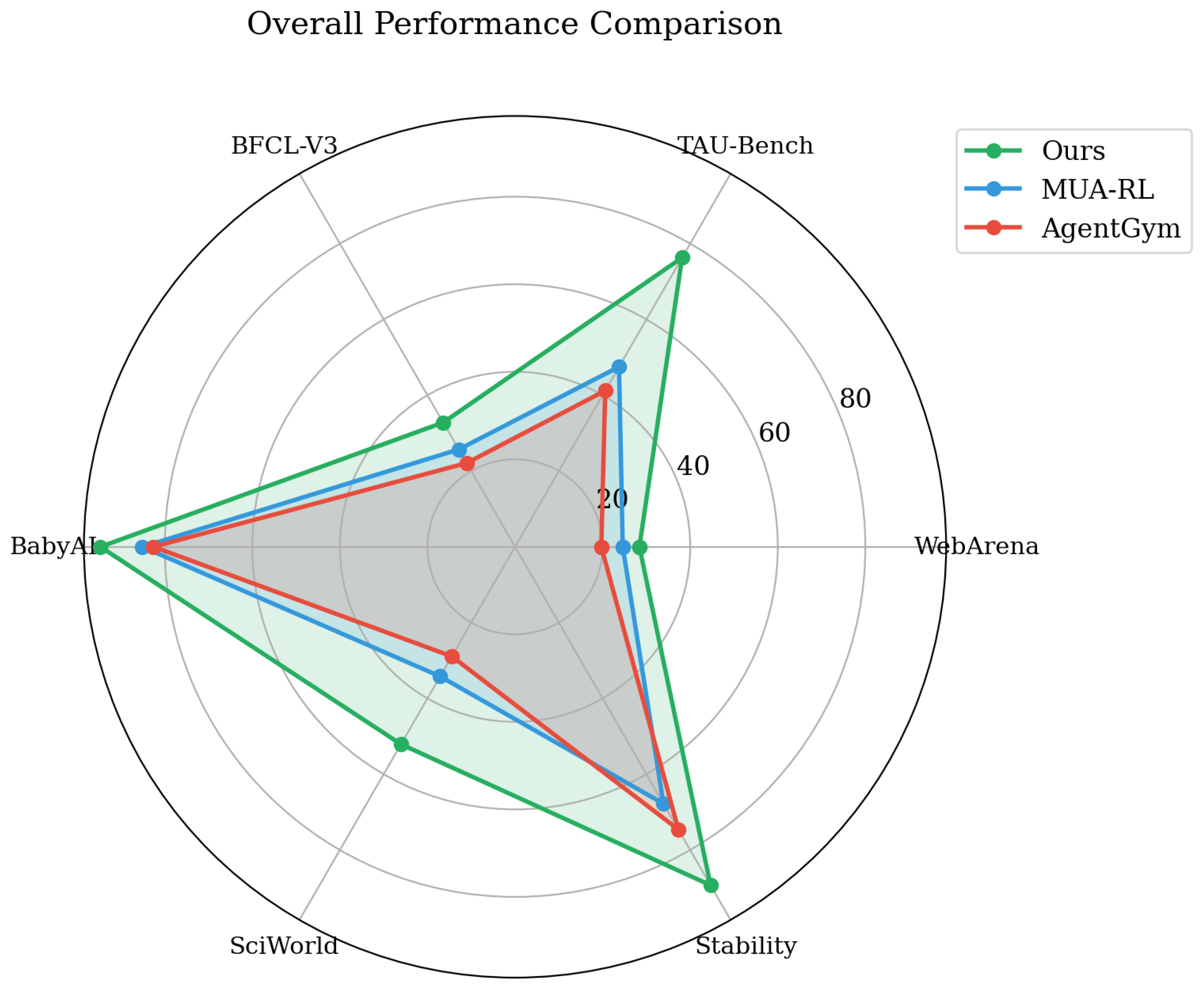

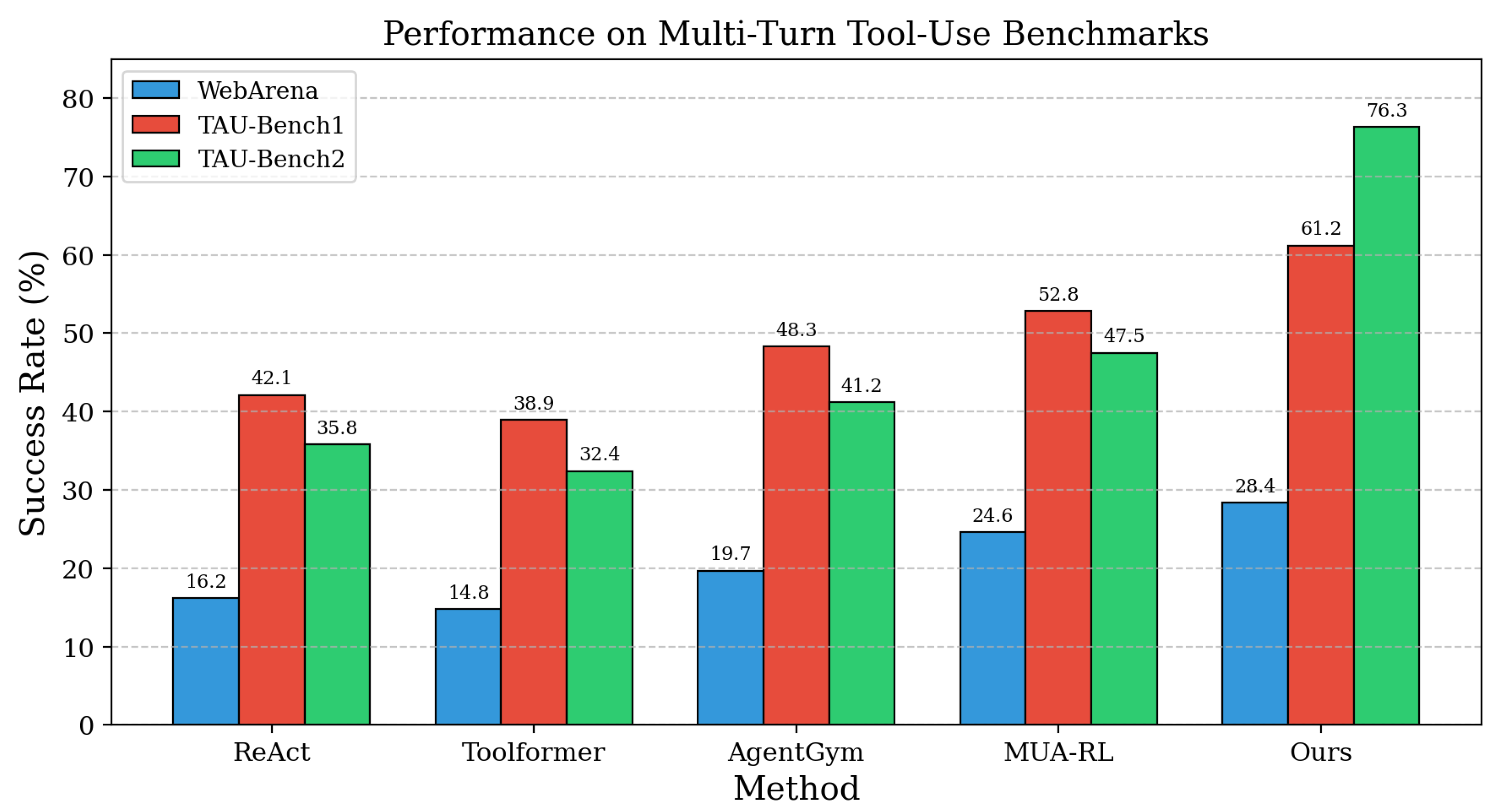

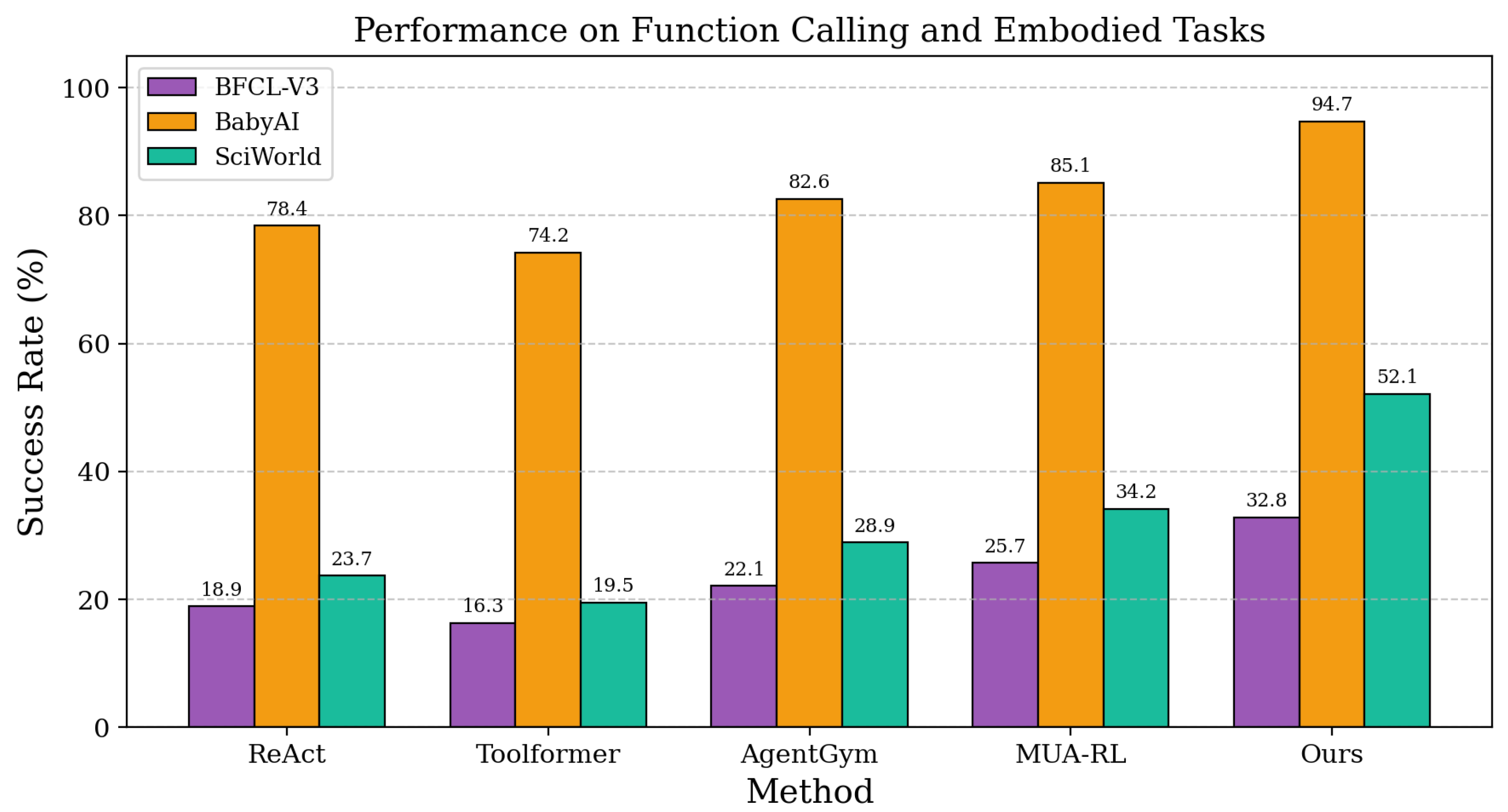

4.2. Main Results

4.3. Case Study

4.4. Ablation Study

5. Limitations

6. Conclusion

References

- Lei, Y.; Xu, J.; Liang, C.X.; Bi, Z.; Li, X.; Zhang, D.; Song, J.; Yu, Z. Large Language Model Agents: A Comprehensive Survey on Architectures, Capabilities, and Applications 2025.

- Song, X.; Chen, K.; Bi, Z.; Niu, Q.; Liu, J.; Peng, B.; Zhang, S.; Yuan, Z.; Liu, M.; Li, M.; et al. Transformer: A Survey and Application. researchgate 2025.

- Liang, X.; Tao, M.; Xia, Y.; Wang, J.; Li, K.; Wang, Y.; He, Y.; Yang, J.; Shi, T.; Wang, Y.; et al. SAGE: Self-evolving Agents with Reflective and Memory-augmented Abilities. Neurocomputing 2025, p. 130470.

- Wu, X.; Wang, H.; Tan, W.; Wei, D.; Shi, M. Dynamic allocation strategy of VM resources with fuzzy transfer learning method. Peer-to-Peer Networking and Applications 2020, 13, 2201–2213.

- Bi, Z.; Chen, K.; Wang, T.; Hao, J.; Song, X. CoT-X: An Adaptive Framework for Cross-Model Chain-of-Thought Transfer and Optimization. arXiv:2511.05747 2025.

- Tian, Y.; Yang, Z.; Liu, C.; Su, Y.; Hong, Z.; Gong, Z.; Xu, J. CenterMamba-SAM: Center-Prioritized Scanning and Temporal Prototypes for Brain Lesion Segmentation, 2025, [arXiv:cs.CV/2511.01243].

- Qu, D.; Ma, Y. Magnet-bn: markov-guided Bayesian neural networks for calibrated long-horizon sequence forecasting and community tracking. Mathematics 2025, 13, 2740.

- Lin, S. Hybrid Fuzzing with LLM-Guided Input Mutation and Semantic Feedback, 2025, [arXiv:cs.CR/2511.03995].

- Yang, C.; He, Y.; Tian, A.X.; Chen, D.; Wang, J.; Shi, T.; Heydarian, A.; Liu, P. Wcdt: World-centric diffusion transformer for traffic scene generation. In Proceedings of the 2025 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2025, pp. 6566–6572.

- Qi, H.; Hu, Z.; Yang, Z.; Zhang, J.; Wu, J.J.; Cheng, C.; Wang, C.; Zheng, L. Capacitive aptasensor coupled with microfluidic enrichment for real-time detection of trace SARS-CoV-2 nucleocapsid protein. Analytical chemistry 2022, 94, 2812–2819.

- Lin, S. Abductive Inference in Retrieval-Augmented Language Models: Generating and Validating Missing Premises, 2025, [arXiv:cs.CL/2511.04020].

- He, Y.; Li, S.; Li, K.; Wang, J.; Li, B.; Shi, T.; Xin, Y.; Li, K.; Yin, J.; Zhang, M.; et al. GE-Adapter: A General and Efficient Adapter for Enhanced Video Editing with Pretrained Text-to-Image Diffusion Models. Expert Systems with Applications 2025, p. 129649.

- Wu, X.; Wang, H.; Zhang, Y.; Zou, B.; Hong, H. A tutorial-generating method for autonomous online learning. IEEE Transactions on Learning Technologies 2024, 17, 1532–1541.

- Zhou, Y.; He, Y.; Su, Y.; Han, S.; Jang, J.; Bertasius, G.; Bansal, M.; Yao, H. ReAgent-V: A Reward-Driven Multi-Agent Framework for Video Understanding. arXiv preprint arXiv:2506.01300 2025.

- Wang, J.; He, Y.; Zhong, Y.; Song, X.; Su, J.; Feng, Y.; Wang, R.; He, H.; Zhu, W.; Yuan, X.; et al. Twin co-adaptive dialogue for progressive image generation. In Proceedings of the 33rd ACM International Conference on Multimedia, 2025, pp. 3645–3653.

- Cao, Z.; He, Y.; Liu, A.; Xie, J.; Chen, F.; Wang, Z. TV-RAG: A Temporal-aware and Semantic Entropy-Weighted Framework for Long Video Retrieval and Understanding. In Proceedings of the 33rd ACM International Conference on Multimedia, 2025, pp. 9071–9079.

- Gao, B.; Wang, J.; Song, X.; He, Y.; Xing, F.; Shi, T. Free-Mask: A Novel Paradigm of Integration Between the Segmentation Diffusion Model and Image Editing. In Proceedings of the 33rd ACM International Conference on Multimedia, 2025, pp. 9881–9890.

- Lin, S. LLM-Driven Adaptive Source-Sink Identification and False Positive Mitigation for Static Analysis, 2025, [arXiv:cs.SE/2511.04023].

- Cao, Z.; He, Y.; Liu, A.; Xie, J.; Wang, Z.; Chen, F. CoFi-Dec: Hallucination-Resistant Decoding via Coarse-to-Fine Generative Feedback in Large Vision-Language Models. In Proceedings of the 33rd ACM International Conference on Multimedia, 2025, pp. 10709–10718.

- Xin, Y.; Qin, Q.; Luo, S.; Zhu, K.; Yan, J.; Tai, Y.; Lei, J.; Cao, Y.; Wang, K.; Wang, Y.; et al. Lumina-dimoo: An omni diffusion large language model for multi-modal generation and understanding. arXiv preprint arXiv:2510.06308 2025.

- Yu, Z. Ai for science: A comprehensive review on innovations, challenges, and future directions. International Journal of Artificial Intelligence for Science (IJAI4S) 2025, 1.

- Cao, Z.; He, Y.; Liu, A.; Xie, J.; Wang, Z.; Chen, F. PurifyGen: A Risk-Discrimination and Semantic-Purification Model for Safe Text-to-Image Generation. In Proceedings of the 33rd ACM International Conference on Multimedia, 2025, pp. 816–825.

- Xin, Y.; Du, J.; Wang, Q.; Lin, Z.; Yan, K. Vmt-adapter: Parameter-efficient transfer learning for multi-task dense scene understanding. In Proceedings of the AAAI conference on artificial intelligence, 2024, Vol. 38, pp. 16085–16093.

- Sarkar, A.; Idris, M.Y.I.; Yu, Z. Reasoning in computer vision: Taxonomy, models, tasks, and methodologies. arXiv preprint arXiv:2508.10523 2025.

- Yu, Z.; Idris, M.Y.I.; Wang, P.; Qureshi, R. CoTextor: Training-Free Modular Multilingual Text Editing via Layered Disentanglement and Depth-Aware Fusion. In Proceedings of the Thirty-ninth Annual Conference on Neural Information Processing Systems Creative AI Track: Humanity, 2025.

- Xin, Y.; Yan, J.; Qin, Q.; Li, Z.; Liu, D.; Li, S.; Huang, V.S.J.; Zhou, Y.; Zhang, R.; Zhuo, L.; et al. Lumina-mgpt 2.0: Stand-alone autoregressive image modeling. arXiv preprint arXiv:2507.17801 2025.

- Bi, Z.; Duan, H.; Xu, J.; Chia, X.; Geng, Y.; Cui, X.; Du, V.; Zou, X.; Zhang, X.; Zhang, C.; et al. GeneralBench: A Comprehensive Benchmark Suite and Evaluation Platform for Large Language Models. arxiv 2025.

- Yu, Z.; Idris, M.Y.I.; Wang, P. Physics-constrained symbolic regression from imagery. In Proceedings of the 2nd AI for Math Workshop@ ICML 2025, 2025.

- Wu, X.; Zhang, Y.T.; Lai, K.W.; Yang, M.Z.; Yang, G.L.; Wang, H.H. A novel centralized federated deep fuzzy neural network with multi-objectives neural architecture search for epistatic detection. IEEE Transactions on Fuzzy Systems 2024, 33, 94–107.

- Lin, Y.; Wang, M.; Xu, L.; Zhang, F. The maximum forcing number of a polyomino. Australas. J. Combin 2017, 69, 306–314.

- Wang, S.; Wang, M. A Note on the Connectivity of m-Ary n-Dimensional Hypercubes. Parallel Processing Letters 2019, 29, 1950017.

- Wang, M.; Yang, W.; Wang, S. Conditional matching preclusion number for the Cayley graph on the symmetric group. Acta Math. Appl. Sin.(Chinese Series) 2013, 36, 813–820.

- Wang, M.; Wang, S. Diagnosability of Cayley graph networks generated by transposition trees under the comparison diagnosis model. Ann. of Appl. Math 2016, 32, 166–173.

- Zhao, W.; et al. MUA-RL: Multi-turn User-interacting Agent Reinforcement Learning for Agentic Tool Use. arXiv preprint arXiv:2508.18669 2025.

- Wang, M.; Xu, S.; Jiang, J.; Xiang, D.; Hsieh, S.Y. Global reliable diagnosis of networks based on Self-Comparative Diagnosis Model and g-good-neighbor property. Journal of Computer and System Sciences 2025, p. 103698.

- Yao, S.; Shinn, N.; Razavi, P.; Narasimhan, K. τ-bench: A Benchmark for Tool-Agent-User Interaction in Real-World Domains. arXiv preprint arXiv:2406.12045 2024.

- Patil, S.; et al. The Berkeley Function Calling Leaderboard (BFCL): From Tool Use to Agentic Evaluation of Large Language Models. In Proceedings of the 42nd International Conference on Machine Learning (ICML), 2025.

- Bai, Z.; Ge, E.; Hao, J. Multi-Agent Collaborative Framework for Intelligent IT Operations: An AOI System with Context-Aware Compression and Dynamic Task Scheduling. arXiv preprint arXiv:2512.13956 2025.

- Han, X.; Gao, X.; Qu, X.; Yu, Z. Multi-Agent Medical Decision Consensus Matrix System: An Intelligent Collaborative Framework for Oncology MDT Consultations. arXiv preprint arXiv:2512.14321 2025.

- Wang, M.; Xiang, D.; Wang, S. Connectivity and diagnosability of leaf-sort graphs. Parallel Processing Letters 2020, 30, 2040004.

- Wu, X.; Zhang, Y.; Shi, M.; Li, P.; Li, R.; Xiong, N.N. An adaptive federated learning scheme with differential privacy preserving. Future Generation Computer Systems 2022, 127, 362–372.

- Wang, H.; Zhang, X.; Xia, Y.; Wu, X. An intelligent blockchain-based access control framework with federated learning for genome-wide association studies. Computer Standards & Interfaces 2023, 84, 103694.

- Yu, Z.; Idris, M.Y.I.; Wang, P. DC4CR: When Cloud Removal Meets Diffusion Control in Remote Sensing. arXiv preprint arXiv:2504.14785 2025.

- Wu, X.; Dong, J.; Bao, W.; Zou, B.; Wang, L.; Wang, H. Augmented intelligence of things for emergency vehicle secure trajectory prediction and task offloading. IEEE Internet of Things Journal 2024, 11, 36030–36043.

- Xi, Z.; et al. AgentGym-RL: Training LLM Agents for Long-Horizon Decision Making through Multi-Turn Reinforcement Learning. arXiv preprint arXiv:2509.08755 2025.

- Zhou, S.; Xu, F.F.; Zhu, H.; Zhou, X.; Lo, R.; Sridhar, A.; Cheng, X.; Ou, T.; Bisk, Y.; Fried, D.; et al. WebArena: A Realistic Web Environment for Building Autonomous Agents. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR), 2024.

- Chevalier-Boisvert, M.; Bahdanau, D.; Lahlou, S.; Willems, L.; Saharia, C.; Nguyen, T.H.; Bengio, Y. BabyAI: A Platform to Study the Sample Efficiency of Grounded Language Learning. In Proceedings of the Seventh International Conference on Learning Representations (ICLR), 2019.

- Wang, R.; Jansen, P.; Côté, M.A.; Ammanabrolu, P. ScienceWorld: Is your Agent Smarter than a 5th Grader? In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP), 2022, pp. 11279–11298.

- Motiian, S.; Piccirilli, M.; Adjeroh, D.A.; Doretto, G. Unified deep supervised domain adaptation and generalization. In Proceedings of the IEEE international conference on computer vision, 2017, pp. 5715–5725.

- Gao, Y.; Cui, Y. Deep transfer learning for reducing health care disparities arising from biomedical data inequality. Nature communications 2020, 11, 5131.

- Wang, P.; Yang, Y.; Yu, Z. Multi-batch nuclear-norm adversarial network for unsupervised domain adaptation. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2024, pp. 1–6.

- Yu, Z.; Wang, P. Capan: Class-aware prototypical adversarial networks for unsupervised domain adaptation. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2024, pp. 1–6.

- Gao, Y.; Cui, Y. Clinical time-to-event prediction enhanced by incorporating compatible related outcomes. PLOS digital health 2022, 1, e0000038.

- Qwen Team. Qwen2.5 Technical Report. arXiv preprint arXiv:2412.15115 2024.

- Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.; Cao, Y. ReAct: Synergizing Reasoning and Acting in Language Models. In Proceedings of the Eleventh International Conference on Learning Representations (ICLR), 2023.

- Schick, T.; Dwivedi-Yu, J.; Dessì, R.; Raileanu, R.; Lomeli, M.; Zettlemoyer, L.; Cancedda, N.; Scialom, T. Toolformer: Language Models Can Teach Themselves to Use Tools. In Advances in Neural Information Processing Systems (NeurIPS), 2023, Vol. 36.

- Xi, Z.; Chen, Y.; Yan, R.; He, W.; Ding, R.; Ji, B.; Tang, S.; Wang, P.; Gui, T.; Zhang, Q.; et al. AgentGym: Evolving Large Language Model-based Agents across Diverse Environments. arXiv preprint arXiv:2406.04151 2024.

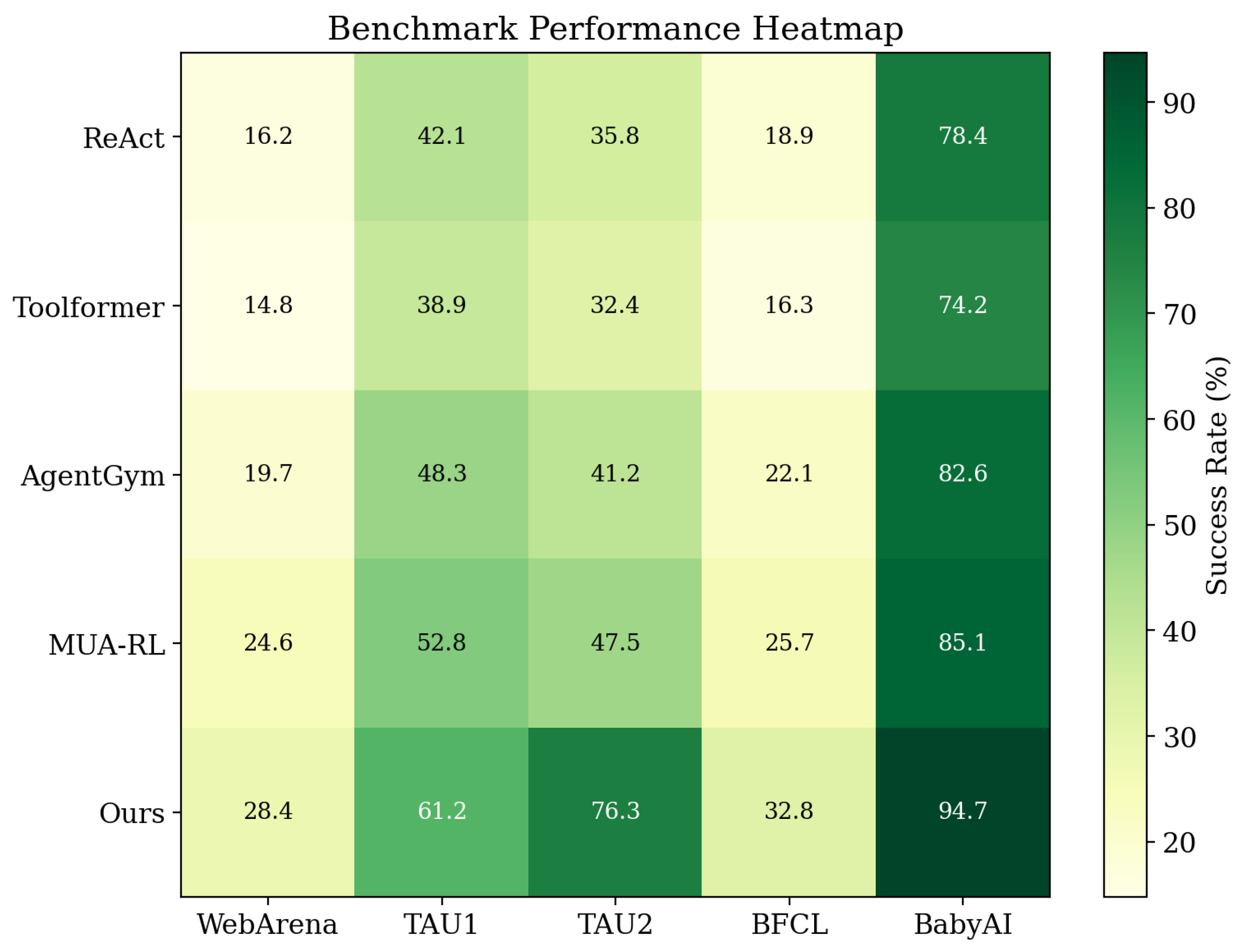

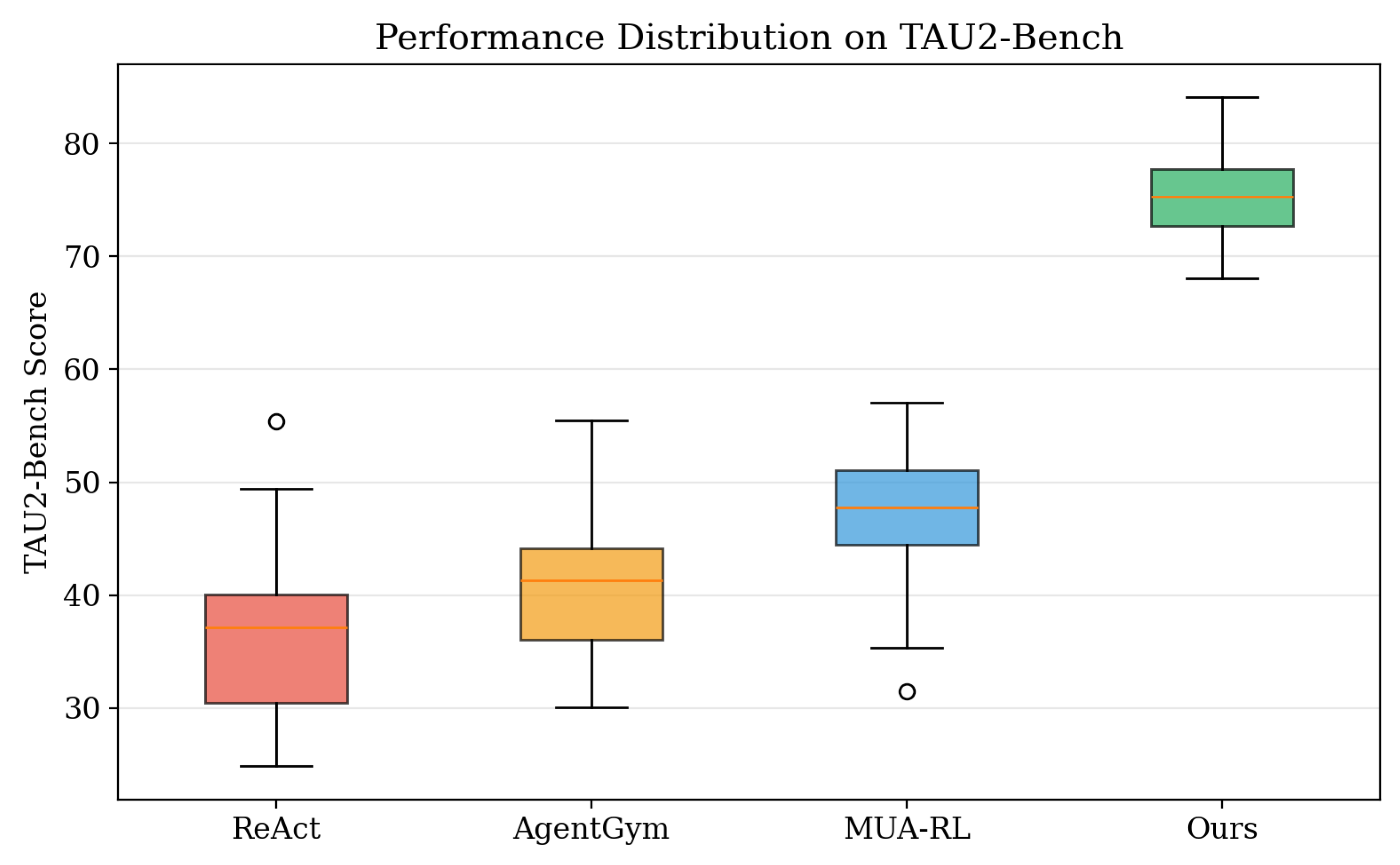

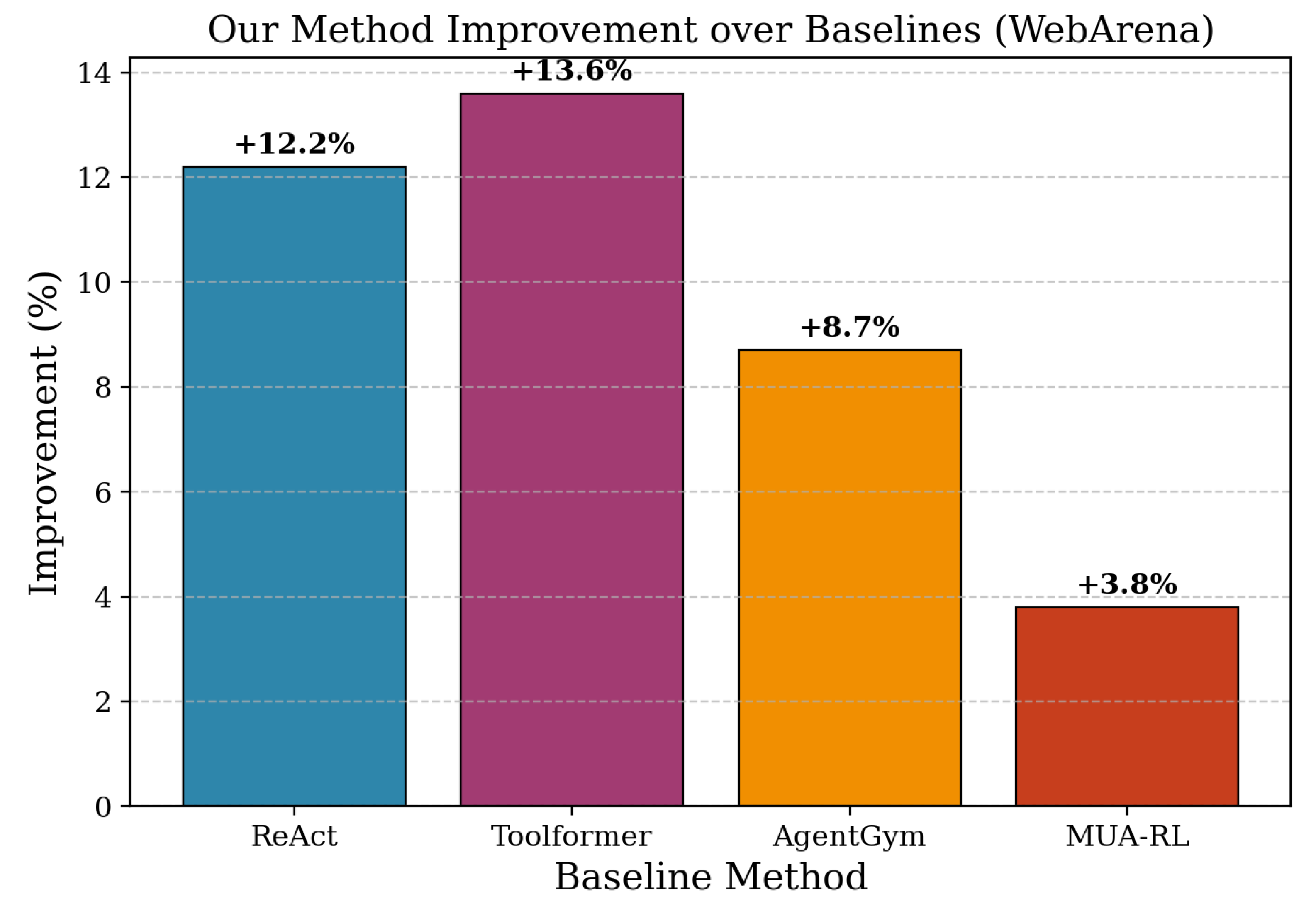

| Method | WebArena | TAU1 | TAU2 | BFCL-V3 | BabyAI | SciWorld |
|---|---|---|---|---|---|---|
| ReAct [55] | 16.2 | 42.1 | 35.8 | 18.9 | 78.4 | 23.7 |
| Toolformer [56] | 14.8 | 38.9 | 32.4 | 16.3 | 74.2 | 19.5 |
| AgentGym [57] | 19.7 | 48.3 | 41.2 | 22.1 | 82.6 | 28.9 |
| MUA-RL [34] | 24.6 | 52.8 | 47.5 | 25.7 | 85.1 | 34.2 |
| Ours | 28.4 | 61.2 | 76.3 | 32.8 | 94.7 | 52.1 |

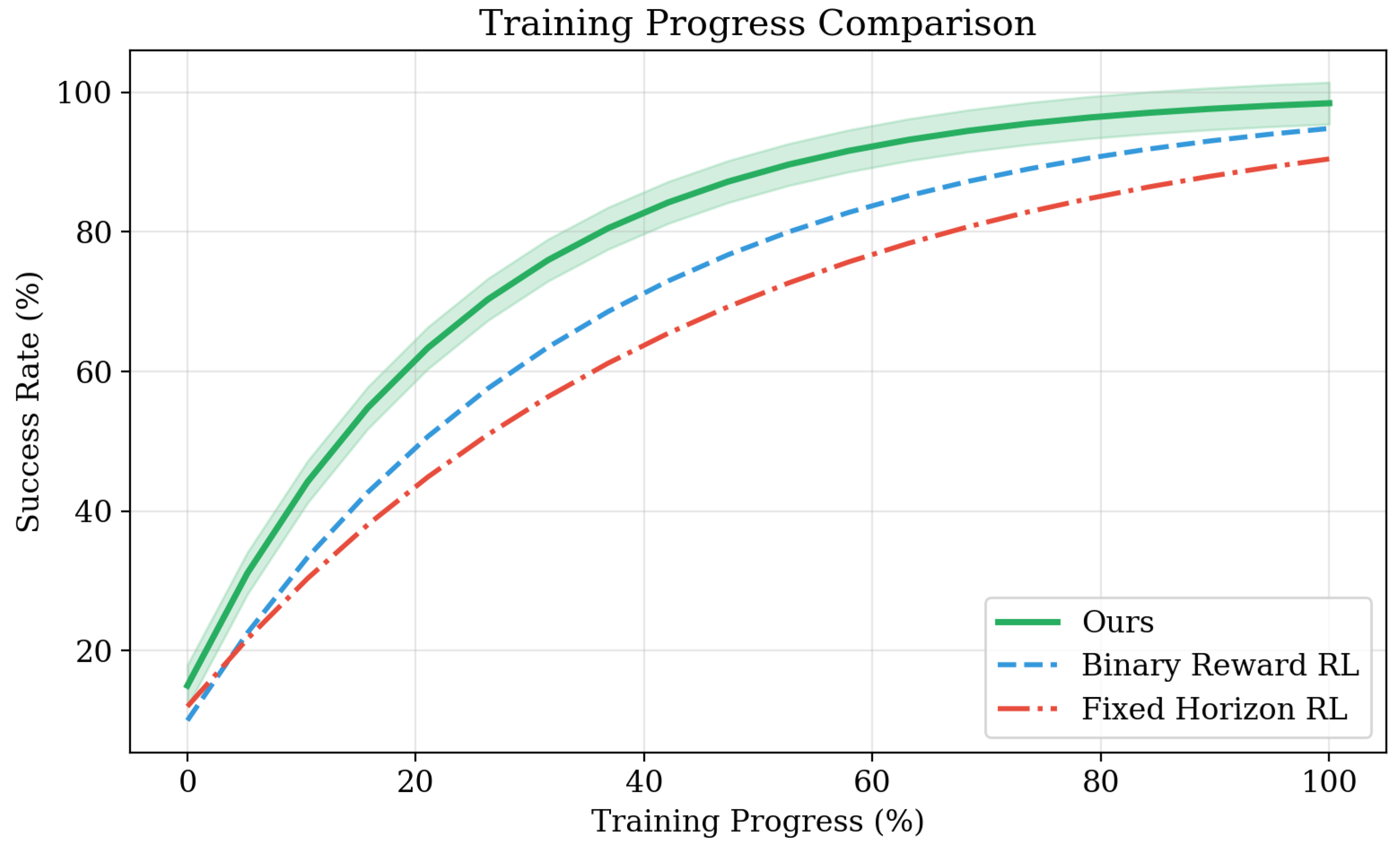

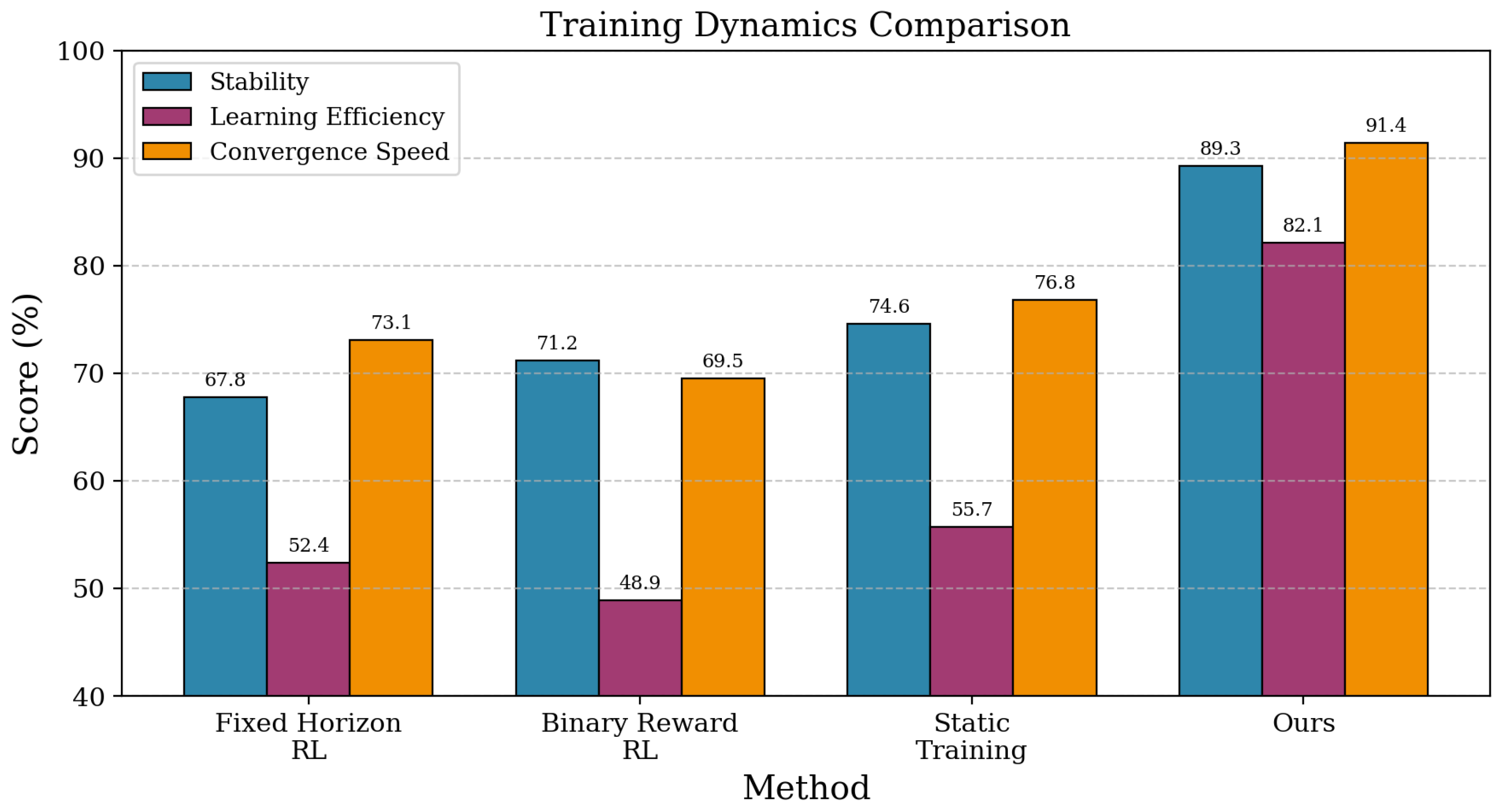

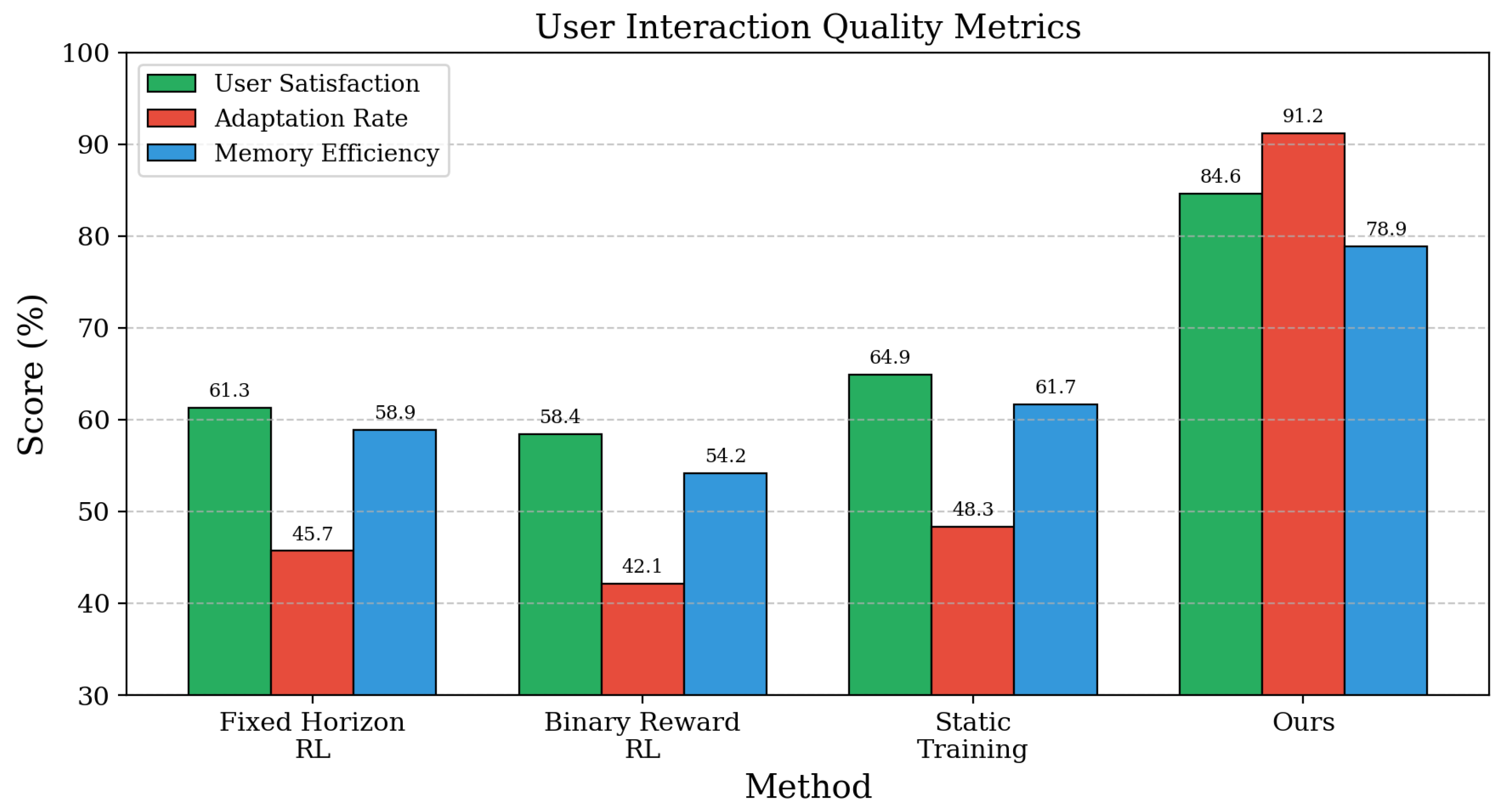

| Method | Stability | Learn. Eff. | Conv. Speed | User Sat. | Adapt. Rate | Mem. Eff. |
|---|---|---|---|---|---|---|
| Fixed Horizon RL | 67.8 | 52.4 | 73.1 | 61.3 | 45.7 | 58.9 |
| Binary Reward RL | 71.2 | 48.9 | 69.5 | 58.4 | 42.1 | 54.2 |
| Static Training | 74.6 | 55.7 | 76.8 | 64.9 | 48.3 | 61.7 |
| Ours | 89.3 | 82.1 | 91.4 | 84.6 | 91.2 | 78.9 |

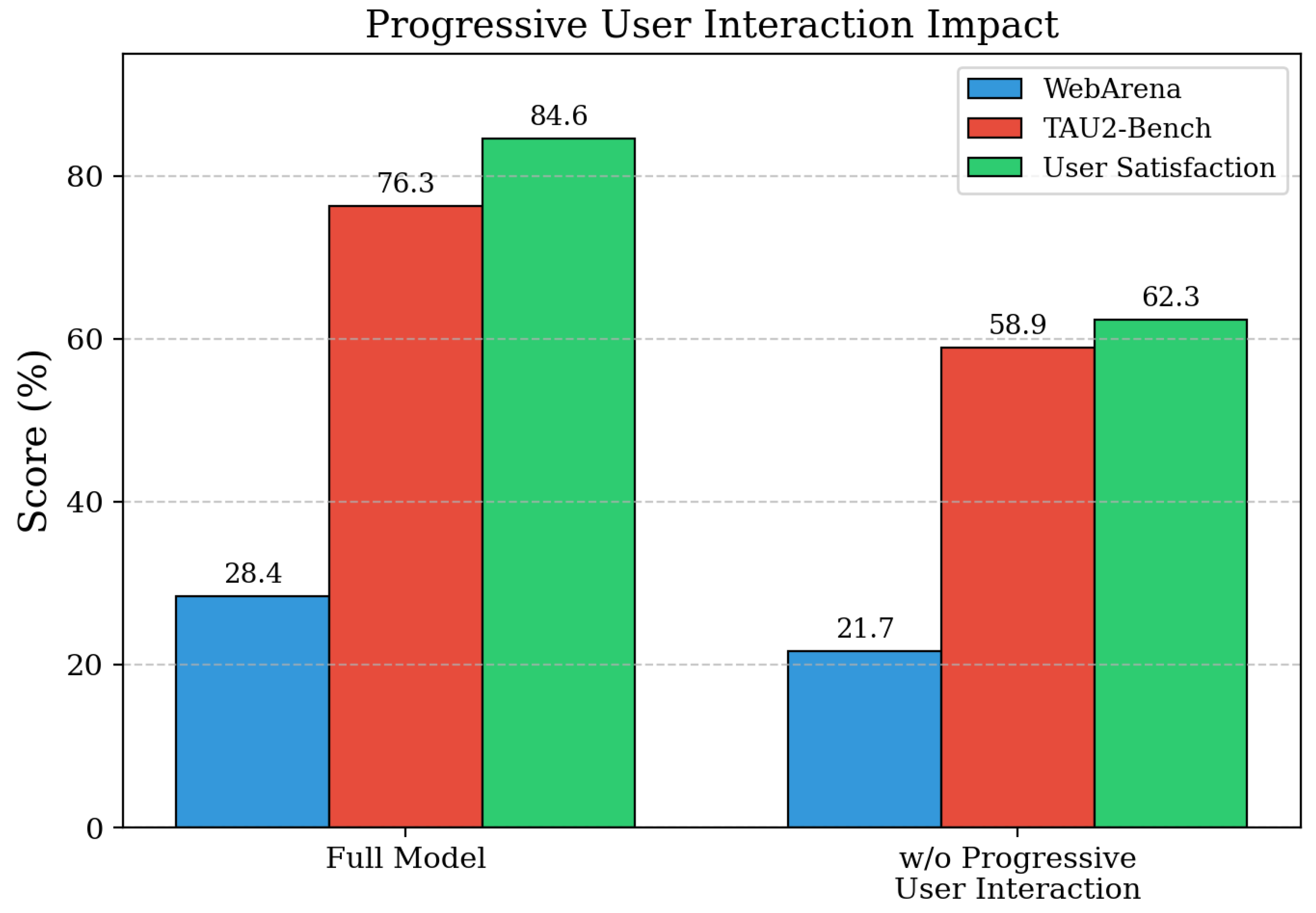

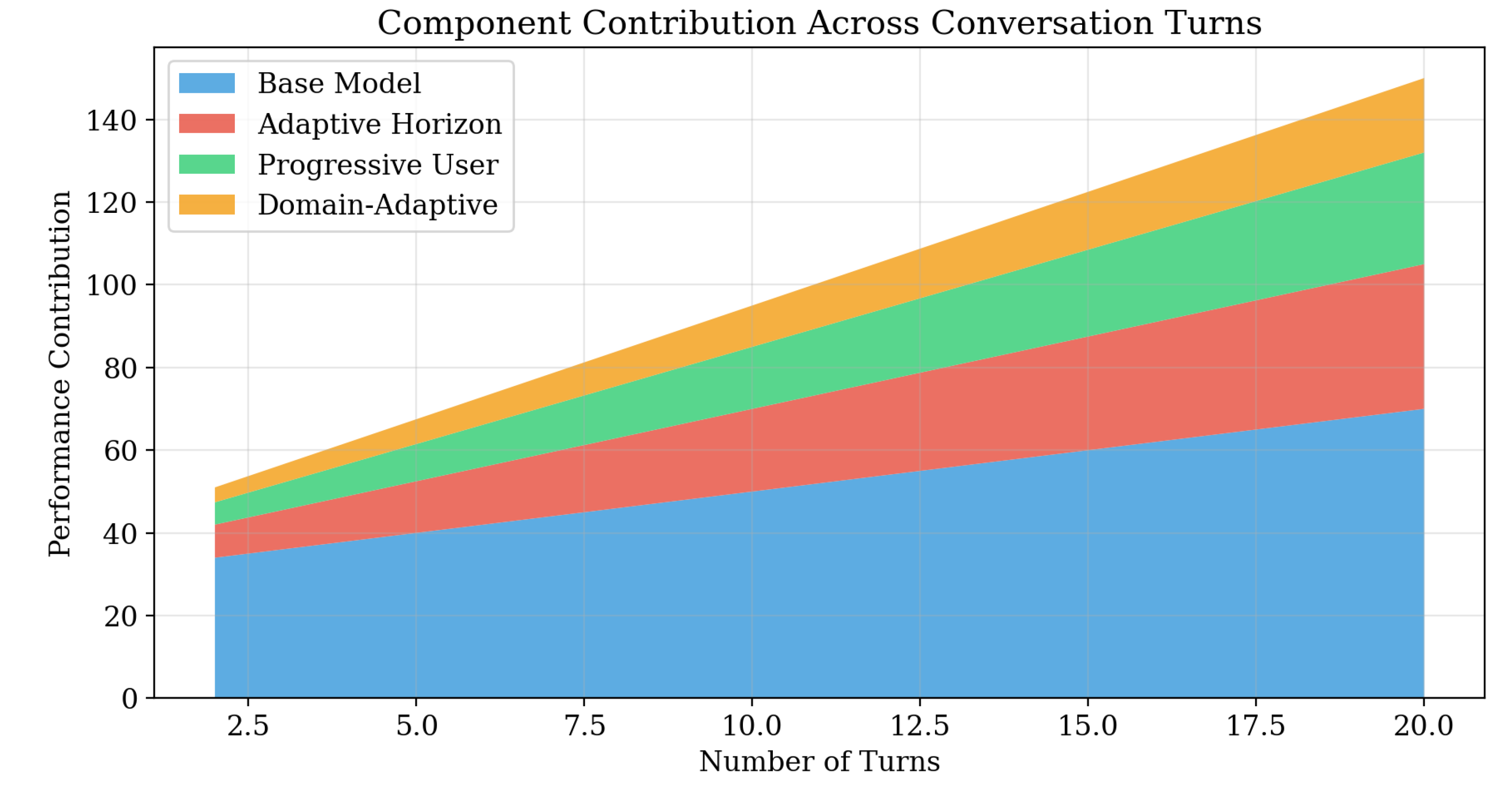

| Variant | WebArena | TAU2-Bench | User Satisfaction |
|---|---|---|---|
| Full Model | 28.4 | 76.3 | 84.6 |
| w/o Progressive User Interaction | 21.7 | 58.9 | 62.3 |

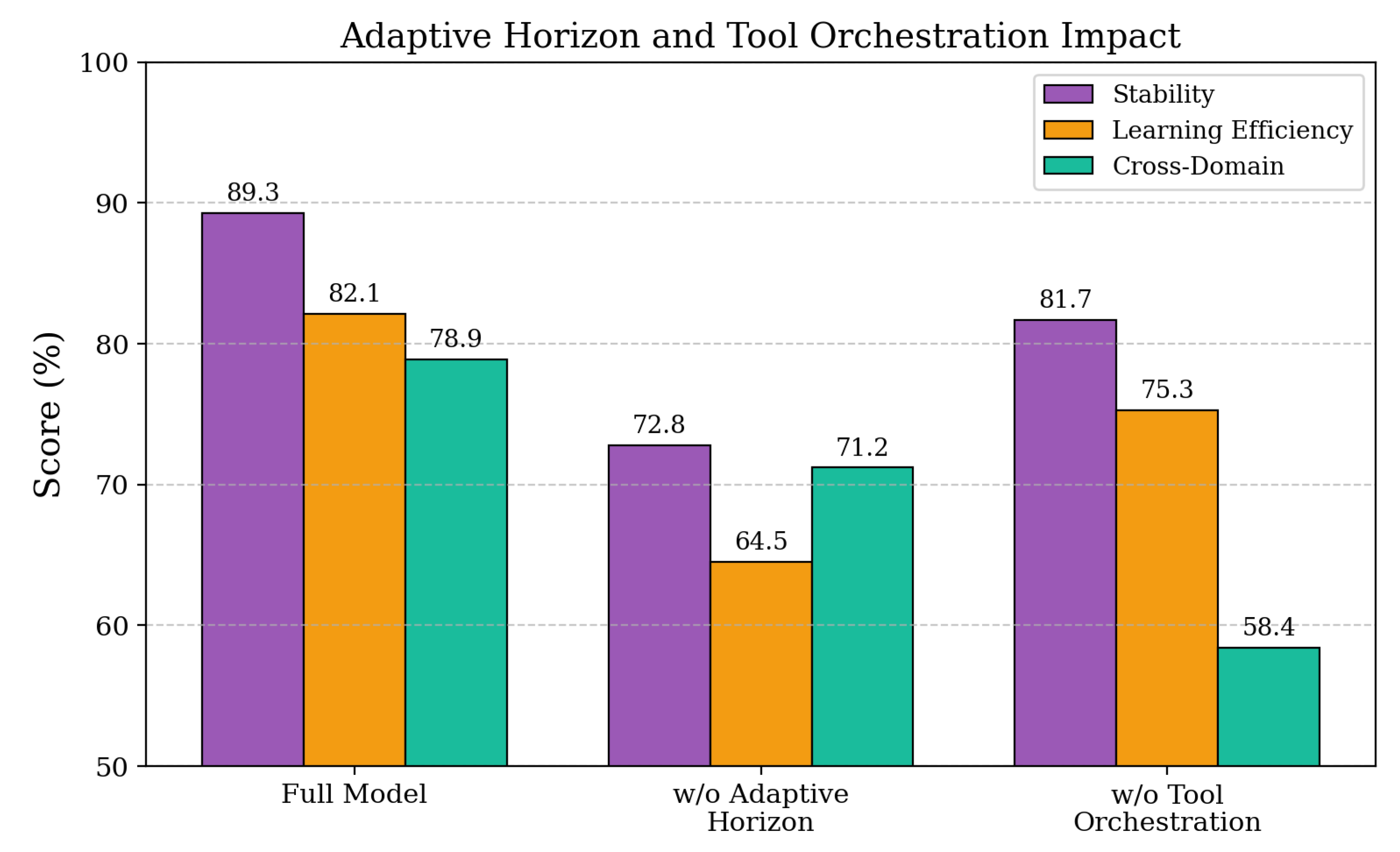

| Variant | Stability | Learn. Eff. | Cross-Domain |
|---|---|---|---|
| Full Model | 89.3 | 82.1 | 78.9 |
| w/o Adaptive Horizon Mgmt. | 72.8 | 64.5 | 71.2 |
| w/o Domain-Adaptive Tool Orch. | 81.7 | 75.3 | 58.4 |

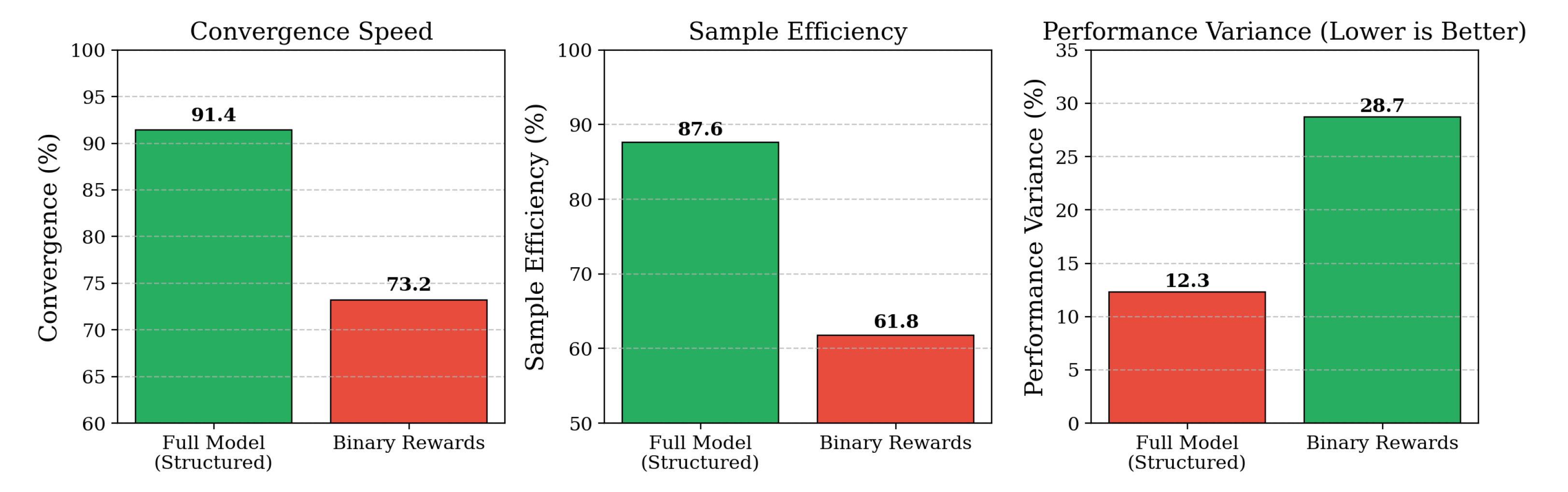

| Variant | Conv. | Sample Eff. | Perf. Var. |
|---|---|---|---|
| Full Model | 91.4 | 87.6 | 12.3 |
| Binary Rewards | 73.2 | 61.8 | 28.7 |

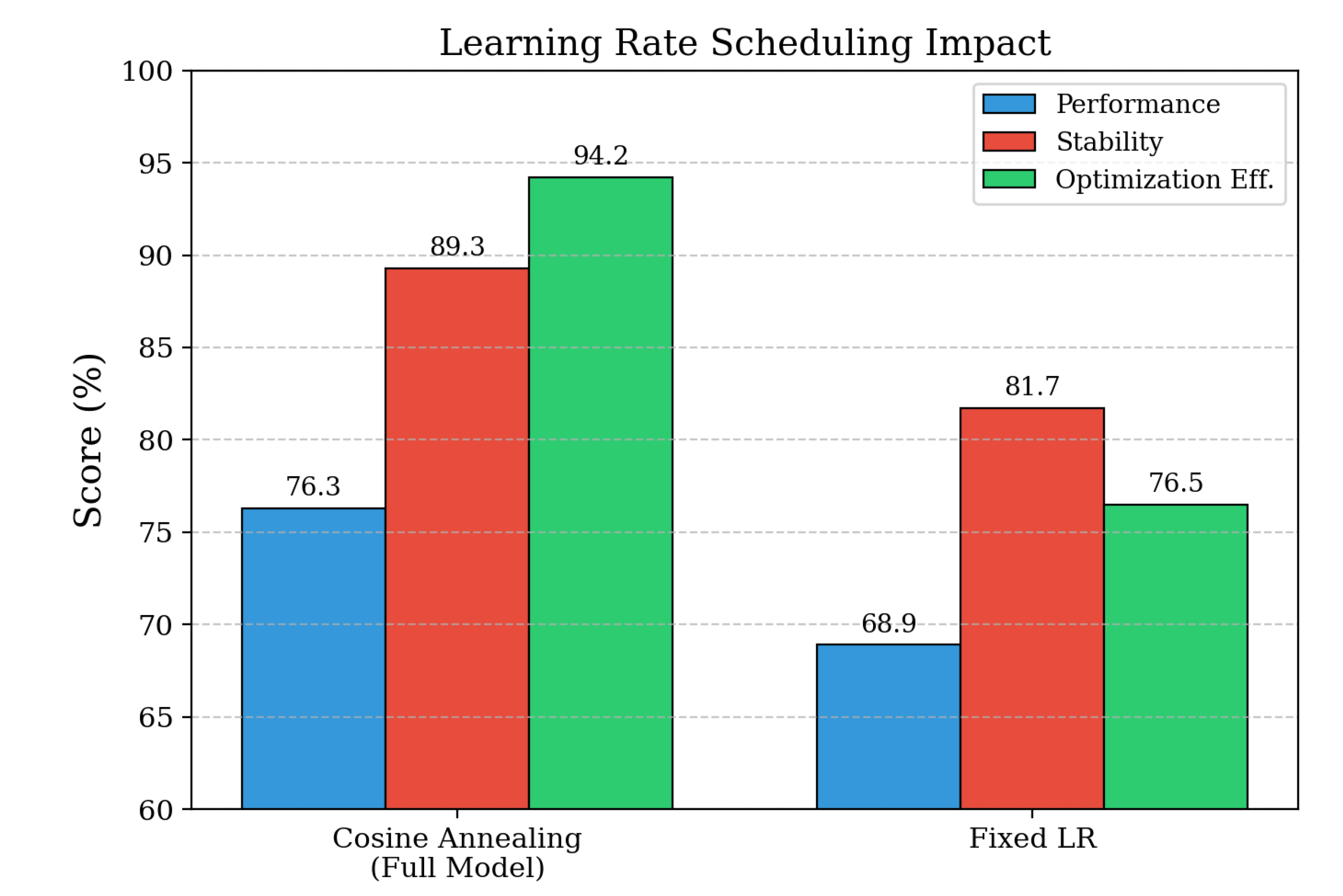

| Variant | Perf. | Stability | Opt. Eff. |
|---|---|---|---|
| Full Model | 76.3 | 89.3 | 94.2 |
| Fixed LR | 68.9 | 81.7 | 76.5 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).