1. Introduction

Optimal transport theory provides a geometrically meaningful framework for comparing probability measures by accounting for both mass distribution and the underlying space geometry. Since Kantorovich’s seminal formulation [1], the theory has evolved into a rich mathematical discipline with applications ranging from partial differential equations to machine learning. Comprehensive treatments can be found in the monographs of Villani [2,3].

Wasserstein distances, derived from optimal transport, have become indispensable tools in statistics, image analysis, and data science due to their favorable geometric properties. However, computational complexity remains a significant challenge, motivating the development of regularized formulations that preserve convexity while enabling efficient computation.

Entropic regularization, introduced by Cuturi [4], has emerged as the dominant approach, leading to the Sinkhorn algorithm, whose iterations have quadratic complexity in the support size. As surveyed by Peyré and Cuturi [5], this method enables large-scale applications but produces fully dense coupling matrices. While appropriate for many tasks, dense couplings may be undesirable in applications requiring sparse, interpretable transport plans, such as graph matching, feature correspondence, or problems with inherent sparsity structure.

In this work, we propose an alternative regularization based on the total variation (TV) norm. The TV norm has a long history in analysis and inverse problems, particularly in image processing where it preserves edges and promotes piecewise constant solutions [6]. We employ TV to penalize deviations of transport plans from the independent product of marginals, yielding a convex optimization problem with non-smooth regularization that naturally encourages sparse couplings.

Our contributions are threefold: (1) we define the TV-regularized optimal transport problem and establish its basic properties, including existence of minimizers; (2) we prove that for any positive regularization parameter, the functional defines a complete metric on the space of probability measures; (3) we analyze limiting behavior as the regularization parameter varies and present a discrete linear programming formulation. Throughout, we emphasize theoretical understanding while noting computational implications.

The paper is structured as follows: Section 2 establishes notation and reviews necessary background. Section 3 studies properties of the TV deviation functional. Section 4 defines the regularized problem and proves existence. Section 5 establishes metric properties with detailed proofs. Section 6 analyzes limiting regimes. Section 7 presents the discrete formulation. Section 8 provides illustrative examples, and Section 9 concludes with future directions.

2. Preliminaries and Notation

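Let $(\Omega, d)$ be a compact metric space. We denote by $\mathcal{P}(\Omega)$ the set of Borel probability measures on $\Omega$. For $\mu, \nu \in \mathcal{P}(\Omega)$, let $\Pi(\mu,\nu)$ denote the set of couplings (transport plans) with marginals $\mu$ and $\nu$, i.e., probability measures $\pi$ on $\Omega \times \Omega$ satisfying $\pi(A \times \Omega) = \mu(A)$ and $\pi(\Omega \times B) = \nu(B)$ for all Borel sets $A, B \subseteq \Omega$.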

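Given a continuous cost function $c : \Omega \times \Omega \to [0,\infty)$, the Kantorovich optimal transport problem is

$$\inf_{\pi \in \Pi(\mu,\nu)} \int_{\Omega \times \Omega} c(x,y)\, d\pi(x,y).$$

When $c = d^p$ for $p \ge 1$, the $1/p$-power of this infimum defines the $p$-Wasserstein distance $W_p(\mu,\nu)$.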

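The total variation norm of a finite signed measure $\eta$ on $\Omega$ is

$$\|\eta\|_{\mathrm{TV}} = \sup\left\{ \int_\Omega f \, d\eta \;:\; f \in C_b(\Omega),\ \|f\|_\infty \le 1 \right\},$$

where $C_b(\Omega)$ denotes bounded continuous functions. Equivalently,

$$\|\eta\|_{\mathrm{TV}} = \sup_{A \in \mathcal{B}(\Omega)} \bigl( \eta(A) - \eta(\Omega \setminus A) \bigr),$$

where $\mathcal{B}(\Omega)$ is the Borel $\sigma$-algebra. This norm induces the strong topology on measures and plays a fundamental role in probability and statistics [7,8].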

For $\mu, \nu \in \mathcal{P}(\Omega)$, we denote by $\mu \otimes \nu$ their product measure on $\Omega \times \Omega$, representing the joint distribution of independent random variables with marginals $\mu$ and $\nu$.

3. Total Variation Deviation from Independence

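Definition 1 (TV deviation from independence). For $\mu, \nu \in \mathcal{P}(\Omega)$ and $\pi \in \Pi(\mu,\nu)$, define

$$D_{\mathrm{TV}}(\pi) = \|\pi - \mu \otimes \nu\|_{\mathrm{TV}}.$$

This functional measures how much the coupling $\pi$ deviates from statistical independence. It vanishes precisely when $\pi = \mu \otimes \nu$, and achieves its maximum value of 2 when $\pi$ is singular with respect to $\mu \otimes \nu$.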
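For finitely supported measures the definition reduces to an entrywise sum, which makes the two extreme cases easy to check numerically. The sketch below is illustrative only: the helper name `tv_deviation` and the representation of a coupling as a nested list are assumptions made here, not notation from the paper.

```python
def tv_deviation(coupling):
    """Total variation deviation of a discrete coupling from the product of its marginals.

    `coupling` is a nested list with non-negative entries summing to 1;
    the marginals are recovered as its row and column sums.
    """
    row_marginal = [sum(row) for row in coupling]
    col_marginal = [sum(col) for col in zip(*coupling)]
    return sum(
        abs(coupling[i][j] - row_marginal[i] * col_marginal[j])
        for i in range(len(row_marginal))
        for j in range(len(col_marginal))
    )


n = 4
uniform = [1.0 / n] * n
# Independent coupling of two uniform measures: the deviation is zero.
product = [[p * q for q in uniform] for p in uniform]
# Diagonal (identity) coupling of the uniform measure with itself.
diagonal = [[1.0 / n if i == j else 0.0 for j in range(n)] for i in range(n)]

print(tv_deviation(product))   # 0.0
print(tv_deviation(diagonal))  # 1.5, i.e. 2 * (1 - 1/n)
```

For the diagonal coupling of the uniform measure on n points the value is 2(1 - 1/n), which approaches the upper bound 2 as n grows.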

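Proposition 1 (Properties of $D_{\mathrm{TV}}$). The functional $D_{\mathrm{TV}}(\pi) = \|\pi - \mu \otimes \nu\|_{\mathrm{TV}}$ satisfies:

- (i) Convexity: For any $\pi_1, \pi_2 \in \Pi(\mu,\nu)$ and $t \in [0,1]$,

  $$D_{\mathrm{TV}}(t\pi_1 + (1-t)\pi_2) \le t\, D_{\mathrm{TV}}(\pi_1) + (1-t)\, D_{\mathrm{TV}}(\pi_2).$$

- (ii) Lower semicontinuity: If $\pi_n \to \pi$ weakly in $\Pi(\mu,\nu)$, then

  $$D_{\mathrm{TV}}(\pi) \le \liminf_{n \to \infty} D_{\mathrm{TV}}(\pi_n).$$

- (iii) Subadditivity: For any $\mu, \nu, \rho \in \mathcal{P}(\Omega)$, $\pi_1 \in \Pi(\mu,\nu)$, and $\pi_2 \in \Pi(\nu,\rho)$,

  $$\|\pi - \mu \otimes \rho\|_{\mathrm{TV}} \le \|\pi_1 - \mu \otimes \nu\|_{\mathrm{TV}} + \|\pi_2 - \nu \otimes \rho\|_{\mathrm{TV}},$$

  where $\pi \in \Pi(\mu,\rho)$ is the gluing of $\pi_1$ and $\pi_2$.

- (iv) Bounds: $0 \le D_{\mathrm{TV}}(\pi) \le 2$ for all $\pi \in \Pi(\mu,\nu)$.

Proof. (i) Convexity follows from the triangle inequality for $\|\cdot\|_{\mathrm{TV}}$. (ii) Lower semicontinuity holds because $\|\cdot\|_{\mathrm{TV}}$ is lower semicontinuous with respect to weak convergence. (iii) For subadditivity, let $\gamma$ be a gluing of $\pi_1$ and $\pi_2$, and $\pi = (\mathrm{proj}_{1,3})_\# \gamma$. Then

$$\|\pi - \mu \otimes \rho\|_{\mathrm{TV}} \le \|\pi_1 - \mu \otimes \nu\|_{\mathrm{TV}} + \|\pi_2 - \nu \otimes \rho\|_{\mathrm{TV}},$$

using the triangle inequality and properties of product measures. (iv) The bounds follow from $\|\sigma\|_{\mathrm{TV}} = 1$ for any probability measure $\sigma$. □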
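The one-line argument for (i) can be spelled out as follows. This is a standard expansion, written for $\pi_1, \pi_2 \in \Pi(\mu,\nu)$ and $t \in [0,1]$; note that the convex combination again has marginals $\mu$ and $\nu$, so it is compared against the same product measure:

$$
\begin{aligned}
\|t\pi_1 + (1-t)\pi_2 - \mu \otimes \nu\|_{\mathrm{TV}}
 &= \|t(\pi_1 - \mu \otimes \nu) + (1-t)(\pi_2 - \mu \otimes \nu)\|_{\mathrm{TV}} \\
 &\le t\, \|\pi_1 - \mu \otimes \nu\|_{\mathrm{TV}} + (1-t)\, \|\pi_2 - \mu \otimes \nu\|_{\mathrm{TV}}.
\end{aligned}
$$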

4. TV-Regularized Optimal Transport

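Definition 2 (TV-regularized optimal transport). Let $\mu, \nu \in \mathcal{P}(\Omega)$, $\lambda \ge 0$, and $c : \Omega \times \Omega \to [0,\infty)$ be continuous. Define

$$\mathcal{T}_\lambda(\mu,\nu) = \inf_{\pi \in \Pi(\mu,\nu)} \left\{ \int_{\Omega \times \Omega} c \, d\pi + \lambda\, \|\pi - \mu \otimes \nu\|_{\mathrm{TV}} \right\}.$$

The parameter $\lambda$ controls the trade-off between transportation cost and deviation from independence. When $\lambda = 0$, we recover the classical optimal transport problem. As $\lambda$ increases, couplings close to the independent product $\mu \otimes \nu$ are favored.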

Theorem 2 (Existence of minimizers). For any $\mu, \nu \in \mathcal{P}(\Omega)$ and $\lambda \ge 0$, the infimum in the definition of $\mathcal{T}_\lambda(\mu,\nu)$ is attained.

Proof. The set $\Pi(\mu,\nu)$ is compact in the weak topology by Prokhorov’s theorem, as $\Omega$ is compact. The objective functional $\pi \mapsto \int c \, d\pi + \lambda \|\pi - \mu \otimes \nu\|_{\mathrm{TV}}$ is lower semicontinuous: the first term is continuous by continuity of $c$ and weak convergence, while the second term is lower semicontinuous by Proposition 1(ii). A lower semicontinuous function on a compact set attains its minimum. □

5. Metric Properties

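We now focus on the case $c = d$, where $d$ is the metric on $\Omega$.

Proposition 3 (Basic properties). For any $\mu, \nu \in \mathcal{P}(\Omega)$ and $\lambda \ge 0$:

- (i) $\mathcal{T}_\lambda(\mu,\nu) \ge 0$ (non-negativity)

- (ii) $\mathcal{T}_\lambda(\mu,\nu) = \mathcal{T}_\lambda(\nu,\mu)$ (symmetry)

- (iii) $\mathcal{T}_\lambda(\mu,\nu) \le \operatorname{diam}(\Omega)$ (boundedness)

Proof. (i) Both terms in the definition are non-negative. (ii) Symmetry follows from symmetry of $d$ and $\|\cdot\|_{\mathrm{TV}}$. (iii) Using the independent coupling $\mu \otimes \nu$ gives $\mathcal{T}_\lambda(\mu,\nu) \le \int d \, d(\mu \otimes \nu)$, so $\mathcal{T}_\lambda(\mu,\nu) \le \operatorname{diam}(\Omega)$ since $d \le \operatorname{diam}(\Omega)$. □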

Theorem 4 (Identity of indiscernibles). For , if and only if .

Proof. If , the diagonal coupling satisfies and so .

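Conversely, suppose $\mathcal{T}_\lambda(\mu,\nu) = 0$. Let $(\pi_n) \subset \Pi(\mu,\nu)$ be a minimizing sequence with

$$\int d \, d\pi_n + \lambda\, \|\pi_n - \mu \otimes \nu\|_{\mathrm{TV}} \to 0.$$

Since both terms are non-negative, we have $\int d \, d\pi_n \to 0$ and $\lambda\, \|\pi_n - \mu \otimes \nu\|_{\mathrm{TV}} \to 0$. The first condition implies $\pi_n$ concentrates on the diagonal $\Delta = \{(x,x) : x \in \Omega\}$, which forces $\mu = \nu$ as marginals. □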

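Theorem 5 (Triangle inequality). For any $\mu, \nu, \rho \in \mathcal{P}(\Omega)$ and $\lambda \ge 0$,

$$\mathcal{T}_\lambda(\mu,\rho) \le \mathcal{T}_\lambda(\mu,\nu) + \mathcal{T}_\lambda(\nu,\rho).$$

Proof. Let $\varepsilon > 0$. Choose $\pi_1 \in \Pi(\mu,\nu)$ and $\pi_2 \in \Pi(\nu,\rho)$ such that

$$\int d \, d\pi_1 + \lambda\, \|\pi_1 - \mu \otimes \nu\|_{\mathrm{TV}} \le \mathcal{T}_\lambda(\mu,\nu) + \varepsilon, \qquad \int d \, d\pi_2 + \lambda\, \|\pi_2 - \nu \otimes \rho\|_{\mathrm{TV}} \le \mathcal{T}_\lambda(\nu,\rho) + \varepsilon.$$

By the gluing lemma [3], there exists $\gamma \in \mathcal{P}(\Omega^3)$ with marginals $\pi_1$ (on the first two coordinates) and $\pi_2$ (on the last two coordinates). Define $\pi = (\mathrm{proj}_{1,3})_\# \gamma \in \Pi(\mu,\rho)$.

For the transport cost, by the triangle inequality for $d$:

$$\int d(x,z) \, d\pi(x,z) = \int d(x,z) \, d\gamma(x,y,z) \le \int \bigl[ d(x,y) + d(y,z) \bigr] \, d\gamma(x,y,z) = \int d \, d\pi_1 + \int d \, d\pi_2.$$

For the TV term, by Proposition 1(iii):

$$\|\pi - \mu \otimes \rho\|_{\mathrm{TV}} \le \|\pi_1 - \mu \otimes \nu\|_{\mathrm{TV}} + \|\pi_2 - \nu \otimes \rho\|_{\mathrm{TV}}.$$

Combining these estimates:

$$\mathcal{T}_\lambda(\mu,\rho) \le \int d \, d\pi + \lambda\, \|\pi - \mu \otimes \rho\|_{\mathrm{TV}} \le \mathcal{T}_\lambda(\mu,\nu) + \mathcal{T}_\lambda(\nu,\rho) + 2\varepsilon.$$

Since $\varepsilon$ was arbitrary, the result follows. □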
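The gluing step can be made concrete for finitely supported measures. The sketch below uses the conditionally independent gluing $\gamma_{ijk} = \pi_1(i,j)\,\pi_2(j,k)/\nu_j$, which is one standard choice and an assumption here (the proof only requires that some gluing exists). The function names and the example data are hypothetical.

```python
def glue(pi1, pi2):
    """Compose two discrete couplings that share their middle marginal.

    pi1 has marginals (mu, nu) and pi2 has marginals (nu, rho). Returns the
    (1,3)-marginal of gamma[i][j][k] = pi1[i][j] * pi2[j][k] / nu[j].
    """
    nu = [sum(col) for col in zip(*pi1)]  # second marginal of pi1
    n_rows, n_mid, n_cols = len(pi1), len(nu), len(pi2[0])
    pi13 = [[0.0] * n_cols for _ in range(n_rows)]
    for j in range(n_mid):
        if nu[j] == 0.0:
            continue  # no mass passes through this middle point
        for i in range(n_rows):
            for k in range(n_cols):
                pi13[i][k] += pi1[i][j] * pi2[j][k] / nu[j]
    return pi13


def tv_deviation(coupling):
    """Sum of |coupling - product of its marginals| over all entries."""
    rows = [sum(r) for r in coupling]
    cols = [sum(c) for c in zip(*coupling)]
    return sum(abs(coupling[i][j] - rows[i] * cols[j])
               for i in range(len(rows)) for j in range(len(cols)))


# Hypothetical data: mu = (0.5, 0.5), nu = (0.4, 0.6), rho = (0.3, 0.7).
pi1 = [[0.4, 0.1], [0.0, 0.5]]
pi2 = [[0.3, 0.1], [0.0, 0.6]]
pi13 = glue(pi1, pi2)

print([sum(r) for r in pi13])        # first marginal, should match mu
print([sum(c) for c in zip(*pi13)])  # second marginal, should match rho
print(tv_deviation(pi13) <= tv_deviation(pi1) + tv_deviation(pi2))
```

The last line checks the subadditivity estimate of Proposition 1(iii) on this one example; it is a sanity check, not a proof.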

Corollary 6 (Metric property). For any $\lambda > 0$, $\mathcal{T}_\lambda$ defines a metric on $\mathcal{P}(\Omega)$.

Proof. Combine Proposition 3 (non-negativity, symmetry), Theorem 4 (identity of indiscernibles), and Theorem 5 (triangle inequality). □

6. Limiting Regimes

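Theorem 7 (Recovery of Wasserstein distance). For $\mu, \nu \in \mathcal{P}(\Omega)$,

$$\lim_{\lambda \to 0^+} \mathcal{T}_\lambda(\mu,\nu) = W_1(\mu,\nu).$$

Moreover, if $\pi_\lambda$ is a minimizer for $\mathcal{T}_\lambda(\mu,\nu)$, then any weak limit point of $\pi_\lambda$ as $\lambda \to 0$ is an optimal coupling for $W_1(\mu,\nu)$.

Proof. For any $\pi \in \Pi(\mu,\nu)$ and $\lambda \ge 0$,

$$\int d \, d\pi \le \int d \, d\pi + \lambda\, \|\pi - \mu \otimes \nu\|_{\mathrm{TV}} \le \int d \, d\pi + 2\lambda.$$

Taking infimum over $\pi \in \Pi(\mu,\nu)$ gives

$$W_1(\mu,\nu) \le \mathcal{T}_\lambda(\mu,\nu) \le W_1(\mu,\nu) + 2\lambda,$$

since $\|\pi - \mu \otimes \nu\|_{\mathrm{TV}} \le 2$. The squeeze theorem yields the limit.

For the second claim, let $\lambda_k \to 0$ and $\pi_{\lambda_k} \to \pi^*$ weakly. Then

$$\int d \, d\pi^* = \lim_{k \to \infty} \int d \, d\pi_{\lambda_k} \le \lim_{k \to \infty} \mathcal{T}_{\lambda_k}(\mu,\nu) = W_1(\mu,\nu),$$

so $\pi^*$ is optimal for $W_1(\mu,\nu)$. □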

Theorem 8 (Large regularization limit)

. For ,

Proof. For any

,

Taking

gives

for all

, hence

where the last equality follows from [

8].

For the lower bound, note that for any

,

□

7. Discrete Formulation and Computation

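Consider discrete measures $\mu = \sum_{i=1}^{n} a_i \delta_{x_i}$ and $\nu = \sum_{j=1}^{m} b_j \delta_{y_j}$ with $a \in \Delta_n$, $b \in \Delta_m$ (probability simplices). Let $C_{ij} = c(x_i, y_j)$.

Proposition 9 (Linear programming formulation). The discrete TV-regularized optimal transport problem is equivalent to:

$$
\begin{aligned}
\min_{\pi,\, s^+,\, s^-} \quad & \sum_{i,j} C_{ij}\, \pi_{ij} + \lambda \sum_{i,j} \bigl( s^+_{ij} + s^-_{ij} \bigr) \\
\text{s.t.} \quad & \sum_{j} \pi_{ij} = a_i, \qquad \sum_{i} \pi_{ij} = b_j, \\
& \pi_{ij} - a_i b_j = s^+_{ij} - s^-_{ij}, \\
& \pi_{ij},\ s^+_{ij},\ s^-_{ij} \ge 0,
\end{aligned}
$$

where $s^+_{ij}, s^-_{ij}$ represent positive and negative parts of the deviation $\pi_{ij} - a_i b_j$.

Proof. The TV norm in discrete setting becomes the $\ell^1$ norm: $\|\pi - a \otimes b\|_{\mathrm{TV}} = \sum_{i,j} |\pi_{ij} - a_i b_j|$. Introducing slack variables $s^+_{ij}, s^-_{ij} \ge 0$ with $\pi_{ij} - a_i b_j = s^+_{ij} - s^-_{ij}$ and $|\pi_{ij} - a_i b_j| = s^+_{ij} + s^-_{ij}$ at the optimum yields the linear program. □

This formulation involves $O(nm)$ variables and constraints, making it solvable by standard linear programming algorithms (simplex, interior-point methods) for moderate $n$ and $m$.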
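A minimal sketch of how this linear program might be assembled and passed to a generic solver, assuming NumPy and SciPy are available. The function name `tv_regularized_ot`, the stacking of the variables, and the choice of the HiGHS backend of `scipy.optimize.linprog` are illustrative assumptions, not part of the paper.

```python
import numpy as np
from scipy.optimize import linprog


def tv_regularized_ot(a, b, C, lam):
    """Solve the discrete TV-regularized OT linear program.

    Variables are stacked as x = [pi, s_plus, s_minus], each flattened
    row-major to length n*m. Returns (optimal value, optimal coupling).
    """
    a, b, C = np.asarray(a, float), np.asarray(b, float), np.asarray(C, float)
    n, m = C.shape
    nm = n * m
    # Objective: <C, pi> + lam * sum(s_plus + s_minus).
    cost = np.concatenate([C.ravel(), lam * np.ones(2 * nm)])

    # Marginal constraints act on pi only.
    row_sums = np.kron(np.eye(n), np.ones((1, m)))  # sum_j pi_ij = a_i
    col_sums = np.kron(np.ones((1, n)), np.eye(m))  # sum_i pi_ij = b_j
    marginals = np.hstack([np.vstack([row_sums, col_sums]),
                           np.zeros((n + m, 2 * nm))])
    # Deviation split: pi - s_plus + s_minus = outer(a, b).
    split = np.hstack([np.eye(nm), -np.eye(nm), np.eye(nm)])

    A_eq = np.vstack([marginals, split])
    b_eq = np.concatenate([a, b, np.outer(a, b).ravel()])
    res = linprog(cost, A_eq=A_eq, b_eq=b_eq, bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.fun, res.x[:nm].reshape(n, m)


# Hypothetical two-point example: common support {0, 1}, uniform weights.
a = b = [0.5, 0.5]
C = [[0.0, 1.0], [1.0, 0.0]]
for lam in (0.1, 1.0):
    value, plan = tv_regularized_ot(a, b, C, lam)
    print(lam, round(value, 6), np.round(plan, 6))
```

With this stacking the program has 3nm variables and nm + n + m equality constraints. Dense constraint matrices are used for readability; for larger supports a sparse representation would be the natural substitute.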

Table 1. Comparison of transport formulations ($n$: support size, $\varepsilon$: entropic regularization parameter, $\lambda$: TV regularization parameter).

| Method | Metric? | Computational Complexity | Sparsity of π* | Convergence λ → 0 |
| --- | --- | --- | --- | --- |
| Classical OT (unregularized) | Yes | | High | N/A |
| Entropic OT [4] | Yes | | None | |
| TV-regularized OT | Yes | (LP) | Moderate | |

8. Examples and Illustrations

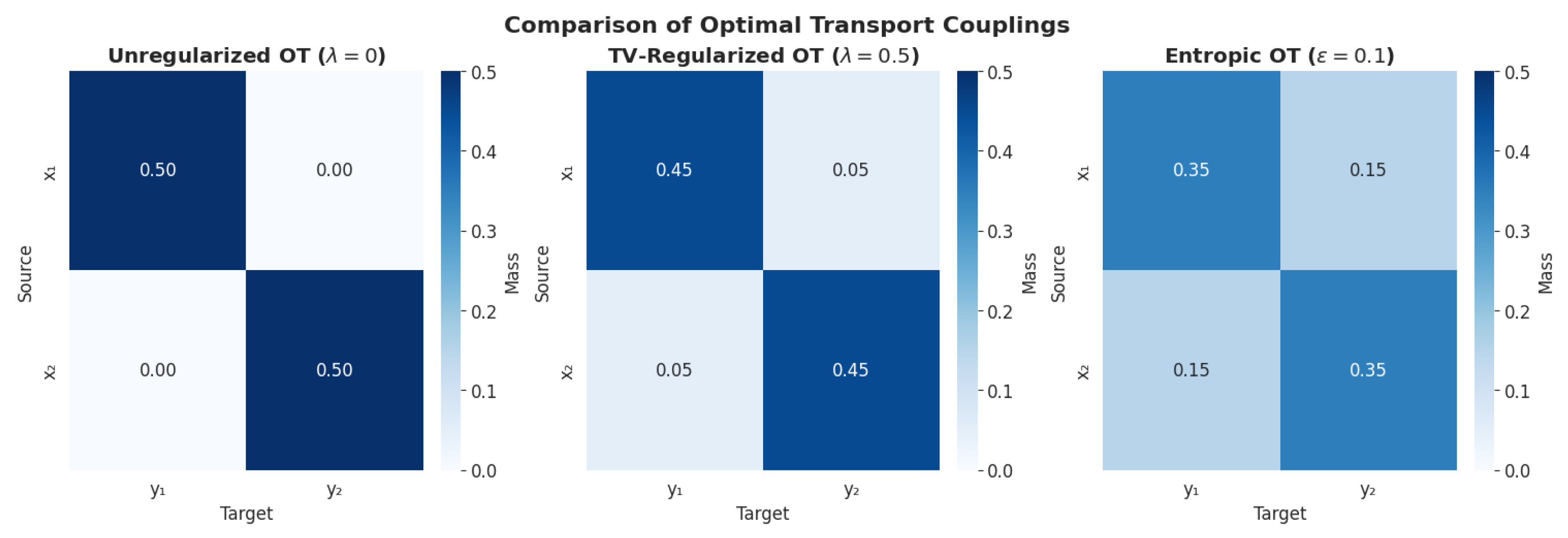

Example 10 (Two-point distributions). Let , , with . The optimal couplings for different λ are:

: , ,

: , ,

: ,

The unregularized solution is sparse (two zeros), while entropic regularization would yield a fully dense matrix. TV regularization produces intermediate sparsity.
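For two-point measures the set of couplings is one-dimensional, so the regularized problem can be solved exactly by inspecting three candidate points. The sketch below assumes a hypothetical setup (both measures on the same two points at distance `delta`, with weights `(a, 1-a)` and `(b, 1-b)`); these values are illustrative and are not those of Example 10.

```python
def two_point_tv_ot(a, b, delta, lam):
    """Exact TV-regularized OT between two measures on the same two points.

    mu = (a, 1-a), nu = (b, 1-b), d(x1, x2) = delta. Couplings have the form
    [[t, a-t], [b-t, 1-a-b+t]]; the objective is piecewise linear and convex
    in t, so a minimizer lies at an endpoint of the feasible interval or at
    the kink t = a*b (the independent coupling).
    """
    def objective(t):
        transport = delta * ((a - t) + (b - t))  # mass moved off the diagonal
        deviation = 4.0 * abs(t - a * b)         # each of the four entries deviates by |t - ab|
        return transport + lam * deviation

    candidates = [max(0.0, a + b - 1.0), a * b, min(a, b)]
    t = min(candidates, key=objective)
    return objective(t), [[t, a - t], [b - t, 1.0 - a - b + t]]


a, b, delta = 0.5, 0.5, 1.0
w1, _ = two_point_tv_ot(a, b, delta, 0.0)  # lam = 0 is the unregularized problem
for lam in (0.0, 0.1, 1.0):
    value, plan = two_point_tv_ot(a, b, delta, lam)
    zeros = sum(entry == 0.0 for row in plan for entry in row)
    print(lam, value, plan, zeros)
```

In this hypothetical setup the minimizer is the unregularized plan for λ below `delta / 2` and the independent coupling for λ above it, and the computed values stay within the bounds $W_1 \le \mathcal{T}_\lambda \le W_1 + 2\lambda$ from the proof of Theorem 7.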

Example 11 (Gaussian distributions). Consider Gaussian distributions $\mu$ and $\nu$ on a domain discretized with $n$ points. Figure 1 (conceptual) shows that:

- For small λ, the optimal coupling approximates the monotone rearrangement (sparse in the sense of Monge map)

- For large λ, the coupling approaches the product measure (dense but independent)

- TV regularization preserves more sparsity than entropic regularization at comparable regularization strength

9. Discussion and Future Work

We have introduced and analyzed a total variation regularized optimal transport problem. The main theoretical contributions are:

- Existence of optimal transport plans (Theorem 2)

- Proof that $\mathcal{T}_\lambda$ defines a metric for $\lambda > 0$ (Corollary 6)

- Characterization of limiting behavior as $\lambda \to 0$ and $\lambda \to \infty$ (Theorems 7 and 8)

- Discrete linear programming formulation for computation

Compared to entropic regularization, the TV-regularized formulation offers distinct advantages for applications requiring sparse couplings but comes at higher computational cost due to the linear programming structure.

Future Research Directions

- Algorithmic development: Specialized algorithms (primal-dual methods, network flow formulations, cutting-plane methods) could improve computational efficiency beyond generic LP solvers.

- Metric geometry: Study geodesics, curvature, and other geometric properties of $(\mathcal{P}(\Omega), \mathcal{T}_\lambda)$. Does $\mathcal{T}_\lambda$ induce a geodesic metric space?

- Statistical properties: Analyze sample complexity, consistency, and robustness of estimators based on $\mathcal{T}_\lambda$. The TV component may provide robustness to outliers.

- Applications: Explore specific domains where sparse couplings are beneficial: graph matching, feature selection, multi-marginal problems with sparsity constraints.

- Extensions: Generalize to unbalanced optimal transport (allowing mass variation), dynamic formulations (Benamou-Brenier), and other cost functions beyond $c = d$.

- Hybrid approaches: Combine TV and entropic regularization to balance computational efficiency with sparsity promotion.

References

1. L. V. Kantorovich. On the translocation of masses. Doklady Akademii Nauk SSSR, 37(7–8):227–229, 1942.
2. C. Villani. Topics in Optimal Transportation. American Mathematical Society, 2003.
3. C. Villani. Optimal Transport: Old and New. Springer, 2009.
4. M. Cuturi. Sinkhorn distances: Lightspeed computation of optimal transport. In Advances in Neural Information Processing Systems, 2013.
5. G. Peyré and M. Cuturi. Computational optimal transport. Foundations and Trends in Machine Learning, 11(5–6):355–607, 2019.
6. L. I. Rudin, S. Osher, and E. Fatemi. Nonlinear total variation based noise removal algorithms. Physica D, 60:259–268, 1992.
7. V. I. Bogachev. Measure Theory. Springer, 2007.
8. A. L. Gibbs and F. E. Su. On choosing and bounding probability metrics. International Statistical Review, 70(3):419–435, 2002.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).