1. Introduction

Difference equations have been widely used to model time series by means of exponential functions. The parameters of the exponential functions result from an optimization process that minimizes the modelling error. These techniques suffer from numerical problems that affect the accuracy of the computed parameters. The frequencies and the dumping factors of the exponential functions are the roots of polynomials that result from the optimization process. However the numerical computation of roots is rather sensitive leading to compromised accuracy. To overcome this problem, alternative approaches for the computation of the exponential parameters were proposed in literature. Among the most efficient techniques are the well known ESPRIT and HSVD algorithms that perform well when it comes to spectral estimation but continue having numerical problems to predict future samples [

1,

2]. In addition round-off errors add-up creating significant deviation from the correct values of the parameters which compromises the prediction based on extrapolation of the exponential functions

. This paper exploits the null space of the Hankel matrix of the data allowing the prediction of future samples with better accuracy and confidence. In a more general sense the notion of null space refers to the set of eigenvectors of the data Hankel matrix which are associated with the smallest eigenvalues.

A generalized form of the new algorithm is also derived and applied to multichannel signals. Multichannel modeling has been widely used in signal processing applications. In particular, time varying multichannel signals are very common in real life applications such as video and multi source audio but also in econometrics while studying the combined evolution of various economic indices. The prediction of future vectors in a multichannel configuration has been an important problem with interesting applications. Among the most popular approaches are the multichannel autoregressive, subspace identification, deep learning and wavelet methods [

8]. Here the multichannel autoregressive technique is considered and a new algorithm for the computation of future vectors is presented. It exploits the null space of the block-like Hankel matrix constructed from existing vectors of the multichannel signal. Both cases with and without cross-channel coupling are considered and different algorithms are presented.

2. The Orthogonal Approach

Let us consider the

pth order Hankel matrix

H of a given set W of N samples w

1, w

2, … w

N with

N>p and size

px(N-p+1). The SVD of

H is

H=

U*S*V where

U is a

pxp matrix of singular vectors of size

p,

S is a

pxp diagonal matrix of singular values, arranged in ascending order and

V is a

px(N-p+1) matrix of singular vectors of size (N-p+1) [

3]. Assuming that the size of the null space of

H is q only p-q singular values in

S are non-zero and q of the singular vectors of

U namely

u1… uq span the null space of

H. If

Uq is a

pxq matrix of these vectors then the N-p+1 column vectors of

H are orthogonal to

Uq [

4,

5,

6]. The

(N+1)th unknown sample of the set W that has to be estimated, namely w(N+1), along with the p-1 known samples w(N-p+2), w(N-p+3), w(N-p+4) … w(N) form a new column vector of size

p, namely

x1=[w(N-p+2) w(N-p+3) w(N-p+4) … w(N) w(N+1)]

T which is part of the augmented Hankel matrix

Ha1=[

H x1]. Since all the vectors of

H are orthogonal to

Uq the same should hold for the vectors of the augmented matrix

Ha1, hence

x1 is also orthogonal to

Uq. By splitting

x1 into two parts, the known part namely

=[w(N-p+2) w(N-p+3) … w(N)] and the unknown part y

1=w(N+1) the following equation is obtained,

where

u1i consists of the p-1 first components of the i

th singular vector

ui and u

pi is the last component of

ui.

Equation (1) is only theoretically valid. In real life the orthogonality is only approximate and (1) should be reformulated as follows,

where e

1i is the approximation error. Best approximation will be achieved when the accumulated squared error is minimized,

which leads to the solution of the following minimization problem,

An equivalent minimization problem results after elaborating the above expression (3),

By setting to zero the first derivative of (4) with respect to y

1 the following solution is obtained,

3. The Case of Two Unknown Samples

To estimate w(N+1) and w(N+2) simultaneously a similar approach is used. Besides the vector

x1 a second column vector

x2 of size

p, consisting of p-2 known samples w(N-p+3), w(N-p+4),.. w(N) and two unknown samples, namely w(N+1) and w(N+2) is formed. The augmented Hankel matrix

Ha1 becomes

Ha2=[H x1 x2] and both vectors

x1 and

x2 have to be orthogonal to

Uq. Consequently next to equation (1) an additional equation to guarantee the orthogonality of

x2 is needed. Vector

x2 is split into two parts, the known part

s2 and the unknown components y

1 and y

2 with

=[w(N-p+3) w(N-p+4) … w(N)] and [y

1 y

2]=[w(N+1) w(N+2)]. Due to the orthogonality property the following must hold,

where

u2i consists of the p-2 first components of the i

th singular vector

ui and u

p-1,i and u

pi are the last two components of

ui.

As it was mentioned before, equation (6) is only theoretically valid. In real life the orthogonality is only approximate and (6) should be reformulated as follows,

where e

2i is the approximation error. For best estimates of both w(N+1) and w(N+2) the following combined optimization problem must be solved,

By using (1.1) and (7) the above minimization problem can be expressed as,

To solve the above the first derivatives of expression (9) with respect to y

1 and y

2 are set to zero. This leads to the following two equations,

The above linear system of equations provides best estimates for y 1 and y2.

4. The General Case of Many Unknown Samples

When three future samples need to be estimated, a third term in the optimization problem (8) is added [

7,

9]. By following the same type of notation equation (8) becomes,

where e

3i is the error due to the third unknown sample w(N+3)=y

3

By following the same notation as in the case of two unknown samples the error e

3i can be expressed as follows,

where

=[w(N-p+4) w(N-p+4) … w(N)],

u3i consists of the p-3 first components of the i

th singular vector

ui and u

p-2,i , u

p-1,i and u

pi are the last three components of

ui.

By setting the first derivatives of expression (11) with respect to y1, y2 and y3 to zero, a linear system of three equations is formed and its solution provides the estimates of the three unknown samples y1, y2 and y3 .

By replacing (1), (7) and (12) into expression (11) and by elaborating on the algebraic manipulations, the following set of equations is obtained,

The above system of equations (13) maybe expressed as,

where

y=[y

1 y

2 y

3],

A is a symmetric matrix and

b is a vector.

In a more general notation, the elements of the symmetric matrix

A for all j ≤ k and k≤n can be expressed as,

where

n stands for the number of samples to be estimated, namely

n=3 in this case.

Similarly, the elements of

b can be expressed as,

With

A and

b known, the estimation of

y is straightforward based on equation (14),

where

y is the vector of

n unknown future samples of the set W.

5. The Multichannel Solution Without Cross Channel Coupling

The above technique can be generalized and applied to the multichannel configuration. In particular for the case without cross channel coupling each vector is a linear combination of previous vectors according to the following equation,

where the coefficients

are scalars and

are the

N vectors of size

m of the multichannel signal [

8].

If matrix

Hb in (19) stands for the block Hankel matrix of the above multichannel signal, the error

for all

will be zero when the vector

is part of the null space of matrix

Hb

Assuming that the augmented block Hankel matrix

Hba=[

Hb h1] shares the same null space as

Hb, where

h1 is a

pxm matrix with its last row the unknown vector

as shown below,

then all column vectors of matrix

h1 should also be orthogonal to the null space of matrix

Hb

The SVD of Hb is Hb=Ub*Sb*Vb where Ub is a pxp matrix of singular vectors of size p, Sb is a pxp diagonal matrix of singular values, arranged in ascending order and Vb is a px[(N-p+1)*m] matrix of singular vectors of size (N-p+1)*m. Assuming that the size of the null space of Hb is q only p-q singular values in Sb are non-zero and q of the singular vectors of Ub namely ub1… ubq span the null space of Hb. If Ubq is a pxq matrix of these vectors then the (N-p+1)*m column vectors of Hb are orthogonal to Ubq. The m column vectors of h1 which should also be orthogonal to Ubq, consist of (p-1) known values and one unknown, their last element. They can be treated independently with null space the vectors in Ubq. Therefore to determine each of the m elements of vector , equation (5) can be used separately with each one of the m column vectors of matrix , after replacing the corresponding null space vectors ub1… ubq.

The elements of future vectors , k=2,…n can be determined by applying the equations (15), (16) and (17) and by using the corresponding null space vectors ub1… ubq.

More specifically, by introducing the matrices and assuming that they share the same null space as Hb, each one of the m column vectors of these matrices can be processed separately using equations (15), (16) and (17) to provide n future estimates for each individual channel.

6. The Multichannel Solution with Cross Channel Coupling

When cross channel coupling is assumed, each vector is a linear combination of previous vectors according to the following equation,

where the coefficients

are

mxm matrices,

are the

N vectors of size

m and

is the modelling error. By expanding equation (21) the

kth element of vector

, denoted as

, is equal to

where

are the

kth rows of the matrices,

respectively and

is the

kth element of the modelling error.

If matrix

Hbk in (23) stands for the block-like Hankel matrix which is associated to the

kth element of the multichannel signal, the error

for all

will be zero when the vector

is part of the null space of matrix

Hbk,

Assuming that the augmented block-like Hankel matrix

Hbka=[

Hbk hk] shares the same null space as

Hbk, where

hk is a

(p-1)*m+1 vector with its last element the

kth unknown sample

of the vector

, as shown below,

then vector

hk should also be orthogonal to the null space of matrix

Hbk.

The SVD of Hbk is Hbk=Ubk*Sbk*Vbk where Ubk is a [(p-1)*m+1]x[(p-1)*m+1] matrix of singular vectors of size (p-1)*m+1, Sbk is a [(p-1)*m+1]x[(p-1)*m+1] diagonal matrix of singular values, arranged in ascending order and Vbk is a [(p-1)*m+1]x(N-p+1) matrix of singular vectors of size (N-p+1). Assuming that the size of the null space of Hbk is q only [(p-1)*m+1]-q singular values in Sbk are non-zero and q of the singular vectors of Ubk namely ubk1… ubkq span the null space of Hbk. If Ubkq is a [(p-1)*m+1]xq matrix of these vectors then the (N-p+1) column vectors of Hbk along with vector hk are orthogonal to Ubkq. The vector hk consist of (p-1)*m known values and one unknown, its last element. The estimation of the unknown element can be done independently with corresponding null space the vectors in Ubkq. Hence for the unknown element of vector equation (5) can be used with corresponding null space the q vectors ubk1… ubkq.

In order to estimate all the elements of the unknown vector the same procedure is repeated for all m possible values of k. For each value of k the SVD of the block-like Hankel matrix in (23) has to be computed and the new null space vectors have to be used with equation (5).

7. Experimentation – Testing

The proposed algorithm was tested against the well known method ESPRIT that models the signal by means of exponential sinusoids. A large set of signals, four thousands in total, with variable complexity in terms of the rank of their covariance matrix was considered. The comparison was based on the accuracy of predicting future samples as well as on the overall consistency of the two algorithms. The term consistency refers to the homogeneity of the prediction errors. Each signal consists of 2000 to 2500 samples and it was generated from white noise that was filtered using random low pass FIR filters with hundreds of taps. Four sets of future samples were selected for the comparison. The latter is based on the RMSE among the predicted values and the actual values of the signals. The four sets refer to predicting the first, tenth, twentieth and thirtieth future samples using both the proposed and the ESPRIT algorithms and comparing the RMSEs of the two methods. To evaluate the algorithms with respect to their consistency the variance of the errors is used. In order to eliminate variations due to the differences of the signal amplitudes, all errors were normalized with respect to the actual value of the predicted sample.

For the ESPRIT method the prediction for each one of the 4000 signals is based on twenty different models with orders equally spaced between 140 and 900. By averaging these twenty different results the overall prediction is computed.

For the new method the size p of the Hankel matrix H is set to 600. Alternative choices for p were also tested showing no significant change in the results as long as p is not too small compared to the length N of the signals. Regarding the null space not only one single size for each signal is used. Instead, an optimum set of null spaces for each signal is considered and the overall prediction is computed by averaging individual results. There are two constraints while choosing the optimum set of null spaces, a) the sizes of the null spaces of the optimum set are consecutive numbers and b) the variance of the results of the set is minimal. The second constraint is imposed to guarantee maximum consistency of the chosen set.

Table 1 shows the obtained results for the four different setups in predicting future samples. In all cases the new method performs better than ESPRIT in terms of both RMSE and variance.

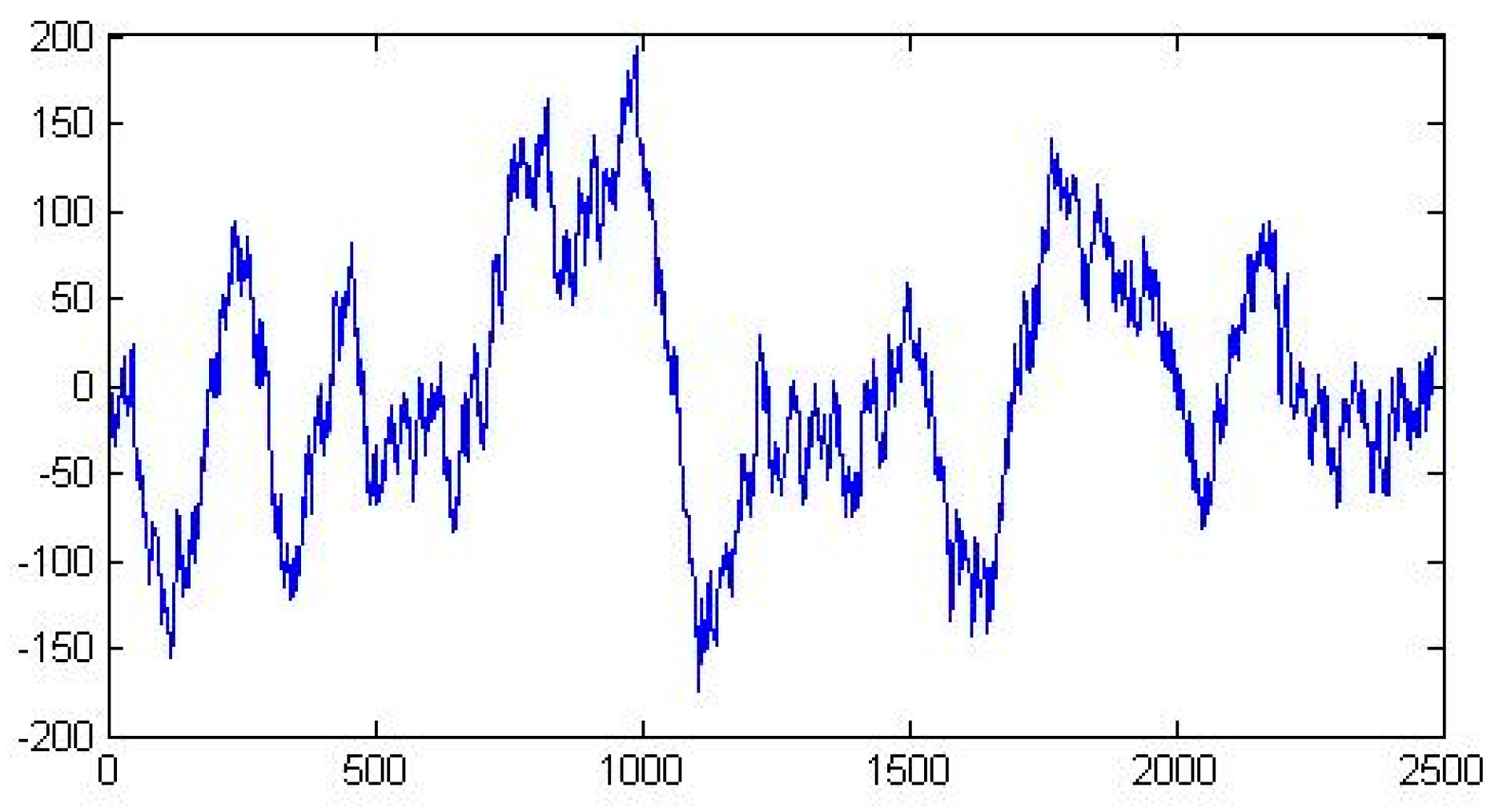

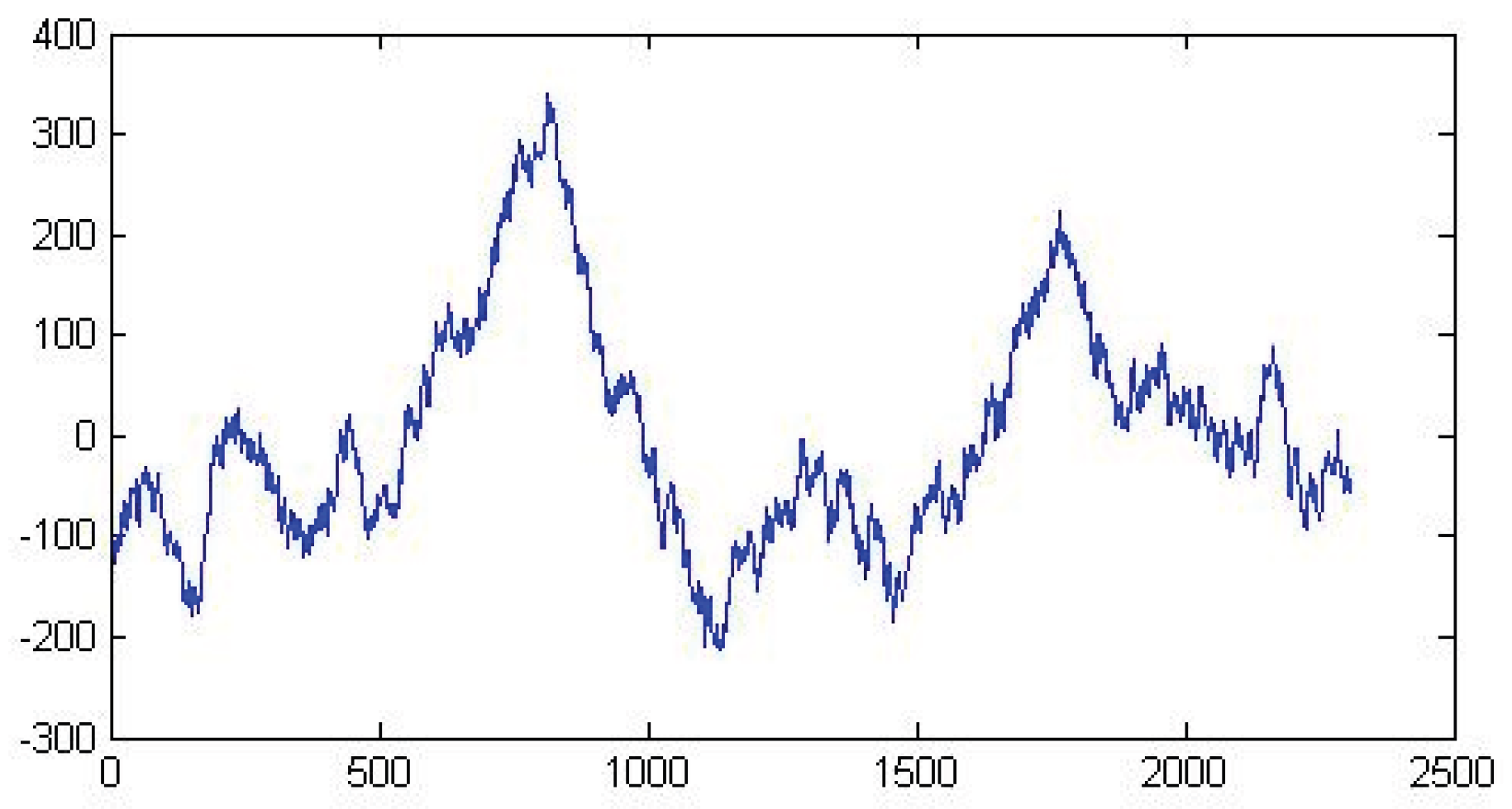

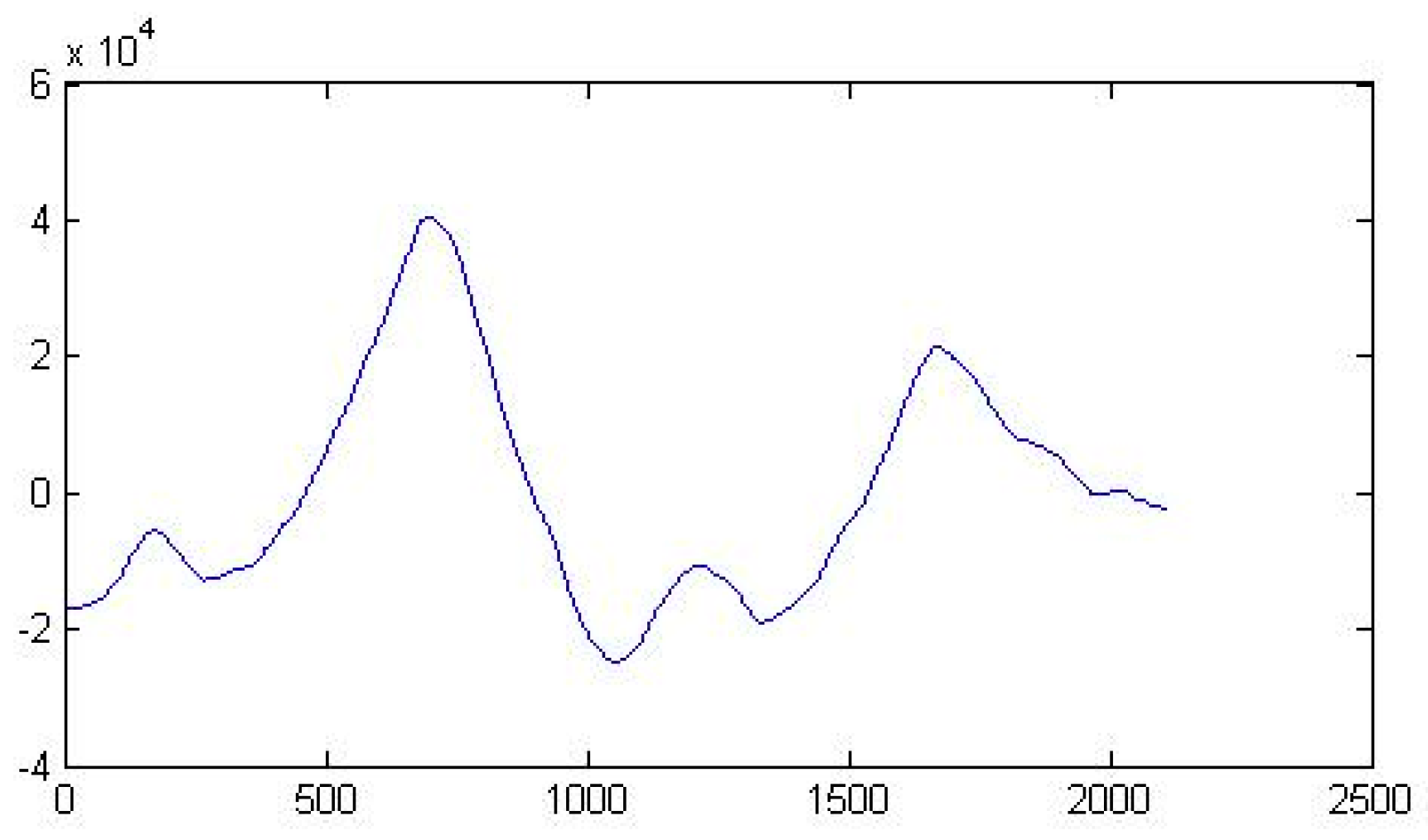

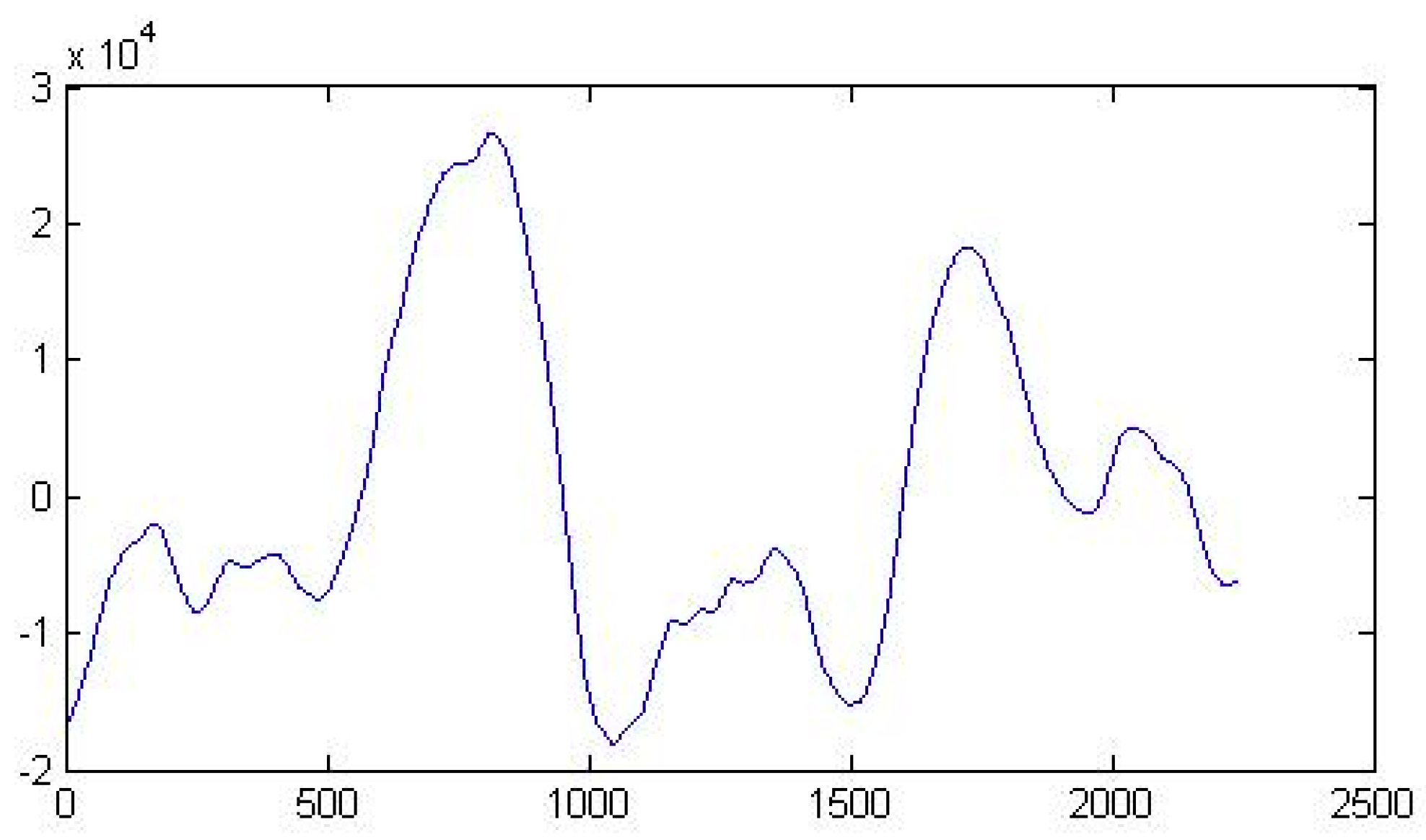

Figure 1,

Figure 2,

Figure 3 and

Figure 4 show the shape of representative signals among the 4000 used. The rank of the covariance matrix of all 4000 signals varies considerably, ranging from low to high rank. This way the results are not biased with respect to the degree of predictability of the signals.

8. Conclusion

A new method to predict future samples is presented and it is compared against the ESPRIT method. It was proven to be more accurate and more consistent than ESPRIT, based on results from four thousand time series of variable spectral complexity. The new method exploits the properties of the eigenvectors of the Hankel matrix of the time series that are associated with the low eigenvalues. A generalization of the algorithm to multichannel signals was also presented. Both cases, with and without cross-channel coupling, were considered and different algorithms were proposed. The algorithms are fast and stable and can predict any set of future samples/vectors. Further research will focus on both the optimal selection of the null space of the time series Hankel matrix as well as the choice of the model size.

References

- S. van Huffel, H. Chen, C. Decanniere and P. van Hecke, "Algorithm for time-domain NMR data fitting based on total least squares", J.Magn.Res., Series A 110, pp. 228-237, 1994. [CrossRef]

- Roy R., Paulraj A., and Kailath T., “ESPRIT—a subspace rotation approach to estimation of parameters of cisoids in noise”, IEEE Transactions on Acoustics, Speech, and Signal Processing. (1986) 34, no. 5, 1340–1342, 2-s2.0-0022794413. [CrossRef]

- R.A. Horn, C.R. Johnson, “Matrix Analysis”, Cambridge University Press, 1996.

- Dologlou and G.Carayannis, "LPC/SVD analysis of signals with zero modelling error", Signal Processing, Vol. 23 (3), pp. 293-298, 1991. [CrossRef]

- I. Dologlou, G. Carayannis, "Physical interpretation of signal reconstruction from reduced rank matrices", IEEE Trans. Acoust. Speech Signal Process., ASSP, July 1991, pp. 1681-1682. [CrossRef]

- J.A. Cadzow, "Signal enhancement: A composite property mapping algorithm", IEEE Trans. Acoust. Speech Signal Process., Vol. 36, No. 1, January 1998, pp. 49-62. [CrossRef]

- Ioannis Dologlou, “Estimating future samples in time series data”, or doi.org/10.20944/preprints202509.2140.v2 July 2025. [CrossRef]

- H. Lütkepohl, New Introduction to Multiple Time Series Analysis, Springer, 2005.

- I. Dologlou “A Non-Parametric Algorithm To Estimate Future Samples In Time Series”, World Journal of Applied Mathematics and Statistics, 2025, 1(4), 01-05.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).