Submitted:

24 November 2025

Posted:

25 November 2025

Abstract

Keywords:

1. Background of the Study

1.1. Introduction

2. Data Source and Description

2.1. Dataset Description

- Duration of account (time since customer's subscription)

- Contract and payment details (e.g. contract type, payment method, monthly fees)

- Subscribed services (e.g. internet and phone, plus additional features)

- Total spending (Total Charges)

2.2. Data-Related Issues and Preprocessing

2.3. Problem: Non-Numeric Entries in the Total Charges Column

2.4. Preprocessing Step: Converting to Numeric and Handling Errors

Non-Numeric Values in the Total_Charges Column

Issue: Presence of an Irrelevant Identifier Column

Issue: Categorical Variables with Binary Values

Issue: Multi-Class Categorical Variables

Issue: Duplicate Records

Issue: Remaining Missing or Hidden Blank Values

Issue: Mixed Data Types Across Columns

2.5. Final Dataset Overview After Preprocessing

2.6. Data Preprocessing

2.6.1. Conversion of Total Charges into a Numeric Format
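
The conversion step named in this heading can be illustrated with a minimal, standard-library sketch. It assumes that the non-numeric entries are blank or free-text strings and that they should become missing values; the function name and the sample values are hypothetical and are not taken from the dataset. In pandas, the same effect is usually obtained with `pd.to_numeric(column, errors="coerce")`.

```python
import math
from typing import List, Optional


def to_numeric_or_none(raw: str) -> Optional[float]:
    """Return the entry as a float, or None when it is blank or non-numeric."""
    text = raw.strip()
    if not text:
        return None  # hidden blanks such as " " become missing values
    try:
        value = float(text)
    except ValueError:
        return None  # free text such as "n/a" becomes a missing value
    # float() also accepts "nan" and "inf"; treat those as missing too
    return value if math.isfinite(value) else None


# Hypothetical raw entries, for illustration only
raw_total_charges: List[str] = ["29.85", " ", "1889.5", "n/a"]
cleaned = [to_numeric_or_none(v) for v in raw_total_charges]
n_missing = sum(v is None for v in cleaned)
```

Counting `n_missing` after coercion gives a quick check on how many rows need imputation or removal before modelling.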

2.6.2. Removal of Non-Predictive Identifier Column

2.6.3. Encoding of Binary Categorical Variables

2.6.4. One-Hot Encoding of Multi-Class Categorical Variables
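
The binary and one-hot encoding steps named in Sections 2.6.3 and 2.6.4 can be sketched as follows. This is an illustration under assumptions, not the authors' pipeline: the helper names are hypothetical, the Yes/No mapping is assumed, and the Contract levels are the three listed in the variable table.

```python
from typing import Dict, List


def encode_binary(value: str) -> int:
    """Map a Yes/No field to 1/0; any other value raises KeyError."""
    return {"Yes": 1, "No": 0}[value]


def one_hot(value: str, categories: List[str], prefix: str) -> Dict[str, int]:
    """Expand one categorical value into one 0/1 indicator per category."""
    if value not in categories:
        raise ValueError(f"unexpected category: {value!r}")
    return {f"{prefix}_{c}": int(value == c) for c in categories}


# Contract levels as listed in the variable table
CONTRACT_LEVELS = ["Month-to-month", "One year", "Two year"]

# Hypothetical customer record, for illustration only
row = {"Churn": "Yes", "Contract": "One year"}
encoded = {
    "Churn": encode_binary(row["Churn"]),
    **one_hot(row["Contract"], CONTRACT_LEVELS, "Contract"),
}
```

Raising an error on an unexpected category, rather than silently emitting all zeros, makes typos and hidden blanks in categorical columns visible early.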

2.6.5. Removal of Duplicate Records

2.6.6. Verification of Data Types

2.6.7. Statistical Summary and Quality Checks

2.6.8. Final Clean Dataset for Modelling

3. Methodology

3.1. Selected Techniques

3.2. Justification for Chosen Techniques

Empirical outcome (hold-out test set)

3.3. Business alignment

3.4. Model Validation Methods

3.5. Performance Metrics

4. Conclusions and Improvement

4.1. Conclusion

4.2. Improvements

| Variable Name | Description | Type |
|---|---|---|
| gender | Gender of the customer | Categorical |
| SeniorCitizen | Indicates if customer is a senior citizen (1 = Yes, 0 = No) | Numerical (binary) |
| tenure | Number of months customer has subscribed | Numerical |
| MonthlyCharges | Monthly billing amount | Numerical |
| TotalCharges | Total cumulative charges paid by customer | Numerical |
| Contract | Type of contract (Month-to-month, One year, Two year) | Categorical |
| InternetService | Internet service type (DSL, Fiber Optic, None) | Categorical |
| PaymentMethod | Billing/payment method | Categorical |
| Churn | Target outcome: customer churn status (Yes/No) | Categorical (binary) |

| Model | Role in the pipeline | Fit for our data / business need |

|---|---|---|

|

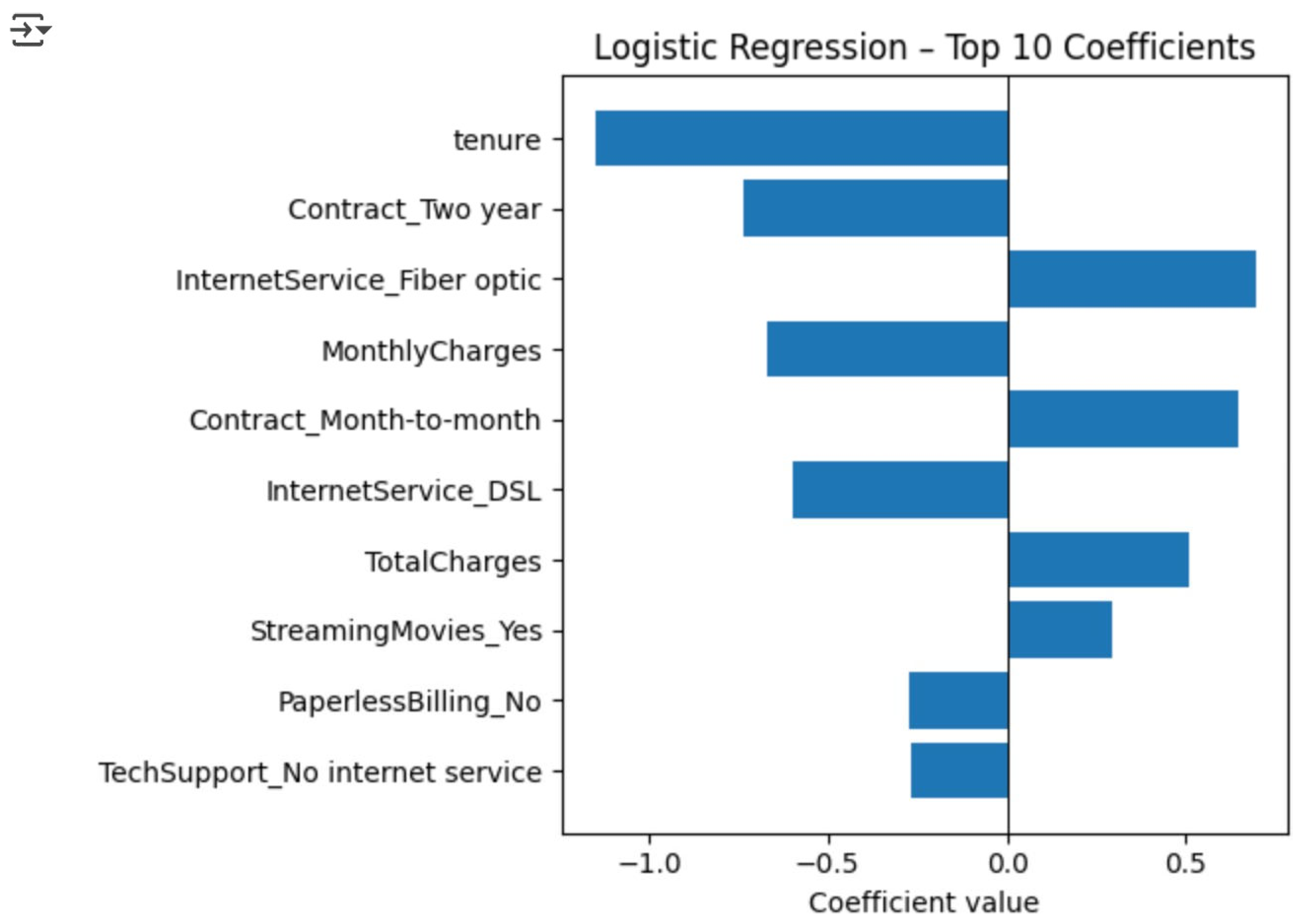

Logistic Regression (LR) |

Transparent baseline |

Coefficients translate directly into odds-ratios for variables like Contract Type or Tenure, giving management clear “what-if” levers. |

|

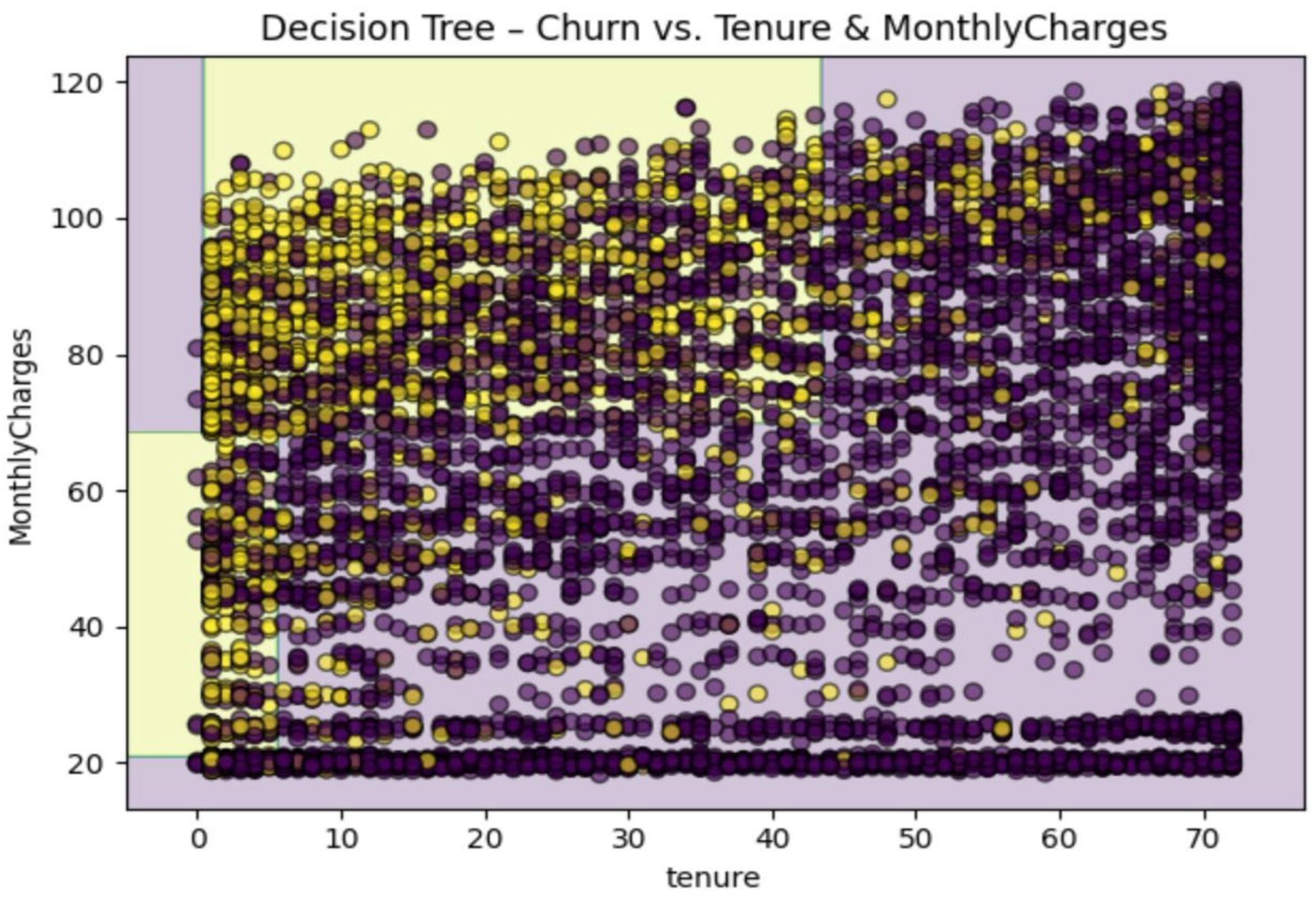

Decision Tree (CART) |

Rule generator |

Converts features into intuitive if- then rules that support helpdesk scripts and retention playbooks. |

|

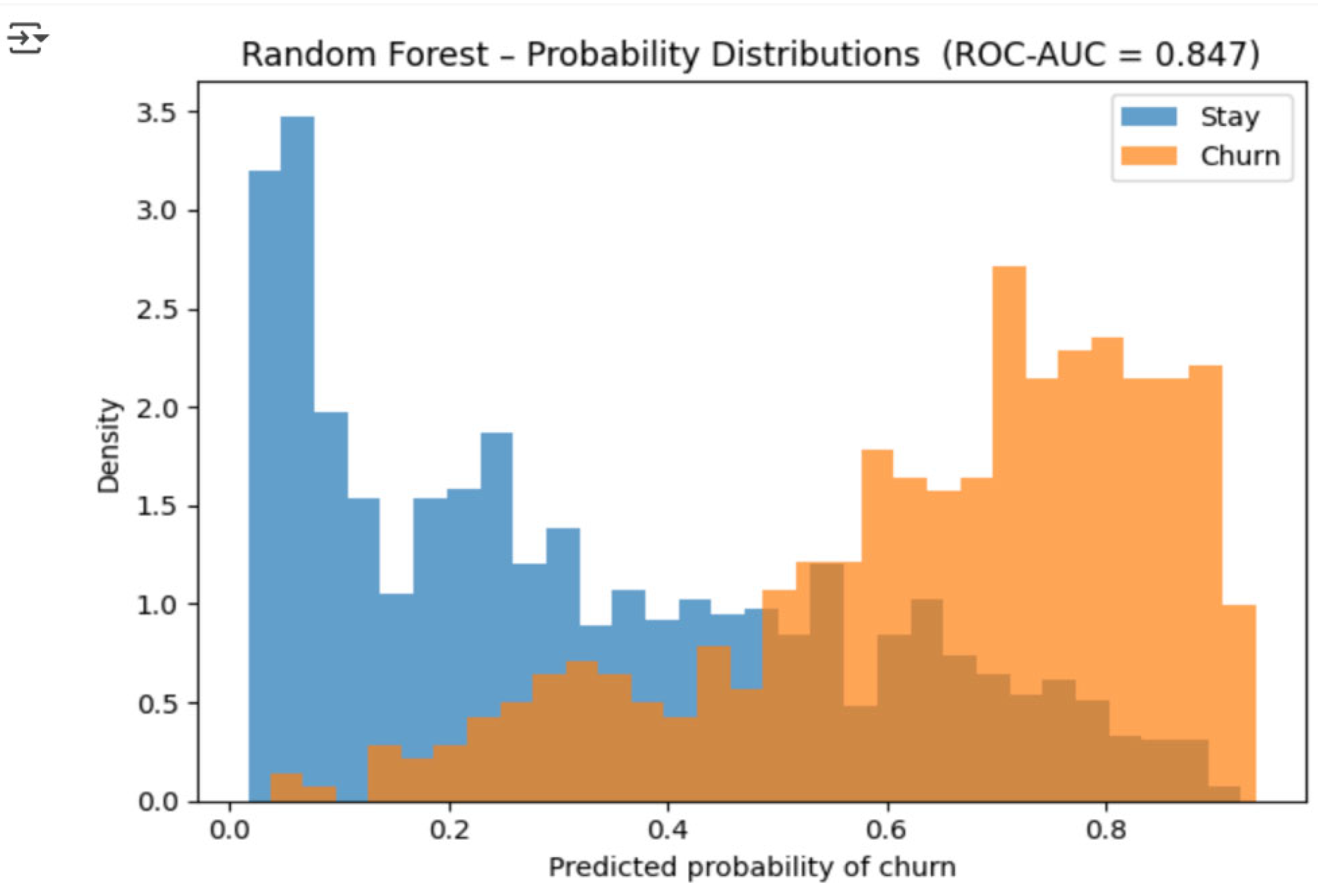

Random Forest (RF) |

High-bias/variance balance |

Handle weak interactions among add-on services, reduces over- fitting of single trees, still exports stable feature rankings for marketing. |

|

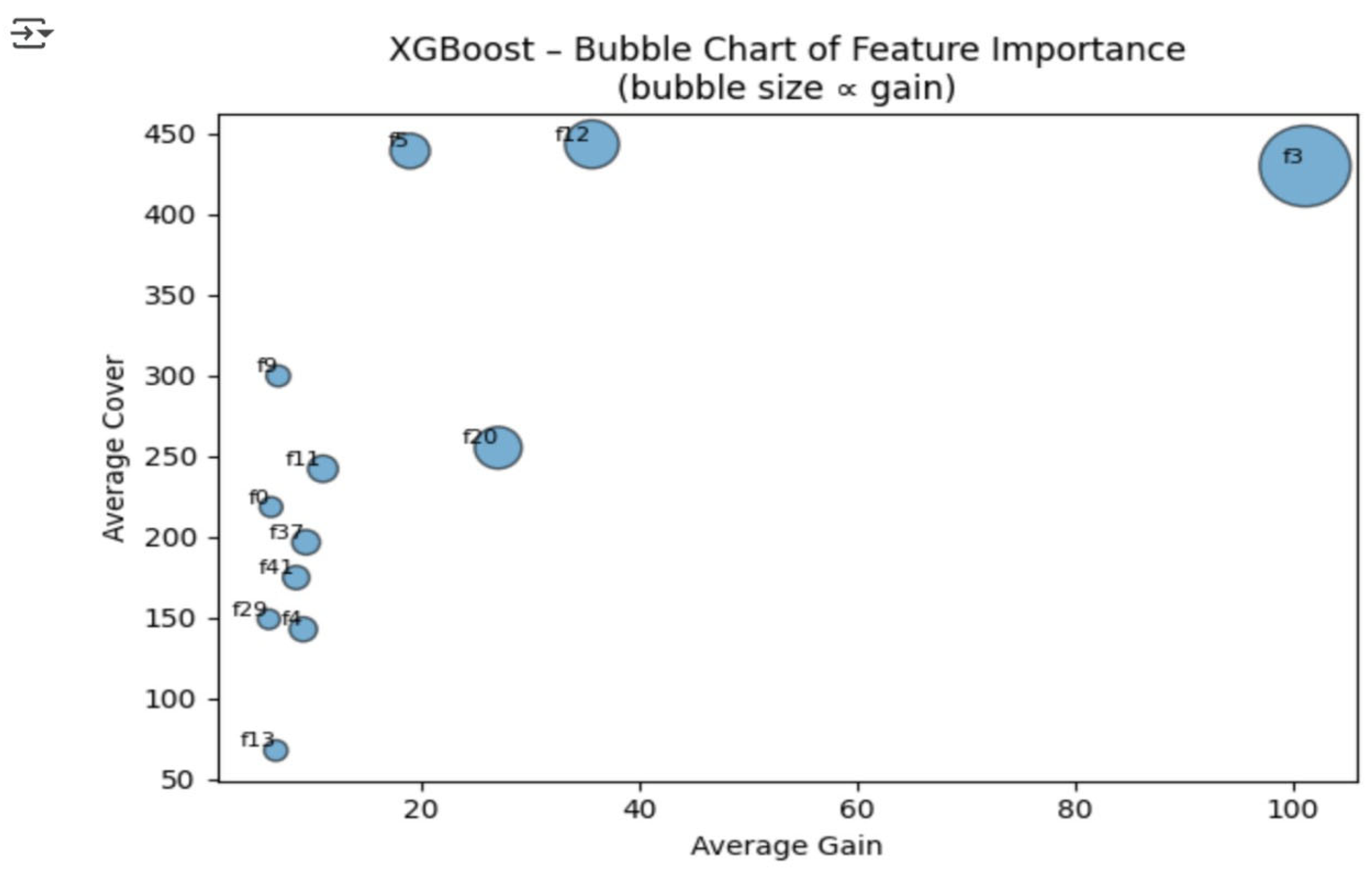

XGBoost (XGB) |

Accuracy maximiser |

Proven top performer on tabular churn data, manages sparse one- hot matrices, and includes native class-imbalance weighting. |
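
The link between a logistic regression coefficient and an odds ratio mentioned in the table is the exponential: a coefficient β on a feature corresponds to an odds ratio of exp(β) per one-unit increase, holding other features fixed. The sketch below uses a made-up coefficient purely for illustration; it is not a fitted value from this study.

```python
import math


def odds_ratio(coefficient: float) -> float:
    """Odds ratio implied by a logistic regression coefficient."""
    return math.exp(coefficient)


def percent_change_in_odds(coefficient: float) -> float:
    """Percentage change in the odds for a one-unit increase in the feature."""
    return (odds_ratio(coefficient) - 1.0) * 100.0


# Hypothetical coefficient for a one-hot contract indicator (assumption)
hypothetical_coef = -0.7
ratio = odds_ratio(hypothetical_coef)               # about 0.50
change = percent_change_in_odds(hypothetical_coef)  # about -50 percent
```

A ratio below 1 means the feature is associated with lower churn odds, which is the kind of "what-if" reading the table refers to.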

| Setting | Rationale |
|---|---|
| Class weighting (class_weight="balanced" for LR/Tree/RF, scale_pos_weight=2.8 for XGB) | Offsets the minority churn class without oversampling and keeps training time low. |
| Tree depth / leaf size (Tree = 6 levels; RF leaf = 30) | Prevents “one customer per leaf” on a 7k-row dataset and keeps rules readable. |
| XGB learning rate 0.05 and 600 trees | Empirically hit the accuracy plateau; stays within the rule-of-thumb budget of trees ≈ 100 / lr (2,000 at lr = 0.05). |
| Search budget ≤ 50 trials/model via RandomizedSearchCV | Small search space tuned to dataset size; completes in under 3 min on a standard laptop, so we can demonstrate live. |
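
The two class-weighting settings in the table can be related with a short calculation. The sketch below reproduces the formula scikit-learn documents for `class_weight="balanced"` (n_samples / (n_classes × class count)) and the common negative-to-positive ratio used for XGBoost's `scale_pos_weight`. The label counts are hypothetical, chosen only so that the ratio comes out at 2.8; they are not the dataset's actual counts.

```python
from collections import Counter
from typing import Dict, List


def balanced_class_weights(labels: List[int]) -> Dict[int, float]:
    """Weights as n_samples / (n_classes * count), one per class."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * n) for cls, n in counts.items()}


def negative_to_positive_ratio(labels: List[int]) -> float:
    """Ratio of non-churners (0) to churners (1), a usual scale_pos_weight."""
    counts = Counter(labels)
    return counts[0] / counts[1]


# Hypothetical training labels: 1400 non-churners, 500 churners (assumption)
labels = [0] * 1400 + [1] * 500
weights = balanced_class_weights(labels)
ratio = negative_to_positive_ratio(labels)
```

With these counts the two schemes agree on relative emphasis: the churn class is weighted 2.8 times as heavily as the non-churn class in both.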

| Model | ROC-AUC | F1 | Brier |
|---|---|---|---|
| LR | 0.837 | 0.593 | 0.150 |
| CART | 0.802 | 0.561 | 0.162 |
| RF | 0.866 | 0.621 | 0.144 |
| XGB | 0.883 | 0.640 | 0.147 |
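
For readers unfamiliar with the Brier column, the score is the mean squared difference between the predicted churn probability and the observed 0/1 outcome, so lower is better and 0 is a perfect forecast. A minimal sketch with made-up predictions (not values from this study):

```python
from typing import Sequence


def brier_score(y_true: Sequence[int], y_prob: Sequence[float]) -> float:
    """Mean squared error between predicted probabilities and 0/1 outcomes."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


# Hypothetical outcomes and predicted churn probabilities (assumption)
y_true = [1, 0, 1, 0]
y_prob = [0.9, 0.2, 0.4, 0.1]
score = brier_score(y_true, y_prob)
```

Unlike ROC-AUC, which depends only on how customers are ranked, the Brier score also penalises poorly calibrated probabilities, which is why the two columns can order models differently.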

| Model | ROC-AUC | Precision | Recall | F1-Score | Accuracy |

|---|---|---|---|---|---|

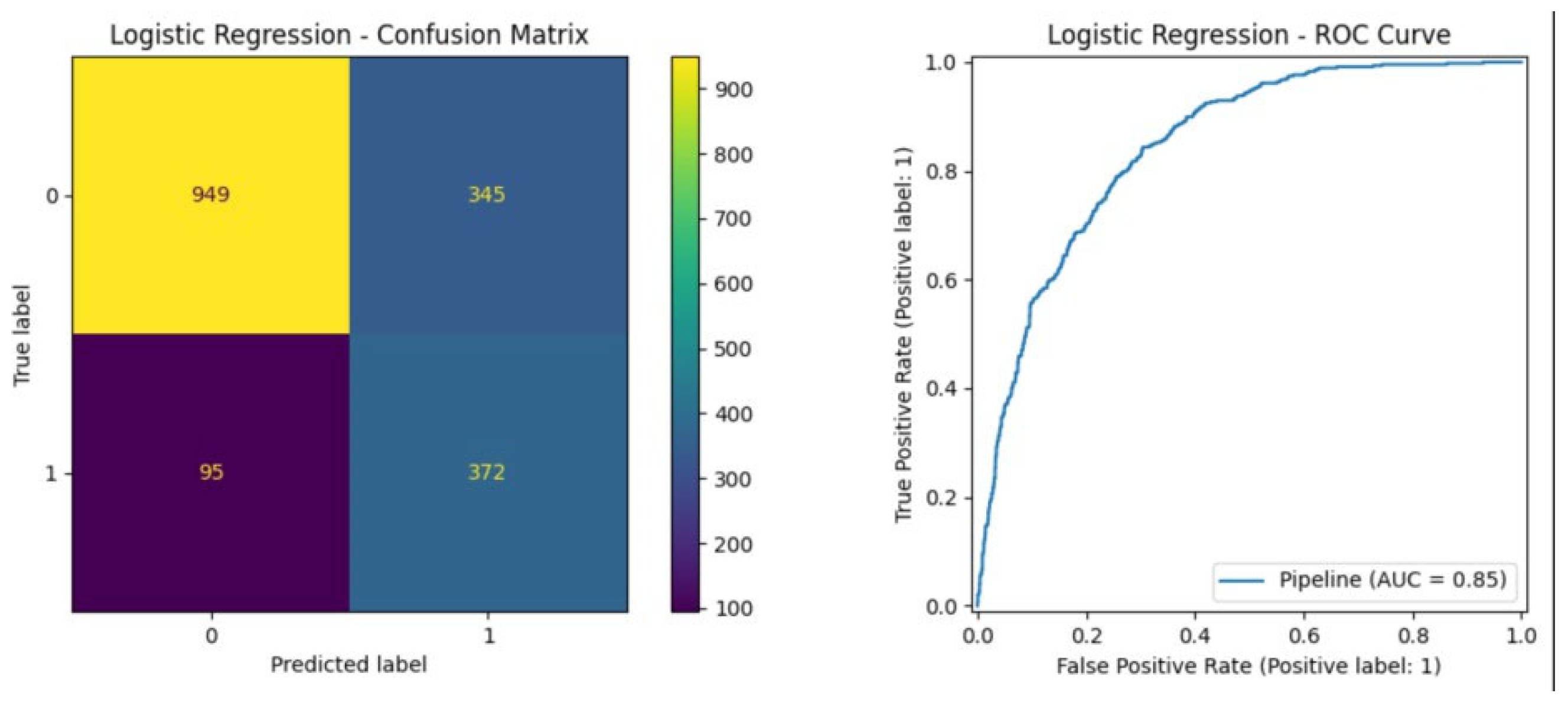

| Logistic Regression | 0.846 | 0.519 | 0.797 | 0.628 | 0.750 |

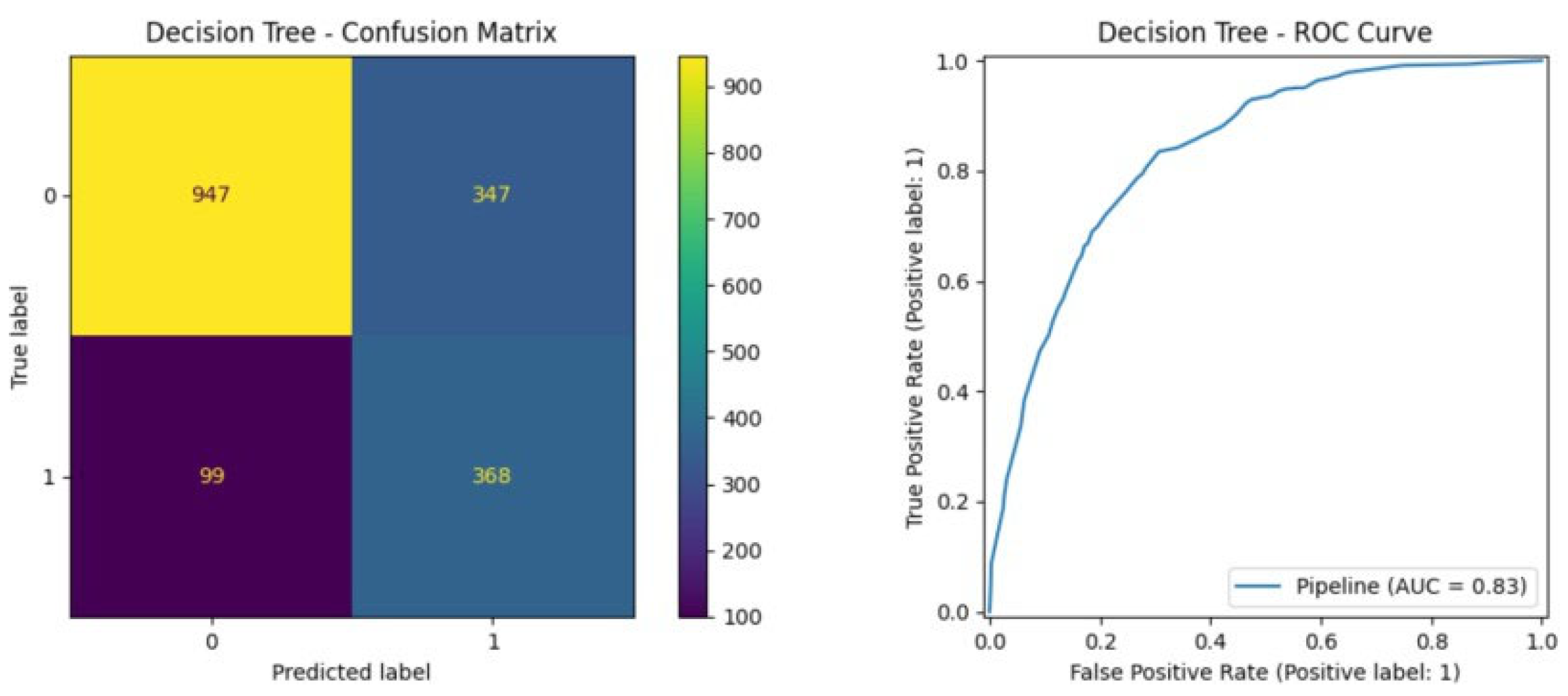

| Decision Tree | 0.834 | 0.515 | 0.788 | 0.623 | 0.747 |

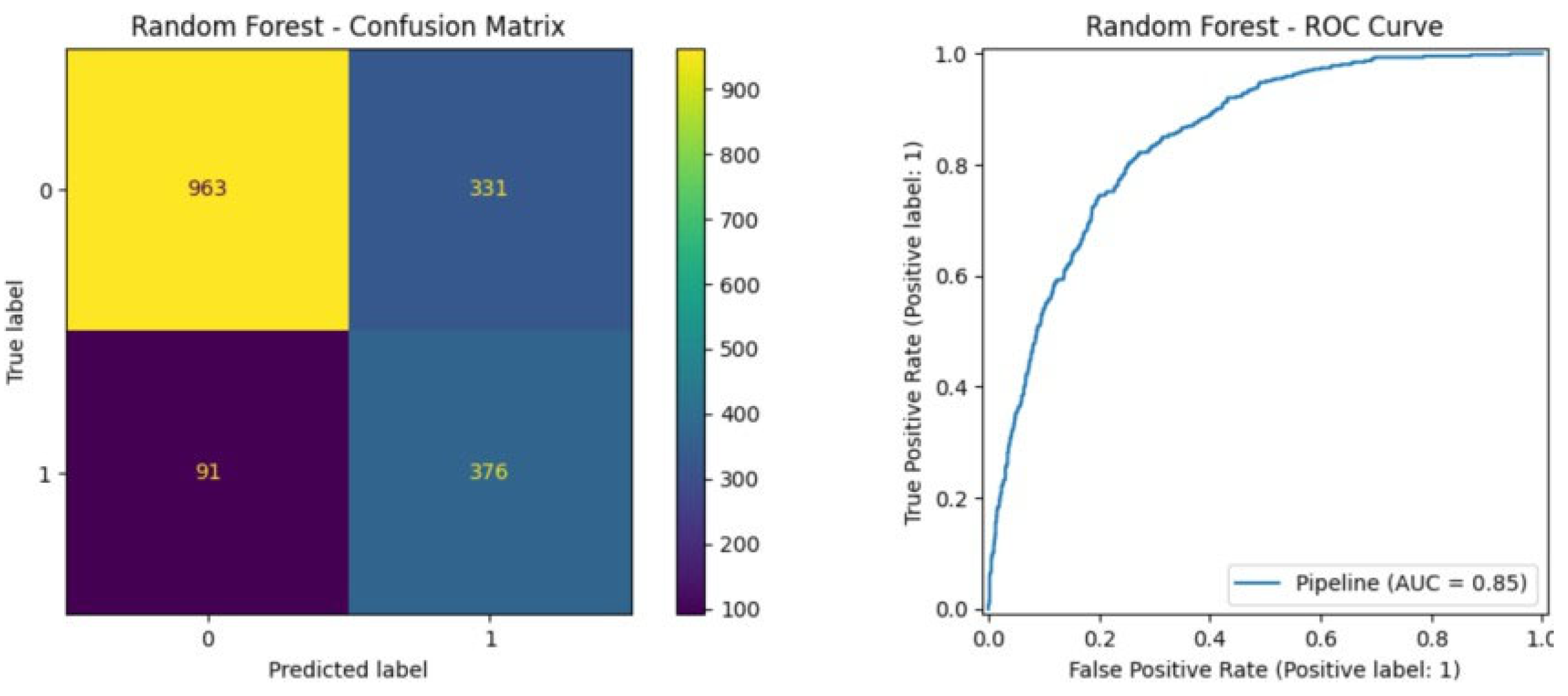

| Random Forest | 0.847 | 0.532 | 0.805 | 0.641 | 0.760 |

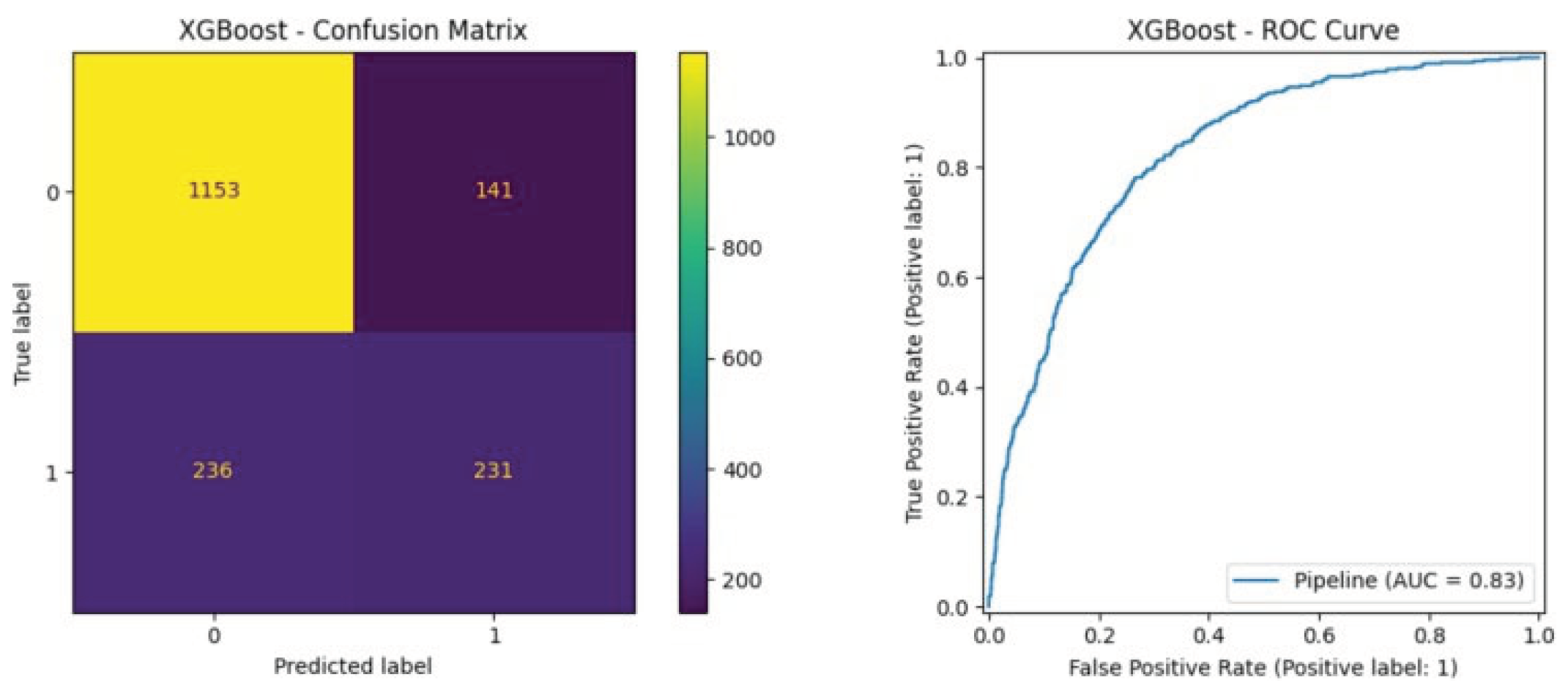

| XGBoost | 0.826 | 0.621 | 0.495 | 0.551 | 0.786 |
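
The F1-Score column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). As a consistency check, the sketch below recomputes F1 from the precision and recall values reported in the table above and compares it with the reported F1. The tolerance of 0.002 is an assumption that allows for the inputs having been rounded to three decimals.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2.0 * precision * recall / total if total else 0.0


# (precision, recall, reported F1) copied from the table above
reported = {
    "Logistic Regression": (0.519, 0.797, 0.628),
    "Decision Tree": (0.515, 0.788, 0.623),
    "Random Forest": (0.532, 0.805, 0.641),
    "XGBoost": (0.621, 0.495, 0.551),
}

# Absolute gap between recomputed and reported F1 for each model
gaps = {
    model: abs(f1_from(p, r) - f1)
    for model, (p, r, f1) in reported.items()
}
consistent = all(gap < 0.002 for gap in gaps.values())
```

The same function makes the trade-off in the table explicit: XGBoost has the highest precision, but its much lower recall pulls its F1 below the other three models.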

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).