Submitted: 07 November 2025

Posted: 12 November 2025

Abstract

Keywords:

1. Introduction

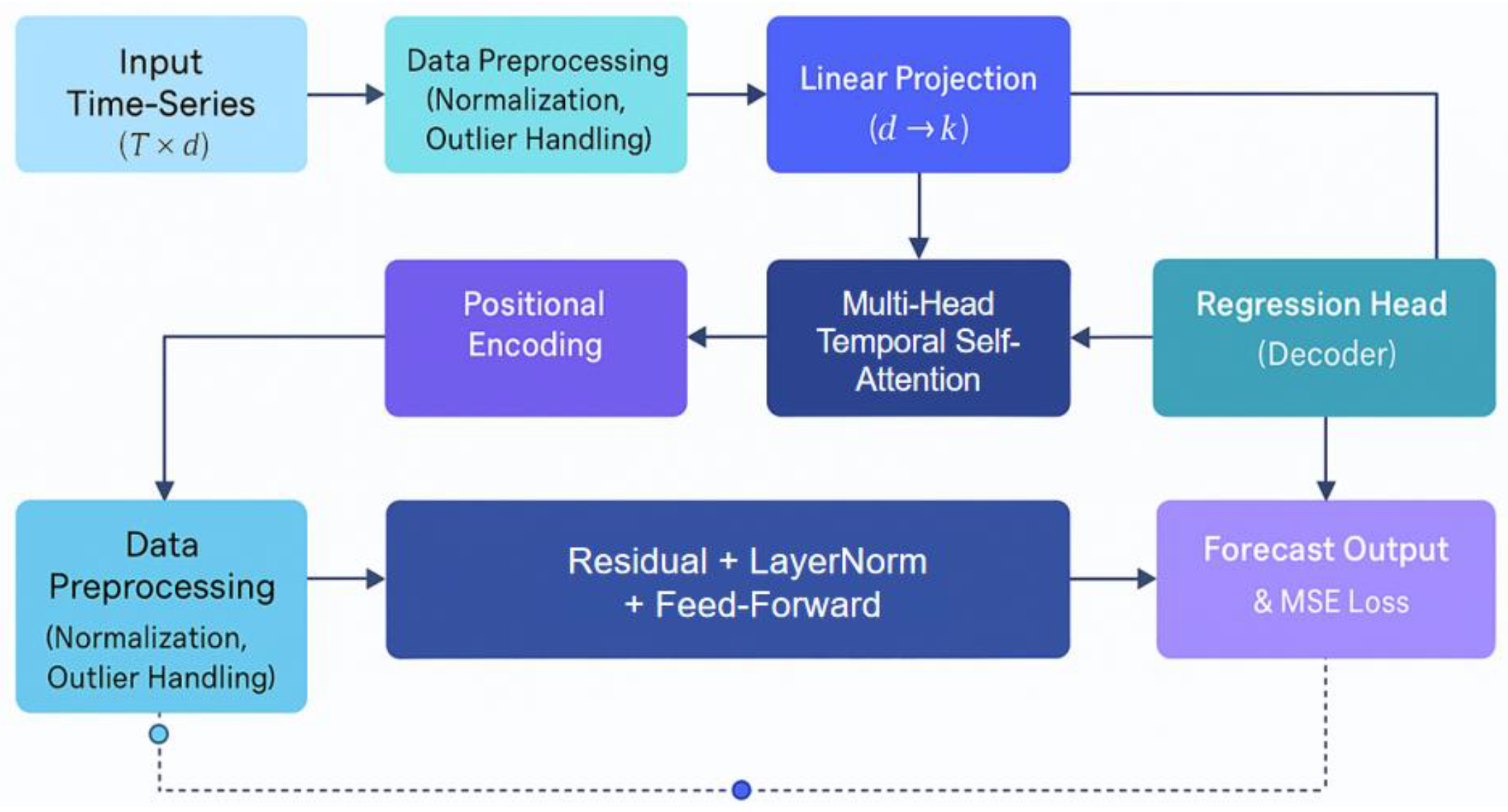

2. Proposed Approach

3. Performance Evaluation

3.1. Dataset

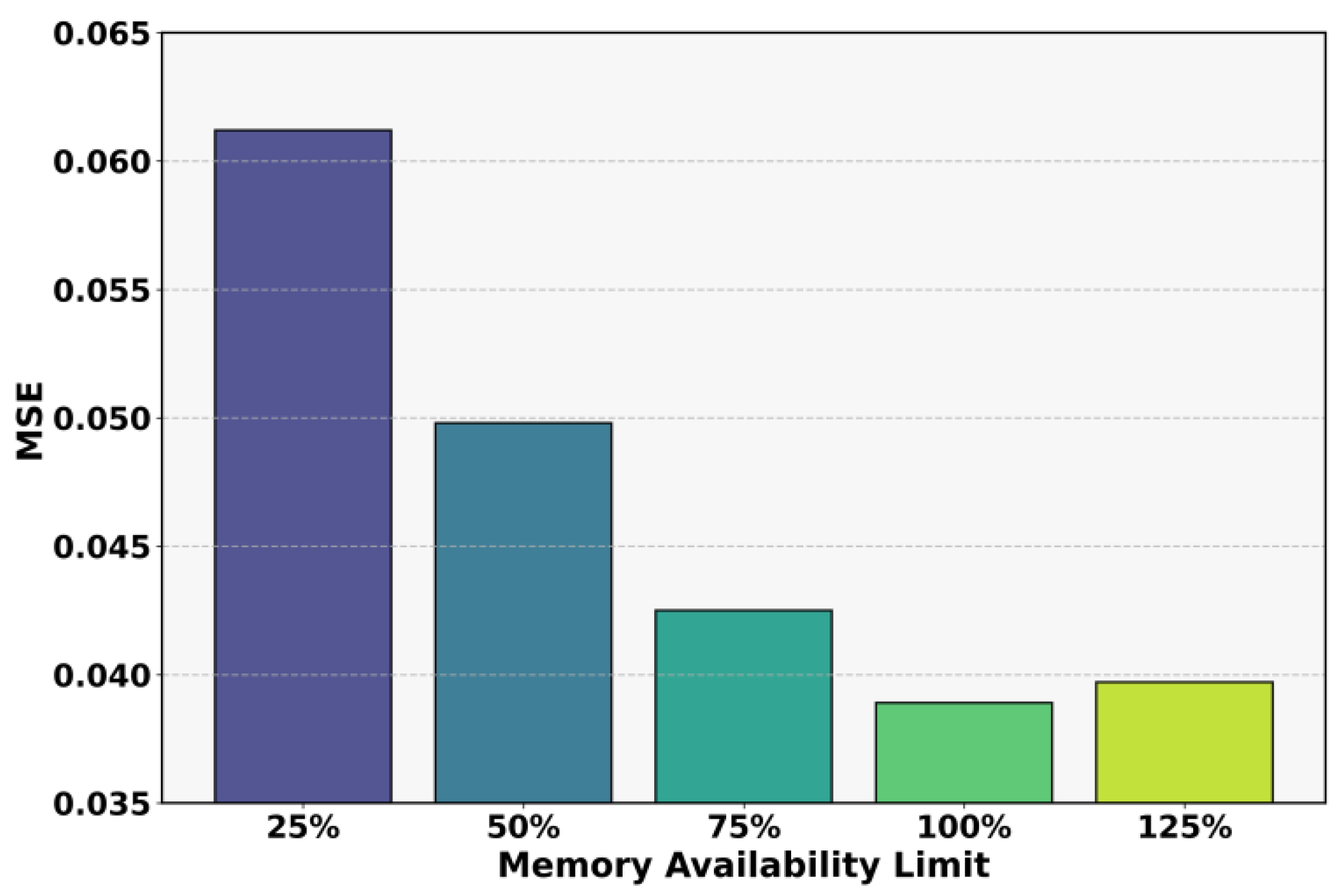

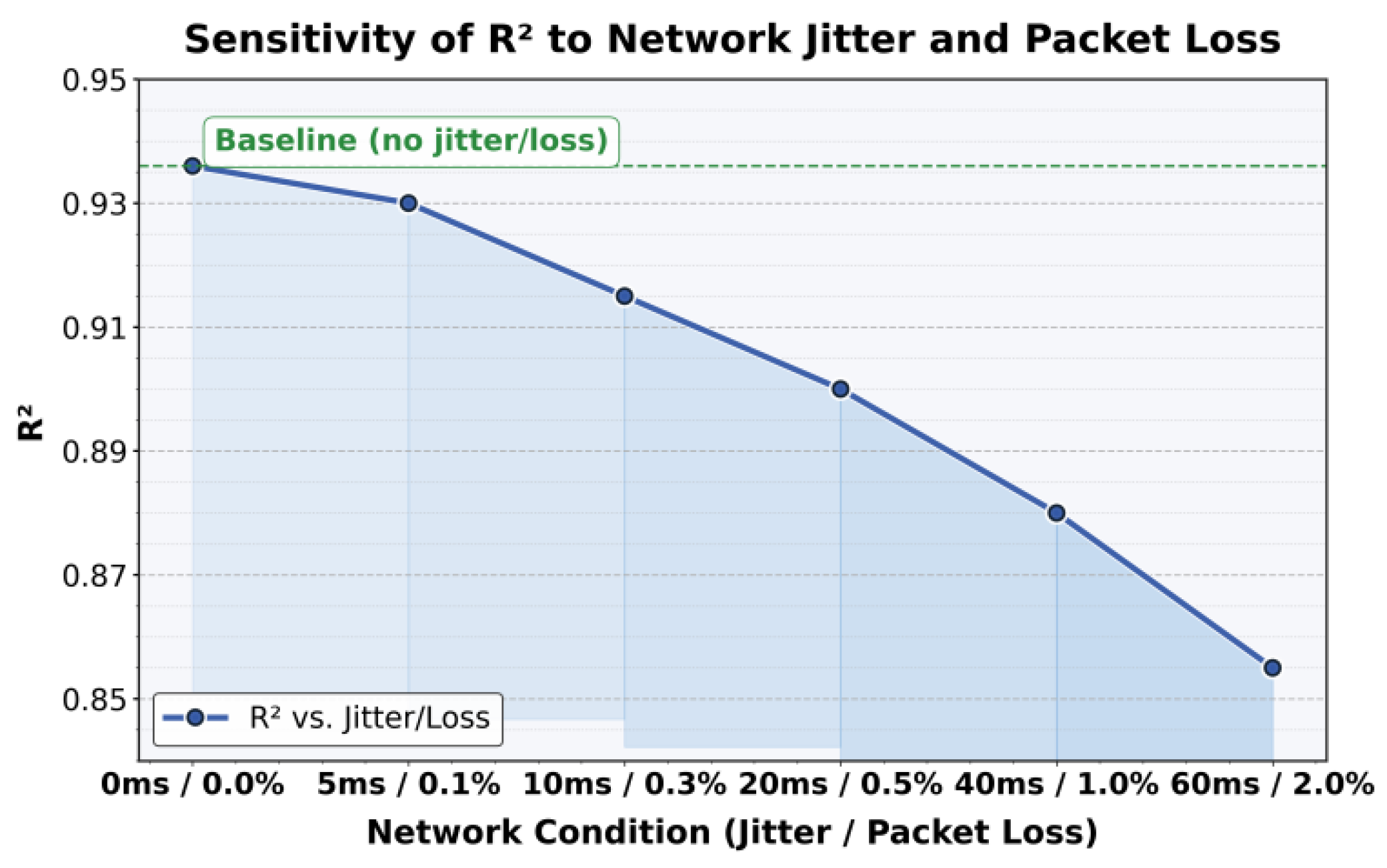

3.2. Experimental Results

4. Conclusions

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).