Submitted: 03 October 2025

Posted: 22 October 2025


Abstract

Keywords:

1. Introduction

- We introduce VISCON, a convolutional decoder framework, to systematically examine CNN-based captioning along four dimensions: network depth, data augmentation, attention integration, and sentence length.

- Our study offers the first detailed evaluation of CNN captioning models with respect to metric-specific performance trade-offs. It shows that while VISCON approaches RNN baselines on some metrics, it consistently lags behind them on CIDEr, which suggests a limited ability to capture high-level semantic content.

2. Related Work

2.1. Retrieval-Based Approaches

2.2. Template-Based Approaches

2.3. Deep Learning-Based Approaches

2.4. Hybrid and Emerging Paradigms

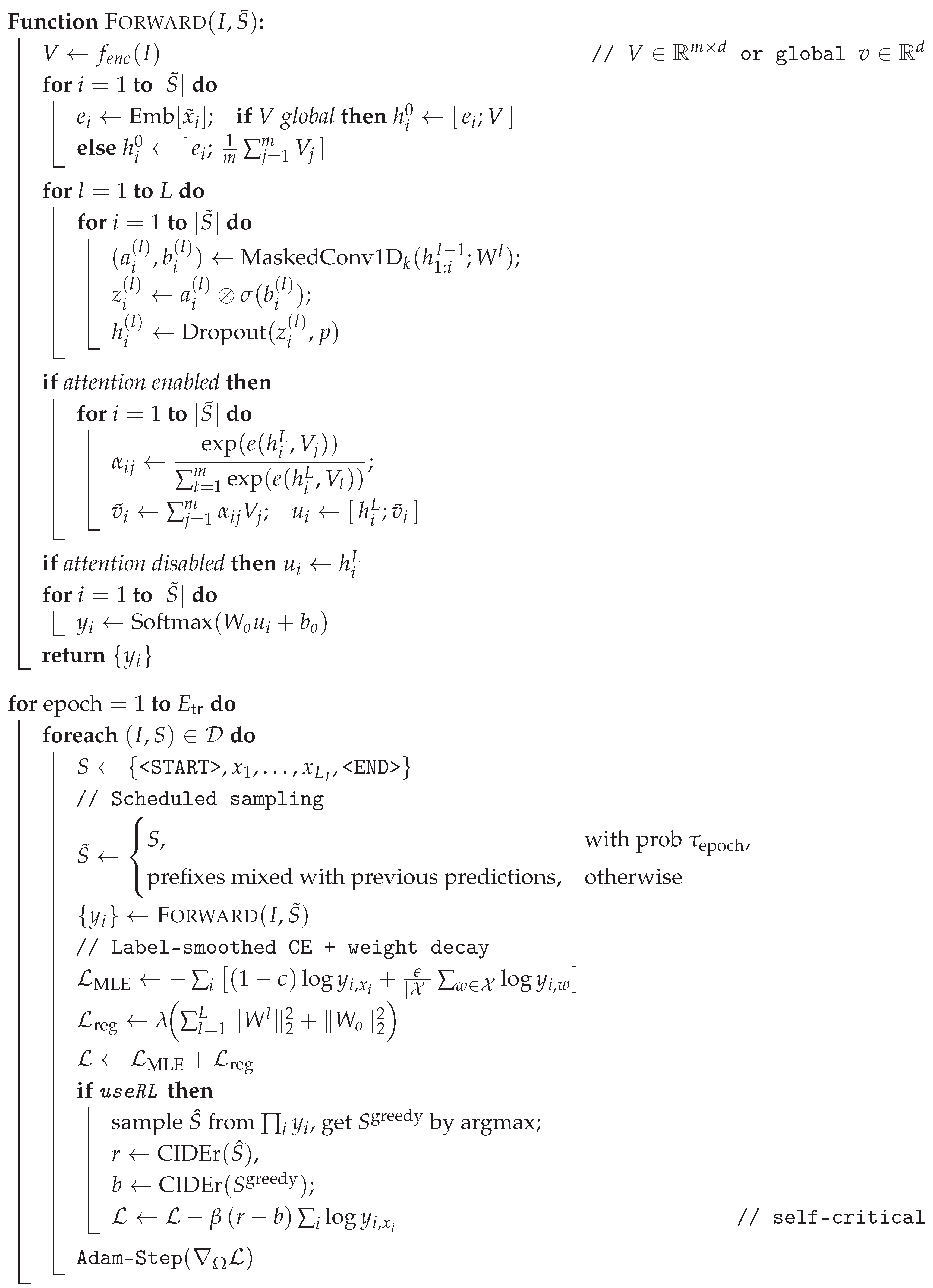

Algorithm 1: VISCON Training (CNN+CNN and optional +Att)

Input: dataset; frozen encoder; vocabulary with <START>, <END>, <PAD>; decoder depth L, kernel size k, dimensions; label-smoothing parameter; weight decay; dropout p; learning rate; epochs; scheduled-sampling probability; RL flag useRL.

Output: trained parameters.
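Algorithm 1 lists a scheduled-sampling probability among its inputs but does not detail how it is applied. A common scheme, sketched below under that assumption, runs a first teacher-forced pass and then, for each position after <START>, replaces the gold input token with the model's own prediction with the given probability. The helper `build_decoder_inputs` and its arguments are hypothetical, and sampling per token (rather than per sequence) is likewise an assumption.

```python
import random
from typing import List, Sequence


def build_decoder_inputs(
    gold: Sequence[str],
    predicted: Sequence[str],
    sample_prob: float,
    rng: random.Random,
) -> List[str]:
    """Hypothetical scheduled-sampling helper (not taken from the paper).

    gold:      reference caption tokens, starting with "<START>".
    predicted: predictions from a first teacher-forced pass;
               predicted[i] is the model's guess for gold[i + 1].
    Each gold token after <START> is swapped, with probability
    `sample_prob`, for the model's prediction at that position.
    """
    if not 0.0 <= sample_prob <= 1.0:
        raise ValueError("sample_prob must lie in [0, 1]")
    if len(predicted) < len(gold) - 1:
        raise ValueError("need one prediction per gold position after <START>")
    inputs = [gold[0]]  # <START> is always fed unchanged
    for i in range(1, len(gold)):
        if rng.random() < sample_prob:
            inputs.append(predicted[i - 1])  # feed the model's own guess
        else:
            inputs.append(gold[i])  # teacher forcing
    return inputs
```

A probability of 0 recovers pure teacher forcing. With a convolutional decoder, the mixed sequence can still be processed in parallel in a second pass, which is one reason a two-pass scheme is convenient.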

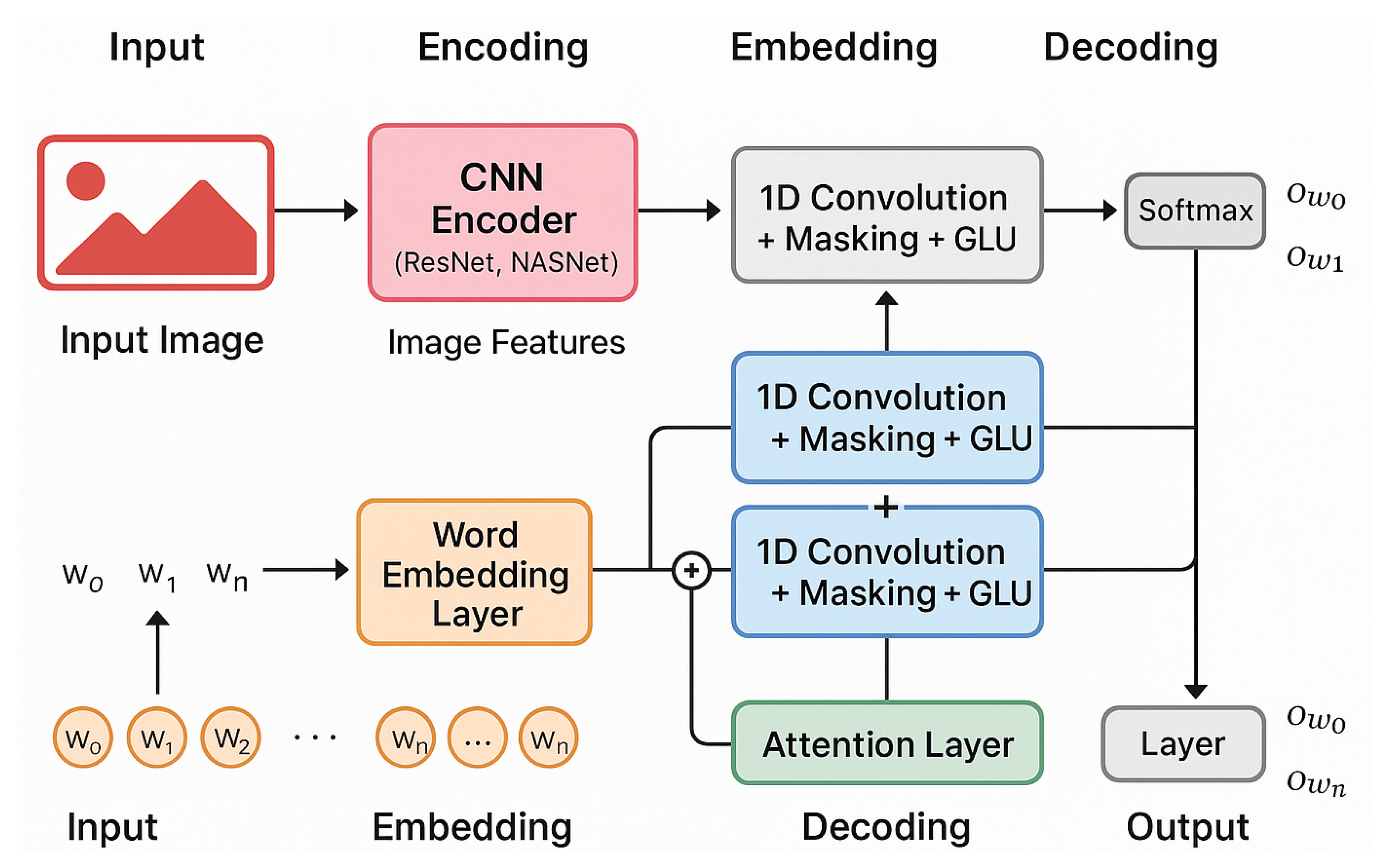

3. Proposed Method: VISCON Framework

3.1. Problem Formulation

3.2. Encoder Network

3.3. Word Embedding and Input Representation

3.4. Convolutional Decoder Architecture
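For autoregressive caption generation, a convolution over word positions must be causal (masked): the output at position t may depend only on tokens up to t, as in the convolutional sequence models of Gehring et al. and Aneja et al. in the reference list. The sketch below assumes left zero-padding of k − 1 positions, a single channel and scalar weights; `causal_conv1d` and `receptive_field` are hypothetical names, and VISCON's actual block (channels, gating, residual connections) is not reproduced. The second helper shows how stacking L such layers with kernel size k widens the visible history to L(k − 1) + 1 tokens, which is one way to read the decoder-depth and kernel-size inputs of Algorithm 1.

```python
from typing import List, Sequence


def causal_conv1d(x: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> List[float]:
    """Single-channel causal 1D convolution (illustrative sketch).

    Left-pads the input with k - 1 zeros so that output[t] only mixes
    x[t - k + 1], ..., x[t]; no future position leaks into position t.
    weights[-1] multiplies the current position, weights[0] the oldest one.
    """
    k = len(weights)
    padded = [0.0] * (k - 1) + list(x)
    out = []
    for t in range(len(x)):
        window = padded[t : t + k]  # original positions t-k+1 .. t
        out.append(sum(w * v for w, v in zip(weights, window)) + bias)
    return out


def receptive_field(num_layers: int, kernel_size: int) -> int:
    """Tokens (current plus past) visible after stacking causal conv layers."""
    return num_layers * (kernel_size - 1) + 1
```

For example, with kernel size 3, four stacked layers see 4 × 2 + 1 = 9 tokens, so a 20-word caption is never fully visible to a single output position unless the decoder is deeper or the kernel wider.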

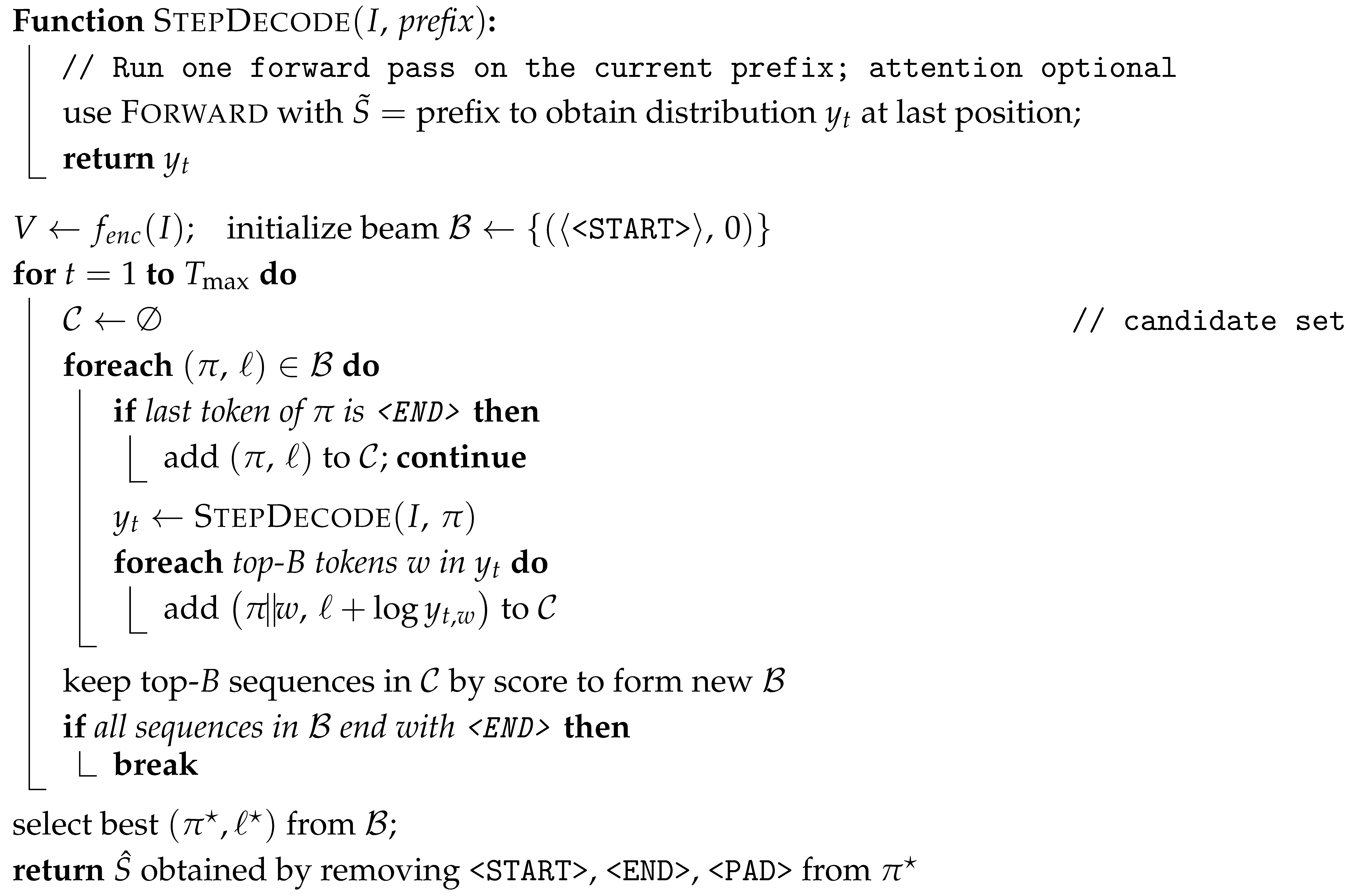

Algorithm 2: VISCON Inference (Greedy or Beam Search)

Input: image I; encoder; trained parameters; maximum length; beam size B.

Output: caption.
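Algorithm 2 decodes either greedily or with a beam of size B up to a maximum length. The following sketch shows generic beam search over a caller-supplied next-token scorer; `beam_search`, `Scorer` and `toy_scorer` are hypothetical stand-ins for the trained decoder, and no length normalization is applied, since whether VISCON uses one is not stated. With `beam_size=1` the procedure reduces to greedy decoding.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical interface: maps a token prefix to next-token log-probabilities.
Scorer = Callable[[Sequence[str]], Dict[str, float]]


def beam_search(next_log_probs: Scorer, beam_size: int, max_len: int,
                start: str = "<START>", end: str = "<END>") -> List[str]:
    """Generic beam search (sketch); beam_size=1 is greedy decoding."""
    beams: List[Tuple[float, List[str]]] = [(0.0, [start])]
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            for token, lp in next_log_probs(seq).items():
                candidates.append((score + lp, seq + [token]))
        # Keep the B best hypotheses by cumulative log-probability (no length penalty).
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, seq in candidates[:beam_size]:
            if seq[-1] == end:
                finished.append((score, seq))  # completed caption leaves the beam
            else:
                beams.append((score, seq))
        if not beams:
            break
    # Captions still open at max_len compete with the completed ones.
    best = max(finished + beams, key=lambda c: c[0])[1]
    return [tok for tok in best[1:] if tok != end]


def toy_scorer(prefix: Sequence[str]) -> Dict[str, float]:
    """Hypothetical stand-in for the trained decoder."""
    table = {
        ("<START>",): {"a": 0.6, "the": 0.4},
        ("<START>", "a"): {"dog": 0.4, "cat": 0.3, "<END>": 0.3},
        ("<START>", "the"): {"dog": 0.9, "<END>": 0.1},
    }
    probs = table.get(tuple(prefix), {"<END>": 1.0})
    return {tok: math.log(p) for tok, p in probs.items()}


print(beam_search(toy_scorer, beam_size=1, max_len=10))  # ['a', 'dog']
print(beam_search(toy_scorer, beam_size=2, max_len=10))  # ['the', 'dog']
```

Greedy decoding commits to "a" (0.6) and ends with probability 0.6 × 0.4 = 0.24, while a beam of two keeps "the" alive and finds "the dog" at 0.4 × 0.9 = 0.36, which illustrates why wider beams can help.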

3.5. Attention-Augmented VISCON
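An attention-augmented decoder typically scores each spatial image feature against the current decoder state, normalizes the scores with a softmax and returns the weighted sum as a context vector, in the spirit of the soft attention of Xu et al. [11] (which uses a learned MLP scorer rather than the plain dot product used here). The sketch below is an assumption about the general mechanism, not the exact VISCON-Att formulation; `softmax` and `attend` are hypothetical names, and learned projections are omitted.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Sequence[float],
           features: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Dot-product soft attention over spatial image features (sketch).

    query:    decoder state at one word position (length d).
    features: N region/grid features from the encoder, each of length d.
    Returns (attention weights over the N regions, context vector of length d).
    """
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    weights = softmax(scores)
    dim = len(query)
    context = [sum(w * feat[j] for w, feat in zip(weights, features)) for j in range(dim)]
    return weights, context
```

Because the weights are recomputed at every word position, each output word can draw on a different image region, which is the property the attention variant is meant to add to the purely convolutional decoder.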

3.6. Output Prediction Layer

3.7. Training Objective and Regularization

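- Label Smoothing: We apply label smoothing, controlled by the smoothing parameter listed among the inputs of Algorithm 1, to prevent the decoder from becoming overconfident in its word predictions.

- Dropout and Weight Decay: Dropout is applied after each convolutional block, and weight decay (an L2 penalty on the parameters) is added as regularization.

- Reinforcement Learning Fine-tuning: Inspired by self-critical sequence training (SCST), the model can be further fine-tuned to optimize evaluation metrics such as CIDEr directly, by minimizing $L_{\mathrm{RL}}(\theta) = -\left(r(w^{s}) - b\right)\log p_{\theta}(w^{s})$, where $r(w^{s})$ is the reward of a sampled caption $w^{s}$ and $b$ is a baseline.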
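The two training objectives above can be sketched for a single decoding step and a single sampled caption. The label-smoothing function assumes the common variant in which the target puts 1 − ε on the gold word and spreads ε uniformly over the whole vocabulary of size V; the SCST function computes −(r − b)·log p for one sample, where, as in the original SCST recipe of Rennie et al. (2017), b could be the reward of the greedy caption. Function names, the uniform-smoothing variant and the choice of baseline are assumptions, not details confirmed by the paper.

```python
import math
from typing import Sequence


def label_smoothed_nll(log_probs: Sequence[float], target: int, epsilon: float) -> float:
    """Cross-entropy against a smoothed one-hot target for one decoding step.

    log_probs: model log-probabilities over the vocabulary (length V).
    Assumed target: (1 - epsilon) on `target` plus epsilon / V on every word.
    """
    vocab_size = len(log_probs)
    nll = -log_probs[target]                # standard cross-entropy term
    uniform = -sum(log_probs) / vocab_size  # cross-entropy against a uniform target
    return (1.0 - epsilon) * nll + epsilon * uniform


def scst_loss(sample_log_prob: float, sample_reward: float, baseline_reward: float) -> float:
    """Self-critical policy-gradient loss for one sampled caption (sketch).

    sample_log_prob: sum of token log-probabilities of the sampled caption.
    sample_reward:   e.g. CIDEr of the sampled caption.
    baseline_reward: e.g. CIDEr of the greedy caption (the baseline b).
    In an autodiff framework the advantage is treated as a constant, so
    minimizing this raises the likelihood of samples that beat the baseline.
    """
    advantage = sample_reward - baseline_reward
    return -advantage * sample_log_prob
```

Subtracting the baseline leaves the expected gradient unchanged while reducing its variance; a sample that scores exactly the baseline contributes no gradient.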

3.8. Complexity Analysis

3.9. Algorithm Illustration

| Method | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | CIDEr | ROUGE-L |
|---|---|---|---|---|---|---|---|
| Flickr8k Dataset | | | | | | | |
| Karpathy et al. [9] | 0.582 | 0.387 | 0.249 | 0.163 | – | – | – |
| Vinyals et al. [7] | 0.635 | 0.414 | 0.275 | – | – | – | – |
| Xu et al. [11] | 0.671 | 0.451 | 0.302 | 0.198 | 0.1893 | – | – |
| VISCON-Base (CNN+CNN) | 0.6279 | 0.4439 | 0.3022 | 0.2038 | 0.1924 | 0.4748 | 0.4544 |
| VISCON-Att (CNN+CNN+Att) | 0.6312 | 0.4471 | 0.3050 | 0.2074 | 0.1951 | 0.4879 | 0.4563 |
| Flickr30k Dataset | | | | | | | |
| Mao et al. [8] | 0.600 | 0.410 | 0.280 | 0.190 | – | – | – |
| Donahue et al. [26] | 0.590 | 0.392 | 0.253 | 0.165 | – | – | – |
| Karpathy et al. [9] | 0.575 | 0.372 | 0.242 | 0.159 | – | – | – |
| Vinyals et al. [7] | 0.666 | 0.426 | 0.279 | 0.185 | – | – | – |
| Xu et al. [11] | 0.669 | 0.436 | 0.290 | 0.192 | 0.1849 | – | – |
| VISCON-Base (CNN+CNN) | 0.6432 | 0.4495 | 0.3108 | 0.2123 | 0.1774 | 0.3831 | 0.4344 |
| VISCON-Att (CNN+CNN+Att) | 0.6411 | 0.4452 | 0.3071 | 0.2102 | 0.1781 | 0.3868 | 0.4351 |

4. Experiments

4.1. Benchmarks, Metrics, and Evaluation Protocol
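The result tables report BLEU-1 to BLEU-4 alongside METEOR, CIDEr and ROUGE-L. As a reminder of what BLEU-n measures, the sketch below computes corpus-level BLEU (Papineni et al.) from clipped n-gram precisions with uniform weights over orders 1..n and a brevity penalty. It is a simplified illustration under stated assumptions (pre-tokenized captions, no smoothing, closest reference length for the brevity penalty with ties broken toward the shorter reference); `ngrams` and `corpus_bleu` are hypothetical names, and reported numbers are normally produced with the standard COCO caption evaluation code, which this sketch does not replicate exactly.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(candidates: List[List[str]],
                references: List[List[List[str]]], max_n: int = 4) -> float:
    """Corpus BLEU with uniform weights over n-gram orders 1..max_n (no smoothing).

    candidates: one tokenized caption per image.
    references: for each image, a list of tokenized reference captions.
    """
    clipped = [0] * max_n
    totals = [0] * max_n
    cand_len = ref_len = 0
    for cand, refs in zip(candidates, references):
        cand_len += len(cand)
        # Brevity penalty uses the reference length closest to the candidate length.
        ref_len += min((abs(len(r) - len(cand)), len(r)) for r in refs)[1]
        for n in range(1, max_n + 1):
            cand_counts = ngrams(cand, n)
            max_ref: Counter = Counter()
            for r in refs:
                max_ref |= ngrams(r, n)  # element-wise max over references
            clipped[n - 1] += sum(min(c, max_ref[g]) for g, c in cand_counts.items())
            totals[n - 1] += sum(cand_counts.values())
    if cand_len == 0 or min(clipped) == 0:
        return 0.0
    log_precision = sum(math.log(c / t) for c, t in zip(clipped, totals)) / max_n
    brevity = 1.0 if cand_len > ref_len else math.exp(1.0 - ref_len / cand_len)
    return brevity * math.exp(log_precision)
```

Clipping is what penalizes repetition: a caption such as "the the the" against the reference "the cat" receives a unigram precision of 1/3, not 1. The repeated phrases visible in some of the qualitative captions ("a red uniform and a red uniform") are penalized in the same way.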

Training Details.

4.2. Compared Methods

4.3. Depth of the Convolutional Decoder

4.4. Image Transforms for Data Augmentation

| Model (Layers) | Image 1 | Image 2 | Image 3 | Image 4 |
|---|---|---|---|---|
| VISCON-Base(1) | a small white dog is jumping into a pool | a man riding a bike on a dirt bike | a football player in red and white uniform wearing a red and white uniform | a little boy in a red shirt is sitting on a swing |
| VISCON-Base(2) | a white dog is jumping into a pool | a person riding a bike on a dirt bike | a football player in a red uniform and red uniform | a little girl in a red shirt is sitting on a swing |
| VISCON-Base(3) | a small white dog is playing in a pool | a person riding a bike on a dirt bike | a football player in a red uniform and a red uniform | a little boy in a red shirt is jumping on a swing |
| VISCON-Base(4) | a white and white dog is playing in a pool | a person riding a bike on a dirt bike | a football player in a red and white uniform | a little girl in a red shirt is sitting on a swing |
| VISCON-Att(1) | a white dog is swimming in a pool | a person riding a bike on a dirt bike | a football player in a red uniform and a football | a little boy in a red shirt is jumping over a swing |
| VISCON-Att(2) | a white dog is jumping over a blue pool | a man on a motorcycle rides a dirt bike | a football player in a red uniform | a little boy in a red shirt is sitting on a swing |
| VISCON-Att(3) | a small white dog is jumping into a pool | a person riding a bike on a dirt bike | a football player in a red uniform and a red uniform | a little boy in a red shirt is jumping over a swing |
| VISCON-Att(4) | a white dog is jumping over a blue pool | a man riding a bike on a dirt bike | a football player in a red uniform is holding a football | a little girl in a pink shirt is sitting on a swing |

4.5. Effect of Maximum Sentence Length

4.6. Results and Discussion

4.7. Comparison with Prior Work

4.8. Decoder Depth Study

4.9. Qualitative Caption Comparisons on Flickr8k

4.10. Image Transform Ablations

| Image Transform | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | CIDEr | ROUGE-L |
|---|---|---|---|---|---|---|---|
| VISCON-Base | | | | | | | |
| No transform | 0.6279 | 0.4439 | 0.3022 | 0.2038 | 0.1924 | 0.4748 | 0.4544 |
| Random Horizontal | 0.6290 | 0.4491 | 0.3068 | 0.2061 | 0.1956 | 0.4791 | 0.4566 |
| Random Vertical | 0.6218 | 0.4338 | 0.2922 | 0.1943 | 0.1889 | 0.4415 | 0.4473 |
| Random Flip | 0.6284 | 0.4464 | 0.3048 | 0.2059 | 0.1923 | 0.4703 | 0.4534 |
| Random Rotate | 0.6112 | 0.4226 | 0.2829 | 0.1883 | 0.1843 | 0.4202 | 0.4376 |
| Random Perspective | 0.6257 | 0.4431 | 0.3007 | 0.2008 | 0.1912 | 0.4574 | 0.4511 |
| VISCON-Att | | | | | | | |
| No transform | 0.6312 | 0.4471 | 0.3050 | 0.2074 | 0.1951 | 0.4879 | 0.4563 |
| Random Horizontal | 0.6331 | 0.4516 | 0.3098 | 0.2103 | 0.1933 | 0.4812 | 0.4575 |
| Random Vertical | 0.6165 | 0.4306 | 0.2915 | 0.1941 | 0.1865 | 0.4303 | 0.4445 |
| Random Flip | 0.6237 | 0.4388 | 0.2976 | 0.1986 | 0.1892 | 0.4528 | 0.4502 |
| Random Rotate | 0.6079 | 0.4194 | 0.2799 | 0.1870 | 0.1826 | 0.4104 | 0.4339 |
| Random Perspective | 0.6260 | 0.4417 | 0.3026 | 0.2060 | 0.1910 | 0.4613 | 0.4487 |

4.11. Sentence-Length Ablation and CNN vs. LSTM

| Max. Sentence Length | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | CIDEr | ROUGE-L |
|---|---|---|---|---|---|---|---|
| VISCON-Base | | | | | | | |
| 10 | 0.6275 | 0.4395 | 0.2985 | 0.2047 | 0.1682 | 0.3714 | 0.4291 |
| 15 | 0.6246 | 0.4350 | 0.2930 | 0.1986 | 0.1915 | 0.4502 | 0.4492 |
| 20 | 0.6111 | 0.4310 | 0.2928 | 0.1965 | 0.1917 | 0.4601 | 0.4506 |
| 25 | 0.6102 | 0.4343 | 0.3005 | 0.2062 | 0.1948 | 0.4763 | 0.4546 |
| 30 | 0.5651 | 0.3996 | 0.2713 | 0.1820 | 0.1894 | 0.4559 | 0.4448 |
| 35 | 0.5576 | 0.3928 | 0.2677 | 0.1787 | 0.1894 | 0.4569 | 0.4439 |
| 40 | 0.5327 | 0.3748 | 0.2545 | 0.1698 | 0.1891 | 0.4553 | 0.4411 |
| CNN+LSTM | | | | | | | |
| 10 | 0.4541 | 0.2953 | 0.1916 | 0.1253 | 0.1708 | 0.6253 | 0.3943 |
| 15 | 0.5898 | 0.4128 | 0.2825 | 0.1923 | 0.1966 | 0.5549 | 0.4490 |
| 20 | 0.6126 | 0.4317 | 0.3000 | 0.2065 | 0.2023 | 0.5326 | 0.4599 |
| 25 | 0.6252 | 0.4402 | 0.3042 | 0.2091 | 0.2000 | 0.5419 | 0.4610 |
| 30 | 0.6236 | 0.4394 | 0.3044 | 0.2076 | 0.2014 | 0.5205 | 0.4591 |
| 35 | 0.6230 | 0.4401 | 0.3066 | 0.2121 | 0.1978 | 0.5281 | 0.4594 |
| 40 | 0.6190 | 0.4383 | 0.3062 | 0.2122 | 0.2030 | 0.5497 | 0.4628 |
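The sentence-length ablation varies the maximum caption length from 10 to 40 words. How the limit is enforced is not described here; one common preprocessing step, sketched below as an assumption, truncates longer training captions, wraps them in the <START> and <END> tokens from Algorithm 1's vocabulary, and pads shorter ones with <PAD> so that every sequence in a batch has the same length. The function `encode_caption` is hypothetical.

```python
from typing import List, Sequence


def encode_caption(
    words: Sequence[str],
    max_len: int,
    start: str = "<START>",
    end: str = "<END>",
    pad: str = "<PAD>",
) -> List[str]:
    """Truncate a tokenized caption to `max_len` words, add boundary tokens, and pad.

    The result always has max_len + 2 tokens, so every caption in a batch
    has the same length.
    """
    if max_len < 1:
        raise ValueError("max_len must be positive")
    body = list(words[:max_len])  # words beyond the limit are dropped
    tokens = [start] + body + [end]
    return tokens + [pad] * (max_len + 2 - len(tokens))
```

Under this scheme a small limit both shortens the training targets and bounds the length of generated captions, so the limit changes what the decoder is trained to produce, not only how long inference may run.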

4.12. Additional Analysis: Decoding Width and Statistical Significance

| Model / Beam | BLEU-4 | METEOR | CIDEr | ROUGE-L | CIDEr 95% CI (±) |
|---|---|---|---|---|---|
| VISCON-Base, | 0.199 | 0.191 | 0.468 | 0.452 | 0.010 |
| VISCON-Base, | 0.204 | 0.192 | 0.475 | 0.454 | 0.010 |
| VISCON-Base, | 0.205 | 0.193 | 0.477 | 0.455 | 0.011 |
| VISCON-Att, | 0.203 | 0.194 | 0.482 | 0.455 | 0.011 |
| VISCON-Att, | 0.207 | 0.195 | 0.488 | 0.456 | 0.011 |
| VISCON-Att, | 0.208 | 0.195 | 0.489 | 0.457 | 0.012 |
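The last column reports a ±95% interval on CIDEr. The procedure behind it is not spelled out here; one common option is a percentile bootstrap over test images, sketched below on per-image scores. `bootstrap_ci` and its parameters are hypothetical. Because CIDEr's IDF weights depend on the whole reference corpus, resampling precomputed per-image CIDEr values is itself an approximation.

```python
import random
from statistics import mean
from typing import Sequence, Tuple


def bootstrap_ci(
    per_image_scores: Sequence[float],
    num_resamples: int = 1000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Percentile bootstrap interval for the mean of per-image scores (sketch).

    Returns (mean, lower, upper); (upper - lower) / 2 gives a "±" half-width.
    """
    rng = random.Random(seed)
    n = len(per_image_scores)
    # Resample images with replacement and record the mean of each resample.
    means = sorted(mean(rng.choices(per_image_scores, k=n)) for _ in range(num_resamples))
    lo_idx = int(round((alpha / 2) * (num_resamples - 1)))
    hi_idx = int(round((1 - alpha / 2) * (num_resamples - 1)))
    return mean(per_image_scores), means[lo_idx], means[hi_idx]
```

Read this way, the table's half-widths of about 0.010 to 0.012 are comparable to the CIDEr gaps between beam settings within each model (for example 0.468 versus 0.477 for VISCON-Base), so those gaps should be interpreted cautiously; a paired test on the same resampled images would be the sharper comparison.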

4.13. Synthesis of Findings

5. Conclusions and Future Work

Future Work.

References

- Fei-Fei, L., Iyer, A., Koch, C. and Perona, P., 2007. What do we perceive in a glance of a real-world scene? Journal of vision, 7(1), pp.10-10.

- Sutskever, I., Vinyals, O. and Le, Q.V., 2014, December. Sequence to sequence learning with neural networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Volume 2 (pp. 3104-3112).

- Cho, K., van Merriënboer, B., Gulcehre, C., Bahdanau, D., Bougares, F., Schwenk, H. and Bengio, Y., 2014, October. Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (pp. 1724-1734).

- Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M. and Berg, A.C., 2015. Imagenet large scale visual recognition challenge. International journal of computer vision, 115(3), pp.211-252.

- Deng, J., Dong, W., Socher, R., Li, L.J., Li, K. and Fei-Fei, L., 2009, June. Imagenet: A large-scale hierarchical image database. In 2009 IEEE conference on computer vision and pattern recognition (pp. 248-255). IEEE.

- Elman, J.L., 1990. Finding structure in time. Cognitive science, 14(2), pp.179-211.

- Vinyals, O., Toshev, A., Bengio, S. and Erhan, D., 2015. Show and tell: A neural image caption generator. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3156-3164).

- Mao, J., Xu, W., Yang, Y., Wang, J., Huang, Z. and Yuille, A., 2014. Deep captioning with multimodal recurrent neural networks (m-rnn). arXiv:1412.6632.

- Karpathy, A. and Fei-Fei, L., 2015. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3128-3137).

- Bahdanau, D., Cho, K.H. and Bengio, Y., 2015, January. Neural machine translation by jointly learning to align and translate. In 3rd International Conference on Learning Representations, ICLR 2015.

- Xu, K., Ba, J., Kiros, R., Cho, K., Courville, A., Salakhudinov, R., Zemel, R. and Bengio, Y., 2015, June. Show, attend and tell: Neural image caption generation with visual attention. In International conference on machine learning (pp. 2048-2057). PMLR.

- Farhadi, A., Hejrati, M., Sadeghi, M.A., Young, P., Rashtchian, C., Hockenmaier, J. and Forsyth, D., 2010, September. Every picture tells a story: Generating sentences from images. In European conference on computer vision (pp. 15-29). Springer, Berlin, Heidelberg.

- Ordonez, V., Kulkarni, G. and Berg, T., 2011. Im2text: Describing images using 1 million captioned photographs. Advances in neural information processing systems, 24, pp.1143-1151.

- Mason, R. and Charniak, E., 2014, June. Nonparametric method for data-driven image captioning. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) (pp. 592-598).

- Hochreiter, S. and Schmidhuber, J. Long short-term memory. Neural Computation, 9(8):1735–1780, 1997.

- S. Li, G. Kulkarni, T. L. Berg, A. C. Berg, and Y. Choi. 2011. Composing simple image descriptions using web-scale n-grams. In CoNLL. ACL, 220–228.

- G. Kulkarni, V. Premraj, V. Ordonez, S. Dhar, S. Li, Y. Choi, A. C. Berg, and T. Berg. 2013. Babytalk: Understanding and generating simple image descriptions. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI) 35, 12 (2013), 2891–2903.

- He, K., Zhang, X., Ren, S. and Sun, J., 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770-778).

- Sulabh Katiyar and Samir Kumar Borgohain, “Comparative Evaluation of CNN Architectures for Image Caption Generation,” International Journal of Advanced Computer Science and Applications (IJACSA), 11(12), 2020.

- Gehring, J., Auli, M., Grangier, D., Yarats, D. and Dauphin, Y.N., 2017, August. Convolutional sequence to sequence learning. In Proceedings of the 34th International Conference on Machine Learning-Volume 70 (pp. 1243-1252).

- Aneja, J., Deshpande, A. and Schwing, A.G., 2018. Convolutional image captioning. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 5561-5570).

- Hodosh, Micah, Young, Peter, and Hockenmaier, Julia. Framing image description as a ranking task: Data, models and evaluation metrics. Journal of Artificial Intelligence Research, pp. 853–899, 20.

- Young, Peter, Lai, Alice, Hodosh, Micah, and Hockenmaier, Julia. From image descriptions to visual denotations: New similarity metrics for semantic inference over event descriptions. TACL, 2:67–78, 2014.

- Lin, Tsung-Yi, Maire, Michael, Belongie, Serge, Hays, James, Perona, Pietro, Ramanan, Deva, Dollar, Piotr, and Zitnick, C Lawrence. Microsoft coco: Common objects in context. In ECCV, pp. 740–755. 2014.

- X. Chen, H. Fang, T.-Y. Lin, R. Vedantam, S. Gupta, P. Dollár, C.L. Zitnick, Microsoft coco captions: Data collection and evaluation server (2015) , arXiv preprint arXiv:1504.00325.

- Donahue, J., Anne Hendricks, L., Guadarrama, S., Rohrbach, M., Venugopalan, S., Saenko, K. and Darrell, T., 2015. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2625-2634).

- Criminisi, A., Reid, I. and Zisserman, A., 1999. A plane measuring device. Image and Vision Computing, 17(8), pp.625-634.

- Katiyar, S. and Borgohain, S.K., 2021. Image Captioning using Deep Stacked LSTMs, Contextual Word Embeddings and Data Augmentation. arXiv:2102.11237.

- Wang, C., Yang, H. and Meinel, C., 2018. Image captioning with deep bidirectional LSTMs and multi-task learning. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 14(2s), pp.1-20.

- Meishan Zhang, Hao Fei, Bin Wang, Shengqiong Wu, Yixin Cao, Fei Li, and Min Zhang. Recognizing everything from all modalities at once: Grounded multimodal universal information extraction. In Findings of the Association for Computational Linguistics: ACL 2024, 2024.

- Shengqiong Wu, Hao Fei, and Tat-Seng Chua. Universal scene graph generation. Proceedings of the CVPR, 2025.

- Shengqiong Wu, Hao Fei, Jingkang Yang, Xiangtai Li, Juncheng Li, Hanwang Zhang, and Tat-seng Chua. Learning 4d panoptic scene graph generation from rich 2d visual scene. Proceedings of the CVPR, 2025.

- Yaoting Wang, Shengqiong Wu, Yuecheng Zhang, Shuicheng Yan, Ziwei Liu, Jiebo Luo, and Hao Fei. Multimodal chain-of-thought reasoning: A comprehensive survey. 2025; arXiv:2503.12605.

- Hao Fei, Yuan Zhou, Juncheng Li, Xiangtai Li, Qingshan Xu, Bobo Li, Shengqiong Wu, Yaoting Wang, Junbao Zhou, Jiahao Meng, Qingyu Shi, Zhiyuan Zhou, Liangtao Shi, Minghe Gao, Daoan Zhang, Zhiqi Ge, Weiming Wu, Siliang Tang, Kaihang Pan, Yaobo Ye, Haobo Yuan, Tao Zhang, Tianjie Ju, Zixiang Meng, Shilin Xu, Liyu Jia, Wentao Hu, Meng Luo, Jiebo Luo, Tat-Seng Chua, Shuicheng Yan, and Hanwang Zhang. On path to multimodal generalist: General-level and general-bench. In Proceedings of the ICML, 2025.

- Jian Li, Weiheng Lu, Hao Fei, Meng Luo, Ming Dai, Min Xia, Yizhang Jin, Zhenye Gan, Ding Qi, Chaoyou Fu, et al. A survey on benchmarks of multimodal large language models. arXiv:2408.08632, 2024.

- Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. Deep learning. Nature, 521(7553):436–444, May 2015.

- Li Deng and Dong Yu. Deep Learning: Methods and Applications. NOW Publishers, May 2014. URL https://www.microsoft.com/en-us/research/publication/deep-learning-methods-and-applications/.

- Eric Makita and Artem Lenskiy. A movie genre prediction based on Multivariate Bernoulli model and genre correlations. (May), mar 2016a. http://arxiv.org/abs/1604.08608.

- Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, and Alan L Yuille. Explain images with multimodal recurrent neural networks. arXiv:1410.1090, 2014.

- Deli Pei, Huaping Liu, Yulong Liu, and Fuchun Sun. Unsupervised multimodal feature learning for semantic image segmentation. In The 2013 International Joint Conference on Neural Networks (IJCNN), pp. 1–6. IEEE, aug 2013. ISBN 978-1-4673-6129-3. http://ieeexplore.ieee.org/lpdocs/epic03/wrapper.htm?arnumber=6706748.

- Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556, 2014.

- Richard Socher, Milind Ganjoo, Christopher D Manning, and Andrew Ng. Zero-Shot Learning Through Cross-Modal Transfer. In C J C Burges, L Bottou, M Welling, Z Ghahramani, and K Q Weinberger (eds.), Advances in Neural Information Processing Systems 26, pp. 935–943. Curran Associates, Inc., 2013. URL http://papers.nips.cc/paper/5027-zero-shot-learning-through-cross-modal-transfer.pdf.

- Hao Fei, Shengqiong Wu, Meishan Zhang, Min Zhang, Tat-Seng Chua, and Shuicheng Yan. Enhancing video-language representations with structural spatio-temporal alignment. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024a.

- A. Karpathy and L. Fei-Fei, “Deep visual-semantic alignments for generating image descriptions,” TPAMI, vol. 39, no. 4, pp. 664–676, 2017.

- Hao Fei, Yafeng Ren, and Donghong Ji. Retrofitting structure-aware transformer language model for end tasks. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, pages 2151–2161, 2020a.

- Shengqiong Wu, Hao Fei, Fei Li, Meishan Zhang, Yijiang Liu, Chong Teng, and Donghong Ji. Mastering the explicit opinion-role interaction: Syntax-aided neural transition system for unified opinion role labeling. In Proceedings of the Thirty-Sixth AAAI Conference on Artificial Intelligence, pages 11513–11521, 2022.

- Wenxuan Shi, Fei Li, Jingye Li, Hao Fei, and Donghong Ji. Effective token graph modeling using a novel labeling strategy for structured sentiment analysis. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 4232–4241, 2022.

- Hao Fei, Yue Zhang, Yafeng Ren, and Donghong Ji. Latent emotion memory for multi-label emotion classification. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 7692–7699, 2020b.

- Fengqi Wang, Fei Li, Hao Fei, Jingye Li, Shengqiong Wu, Fangfang Su, Wenxuan Shi, Donghong Ji, and Bo Cai. Entity-centered cross-document relation extraction. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, pages 9871–9881, 2022.

- Ling Zhuang, Hao Fei, and Po Hu. Knowledge-enhanced event relation extraction via event ontology prompt. Inf. Fusion, 100:101919, 2023.

- Adams Wei Yu, David Dohan, Minh-Thang Luong, Rui Zhao, Kai Chen, Mohammad Norouzi, and Quoc V Le. Qanet: Combining local convolution with global self-attention for reading comprehension. arXiv preprint arXiv:1804.09541, 2018.

- Shengqiong Wu, Hao Fei, Yixin Cao, Lidong Bing, and Tat-Seng Chua. Information screening whilst exploiting! multimodal relation extraction with feature denoising and multimodal topic modeling. arXiv:2305.11719, 2023a.

- Jundong Xu, Hao Fei, Liangming Pan, Qian Liu, Mong-Li Lee, and Wynne Hsu. Faithful logical reasoning via symbolic chain-of-thought. arXiv preprint arXiv:2405.18357, 2024.

- Matthew Dunn, Levent Sagun, Mike Higgins, V Ugur Guney, Volkan Cirik, and Kyunghyun Cho. SearchQA: A new Q&A dataset augmented with context from a search engine. arXiv:1704.05179, 2017.

- Hao Fei, Shengqiong Wu, Jingye Li, Bobo Li, Fei Li, Libo Qin, Meishan Zhang, Min Zhang, and Tat-Seng Chua. Lasuie: Unifying information extraction with latent adaptive structure-aware generative language model. In Proceedings of the Advances in Neural Information Processing Systems, NeurIPS 2022, pages 15460–15475, 2022a.

- Guang Qiu, Bing Liu, Jiajun Bu, and Chun Chen. Opinion word expansion and target extraction through double propagation. Computational linguistics, 37(1):9–27, 2011.

- Hao Fei, Yafeng Ren, Yue Zhang, Donghong Ji, and Xiaohui Liang. Enriching contextualized language model from knowledge graph for biomedical information extraction. Briefings in Bioinformatics, 22(3), 2021.

- Shengqiong Wu, Hao Fei, Wei Ji, and Tat-Seng Chua. Cross2StrA: Unpaired cross-lingual image captioning with cross-lingual cross-modal structure-pivoted alignment. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2593–2608, 2023b.

- Bobo Li, Hao Fei, Fei Li, Tat-seng Chua, and Donghong Ji. 2024a. Multimodal emotion-cause pair extraction with holistic interaction and label constraint. ACM Transactions on Multimedia Computing, Communications and Applications, 2024.

- Bobo Li, Hao Fei, Fei Li, Shengqiong Wu, Lizi Liao, Yinwei Wei, Tat-Seng Chua, and Donghong Ji. 2025. Revisiting conversation discourse for dialogue disentanglement. ACM Transactions on Information Systems, 2025; 43, 1–34.

- Bobo Li, Hao Fei, Fei Li, Yuhan Wu, Jinsong Zhang, Shengqiong Wu, Jingye Li, Yijiang Liu, Lizi Liao, Tat-Seng Chua, and Donghong Ji. 2023. DiaASQ: A Benchmark of Conversational Aspect-based Sentiment Quadruple Analysis. In Findings of the Association for Computational Linguistics: ACL 2023. 13449–13467.

- Bobo Li, Hao Fei, Lizi Liao, Yu Zhao, Fangfang Su, Fei Li, and Donghong Ji. 2024b. Harnessing holistic discourse features and triadic interaction for sentiment quadruple extraction in dialogues. In Proceedings of the AAAI conference on artificial intelligence, Vol. 38. 18462–18470.

- Shengqiong Wu, Hao Fei, Liangming Pan, William Yang Wang, Shuicheng Yan, and Tat-Seng Chua. 2025a. Combating Multimodal LLM Hallucination via Bottom-Up Holistic Reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 39. 8460–8468.

- Shengqiong Wu, Weicai Ye, Jiahao Wang, Quande Liu, Xintao Wang, Pengfei Wan, Di Zhang, Kun Gai, Shuicheng Yan, Hao Fei, et al. 2025b. Any2caption: Interpreting any condition to caption for controllable video generation. arXiv:2503.24379 (2025).

- Han Zhang, Zixiang Meng, Meng Luo, Hong Han, Lizi Liao, Erik Cambria, and Hao Fei. 2025. Towards multimodal empathetic response generation: A rich text-speech-vision avatar-based benchmark. In Proceedings of the ACM on Web Conference 2025. 2872–2881.

- Yu Zhao, Hao Fei, Shengqiong Wu, Meishan Zhang, Min Zhang, and Tat-seng Chua. 2025. Grammar induction from visual, speech and text. Artificial Intelligence, 2025; 341, 104306.

- Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Percy Liang. Squad: 100,000+ questions for machine comprehension of text. 2016; arXiv:1606.05250.

- Hao Fei, Fei Li, Bobo Li, and Donghong Ji. Encoder-decoder based unified semantic role labeling with label-aware syntax. In Proceedings of the AAAI conference on artificial intelligence, pages 12794–12802, 2021a.

- D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in ICLR, 2015.

- Hao Fei, Shengqiong Wu, Yafeng Ren, Fei Li, and Donghong Ji. Better combine them together! integrating syntactic constituency and dependency representations for semantic role labeling. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, pages 549–559, 2021b.

- K. Papineni, S. Roukos, T. Ward, and W. Zhu, “Bleu: a method for automatic evaluation of machine translation,” in ACL, 2002, pp. 311–318.

- Hao Fei, Bobo Li, Qian Liu, Lidong Bing, Fei Li, and Tat-Seng Chua. Reasoning implicit sentiment with chain-of-thought prompting. arXiv preprint arXiv:2305.11255, 2023a.

- Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics. URL https://aclanthology.org/N19-1423.

- Shengqiong Wu, Hao Fei, Leigang Qu, Wei Ji, and Tat-Seng Chua. Next-gpt: Any-to-any multimodal llm. CoRR, abs/2309.05519, 2023c.

- Qimai Li, Zhichao Han, and Xiao-Ming Wu. Deeper insights into graph convolutional networks for semi-supervised learning. In Thirty-Second AAAI Conference on Artificial Intelligence, 2018.

- Hao Fei, Shengqiong Wu, Wei Ji, Hanwang Zhang, Meishan Zhang, Mong-Li Lee, and Wynne Hsu. Video-of-thought: Step-by-step video reasoning from perception to cognition. In Proceedings of the International Conference on Machine Learning, 2024b.

- Naman Jain, Pranjali Jain, Pratik Kayal, Jayakrishna Sahit, Soham Pachpande, Jayesh Choudhari, et al. Agribot: agriculture-specific question answer system. IndiaRxiv, 2019.

- Hao Fei, Shengqiong Wu, Wei Ji, Hanwang Zhang, and Tat-Seng Chua. Dysen-vdm: Empowering dynamics-aware text-to-video diffusion with llms. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 7641–7653, 2024c.

- Mihir Momaya, Anjnya Khanna, Jessica Sadavarte, and Manoj Sankhe. Krushi–the farmer chatbot. In 2021 International Conference on Communication information and Computing Technology (ICCICT), pages 1–6. IEEE, 2021.

- Hao Fei, Fei Li, Chenliang Li, Shengqiong Wu, Jingye Li, and Donghong Ji. Inheriting the wisdom of predecessors: A multiplex cascade framework for unified aspect-based sentiment analysis. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, IJCAI, pages 4096–4103, 2022b.

- Shengqiong Wu, Hao Fei, Yafeng Ren, Donghong Ji, and Jingye Li. Learn from syntax: Improving pair-wise aspect and opinion terms extraction with rich syntactic knowledge. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, pages 3957–3963, 2021.

- Bobo Li, Hao Fei, Lizi Liao, Yu Zhao, Chong Teng, Tat-Seng Chua, Donghong Ji, and Fei Li. Revisiting disentanglement and fusion on modality and context in conversational multimodal emotion recognition. In Proceedings of the 31st ACM International Conference on Multimedia, MM, pages 5923–5934, 2023.

- Hao Fei, Qian Liu, Meishan Zhang, Min Zhang, and Tat-Seng Chua. Scene graph as pivoting: Inference-time image-free unsupervised multimodal machine translation with visual scene hallucination. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 5980–5994, 2023b.

- S. Banerjee and A. Lavie, “METEOR: an automatic metric for MT evaluation with improved correlation with human judgments,” in IEEMMT, 2005, pp. 65–72.

- Hao Fei, Shengqiong Wu, Hanwang Zhang, Tat-Seng Chua, and Shuicheng Yan. Vitron: A unified pixel-level vision llm for understanding, generating, segmenting, editing. In Proceedings of the Advances in Neural Information Processing Systems, NeurIPS 2024, 2024d.

- Abbott Chen and Chai Liu. Intelligent commerce facilitates education technology: The platform and chatbot for the taiwan agriculture service. International Journal of e-Education, e-Business, e-Management and e-Learning, 2021; 11:1–10, 01.

- Shengqiong Wu, Hao Fei, Xiangtai Li, Jiayi Ji, Hanwang Zhang, Tat-Seng Chua, and Shuicheng Yan. Towards semantic equivalence of tokenization in multimodal llm. 2024; arXiv:2406.05127.

- Jingye Li, Kang Xu, Fei Li, Hao Fei, Yafeng Ren, and Donghong Ji. MRN: A locally and globally mention-based reasoning network for document-level relation extraction. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, pages 1359–1370, 2021.

- Hao Fei, Shengqiong Wu, Yafeng Ren, and Meishan Zhang. Matching structure for dual learning. In Proceedings of the International Conference on Machine Learning, ICML, pages 6373–6391, 2022c.

- Hu Cao, Jingye Li, Fangfang Su, Fei Li, Hao Fei, Shengqiong Wu, Bobo Li, Liang Zhao, and Donghong Ji. OneEE: A one-stage framework for fast overlapping and nested event extraction. In Proceedings of the 29th International Conference on Computational Linguistics, pages 1953–1964, 2022.

- Isakwisa Gaddy Tende, Kentaro Aburada, Hisaaki Yamaba, Tetsuro Katayama, and Naonobu Okazaki. Proposal for a crop protection information system for rural farmers in tanzania. Agronomy, 11(12):2411, 2021.

- Hao Fei, Yafeng Ren, and Donghong Ji. Boundaries and edges rethinking: An end-to-end neural model for overlapping entity relation extraction. Information Processing & Management, 57(6):102311, 2020c.

- Jingye Li, Hao Fei, Jiang Liu, Shengqiong Wu, Meishan Zhang, Chong Teng, Donghong Ji, and Fei Li. Unified named entity recognition as word-word relation classification. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 10965–10973, 2022.

- Mohit Jain, Pratyush Kumar, Ishita Bhansali, Q Vera Liao, Khai Truong, and Shwetak Patel. Farmchat: a conversational agent to answer farmer queries. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2(4):1–22, 2018b.

- Shengqiong Wu, Hao Fei, Hanwang Zhang, and Tat-Seng Chua. Imagine that! abstract-to-intricate text-to-image synthesis with scene graph hallucination diffusion. In Proceedings of the 37th International Conference on Neural Information Processing Systems, pages 79240–79259, 2023d.

- P. Anderson, B. Fernando, M. Johnson, and S. Gould, “SPICE: semantic propositional image caption evaluation,” in ECCV, 2016, pp. 382–398.

- Hao Fei, Tat-Seng Chua, Chenliang Li, Donghong Ji, Meishan Zhang, and Yafeng Ren. On the robustness of aspect-based sentiment analysis: Rethinking model, data, and training. ACM Transactions on Information Systems, 41(2):50:1–50:32, 2023c.

- Yu Zhao, Hao Fei, Yixin Cao, Bobo Li, Meishan Zhang, Jianguo Wei, Min Zhang, and Tat-Seng Chua. Constructing holistic spatio-temporal scene graph for video semantic role labeling. In Proceedings of the 31st ACM International Conference on Multimedia, MM, pages 5281–5291, 2023a.

- Shengqiong Wu, Hao Fei, Yixin Cao, Lidong Bing, and Tat-Seng Chua. Information screening whilst exploiting! multimodal relation extraction with feature denoising and multimodal topic modeling. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 14734–14751, 2023e.

- Hao Fei, Yafeng Ren, Yue Zhang, and Donghong Ji. Nonautoregressive encoder-decoder neural framework for end-to-end aspect-based sentiment triplet extraction. IEEE Transactions on Neural Networks and Learning Systems, 34(9):5544–5556, 2023d.

- Kelvin Xu, Jimmy Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard S Zemel, and Yoshua Bengio. Show, attend and tell: Neural image caption generation with visual attention. arXiv preprint arXiv:1502.03044, 2015.

- Seniha Esen Yuksel, Joseph N Wilson, and Paul D Gader. Twenty years of mixture of experts. IEEE transactions on neural networks and learning systems, 2012; 23, 1177–1193.

- Sanjeev Arora, Yingyu Liang, and Tengyu Ma. A simple but tough-to-beat baseline for sentence embeddings. In ICLR, 2017.

| Number of Layers | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | CIDEr | ROUGE-L |
|---|---|---|---|---|---|---|---|
| VISCON-Base (CNN+CNN) | | | | | | | |
| 1 | 0.6279 | 0.4439 | 0.3022 | 0.2038 | 0.1924 | 0.4748 | 0.4544 |
| 2 | 0.6246 | 0.4350 | 0.2930 | 0.1986 | 0.1915 | 0.4502 | 0.4492 |
| 3 | 0.6193 | 0.4355 | 0.2967 | 0.2009 | 0.1939 | 0.4685 | 0.4507 |
| 4 | 0.6173 | 0.4345 | 0.2964 | 0.2013 | 0.1943 | 0.4670 | 0.4495 |
| VISCON-Att (CNN+CNN+Att) | | | | | | | |
| 1 | 0.6257 | 0.4430 | 0.3018 | 0.2040 | 0.1929 | 0.4721 | 0.4541 |
| 2 | 0.6316 | 0.4479 | 0.3065 | 0.2078 | 0.1950 | 0.4864 | 0.4561 |
| 3 | 0.6180 | 0.4281 | 0.2901 | 0.1975 | 0.1902 | 0.4461 | 0.4443 |
| 4 | 0.6151 | 0.4262 | 0.2889 | 0.1947 | 0.1909 | 0.4524 | 0.4462 |

| Number of Layers | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | CIDEr | ROUGE-L |
|---|---|---|---|---|---|---|---|
| VISCON-Base (CNN+CNN) | | | | | | | |
| 1 | 0.6432 | 0.4495 | 0.3108 | 0.2123 | 0.1774 | 0.3831 | 0.4344 |
| 2 | 0.6393 | 0.4469 | 0.3113 | 0.2154 | 0.1770 | 0.3874 | 0.4346 |
| 3 | 0.6364 | 0.4413 | 0.3051 | 0.2094 | 0.1782 | 0.3838 | 0.4338 |
| 4 | 0.6303 | 0.4363 | 0.3006 | 0.2070 | 0.1763 | 0.3780 | 0.4305 |
| VISCON-Att (CNN+CNN+Att) | | | | | | | |
| 1 | 0.6382 | 0.4451 | 0.3080 | 0.2094 | 0.1772 | 0.3791 | 0.4336 |
| 2 | 0.6411 | 0.4452 | 0.3071 | 0.2102 | 0.1781 | 0.3868 | 0.4351 |
| 3 | 0.6400 | 0.4459 | 0.3081 | 0.2122 | 0.1786 | 0.3928 | 0.4359 |
| 4 | 0.6285 | 0.4349 | 0.2980 | 0.2021 | 0.1756 | 0.3627 | 0.4298 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).