1. Introduction

Hyperspectral images (HSIs) and Light Detection and Ranging (LiDAR) complement one another in a mutually reinforcing manner and have become indispensable for remote sensing applications, particularly in land-cover classification [

1]. HSIs can provide detailed spectral signatures for the precise discrimination of materials by capturing reflectance across hundreds of contiguous narrow spectral bands. LiDAR can offer accurate three-dimensional structural information and generate elevation and surface profiles that are largely unaffected by illumination and atmospheric conditions [

2]. The integration of HSI and LiDAR data enables the simultaneous use of rich spectral, spatial, and height cues, significantly improving the ability to differentiate classes with similar spectral features but varying in vertical structure, such as distinguishing vegetation from structures in urban environments. Early studies show that multimodal fusion outperforms single-source methods. However, the Hughes phenomenon (“curse of dimensionality”) can lead to overfitting and poor generalization. Thus, data fusion should include careful feature selection and dimensionality reduction [

3].

Early HSI-LiDAR fusion methods, while effective, were limited by manual feature design and restricted modeling power. However, they demonstrated the potential for improvement in the field. Researchers created spectral indices, principal components, texture measures, and elevation attributes, then combined these features before feeding them into traditional classifiers like Support Vector Machines (SVMs) or Random Forests [

4]. This method of feature-level fusion showed that combining spectral and height information improved accuracy compared to using either modality alone. These approaches have often failed to capture the relationships between spectral data and structural details. When many features are used with limited training data, overfitting occurs. With the advances, we can mitigate these problems and enable more accurate and robust HSI-LiDAR fusion.

Deep learning has reshaped feature extraction and fusion by replacing hand-crafted pipelines with data-driven representations. In HSI classification, convolutional neural networks (CNNs) learn joint spectral-spatial features end to end [

5]. Multi-branch designs with attention modules emphasize informative bands and neighborhoods, achieving state-of-the-art performance in HSI classification and HSI-LiDAR fusion [

6]. LiDAR height maps can be concatenated as auxiliary channels or handled in dedicated branches to inject shape and elevation cues; attention further improves accuracy in urban land-cover mapping. CNNs are easy to train with pretrained backbones and augmentation, but their fixed receptive fields hinder the capture of long-range dependencies and complex structure. These increase the compute and overfitting risk because broadening the context usually means larger patches or deeper models. Furthermore, misalignments between HSI and LiDAR data can adversely affect CNN accuracy, as these models assume precise coregistration.

GNNs naturally handle the irregular, non-Euclidean structure of remote-sensing data. In a typical GCN, pixels or objects (often after superpixel segmentation) become nodes linked to adjacent or similar nodes, letting information move across neighborhoods and multiple hops [

7]. Newer multi-scale designs, graph attention networks and graph U-Nets, pool nodes hierarchically to capture long-range context while keeping local detail [

8]. Building a combined HSI-LiDAR graph can better preserve shapes and boundaries than patch-based CNNs [

9]. With support from spectral graph theory, polynomial spectral filters provide efficient multi-scale convolutions that highlight important frequencies and reduce over-smoothing. Still, deep GNNs bring issues: many layers may over-smooth, large neighborhoods are costly, and naive graphs can connect dissimilar regions and add noise. Results are also sensitive to hyperparameters (connectivity, hops, learning rates). Current practice favors sparse, localized graphs, learnable adjacency, and techniques such as graph dropout and edge pruning to trade off information, noise, and efficiency.

Transformers have been adapted for HSI-LiDAR data fusion to capture complex dependencies and interactions [

10]. The Vision Transformer models treat HSI-LiDAR fusion images as sequences of patches, using self-attention to weight information from distant scene regions, aiding in disambiguating similar spectra with broader context. Dual-branch transformer architectures with cross-attention enable direct exchange between HSI and LiDAR features, improving spectral and elevation alignment and classification robustness, and adaptive gating balances modality contributions [

11]. Transformers placed after CNN/GCN extractors blend local spectral-spatial features with global context, and fully attention-based spectral-spatial-elevation models fuse imagery and LiDAR within one self-attention stack. Although these models attain high accuracy, their large parameter counts and weaker inductive biases make them data-hungry and computationally intensive, and thus susceptible to overfitting on the small datasets typical of HSI-LiDAR studies. Achieving stable performance requires careful initialization, strong regularization, and meticulous hyperparameter tuning. In limited data scenarios, pure transformers may struggle unless combined with training techniques or hybridized with convolutional layers.

We present a hybrid architecture that couples spectral graph convolutions with transformer-style attention, combining their strengths while mitigating their weaknesses. Spectral graph convolution—implemented with localized, multi-scale filters- is well-suited to HSI-LiDAR data, capturing fine detail and smooth regions with relatively few parameters. Transformer attention on the graph dynamically adjusts the influence of neighboring nodes, thereby expanding the receptive field without requiring very deep networks. Built for robustness, our MS-GWCT with graph-attention layers adapts well across settings and delivers reliable performance. To improve reliability, we integrate the Schrödinger Optimization Algorithm (SOA) [

12] for automated hyperparameter tuning, efficiently identifying near-optimal settings. Our contributions are summarized as follows:

(1) We propose a novel MS-GWCT architecture that combines multi-scale graph convolution with transformer attention mechanisms for classifying fused HSI and LiDAR data.

(2) We have developed a comprehensive suite of training strategies in our MS-GWCT framework, including focal loss with label smoothing, supervised contrastive regularization, data augmentation techniques such as Mixup, and stochastic graph augmentation, to improve robustness when labeled data are scarce.

(3) We introduce a practical application of the Schrödinger Optimization Algorithm (SOA) based hyperparameter search method to optimize numerous model design parameters, thereby achieving state-of-the-art classification performance on Houston 2013 and Trento benchmark datasets.

The remainder of this paper is organized as follows:

Section 2 (Methodology) presents the proposed MS-GWCT framework.

Section 3 (Experiments) describes the experimental setup, including datasets, use of the provided .mat files and train/test splits, implementation details, and evaluation metrics.

Section 4 reports classification results on the Houston 2013 and Trento benchmarks, compares MS-GWCT with state-of-the-art fusion models, and includes ablation studies on key design choices.

Section 5 discusses the findings, highlighting advantages and current limitations, and analyzing the influence of hyperparameter optimization.

Section 6 offers the conclusions.

2. Materials and Methods

In this section, we explain the methodology of the proposed Multi-Scale Graph Wavelet Convolutional Transformer (MS-GWCT) for HSI-LiDAR classification.

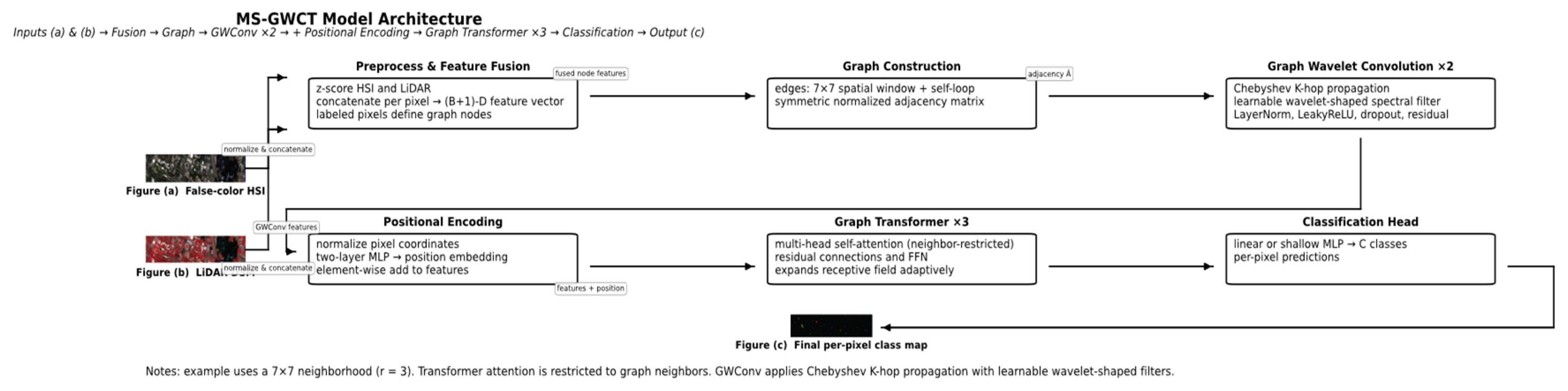

Figure 1.

Architecture of the proposed MS-GWCT model. It includes graph construction from co-registered HSI and LiDAR data, multi-scale graph wavelet convolution layers, Transformer-based attention layers on the graph, and a final classification head for per-pixel class prediction.

Figure 1.

Architecture of the proposed MS-GWCT model. It includes graph construction from co-registered HSI and LiDAR data, multi-scale graph wavelet convolution layers, Transformer-based attention layers on the graph, and a final classification head for per-pixel class prediction.

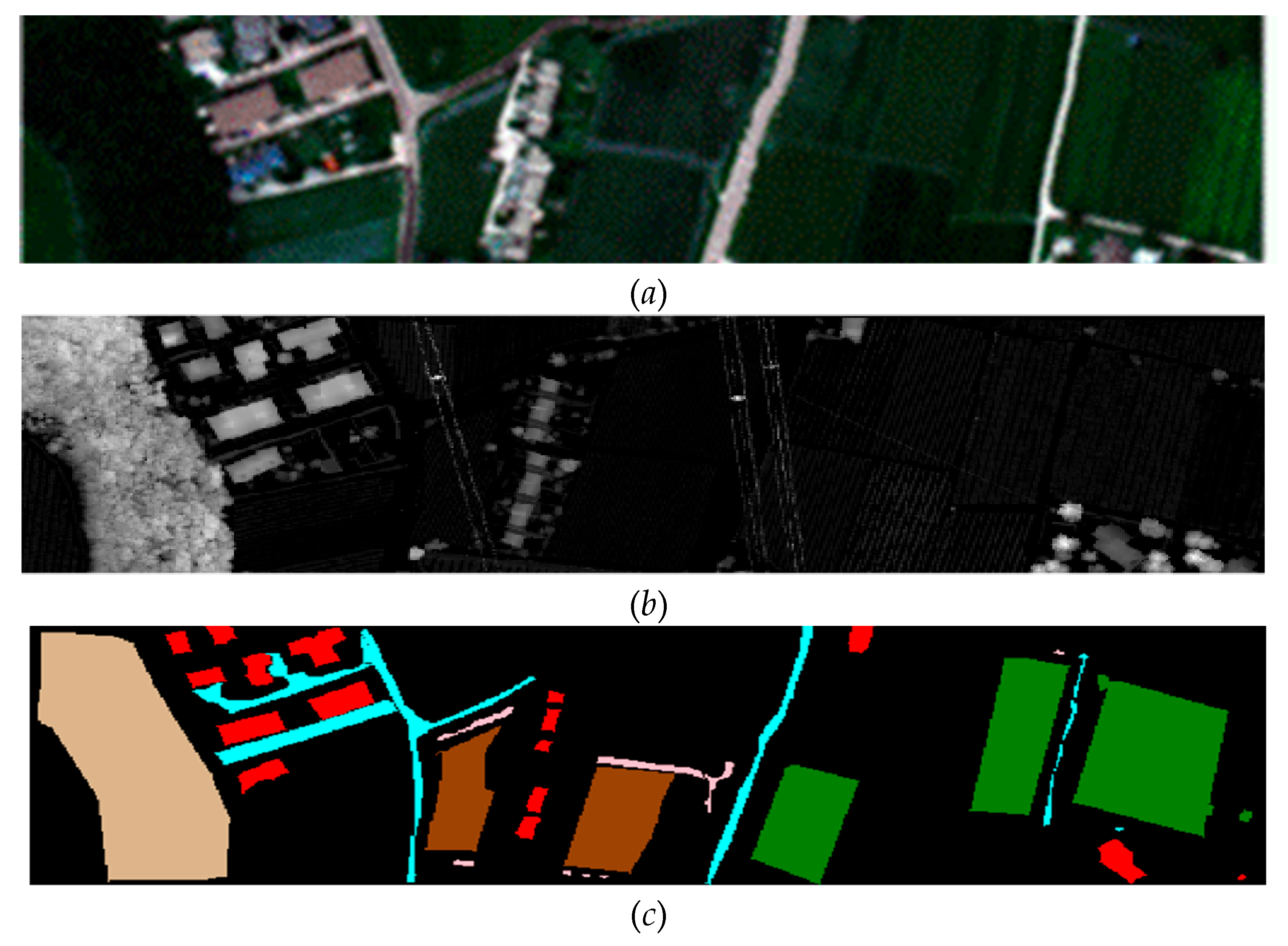

2.1. Data Preprocessing and Graph Construction

We assume a multimodal remote sensing dataset comprising a co-registered HSI and a LiDAR-derived digital surface model (DSM). In particular, we evaluate our approach on the two public datasets of Houston University 2013 data and the Trento data (described later in

Section 3.1)., each providing an HSI data cube along with corresponding LiDAR elevation data. Let

denote the HSI data cube, where

H and

W are the image height and width in pixels, and

B is the number of spectral bands. Thus, each spatial pixel location (

i,j) has an associated

B-dimensional spectral reflectance vector

. The LiDAR data is given as a single-channel matrix

, where

represents the elevation (DSM value) at position (

i,j). The ground-truth label map is

, where C is the number of land-cover classes (for example, C=15 for Houston 2013 and C=6 for Trento) and

denotes unlabeled pixels (background). We restrict our graph to the set of valid labeled pixels (those with

). From each dataset, we are given disjoint index sets for training and test pixels (e.g., provided by mask files TRLabel.mat and TSLabel.mat), which specify which labeled pixels are used for model training and which are held out for testing.

Before constructing the graph, we perform feature normalization and fusion to combine HSI and LiDAR information at each node. We apply z-score normalization independently to each HSI band and to the LiDAR channel, using statistics computed over all labeled pixels in the scene. Formally, for HSI band

b, let

and

be the mean and standard deviation of that band over all labeled pixels. We transform the HSI value at pixel (

i,j) in band

b as

. Similarly, let

and

be the mean and standard deviation of the LiDAR DSM values over all labeled pixels; we normalize the LiDAR feature as

. After normalization, we concatenate the HSI and LiDAR features at each labeled pixel to form a fused feature vector for that pixel. Denoting this fused feature for node (pixel)

n (located at image coordinates (

i,j)) as

, we have:

Here is the normalized HSI spectral vector at that pixel and is the normalized LiDAR value (treated as a one-dimensional feature). This results in a (B+1)-dimensional feature vector for each labeled node. Stacking all node feature vectors in a fixed order yields a feature matrix, where N is the number of labeled pixels (graph nodes). The n-th row of corresponds to node n’s fused HSI-LiDAR feature. We will use n (or sometimes (i,j) when referring to spatial coordinates) to index graph nodes from here onward.

Next, we construct a spatial graph over the labeled pixels to model their neighborhood relationships. The node set consists of all pixels with known class labels. We connect two nodes with an undirected edge (i.e.,) if their spatial locations lie within a fixed neighborhood in the image. Specifically, for the Houston 2013 dataset we use a square window of radius r=3(covering a 7×7 pixel neighborhood), and for Trento we use r=2 (a 5×5 window). In other words, any two labeled pixels whose coordinates differ by at most r in both row and column directions are connected by an edge. We also include a self-loop edge for every node (each node is connected to itself) to allow retention of its own features during graph convolution. This approach yields an adjacency matrix for the graph, defined as if nodes i and j are connected (including the case i=j for self-loops) and otherwise. Let be the identity matrix and let be the degree matrix of the graph (a diagonal matrix with, the degree of node i). We then compute the symmetric normalized adjacency matrix as. This normalized adjacency will serve as the core graph propagation operator in our model. The normalization scales each edge by the degrees of its incident nodes, balancing the influence of nodes with differing connectivity. It is a standard technique to improve numerical stability and prevent features from exploding or vanishing during message passing. Unless stated otherwise, we use this normalized adjacency for both datasets throughout training and inference. In analogy to image processing, can be viewed as a normalized graph Laplacian kernel that captures the spatial structure of the labeled data points.

While the basic graph uses unweighted edges (

for all neighboring nodes, 0 otherwise), we also experiment with incorporating feature similarity and spatial proximity into the edge weights to potentially reduce spurious connections (especially useful if there is some misregistration between HSI and LiDAR). In a weighted variant of the graph, we define the initial edge weight between two neighboring nodes

i and

j using a Gaussian kernel on both feature difference and spatial distance. Let

and

be the fused feature vectors of nodes

i and

j (after normalization), and let

and

be the normalized spatial coordinates of these pixels (for example,

if we scale the row and column indices to [0,1]). We define:

here

and

are temperature (bandwidth) hyperparameters controlling the sensitivity to spectral feature differences and spatial distances, respectively. Intuitively,

will be close to 1 (high weight) if node

i and

j have very similar HSI-LiDAR features and are spatially nearby, whereas

will be very small if the nodes’ features differ significantly or if they are far apart (even if they fall within the nominal

neighborhood). By setting appropriate

and

(larger values yield a flatter Gaussian, smaller values make it more selective), we obtain a feature-aware weighted adjacency. In practice, we found that the unweighted graph (treating all neighbors equally within the window)) already performs very well for Houston 2013 and Trento. The Gaussian edge weights of Equation (2) can help in cases of slight misregistration between HSI and LiDAR by down-weighting edges between dissimilar pixels. We include

and

as tunable hyperparameters in our later SOA search (Section 2.5), but in our final optimized models these weights were either essentially uniform (for large

values) or provided only marginal improvement. Thus, unless otherwise noted, one can assume an unweighted graph ((i.e.

for all adjacent

i,j) for the core MS-GWCT model.

Additionally, we use a simple graph augmentation method during training to improve robustness, especially because of the limited number of labeled nodes. At regular intervals (every P epochs), we randomly modify the graph’s edges and use the altered graph for one epoch, then switch back to the original. In practice, this involves randomly dropping some existing edges and adding new connections between nodes that are close in space but not originally connected. The dropout is applied symmetrically (if edge i–j is dropped, j–i is also dropped) and we typically drop each edge with a fixed probability (e.g., 10–20%). For adding edges, we consider pairs of nodes within the same spatial window that were not initially connected, and we sample a small number of new edges among them with a low probability (e.g., 5–15%). The probability of adding a particular edge can be biased by the Gaussian weight (Equation 2) between those nodes, so that we are more likely to add connections between feature-similar neighbors. After this random perturbation, we re-normalize the adjacency matrix to get a new for that training epoch’s forward and backward passes. In the following epoch, the graph can be reset to the original structure or perturbed again with a new random mask. We emphasize that these augmentations are only used during training; for inference, we always use the original, unperturbed graph. This noisy-neighbor sampling technique mitigates over-reliance on any single edge or hub node, reduces the risk of over-smoothing, and makes the model less sensitive to occasional incorrect edges due to misregistration. Our model's ability to learn robust features is particularly evident in the Houston 2013 scene (a dense urban area with many spectrally mixed pixels and complex structures) and in the Trento scene (with elongated agricultural fields where neighborhood connections might otherwise be repetitive). The augmentation encourages the model to learn more robust features that do not depend on a fixed, specific graph structure.

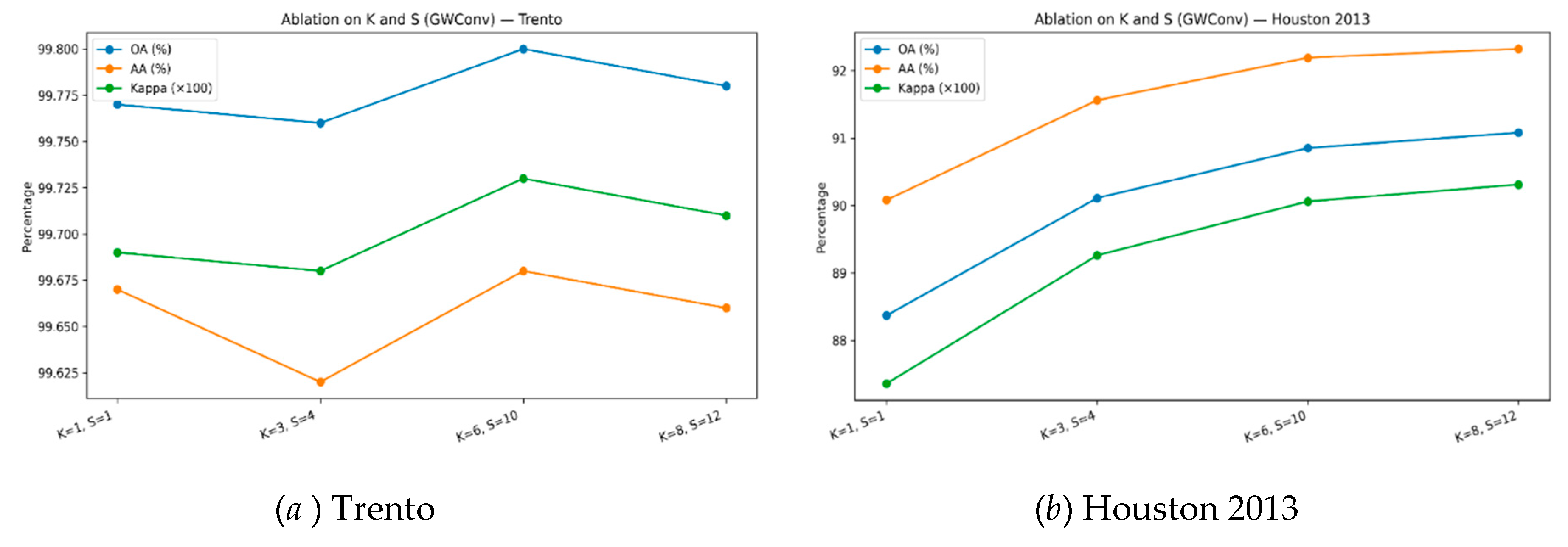

2.2. Multi-Scale Graph Wavelet Convolution

Let be the symmetric normalized adjacency matrix of the graph as constructed above. To perform graph convolutions, we adopt a spectral filtering approach based on graph wavelets. We avoid explicit eigen-decomposition of (which would be expensive for large graphs) by using a Chebyshev polynomial approximation of the graph filter, similar to established techniques for fast GCNs. Specifically, we use Chebyshev polynomials of order K to achieve an exact K-hop localized receptive field while keeping computations efficient.

Consider an input feature matrix

for the

-th graph convolution layer, where

N is the number of nodes and

is the number of feature channels in layer

. (For the first layer,

is the matrix of initial node features defined in

Section 2.1) We seek to apply a spectral graph filter to

to produce transformed features

. Using the Chebyshev polynomial basis [

13], we propagate and mix features as follows. We define the Chebyshev polynomial sequence

of order

k (with respect to a shifted domain

appropriate for the normalized adjacency) via the standard recurrence:

Applying this to the graph operator

, we can propagate node features outwards up to

K hops. Let

and

. Then for

we compute:

Here each represents the node features after aggregating information from the k-hop neighborhood (with alternating signs inherently handled by the recurrence). In effect, corresponds to the k-th Chebyshev polynomial applied to the input features. This sequence of propagated features provides a basis for graph filtering at multiple scales (from local 1-hop up to K-hop reach).

Next, we realize a wavelet-shaped spectral filter by taking a learnable linear combination of these propagated feature tensors. Let

be a set of filter coefficients for this layer (one coefficient for each

term). Then we define the filtered output as:

where the sum is taken elementwise on the node features. This operation implements a polynomial spectral filter on the graph, with the coefficients determining the filter’s frequency response (in the spectral domain of). The set effectively controls whether the filter behaves as a low-pass, band-pass, or high-pass filter, thereby determining how strongly local contrast (higher graph frequencies) versus regional smooth context (lower frequencies) is emphasized.

To initialize

with a meaningful prior (instead of random initialization), we draw inspiration from continuous wavelet functions. An effective design in our model is to initialize the spectral filter to have a wavelet-like shape (e.g., a Mexican-hat or Morlet wavelet) in the graph spectral domain. Instead of requiring a closed-form expression for this wavelet in terms of

’s eigenvalues, we pre-compute its coefficients via a discrete Chebyshev transform of the target spectral shape. In particular, if

denotes the desired wavelet filter response as a function of graph frequency

(with

normalized to [-1,1]), then the Chebyshev coefficient for the

k-th polynomial term can be obtained by an integral (or discrete sum) of the wavelet against the Chebyshev basis:

where is the Kronecker delta (which ensures the k=0 term is not double-counted) and are the graph’s spectral frequencies (eigenvalues of or the Laplacian, appropriately scaled to [-1,1]). In practice, we approximate this sum by sampling a set of representative values or by using the analytical moments of the target wavelet function. We then use these to initialize the filter in equation (5). All remain learnable and are updated during training; the initialization simply gives the model a sensible starting filter (e.g., one that accentuates medium-frequency signals corresponding to class boundaries).

A single Graph Wavelet Convolution (GWConv) layer thus maps the input

to an output

via the propagation in Equation (4) and filtering in Equation (5). To form the final layer output, we then apply a lightweight transformation and nonlinearity. In our implementation, after computing

from Equation (5) (which has the same dimension

), we apply a set of linear projection blocks to mix the features, followed by normalization, nonlinearity, and dropout, with a residual skip connection. Specifically, to capture multiple effective receptive fields within one GWConv layer, we use

S parallel linear projections (scale-specific learnable weight matrices) on

U, each producing an

-dimensional output. Let

for

denote these projection matrices (one for each “scale”). We combine their outputs by a learnable set of scalar weights. Denoting by

the learned combination weights for the

S projections (and

b a bias vector), the effective fused output for each node can be written as:

where is the n-th row of U (the features of node n after the wavelet filtering). In other words, the contributions of the S parallel linear transforms are weighted by and summed into a single output feature vector (plus bias). This design effectively allows the layer to adaptively emphasize a combination of multiple filter scales or feature subspaces. We then apply layer normalization (LayerNorm) to, followed by a non-linear activation (we use leaky ReLU with negative slope 0.2), and dropout (e.g. dropout probability p=0.25 in our experiments). Finally, we add a residual connection: the original input is added to the output (after a linear projection if needed to match dimensions) to preserve original information and counteract over-smoothing. The resulting is the output of one GWConv layer.

In our model, we stack two such GWConv layers to form a robust locality-aware spectral backbone. These two layers of multi-scale graph wavelet convolution supply rich local and mid-range features that are then fed into the subsequent attention module for global context integration.

2.3. Transformer-Based Graph Attention and Positional Encoding

To complement the fixed polynomial propagation of GWConv with data-dependent message passing, we adopt a Transformer-style self-attention block operating on the graph’s sparse neighborhoods. Let

be the node embeddings output by the GWConv stack (for simplicity, assume two GWConv layers have been applied and yielded features of dimension

F per node). We first apply a linear

projection to compute query, key, and value representations for each node, for each attention head. For a given attention head

h, we have learnable projection matrices

that map the

F-dimensional node features to

-dimensional queries, keys, and values respectively. (Typically,

if we use H heads and keep total dimensions constant.) We compute:

For each node i, is its query vector, is the key of node j, and is the value of node j. In a standard transformer, i would attend to all j in the sequence; here, we restrict attention to the graph neighborhood. That is, node i will only consider nodes j for which (including itself j=i due to the self-loop). For each neighbor pair (i,j), we compute a scaled dot-product attention score , where denotes dot product. We then apply a softmax normalization over i’s neighborhood (which includes i itself) to obtain the attention weight .

This yields a normalized attention weight for each edge i–j in the graph (for that head). The head’s output for node i is then a weighted sum of the neighbors’ value vectors..

After computing this for all heads, we concatenate the head outputs and project them with another weight matrix to get the final attention output for each node , where denotes concatenation. The above process constitutes a graph-based multi-head self-attention operation: each node attends to its neighbors (and itself), aggregating information with learned weights that depend on feature similarities (via dot products).

We adopt a pre-normalization Transformer encoder structure for stability, before applying the multi-head attention, we apply LayerNorm to the input. After computing the attention outputs, we add the original input (residual connection) and possibly apply dropout on the attention weights or outputs. Then we apply a second LayerNorm, followed by a position-wise two-layer feed-forward network (FFN) with a GELU activation and another residual connection. The FFN expands the feature dimension by a factor (e.g., 4×) and then compresses back to F (this expansion factor is a hyperparameter, we used 3–4×). The result is an updated node feature matrix that has integrated information from multi-hop neighbors (potentially beyond the fixed K of the GWConv, since stacking attention layers allows information to propagate further if needed) and that can adaptively weight which neighbors are most relevant for each node’s classification.

In practice, we stack L such graph Transformer blocks (with L=3 in our final model) to allow information to traverse multiple hops through successive attentions, learning which neighbors and which features are important for the classification task. This attention mechanism, in combination with the graph structure, enables the model to capture long-range context and complex interactions (e.g., between spectrally similar yet spatially distant regions, or between LiDAR-elevated structures and ground-level context) that static convolution alone might miss.

One challenge with graph-based processing is that absolute spatial location is not encoded (unlike CNNs on an image grid or ViTs with positional embeddings). Because our graph representation discards the original pixel coordinates (other than through the adjacency structure), we inject a positional encoding to retain some location information. We do this by mapping the normalized pixel coordinates through a small multi-layer perceptron (MLP) to obtain a d-dimensional position embedding for each node. Specifically, for each node with spatial coordinates (i,j) normalized as, we apply a two-layer MLP with a GELU activation to produce an embedding vector. We then add this positional embedding to the GWConv output features before feeding them into the first Transformer attention layer. This simple addition acts like a bias that can help the model learn location-dependent patterns. We found that it provides a mild benefit, particularly on scenes with strong location-dependent class priors (e.g., in the Houston 2013 scene, certain land-cover types are more likely in some regions of the image). In other scenes like Trento, which are more homogeneous or where classes are more uniformly distributed, the positional encoding is essentially neutral (does not harm performance).

2.4. Training Strategies and Loss Functions

We train the MS-GWCT model in a semi-supervised transductive manner, all labeled and unlabeled nodes participate in graph propagation (so test nodes can influence intermediate feature representations through their connections), but loss is computed only on labeled training nodes. This transductive setup is natural for graph-based learning and allows the model to utilize the graph structure of the entire dataset while still only using ground-truth labels from the training set.

To cope with scarce supervision and class imbalance, we employ a combination of specialized loss functions and regularization techniques. In particular, we use a focal loss to handle class imbalance and difficult examples, label smoothing to prevent overconfidence, Mixup augmentation to expand the training distribution, and a supervised contrastive loss to further shape the feature space. We also apply standard optimization techniques such as AdamW optimizer, learning rate scheduling with warmup, early stopping, and an Exponential Moving Average (EMA) of model weights. We describe each component below.

We use the focal loss to focus training on harder examples and address class imbalance. For a training node

i with true class

and predicted class probability

(the model’s predicted probability for the correct class), the focal loss is defined as:

where is the focusing parameter and is an optional weighting factor for class. Intuitively, the factor down-weights the loss contribution of samples that the model already classifies with high confidence, focusing more on those that the model finds difficult (where is low). The factor can be used to balance classes (assigning higher weight to minority classes). In our experiments with the fixed 50-samples-per-class training splits, the classes are balanced by design, so we set all (no class weighting). For scenarios with imbalance, could be tuned (we include it as a hyperparameter for SOA if needed). We found focusing parameter and a base (for all classes) to work well and we kept those fixed in final experiments (these were in fact discovered by the hyperparameter search).

To prevent the model from becoming over-confident in its predictions and to improve generalization, we apply label smoothing. With smoothing factor

, the one-hot training labels are smoothed as: the ground-truth class probability is set to

and the remaining probability

is distributed uniformly among all

C classes. For example, if

in a

C=15 class problem, the target probability for the correct class would be 0.97 and for each other class

. During training, we use the smoothed label in the cross-entropy loss. The smoothed cross-entropy for node

i is:

where

is the smoothed target probability for class

c (with

for the true class and

for

). In practice, we combine label smoothing with focal loss by applying the focal modulation on the smoothed target probabilities. Label smoothing (we used

) encourages the model to remain a bit uncertain and tends to improve generalization and calibration.

Mixup is a data augmentation technique originally proposed for vision tasks. We adapt it to our graph-based setting by mixing feature vectors and labels between random pairs of training nodes. In each training epoch, for a random subset of training samples we sample a partner and a mixing coefficient. Specifically, for two labeled training nodes

i and

j, we sample a mixing weight

(we used

). We then create a virtual training sample by linearly interpolating their features and labels:

Here and are one-hot encoded label vectors (of length C), so is a pseudo-label with fractional entries (which can be interpreted as a soft target distribution). We do not actually add new nodes to the graph; instead, we temporarily replace node i’s feature with and use as the target for node i in that forward pass (node j is not used in that pass). This is done for a random selection of pairs each epoch. Mixup essentially encourages the model to behave linearly between training examples, which provides a regularization against sharp decision boundaries. It has been shown to improve robustness and generalization, especially in limited-data settings. In our experiments, we found that using Mixup (with moderate around 0.4) consistently improved the model’s accuracy by a small margin and reduced overfitting.

In addition to the above, we include a supervised contrastive loss term to explicitly encourage the model to learn discriminative features for each class. We extract the feature vector before the final classifier (i.e., the output of the last attention layer or a projection thereof) for each training node, and

-normalize it to

. This will serve as an embedding for contrastive learning. For a given anchor node

i, let

be the set of other training nodes that share the same class label as

i (the positives for

i). Let

be the set of all other training nodes excluding

i itself. The supervised contrastive loss for anchor

i is defined as:

where denotes dot product (cosine similarity, since vectors are normalized) and T is a temperature hyperparameter. This loss pulls same-class node features together and pushes different-class features apart. With temperature T=0.1, it applies only to labeled training nodes. Adding this loss (with small weight) helps produce more distinct feature clusters, improving final classification, especially for difficult classes.

The total training loss for a batch is a weighted sum of the focal classification loss and the supervised contrastive loss. We define , where is a weight factor (we treated it as a hyperparameter). In our final models we typically use in the range 0.1–0.2, meaning the classification loss dominates while the contrastive loss provides a gentle regularization. This combination achieved the best validation performance in our ablation experiments (see Section 4.4).

We train the model using the AdamW optimizer (Adam with weight decay). The learning rate (LR) and weight decay are set via SOA tuning to typical values (LR, weight decay). Gradient clipping stabilizes training. The LR schedule starts with a 10% warm-up to the base LR, then follows a cosine decay to a small fraction, with a minimum floor. We train up to 400 epochs with early stopping based on validation. We use an EMA of model weights (decay 0.99-0.999), evaluated during testing for stability and accuracy. We also experimented with label propagation post-inference, which slightly improved output smoothness without affecting the main results; it’s optional.

2.5. Schrödinger Optimization Algorithm for Hyperparameter Tuning

The MS-GWCT model has many hyperparameters like Chebyshev polynomial order, GWConv scales, dropout, augmentation rates, attention heads, Transformer size, learning rate, and loss weights. Tuning these manually is hard and often ineffective, so we use SOA, an evolutionary strategy. Each individual is a hyperparameter set, and the algorithm updates the population based on fitness, which combines accuracy metrics. We start with random candidates, evaluate with short training, and pick top performers. New candidates are created by perturbing elites with Gaussian noise, categorical changes, and opposition moves. This continues over generations, gradually finding optimal hyperparameters as perturbation scales decrease over time.

We applied SOA to tune our model on each dataset separately. To keep the search feasible, we limited

P to 12 and

T to 15, evaluating around 180 configurations with truncated training. The search revealed clear optima for most hyperparameters. We then retrained a new model on the full training set using these hyperparameters.

Table 1 summarizes key choices for Houston 2013 and Trento. SOA showed Houston benefits from fewer scales S but larger model dimensions, likely due to greater class complexity. It also identified an optimal mid-range learning rate, weight decay, non-zero graph augmentation, and a small supervised contrastive weight. Notably, the automated search improved accuracy significantly over manual tuning. For example, on Houston 2013, a manually tuned configuration (based on our intuition and small grid searches) achieved about OA ≈ 88.19% ± 0.62, AA ≈ 90.07% ± 0.45, and Kappa ≈ 87.18% ± 0.67. In contrast, the SOA-selected configuration consistently exceeded OA = 92.60% ± 0.61, AA = 93.37% ± 0.39, Kappa = 91.97% ± 0.67 on Houston (as we will report in Section 4). This underscores the benefit of automated hyperparameter search for such a complex hybrid model. In our work, SOA provided an efficient mechanism to navigate the hyperparameter space of MS-GWCT, yielding well-tuned configurations that contributed to its state-of-the-art performance on the HSI-LiDAR classification benchmarks.

After obtaining these optimized hyperparameters, we retrained the MS-GWCT model from scratch on each dataset’s full training set (using all 50 samples per class, with no separate validation hold-out since we had already validated the configuration). The resulting models are what we use in the final evaluation.

5. Discussion and Limitations

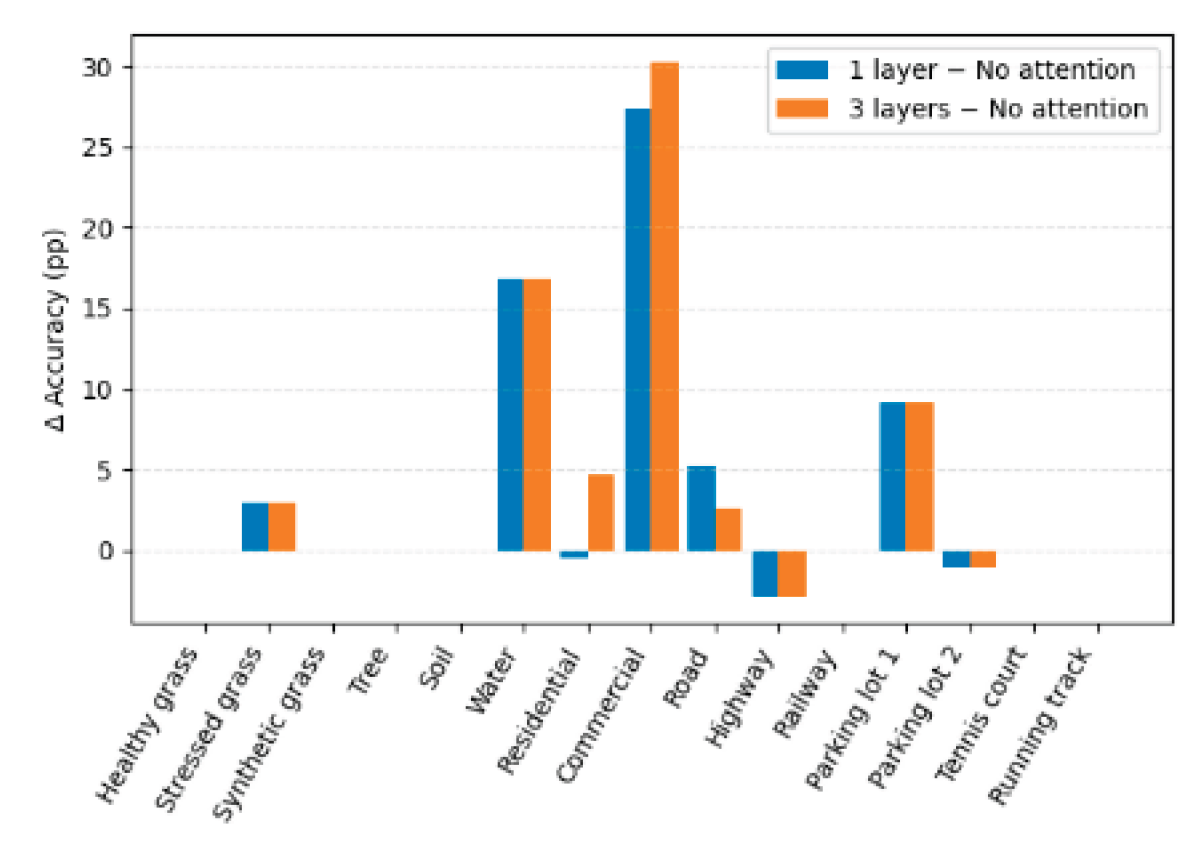

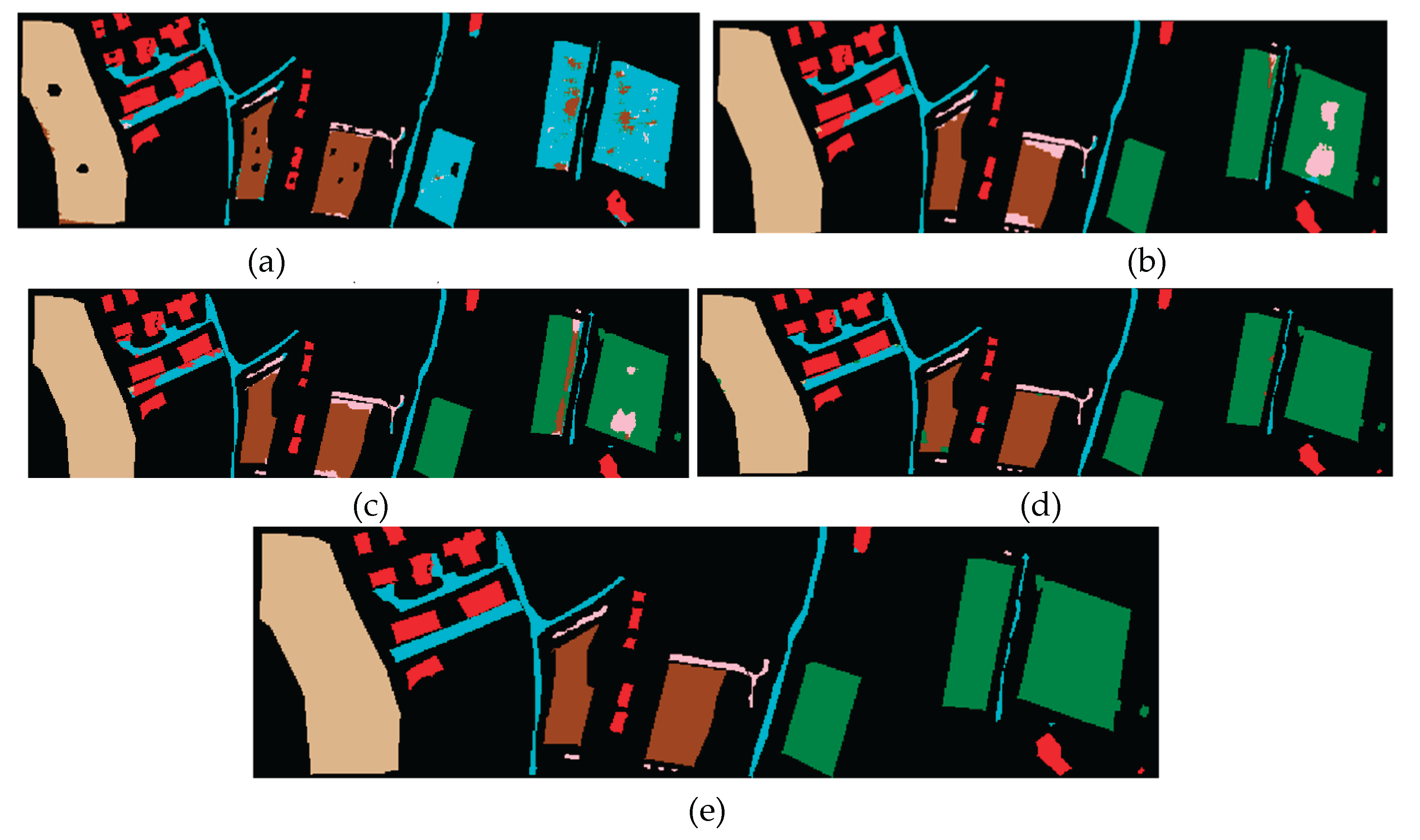

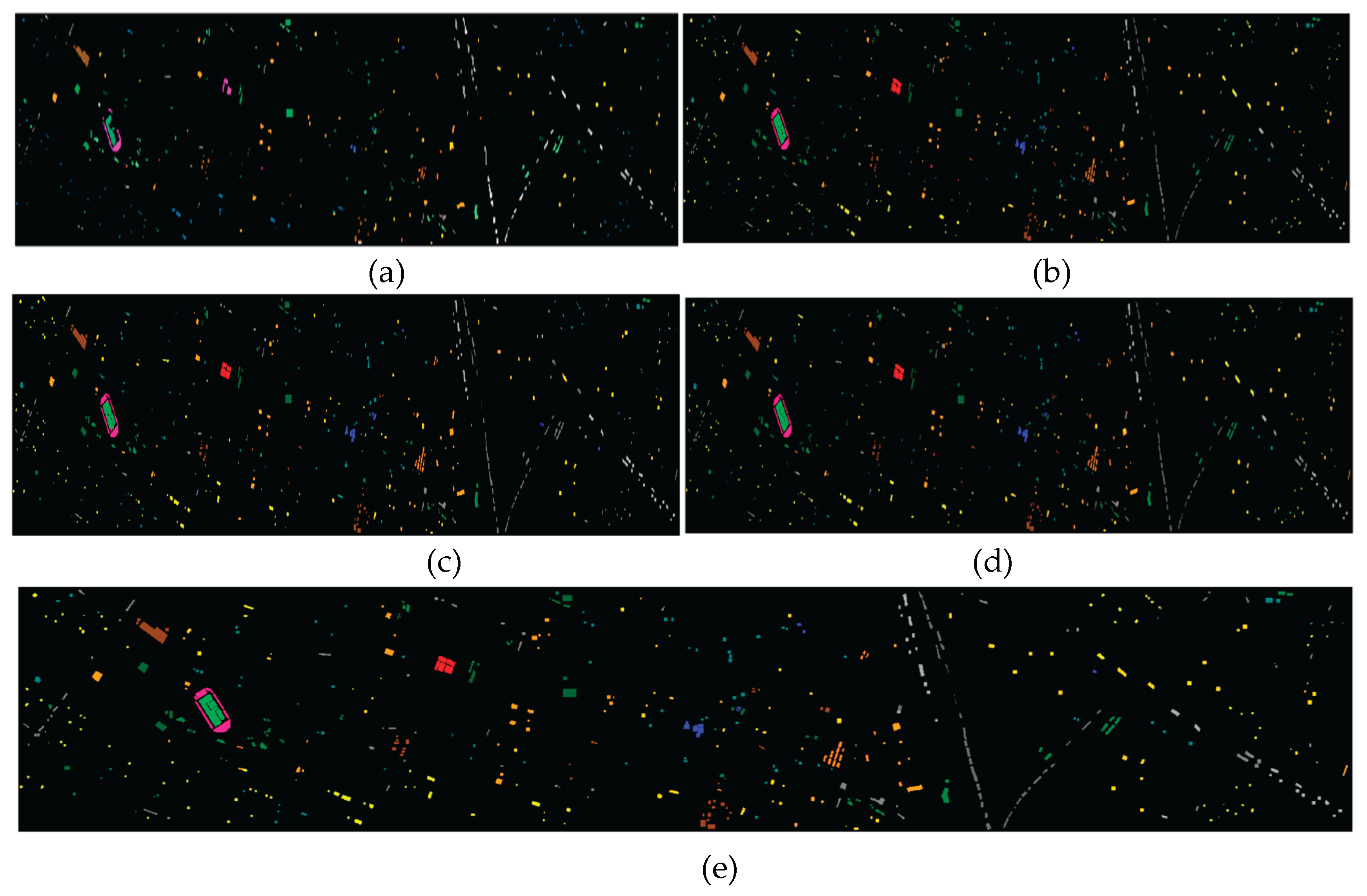

Our approach combines HSI and LiDAR features by concatenating data and creating a graph based on spectral and spatial proximity, ensuring LiDAR’s structural info is accessible. Graph wavelet convolution propagates this data locally, helping differentiate classes like buildings, roads, and parking lots with similar spectra but different heights. LiDAR reduces confusion among man-made surfaces, and the Houston confusion matrix shows fewer errors than using HSI alone. It offers an extra feature when spectral data is unclear. Most misclassifications occurred when LiDAR couldn't distinguish features, like flat-roofed buildings versus paved ground or shadows. Sometimes, the model confused rooftops with roads, but transformer attention helped by considering surrounding pixels. The multi-scale graph wavelet sharply defined class boundaries. In Trento, boundaries like apple orchards and vineyards, both vegetation but different classes, were often confused in patch-based CNNs due to mixed info at edges. Our MS-GWCT with band-pass filtering highlighted these differences. The graph better respects object boundaries than a fixed patch grid, as edges connect similar regions or neighboring dissimilar ones. Confusions mainly occurred at boundaries, like Road vs Soil or Apple vs Vineyard, where even humans are uncertain. The attention mechanism, including neighbors, smoothed some errors and reduced isolated misclassifications. We found three attention layers optimal, allowing info to travel up to 3 hops, connecting a pixel with about 9 others, helping correct small errors. More layers risk over-smoothing, so fewer layers suffice. The modular, data-efficient MS-GWCT model allows swapping graph or attention parts and extending modules independently. Even with only 50 samples per class, it outperforms methods with more data due to strong inductive biases: graph convolution assumes smooth labeling, and attention focuses on relevant features, reducing noise. Strategies like mixup and contrastive learning further improve data efficiency. Remaining errors often stem from similarity, misregistration, or boundary confusion, especially at edges like Apple vs Vineyard. Simpler models like an MLP or SVM might reach high accuracy with enough features but usually underperform by ignoring spatial context. Our spectral classifier on Houston data achieves 75-80% OA, improved by spatial context and multi-scale filtering. MS-GWCT excels but has limitations; it’s transductive, relying on a single large scene graph, is not suitable for new data without retraining, and assumes good registration.

6. Conclusion

We present MS-GWCT, a multimodal HSI–LiDAR classifier that couples multi-scale spectral graph wavelet filtering, for localized, parameter-efficient feature extraction, with graph-based Transformer attention for adaptive, long-range message passing. We combine focal loss with label smoothing, supervised contrastive regularization, mixup, and stochastic graph augmentation with Schrödinger Optimization for hyperparameter search, allowing MS-GWCT to perform strongly when labels are limited. In Trento and Houston 2013, the proposed MS-GWCT achieves an impressive 98.86% and 92.60% overall accuracy, respectively, using only 50 labeled pixels per class, surpassing competitive baselines. Ablations confirm the indispensability of both the multi-scale wavelet filters and the attention blocks. LiDAR cues play a crucial role in resolving class ambiguities, and the training strategies significantly enhance robustness to label scarcity and class imbalance. Our graph, constructed over labeled pixels, simplifies training under few-label regimes. However, this approach may under-utilize unlabeled data. While attention expands the receptive field, its computational cost increases with graph size. Scaling to large scenes will likely require more efficient sparse operations or indexing, to which MS-GWCT is naturally amenable.

Future research directions. Large-scale pretraining, Self-supervised or cross-modal pretraining on unlabeled HIS-LiDAR corpora to improve generalization across sensors, seasons, and sites. Scalable inference,Sparse/dilated attention, graph sampling, and model compression (quantization/pruning) for city-scale mapping and edge deployment. Uncertainty & active learning. Future work will prioritize unsupervised domain adaptation, open-set recognition, and sensor-mismatch handling to improve transfer across sensors, seasons, and regions, and will broaden the problem from pixel-wise classification to change detection, 3D semantic segmentation, and joint elevation–spectral fusion with SAR/thermal modalities.