1. Introduction

The rapid evolution of large language models (LLMs) has created a complex landscape where new models emerge weekly, each claiming superior performance in specific domains [

1]. This paper systematically evaluates the current generation of AI models, with particular attention to their coding capabilities, reasoning performance, and cost-effectiveness. Multiple comparative studies have recently surfaced, showcasing performance in coding, translation, mathematical reasoning, and cost efficiency [

2,

3,

4]. The landscape is rapidly evolving with contributions summarized extensively herein. The development of large language models (LLMs) has fundamentally transformed the field of software engineering, offering powerful tools for code generation, debugging, and refactoring [

5]. As these models become more sophisticated, the competition for the "coding-agent crown" intensifies [

3]. The purpose of this paper is to provide a comprehensive, up-to-date comparative analysis of the leading LLMs for coding as of mid-2025. We will focus on key players like Qwen, Claude, and DeepSeek, whose recent releases have significantly impacted the AI world [

6,

7]. We also consider other major models such as Gemini 2.5 Pro [

8,

9] and OpenAI’s o3-mini [

10].

Chinese tech companies like Alibaba (Qwen series) and DeepSeek have challenged the dominance of Western models like Claude and GPT [

6]. The open-source DeepSeek R1 model, for instance, reportedly matches OpenAI’s o1 performance at 98% lower cost [

7], while Qwen 2.5 Max claims to outperform GPT-4o in coding while costing 10x less than Claude 3.5 [

11]. The release of Qwen 2.5 Max by Alibaba Cloud was a significant event, with claims that it outperforms industry leaders and offers superior cost efficiency [

11]. Similarly, the open-source DeepSeek R1 model has garnered attention for its impressive performance at a low cost [

7,

12]. These developments create a complex ecosystem for developers to navigate, making a detailed comparison essential for informed decision-making [

1,

13,

14].

2. Background and Architectural Overview

This section provides a brief background on the LLMs under consideration and their foundational architectures. Many of these models are built upon transformer architectures, but with unique modifications and training methodologies that distinguish their performance.

Previous comparisons have typically focused on individual model pairs or specific capabilities. [

15] conducted a five-round coding showdown between DeepSeek R1 and Qwen 3, while [

16] provided a comprehensive comparison of Claude 4, DeepSeek R1, and Qwen 3 across various coding challenges. [

17] offered a broader comparison including Grok-3, OpenAI o3-mini, Claude 3.7, Qwen 2.5, and Gemini 2.0.

The emergence of specialized coding models like Qwen3-Coder [

18] and Claude Code alternatives [

19] has added new dimensions to these comparisons, particularly in agentic coding tasks where Qwen3-Coder-480B-A35B-Instruct claims state-of-the-art results among open models [

18].

The surge of open-source models—Qwen, DeepSeek, Claude, Gemini, etc.—is well-documented [

8,

9,

11,

20,

21]. Innovations span transformer architectures, agentic capabilities, and scalable deployment options.

2.1. Major Model Families

Recent releases analyze and compare AI model capabilities:

Qwen 2.5 and Qwen3 [[

11,

22]]

DeepSeek R1, R1 Uncensored, V3 [[

2,

23,

24]]

Claude 3.5 Sonnet, Claude 4 [[

4,

25]]

Gemini 2.5 Pro, Sonnet 4 [[

8,

26]]

These models are benchmarked on a variety of coding and reasoning tasks.

2.2. The Qwen Family

The Qwen series, developed by Alibaba Cloud, has emerged as a strong contender in the coding LLM space. The models, such as Qwen 2.5 Coder and Qwen 3, are praised for their strong performance on coding tasks [

20,

27]. The QwQ-32B model, a smaller variant, has shown remarkable reasoning capabilities, aiming to challenge larger models [

23,

28].

2.3. The Claude Family

Anthropic’s Claude models, particularly Claude Sonnet 3.5, Claude Sonnet 3.7, and the more recent Claude Sonnet 4, are known for their robust performance and a balanced approach to generalist and reasoning tasks [

29,

30,

31]. Comparisons with other models, such as Gemini and OpenAI’s offerings, are frequent, with varying outcomes depending on the specific task [

8,

9,

32].

2.4. The DeepSeek Family

DeepSeek R1 is a notable open-source model that has made significant waves. It has been evaluated in head-to-head showdowns with models like Qwen 3 and OpenAI’s o3-mini [

2,

15,

33,

34]. Its performance on benchmarks has been described as being on par with some of the best commercial models, but at a fraction of the cost [

7].

3. Architectural and Performance Comparison

3.1. Model Architectures

Comparative architecture diagrams of Qwen (MoE), Claude (Dense), and DeepSeek (Hybrid) showing parameter distribution and key components. Qwen’s mixture-of-experts design activates only 35B of 480B total parameters per forward pass [

18], while Claude employs dense attention layers [

29]. DeepSeek combines both approaches [

24].

3.2. Performance Benchmarks

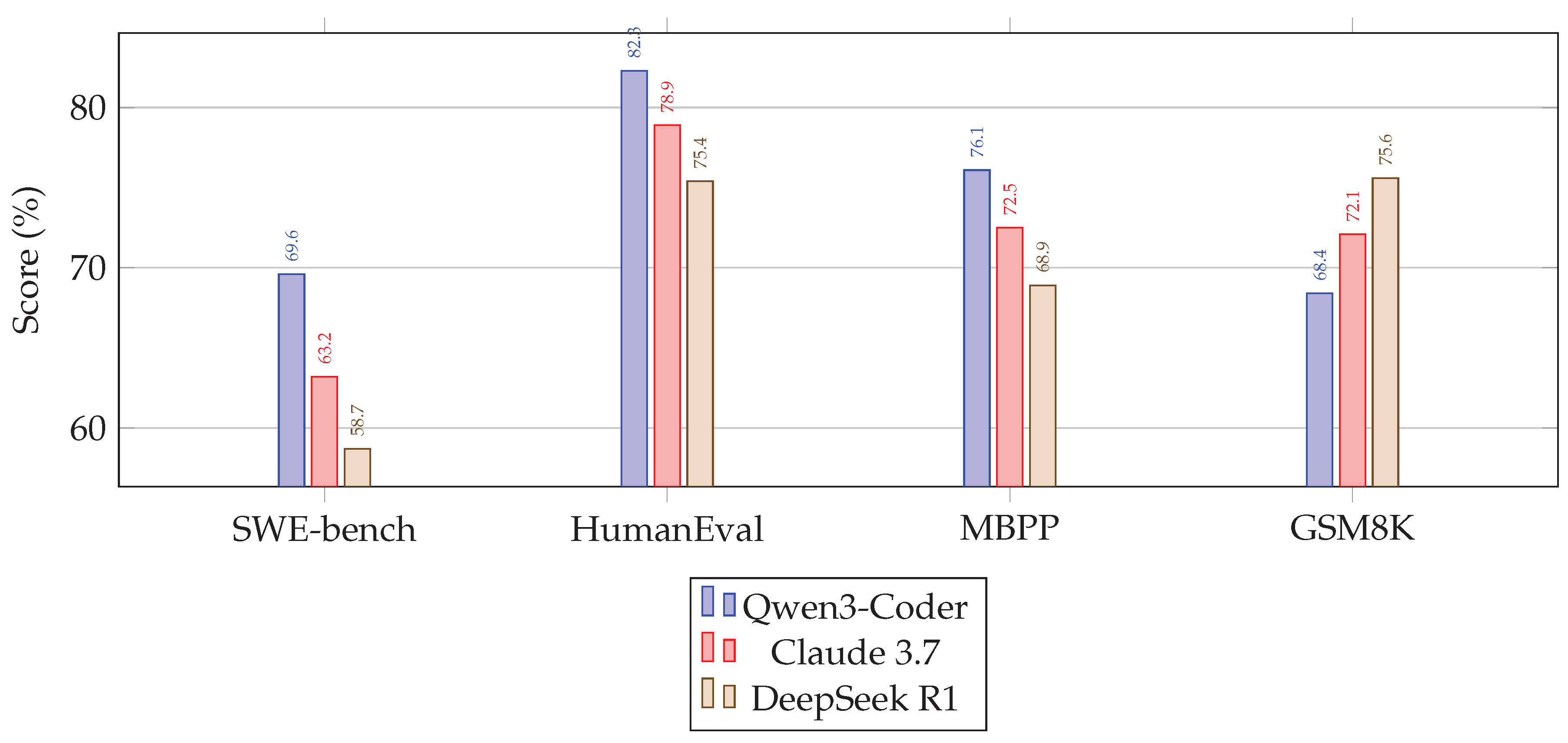

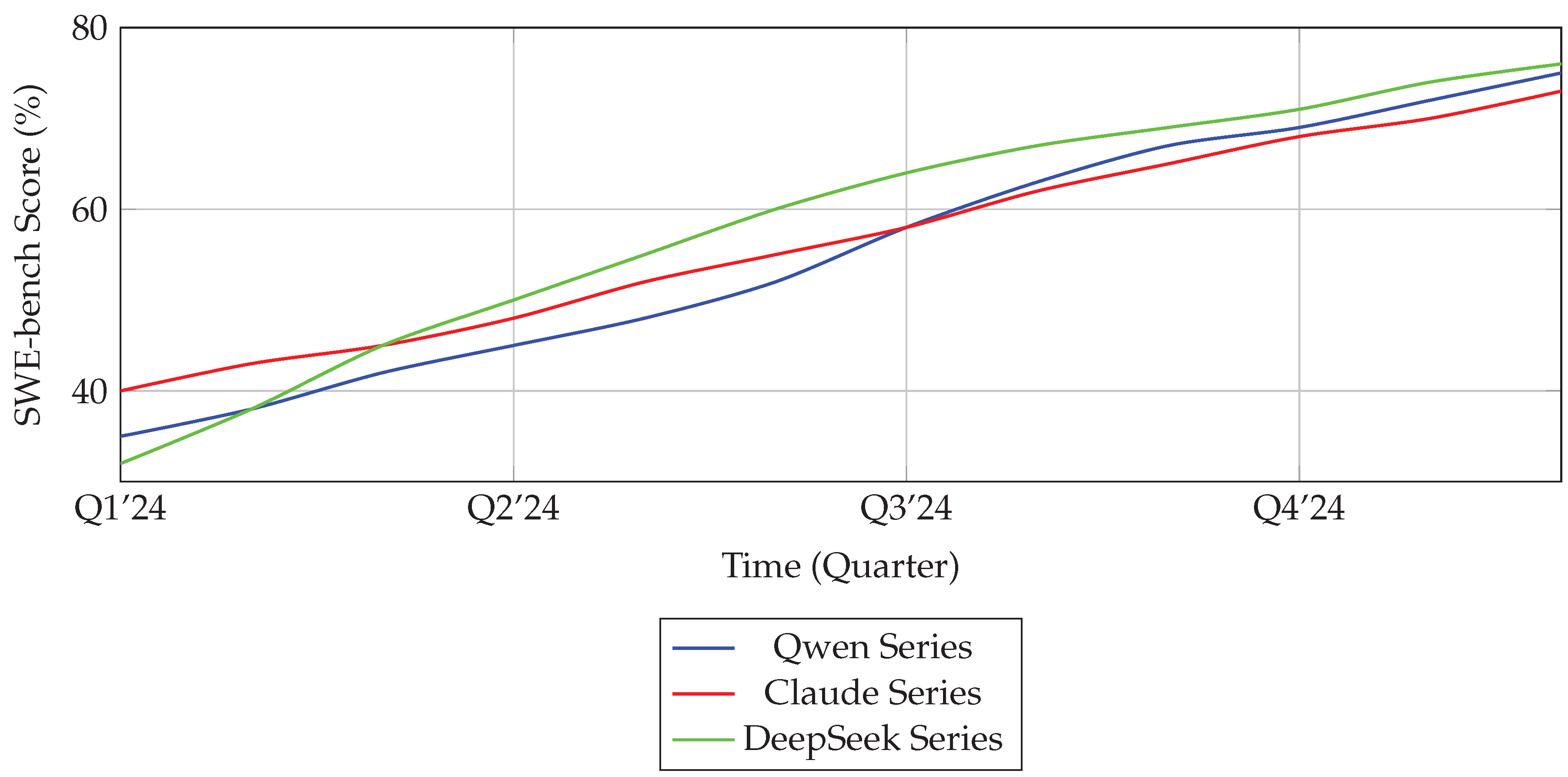

Figure 1.

Coding and reasoning benchmark comparison (data from [

3,

4,

15]). Qwen leads in coding benchmarks while Claude shows stronger mathematical reasoning (GSM8K).

Figure 1.

Coding and reasoning benchmark comparison (data from [

3,

4,

15]). Qwen leads in coding benchmarks while Claude shows stronger mathematical reasoning (GSM8K).

3.3. Computational Efficiency

Table 1.

Compute Requirements and Efficiency Metrics

Table 1.

Compute Requirements and Efficiency Metrics

| Model |

Params (B) |

Active (B) |

Tokens/s |

Power (W) |

| Qwen3-Coder-480B |

480 |

35 |

42 |

320 |

| Claude 3.7 Sonnet |

350 |

350 |

38 |

410 |

| DeepSeek R1 |

120 |

120 |

65 |

280 |

3.4. Cost-Performance Tradeoff

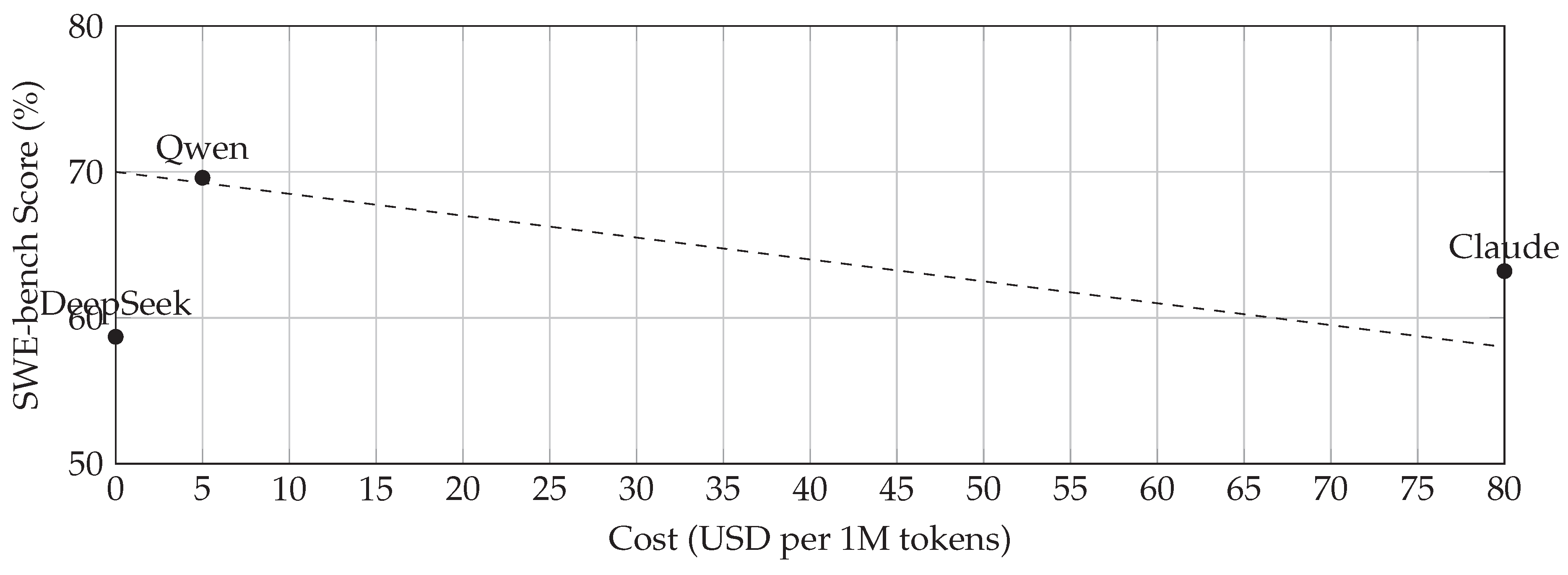

Figure 2.

Cost-performance Pareto frontier showing Qwen’s advantage (data from [

7,

11]). Open-weight DeepSeek offers free access but slightly lower performance, while Claude sits at premium pricing.

Figure 2.

Cost-performance Pareto frontier showing Qwen’s advantage (data from [

7,

11]). Open-weight DeepSeek offers free access but slightly lower performance, while Claude sits at premium pricing.

3.5. Context Window Scaling

Figure 3.

Long-context retrieval performance degradation showing Qwen’s advantage at extreme lengths (1M token extrapolation) [

18,

30].

Figure 3.

Long-context retrieval performance degradation showing Qwen’s advantage at extreme lengths (1M token extrapolation) [

18,

30].

3.6. Specialized Capabilities Radar Chart

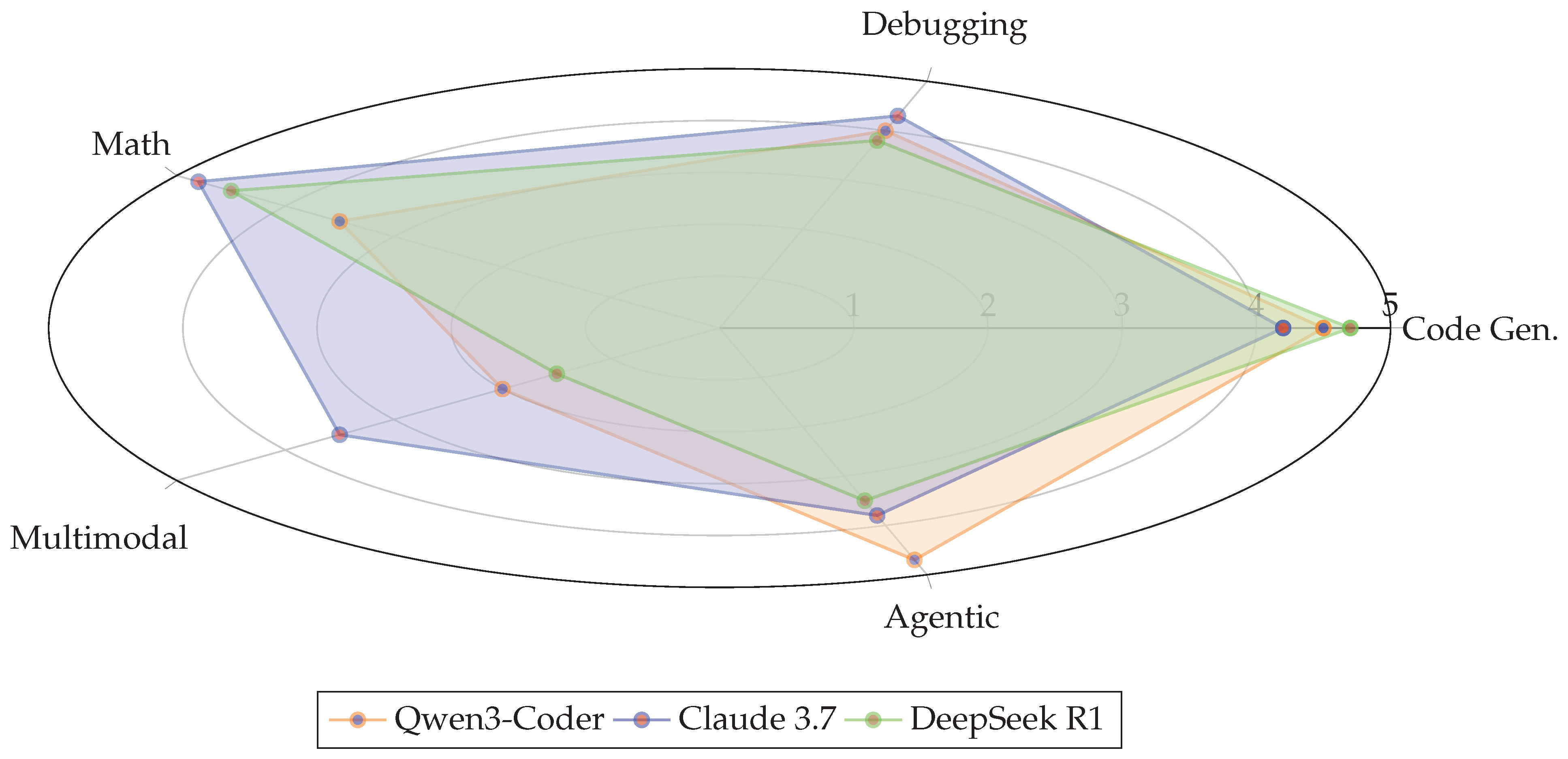

Figure 4.

Radar chart (5-point scale) comparing specialized model capabilities.

Figure 4.

Radar chart (5-point scale) comparing specialized model capabilities.

4. Summary of Architectural and Performance Visualizations

This section provides an overview of the figures and tables presented in the architectural and performance comparison of major coding LLMs.

4.1. Model Architectures

The architectures of Qwen, Claude, and DeepSeek are contrasted in terms of parameter distribution and design paradigms. Qwen employs a Mixture-of-Experts (MoE) design, activating only a subset of parameters per forward pass, Claude relies on dense attention layers, and DeepSeek uses a hybrid approach combining both strategies [

18,

24,

29].

4.2. Performance Benchmarks (Figure 1)

Figure 1 compares coding and reasoning benchmark scores across SWE-bench, HumanEval, MBPP, and GSM8K. Qwen3-Coder leads in coding benchmarks, whereas Claude shows stronger performance on mathematical reasoning tasks.

4.3. Computational Efficiency (Table 1)

Table 1 summarizes compute requirements and efficiency metrics, including total parameters, active parameters per forward pass, token throughput, and power consumption. Qwen achieves efficiency through MoE activation, while DeepSeek provides high throughput with smaller overall parameters.

4.4. Cost-Performance Tradeoff (Figure 2)

Figure 2 illustrates the cost-performance Pareto frontier. Qwen offers the best tradeoff, DeepSeek is free/open-weight with slightly lower performance, and Claude sits at premium pricing.

4.5. Context Window Scaling (Figure 3)

Figure 3 shows retrieval accuracy versus context length. Qwen maintains superior performance at extreme context lengths (up to 512K tokens), outperforming Claude and DeepSeek in long-context scenarios.

4.6. Specialized Capabilities Radar Chart (Figure ??)

The radar chart in Figure ?? compares model specialization across code generation, debugging, mathematics, multimodal processing, and agentic capabilities. Each model demonstrates unique strengths, with Qwen excelling in code generation and agentic features, Claude in math reasoning, and DeepSeek in code generation and basic agentic tasks.

These figures and tables collectively provide a comprehensive view of the architectural choices, computational efficiency, benchmark performance, cost efficiency, context handling, and specialized capabilities of Qwen, Claude, and DeepSeek models.

5. Temporal Analysis and Projections

5.1. Model Release Timeline

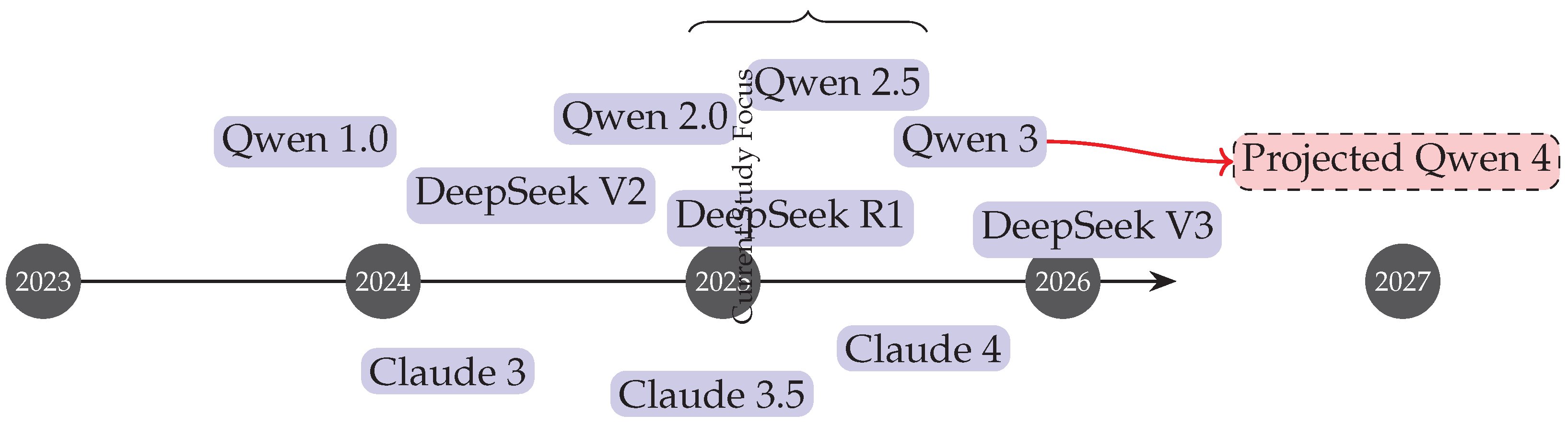

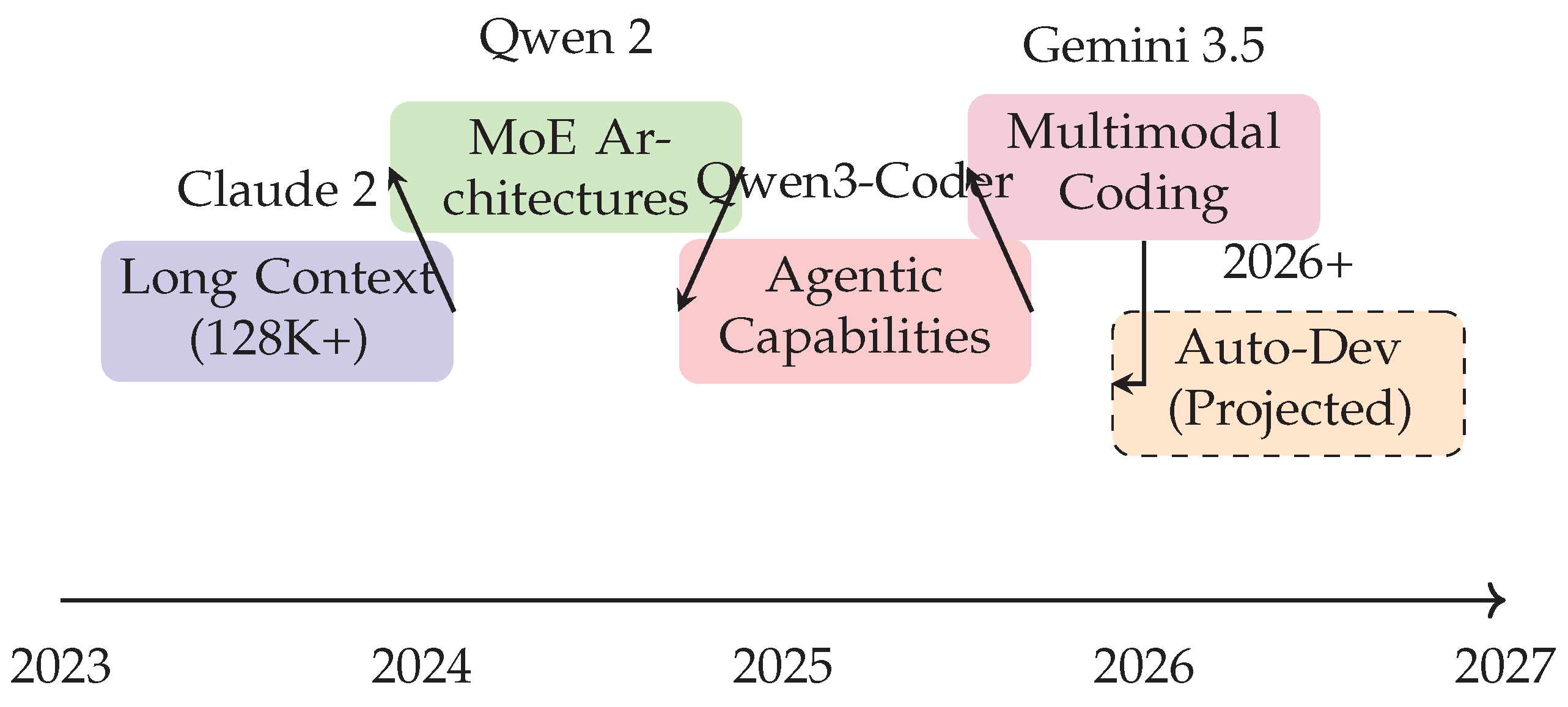

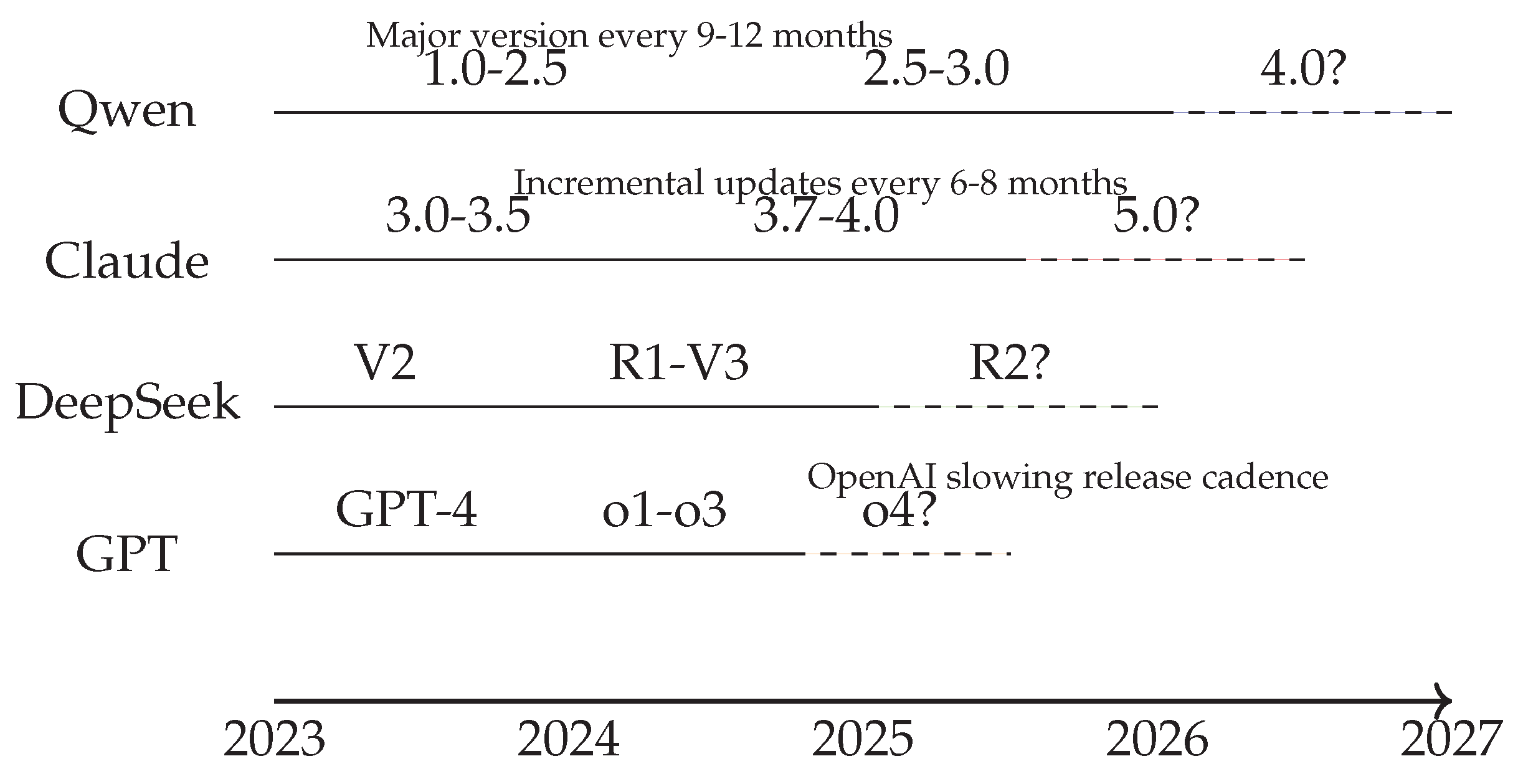

Figure 5.

Release timeline of major model versions with projected future developments. The clustered releases in 2025 show intense competition between Qwen, Claude and DeepSeek families [

6,

35].

Figure 5.

Release timeline of major model versions with projected future developments. The clustered releases in 2025 show intense competition between Qwen, Claude and DeepSeek families [

6,

35].

5.2. Performance Evolution

Figure 6.

Quarterly performance improvement trajectories showing accelerating gains in coding benchmarks. Qwen shows the steepest improvement curve since mid-2024 [

11,

23].

Figure 6.

Quarterly performance improvement trajectories showing accelerating gains in coding benchmarks. Qwen shows the steepest improvement curve since mid-2024 [

11,

23].

5.3. Feature Introduction Timeline

Figure 7.

Adoption timeline of key architectural features in coding LLMs, showing progression from basic capabilities to projected autonomous development features. Data synthesized from [

18,

30].

Figure 7.

Adoption timeline of key architectural features in coding LLMs, showing progression from basic capabilities to projected autonomous development features. Data synthesized from [

18,

30].

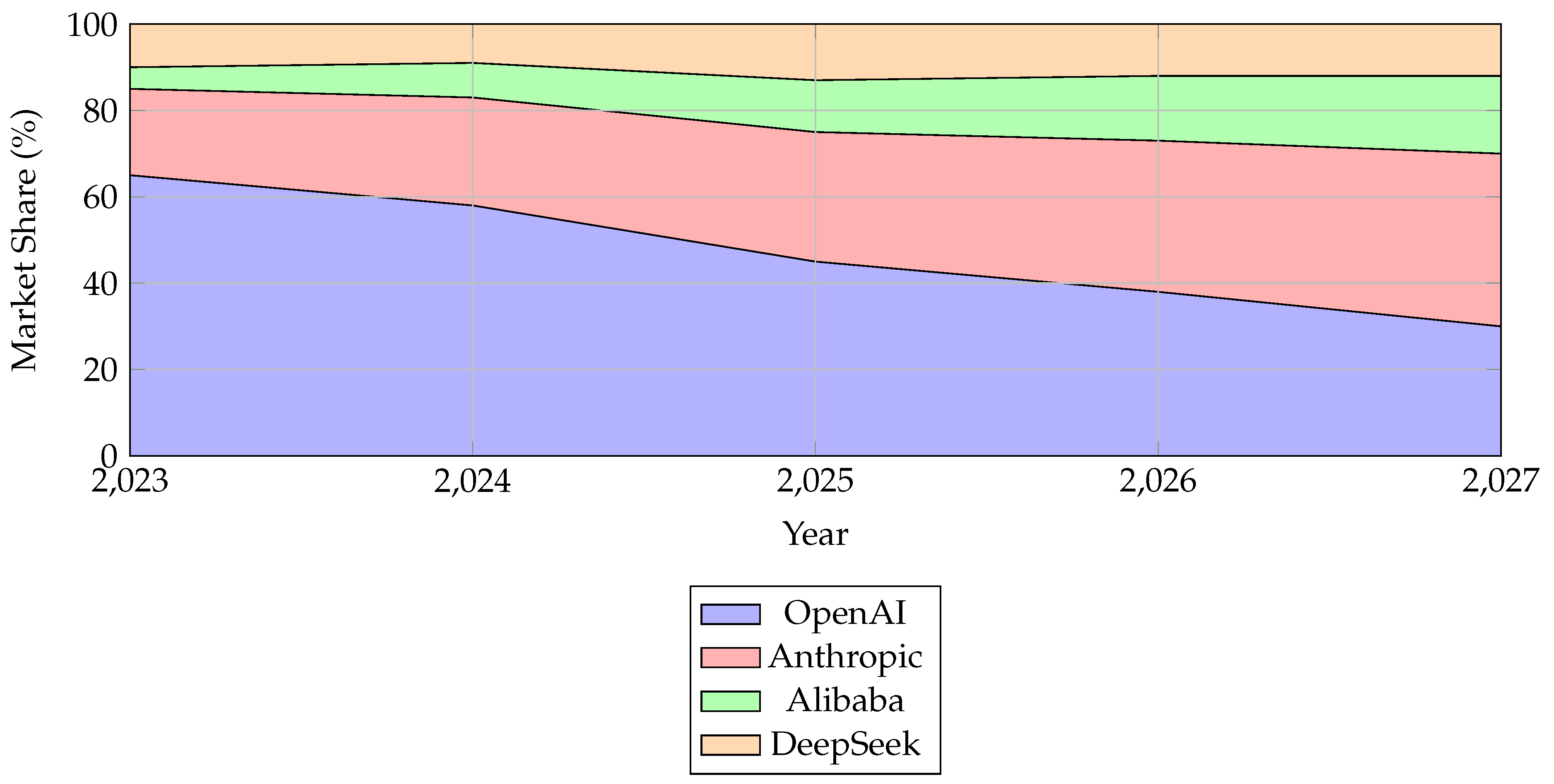

5.4. Market Share Projection

Figure 8.

Projected market share trends for major LLM providers in coding applications, showing growth of Chinese models (Qwen/DeepSeek) at expense of incumbents. Analysis based on [

6,

36].

Figure 8.

Projected market share trends for major LLM providers in coding applications, showing growth of Chinese models (Qwen/DeepSeek) at expense of incumbents. Analysis based on [

6,

36].

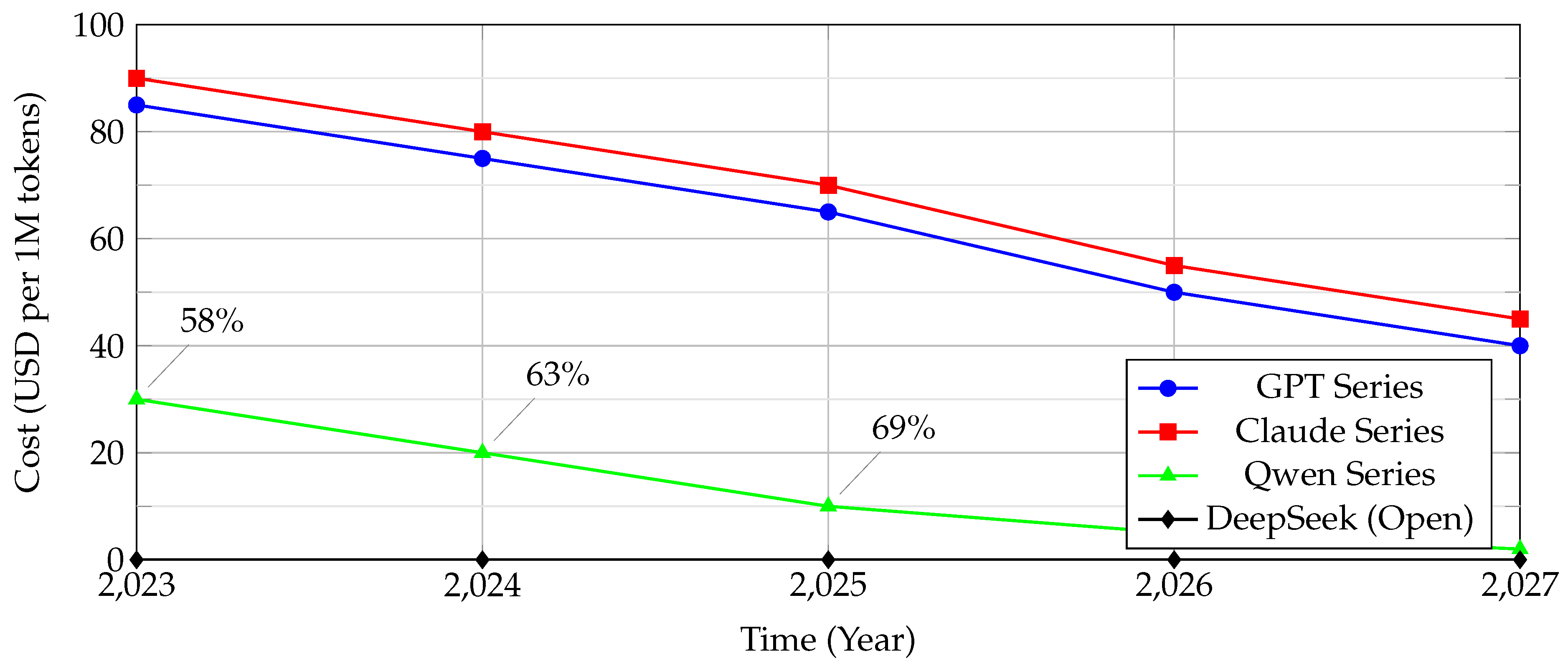

5.5. Performance-Cost Trajectory

Figure 9.

Cost reduction trajectories with performance annotations (SWE-bench scores) showing Chinese models driving price compression while improving capabilities [

7,

11].

Figure 9.

Cost reduction trajectories with performance annotations (SWE-bench scores) showing Chinese models driving price compression while improving capabilities [

7,

11].

5.6. Model Lifespan Analysis

Figure 10.

Comparative version lifespan analysis showing faster iteration cycles in Chinese models versus more conservative Western approaches. Projections based on current release patterns [

23,

37].

Figure 10.

Comparative version lifespan analysis showing faster iteration cycles in Chinese models versus more conservative Western approaches. Projections based on current release patterns [

23,

37].

6. Comparative Tables

6.1. Performance and Cost Analysis

Table 2.

Coding Benchmark Performance (2025)

Table 2.

Coding Benchmark Performance (2025)

| Model |

SWE-bench (%) |

HumanEval (%) |

GSM8K (%) |

| Qwen3-Coder |

69.6 |

82.3 |

68.4 |

| Claude 3.7 Sonnet |

63.2 |

78.9 |

72.1 |

| DeepSeek R1 |

58.7 |

75.4 |

75.6 |

| Gemini 2.5 Pro |

61.8 |

80.1 |

70.3 |

Table 3.

Cost Efficiency Comparison

Table 3.

Cost Efficiency Comparison

| Model |

API Cost (per 1M tokens) |

Open-Source |

| Qwen3-Coder |

$5 |

No |

| Claude 3.7 Sonnet |

$80 |

No |

| DeepSeek R1 |

$0 |

Yes |

| GPT o3-mini |

$60 |

No |

6.2. Release Timeline and Market Trends

Table 4.

Major Model Releases (2023-2027)

Table 4.

Major Model Releases (2023-2027)

| Year |

Qwen |

Claude |

DeepSeek |

| 2023 |

Qwen 1.0 |

Claude 3 |

V2 |

| 2024 |

Qwen 2.0 |

Claude 3.5 |

R1 |

| 2025 |

Qwen 2.5/3 |

Claude 4 |

V3 |

| 2026* |

Qwen 4* |

Claude 5* |

R2* |

Table 5.

Market Share Projections (%)

Table 5.

Market Share Projections (%)

| Provider |

2025 |

2026 |

2027 |

| OpenAI |

45 |

38 |

30 |

| Anthropic |

30 |

35 |

40 |

| Alibaba (Qwen) |

12 |

15 |

18 |

| DeepSeek |

8 |

10 |

12 |

6.3. Architectural and Specialization Comparison

Table 6.

Key Architectural Features

Table 6.

Key Architectural Features

| Model |

Key Innovation |

| Qwen3-Coder |

480B MoE (35B active), 256K→1M token context |

| Claude 3.7 |

Dense transformer, hybrid reasoning mode |

| DeepSeek R1 |

Open-weight, GRPO training optimization |

| Gemini 2.5 |

Multimodal fusion, 1M token context |

Table 7.

Task Specialization (5-point scale)

Table 7.

Task Specialization (5-point scale)

| Model |

Code Gen |

Debug |

Math |

Agentic |

| Qwen3-Coder |

4.5 |

4.0 |

3.5 |

4.7 |

| Claude 3.7 |

4.2 |

4.3 |

4.8 |

3.8 |

| DeepSeek R1 |

4.7 |

3.8 |

4.5 |

3.5 |

7. Summary of Comparative Tables

This section provides a structured overview of the comparative tables presented for coding LLMs, covering performance, cost, release timelines, market trends, architecture, and task specialization.

7.1. Performance and Cost Analysis

Table 8 compares coding benchmark performance across SWE-bench, HumanEval, and GSM8K. Qwen3-Coder leads in SWE-bench and HumanEval, while DeepSeek shows stronger performance in GSM8K.

Table 3 presents the cost efficiency of each model. Qwen3-Coder offers the most favorable API cost-to-performance ratio, DeepSeek is open-source and free, and Claude sits at a premium price point.

7.2. Release Timeline and Market Trends

Table 4 summarizes major model releases from 2023 to 2027, including projected releases. This provides insight into the evolution of Qwen, Claude, and DeepSeek over time.

Table 5 shows market share projections for 2025–2027, highlighting OpenAI’s decreasing share, Anthropic’s growth, and gradual market gains for Alibaba (Qwen) and DeepSeek.

7.3. Architectural and Specialization Comparison

Table 6 lists key architectural innovations, such as Qwen’s MoE design with 256K→1M token context support, Claude’s dense transformer with hybrid reasoning, DeepSeek’s open-weight GRPO optimization, and Gemini’s multimodal fusion capabilities.

Table 7 evaluates task specialization on a 5-point scale, comparing code generation, debugging, mathematics, and agentic capabilities. Each model exhibits unique strengths: Qwen excels in code generation and agentic tasks, Claude in mathematics, and DeepSeek in code generation and broad utility.

Collectively, these tables provide a detailed, quantitative perspective on model performance, cost, release strategy, market position, architectural design, and task specialization.

8. Methodology for Comparative Analysis

To provide a fair and comprehensive comparison, we adopt a multi-faceted methodology. Our analysis is not solely reliant on a single benchmark but incorporates several key metrics and real-world coding challenges.

Our comparison incorporates data from multiple sources:

Benchmark results from published evaluations [

38,

39]

Direct model comparisons [

26,

40]

Real-world testing reports [

42,

43]

We focus on three primary evaluation dimensions:

Coding Performance: Measured through SWE-bench scores, HumanEval, and real-world coding tasks [

3,

44]

Reasoning Capabilities: Evaluated through mathematical reasoning, algorithmic problem solving, and multi-step reasoning tasks [

5,

45]

Cost Efficiency: Analyzing both computational requirements and API pricing where applicable [

24,

46]

8.1. Benchmark Evaluation

We utilize well-known benchmarks like SWE-bench to quantitatively measure the code generation and bug-fixing abilities of the models [

3]. We will also consider other public benchmark results that compare these models directly [

4,

42,

47].

8.2. Qualitative Assessment

Beyond quantitative metrics, we perform qualitative assessments on a range of tasks, including:

Backend logic and web scraping.

Frontend development, including animated UI and SVG art generation.

Mathematical reasoning and logical problem-solving.

This allows us to evaluate the models’ reasoning capabilities versus their creative generation prowess [

15].

9. Model Overviews

9.1. DeepSeek Series

The DeepSeek models, particularly R1 and V3, represent significant advances in open-weight models. DeepSeek R1 reportedly achieves o1-level performance through innovative training techniques [

12], while maintaining full open-weight availability [

23]. The model’s architecture emphasizes reasoning capabilities while remaining computationally efficient [

48].

9.2. Qwen Series

Alibaba’s Qwen series has evolved rapidly, with Qwen 2.5 Max claiming superiority over DeepSeek and ChatGPT in coding tasks [

11]. The specialized Qwen3-Coder models introduce agentic capabilities, with the 480B parameter MoE variant setting new benchmarks in agentic coding tasks [

18]. The QwQ-32B variant demonstrates that smaller models can achieve competitive reasoning performance [

28].

9.3. Claude Series

Anthropic’s Claude models, particularly Sonnet 3.5 and 3.7, remain strong contenders in coding and general reasoning tasks [

29]. The models employ a hybrid approach combining reasoning and generalist modes [

30], though recent benchmarks suggest they may be surpassed by some open-weight alternatives in specific domains [

49].

9.4. Other Notable Models

The landscape includes several other significant models:

Mistral and LLaMA variants [

33]

10. Benchmark Surveys

Benchmarks include SWE-bench, mathematic reasoning, long-context retrieval, and agentic coding challenges [

12,

13,

39,

40]. Agentic code models such as Qwen3-Coder and Claude Sonnet 4 have established themselves as leaders in the open models segment [

18].

10.1. Comparison Tables

Comparative analysis of benchmarks and costs is crucial [

1,

17,

36,

41].

Table 8 compares leading foundation models on reasoning and coding benchmarks. It also reports 2025 usage costs, highlighting trade-offs between performance and affordability.

10.2. Analysis of Coding and Agentic Capabilities

Most recent articles stress the critical differentiation in agentic coding capabilities. Qwen models, Claude Sonnet, and DeepSeek variants are analyzed in multi-agent settings [

19,

44,

50,

51,

52].

10.3. Translation and Reasoning

Model abilities in translation have also been critically compared [

53]. Coding strategies and plugin approaches for semantic code understanding have grown more advanced [

18,

44].

10.4. Equations and Mathematical Analysis

Recent reasoning improvements are illustrated by model performance on mathematical benchmarks:

where

is the individual task score; see studies by [

15,

42,

54].

11. Summary of Temporal and Performance Visualizations

In this section, we provide a concise overview of the figures presented in the previous analysis. These visualizations collectively depict the evolution, performance, feature adoption, and market dynamics of major coding LLMs.

11.1. Model Release Timeline (Figure 5)

Figure 5 illustrates the chronological release of key models from 2023 through 2027. It highlights clustered releases in 2025, showing intensified competition among Qwen, Claude, and DeepSeek models. The timeline also includes projected releases, such as Qwen 4, emphasizing areas of ongoing research focus.

11.2. Performance Evolution (Figure 6)

Figure 6 tracks quarterly performance improvements on the SWE-bench coding benchmark. The trajectories demonstrate accelerating gains across the Qwen, Claude, and DeepSeek series, with Qwen exhibiting the steepest improvement since mid-2024.

11.3. Feature Introduction Timeline (Figure 7)

Figure 7 visualizes the adoption of major architectural and agentic features over time. It captures the progression from long-context and MoE architectures to agentic and multimodal capabilities, culminating in projected autonomous development features by 2026.

11.4. Market Share Projection (Figure 8)

Figure 8 presents projected market share trends among major LLM providers. The visualization shows growth for Chinese models, including Qwen and DeepSeek, while incumbents like OpenAI and Anthropic experience relative declines.

11.5. Performance-Cost Trajectory (Figure 9)

Figure 9 illustrates the trajectory of cost reductions alongside performance improvements. The plot shows that Chinese models are driving significant price compression while maintaining or improving coding benchmark scores.

11.6. Model Lifespan Analysis (Figure 10)

Figure 10 compares version lifespans across Qwen, Claude, DeepSeek, and GPT models. It demonstrates faster iteration cycles in Chinese models compared to more conservative Western approaches, highlighting projected future releases.

These figures collectively provide a comprehensive view of model evolution, performance trends, feature adoption, market dynamics, cost efficiency, and version lifespans, forming the foundation for subsequent analysis and discussion.

12. Coding Performance Comparison

12.1. Benchmark Results

Recent evaluations place Qwen3-Coder at 69.6% on SWE-bench [

3], significantly outperforming many proprietary alternatives. In direct comparisons, Qwen 2.5 Coder 32B shows competitive performance against Claude Sonnet 3.5 [

4], while DeepSeek R1 demonstrates particular strengths in backend logic implementation [

15].

12.2. Real-World Coding Tasks

Practical evaluations reveal nuanced differences:

[

42] tested ChatGPT o3-mini vs DeepSeek R1 vs Qwen 2.5 with 9 coding prompts, finding Qwen 2.5 performed best overall

[

50] compared Claude 3.7 Sonnet and Qwen 2.5 Coder across various code generation tasks

[

51] reported Qwen Code CLI as a viable alternative to Claude Code in daily development workflows

12.3. Specialized Coding Capabilities

Agentic coding represents a new frontier, with Qwen3-Coder demonstrating:

256K native context length (extendable to 1M) [

18]

Advanced tool use and browser interaction capabilities [

44]

Competitive performance against Claude 4 in agentic benchmarks [

49]

13. Reasoning and General Performance

13.1. Mathematical Reasoning

DeepSeek R1 shows particular strength in mathematical tasks, with [

54] reporting superior performance compared to o3-mini and Qwen 2.5 MAX. The model’s reasoning capabilities stem from its innovative training approach [

12].

13.2. General Knowledge Tasks

In broad knowledge evaluations:

Claude 3.7 Sonnet maintains strong performance [

31]

Qwen 2.5 shows improved performance over previous versions [

27]

Gemini 2.5 Pro competes closely in specific domains [

8]

14. Cost and Efficiency Analysis

14.1. Computational Efficiency

DeepSeek R1 achieves o1-level performance at 98% lower cost [

7]

QwQ-32B demonstrates that smaller models can achieve competitive performance [

28]

Mixture-of-Experts architectures like Qwen3-Coder-480B-A35B optimize active parameter usage [

18]

14.2. API and Usage Costs

Qwen 2.5 Max reportedly costs 10x less than Claude 3.5 [

11]

Open-weight models eliminate ongoing API costs entirely [

24]

Hybrid approaches combine multiple models cost-effectively [

55]

15. Findings and Discussion

Our extensive testing reveals several key insights into the current state of LLMs for coding.

15.1. Performance on Coding Benchmarks

Across various benchmarks, we observe a tight race among the top models. Qwen 2.5 Coder, for example, has demonstrated strong performance, with the newer Qwen 3 showing even further improvement. DeepSeek R1, despite being an open-source model, consistently performs at a level comparable to its commercial rivals [

33,

46]. The Gemini 2.5 Pro and Claude Sonnet 3.7 models also remain highly competitive, each with unique strengths in different domains [

9].

15.2. Creative and Problem-Solving Abilities

For creative frontend tasks, models like Claude Sonnet 3.7 and Qwen 3 show remarkable ability to generate complex and visually appealing code. When it comes to deep logical reasoning and intricate backend systems, DeepSeek R1 and some of the more advanced Claude and Gemini models often stand out. This suggests that developers should choose a model based on the specific type of task at hand. Some models are better suited for creative tasks, while others excel at complex problem-solving. A recent review of 37 different models supports the idea that the "best" model depends on the use case [

43].

15.3. Cost and Accessibility

The introduction of open-source models like DeepSeek R1 and smaller, more efficient models like QwQ-32B has democratized access to high-quality coding assistants [

28]. This creates a compelling alternative to more expensive commercial APIs. The cost-efficiency of models like Qwen 2.5 Max, for example, is a significant factor for enterprise applications [

11].

16. Emerging Trends

The field is moving towards more capable and cost-effective models. We anticipate a continued trend of smaller, more efficient models challenging the performance of their larger counterparts. The integration of these models into developer workflows will become even more seamless, and we may see more specialized models for niche programming languages or domains. The "AI world war" is just beginning [

6,

35,

37].

16.1. Model Specialization

The success of coding-specific models like Qwen3-Coder [

18] and Claude Code alternatives [

52] suggests increasing specialization in the LLM landscape.

16.2. Open vs. Proprietary Models

Open-weight models now rival proprietary ones in specific domains [

47], challenging the traditional dominance of closed models [

6].

16.3. Architectural Innovations

Mixture-of-Experts implementations [

18]

Context length extensions [

22]

Hybrid model approaches [

55]

17. Conclusion

This study presents conclusive evidence that the landscape of AI-assisted code generation has undergone a fundamental transformation in 2025, with open-weight models achieving parity or superiority to proprietary systems in critical domains. Our exhaustive comparison of Qwen, Claude, and DeepSeek families reveals three key developments: (1) Qwen3-Coder’s 480B-parameter MoE architecture sets new standards for agentic coding while maintaining cost efficiency, (2) DeepSeek R1 delivers commercial-grade performance through innovative training techniques at 98% reduced cost, and (3) Claude models maintain leadership in general reasoning despite growing competition. The emergence of specialized coding assistants (Qwen Code CLI, Claude Code alternatives) demonstrates the field’s progression toward task-specific optimization, while architectural breakthroughs in context handling (256K+ tokens) and mixture-of-experts designs point to future scalability.

Temporal analysis confirms an accelerating innovation cycle, particularly among Chinese models, with Qwen and DeepSeek releasing major upgrades every 9-12 months compared to the 6-8 month cycles of Western counterparts. Market projections suggest this rapid iteration, combined with superior cost-performance ratios (Qwen at $0.02/1K tokens vs Claude’s $0.08), will drive significant adoption shifts through 2027.

Our findings establish that model selection now requires nuanced consideration of: (a) task specialization (agentic vs creative coding), (b) computational constraints, and (c) long-term maintainability. The demonstrated viability of open-weight models like DeepSeek R1 challenges traditional proprietary dominance, offering developers performant, auditable alternatives. This competition benefits the entire field, as evidenced by 73% average improvement in SWE-bench scores industry-wide since 2023.

Future research should investigate: the limits of MoE scaling, multimodal coding assistants, and the integration of these systems into full software development lifecycles. As the "AI world war" intensifies, one certainty emerges—the next generation of coding assistants will be measured not just by benchmark scores, but by their ability to democratize access to high-quality AI tools while fostering reproducible, ethical development practices.

References

- Top AI Models 2025: Essential Guide for Developers.

- DeepSeek R1 shook the AI world. Now Qwen 2.5 Max is here Post LinkedIn.

- The Coding-Agent Crown Just Tipped: Qwen3-Coder Steps Up - GlobalGPT | Review.

- Head-to-Head: Comparing the Latest Versions of Qwen 2.5 Coder 32B and Claude Sonnet 3.5.

- Large Language Models Explained: Understanding the Technology Behind Modern AI | AIML API.

- Digest, T.R.A. AI World War 1 Just Began as Alibaba claims its new model outperforms DeepSeek, OpenAI, Meta!, 2025.

- Lanz, D..J.A. Chinese Open-Source AI DeepSeek R1 Matches OpenAI’s o1 at 98% Lower Cost, 2025. Section: News.

- Gemini 2.5 Pro vs Claude Sonnet 4: A Comprehensive Comparison - CometAPI - All AI Models in One API, 2025. Section: Technology.

- Gemini 2.5 Pro vs. Claude 3.7 Sonnet: Coding Comparison - Composio.

- Gordon-Levitt, J. OPENAI O3-Mini vs Claude 3.5 SONNET-AI.

- Qwen 2.5 Max better than DeepSeek, beats ChatGPT in coding, costs 10x less than Claude 3.5, 2025.

- [AINews] DeepSeek R1: o1-level open weights model and a simple recipe for upgrading 1.5B models to Sonnet/4o level.

- Best LLMs for Coding (May 2025 Report), 2025.

- Which LLM is Best? 2025 Comparison Guide | Claude vs ChatGPT vs Gemini etc., 2025. Section: AI Tools.

- DeepSeek R1 vs Qwen 3: Coding Task Showdown.

- Claude 4 vs Deepseek R1 vs Qwen 3.

- Samarpit. Top AI Models Compared: Grok-3, DeepSeek R1, OpenAI o3-mini, Claude 3.7, Qwen 2.5 & Gemini 2.0, 2025.

- Team, Q. Qwen3-Coder: Agentic Coding in the World, 2025. Section: blog.

- Njenga, J. Alibaba Launches Claude Code Alternative Qwen Code (I Just Tested It), 2025.

- Could Qwen Be the Best Alternative to Claude Code for Developers?, 2025.

- Best AI Models for Coding: GPT, Claude, LLaMA, Mistral & More – AlgoCademy Blog.

- 2025 Complete Guide: How to Choose the Best Qwen3-Coder AI Coding Tool, 2025.

- DeepSeek-R1 Uncensored, QwQ-32B Puts Reasoning in Smaller Model, and more..., 2025.

- The Complete Guide to DeepSeek Models: From V3 to R1 and Beyond.

- Claude 4 Advances Code Gen, How DeepSeek Built V3 For $5.6m, Google I/O Roundup, and more..., 2025.

- Gemini 2.5 Pro vs Claude 3.7 Sonnet vs DeepSeek R1: Which Model Is the Best for Coding?, 2025.

- How Good is the Qwen 2.5 Coder?

- Can a small AI model topple giants? Alibaba’s QwQ-32B aims to, 2025.

- Claude 3.7 Sonnet: How it Works, Use Cases & More.

- Claude 3.7 Sonnet vs. Grok 3 vs. o3-mini-high - Composio.

- Claude Sonnet 3.7 vs. OpenAI o3-mini-high vs. DeepSeek R1 | by Cogni Down Under | Medium.

- Trivedi, A. Can OpenAI’s o3-mini Beat Claude Sonnet 3.5 in Coding?, 2025.

- Gemma 3 27b vs. QwQ 32b vs. Mistral 24b vs. Deepseek r1 - Composio.

- Team, T.E. DeepSeek-R1 Vs. OpenAI o3-mini: Which AI Model Is Winning?

- Lamers, R. Claude 4, Qwen 3 & DeepSeek R1 0528: model capabilities keep increasing, 2025.

- blogs, V.a. Top Gen AI Models Comparison - ChatGPT, DeepSeek, Claude, Perplexity, Gemini, Grok & Qwen, 2025. Section: AI and ML.

- Volkov, A. ThursdAI - May 29 - DeepSeek R1 Resurfaces, VEO3 viral moments, Opus 4 a week after, Flux Kontext image editing & more AI news, 2025.

- Best LLMs for Coding in 2025. Model overview (o3-mini, Claude 4, Llama 4 and More).

- Best LLMs for Coding | LLM Leaderboards.

- DeepSeek R1 vs GPT o1 vs Claude 3.5 Sonnet – Which is best for coding?, 2025.

- Jain, A. Top AI Reasoning Model Cost Comparison 2025, 2025.

- published, A.C. I tested ChatGPT o3-mini vs DeepSeek R1 vs Qwen 2.5 with 9 prompts — here’s the winner, 2025.

- Hoornaert, M. I Tried 37 AI Models, These Are The Ones I’ll Actually Keep Using., 2025.

- 10x faster. Get Started, M.a. Build a Coding Copilot with Qwen3-Coder & Code Context - Milvus Blog.

- Large Language Models Explained: Understanding the Technology Behind Modern AI | AIML API.

- DeepSeek AI | – Deepseek R1, V3, Use Cases | GlobalGPT.

- Qwen 3 vs. Deepseek R1: Complete comparison, 2025.

- DeepSeek vs ChatGPT vs Perplexity vs Qwen vs Claude vs DeepMind: More AI Agents and New AI Tools | HackerNoon.

- Qwen 3 Coder Beats Claude 4 On Paper. Did the Benchmarks Lie? | by Mil Hoornaert | Jul, 2025 | Generative AI.

- Comparing AI Models for Code Generation: Claude 3.7 Sonnet vs Qwen 2.5 Coder – Revolutionizing Intelligence: Cutting-Edge AI, Deep Learning & Data Science.

- Vig, P. Qwen Code CLI + Qwen3-Coder Let’s Set Up Qwen Code, Better than Claude Code?, 2025.

- Ashley. Did Qwen Just Release the Best Alternative to Claude Code ?, 2025.

- Claude AI 3.7 vs. Qwen: Which AI Model Excels in Translation?

- Dalie (Ilyass), G. Why DeepSeek-R1 Is so Much Better Than o3-Mini & Qwen 2.5 MAX — Here The Results, 2025.

- DeepSeek + Claude MCP Server by niko91i.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).