1. Introduction to DiD

With a DiD analysis we try to estimate whether a response variable (i.e. a variable exposed to a treatment) will achieve a mean value that, computed for the set of all treated units (treated group), is statistically different than the mean value computed for the set of some comparable untreated units (untreated or control group), once any factors affecting the link between the treatment and the effect (confounders) are ruled out. Therefore, the DiD analysis aims at “discovering” if a time contingent causal-effect relationship (post hoc, ergo propter hoc) between the response variable and the treatment is statistically consistent with the data. Examples of changes in response variables analysed with DiD after a treatment are numerous and encompass various research fields. They may be the human mortality rate, the unemployment, the quantity of corn harvested, to mention a few. The treatment or causal factor may include the use of a new pharmaceutical drug, the implementation of new training program for unemployed workers, the application of a new agricultural technique, etc. Other known examples of a response variable are SAT (Site Acceptance Test) scores of equipment under quality check, the level of pollution in a county before and after the adoption of environmental measures, or the tree cover density in a region subjected to reforestation. Thanks to its flexibility, DiD has been widely used in economics, public policy, health research, management and numerous other fields.

To purse the above causal analysis, DiD relies on a combination of before-after and treated-untreated group comparisons, but it is worth emphasising from the very beginning that the variable corresponding to the treatment must be expressed as a dichotomous variable (Zero vs One; Yes vs No) and not as a continuous variable. As it will be clarified later, treatment variables will play a role similar to the role played by dummy variables in traditional regression analysis. Not surprisingly, the term “treatment dummy” is frequently used in DiD studies or in studies that are structured as DiD models. The term dummy for example appears already in Ashenfelter & Card (1985) in their study of the effect of some training program in the USA where the longitudinal structure of earnings of trainees and comparisons group are used to estimate the effectiveness of the program for participants. In their study of the relationship between casual factor and response variable, Card & Krueger (1994) provide another early clear illustration of how the treatment is included in the analysis. They investigated whether an increase of minimum wage by New Jersey in 1992 from $4.25 to $5.05 (treatment) resulted in a statistically significant change in employment level amongst fast food restaurant workers in New Jersey (treated units) from that in neighbouring Pennsylvania, which did not change its minimum wage (untreated units). The treatment was not the amount of the wage increase (a possible continuous variable) but the “mere” asymmetric implementation (say, Yes in New Jersey and No in Pennsylvania) of the policy measure.

In general, the DiD analysis aims at estimating the mean difference between actual and potential realizations (the unobserved realizations of the response in the absence of the treatment) of a response variable in treated units after the occurrence of an asymmetric exogenous event (the treatment administered to treated units only) and uses instrumentally the actual response realizations recorded in untreated units. Yet, this modification of mean differences generally occurs over time, and the passage of time represents a complicating challenge in a DiD study. Whatever the response variable we study, the passage of time may affect in a potentially significant way the actual realization of the response as the data generation process proceeds through a possibly long-time span and encompasses several pre-treatment and post-treatment periods. Hence, the specific effect attributable to the passage of time (and the numerous factors potentially concealed in the passage of time) on the mean value of the response variable in both the control group and the treatment group must be properly considered. In other words, the researcher must determine if it was the treatment itself the cause of any change in the mean value of the response variable within the treatment group over and above what was caused by the pure passage of time or by time-conditioned factors. In summary, the main ingredients of the DiD approach to the estimation of the causal-effect relationship are the existence of a treatment administered to treated units only, the behaviour of the treated and untreated response variables before and after the moment the treatment was implemented, and an appropriate consideration for the passage of time.

In this Review we will present the DiD estimation approach to the causal-effect relationship. We will try to highlight how with the DiD method the effect of the treatment can be estimated separately from the effect of the passage of time. To do so we will first present the simplest DiD framework in which the treatment status of each unit can vary over time according to the following dynamics: an initial time period (e.g. months, years) in which there is no treatment is followed by a time period with treatment administered to some units only. The moment in which the treatment is introduced represents the temporal turning point of the entire period under examination. The units under investigation can in turn be assigned to two groups: those classified as never treated (the control group) because they are never subjected to the treatment during the entire sample period and those units that are treated in the post-intervention period only (the treated group). We will assume (section 1.1) that the latter are uninterruptedly treated from the introduction of the treatment until the end of the observed periods. In the initial simplest DiD framework we will assume that the treatment is the only relevant independent variable affecting the outcome of the response dependent variable. Then, we will discuss the OLS way to estimate the effect of the treatment (section 2) as well as the identification problems related to the estimation process (section 1.4). We will call this initial framework Homogeneous case without cofactors. Homogeneity means that all the treated units will start to receive the treatment in the same moment. The presence of cofactors will be discussed later when the Homogeneous case with cofactors will be analysed in section 6. Analogously, the model structure in which treatments are administered in different periods to different treated units and never administered to some other units will be considered later in section 7 and it will be termed Heterogeneous Case (with or without cofactors). DiD techniques to be used under more complicated data structure (e.g. data generating clustering phenomenon or spatial-temporal relations) will be analysed in sections 8, 8.1, 8.2, and 8.3 at the end of the Review before the concluding section 9 which also contains important warnings and caveats. A final set of sections (section 10 and followings) surveys some applications taken from the literature and discusses methods and results.

A particular aspect of DiD on which we decided to focus is the exogeneity character of the treatment and the so-called parallel trend assumption (section 1.2). They represent fundamental elements of the method. As some of the papers discussed at the end of the Review will show clearly, in many cases DiD represents the statistical approach need to overcome the simultaneity and endogeneity difficulties inherent in many circumstances in more traditional OLS estimation techniques. Yet, this advantage of DiD over alternative techniques requires that some crucial assumptions about the data generation process are satisfied.

This Review will also review other aspects of the DiD methods that, in our opinion, are not sufficiently considered by DiD literature. In particular, in section 1.3 we discuss the Stable Unit Treatment Value Assumption (SUTVA) and show why DiD identification process requires that the treatment applied to one (or more) unit should not affect the outcome for other units. In other words, we discuss why the potential outcome of a generic unit in the analysed sample should not depend on the treatment status of some other units in the same sample or on the mechanism by which units are assigned to the control or treatment groups. We also pay special attention to the role that confounding factors have in DiD (section 5) and in sections 7, 7.1, 7.2, and 7.3 we analyse the most widespread methods proposed to estimate DiD when the sample period encompasses more than two periods and there is treatment heterogeneity. As anticipated above, complex data structure, such as data clustering and spatial-temporal dependence, that can affect the DiD estimation strategy, are discussed in sections 8, 8.1, 8.2, and 8.3.

Although this simple Review is conceived for applied economists, readers should keep in mind that DiD most attractive features are its (relative) simplicity and wide applicability. After all, to carry out a basic DiD study, we just require observations from a treated group and an untreated (comparison) group both before and after the intervention is enacted. Accordingly, in the last sections we discuss some papers that have applied DiD techniques in various research areas relevant in a public economics or public policy perspective. We stress that health care is not an area covered by this review because readers can access many several DiD studies that have been used to evaluate new policies and health programs. For example, in the USA dozens of studies have estimated the effects of expanded Medicaid eligibility through the Affordable Care Act (ACA). Following the Supreme Court ruling on the ACA, each State in the US choses whether to expand its threshold for Medicaid eligibility. This possibility created groups of treated states and comparison (untreated) States and enabled the application of DiD. These studies have informed ongoing policy debates in the US about the future of the ACA and the reader is referred to that literature (see Zeldow and Hatfield, 2021 for an introduction).

Finally, we stress that this Review covers the basic (almost intuitive) DiD techniques. There are other more advanced reviews (Callaway, 2021; Roth et. al., 2023, just to mention two papers) as well as chapter 5 of Angrist and Pischke (2009) and chapter 21 of Wooldridge (2010) that should be consulted by more advanced users.

We lastly stress that SW packages useful to implement basic and more advanced DiD methods can be found in the following websites (alphabetic order):

In the Appendix to the Review will include a few homemade ad hoc data sets to be used as examples of the DiD estimation techniques analysed in the Review and to conduct exercises.

1.1. A 2 × 2 (Two Groups and Two Periods) Homogenous DiD with No Cofactors

This Review presents a review of basic methods and recent developments introduced in the DiD literature in the last 30 years. Clearly, the fundamental notions of DiD could be assumed to be almost common knowledge and in theory they should not require a new basic review to be added to the many that already exists. Yet, since we want to offer a (may be incomplete, but) self-contained treatment of the subject we start with the basic framework need to identify a DiD model.

Assume that we have randomly drawn from an infinite population two samples of individuals (with or without the same numerousness of units), respectively denoted as G1 and G2. We call i an individual belonging to G1 and j an individual belonging to G2. Assume that the two periods under study are two years, each divided for expositional convenience in 12 months. We observe in each month of the first of the two years under study the realization of a random variable y representing the relevant variable under our investigation (income, unemployment, indebtedness, hours of work, rate of financial criminality, level of fever, etc.). For reasons that will become clear very shortly we call y the response variable. If is the realization of y for an individual i in G1 recorded during each month t = (1, …, 24), then is month t mean value of y generated by data of individuals belonging to G1 with N representing the total number of individuals in G1. Correspondingly, is month t mean value for group G2, generated by all j individuals of that group formed by M individuals. As a result, for each year we record 12 mean monthly values for each group. Altogether, we have 48 mean observations in the 2-groups × 2-years dataset.

We assume that the monthly evolution of

and

during the first 12 months is

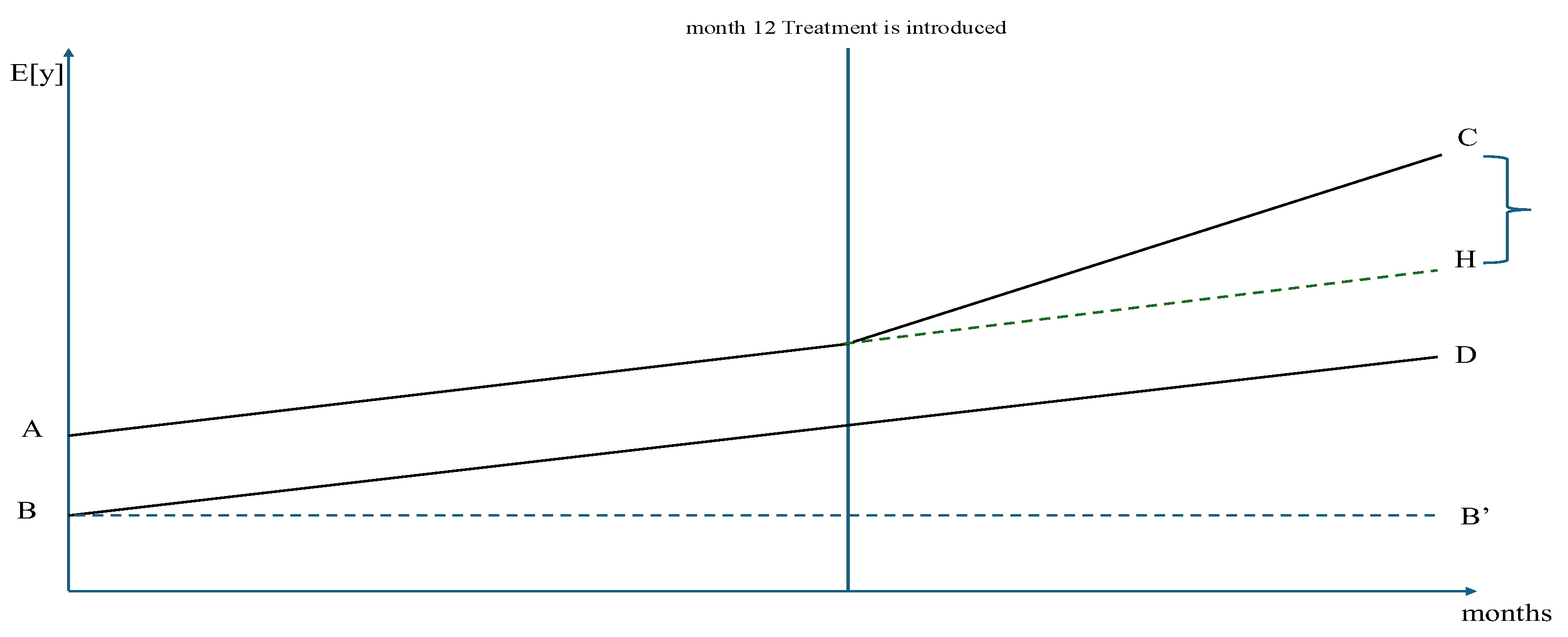

linearly parallel. In other words, we assume that the time evolution of the two series of mean values follows a parallel path having the same time slope so that the two paths are separated only by a group-specific constant (a sort of individual fixed effect used in the fixed effects least squares with dummy variables panel data analysis). Then, the plot of the time behaviour of the 24 mean values during the first year (first 12 months) corresponds to the left portion of

Figure 1 reproduced below (the first 12 months to the left of the vertical line).

Assume now that at the end of the first year (i.e. in correspondence to the vertical line in

Figure 1) “something” affecting

only G2 happened, ceteris paribus. That

something is generally assumed to correspond to an

exogenous event and it is called

Treatment (e.g. a new regulation, a more or less exogenous change of tax rates implemented in G2, some new subsidies paid to firms of that group, higher interest rates, a natural event, a new pharmaceutical therapy, etc.). This way of introducing Treatments should make clear that the word

“period” used in this DiD review is not synonymous of calendar unit of time but of “temporal phase”. In the 2 × 2 case we have two periods/phases: the first one (lasting 12 months) with no event and the second (lasting 12 months) with an event affecting the units of a group (G2 in our example) right from the

arrival of the event and continuously

until the end of our sample time.

We assume that individuals in G2

cannot anticipate the introduction of the treatment (and therefore cannot react in advance to its occurrence). Now it becomes relevant the right part of

Figure 1 (the part to the right of the vertical line). Inspection shows that the time path of the expected values of

y for the treated group G2 which, after the treatment, can be specified as:

(where Treatment = 1 means when the treatment is operative).

The G2 path has been twisted upward about the point in the plot corresponding to the last month of the first year (i.e. the last pre-treatment or pre-event month) while the time path of G1 proceeds according to the previous linear trend and is:

(where Treatment = 0 means when the treatment is not operative).

Hence, we assume that E[y] in G1 is unaffected by the treatment that is implemented with respect to G2 units only, and additionally that the treatment affecting G2 has no spillover effects on G1. Then, the dashed line represents the possible realizations of the expected values of the mean values of y for G2 in the absence of treatment but under the linear parallel trend hypothesis discussed above. In other words, the dashed line indicates what the path of the expected realizations of y in G2 would had been expected were the “perturbing” event (the treatment) absent, as if the Galilean inertia principle for uniform linear motion of corps was at work (no intervening external forces). Clearly, these realizations are not observed: actually, they do not exist. For that reason, we name them “potential realizations” as if they were the effect resulting from the application of a “vis inertiae, or force of inactivity” to use the terms employed by Newton in his Philosophiae Naturalis Principia Mathematica of 1687) because their force depends only upon their position (potential energy).

We now have all the ingredients useful to measure the

average effect that the treatment had on G2 (the treated group). The mean effect of the treatment is the difference between the value assumed by the mean

y in G2 after the treatment (solid line) and the value that it would had potentially assumed by pure

vis inertiae in the absence of the treatment (dashed line). That effect corresponds to the segment

CH in

Figure 1 whose length is the difference between the abscissa of point

C and the abscissa of point

H.

The measure of the length of the segment

CH =

C – H can be recovered as follows:

By manipulating the last equation, we express

CH as a

difference between two differences:

In the latter version, the measure of the treatment effect corresponds to the difference between two terms. The first term (included in the first parenthesis) measures

the difference in the realizations of the expected value of y for both groups (treated and untreated) in the post-treatment period, i.e. when

Treatment = 1 in the above expected values formulas. The second term (included in the second parenthesis) measures

the difference between the initial intercepts for the two groups i.e. when

Treatment = 0. The latter corresponds to the constant vertical distance of the two lines in the pre-treatment period and (under the hypothesis that the time trends are initially parallel and would had remained parallel in the absence of treatment). In other words, it is assumed that the constant initial difference (segment

AB) is constant during the entire period 1 because it depends only upon the above mentioned idiosyncratic constant elements and then, because of the Galilean inertia principle, it is bound to remain constant in the absence of treatment (the only new intervening force), even in period 2. This motivates the plot dashed line in period 2 in

Figure 1 and the previous description of those (unobserved) values as “potential”.

Therefore, the Average Effect of the Treatment upon Treated (ATET from now on) is obtained by differencing the mean response for the treatment and control units over time to eliminate time-invariant unobserved characteristics and also differencing the mean response of the groups (treated and untreated) to eliminate time-varying unobserved effects common to both groups. In other words, the DiD technique eliminates the influx of time-varying factors (confounders) by comparing the treatment group with a control group that is subjected to the same time-varying factors (confounders) as the treatment-receiving group.

As an example, we may think that y is the employment rate, and that the treatment is a subsidy paid only to firms in G2 (e.g. a particular area of the country) for every new employee. If expected unemployment in G1 and G2 follows a parallel trend in period 1 (when no subsidy was paid to firms), expected unemployment in G2 should stick to the linear trend of period 1 and remain parallel to that of G1. The dashed line would represent the potential expected value of unemployment in G2 in case of no subsidy granted to firms of G2 in period 2.

Figure 1 shows that the untreated group G1 has a role of paramount importance in the measurement procedure depicted above. G1 (untreated) acts as a

control group and supplies (loosely speaking) the substitutes for the unobservable (because they are never realised)

counterfactual observations of G2 to be used when studying the effect of the treatment. To be specific, the hypothesis is that in the absence of treatment reality would have evolved in G2 as described by the

E[

y] recorded in G1 with the obvious consequence that the right curly bracket in

Figure 1 would not exists because C → H. In other words, it would be

.

We can now proceed to estimate the effect of the event using all the expected values as follows.

C is the expected value of y for the treated group conditional upon the application of the treatment on that group

D is the expected value of y for the untreated group conditional upon the absence of the treatment for that group

A is the expected value of y for the treated group conditional upon the absence of the treatment

B is the expected value of y for the untreated group conditional upon the absence of the treatment

Therefore, calling

h = (

i, j) a generic individual in the

population (either treated or untreated, i.e. G1 + G2) we may write a linear regression model as follows

where:

yht is the value of the response variable for a unit in the population under study. Its value is measured in each group and each t, i.e. before and after the introduction of the treatment. It will correspond either to the i-th or to j-th observation at time t depending on the group (treated or untreated) of the unit.

β0 is the intercept of the regression model, common to treated and untreated units.

D1 is the Time Period Dummy which is a dummy variable that takes the value 0 or 1 depending on whether the h.th observation of the response variable refers to the pre (D1 = 0) or post treatment period (D1 = 1) independently on the group (treated or control) the observation belongs to. It simply indicates if that t is a period in which the treatment existed or not.

D2 is the Treatment Indicator Dummy which is a dummy variable that takes the value 0 or 1 depending on whether the h.th measurement refers to an individual in the control group (untreated) or in the treatment group respectively, independently on the time period. Therefore, D2 = 0 when the observation belongs to an untreated unit and D2 = 1 when the observation belongs to a treated unit (independently upon when the treatment was introduced). Clearly, in the simplified example of this section with only two periods, D2 = 0 means that the unit is never treated. Other settings are discussed in other sections.

D1× D2 is the interaction term between the time dummy and the treatment dummy. It s the most important coefficient to estimate. It measures the average effect of the treatment on treated units the estimated average differential impact of the treatment.

As it will be commented below, the above equation (1) represents the basic but elegant form of a DiD analysis for the homogenous case with no cofactors. In

Table 1 we analytically discuss the relevance of each coefficient. Here we stress that the

elegance of DiD (Goodman-Bacon, 2021 p. 254) makes it clear which comparisons generate the estimates, what leads to bias, and how to test the design. The expression in terms of sample means connects the regression to potential outcomes and shows that, under common trends assumption, a two-group/two-period (2×2) DiD identifies the average treatment effect on the treated.

The estimated coefficients of equation (1) have definite relations with the critical points of

Figure 1. These relations are illustrated in the following 2 × 2

Table 1 which gives a more explicit description of how the states of the word (time and treatment) can combine and how they affect the realization of

y in the above equation (1).

In what follows, the estimated coefficients obtained from an OLS regression of the model correspond to the expected values presented above. For the fitted model, the corresponding expectations are as follows. The caps (^) above the coefficients indicate that they are the estimated (fitted) values of the corresponding coefficients. Replacing

yht with the expected value of

yht also allows us to drop the error term

since by hypotheses in a well-behaved OLS regression model, the expected value of the error term is a zero mean and constant variance term. Hence, we can rewrite the content of each cell of the 2 × 2 matrix of

Table 1 as follows.

In terms of the hypothetical data set generating

Figure 1,

corresponds to point

B and must be interpreted as the average baseline common to the two groups (constant).

In terms of the hypothetical data generating

Figure 1,

still corresponds to point

B and, as above, and must be interpreted as the model baseline average (constant).

, which corresponds to slope of segment

DB, and is the time trend in control group in treatment.

In terms of the hypothetical data generating

Figure 1, we have that

- (i)

corresponds to point B, as above, and must be interpreted as the model baseline average (constant) and

- (ii)

, which corresponds to segment AB, is the constant difference between the two groups before the treatment.

In terms of the hypothetical data generating

Figure 1, the sum

corresponds to point

C.

We now proceed to calculate the difference in the expected value of y between the before (pre-) and after (post-) treatment phases of the study.

For the treatment group, the difference in expectations works out as follows:

which is the difference in estimated response between the after-treatment and before-treatment phases of the study recorded within the treatment group.

Similarly, for the control group we have:

The above is the difference in estimated response within the control group between the after-treatment and before-treatment phases of the study.

The

difference between the two differences measures the average net effect of the treatment on the treated group, that is,

The estimated coefficient is what we have called ATET (Average Treatment Effect upon Treated) and it has been obtained from the estimates of a linear model in which there a no cofactors (other independent variables affecting y). Its difference with respect to the similar measure of the treatment called ATE is discussed later.

For a more analytically grounded derivation of one may consult Angrist and Pischke (2009, p. 229) who discuss the expected DiD effect and then show the OLS regression that may be used for its estimation. Following the opposite route, Wooldridge (2010, p. 148) first starts from an OLS regression equation augmented with Treatment Dummies and then expresses and interprets the estimated relevant dummy as an estimate of the expected DiD treatment effect.

The following Remarks summarises the OLS use for a DiD strategy.

Remark n.1: the basic ingredients

We start with a set of i.i.d. individuals

i = 1, …,

n and a tuple

where

is the response variable,

is a cofactor vector (it may not be present) and

is the treatment assignment. We assume that the

potential outcome (realization of the response variable) depends on treatment and can be

We define the causal effect of the treatment as , the difference in potential outcomes of individual i, so that on average (population) we have that the Average Treatment Effect upon Treated (ATET) is . Clearly, each realization of the response can be observed in just one state of the word, i.e. conditional on either or , not both. Under the assumption that the treatment assignment is random (there is no systematic association between the potential outcome of an individual and the treatment), OLS methods can help us to overcome this missing data problem.

Remark n.2: ATET and OLS estimator

The OLS method of estimation of equation (1) correctly identifies the ATET in a DiD regression under parallel trend and no anticipation effects for it allows us to define the estimand which involves unobservable counterfactuals in a form (equation 1) that depends only on observed outcomes. This process is called “identification” (see below for more discussion).

Then, ATET is the expected value of the DiD effect between the treatment and control group (i.e.

CH in

Figure 1). After the DiD model is estimated, the estimated coefficient of the interaction term (

D1 ×

D2), i.e.

, will give us the estimated difference-in-differences effect of the treatment that we are seeking. The coefficient’s

t-score and corresponding

p-value will tell us whether the effect is statistically significant and if so, we can construct the 95% or 99% confidence intervals around the estimated coefficient using the coefficient’s standard error reported by the model output.

Finally, recall that we have randomly selected the participants (treated) and the non-participants (untreated). Therefore, at this stage we do not pose ourselves the question: why didn’t the non-participants participate? As we shall see, this question is outside the realm of DiD analysis. The general DiD assumption is that there is a sort of powerful external force determining in a random way the correct random sampling.

1.2. Violations of the Parallel Trend Assumption

Remark n.1 and

Figure 1 clearly indicate that the parallel trend assumption is one fundamental ingredient of DiD. Yet, the presence of parallel trends should not be ascertained from simple optical observation of plots like

Figure 1. There may be cases in which the pre-treatment trends of the treatment and control groups may appear different and the power to detect violations of parallel trends hypothesis is low. Or it may be the case that the pre-treatment trends were the same, but we have a reason to think that some other shock in the economy different than the treatment may cause the post-treatment trends to differ. Then, the question is: can we still use DiD when we are unsure about the validity of the parallel trends assumption? Rambachan and Roth (2023) note that one may assume that the pre-existing difference in trends persists from pre to post treatment periods and simply extrapolate this out. For example, we might assume that a difference in trends of 1% per month in the employment rate data set in treated and untreated areas would continue to hold after the treatment (say a policy wage intervention). Then, if the control group (no intervention) has employment grow at 3% per month after the intervention is passed, we would assume the treated group employment would have grown at 4% per month and compare the actual employment rate to this theoretical counterfactual. However, assuming the pre-treatment difference in trends carries out exactly in the post-treatment period is a very strong assumption, particularly if we did not have many pre-treatment periods over which to observe it. Rambachan and Roth (2023) suggest that researchers may instead want to consider robustness to some degree of deviation from the pre-existing trend, so that linear extrapolation need only be “approximately” correct, instead of exactly correct. This difference is realized by allowing the trend to deviate non-linearly from the pre-existing path by an amount, call it M –the bigger that amount, the more deviation from pre-existing trend is allowed. Once one abstains from imposing that the parallel trends assumption holds exactly, the (pseudo)parallel trend is tested by testing the restrictions on the possible post-treatment differences in trends (the above M) given the point identified pre-trends estimate. Such restrictions formalize the intuition motivating pre-trends tests, namely that pre-trends are informative about counterfactual post-treatment differences in trends. Then their paper shows that given M, we can identify a confidence set for the treatment parameter of interest. In doing so we clearly violate the “pure” parallel trend assumption needed to identify the DiD parameters and instead resort to a sort of partial identification approach. Researchers can then also find and report the breakdown point – how much of a deviation from the pre-existing difference in trends is needed before we can no longer reject the null. As an example, Rambachan and Roth (2023) consider the impact of a teacher collective bargaining reform on employment, in which parallel trends seem to hold for males, but in which there is a pre-existing negative trend for females. They show the DiD estimate for males at M=0 (linear extrapolation of the pre-existing trend), and then CI which get wider as they allow more and more of a deviation in trends (increasing M > 0). In contrast, for females, the DiD estimator is of opposite sign to what would be obtained when we extrapolate the pre-existing trend at M = 0, and then one sees how these results change as more deviations from these existing trends are allowed for.

Rambachan and Roth (2023) provide inference procedures that are uniformly valid so long as the difference in trends satisfies a variety of restrictions on the class of possible differences in trends and derive novel results on the power of these procedures. They recommend that applied researchers report robust confidence sets under economically motivated restrictions on parallel trends and conduct formal sensitivity analyses, in which they report confidence sets for the causal effect of interest under a variety of possible restrictions on the underlying trends. Such sensitivity analyses make transparent what assumptions are needed in order to draw particular conclusions.

A second approach is provided by Bilinski and Hatfield (2020). They recommend a move away from relying on traditional parallel trend pre-tests because of problems can emerge in both directions. Sometimes we may fail to reject parallel trends because the test power is low or, on the contrary, because the power is high. Yet, when we reject parallel trends, this doesn’t tell us much about the magnitude of the violation and whether it matters much for the results – with big enough samples, trivial differences in pre-trends will lead to rejection of parallel trends. Bilinski and Hatfield (2020) argue that the most popular approach to testing parallel trend is incorrect and frequently misleading and present test reformulations in a non-inferiority framework that rule out violations of model assumptions that exceed a threshold. We then focus on the parallel trends assumption, for which we propose a "one step up" method: 1) reporting treatment effect estimates from a model with a more complex trend difference than is believed to be the case and 2) testing that that the estimated treatment effect falls within a specified distance of the treatment effect from the simpler model. This reduces bias while also considering power, controlling mean-squared error. Our base model also aligns power to detect a treatment effect with power to rule out violations of parallel trends.

A third approach is proposed by Freyaldenhoven, Hansen and Shapiro (2019). Their idea is a solution similar to instrumental variables to net out the violation of parallel trends. For example, suppose that one wants to look at the impact of a minimum wage change on youth employment. The concern is that states may increase minimum wages during good times, so that labour demand will cause the trajectory of youth employment to differ between treated and control areas, even without the effect of minimum wages. Their solution is to find a covariate (e.g. adult employment) which is also affected by the confounder (labour demand), but which is not affected by the policy (i.e. if you believe minimum wages do not affect adult employment). Then this covariate can be used to reveal the dynamics of the confounding variable and adjust for it, giving the impact of the policy change. Importantly, this does NOT mean simply controlling for this covariate (which only works if the covariate is a very close proxy for the confounder of concern), but rather using it in a 2SLS or GMM estimator. Another example concerns the impact of SNAP program participation on household spending, where the main dataset has SNAP participation and the outcomes, and the concern is that income trends may determine both program participation and spending. Using a second dataset that has SNAP participation and income, they can instrument participation with leads of income, which requires assuming that households do not reduce labour supply in anticipation of getting the program.

1.3. The Stable Unit Treatment Value Assumption (SUTVA) (Rubin, 1978, I980, 1990)

DiD identification require that the treatment applied to one (or more) unit does not affect the outcome for other units. Following the definition of Angrist et al. (1996), Rubin (1980, 1990), Wooldridge (2010, 905) by Stable Unit Treatment Value Assumption (SUTVA) in causal studies we mean that the potential outcome for a generic unit does not depend on the treatment status of the other units or on the mechanism by which units are assigned to the control and treatment groups. In other words, treated and untreated units are expected not to mutually interfere and do not influence their outcomes (Cox, 1958). The authors themselves point out that the assumption is critical and does not always match with real situations. For instance, let us consider a generic market in which operators mutually know and interact, thus influencing the reactions to exogeneous or external events, or policies in which “spillover effects” among neighbours can affect the choices of people involved in the experiment (Sobel, 2006). Similarly, one could consider panel data settings in which units interact across temporal (e.g., anticipation effects), cross-sectional (Xu, 2024), and spatial dimensions (Wang, 2021; Wang et al., 2020; Xu, 2024). Imbens and Rubin (2015, pp. 10) use the example of the fertilizer applied to one plot that affected the yields in contiguous untreated plots. Another example might be that of students assigned to attend a tutoring program to improve their grades (treated units) who might interact with other students in their school who were not assigned to the tutoring program (untreated control units) and influence the grades of the latter. Treated students might affect “informally” the performance of the control students since their interaction can generate spillover effects of the treatment in favour of untreated students. Under these circumstances, to enable causal inference, the analysis might be completed at the school level rather than the individual level. SUTVA would then require no interference across schools, a more plausible assumption than no interference across students.

Hence, SUTVA demands that the potential outcomes for some untreated unit do not vary with the treatments assigned to some other treated units. In other words, a subject’s potential outcome is not affected by other subjects’ exposure to the treatment. The SUTVA implies that each individual has one and only one potential outcome under each exposure condition, that is with and without treatment (Schwartz et al., 2012), thus making the causal effect “stable”. On the contrary, when the SUTVA is not fulfilled, there could exist multiple potential outcomes for each individual under each exposure condition (i.e., the causal effect is not unique), potentially leading to lead to misleading inferences. In non-economic frameworks, researchers often add a second aspect of stability in causal studies and closely related to the original SUTVA, that is, the so called “consistency assumption” (Cole and Frangakis, 2009; VanderWeele, 2009) or “no-multiple-versions-of-treatment assumption”, which states that potential outcomes of individuals exposed to the treatment coincide with their observed outcomes. In other words, there are no hidden forms of treatment leading to different potential outcomes (Cerqua et al., 2022; 2023).

Laffers and Mellace (2020) introduced a third source of violation of the SUTVA, that is, the presence of measurement errors in either the observed outcome or the treatment indicator. While this new perspective extends the definition of the SUTVA, the authors also propose a way to relax the assumption by means of a sensitivity study. Specifically, they suggest computing the maximum share of units for which SUTVA can be violated without changing the conclusion about the sign of the treatment effect. According to the specificities of the empirical setting of interest, several other attempts to extend and to relax the SUTVA can be found in the recent literature (see, for instance, the paper by Qiu and Tong, 2021; VanderWeele et al., 2015 for a recent review on causal inference in the presence of interference). For instance, considering the case when all units are affected by the treatment, Cerqua et al. (2022) make use of a machine learning counterfactual framework in which the no-interference part of the SUTVA is substituted by a milder definition only requiring that the potential outcomes for treated units are not affected by the individual characteristics of the other treated units. Indeed, in Cerqua et al. (2023), the authors remove entirely the no-interference assumption and rely solely on the no-multiple-versions-of-treatment assumption, as they are aware that in many socio-economic applications agents are sensibly affected by interference across both space and time. Other strategies attempt to relax the assumption by using clustered or hierarchical data structures (for instance, individuals living restricted areas such as neighbourhoods) with potential spatial spillovers. VanderWeele (2010), for instance, introduced the definition of individual-and-neighbourhood-level SUTVA and neighbourhood-level SUTVA to deal with empirical setting in which cluster-level interventions are considered. Among others, Huber and Steinmayr (2021) allow for the interaction between individuals and higher-level structures (e.g., regions) and suggest a non-parametric modelling to separate individual-level treatment effects from spillover effects. However, while the SUTVA may be violated on the individual level, it must hold at the aggregate level. The latter can be referred to the regional SUTVA, which admits spillover effects between individuals within regions, but rules out spillovers across regions. Under this new setting, the total treatment effect may be split up into an individual effect and a within-region spillover effect driven by the treatment of other individuals in the region. Eventually, Ogburn et al. (2020) and Ogburn et al. (2024) considered the potential spillover effect produced by a network in which individuals mutually interact and treated individuals may spread the treatment to their social contacts.

In the rest of the Review, we will adopt the definition of SUTVA provided in Remark n.2, that is,

Remark n.3: the SUTVA assumption

The potential outcomes for any unit do not vary with the treatments assigned to other units, and, for each unit, there are no different forms or versions of each treatment level, which lead to different potential outcomes.

Essentially, Remark 3 states that an individual’s potential outcome under a given treatment depends neither on the treatment received by other individuals nor on different versions of the treatment itself. In other words, each individual has only one potential outcome for each level of treatment, and this outcome is independent of the treatment received by others. Specifically, we must make sure (i) that an individual’s outcome is not influenced by the treatment received by other individuals. For example, if we are evaluating the effect of a drug, SUTVA implies that whether a patient takes the drug does not affect the outcome of another patient who might not take it and (ii) that for each level of treatment, there is a unique version of the treatment that leads to a given potential outcome. This means that there are no different versions of the treatment that could lead to different outcomes for the same individual.

We may conclude that SUTVA is crucial for the correct interpretation of causal effects because, if violated, it can lead to biased estimates of treatment effects. For example, if interference is present (a student treated with a new textbook shares the improvements of her/his knowledge with an untreated fellow student), we may not be able to distinguish the effect of the treatment from the effect of the interactions between treated and untreated individuals.

1.4. Exogeneity and Identification. DiD and Traditional Econometrics

In OLS regression analysis we are interested in assessing the effect of a (usually) continuous variable

x on a dependent

Y under the hypothesis of exogeneity. The “true” causal effect of

x on

Y can be identified as long as independent changes of

x only produce a direct effect on

Y, by ruling out any potential indirect effect of

x on

Y occurring via the relation of

x with unobservable factors. Without this exogeneity condition, OLS produced biased estimated parameters. Using Cerulli’s (2015) example, we assume that the regression model is

where

β represents the causal effect of

x on

Y and

u is a non-observable factor. By differentiation we have

The model is identified as long as . If the autonomous changes in x are not exogenously determined, as x has also an indirect effect on Y through its effect on u and since u is not observed we cannot separate the direct effect (β) and the indirect effect ( and the model is no longer identified.

The counterfactual approach of the DiD to causality can be reformulated in terms of OLS model with the x assuming a binary form (say x0 for the treated and x1 for the untreated) instead of a continuous x. If we can observe two responses (Y0 state: treatment and Y1 state: no treatment) we write

Subtracting the second equation from the first we have

If a bias similar to the previous one is generated even when we use binary form data typically associated with treatment events and counterfactuals. Exogeneity of treatment is a necessary condition.

In DiD analysis we may go a little further and portrait the identification problem using the assumptions made above in section 1.1. We can re-write the target estimand of section 1.1 (which involved unobserved counterfactuals) in a form that depends only on observed outcomes. In DiD we call this process “identification”. To do so we assume that

the change in response from pre- to post-intervention in the control group is a good proxy for the counterfactual change in untreated potential outcomes in the treated group. When in a 2 periods framework we observe the treated and control units only once before treatment (

t = 1) and once after treatment (

t = 2), we write this as:

Notice that it involves unobserved counterfactual outcomes, namely

(the potential realization of y in case of no treatment; recall from

Figure 1 that these data no not exist). This is other way to state the parallel trend assumption or the

counterfactual assumption.

We also need to make more explicit another assumption of section 1.1. For DiD the treatment status of a unit can vary over time. However, we only permit two treatment histories: never treated (the control group) and treated in the post-intervention period only (the treated group). Thus, we will use D = 0 and D = 1 to represent the control and treated groups, with the understanding that the treated group only receives treatment whenever

T > T

0. Every unit has two potential outcomes, but we only observe one — the one corresponding to their actual treatment status.

The consistency assumption links the potential outcomes

at time

t with treatment d with treatment

d ∈ D = (0,1) to the observed outcomes

:

Finally, we add the assumption that future treatment does not affect past outcomes. Thus, in the pre-intervention period, the potential outcome with (future) treatment and the potential outcome with no (future) treatment are the same (no anticipation effects).

Using the assumptions made above, we can re-write the target estimand (which involved unobserved counterfactuals) in a form that depends only on observed outcomes. In DiD this process is specifically called “identification” and should not be confused with the specification problems typical of traditional OLS single equation regressions or with the so called over or under identification problems emerging from multi equations OLS systems. DiD identification relies on the Counterfactual Assumption and the Consistency Assumption discussed above, and ends with the familiar DiD estimator where for reducing notation we use

D instead of

D2 of equation (1) and indicate periods as numbers between parenthesis (see Callaway, 2022, 8):

You may compare the above ATET with the result obtained in section 1.1. To simplify reading and comparison we summarise the meaning of the above terms as follows:

is the post-intervention average response of the treated group

is the pre-intervention average response of the treated group

is the post-intervention average response of the control group

is the pre-intervention average response of the control group

In summary, DiD identification begins with the ATET, applies the Counterfactual Assumption and the Consistency Assumption, and ends with the familiar DiD estimator.

In section 3 we present a worked example in which the above expected values are computed and used to calculate the ATET coefficient as a difference among differences.

When we observe the treated and control units multiple times before and after treatment, we must adapt the target estimand and identifying assumptions accordingly. Identification problems with multi period DiD is discussed later.

Appendix A provides an example with an easy visualization of the data set

2. The OLS Version of the Two-Way Fixed Effects Regression (TWFE)

TWFE is the most common way to implement a DiD identification strategy under the assumption of treatment homogeneity. In this section we present what Roth, Sant’Anna, Bilinski, and Poe (2023, p. 2224) call a “static” TWFE which regresses the outcome variable on individual and period fixed effects and an indicator for whether the unit

h is treated in period

t. Recall that in section 1.2 we have defined

Then, the estimated ATET can be written by

replacing population means by their sample analogues (indicated by upper bars) to obtain

The above expression is algebraically equivalent to either of the following OLS regression system

where in the system

i indicates treated units,

j indicates untreated units, and

t is time, and

h in equation (2) below can be either

i or

j. The interpretation of the quantities involved in (2) is the following:

is the response variable

is a time effect

is a unit (not group) fixed effect

is the dummy (indicator) for whether or not unit h is affected by the treatment in period

t (the term D1 × D2 of the last column of

Table 2)

are idiosyncratic, time-varying unobservable factors.

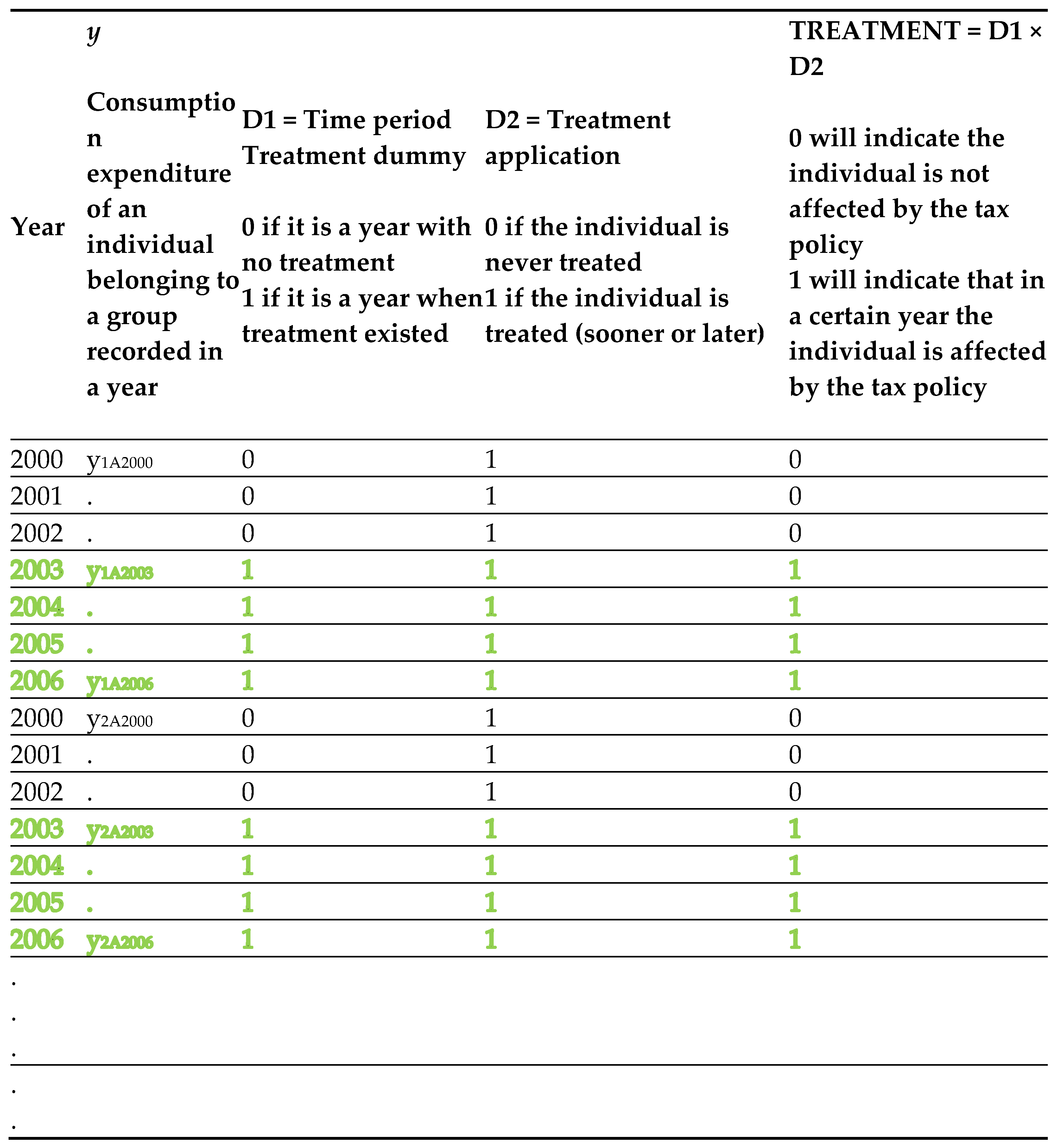

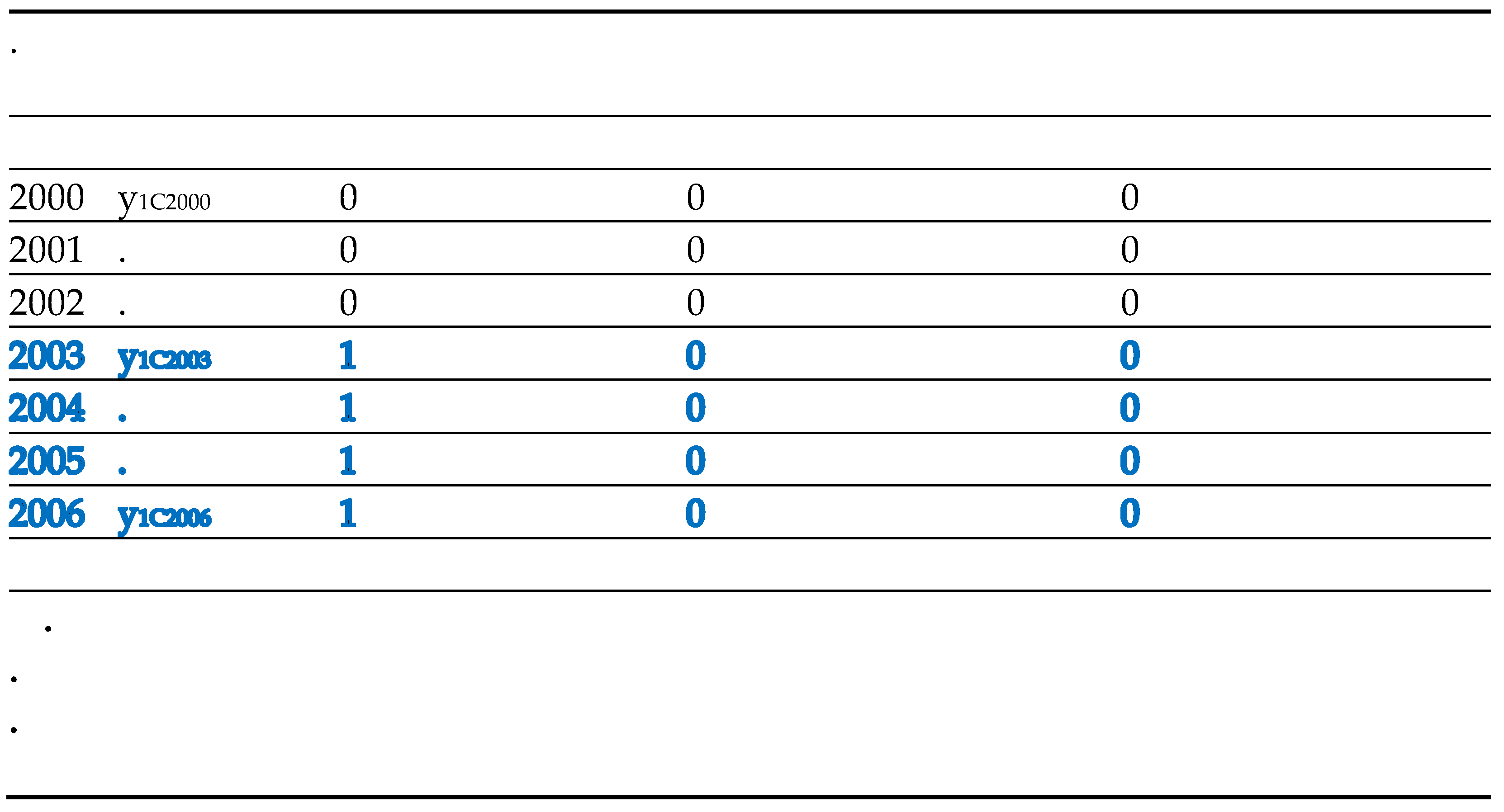

Table 2.

Example of data set for DiD with more than two years.

Table 2.

Example of data set for DiD with more than two years.

Table 2a.

Example of a DiD data set.

Table 2a.

Example of a DiD data set.

| 1 |

1 |

0 |

.5 |

| 1 |

2 |

0 |

.5 |

| 1 |

3 |

0 |

.5 |

| 1 |

4 |

0 |

.5 |

| 1 |

5 |

0 |

.5 |

| 1 |

6 |

0 |

.5 |

| 1 |

7 |

0 |

.5 |

| 1 |

8 |

0 |

.5 |

| 1 |

9 |

0 |

.5 |

| 1 |

10 |

0 |

.5 |

| 2 |

1 |

0 |

1 |

| 2 |

2 |

0 |

1 |

| 2 |

3 |

0 |

1 |

| 2 |

4 |

0 |

1 |

| 2 |

5 |

1 |

2 |

| 2 |

6 |

1 |

2 |

| 2 |

7 |

1 |

2 |

| 2 |

8 |

1 |

2 |

| 2 |

9 |

1 |

2 |

| 2 |

10 |

1 |

2 |

| 3 |

1 |

0 |

2 |

| 3 |

2 |

0 |

2 |

| 3 |

3 |

0 |

2 |

| 3 |

4 |

0 |

2 |

| 3 |

5 |

1 |

4 |

| 3 |

6 |

1 |

4 |

| 3 |

7 |

1 |

4 |

| 3 |

8 |

1 |

4 |

| 3 |

9 |

1 |

4 |

| 3 |

10 |

1 |

4 |

Table 2b.

Data set of a worked example.

Table 2b.

Data set of a worked example.

| Consumers’ Id |

Time |

Consumption € |

D1 |

D2 |

| 1 |

2010 |

12 |

0 |

1 |

| 2 |

2010 |

9 |

0 |

1 |

| 3 |

2010 |

13 |

0 |

1 |

| 4 |

2010 |

14 |

0 |

1 |

| 5 |

2010 |

15 |

0 |

1 |

| 6 |

2010 |

13 |

0 |

0 |

| 7 |

2010 |

14 |

0 |

0 |

| 8 |

2010 |

13 |

0 |

0 |

| 9 |

2010 |

16 |

0 |

0 |

| 10 |

2010 |

15 |

0 |

0 |

| 1 |

2011 |

15 |

1 |

1 |

| 2 |

2011 |

17 |

1 |

1 |

| 3 |

2011 |

19 |

1 |

1 |

| 4 |

2011 |

18 |

1 |

1 |

| 5 |

2011 |

22 |

1 |

1 |

| 6 |

2011 |

13.5 |

1 |

0 |

| 7 |

2011 |

14 |

1 |

0 |

| 8 |

2011 |

15 |

1 |

0 |

| 9 |

2011 |

15.5 |

1 |

0 |

| 10 |

2011 |

14.4 |

1 |

0 |

Equivalently, the previous system can be written in a single equation (panel data) version as follows:

where

is the individuals fixed effect,

is the time fixed effect, and

is the treatment dummy interaction. We can estimate equation (2) and interpret the estimated coefficients according to the result reported in Remark n.4 below, that is,

Remark n.4: causal interpretation of the TWFE estimator

Under parallel trend, treatment homogeneity and no spill-over, is the TWFE estimation of the causal effect of receiving the treatment.

As the very name suggests TWFE is the case where there are exactly two time periods, where no units is treated in the first time period, and where some units become treated in the second time period while other units remain untreated in the second time period. Notice that when we say periods we do not necessarily refer to units of time (years, months etc.) but to “time intervals”: the first (possibly composed by several years, several months, etc.) in which there is no treatment for nobody and the second (possibly composed by several years, several months, etc.) in which some units are treated (uniformly).

To illustrate formally TWFE we need some notation. Let us define the following quantities:

Then for

define

yht(1) to be unit i’s potential treated response in period

t and correspondingly

yht(0) to be unit i’s potential untreated response in period

t. Impose that

for all units. This is the no anticipation condition of section 1. It states that the treatment should not affect the response variable in periods before the treatment takes place. The result from the above assumption and conditions is that

In the first time period we observe untreated potential outcomes for the response variable for all units and in the second period we observe treated potential outcomes of the response variable for treated units and untreated potential outcomes of the response variable for untreated units.

Using the above definitions, we may define the ATET resulting from the DiD identification of the treatment effect as follows

which is equivalent to the one given in the previous section.

Using Callaway’s (2022, p. 6) definition, the ATET is the mean difference between treated and untreated potential outcomes among the treated group. Perhaps a main reason that the DID literature most often considers identifying the ATET rather than, say, the average effect of treatment is that, for the treated group, the researcher observes untreated potential outcomes (in pre-treatment time periods) and treated potential outcomes (in post-treatment time periods). The DID identification strategies exploit the above framework. As a result, it is natural to identify causal effect parameters that are local to the treated group.

Clearly, the model presented in equation (2) is the static specification of the TWFE, which yields a sensible estimand when there is no heterogeneity in treatment effects across either time or units. Following Roth, Sant’Anna, Bilinski, and Poe (2023, p. 2224) we can stress the relevance of these hypotheses more formally.

Define a period (e.g. year) g > t and let τh,t (g) = Yh,t(g) – Yh,t(∞). Suppose that for all units h, τh,t(g) = τ whenever t ≥ g. This implies that (a) all units have the same treatment effect, and (b) the treatment has the same effect regardless of how long it has been since treatment started. Then, under a suitable generalization of the parallel trends assumption and no anticipation assumption, the population regression coefficient α in equation (2) is equal to τ.

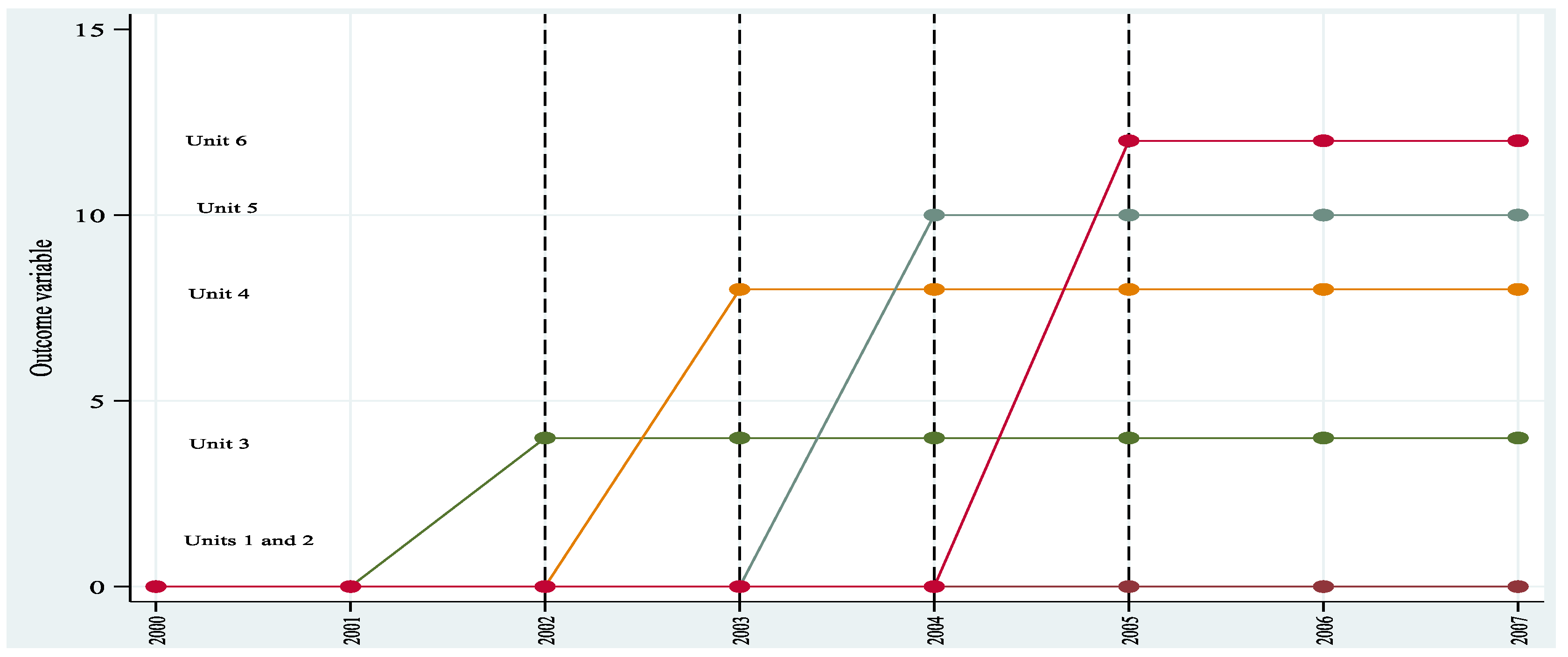

Yet, issues arise, however, when there is heterogeneity of treatment effects over time, as shown in Borusyak and Jaravel (2018), de Chaisemartin and D’Haultfoeuille (2020), and Goodman-Bacon (2021), among others. More generally, if treatment effects vary across both time and units, then τh,t(g) may get negative weight in the TWFE estimand for some combinations of t and g.

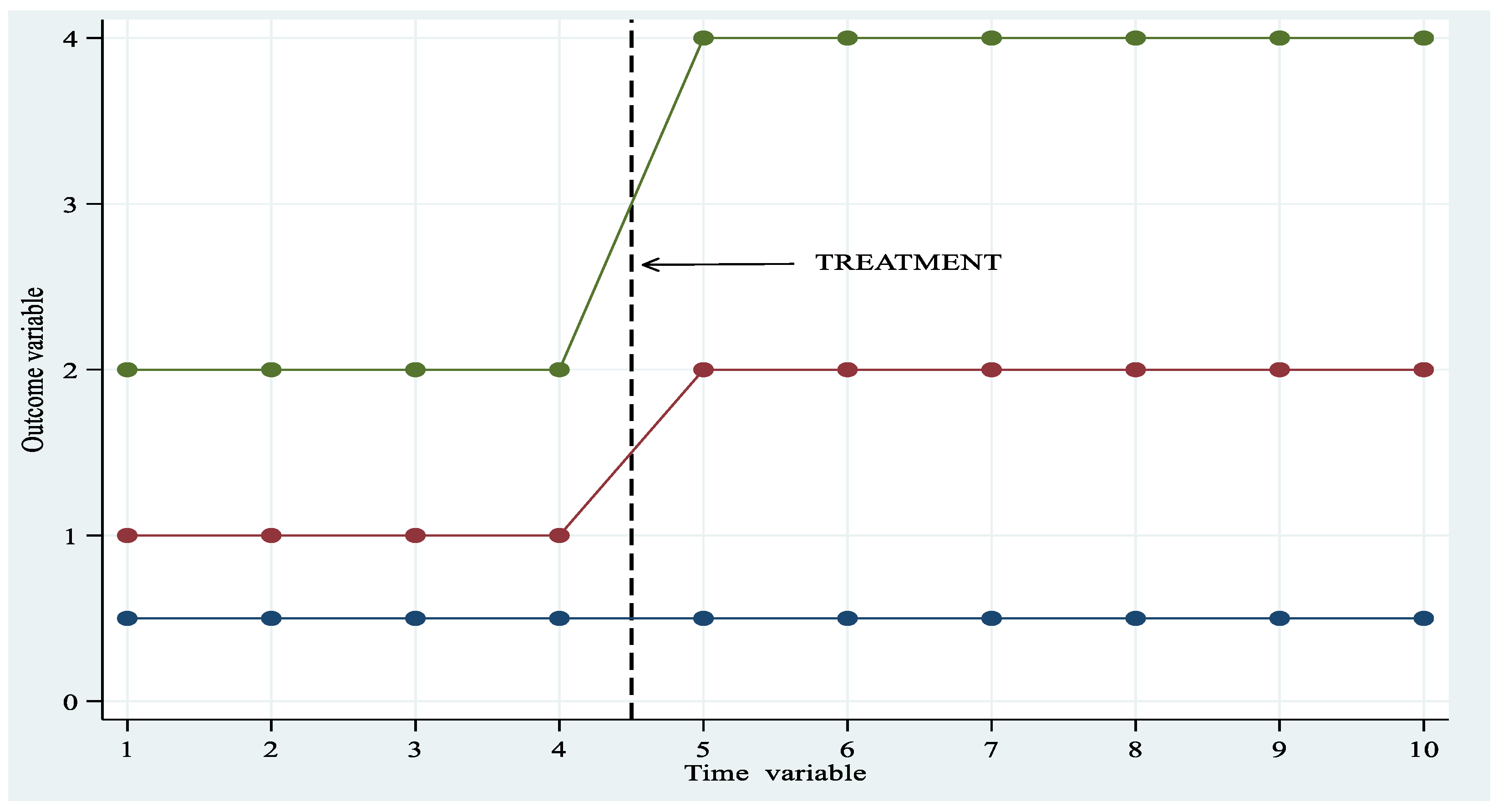

Figure 2 gives the idea of parallel trend with 3 units (unit 1 blue colour is untreated) and 10 periods. The plot has been generated using the data of

Table 2a reported in the Appendix. The following plot illustrates the time paths of the response variable. Notice that Unit 1 is never treated; Units 2 and Unit 3 start treatment at time 5 and are always treated from t = 5 to t =10.

2.1. Testing for the Parallel Trends and Anticipation Effects Assumptions in the TWFE Model

Given the fundamental importance of the parallel trend assumption, a natural question is: how to test for parallel trends in a panel data TWFE? In order to find answers, we start from the above model written as

where

is the indicator function, the treatment is indexed by

Dh, whit

Dh = 1 indicating that observation

h is part of the treated groups/units and

Dh = 0 indicates that it is part of the comparison/control group. Let

t index time from {1, ..., T} and suppose that an intervention begins at time

T0 for the treated units (same treatment periods for all treated units: the so-called homogenous case. See below). All other symbols have their usual meaning. The treatment effects of interest are

βk, representing differential post-period changes in the treated group relative to comparison at each time point. The average of these coefficients, that is,

is the average ATET.

We may exploit the above definition of the coefficients to derive some tests of the DiD identification hypothesis.

Parallel trends test: the slope test. In a parallel trends test for DiD identification, we may try and estimate whether there is a difference in slope between treatment and comparison groups prior to the intervention. Call

θ the different coefficient. Then, rewrite the above equation in a form that incorporates the pre-treatment coefficient

θ:

We can now test whether the (pre-treatment) differential slope θ = 0. If the null hypothesis for this test is non rejected (i.e., pv > 0.05), researchers may conclude that trends are parallel.

Anticipation effects: Researchers may instead examine the validity of the identification of the parameter by DiD by testing whether there is a significant “treatment” effect prior to the intervention, that is. an effect starting at T* < T

0. In this context, they might use the modified original panel model:

and estimate it by omitting data from after T

0. If the test statistic

is significant, this again suggests a violation of parallel trends. (Alternatively, a joint F-test can be used to test whether placebo effects at all possible

T* <

T0 were insignificant.)

2.2. More on the Parallel Trend Assumption

We have already stressed that DiD does not identify the treatment effect if treatment and control groups were on different trajectories prior to the treatment (common trend or parallel trend assumption).

With respect to equation (1) as the OLS equation of our DiD model we recall that

These assumptions guarantee that the common trends assumption is satisfied but they cannot be tested directly. This is quite disappointing because it leaves the tests for parallel trend the optical ability to visual checking on trends reported in plots.

In

Figure 1, we illustrated the case of an obvious pre-treatment parallel trend. In other cases, the assumption may be easily violated. Therefore, the question is: what has to be done? For those who think that hypothesis testing is in the realm of

optics and not

in the realm of mathematical statistics, a way to proceed is to inspect

visually the plot of the treated and untreated data.

Figure 1 is clearly a case of non-optically distorted test of parallel trend. The alternative presentation could be the make the G2 line start from A (eliminate the difference AB, which is the idiosyncratic constant element) and observe whether or not G1 and G2 lines overlap before the introduction of the treatment and diverge in period 2. More in general, optics cannot be a good substitute for mathematical statistics.

We may go back to initial 2 × 2 case and present a discussion of the parallel trend relevance. We had 2 groups, one treated and one untreated, and we indicate them as follows

where 0 is untreated (control) and 1 is treated. We also had 2 years and then we write

where 0 is the before treatment period and 1 is the treatment period. The guarantee a consistent estimate of the ATET we need to make the following parallel trend assumption

If the treated units had not received the treatment, the groups defined by

and

should have response variable showing the same paths as in

Figure 1 and

Figure 2. The group effects must be time invariant, and the time effect must be group invariant.

Within a 2-period framework, the possible “test” of this assumption is only graphical but for more than 2 periods same testing procedure based on Wald test are available. Many sw offer such statistical tests. We will present them alongside applications at the end of the Review.

In the linear case, Wooldridge (2021) has shown that tests of the Parallel Trend assumption are easily carried out in the context of pooled OLS estimation. In other words, in linear DiD models within a staggered treatment framework, the parallel trends assumption can be tested using pooled OLS estimation. This approach leverages the inclusion of cohort and time period dummies, along with cohort-by-time treatment indicators, in a linear regression model. The key idea is that under the parallel trends assumption, the coefficients on these interaction terms, when estimated via pooled OLS, are consistent for the estimation of ATET. Moreover, the tests are the same whether based only on the observations (imputation regression) or on pooled OLS using all observations— provided full flexibility is allowed in the treatment indicators. In other words, tests obtained pooling over the entire sample are equivalent to the commonly used ‘pre-trend’ tests (i.e. common tests used to examine the parallel trends assumption) that use only the untreated observations. As discussed by Wooldridge (2021), this means the tests using post-treatment data are not ‘contaminated’ by using treated observations—if the treatment effects are allowed to be flexible.

The algebraic equivalence of the pooled tests and pre-trends tests carries over to the nonlinear case provided the canonical link function is used in the Linear Exponential Function (LEF). In a DiD analysis with panel data, when using a linear exponential family (LEF) with the canonical link function, the pooled tests (using all data) and pre-trends tests (using only untreated observations) are algebraically equivalent. This means that the same underlying statistical properties and results can be obtained regardless of whether you pool all the data or focus only on the pre-treatment observations to check for parallel trends. Technically, if one uses a different mean function or different objective function, the test should be carried out using only the Dit = 0 observations (although it seems unlikely the difference would be important in practice). Wooldridge (2023) recently discusses the non-linear case.

In general, one should consider that the implications for applied work revolve around the (often-implausible) parallel trend assumption needed for the identification (using non-treated post treatment observations as counterfactuals) of a DiD model. Yet rather than just asserting that parallel trends hold, or abandoning projects where a pre-test rejects parallel trends (not to speak of the so-called optical test based the trend plots!), new approaches focus on thinking carefully about what sort of violations of parallel trends are plausible and examining robustness to these. Importantly, these methods should be used when there is reason to be sceptical of parallel trends ex ante, regardless of the outcome of a test of whether parallel trends hold pre-intervention. This type of sensitivity analysis will allow one to get bounds on likely treatment effects. For instance, a recent application comes from Manski and Pepper (2018), who look at how right-to-carry laws affect crime rates, obtaining bounds on the treatment effect under different assumptions about how much the change in crime rates in Virginia would have differed from those in Maryland in the absence of this policy change in Virginia.

In summary, the default DiD estimation equation should allow for a linear trend difference. This is a key recommendation of Bilinski and Hatfield (2020).

Which approach to use to examine robustness will depend on how many pre-periods you have: with only a small number of pre-intervention periods, the Rambachan and Roth approach of bounding seems most applicable for sensitivity analysis; when you have more periods you can consider fitting different pre-trends as in Bilinski and Hatfield (2020). Some issues are discussed in sections below.

3. Simple Worked Examples

We offer two simple numerical examples of ATET estimation with OLS and of the interpretation of the estimated coefficients. The second example relates to the interpretation of the response variable paths (before and after the treatment) as a tool for the evaluation of the presence of parallel trends.

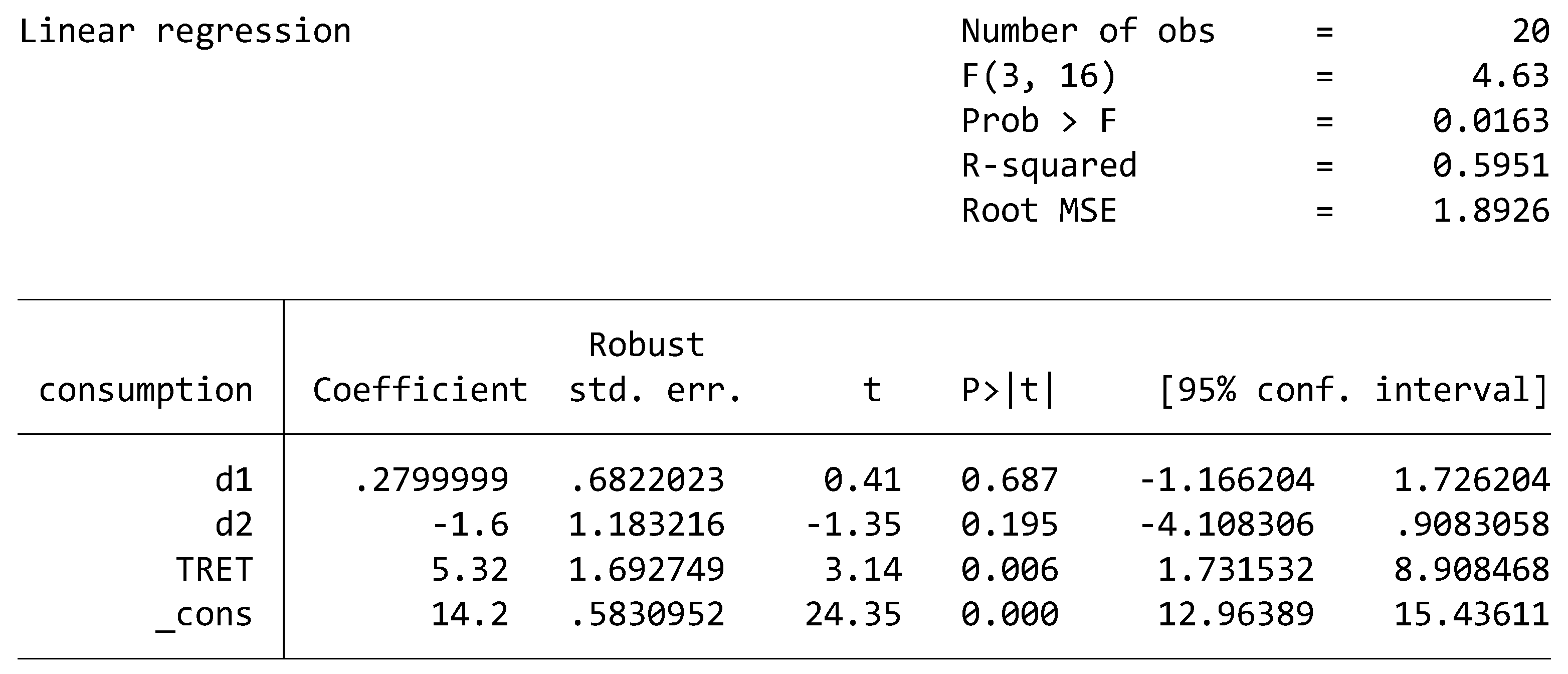

3.1. Example n.1

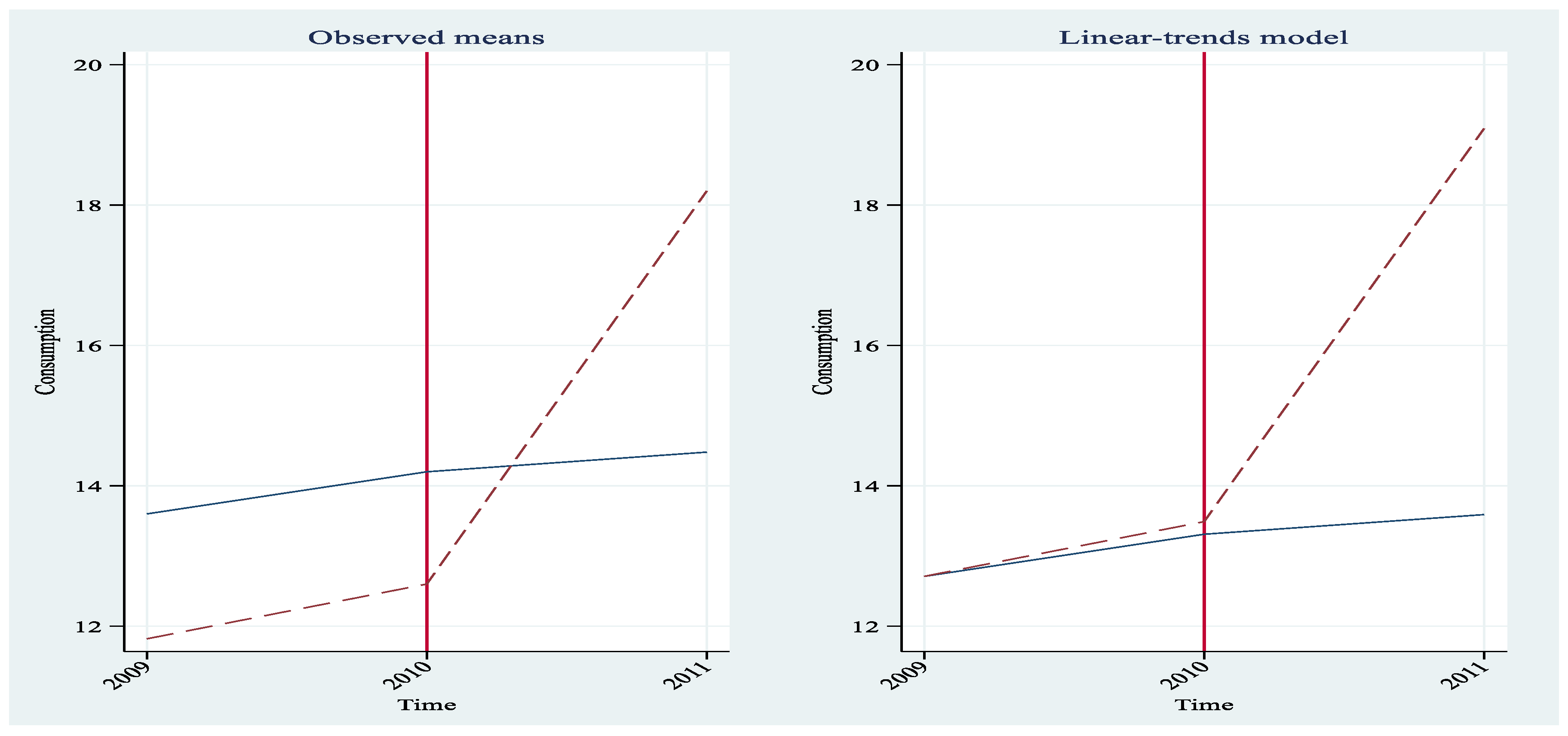

Assume we have a total of 10 Consumers in 2 equal-sized groups (a group of 5 treated consumers and a group of 5 untreated consumers); 2 periods corresponding to two years, namely 2010 and 2011; a Treatment occurring at the end of 2010 (consumption tax reduction for the control group only). We name treated consumers Mrs. 1- 5; and untreated consumers: Mrs. 6 -10. Data and dummies are presented in

Table 2b in the Appendix.

Using the above dataset, we show by direct calculation of mean values how to recover the ATET induced by the treatment. We need the following quantities:

Estimated results can be synthesized in the following 2x2 matrix

| |

Control |

Treated |

| Pre-Treatment |

14.2 |

12.6 |

| Post-Treatment |

14.48 |

18.2 |

Therefore, we obtain the ATET as the difference of the two differences:

Clearly, the above calculation does not tell us how “good” the computed ATET is from an inferential point of view. In other words, 5.32 has no CI around it or P-values. That’s why we must re-obtain the result following a route that allows to introduce inferential elements. Now we estimate ATET with the OLS after the creation using the above defined D1, D2 and TRET = D1× D2. (reported in small letters in the Table below). We run the OLS (with the option of robust SE) regression of equation (1) and obtain the results reported below.

Then, the estimated ATET is 5.32, which, according to the t-test reported in the table, is statistically significant at any level. Recall that TRET is the D1×D2 dummy variable.

We can interpret the above estimated coefficients as it follows:

The estimated Constant = 14.2 (with a p-value smaller than 0.05) is the mean value of the Consumption in the control group in 2010 (i.e. before the treatment). We can compare it with the result obtained from the numerical calculation reported above. The two figures coincide.

If we sum the coefficient Constant and the d2 coefficient, i.e. if we calculate 14.2 + (– 1.6), we obtain 12.6. This is the expected Consumption of the control group in 2011, i.e. during the year of treatment.

If we sum the coefficient Constant and the d1 coefficient, i.e. if we calculate 14.2 + 0.28 = 14.48, we obtain the mean value of the Consumption in the treatment group in 2010, i.e. before the treatment.

The estimated TRET = 5.32 is the (statistically significant) treatment effect. Treated units increase their average consumption by 5.32 euros with respect to untreated individuals.

In formula, we may write, after indicating differences with the symbol Δ, the calculation of ATET as

As it was stressed above, equation (1) is estimated with OLS under the robust SE option, which allows adjusting the model-based standard errors using the empirical variability of the model residuals which are the difference between observed outcome and the outcome predicted by the statistical model. The motivation of this choice is that as shown by Bertrand, Duflo, and Mullainathan (2004) the standard errors for DiD estimates are inconsistent if they do not account for the serial correlation of the outcome of interest. For a more complete discussion, see Cameron and Miller (2015) and MacKinnon (2019) and the references therein.

Generalising our discussion beyond the 2-group example studied above, we may stress that the response variables under investigation usually vary at the group and time levels, and so it makes sense to correct for serial correlation. Bertrand, Duflo, and Mullainathan (2004) show that using cluster–robust standard errors at the group level where treatment occurs provides correct coverage in the presence of serial correlation when the number of groups is not too small. Bester, Conley, and Hansen (2011) further show that using cluster–robust standard errors and using critical values of a t distribution with G − 1 degrees of freedom, where G is the number of groups, is asymptotically valid for a fixed number of groups and a growing sample size. In other words, consistency does not require the number of groups to be arbitrarily large, that is, to grow asymptotically. Cluster–robust standard errors with G−1 degrees of freedom are the default standard errors in many sw performing DiD analysis.

Hence, we could still obtain reliable standard errors even when the number of groups is not large. But what about data with a very small number of groups? Cluster–robust standard errors may still have poor coverage when the number of groups is very small or when the number of treated groups is small relative to the number of control groups. For cases where the number of groups is small,

Stata sw provides three alternatives. In what follows I reproduce the description of the alternatives provided by Stata to deal with the issue (

https://www.stata.com/manuals/tedidintro.pdf.). The first alternative is to use the wild

cluster bootstrap that imposes the null hypothesis that the ATET is 0. Cameron, Gelbach, and Miller (2008) and MacKinnon and Webb (2018) show that the wild cluster bootstrap provides better inference than using cluster–robust standard errors with t(G − 1) critical values. The second alternative comes from Imbens and Kolesar´ (2016), who show that with a small number of groups, you may use

bias-corrected standard errors with the degrees of freedom adjustment proposed by Bell and McCaffrey (2002). For the third alternative, one may use

aggregation type methods like those proposed by Donald and Lang (2007); they show that their method works well when the number of groups is small but the number of individuals in each group is large.

When the disparity between treatment and control groups is large, for example, because there is only one treated group or because the group sizes vary greatly, cluster–robust standard errors and the other methods mentioned above underperform. Yet the bias-corrected and cluster–bootstrap methods provide an improvement over the cluster–robust standard errors.

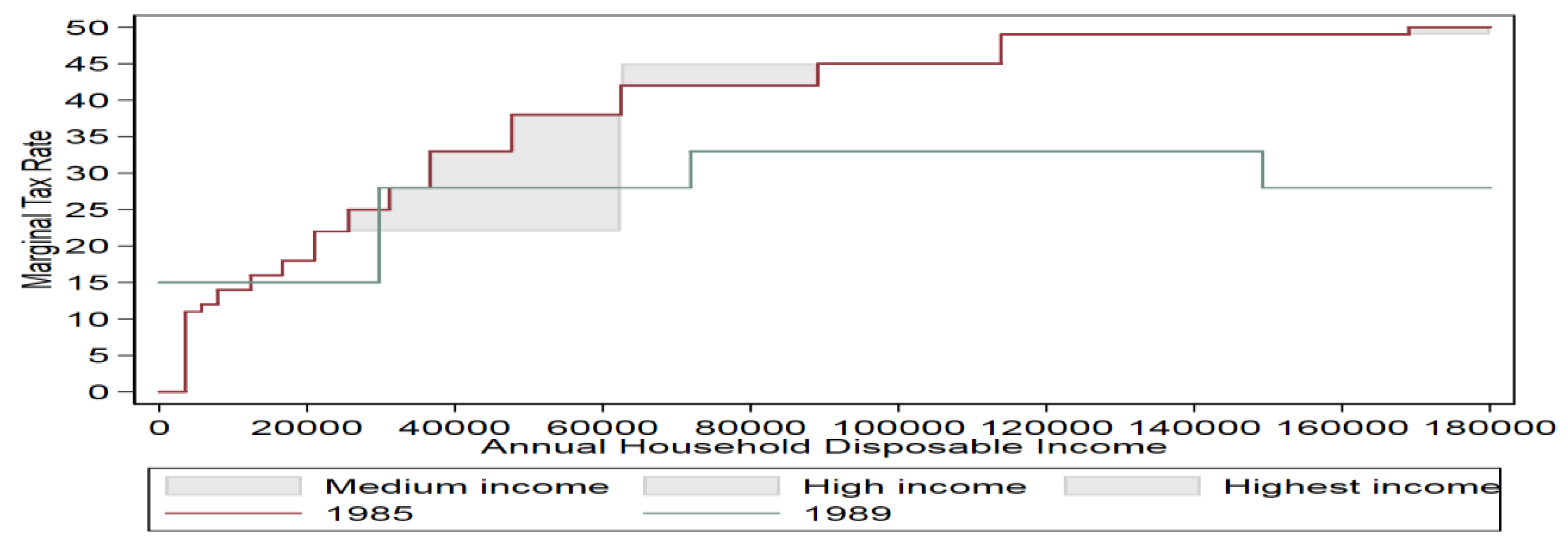

3.2. Example n.2

Use the data of

Table 3 in the Appendix (stuck in panel data form, i.e. in the version that is always recommended is defined by individual identity and time indicator) to generate a working data set for the future application of DiD. Answer the following “trivial” questions. How many units belong to the panel? By looking at D2, say how many units are treated Are the latter treated in the same years? What does D1 indicate? How is the dummy whose estimated coefficient corresponds to ATET obtained?

Assume the treatment is introduced at the end of year 2010 (look at the dummies). Write the FE panel data version of equation (1) with time and individual effects and estimate both ATET and the Time Effect. Using any graph routine that you may know, show that the parallel trend exists (graphically).

Figure 3 below shows the requested plots.

If you cannot produce the above plots using your SW, try to interpret those provided above. Yet, never forget that statistical inference is not a variant of “observing panoramic views” however elaborated they might be presented. In any future analysis that you will perform, please recall that the presence of parallel trends cannot be diminished and debased to a mere matter of good optical observation, however sophisticated the plots can be.

As one can see for both groups, there was an increase in the mean of the outcome response variable after 2010. Therefore, the increase in the treatment group cannot be attributable entirely to the tax treatment (see section 1). Yet, the deviation from a common trend was more sizable from the treatment group and the difference may indicate the effect of the treatment. The example shows how the DiD strategy relies on two differences. The first is a difference across time periods. Separately for the treatment group and the control group, we compute the difference of the outcome mean before and after the treatment. This across-time difference eliminates time-invariant unobserved group characteristics that confound the effect of the treatment on the treated group. But eliminating group-invariant unobserved characteristics is not enough to identify an effect. There may be time-varying unobserved confounders with an effect on the outcome mean, even after we control for time-invariant unobserved group characteristics. Therefore, we incorporate a second difference—a difference between the treatment group and the control group. DID eliminates time-varying confounders by comparing the treatment group with a control group that is subject to the same time-varying confounders as the treatment group. The reader can evaluate the above statements by reproducing with the data used in this estimation the differences calculated in section 3.

The ATET is then consistently estimated as a one parameter in a liner OLS equation by differencing the mean outcome for the treatment and control groups over time to eliminate time-invariant unobserved characteristics and also differencing the mean outcome of these groups to eliminate time-varying unobserved effects common to both groups.

4. ATET vs ATE

In the previous sections, we have used the acronyms ATET to indicate the estimation of the average causal effect on treated units when our data set includes both pre-treatment and post-treatment observations. It should not be confused with the Average Treatment Effect (ATE) which measures the effect of a treatment on a group of units estimated when we have observations recorded only for the after-treatment period (we do not have pre-treatment observations). Yet, we would like to know if the treatment has an effect on the response variable y of the treated vs untreated units. In an ideal world, we would observe y when a subject is treated (which we denote in what follows as y1), and we would observe y when the same subject is not treated (which we denote as y0). If the only difference in the data generation process of treated and untreated responses is the presence or absence of the treatment, we could average the difference between y1 and y0 across all the subjects in our dataset to obtain a measure of the average impact of the treatment. However, this ideal experiment setting is almost never available because we cannot observe a specific subject having received the treatment and having not received the treatment. When for instance the response is the level of consumption, and the treatment is the presence or the absence of a consumption tax for a specific group of consumers it is impossible to observe the consumers’ expenditure under both treatment (the presence of the tax) and absence of the treatment (no taxes). As a result, we cannot estimate individual-level treatment effects because of a missing-data problem. Econometricians have developed potential-outcome models to overcome this problem. Potential-outcome models bypass this missing-data problem and allow us to estimate the distribution of individual-level treatment effects. A potential-outcome model specifies the potential outcomes that each individual would obtain under each treatment level, the treatment assignment process, and the dependence of the potential outcomes on the treatment assignment process. These models are beyond the purpose of this DiD Review.

To illustrate the difference between ATE and ATET estimates, we follow Cameron and Trivedi (2005, p. 866). Define

the above difference between the response variable in the treated and untreated states. Back to

Figure 1 one immediately realises that Δ cannot be observed (Group 2 after the treatment). Then we define

whereas

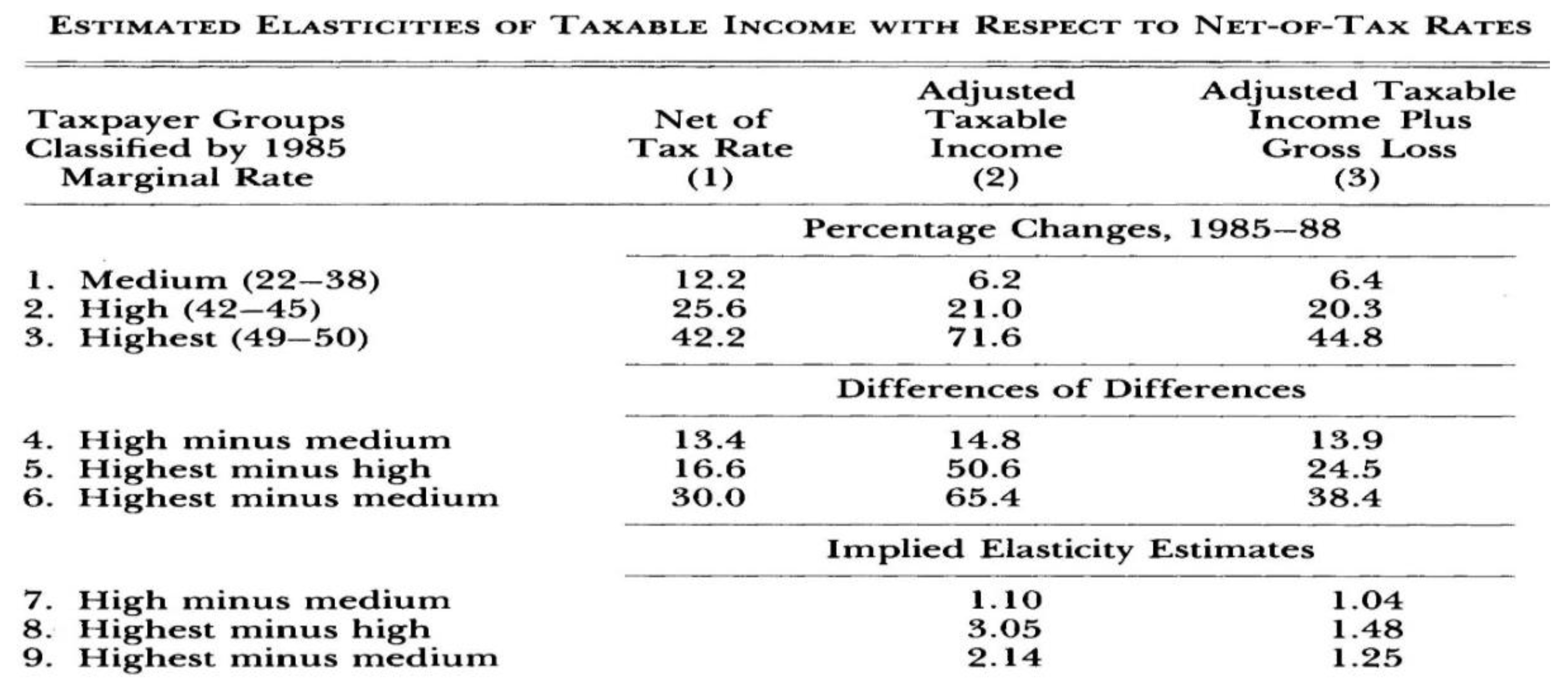

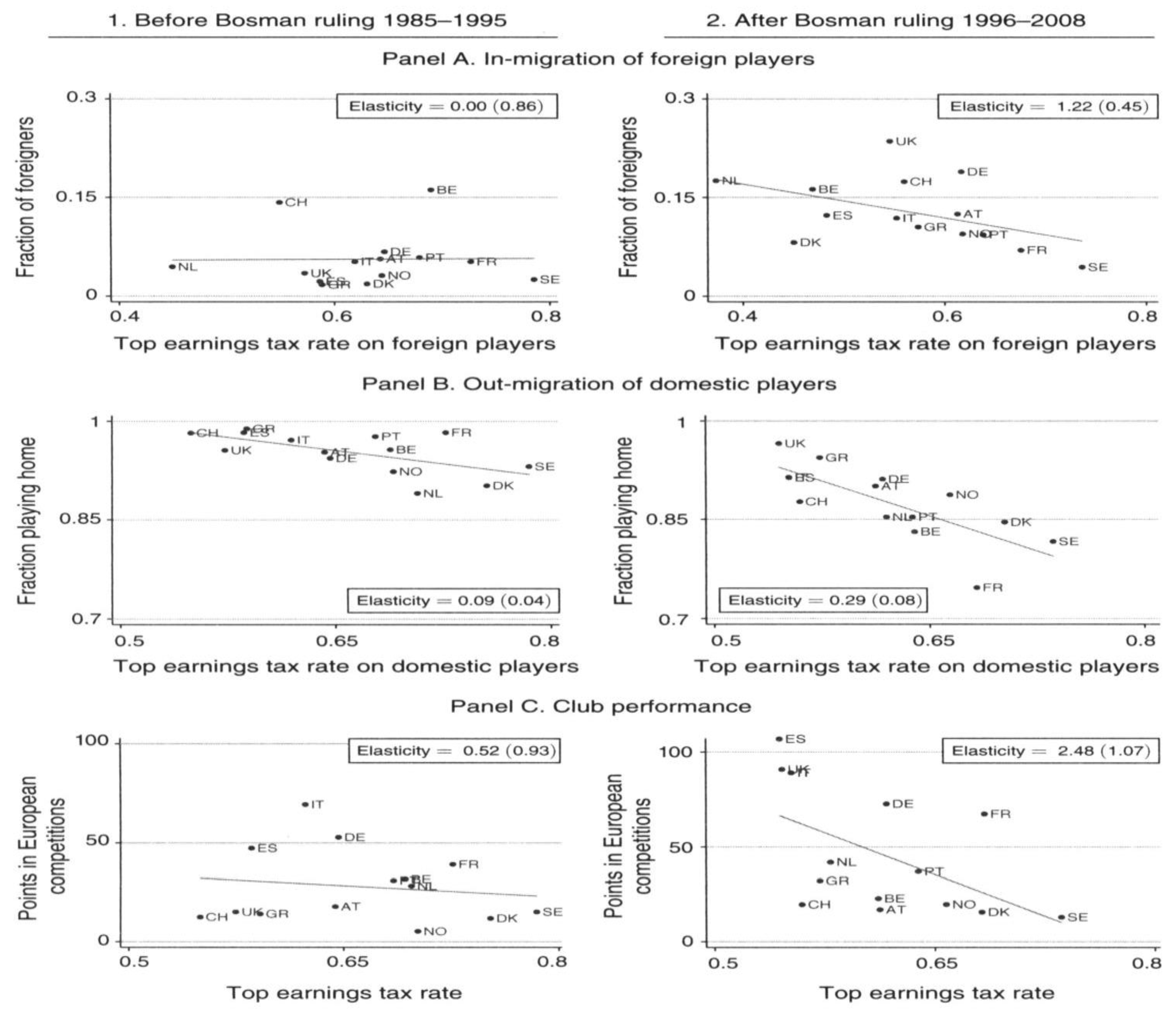

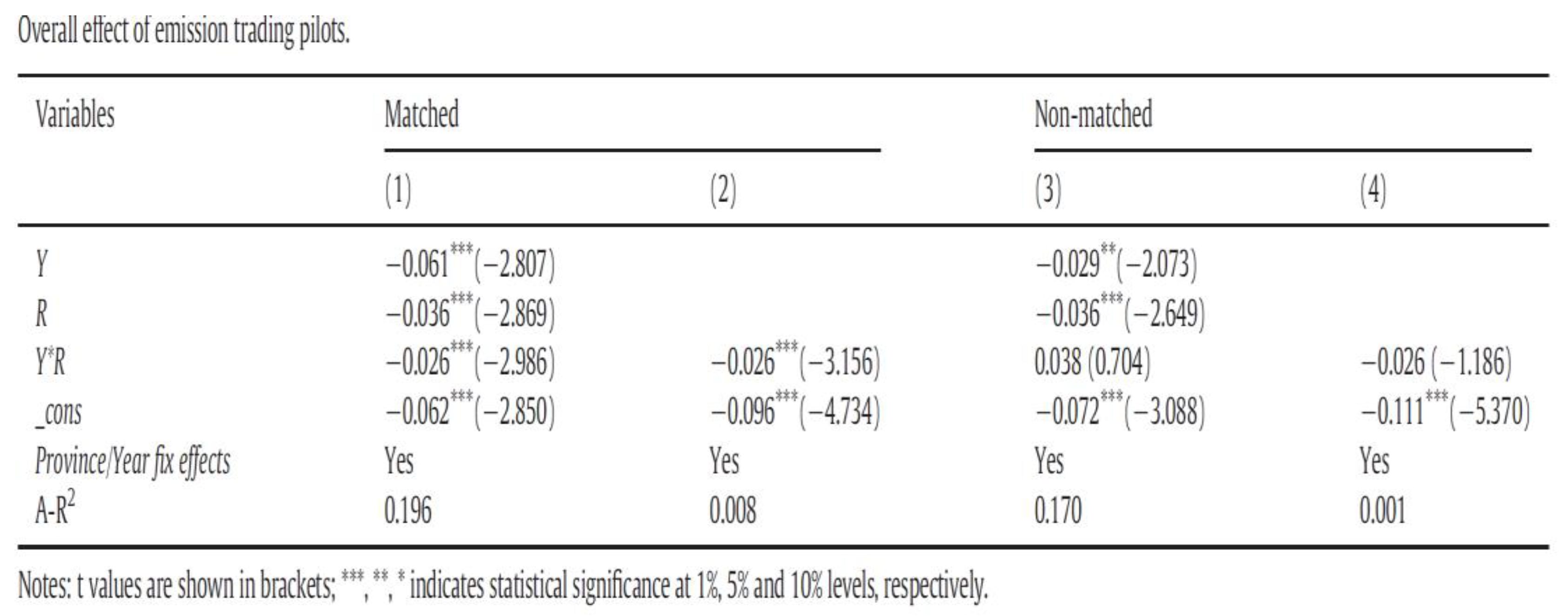

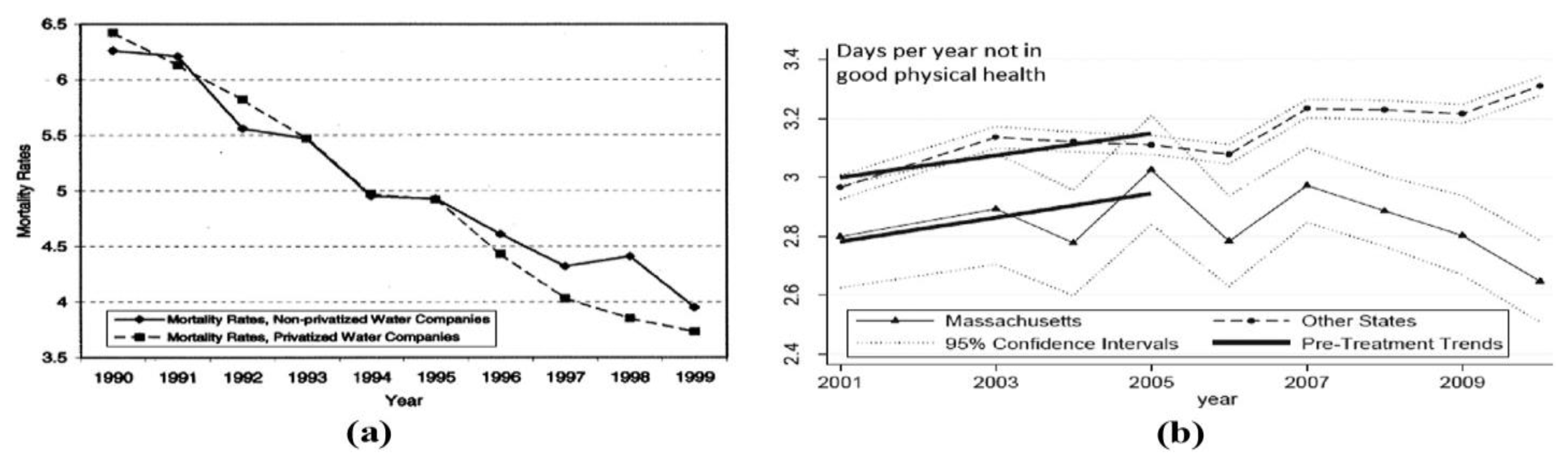

With the sample analogues (using the hypothesis of section 1):