Submitted:

03 August 2025

Posted:

04 August 2025

Abstract

Keywords:

1. Introduction

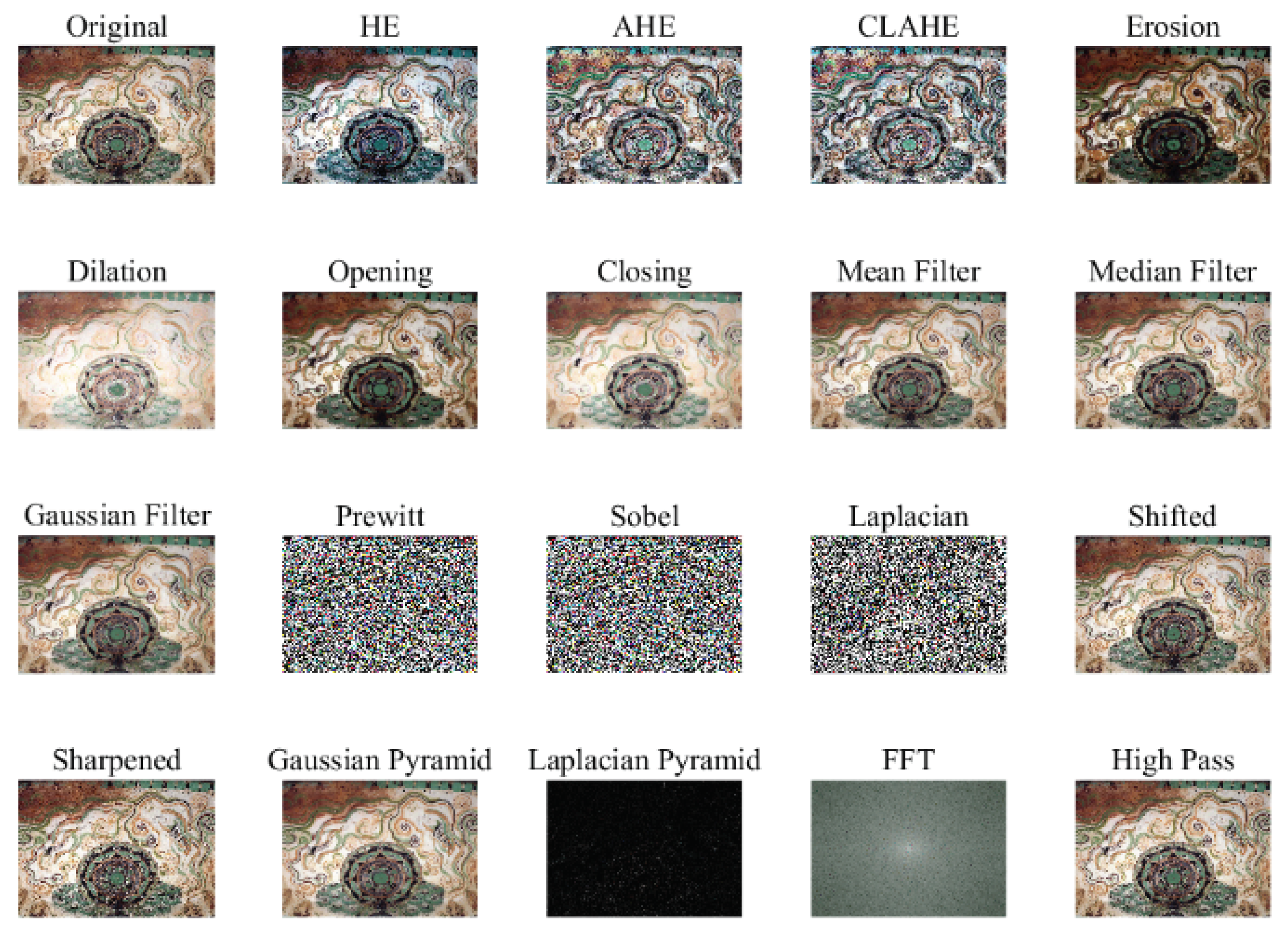

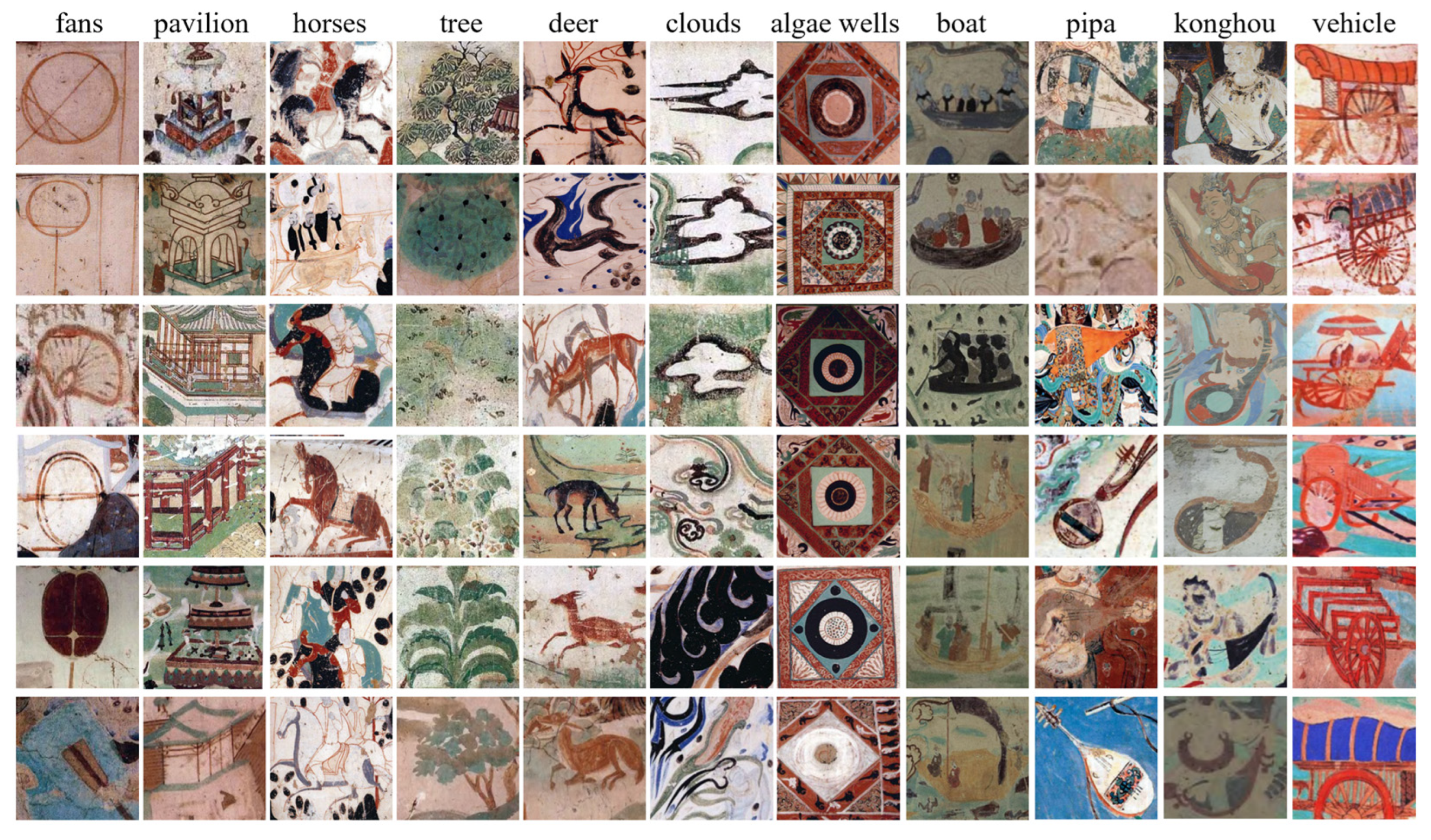

- Dataset Construction: A specialized dataset for Dunhuang grotto murals was compiled, encompassing 20 distinct classes of common mural art features. Rigorous evaluation procedures ensured the inclusion of only high-quality images, which were subsequently annotated manually to identify key elements within the mural artworks.

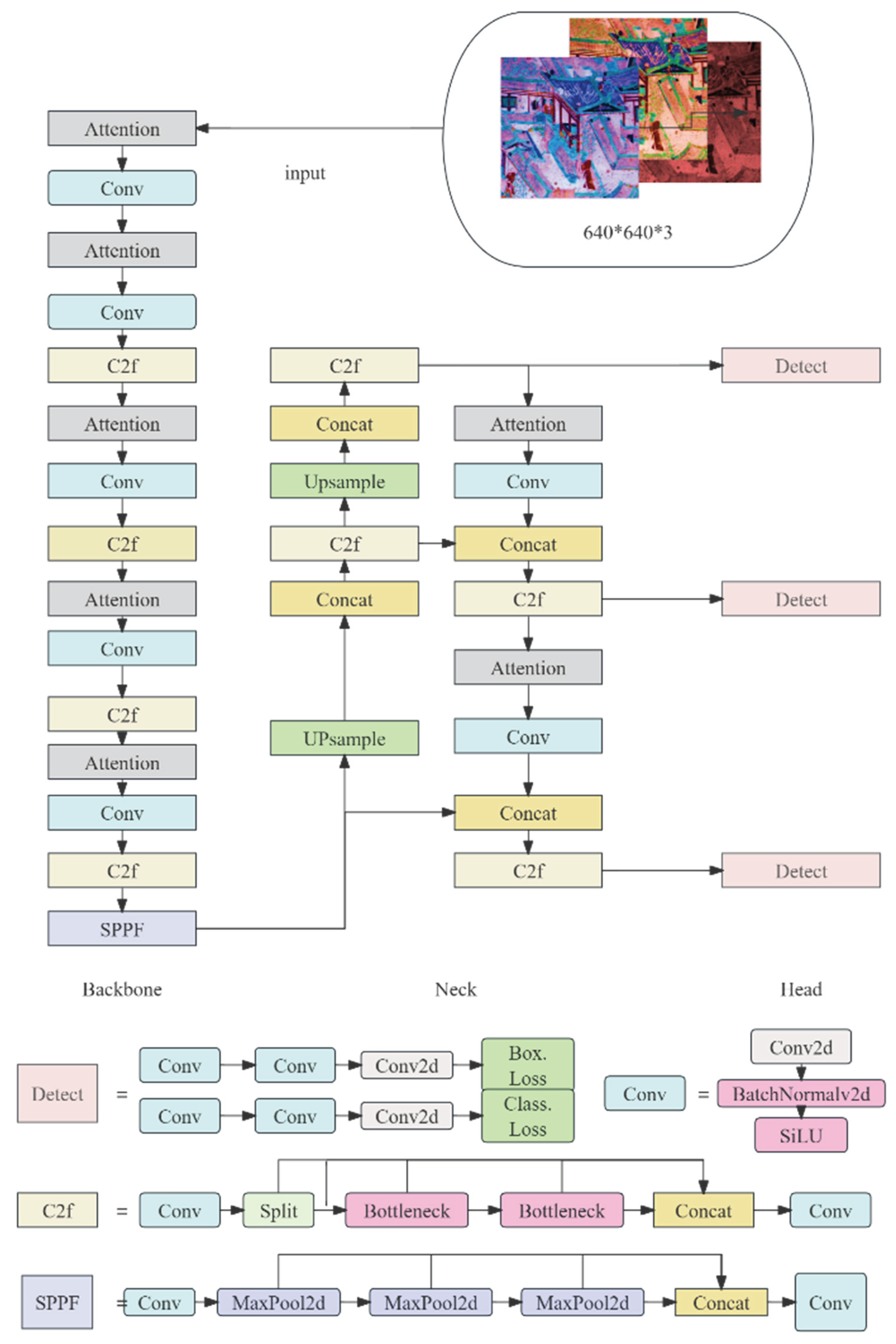

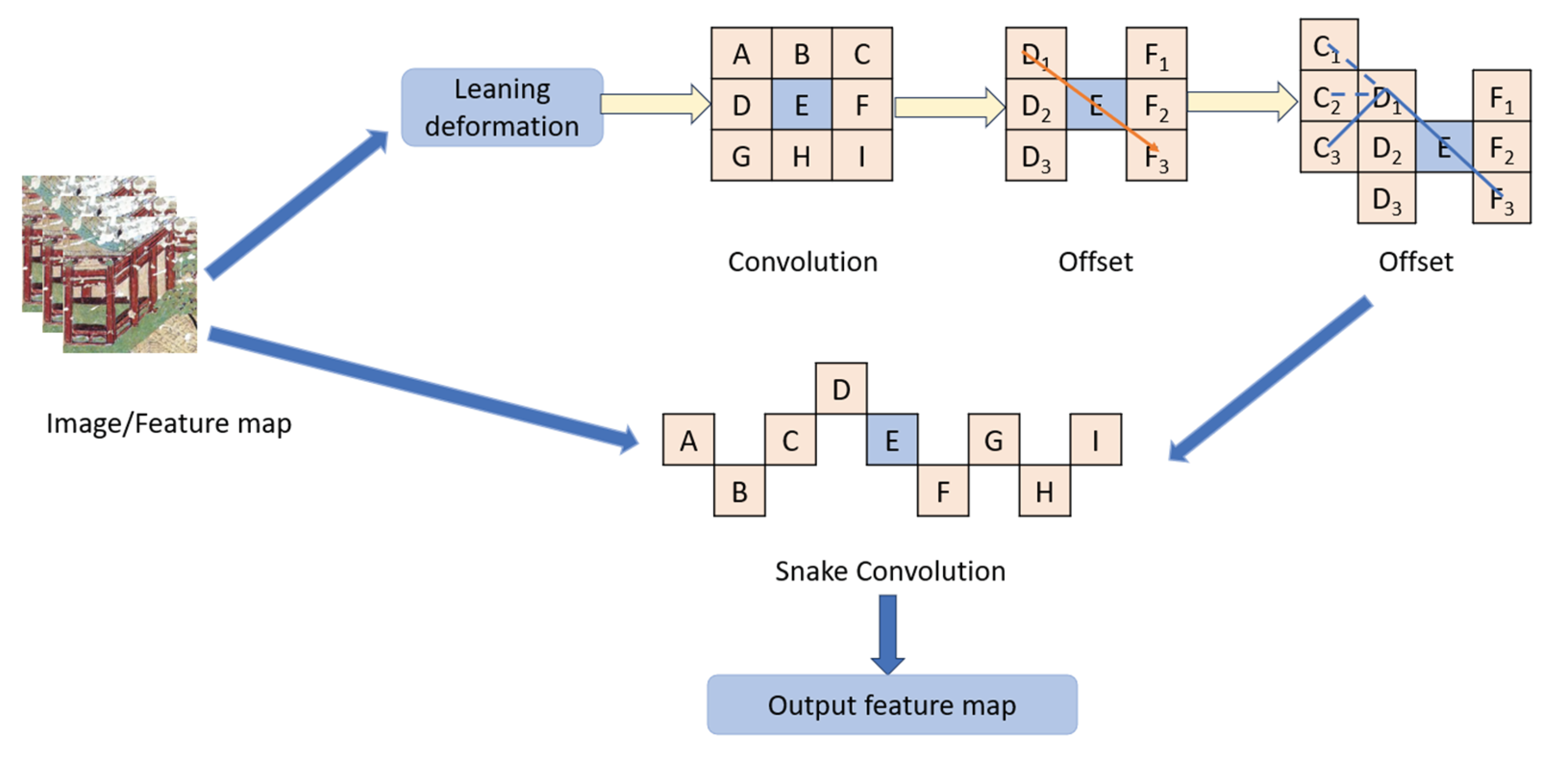

- Model Enhancement: Building upon the original YOLOv8 architecture, we incorporated DySnake Conv, a module known for its sensitivity to elongated topologies. This modification significantly improved the detection accuracy of mural art features, especially those with irregular shapes and elongated structures.

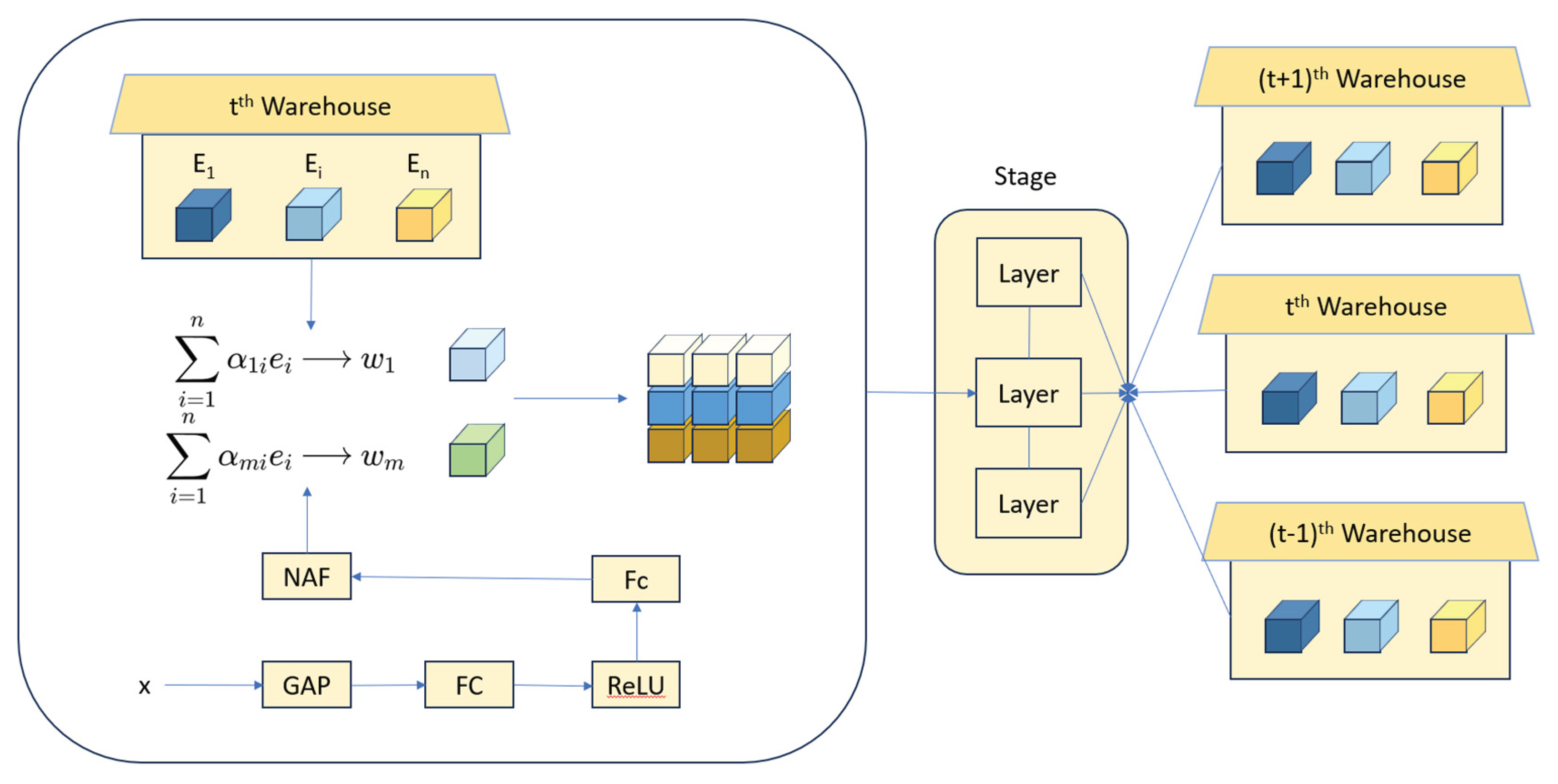

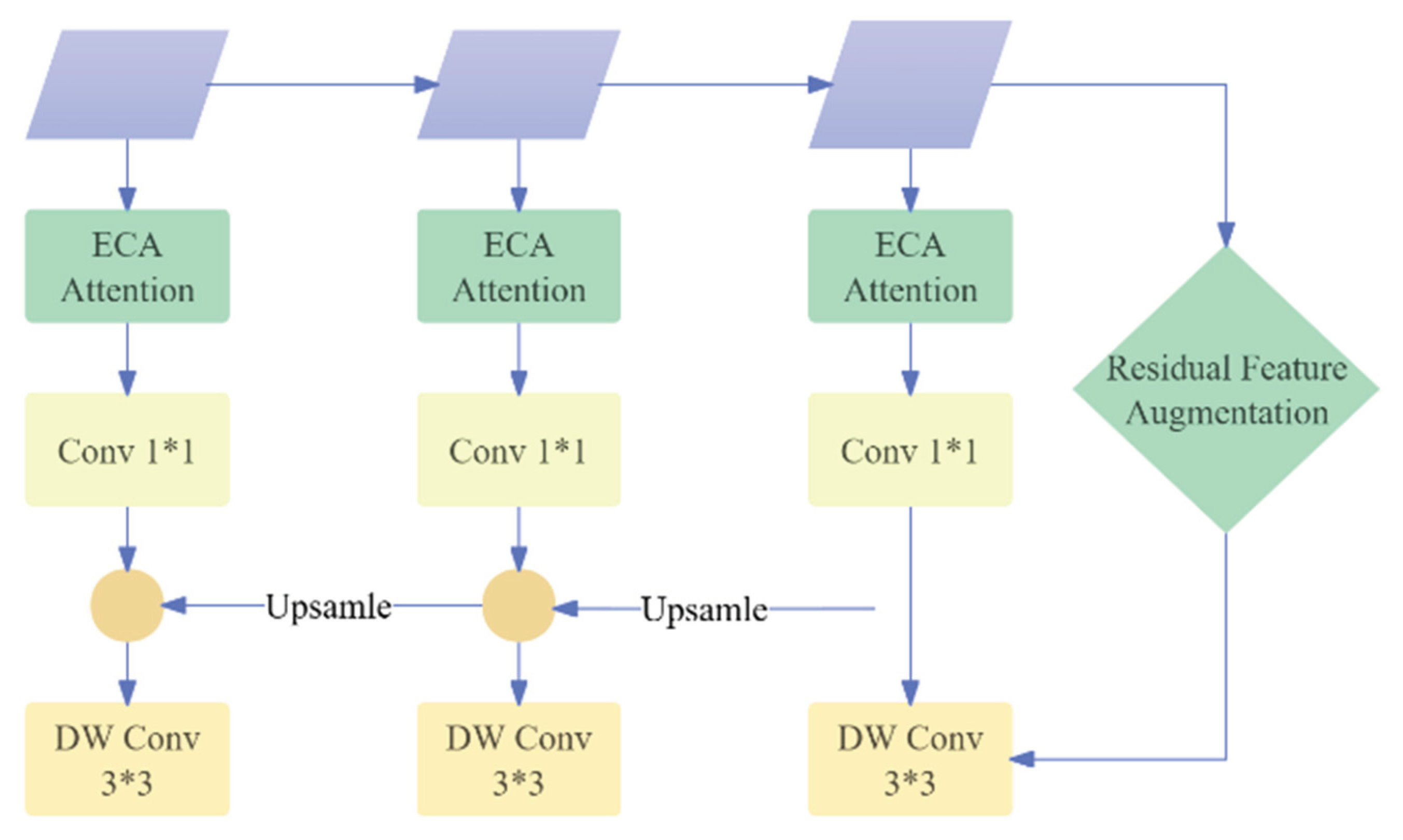

- Integrated Approach: Our method integrates an active target detection module, three-channel spatial attention mechanisms, and the dynamic convolution technique from Kernel Warehouse. These innovations reduce model parameters, mitigate overfitting, and alleviate computational and memory demands. Furthermore, this strategy substantially enhances the model’s ability to accurately and reliably detect mural art elements.

2. Materials and Procedures

2.1. The YOLOv8 Model’s Architecture

2.2. Dynamic Snake Convolution

2.3. Kernel Warehouse Dynamic Convolution

2.4. Lightweight Residual Feature Pyramid Network

3. Experiment and Results

3.1. Hardware and Software Configuration

| Name | Related Configuration |
|---|---|
| Operating system | Windows 11 (64-bit) |
| CPU | Intel(R) Core(TM) i7-14700HX |
| GPU | NVIDIA GeForce RTX 3050 |
| Software and environment | PyCharm 2021.3, Python 3.8, PyTorch 1.10 |

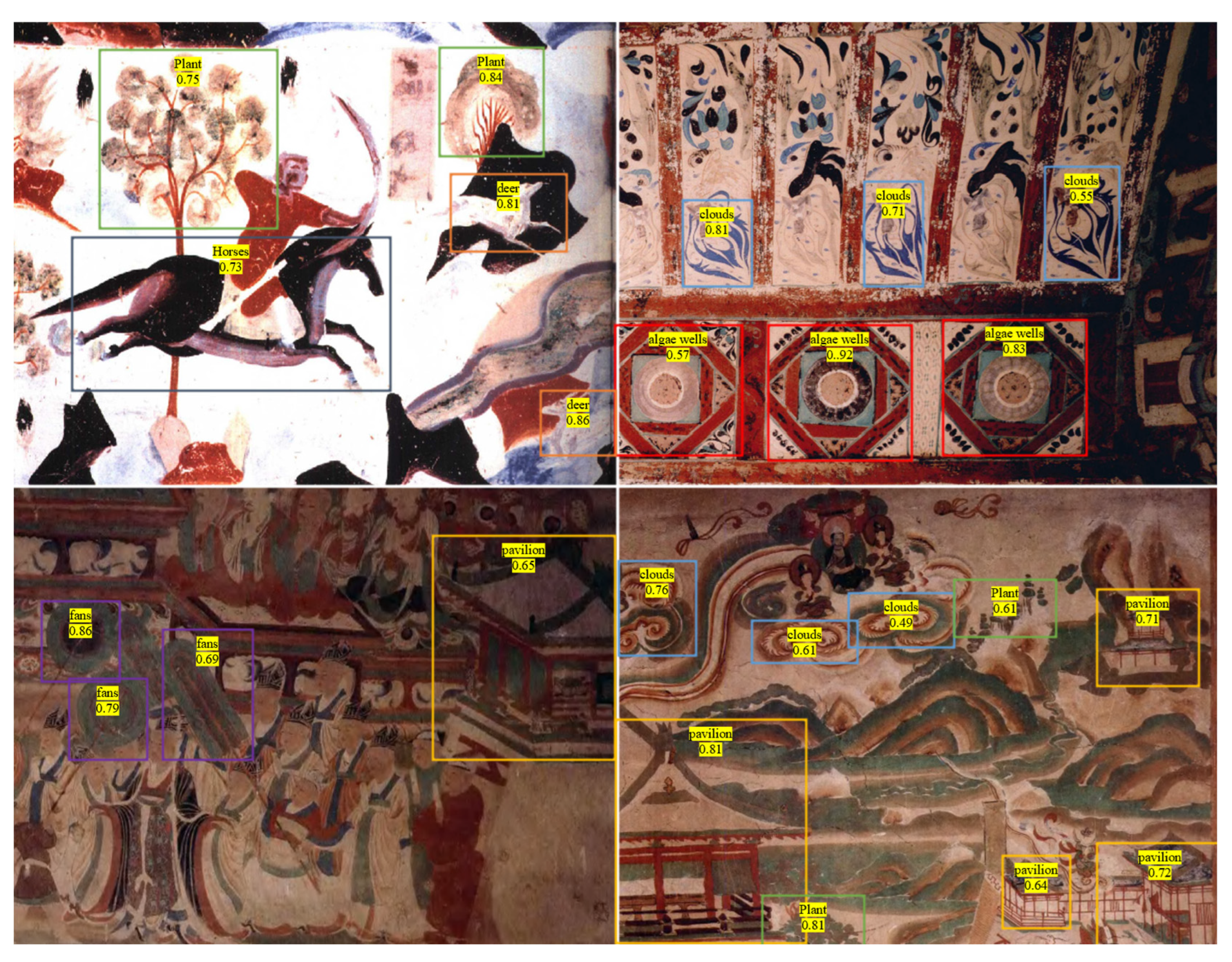

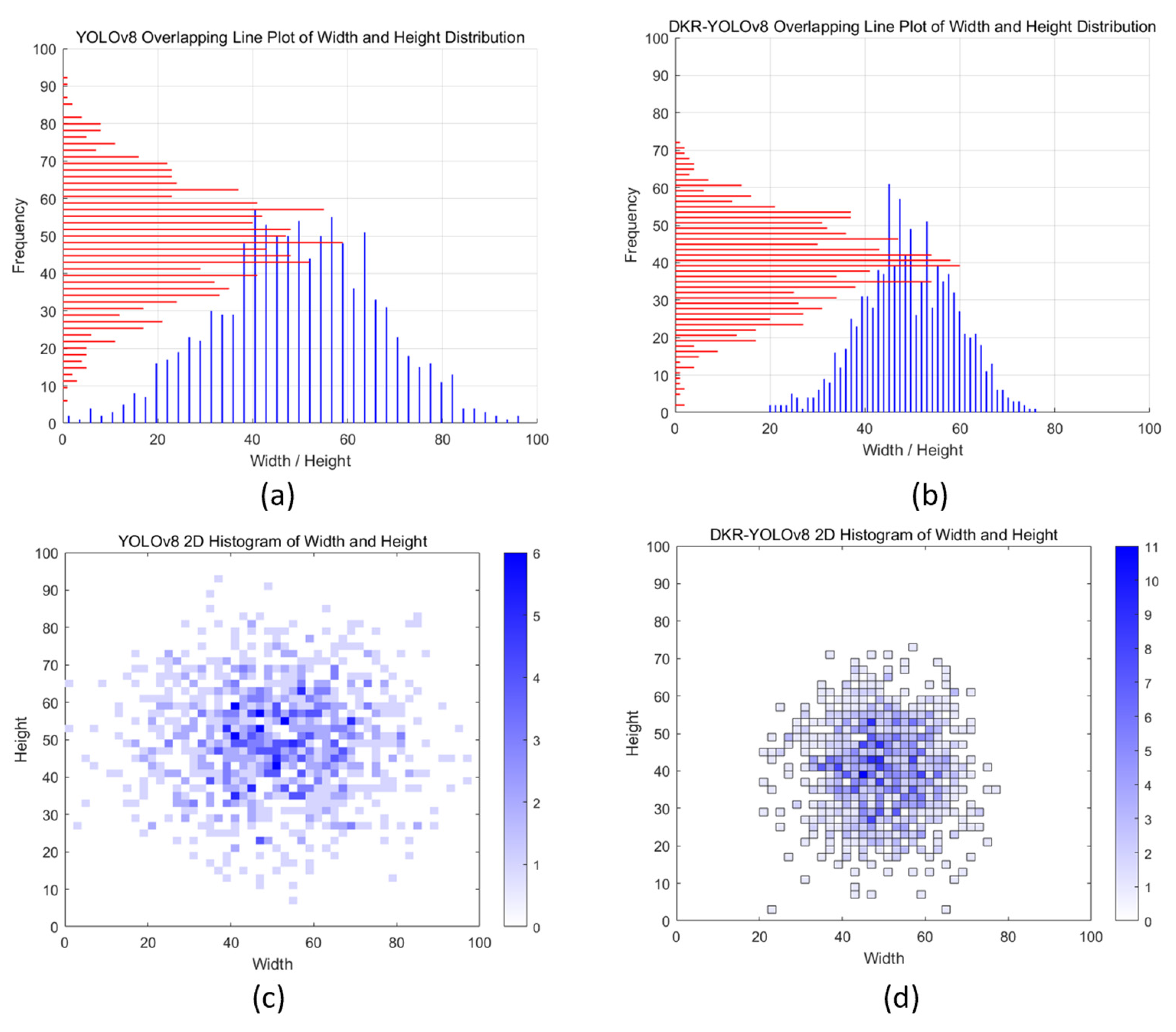

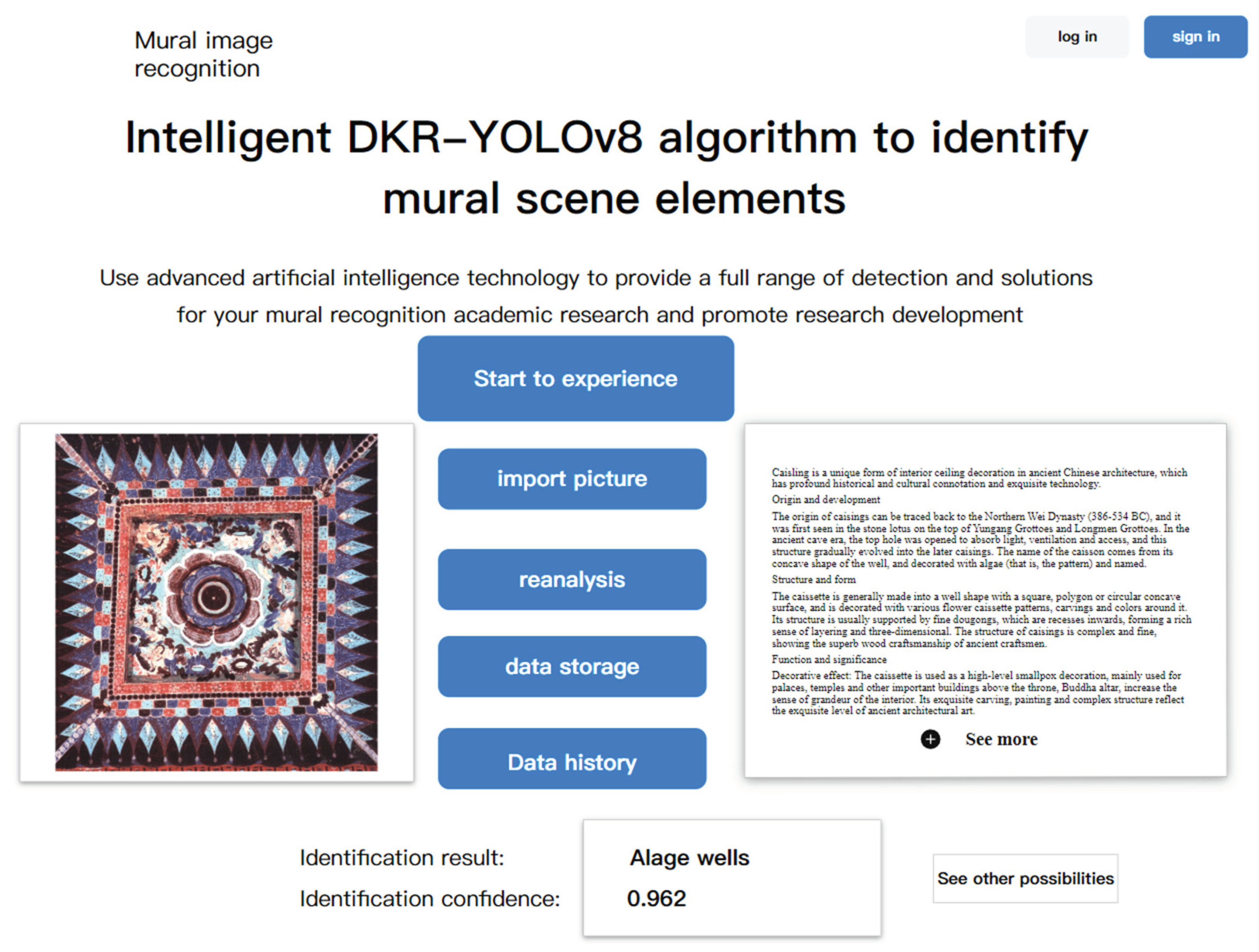

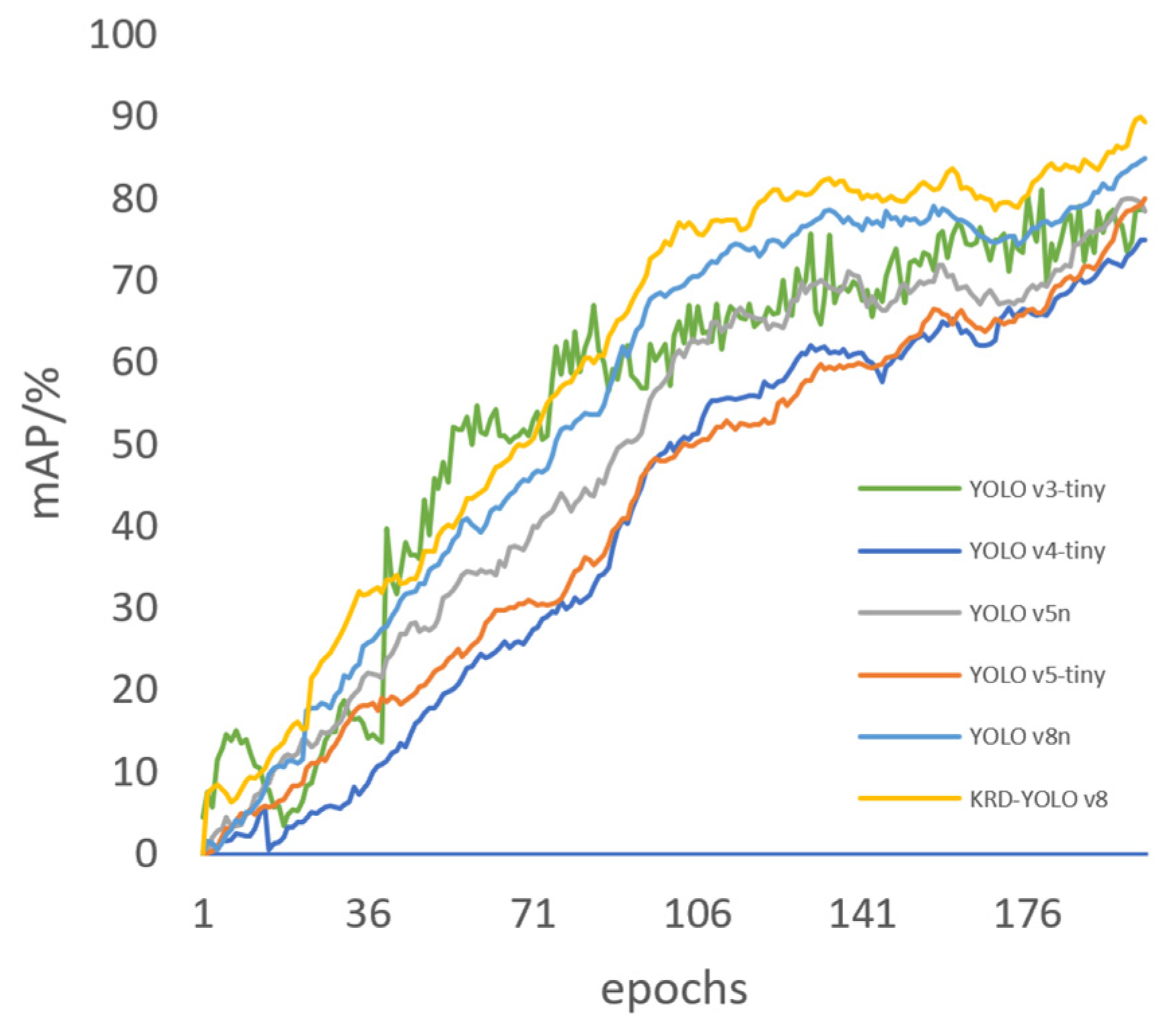

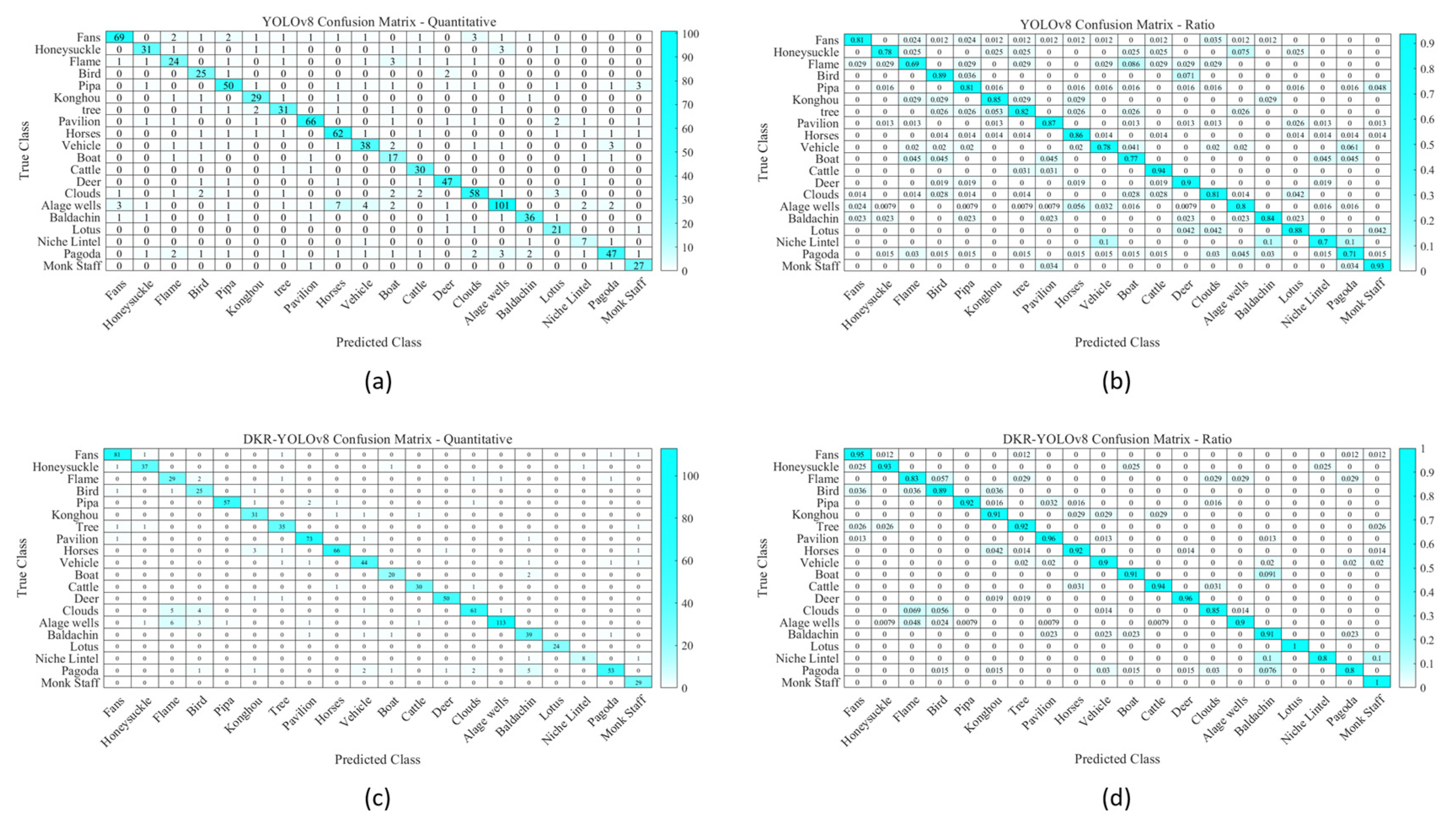

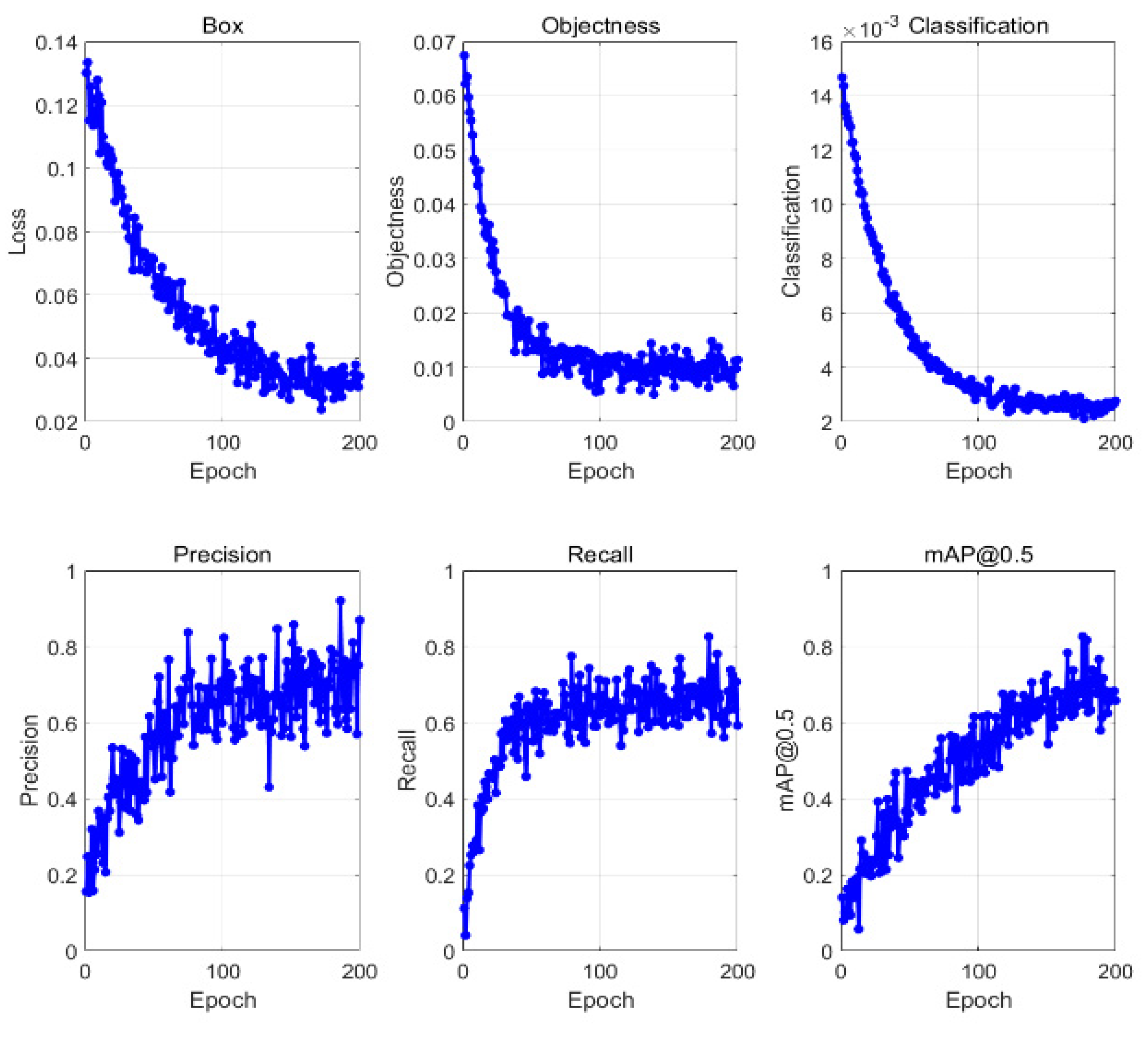

3.2. Recognition Results

3.3. Test Results on the Mural Dataset

| Model | P/% | R/% | mAP/% | F1/% | FPS |
|---|---|---|---|---|---|
| YOLOv3-tiny | 79.2 | 79.6 | 81.4 | 78.8 | 557 |
| YOLOv4-tiny | 81.4 | 74.8 | 82.6 | 78.1 | 229 |
| YOLOv5n | 80.1 | 75.2 | 82.3 | 77.2 | 326 |
| YOLOv7-tiny | 81.3 | 73.8 | 81.2 | 76.9 | 354 |
| YOLOv8 | 78.3 | 75.4 | 80.6 | 77.2 | 526 |
| DKR-YOLOv8 | 81.6 (0.2%↑) | 80.9 (1.6%↑) | 88.2 (6.8%↑) | 80.5 (2.1%↑) | 592 (6.2%↑) |
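For readers who want to relate the P, R, and F1 columns, the following is a minimal sketch of the standard F1 definition, the harmonic mean of precision and recall. It assumes F1 is computed from the aggregate precision and recall; the function name `f1_score` is illustrative and not taken from the paper. If the reported F1 values were averaged per class, they need not equal this aggregate value.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (in %) copied from the DKR-YOLOv8 row of the table above.
dkr_precision, dkr_recall = 81.6, 80.9
dkr_f1 = f1_score(dkr_precision, dkr_recall)
print(f"F1 from aggregate P and R: {dkr_f1:.2f}%")
```

The same function can be applied to any other row of the table to compare the aggregate value with the reported F1.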

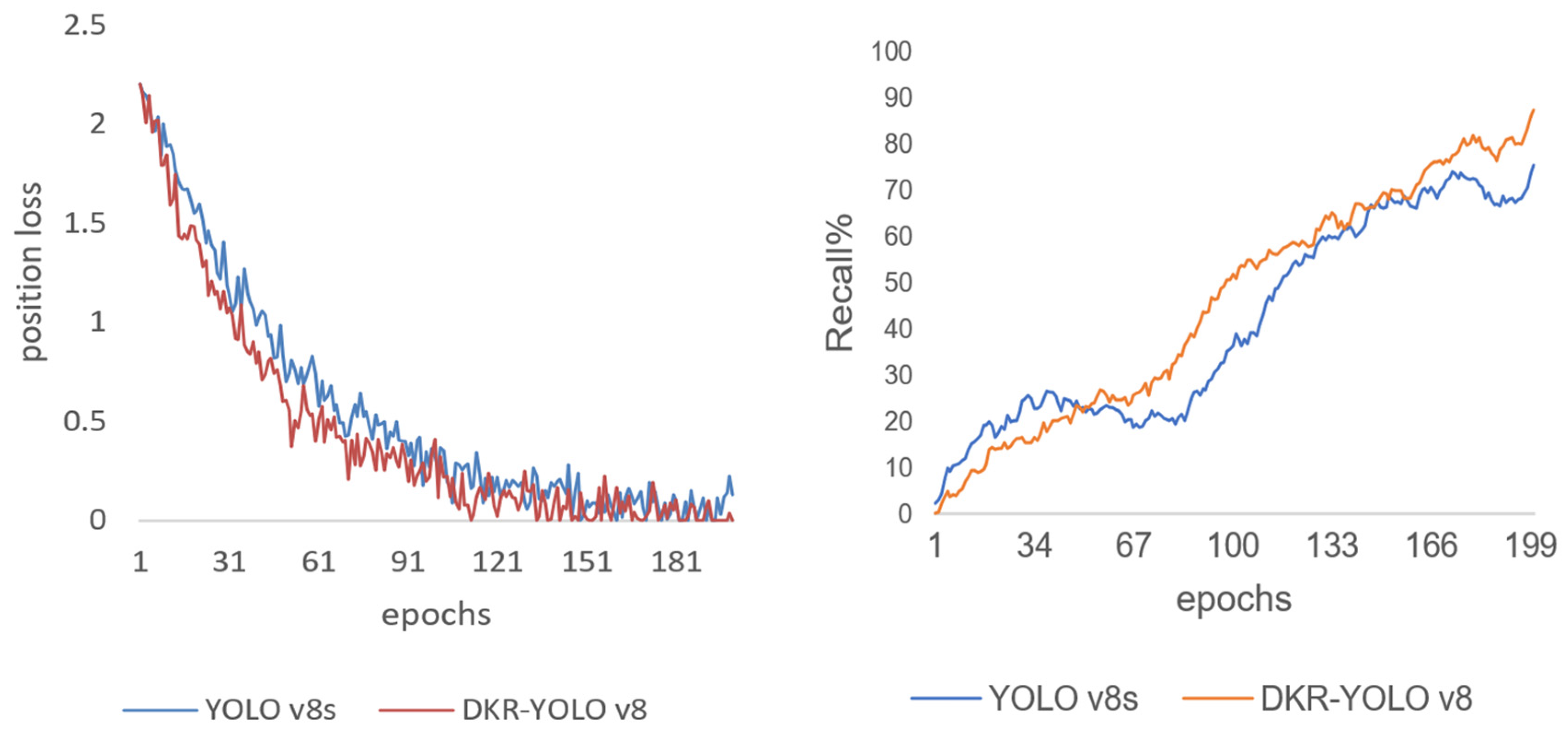

3.4. Ablation Experiment

4. Conclusions

4.1. Research Result

- The creation of a large dataset comprising 20 diverse types of mural images has been a significant milestone in our study. This dataset serves as a robust foundation for validating the effectiveness of our proposed technique in environmental monitoring applications. By encompassing a wide variety of mural images, the dataset ensures that our algorithm can adapt to the diverse visual qualities and challenges present in real-world scenarios. This extensive collection not only enhances the reliability of our technique but also demonstrates its potential for application in specialized environmental surveillance systems, enabling more accurate and efficient mural monitoring across various contexts.

- The identification and analysis of mural images, particularly those from Dunhuang, present substantial challenges due to the diverse nature of the samples, intricate backgrounds, and the limited recognition accuracy of current YOLO detection techniques. To address these obstacles, we propose the DKR-YOLOv8 model, which integrates Kernel Warehouse dynamic convolution and Dynamic Snake Convolution. These enhancements collectively improve the feature extraction network by capturing finer and more nuanced image characteristics. Additionally, the model incorporates a Lightweight Residual Feature Pyramid Network, which improves detection accuracy and operational efficiency. These innovations enable the DKR-YOLOv8 model to achieve superior performance in recognizing and classifying mural images, surpassing the limitations of previous YOLO models.

- The enhanced DKR-YOLOv8 model delivers outstanding performance in mural image recognition, achieving a precision rate of 81.6%, recall rate of 80.9%, F1 score of 80.5%, mean average precision (mAP) of 88.2%, 13.1 GFLOPs, and a processing speed of 592 frames per second (FPS). Compared to other YOLO models, the DKR-YOLOv8 model excels in both accuracy and speed, making it an ideal choice for mobile applications targeting mural analysis in environmental settings.

4.2. Research Prospects

- Enhancing the Mural Database: The classification of images within the mural database faces challenges such as disputes over classification and a scarcity of comprehensive resources. These issues largely stem from the lack of large, publicly available mural image datasets. Furthermore, mural images are often subject to strict protection by local authorities, making their collection particularly difficult. Many mural images are concentrated in specific regions, sharing similar styles and content. However, their uneven distribution raises concerns about the reliability and representativeness of the collected data. To address these challenges, future research must include extensive fieldwork, close collaboration with local authorities, and the use of diverse references. The aim is to create a comprehensive, balanced, and extensive mural image dataset that accurately reflects the richness and diversity of ancient mural art.

- Addressing Data Imbalance in Neural Architecture Search: Data imbalance is a significant issue when employing neural architecture search algorithms for classification. This challenge often arises due to limitations in time and manpower, leading to datasets that are unevenly distributed across categories. To ensure effective and reliable classification, it is essential to construct a balanced dataset for ancient mural classification. This involves not only increasing the overall size of the dataset but also ensuring that each category of murals is adequately represented. A balanced dataset will enable more effective training of the algorithm, resulting in improved recognition accuracy and a deeper understanding of ancient mural art.

- Improving Recognition Accuracy and Handling Controversial Images: While significant improvements have been achieved in recognition accuracy for mural classification, challenges remain, particularly when addressing controversial mural images. These images often pose difficulties for the algorithm, leading to less satisfactory performance. To overcome this, future research should prioritize refining and enhancing the algorithm’s performance. This includes developing advanced feature extraction techniques capable of accurately capturing and analyzing the intricate characteristics of mural images, such as their content, style, and historical context. By focusing on these advancements, the algorithm will be better equipped to handle controversial images, resulting in more reliable and accurate classifications.

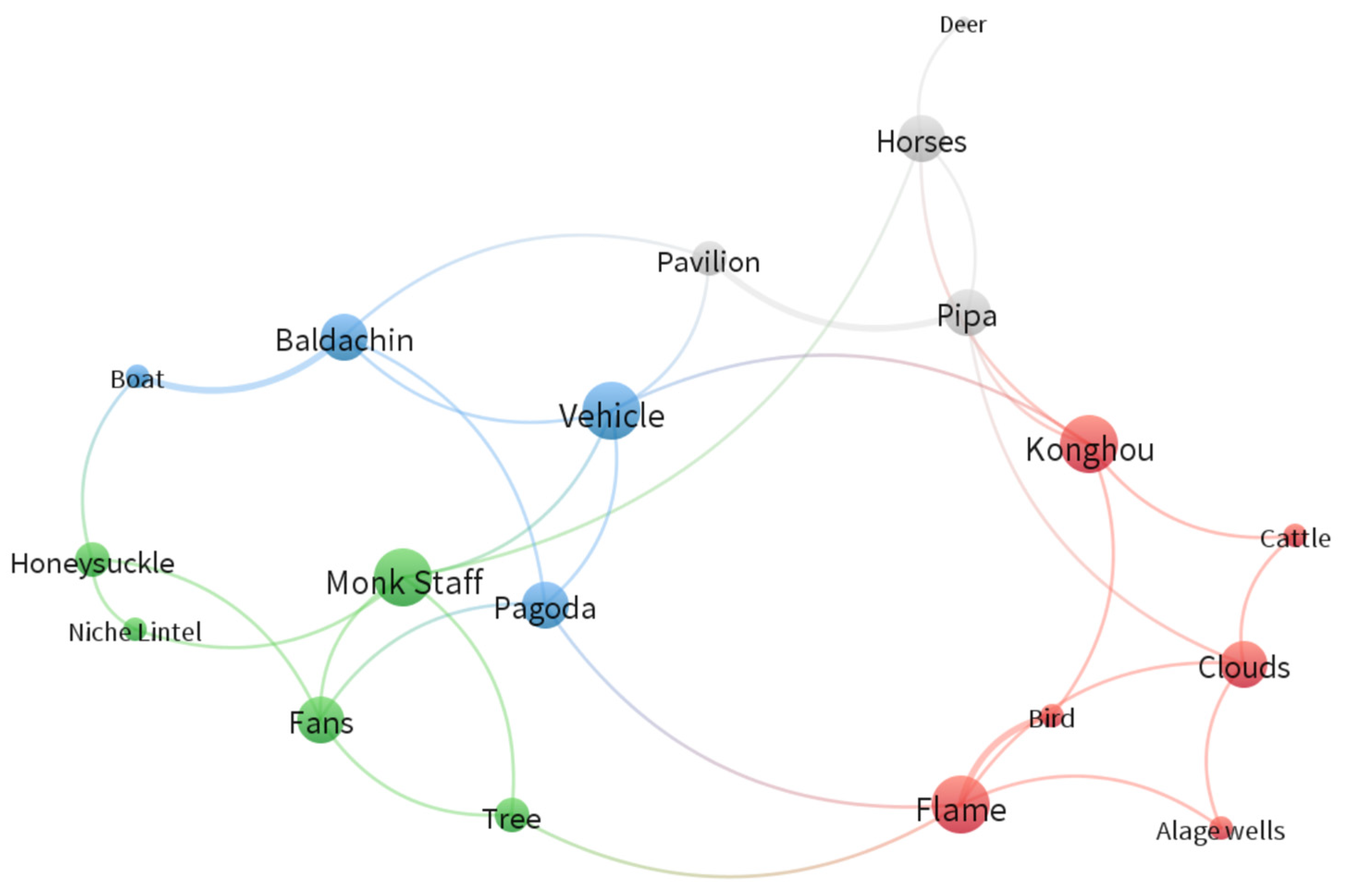

| Category | Name | Description | Sets |
|---|---|---|---|
| Human landscape | Fans | These fans not only have practical uses but also carry rich cultural meanings, embodying the artistic achievements of ancient craftsmen. | 85 |
| Human landscape | Honeysuckle | At the edges of Dunhuang grotto features such as caissons, flat tiles, wall layers, arches, niches, and canopies, honeysuckle patterns are used as edge decorations. | 40 |
| Human landscape | Flame | Flames in Dunhuang murals often appear as decorative patterns such as back lights and halos, symbolizing light, holiness, and power. Around religious figures like Buddhas and Bodhisattvas, the use of flame patterns enhances their holiness and grandeur. | 35 |
| Human landscape | Bird | Birds are common natural elements in Dunhuang murals. They add vivid life and natural beauty to the murals. | 28 |
| Human landscape | Pipa | As an important ancient plucked string instrument, the pipa frequently appears in Dunhuang murals, especially in musical and dance scenes. These pipa images not only showcase the form of ancient musical instruments but also reflect the music culture and lifestyle of the time. | 62 |
| Human landscape | Konghou | The konghou is also an ancient plucked string instrument and is a significant part of musical and dance scenes in Dunhuang murals. | 34 |
| Human landscape | Tree | Trees in Dunhuang murals, such as pine and cypress trees, often serve as backgrounds or decorative elements. They not only add natural beauty to the mural but also symbolize longevity, resilience, and other virtuous qualities. | 38 |
| Productive labor | Pavilion | Pavilions are common architectural images in Dunhuang murals. These architectural images not only display the artistic style and technical level of ancient architecture but also reflect the cultural life and aesthetic pursuits of the time. | 76 |
| Productive labor | Horses | Horses in Dunhuang murals often appear as transportation or symbolic objects, such as warhorses and horse-drawn carriages. These horse images are vigorous and powerful, reflecting the military strength and lifestyle of ancient society. | 72 |
| Productive labor | Vehicle | Vehicles, including horse-drawn carriages and ox-drawn carriages, are also common transportation images in Dunhuang murals. These vehicles not only showcase the transportation conditions and technical level of ancient society but also reflect people’s lifestyles and cultural habits. | 49 |
| Productive labor | Boat | While boats are not as common as land transportation in Dunhuang murals, they do appear in scenes reflecting water-based life. These boat images reflect the water transportation conditions and water culture characteristics of ancient society. | 22 |
| Productive labor | Cattle | Cattle in Dunhuang murals often appear as farming or transportation images, such as working cows and ox-drawn carriages. These cattle images are simple and honest, closely connected to the farming life of ancient society. | 32 |
| Religious activities | Deer | Deer in Dunhuang murals often symbolize goodness and beauty. In some story paintings or decorative patterns, deer images add a sense of vivacity and harmony to the mural. | 52 |
| Religious activities | Clouds | Clouds in Dunhuang murals often serve as background elements. They may be light and graceful or thick and steady, creating different atmospheres and emotional tones in the mural. The use of clouds also symbolizes good wishes such as good fortune and fulfillment. | 72 |
| Religious activities | Algae wells | Algae wells are important architectural decorations. Located at the center of the ceiling, they are adorned with exquisite patterns and colors. They not only serve a decorative purpose but also symbolize the suppression of evil spirits and the protection of the building. | 126 |
| Religious activities | Baldachin | Canopies or halos in Dunhuang murals may appear as head lights or back lights, covering religious figures such as Buddhas and Bodhisattvas and symbolizing holiness and nobility. | 43 |
| Religious activities | Lotus | The lotus is a common floral pattern in Dunhuang murals, symbolizing purity, elegance, and good fortune. It often appears below or around religious figures such as Buddhas and Bodhisattvas. | 24 |
| Religious activities | Niche Lintel | Niche lintels are the decorative parts above the niches in Dunhuang murals, often painted with exquisite patterns and colors. These niche lintel images not only serve a decorative purpose but also reflect the artistic achievements and aesthetic pursuits of ancient craftsmen. | 10 |
| Religious activities | Pagoda | Pagodas are important religious architectural images in Dunhuang murals. These pagoda images not only showcase the artistic style and technical level of ancient architecture but also reflect the spread and influence of Buddhist culture. | 66 |
| Religious activities | Monk Staff | The monastic staff is an implement commonly used by Buddhist monks and may appear as an accessory to monk figures in Dunhuang murals. As an important symbol of Buddhist culture, it adds a strong religious atmosphere to the mural. | 29 |
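The per-class counts in the table above can be summarized to gauge how unevenly the classes are represented. The sketch below copies the "Sets" column into a dictionary and derives the total, the largest-to-smallest ratio, and a set of inverse-frequency class weights. The weighting formula is a common heuristic assumed here for illustration; it is not described in the paper, and the variable names are hypothetical.

```python
# "Sets" counts copied from the dataset table above (class name -> count).
class_counts = {
    "Fans": 85, "Honeysuckle": 40, "Flame": 35, "Bird": 28, "Pipa": 62,
    "Konghou": 34, "Tree": 38, "Pavilion": 76, "Horses": 72, "Vehicle": 49,
    "Boat": 22, "Cattle": 32, "Deer": 52, "Clouds": 72, "Algae wells": 126,
    "Baldachin": 43, "Lotus": 24, "Niche Lintel": 10, "Pagoda": 66,
    "Monk Staff": 29,
}

num_classes = len(class_counts)
total = sum(class_counts.values())

# Most and least represented classes, and the ratio between them.
largest = max(class_counts, key=class_counts.get)
smallest = min(class_counts, key=class_counts.get)
imbalance_ratio = class_counts[largest] / class_counts[smallest]

# Assumed heuristic (not from the paper): weight = total / (num_classes * count),
# so rarer classes receive proportionally larger weights.
class_weights = {name: total / (num_classes * n) for name, n in class_counts.items()}

print(f"{num_classes} classes, {total} sets in total")
print(f"largest: {largest}, smallest: {smallest}, ratio: {imbalance_ratio:.1f}")
```

Weights of this kind could be passed to a class-weighted loss, although whether that helps on this dataset would need to be checked experimentally.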

| Model | Base model | DSC | KW | RE-FPN | P/% | R/% | F1/% | mAP/% | FLOPs (G) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| Model1 | YOLOv8 | | | | 77.9 | 77.9 | 75.2 | 83.5 | 28.4 | 529 |
| Model2 | YOLOv8 | ✓ | | | 81.6 | 73.9 | 78.8 | 80.8 | 27.7 | 868 |
| Model3 | YOLOv8 | | ✓ | | 77.3 | 74.8 | 78.7 | 81.3 | 26.9 | 640 |
| Model4 | YOLOv8 | | | ✓ | 84.0 | 83.2 | 84.5 | 82.1 | 14.2 | 474 |
| Model5 | YOLOv8 | ✓ | ✓ | | 80.9 | 74.8 | 75.9 | 82.0 | 27.9 | 669 |
| Model6 | YOLOv8 | ✓ | | ✓ | 80.6 | 79.8 | 76.5 | 86.2 | 13.4 | 539 |
| Model7 | YOLOv8 | | ✓ | ✓ | 80.6 | 81.4 | 84.3 | 78.9 | 15.0 | 524 |
| Model8 | YOLOv8 | ✓ | ✓ | ✓ | 81.6 | 80.9 | 80.5 | 88.2 | 13.1 | 592 |
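To make the ablation figures easier to compare, the following sketch computes how the full model (Model8) differs from the unmodified baseline (Model1) using the values in the table above. The helper `relative_change` and the dictionary layout are illustrative choices, not part of the paper.

```python
def relative_change(baseline: float, value: float) -> float:
    """Percentage change of `value` relative to `baseline`."""
    return 100.0 * (value - baseline) / baseline


# Values copied from the Model1 and Model8 rows of the ablation table above.
model1 = {"mAP": 83.5, "FLOPs_G": 28.4, "FPS": 529}
model8 = {"mAP": 88.2, "FLOPs_G": 13.1, "FPS": 592}

flops_change = relative_change(model1["FLOPs_G"], model8["FLOPs_G"])
fps_change = relative_change(model1["FPS"], model8["FPS"])
map_gain_points = model8["mAP"] - model1["mAP"]  # absolute percentage points

print(f"FLOPs: {flops_change:+.1f}%  FPS: {fps_change:+.1f}%  mAP: {map_gain_points:+.1f} points")
```

Reporting mAP as an absolute difference in percentage points and FLOPs and FPS as relative changes keeps the two kinds of comparison distinct.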

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).