1. Introduction

The use of automated sensors to collect data has grown extremely rapidly in recent years. Automated sensor data are now being used routinely for monitoring a wide range of systems, including human health, welfare and behaviour, the physical environment, wildlife, and agriculture. Automated sensors have the potential to massively increase the use of empirical data in decision making. This is partly because they are able to collect very large quantities of data, often at much lower cost than more traditional methods of data collection, and partly because they can collect data at a sufficiently high temporal resolution to feed into decision making in “real time”. In agriculture, numerous recent review papers discuss sensors in the areas of precision farming, smart farming, big data, and so on. Digital transformation/Smart farming for sustainable development is discussed in [

1], with an overview of how new precision farming technologies assist farmers in management/decision making, from which it is clear that artificial intelligence (AI) and machine learning (ML) play a critical role. Technologies are used to measure the environment (weather, GHG emissions), crops, soil, water and animals. In precision farming collected data is used for control (e.g. irrigation, fertilizer application, weeds, pests and diseases, feeding) and prediction for decision support (e.g. weather forecasting, crop and animal yields, ..). Other reviews [

2,

3] examine recent trends in precision farming for both crops and livestock and contain tables showing a range of technologies along with the main objectives. Many review papers discuss artificial intelligence applications [

3,

4], or focus on AI/Machine Learning [

5,

6], or Deep Learning [

7].

There are numerous recent reviews in the general area of precision livestock farming (PLF) [

8,

9,

10,

11,

12,

13,

14,

15] that discuss sensor data. Again, there are quite a few review papers that focus on machine learning/AI [

16,

17,

18,

19,

20]. [

21] suggests that much more work is needed to get to the stage of real-time auto-monitoring of livestock on farms whilst [

22] emphasises that development needs to be guided by livestock farmers’ needs enhancing (not substituting), farmers’ capabilities. Sensors used depend largely on the environments in which livestock are kept, with a major distinction between housed animals versus those that graze. They also depend on the practicality as well as the trade-off between cost and value of individual animals being monitored, versus animals being monitored in groups.

Because of the value, as well as high level of management and data collection for dairy cows, this area has the most extensive use of a range of sensors and research into their application with many review papers [

23,

24,

25,

26]. [

26] classifies the range of sensors used as ‘At Cow’ (includes wearable sensors such as accelerometers and Global Positioning System (GPS) collars as well as ingested), ‘Near Cow’ (sensors at fixed locations, includes feeding, weighing, sensors at feeding locations, imaging, and all remote real time sensors, such as Geographic Information Systems (GIS)), and ‘From Cow’ (includes milk measurements for example). By far the most established use of auto-monitoring in dairy is to detect estrous and/or calving [

27,

28,

29,

30,

31,

32,

33,

34,

35]. The most frequently used technology for this is accelerometers or pedometers, but other methods can be used, for example monitoring temperature, or 2-D image/video analyses, and there are studies showing that these methods generally work fairly well. There are also a range of methods for detecting lameness [

36,

37,

38,

39,

40,

41,

42,

43,

44,

45]. Objectives for which methods are less well-established include monitoring of dairy cows’ or calves’ health and well-being [

46,

47], including diagnosis of specific diseases [

34,

41,

48,

49,

50,

51,

52,

53]. Machine learning is predominant in papers [

19,

39,

41,

50,

51,

54,

55,

56] on using sensor data to predict events of interest, in individual dairy cows or calves.

The main distinction with precision livestock methods for beef cattle [

57] used for detecting calving [

58,

59,

60,

61], or health and welfare problems [

62,

63,

64], is the fact that they tend to be grazing, so sensors commonly used, often in combination, are accelerometers, Global Navigation Satellite System (GNSS) sensors and weather sensors. Sensors together with satellite imagery can be used to manage production and grazing more generally for ruminants on extensive systems [

65,

66]. Sensors used for sheep [

66,

67,

68,

69,

70] are also usually intended for use out in the field. As sheep are less valuable than cows, less expensive options are required for them [

70]. Objectives include detecting lambing [

71,

72,

73,

74], oestrus [

75], lameness [

44,

45] and other illnesses [

76,

77,

78], predators [

79], and changes in a range of behaviour measurements [

70] including behaviour relating to ewe-lamb welfare [

80], and production [

81].

In contrast most methods on real time monitoring of pigs have been developed for housed pigs [

82,

83,

84,

85], but much of this is still at the development stage, with more research needed to roll this out for use on farms for real time decision making particularly at the level of individual animals. The main areas of interest are to manage production and feeding [

86], to predict disease [

87,

88,

89], and to manage the welfare of piglets [

90] including detection of farrowing [

91] and nursing behaviour [

92,

93]. [

88] reviews prediction of health indicators for a range of common health and welfare problems in piglets, suggesting that whilst wearable sensors could be used, locational (sensors at fixed locations) sensors are preferable and more practical. Areas of ongoing research include recognition and tracking of pigs [

94,

95,

96,

97], automatically measuring or estimating weights [

96,

98,

99,

100], estimating behaviours [

96,

97,

98,

101], including aggression [

102,

103,

104,

105] which is of concern especially after mixing. Much of this research is based on 2D and 3D image analyses [

90,

106,

107] for which the advantage of locational sensing must be traded off against complexity and computational load of methods. Sound monitoring is similarly practical as it is cheap and also locational, but in general it cannot detect individuals. Sound can be used to detect pig vocalisations [

108,

109], such as coughing [

110] which can be indicative of respiratory disease and welfare related behaviours such as screaming.

The main distinction with poultry is that automatic measurement on real farms is more likely to be on groups rather than on individual birds [

111]. Commercial flock management is already automated in terms of controlling the environment by automatic monitoring of a range of measures in poultry houses such as temperature and humidity. Light schedules, layer egg collection and provision of feed and water are also automatically controlled. But in terms of real time monitoring of actual health and welfare using sensors, methods are still at the developmental stage, with advances needed before they could be used for commercial broiler and layer flocks [

112]. Methods are generally aimed at monitoring flocks, or unidentified individuals within flocks. Image and video analyses include methods for estimating weights [

113], recognition and tracking of individual birds [

114], detecting lameness [

115,

116] or sick birds [

117], group activity and colocation [

118], and group optical flow measured in flocks as an indicator of health problems [

119]. Sound analyses includes methods for detecting distress calls [

120] as an indicator of general productivity and welfare, abnormal sounds indicating respiratory disease [

121] and for distinguishing eating versus non eating vocalizations [

122]. Other research includes detection of feather pecking [

123] and environmental sensing to indicate specific diseases [

124]. RFID [

125] or accelerometers [

126,

127] could be used with the aim of measuring behaviour on a representative subset of birds, but is unlikely to be used more extensively in commercial flocks.

This review examines quantitative approaches underlying the use of sensor data for continuous monitoring of individuals, or groups, for real time prediction of health and welfare issues. In many areas, development and validation of quantitative approaches is still needed in order to move from the developmental stage to this intended practical application [

128]. Whilst there is wide-ranging literature in this area, much of it dominated by machine learning methods, in livestock farming there is a paucity that provides fair robust statistical comparisons of alternative quantitative methods and evidence that resulting decision making performs adequately in practice on commercial farms. That is, it must be practically feasible to repeatedly apply the method dynamically in real time on farms, and optimise decisions made. We discuss alternative quantitative approaches that could be used for real time decision making from automatic monitoring, which we refer to as

prediction/decision methods, as well as approaches to rigorous statistical evaluation of the resulting decision-making process, which we refer to as

prediction/decision validation. Associated research challenges are discussed in detail with reference made to livestock farming, but many issues discussed are relevant to other application areas. We illustrate points made by examining recent studies in various livestock species and by data simulation to aid understanding.

2. Sensors and Data Streams for Livestock Monitoring

A wide range of sensors can be used for monitoring to aid decision making in real time.

Table 1a shows some commonly used examples of sensors for on-farm monitoring of livestock [

13,

14,

15,

24,

66,

70]. Sensors commonly used may depend on species, and also on whether they are housed or outside/grazing. They are generally used for managing production, nutrition, grazing, reproduction, and/or detecting health and welfare problems. Key to precision/SMART farming is that data from sensors on individuals (or groups) allow the farmer to make efficient decisions in real time to take appropriate actions such as checking and isolating or treating individual animals or groups, or changing or supplementing feed for groups.

Table 1a is not intended to be an exhaustive list of sensors available, or being developed, for on-farm use, rather it is intended to elucidate aspects pertinent to applicability of different quantitative methods. Choice of appropriate quantitative methods will depend on the overall design of the data collection scheme and various characteristics of the resulting data streams (see

Table 1b) but most importantly on

who/what is being measured

when, and the

nature of the resulting measurements.

Table 1.

(a) Examples of commonly used sensors for real time monitoring of livestock on farms showing types of sensors, what is being measured on what species and the purposes of the monitoring.

Table 1.

(a) Examples of commonly used sensors for real time monitoring of livestock on farms showing types of sensors, what is being measured on what species and the purposes of the monitoring.

| Name |

Species

being

Monitored1

|

What is Being

Measured |

Sensor

Technology |

Purpose of

Monitoring |

Comments |

| 1 Individual intakes |

Dairy Cows, Beef Cattle, Calves, Sheep

|

Individual feeding & drinking behaviour; Amounts if technology allows

|

Sensors at feeders/drinkers that record individual RFID tags. More advanced systems that also record feed or drink taken at each bout. |

Managing Nutrition & Production; Detecting Health & Welfare Problems of individuals |

|

2 Group

intakes |

Dairy Cows, Beef Cattle, Calves, Pigs, Poultry, Goats, Sheep |

Group feeding & drinking including amounts |

Automatic livestock feeders and drinkers |

Managing group Nutrition & Production; Detecting Health & Welfare Problems in groups |

|

| 3 Individual weights |

Dairy Cows, Beef Cattle, Pigs, Sheep

|

Individual Live-weights |

Walk over weighers that record individual RFID tags. |

Managing Nutrition & Production; Detecting Health & Welfare Problems of individuals |

Walk over weighers placed to maximise the number of readings (e.g. on way in/out of milking parlour) |

| 4 Estimated weights per individual |

Pigs, Sheep |

Live-weights estimates per individual but individuals not identified |

Walk over weighers (e.g. at races, or in pens) |

Managing Nutrition & Production; Detecting Health & Welfare Problems in groups/of individuals |

Can be used to sort into different feeding areas using marking and/or gates system. For pigs in pens can be placed between loafing and feeding areas or separating off if unwell. |

5 Estimated liveweights per group/

individual |

Poultry - Broilers, Turkeys |

Live-weight plus number on plate hence average liveweights; Individuals not identified; Some platforms only measure one bird at a time |

Weighing Plates/Platforms for individuals/groups |

Managing group Nutrition & Production; Detecting Health & Welfare Problems in groups |

This is a sampling of weights in the flock. Could give 1000s of weight measurements per day. |

6 Milk

parlour data |

Dairy Cows |

Milk yield, milking duration, peak flow; milk quality, Somatic Cell Count (SCC); position in parlour/ milker |

Automatic milking systems plus manual sampling |

Managing Nutrition & Production; Detecting Health & Welfare Problems of individuals |

Milk quality and SCC measures may not be available in real time but could be sampled regularly (e.g. once per day or week). |

| 7 Milk bulk lab data |

Dairy Cows |

Somatic Cell Count (SCC), Milk quality |

Milk bulk sampling - manual sampling |

Managing group Nutrition & Production; Detecting Health & Welfare Problems in groups |

Milk quality and SCC measures may not be available in real time but could be sampled regularly (e.g. once per day or week). |

| 8 Milk other bulk data |

Dairy Cows |

Temperature, Volume, Stirring |

Milk bulk sampling - various sensors |

Managing group Milk Production and Processing |

Real time monitoring of physical attributes of milk in bulk tank are available |

| 9 Accelerometer |

Dairy Cows, Beef Cattle, Pigs, Sheep, Goat

|

Behaviour (e.g. activity or time budgets in different classes: lying/standing, grazing/not, rumination, … or raw acceleration in x, y and z direction) |

Accelerometer |

Detecting Heat, Calving/ Lambing/ Farrowing, Health & Welfare Problems |

Not usually used on pigs on real farms. For sheep cheaper options needed. For grazing animals often removed at intervals for data download and recharging. Can give raw accelerometer data but sometimes measures are derived only (e.g. behaviour). |

| 10 Pedometer |

Dairy Cows, Beef Cattle, Pigs, Sheep, Goat

|

Behaviour (step count) |

Pedometer |

Detecting Heat, Calving/ Lambing/ Farrowing, Health & Welfare Problems |

Less advanced than accelerometer |

| 11 Location |

Dairy Cows, Beef Cattle, Pigs, Sheep, Goat

|

Location and behaviour |

GNSS (Global navigation satellite system), GPS (global positioning system) |

Managing Grazing & Production; Detecting Health & Welfare Problems of individuals |

|

| 12 Relative location |

Dairy Cows, Beef Cattle, Pigs, Sheep, Goat

|

Location relative to static receivers and behaviour |

Proximity loggers plus static receivers |

Managing Grazing & Production; Detecting Health & Welfare Problems of individuals |

Locations can be estimated as well as mother-off spring distances |

13 2D

Imaging – pigs |

Pigs |

Body condition score, Liveweight |

2D Imaging from above |

Managing Nutrition & Production; Detecting Health & Welfare Problems of individuals |

Can be placed between loafing and feeding areas and used to sort into different feeding areas using gates system |

14 2D/3D

Imaging – identified cows & pigs |

Cows, Pigs |

Body condition score, Liveweight, Behaviour |

2D/3D Imaging from above |

Managing Nutrition & Production; Detecting Health & Welfare Problems of individuals |

Identifying individuals is difficult so used in combination with reading RFID tags at intervals and then tracking. |

15 2D

Imaging - birds |

Poultry - Broilers, Turkeys |

Location and behaviour; Dead birds; Weight estimation; |

2D Imaging from above |

Managing Nutrition & Production; Detecting Health & Welfare Problems of groups |

|

| 16 Thermal imaging |

Dairy Cows, Beef Cattle, Calves, Pigs, Poultry, Goats, Sheep |

Body Temperature |

Thermal Imaging |

Detecting Health & Welfare Problems of individuals |

Can be used for detecting heat stress, and potentially fever, pain, … |

| 17 Thermometer |

Dairy Cows |

Body Temperature |

Thermometer |

Detecting Health & Welfare Problems of individuals |

|

| 18 Sound - vocalisations |

Dairy Cows, Beef Cattle, Calves, Pigs, Poultry, Goats, Sheep |

Specific Species Dependent Vocalisations |

Acoustic Sensors |

Detecting Health & Welfare Problems of Groups |

These sensors are mounted in e.g. house, but could be used outside in confined areas |

| 19 Sound – feeding behaviour |

Cows, Sheep |

Feed intake, Behaviour (grazing, ruminating) |

Acoustic Sensors |

Managing Grazing & Production |

These sensors are mounted on animals |

| 20 Remote satellite sensing |

Available Grazing for Cows, Sheep |

Quality of grazing |

Remote Sensing (Satellite imaging) |

Managing Grazing |

|

| 21 Drones |

Cows, Sheep and Available Grazing |

Quality of grazing; Location of groups; |

UAV (Unmanned Aerial Vehicle) |

Managing Grazing; Detecting Health & Welfare Problems |

|

| 22 Environmental sensors |

Environmental conditions for livestock |

Temperature, Humidity, Emissions (e.g. Ammonia, Methane, CO2) |

Environmental sensors |

Managing Health & Welfare Problems of groups; Managing emissions |

|

| 23 Weather |

Weather for livestock |

Temperature, Humidity, Rainfall, Windspeed, … |

Weather station |

Managing Health & Welfare Problems of groups |

|

Table 1.

(b) Examples of commonly used sensors for real time monitoring of livestock on farms showing various characteristics that may be associated with the measurements and resulting monitoring data streams. The nature of the resulting sensor measurements is not listed here as raw data may be unprocessed, or may be processed in differing ways, leading to multiple types of measurements for each type of sensor that could be used for monitoring.

Table 1.

(b) Examples of commonly used sensors for real time monitoring of livestock on farms showing various characteristics that may be associated with the measurements and resulting monitoring data streams. The nature of the resulting sensor measurements is not listed here as raw data may be unprocessed, or may be processed in differing ways, leading to multiple types of measurements for each type of sensor that could be used for monitoring.

| Name |

Measurement

on:

|

Timing of sensor

measurements |

Animals

are:

|

Sensor

is: |

Sensor

is:

|

| Name |

Individuals ID known |

Individuals ID not known |

Some Individuals ID not known |

Groups or impact on groups |

Continuous or near-continuous |

Intermittent |

Regular |

Housed (usually) |

Outside/grazing |

On animal |

Not on animal |

At fixed location1 |

Mobile |

| 1 Individual intakes |

● |

|

|

|

|

● |

|

● |

● |

|

● |

● |

|

| 2 Group intakes |

|

|

|

● |

● |

● |

|

● |

● |

|

● |

● |

|

| 3 Individual weights |

● |

|

|

|

|

● |

● |

● |

● |

|

● |

● |

|

| 4 Estimated weights per individual |

|

● |

|

|

|

● |

|

● |

|

|

● |

● |

|

| 5 Estimated liveweights per group/individual |

|

|

● |

|

|

● |

|

● |

|

|

● |

● |

|

| 6 Milk parlour data |

● |

|

|

|

|

● |

● |

● |

● |

|

● |

● |

|

| 7 Milk bulk lab data |

|

|

|

● |

|

● |

● |

● |

● |

|

● |

● |

|

| 8 Milk bulk other data |

|

|

|

● |

● |

|

|

● |

● |

|

● |

● |

|

| 9 Accelerometer |

● |

|

|

|

● |

● |

|

● |

● |

● |

|

|

● |

| 10 Pedometer |

● |

|

|

|

● |

● |

|

● |

● |

● |

|

|

● |

| 11 Location |

● |

|

|

|

● |

● |

|

|

● |

● |

|

|

● |

| 12 Relative location |

● |

|

|

|

● |

● |

|

|

● |

● |

|

● |

● |

| 13 2D Imaging – pigs |

|

● |

|

|

● |

● |

|

● |

|

|

● |

● |

|

| 14 2D/3D Imaging – identified cows & pigs |

● |

|

|

|

● |

● |

|

● |

|

|

● |

● |

|

| 15 2D Imaging - birds |

|

|

● |

● |

● |

● |

|

● |

● |

|

● |

● |

|

| 16 Thermal imaging |

|

● |

|

|

● |

● |

|

● |

● |

|

● |

● |

● |

| 17 Thermometer |

● |

|

|

|

● |

● |

|

● |

● |

● |

|

|

● |

| 18 Sound - vocalisations |

|

|

|

● |

● |

|

|

● |

● |

|

● |

● |

|

| 19 Sound – feeding behaviour |

● |

|

|

|

● |

● |

|

|

● |

● |

|

● |

● |

| 20 Remote satellite sensing |

|

|

|

● |

|

● |

|

|

● |

|

● |

|

|

| 21 Drones |

|

|

● |

● |

● |

● |

|

|

● |

|

● |

|

● |

| 22 Environmental sensors |

|

|

|

● |

● |

|

|

● |

|

|

● |

● |

|

| 23 Weather |

|

|

|

● |

● |

|

|

|

● |

|

● |

● |

|

With regards to

when, some types of sensors are on the animal (e.g., accelerometers, GNSS collars or tags), generally resulting in (near) continuous time measurements, but others are at fixed locations and so often provide intermittent measurements (e.g., monitoring feeding bouts, or liveweights from walk over weighers). Management practices may imply, or may be designed such that that some of these measurements are taken at regular intervals (e.g., 2 or 3 times per day at/or to/from the milking parlour). Other measurements may be continuous time when being measured, but with lags in data availability and gaps (e.g., when accelerometers/GNSS sensor collars need to be removed from grazing sheep or cows for data download and/or battery charging) and others may be intermittent but continuous when being measured (e.g., when an individual is in view of 2D overhead cameras, or when an individual wearing a proximity logger is close to an antennae in the field). In general, data most useful for real time monitoring is data that is available in real time at sufficiently high temporal resolution at regular time intervals (i.e.

time series). Near continuous data at high temporal resolutions (e.g., every minute), or intermittent data, may be summarised to lower temporal resolutions (e.g. daily) resulting in regular time series data that are more practical for use for real time monitoring. However, in doing this care must be taken not to lose aspects of the data which may be important for detecting health issues, such as changes in diurnal behaviour [

128,

129,

130]. Spatial data could be available at a (near) continuous spatial scale (e.g., measurements from satellite imaging), at an intermittent spatial scale (e.g., GNSS on animals) or at discrete locations (e.g., in situ environmental or weather monitors). Monitoring data from sensors where each sensor measurement at each time is at a recorded spatial location (e.g., measurements from repeated satellite imaging, grazing cows wearing collars with both GNSS and accelerometers) is referred to as

spatio-temporal data. Explicit approaches for real time monitoring that utilise spatio-temporal data could be developed but here, we focus on time series data. For simplicity, spatial measurements can be summarised into time series, for example converting animal locations at fine temporal scale into distance travelled over pre-defined intervals (e.g. daily).

Who is being measured relates to whether individuals are monitored (e.g., accelerometers on dairy cows and walk over weighers that measure one animal at a time) or whether only groups are monitored (e.g., from automatic feeders and drinkers in pig or poultry houses). Either way, all individuals in a group may be measured or just some subset (e.g., some cows or sheep grazing on the hill may have GNSS collars and some may not, only a subset of poultry flocks will likely walk over weigh plates). Radio-Frequency Identification (RFID) tags, sometimes called Electronic Identification (EID) tags, are commonly worn by larger animals in order to record measurements from fixed or hand-held sensors for identified individuals. In some cases, measurements are made on individuals, but the individuals may not be identified in the data set (e.g., walk over weighers for sheep or pigs, used with a marking or gate system to sort them into different feed groups). Individual identification is ideal for health and welfare monitoring of individuals but clearly monitoring of groups and on unidentified individuals in groups could still be useful, since all livestock monitoring measurements are expected to be used for indicating when animals should be checked manually.

The final major consideration is the nature of the monitoring measurements available at each data point. Often the measurements are numerical and continuous (e.g. walk over weighers, milk yield, temperature, feed or water intake measured from automatic feeders) or discrete (e.g. step counts or counts of alarm calls in successive time intervals). Occasionally the measurements are ordinal (e.g., liveweight category for sorting into feed groups, body condition scores (BCS) or locomotion scores). Another measurement type is a classifier with no ordering and this is most often encountered when measuring behaviours according to some pre-define ethogram that will be species and/or environment dependent (e.g. classes could be lying, standing, grazing, ruminating, walking, running, sleeping, perching, feeding, drinking, …..). Sometimes binary classifiers are generated (e.g. grazing versus not grazing, lying versus standing). Furthermore, the measurements from a single sensor could be single continuous measures (e.g. liveweight, feed intake), or multivariable (e.g. acceleration in 3 directions, amounts of different gases), or even more complex (e.g. sound, image). Sometimes classifications are 2 way, for example behaviour by location classes. And finally, there may be multiple data streams per individual or group arising from different sensors, often at different temporal resolutions. Note that further processing (see below) could result in data streams that are different in nature than the original raw data.

It is convenient if the monitoring data alone can be used, however, often it needs to be used in conjunction with other information on individuals or groups. For example, behaviour will likely be affected by the amount of time spent grazing versus being housed, or the weather, whilst liveweights will be affected by days in gestation, birth or possibly changes in diet. If other information is needed, then, for real time monitoring, the issue of whether it is practical to record the required information accurately, and in a timely manner, on real farms needs to be considered.

The data from many types of sensors will need initial checking/cleaning before it is ready for subsequent use. How this is best done will depend on the detail of the sensor data. For example, liveweights, and liveweight changes must be within sensible ranges for the species, and can only change gradually, whereas behaviour could change suddenly. There are a range of methods that could be used for data cleaning, but it is important that these methods do not eliminate genuine data that are indicative of problems we want to detect and that this process is automatable on-farm.

Some sensor technology has underlying methods implemented that automatically converts the raw data into other derived measurements. For example, accelerometer data, where the raw data is usually acceleration in 3 dimensions at fine temporal resolution, is often converted to a general activity measure, or time budgets of behaviour classes [

131,

132,

133], or counts of behaviours, over pre-specified time intervals. Video may also be converted into time budgets or counts of behaviours, whilst sound may be converted, for example, into counts of coughs, or species dependent alarm calls. However, it could be advantageous to use raw rather than derived data for real time monitoring, since estimated quantities could be inaccurate, and, more generally, information is likely lost in estimating derived quantities, but this must be traded off against both complexity and increased computational load. Even for complex information such as sound, or images, in theory the raw (digitised) information could be used by a generic quantitative method for detecting changes in real time indicative of a problem. Use of all raw information might also be advantageous as it could have the capacity to detect a wider range of problems, but on the other hand there may be disadvantages in taking this data driven approach, due to a lack of focus on aspects of the data streams known, a priori, to be indicative of specific problems. Note that, where use of raw data is not feasible in real time monitoring and prediction, it would likely still be advantageous to be able to use the raw data for validation and to develop alternative quantitative approaches, However, some devices available for use on farms only gives access to derived quantities, either for commercial reasons or because on some devices the raw data are used to give derived quantities but not stored to save on memory use.

Following, we discuss generic issues relating to real time prediction/decision and prediction/decision validation that are applicable to any data streams, whether raw or derived, though we are focusing on time series data [

134] per individual or group. As mentioned above, many sensor data streams can be converted to time series data. Furthermore, though our focus is on real time prediction, data at fine temporal resolution may be converted to courser resolution to reduce computation load, to smooth out noise in the data, or to align with the resolution of the prediction/decision process.

3. Framing the Prediction/Decision and Validation Problem

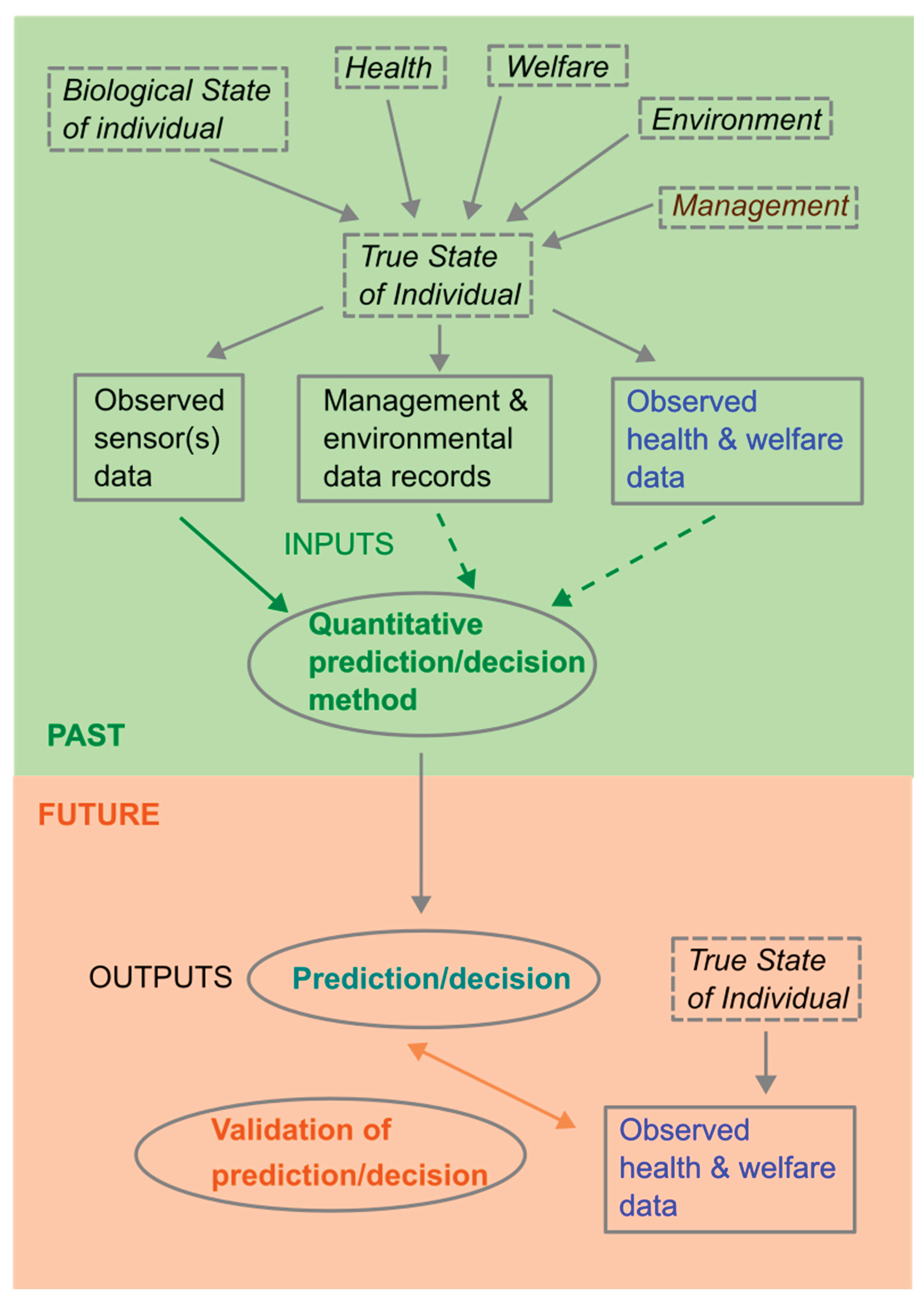

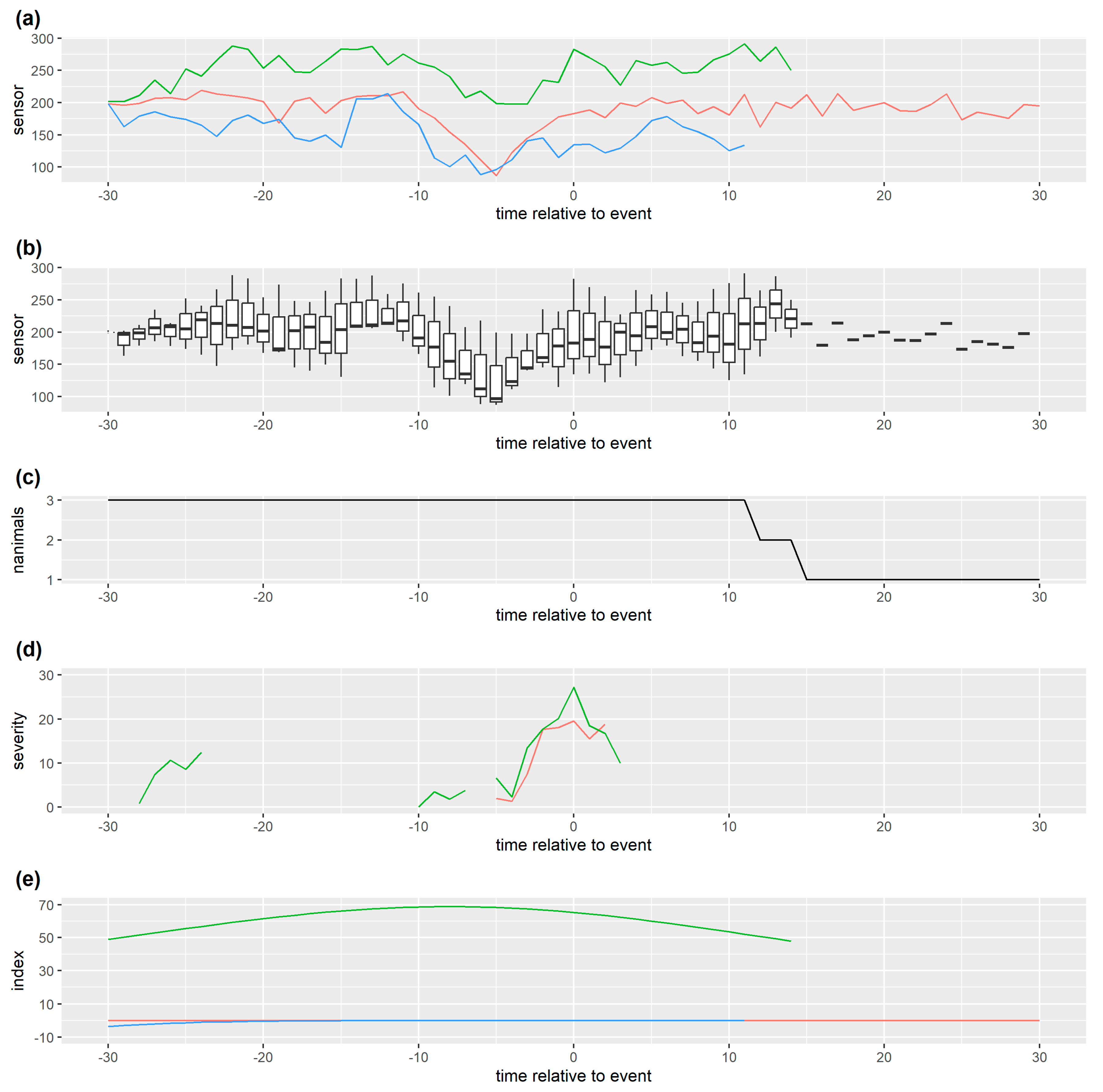

In framing the prediction/decision and validation problem (

Figure 1), it is important to distinguish between the true (unknown) state of the monitored individual and observed data. Observed data is driven by the true state of the individual which has multiple dependent drivers including how it is being managed, the environment it is in, its current health and welfare, and other aspects which make up the biological state of the individual such as breed, age, stage of gestation or lactation, etc. Observed data will be affected by noise and inaccuracies or incompleteness due to limitations of data collection methods. In addition to this, observed sensor data may differ between individuals for other reasons, for example normal activity in some animals may just be inherently higher, and/or more variable between times, than activity in other animals.

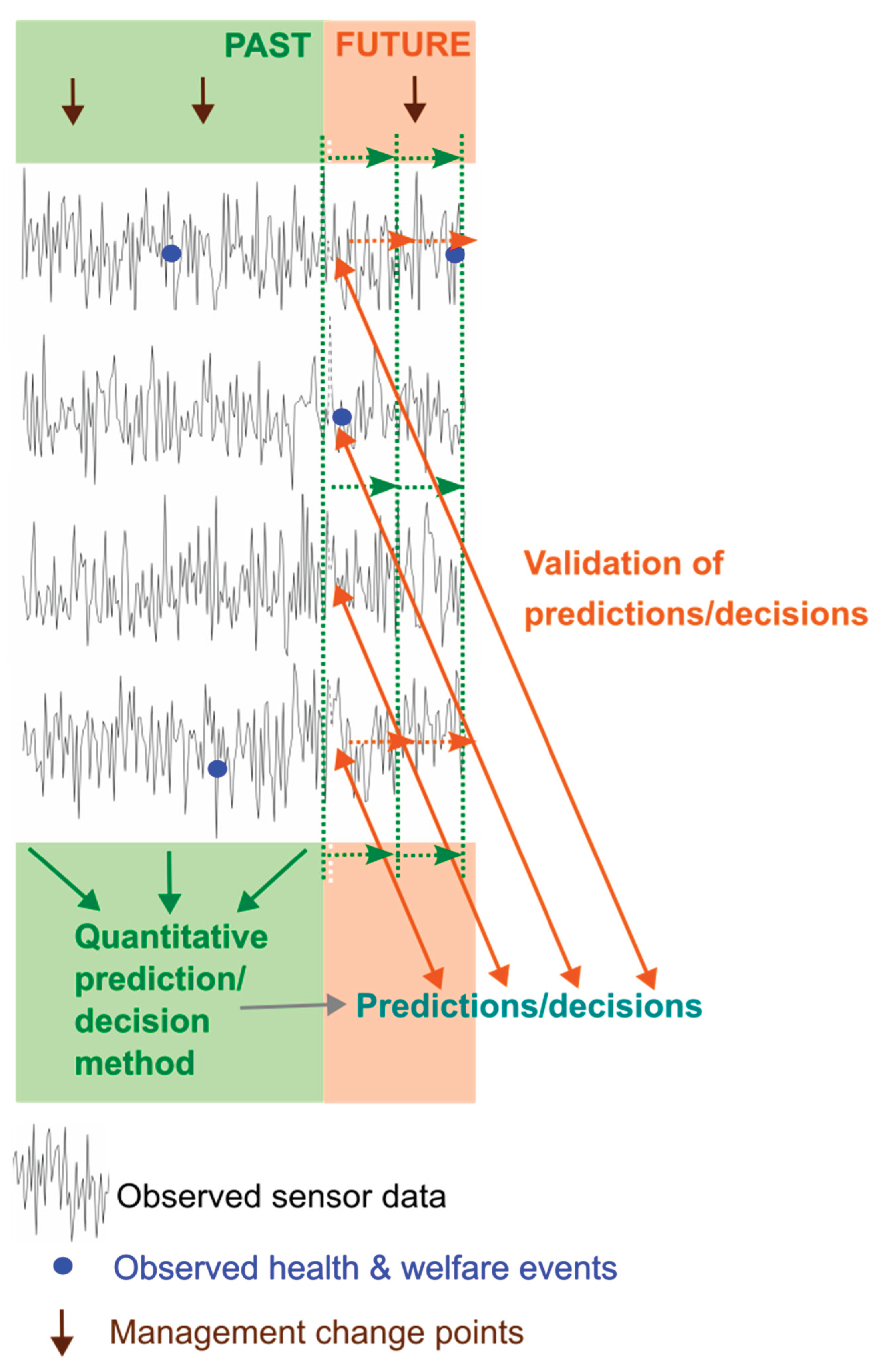

All quantitative methods use some inputs (data) to obtain outputs (the prediction/decision) at each time step (

Figure 1) and this process must be repeated to allow decisions to be made in real time as the data evolves (

Figure 2).

One important distinction between development of quantitative methods used to make predictions/decisions is that some of them use past sensor data alone, whilst others also use independently collected past observed data on health & welfare issues. We will refer to the latter as supervised methods and the former as unsupervised methods. Unsupervised methods usually involve some means of detecting that observed sensor data differs from expected sensor data when the individual is in a normal state, which is taken as an indication (prediction) that an individual may have a problem and should be checked and managed/treated appropriately (decision). However, unsupervised methods may need health and welfare data in order to optimize decision making. Supervised methods usually involve fitting a statistical model (or training a machine learning method) which uses past sensor data as inputs to predict current (or near future) observed health and welfare data as outputs. Data used for fitting could be past data from the individuals for which we require predictions/decisions or it could be data from other individuals. Once the model has been fitted, past sensor data from an individual may be inputted into the model to output a prediction of whether or not an individual currently has a health or welfare problem. Other health and welfare data may be needed to optimize decision making.

As mentioned above, the true state of the individual, and hence sensor data, may be influenced by drivers other than health and welfare, so any changes in these other drivers need to be taken into account in the method used to make predictions/decisions. There are two fundamentally different approaches to this. The first is to use observed data on these other drivers (which may be imperfect) to adjust for changes in the prediction/decision method (such as using these as additional covariates in statistical models). The second is to treat these other drivers as changes to be predicted in the same way as health and welfare data are handled.

Once a prediction/decision method has been developed it needs to be validated which involves comparing successive real time predictions/decisions with the health and welfare of individuals as the data evolves (

Figure 2). A limitation of this is that it can only take place against observed data on health & welfare issues for an individual which are likely imperfect. Initial validation can take place on the data set on which a method has been developed but final validation must take place on other data.

4. Methods for Predictions/Decisions

To recap, the objective we are focused on in the context of livestock is to use past monitoring data to decide whether there is an indication that an individual (or group) has issues currently and so should be checked and managed/treated appropriately, and to be able to repeat this decision-making process dynamically in real time on farms as monitoring data evolves. There are numerous quantitative methods that could be applied to use available data to learn to make predictions/decisions about other data of interest, which can all be termed statistical learning [

135]. The most obvious and/or commonly used methods are described below.

4.1. Simple Methods

By far the simplest method for making decisions based on a numerical monitoring data stream from each individual is to use a pre-defined constant (upper and /or lower) threshold(s), on the current (or most recent) monitoring data to classify an individual as abnormal in some way at the current time. An extension of this method would be to use different thresholds for different breeds, farming systems, and so on, or to use different thresholds depending on current environments within the same system (e.g. grazing versus not today or seasonal effects). The choice of these thresholds could be established from previous research comparing sensor data in a range of health and welfare data and in different contexts.

Another simple and generic approach to tailor this to a specific situation, or farm, would be to base thresholds on whether the current monitoring data observation lies in the (upper and/or lower) extremes(s)/outlier(s) of the empirical cumulative distribution function (eCDF) of all past monitoring data collected so far on that farm. Or, to account for long term ‘normal’ trends, this could be based instead on a more recent moving window of past monitoring data that is large enough to estimate the eCDF with sufficient accuracy to judge outliers. Another obvious extension when enough data is accumulated, would be to estimate eCDFs for each individual based on its’ monitoring data, so that what is ‘normal’ could be defined differently for different individuals. Note that this simple approach could allow that ‘normal’ monitoring data streams between individuals vary both with respect to means and variances. This seems a sensible approach for individuals that are usually well, but of course, it may fail to detect an individual that is constantly ill, and, conversely, may indicate an individual that is always well, as ill, just because the extremes in their eCDF do not arise from extreme monitoring data. Therefore, it is clearly oversimplistic to only consider within individual effects. Instead, some information must be shared between individuals as well. Comparison to recent/current group eCDF could allow adjustment to be made for management or environmental effects that are changing locally in time (i.e. short term effects). Note however, that any method based on eCDFs should allow that the more current observations being checked at any one time, the more likely some are to lie in the tails by chance when there is no problem.

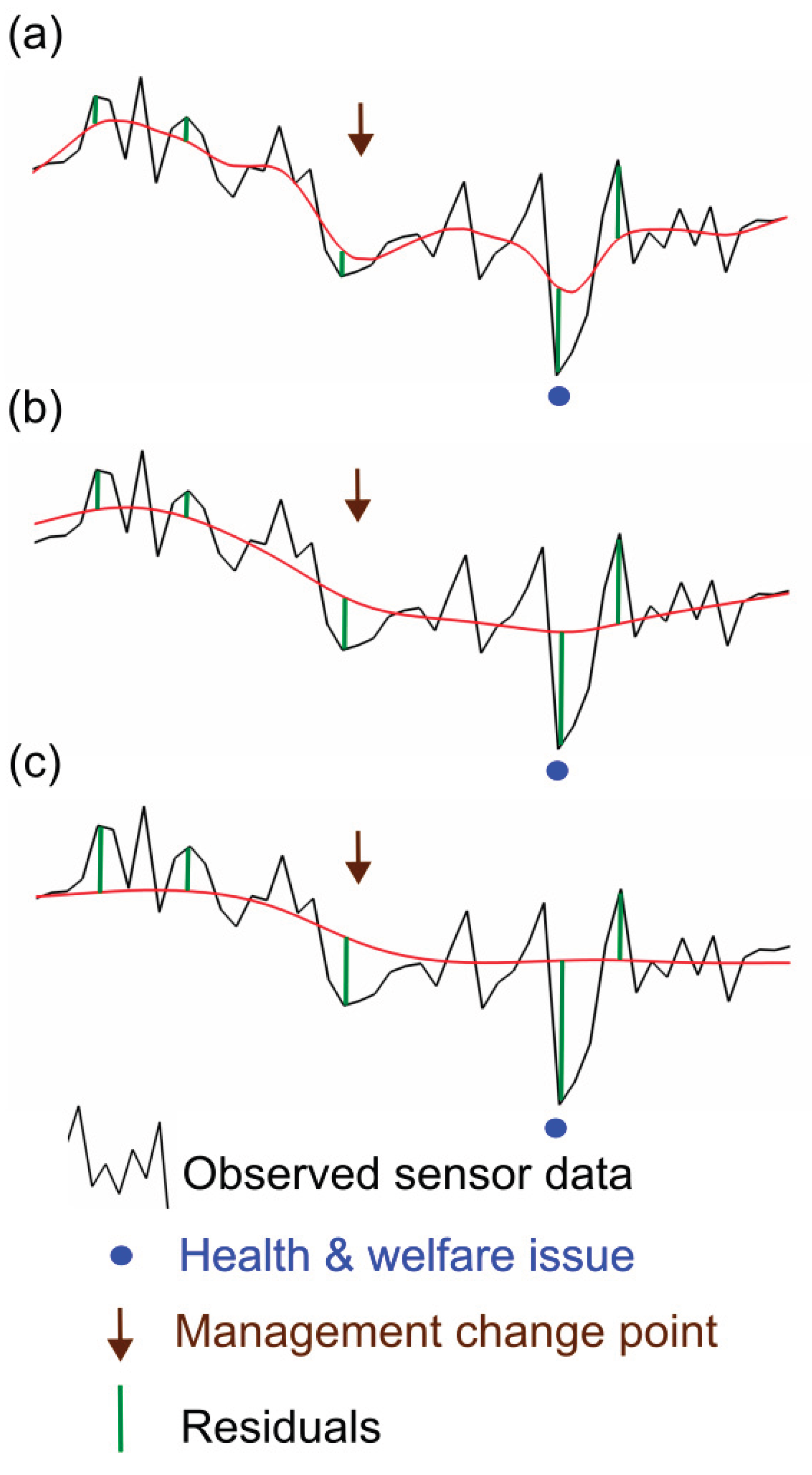

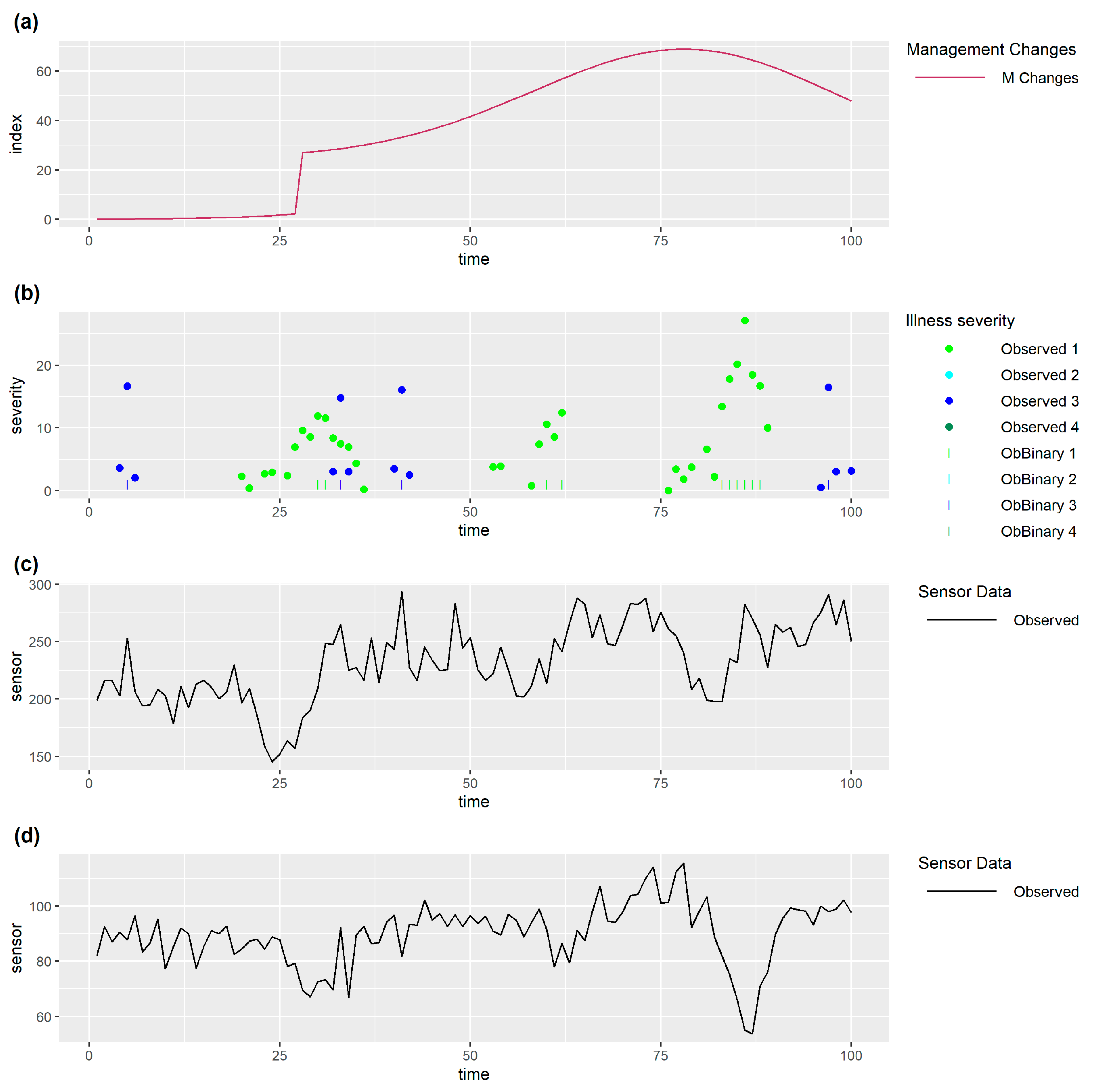

Another way of dealing with long term trends would be to smooth each monitoring data stream to establish a baseline and then to use threshold(s) to identify (upper and/or lower) extremes/outliers in the eCDF of the residuals from the smoother (ie. the monitoring data observations – smoothed values) (

Figure 3). Smoothers such as polynomial curves, simple moving averages, spline smoothers, or exponential smoothers could be used [

136,

137]. In deciding on appropriate smoothers, and on the extent of smoothing, it is important to consider the nature of departures that would be picked up. If the degree of smoothing is too weak, short term fluctuations indicative of a problem could be included in the baseline and so go undetected (

Figure 3(a)). On the other hand, if smoothing is stronger (

Figure 3(b)), longer term fluctuations that are not indicative of problem could be excluded from the baseline and thus may be judged to be a problem. This method may be extended adjusting for either long or short term trends seen for all individuals being managed together at the same point in time, from the empirical distribution of residuals for each individual (

Figure 3c). This is appealing as a generic sensor data driven way of dealing with management effects, such as changes in feed or grazing regime, or management group (e.g. herd or flock) wide treatments, without needing to use recorded data on management, and can be useful when management effects on sensor measurements are consistent across the group. However, group wide changes should always still be flagged as they could indicate a herd wide problem such as heat stress or disease spread, in which case recorded management data would still be needed to establish whether group wide changes are due to management or indicative of some problem. Smoothing methods should generally be applied adjusting for both management group effects and differences in individual monitoring data of individuals in their normal state.

It is possible that some basic preprocessing of monitoring data before simple methods are applied may improve them. A transform such as calculating logs may improve accuracy where effects are multiplicative on the raw scale and hence linear on the log scale. Some aspects mentioned above could be dealt with by preprocessing for example at each time point, subtracting the mean monitoring data over the group from individual monitoring data to adjust for group level changes, or standardising monitoring data for each individual could adjust for individual bias and noise which may vary between individuals in their normal state.

Figure 3.

Monitoring data for one individual with (a) a weak spline smoother based on this monitoring data which follows short term changes (b) a stronger spline smoother based on this monitoring data which follows long term changes and (c) a stronger spline smoother based on the monitoring data of all individuals managed together which thus follows long term management group changes in time. This simulated data has one management change point which results in a reduction in monitoring data streams from all individuals, and one later health issue for this individual which causes the sensor measurement to decrease further. The residual coincident with this health issue is relatively small for (a) and relatively large for (c), though in all three cases it is extreme compared to other residuals for this data. Here we have smoothed all the monitoring data but, for real time prediction/decision, residuals at the current time point must be calculated from a smoother only based on past data.

Figure 3.

Monitoring data for one individual with (a) a weak spline smoother based on this monitoring data which follows short term changes (b) a stronger spline smoother based on this monitoring data which follows long term changes and (c) a stronger spline smoother based on the monitoring data of all individuals managed together which thus follows long term management group changes in time. This simulated data has one management change point which results in a reduction in monitoring data streams from all individuals, and one later health issue for this individual which causes the sensor measurement to decrease further. The residual coincident with this health issue is relatively small for (a) and relatively large for (c), though in all three cases it is extreme compared to other residuals for this data. Here we have smoothed all the monitoring data but, for real time prediction/decision, residuals at the current time point must be calculated from a smoother only based on past data.

4.2. Anomaly/Change-Point Detection

Detection of change-points in time series data is a well know methodological research area with a wide range of applications [

138] which can be viewed as changes in the parameters underlying a statistical model of the time series. Often change-point detection focuses on detection of one or more long term state changes in a single univariate time series when the full series are available (offline change point detection). However, we are interested in detecting recent changes in any of a multiple of time series based on data so far (online change point detection). Furthermore, we are interested in detecting both abrupt short term changes (anomalies), as well as more gradual short term changes that could be indicative of a problem, whereas change-point detection tends to focus on non-transient changes from one underlying state to another.

The area of process or quality control [

139] is relevant in which control charts can be used to ascertain if a monitored process is out of control. This is generally implemented by generating an alarm when a measured quantity deviates from a predefined acceptable target distribution. One method commonly used is referred to as a cumulative sum (CUSUM) control chart in which the cumulative sum is a value that can be adjusted by long term average trends in order to ascertain whether recent values are outliers according to some predefined threshold.

Anomaly, or outlier, detection applied to time series [

140] can include methods arising from data where each data point is pre-labelled as normal or abnormal, referred to as supervised, but unsupervised anomaly detection is more commonly used. For anomaly detection in theory any method for forecasting time series [

141] could be used, defining anomalies as time series observations that depart from their forecasts. Actual methods used for online change point detection, or anomaly, or outlier detection, tend to fall into one of the categories below of statistical modelling, latent class or variable modelling or machine learning.

4.3. Classical Statistical Modelling

There are two fundamentally distinct ways in which classical statistical modelling approaches can be used, which can be referred to as unsupervised if based on monitoring data only or supervised if gold standard data on the outcome of interest(s) is used for model development and fitting.

4.3.1. Modelling Usual Monitoring Data

The first (unsupervised) is to form a statistical model of ‘normal’ monitoring data for all individuals (or groups), which can be applied to all (or some window of) the monitoring data so far, and then to use this, together with current/recent monitoring data to decide whether an individual (or group) is exceptional at the current point in time indicating there is likely to be some health or welfare problem. Formally, the statistical modelling uses past monitoring data to provide a predicted probability density function (PDF) for the monitoring data for each individual at each time, with subsequent current/recent monitoring data at the upper and/or lower extremes of this distribution likely indicative of some health or welfare problem.

For numerical monitoring data, linear mixed modelling (LMM [

142]) could be used with random effects included to allow for random variability between and within individuals, whilst also incorporating appropriate fixed effects characterising management, time in the year, time relative to giving birth, and so on. Extensions might be needed such as to allow for different underlying variability in sensor data between times for different individuals, or for errors that are correlated locally in time in sensor data as is often seen in time series data.

4.3.2. Modelling based on the Outcome of Interest

The second approach (supervised) relies on having gold standard outcome data. The LMM described above could be used to model the monitoring data, but with the addition of an explanatory variable of a classification of a health problem, or the severity (a continuous or ordinal variable) of a health problem (or problems). After initial model estimation, this could then be used for decision making in real time based on comparing actual monitoring data from each individual at each time with monitoring data PDFs estimated from the model assuming no health problem.

Conversely, a statistical model could be used that directly models the outcome of interest (e.g. a classification of a health problem, or the severity of a health problem) as a function of concurrent monitoring data. For example, generalised linear mixed models (GLMM [

142,

143]), with logit link and binomially distributed errors, could be used to model the binary outcome health problem/not as a function of concurrent monitoring data. LMMs could be similarly used for modelling severity outcomes. As above, random effects could be used to model inherent variability between and within individuals as well as appropriate fixed effects for management changes, for example, which might cause shifts in the monitoring data and hence in the outcome versus monitoring data relationship. After initial model estimation, this could then be used for decision making by using thresholds on the estimated probability of the binary outcome, or PDF of the severity, in the model estimated repeatedly for each individual at each time from past monitoring data together with other fixed effects.

For all these approaches, though some generic model development could be carried out for specific sensors on specific species, at some point this model would almost certainly need to be fitted and validated in the specific context in which it is to be used (i.e. for the actual animals on the farm). In particular, where there is between individual variability in monitoring data, random effect estimates would need to be obtained for the levels of individuals, in order to use this for decision making on farm for those individuals. Further, for methods involving modelling the outcome of interest, gold standard data would need to be available on farm at least initially, and possibly later on as well to intermittently sense check that the estimated model is still working, or to adjust it.

4.4. Latent Class or Variable Modelling

Latent class or variable modelling [

144] can be used to model monitoring time series as a function of either unobserved states (e.g. individual has a health problem, or not) or unobserved variables (e.g. the severity of a health problem). Hidden Markov Models (

HMM [

145]), are a special case of latent class modelling commonly used to model hidden mutually exclusive states underlying time series data, that could be applicable here. Cluster analysis [

146] is also used to deduce latent classes, though it is limited in that it does not allow other aspects of the data to be incorporated or modelled.

It is helpful to take a more generic approach of latent class or variable modelling. Multiple mutually exclusive latent states, multiple 2 level latent state classifications, or multiple latent variables could be used for multiple health problems. In a Bayesian model [

147] this can be done by specifying a parametric model for the association of the numerical monitoring data to the unobserved state(s) or variables. Bayesian modelling [

148] also provides the flexibility to model the latent class(es) or variables(s) in combination with other fixed and random effects as described above. Furthermore, relationships between the latent classes/variables and covariates could be included in the model, for example, to include tendency of health issues to occur at particular times of year (e.g. lameness in grazing animals) or times relative to birth (e.g. health issues that tend to occur soon after calving in dairy cows).

The resulting posterior distribution of the latent class(es)/variable(s) provides an estimate of the probability that an individual has a health problem, or an estimated PDF for severity, at the current time, which could be used for decision-making.

This approach can be classed as unsupervised in the sense that no data on the health status of individuals by times is needed for its’ application. Theoretically it is the most obvious direct approach to this problem. However, whilst it is relatively easy to specify appropriate models in a Bayesian framework, repeated fitting of the model as the monitoring data evolves for multiple individuals is likely to be computationally intensive though algorithms have been developed [

149] for sequential data that are specifically designed to address this kind of problem.

4.5. Machine Learning Methods

Machine learning is widely used in agriculture [

5,

16,

17,

150] including in livestock production and welfare. It is the most commonly used prediction method in recent papers for predicting health problems in livestock from auto-monitoring data [

38,

41,

46]. [

135,

151,

152] give introductions to machine learning methods and overviews of different techniques. Basically, machine learning is based on data that contains data points with multiple

features (measurements that can be numerical or categorical). The machine learning task will consist of using one or more of these features (referred to as input features) to predict one or more other features (referred to as output or target features).

Supervised machine learning is when the method is trained on a set of data points with known output features, usually referred to as labelled data points.

Unsupervised machine learning methods are trained on data sets containing input features only, and must predict output features, most commonly classifications, by patterns in the input features.

Semi-supervised machine learning is when the two approaches are combined by application to data points, some of which are labelled and some of which are not. When the output feature is categorical (e.g. individual has a health or welfare problem, or not) this tends to be referred to as a classification problem, or clustering in the case of unsupervised learning, whereas if the feature is numerical/continuous (e.g. severity of a health or welfare problem) this tends to be referred to as regression. Regression problems can be reformulated as classifications by binning values, though this is an ordinal, not categorical, classification. Note also that machine learning can be used to predict non-mutually exclusive classes.

In general, training in supervised learning will be based on minimising some cost (or loss) function which measures the departure of the predicted outputs to the actual outputs. For example, for binary target classifications binary cross entropy, which is log(p) where p is the probability of the true outcome, summed over the data points can be used whilst for regression, the mean square error (MSE), can be used. Unsupervised learning will also be based on minimising some cost (or loss) function, but it will not be dependent on the actual outputs. Either way, a balance must be struck between underfitting and overfitting, as both would result in inaccurate predictions on new data sets. Another general point about training is that some machine learning methods can be used with different settings (sometimes called hyperparameters), which can be chosen to have minimum cost, or best goodness of fit, for the training data set. In supervised machine learning it is important that appropriate coverage is achieved in the output space. For example, when training to predict a classifier there should an equal number of data points in each level. When there are imbalances, balance is often achieved by up- or down-sampling data points.

Once a method has been trained, the idea is that it can be used in other contexts for predictions of outputs, based on input features for new data points. Whether this results in accurate predictions or not, depends on both whether the input features capture everything needed to predict outputs reliably, but also on whether the machine learning ‘model’ holds. It may be that training may be needed in new contexts in which it is to be used. Furthermore, if one of the input features needed to get accurate predictions is the identification of the individual, then the machine learning method will need to be retrained in any context with new individuals.

4.5.1. Basic Machine Learning Methods

Interestingly, well established basic classical statistical methods, (such as regression, logistic regression, cluster analyses, PCA, and so on), along with parameters with the usual statistical interpretation (such as regression coefficients), are mentioned as machine learning methods in the above introductory papers. This is because, as mentioned above, all these methods are essentially doing the same thing, statistical learning [

135]. That said, when used in machine learning the methods by which the best fitting model is found tend to differ from in classical statistics where methods with provable theoretical properties (e.g. maximum likelihood) are often used. Regression methods for linear relationships include ridge, LASSO and elastic net regression. Support vector machines (SVM) can deal with non-linear relationships, by transforming the space of input features to a space with additional dimensions, in which simple linear classifiers can be used.

Decision trees and random forests are often used for supervised machine learning problems and can handle non-linear relationships. Decision trees basically involve successively partitioning the data into mutually exclusive sets based on criterion for the input features (which can be numeric or classifiers), resulting in a tree that best (according to some predefined measure) separates data points in the target space, which can either be a classification (classification tree) or numerical (regression tree). It is important with decision trees not to overfit the data to the targets as this will give inaccurate predictions on new data sets. Even if not overfitting, decision trees can appear to be good for the data set on which they are trained, as they were optimised for that, but they are often inaccurate when used to predict other data sets. To get around this, random forests are created by repeatedly generating optimal decision trees on bootstrap samples from the training data set, with each successive selection step in creating the tree based on a randomly selected subset of the input features. This results in a wide variety of trees. To get predictions, for any set of input features, estimates may be obtained for the output feature or target from the empirical PDF across these trees, on which decisions could be based.

4.5.2. Neural Networks

Neural networks are networks that can be trained to solve a wide range of supervised machine learning problems, and they are being used increasingly recently [

150]. Basically, the input features are the starting nodes, and the final nodes are the choice of output results. These output nodes usually make up a mutually exclusive categorization (with one class per node), along with probabilities of each node, though continuous variables can be handled similarly by binning to get classes. There are hidden nodes in layers in between the inputs and outputs. Each node takes a numerical value and is linked to nodes in the preceding layer using a weighted sum, plus some bias, and then a prespecified transformation to ensure that the resulting values lie between 0 and 1. Finding an optimum solution, involves setting all the weights and biases in the network appropriately to minimise some cost function over a training data set. Algorithms (e.g. back propagation) underlying neural networks do this efficiently.

Recurrent neural networks (RNN) are suggested for predicting future time series from past time series. They can cope with sequences of varying length, and contain feedback loops, so that predictions for the current time depend on the past time series. The underlying model is stable in the sense that weights and biases linking consecutive values remain constant. However, they are difficult to optimise.

Long Short-Term Memory (LSTM) networks are used to get around these optimisation problems by using different paths to control the relative impact of long to short term information on predictions. However, it is unclear whether these methods can be used for predicting other outputs, and they would likely need to be extended to include additional input features (not just the monitoring data).

4.5.3. Application of Machine Learning in Prediction/Decision Context

Here, the objective is to predict a different outcome(s) measure, based on monitoring data (time series) so far for an individual (

Figure 2), so this is how training would have to be done in order to get accurate predictions in practice. Given monitoring data streams for the full training set of data over a long period for each individual, this would create a huge training data set; for each individual at each time the data to be used as input could be the full monitoring data so far, together with other input features such as individual level variables (e.g. breed, age, …), time level variables (e.g. weather, management changes), and so on. Traditional machine learning methods assume that the number of input features do not change, so to use the actual monitoring data as inputs, windowing would be needed so that the same amount of past monitoring data is used at every time step for every individual. Furthermore, machine learning methods generally ignore random effects [

153] whereas it is plausible, as mentioned above, that underlying sensor values for individuals in their normal state need to be estimated to get accurate predictions of health outcomes. Presumably, this could be allowed for by including individual as part of the input features (for example, in neural networks, there could be one input node binary classifier per individual).

Research in this area [

41] often avoids these various complicating issues, for example, by reducing the data set to one (positive or negative) event per individual and summarising monitoring data, in a fixed number of multiple ways, up to that event time. More generally, a set of pertinent input features could be derived from the set of past monitoring data. For example, plausible measures could characterise recent short term variability for individuals, compared to their long-term variability, recent changes, changes per individual adjusted for diurnal effects or for group level management changes, and so on. This avoids complexity in the machine learning methods, but at the expense of a lack of generality, because of having to decide, a priori, what derivations from the initial set of input data are pertinent to the objectives. Any approach that essentially discards available information in data in this way, is not ideal when extensive long term monitoring data is available for individuals. Furthermore, these preprocessing steps applied to sensor data prior to using machine learning methods seem to be counter to a perceived appeal of machine learning methods, which is that they are black box, purely data driven, flexible, generic methods. However, it is suggested [

154] that for machine learning to be successful, knowledge needs to be combined with data, and that this can aid, not impede, in generalization of machine learning methods. It is plausible, that if the same data and knowledge are incorporated, albeit in different ways, for different quantitative methods, then difference in performance between these methods would be likely to reduce.

4.6. Discussion of Alternative Prediction/Decision Methods

The main advantages of the simple methods described above (

Section 4.1) is that they are easy to compute dynamically in real time as the data evolves, and that they are generic, in that they could be applied to any set of univariate numerical data streams. It should be noted though, that these methods are black-box, in that their output can lead to a binary decision, or in some cases a measure of the extent to which that decision should be made, but beyond that, does not elucidate more information about the underlying process. A common criticism of using black box methods is that detailed inferences about the system being studied cannot be made. However, on the premise that the basic inference we want to make in livestock monitoring, is just when to check animals or not, simplistic methods, or completely black box data driven methods (such as some machine learning methods), are acceptable so long as they can be shown to work. That is, it must be

computationally feasible to repeatedly apply the methods in real time on farm optimising decision making along the way, in any contexts in which they are to be applied. However, a method that concludes, based on one farm or population of farms, that some simple threshold can be used on the monitoring data streams resulting in optimal decision making, may not translate to other populations.

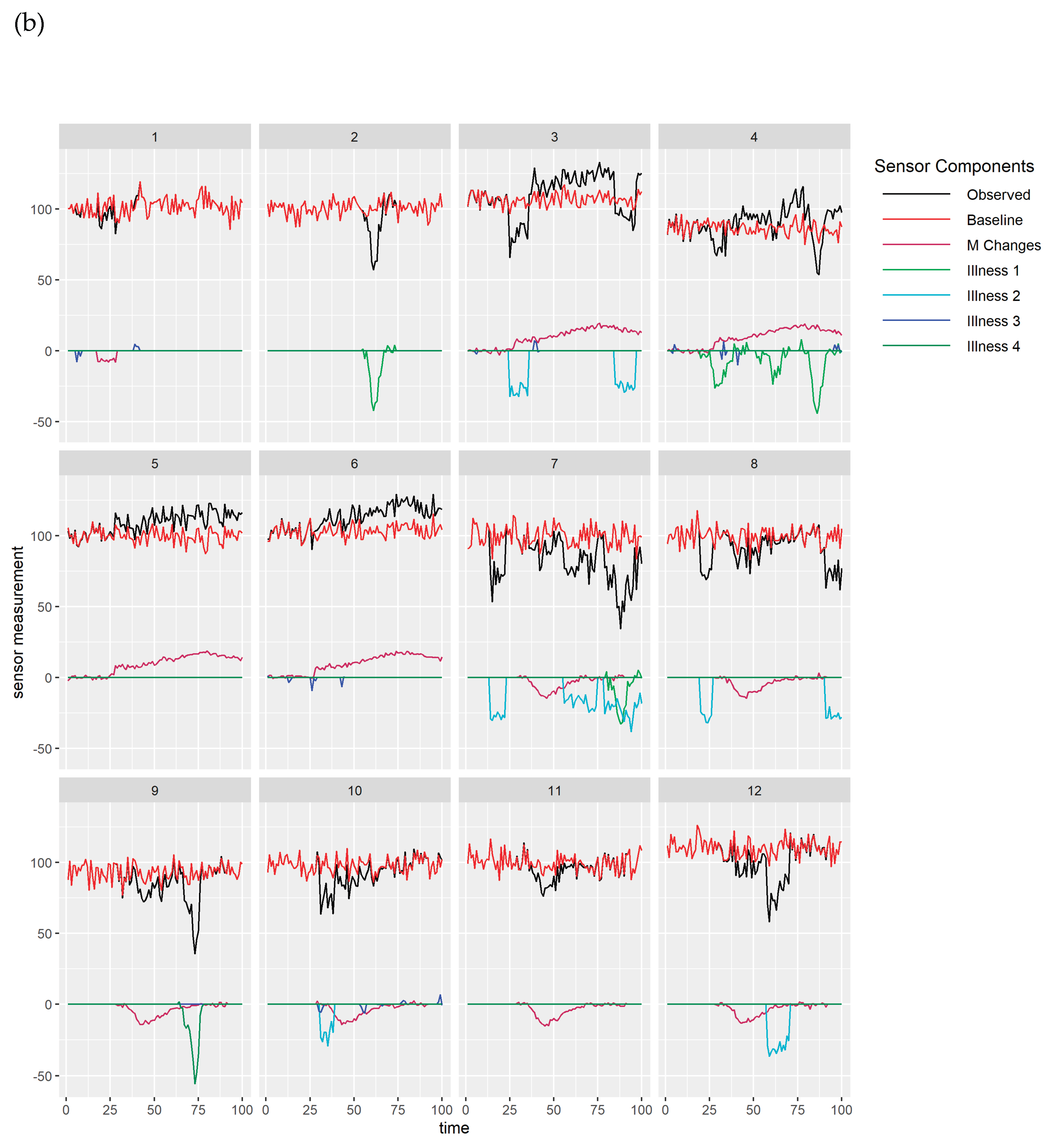

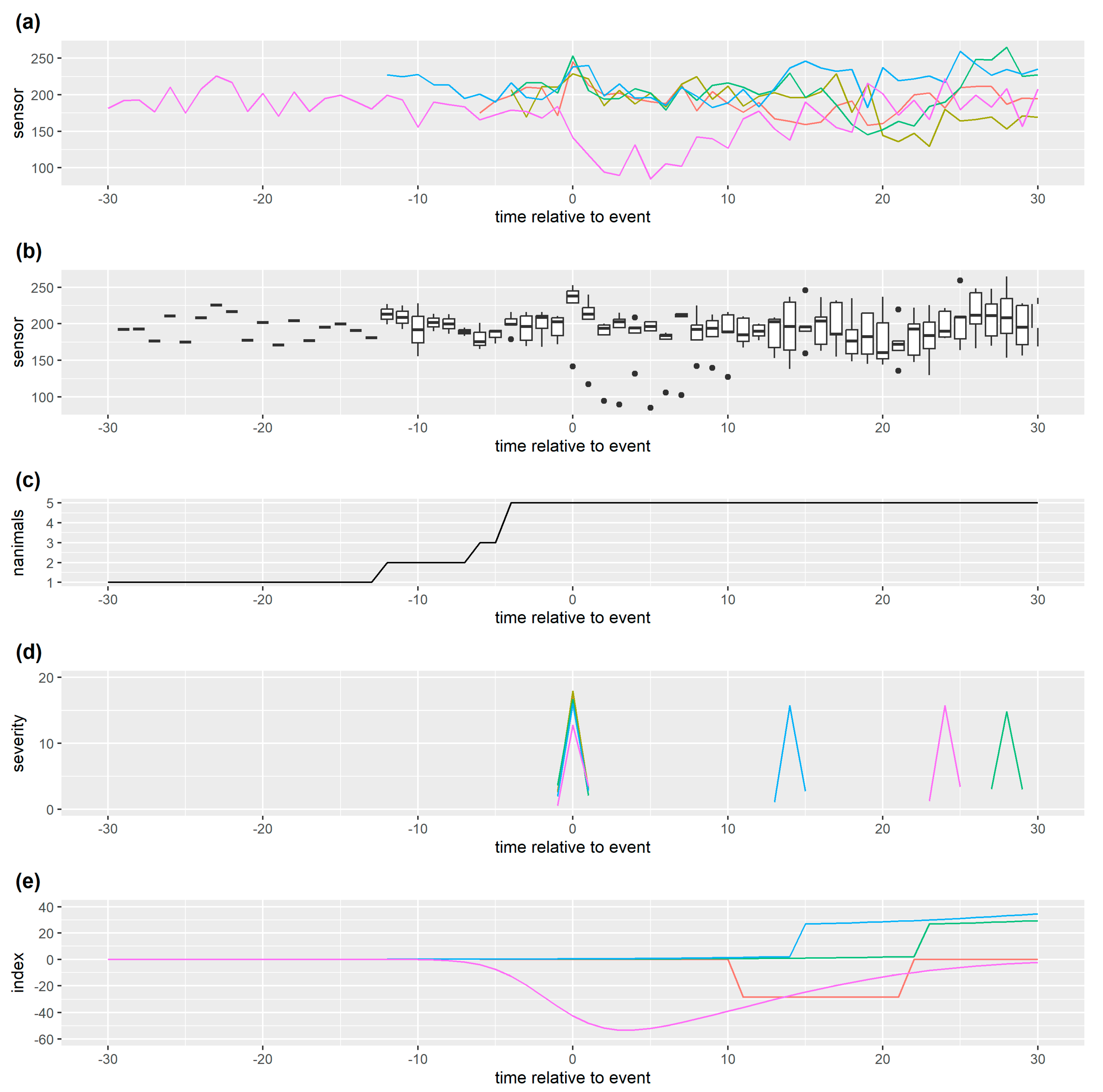

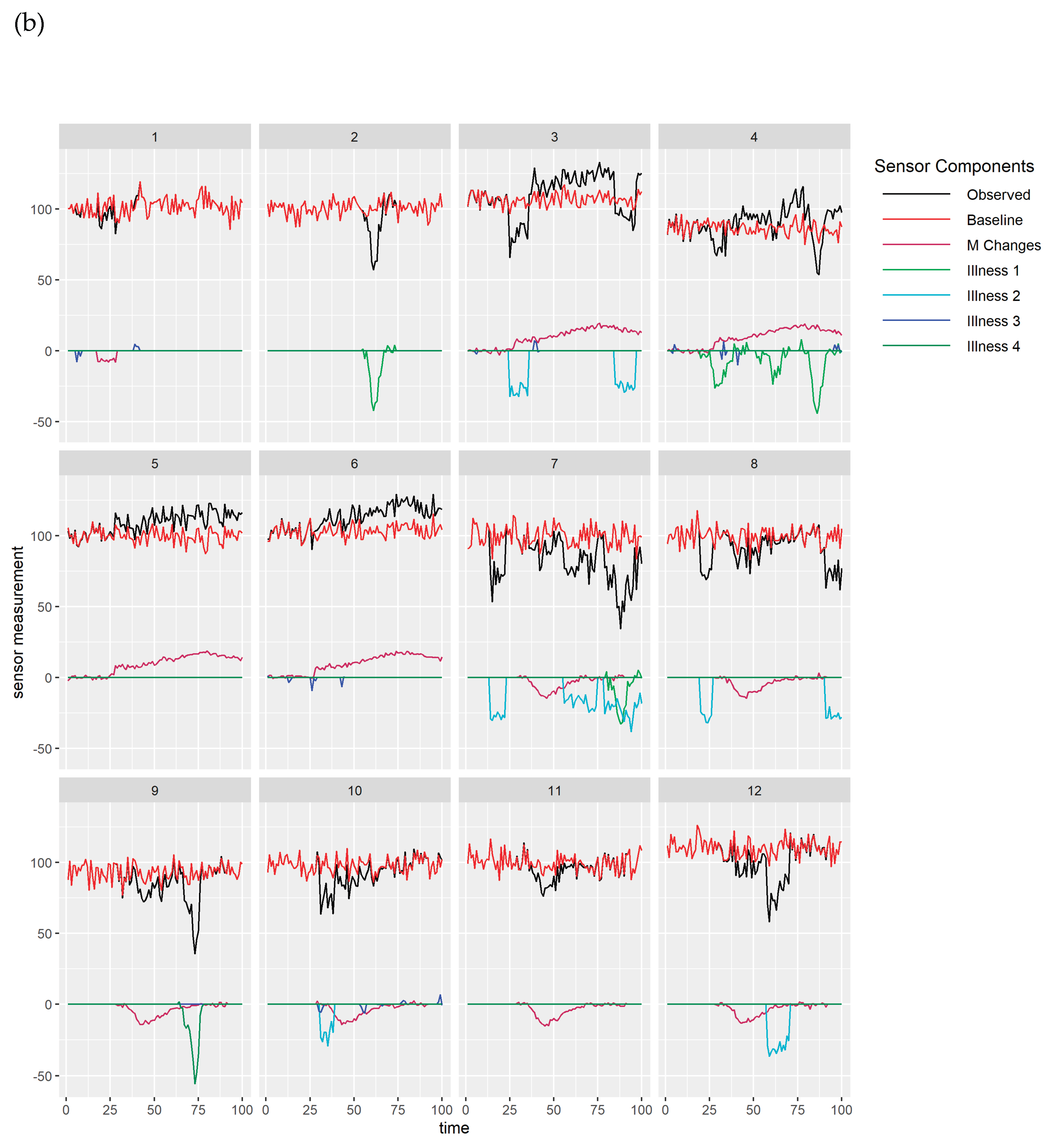

In further discussing alternative methods, it is useful to show simulated monitoring data illustrating some of the complexities.

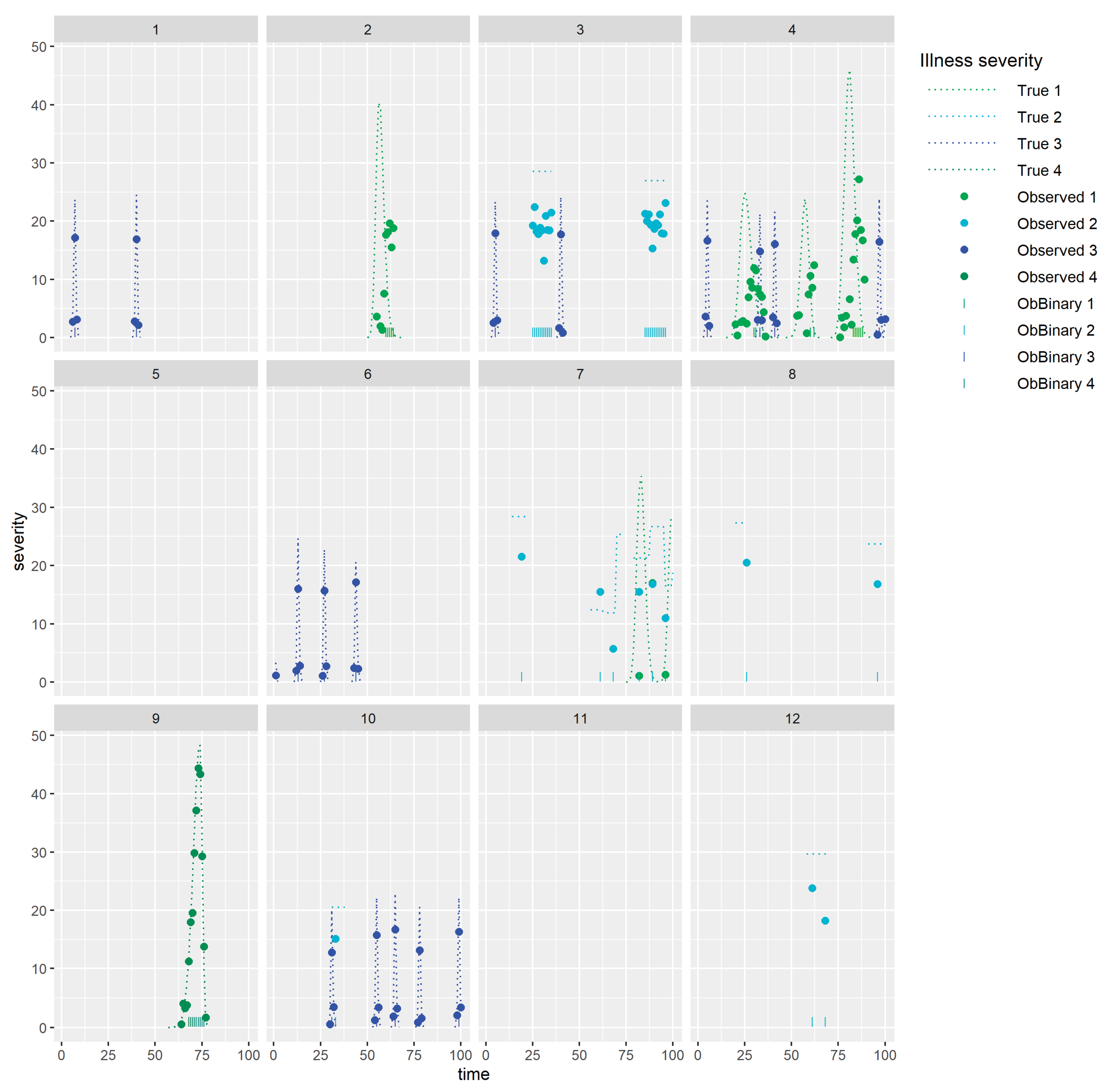

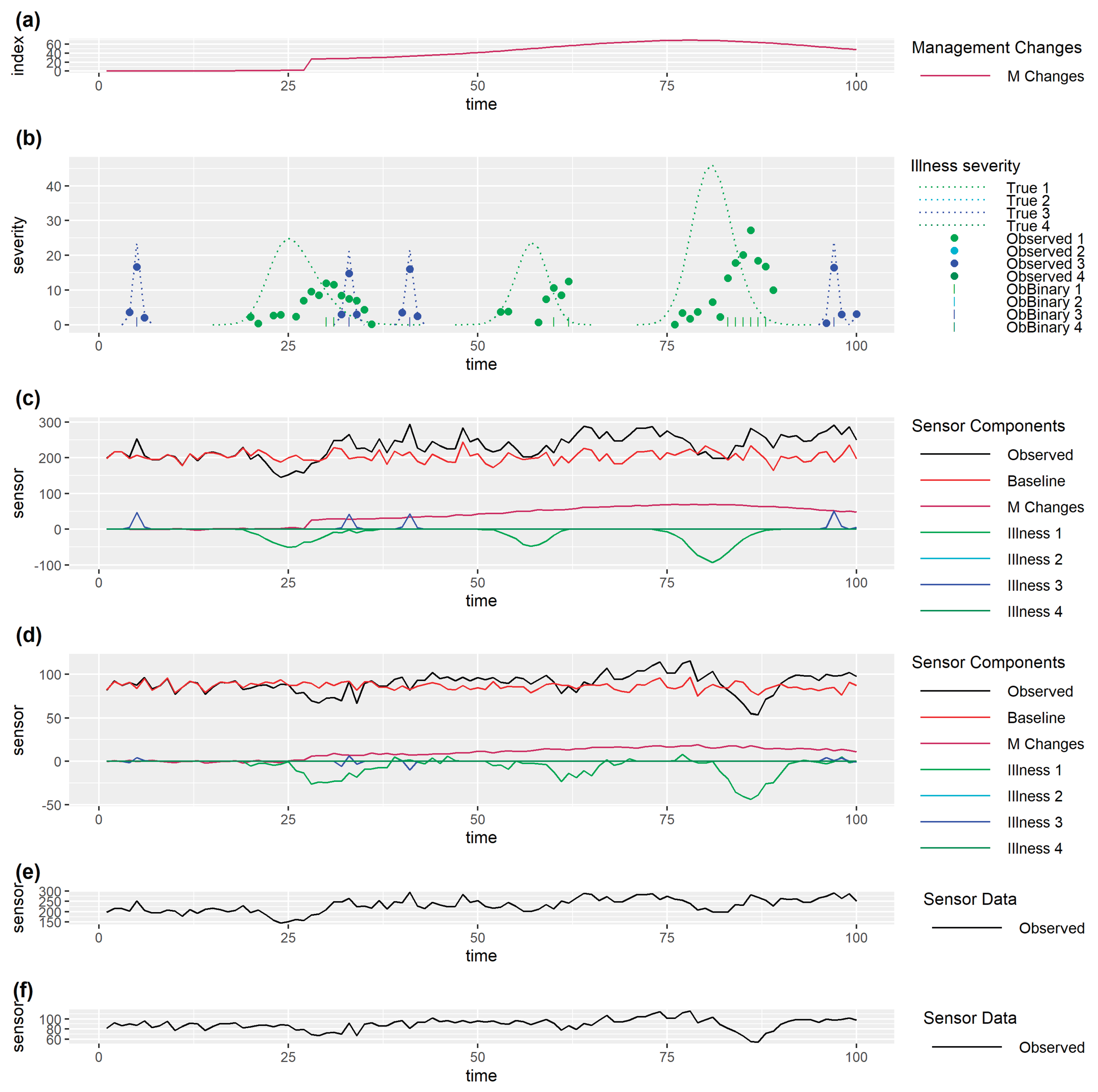

Figure 4(a) and

Figure 4(b) show simulated simultaneously observed monitoring data streams from 2 different types of sensors (both in black), together with components assumed to drive this data, for 12 animals in 3 management groups for which 4 types of health issues occur (1&4: illnesses, 2: isolation of individual animals for health reason, 3: heat). Observed data for each sensor depends on baselines per animal (both in red) which will depend on the current biological states of the individuals (for example stage in gestation or lactation) and which may exhibit underlying differences between animals in their average levels and noise. Baseline sensor levels (red) illustrate how some animals (e.g. 4 sensor 2) have lower levels than others (e.g. 12 sensor 2) and levels for some animals (e.g. 5, sensor 1) appear to be inherently more noisy than levels for others (e.g. 9 sensor 1). Sensor levels may be altered by the occurrence of management changes or health issues. Observed data from the first sensor (

Figure 4 (a)) are behaving as we might expect for activity measured by accelerometers, with activity immediately increasing with increased grazing, and decreasing with diet changes, and health issues apart from small increases in activity seen with heat events (labelled as illness 3). Note that activity decreasing when individual animals are isolated for a health reason (labelled as illness 2) could merely be indicative of their sudden change of environment. Observed data from the second sensor (

Figure 4 (b)) also change, though in some situations to a lesser extent, with grazing, diet changes and health issues apart from heat, but changes in response to some of the health issues (1) tend to lag behind the changes seen for the first sensor. Slower and more gradual changes in response to management or health issues would be expected in sensor measurements such as liveweight or milk yield than in sensors that measure behaviour.

Some of these complexities may make it difficult to apply methods on farm, for example, methods need to cope with major changes that occur routinely, such as birth of offspring, or management changes, for example altered grazing patterns within the year, changes in feed or routine health care. Further, if sensor data from different animals have inherently different biases or underlying variability, this must be allowed for in any method. Any of the above methods used in such way that this is not made explicit, could be applied to derived data for each individual that standardises a priori for these differences. That is, data streams could be pre-processed in such a way as to adjust for these differences. This could also make methods validated in one situation more transferable to another. In the case of supervised machine learning this could avoid the need to retrain the method for every new situation in which it is to be used, whilst in the case of statistical and latent variable/class modelling approaches this could result in simpler models where repeated fitting over time is computationally feasible. Statistical and latent variable/class modelling approaches, or machine learning methods, that explicitly allow for these added complexities, are intuitively appealing, but could be computationally intensive or result in non-identifiability (the method is too complex to achieve robust generalisable fits/estimation given available data). However, whether this is achieved via preprocessing adjustments or via direct modelling, one important thing to consider is whether a method would require all this information to be entered in real time when this is used on real farms as this could be impractical.

An alternative way of tackling major change events to avoid utilising this information, is to simply allow the method to include them (along with health & welfare issues) in the list of issues the method is intended to detect, or include them as additional latent states or latent variables (e.g. time grazing, time relative to calving), but this would clearly increase the complexity of these approaches. Furthermore, whilst intuitively appealing, this highlights that latent class or variable approaches, may need multiple classifications or variables to capture events such as these, as well as different types of health or welfare problems, making the underlying models far too complex. That said, a model that identifies different problems would be very useful for decision making.

One disadvantage of the simple approaches described above, or unsupervised statistical modelling approaches that simply model normal monitoring data in order to detect outliers from that, is that once abnormal monitoring data is detected, something must be done to ensure that it does not contribute to subsequent modelling of ‘normal’ monitoring data. Of course, it is feasible to do this so long as one is reasonably certain that the monitoring data is abnormal (and can exclude this on farm either manually, or preferably automatically), and so long as the modelling is being conducted in such a way that there remains enough information to estimate what is currently normal for all individuals. However, it is problematic to cope with this when an unmodelled state change has occurred for an individual (such as calving). Therefore, it is more appealing to build this into the model by using latent class or variable approaches, for example, which explicitly separate normal from abnormal states continuously, and in theory could cope with additional state changes such as calving, as well as the impact of latent variables such as time of gestation or time relative to calving.

One important distinction between the various methods described above is in whether they require independent data on the outcome of interest (e.g. whether animals have a health problem, or not) for their initial estimation. Of the methods above, supervised machine learning, and some statistical modelling approaches, requires this, but methods that use the monitoring data alone, (e.g. unsupervised machine learning, anomaly detection, latent class or variable modelling, and simple methods or classical statistical methods based on monitoring data only), do not. Of course, this difference is somewhat academic in the research development stage for a method, as at some point independent data on the outcome of interest will be required to validate its’ performance (see

Section 5) and to check that it generalises to different situations. If generalisation is not possible methods will need to be reapplied to each new situation (for example refitting statistical models or retraining machine learning methods).

If estimated random effects (e.g. individual, farm) are needed to accurately predict outcomes for individuals from monitoring data (and cannot be adjusted for by preprocessing), then both statistical modelling and machine learning methods, would need to be refitted/retrained in every context they are to be used, in order to be able to make predictions for new individuals/farms. More generally, careful consideration needs to be given to whether the context in which a method is to be used is sufficiently similar to the context in which it is trained/developed.

One of the most important considerations of the more complex methods (advanced statistical modelling, latent class/variable modelling, machine learning), relates to computational difficulties for initial fitting/training, and then for any refitting/retraining that might be needed on farms. The more complex methods based on more data information, may be more flexible/generic, but are likely harder to optimise, and possibly harder to apply on farm if information required is not readily available. If data preprocessing, and/or model simplification, are required for methods in order to make them tractable, this could result in a loss of flexibility. On the other hand, more explicit modelling assumptions would be expected to improve predictive accuracy so long as they are correct. Therefore, when comparing alternative methods is important not to attribute differences caused by different preprocessing or underlying assumptions and trade-offs, to method performance per se. Thus, for example, when input features for machine learning methods are derived via preprocessing to get around some of the complexities mentioned above, comparison should be made with other methods applied in the same way to the same pre-processed data.

The prediction/decision problem usually results in a binary decision of whether to check an individual (or group) or not. A more generalised approach would be to view this instead as a prediction, based on past data, of a numerical quantity for each individual at each time (which is correlated with the probability of a problem or the likely severity of a disease) which could be acted upon intelligently, depending on resources, for example. Whilst there are clear benefits to this more generalised approach, including that it may be more realistic, most research in this area treats this as a classification problem. Latent variable analysis is appealing as it addresses this directly. That said, most methods described above do include additional information, in that they result in numerical estimates that carry more information, such as the probability that an individual falls into different classes, on which decisions could be based.

Another important aspect is whether the methods can handle monitoring data from multiple types of sensors or not. One simple-minded approach is to combine measures on which decisions are based together into a single index somehow, as is commonly done for clinical scoring or quality of life measures. This is not recommended, however, as will likely mask important results in the individual measurements. If developed methods are based on data streams from each type of sensor, it could be relatively simple to combine the outcomes at the decision process stage. For example, if different sensors likely detect different health or welfare problems independently, a sensible approach would be to combine these by checking the animal if any of the monitoring data sources suggest there is a problem. However, if instead some aspect of the multi-variable space is needed to predict a problem, methods would need to operate in this space. In theory, both statistical modelling and machine learning methods could do this directly, though undoubtedly at the expense of increased computational load.

To summarise, there are various alternative methods that can be used for making predictions/decisions (e.g. to manually check and/or treat animals) based on past monitoring data of individuals (or groups) on farms, of varying degrees of complexity. The choice as to which to use in practice will need to take into account various aspects. Firstly, can they be used on farm completely automatically with no intervention, or will additional information/intervention be needed. Secondly, is it computationally feasible to use them to make decisions dynamically in real time on farm. And, finally, most importantly, are the predictions/decisions being made optimal. The next section discusses this latter issue, that is how to validate the predictions/decisions from different methods.

5. Validation of Predictions/Decisions

Having used a method to make predictions/decisions and/or identify anomalies or change points in real time as described in the previous section, the next step in development of these methods is to assess whether these coincide with real adverse events. We refer to this as validation. Viewed as a decision-making problem this is an assessment of the decisions that we make dynamically as the data evolves. A decision is clearly a classification – e.g. an individual should be manually checked as it is likely they are ill, or not. However, more broadly, as previously mentioned, it can be viewed as a comparison of predictions of the extent to which there is likely to be a problem, or estimated severity of a problem, from the monitoring data, with an independent measure over time of the extent of the true health or welfare for individuals (for example, the severity of the disease as measured by some gold standard). This view of the problem is more realistic in many circumstances than the classification problem, because it is a recognition that real issues, such as disease, are usually not discrete events that happen in time, but instead likely increase over time, and then diminish again once treated. Furthermore, if the prediction is a number, rather than a classifier, the number itself could aid in decision making in real time, for example, as mentioned above, when resources are limited, they can be focused on those individuals most likely to have the most severe problems.