1. Introduction

Lane detection is a fundamental problem in autonomous driving and computer vision. Over the past decade, significant advances have been made in this field. Existing lane detection methods can be divided into four categories: segmentation-based [11, 23], anchor-based [6, 24, 27], keypoint-based [13, 18], and parameter-based [4, 7] methods.

With the rapid development of lane detection algorithms, we have observed that existing algorithms are implemented independently. A unified and comprehensive benchmark for validating newly developed lane detection methods and comparing them fairly is still lacking. Existing frameworks such as Lanedet and PPLanedet [25] cannot evaluate lane detection algorithms comprehensively. Specifically, Lanedet and PPLanedet only contain segmentation-based and anchor-based methods, ignoring parameter-based and keypoint-based methods. Moreover, PPLanedet and Lanedet support the Tusimple [23] and CULane [11] datasets, but not other datasets such as VIL100 [21] and CurveLane [10]. To address these issues and help the research community and industry develop more efficient and higher-performing lane detection methods, we develop a unified, highly modular, and lightweight lane detection toolbox named UnLanedet.

UnLanedet features a unified, modular architecture for lane detection, accompanied by an efficient, lightweight training engine and a streamlined configuration interface. This design empowers researchers to develop and prototype lane detection algorithms with greater ease and flexibility, supporting on-the-fly adaptation of both configuration parameters and model components during both training and evaluation phases. Covering all major categories of lane detection approaches and providing built-in support for the VIL100 dataset, UnLanedet serves as a comprehensive platform for the training, validation, and benchmarking of lane detection models.

Leveraging a cohesive and unified codebase, we systematically reproduce and support more than ten representative lane detection algorithms. This foundation enables comprehensive and equitable benchmarking across methods, evaluating not only accuracy and inference efficiency but also examining how different backbone networks and individual algorithmic modules contribute to overall performance. Our extensive experiments provide an in-depth analysis of these characteristics, offering valuable insights into the strengths and limitations of various lane detection designs. We summarize our main contributions as follows:

A Comprehensive Toolbox for Lane Detection Algorithms. We provide a unified open-source toolbox called UnLanedet with a highly modular and extensible design for lane detection. We provide a rich set of model reproductions, including SCNN [11], RESA [23], UFLD [12], CLRNet [24], CLRerNet [6], LaneATT [16], BezierNet [15], GANet [18], etc. In addition, we offer comprehensive documentation and tutorials to facilitate easy modification of our codebase, which is open for ongoing development.

Comprehensive Lane Detection Benchmark. We conduct a comprehensive benchmarking analysis of lane detection models. First, we validate model performance on the Tusimple, CULane, and VIL100 datasets. Then, we evaluate the effectiveness of recent backbones using the CLRNet detector.

Strong Baselines for Lane Detection. In addition to reproducing the model results with our codebase, we conduct extensive experiments on the hyper-parameters of each model, which improves the reproduced results. We also provide a strong baseline named UnCLRNet, which builds on CLRNet by combining existing tricks in the backbone, neck, and loss function. UnCLRNet achieves an F1 score of 80.21% on CULane with a ConvNeXt-tiny [9] backbone.

2. Related Work

2.1. Lane Detection

According to the representation of the lane, existing lane detection methods can be categorized into four classes: segmentation-based, anchor-based, keypoint-based, and parameter-based methods.

Segmentation-based methods view lane detection as a segmentation task. SCNN [11] proposes a novel message-passing module to capture the spatial dependency. RESA [23] develops a feature aggregation module to learn global features while keeping real-time detection.

Anchor-based methods regress accurate lanes by redefining lane anchors. UFLD [12] predicts lanes with a novel row-wise lane anchor. Line-CNN [8] adopts dense lane anchors and an RCNN-like architecture to detect lanes. CLRNet [24] uses learnable lane anchors and progressive lane anchor refinement to perform lane detection. CLRerNet [6] proposes a new Lane-IoU loss to improve the prediction confidence. ADNet [20] analyses the influence of lane anchors and proposes a novel lane anchor decomposition.

Keypoint-based methods treat the lane detection task as a keypoint detection task. PINet [7] combines keypoint detection and instance segmentation to realize lane detection. GANet [18] fuses the global features into the keypoint detection model and achieves real-time detection.

Parameter-based methods view lane detection as a parameter modeling task. PolyLaneNet [17] casts a lane as a polynomial function and predicts the parameters of that polynomial. BezierLaneNet [4] utilizes the Bézier curve to model a lane for its ease of computation.
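To make the parameter-based representation concrete, the following minimal sketch evaluates a Bézier curve from its control points using the Bernstein basis. It is an illustration only and not the code of BezierLaneNet; the function names `bezier_point` and `sample_lane`, the choice of four control points (a cubic curve), and the pixel coordinates are all assumptions made for the example.

```python
from math import comb


def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] using the Bernstein basis."""
    n = len(control_points) - 1  # curve degree
    x = y = 0.0
    for i, (px, py) in enumerate(control_points):
        # Bernstein polynomial B_{i,n}(t) = C(n, i) * t^i * (1 - t)^(n - i)
        b = comb(n, i) * (t ** i) * ((1.0 - t) ** (n - i))
        x += b * px
        y += b * py
    return x, y


def sample_lane(control_points, num_points=5):
    """Sample evenly spaced (in t) points along the curve."""
    return [
        bezier_point(control_points, k / (num_points - 1))
        for k in range(num_points)
    ]


# Hypothetical control points in image coordinates (x, y).
ctrl = [(100.0, 700.0), (200.0, 500.0), (350.0, 300.0), (400.0, 100.0)]
lane = sample_lane(ctrl, num_points=5)
```

Under this representation a lane is fully described by a handful of control points, so a parameter-based detector only needs to regress those coordinates rather than a dense mask or a long list of keypoints.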

Besides, several studies have proposed a unified architecture for lane detection. Lane2Seq [26] proposes a sequence generation framework for lane detection and a novel reinforcement learning-based model tuning method to improve the model performance. LaneLM [22] proposes a prompt-based framework for lane detection and supports multi-turn conversations.

2.2. Lane Detection Toolboxes

Over the years, object detection and semantic segmentation have witnessed significant progress with the development of various toolboxes, such as Detectron2 [19], MMDetection [2], and MMSegmentation [3]. These toolboxes have played a crucial role in advancing object detection and semantic segmentation research and facilitating practical applications. In the field of lane detection, several developers provide toolboxes, such as Lanedet and PPLanedet [25]. They provide a comprehensive set of pre-defined models, training pipelines, and evaluation metrics, making it easier for researchers and practitioners to develop and deploy lane detection systems.

However, existing lane detection toolboxes focus on segmentation-based and anchor-based methods, ignoring parameter-based and keypoint-based methods. UnLanedet, on the other hand, covers all four types of lane detection methods, providing more concise and well-structured support for lane detection models.

3. Highlights of UnLanedet

In this section, we focus on the modular design of lane detection methods and the system design within UnLanedet.

3.1. Unified and Extensible Architecture

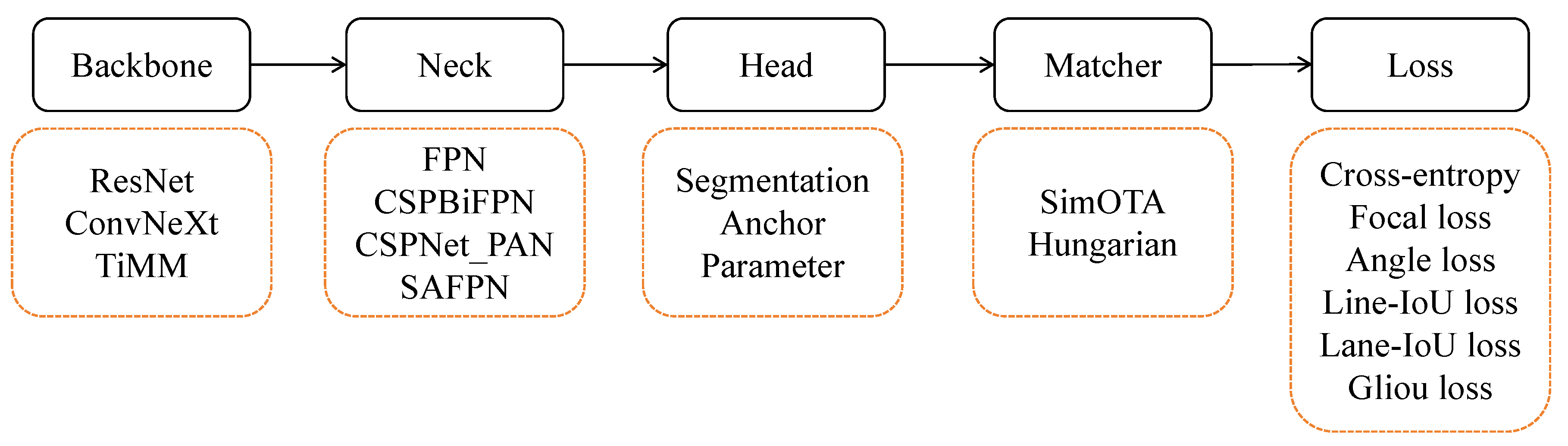

UnLanedet decomposes every lane detector into five plug-and-play parts: backbone, neck, head, matcher, and loss (Figure 1). State-of-the-art variants are already shipped, yet custom modules drop in with no core code edits, keeping the whole system lean and future-proof.
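The five-part decomposition can be pictured with a small, dependency-free sketch. This is not UnLanedet's actual class hierarchy: the `LaneDetector` container, its method names, and the toy components below are hypothetical stand-ins that only show how swapping one part leaves the others untouched.

```python
from dataclasses import dataclass, replace
from typing import Any, Callable


@dataclass
class LaneDetector:
    """Hypothetical container wiring the five parts together."""
    backbone: Callable[[Any], Any]
    neck: Callable[[Any], Any]
    head: Callable[[Any], Any]
    matcher: Callable[[Any, Any], Any]
    loss: Callable[[Any, Any, Any], float]

    def predict(self, image):
        # Inference path: backbone -> neck -> head.
        return self.head(self.neck(self.backbone(image)))

    def training_loss(self, image, targets):
        # Training path: predictions are matched to targets, then scored.
        preds = self.predict(image)
        assignment = self.matcher(preds, targets)
        return self.loss(preds, targets, assignment)


# Toy components: each "lane" is a single number so the flow stays visible.
def toy_backbone(image):
    return [float(sum(row)) for row in image]


def toy_neck(feats):
    return [f / 2.0 for f in feats]


def toy_head(feats):
    return feats


def toy_matcher(preds, targets):
    # Assign each prediction to the index of its nearest target.
    return [
        min(range(len(targets)), key=lambda j: abs(p - targets[j]))
        for p in preds
    ]


def toy_l1_loss(preds, targets, assignment):
    return sum(abs(p - targets[j]) for p, j in zip(preds, assignment)) / len(preds)


detector = LaneDetector(toy_backbone, toy_neck, toy_head, toy_matcher, toy_l1_loss)
image = [[1, 1], [2, 4]]
preds = detector.predict(image)
loss_value = detector.training_loss(image, targets=[1.0, 2.5])

# Swapping a single part (here the neck) needs no change to the other four.
variant = replace(detector, neck=lambda feats: feats)
variant_preds = variant.predict(image)
```

The point of the design is that a new neck or loss is just another callable passed in from outside, so the container itself never has to be edited.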

3.2. Design Principle

The overall architecture of UnLanedet is illustrated in Figure 2, and our design principles for UnLanedet can be summarized as follows:

Flexible configs. We adopt LazyConfig from the latest Detectron2, moving away from the registry-plus-string style seen in earlier toolkits. This pure-Python syntax lets users pass any object or data type directly, yielding short, clean, and easy-to-edit config files.

Resource-smart training. Built-in mixed-precision, activation checkpointing, and DDP are ready to use—features missing in prior works—while EMA is scheduled for the next release. These tricks cut memory and speed up training on modest GPUs.

Project isolation. Each method lives in its own folder, separate from the core code. This keeps user projects safe from core updates and eases version control.

Ready-made tools. One-line commands measure FLOPs, test speed, and visualize results, providing quick feedback during development.

Support for a range of datasets. UnLanedet supports three lane detection benchmarks: Tusimple, CULane, and VIL100. In addition, we provide a dataset base class that developers can inherit from to implement other datasets.
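The idea behind the pure-Python config style mentioned under "Flexible configs" can be sketched without any dependency. The snippet below is a simplified stand-in that mimics lazy construction; it is not Detectron2's LazyConfig implementation and not an UnLanedet config file. The helpers `lazy` and `build` and the classes `Backbone` and `Detector` are hypothetical names introduced only for this example.

```python
class Backbone:
    """Hypothetical backbone taking a depth argument."""

    def __init__(self, depth=18):
        self.depth = depth


class Detector:
    """Hypothetical detector that receives an already-built backbone."""

    def __init__(self, backbone, num_lanes=4):
        self.backbone = backbone
        self.num_lanes = num_lanes


def lazy(target, **kwargs):
    """Record a call as plain data instead of executing it."""
    return {"_target_": target, **kwargs}


def build(node):
    """Recursively turn recorded calls into real objects."""
    if isinstance(node, dict) and "_target_" in node:
        kwargs = {k: build(v) for k, v in node.items() if k != "_target_"}
        return node["_target_"](**kwargs)
    return node


# The config references classes directly rather than registry strings.
model_cfg = lazy(Detector, backbone=lazy(Backbone, depth=18), num_lanes=4)

# Nothing has been constructed yet, so fields can still be edited freely.
model_cfg["backbone"]["depth"] = 34

model = build(model_cfg)
```

Because the config holds Python objects rather than strings looked up in a registry, any class or value can be passed directly, and overrides are ordinary assignments made before the model is built.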

3.3. Comparison Between UnLanedet and Previous Frameworks

We compare UnLanedet with previous codebases in Table 1, where UnLanedet offers distinct advantages for the development of lane detection algorithms. UnLanedet supports all kinds of lane detection methods, offering a fair evaluation platform for lane detection. UnLanedet supports Distributed Data-Parallel (DDP) training, which can speed up training, whereas PPLanedet and Lanedet do not. In addition, UnLanedet contains more datasets than the other toolboxes.

4. Benchmarking Lane Detection Models

In this section, we first conduct a comprehensive benchmark of lane detection models using the standard ImageNet-1K pre-trained ResNet [5] backbone. Then, we benchmark a more recent CNN-based backbone, i.e., ConvNeXt [9].

Dataset and implementation details.

All experiments are conducted on the Tusimple, CULane, and VIL100 datasets. The architectures of all models are aligned with their original implementations, including the number of layers in the backbone, neck, and head. We employ the same data augmentation as the original papers. All models are trained and tested on a single NVIDIA RTX 3090 GPU with 24 GB of memory. Models are trained for 15 or 20 epochs on the CULane dataset and over 70 epochs on the Tusimple dataset.

Main results on Tusimple.

We present the reproduced performance on the Tusimple dataset in Table 2. The reproduced results are similar to those in the original papers. ADNet in UnLanedet achieves better performance than the official implementation.

Results on the CULane dataset are shown in Table 3. CondLaneNet with ResNet34 achieves an F1 score of 79.69%, better than the official implementation. CLRNet with the ConvNeXt-tiny backbone achieves an F1 score of 80.21%, demonstrating that a stronger backbone benefits model performance.

For the VIL100 dataset, we only support ADNet. We reproduce ADNet on this dataset with an F1 score of 89.43%. Note that we report the result of the last epoch as the final result.
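For reference, the F1 score reported above is the harmonic mean of precision and recall. The sketch below computes it from true-positive, false-positive, and false-negative lane counts; the function name `f1_score` and the counts are hypothetical, and the dataset-specific rule that decides when a predicted lane counts as a true positive is deliberately left out.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from lane-level counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# Hypothetical counts: 80 matched lanes, 20 spurious, 20 missed.
score = f1_score(tp=80, fp=20, fn=20)
```

With equal precision and recall of 0.8, the score is also approximately 0.8, which corresponds to 80% in the notation of the tables.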

Table 2. Model performance on the Tusimple dataset.

| Model | Venue | Backbone | Accuracy (%) |
| --- | --- | --- | --- |
| SCNN [11] | AAAI | ResNet18 | 96.02 |
| RESA [23] | AAAI | ResNet18 | 96.27 |
| UFLD [12] | ECCV | ResNet18 | 95.17 |
| CLRNet [24] | CVPR | ResNet34 | 96.64 |
| LaneATT [16] | CVPR | ResNet34 | 94.65 |
| ADNet [20] | ICCV | ResNet34 | 96.65 |
| SRLane [1] | AAAI | ResNet34 | 96.21 |
| BezierNet [15] | CVPR | ResNet18 | 94.55 |
| GANet [18] | CVPR | ResNet18 | 96.18 |
| GSENet [14] | AAAI | ResNet18 | 96.16 |

Table 3. Model performance on the CULane dataset. * denotes a model trained without segmentation loss. All models are trained without EMA.

| Model | Venue | Backbone | F1 (%) |
| --- | --- | --- | --- |
| UFLD [12]* | ECCV | ResNet18 | 63.41 |
| CLRNet [24] | CVPR | ResNet34 | 78.99 |
| CLRNet [24] | CVPR | ResNet50 | 79.30 |
| CLRNet [24] | CVPR | ConvNeXt-tiny | 80.21 |
| CondLaneNet | ICCV | ResNet34 | 79.69 |
| CLRerNet [6] | WACV | ResNet34 | 79.20 |
| CLRerNet [6] | WACV | ConvNeXt-tiny | 79.89 |
| ADNet [20] | ICCV | ResNet34 | 77.88 |

5. Conclusion

In this work we introduce UnLanedet, a unified yet lightweight benchmark suite crafted for lane-detection research. Its modular architecture covers diverse scenarios and metrics, allowing fair head-to-head comparisons of contemporary algorithms. We anticipate that UnLanedet will evolve into the community’s reference standard, accelerating progress and deepening insights in lane-detection technology.

Acknowledgment

We appreciate all developers who have contributed to UnLanedet.

References

- Chao Chen, Jie Liu, Chang Zhou, Jie Tang, and Gangshan Wu. Sketch and refine: Towards fast and accurate lane detection. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 38, pages 1001–1009, 2024.

- Kai Chen, Jiaqi Wang, Jiangmiao Pang, Yuhang Cao, Yu Xiong, Xiaoxiao Li, Shuyang Sun, Wansen Feng, Ziwei Liu, Jiarui Xu, et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv preprint arXiv:1906.07155, 2019.

- MMSegmentation Contributors. MMSegmentation: Openmmlab semantic segmentation toolbox and benchmark. https://github.com/open-mmlab/mmsegmentation, 2020.

- Zhengyang Feng, Shaohua Guo, Xin Tan, Ke Xu, Min Wang, and Lizhuang Ma. Rethinking efficient lane detection via curve modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 17062–17070, 2022.

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep Residual Learning for Image Recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 770–778, 2016.

- Hiroto Honda and Yusuke Uchida. Clrernet: improving confidence of lane detection with laneiou. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, pages 1176–1185, 2024.

- Yeongmin Ko, Younkwan Lee, Shoaib Azam, Farzeen Munir, Moongu Jeon, and Witold Pedrycz. Key points estimation and point instance segmentation approach for lane detection. IEEE Transactions on Intelligent Transportation Systems, 23:8949–8958, 2021.

- Xiang Li, Jun Li, Xiaolin Hu, and Jian Yang. Line-cnn: End-to-end traffic line detection with line proposal unit. IEEE Transactions on Intelligent Transportation Systems, 21:248–258, 2019.

- Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, and Saining Xie. A ConvNet for the 2020s. CVPR, 2022.

- Depu Meng, Xiaokang Chen, Zejia Fan, Gang Zeng, Houqiang Li, Yuhui Yuan, Lei Sun, and Jingdong Wang. Conditional DETR for Fast Training Convergence. arXiv preprint arXiv:2108.06152, 2021.

- Xingang Pan, Jianping Shi, Ping Luo, Xiaogang Wang, and Xiaoou Tang. Spatial as deep: Spatial cnn for traffic scene understanding. In Proceedings of the AAAI conference on artificial intelligence, volume 32, 2018.

- Zequn Qin, Huanyu Wang, and Xi Li. Ultra fast structure-aware deep lane detection. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXIV 16, pages 276–291. Springer, 2020.

- Zhan Qu, Huan Jin, Yang Zhou, Zhen Yang, and Wei Zhang. Focus on local: Detecting lane marker from bottom up via key point. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 14122–14130, 2021.

- Junhao Su, Zhenghan Chen, Chenghao He, Dongzhi Guan, Changpeng Cai, Tongxi Zhou, Jiashen Wei, Wenhua Tian, and Zhihuai Xie. Gsenet: global semantic enhancement network for lane detection. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 38, pages 15108–15116, 2024.

- Zhiqing Sun, Shengcao Cao, Yiming Yang, and Kris Kitani. Rethinking transformer-based set prediction for object detection. arXiv preprint arXiv:2011.10881, 2020.

- Lucas Tabelini, Rodrigo Berriel, Thiago M Paixao, Claudine Badue, Alberto F De Souza, and Thiago Oliveira-Santos. Keep your eyes on the lane: Real-time attention-guided lane detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 294–302, 2021.

- Lucas Tabelini, Rodrigo Berriel, Thiago M Paixao, Claudine Badue, Alberto F De Souza, and Thiago Oliveira-Santos. Polylanenet: Lane estimation via deep polynomial regression. In 2020 25th international conference on pattern recognition (ICPR), pages 6150–6156. IEEE, 2021.

- Jinsheng Wang, Yinchao Ma, Shaofei Huang, Tianrui Hui, Fei Wang, Chen Qian, and Tianzhu Zhang. A keypoint-based global association network for lane detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1392–1401, 2022.

- Yuxin Wu, Alexander Kirillov, Francisco Massa, Wan-Yen Lo, and Ross Girshick. Detectron2. https://github.com/facebookresearch/detectron2, 2019.

- Lingyu Xiao, Xiang Li, Sen Yang, and Wankou Yang. Adnet: Lane shape prediction via anchor decomposition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 6404–6413, 2023.

- Yujun Zhang, Lei Zhu, Wei Feng, Huazhu Fu, Mingqian Wang, Qingxia Li, Cheng Li, and Song Wang. Vil-100: A new dataset and a baseline model for video instance lane detection. In Proceedings of the IEEE/CVF international conference on computer vision, pages 15681–15690, 2021.

- Yunzhi Zhang and Xiuxiu Bai. Lanelm: Lane detection as language modeling. Preprints, April 2025.

- Tu Zheng, Hao Fang, Yi Zhang, Wenjian Tang, Zheng Yang, Haifeng Liu, and Deng Cai. Resa: Recurrent feature-shift aggregator for lane detection. In Proceedings of the AAAI conference on artificial intelligence, volume 35, pages 3547–3554, 2021.

- Tu Zheng, Yifei Huang, Yang Liu, Wenjian Tang, Zheng Yang, Deng Cai, and Xiaofei He. Clrnet: Cross layer refinement network for lane detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 898–907, 2022.

- Kunyang Zhou. Pplanedet, a toolkit for lane detection based on paddlepaddle. https://github.com/zkyseu/PPlanedet, 2022.

- Kunyang Zhou. Lane2seq: towards unified lane detection via sequence generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16944–16953, 2024.

- Kunyang Zhou and Rui Zhou. End-to-end lane detection with one-to-several transformer. arXiv preprint arXiv:2305.00675, 2023.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).