1. Introduction

Context:

In recent years, Artificial intelligence (AI) has become deeply integrated into software engineering, transforming the way developers write, test and maintaining code. One of the most prominent applications of AI in this domain is the emergence of AI-powered code assistants such as GitHub Copilot. These tools leverage large language models trained on vast repositories of code to provide real time code suggestions, generate functions, and accelerate development processes. As a result they are being rapidly adopted in both industry and academia, offering the promise of increased productivity, faster prototyping and reduced development effort. However, their growing influence has sparked critical conversations about the safety, reliability and ethical use of AI-generated code especially in environments where software security is paramount.

Problem/Gap:

Despite the impressive capabilities of AI code assistants, their integration into secure software development workflows introduces a number of unresolved risks and challenges. These tools rely on large-scale language models trained on billions of lines of code scrapped from public repositories, many of which contain insecure, outdated, or non-compliant practices. Because the models learn patterns statistically, without truly understanding programming logic or security policies. They may generate code that looks syntactically correct but is functionally unsafe. For instance, they can suggest hardcoded passwords improper input validation, or the use of deprecated cryptographic functions, practices that can lead to critical vulnerabilities if deployed in real-world systems.

What’s more concerning is that many developers, especially those with limited security expertise, may over-rely on these suggestions, trusting them as correct due to their apparent fluency and speed. This over-reliance can result in false sense of security, where insecure code is unknowingly accepted and integrated into production. Compounding the issue is the lack of built-in, automated security verification in most AI code assistants, unlike dedicated security tools, these assistants do not actively scan their own outputs for flaws. As organisations move towards DevSecOps and continuous deployment, this introduces a serious gap how can we leverage the productivity gains of AI code assistants without compromising the integrity and security of the software?

Objective:

The objective of this paper is to critically examine the dual impact of AI code assistants on secure software development, highlighting their potential to improve coding efficiency and developer productivity while also analysing the security risks they may introduce.

Background :

The rapid advancement of artificial intelligence has led to the emergence of AI-powered tools that are reshaping the software development landscape. Among these, AI code assistants such as GitHub Copilot, Amazon CodeWhisperer, and Tabnine have gained considerable popularity for their ability to assist developers by generating code suggestions, completing functions, and offering real-time support during programming tasks. These tools are built on large language models (LLMs) like OpenAI’s Codex, which are trained on vast datasets containing billions of lines of publicly available code. As a result, AI code assistants are capable of producing human-like, functional code with minimal developer input.

While these tools offer significant benefit, such as increased productivity, reduced development time, and lower entry barriers for novice programmer, they also raise critical questions about software security. Secure software development is a disciplined approach that integrates security practices throughout the software development lifecycle (SDLC), emphasizing threat mitigation, vulnerability prevention, and compliance with industry standards. The adoption of AI code assistants introduces new dynamics into this process. Because these tools are not inherently aware of context, application architecture, or security requirements, they may generate insecure code patterns or replicate vulnerabilities present in their training data.

As organizations increasingly adopt DevSecOps practices, where security is integrated continuously and automatically into development pipelines, the use of AI code assistants presents both opportunities and challenges. Understanding how these tools align with secure coding practices, and what risks they may pose if used carelessly, is essential for leveraging their potential without compromising security.

2. Software Development Life Cycle (SDLC)

Several software developments models or approaches have been proposed and applied during the last 30 years. Each model has its characteristics, advantages and disadvantages, but common to them all is that they are typically not focusing on security [

2] (p.1111). A selection of five prominent development models is briefly analysed and compared in [

3]. These are:

The waterfall model is the classical and most heavy-weight approach to software development, whereas the agile model is the most light-weight and flexible approach. We will briefly describe the waterfall and agile models, as they represent very different approaches to secure programming.

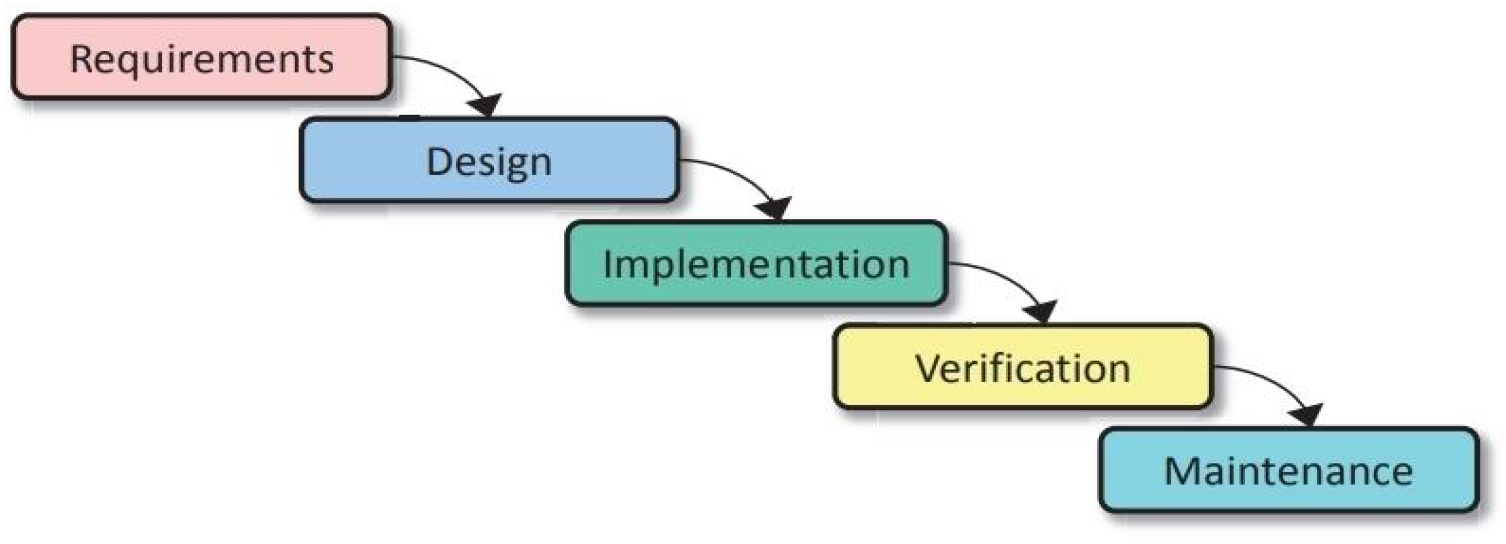

Figure 1 shows the waterfall model.

The basic idea behind the waterfall model is that the tasks of each phase must be fully completed before the next phase, which is symbolized by the waterfall metaphor where water only flows downwards. This also implies that the complete set of requirement is must be defined and fixed at the beginning of the project. In case it is necessary to revisit a previous stage, then a costly overhead is to be expected (metaphorically make water flow upwards), so this should be avoided. However, it is typically the case that requirements have to be changed in the middle of a software development project, so that many software development projects based on the waterfall model have suffered large blow-outs in cost and time.

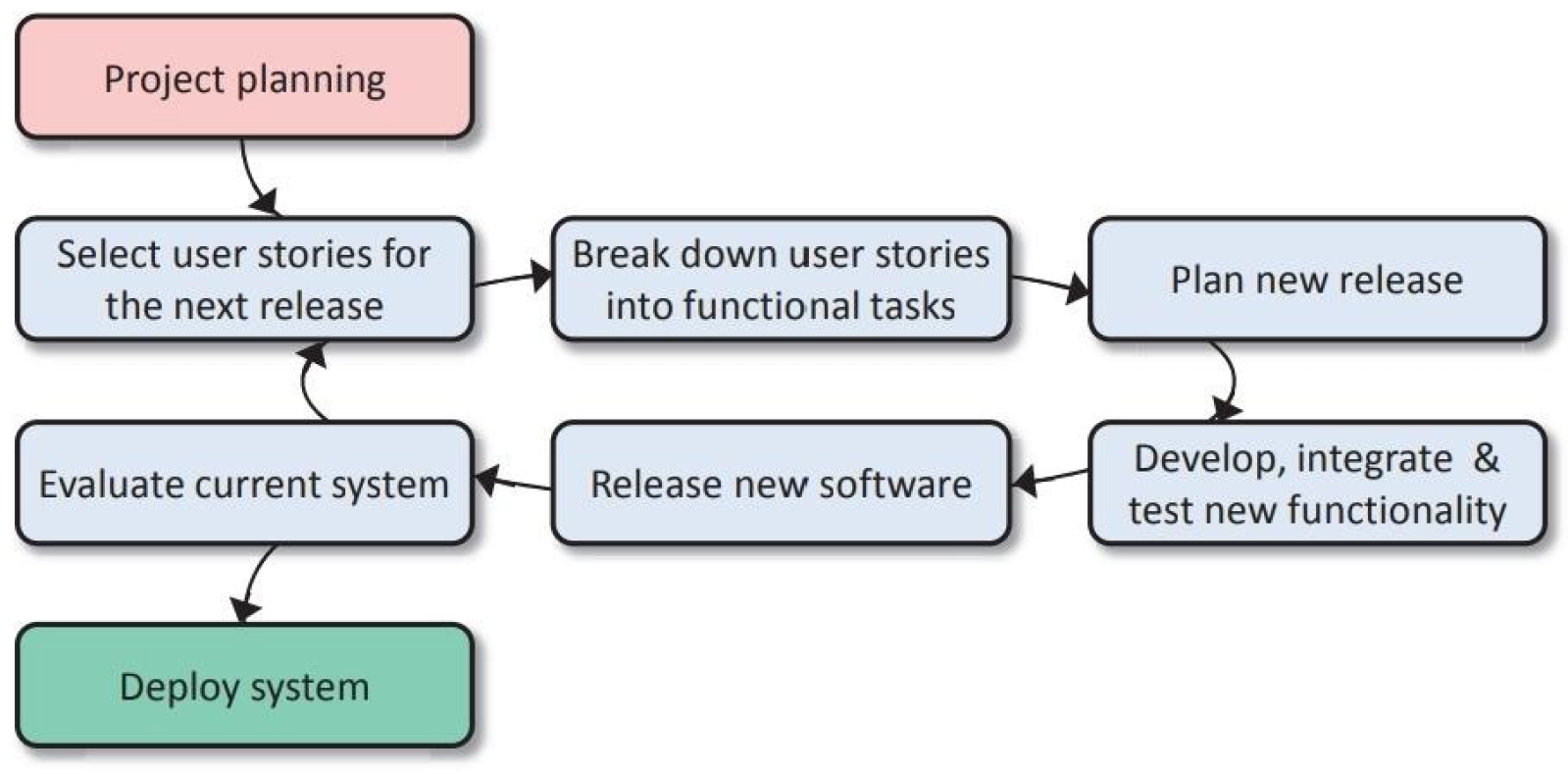

As a reaction to the rigid structure of the waterfall model several other models have been proposed, where the most recent and radical is the agile model (also known as XP: eXtreme Programming) illustrated in

Figure 2 below.

The basic idea behind the agile model is that new or evolving requirements can be specified in parallel with, or after already implemented requirements [

4]. This is possible by splitting the development into separate stories where each story covers a set of requirements implemented as functions that can be developed and tested more or less independently of other stories. Each cyclic iteration in the agile model is a sprint which can be completed in only a few weeks. The major drawback of the agile model is that it often does not scale well to large and complex development projects.

3. Overview of AI Code Assistants

Al code assistants are increasingly becoming integral tools in the software development lifecycle, designed to enhance coder productivity by leveraging machine learning and artificial intelligence technologies. These tools range from simple auto-completion features in integrated development environments (IDEs) to more advanced systems capable of generating entire code snippets or suggesting solutions to complex coding challenges. By analysing vast datasets of existing code, Al code assistants can offer recommendations that not only align with industry best practices but also cater to the specific contexts of the projects they are applied to. As a result, they facilitate a more streamlined programming process that allows developers to focus on higher-level design and architectural concerns, ultimately driving innovation and speeding up time-to-market for software products. Major players in this domain include tools such as GitHub Copilot, powered by OpenAl's GPT-3 model, and Tabnine, both of which utilize deep learning techniques to understand code patterns and suggest improvements. Moreover, their capabilities extend beyond mere code generation; they encompass bug detection, code review assistance, and integration with continuous integration / continuous deployment (CI/CD) pipelines, thereby creating a more comprehensive development environment. However, the deployment of Al code assistants is not without its challenges, as issues related to code quality, security, and ethical considerations arise, underscoring the necessity for ongoing research and guidelines to maximize the benefits while mitigating potential risks [

5].

4. The Role of AI in Software Development

Artificial Intelligence (Al) plays a transformative role in software development by augmenting human capabilities and streamlining various phases of the development lifecycle. Al-driven tools enhance developers' productivity through features such as code prediction, auto-completion, and error detection, which significantly reduce the time spent on routine coding tasks. Additionally, Al facilitates intelligent debugging and testing processes by analysing code patterns and identifying potential issues before they escalate into significant problems. Machine learning algorithms enable Al code assistants to learn from vast datasets, thus improving their performance over time and providing context-aware suggestions tailored to specific programming environments and user preferences [

6].

Moreover, Al-driven tools can assist in the management of software projects by optimizing resource allocation and predicting project timelines based on historical data. This predictive capability allows for better planning and execution, ultimately leading to the timely delivery of software products. Furthermore, Al fosters collaboration among development teams by enabling knowledge sharing and maintaining code consistency, as team members can easily access Al-generated insights and recommendations. By integrating Al into software development practices, organizations can not only enhance workflow efficiency but also foster a culture of innovation, allowing development teams to focus on more complex and creative aspects of software engineering [

7].

5. Benefits of AI Code Assistants

Al code assistants present numerous advantages that can significantly enhance the software development lifecycle. One of the most prominent benefits is increased efficiency, as these tools can automate repetitive coding tasks, thereby allowing developers to focus on more complex problems and innovations. By utilizing Al code assistants, developers can complete tasks more quickly, which ultimately leads to faster project delivery times. Furthermore, Al-driven suggestions facilitate rapid code completion, reducing the likelihood of bottlenecks in the development process. Additionally, Al code assistants contribute to enhanced code quality through their ability to suggest best practices and identify potential bugs during the coding process. This proactive approach not only reduces the likelihood of issues arising during later stages of development but also promotes adherence to coding standards and frameworks [

5]. The capability for rapid prototyping is another substantial benefit, as developers can quickly generate and iterate on ideas, adapting to feedback and market changes with agility. This iterative process is vital in today's fast-paced environment where time-to-market is crucial. Last but not least, Al code assistants promote knowledge sharing among team members and across organizations, by providing insights based on vast repositories of existing code and documentation [

8]. This access fosters a culture of collaboration and continuous learning, empowering developers to leverage collective expertise, which ultimately leads to a more proficient development team. In summary, the integration of Al code assistants into secure software development practices offers tangible benefits that can enhance efficiency, code quality, prototyping speed, and knowledge sharing, making them an invaluable asset in modern software engineering.

5.1. Increased Efficiency

The integration of Al code assistants into the software development process significantly enhances overall efficiency, allowing developers to focus on higher-level tasks and complex problem-solving. These Al tools expedite the coding process by auto-completing code snippets, suggesting contextually relevant functions, and generating boilerplate code, which accelerates development timelines and reduces the time spent on repetitive tasks. As a result, teams can shift their focus from mundane coding activities to critical thinking and architectural design, ultimately improving productivity [

6]. Furthermore, Al code assistants optimize workflows by providing instant access to documentation, enhancing knowledge retrieval and reducing the learning curve associated with unfamiliar programming languages or frameworks. The use of Al can also minimize errors caused by human fatigue during lengthy coding sessions, as these systems can identify and correct issues in real-time, enabling developers to produce higher volumes of accurate code within tighter deadlines. In industries where speed is crucial, such as fintech or e-commerce, the capability of Al to streamline the coding process not only fosters agility in product development but also facilitates quicker responses to market changes, thus providing a competitive edge [

9]. By enabling developers to achieve more in less time while maintaining high standards of work. Al code assistants contribute to a marked improvement in operational efficiency across development teams.

5.2. Enhanced Code Quality

The integration of Al code assistants into secure software development processes significantly enhances code quality through various mechanisms. Firstly, these tools employ sophisticated algorithms that analyze code for potential errors, inconsistencies, and deviations from best practices. This proactive detection of issues not only reduces the frequency of bugs but also assists developers in adhering to coding standards, resulting in cleaner and more maintainable codebases [

6]. Additionally, Al code assistants leverage vast databases of code examples and best practices, drawing from a wide range of programming styles and languages to suggest optimal solutions tailored to specific coding scenarios. This contextual awareness allows for the generation of high-quality code snippets that can be seamlessly integrated into existing projects. Furthermore. the implementation of real-time feedback mechanisms helps developers correct mistakes during the coding process, fostering a culture of continuous improvement and learning. The iterative nature of Al-assisted development also encourages thorough testing and refactoring, leading to more robust software products. Moreover, by providing insights into potential vulnerabilities and code inefficiencies, Al code assistants empower teams to construct secure applications from the ground up [

10]. As a result, the collaboration between human expertise and Al capabilities creates a formidable approach to programming that not only enhances the overall quality of the code but also instills confidence in the security and reliability of the software being developed.

5.3. Rapid Prototyping

The integration of Al code assistants into the software development lifecycle significantly enhances the rapid prototyping phase, thereby enabling developers to visualize and test their ideas more swiftly and effectively. Al code assistants streamline the process of creating prototypes by providing real-time code suggestions, automating routine coding tasks, and generating prototypes with minimal manual intervention. These tools enhance the speed of development by allowing developers to focus on higher-level design and functionality, rather than getting bogged down by the minutiae of syntax or language-specific implementations. Furthermore, the use of Al-enabled tools can facilitate quick iterations by enabling developers to make real-time adjustments and refinements based on immediate feedback. This iterative approach not only accelerates the pace of prototyping but also encourages creativity, allowing teams to explore multiple design alternatives quickly without extensive resource allocation [

6]. Additionally, the facilitation of this rapid prototyping capacity supports collaborative environments where teams can share prototypes and code modules, thus improving communication and alignment among stakeholders. However, it is critical to acknowledge that while Al can greatly enhance efficiency during the prototyping stage, developers must maintain vigilance over the Al's output to ensure that it aligns with project goals, adheres to security standards, and remains free from biases that could emerge from the training data used by the Al models [

9]. In conclusion, the advantages offered by Al code assistants in rapid prototyping signify a transformative shift in how software development can be approached, fostering an environment that prioritizes agility, innovation, and effective team collaboration.

5.4. Knowledge Sharing

The implementation of Al code assistants significantly enhances knowledge sharing within software development teams. These tools facilitate a collaborative environment by providing developers not only with code suggestions but also with context-aware insights, documentation, and coding best practices derived from vast datasets. By utilizing machine learning algorithms, Al code assistants can analyze code patterns and practices from successful projects across various domains, offering developers a repository of knowledge that can be referenced during coding sessions. This real-time provision of best practices ensures that even less experienced developers have access to high quality coding techniques, ultimately elevating overall team competence [

11]. Moreover, Al code assistants serve as dynamic learning platforms by capturing interactions and queries from developers, which can be used to inform future improvements in code generation and documentation. As developers engage with these tools, they can contribute their own insights and improvements to common code snippets, thereby fostering an organic flow of knowledge. The collaborative nature of this process not only enriches the tool's capability but also strengthens team cohesion as developers share experiences and solutions, resulting in a more knowledgeable workforce. Additionally, the ability of Al to suggest relevant resources and learning materials further empowers team members to continuously improve their skills and stay updated with the latest technologies [

12]. In this way, Al code assistants act as facilitators of knowledge transfer, bridging gaps in skill levels and promoting a culture of continuous learning within software development organizations.

6. Risks Associated with AI Code Assistants

The integration of Al code assistants in the software development lifecycle yields several noteworthy risks that must be carefully considered. One primary concern is the emergence of security vulnerabilities often introduced through the automation of code generation [

13]. Al models, trained on extensive datasets, may inadvertently produce code snippets that include exploitable flaws, such as hard-coded credentials or inadequate input validation, thus compromising the security of the application. Furthermore, these assistants may not be updated in real-time to reflect the latest security practices or vulnerabilities, leaving software at risk of exploitation due to outdated code suggestions. Additionally, the increasing reliance on Al code assistants can lead to significant dependency issues among developers [

13]. Over time, reliance on Al for generating boilerplate code or routine functions may result in developers losing their foundational problem-solving skills and deep understanding of programming principles, ultimately diminishing their capability to write secure and efficient code independently. Bias in Al models is another critical risk. Machine learning algorithms can inadvertently reflect the biases present in their training data, potentially leading to ethical and operational issues in the software developed [

14]. For example, if an Al code assistant is trained on a biased dataset, it might promote certain coding conventions or algorithms that are not universally applicable, potentially marginalizing less common but effective approaches. This underscores the importance of utilizing diverse and representative datasets to mitigate bias, which, if left unaddressed, can lead to inconsistent software quality and compromise overall project integrity.

6.1. Security Vulnerabilities

The integration of Al code assistants in software development introduces several security vulnerabilities that must be addressed to ensure the protection of sensitive data and overall system integrity. One critical concern is the potential for generated code to contain exploitable flaws, often stemming from the training datasets utilized by these Al models. Which may comprise open-source repositories with known vulnerabilities. For instance, an Al assistant trained on code that includes insecure authentication mechanisms might replicate these weaknesses in new applications [

14]. Furthermore, the opaque nature of many Al algorithms can obscure understanding of how code suggestions are derived, inhibiting the identification of potential security risks before deployment. Additionally, reliance on Al generated code can lead to a lack of thorough review processes, as developers might assume the code is inherently secure, thus increasing the risk of deploying unsafe software. Another significant vulnerability arises from the possibility of adversarial attacks, where malicious actors might intentionally craft inputs designed to confuse the Al model, resulting in flawed code that could facilitate security breaches. As Al code assistants become more prevalent, the need for rigorous security assessments, continuous training incorporating secure coding practices, and human oversight becomes paramount to mitigate these risks and foster a more secure software development environment [

15]. Ultimately, while Al code assistants can enhance productivity, their deployment in sensitive projects mandates a careful balancing act between leveraging automation and maintaining robust security protocols.

6.2. Dependency Issues

The integration of Al code assistants in software development raises significant dependency issues that could adversely affect project outcomes and overall system integrity One primary concern is the reliance on third party libraries and frameworks suggested or automatically integrated by Al systems. While these libraries can accelerate development and reduce the time spent on coding, the risk arises when developers become overly dependent on these tools without fully understanding their functionality or underlying security implications. This dependence might lead to scenarios where developers overlook critical vulnerabilities that may exist in the utilized libraries, which could subsequently introduce risks into the software. Additionally, Al code assistants often rely on large datasets to provide suggestions, and if these datasets are not current or comprehensive, the code generated could be misaligned with the latest security practices or could incorporate outdated methods that expose the application to new types of threats [

6]. Furthermore, dependency on proprietary solutions affects the flexibility and adaptability of the development process. Organizations may find themselves locked into specific vendors or tools, limiting their ability to pivot to better solutions as technology evolves. Moreover, a lack of transparency regarding how these Al systems produce code suggestions raises concerns about the predictability of outcomes, as developers may feel compelled to trust the Al-generated recommendations without adequate scrutiny. Consequently, this reliance can diminish critical thinking and problem solving skills among developers, ultimately impacting their ability to identify and resolve dependency-related issues. To mitigate these risks, it is imperative for software development teams to foster a balanced approach that encourages the use of Al code assistants while maintaining a strong foundation in the underlying coding principles and an acute awareness of the security implications associated with external dependencies [

14].

6.3. Loss of Developer Skills

The integration of Al code assistants in software development raises concerns regarding the potential erosion of essential programming skills among developers. As these tools increasingly automate repetitive coding tasks and enhance productivity, there is a significant risk that developers may become overly reliant on Al-generated suggestions and code completions. This reliance could lead to a phenomenon known as 'skill degradation, Where developers, particularly those in the early stages of their careers, fail to develop a deep understanding of programming languages algorithms, and problem solving techniques essential for effective software design. Furthermore, as Al code assistants handle more complex tasks, developers may find themselves bypassing fundamental coding principles, resulting in a diminished capacity to debug, optimize, and innovate beyond the capabilities of the Al tools. Over time, this skill loss could create a workforce that is not only less proficient in traditional programming but also ill-equipped to understand and address the underlying challenges of secure software development [

6]. To mitigate this risk, organizations should encourage a balanced approach where developers refine their skills alongside utilizing Al tools, ensuring that the maintain the necessary competencies to adapt to evolving technologies and security threats in software development.

6.4. Bias in AI Models

Bias in Al models is a significant concern in the context of secure software development, as it can lead to erroneous outputs that may introduce vulnerabilities or compromise the integrity of the software [

6]. Al code assistants are trained on vast datasets that often reflect existing societal prejudices, which can manifest in various ways, such as favouring certain coding paradigms, languages, or frameworks over others based on the data's demographic representation. This bias can result in less diverse solutions that may not adequately address the needs of a broad user base, potentially alienating specific groups or creating unintentional security oversights. Moreover, bias in Al can undermine developers' trust as they may rely on Al-generated code that inadvertently perpetuates flawed assumptions or stereotypes, thereby affecting the quality of outputs and leading to security vulnerabilities [

6]. For example, if an Al assistant favours specific coding conventions that lack inclusivity or robustness against diverse use cases, the resulting applications may be prone to exploitation by malicious actors who take advantage of these gaps. Therefore, it is vital for organizations employing Al code assistants to actively evaluate the training datasets for biases, implement regular assessments of the models for fairness, and involve diverse teams in the development process to mitigate these risks and ensure the creation of secure, equitable software solutions.

7. Case Studies of AI Code Assistants in Action

The application of Al code assistants has been demonstrated through various case studies, revealing both successful implementations and challenges faced in real-world software development environments. One notable example is the integration of GitHub Copilot within the development workflow of several organizations, such as Shopify and GitHub itself. These companies reported significant productivity boosts, with developers able to write code up to 30% faster with the assistance of this tool [

10]. The Al's ability to suggest code snippets based on context not only expedited routine tasks but also facilitated rapid prototyping, allowing teams to experiment with multiple solutions without extensive overhead Additionally, data from research performed by Stanford University highlighted that teams using Al code assistants produced code with fewer bugs, enhancing overall code quality compared to those relying solely on traditional development practices [

16].

Conversely, the implementation of Al code assistants has not been without its obstacles. A prominent challenge arose during the deployment of Al-based tools in secure environments, where strict compliance with security protocols is paramount. For instance, a financial institution that utilized an Al code assistant observed that while the tool enhanced speed and efficiency, it inadvertently introduced security vulnerabilities due to its dependence on existing codebases, some of which contained outdated security practices [

15]. Moreover, the lack of human oversight in code validation led to instances where the Al generated solutions that were not fully aligned with the organization's security policies. This scenario underscores the importance of maintaining rigorous checks and balances when integrating Al technologies into sensitive environments [

17]. These case studies illustrate the dual nature of Al code assistants, highlighting their capabilities to drive innovation while simultaneously emphasizing the necessity for developers and organizations to remain vigilant against inherent risks.

7.1. Successful Implementations

Several organizations have successfully integrated Al code assistants into their software development workflows, resulting in significant improvements in both productivity and code quality. A notable example is GitHub Copilot, which utilizes OpenAl's Codex model to assist developers in real-time. Companies that have adopted GitHub Copilot report a reduction in development time by up to 50%, allowing teams to expedite projects that otherwise could have taken weeks or months to complete [

18]. In a case study involving Microsoft, teams using Copilot were able to address coding tasks more efficiently, with improvements noted in debugging and code review processes. Additionally, companies such as Facebook and Google have implemented Al tools like DeepCode and CodeGuru to enhance their code review processes, effectively reducing security vulnerabilities and improving adherence to best practices in coding standards. These tools leverage machine learning to analyze vast code repositories and provide actionable insights which in turn allows developers to write more secure and optimized code [

19]. Furthermore, a financial technology firm that adopted an Al code assistant reported not only enhanced code quality but also a drastic reduction in bugs in production systems, leading to increased customer satisfaction. Such implementations illustrate that, when used effectively, Al code assistants can serve as invaluable resources that augment human intelligence, streamline the development process, and ultimately contribute to a more secure software environment [

20].

7.2. Challenges Faced

The implementation of Al code assistants in secure software development is not without its challenges, many of which stem from the intrinsic complexities of integrating advanced technologies into established workflows. One significant challenge is the integration of Al tools with existing software development environments, which may require significant modifications to coding practices and team dynamics, often leading to resistance from developers who may be apprehensive about adopting unfamiliar technologies. Additionally, ensuring the security of the development environment becomes increasingly complicated as Al code assistants could inadvertently introduce vulnerabilities by utilizing outdated libraries or generating insecure code patterns [

15]. Furthermore, the reliance on Al can lead to a dilution of software engineering skills among developers, as they may become overly dependent on these tools for code generation and problem-solving, creating potential skill gaps that can hinder long-term project sustainability. The issue of bias in Al models poses another significant challenge, as the data sets used to train these models may reflect existing biases present in the coding community, which could translate into biased outputs, thereby affecting the quality and integrity of the code produced [

21]. Finally, the dynamic nature of software security threats necessitates that Al code assistants must be constantly updated and tuned, presenting a logistical challenge for teams that need to balance the dual objectives of incorporating innovative technologies and maintaining the robustness and security of their software applications [

21]. Addressing these challenges requires strategic planning, ongoing education and collaborative efforts between development teams and Al tool providers to create effective solutions that enhance rather than hinder secure software development.

8. Best Practices for Secure AI Code Assistant Usage

To maximize the secure use of Al code assistants, organizations should implement a series of best practices that address both security and operational aspects. One critical practice is to conduct regular security audits on the Al code assistant's codebase, APls, and configurations to identify potential vulnerabilities before they are exploited. This involves not only reviewing the Al's interactions and outputs but also assessing the data it is trained on, ensuring it aligns with security standards and does not inadvertently introduce bias. Additionally, human oversight is paramount; developers should always validate and review the code produced by Al assistants, integrating this review process into their workflows to prevent blind trust in the Al-generated outputs. This human-Al collaboration leverages the strengths of both entities, allowing for more thorough scrutiny of coding practices and adherence to security protocols [

6]. Another essential practice is to ensure continuous training and updates of the Al mode to keep pace with evolving security threats and development standards. By regularly updating the Al's training data with the latest secure coding techniques and frameworks organizations can significantly diminish the risks associated with outdated or flawed outputs. It is also vital to implement version control for the Al's configurations and updates to maintain a clear record of changes made and to facilitate rollback if vulnerabilities are introduced [

6]. By adhering to these best practices organizations can effectively mitigate risks while harnessing the benefits of Al code assistants in their secure software development processes.

8.1. Regular Security Audits

Regular security audits are integral to leveraging Al code assistants in secure software development, as they systematically assess the security posture of both the Al tools and the software they help to create. These audits involve a thorough evaluation of the code produced with Al assistance, identifying potential security vulnerabilities that may arise from automated code suggestions. Conducting these evaluations at set intervals ensures that security metrics are consistently monitored and helps teams recognize emerging threats that could exploit vulnerabilities introduced by the Al code [

15]. Furthermore, regular audits facilitate compliance with industry standards and regulations that mandate security due diligence, thereby fostering trust among stakeholders. During these assessments, it is essential to adopt a multi-faceted approach including static and dynamic analysis, penetration testing, and code review by experienced developers who can decipher nuanced security concerns that simple automated checks may overlook. With the rapid evolution of threats in the cybersecurity landscape, periodic audits are crucial, as they not only assess current vulnerabilities but also enforce the assimilation of lessons learned from past security incidents [

15]. By maintaining a robust schedule of security audits, organizations can ensure that their implementations of Al code assistants contribute positively to their security frameworks rather than detract from them, thereby safeguarding sensitive data and maintaining operational integrity.

8.2. Human Oversight

Human oversight is an indispensable element in the deployment of Al code assistants within secure software development environments. Despite the sophisticated algorithms that underpin these Al tools, human intervention is crucial to ensure that the generated code adheres to security best practices and aligns with the specific requirements of the organization. Developers must critically evaluate the output provided by Al code assistants, as automated suggestions may inadvertently introduce vulnerabilities or fail to consider the broader context of the project [

14]. Continuous human oversight allows for the identification of potential risks relating to logic errors, security flaws, or misinterpretation of specifications that Al models may overlook. Moreover, it is essential for developers to remain engaged in the coding process to retain their skillsets and maintain a comprehensive understanding of the code being developed. This human involvement can be facilitated through code reviews, pair programming, and collaborative discussions, wherein developers provide the necessary context that Al may lack [

15]. Furthermore, human oversight helps in mitigating the risk of bias that may reside within Al models, ensuring that the software developed is fair and does not propagate existing societal biases [

15]. In essence, while Al code assistants can significantly enhance the efficiency and quality of code production, the presence of human expertise and oversight is vital to safeguard against security vulnerabilities and ethical pitfalls, ultimately contributing to a more robust and secure software development lifecycle.

8.3. Continuous Training and Updates

Continuous training and updates are vital for the effective utilization of Al code assistants in secure software development. As the landscape of programming languages, frameworks, and security threats evolves, Al models must be regularly retrained with new data to maintain their effectiveness and relevance. This involves feeding the Al system with diverse, high-quality code examples and security best practices that reflect contemporary coding standards and vulnerabilities [

15]. Additionally, the incorporation of real-time feedback from developers who utilize these assistants can significantly enhance the accuracy and reliability of the Al's outputs. Regular updates to the underlying algorithms are also essential, as they can introduce improvements that increase efficiency and reduce biases or errors in code generation. It is crucial that organizations implement a structured approach to this continuous learning cycle, involving regular assessments to identify knowledge gaps and retraining schedules that align with the pace of technological advancements. The integration of automated update mechanisms can facilitate a smoother operational flow, ensuring that Al code assistants are not only maintaining high performance but also staying current with the latest security patches and coding practices [

15]. Therefore, continuous training and updates should be framed as integral elements of an organization's strategy for employing Al code assistants, fostering a culture of adaptability and responsiveness amidst the rapidly changing field of software development.

9. Ethical Considerations

The integration of Al code assistants into secure software development raises several ethical considerations that must be addressed to ensure responsible usage. One primary concern is accountability in Al decisions, where the question arises as to who is responsible when Al-generated code results in security vulnerabilities or other consequences. Unlike human developers, Al systems, by their nature, lack direct accountability mechanisms; hence, organizations must foster a culture of shared responsibility between software developers and the Al systems they employ. This necessitates clear policies and guidelines that stipulate the roles and responsibilities when utilizing Al tools in development processes [

15]. Transparency in Al development is another crucial aspect, as the algorithms underlying Al code assistants often operate as 'black boxes,' making it difficult to understand how decisions are made. To enhance trust and reliability, developers and organizations should document the decision making processes, particularly in the refinement of Al models and the datasets used for their training . Moreover, ethical considerations extend to the biases inherent in the Al systems; if the datasets used to train these systems include biased or unrepresentative samples, the Al may perpetuate or amplify these biases in code generation. Hence, rigorous evaluation of training datasets is essential alongside the implementation of fair and equality-focused measures in Al development [

15]. Additionally, the ongoing need for ethical training for developers who work with Al tools cannot be overstated. Ensuring that developers are equipped with the knowledge to recognize potential ethical dilemmas, such as privacy violations and the misuse of Al capabilities, is imperative for fostering a more secure and ethically sound software development environment. By addressing these ethical considerations organizations can better navigate the complexities introduced by Al code assistants, ultimately fostering a development ecosystem that prioritizes not only efficiency and innovation but also ethical integrity and accountability [

21].

9.1. Accountability in AI Decisions

As organizations increasingly integrate Al code assistants into their secure software development processes, the question of accountability for the decisions made by these Al systems becomes paramount. Al code assistants, while designed to enhance productivity and improve code quality, must operate within a framework that clearly delineates responsibility for outcomes. When an Al system generates code or makes recommendations, it is essential to ascertain who is accountable for the potential risks and errors that may arise from its usage. This accountability concerns not only the developers who employ Al tools but also the organizations that deploy these technologies. Establishing a robust accountability model involves a proactive approach towards the development and deployment of Al systems, necessitating clear governance policies and oversight mechanisms. Organizations should implement best practices such as maintaining detailed logs of Al decisions, documenting the rationale behind underlying algorithms, and ensuring that human oversight remains a crucial component in the decision-making process [

23]. Furthermore, to mitigate liability and enhance trust, companies must clearly communicate their accountability frameworks to stakeholders. This may include outlining the limitations of Al tools, emphasizing the necessity of human intervention in critical decision-making, and ensuring compliance with existing legal frameworks [

15]. As Al technologies advance, the complexities surrounding accountability will likewise evolve, necessitating ongoing dialogue among developers, regulators and ethicists to foster an ecosystem where Al can be leveraged responsibly while navigating the ethical intricacies associated with its deployment.

9.2. Transparency in AI Development

Transparency in Al development is a critical factor in ensuring accountability, fostering trust, and enhancing the overall effectiveness of Al systems, particularly in the context of software development. As Al code assistants become increasingly integrated into the development lifecycle, it is essential to provide clear insights into how these systems operate including their underlying algorithms, decision-making processes, and training data. This transparency allows developers to understand the rationale behind the Al's suggestions and recommendations, thereby enabling them to assess the reliability and applicability of those outputs within their specific project parameters. Furthermore, transparency can help expose any biases present in the Al models, as well as illuminate how these biases may impact the quality and security of the generated code. Stakeholders can better navigate the implications of using Al when they possess ready access to information regarding the datasets utilized to train the models, the methodologies employed in their development, and the processes in place for ongoing evaluation and refinement [

23]. Additionally, implementing transparent practices encourages a culture of collaboration between Al developers, users, and regulatory bodies. Open channels of communication can promote a broader dialogue concerning ethical considerations and collective responsibilities, leading to industry-wide standards and best practices [

15]. Ultimately, transparency not only strengthens the user's confidence in Al code assistants but also serves as a pivotal mechanism to mitigate potential risks and enhance the overall integrity of secure software development.

10. Regulatory and Compliance Issues

The integration of Al code assistants into secure software development raises significant regulatory and compliance considerations that organizations must navigate to ensure legal and ethical adherence. Data privacy regulations such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States, impose strict guidelines on how personal and sensitive data is collected, processed, and stored. Al code assistants often rely on vast datasets, which may include identifiable information, necessitating that companies take proactive measures to anonymize data and secure informed consent from users when applicable [

21]. Moreover, industry-specific standards, such as those outlined by the Payment Card Industry Data Security Standard (PCI DSS) for payment systems or the Health Insurance Portability and Accountability Act (HIPAA) in healthcare, dictate technical and operational safeguards that must be followed [

22]. Compliance with these regulations is not only a legal obligation but also crucial in maintaining trust and reputation among users and stakeholders.

Furthermore, the evolving nature of AI technology complicates compliance efforts, as regulatory frameworks often lag behind technological advancements. Organizations must engage in continuous monitoring of legislative changes and engage with regulatory bodies to advocate for standards that are both operationally feasible and effectively safeguard the interests of consumers [

22]. Those utilizing Al code assistants should implement a robust framework for compliance management that includes regular training for employees on legal implications, maintaining up-to-date documentation of Al usage practices, and conducting audits to ensure adherence to relevant regulations. By taking these proactive steps, organizations can mitigate legal repercussions and enhance their overall security posture.

10.1. Data Privacy Regulations

In the context of secure software development, data privacy regulations play a crucial role, particularly when integrating Al code assistants into the development lifecycle. Compliance with laws such as the General Data Protection Regulation (GDPR) in the European Union and the California Consumer Privacy Act (CCPA) in the United States mandates that organizations carefully handle personal data, ensuring its security and confidentiality. These regulations require businesses to implement robust data processing practices, which extend to any data utilized by Al code assistants, especially when these tools access or analyze user data. Organizations must establish clear data governance policies to delineate what information is collected, how it is stored, and the duration for which it is retained [

21]. Moreover, transparency becomes a key aspect of compliance; stakeholders must be informed about data usage, and consent must be obtained where necessary. Additionally, the application of privacy-by-design principles ensures that data protection measures are integrated into software development from the outset, reducing the risk of breaches and enhancing trust among users. Al code assistants, by their nature, may inadvertently introduce risks, such as the potential for data leakage or unauthorised access to sensitive information during the code process. As a or unauthorized access to sensitive information during the coding process [

25]. As a result, organizations utilizing these tools must conduct thorough assessments and regular audits to ensure that their deployment aligns with applicable data privacy regulations. They must also equip their development teams with training and resources to address privacy issues while fostering a culture of responsibility. Maintaining compliance not only safeguards user data but also mitigates potential legal ramifications and reputational damage, thereby aligning the use of Al code assistants with broader organizational goals of security and trustworthiness in software development.

10.2. Industry Standards

In the context of secure software development, adherence to established industry standards is paramount for the effective utilization of Al code assistants. Prominent standards, such as the ISO/IEC 27001 for information security management systems and the NIST Cybersecurity Framework, provide guidelines that help organizations ensure that their software development processes integrate security comprehensively. Furthermore, the OWASP (Open Web Application Security Project) Top Ten offers a set of best practices specifically aimed at addressing common security vulnerabilities in software applications which can be highly relevant when employing Al tools that generate or suggest code. As Al code assistants are increasingly involved in the coding process, it is crucial for organizations to ensure these tools comply with relevant standards to mitigate risks associated with misuse or misconfiguration. Additionally, organizations should adopt a framework for Continuous Integration and Continuous Deployment (CI/CD) that aligns with industry standards, incorporating automated security testing protocols that evaluate the outputs of Al systems. The importance of training Al code assistants using secure code practices as defined by the CERT Secure Coding Standards cannot be overstated, as it directly influences the security posture of the generated code. Compliance with these industry standards not only fosters greater trust in the development process but also enhances accountability, ensuring that Al-enhanced software solutions remain aligned with both legal and ethical expectations. By systematically integrating these recognized standards into the deployment and operation of Al code assistants, developers can cultivate a security-oriented culture that significantly reduces the potential risks associated with Al in software development.

11. Future Trends in AI Code Assistants

The future of Al code assistants is poised for transformative advancements, driven by rapid developments in artificial intelligence technologies. As machine learning algorithms become increasingly sophisticated, we can anticipate significant improvements in their ability to understand and generate code. This evolution will be marked by the emergence of context-aware Al systems that can better comprehend the specific requirements and constraints of software projects, leading to more accurate and relevant code suggestions. Furthermore, advancements in natural language processing will enhance the interaction between developers and Al assistants, allowing for more intuitive and conversational coding experiences. In addition, we are likely to see a surge in the integration of Al code assistants with a variety of development tools and platforms, streamlining workflows and enhancing productivity. These integrations could facilitate real-time collaboration between teams, helping to bridge geographic and temporal divides in software development [

15]. Moreover, as organizations increasingly adopt DevOps practices, Al code assistants may become essential components in continuous integration and deployment (CI/CD) pipelines, automating routine tasks and enhancing the overall development lifecycle [

22]. On the security front, future Al code assistants may include improved mechanisms for detecting vulnerabilities, thus contributing to safer software development practices. Anticipated innovations will likely encompass adaptive learning capabilities, where Al tools evolve based on user feedback and project data. optimizing performance over time. The combination of these advancements will not only enhance the efficiency and effectiveness software development but also redefine the collaborative landscape between human developers and Al tools.

11.1. Advancements in AI Technology

The field of artificial intelligence has witnessed remarkable advancements that significantly impact software development, particularly through the evolution of Al code assistants. One notable progression is the integration of sophisticated machine learning algorithms, including deep learning and natural language processing (NLP), which enhance the ability of Al systems to understand and generate code with increased accuracy [

15]. For example, pre-trained models like OpenAl's Codex have been developed to comprehend various programming languages, providing developers with contextually relevant code suggestions that not only expedite the coding process but also reduce the likelihood of introducing errors. Furthermore, advancements in transfer learning enable Al assistants to adapt their knowledge across different domains, facilitating code assistance even in less common programming languages or niche applications. These technological strides are complemented by improvements in computational power, exemplified by the increasing availability of cloud-based Al services, which allow for more complex analyses and faster processing of coding tasks [

15]. Another significant advancement involves the use of Al-driven analytics to monitor code performance and detect patterns that might indicate potential vulnerabilities or inefficiencies. This proactive approach leads to enhanced code quality and security, as developers can quickly address issues before they escalate into critical problems. As Al continues to evolve, the incorporation of explainability features in Al models is also gaining attention, allowing developers to understand the rationale behind specific code suggestions, thereby fostering trust and facilitating informed decision-making in the software development lifecycle [

22]. Collectively, these advancements not only enhance the capabilities of Al code assistants but also lay the groundwork for a future in which Al plays an integral role in secure and efficient software development.

11.2. Integration with Development Tools

The integration of Al code assistants with development tools signifies a transformative shift in software development practices. Modern Integrated Development Environments (IDEs) such as Visual Studio Code, IntelliJ IDEA and GitHub's Copilot provide seamless integration capabilities, allowing developers to leverage Al assistance directly within their workflow. This integration enhances the coding experience by offering real-time suggestions, auto-completions, and code reviews, which can significantly expedite the development process. Furthermore, these tools are designed to analyze the existing codebase, thereby allowing the Al to provide context-aware guidance that aligns with the specific coding standards and best practices of the organization [

9].

Moreover, the integration facilitates a more collaborative environment among developers. With version control systems such as Git, Al assistants can help identify changes and suggest optimal merges or conflict resolutions by analysing code patterns across various projects. This also leads to better maintenance of code quality, as Al can flag potential issues or vulnerabilities before they progress to production [

9]. By being integrated into CI/CD pipelines, Al code assistants can ensure that code passing through the stages of development adheres to predefined security protocols and compliance measures. However, organizations must be cautious about the dependency on these integrated systems, as relying too heavily on Al suggestions could potentially lead to developers underutilizing their problem-solving skills [

15]. Therefore, striking a balance between leveraging Al tools and maintaining human oversight is crucial for optimizing both productivity and security in software development.

12. Conclusion

The integration of Al code assistants in software development presents a double-edged sword, offering both significant advantages and considerable risks. On one hand, these tools enhance productivity by automating routine coding tasks, thereby allowing developers to focus on more complex and creative elements of software design. They facilitate rapid prototyping and knowledge sharing, contributing to improvements in code quality through real-time feedback and suggestions [

10]. However, the reliance on Al code assistants is not without its pitfalls. The introduction of these technologies can lead to potential security vulnerabilities, as the generated code may inadvertently introduce flaws that developers may overlook. Additionally, the dependency on Al tools can diminish critical problem-solving skills among developers, as reliance on automation may stifle the acquisition and application of foundational knowledge [

24]. Moreover, issues such as bias in Al models can threaten the integrity of the software being developed, leading to ethical concerns. As the field progresses, it is crucial for organizations to adopt best practices to mitigate these risks. This includes conducting regular security audits, ensuring human oversight in the coding process, and committing to continuous training and updates of Al technologies [

24]. As the technology continues to evolve, stakeholders need to remain vigilant and proactive, balancing the benefits of improved efficiency and innovation with the imperative of maintaining security and ethical standards in software development.

References

- Jøsang, A., Ødegaard, M., & Oftedal, E. (2015). Cybersecurity through secure software development. In Information Security Education Across the Curriculum: 9th IFIP WG 11.8 World Conference, WISE9, Hamburg, Germany, May 26–28, 2015, Proceedings 9 (pp. 53-63). Springer International Publishing. [CrossRef]

- Shon Harris. CISSP All-in-One Exam Guide, Sixth Edition. McGraw-Hill, 2013.

- N.M.A. Munassar and A. A. Govardhan. A Comparison between Five Models of Software Engineering. Int. Journal of Computer Science Issues (IJCSI), 7(5), September 2010.

- K. Beck et al. Manifesto for Agile Software Development. Online article at: www.agilemanifesto.org, February 2001.

- A Imam - 2024 - trepo.tuni.fi. INTEGRATING AI INTO SOFTWARE DEVELOPMENT LIFE CYCLE.

- N Sherje - Research Journal of Computer Systems and …, 2024 - technicaljournals.org. Enhancing Software Development Efficiency through AI-Powered Code Generation.

- MJ Karamthulla, A Tadimarri, R Tillu… - International Journal …, 2024 - researchgate.net. Navigating the future: AI-driven project management in the digital era.

- A Soni, A Kumar, R Arora, R Garine - Tools, and Impact Analysis …, 2023 - papers.ssrn.com. Integrating AI into the Software Development Life Cycle: Best Practices, Tools, and Impact Analysis.

- S Pangavhane, G Raktate, P Pariane… - … on Decision Aid …, 2024 - ieeexplore.ieee.org. AI-Augmented Software Development: Boosting Efficiency and Quality. [CrossRef]

- A Sergeyuk, Y Golubev, T Bryksin, I Ahmed - Information and Software …, 2025 - Elsevier. Using AI-based coding assistants in practice: State of affairs, perceptions, and ways forward. [CrossRef]

- Sundaresan, Z Zhang - International journal of organizational …, 2022 - emerald.com. AI-enabled knowledge sharing and learning: redesigning roles and processes. [CrossRef]

- D Ajiga, PA Okeleke, SO Folorunsho… - … Software Engineering …, 2024 - researchgate.net. Enhancing software development practices with AI insights in high-tech companies. [CrossRef]

- H Hajipour - 2024 - publikationen.sulb.uni-saarland.de. AI code generation models: opportunities and risks. [CrossRef]

- J Vaidya, H Asif - Ieee Spectrum, 2023 - ieeexplore.ieee.org. A critical look at ai-generate software: Coding with the new ai tools is both irresistible and dangerous. [CrossRef]

- H Sheggam, X Zhang - 2024 IEEE Long Island Systems …, 2024 - ieeexplore.ieee.org. Exploring Security Risks and Mitigation Strategies in AI Code Helpers. [CrossRef]

- JT Liang, C Yang, BA Myers - Proceedings of the 46th IEEE/ACM …, 2024 - dl.acm.org. A large-scale survey on the usability of ai programming assistants: Successes and challenges. [CrossRef]

- JH Klemmer, SA Horstmann, N Patnaik… - … Security, 2024 - dl.acm.org. Using ai assistants in software development: A qualitative study on security practices and concerns. [CrossRef]

- R Pandey, P Singh, R Wei, S Shankar - arXiv preprint arXiv:2406.17910, 2024 - arxiv.org. Transforming software development: Evaluating the efficiency and challenges of github copilot in real-world projects. [CrossRef]

- F Song, A Agarwal, W Wen - arXiv preprint arXiv:2410.02091, 2024 - arxiv.org. The impact of generative AI on collaborative open-source software development: Evidence from GitHub Copilot. [CrossRef]

- D Yeverechyahu, R Mayya… - arXiv preprint arXiv …, 2024 - arxiv.org. The impact of large language models on open-source innovation: Evidence from GitHub Copilot. [CrossRef]

- S Ambati - 2023 - harvest.usask.ca. Security and Authenticity of AI-generated code.

- SR Gopireddy - Journal of Scientific and Engineering Research, 2024 - researchgate.net. AI-Powered Code Review and Vulnerability Detection in DevOps Pipelines.

- C Novelli, M Taddeo, L Floridi - Ai & Society, 2024 - Springer. Accountability in artificial intelligence: what it is and how it works. [CrossRef]

- HP Kothandapani - Emerging Science Research, 2025 - emergingpub.com. AI-Driven Regulatory Compliance: Transforming Financial Oversight through Large Language Models and Automation.

- TN Veena, A Chakraborty - scitepress.org. Securing Data Privacy, Preserving Trade Secrets: India Tech. [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).