Submitted: 26 June 2025

Posted: 27 June 2025


Abstract

Keywords:

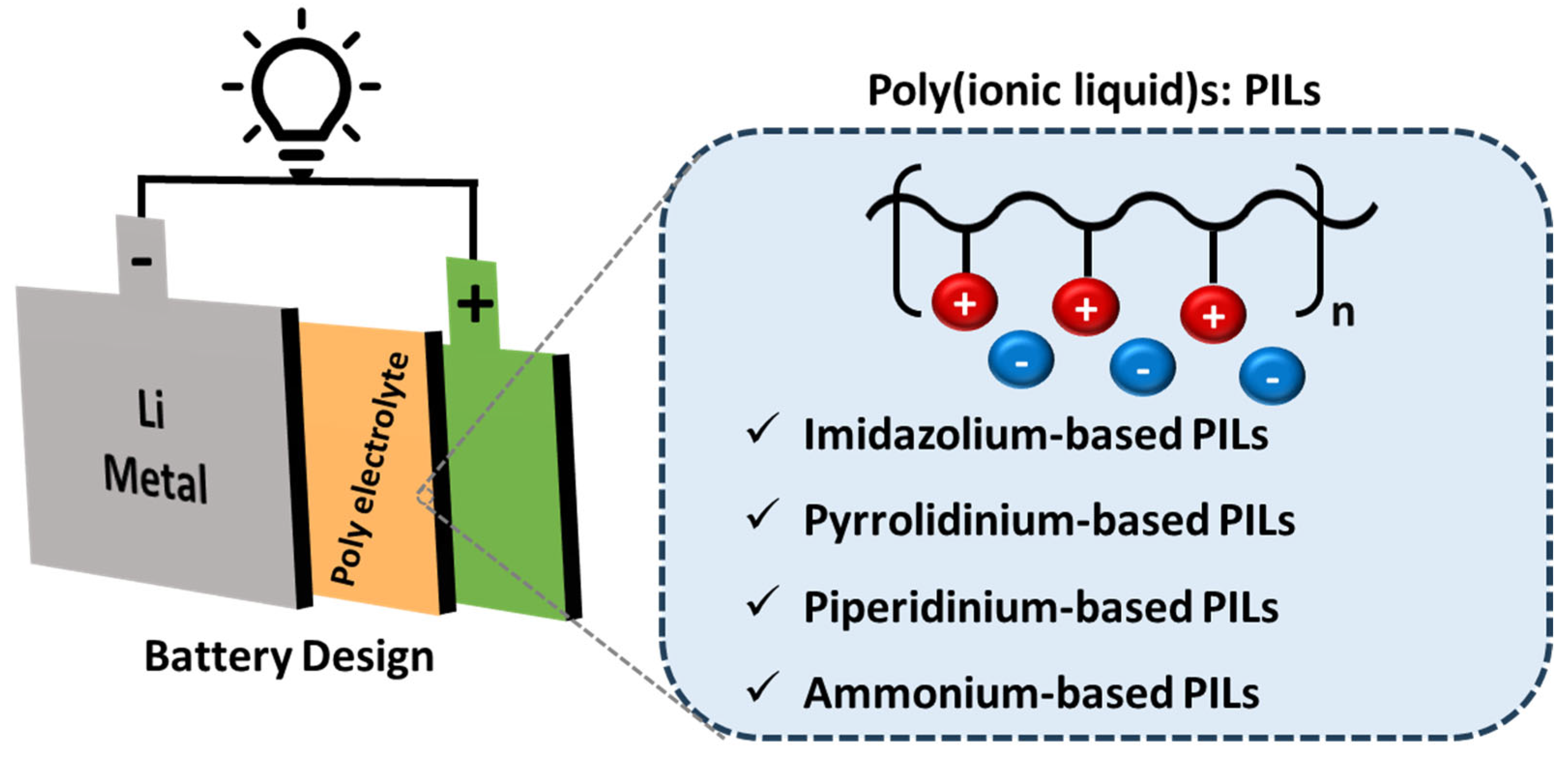

1. Introduction

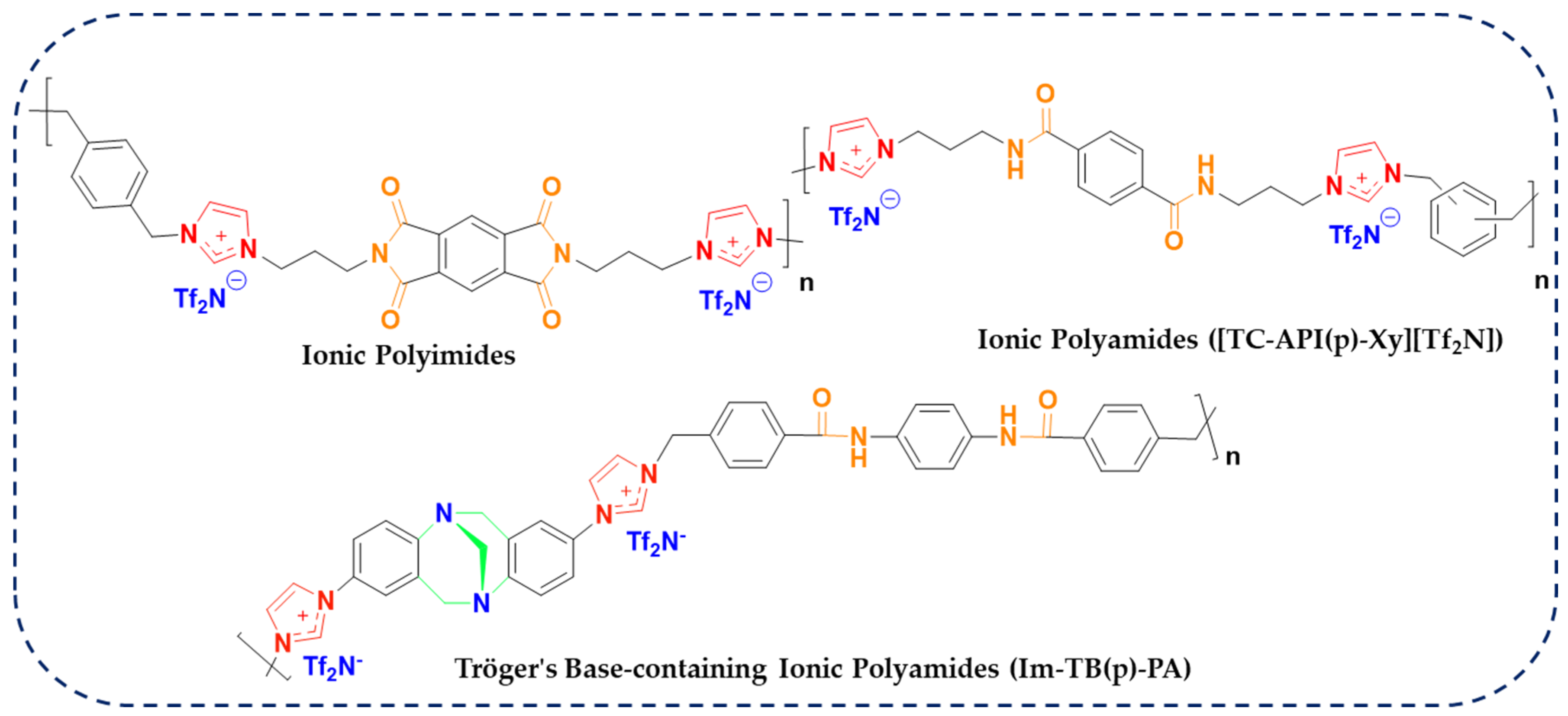

2. Materials and Methods

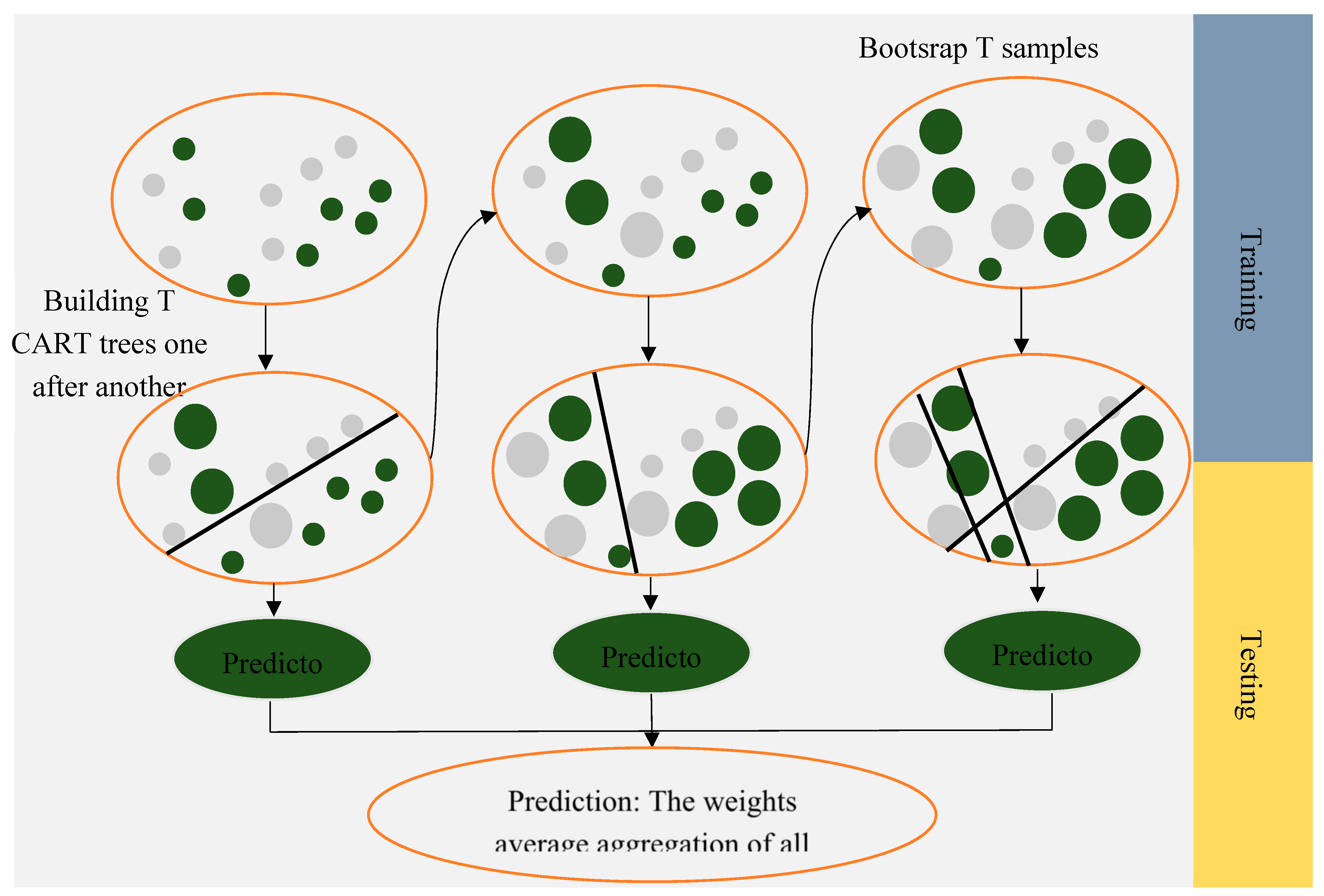

2.1. Model Developments

2.1.1. CatBoost

2.1.2. Random Forest

2.1.3. XGBoost

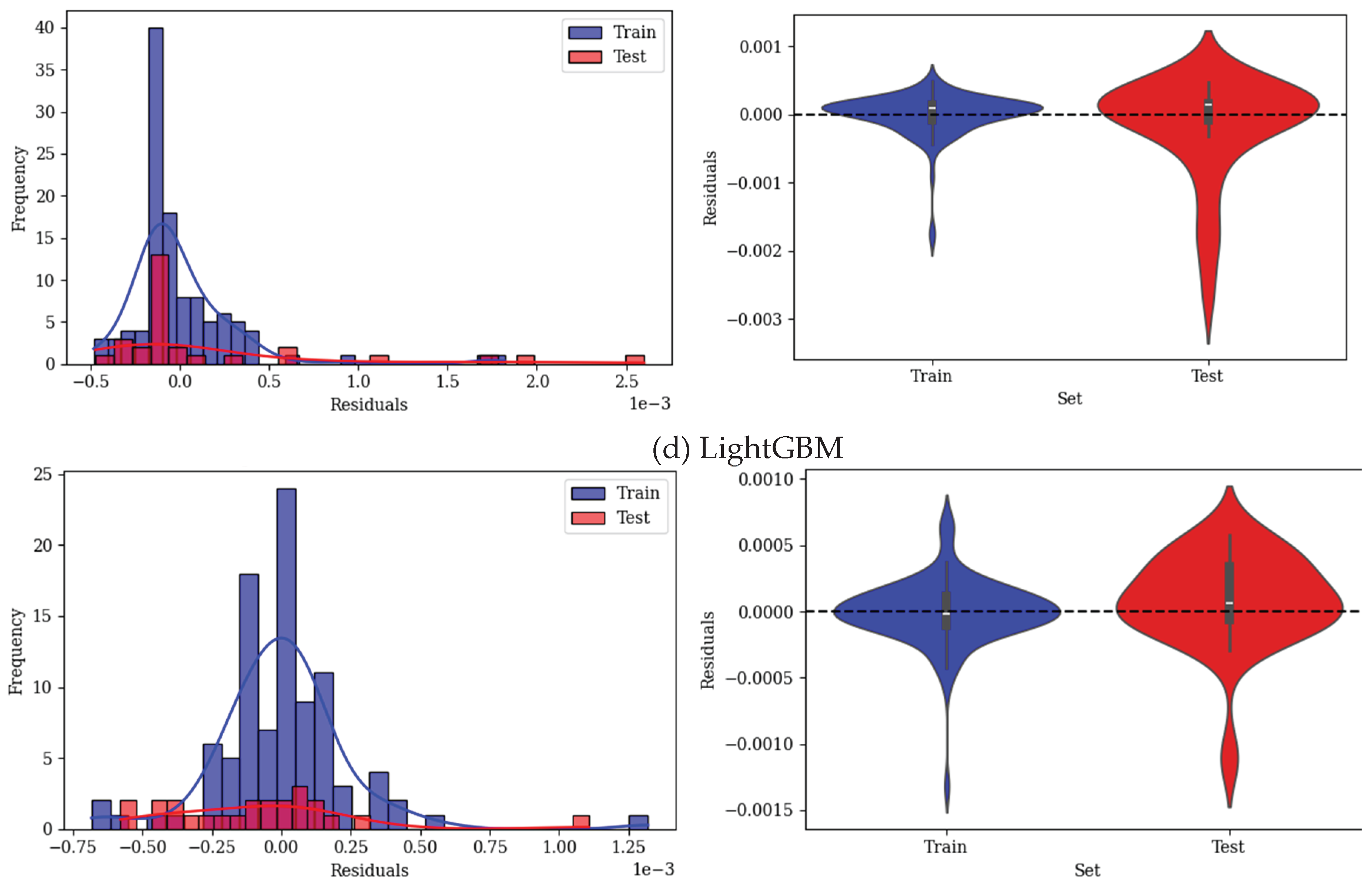

2.1.4. LightGBM

2.1.5. Evaluation Metrics

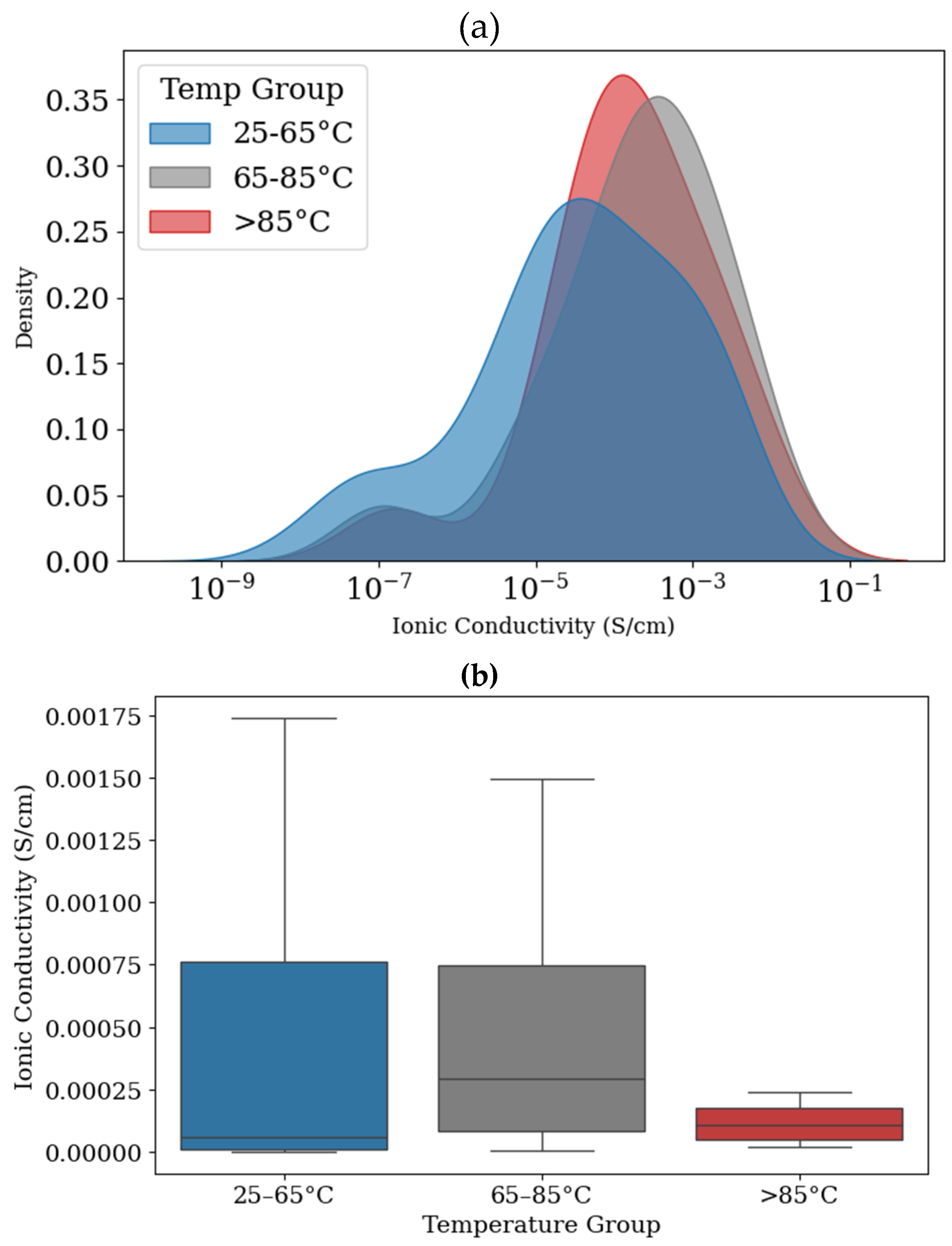

2.2. Data Gathering and Model Developments

3. Results

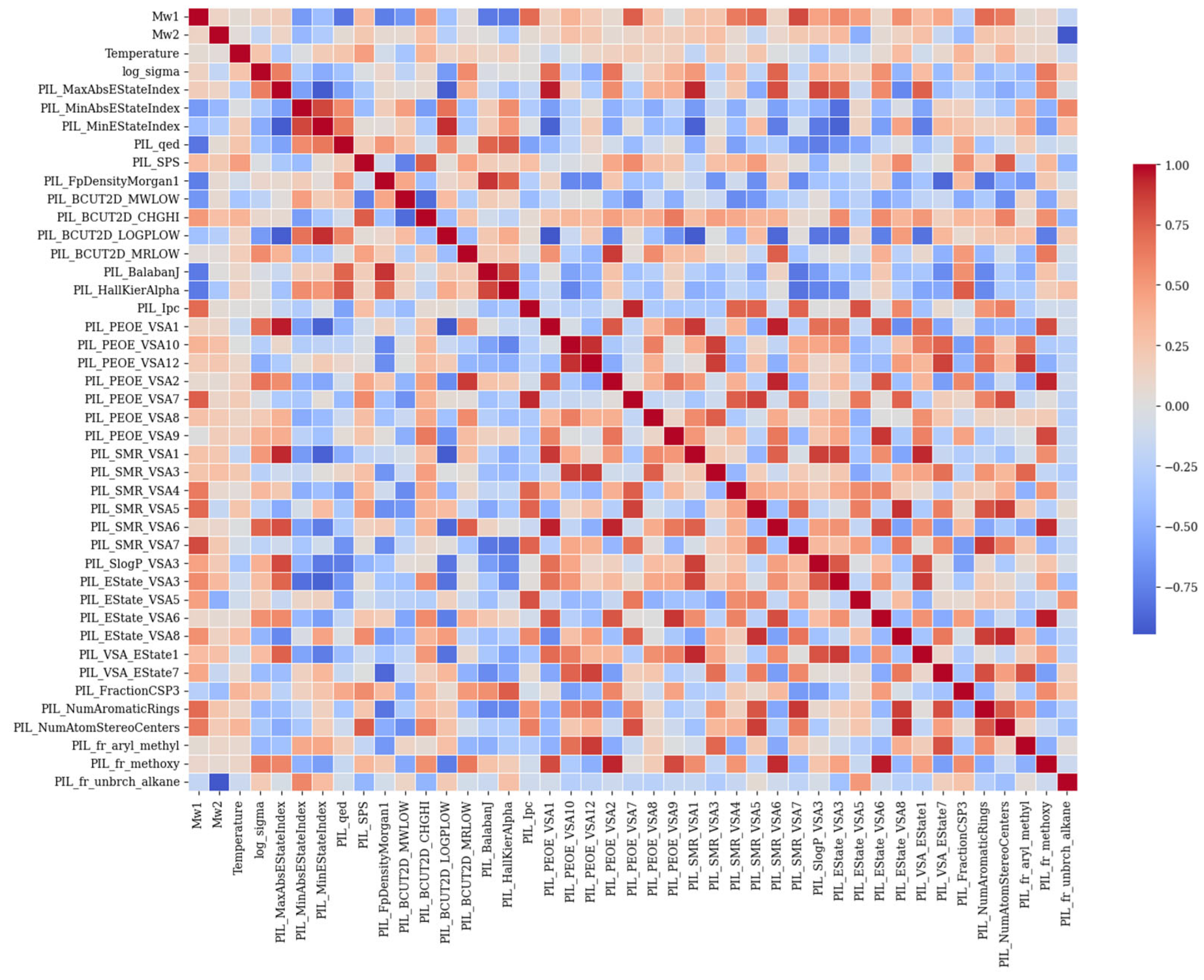

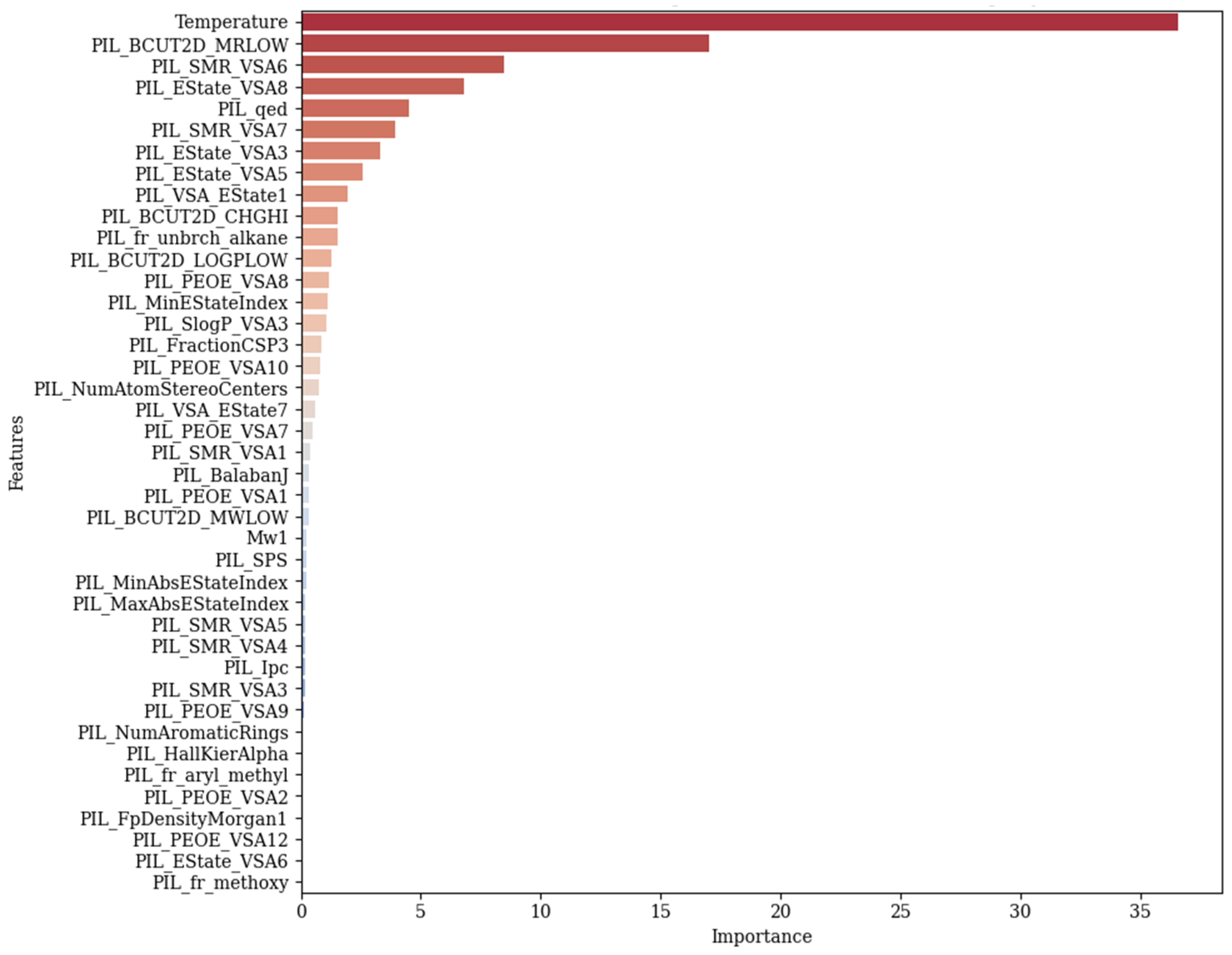

3.1. Feature Importance

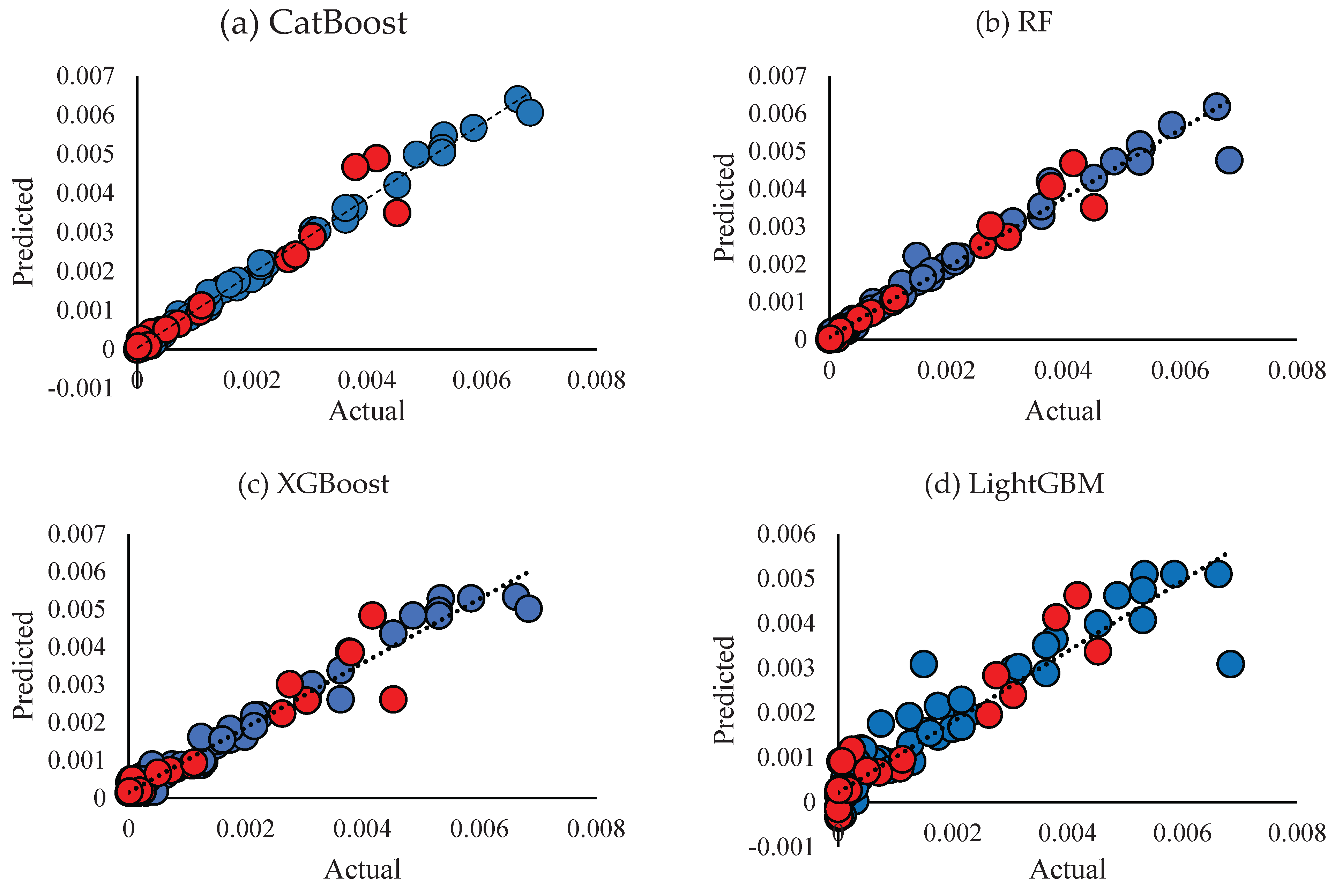

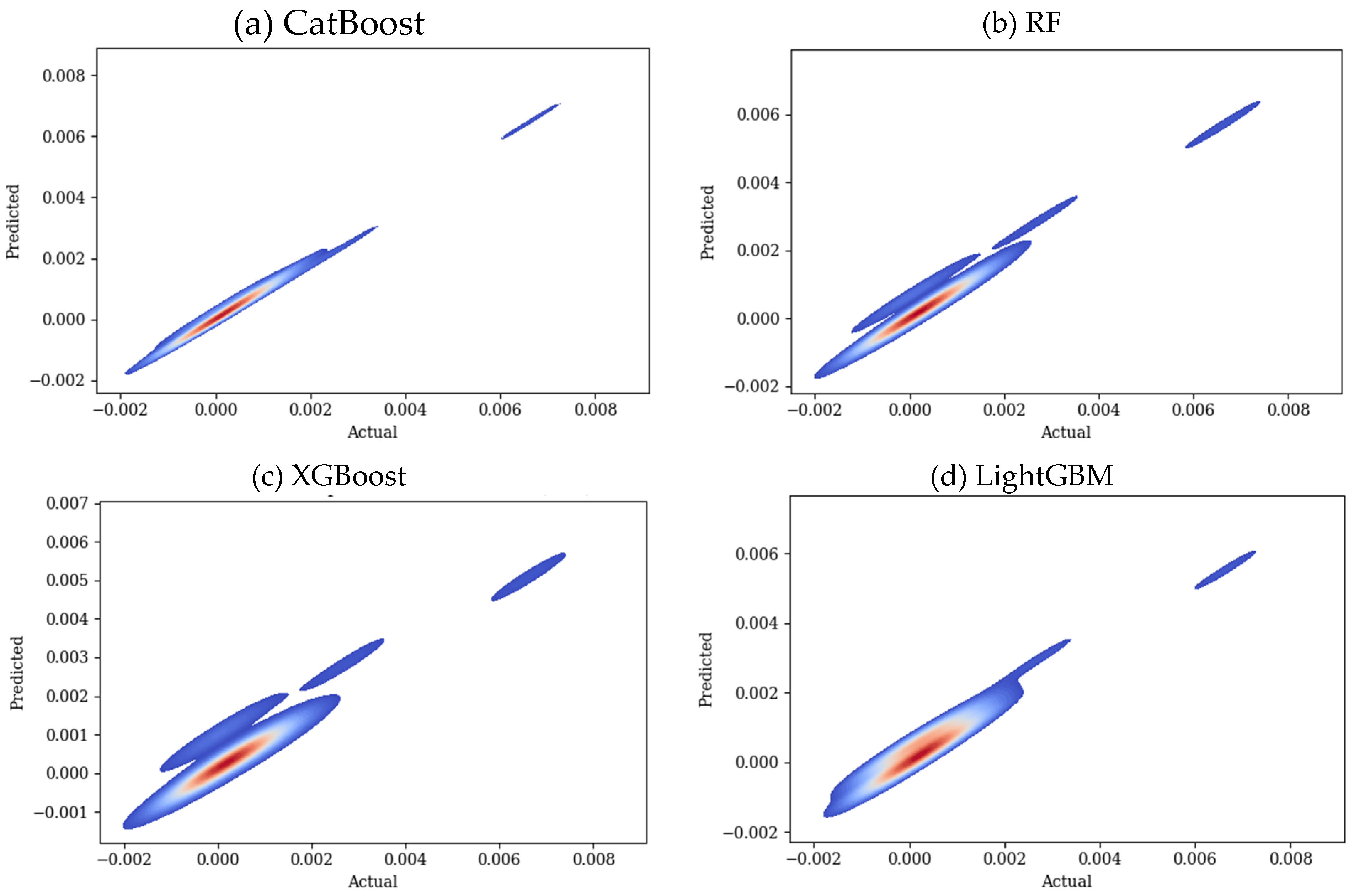

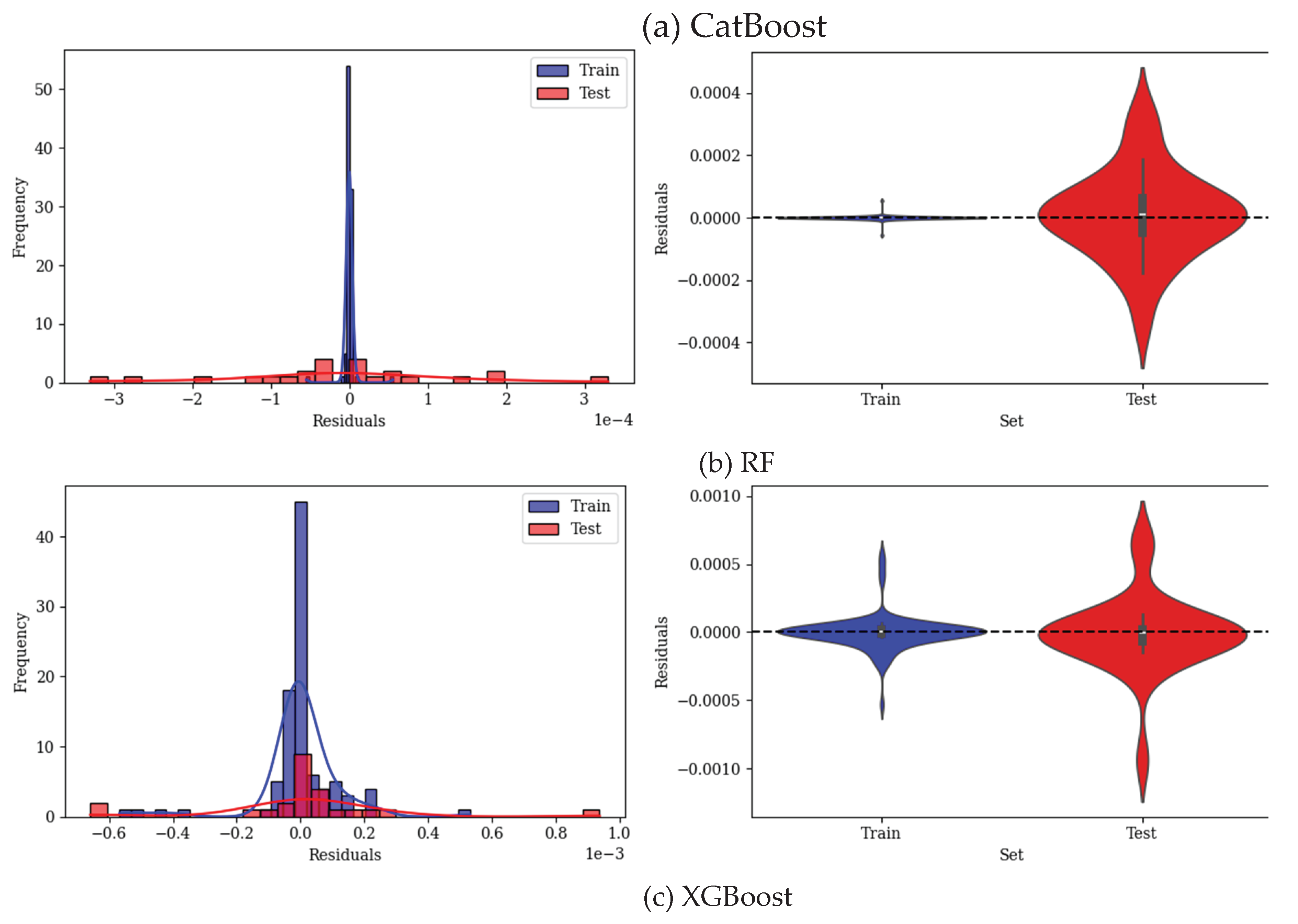

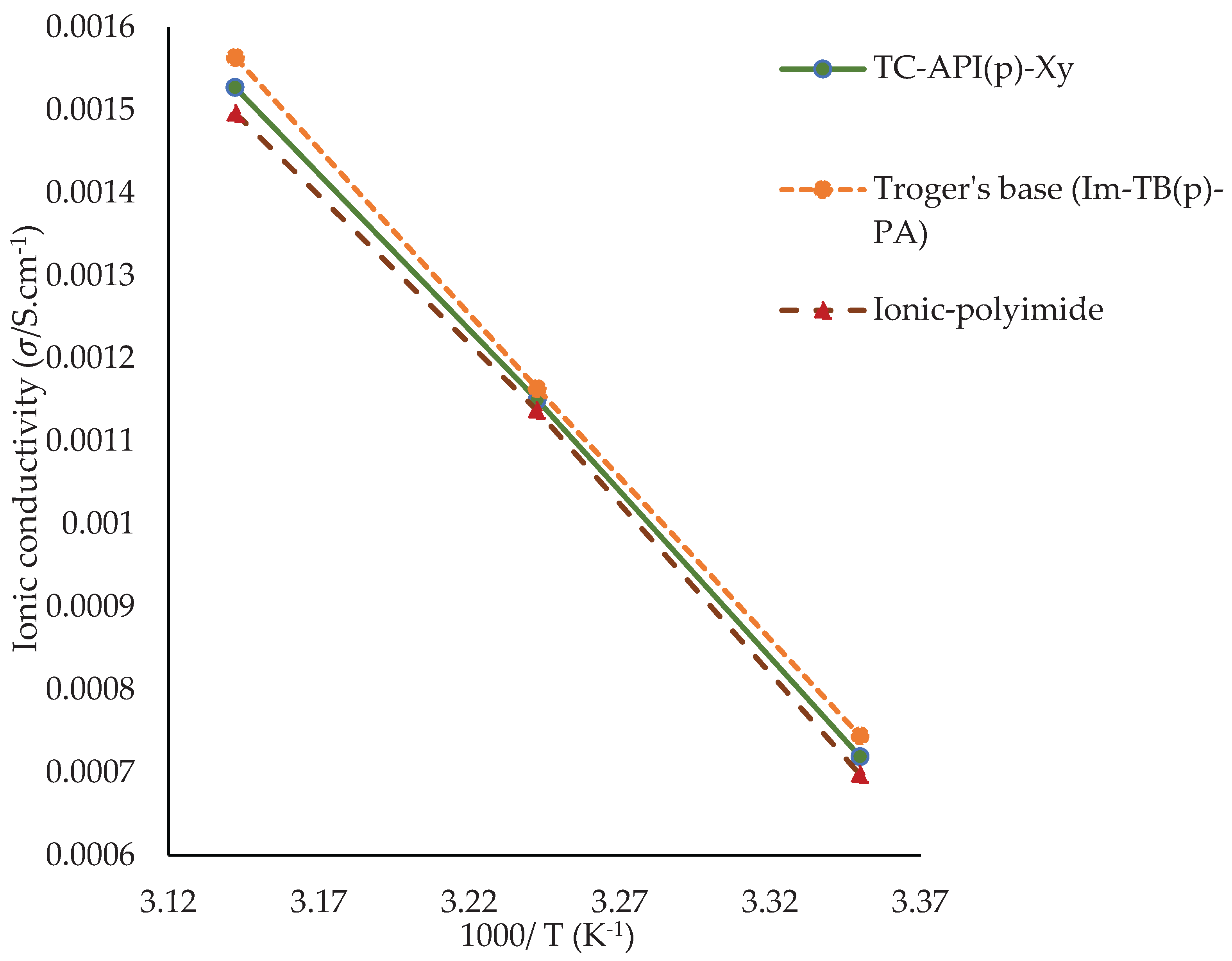

3.2. Predicting Conductivity for Ionenes

4. Conclusions

Author Contributions

Acknowledgment

References

- K. Kohzadvand, M. M. Kouhi, A. Barati, S. Omrani, and M. Ghasemi, “Prediction of interfacial wetting behavior of H2/mineral/brine; implications for H2 geo-storage,” J Energy Storage, vol. 72, Nov. 2023. [CrossRef]

- G. Piroozi, M. M. Kouhi, and A. Shafiei, “Novel intelligent models for prediction of hydrogen diffusion coefficient in brine using experimental and molecular dynamics simulation data: Implications for,” Journal of Energy Storage, 2025. Accessed: May 13, 2025. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2352152X25010102.

- M. R. N. Vilanova, A. T. Flores, and J. A. P. Balestieri, “Pumped hydro storage plants: a review,” Journal of the Brazilian Society of Mechanical Sciences and Engineering, vol. 42, no. 8, pp. 1–14, Aug. 2020. [CrossRef]

- G. L. Soloveichik, “Flow Batteries: Current Status and Trends,” Chem Rev, vol. 115, no. 20, pp. 11533–11558, Oct. 2015. [CrossRef]

- M. Li, J. Lu, Z. Chen, and K. Amine, “30 Years of Lithium-Ion Batteries,” Art. no. 1800561, 2018. [CrossRef]

- T. Kim, W. Song, D. Y. Son, L. K. Ono, and Y. Qi, “Lithium-ion batteries: outlook on present, future, and hybridized technologies,” J Mater Chem A Mater, vol. 7, no. 7, pp. 2942–2964, Feb. 2019. [CrossRef]

- A. Manthiram, “An Outlook on Lithium-Ion Battery Technology,” ACS Cent Sci, vol. 3, no. 10, pp. 1063–1069, Oct. 2017. [CrossRef]

- J. Xia, R. Petibon, D. Xiong, L. Ma, and J. R. Dahn, “Enabling linear alkyl carbonate electrolytes for high voltage Li-ion cells,” J Power Sources, vol. 328, pp. 124–135, Oct. 2016. [CrossRef]

- L. Long, S. Wang, M. Xiao, and Y. Meng, “Polymer electrolytes for lithium polymer batteries,” J Mater Chem A Mater, vol. 4, no. 26, pp. 10038–10069, Jun. 2016. [CrossRef]

- M. Zhu et al., “Recent advances in gel polymer electrolyte for high-performance lithium batteries,” Journal of Energy Chemistry, vol. 37, pp. 126–142, Oct. 2019. [CrossRef]

- T. P. Barrera et al., “Next-Generation Aviation Li-Ion Battery Technologies-Enabling Electrified Aircraft,” Electrochemical Society Interface, vol. 31, no. 3, pp. 69–74, Sep. 2022. [CrossRef]

- K. Vishweswariah, A. K. Madikere Raghunatha Reddy, and K. Zaghib, “Beyond Organic Electrolytes: An Analysis of Ionic Liquids for Advanced Lithium Rechargeable Batteries,” Batteries, vol. 10, no. 12, p. 436, Dec. 2024. [CrossRef]

- N. Chawla, N. Bharti, and S. Singh, “Recent Advances in Non-Flammable Electrolytes for Safer Lithium-Ion Batteries,” Batteries, vol. 5, no. 1, p. 19, Feb. 2019. [CrossRef]

- J. W. Fergus, “Ceramic and polymeric solid electrolytes for lithium-ion batteries,” J Power Sources, vol. 195, no. 15, pp. 4554–4569, Aug. 2010. [CrossRef]

- E. Peled, D. Golodnitsky, G. Ardel, C. Menachem, D. Bar Tow, and V. Eshkenazy, “The Role of SEI in Lithium and Lithium-Ion Batteries,” MRS Online Proceedings Library (OPL), vol. 393, p. 209, 1995. [CrossRef]

- K. Vishweswariah, A. K. Madikere Raghunatha Reddy, and K. Zaghib, “Beyond Organic Electrolytes: An Analysis of Ionic Liquids for Advanced Lithium Rechargeable Batteries,” Batteries, 2024. Accessed: Feb. 26, 2025. [Online]. Available: https://www.mdpi.com/2313-0105/10/12/436.

- F. Gebert, M. Longhini, F. Conti, and A. J. Naylor, “An electrochemical evaluation of state-of-the-art non-flammable liquid electrolytes for high-voltage lithium-ion batteries,” J Power Sources, vol. 556, p. 232412, Feb. 2023.

- T. Stettner, F. C. Walter, and A. Balducci, “Imidazolium-Based Protic Ionic Liquids as Electrolytes for Lithium-Ion Batteries,” Batter Supercaps, vol. 2, no. 1, pp. 55–59, Jan. 2019. [CrossRef]

- J. Lu, F. Yan, and J. Texter, “Advanced applications of ionic liquids in polymer science,” Prog Polym Sci, vol. 34, no. 5, pp. 431–448, May 2009. [CrossRef]

- Y. Liu, T. Zhao, W. Ju, and S. Shi, “Materials discovery and design using machine learning,” Journal of Materiomics, vol. 3, no. 3, pp. 159–177, Sep. 2017. [CrossRef]

- D. Yong and J. U. Kim, “Accelerating Langevin Field-Theoretic Simulation of Polymers with Deep Learning,” Macromolecules, vol. 55, no. 15, pp. 6505–6515, Aug. 2022. [CrossRef]

- H. Doan Tran et al., “Machine-learning predictions of polymer properties with Polymer Genome,” J Appl Phys, vol. 128, no. 17, p. 171104, Nov. 2020.

- N. Andraju, G. W. Curtzwiler, Y. Ji, E. Kozliak, and P. Ranganathan, “Machine-Learning-Based Predictions of Polymer and Postconsumer Recycled Polymer Properties: A Comprehensive Review,” ACS Appl Mater Interfaces, vol. 14, no. 38, pp. 42771–42790, Sep. 2022. [CrossRef]

- J. Shi, F. Albreiki, Y. J. Colón, S. Srivastava, and J. K. Whitmer, “Transfer Learning Facilitates the Prediction of Polymer-Surface Adhesion Strength,” J Chem Theory Comput, vol. 19, no. 14, pp. 4631–4640, Jul. 2023. [CrossRef]

- B. K. Wheatle, E. F. Fuentes, N. A. Lynd, and V. Ganesan, “Design of Polymer Blend Electrolytes through a Machine Learning Approach,” Macromolecules, vol. 53, no. 21, pp. 9449–9459, Nov. 2020. [CrossRef]

- G. Bradford et al., “Chemistry-Informed Machine Learning for Polymer Electrolyte Discovery,” ACS Cent Sci, vol. 9, no. 2, pp. 206–216, Feb. 2023. [CrossRef]

- Q. Gao, T. Dukker, A. M. Schweidtmann, and J. M. Weber, “Self-supervised graph neural networks for polymer property prediction,” Mol Syst Des Eng, vol. 9, p. 1130, 2024. [CrossRef]

- E. Kazemi-Khasragh, C. González, and M. Haranczyk, “Toward diverse polymer property prediction using transfer learning,” Comput Mater Sci, vol. 244, p. 113206, Sep. 2024. [CrossRef]

- A. Babbar, S. Ragunathan, D. Mitra, A. Dutta, and T. K. Patra, “Explainability and extrapolation of machine learning models for predicting the glass transition temperature of polymers,” Journal of Polymer Science, vol. 62, no. 6, pp. 1175–1186, Mar. 2024. [CrossRef]

- E. Ascencio-Medina et al., “Prediction of Dielectric Constant in Series of Polymers by Quantitative Structure-Property Relationship (QSPR),” Polymers (Basel), vol. 16, no. 19, p. 2731, Oct. 2024. [CrossRef]

- K. Hatakeyama-Sato, T. Tezuka, M. Umeki, and K. Oyaizu, “AI-Assisted Exploration of Superionic Glass-Type Li+ Conductors with Aromatic Structures,” J Am Chem Soc, vol. 142, no. 7, pp. 3301–3305, Feb. 2020. [CrossRef]

- K. Li, J. Wang, Y. Song, and Y. Wang, “Machine learning-guided discovery of ionic polymer electrolytes for lithium metal batteries,” Nat Commun, vol. 14, no. 1, Dec. 2023. [CrossRef]

- S. Ibrahim and M. R. Johan, “Conductivity, Thermal and Neural Network Model Nanocomposite Solid Polymer Electrolyte S LiPF6),” Int J Electrochem Sci, vol. 6, no. 11, pp. 5565–5587, Nov. 2011. [CrossRef]

- F. H. Zhai et al., “A deep learning protocol for analyzing and predicting ionic conductivity of anion exchange membranes,” J Memb Sci, vol. 642, p. 119983, Feb. 2022. [CrossRef]

- Y. K. Phua, T. Fujigaya, and K. Kato, “Predicting the anion conductivities and alkaline stabilities of anion conducting membrane polymeric materials: development of explainable machine learning models,” Sci Technol Adv Mater, vol. 24, no. 1, p. 2261833, 2023. [CrossRef]

- J. Wang and P. Rajendran, “Conductivity Prediction Method of Solid Electrolyte Materials Based on Pearson Coefficient Method and Ensemble Learning,” Journal of Artificial Intelligence and Technology, Nov. 2024. [CrossRef]

- U. Arora, M. Singh, S. Dabade, and A. Karim, “Analyzing the efficacy of different machine learning models for property prediction of solid polymer electrolytes,” Jul. 2024. [CrossRef]

- L. Prokhorenkova, G. Gusev, A. Vorobev, A. V. Dorogush, and A. Gulin, “CatBoost: unbiased boosting with categorical features,” Advances in Neural Information Processing Systems, 2018. Accessed: Mar. 11, 2025. [Online]. Available: https://proceedings.neurips.cc/paper/2018/hash/14491b756b3a51daac41c24863285549-Abstract.html.

- J. T. Hancock and T. M. Khoshgoftaar, “CatBoost for big data: an interdisciplinary review,” J Big Data, vol. 7, no. 1, pp. 1–45, Dec. 2020. [CrossRef]

- L. Breiman, “Random forests,” Mach Learn, vol. 45, no. 1, pp. 5–32, Oct. 2001. [CrossRef]

- A. Parmar, R. Katariya, and V. Patel, “A Review on Random Forest: An Ensemble Classifier,” Lecture Notes on Data Engineering and Communications Technologies, vol. 26, pp. 758–763, 2019. [CrossRef]

- M. Belgiu and L. Drăguţ, “Random forest in remote sensing: A review of applications and future directions,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 114, pp. 24–31, Apr. 2016. [CrossRef]

- T. Chen, T. He, M. Benesty, V. Khotilovich, Y. Tang, H. Cho, K. Chen, R. Mitchell, I. Cano, and T. Zhou, “Xgboost: extreme gradient boosting,” R package version 0.4-2, 2015. Accessed: Mar. 11, 2025. [Online]. Available: https://cran.ms.unimelb.edu.au/web/packages/xgboost/vignettes/xgboost.pdf.

- L. Mackey, J. Bryan, and M. Y. Mo, “Weighted classification cascades for optimizing discovery significance in the higgsml challenge,” NIPS 2014 Workshop on High-energy Physics and Machine Learning, vol. 42, pp. 129–134, 2015. Accessed: Mar. 11, 2025. [Online]. Available: http://proceedings.mlr.press/v42/mack14.html.

- T. Chen and C. Guestrin, “XGBoost: A scalable tree boosting system,” Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, vol. 13-17-August-2016, pp. 785–794, Aug. 2016. [CrossRef]

- G. Ke, Q. Meng, T. Finley, T. Wang, W. Chen, W. Ma, Q. Ye, and T. Y. Liu, “Lightgbm: A highly efficient gradient boosting decision tree,” Advances in Neural Information Processing Systems, 2017. Accessed: Mar. 11, 2025. [Online]. Available: https://proceedings.neurips.cc/paper/2017/hash/6449f44a102fde848669bdd9eb6b76fa-Abstract.html.

- K. Yin, Z. Zhang, L. Yang, and S. I. Hirano, “An imidazolium-based polymerized ionic liquid via novel synthetic strategy as polymer electrolytes for lithium-ion batteries,” J Power Sources, vol. 258, pp. 150–154, Jul. 2014. [CrossRef]

- F. Ma et al., “Solid Polymer Electrolyte Based on Polymerized Ionic Liquid for High Performance All-Solid-State Lithium-Ion Batteries,” ACS Sustain Chem Eng, vol. 7, no. 5, pp. 4675–4683, Mar. 2019. [CrossRef]

- Y. C. Tseng, S. H. Hsiang, C. H. Tsao, H. Teng, S. S. Hou, and J. S. Jan, “In situ formation of polymer electrolytes using a dicationic imidazolium cross-linker for high-performance lithium-ion batteries,” J Mater Chem A Mater, vol. 9, no. 9, pp. 5796–5806, Mar. 2021. [CrossRef]

- T. Huang, M. C. Long, X. L. Wang, G. Wu, and Y. Z. Wang, “One-step preparation of poly (ionic liquid)-based flexible electrolytes by in-situ polymerization for dendrite-free lithium-ion batteries,” Chemical Engineering Journal, vol. 375, p. 122062, Nov. 2019. [CrossRef]

- T. L. Chen, R. Sun, C. Willis, B. F. Morgan, F. L. Beyer, and Y. A. Elabd, “Lithium ion conducting polymerized ionic liquid pentablock terpolymers as solid-state electrolytes,” Polymer (Guildf), vol. 161, pp. 128–138, Jan. 2019. [CrossRef]

- D. Zhou et al., “In situ synthesis of hierarchical poly (ionic liquid)-based solid electrolytes for high-safety lithium-ion and sodium-ion batteries,” Nano Energy, vol. 33, pp. 45–54, Mar. 2017. [CrossRef]

- H. Niu et al., “Preparation of imidazolium based polymerized ionic liquids gel polymer electrolytes for high-performance lithium batteries,” Mater Chem Phys, vol. 293, p. 126971, Jan. 2023. [CrossRef]

- S. S. More et al., “Imidazolium Functionalized Copolymer Supported Solvate Ionic Liquid Based Gel Polymer Electrolyte for Lithium Ion Batteries,” ACS Appl Polym Mater, vol. 27, p. 45, Oct. 2024. [CrossRef]

- A. B. Georgescu et al., “Database, Features, and Machine Learning Model to Identify Thermally Driven Metal-Insulator Transition Compounds,” Chemistry of Materials, vol. 33, no. 14, pp. 5591–5605, Jul. 2021. [CrossRef]

| Ref | ML models | Inputs | Evaluation metrics |
|---|---|---|---|
| [28] | NN | Chemical composition, temperature | NA |
| [23] | GNN | Chemical structures, composition ratio, temperatures | R² = 0.16 |
| [24] | (1) Unsupervised learning; (2) ensemble of SVM, RF, XGB, and GCNN | Molecular structure descriptors, electronic structural variables, 3D molecular structure fingerprints, electrochemical window | R² = 0.82, MAE = 1.8 |
| [29] | DNN | Chemical structure, temperature, ion exchange capacity | Rp = 0.951, RMSE = 0.014 |
| [30] | CatBoost, XGBoost, RF* | Chemical structure, temperature, ion forms, polymer main-chain types, anion-conducting moieties | RMSE* = 0.014, MAE* = 0.01 |
| [19] | ChemArr*, Chemprop, XGBoost | Chemical structure, temperature, Mw, salt concentration | Spearman R* = 0.59, MAE* = 1 |
| [31] | RF*, KNN, SVM, Adaboost, GBM | Standard deviation of Li-X ionic bond, standard deviation of the mean adjacency number of Li atom, average straight-line path electronegativity, average straight-line path width, packing fraction of sublattice, average atomic volume, average value of Li-Li bond | MAE* = 0.237, MSE* = 0.134 |
| [32] | RF, XGBoost*, LR, KNN, Chemprop | Chemical structure of polymer, salt chemical structure, Mw, molality, temperature | R²* = 0.93, MAE* = 0.21, RMSE* = 0.31, MSE* = 0.09 |

| Author | PIL-name | Number of data | Temperature (K) | Conductivity |
|---|---|---|---|---|
| [42] | P(EtVIm-TFSI) (NR) | 21 | 298.2 to 353.3 | 6.4E-6 to 4.4E-4 |
| [43] | VEIm-TFSI | 40 | 303.1 to 373.2 | 9.83E-9 to 2.41E-4 |
| [44] | P-20 | 7 | 297.8 to 352.7 | 2.9E-4 to 1.2E-3 |
| [45] | PIL-QSE | 7 | 285.1 to 358.2 | 7.1E-4 to 3.7E-3 |
| [46] | Mim-TFSI + Li-TFSI/EMIm-TFSI | 9 | 301.5 to 363.2 | 1.4E-5 to 6.8E-3 |
| [49] | PVIMTFSI-co-PPEGMA/LiTFSI | 6 | 333 to 357.8 | 3.8E-3 to 6.6E-3 |
| [47] | HPILSE | 23 | 252.9 to 353.2 | 4E-5 to 5.3E-3 |
| [48] | PIL-GPE | 7 | 298.1 to 353.2 | 1.2E-3 to 5.3E-3 |

| Model | Set | R² | RMSE | MAE |
|---|---|---|---|---|
| CatBoost | Train | 0.994 | 1.2E-4 | 7.33E-5 |
| CatBoost | Test | 0.949 | 3.35E-4 | 1.83E-4 |
| CatBoost | All | 0.986 | 1.87E-4 | 9.52E-5 |
| RF | Train | 0.976 | 2.55E-4 | 9.5E-5 |
| RF | Test | 0.97 | 2.57E-4 | 1.26E-4 |
| RF | All | 0.975 | 2.56E-4 | 1E-4 |
| XGBoost | Train | 0.962 | 3.2E-4 | 2E-4 |
| XGBoost | Test | 0.905 | 4.5E-4 | 2.54E-4 |
| XGBoost | All | 0.952 | 3.55E-4 | 2.14E-4 |
| LightGBM | Train | 0.878 | 5.81E-4 | 3.54E-4 |
| LightGBM | Test | 0.911 | 4.41E-4 | 3.28E-4 |
| LightGBM | All | 0.884 | 5.56E-4 | 3.4E-4 |
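The R2, RMSE, and MAE values reported per model and per data split can be read against the standard regression definitions. The following is a minimal sketch of those definitions in plain Python; it is not the authors' implementation, the function name `regression_metrics` is hypothetical, and the sample values are invented for illustration and are not data from this study.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return (R2, RMSE, MAE) for paired observed and predicted values."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    mean_true = sum(y_true) / n
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)               # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all observed values are identical")
    r2 = 1.0 - ss_res / ss_tot
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(r) for r in residuals) / n
    return r2, rmse, mae


# Hypothetical conductivity values, for illustration only (not from the paper).
observed = [1.0e-4, 2.0e-4, 3.0e-4, 4.0e-4]
predicted = [1.2e-4, 1.9e-4, 3.2e-4, 3.9e-4]
r2, rmse, mae = regression_metrics(observed, predicted)
print(f"R2={r2:.3f}  RMSE={rmse:.3e}  MAE={mae:.3e}")
```

Applying the same function separately to the training subset, the test subset, and the pooled data would yield the three columns (Train, Test, All) reported for each model. RMSE and MAE carry the units of the target, so they are only comparable across studies that predict conductivity on the same scale.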

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).