Introduction

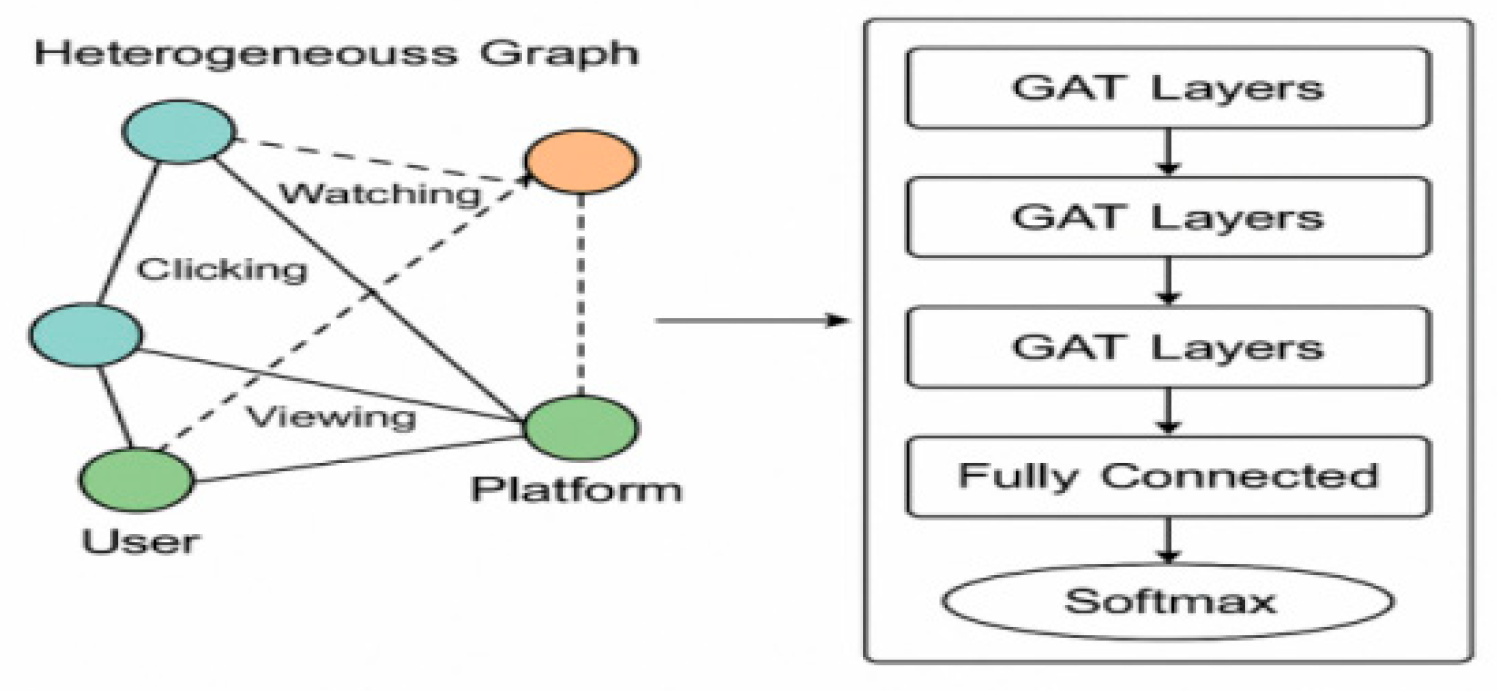

Graph Neural Networks (GNNs), a class of deep learning methods that can effectively process graph-structured data, have been widely used in complex networks, recommender systems, and social media analysis. By modeling the relationships between nodes and edges, GNNs capture deep dependencies in the data, which allows them to extract diverse data features and latent correlations when processing large-scale heterogeneous data. In advertisement recommendation, GNNs offer a significant advantage in improving the effectiveness and accuracy of recommender systems by mining the multidimensional relationships among user behavior, advertisement content, and platform features. Exploring the application of GNNs to cross-platform advertisement recommendation therefore has both theoretical and practical value: theoretically, it deepens the understanding of how user-interest migration can be modeled in heterogeneous graph structures; practically, it improves the precision and adaptability of ad delivery strategies across diverse platforms.
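To make the idea of "modeling relationships between nodes and edges" concrete, the following is a minimal, generic sketch of one round of mean-aggregation message passing over a toy user-ad-platform graph. It is not the authors' architecture, which this section does not specify; the node names, 2-dimensional features, and identity weight matrices are hypothetical and chosen only so the arithmetic is easy to follow.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix (given as a list of rows) by a vector."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def message_passing_layer(
    features: Dict[str, Vector],
    edges: List[Tuple[str, str]],
    w_self: Matrix,
    w_neigh: Matrix,
) -> Dict[str, Vector]:
    """One round of mean-aggregation message passing on an undirected graph.

    h_v' = ReLU(W_self h_v + W_neigh * mean of h_u over the neighbours u of v)
    """
    neighbours: Dict[str, List[str]] = {v: [] for v in features}
    for u, v in edges:  # undirected: each edge passes messages both ways
        neighbours[u].append(v)
        neighbours[v].append(u)

    dim = len(next(iter(features.values())))
    updated: Dict[str, Vector] = {}
    for v, h_v in features.items():
        nbrs = neighbours[v]
        # Mean of neighbour features (zero vector for isolated nodes).
        agg = [sum(features[u][k] for u in nbrs) / len(nbrs) if nbrs else 0.0
               for k in range(dim)]
        combined = [a + b for a, b in zip(matvec(w_self, h_v), matvec(w_neigh, agg))]
        updated[v] = [max(0.0, c) for c in combined]  # ReLU
    return updated


if __name__ == "__main__":
    # Hypothetical toy graph: one user who interacted with two ads served on one platform.
    feats = {
        "user:u1": [1.0, 0.0],
        "ad:a1": [0.0, 1.0],
        "ad:a2": [1.0, 1.0],
        "platform:A": [0.5, 0.5],
    }
    edges = [("user:u1", "ad:a1"), ("user:u1", "ad:a2"),
             ("ad:a1", "platform:A"), ("ad:a2", "platform:A")]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(message_passing_layer(feats, edges, identity, identity))
```

Stacking several such layers lets information travel along multi-hop paths, for example from a user through an ad to the platform that served it; a heterogeneous GNN would additionally learn separate weights per node or edge type.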

Experimental Results and Analysis

Experimental Data Set

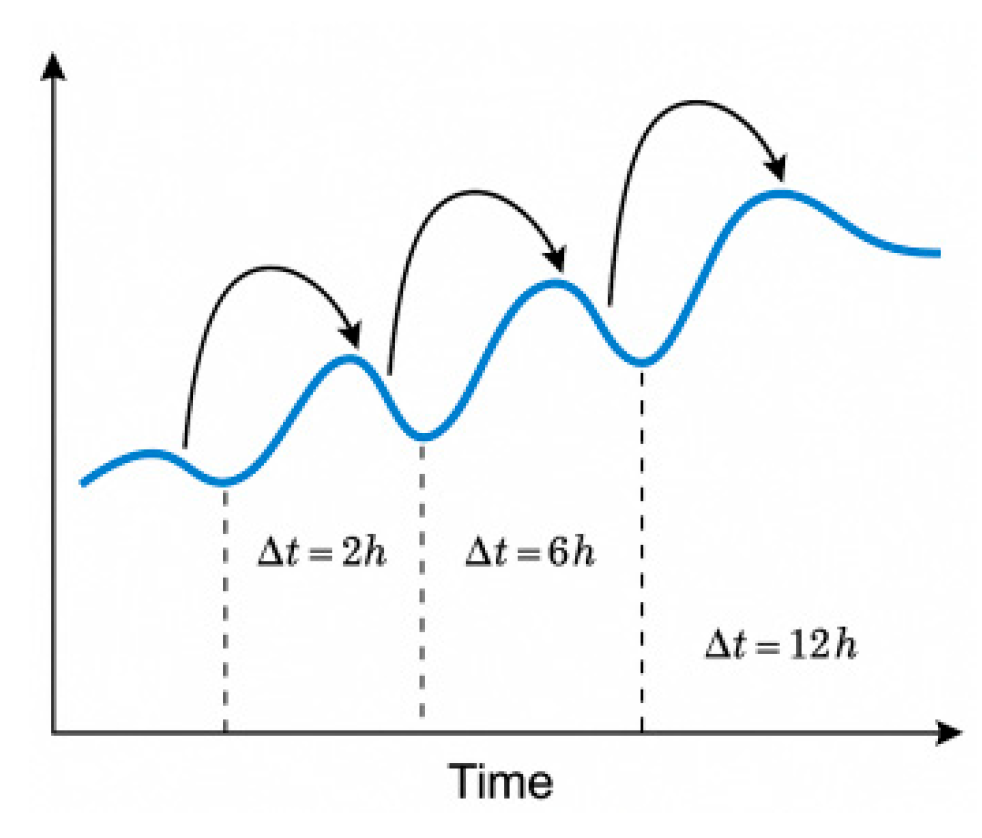

The experimental dataset is derived from ad placement logs collected via API from three mainstream platforms (Platform-A, Platform-B, and Platform-C) and comprises 2,870 records from January to December 2023. Data sources include social platforms, online video platforms, and information apps. The fields are Ad_ID, Platform_ID, User_ID, Behavior Type (click, browse, stay), Timestamp, Device_Type, OS_Version, Content_Tag, and Contextual Characteristics (time period, geography, network environment). User behaviors are grouped into 12 categories and advertisements into 37 tag types, and the data are de-duplicated and normalized to provide a high-quality basis for building the graph neural network and for sequence recommendation [8].
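A minimal sketch of the de-duplication and normalization step follows, assuming each log record is a Python dictionary keyed by the field names listed above (with `Behavior Type` written as `Behavior_Type`). The text does not say which fields are normalized or how, so min-max scaling of a hypothetical numeric field `Stay_Seconds` is used purely as an example, and a record is assumed to be a duplicate when its ad, platform, user, behavior type, and timestamp all match.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]

# Fields that must all match for two log entries to count as duplicates (assumption).
KEY_FIELDS = ("Ad_ID", "Platform_ID", "User_ID", "Behavior_Type", "Timestamp")


def deduplicate(records: List[Record]) -> List[Record]:
    """Keep the first occurrence of each record, keyed on KEY_FIELDS."""
    seen = set()
    unique: List[Record] = []
    for rec in records:
        key = tuple(rec[f] for f in KEY_FIELDS)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def min_max_normalize(records: List[Record], field: str) -> List[Record]:
    """Return copies of the records with `field` rescaled linearly to [0, 1]."""
    if not records:
        return []
    values = [float(rec[field]) for rec in records]
    lo, hi = min(values), max(values)
    span = hi - lo
    # A constant column carries no information; map it to 0.0 instead of dividing by zero.
    return [{**rec, field: (v - lo) / span if span > 0 else 0.0}
            for rec, v in zip(records, values)]
```

Calling `min_max_normalize(deduplicate(logs), "Stay_Seconds")` removes repeated entries before the scaling range is computed, so duplicates cannot skew the minimum and maximum.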

Model Training and Evaluation

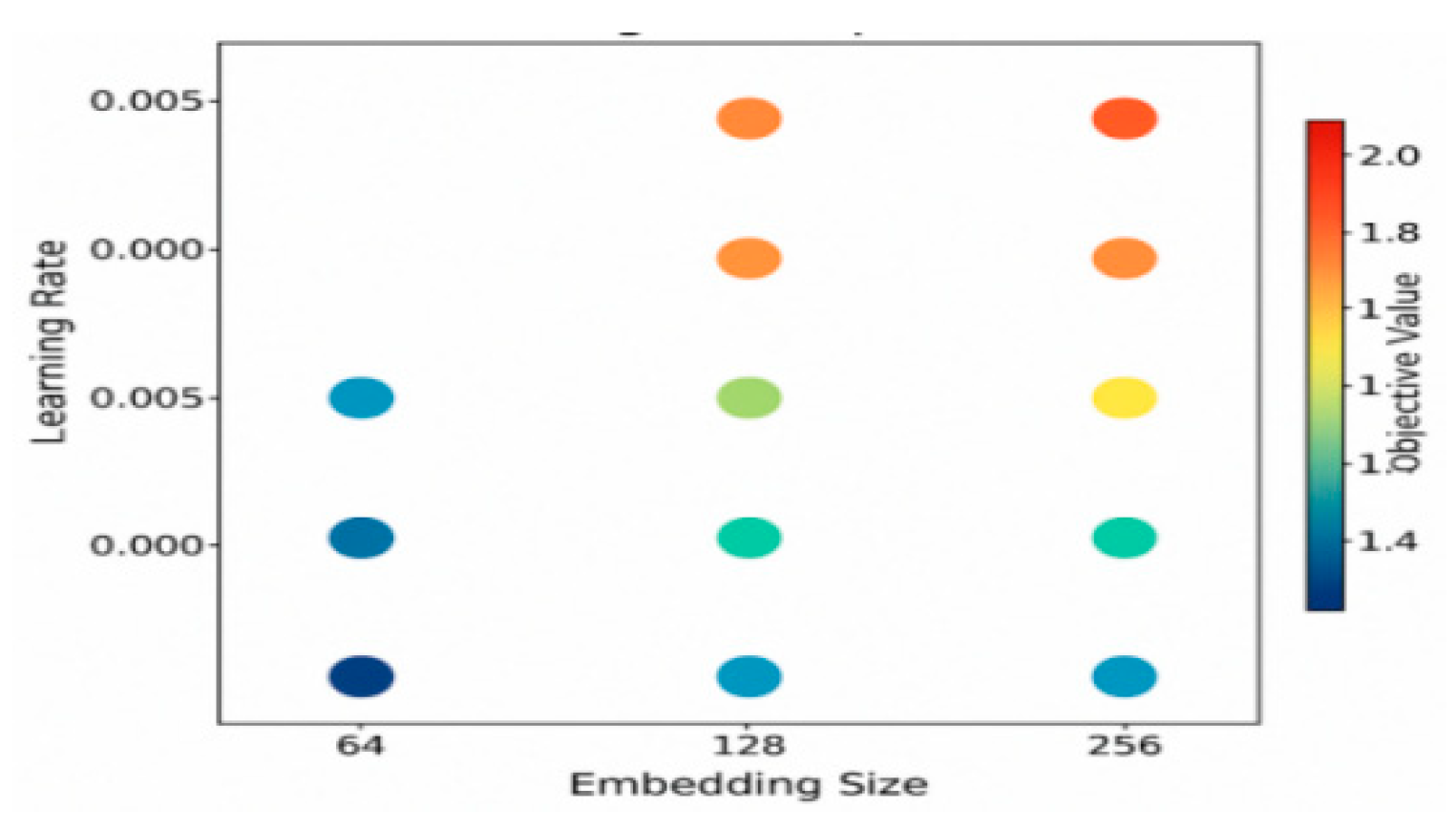

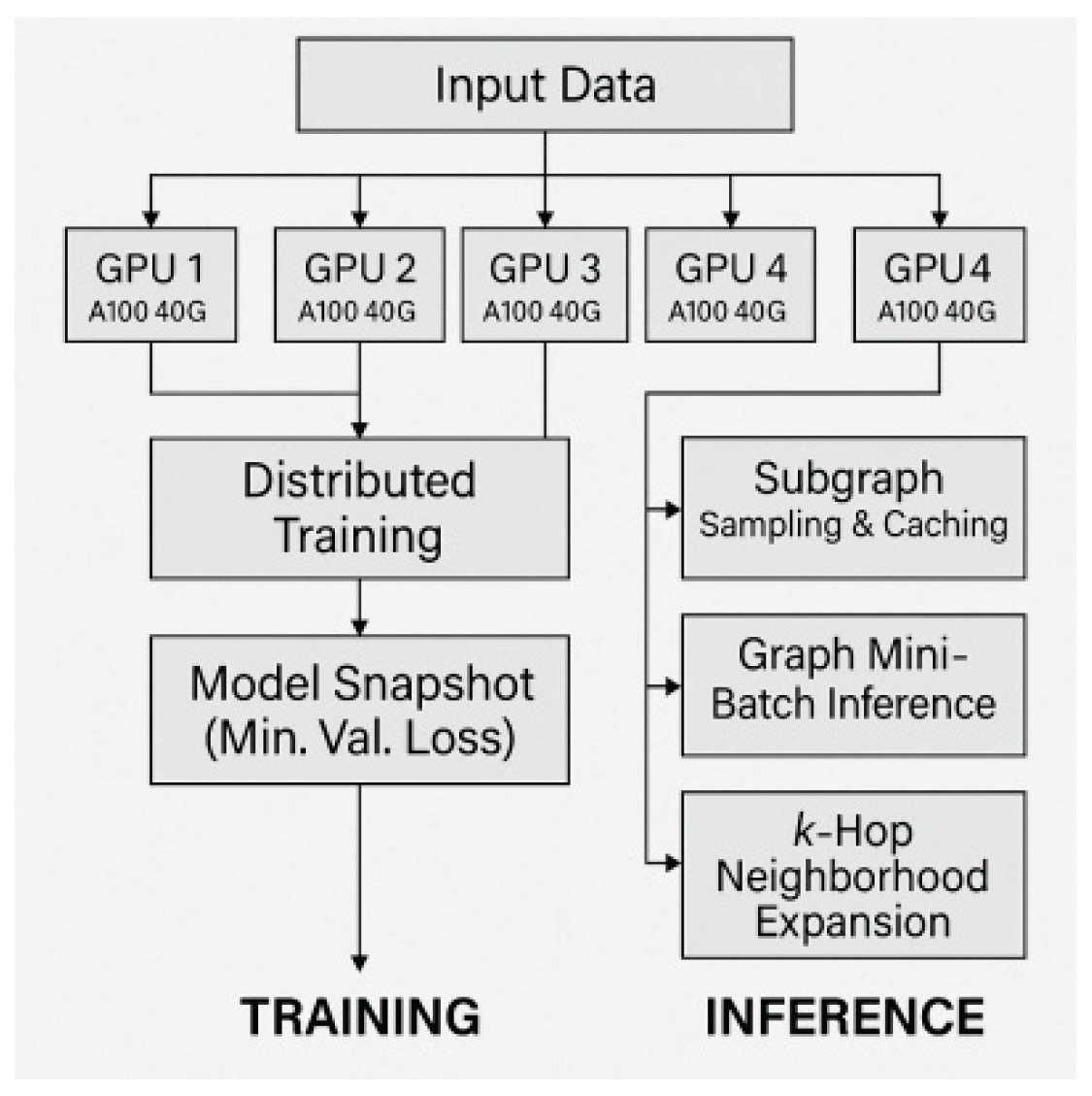

For the constructed cross-platform advertisement recommendation GNN model, the data are split into a training set (70%), a validation set (15%), and a test set (15%) [9]. The model is trained with the Adam optimizer at an initial learning rate of 0.001 for at most 300 epochs, and the cross-entropy loss on the validation set is monitored to trigger early stopping. Five core metrics are used to measure the model's discriminative ability and generalization performance: AUC, Accuracy, F1 score, Precision, and Recall. Together they give a balanced view of performance across scenarios, which matters particularly in a multi-platform context. AUC evaluates how well the model ranks ads independently of any decision threshold, which is crucial when platforms may have different label distributions. Accuracy measures overall correctness, while Precision and Recall capture the trade-off between false positives and false negatives, which is especially important in advertising, where reaching the right audience is critical. The F1 score, the harmonic mean of Precision and Recall, summarizes both in a single number and is useful when false positives and false negatives must both be kept low. The evaluation is carried out both on each platform independently and on the combined data from all platforms to verify the model's adaptability to heterogeneous data, as shown in Table 2.
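The evaluation protocol above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the split is assumed to be a random shuffle (the text does not say whether it is random, stratified, or chronological), the task is assumed to be binary (for example, click versus no click) with a 0.5 decision threshold, and the early-stopping `patience` is a hypothetical parameter because the text does not report one. All function names are illustrative.

```python
import random
from typing import Dict, List, Sequence, Tuple


def split_70_15_15(items: Sequence, seed: int = 0) -> Tuple[list, list, list]:
    """Shuffle `items` and split them 70% / 15% / 15% into train / validation / test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    # Integer arithmetic avoids floating-point rounding in expressions like int(0.70 * n).
    n_train = n * 70 // 100
    n_val = n * 15 // 100
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])  # the remainder (about 15%) is the test set


def roc_auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """AUC as the probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            wins += 1.0 if p > q else (0.5 if p == q else 0.0)
    return wins / (len(pos) * len(neg))


def binary_metrics(y_true: Sequence[int], y_score: Sequence[float],
                   threshold: float = 0.5) -> Dict[str, float]:
    """Compute AUC, Accuracy, F1, Precision, and Recall for binary labels."""
    y_pred = [1 if s >= threshold else 0 for s in y_score]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"auc": roc_auc(y_true, y_score), "accuracy": (tp + tn) / len(pairs),
            "f1": f1, "precision": precision, "recall": recall}


def should_stop(val_losses: List[float], patience: int) -> bool:
    """Early stopping: stop once `patience` epochs pass without a new best validation loss."""
    if not val_losses:
        return False
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__)
    return len(val_losses) - 1 - best_epoch >= patience
```

For the 2,870 records described above, `split_70_15_15` yields 2,009 / 430 / 431 samples. In a multi-class setup, Precision, Recall, and F1 would typically be averaged over classes (macro or weighted averaging), and a library routine such as scikit-learn's `roc_auc_score` would replace the quadratic pairwise AUC loop on large test sets.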

According to the results in Table 2, the model delivers stable predictive performance on each platform's test set. Platform-B has the highest AUC (0.937), indicating stronger discriminative power in recognizing advertising behaviors on this platform. Platform-A and Platform-C score slightly lower in Accuracy and F1 score. Our analysis found that the distribution of advertisement label categories on these two platforms is more uneven, which reduces the model's ability to fit minority-class samples.

To address this issue, we implemented and compared two mitigation strategies during training: (1) a weighted cross-entropy loss in which underrepresented ad labels were assigned higher weights (e.g., 1.0 for a frequent label and 1.8 for a rare label); and (2) oversampling of minority classes to at least 1.5× their original count. Comparative experiments on Platform-C showed that the weighted loss improved the F1 score from 83.4% to 84.6%, while oversampling raised Recall from 82.1% to 84.3% at the cost of a slight drop in Precision. These results suggest that label imbalance significantly affects performance and that combining weighting and sampling may yield more robust improvements.

The combined evaluation shows that the model remains highly consistent and robust on the overall cross-platform data, with an F1 score of 85.3%, which suggests that the GNN architecture copes well with platform heterogeneity and advertisement diversity [10].
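The two mitigation strategies can be sketched as follows. The weights 1.0 and 1.8 and the 1.5× oversampling factor come from the text; the label names, the per-sample probability-dictionary input, random duplication as the oversampling method, and the function names are assumptions for illustration.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple


def weighted_cross_entropy(probs: Sequence[Dict[str, float]], labels: Sequence[str],
                           class_weights: Dict[str, float]) -> float:
    """Mean over samples of -w_y * log p(y); labels missing from class_weights get weight 1.0."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += -class_weights.get(y, 1.0) * math.log(p[y])
    return total / len(labels)


def oversample_minorities(samples: Sequence, labels: Sequence[str], minority: Sequence[str],
                          factor: float = 1.5, seed: int = 0) -> Tuple[List, List[str]]:
    """Randomly duplicate samples of each minority class until it has ceil(factor * count) samples."""
    rng = random.Random(seed)
    out_samples, out_labels = list(samples), list(labels)
    for cls in minority:
        idx = [i for i, y in enumerate(labels) if y == cls]
        extra = math.ceil(factor * len(idx)) - len(idx)  # 0 when the class is absent
        for _ in range(extra):
            i = rng.choice(idx)
            out_samples.append(samples[i])
            out_labels.append(labels[i])
    return out_samples, out_labels


if __name__ == "__main__":
    weights = {"frequent": 1.0, "rare": 1.8}
    probs = [{"frequent": 0.8, "rare": 0.2}, {"frequent": 0.4, "rare": 0.6}]
    print(weighted_cross_entropy(probs, ["frequent", "rare"], weights))
    print(oversample_minorities(["a", "b", "c", "d", "e"],
                                ["frequent"] * 3 + ["rare"] * 2, minority=["rare"]))
```

This sketch averages the weighted loss over samples; some libraries, such as PyTorch's `CrossEntropyLoss` with a `weight` argument and `reduction='mean'`, divide by the sum of the per-sample weights instead, which changes the loss scale but not which labels are emphasized.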

Analysis of Experimental Results

The evaluation results show that the model achieves high consistency and discriminative ability in multi-platform advertisement recommendation, although its performance still varies with the diversity of user behaviors and the heterogeneity of platform content. The statistics of the five core metrics on each platform's test set after training (see Table 3) show that Platform-B outperforms Platform-A and Platform-C in AUC and F1 score. This suggests that the model generalizes better on platforms with clearer structural labels and more stable user behavior patterns. As shown in Figure 1, the higher AUC and F1 score on Platform-B indicate that the model predicts user behaviors and recommends ads more accurately there, especially where user actions are well defined. In contrast, performance on Platform-C fluctuates, likely because of its higher label density and more complex ad types, which lowers the consistency of recommendation accuracy. The large fluctuations in Platform-C's Recall and Precision show that the model's recognition stability decreases when it faces complex ad types with fragmented behavioral sequences. In addition, although the combined results are high on all metrics, they may conceal local error imbalances caused by distribution differences between platforms; further improving the model's ability to capture cross-platform feature differences is therefore a direction for future work.

According to the data in Table 3, Platform-B performs best on all metrics, with an Accuracy of 89.1%, an AUC of 0.937, and an F1 score of 87.3%. This is likely due to its longest average behavioral sequence length (15.8), which allows the model to learn users' interest migration paths more fully. Although Platform-C has the most diverse ad types (37 categories) and the highest label density (3.1), it has the lowest F1 score and AUC, suggesting that label redundancy and sample sparsity interfere with the model's discriminative performance. After the data are merged, all metrics remain high, indicating that the model is stable when the multi-platform data are fused.
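The two dataset statistics cited above can be computed per platform as below. The text does not define them, so the definitions here are assumptions: average behavioral sequence length is taken as the mean number of behavior events per user, and label density as the mean number of content tags per ad. Input formats and function names are hypothetical; each function would be applied to one platform's subset of the logs.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def avg_sequence_length(events: Iterable[Tuple[str, int]]) -> float:
    """Mean number of behavior events per user; `events` holds (User_ID, Timestamp) pairs."""
    counts: Dict[str, int] = defaultdict(int)
    for user_id, _timestamp in events:
        counts[user_id] += 1
    return mean(counts.values())


def label_density(ad_tags: Dict[str, List[str]]) -> float:
    """Mean number of Content_Tag values attached to each ad (Ad_ID -> list of tags)."""
    return mean(len(tags) for tags in ad_tags.values())
```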

Conclusion

The proposed graph neural network model for cross-platform ad recommendation effectively improves the accuracy of ad delivery and adapts well to heterogeneous data and diverse ad content. The experimental results show that behavioral differences between platforms and the density of ad labels significantly affect model performance; the model achieves its best Accuracy and AUC on Platform-B. Future work should further optimize multi-platform data fusion by exploring transfer learning to align features across platforms, semi-supervised learning to exploit unlabeled data for better feature extraction, and domain adaptation to bridge the gap between platform-specific behaviors. In addition, the model's ability to recognize complex ad types and fragmented user behaviors could be improved by incorporating multi-modal data, such as text, images, and behavioral sequences, to better capture diverse content features and user interactions. These approaches should contribute to more accurate and efficient cross-platform ad recommendation.

In this article, Xiang Li and Xinyu Wang are the co-first authors.

References

- Heo H, Lee S. Consumer Information-Seeking and Cross-Media Campaigns: An Interactive Marketing Perspective on Multi-Platform Strategies and Attitudes Toward Innovative Products [J]. Journal of Theoretical and Applied Electronic Commerce Research 2025, 20, 68.

- Rizgar F, Zeebaree S R M. The Rise of Influence Marketing in E-Commerce: a Review of Effectiveness and Best Practices [J]. East Journal of Applied Science 2025, 1, 18–34.

- Zhang K, Xing S, Chen Y. Research on Cross-Platform Digital Advertising User Behavior Analysis Framework Based on Federated Learning [J]. Artificial Intelligence and Machine Learning Review 2024, 5, 41–54.

- Akmal T, Bilal M Z, Raza A. DVC Digital Video Commercial of Pakistani Food Advertising Industry: A Qualitative Analysis of Contemporary Digital Trends in Advertising Industry [J]. Contemporary Journal of Social Science Review 2025, 3, 808–822.

- Kasih E W, Benardi B, Ruslaini R. The power of Sequence: A Qualitative Analysis of Consumer Targeting and Spillover Effects in Social Media Advertising [J]. International Journal of Business, Marketing, Economics & Leadership (IJBMEL) 2024, 1, 28–42.

- Hatzithomas L, Theodorakioglou F, Margariti K, et al. Cross-Media Advertising Strategies and Brand Attitude: The Role of Cognitive Load [J]. International Journal of Advertising 2024, 43, 603–636.

- Carah N, Hayden L, Brown M G, et al. Observing "tuned" advertising on digital platforms [J]. Internet Policy Review 2024, 13, 1–26.

- Hassan H E. Social Media Advertising Features that Enhance Consumers' Positive Responses to Ads [J]. Journal of Promotion Management 2024, 30, 874–900.

- Chen X J, Chen Y, Xiao P, et al. Mobile ad fraud: Empirical patterns in publisher and advertising campaign data [J]. International Journal of Research in Marketing 2024, 41, 265–281.

- Kwon H B, Lee J, Brennan I. Complex interplay of R&D, advertising and exports in USA manufacturing firms: differential effects of capabilities [J]. Benchmarking: An International Journal 2025, 32, 459–491.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).