1. Introduction

With the growth of e-commerce platforms, personalized product recommendation systems have become crucial for improving user experience and increasing sales. Traditional recommendation algorithms, such as collaborative filtering and matrix factorization, rely primarily on observed user-item interactions. As a result, they cannot fully capture the complex relationships between users and products. They also struggle with data sparsity, where most user-product interactions are never observed, and with user preferences that change over time.

Recent advances in deep learning have led to models that can better capture these patterns. Techniques such as Factorization Machines (FM), Graph Convolutional Networks (GCN), and attention mechanisms have been introduced to improve model performance by capturing higher-order feature interactions, user-item dependencies, and long-range relationships in user behavior. However, these models still face challenges in handling sparse, high-dimensional data and in tracking changes in user preferences in real time.

In this paper, we present the Enhanced Attention and Interaction Network (EAIN), a deep learning model designed to improve the accuracy and personalization of e-commerce recommendations. EAIN combines higher-order feature interactions with attention mechanisms to improve the representation of user behaviors and product characteristics. It uses a Dynamic Interest Network (DIN) to focus on relevant user behavior, Selective Feature Interaction (MaskBlock) to reduce overfitting, a Squeeze-and-Excitation Network (SE-Net) as channel attention to reweight feature importance, and a Position-Aware Interaction Module (PAIM) to capture the sequential nature of user interactions. By combining these components, EAIN addresses data sparsity and improves on traditional models in both accuracy and personalization.

This work highlights the role of deep feature modeling and attention mechanisms in solving the challenges of personalized recommendation systems. The EAIN model improves prediction accuracy on key metrics and provides a basis for future improvements in e-commerce recommendation systems.

2. Related Work

Personalized recommendation systems have advanced significantly with the introduction of deep learning techniques. Methods such as matrix factorization, attention mechanisms, and feature interaction models have improved recommendation accuracy and scalability.

Yin et al. [1] proposed a multi-head self-attention model to enhance feature interactions but faced limitations with sequential data. Xue et al. [2] introduced Autohash for learning higher-order feature interactions in CTR prediction, though it performs poorly in dynamic environments.

Lu et al. [3] combined LightGBM, DeepFM, and DIN (Dynamic Interest Network) to improve purchase prediction, capturing both linear and non-linear relationships. This hybrid approach works well but requires fine-tuning for large datasets. Lu [4] improved chatbot user satisfaction using decision trees, TF-IDF, and BERTopic; this approach could be adapted to e-commerce recommendation to improve user engagement.

Wang’s work on EAIN has been notably influenced by the ensemble modeling techniques described in [5]. The integration of Random Forest, GBM, and Neural Networks outlined in [5] directly inspired the architecture of EAIN, particularly its approach to combining high-order feature interactions with neural attention mechanisms. The data preprocessing pipeline and adaptive learning strategies presented in [5] also informed the feature engineering and training optimization in EAIN. Together, these ideas contribute to EAIN’s handling of sparse and high-dimensional data and its precision in personalized e-commerce recommendations.

Finally, Li [6] showed how tool-integrated reasoning and Python code execution can improve problem-solving in large language models, which could enhance the transparency and explanation of recommendation results in real-time systems.

Despite advancements in recommendation models, challenges like data sparsity, overfitting, and dynamic user behavior remain. EAIN addresses these through attention mechanisms, higher-order feature interactions, and position-aware modules, enabling more personalized recommendations.

3. Methodology

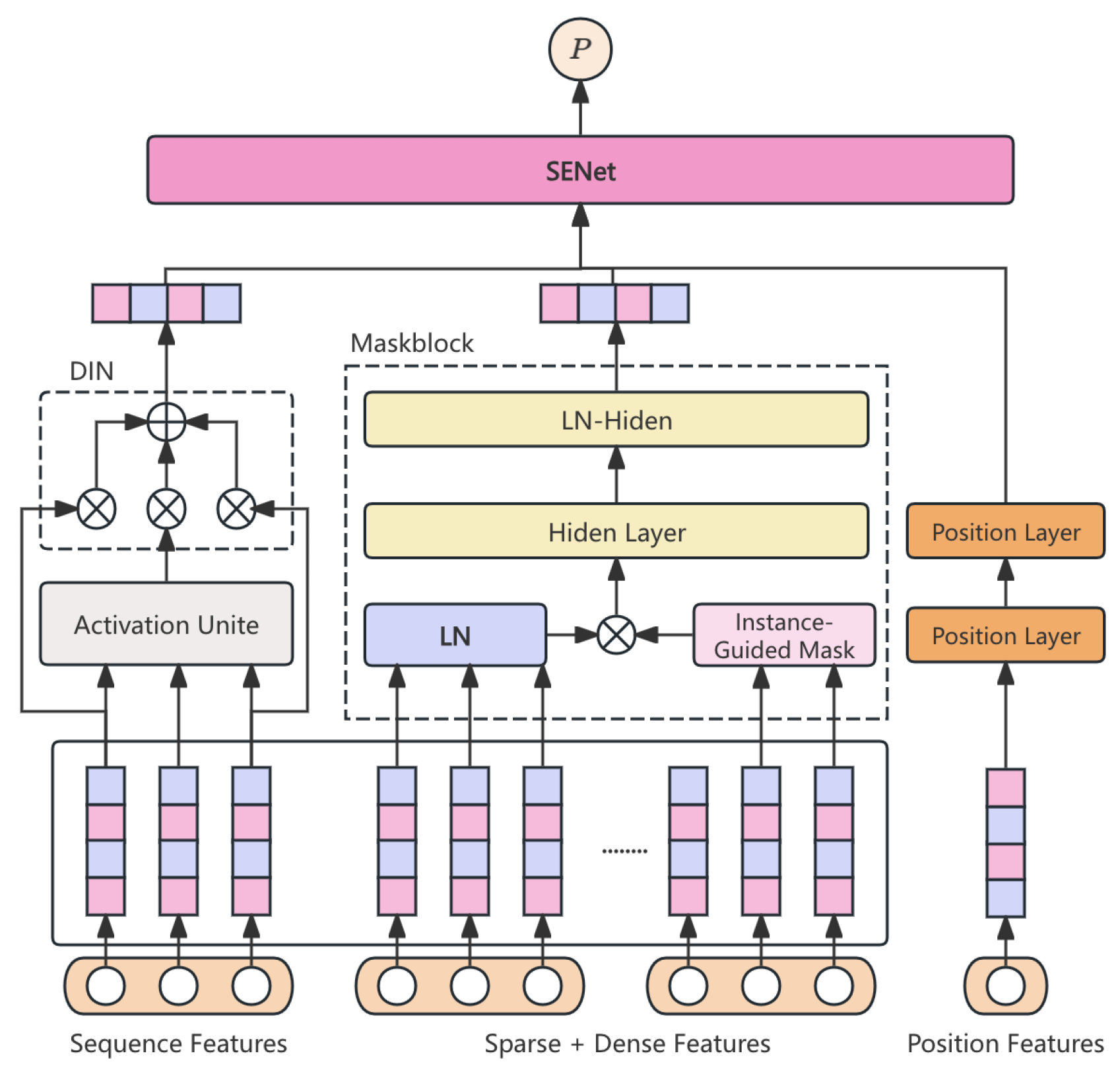

User recommendation systems are essential for improving user experience and boosting e-commerce sales. This study proposes the Enhanced Attention and Interaction Network (EAIN), a deep learning-based recommendation model evaluated on the Amazon e-commerce dataset. EAIN integrates temporal sequence processing, high-order feature interactions, and neural network components including Dynamic Interest Networks (DIN), MaskBlock for selective feature interactions, and Squeeze-and-Excitation Networks (SE-Net) for refined attention. A novel Position-Aware Interaction Module (PAIM) is introduced to encode positional information. Training is optimized through comprehensive data preprocessing and strategic negative sampling. Experimental results highlight EAIN’s superior performance in capturing user preferences and improving recommendation accuracy. The model pipeline is depicted in Figure 1.

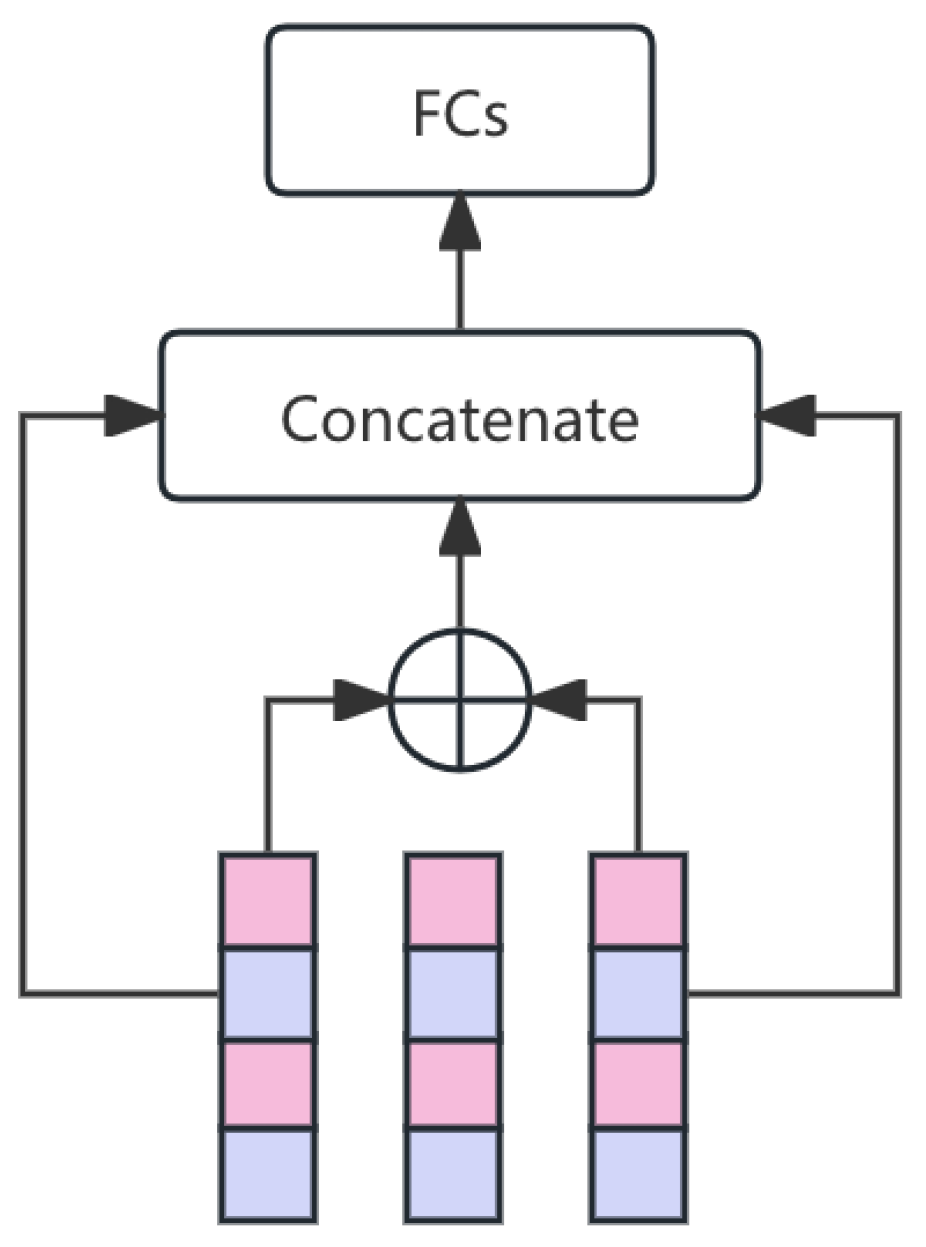

3.1. Dynamic Interest Network (DIN)

The DIN component captures the dynamic interests of users by attending to the historical behaviors most relevant to the candidate item. The core of DIN is the activation unit, whose structure is shown in Figure 2.

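Given a user behavior sequence $\{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_T\}$ and a target item $\mathbf{e}_a$, the attention mechanism is defined as:

$$\alpha_i = \frac{\exp\left(\mathbf{v}^\top \tanh\left(\mathbf{W}_1 \mathbf{e}_i + \mathbf{W}_2 \mathbf{e}_a\right)\right)}{\sum_{j=1}^{T} \exp\left(\mathbf{v}^\top \tanh\left(\mathbf{W}_1 \mathbf{e}_j + \mathbf{W}_2 \mathbf{e}_a\right)\right)}$$

where $\mathbf{W}_1$, $\mathbf{W}_2$, and $\mathbf{v}$ are learnable parameters. The user representation is then aggregated as:

$$\mathbf{u} = \sum_{i=1}^{T} \alpha_i \mathbf{e}_i$$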

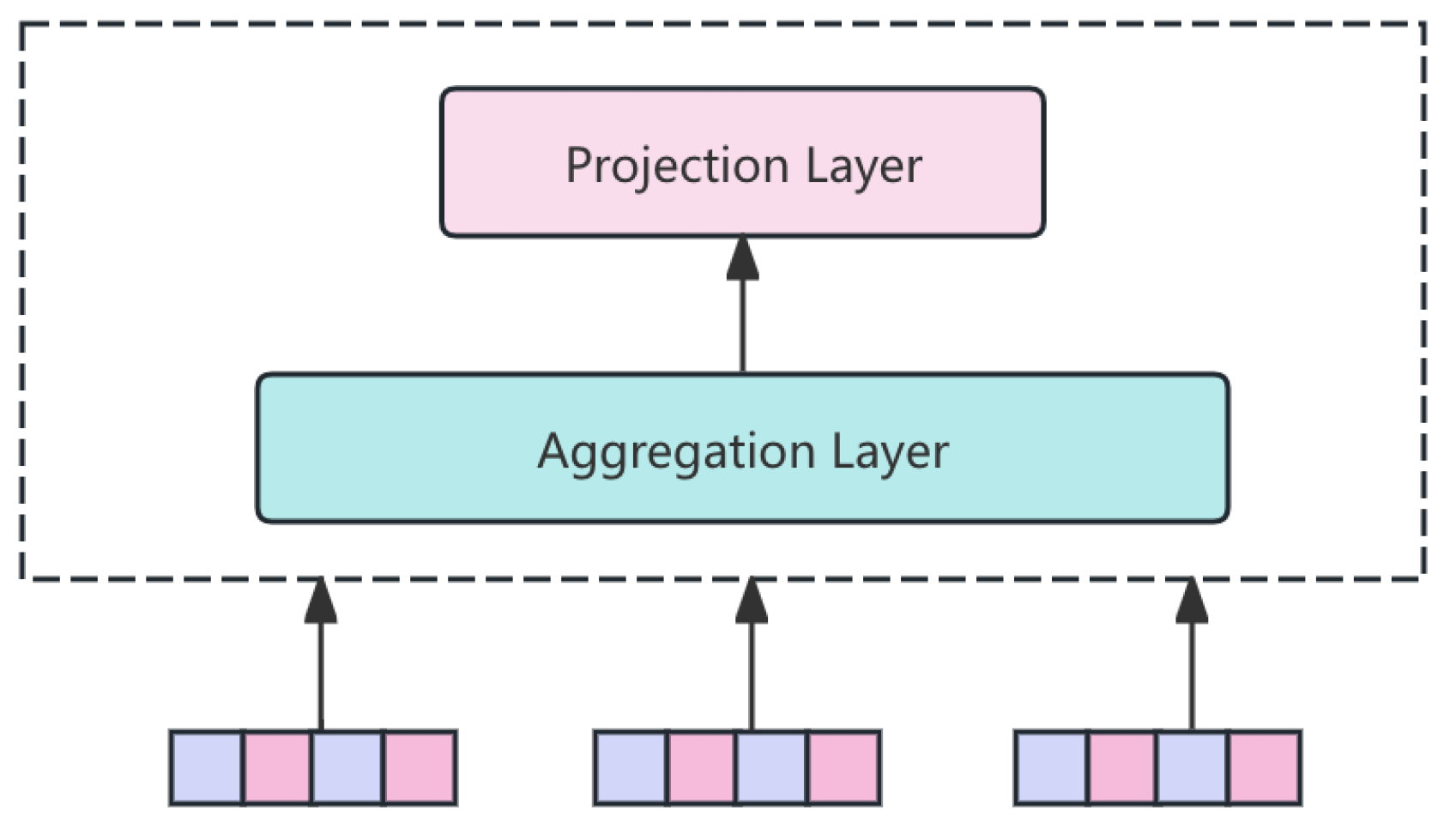
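The attention pooling described in Section 3.1 can be sketched in pure Python as follows. This is a minimal illustration that assumes an additive score $\mathbf{v}^\top \tanh(\mathbf{W}_1 \mathbf{e}_i + \mathbf{W}_2 \mathbf{e}_a)$ normalized with a softmax; the function names, parameter names, and toy values are hypothetical and are not taken from the EAIN implementation.

```python
import math


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def din_attention(behaviors, target, W1, W2, v):
    """Attention-pool a behavior sequence with respect to a target item.

    behaviors: list of T behavior embeddings e_i (equal-length lists)
    target:    embedding e_a of the candidate item
    W1, W2, v: learnable parameters (hypothetical names, fixed toy values here)
    Returns (attention weights alpha_i, pooled user vector u).
    """
    proj_target = matvec(W2, target)
    scores = []
    for e in behaviors:
        # Additive score: v^T tanh(W1 e_i + W2 e_a)
        hidden = [math.tanh(a + b) for a, b in zip(matvec(W1, e), proj_target)]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    # Softmax over the sequence, shifted by the max for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [x / total for x in exps]
    # u = sum_i alpha_i * e_i
    dim = len(behaviors[0])
    user = [sum(w * e[k] for w, e in zip(weights, behaviors)) for k in range(dim)]
    return weights, user


# Toy example: three 2-d behaviors, identity projections, v = [1, 1].
I2 = [[1.0, 0.0], [0.0, 1.0]]
behaviors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
target = [1.0, 0.0]
weights, user = din_attention(behaviors, target, I2, I2, [1.0, 1.0])
print([round(w, 3) for w in weights], [round(u, 3) for u in user])
```

Because the weights depend on the target item, the same behavior history yields a different user vector for each candidate, which is the property the DIN component relies on.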

3.2. MaskBlock for Selective Feature Interactions

MaskBlock selectively masks feature interactions to reduce overfitting and enhance generalization. For feature vectors $\mathbf{x}_i$ and $\mathbf{x}_j$, the interaction is defined as:

$$\mathbf{h}_{ij} = \mathbf{M} \odot \left(\mathbf{x}_i \mathbf{x}_j^\top\right)$$

where $\mathbf{M}$, learned during training, is the masking matrix, and $\odot$ denotes element-wise multiplication. The core feature extraction of MaskBlock employs the instance-guided mask (IGM). As shown in Figure 3, the Aggregation and Projection Layers are fully connected (FC) layers. The Aggregation Layer, parameterized by $\mathbf{W}_{d1}$, integrates global context features and is wider than the subsequent Projection Layer, which reduces dimensionality using $\mathbf{W}_{d2}$.
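A rough pure-Python sketch of a MaskBlock built around an instance-guided mask follows. The mask is produced per instance by a wider aggregation layer with ReLU and a projection layer back to the embedding size, then applied element-wise to the layer-normalized embedding, followed by a fully connected layer, layer normalization, and ReLU. The normalization placement and output layer mirror the MaskBlock of MaskNet as we understand it and are assumptions here, as are all names and the toy weights.

```python
import math


def linear(W, b, x):
    """Fully connected layer: W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def relu(x):
    return [max(0.0, xi) for xi in x]


def layer_norm(x, eps=1e-5):
    """Layer normalization without learned gain/bias (a simplification)."""
    mu = sum(x) / len(x)
    var = sum((xi - mu) ** 2 for xi in x) / len(x)
    return [(xi - mu) / math.sqrt(var + eps) for xi in x]


def instance_guided_mask(v_emb, W_agg, b_agg, W_proj, b_proj):
    """Wider aggregation layer (ReLU) followed by a projection back to len(v_emb)."""
    return linear(W_proj, b_proj, relu(linear(W_agg, b_agg, v_emb)))


def mask_block(v_emb, params):
    """Mask the normalized embedding element-wise, then apply FC + LN + ReLU."""
    mask = instance_guided_mask(v_emb, *params["agg"], *params["proj"])
    masked = [m * e for m, e in zip(mask, layer_norm(v_emb))]
    return relu(layer_norm(linear(*params["out"], masked))), mask


# Hypothetical toy parameters: 2-d input, 4-unit aggregation layer, 2-d output.
params = {
    "agg": ([[1, 0], [0, 1], [1, 1], [-1, 1]], [0, 0, 0, 0]),
    "proj": ([[0.5, 0, 0.5, 0], [0, 0.5, 0, 0.5]], [0, 0]),
    "out": ([[1, 0], [0, 1]], [0, 0]),
}
output, mask = mask_block([1.0, 2.0], params)
print(mask, output)
```

Making the aggregation layer wider than the projection layer lets the mask draw on richer context before being compressed back to the embedding size.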

3.3. Squeeze-and-Excitation Network (SE-Net) Attention

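SE-Net is employed to recalibrate channel-wise feature responses, enhancing the representational power of the network. Given an input feature map $\mathbf{X} \in \mathbb{R}^{H \times W \times C}$, the SE block performs the following operations:

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} \mathbf{X}_{i,j,c}$$

$$\mathbf{s} = \sigma\left(\mathrm{FC}_2\left(\delta\left(\mathrm{FC}_1(\mathbf{z})\right)\right)\right)$$

$$\tilde{\mathbf{X}}_{:,:,c} = s_c \cdot \mathbf{X}_{:,:,c}$$

where $\delta$ is the ReLU activation function, $\sigma$ is the sigmoid function, and FC represents fully connected layers. The output $\tilde{\mathbf{X}}$ is the recalibrated feature map.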
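The squeeze, excitation, and scale steps can be illustrated with a minimal pure-Python SE block. Channels are stored channel-first as flat lists of spatial values. In a recommender the channels would typically be feature-field embeddings, but a generic layout is used here. The weights and the reduction ratio are hypothetical toy values.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def se_block(X, W1, b1, W2, b2):
    """Squeeze-and-Excitation over C channels.

    X: list of C channels, each a flat list of its H*W spatial values.
    W1, b1: FC_1 reducing C -> C/r; W2, b2: FC_2 restoring C/r -> C.
    Returns (recalibrated X, channel weights s).
    """
    # Squeeze: global average pooling per channel.
    z = [sum(ch) / len(ch) for ch in X]
    # Excitation: FC_1 -> ReLU -> FC_2 -> sigmoid.
    h = [max(0.0, sum(w * zi for w, zi in zip(row, z)) + b) for row, b in zip(W1, b1)]
    s = [sigmoid(sum(w * hi for w, hi in zip(row, h)) + b) for row, b in zip(W2, b2)]
    # Scale: multiply every value in channel c by s_c.
    return [[sc * x for x in ch] for sc, ch in zip(s, X)], s


# Two channels of 2 spatial values each, reduction ratio r = 2 (hypothetical weights).
X = [[1.0, 3.0], [0.0, 0.0]]
X_tilde, s = se_block(X, W1=[[1.0, 1.0]], b1=[0.0], W2=[[1.0], [-1.0]], b2=[0.0, 0.0])
print(s, X_tilde)
```

Since the sigmoid keeps each $s_c$ in $(0, 1)$, the block can only attenuate channels relative to one another. It never amplifies them beyond their input scale.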

3.4. Position-Aware Interaction Module (PAIM)

The Position-Aware Interaction Module (PAIM) encodes positional information and captures high-order feature interactions, improving the model’s ability to understand sequence order in user interactions.

Positional Encoding

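Positional encoding is crucial for the model to recognize the order of interactions. We define the positional encoding as:

$$\mathbf{p}_t = \mathrm{PositionEncoding}(t)$$

where $t$ is the position index, and PositionEncoding can be implemented using sinusoidal functions or learned embeddings:

$$\mathrm{PE}(t, 2i) = \sin\left(\frac{t}{10000^{2i/C}}\right), \qquad \mathrm{PE}(t, 2i+1) = \cos\left(\frac{t}{10000^{2i/C}}\right)$$

where $i$ is the dimension index and $C$ is the dimensionality of the encoding.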
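The sinusoidal variant can be sketched as below. Adding the encoding to each interaction embedding (rather than concatenating it) is an assumption made for illustration, and `add_positions` is a hypothetical helper.

```python
import math


def sinusoidal_encoding(t, C):
    """C-dimensional sinusoidal encoding of position t.

    Even dimensions 2i use sin(t / 10000^(2i/C)); odd dimensions 2i+1 use cos.
    """
    pe = [0.0] * C
    for k in range(0, C, 2):  # k plays the role of 2i
        angle = t / (10000 ** (k / C))
        pe[k] = math.sin(angle)
        if k + 1 < C:
            pe[k + 1] = math.cos(angle)
    return pe


def add_positions(sequence):
    """Add p_t to each embedding in a sequence (additive combination is an assumption)."""
    C = len(sequence[0])
    return [[x + p for x, p in zip(e, sinusoidal_encoding(t, C))]
            for t, e in enumerate(sequence)]


print(sinusoidal_encoding(0, 4))  # position 0: sin terms are 0, cos terms are 1
print(add_positions([[0.0] * 4, [0.0] * 4])[1])
```

A learned embedding table indexed by $t$ is the other option mentioned above; the sinusoidal form needs no parameters and is defined for any position.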

High-Order Feature Interactions

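To capture high-order interactions, PAIM employs multiple layers of feature cross modules. The interaction at each layer $l$ is defined as:

$$\mathbf{x}_l = \phi\left(\mathbf{W}_l \, \mathrm{vec}\left(\mathbf{x}_{l-1} \otimes \mathbf{x}_0\right)\right), \quad l = 1, \ldots, L$$

where $\otimes$ denotes the outer product, $\mathrm{vec}(\cdot)$ flattens the resulting matrix into a vector, $\mathbf{x}_0$ is the input feature vector, $\mathbf{W}_l$ is the weight matrix for layer $l$, and $\phi$ is a non-linear activation function such as ReLU. The final high-order interaction representation is:

$$\mathbf{h} = \mathrm{Concat}(\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_L)$$

where $L$ is the number of interaction layers.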
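A minimal sketch of stacked cross layers is shown below. It assumes each layer takes the outer product of the previous layer's output with the input $\mathbf{x}_0$, flattens it, applies a weight matrix and ReLU, and that the layer outputs are concatenated at the end. These structural details and the toy weights are illustrative assumptions.

```python
def outer_flat(a, b):
    """vec(a ⊗ b): the outer product of a and b, flattened row by row."""
    return [ai * bj for ai in a for bj in b]


def cross_layers(x0, weights):
    """Stack of cross layers x_l = ReLU(W_l vec(x_{l-1} ⊗ x_0)).

    x0:      input feature vector of length d
    weights: list of L matrices, each d_out x (len(x_{l-1}) * d)
    Returns Concat(x_1, ..., x_L).
    """
    x = x0
    outputs = []
    for W in weights:
        z = outer_flat(x, x0)
        x = [max(0.0, sum(w * zi for w, zi in zip(row, z))) for row in W]
        outputs.append(x)
    return [v for layer in outputs for v in layer]


# Hypothetical 2 x 4 weight matrix that picks the terms x_{l-1}[0]*x0[0] and x_{l-1}[1]*x0[1].
W = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
h = cross_layers([1.0, 2.0], [W, W])
print(h)
```

Each additional layer multiplies in another copy of $\mathbf{x}_0$, so layer $l$ can express interactions up to order $l + 1$. The cost is a weight matrix whose width grows with $d^2$.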

3.5. Loss Function

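The model is trained using the binary cross-entropy loss function, which suits binary classification tasks such as click prediction:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log \left(1 - \hat{y}_i\right) \right]$$

where $N$ is the number of training samples, $y_i \in \{0, 1\}$ is the ground-truth label, and $\hat{y}_i$ is the predicted probability. Additionally, to reduce overfitting and improve generalization, L2 regularization is applied:

$$\mathcal{L} = \mathcal{L}_{\mathrm{BCE}} + \lambda \lVert \Theta \rVert_2^2$$

where $\lambda$ is the regularization coefficient and $\Theta$ represents all model parameters.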
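The training objective can be sketched directly from its definition: mean binary cross-entropy plus an L2 penalty over a flat list of parameters. Clipping predictions away from 0 and 1 is a common numerical safeguard that we add as an assumption; the value of `lam` is a hypothetical placeholder.

```python
import math


def bce_loss(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


def l2_penalty(params, lam):
    """lambda * ||Theta||_2^2 over a flat list of parameters."""
    return lam * sum(w * w for w in params)


def total_loss(y_true, y_pred, params, lam):
    return bce_loss(y_true, y_pred) + l2_penalty(params, lam)


print(total_loss([1, 0, 1], [0.9, 0.2, 0.6], params=[0.5, -1.0, 2.0], lam=1e-3))
```

In a deep learning framework the same objective is usually obtained from a built-in BCE loss, with the L2 term added explicitly or via weight decay.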

3.6. Data Preprocessing

Effective data preprocessing is essential to ensure the quality and reliability of the input data for the recommendation model. In this study, we employ the following preprocessing techniques:

3.6.1. ID-Based Categorical Feature Embedding

To handle high-dimensional sparse representations, ID-based categorical features are transformed into dense embedding vectors. For a categorical feature $C$ with $|C|$ unique categories, each category $c_k$ is represented as:

$$\mathbf{e}_k = \mathrm{Embedding}(c_k) \in \mathbb{R}^{d}$$

where $\mathbf{e}_k$ is the embedding vector of dimension $d$. This process captures latent relationships and enhances neural network learning efficiency.
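An ID embedding is simply a row lookup in a $|C| \times d$ table. This is equivalent to multiplying a one-hot vector by the table, but without materializing the sparse vector. The sketch below reserves row 0 for categories unseen during training, which is an assumption not stated in the text; the class name and initialization scale are also hypothetical.

```python
import random


class EmbeddingTable:
    """Dense embedding lookup for one ID-based categorical feature.

    Row 0 is reserved for categories unseen at training time (an assumption).
    Equivalent to multiplying a one-hot vector by an (|C| + 1) x d matrix.
    """

    def __init__(self, categories, d, seed=0):
        rng = random.Random(seed)
        self.index = {c: k + 1 for k, c in enumerate(categories)}
        # Small random initialization; in training these rows would be learned.
        self.weights = [[rng.gauss(0.0, 0.01) for _ in range(d)]
                        for _ in range(len(categories) + 1)]

    def lookup(self, category):
        return self.weights[self.index.get(category, 0)]


table = EmbeddingTable(["book", "phone", "shoe"], d=8)
print(len(table.lookup("phone")), table.lookup("unknown") is table.weights[0])
```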

3.6.2. Skive Above Negative Sampling

The Skive Above method refines negative sampling by discarding noisy samples after the last positive interaction in each request_id. For interactions $\{(x_i, y_i)\}_{i=1}^{n}$, where $y_i = 1$ denotes positive and $y_i = 0$ negative, the last positive index $p$ is:

$$p = \max\{\, i \mid y_i = 1 \,\}$$

The filtered dataset $\mathcal{D}'$ is defined as:

$$\mathcal{D}' = \{\, (x_i, y_i) \mid i \le p \,\}$$

This approach emphasizes informative negatives, improving training efficiency and recommendation quality.
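The filtering rule can be sketched as a group-by over request_id. Rows are sorted by displayed position within each request, and everything up to and including the last positive is kept. The tuple layout is a hypothetical choice. The text does not say what happens to requests with no positive interaction, so dropping them here is an assumption.

```python
from collections import defaultdict


def skive_above(rows):
    """Keep each request's samples up to and including its last positive.

    rows: iterable of (request_id, position, label) tuples, label 1 = positive.
    Requests with no positive are dropped (an assumption; the text does not say).
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row[0]].append(row)
    kept = []
    for items in groups.values():
        items.sort(key=lambda r: r[1])  # order by displayed position
        positives = [i for i, r in enumerate(items) if r[2] == 1]
        if not positives:
            continue
        p = positives[-1]  # index of the last positive
        kept.extend(items[: p + 1])
    return kept


rows = [("r1", 0, 0), ("r1", 1, 1), ("r1", 2, 0), ("r1", 3, 1), ("r1", 4, 0),
        ("r2", 0, 0), ("r2", 1, 0)]
print(skive_above(rows))
```

Negatives that sit between positives (position 2 of `r1` above) are retained, while the trailing negative at position 4 is discarded.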

3.7. Feature Engineering

Robust feature engineering is vital for capturing the underlying patterns and temporal dynamics present in user interactions. We implement the following feature engineering strategies:

3.7.1. Temporal Sequence Construction

To capture temporal dependencies in user behavior, sequence features are constructed by segmenting interactions into time slices. For a given time $t$, the sequence of the most recent $n$ interactions is:

$$\mathbf{S}_t = \left[\mathbf{x}_{t-n+1}, \mathbf{x}_{t-n+2}, \ldots, \mathbf{x}_t\right]$$

where each $\mathbf{x}_i$ is an embedded interaction vector. This representation enables the model to learn evolving user preferences.
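A minimal sketch of building a fixed-length window of the $n$ most recent interactions at or before time $t$ follows. Left-padding short histories with a padding vector is an assumption made so that every sequence has the same length; the helper name is hypothetical. For click prediction one may instead want a strict `< t` filter so that the interaction being predicted is not part of its own input.

```python
def recent_sequence(history, t, n, pad):
    """Return the n most recent interaction embeddings at or before time t.

    history: list of (timestamp, embedding) pairs in any order.
    Shorter histories are left-padded with `pad` so every sequence has length n.
    """
    past = sorted((h for h in history if h[0] <= t), key=lambda h: h[0])
    seq = [emb for _, emb in past[-n:]] if n > 0 else []
    return [pad] * (n - len(seq)) + seq


history = [(1, [1.0]), (3, [3.0]), (2, [2.0]), (5, [5.0])]
print(recent_sequence(history, t=3, n=4, pad=[0.0]))
```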

3.7.2. Contextual Feature Augmentation

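To enhance user behavior modeling, contextual features such as time of day, device type, and location are integrated with sequential data. The augmented feature vector at time $t$ is:

$$\mathbf{f}_t = \mathrm{Concat}(\mathbf{S}_t, \mathbf{c}_t)$$

where $\mathbf{S}_t$ is the interaction sequence at time $t$, $\mathbf{c}_t$ is the contextual feature vector at time $t$, and Concat denotes concatenation. This enriched representation improves the model’s accuracy and personalization capabilities.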
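One way to assemble the augmented vector is sketched below: the sequence embeddings are flattened and concatenated with a context vector built from time of day, device type, and location. The cyclic sine/cosine encoding of the hour and the one-hot encodings of device and region are illustrative choices, not details given in the text, and the vocabularies are hypothetical.

```python
import math


def one_hot(value, vocabulary):
    return [1.0 if value == v else 0.0 for v in vocabulary]


def augment(sequence, hour, device, devices, region, regions):
    """f_t = Concat(flattened S_t, c_t), with c_t built from time, device and location."""
    flat_seq = [x for emb in sequence for x in emb]
    # Cyclic encoding keeps 23:00 and 00:00 close together.
    angle = 2.0 * math.pi * hour / 24.0
    time_feat = [math.sin(angle), math.cos(angle)]
    context = time_feat + one_hot(device, devices) + one_hot(region, regions)
    return flat_seq + context


f_t = augment([[0.1, 0.2], [0.3, 0.4]], hour=6, device="mobile",
              devices=["desktop", "mobile", "tablet"], region="EU", regions=["EU", "US"])
print(len(f_t), f_t)
```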

3.7.3. High-Order Feature Interactions

To capture complex relationships between features, we construct high-order feature interactions using polynomial expansions or neural network-based interaction layers. Let $\mathbf{x} = [\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_m]$ represent the concatenated feature vector composed of $m$ field vectors. A second-order interaction between fields $i$ and $j$ can be represented as:

$$\mathbf{z}_{ij} = \mathbf{x}_i \odot \mathbf{x}_j, \quad 1 \le i < j \le m$$

where $\odot$ denotes element-wise multiplication. These interactions are then fed into subsequent layers of the neural network to model higher-order dependencies.
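The pairwise step can be sketched as an element-wise product over every pair of field vectors, followed by a flattening step so the results can feed a dense layer. Ordering the flattened output by $(i, j)$ is an arbitrary but fixed choice made for illustration.

```python
from itertools import combinations


def pairwise_interactions(fields):
    """Second-order interactions z_ij = x_i ⊙ x_j for every field pair i < j.

    fields: list of m equal-length field vectors.
    Returns a dict keyed by (i, j) with m*(m-1)/2 entries.
    """
    return {
        (i, j): [a * b for a, b in zip(fields[i], fields[j])]
        for i, j in combinations(range(len(fields)), 2)
    }


def flatten_interactions(z):
    """Concatenate all z_ij in (i, j) order so they can feed the next dense layer."""
    return [v for key in sorted(z) for v in z[key]]


z = pairwise_interactions([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
print(z, flatten_interactions(z))
```

The number of pairs grows quadratically with $m$, which is why higher-order terms are usually left to the subsequent neural layers rather than enumerated explicitly.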

4. Evaluation Metrics

The performance of the recommendation model is evaluated using several metrics:

4.1. Area Under the ROC Curve (AUC)

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR} \; d(\mathrm{FPR})$$

where TPR is the true positive rate and FPR is the false positive rate.
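The area under the ROC curve equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counted as one half. The minimal sketch below computes it that way by direct pairwise comparison. This is quadratic in the number of samples and is meant only to make the definition concrete.

```python
def auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win. O(P * N) pairwise comparison, fine for a sketch;
    a sort-based rank computation scales better on large datasets.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(auc([1, 0, 1, 0], [0.8, 0.6, 0.4, 0.2]))
```

A value of 0.5 corresponds to random ranking and 1.0 to a perfect separation of positives from negatives.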

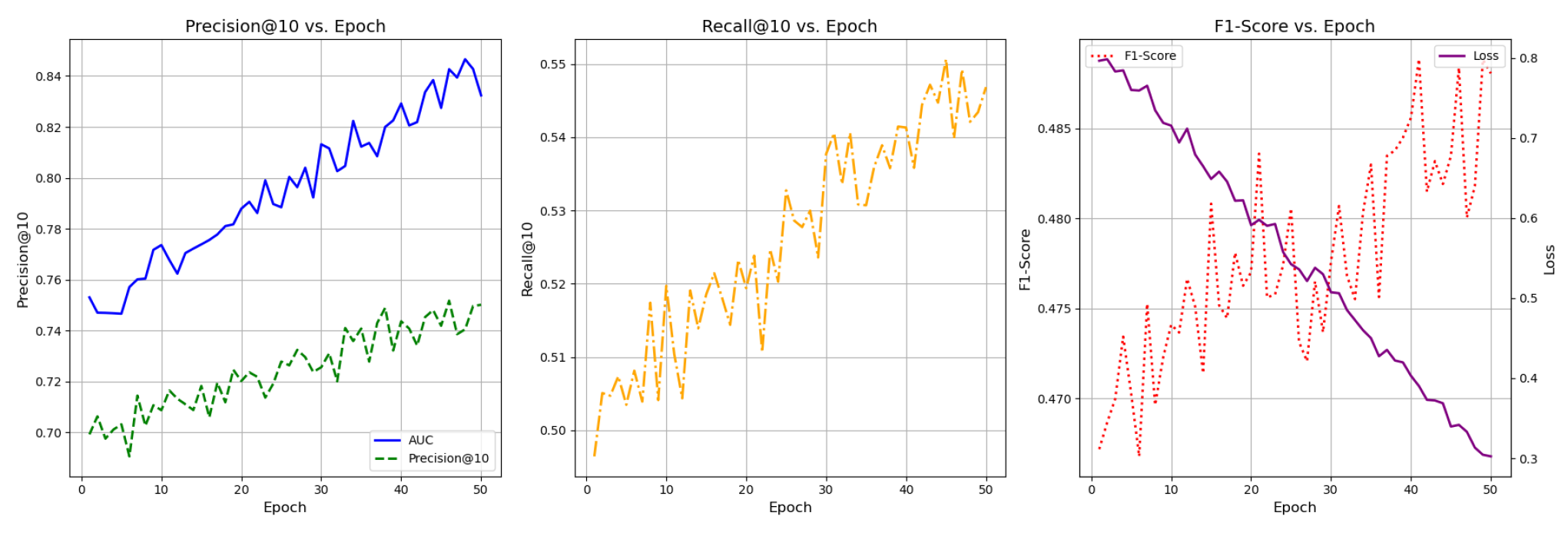

5. Experimental Results

Experiments were conducted on the Amazon e-commerce dataset to evaluate the proposed model against several baselines, including Logistic Regression, Matrix Factorization, and standard Deep Neural Networks.

The evolution of the training metrics over the course of training is shown in Figure 4.

As shown in Table 1, the proposed model outperforms all baseline models across all evaluation metrics.

6. Conclusions

This paper presents a user recommendation system for e-commerce platforms built on deep learning techniques. Through careful data preprocessing, comprehensive feature engineering, and the integration of attention and feature interaction components (DIN, MaskBlock, SE-Net, and PAIM), the proposed model achieves superior performance compared to traditional methods. The experimental results support the effectiveness of the model in capturing user preferences and contribute insights to the application of machine learning and deep learning in recommendation systems.

References

- Yin, Y.; Huang, C.; Sun, J.; Huang, F. Multi-head self-attention recommendation model based on feature interaction enhancement. In Proceedings of the ICC 2022-IEEE International Conference on Communications. IEEE, 2022, pp. 1740–1745.

- Xue, N.; Liu, B.; Guo, H.; Tang, R.; Zhou, F.; Zafeiriou, S.; Zhang, Y.; Wang, J.; Li, Z. Autohash: Learning higher-order feature interactions for deep CTR prediction. IEEE Transactions on Knowledge and Data Engineering 2020, 34, 2653–2666.

- Lu, J.; Long, Y.; Li, X.; Shen, Y.; Wang, X. Hybrid Model Integration of LightGBM, DeepFM, and DIN for Enhanced Purchase Prediction on the Elo Dataset. In Proceedings of the 2024 IEEE 7th International Conference on Information Systems and Computer Aided Education (ICISCAE). IEEE, 2024, pp. 16–20.

- Lu, J. Enhancing Chatbot User Satisfaction: A Machine Learning Approach Integrating Decision Tree, TF-IDF, and BERTopic. Preprints 2024.

- Jin, T. Integrated Machine Learning for Enhanced Supply Chain Risk Prediction. 2025.

- Li, S. Enhancing Mathematical Problem Solving in Large Language Models through Tool-Integrated Reasoning and Python Code Execution. In Proceedings of the 2024 5th International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE). IEEE, September 2024, pp. 165–168.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).