1. Introduction

Sleep is a fundamental biological mechanism with a pivotal role in preserving cognitive and physical wellbeing and in proper emotion regulation [1]. However, aging populations may experience sleep disorders such as insomnia [2] or sleep fragmentation [3] due to stress-related factors [4], neurodegeneration [5] or other chronic conditions [6], as well as poor sleep quality [7]. There is now concrete evidence from epidemiological studies that more than half of older adults report sleep disturbances [8]. Apart from being detrimental to quality of life, sleep disturbances may also indicate the progression of physical and mental illnesses such as neurodegeneration [5] and mental disorders [9].

As we age, sleep architecture changes: the duration of slow-wave (N3) and Rapid Eye Movement (REM) sleep decreases, often accompanied by more frequent awakenings and increased sleep latency [10]. These disturbances are commonly associated with comorbidities such as chronic conditions (cardiovascular disease, diabetes, chronic pain) [6], psychiatric disorders (depression, anxiety) [11] and lifestyle factors such as sedentary behavior and physical inactivity [12]. Importantly, emerging evidence suggests that sleep disturbances may precede and potentially contribute to the development of neurodegenerative disorders by accelerating the accumulation of pathological proteins (e.g., amyloid-beta and tau in Alzheimer's disease (AD)) and impairing clearance mechanisms such as glymphatic flow [13]. There is also concrete evidence that irregular work schedules and shift work induce sleep disorders in nurses. A previous study employed the Bergen Shift Work Sleep Questionnaire (BSWSQ) to survey 1,586 Norwegian nurses and found that night shifts and rotating schedules were most strongly associated with insomnia and sleepiness. Moreover, three-shift rotations were associated with a higher insomnia rate than permanent night shifts [14].

Given the growing aging population and the rising incidence of neurodegenerative diseases, understanding the bidirectional relationship between sleep disorders and neurodegeneration is critical. Interventions aimed at improving sleep quality through behavioral, environmental, or pharmacological means may offer promising avenues to delay or mitigate the onset and progression of cognitive impairment in older adults [15].

Natural Language Processing (NLP) enables the analysis of unstructured text from clinical notes, Electronic Health Records (EHRs) and patient queries, allowing sleep-related information to be extracted automatically. Sleep text classification takes narrative data from users as input and can identify a wide range of sleep-related symptoms, such as those of insomnia, sleep apnea, parasomnias and hypersomnias.

Early studies in this field aimed to identify publication trends in sleep disorder research using text mining methods [16]. One such study performed text mining on 4,515 PubMed articles through cluster analysis and logistic regression. Although it was a pioneering NLP study of sleep-related textual data, it suffered from several limitations, especially when dealing with ambiguous terminology. NLP techniques have also been used to extract sleep-related symptomatology from EHRs; their results are summarized in a systematic review of 27 NLP studies on EHR free-text narratives [17]. The authors concluded that most of the studies focused on developmental aspects rather than on the symptomatology itself. NLP techniques such as topic modeling and sentiment analysis can also be combined with traditional outcome measures to provide hybrid approaches for evaluating the efficacy of sleep-related interventions [18]. Recently, Large Language Models (LLMs) have brought revolutionary changes to the NLP field, and many patients now turn to publicly available LLMs for medical advice. A recent blinded study compared the responses of ChatGPT and of sleep specialists, as rated by experts and laypeople [19]. Laypeople rated ChatGPT higher for emotional supportiveness and clarity, whereas experts rated its accuracy slightly lower. The study concluded that ChatGPT responses may contain inaccuracies and lack real-world clinical context.

Although there is concrete evidence associating sleep disorders with cognitive decline and with physical and mental wellbeing, sleep disorders are mainly identified in clinical environments through polysomnography [20] or in real-world settings with wearables and/or mobile applications [21]. Most methodological approaches remain limited by time-intensive diagnostic protocols, subjective self-reports, and the fragmented integration of sleep symptomatology across health systems [22]. To date, traditional methods have not exploited the wealth of narratives that patients use to describe their sleep issues. Although highly subjective, these narratives may implicitly reveal the underlying causes of sleep disturbances, such as stress, neurodegeneration, poor sleep hygiene, or physiological conditions like sleep apnea [23].

The rationale of the present study is to contribute to the research agenda by bridging a significant gap between qualitative symptom expression and structured clinical interpretation. Existing NLP approaches in healthcare focus on diagnosis or named-entity recognition without accounting for the latent semantic cues that suggest cause-specific sleep disturbances. Our aim is to combine a publicly available, custom-generated dataset with word embeddings and transformer architectures to offer high-resolution insight into symptom patterns that would otherwise remain unclassified or misinterpreted.

The proposed pipeline represents a scalable, non-invasive and efficient alternative that enables the early detection and differentiation of sleep problems across diverse and/or remote populations. We first investigated baseline models founded on naïve techniques and then explored word embeddings and transformer-based models to obtain more balanced performance across categories. The latter improved the robustness of our approach, enabling it to process unstructured textual input derived from patient self-reports, electronic health records and chatbot conversations, and to classify it into five clinically meaningful categories. These categories not only differentiate between normal and disordered sleep but also identify probable etiologies: (1) stress-related factors, (2) neurodegenerative processes, (3) breathing-related disorders and (4) poor sleep habits.

2. Materials and Methods

2.1. Dataset

We constructed a dataset of narrative, sleep-related text samples in sentence form. The text samples were derived from electronic sources describing the causes and factors of the main sleep disorders, such as the National Sleep Foundation (NSF) [24], the American Academy of Sleep Medicine (AASM) [25], the Centers for Disease Control and Prevention (CDC) – Sleep and Sleep Disorders [26], the National Institutes of Health (NIH) – Sleep Disorders Information [27] and the World Sleep Society [28]. Each sentence is labeled according to the underlying reason for sleep quality or disturbance. The dataset contains a total of 623 text entries, where each entry is a free-form narrative describing an individual's sleep experience. Each entry includes two fields:

The first (text) is a natural language description of a sleep-related experience.

The second (label) is an integer from 0 to 4, representing the primary cause or nature of the sleep condition.

The label encoding is as follows:

0 – Sleep disorders related to stress (e.g., interpersonal conflict, job insecurity, grief, emotional distress).

1 – Sleep disturbances due to neurodegeneration or chronic conditions (e.g., dementia, diabetes, hypertension).

2 – Breathing-related sleep disorders, especially sleep apnea and its effects (e.g., snoring, gasping, CPAP use).

3 – Sleep disruption due to poor sleep habits or environmental/lifestyle factors (e.g., noise, caffeine, light exposure, irregular schedules).

4 – Normal or improved sleep experiences or narratives of recovered/restful sleep.

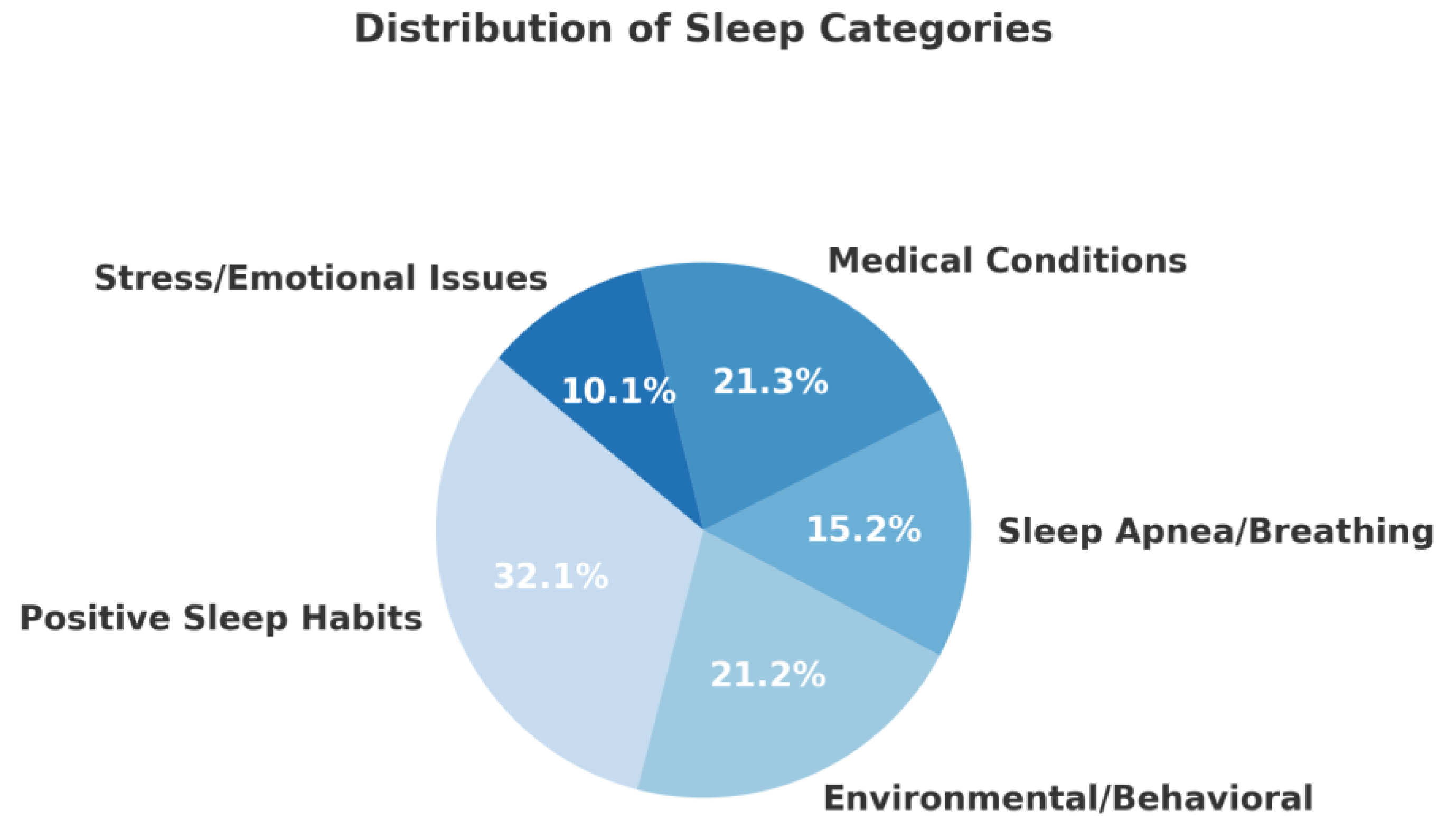

The dataset consists of 43 instances (6.9%) of sleep disorders related to stress/emotional issues (label 0), 156 instances (25.0%) of sleep disorders due to medical conditions (label 1), 66 instances (10.6%) of breathing-related sleep disorders (label 2), 98 instances (15.7%) of poor sleep habits attributed to environmental/behavioral factors (label 3), in accordance with a study investigating shift work in Norwegian nurses [14], and 260 instances (41.7%) of good sleep quality (label 4). The dataset is moderately imbalanced, with Category 4 (positive sleep experiences) comprising over 40% of the sample. The two smallest categories (stress/emotional and breathing-related disorders) together account for only about 18% of the data. This imbalance should be considered when training machine learning models, especially when accuracy is used as a metric, and may require techniques such as class weighting, resampling, or stratified cross-validation. The distribution of the text samples across the different categories is visualized in Figure 1.
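The percentages above, and the effect of class weighting, can be checked with a few lines of standard-library Python. The counts come from the text; balanced_weights applies the n_samples / (n_classes × count) rule that scikit-learn documents for class_weight='balanced'. The function names are illustrative, not part of the released code.

```python
# Class counts per label, as reported for the dataset (labels 0-4).
COUNTS = {0: 43, 1: 156, 2: 66, 3: 98, 4: 260}


def class_shares(counts):
    """Percentage of the dataset represented by each label."""
    total = sum(counts.values())
    return {label: 100.0 * n / total for label, n in counts.items()}


def balanced_weights(counts):
    """Weights inversely proportional to class frequency:
    n_samples / (n_classes * count), the 'balanced' heuristic."""
    total = sum(counts.values())
    k = len(counts)
    return {label: total / (k * n) for label, n in counts.items()}


if __name__ == "__main__":
    shares, weights = class_shares(COUNTS), balanced_weights(COUNTS)
    for label in COUNTS:
        print(f"label {label}: {shares[label]:5.1f}%  weight {weights[label]:.2f}")
```

With these counts, label 0 would receive a weight of about 2.90 and label 4 about 0.48, so a misclassified stress-related narrative would cost roughly six times (260/43 ≈ 6.05) as much as a misclassified good-sleep narrative during weighted training.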

The dataset aims to integrate the scientific evidence on good sleep practices and sleep-associated disorders as defined by world-leading organizations [24,25,26,27,28] and to transform those guidelines into patient-related narratives. Its construction is an ongoing effort, and the current version is not balanced across all classification categories. Further enrichment is therefore needed, especially for sleep disorders associated with mental health factors. Future dataset versions should also complement the existing surrogate data with real-world instances from lived experience. The dataset is publicly available at the following link [29] and can be applied to several research and development areas, including:

Evaluation of machine learning algorithms for automated classification of sleep disturbances from narrative text.

Identification of thematic patterns in personal sleep narratives.

Training of models capable of distinguishing between multiple etiologies of sleep disturbance.

Development of personalized sleep health interventions and digital mental health tools that rely on natural language input from users.

2.2. Pre-Processing

We employed several resources from the Natural Language Toolkit (NLTK) library to pre-process the textual data as follows:

The Punkt tokenizer models were used for sentence splitting and word tokenization, i.e., to split a paragraph into sentences or words.

The ‘stopwords’ corpus provides a list of common stopwords (‘the’, ‘is’, ‘and’, etc.). We used it to filter out frequent words that usually carry little meaning in text analysis.

The WordNet lexical database was used for lemmatization, which reduces words to their base form.

The ‘averaged_perceptron_tagger’ resource provides the part-of-speech (POS) tagger, which tags words in a sentence with their grammatical role (noun, verb, adjective, etc.).

The pre-processing code accomplished the following tasks: 1) Lowercasing, 2) Removing punctuation, 3) Tokenization, 4) Stopword removal, 5) POS tagging, 6) Lemmatization.
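A minimal, standard-library sketch of steps 1 to 4 is given below. It is not the authors' code: a small hypothetical stopword set stands in for NLTK's full English list, and a regular expression stands in for the Punkt-based tokenizer. Steps 5 and 6 would normally call nltk.pos_tag and WordNetLemmatizer().lemmatize(word, pos); the illustrative penn_to_wordnet helper shows why POS tagging precedes lemmatization, since the lemmatizer expects a WordNet part-of-speech code rather than a Penn Treebank tag.

```python
import re
import string

# Small illustrative stopword set; the pipeline above uses NLTK's English list.
STOPWORDS = {"the", "is", "and", "a", "an", "i", "my", "at", "of", "to", "me"}


def preprocess(text, stopwords=STOPWORDS):
    """Steps 1-4: lowercase, strip punctuation, tokenize, remove stopwords."""
    text = text.lower()                                               # 1) lowercasing
    text = text.translate(str.maketrans("", "", string.punctuation))  # 2) punctuation removal
    tokens = re.findall(r"[a-z0-9]+", text)                           # 3) tokenization (ASCII only)
    return [t for t in tokens if t not in stopwords]                  # 4) stopword removal


def penn_to_wordnet(tag):
    """Map a Penn Treebank tag (the tagger's output) to the WordNet POS codes
    the lemmatizer expects in step 6; unknown tags default to noun."""
    if tag.startswith("J"):
        return "a"  # adjective
    if tag.startswith("V"):
        return "v"  # verb
    if tag.startswith("R"):
        return "r"  # adverb
    return "n"      # noun
```

For example, preprocess("I wake up at 3 a.m. and the noise is LOUD!") returns ['wake', 'up', '3', 'am', 'noise', 'loud'] with the toy stopword set above.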

2.3. Baseline Model

First, we established a baseline model by implementing a supervised machine learning pipeline using a Multinomial Naïve Bayes classifier. The dataset was preprocessed using the Natural Language Toolkit (NLTK); pre-processing involved the removal of stopwords and punctuation, and the text was tokenized to retain only semantically relevant tokens. The cleaned dataset was then split into training (80%) and testing (20%) sets using stratified random sampling, so that each category is represented in the same proportion in both sets.
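The stratified 80/20 split can be sketched without external libraries as follows; in practice scikit-learn's train_test_split(..., test_size=0.2, stratify=labels) serves the same purpose. The function name and the fixed seed are illustrative assumptions. Applied to the reported class counts, this sketch places 9, 31, 13, 20 and 52 entries of labels 0 to 4 in the test set (125 in total), leaving 498 entries for training.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_size=0.2, seed=42):
    """Return (train_idx, test_idx) so that each label keeps roughly the
    same proportion in both subsets."""
    by_label = defaultdict(list)
    for idx, y in enumerate(labels):
        by_label[y].append(idx)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train_idx, test_idx = [], []
    for _, idxs in sorted(by_label.items()):
        idxs = idxs[:]  # copy before shuffling
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_size)  # per-class test quota
        test_idx.extend(idxs[:n_test])
        train_idx.extend(idxs[n_test:])
    return sorted(train_idx), sorted(test_idx)
```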

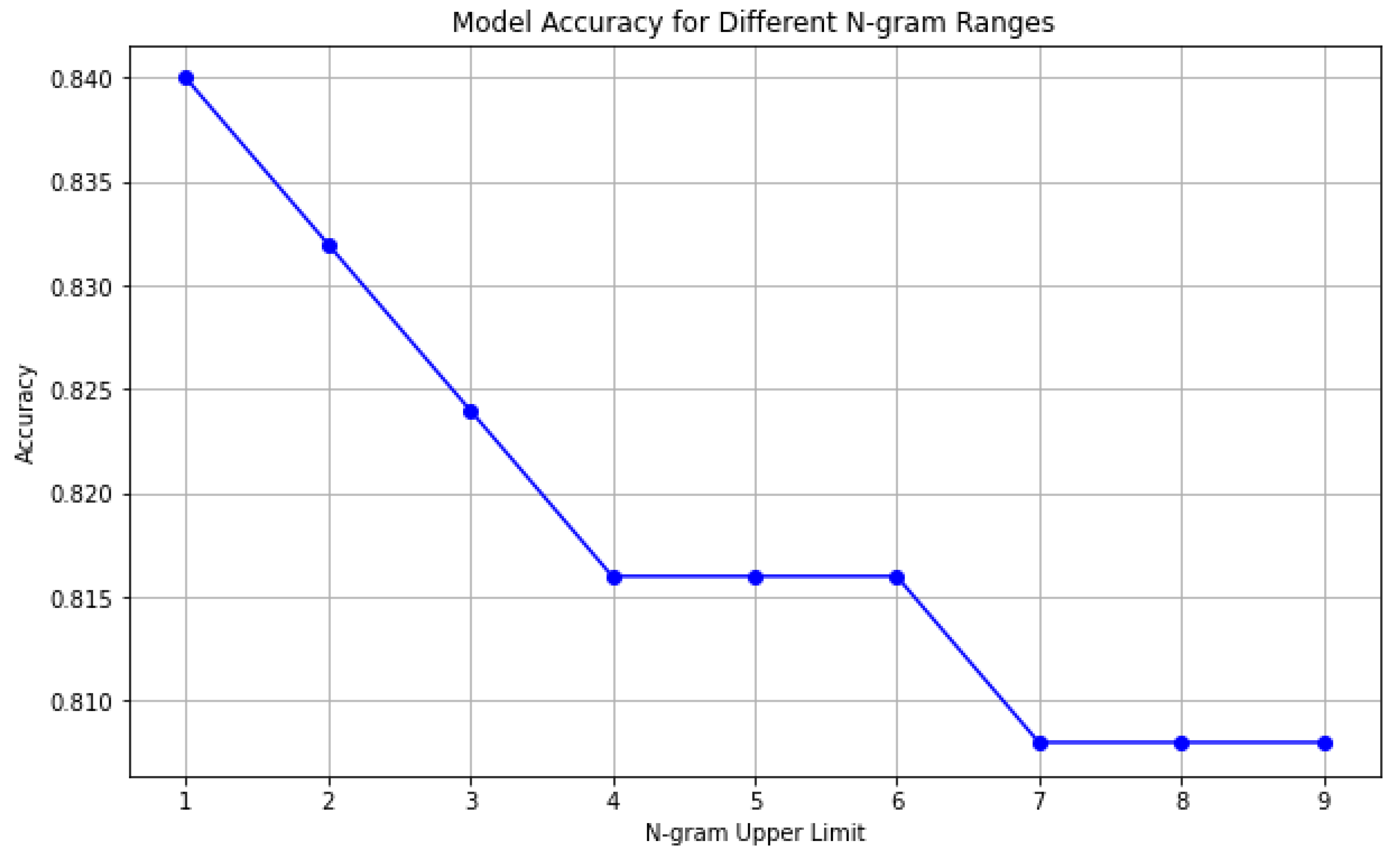

Textual features were extracted with a Bag-of-Words model via CountVectorizer. We experimented with n-gram ranges from unigrams to 6-grams. For each n-gram configuration, a separate Multinomial Naive Bayes classifier was trained on the resulting feature vectors and evaluated on the test set using classification accuracy. The accuracy results were plotted to visualize the relationship between n-gram size and predictive performance. This approach provides a scalable and interpretable framework for detecting sleep disorder patterns in narrative data; it formed the baseline model and laid the groundwork for more advanced methods, such as contextual word embeddings and transformer-based models.
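To make the baseline concrete, the following dependency-free sketch builds n-gram features and trains a Multinomial Naive Bayes classifier with additive (Laplace) smoothing, alpha = 1, which is also the default of scikit-learn's MultinomialNB. It assumes that each configuration uses n-grams of exactly size n, i.e. CountVectorizer(ngram_range=(n, n)); the text does not state whether lower-order n-grams were also included. Class and function names are illustrative, and the toy sentences are invented for demonstration, not taken from the dataset.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Contiguous n-grams of exactly size n, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


class MultinomialNB:
    """Multinomial Naive Bayes over bag-of-n-gram counts with additive smoothing."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha

    def fit(self, docs, labels):
        # docs: list of feature lists (e.g. n-grams); labels: list of class ids
        self.classes = sorted(set(labels))
        label_counts = Counter(labels)
        self.log_prior = {c: math.log(label_counts[c] / len(labels)) for c in self.classes}
        self.counts = {c: Counter() for c in self.classes}
        for feats, y in zip(docs, labels):
            self.counts[y].update(feats)
        self.vocab = set().union(*self.counts.values())
        self.totals = {c: sum(self.counts[c].values()) for c in self.classes}
        return self

    def predict_one(self, feats):
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            denom = self.totals[c] + self.alpha * v
            score = self.log_prior[c]
            for f in feats:
                # Features never seen in training are skipped, mirroring a
                # CountVectorizer fitted on the training set only.
                if f in self.vocab:
                    score += math.log((self.counts[c][f] + self.alpha) / denom)
            scores[c] = score
        return max(scores, key=scores.get)


if __name__ == "__main__":
    # Toy, invented sentences for illustration only (not from the dataset).
    train = [
        ("i snore loudly and wake up gasping", 2),
        ("my cpap machine helps my snoring", 2),
        ("work stress keeps me awake at night", 0),
        ("grief and worry keep me awake", 0),
        ("i slept deeply and woke refreshed", 4),
        ("i feel rested after a full night", 4),
    ]
    for n in (1, 2):
        docs = [ngrams(text.split(), n) for text, _ in train]
        model = MultinomialNB().fit(docs, [y for _, y in train])
        print(n, model.predict_one(ngrams("loud snoring and gasping".split(), n)))
```

With unigrams the test sentence is assigned to label 2, whereas with bigrams none of its phrases occur in the training data, so only the (here equal) class priors remain. This is the sparsity effect that limits higher-order n-grams on short texts.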

2.4. The Bidirectional Encoder Representations from Transformers (BERT) Model

BERT is a deep learning model introduced by Google [30]. It employs the Transformer architecture to generate context-aware word embeddings. Unlike traditional left-to-right language models, it considers both left and right contexts simultaneously at every layer. This has revolutionized the NLP field, since it can capture deeper context and meaning from textual data. We used the ‘bert-base-uncased’ version, a 12-layer Transformer model pre-trained on lowercased English text from BookCorpus and English Wikipedia. The tokenizer splits raw input text into subword tokens and converts them into integer IDs and attention masks. The model itself returns hidden states that serve as rich contextual embeddings.

The model was switched to inference mode by calling the ‘.eval()’ method, which disables dropout layers so that the same input always yields the same embedding. This is standard practice when a pre-trained model is used as a frozen feature extractor for classification tasks. The ‘outputs.last_hidden_state’ embeddings were used as input to machine learning classifiers, making BERT a powerful backbone for NLP pipelines.

To extract high-quality, contextualized sentence representations using BERT, we utilize the [CLS] token embedding from the model’s final hidden layer. The get_bert_embedding(text) function tokenizes input text using the bert-base-uncased tokenizer, applies truncation and padding to handle variable-length sequences, and converts the data into PyTorch tensors. The pre-trained BERT model is then executed in inference mode using torch.no_grad() to efficiently compute hidden states without gradient tracking. From the resulting tensor outputs.last_hidden_state, which has shape [batch_size, sequence_length, hidden_size], we extract the embedding of the [CLS] token located at index 0. This 768-dimensional vector serves as a compact representation of the entire input sequence and is commonly used in downstream tasks such as sentence classification, clustering, or semantic similarity analysis. The function returns this vector as a NumPy array, making it directly usable in traditional machine learning pipelines or as input to further neural layers.

2.5. Word Embeddings

Next, a more advanced, semantically rich classifier was implemented to distinguish between the various types of sleep-related narratives. To achieve this, we employed a word embedding-based approach using GloVe vectors combined with Support Vector Machine (SVM) classification. The dataset was preprocessed in the same way as for the baseline model, and the same tokenization approach was applied to convert each entry into a sequence of lowercased tokens.

We then used pre-trained 50-dimensional GloVe word embeddings to represent each narrative in numerical (vector) form. For each sentence (document), we computed a document-level embedding by averaging the word vectors of the tokens present in the embedding vocabulary. Entries containing no valid tokens (i.e., none of their words were found in the embedding index) were excluded to ensure meaningful input representations.
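The document-level averaging can be sketched as below. load_glove parses GloVe's plain-text format (one word followed by its space-separated components per line), and document_embedding averages the in-vocabulary vectors, returning None for entries with no known token so that they can be dropped. Both names are illustrative, and the file name in the comment (glove.6B.50d.txt, the 50-dimensional file of the Stanford 6B release) is an assumption about which GloVe variant was used.

```python
def load_glove(lines):
    """Parse GloVe's text format: a word followed by its vector components."""
    index = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        index[parts[0]] = [float(x) for x in parts[1:]]
    return index


def document_embedding(tokens, index):
    """Mean of the vectors of in-vocabulary tokens, or None if there are none."""
    vectors = [index[t] for t in tokens if t in index]
    if not vectors:
        return None  # the entry is excluded from the feature matrix
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Typical use (file name assumed; `tokenized` and `labels` are hypothetical):
# with open("glove.6B.50d.txt", encoding="utf-8") as f:
#     index = load_glove(f)
# pairs = [(document_embedding(toks, index), y) for toks, y in zip(tokenized, labels)]
# pairs = [(x, y) for x, y in pairs if x is not None]  # keep labels aligned
```

Filtering the (embedding, label) pairs together, rather than the embeddings alone, keeps each excluded entry's label out of the training targets as well.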

The extracted features (document embeddings) were classified with a multi-class SVM. To optimize classification accuracy, we conducted a comprehensive hyperparameter search using scikit-learn's GridSearchCV, exploring various kernels (linear, rbf, poly, sigmoid), regularization values (C), kernel coefficients (gamma) and polynomial degrees. A 10-fold cross-validation strategy was used during this process to identify the configuration that achieved the highest average classification accuracy across folds.

After identifying the optimal parameter set, we retrained the SVM model using the best combination on the full training data and evaluated its performance on the sequestered test set. This evaluation provided an unbiased estimate of generalization performance, reported via standard metrics such as accuracy, precision, recall, and the confusion matrix. This embedding-based methodology enables efficient classification of complex sleep narratives and serves as a strong baseline for comparison with more computationally intensive deep learning models.

2.6. Machine Learning Algorithms Used with BERT

2.6.1. Support Vector Machines

We trained a Support Vector Machine (SVM) classifier using the extracted BERT embeddings. To optimize the classifier's performance, a comprehensive hyperparameter search was conducted using GridSearchCV from the scikit-learn library. The parameter grid included:

C (regularization parameter): [0.1, 0.4, 1, 2, 3, 4]

gamma (kernel coefficient): [0.001, 0.01, 0.1, 0.4, 1, 2, 3, 4, ‘scale’, ‘auto’]

kernel (kernel type): [‘linear’, ‘rbf’, ‘poly’, ‘sigmoid’]

degree (degree of the polynomial kernel): [2, 3, 4, 5, 6, 7, 8]

class_weight: [None, ‘balanced’]

We explored different values of the regularization parameter C, which controls the trade-off between maximizing the margin and minimizing the classification error. The kernel coefficient (gamma) is used by the ‘rbf’, ‘poly’ and ‘sigmoid’ kernels and determines how far the influence of each individual training instance extends. Smaller gamma values produce smoother decision boundaries, whereas larger values result in more complex decision boundaries and possibly overfitting. The next parameter to tune is the kernel function. We investigated four kernel types: 1) linear, 2) radial basis function, 3) polynomial and 4) sigmoid. These determine whether the classes are separated linearly or after an implicit projection into a higher-dimensional space (non-linear kernels). For the polynomial kernel, the degree parameter sets the polynomial order; higher degrees yield more complex decision boundaries. Another important SVM parameter is class_weight, which adjusts the weights of the different classes and is particularly useful under class imbalance. The None value treats all classes equally, whereas the ‘balanced’ value automatically sets the weights inversely proportional to class frequencies in the data. The optimal parameters are indicated in bold.

The grid search was performed using 10-fold cross-validation on the training set. This process systematically evaluated all parameter combinations to identify the model configuration that achieved the highest average classification accuracy. The resulting best-performing model was retrained on the full training set before final evaluation.
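Because a single parameter dictionary is expanded into its full Cartesian product, the grid listed above is costly. The standard-library sketch below counts the candidates: the full product has 6 × 10 × 4 × 7 × 2 = 3,360 combinations (33,600 fits under 10-fold cross-validation), whereas restricting gamma and degree to the kernels that actually use them leaves 1,092 combinations (10,920 fits). GridSearchCV also accepts a list of dictionaries as param_grid, which is one way to express the pruned search; whether the authors did so is not stated, so treat this as a suggestion rather than a description of their setup. All helper names are illustrative.

```python
from itertools import product

C = [0.1, 0.4, 1, 2, 3, 4]
GAMMA = [0.001, 0.01, 0.1, 0.4, 1, 2, 3, 4, "scale", "auto"]
KERNEL = ["linear", "rbf", "poly", "sigmoid"]
DEGREE = [2, 3, 4, 5, 6, 7, 8]
CLASS_WEIGHT = [None, "balanced"]


def full_grid():
    """All combinations, as one param_grid dict would be expanded."""
    return list(product(KERNEL, C, GAMMA, DEGREE, CLASS_WEIGHT))


def pruned_grid():
    """Only combinations whose parameters the kernel actually uses:
    'linear' ignores gamma and degree; 'rbf' and 'sigmoid' ignore degree."""
    combos = [("linear", c, None, None, cw) for c, cw in product(C, CLASS_WEIGHT)]
    for kernel in ("rbf", "sigmoid"):
        combos += [(kernel, c, g, None, cw) for c, g, cw in product(C, GAMMA, CLASS_WEIGHT)]
    combos += [("poly", c, g, d, cw) for c, g, d, cw in product(C, GAMMA, DEGREE, CLASS_WEIGHT)]
    return combos


# The pruned search written as a list of dicts, a form GridSearchCV accepts.
PRUNED_PARAM_GRID = [
    {"kernel": ["linear"], "C": C, "class_weight": CLASS_WEIGHT},
    {"kernel": ["rbf", "sigmoid"], "C": C, "gamma": GAMMA, "class_weight": CLASS_WEIGHT},
    {"kernel": ["poly"], "C": C, "gamma": GAMMA, "degree": DEGREE, "class_weight": CLASS_WEIGHT},
]


def n_candidates(param_grid):
    """Number of candidates produced by a list-of-dicts param_grid."""
    total = 0
    for grid in param_grid:
        size = 1
        for values in grid.values():
            size *= len(values)
        total += size
    return total


if __name__ == "__main__":
    folds = 10
    for name, grid in (("full", full_grid()), ("pruned", pruned_grid())):
        print(name, len(grid), "candidates,", len(grid) * folds, "fits")
```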

The trained model was evaluated on a hold-out test set, which comprised 20% of the original data. Performance was assessed using standard classification metrics including accuracy, precision, recall, and F1-score. Additionally, a confusion matrix was plotted to visualize the classifier's performance across different classes. All results were computed using scikit-learn’s evaluation utilities.
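The metrics named above all derive from the confusion matrix. The following standard-library sketch (function names are illustrative) computes per-class precision, recall and F1, together with accuracy and the macro-averaged F1 used when comparing models; undefined ratios are reported as 0.0. The toy example shows why both are worth reporting: a classifier that always predicts the majority class reaches 0.8 accuracy on a 2:8 split, but its macro F1 is only about 0.44 because the minority class is never recovered.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """cm[i][j] = number of samples of true class i predicted as class j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_metrics(cm):
    """(precision, recall, F1) per class; undefined ratios are reported as 0.0."""
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        predicted = sum(cm[i][k] for i in range(n))  # column total
        actual = sum(cm[k])                          # row total
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


def accuracy_and_macro_f1(cm):
    """Overall accuracy and the unweighted mean of the per-class F1 scores."""
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(len(cm))) / total
    f1s = [f1 for _, _, f1 in per_class_metrics(cm)]
    return accuracy, sum(f1s) / len(f1s)


if __name__ == "__main__":
    # Majority-class predictor on a 2:8 toy split
    y_true = [0, 0] + [1] * 8
    y_pred = [1] * 10
    cm = confusion_matrix(y_true, y_pred, 2)
    print(cm, accuracy_and_macro_f1(cm))
```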

2.6.2. Random Forest Classifier

To develop a robust classification model, we employed a Random Forest classifier with hyperparameter tuning via GridSearchCV. The feature set consisted of BERT-derived embeddings extracted from preprocessed text, and the corresponding labels were retained from the original dataset. A parameter grid was defined to explore a range of configurations, including the number of trees in the forest (n_estimators from 5 to 50 in steps of 5), the maximum depth of each tree (max_depth from 5 to 30 in steps of 5, plus None for unconstrained growth), the minimum number of samples required to split a node (min_samples_split: [2, 5, 7, 10]) and the minimum number of samples required at a leaf node (min_samples_leaf: [1, 2, 4]). Both bootstrap sampling strategies (True, False) were also evaluated. A 10-fold cross-validation was applied within the grid search to ensure robust model selection based on classification accuracy. The grid search was executed in parallel using all CPU cores (n_jobs=-1). The optimal parameter set was {'bootstrap': False, 'max_depth': None, 'min_samples_leaf': 2, 'min_samples_split': 2, 'n_estimators': 30}, and the best-performing model was evaluated on a held-out test set. The final model's predictive performance was assessed using standard classification metrics and visualized through a confusion matrix to understand its performance across all classes.
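The Random Forest search space described above can be written as a param_grid dictionary for GridSearchCV, whose keys are RandomForestClassifier parameter names. The small helpers below (illustrative, standard library only) show its size, 10 × 7 × 4 × 3 × 2 = 1,680 candidate configurations or 16,800 model fits under 10-fold cross-validation, and confirm that the reported optimum lies inside the grid.

```python
from itertools import product

# Search space described above, in GridSearchCV's param_grid format.
param_grid = {
    "n_estimators": list(range(5, 51, 5)),        # 5, 10, ..., 50
    "max_depth": list(range(5, 31, 5)) + [None],  # 5, ..., 30 or unconstrained
    "min_samples_split": [2, 5, 7, 10],
    "min_samples_leaf": [1, 2, 4],
    "bootstrap": [True, False],
}


def n_candidates(grid):
    """Size of the Cartesian product a grid search would evaluate."""
    total = 1
    for values in grid.values():
        total *= len(values)
    return total


def iter_candidates(grid):
    """Yield each candidate configuration as a parameter dict."""
    keys = list(grid)
    for combo in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, combo))


reported_best = {"bootstrap": False, "max_depth": None, "min_samples_leaf": 2,
                 "min_samples_split": 2, "n_estimators": 30}
```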

2.6.3. XGBoost Classifier

Finally, we employed an XGBoost classifier trained on BERT-generated sentence embeddings. The data were partitioned into training and test sets using an 80/20 split and converted into XGBoost's optimized DMatrix format. We applied a structured three-stage grid search to identify the best hyperparameter configuration. In Stage 1, tree structure parameters (max_depth and min_child_weight) were tuned using 5-fold cross-validation with early stopping based on multi-class log loss; the optimal values were max_depth = 3 and min_child_weight = 5. In Stage 2, we refined the model by adjusting regularization (reg_alpha, reg_lambda) and sampling parameters (subsample, colsample_bytree); the optimal values obtained in this stage were subsample = 0.7, colsample_bytree = 0.7, reg_alpha = 0.5 and reg_lambda = 1.5. Finally, in Stage 3, we explored various learning rates (learning_rate) to balance convergence speed and generalization. At each stage, the configuration yielding the lowest average log loss on the validation folds was selected. The final model was trained on the full training set using the best parameters and the optimal number of boosting rounds. Performance was evaluated on the test set using a classification report, a confusion matrix and learning curves, providing a comprehensive view of the model's accuracy and calibration across classes. The final set of tuned parameters is: {'objective': 'multi:softprob', 'num_class': 5, 'eval_metric': 'mlogloss', 'seed': 42, 'max_depth': 3, 'min_child_weight': 5, 'subsample': 0.7, 'colsample_bytree': 0.7, 'reg_alpha': 0.5, 'reg_lambda': 1.5, 'learning_rate': 0.05}.
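The three-stage procedure is a coordinate-wise grid search: each stage tunes one group of parameters while the winners of earlier stages stay fixed. A library-agnostic sketch follows. The score callable is an assumption standing in for the cross-validated loss; with XGBoost it could, for example, return the final 'test-mlogloss-mean' value of the table produced by xgboost.cv(params, dtrain, nfold=5, early_stopping_rounds=...). The candidate values in the example are hypothetical, since the text reports only the selected values; the starting values are XGBoost's defaults, and the toy loss is a quadratic bowl centred on the reported optimum.

```python
from itertools import product


def staged_search(stages, base_params, score):
    """Coordinate-wise grid search over groups of hyperparameters.

    stages      : list of dicts, each mapping parameter name -> candidate values
    base_params : starting configuration (untuned values are carried through)
    score       : callable(params) -> loss to minimise, e.g. mean CV log loss
    """
    best = dict(base_params)
    for grid in stages:
        keys = list(grid)
        best_loss, best_combo = float("inf"), None
        for combo in product(*(grid[k] for k in keys)):
            # Candidate = winners of earlier stages + this stage's trial values
            candidate = {**best, **dict(zip(keys, combo))}
            loss = score(candidate)
            if loss < best_loss:
                best_loss, best_combo = loss, combo
        best.update(zip(keys, best_combo))  # freeze this stage's winners
    return best


if __name__ == "__main__":
    # Hypothetical candidate values; only the winners are reported in the text.
    stages = [
        {"max_depth": [3, 5, 7], "min_child_weight": [1, 3, 5]},
        {"subsample": [0.7, 0.8, 1.0], "colsample_bytree": [0.7, 0.8, 1.0],
         "reg_alpha": [0, 0.5, 1], "reg_lambda": [1, 1.5, 2]},
        {"learning_rate": [0.01, 0.05, 0.1]},
    ]
    base = {"objective": "multi:softprob", "num_class": 5, "max_depth": 6,
            "min_child_weight": 1, "subsample": 1.0, "colsample_bytree": 1.0,
            "reg_alpha": 0, "reg_lambda": 1, "learning_rate": 0.3}
    target = {"max_depth": 3, "min_child_weight": 5, "subsample": 0.7,
              "colsample_bytree": 0.7, "reg_alpha": 0.5, "reg_lambda": 1.5,
              "learning_rate": 0.05}

    def toy_loss(p):
        # Stand-in for cross-validated log loss: minimal at `target`.
        return sum((p[k] - v) ** 2 for k, v in target.items())

    print(staged_search(stages, base, toy_loss))
```

The design trades exhaustiveness for cost: with the hypothetical grids above, the staged search evaluates 9 + 81 + 3 = 93 configurations instead of the 9 × 81 × 3 = 2,187 of a joint search, at the risk of missing interactions between parameter groups.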

4. Discussion

The current study introduces sleepCare, an automated Natural Language Processing (NLP) pipeline designed for the classification of sleep-related textual data. The sleepCare approach systematically integrates NLP methodologies, including n-gram models, pre-trained GloVe word embeddings and contextual BERT embeddings, with machine learning algorithms such as Multinomial Naive Bayes, Support Vector Machines, Random Forests and XGBoost classifiers. Drawing from best practices in modern text classification pipelines, the system incorporates essential phases such as text preprocessing, feature extraction, hyperparameter tuning and a structured model evaluation process. By transforming unstructured and highly variable text into structured, high-quality representations, sleepCare establishes a reliable framework for automating complex text categorization tasks [31].

The sleepCare pipeline currently employs traditional machine learning algorithms such as 1) Support Vector Machines, 2) Random Forests and 3) XGBoost. These models were selected according to the following criteria: 1) they are among the most frequently used in NLP pipelines, and 2) they allow straightforward hyperparameter tuning to optimize performance. As the dataset grows, more elaborate neural (e.g., recurrent) network architectures will be investigated.

sleepCare can be regarded as a digital health application that enables the automated analysis of sleep-related textual reports such as sleep diaries, questionnaires and patient descriptions [32]. The proposed pipeline may have important applications in remote sleep medicine by providing early alerts for the most common sleep disorders [33] in underserved or rural areas where access to specialized sleep centers and clinicians is limited [34]. Thus, sleepCare may strengthen the arsenal of digital health tools: it could support preliminary screening, flag high-risk individuals for further clinical evaluation, and ultimately facilitate earlier intervention and improved management of sleep health, contributing to reduced morbidity and enhanced quality of life in populations with traditionally limited access to sleep medicine services [35].

The efficacy of sleepCare should be further validated in a pilot phase using real-world data. The study design should include elderly participants from rural and urban settings who would be randomly assigned either to a group interacting with the sleepCare system or to a gold-standard clinical pathway, such as access to sleep clinics equipped with polysomnographic sensors and sleep experts. Potential outcome measures include the accuracy of both approaches, ease of use, and user satisfaction and adherence. Beyond these dependent variables, the classification accuracy of sleepCare should be further validated against sensor-based and questionnaire data. Therefore, the future study design should also investigate whether the system's outputs are significantly correlated with the Pittsburgh Sleep Quality Index (PSQI) [36] or with the SmartHypnos mobile application [21].

In addition to developing the sleepCare classification pipeline, the present study contributed by constructing and publicly releasing a novel dataset of sleep-related narratives [29]. The dataset was curated following the guidelines and terminologies proposed by leading sleep organizations, including the National Sleep Foundation (NSF) [24], the American Academy of Sleep Medicine (AASM) [25], the Centers for Disease Control and Prevention (CDC) [26], the National Institutes of Health (NIH) [27], and the World Sleep Society [28]. Each narrative was carefully labeled to reflect specific symptomatology across major etiological categories of sleep disturbance, namely stress-related disorders, neurodegeneration-related sleep problems, breathing-related disorders, poor sleep hygiene, and descriptions of normal or improved sleep. By explicitly identifying probable underlying causes within naturalistic sleep narratives, the dataset addresses a critical gap in existing resources, which often overlook the nuanced expression of symptom patterns. Moreover, by following official clinical guidelines and explicitly labeling symptomatology, the construction of the sleepCare dataset advances broader explainability standards emphasized in the AI research community [37]. It ensures that the features and categories used for model training are interpretable, clinically meaningful, and transparent, addressing key concerns about trustworthiness and reproducibility in health-related natural language processing. The open availability of this dataset fosters scientific transparency, enables reproducibility, and encourages collaboration across multidisciplinary teams aiming to advance sleep disorder screening, digital sleep health interventions, and personalized sleep medicine research. It lays the groundwork for future innovations in NLP-driven sleep diagnostics, particularly in remote, aging, or underserved populations where traditional assessments are less accessible.

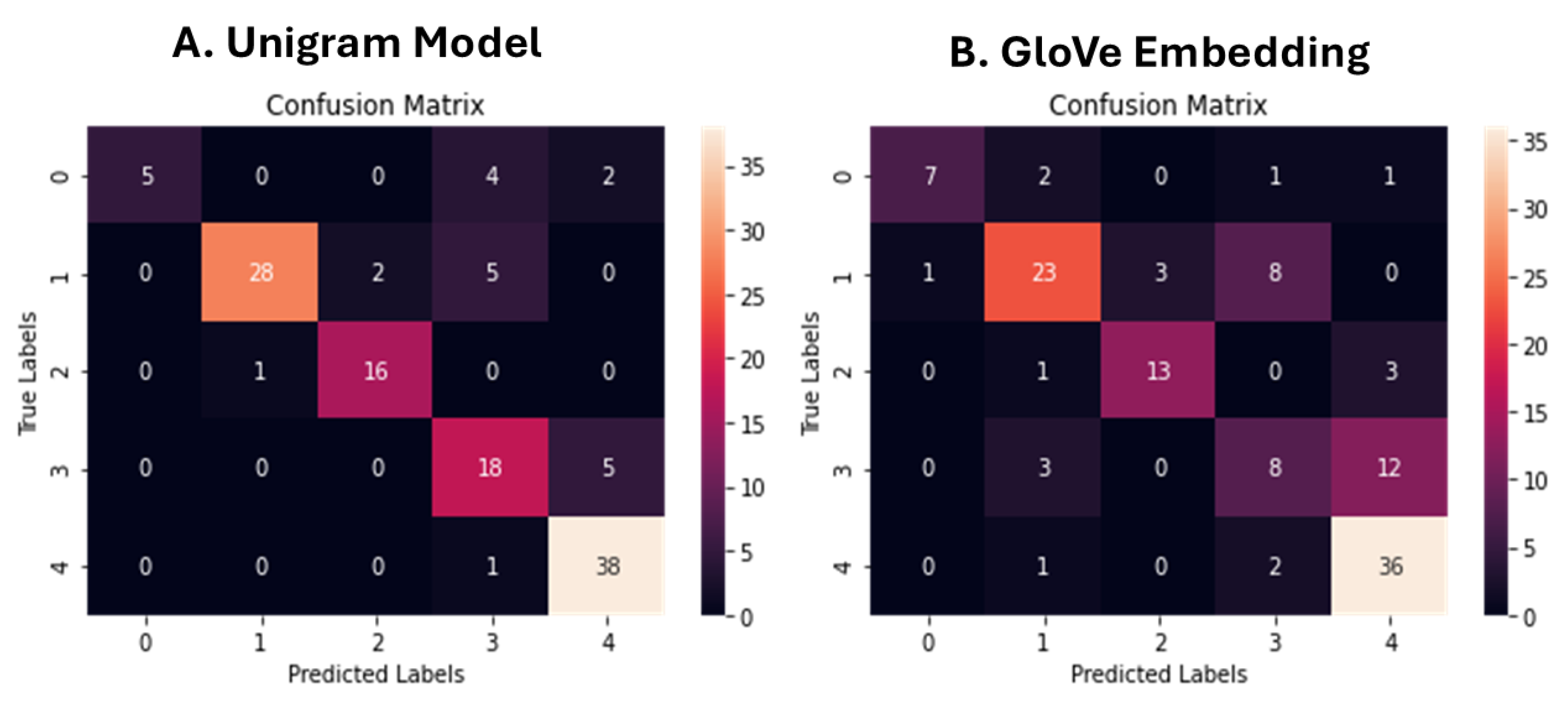

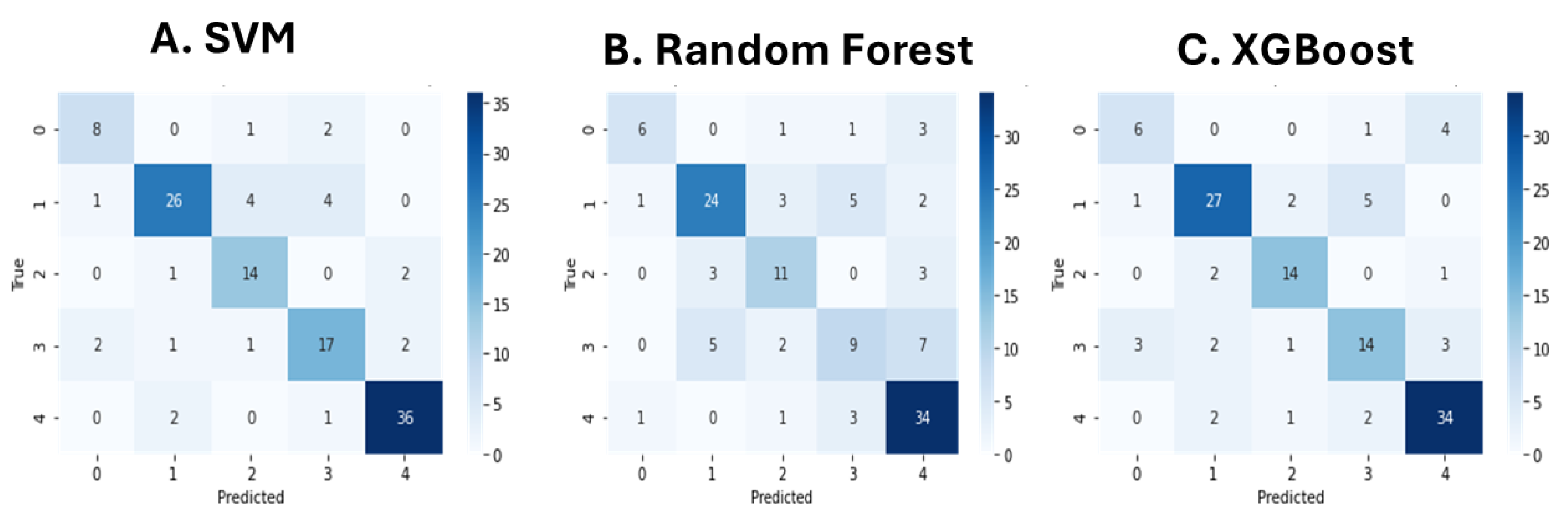

A comparative analysis was conducted across five different modeling approaches to assess the effectiveness of the sleepCare pipeline in classifying sleep-related narratives. Among all models, the Unigram-based Naive Bayes classifier achieved the highest overall accuracy (0.84) and macro-averaged F1-score (0.80), highlighting the strong predictive power of simple word frequency features for structured narrative datasets. However, further inspection of the class-wise precision and recall metrics revealed that its performance was disproportionately skewed toward majority classes, with poor recall for minority categories. In contrast, the BERT-based Support Vector Machine model achieved an accuracy of 0.81 and a macro-averaged F1-score of 0.78, while demonstrating more balanced performance across all classes. Notably, the SVM model incorporated a class weighting strategy during hyperparameter tuning (class_weight='balanced'), which improved its sensitivity to less represented sleep disorder categories and helped mitigate the effects of data imbalance. The BERT-based XGBoost model also showed competitive performance, achieving an accuracy of 0.76 and a macro F1-score of 0.73, benefiting from its ensemble structure and advanced regularization. In contrast, the GloVe-based SVM model and the BERT-based Random Forest model achieved lower performance levels, with accuracies of 0.70 and 0.67, respectively. Overall, the comparative analysis underscores not only the flexibility of the sleepCare framework to accommodate different feature extraction and classification strategies, but also the critical importance of addressing class imbalance to enhance the robustness and fairness of sleep disorder classification models.

The superior performance of the Unigram-based Naive Bayes model compared to higher-order n-gram models can be attributed to the nature of the sleep narrative texts, which are relatively short and structured, making individual word-level features highly informative [38]. As the n-gram size increases, feature sparsity becomes more pronounced, leading to poorer generalization due to the rarity of longer phrases within a moderate-sized dataset [39]. Similarly, the GloVe-based SVM model performed worse than the Unigram model, likely because averaged GloVe embeddings, while capturing semantic similarities, may dilute fine-grained distinctions between closely related sleep symptoms, which are critical for accurate classification [40]. In contrast, the BERT-based models leveraged deep contextual embeddings that preserved subtle linguistic cues within the narratives, which helped the BERT-based SVM achieve the most balanced performance across classes [30]. Among the BERT-based classifiers, the Support Vector Machine model outperformed the Random Forest and XGBoost classifiers, possibly due to its ability to handle high-dimensional representations effectively [41,42]. SVMs are known to perform well when the number of features is large relative to the number of samples, as is the case here: each BERT embedding has 768 dimensions, while the training set contains roughly 500 samples. Additionally, the incorporation of class weighting in the SVM tuning process further enhanced its robustness across minority classes, while ensemble-based methods like Random Forest and XGBoost may have struggled with overfitting or bias toward majority classes despite their flexibility.

While the sleepCare pipeline demonstrates strong potential for classifying sleep-related narratives, several limitations must be acknowledged. First, the size of the curated dataset, although carefully constructed, remains relatively modest, potentially limiting the generalizability of the models to broader populations and more diverse linguistic styles [43]. Second, the current system relies on supervised learning, requiring labeled data for training, which may not scale easily without additional annotation efforts [44]. Furthermore, while the sleepCare pipeline addresses class imbalance to some extent through weighting strategies, more advanced techniques such as synthetic data generation or adaptive sampling could be explored to further enhance performance, especially in minority classes [22,45]. Another limitation is that the classification is based solely on textual input without integration of multimodal sleep-related data (e.g., actigraphy, polysomnography summaries), which could enrich context and improve diagnostic accuracy [46,47]. Future work will focus on expanding the dataset to include a wider range of sleep disorder narratives, exploring semi-supervised or self-supervised learning approaches to reduce labeling costs [44], and integrating multimodal features to support more comprehensive and explainable models [46]. Additionally, deployment in real-world rural and remote healthcare environments, accompanied by usability and feasibility studies, will be essential to validate the practical impact of the sleepCare framework [48,49].

The development of the sleepCare pipeline marks the first step toward establishing a broader roadmap for integrating natural language processing technologies into sleep medicine. By systematically categorizing free-text sleep narratives, the approach creates a structured foundation for building intelligent systems capable of assisting clinical decision-making. Future iterations of sleepCare will evolve beyond static multi-class classification to support predictive modeling, risk stratification, and symptom progression tracking, enabling earlier and more personalized interventions. A key direction involves the development of a conversational agent or chatbot that provides personalized recommendations, screens for sleep disorders, and delivers continuous 24/7 support—especially valuable in rural or underserved areas. To support this, sleepCare will adopt a closed-loop feedback system, allowing it to learn dynamically from user inputs, clinician feedback, and misclassification patterns. This feedback-driven architecture will ensure that model updates are informed by real-world performance, improving accuracy, equity, and robustness over time. Future enhancements will also include expanding the dataset with diverse, lived-experience narratives, integrating semi-supervised learning to reduce annotation needs, and fusing multimodal data (e.g., wearables, mobile apps) for richer context. As sleepCare matures, it will facilitate automatic triaging, identify high-risk individuals, and support referral prioritization. Ultimately, its ability to translate subjective narratives into clinically meaningful outputs positions sleepCare as a scalable and explainable decision-support tool, complementing traditional diagnostics and advancing the digital transformation of sleep healthcare.

To sum up, the sleepCare pipeline establishes a scalable and adaptable foundation for applying natural language processing to sleep health. By combining methodological rigor with a focus on accessibility, transparency, and clinical relevance, this work represents a significant step toward more intelligent, equitable, and patient-centered sleep medicine. Future developments will aim to expand the system’s capabilities, enhance real-world deployment, and further integrate personalized support tools into digital health ecosystems.