Submitted: 23 May 2025

Posted: 28 May 2025


Abstract

Keywords:

1. Introduction

1.1. Background

1.2. Research Motivation

1.3. Comparison of Different Reasoning Techniques

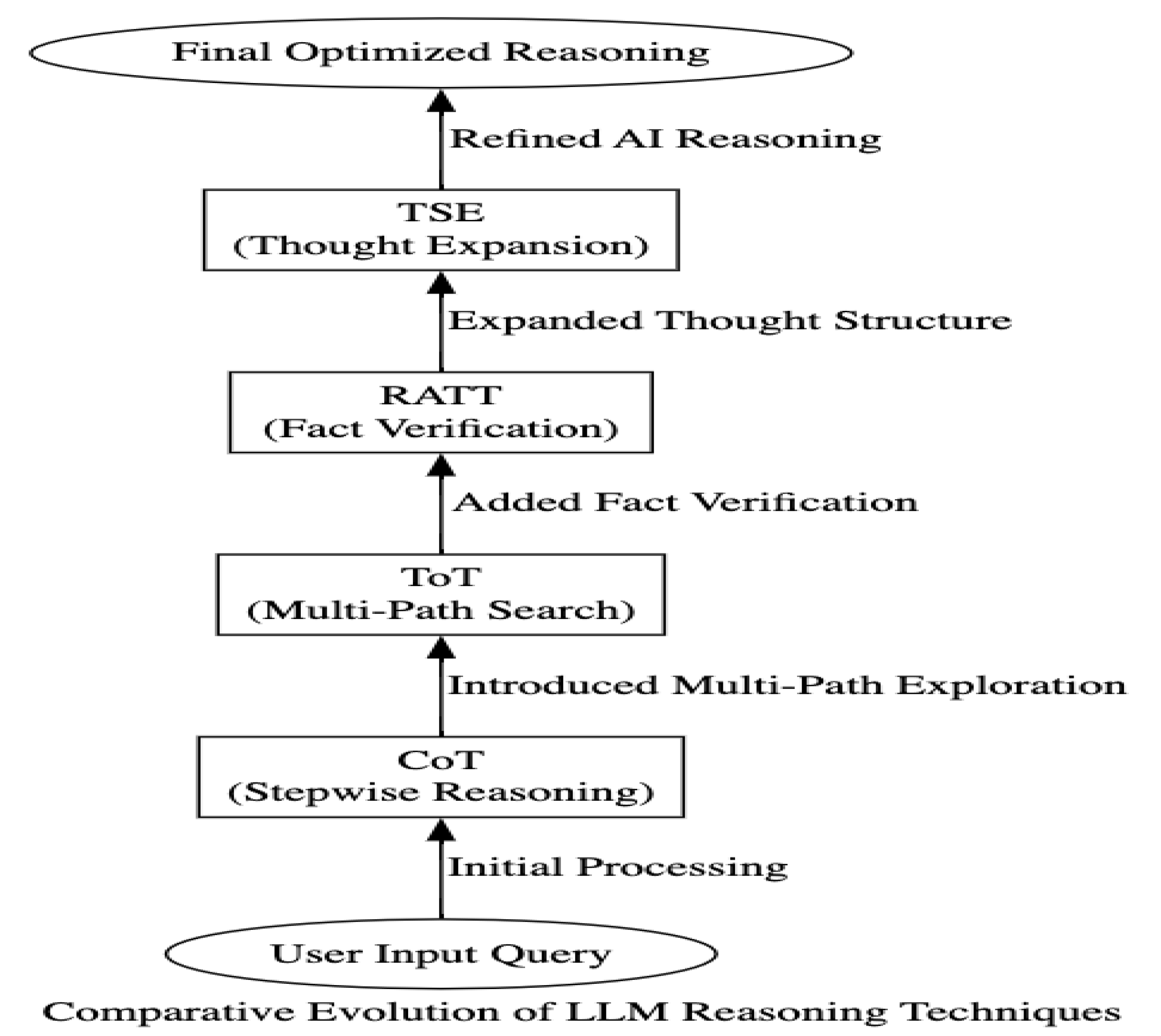

1.4. How These Methods Evolved Over Time

2. Methodology

2.1. How These Methods (Metrics, Evaluation Strategies) Are Comparable

2.2. Technical Aspects of LLM Reasoning Models

-

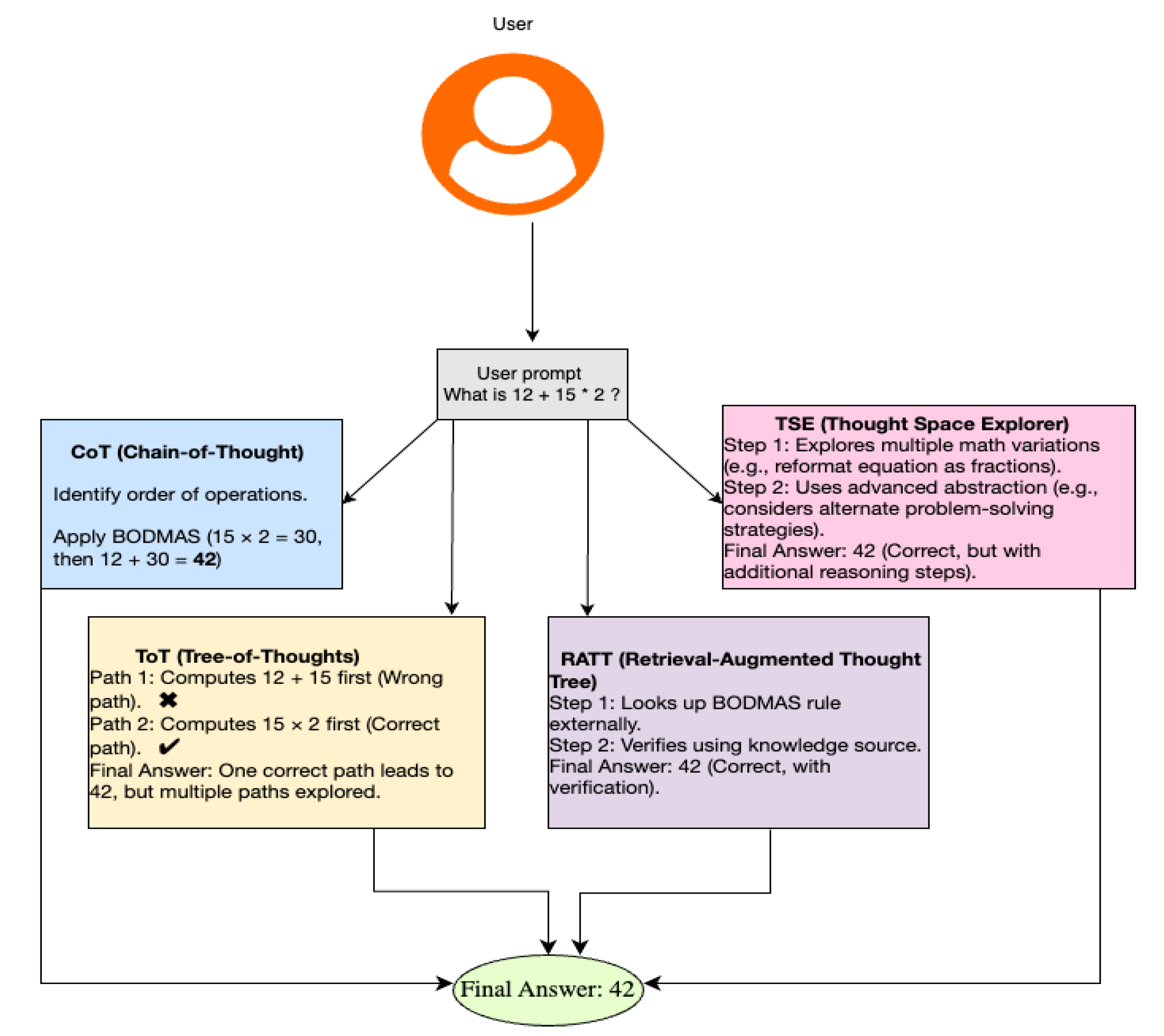

Transformer-Based Self-Attention and Contextual Reasoning:Transformer architecture with multi-head self-attention allows most modern LLMs including GPT-4, PaLM, and LLaMA to encode word associations across long spans. This method helps LLMs to dynamically access relevant information even when it lacks a natural logical foundation [1,2]. Techniques including Chain-of Thought (CoT) using this self-attention capacity help to promote interpretability and coherence by carefully arranging responses into consecutive chains of reasoning.

- Multi-Step Thought Generation and Search Algorithms: Going beyond CoT, Tree-of-Thought (ToT) and Thought Space Explorer (TSE) incorporate search algorithms (BFS, DFS, heuristic optimization), offering structured decision-making frameworks. These methods build hierarchical reasoning paths that let LLMs backtrack and refine their answers. Exploring several paths increases logical depth and adaptability, but also computational cost [5,8].

- Integration of External Knowledge & Reinforcement Learning: To mitigate hallucinations, Retrieval-Augmented Thought Trees (RATT) incorporate retrieval-based verification, improving the factual consistency of reasoning outputs. Prototypical Reward Models (Proto-RM) also improve Reinforcement Learning from Human Feedback (RLHF) by making reward learning more data-efficient, which helps align LLM-generated reasoning with human preferences [6,7].
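To make the self-attention mechanism described above concrete, the following is a minimal single-head sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, as introduced in [1]. It is written in plain Python for readability rather than speed; the toy inputs, identity weight matrices, and function names are illustrative assumptions. Real models learn these weights and run many heads in parallel, concatenating their outputs.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the row maximum for numerical stability before exponentiating.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention over token vectors x.

    Returns the attended outputs and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))  # sqrt(d_k)
    # Row i holds token i's similarity to every token in the sequence.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / scale for s in row]) for row in scores]
    # Each output row is an attention-weighted mix of the value vectors.
    return matmul(weights, v), weights


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 2-d token vectors
    identity = [[1.0, 0.0], [0.0, 1.0]]
    out, attn = self_attention(tokens, identity, identity, identity)
    for row in attn:
        print([round(w, 3) for w in row])
```

Each row of attention weights sums to 1, so every output vector is a convex combination of the value vectors; if all input tokens are identical, every token attends uniformly to all of them.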
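Reward models for RLHF are commonly trained on pairwise human preferences with a Bradley-Terry objective, -log(sigmoid(r(chosen) - r(rejected))). The sketch below shows only this standard pairwise objective, using a hypothetical linear reward over hand-made feature vectors; it does not implement Proto-RM's prototypical network [7], which targets making this kind of reward learning more data-efficient.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def reward(w: Vector, features: Vector) -> float:
    """Hypothetical linear reward model: r(x) = w . phi(x)."""
    return sum(wi * fi for wi, fi in zip(w, features))


def pairwise_loss(w: Vector, chosen: Vector, rejected: Vector) -> float:
    """Bradley-Terry loss: -log(sigmoid(r(chosen) - r(rejected)))."""
    margin = reward(w, chosen) - reward(w, rejected)
    # -log(sigmoid(m)) == log(1 + exp(-m)); adequate for the small margins used here.
    return math.log1p(math.exp(-margin))


def train(
    pairs: List[Tuple[Vector, Vector]], dim: int, lr: float = 0.5, epochs: int = 100
) -> List[float]:
    """Fit w by plain gradient descent on the pairwise loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # dLoss/dMargin = -sigmoid(-margin) = -1 / (1 + exp(margin))
            step = lr / (1.0 + math.exp(margin))
            for i in range(dim):
                w[i] += step * (chosen[i] - rejected[i])
    return w


# Hypothetical features: [helpfulness, factual errors] for preferred vs rejected answers.
preferences = [([1.0, 0.0], [0.0, 1.0]), ([0.8, 0.1], [0.2, 0.9])]
weights = train(preferences, dim=2)
print(weights)
```

Training pushes the weight on the preferred feature up and the weight on the dispreferred feature down, so the learned reward ranks each chosen response above its rejected counterpart.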

3. Discussion

3.1. Key Observations Across the 8 Papers

- Scaling Alone Does Not Guarantee Better Reasoning: Early studies, especially on GPT-1 and GPT-2, showed that increasing model size substantially improves fluency and generalization [2,3]. However, results on their zero-shot abilities show that complex reasoning and logical consistency remain difficult even for larger models. This underlines the need for explicit reasoning frameworks, such as Chain-of-Thought (CoT) [4] and Tree-of-Thought (ToT), to strengthen multi-step logical reasoning.

- Structured decision making becomes clearer when moving from CoT's linear, step-by-step reasoning to ToT's multi-path exploration. While CoT improves reasoning on arithmetic and logical problems, ToT enables more flexible problem solving through multiple search strategies and backtracking. However, neither approach includes a validation process, so models can proceed confidently along incorrect reasoning paths [4,5].

- Fact Verification and Retrieval Enhance Model Reliability: LLMs often produce plausible but false assertions, and retrieval combined with fact verification makes them more dependable. By integrating external information retrieval into the reasoning process, the Retrieval-Augmented Thought Tree (RATT) structure directly addresses this problem and substantially improves factual accuracy. This suggests that future LLMs should combine dynamic retrieval with structured reasoning to balance knowledge retention and logical coherence [6,8].

- Exploration-Based Methods Improve Cognitive Flexibility: TSE emphasizes exploration over the optimization of existing reasoning paths, departing from conventional approaches. While ToT refines existing thought trees, TSE actively searches for new ideas, enabling LLMs to move beyond cognitive blind spots. This suggests that future AI systems should flexibly explore new ideas in addition to optimizing established reasoning paths [8].

- Human feedback and reinforcement learning play a central role in AI alignment. Although advances in reasoning are crucial, ensuring that LLM outputs satisfy ethical standards and human preferences remains a major challenge. Proto-RM (Prototypical Reward Model) improves the data efficiency of reward learning, thereby strengthening Reinforcement Learning from Human Feedback (RLHF). This approach helps ensure that the model produces reasoning that is coherent and consistent with human expectations [7].
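The breadth-first variant of ToT search [5] can be sketched as level-by-level expansion in which only the most promising partial solutions are kept at each depth. In the sketch below, the LLM-based thought generator and state evaluator are replaced by hypothetical deterministic stand-ins (`propose`, `make_scorer`) on a toy arithmetic puzzle, so only the search skeleton is shown; a depth-first variant would add explicit backtracking from low-scoring states.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, operations applied so far)


def propose(state: State) -> List[State]:
    """Hypothetical thought generator; in ToT this would be an LLM call."""
    value, steps = state
    return [
        (value + 3, steps + ("+3",)),
        (value * 2, steps + ("*2",)),
        (value - 1, steps + ("-1",)),
    ]


def make_scorer(target: int) -> Callable[[State], int]:
    """Hypothetical state evaluator: states closer to the target score higher."""
    return lambda state: -abs(target - state[0])


def tot_bfs(start: int, target: int, max_depth: int = 6, beam: int = 3) -> Optional[Tuple[str, ...]]:
    """Breadth-first search over thoughts, keeping the `beam` best states per level."""
    score = make_scorer(target)
    frontier: List[State] = [(start, ())]
    for _ in range(max_depth):
        # Expand every kept state into its candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        for value, steps in candidates:
            if value == target:
                return steps
        # Prune: only the most promising thoughts survive to the next level.
        frontier = sorted(candidates, key=score, reverse=True)[:beam]
    return None


print(tot_bfs(1, 8))  # prints ('+3', '*2')
```

The `beam` parameter trades breadth for cost: a larger value explores more alternatives per level but multiplies the number of generator and evaluator calls.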
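The idea of checking each reasoning step against retrieved knowledge, as RATT does [6], can be illustrated with a deliberately simplified sketch. Here the knowledge base is a hypothetical in-memory dictionary and reasoning steps are hypothetical (subject, relation, object) triples; RATT itself integrates retrieval into thought-tree construction with an LLM, which this sketch does not attempt.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)

# Hypothetical external knowledge source standing in for a real retriever.
KNOWLEDGE: Dict[Tuple[str, str], str] = {
    ("Paris", "capital_of"): "France",
    ("water", "boiling_point_celsius_at_sea_level"): "100",
}


def retrieve(subject: str, relation: str) -> Optional[str]:
    """Look up a fact; returns None when the knowledge base has no entry."""
    return KNOWLEDGE.get((subject, relation))


def verify_chain(steps: List[Triple]) -> Tuple[List[Triple], List[int]]:
    """Check each reasoning step against retrieved facts before continuing.

    Contradicted steps are replaced by the retrieved fact and their indices
    are reported; steps that agree with, or cannot be checked against, the
    knowledge base are kept unchanged.
    """
    corrected: List[Triple] = []
    flagged: List[int] = []
    for i, (subject, relation, obj) in enumerate(steps):
        fact = retrieve(subject, relation)
        if fact is not None and fact != obj:
            flagged.append(i)
            corrected.append((subject, relation, fact))
        else:
            corrected.append((subject, relation, obj))
    return corrected, flagged


chain = [("Paris", "capital_of", "Germany"), ("Moon", "made_of", "rock")]
print(verify_chain(chain))
```

A practical system would also have to handle retrieval that is itself wrong, outdated, or ambiguous, which this sketch ignores.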

3.2. Challenges in LLM Reasoning & AI Alignment

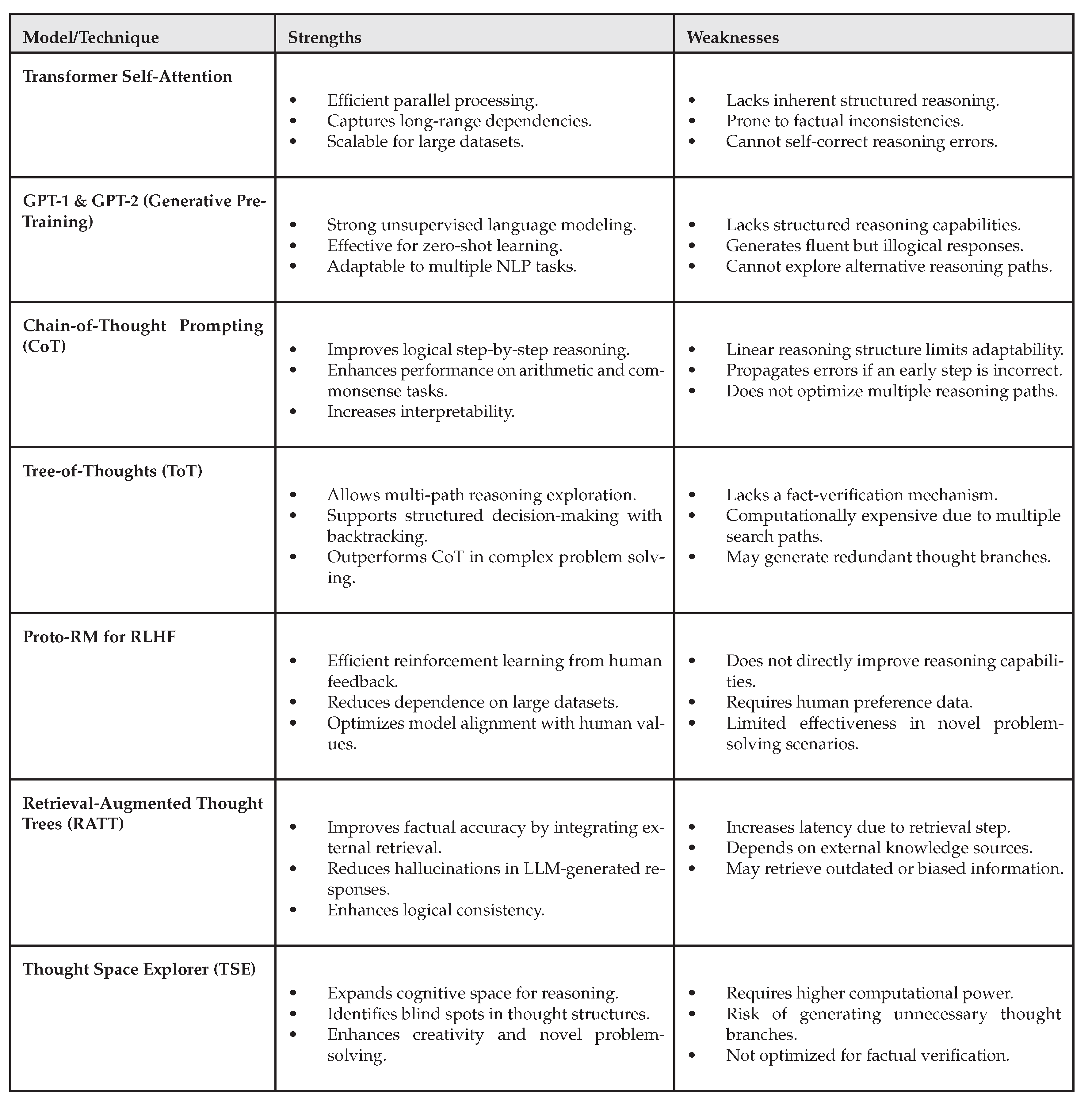

3.3. Strengths and Weaknesses of Different Models


4. Future Work and Open Challenges

4.1. Unanswered Questions in LLM Reasoning

4.2. Potential Research Directions

- Scalability: Efficient Reasoning Architectures. Although modern structured reasoning approaches such as Tree-of-Thought (ToT) and Thought Space Explorer (TSE) considerably improve multi-step problem solving, their extensive search paths incur substantial computational costs. Future research could explore hybrid models that balance structured reasoning with computational efficiency, for example through memory-efficient architectures, adaptive search algorithms, or pruning procedures. Developing lightweight LLMs with strong reasoning capacity will be crucial for applications that require real-time decision making [5,6].

- Interpretability: Transparent and Explainable Reasoning. Although Retrieval-Augmented Thought Trees (RATT) and Chain-of-Thought (CoT) represent progress, LLMs remain essentially black-box systems, which makes it difficult to understand how they reason. Future research should prioritize explainable AI (XAI) approaches, such as self-explanatory models, explicit reasoning traces, and visualization tools that allow users to verify AI-generated conclusions [6,8].

- Bias Mitigation: Ethical AI Development. LLMs trained on large, diverse datasets inherit the biases present in human communication. Research should develop bias-detection and debiasing methods, for example using adversarial training and reinforcement learning from human feedback (RLHF), to promote fairness and neutrality in AI-generated reasoning.
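The processing cost of search-based reasoning grows quickly with depth, which is why pruning matters for scalability. As a rough worked example, assume a fixed branching factor b, a depth d, and one LLM call per generated thought (evaluation calls ignored): exhaustive expansion generates `b + b^2 + ... + b^d` thoughts, whereas keeping only the k best states per level generates at most `b + (d - 1) * k * b`. The sketch below computes both counts; the parameter values are illustrative and not taken from the cited papers.

```python
def full_tree_nodes(branching: int, depth: int) -> int:
    """Thoughts generated by exhaustive expansion: b + b^2 + ... + b^d."""
    return sum(branching ** level for level in range(1, depth + 1))


def beam_nodes(branching: int, depth: int, beam: int) -> int:
    """Thoughts generated when only the `beam` best states are expanded per level."""
    total, frontier = 0, 1  # begin from a single root state
    for _ in range(depth):
        generated = frontier * branching
        total += generated
        frontier = min(beam, generated)  # pruning caps the next frontier
    return total


for b, d, k in [(3, 6, 3), (5, 4, 2)]:
    print(f"b={b}, d={d}: exhaustive={full_tree_nodes(b, d)}, pruned (k={k})={beam_nodes(b, d, k)}")
```

For b = 3 and d = 6, exhaustive expansion generates 1,092 thoughts, while keeping the best 3 per level generates 48: exponential growth becomes linear in depth, at the risk of discarding a path that would later have succeeded.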

5. Conclusions

5.1. Final Summary

5.2. What’s Next for LLM Reasoning?

References

1. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

2. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving language understanding by generative pre-training. OpenAI Blog 2018, 1.

3. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I.; et al. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

4. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D.; et al. Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems 2022, 35, 24824–24837.

5. Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; Narasimhan, K. Tree of thoughts: Deliberate problem solving with large language models. Advances in Neural Information Processing Systems 2023, 36, 11809–11822.

6. Zhang, J.; Wang, X.; Ren, W.; Jiang, L.; Wang, D.; Liu, K. RATT: A Thought Structure for Coherent and Correct LLM Reasoning. arXiv preprint arXiv:2406.02746 2024.

7. Zhang, J.; Wang, X.; Jin, Y.; Chen, C.; Zhang, X.; Liu, K. Prototypical reward network for data-efficient RLHF. arXiv preprint arXiv:2406.06606 2024.

8. Zhang, J.; Mo, F.; Wang, X.; Liu, K. Thought Space Explorer: Navigating and Expanding Thought Space for Large Language Model Reasoning. 2024 IEEE International Conference on Big Data (BigData) 2024, pp. 8259–8251.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).