1. Introduction

Drones have become essential for multiple fields, including disaster response, industrial inspection, and autonomous delivery systems [

1,

2,

3]. Their versatility has led to widespread adoption in both commercial and research applications, but traditional drone operation requires precise manual control, often demanding significant training and experience [

4]. This challenge is particularly evident in cluttered or dynamic environments where human pilots must react quickly to avoid obstacles [

5]. To enhance accessibility and usability, researchers have explored alternative control methods such as gesture-based interfaces, brain-computer interaction, and voice-controlled navigation [

6,

7]. While these technologies have been studied independently, integrating them with other features into a seamless, user-friendly system remains an ongoing challenge.

Gesture-based control provides a natural and intuitive alternative to traditional remote controls. It leverages computer vision techniques to interpret human hand movements [

8]. Google’s MediaPipe Hands, one of the most widely used hand-tracking frameworks, detects 21 key landmarks of a hand. These landmark positions distinguish between different gestures [

9]. Prior research has demonstrated the effectiveness of gesture-based interfaces in robotic control, virtual reality, assistive technology, and human-robot interaction [

10,

11,

12]. However, their application in drone piloting—particularly when combined with autonomous safety mechanisms—remains underexplored. Some studies have attempted to integrate gesture recognition with drone control, but they often suffer from high latency, gesture misclassification with differing hand sizes, lighting issues, or limited adaptability in dynamic environments [

13].

Autonomous obstacle avoidance, another key component of drone navigation, has been successfully implemented using infrared time-of-flight sensors, LiDAR, and deep-learning-based object detection [

14,

15]. These systems enable drones to detect and avoid obstacles, significantly reducing the risk of collisions in unknown environments [

16]. However, many autonomous systems override user control entirely when avoiding obstacles, reducing pilot interaction and flexibility [

17]. This raises important questions about how to balance human input with autonomous safety mechanisms to provide a smooth and responsive user experience.

This study proposes a multifunctional drone control system that integrates hand-gesture recognition, autonomous obstacle avoidance, and 3D mapping using Bitcraze’s Crazyflie drone to create an intuitive and beginner-friendly piloting experience [

18]. The system ensures safe and interactive flight, allowing users to control drones with hand gestures. At[14,15 the same time, an autonomous safety feature prevents collisions by detecting obstacles within 0.35 meters and maneuvering the drone 0.2 meters away. A 3D mapping system visualizes the flight path and detected obstacles, and an AI-Deck streams live video, enabling navigation beyond direct line-of-sight. Unlike prior research, which often focuses on one aspect of drone control, this system bridges the gap between human interaction and autonomous safety, allowing for greater user engagement while maintaining obstacle avoidance [

19,

20].

By integrating gesture-based control with autonomous safety mechanisms and 3D mapping, this research advances human-drone interaction in a novel and practical way. Unlike many existing systems that treat gesture recognition and obstacle avoidance as separate functionalities, this work combines them into a seamless control framework that allows intuitive interaction while maintaining spatial awareness and autonomous safety overrides. This multifaceted integration addresses critical challenges in making drones accessible to beginners, including reducing reliance on traditional controllers and minimizing the risk of collisions during flight. The findings contribute to the fields of robotic control, autonomous navigation, and interactive machine learning, offering a scalable and user-friendly solution for applications in education, training, and real-world navigation. Furthermore, this study highlights persistent limitations in gesture recognition accuracy, command latency, and system usability, paving the way for future work in improving the strength and responsiveness of human-centered drone interfaces. A recent overview of the unmanned aerial vehicle (UAV) field emphasizes the growing need for multimodal and adaptive drone interfaces, underscoring the relevance and timeliness of this research [

21].

2. Materials and Methods

2.1. Integrated System Architecture and Workflow

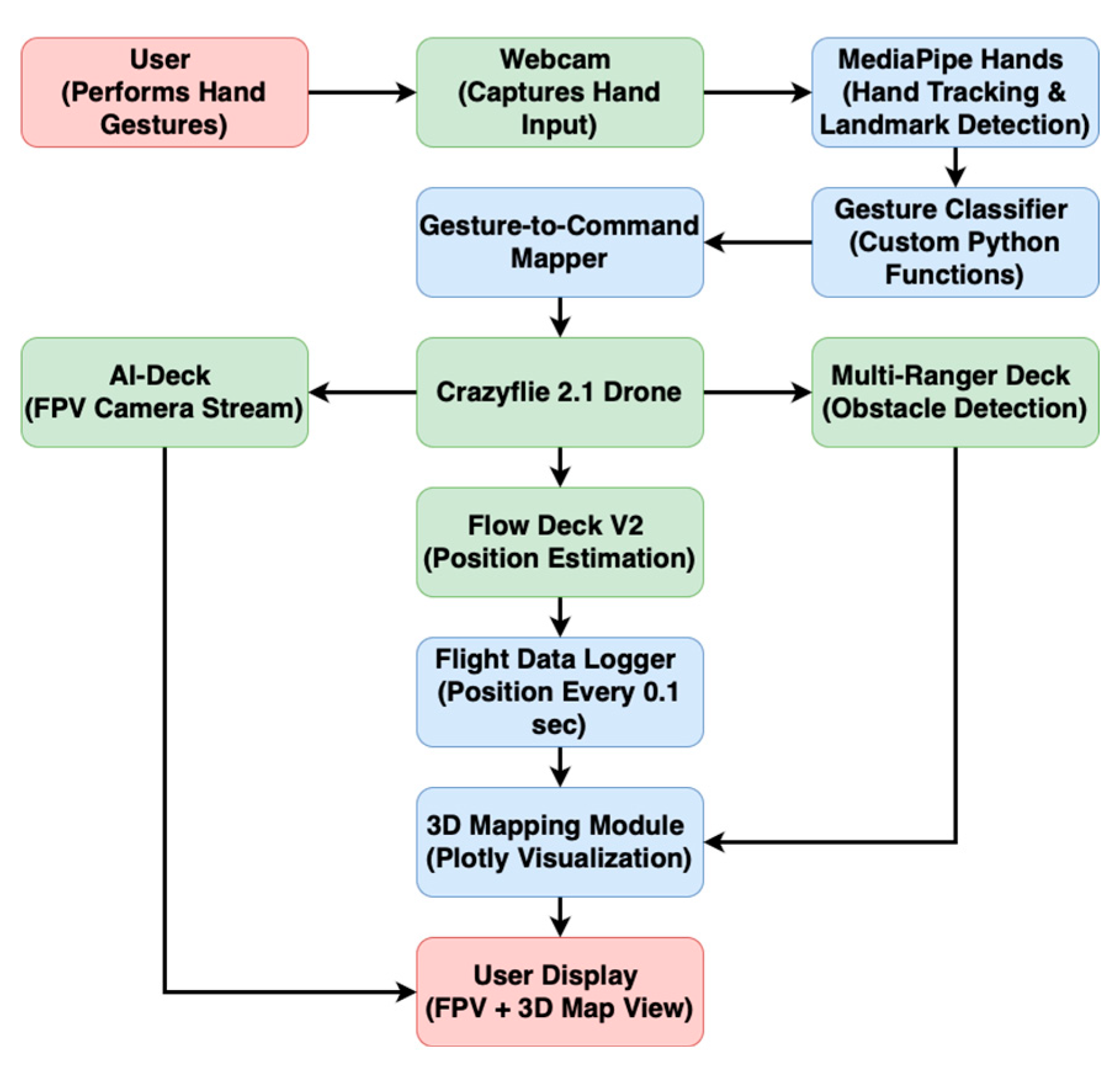

The architecture of the proposed drone control system integrates multiple hardware and software components to enable intuitive, gesture-based drone navigation with obstacle avoidance and 3D mapping. The user initiates control by performing predefined hand gestures in front of a webcam. These gestures are captured and processed using Google’s MediaPipe Hands framework. The landmark data is analyzed using custom Python classification functions that determine the type of gesture being shown. Using a command-mapping module, each recognized gesture is mapped to a specific flight command.

The mapped flight commands are wirelessly transmitted to the Bitcraze Crazyflie 2.1 drone via the Crazyflie Python library. Once airborne, the drone operates with three key expansion decks: the Multi-Ranger Deck for obstacle detection, the Flow Deck V2 for position estimation, and the AI-Deck for First-Person View (FPV) video streaming. The Multi-Ranger Deck uses infrared sensors to monitor proximity to objects in the front, back, left, and right directions.

Meanwhile, the Flow Deck V2 provides position data through a Kalman filter, which is logged every 0.1 seconds by a flight data logger. These coordinates generate a 3D map of the drone’s path using Python’s Plotly visualization library. Obstacle encounters are also marked on the map as red rectangular prisms. The AI-Deck streams live grayscale or color video to the user’s screen using socket communication, enabling remote navigation beyond the user’s direct line of sight. The FPV video feed and the interactive 3D map are displayed to the user, offering system feedback. This modular system functions in parallel, creating a responsive platform for safe, beginner-friendly drone piloting and for advanced mission-based piloting. This workflow and architecture can be visualized in

Figure 1 below.

2.2. Hardware and Software Components

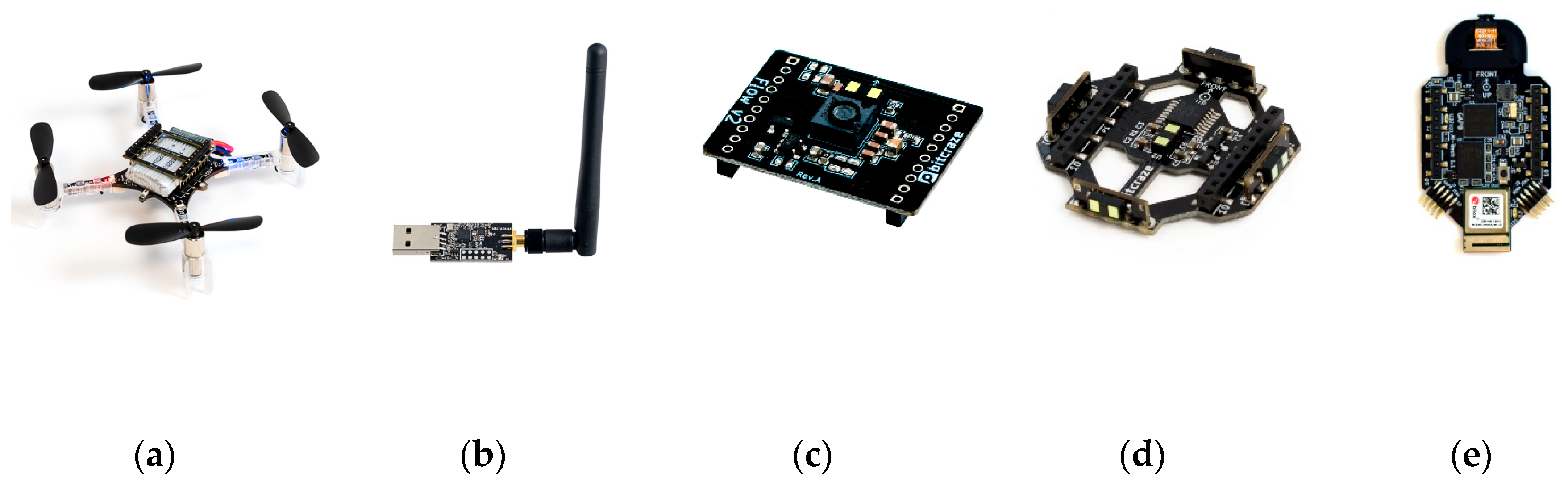

The Bitcraze Crazyflie 2.1 drone served as the experimental platform, selected for its compact size, modular design, and expandability. Several expansion decks and components were integrated to enhance its sensing and flight capabilities:

Crazyflie 2.1 Drone: Figure 2a illustrates the main aerial platform for this research. It is a lightweight, open-source nano quadcopter developed by Bitcraze, equipped with an STM32F405 microcontroller, built-in IMU, and expansion deck support via an easy-to-use pin header system [

22]. Its modular design allows seamless integration of multiple decks for advanced sensing and control functionalities.

Crazyradio PA:Figure 2b is a 2.4 GHz radio dongle used to establish wireless communication between the host computer and the Crazyflie 2.1 drone [

23]. The PA (Power Amplifier) version enhances signal strength and communication reliability, particularly in environments with interference or extended range requirements.

Flow Deck V2:Figure 2c provides optical flow-based position estimation and altitude measurement using a downward-facing time-of-flight (ToF) sensor and an optical flow camera [

24]. This enables the drone to maintain stable flight without external positioning systems.

Multi-Ranger Deck:Figure 2d utilizes infrared ToF distance sensors to detect obstacles in five directions—front, back, left, and right [

25]. This facilitates obstacle avoidance by continuously monitoring the drone’s surroundings.

AI-Deck 1.1:Figure 2e features a camera module for video streaming, allowing users to navigate the drone beyond their direct line of sight [

26]. The AI-Deck includes a GAP8 RISC-V multi-core processor, enabling onboard image processing and low-latency streaming to a ground station. A Linux system is required for setting up and flashing the AI-Deck, as the software tools provided by Bitcraze, such as the GAP8 SDK, are optimized for Linux-based environments.

Gesture-based control was implemented using Google’s MediaPipe Hands, a computer vision framework capable of tracking 21 key landmarks on a user’s hand. The gesture recognition software was implemented in Python, processing webcam input to identify predefined gestures and mapping them to corresponding flight control commands for the drone. The system was executed in Visual Studio Code on a laptop running Windows 11 with an Intel Core i9 processor, 64 GB of RAM, and an integrated webcam, which provided sufficient performance for processing and drone communication. The system leveraged Bitcraze’s Crazyflie Python library for wireless communication, ensuring seamless execution of gesture-based commands.

2.3. Code Implementation and System Logic

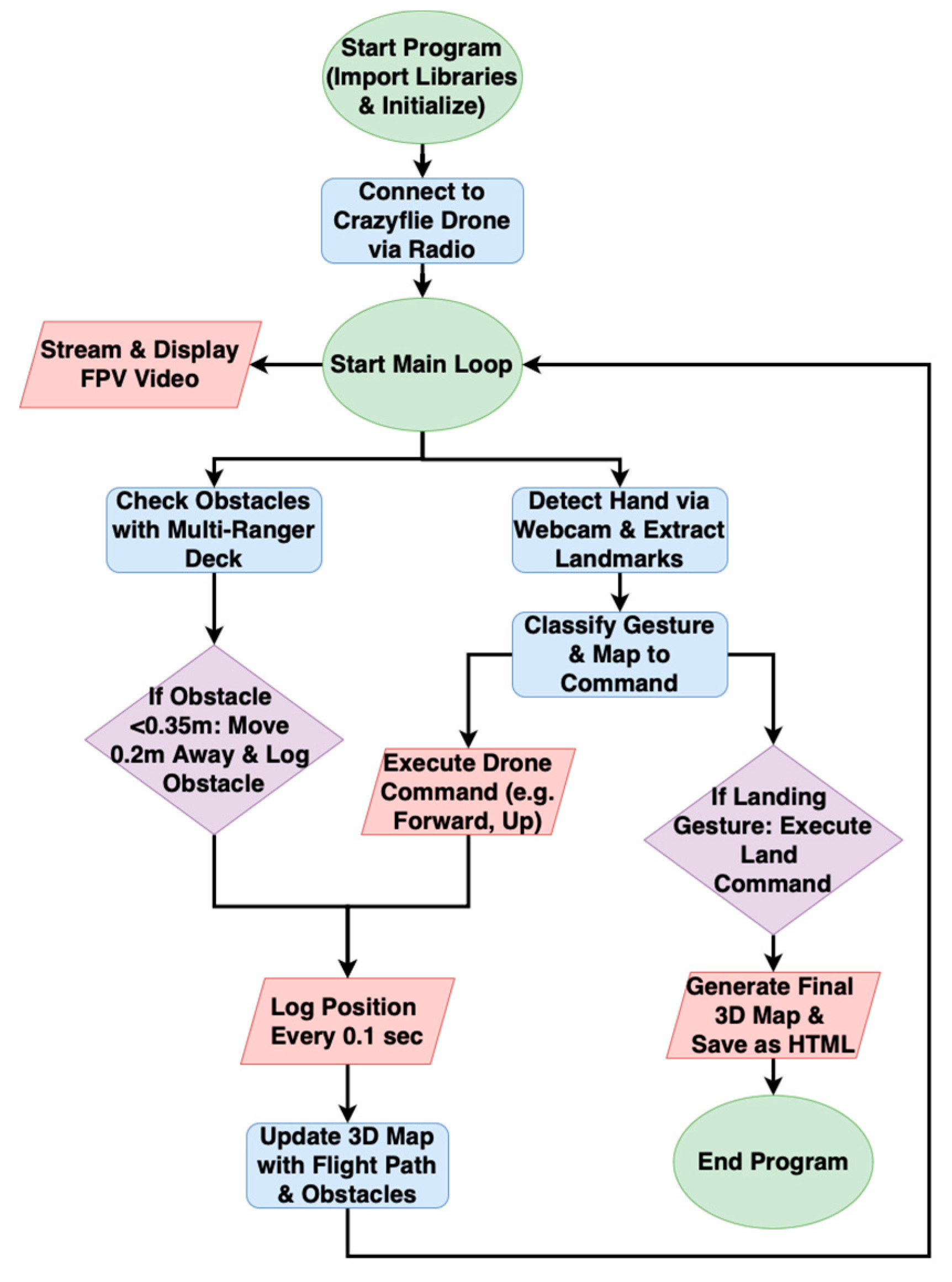

The full drone control system was implemented in Python, integrating hand-gesture recognition, autonomous obstacle avoidance, 3D mapping, and AI-Deck video streaming. Upon startup, all necessary software libraries are imported, and the system establishes a wireless radio connection to the Crazyflie 2.1 drone. A socket connection is simultaneously opened to the AI-Deck for FPV streaming. Google’s MediaPipe Hands framework is initialized to process webcam input and track 21 hand landmarks, which are later used for gesture classification. In parallel, the drone’s position is logged using the Kalman filter output from the Flow Deck V2, and a blank 3D map is initialized using Plotly to visualize flight data and obstacle encounters.

Once the system is fully initialized, the main loop begins and handles multiple tasks concurrently. The AI-Deck continuously streams grayscale or color video, which is decoded and displayed in a live feed using OpenCV, enabling remote FPV-based navigation. Simultaneously, the Multi-Ranger Deck monitors the proximity of obstacles in the front, back, left, and right. If an object is detected within 0.35 meters in any direction, the drone overrides gesture commands and automatically moves 0.2 meters away from the object to avoid collision. The location of each obstacle encounter is recorded and visualized as a red rectangular prism on the 3D map.

Meanwhile, MediaPipe Hands detects the user’s hand in the webcam frame and calculates geometric angles between finger joints and distances between landmark pairs. These features are passed into custom classification functions that recognize specific gestures such as “point,” “thumb up,” or “closed fist.” Each gesture is mapped to a unique drone command. For example, a “point” gesture triggers forward motion, “hand open” results in vertical ascent, and “thumb up” moves the drone laterally to the left. The drone executes each command in small increments (typically 0.05 meters) to ensure precision and responsiveness. When the landing gesture is detected, the drone initiates a landing sequence and stops logging data. At that point, the full 3D map is saved as an interactive HTML file, and flight coordinates are exported as a CSV dataset.

Throughout the flight, x, y, and z positions are recorded every 0.1 seconds using data from the Flow Deck. These positions are plotted and dynamically adjust the axis range of the 3D map. The AI-deck’s FPV stream provides additional spatial awareness for navigating beyond the user’s direct line of sight. These modules function in parallel, creating a responsive, beginner-friendly system that allows intuitive control while maintaining autonomous safety and environmental visualization. This system is visualized in

Figure 3 below.

2.4. Hand-Gesture Recognition and Control

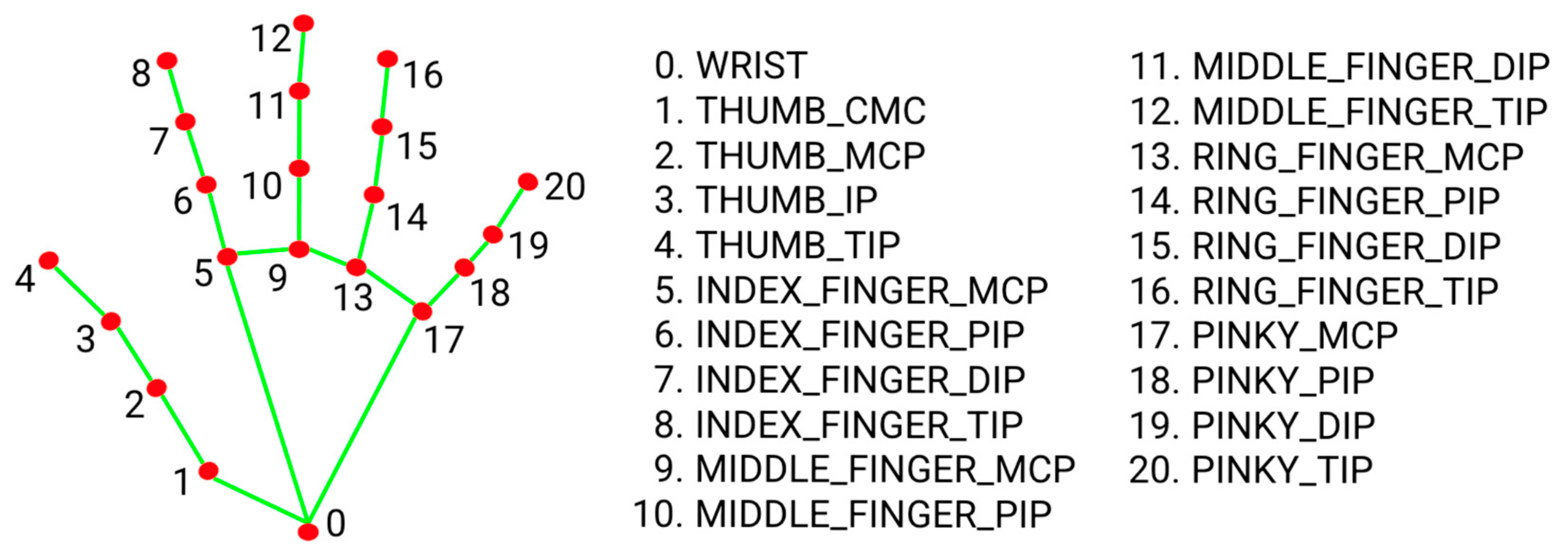

Gesture recognition in this study was implemented using Google’s MediaPipe Hands, an open-source framework that leverages machine learning to detect and track 21 key hand landmarks using a single RGB webcam, as illustrated in

Figure 4. Each landmark corresponds to specific joints and tips of the fingers and wrist. Once detected, these landmark coordinates are used to classify predefined hand gestures by calculating joint angles and Euclidean distances between specific landmark pairs.

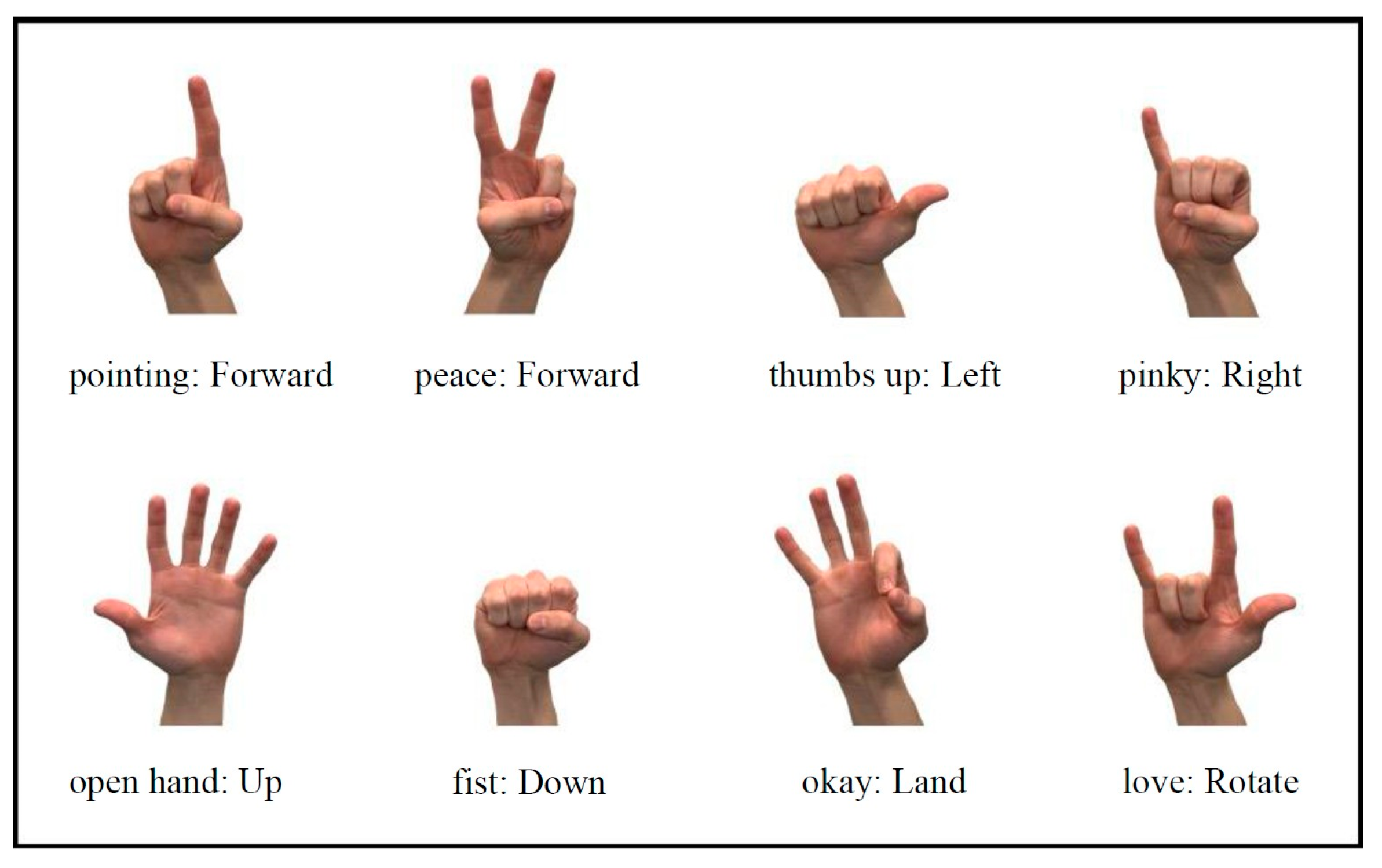

The system evaluates angles at key finger joints by applying geometric calculations to three-point sequences. For example, to detect if a finger is extended or bent, the angles formed at the Carpometacarpal (CMC), metacarpophalangeal (MCP), proximal interphalangeal (PIP), and distal interphalangeal (DIP) joints are measured. An extended finger typically shows an angle greater than 120°, whereas a bent finger produces a significantly smaller angle (usually <100°). In addition to angles, distances between landmark points (e.g., between the thumb tip and the base of the index finger) are used to determine the spread of the hand or proximity of fingers, further enhancing gesture classification. To control the drones using the different hand gestures, furthermore, we select eight common gestures in this paper, including “pointing”, “peace”, “thumbs up”, “pinky”, “open hand”, “fist”, “okay”, and “love”, to indicate the corresponding drone commands, as described below and demonstrated in

Figure 5.

A “pointing” gesture moves the drone forward.

A “peace” gesture moves the drone backward.

A “thumbs up” gesture moves the drone to the left.

A “pinky” gesture moves the drone to the right.

An “open hand” gesture triggers the drone to ascend (up).

A “fist” gesture triggers the drone to descend (down).

An “okay” gesture triggers the drone to land.

A “love” gesture triggers the drone to rotate.

First, the system detects

hand landmarks per frame and each landmark is represented by

in 2D image coordinates:

where “

” indicates the transpose. Then, a gesture in a frame can be represented by a

matrix

, which is the raw data for the gestures.

To classify hand gestures into the eight classes, such that

as shown in

Figure 5, in the next step, the system extracts two types of features from the matrix

, including the distance feature

and the angle feature

where

. Specifically, the distance features are defined by the Euclidean distances between landmark pairs

and

, such that

In addition, the joint angles features are defined using the cosine rule for 3 consecutive landmarks,

,`

, and

, such that

Finally, considering the system effectiveness and efficiency, we implemented this hand gesture classification only based a subset of ten features, such that

, where

,

,

,

,

,

,

,

,

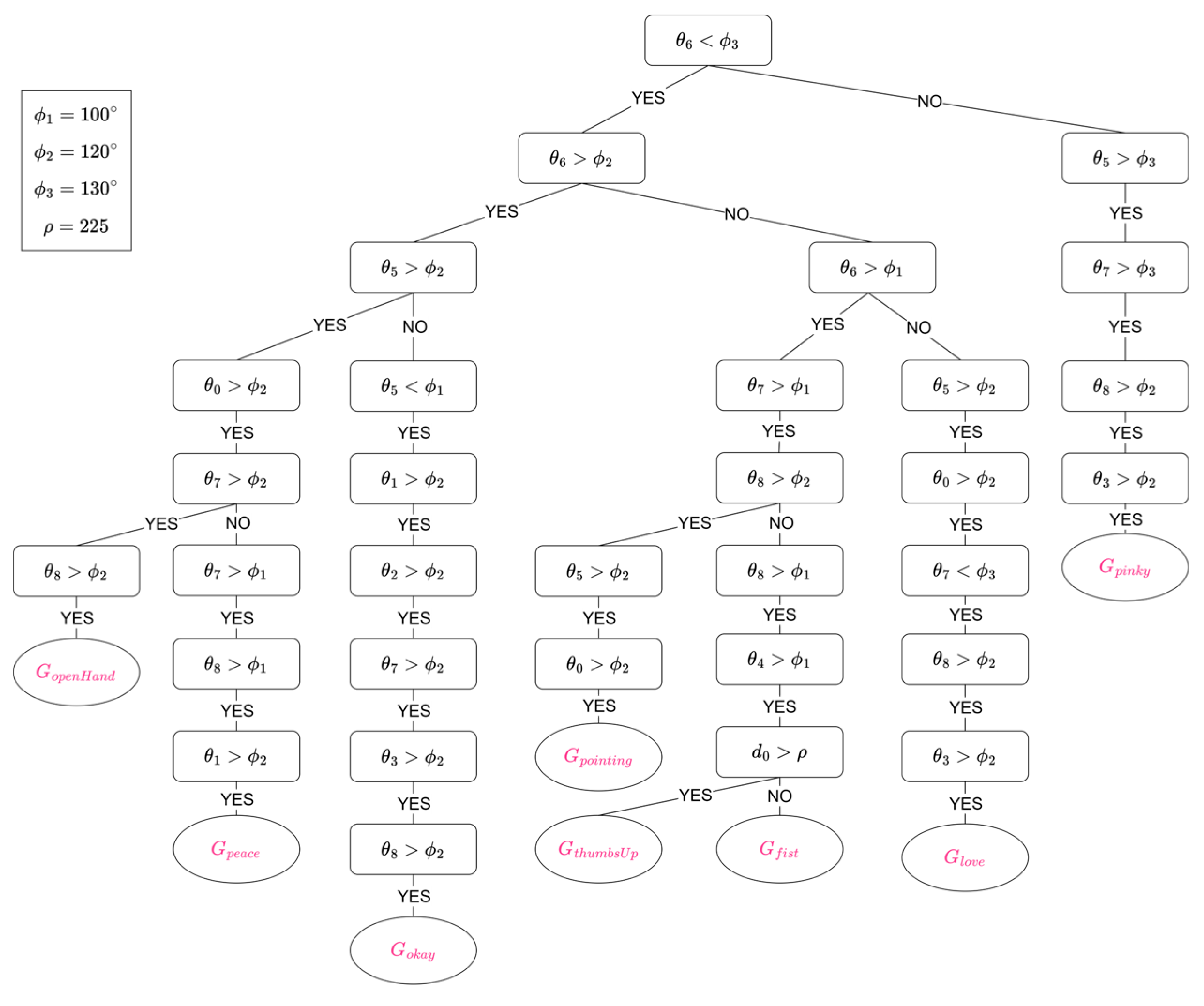

. Specifically, the gesture classification can be determined by using the following Boolean combinations of these features,

where the determined gesture class

and

indicates any unspecified hand gestures. This classification can also be implemented using a decision tree, as demonstrated in

Figure 6. It is noteworthy that in the decision-tree diagram, the unspecified hand gesture

is ignored.

Once a gesture was classified, it was mapped to a corresponding drone flight command through a gesture-command control function implemented in Python. Using the Crazyflie Python API and the MotionCommander interface, each recognized gesture triggered a predefined flight behavior. For instance, when the system detected an open hand gesture,

, the drone executed a vertical ascent using the

mc.up() command [

9]. This mapping system allowed for smooth, real-time gesture-to-command execution and was designed to be modular, enabling additional gestures and flight behaviors to be added with minimal modification to the underlying code.

These mappings enabled the drone to be controlled solely through hand gestures, offering an intuitive and accessible user experience. The system processed webcam input frame-by-frame, continuously checking for changes in gesture state and executing flight commands accordingly. This approach eliminated the need for physical controllers and reduced the learning curve for beginner users.

2.5. Obstacle Avoidance System

The obstacle avoidance system utilized sensor data from the Multi-Ranger Deck to detect obstacles in the front, back, left, and right directions. Unlike autonomous navigation systems that follow a predetermined path, this drone only moves in response to user hand gestures. While executing a commanded movement, the drone continuously scans its surroundings.

Let the drone be situated in a 3D space with its local coordinate system aligned such that the front direction corresponds to the positive x-axis and left to the positive y-axis. To assess the proximity of obstacles, the system defines directional obstacle distances by indicating the measured distances to obstacles in the front, back, left, and right directions.

These distances are obtained using infrared time-of-flight (ToF) sensors on the Multi-Ranger Deck, which actively measure the shortest line-of-sight distance between the drone and any nearby surface in the specified directions. To ensure safe navigation, the obstacle avoidance algorithm uses a fixed safety threshold,

meters. When any measured distance

falls below this threshold, the system initiates an automatic avoidance maneuver, moving it by

meters in the opposite direction to maintain a buffer from the obstacle.

These avoidance movements are issued using the Bitcraze Python API’s Motion Commander interface, allowing precise, small-scale control of the Crazyflie drone’s trajectory. This approach results in safe navigation while maintaining user control.

2.6. 3D Mapping and Flight Data Logging

A 3D mapping system was implemented to visualize the drone’s flight path and detected obstacles. The system recorded the drone’s x, y, and z coordinates at 0.1-second intervals, logging its movement throughout the flight. Obstacle detection data from the Multi-Ranger Deck was incorporated into the map, with obstacles represented as rectangular prisms positioned 0.35 meters from the detection point.

The collected flight data was processed and visualized using Python’s Plotly library, generating an interactive 3D representation of the environment. The resulting visualization provided a clear depiction of the drone’s trajectory and mapped obstacles, aiding in post-flight analysis and system evaluation.

2.7. Experimental Setup and Testing Procedure

Experiments were conducted in a controlled indoor environment to evaluate the performance of the integrated system, focusing on gesture recognition, obstacle avoidance, and 3D mapping accuracy. The testing procedure consisted of three primary evaluations:

Hand-Gesture Control Test: Users performed predefined hand gestures in front of a webcam to verify accurate classification and execution of drone commands. Each gesture was tested multiple times to assess recognition accuracy and command responsiveness.

Obstacle Avoidance Test: The drone was commanded to move forward while obstacles were placed in randomized positions along its path. The system’s ability to detect and avoid obstacles within the 0.35-meter threshold was analyzed, recording the reliability of avoidance maneuvers and any unintended collisions.

3D Mapping Validation: To assess mapping accuracy, the recorded flight path and obstacle locations were compared to real-world measurements. Errors in obstacle placement and discrepancies in flight path representation were quantified.

Each test was repeated multiple times to ensure consistency.

2.8. Data Availability

The Python code used for gesture recognition, obstacle avoidance, 3D mapping, AI-Deck streaming, and supporting resources will be publicly available in a GitHub repository upon publication. The repository will also include images of the generated 3D maps and a YouTube video demonstrating the system’s functionality.

3. Results

3.1. Obstacle Avoidance Performance

3.1.1. Obstacle Detection and Response

The obstacle avoidance system was designed to prevent collisions by integrating the Multi-Ranger Deck, which utilizes time-of-flight (ToF) sensors to detect objects in the drone’s surroundings. When an obstacle is detected within 0.35 meters, the drone automatically moves 0.2 meters in the opposite direction to maintain a safe distance. These values were selected to ensure the drone reacts promptly without excessive movement, striking a balance between responsiveness and stability.

The Multi-Ranger Deck can measure distance up to 4 meters within a few millimeters, as provided by Bitcraze [

28]. This level of accuracy allowed for consistent and reliable obstacle detection throughout testing, as proved by

Table 1. Also, the avoidance parameters were implemented as adjustable variables in the code, enabling customization based on the user’s specific requirements or environmental constraints.

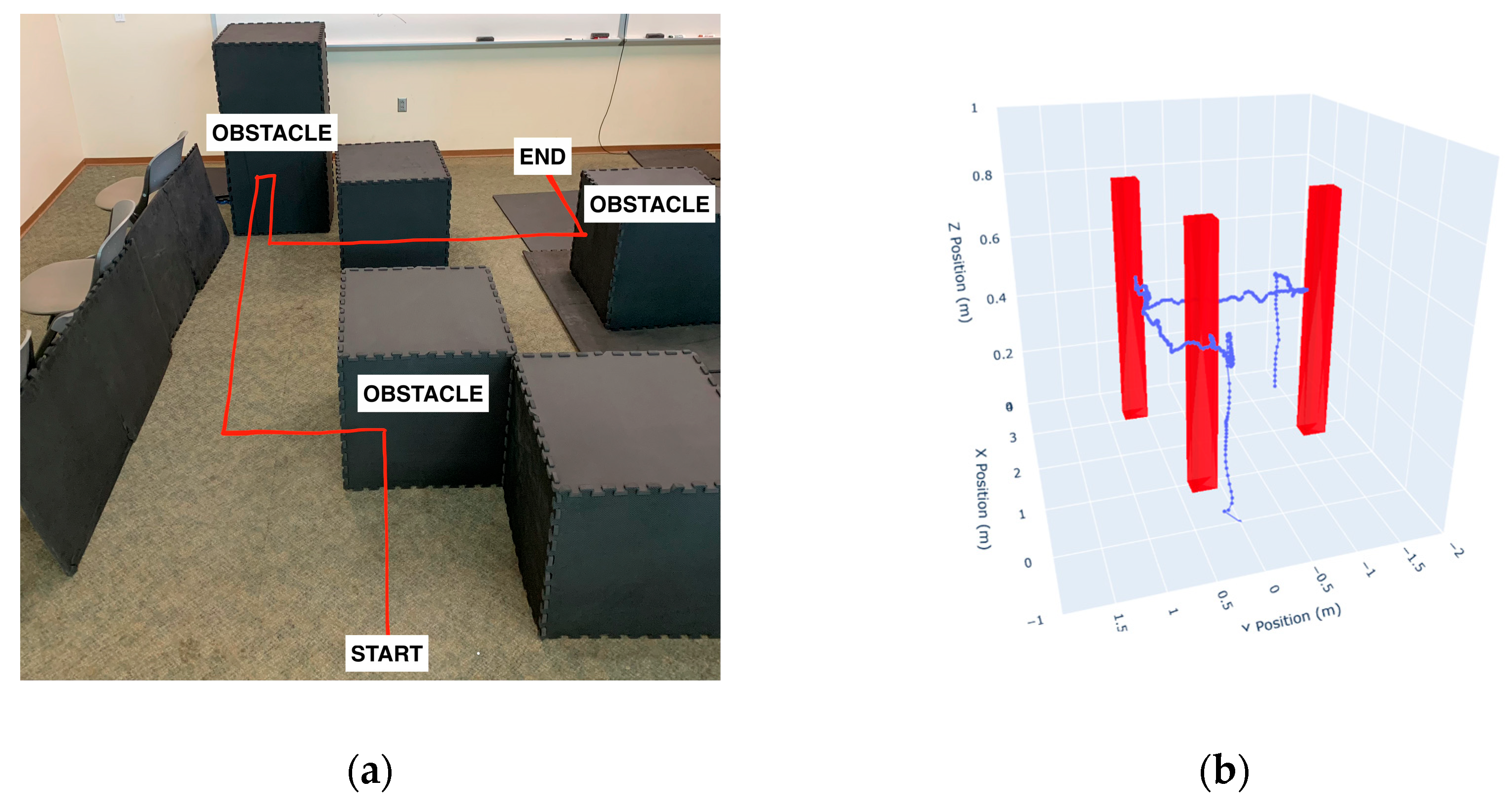

To evaluate the obstacle avoidance system’s reliability, structured tests were conducted where the drone was flown perpendicularly toward an obstacle placed at various orientations. For each direction—front, back, left, right, and angled (with the obstacle positioned diagonally)—the drone was commanded via gesture control to fly directly toward the object. Each scenario was tested 25 times, resulting in a total of 125 trials. As shown in

Table 1, the drone successfully avoided collisions in all cases, achieving a 100% avoidance rate across all directions.

During testing, when the drone’s battery was fully charged and all systems were functioning optimally, there were no instances where the drone failed to react to an obstacle and flew directly into it. The system reliably detected and responded to obstacles, enhancing the drone’s ability to navigate safely in constrained environments.

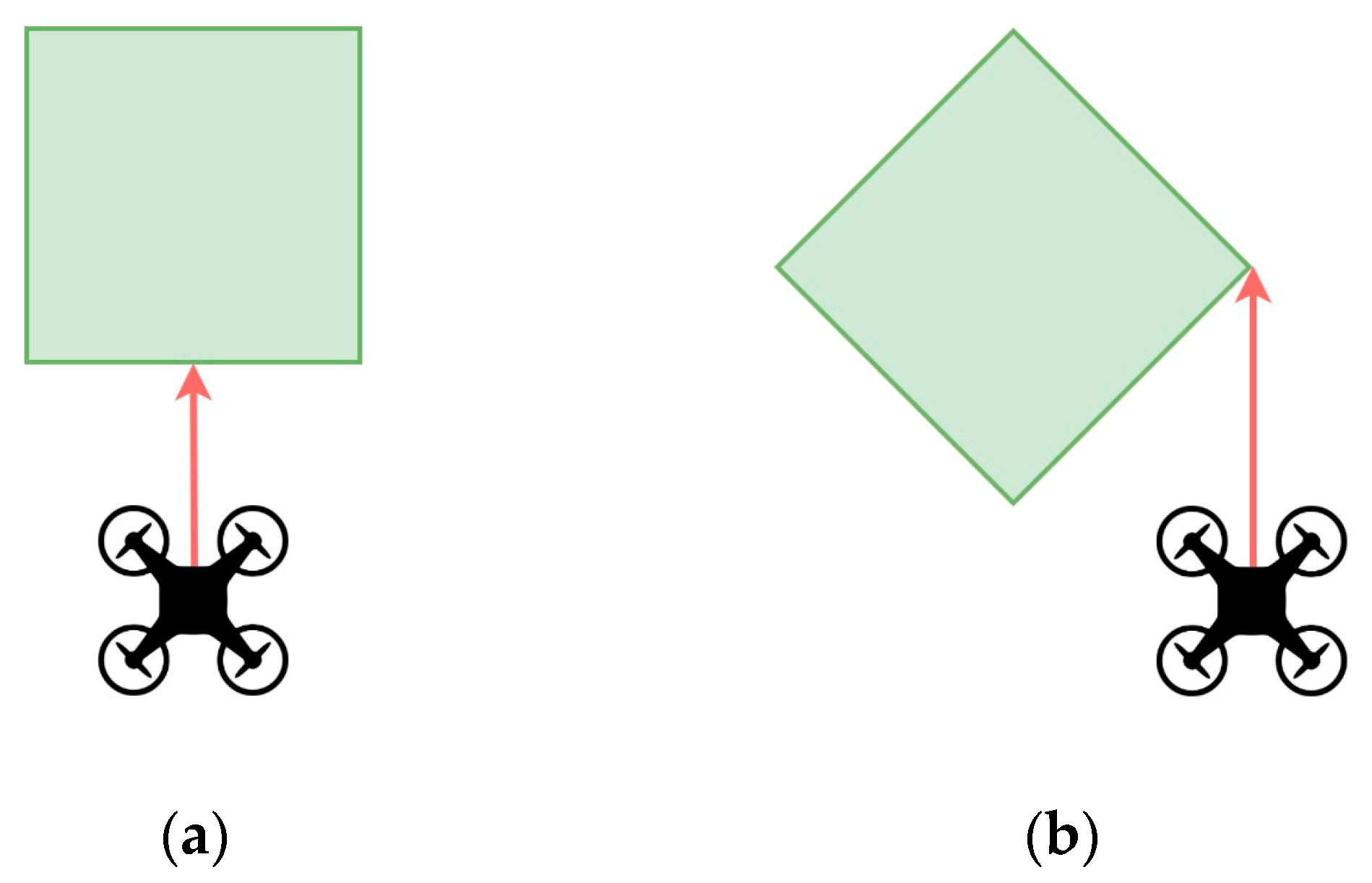

3.2.1. Obstacle Response During Perpendicular vs. Angled Approaches

During testing, the drone’s reaction to obstacles differed depending on how it approached them — either perpendicularly as seen in

Figure 7a or at an angle as seen in

Figure 7b. When flying straight toward an obstacle, the Multi-Ranger Deck reliably detected the object within the 0.35-meter threshold and immediately initiated a 0.2-meter movement in the opposite direction to avoid a collision. These perpendicular encounters typically resulted in smooth, consistent avoidance maneuvers, as the object was well-aligned with the front-facing sensor.

However, when the drone encountered an object at an angle, avoidance behavior became more nuanced. Angled obstacles—such as those turned or placed diagonally—were not always detected symmetrically by the front or side sensors. This asymmetry increased the possibility of the drone clipping a propeller on the edge of the object, particularly when the contact point fell between two sensor fields. Despite this, the drone successfully avoided collisions in these situations by responding based on whichever side of the drone’s boundary was first triggered. The system adjusted, initiating a horizontal or vertical avoidance maneuver depending on which sensor crossed the safety threshold first. A demonstration video of these two scenarios is available in the

Supplementary Materials .

Furthermore, the drone could approach obstacles at awkward angles due to its ability to rotate and then fly forward. Although the control system does not include a specific gesture for diagonal flight, this functionality effectively allows the drone to fly at an angle relative to its original grid orientation. As a result, obstacles may be encountered off-axis, challenging the avoidance system’s ability to interpret directionality accurately. Nonetheless, the obstacle detection logic proved adaptive enough to handle these situations, maintaining safe distances and adjusting flight paths as needed.

3.2. Hand-Gesture Recognition Performance

3.2.1. Gesture Recognition Accuracy

To assess the accuracy of the hand gesture recognition system, a controlled test was performed using all eight predefined gestures: pointing, peace, thumbs up, pinky, open hand, fist, okay, and love. Each gesture was presented perpendicularly and approximately one foot away from the laptop’s webcam—identified as the optimal distance and orientation for consistent detection. The gesture was raised and removed from the frame 25 times per test. The pointing, pinky, open hand, fist, okay, and love gestures were correctly identified in all 25 attempts, achieving a 100% recognition rate. However, the peace and thumbs up gestures occasionally exhibited inconsistencies in recognition. The peace gesture was sometimes initially misclassified as an open hand before correcting itself shortly after. Similarly, the thumbs up gesture was misidentified once as a fist, likely due to the visual similarity between the two gestures when the thumb is bent. Despite these minor issues, the system demonstrated high overall accuracy, as proven in

Table 2, particularly when gestures were presented in the ideal position relative to the camera.

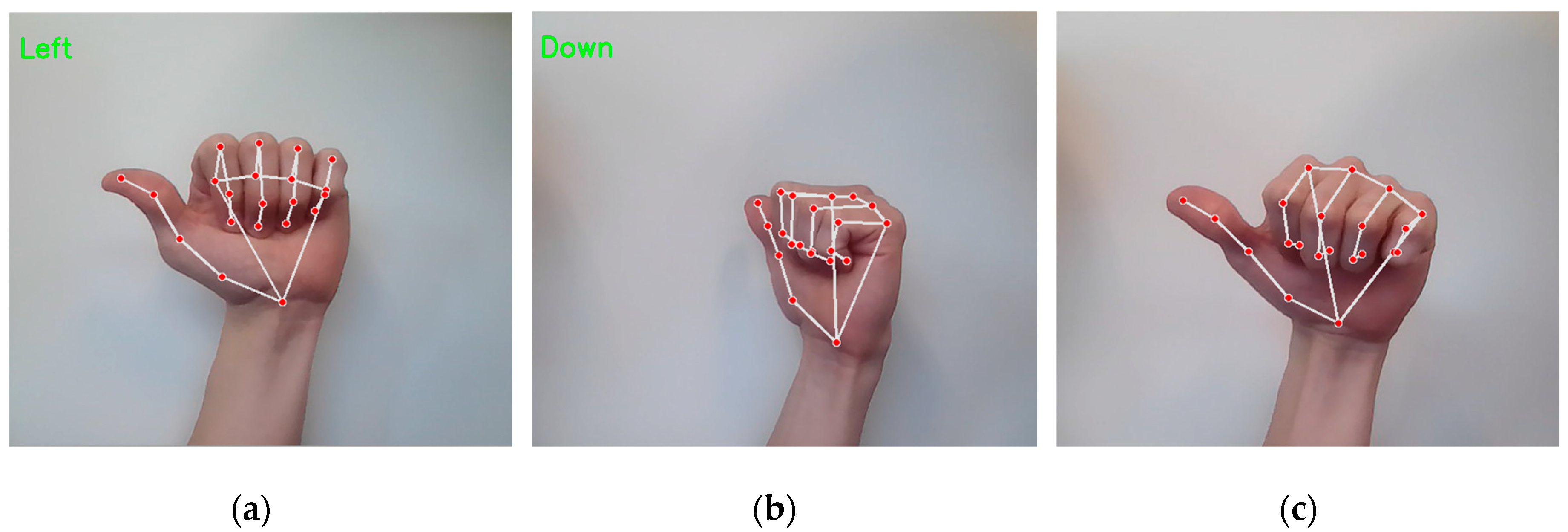

While MediaPipe Hands provided tracking capabilities, its accuracy could be inconsistent due to the system’s sensitivity to hand positioning. For optimal recognition, the user’s hand needed to be perfectly perpendicular and close to the camera as seen in

Figure 7a. If the hand was at an angle or too far away, the system sometimes failed to recognize gestures properly, leading to delays as users had to adjust their hand positioning multiple times.

Several factors impacted the system’s reliability:

Gesture Misclassification: The system occasionally misinterpreted one gesture as another (

Figure 8b), especially when switching between gestures, leading to unintended drone movements. Also, if a gesture was presented at an unfavorable angle or partially obscured, it sometimes failed to register as any labeled gesture (

Figure 8c), resulting in no response from the drone.

Lighting Conditions: Bright, even lighting improved recognition, while dim or uneven lighting sometimes led to unstable tracking of landmarks.

External Hand Interference: If another person’s hand entered the camera’s field of view, the system sometimes detected it as an input, disrupting the control process.

Despite these limitations, keeping the hand close to the camera and maintaining a stable positioning significantly improved detection reliability. Future improvements could include additional filtering techniques to reduce misclassification and enhance reliability in changing scenarios.

3.2.2. Gesture-to-Command Latency

The latency in gesture-based control primarily stemmed from the looping sequence of detecting a hand gesture and executing the corresponding drone movement. In each loop cycle, the system first detected the user’s hand gesture, then issued a movement command to the drone, and only after completing the movement did it begin detecting the next gesture.

To reduce latency, the movement per gesture was decreased, allowing the drone to execute commands more quickly. Since the system no longer had to wait for the drone to complete a larger movement before recognizing the next gesture, the overall responsiveness improved. This also made the gesture detection stream smoother, reducing interruptions in recognizing consecutive commands. As a result, shorter movements allowed for a more fluid interaction between the user and the drone, enhancing control precision.

3.2.3. User Experience and Usability

The hand-gesture control system provided an intuitive and engaging way to interact with the drone, especially for users with little to no prior experience in drone piloting. By eliminating the need for a traditional controller, the system allowed users to fly the drone using natural hand movements, making the experience more accessible.

Most users required some initial practice to become comfortable with the system. The main learning curve involved:

Memorizing multiple gestures and associating them with their corresponding drone commands.

Maintaining proper hand positioning in front of the camera to ensure accurate recognition.

Balancing attention between their hand gestures and the drone’s movement, particularly when not relying on the AI deck’s streamed FPV footage.

Once users became familiar with the system, they found it to be a responsive and effective alternative to traditional control methods. The ability to adjust hand positioning and recognize gestures contributed to an overall smooth and immersive control experience.

3.3. 3D Mapping and Flight Path Analysis

3.3.1. Accuracy of 3D Map Generation

The 3D mapping system recorded obstacles and the drone’s flight path based on sensor data collected during flight. The accuracy of the mapping in

Table 3 was evaluated by comparing the generated 3D representations with the physical obstacle and path locations.

The drone’s movements during this test, including minor wobbles and deviations, were consistently captured on the 3D map, resulting in a recorded deviation of 0 cm between the video and the map for the flight path. For obstacle placement accuracy, the dimensions and layout of the physical maze were measured and compared with the mapped results. The first obstacle showed the most deviation, likely due to instability during the drone’s initial takeoff.

The mapping system only placed encountered obstacles on the map—meaning obstacles that directly affected the drone’s path. If the drone flew near a wall or in front of an obstacle without attempting to move into it, that obstacle was not recorded in the 3D map. Instead, when the drone needed to determine a viable flight direction and an obstacle blocked that path, its location was noted and placed on the map. This explains why the 3D map (

Figure 9b) contained fewer obstacles than the physical course (

Figure 9a), and this discrepancy should not be interpreted as inaccuracy.

The flight path mapping was highly accurate, consistently starting at (0,0,0) and tracking movement relative to the takeoff position. Even when the drone wobbled or slightly deviated from a straight path, these deviations were accurately reflected in the 3D map. However, obstacle placement accuracy depended on sensor-based distance estimation. When an obstacle was detected, it was mapped at 0.35 meters from the detection point. Any slight sensor inconsistencies in distance measurement could lead to minor errors in obstacle placement, but the overall flight path remained precise and reliable. This 3D mapping example along with gesture control, obstacle avoidance, and drone FPV streaming can be seen in the

Supplementary Materials.

3.4. Integrated System Performance: Gesture Control and Obstacle Avoidance

3.4.1. Interaction Between Gesture Control and Obstacle Avoidance

Integrating gesture control with obstacle avoidance introduced an interactive dynamic where user commands had to coexist with the drone’s autonomous safety responses. When a user issued a command directing the drone toward an obstacle, the avoidance system overrode the gesture-based movement, causing the drone to back away from the detected object. This ensured collision prevention but occasionally led to unexpected movements from the user’s perspective.

Despite this, users retained control over the drone’s flight, as they could immediately issue a new gesture command after the avoidance maneuver. The 0.35-meter safety threshold and 0.2-meter retreat distance traded off safety and responsiveness, preventing crashes without making the drone feel unresponsive. Also, since these values were adjustable in the code, the system could be fine-tuned for different user preferences or environments.

During testing, users found that while the avoidance system sometimes interrupted their intended flight path, it did not completely disrupt their ability to navigate the drone. However, in confined spaces with multiple obstacles, the drone could enter a cycle of avoidance maneuvers, requiring the user to adjust their gestures to navigate effectively. Overall, the system provided a safe yet intuitive method for controlling the drone, even for users unfamiliar with manual drone operation.

3.4.2. AI-Deck Streaming and Remote Navigation

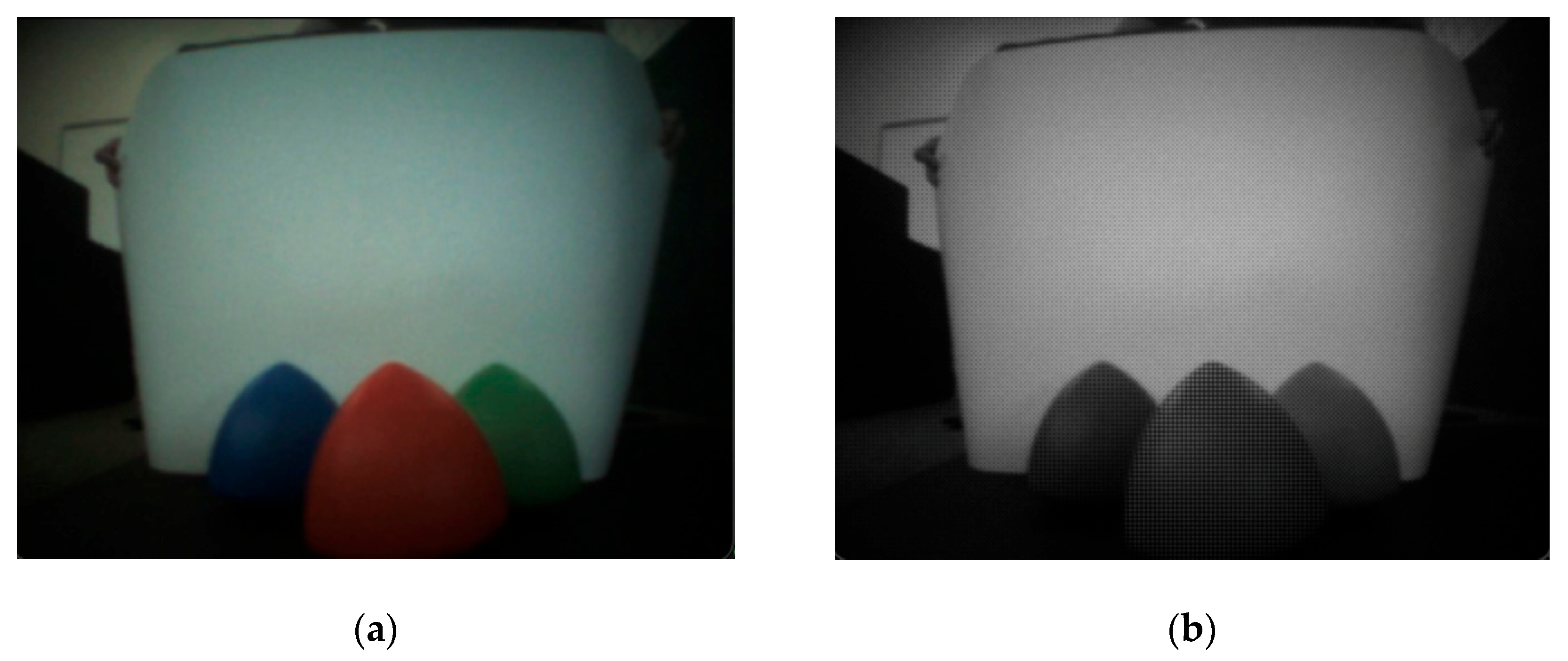

The AI-Deck’s video streaming feature allowed for navigation beyond line of sight using both greyscale and color video modes, enhancing user control and environmental awareness. While the color mode (

Figure 10a) provided better scene interpretation, the greyscale mode (

Figure 10b) offered better contrast, which could be beneficial in certain lighting conditions.

The latency was minimal when the drone moved at a slower speed, making it easier for users to react to the environment and adjust their gestures accordingly. However, as the speed increased, the latency became more noticeable, making precise maneuvering more difficult. The low-resolution feed also made navigation challenging, as fine details and distant obstacles were difficult to discern.

The limited field of view (FOV) of the AI-Deck stream meant that users could only see directly in front of the drone, making it difficult to gauge surroundings beyond the camera’s perspective. This restriction posed a challenge, particularly when navigating around complex obstacles. However, the obstacle avoidance system compensated for this limitation, preventing the drone from crashing into objects that were outside the camera’s view. This combination of AI-Deck streaming and autonomous obstacle avoidance allowed for a more reliable navigation experience, even with the limitations of the video feed.

3.5. Comparison with Existing Gesture-Controlled Drone Systems

To contextualize the novelty and performance of the proposed system, we compared it with two recent state-of-the-art gesture-based drone control systems from Yun et al. [

29] and Lee and Yu [

30]. Each system focused on hand gesture recognition for drone navigation but differed significantly in terms of autonomy, user feedback, and mapping capabilities.

The system proposed by Yun et al. [

29] utilized an RGB camera to recognize multiple different single-handed gestures for drone control. While their system achieved a high accuracy of +90% using both motion- and posture-based gestures and introduces a lightweight architecture for mobile and edge devices, it lacks a built-in obstacle avoidance system. In contrast, our system not only offers gesture-based navigation with similarly high recognition accuracy but also integrates autonomous obstacle avoidance. This feature is valuable in gesture-controlled drone systems because it allows users, especially beginners, to focus on intuitive control without the constant fear of crashing.

Lee and Yu’s wearable drone controller [

30] uses an inertial measurement unit (IMU) on the hand to classify gestures via machine learning and includes vibrotactile feedback to alert users of nearby obstacles. Although this provides a helpful sensory cue, the user must interpret the vibration and manually respond by steering the drone to avoid collisions. In contrast, our system eliminates this burden: the drone’s obstacle avoidance is entirely autonomous, requiring no user input to prevent crashes. This makes our platform more accessible and safer, especially in obstacle-dense environments.

Also, neither of the two compared systems offers a mapping system. Our solution includes 3D mapping that visualizes the drone’s flight trajectory and marks obstacles as red prisms in a virtual space. This capability provides insights into the environment and drone behavior over time and is especially useful for post-flight analysis.

In summary, unlike existing systems that rely heavily on manual control or external feedback, the proposed system is distinguished by its tight integration of gesture control, autonomous obstacle avoidance, real-time 3D mapping, and FPV video streaming, offering a unique and user-friendly platform for advanced drone interaction.

4. Discussion

This study demonstrates the successful integration of gesture-based drone control, autonomous obstacle avoidance, and 3D mapping, providing a more accessible and intuitive method for drone navigation. The hand-gesture control system, implemented using Google’s MediaPipe Hands, accurately recognized predefined gestures and converted them into flight commands. However, lighting conditions, hand positioning, and background noise affected recognition accuracy, a limitation observed in prior research on vision-based gesture interfaces [

31]. Despite this, the system provided hands-free control, reducing the need for traditional controllers and lowering the barrier to drone operation, aligning with previous studies that highlight the benefits of gesture-based interfaces for robotics and UAV applications [

32].

The obstacle avoidance system, utilizing infrared time-of-flight sensors, effectively detected and prevented collisions by identifying obstacles within 0.35 meters and moving 0.2 meters in the opposite direction. This mechanism ensured continuous flight in cluttered environments, where multiple directions were sometimes obstructed simultaneously. While the system performed reliably, occasional issues occurred due to the drone’s battery size, which led to rapid power depletion after only a few flights. Power limitations affecting drone performance have been discussed in previous research, suggesting that optimizing power management strategies or using energy-efficient algorithms could enhance flight duration [

33]. Furthermore, improving obstacle detection through sensor fusion with LiDAR or ultrasonic sensors has been proposed as a way to increase reliability in within different environments [

34].

The 3D mapping system provided valuable insight into the drone’s flight path and surrounding environment. By logging x, y, and z coordinates every 0.1 seconds, the system generated a spatial representation of obstacles as rectangular prisms positioned 0.35 meters from the detection point. While the maps closely matched the lab’s layouts, minor positioning inaccuracies were sometimes observed, likely due to improper coding adjustments. Prior research on SLAM (Simultaneous Localization and Mapping) systems suggests that incorporating adaptive filtering techniques, such as Kalman filtering, could improve mapping precision [

35]. Also, incorporating higher-resolution spatial data processing could enhance the system’s ability to visualize complex environments [

36].

A key aspect of this research was the integration of gesture control with autonomous navigation, allowing users to interact with the drone while ensuring safety through automatic collision avoidance. Studies on human-robot interaction suggest that balancing user input with autonomous decision-making is critical to achieving an effective shared control system [

37]. Future work could focus on refining the balance between manual input and autonomous intervention, ensuring that the system adapts dynamically to user intentions while maintaining safety protocols. One possible approach is to use reinforcement learning or adaptive AI models to adjust the system’s response based on feedback [

38].

The addition of an AI-Deck for live video streaming introduced a new capability: beyond-line-of-sight control. This feature allows users to pilot the drone remotely using live footage, which has promising applications in search and rescue, reconnaissance, and surveillance. However, the AI-Deck camera was limited by its low resolution and fixed focus, resulting in poor image quality. Prior research has explored alternative small-scale camera modules that improve image quality while maintaining low latency [

39]. Exploring better hardware options or implementing image-processing techniques such as super-resolution reconstruction could enhance the user experience [

40].

The integrated system functioned effectively as a cohesive unit, enabling intuitive control while maintaining a safety layer through obstacle avoidance. However, improvements are needed to enhance usability, responsiveness, and overall performance. The system’s responsiveness was limited by occasional processing delays, likely caused by the computational overhead of gesture recognition and obstacle detection running simultaneously. Optimizing the software pipeline by utilizing multi-threading or GPU acceleration could improve processing efficiency [

41]. Furthermore, reducing latency in the gesture recognition system could improve user experience, as research has shown that delays exceeding 100 milliseconds negatively impact interactive control systems [

42].

Although this system presents a significant advancement in human-drone interaction, several limitations remain. The gesture recognition system remains susceptible to improper hand positioning, lighting variations, and occlusion. Additionally, the obstacle avoidance system currently lacks adaptive decision-making capabilities and does not incorporate learning from prior interactions. To better evaluate and interpret the system's performance, we acknowledge the following potential threats to validity:

Internal Validity − The gesture recognition and drone control were tested under controlled indoor conditions with stable lighting and minimal distractions. Performance may decline in less controlled, real-world environments where lighting, background activity, or camera positioning vary significantly.

Construct Validity − The predefined gestures selected for this study may not be universally intuitive for all users, potentially influencing usability outcomes. Furthermore, users’ hand size, motion speed, and articulation could impact gesture recognition reliability.

External Validity − The findings are based on a specific hardware setup (Crazyflie 2.1, MediaPipe Hands, and AI-Deck). Results may not generalize to other drone models or hardware configurations without significant reengineering.

Reliability Threats − System performance may degrade over prolonged use due to sensor drift, thermal effects, or battery limitations. Additionally, the gesture classification system may face reduced robustness in multi-user settings or when exposed to unintended hand movements.

The paper aims to mitigate these threats in future work through more diverse testing environments, user studies across broader demographics, and hardware-agnostic system design to ensure greater generalizability and robustness. Specifically, future research could explore deep learning models for more robust gesture recognition, sensor fusion techniques for improved obstacle detection, and adaptive control strategies to enhance user autonomy while maintaining safety [

43,

44]. Also, expanding the 3D mapping system with SLAM integration could enable more detailed environmental awareness, improving applications in multi-drone coordination and autonomous exploration [

45]. By addressing these challenges, future modifications of this system could improve accuracy, usability, and adaptability, making drone technology more accessible to a broader range of users and applications. Furthermore, another promising research direction is the application of hand gesture control to robotic swarms systems and very large-scale robotic systems [

46,

47,

48].

5. Conclusions

This research demonstrated a gesture-controlled drone system integrating obstacle avoidance, 3D mapping, and drone FPV streaming via the AI-Deck, offering an intuitive and accessible approach to drone navigation. The combination of hand-gesture recognition and autonomous safety mechanisms reduces reliance on traditional controllers, making drone operation more approachable, particularly for beginners. While limitations such as gesture recognition sensitivity, video streaming quality, and processing delays were observed, the system successfully provided a safer and more interactive user experience. Future improvements could further refine usability, responsiveness, and reliability, including two camera views for better gesture recognition, a new camera module for higher-quality streaming, and further code optimization. Also, expanding the obstacle avoidance system to detect and respond to moving objects would enhance the drone’s adaptability, opening pathways for use in unpredictable spaces. Altogether, this multifunctional system represents a meaningful step toward safer, more intuitive human-drone interaction and paves the way for broader adoption of drone technology in education, training, and mission applications.

Author Contributions

Conceptualization, B.T., M.A., P.S., and P.Z.; methodology, B.T., M.A., P.S. and X.G.; software, B.T., M.A., P.S. and X.G.; validation, B.T., M.A. and P.S.; formal analysis, B.T. and P.Z.; investigation, B.T. and M.A.; resources, P.Z.; data curation, B.T., M.A. and P.S.; writing—original draft preparation, B.T.; writing—review and editing, B.T., X.G., P.Z. and H.M.; visualization, B.T., M.A. and P.S.; supervision, P.Z.; project administration, P.Z. and H.M.; funding acquisition, H.M. and P.Z.

Funding

This research was supported by the Defense Advanced Research Projects Agency (DARPA)-Grant #000825 and the NASA West Virginia Space Grant Consortium, NASA Agreement #80NSCC20M0055.

Institutional Review Board Statement

Not applicable

Informed Consent Statement

Not applicable

Data Availability Statement

Acknowledgments

The authors thank PiNG Lab and Marshall University for their support.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| CMC |

Carpometacarpal |

| DIP |

Distal Interphalangeal |

| FOV |

Field of View |

| FPV |

First-Person View |

| IMU |

Inertial Measurement Unit |

| MCP |

Metacarpophalangeal |

| PA |

Power Amplifier |

| PIP |

Proximal Interphalangeal |

| SLAM |

Simultaneous Localization and Mapping |

| ToF |

Time-of-Flight |

| UAV |

Unmanned Aerial Vehicle |

References

- Amicone, D. , et al., A smart capsule equipped with artificial intelligence for autonomous delivery of medical material through drones. Applied Sciences 2021, 11, 7976. [Google Scholar] [CrossRef]

- Hu, D. , et al., Automating building damage reconnaissance to optimize drone mission planning for disaster response. Journal of Computing in Civil Engineering 2023, 37, 04023006. [Google Scholar] [CrossRef]

- Nooralishahi, P., F. López, and X. P. Maldague, Drone-enabled multimodal platform for inspection of industrial components. IEEE Access 2022, 10, 41429–41443. [Google Scholar] [CrossRef]

- Nwaogu, J.M. , et al., Enhancing drone operator competency within the construction industry: Assessing training needs and roadmap for skill development. Buildings 2024, 14, 1153. [Google Scholar] [CrossRef]

- Tezza, D., D. Laesker, and M. Andujar. The learning experience of becoming a FPV drone pilot. in Companion of the 2021 ACM/IEEE international conference on human-robot interaction. 2021.

- Lawrence, I.D. and A.R.R. Pavitra, Voice-controlled drones for smart city applications. Sustainable Innovation for Industry 6.0, 2024: p. 162-177.

- Shin, S.-Y., Y. -W. Kang, and Y.-G. Kim. Hand gesture-based wearable human-drone interface for intuitive movement control. in 2019 IEEE international conference on consumer electronics (ICCE). 2019. IEEE.

- Naseer, F. , et al. Deep learning-based unmanned aerial vehicle control with hand gesture and computer vision. in 2022 13th Asian Control Conference (ASCC). 2022. IEEE.

- Hayat, A. , et al. Gesture and Body Position Control for Lightweight Drones Using Remote Machine Learning Framework. International Conference on Electrical and Electronics Engineering. Springer, 2024. [Google Scholar]

- Bae, S. and H.-S. Park, Development of immersive virtual reality-based hand rehabilitation system using a gesture-controlled rhythm game with vibrotactile feedback: an fNIRS pilot study. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 2023, 31, 3732–3743. [Google Scholar]

- Manikanavar, A.R. and S.B. Shirol. Gesture controlled assistive device for deaf, dumb and blind people using Raspberry-Pi. in 2022 International Conference on Smart Technologies and Systems for Next Generation Computing (ICSTSN). 2022. IEEE.

- Paterson, J. and A. Aldabbagh. Gesture-controlled robotic arm utilizing opencv. in 2021 3rd International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA). 2021. IEEE.

- Seidu, I. and J.O. Lawal, Personalized Drone Interaction: Adaptive Hand Gesture Control with Facial Authentication. Int J Sci Res Sci Eng Technol 2024, 11, 43–60. [Google Scholar] [CrossRef]

- Alanezi, M.A. , et al., Obstacle avoidance-based autonomous navigation of a quadrotor system. Drones 2022, 6, 288. [Google Scholar] [CrossRef]

- Xue, Z. and T. Gonsalves, Vision based drone obstacle avoidance by deep reinforcement learning. AI 2021, 2, 366–380. [Google Scholar] [CrossRef]

- Ostovar, I. , Nano-drones: enabling indoor collision avoidance with a miniaturized multi-zone time of flight sensor. 2022, Politecnico di Torino.

- Courtois, H. , et al. , OAST: Obstacle avoidance system for teleoperation of UAVs. IEEE Transactions on Human-Machine Systems 2022, 52, 157–168. [Google Scholar]

- Bouwmeester, R.J., F. Paredes-Vallés, and G.C. De Croon. Nanoflownet: Real-time dense optical flow on a nano quadcopter. in 2023 IEEE International Conference on Robotics and Automation (ICRA). 2023. IEEE.

- Khoza, N., P. Owolawi, and V. Malele. Drone Gesture Control using OpenCV and Tello. in 2024 Conference on Information Communications Technology and Society (ICTAS). 2024. IEEE.

- Zhang, N. , et al., End-to-end nano-drone obstacle avoidance for indoor exploration. Drones 2024, 8, 33. [Google Scholar] [CrossRef]

- Telli, K. , et al., A comprehensive review of recent research trends on unmanned aerial vehicles (uavs). Systems 2023, 11, 400. [Google Scholar]

- Bitcraze. Crazyflie 2.1. 2024 18 April 2025]; Available from: https://www.bitcraze.io/products/old-products/crazyflie-2-1/.

- Bitcraze. Crazyradio PA. 2025 18 April 2025]; Available from: https://www.bitcraze.io/products/crazyradio-pa/.

- Bitcraze. Flow deck v2. 2025 14 April 2025]; Available from: https://store.bitcraze.io/products/flow-deck-v2.

- Bitcraze. Multi-ranger deck. 2025 14 April 2025]; Available from: https://store.bitcraze.io/products/multi-ranger-deck.

- Bitcraze. AI-deck 1.1. 2025 14 April 2025]; Available from: https://store.bitcraze.io/products/ai-deck-1-1.

- Google. Hand landmarks detection guide. 2025 4 May 2025]; Available from: https://ai.google.dev/edge/mediapipe/solutions/vision/hand_landmarker.

- Bitcraze. Multi-ranger deck. 2025 17 April 2025]; Available from: https://www.bitcraze.io/products/multi-ranger-deck/.

- Yun, G., H. Kwak, and D.H. Kim, Single-Handed Gesture Recognition with RGB Camera for Drone Motion Control. Applied Sciences 2024, 14, 10230. [Google Scholar] [CrossRef]

- Lee, J.-W. and K.-H. Yu, Wearable drone controller: Machine learning-based hand gesture recognition and vibrotactile feedback. Sensors 2023, 23, 2666. [Google Scholar] [CrossRef]

- Natarajan, K., T. -H.D. Nguyen, and M. Mete. Hand gesture controlled drones: An open source library. in 2018 1st International Conference on Data Intelligence and Security (ICDIS). 2018. IEEE.

- Begum, T., I. Haque, and V. Keselj. Deep learning models for gesture-controlled drone operation. in 2020 16th International Conference on Network and Service Management (CNSM). 2020. IEEE.

- Khaksar, S. , et al., Design and Evaluation of an Alternative Control for a Quad-Rotor Drone Using Hand-Gesture Recognition. Sensors 2023, 23, 5462. [Google Scholar] [CrossRef] [PubMed]

- Moffatt, A. , et al. Obstacle detection and avoidance system for small UAVs using a LiDAR. in, 2020. [Google Scholar]

- Karam, S. , et al. , Microdrone-based indoor mapping with graph slam. Drones 2022, 6, 352. [Google Scholar]

- Krul, S. , et al., Visual SLAM for indoor livestock and farming using a small drone with a monocular camera: A feasibility study. Drones 2021, 5, 41. [Google Scholar] [CrossRef]

- Backman, K., D. Kulić, and H. Chung, Reinforcement learning for shared autonomy drone landings. Autonomous Robots 2023, 47, 1419–1438. [Google Scholar] [CrossRef]

- Schwalb, J. , et al. A study of drone-based AI for enhanced human-AI trust and informed decision making in human-AI interactive virtual environments. in 2022 IEEE 3rd International Conference on Human-Machine Systems (ICHMS). 2022. IEEE.

- Sacoto-Martins, R. , et al. Multi-purpose low latency streaming using unmanned aerial vehicles. in 2020 12th International Symposium on Communication Systems, Networks and Digital Signal Processing (CSNDSP). 2020. IEEE.

- Truong, N.Q. , et al., Deep learning-based super-resolution reconstruction and marker detection for drone landing. IEEE Access 2019. 7, 61639–61655.

- Lee, S.H. , Real-time edge computing on multi-processes and multi-threading architectures for deep learning applications. Microprocessors and Microsystems 2022, 92, 104554. [Google Scholar] [CrossRef]

- Elbamby, M.S. , et al., Toward low-latency and ultra-reliable virtual reality. IEEE network 2018, 32, 78–84. [Google Scholar] [CrossRef]

- Hu, B. and J. Wang, Deep learning based hand gesture recognition and UAV flight controls. International Journal of Automation and Computing 2020, 17, 17–29. [Google Scholar]

- Lyu, H. Detect and avoid system based on multi sensor fusion for UAV. in 2018 International Conference on Information and Communication Technology Convergence (ICTC). 2018. IEEE.

- Habib, Y. , Monocular SLAM densification for 3D mapping and autonomous drone navigation. 2024, Ecole nationale supérieure Mines-Télécom Atlantique.

- Gao, J. , et al., SwarmCVT: Centroidal Voronoi Tessellation-Based Path Planning for Very-Large-Scale Robotics. arXiv 2024, arXiv:2410.02510,. [Google Scholar]

- Zhu, P., C. Liu, and S. Ferrari, Adaptive online distributed optimal control of very-large-scale robotic systems. IEEE Transactions on Control of Network Systems 2021, 8, 678–689. [Google Scholar]

- Zhu, P., C. Liu, and P. Estephan, A Novel Multivariate Skew-Normal Mixture Model and Its Application in Path-Planning for Very-Large-Scale Robotic Systems, in 2024 American Control Conference (ACC). 2024, IEEE: Toronto, Canada. pp. 3783-3790.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).