1. Introduction

Meta-learning, often referred to as "learning to learn," [

1,

2,

3] has emerged as a powerful framework for improving model generalization, particularly in domains where data distributions vary significantly across tasks.

Inspired by these advances[

4], our proposed model integrates task correlation learning directly into the optimization procedure, seeking not only to minimize training loss but also to enhance the quality of downstream decision-making through better generalization. In magnetic resonance imaging (MRI) reconstruction, where datasets can differ widely in anatomy, acquisition protocols, and imaging artifacts, meta-learning provides a promising avenue to build adaptable models. Building on the foundation of optimization-based deep learning methods for MRI reconstruction[

5,

6] , we propose a bi-level optimization model for meta-learning that explicitly captures the relationship between training and validation datasets through learnable task correlations.

This work is motivated by a line of recent studies exploring optimization-driven and meta-learning approaches, including an optimization-based meta-learning model for diverse datasets[

7,

8,

9] and a learnable variational model for joint multimodal MRI reconstruction and synthesis[

10]. These advances illustrate that incorporating optimization formulations into the learning process enhances model robustness across diverse conditions.

Our framework formulates the meta-training as a constrained bi-level optimization problem, where the lower-level problem learns task-specific models weighted by a normalized hyperparameter matrix, and the upper-level problem adjusts these hyperparameters to minimize validation losses. Furthermore, we extend the training dynamics using the method of Lagrangian multipliers (MLM) to provide theoretical equivalence between direct loss minimization and primal-dual optimization updates, following similar optimization principles as used in optimal control frameworks for image processing[

11,

12].

By synthesizing principles from optimization theory, control dynamics[

13], and deep learning, our approach offers a principled and scalable solution for meta-learning training algorithm, potentially improving performance on heterogeneous, real-world imaging datasets. This work continues the trajectory of optimization-based learning[

9,

12,

14].

1.1. Optimization-Based Meta-Learning: Frameworks and Algorithms

In optimization-based meta-learning, the learner is not just learning parameters — it’s learning how to optimize.

Instead of using a fixed optimization method (like SGD or Adam), you train an optimizer (or regularizer) so that across many tasks, it adapts quickly and solves new tasks better. This is typically framed as a bi-level optimization problem:

where:

denotes the parameters of the optimizer, model, or regularizer,

represents tasks sampled from a distribution ,

measures how well the task is solved after a few optimization steps.

At each meta-training iteration:

2. Problem Settings

Suppose we are given a set of N training examples, denoted as , where for each index :

represents the input data (e.g., an undersampled measurement or an input image),

denotes the corresponding ground-truth output (e.g., a fully sampled or high-quality image).

We aim to train a neural network parameterized by , where the objective is to learn by minimizing a loss function ℓ that measures the discrepancy between the network’s output and the ground truth across the training set.

This training procedure can be formulated as a

bilevel optimization problem[

15], consisting of two levels:

Lower-level optimization: For a fixed set of trainable parameters , we update the reconstruction variable by solving a task-specific optimization problem guided by the network.

Upper-level optimization: After updating , we update the network parameters by minimizing the empirical loss ℓ over the training dataset.

Thus, the network training involves alternating between solving for

given

, and optimizing

based on the performance measured by the loss function

ℓ.

Set

and

be the collection of states

and controls

at all time steps respectively.

is a multi-phase unrolling network inspired by the proximal gradient algorithm, and the output of

is the updated multi-coil MRI data from each phase. In our framework, we introduce a neural network

that serves as an intermediate mapping between consecutive states of the reconstruction variable. Specifically, at each iteration

t (where

), the network

takes the current estimate

and produces an updated estimate

:

where

denotes the set of trainable parameters at iteration

t.

To initialize the reconstruction process, we define a separate network

, which operates with an initial set of control parameters

. The role of

is to map the given partial k-space measurements

to an initial image reconstruction

:

This initial estimate

then serves as the starting point for the iterative reconstruction process governed by the optimal control system, where

is applied sequentially to refine the reconstruction across

T iterations.

Suppose the training data which consists of batches.

And the validation data which consists of batches.

——————————–

Let

be the collection of the network parameters and

be the set of hyper-parameters. We optimize the following bi-level model:

where

ℓ denotes the loss function which can be taken as cross entropy loss. And the function

is to normalize the hyperparameter vector

, here we can simply take

as the softmax function.

Also we propose a generalized model where

, and we denote

denotes the

j-th column. After we apply the normalization function

(in (

3c)), the matrix

can be regarded as a weight matrix. Intuitively and ideally, each component

can represent the correlation between

and

.

| Algorithm 1: |

- 1:

Input: Initialize hyper-parameters and network parameters . - 2:

for epoch do

- 3:

⊳ Perform (k steps) gradient update steps in SGD on . - 4:

- 5:

⊳ Perform gradient update steps in SGD on . - 6:

end for - 7:

Output: . |

3. Network Training from the View of Method of Lagrangian Multipliers (MLM)

The network parameters to be solved from (3) are

. First, we apply MLM to solve the control problem in

Section 2. The Lagrangian of the control problem (3) is

where

are Lagrangian multipliers of (3).

We want to find primal solution

and dual solution

to solve (3), if

minimize (

4), by the first order optimality condition, we have

The algorithm is conducted in the following manner:

Fix

and define

, then by the first order optimality condition of

we get

By the first order optimality condition of

we get

Therefore for fixed

we get the optimal solutions

satisfy (1) and (1).

For fixed

, we compute the gradient

:

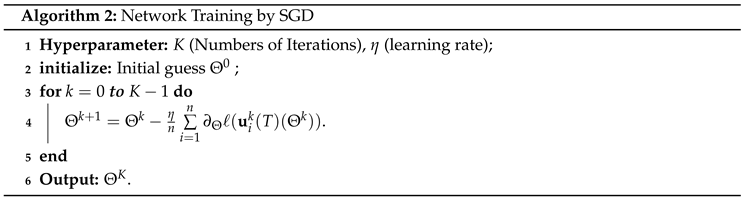

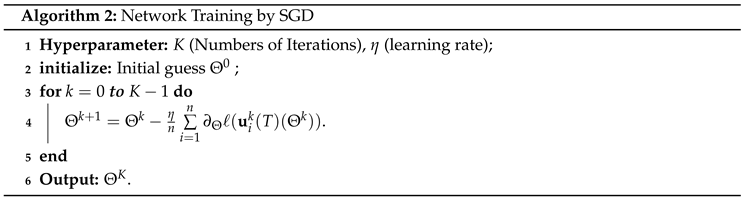

Recall the conventional SGD method, we update , where is interpreted as a function of .

In the following theorem, we show the equivalence between and .

Theorem 1. .

Proof. From (6) we have

From (7b), we have

Similarly, we can show (

Section 3) holds for

together with (

7a), we have

Hence, (8c) reduces to

Thus, we derive

. □

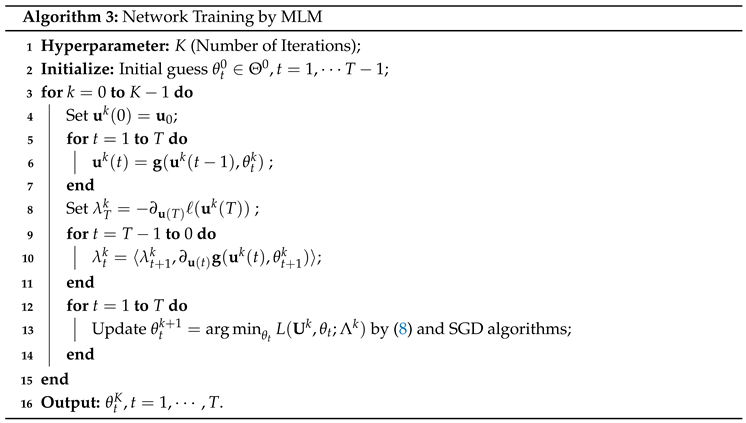

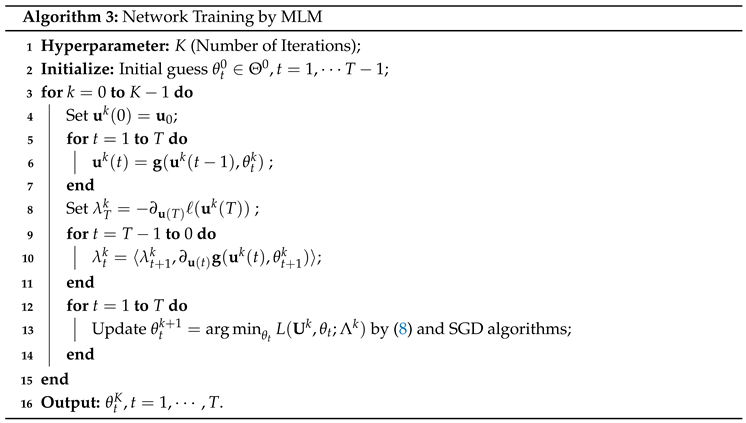

This theorem shows that applying SGD on the loss function ℓ is equivalent to performing SGD on L. We want to reach the optimal solutions with fixed , so we proceed the algorithm to update in the iterative scheme by replacing notation as . This training process is summarized in Algorithm 3, where , , and for each iteration . Now we can use one step of SGD optimization methods such as ADAM to update by replacing to be with gradient computed in (8).

Algorithm 3 trains a meta-learning model by solving a bilevel optimization problem using the Method of Lagrangian Multipliers (MLM).

Instead of naively updating the network parameters by only minimizing the loss, this algorithm:

Explicitly tracks the primal variables (the reconstruction variables ),

And the dual variables (the Lagrange multipliers ),

to ensure that the updates respect the dynamic constraint at each iteration:

where

denotes the learned update network and

are the trainable control parameters at iteration

t.

The forward pass simulates the network behavior over T iterations (like "unrolling" the optimization).

The backward pass computes how the loss at the end depends on every single step (using the chain rule and multipliers). The parameter update ensures that the learned optimizer not only reduces loss but respects the dynamic constraints (i.e., how evolves over time).

Thus, it mimics optimal control theory where we treat the evolution of the system as a dynamic process, not just static minimization.

4. Discussion

Optimization is fundamental to solving inverse problems, yet traditional methods often require careful tuning and lack flexibility across domains. Meta-learning addresses this by enabling optimizers to learn from data how to adaptively solve new tasks, capturing task-specific structures, and accelerating convergence[

16,

17]. This is especially important in applications such as quantitative MRI reconstruction, where diverse data distributions[

18] require robust and adaptable optimization strategies.

Optimization and meta-learning are increasingly intertwined with broader developments in decision-making under uncertainty. Recent works in predict-then-optimize frameworks[

19], fairness in resource allocation[

20], and integrated estimation-optimization perspectives[

21] highlight the importance of aligning learning models with downstream optimization goals[

22,

23,

24].

5. Conclusion

In this work, we proposed a bilevel optimization framework for meta-learning, where the training process is viewed through the lens of optimal control theory. By employing the Method of Lagrangian Multipliers (MLM), we explicitly modeled both the primal reconstruction variables and the dual Lagrange multipliers, enabling principled updates that respect the underlying dynamics of the optimization process[

25]. Our approach bridges classical optimization techniques with modern deep learning, offering a scalable and theoretically grounded method for learning adaptive optimization strategies.

This formulation not only improves the training stability but also enhances generalization across diverse tasks and domains, making it particularly suitable for challenging inverse problems such as quantitative MRI reconstruction. The equivalence established between direct loss minimization and Lagrangian-based optimization further validates the correctness and efficiency of our method.

Future work will explore extending this framework to more complex scenarios, such as multi-stage reconstruction, uncertainty-aware modeling, and real-time adaptation in dynamic environments. We believe that optimization-driven meta-learning approaches like ours hold strong potential for advancing learning systems in both medical imaging and broader scientific computing domains.

References

- T. M. Hospedales, A. T. M. Hospedales, A. Antoniou, P. Micaelli, and A. J. Storkey, “Meta-learning in neural networks: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021. [CrossRef]

- M. Huisman, J. N. M. Huisman, J. N. van Rijn, and A. Plaat, “A survey of deep meta-learning,” Artificial Intelligence Review, pp. 1–59, 2021. [CrossRef]

- C. Finn, A. C. Finn, A. Rajeswaran, S. Kakade, and S. Levine, “Online meta-learning,” in International Conference on Machine Learning. PMLR, 2019, pp. 1920–1930. [CrossRef]

- W. Bian, Y. W. Bian, Y. Chen, and X. Ye, “An optimal control framework for joint-channel parallel mri reconstruction without coil sensitivities,” Magnetic Resonance Imaging, 2022. [CrossRef]

- D. Kiyasseh, A. D. Kiyasseh, A. Swiston, R. Chen, and A. Chen, “Segmentation of left atrial mr images via self-supervised semi-supervised meta-learning,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, –October 1, 2021, Proceedings, Part II 24. Springer, 2021, pp. 13–24. 27 September. [CrossRef]

- W. Bian, A. W. Bian, A. Jang, and F. Liu, “Multi-task magnetic resonance imaging reconstruction using meta-learning,” Magnetic Resonance Imaging, vol. 116, p. 110278, 2025. [CrossRef]

- Y. Chen, C.-B. Y. Chen, C.-B. Schönlieb, P. Liò, T. Leiner, P. L. Dragotti, G. Wang, D. Rueckert, D. Firmin, and G. Yang, “Ai-based reconstruction for fast mri—a systematic review and meta-analysis,” Proceedings of the IEEE, vol. 110, no. 2, pp. 224–245, 2022. [CrossRef]

- W. Bian, Y. W. Bian, Y. Chen, X. Ye, and Q. Zhang, “An optimization-based meta-learning model for mri reconstruction with diverse dataset,” Journal of Imaging, vol. 7, no. 11, p. 231, 2021. [CrossRef]

- Q. Liu, Q. Q. Liu, Q. Dou, and P.-A. Heng, “Shape-aware meta-learning for generalizing prostate mri segmentation to unseen domains,” in Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, –8, 2020, Proceedings, Part II 23. Springer, 2020, pp. 475–485. 4 October. [CrossRef]

- W. Bian, Q. W. Bian, Q. Zhang, X. Ye, and Y. Chen, “A learnable variational model for joint multimodal mri reconstruction and synthesis,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2022, pp. 354–364. [CrossRef]

- W. Bian and Y. K. Tamilselvam, “A review of optimization-based deep learning models for mri reconstruction,” AppliedMath, vol. 4, no. 3, pp. 1098–1127, 2024. [CrossRef]

- A. Rajeswaran, C. A. Rajeswaran, C. Finn, S. M. Kakade, and S. Levine, “Meta-learning with implicit gradients,” Advances in neural information processing systems, vol. 32, 2019. [CrossRef]

- S. Wang, J. S. Wang, J. Chen, X. Deng, S. Hutchinson, and F. Dellaert, “Robot calligraphy using pseudospectral optimal control in conjunction with a novel dynamic brush model,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2020, pp. 6696–6703. [CrossRef]

- W. Bian, “Optimization-based deep learning methods for magnetic resonance imaging reconstruction and synthesis,” Ph.D. dissertation, University of Florida, 2022.

- arXiv:2406.02626, 2024.

- Z. Ding, P. Z. Ding, P. Li, Q. Yang, S. Li, and Q. Gong, “Regional style and color transfer,” in 2024 5th International Conference on Computer Vision, Image and Deep Learning (CVIDL). IEEE, 2024, pp. 593–597. [CrossRef]

- W. Bian, A. W. Bian, A. Jang, L. Zhang, X. Yang, Z. Stewart, and F. Liu, “Diffusion modeling with domain-conditioned prior guidance for accelerated mri and qmri reconstruction,” IEEE Transactions on Medical Imaging, 2024. [CrossRef]

- Z. Ke, S. Z. Ke, S. Zhou, Y. Zhou, C. H. Chang, and R. arXiv preprint arXiv:2501.07033, arXiv:2501.07033, 2025.

- S. Verma, Y. S. Verma, Y. Zhao, S. Shah, N. Boehmer, A. Taneja, and M. Tambe, “Group fairness in predict-then-optimize settings for restless bandits,” in The 40th Conference on Uncertainty in Artificial Intelligence.

- N. Boehmer, Y. N. Boehmer, Y. Zhao, G. Xiong, P. Rodriguez-Diaz, P. D. C. Cibrian, J. Ngonzi, A. Boatin, and M. Tambe, “Optimizing vital sign monitoring in resource-constrained maternal care: An rl-based restless bandit approach,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 39, no. 28, 2025, pp. 28 843–28 849. [CrossRef]

- A. N. Elmachtoub, H. A. N. Elmachtoub, H. Lam, H. Zhang, and Y. arXiv preprint arXiv:2304.06833, arXiv:2304.06833, vol. 107, 2023.

- W. Bian, P. W. Bian, P. Li, M. Zheng, C. Wang, A. Li, Y. Li, H. Ni, and Z. Zeng, “A review of electromagnetic elimination methods for low-field portable mri scanner,” in 2024 5th International Conference on Machine Learning and Computer Application (ICMLCA). IEEE, 2024, pp. 614–618. [CrossRef]

- Z. Li, S. Z. Li, S. Qiu, and Z. Ke, “Revolutionizing drug discovery: Integrating spatial transcriptomics with advanced computer vision techniques,” in 1st CVPR Workshop on Computer Vision For Drug Discovery (CVDD): Where are we and What is Beyond?

- Z. Ke and Y. Yin, “Tail risk alert based on conditional autoregressive var by regression quantiles and machine learning algorithms,” in 2024 5th International Conference on Artificial Intelligence and Computer Engineering (ICAICE). IEEE, 2024, pp. 527–532. [CrossRef]

- W. Bian, A. W. Bian, A. Jang, and F. Liu, “Improving quantitative mri using self-supervised deep learning with model reinforcement: Demonstration for rapid t1 mapping,” Magnetic Resonance in Medicine, vol. 92, no. 1, pp. 98–111, 2024. [CrossRef]

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).