Submitted: 20 April 2025

Posted: 21 April 2025

Abstract

Keywords:

1. Introduction

1.1. Related Work

1.1.1. Traditional Segmentation Methods

1.1.2. Deep Learning-Based Methods

1.2. Motivations

1.3. Contributions

- (1).

- Spatially Ordered Sequence Perspective: We innovatively propose the idea of spatially ordered sequences, where different elevation points at the same planar position can be viewed as a sequence from low to high, with the sequence values containing elevation information. This operates point cloud semantic segmentation as a sequence generation task of the same length, providing a new way to process point cloud data.

- (2).

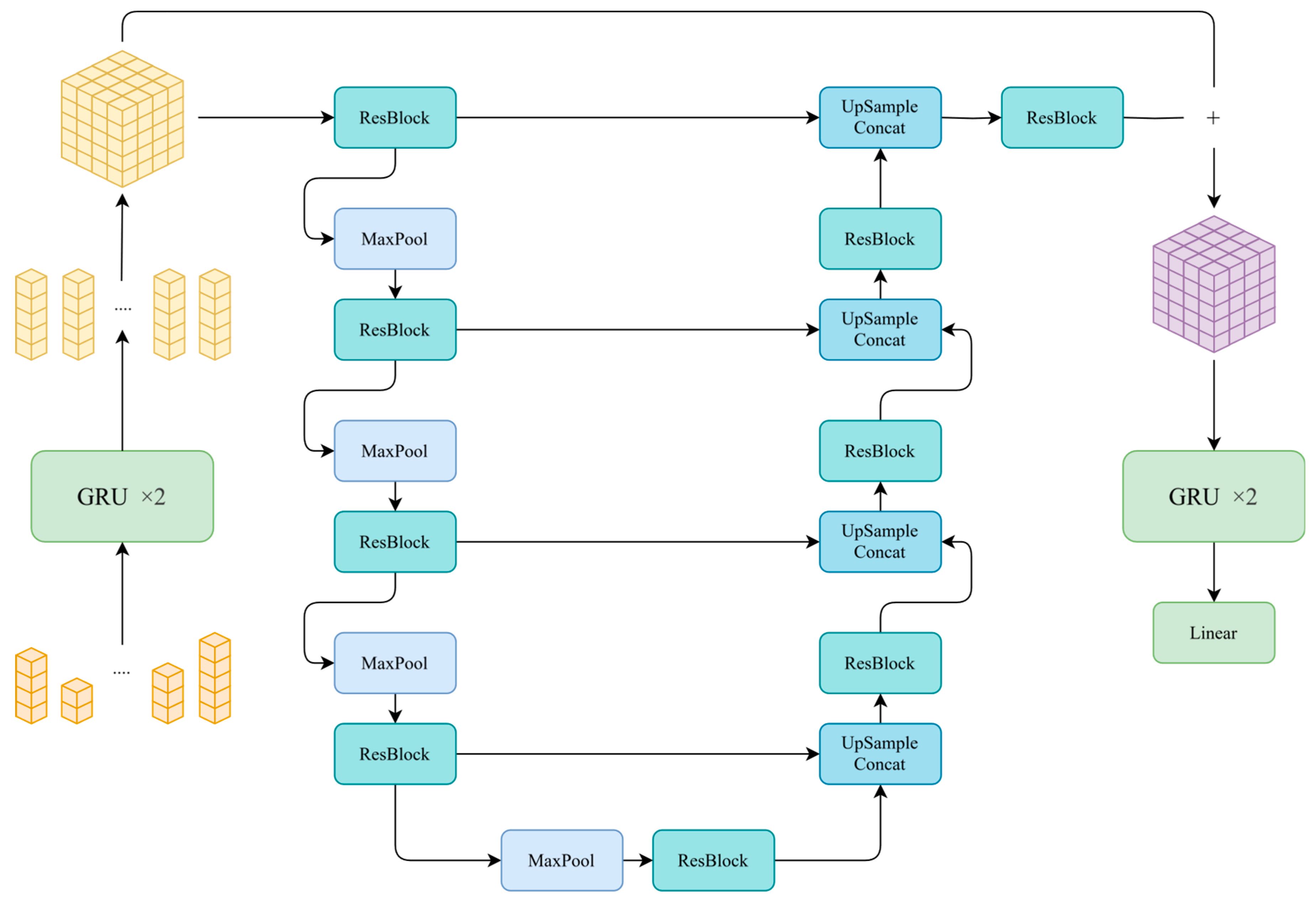

- SeqConv-Net Point Cloud Semantic Segmentation Architecture: Based on the spatially ordered sequence perspective, we design an RNN+CNN point cloud semantic segmentation architecture called SeqConv-Net, and innovatively use RNN hidden states as CNN inputs to fuse planar spatial information.

- (3).

- Construction and Validation of SeqConv-Net: We design the first network based on the SeqConv-Net architecture and validate its feasibility. Experiments show that our SeqConv-Net design is not only efficient and reliable but also interpretable. Compared to previous methods, it significantly improves the speed of point cloud semantic segmentation in large scenes while maintaining accuracy.
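
The data flow described in contribution (2) can be illustrated with a toy sketch. It is not the authors' network. It assumes a plain Elman RNN with random, untrained weights, takes the final hidden state of each column as that cell's feature vector, and uses a 3x3 mean filter as a stand-in for a learned convolution. All function names, dimensions, and the sample sequences are hypothetical.

```python
import math
import random


def rnn_final_state(sequence, embed, w_in, w_rec):
    """Run a plain (Elman) RNN over one column and return its last hidden state."""
    hidden = [0.0] * len(w_rec)
    for idx in sequence:  # elevation indices, ordered low to high
        x = embed[idx]    # embedding lookup, as for token ids in NLP
        hidden = [
            math.tanh(
                sum(w * v for w, v in zip(w_in[j], x))
                + sum(w * h for w, h in zip(w_rec[j], hidden))
            )
            for j in range(len(w_rec))
        ]
    return hidden


def hidden_state_map(sequences, height, width, embed, w_in, w_rec):
    """Place each column's hidden state at its planar cell (H x W x C map).

    Cells without any points keep an all-zero feature vector.
    """
    channels = len(w_rec)
    grid = [[[0.0] * channels for _ in range(width)] for _ in range(height)]
    for (row, col), seq in sequences.items():
        grid[row][col] = rnn_final_state(seq, embed, w_in, w_rec)
    return grid


def mean_filter_3x3(grid):
    """Stand-in for a learned 3x3 convolution: zero-padded neighbourhood mean."""
    height, width, channels = len(grid), len(grid[0]), len(grid[0][0])
    out = [[[0.0] * channels for _ in range(width)] for _ in range(height)]
    for r in range(height):
        for c in range(width):
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        for k in range(channels):
                            out[r][c][k] += grid[rr][cc][k] / 9.0
    return out


def random_matrix(rows, cols, rng):
    """Small random weights; a trained model would learn these instead."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
EMBED_DIM, HIDDEN_DIM, MAX_INDEX = 4, 3, 8  # hypothetical sizes
embed = random_matrix(MAX_INDEX, EMBED_DIM, rng)
w_in = random_matrix(HIDDEN_DIM, EMBED_DIM, rng)
w_rec = random_matrix(HIDDEN_DIM, HIDDEN_DIM, rng)

# Two occupied planar cells on a 2 x 2 grid, each with its elevation sequence.
sequences = {(0, 0): [0, 2, 5], (1, 1): [0, 1]}
feature_map = hidden_state_map(sequences, 2, 2, embed, w_in, w_rec)
fused = mean_filter_3x3(feature_map)
```

After the filter, even the empty cell `(0, 1)` carries information from its occupied neighbours, which is the sense in which the planar step fuses the per-column elevation features.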

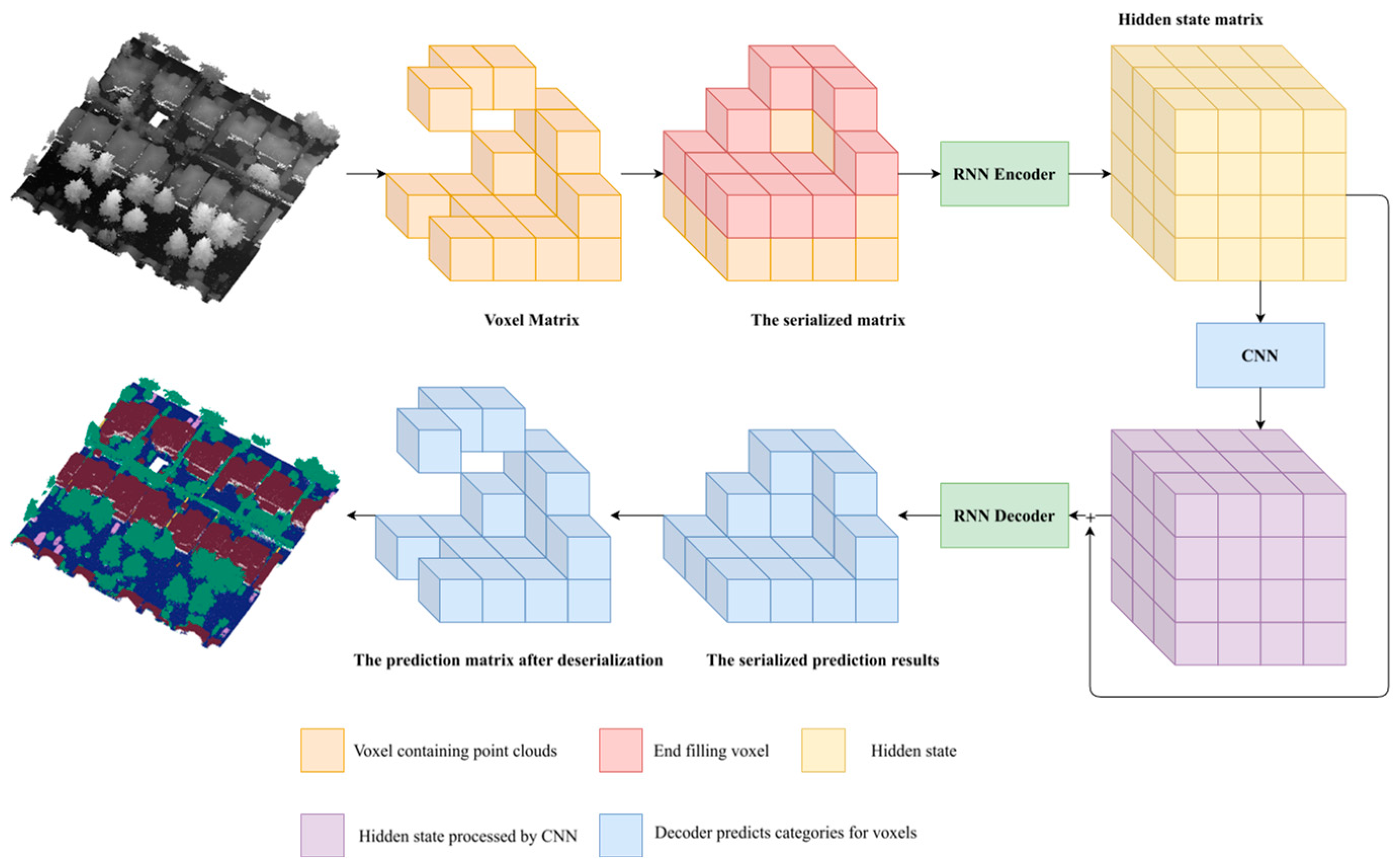

2. Point Cloud Semantic Segmentation Framework

2.1. Architecture Overview

2.2. Spatially Ordered Sequences

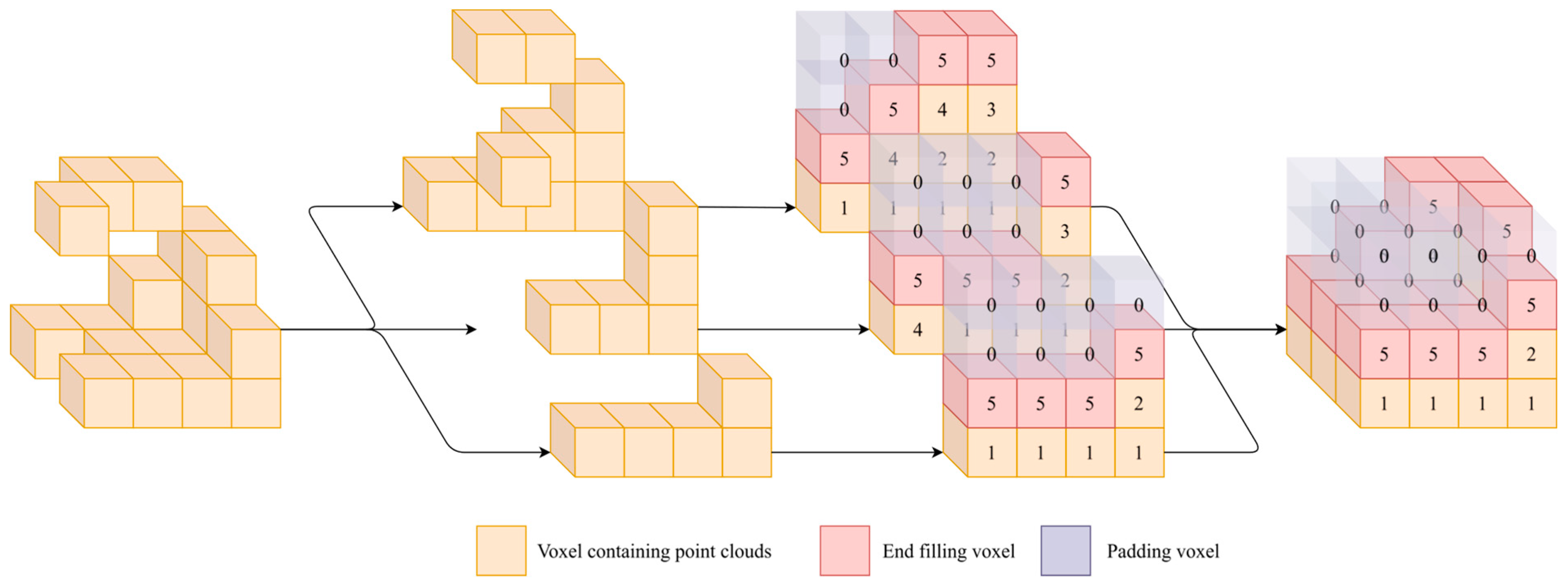

2.2.1. Spatially Ordered Sequences

- (1). Avoids issues with absolute elevation: Methods that use raw elevation coordinates can suffer from inconsistencies or added computational complexity when the elevation range varies or the data are noisy. Using indices as the elevation representation converts elevation information into relative positional relationships, which avoids the instability caused by absolute elevation values and improves model robustness.

- (2). Fast generation of spatially related sequences: The index-based elevation representation allows sequence generation to be accelerated with sorting algorithms. Sorting each sequence and using the indices of valid positions as input produces spatial sequences quickly. This serialized representation is efficient to process and significantly reduces preprocessing time compared with methods such as KNN, especially for large-scale point cloud data.

- (3). Utilizes NLP embedding methods: Because elevations are represented as integer indices, embedding techniques from NLP can be applied, mapping indices to high-dimensional vector spaces for computation. This embedding representation captures relationships between elevations and provides richer feature representations for deep learning models, enhancing their expressive power.

- (4). Efficient voxel-to-point cloud recovery: During prediction, the network sequentially outputs a prediction for each valid position, and the indices are used to restore the correspondence between predictions and the original voxels. This recovery process is computationally efficient and does not introduce additional losses.
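
Points (2) and (4) above can be sketched in a few lines. This is a minimal illustration, not the paper's generation algorithm. It assumes that points are binned into cubic voxels of a given resolution, that each planar cell keeps the sorted voxel indices of its occupied elevations, and that labels are restored by a dictionary lookup. The function names and sample points are hypothetical.

```python
from collections import defaultdict
from math import floor


def build_sequences(points, resolution):
    """Group points by planar cell and list each cell's occupied elevation indices.

    Returns the per-cell sequences (sorted low to high) and, for every input
    point, the voxel it fell into, so predictions can be mapped back later.
    """
    columns = defaultdict(set)
    voxel_of_point = []
    for x, y, z in points:
        cell = (floor(x / resolution), floor(y / resolution))
        iz = floor(z / resolution)
        columns[cell].add(iz)
        voxel_of_point.append((cell, iz))
    sequences = {cell: sorted(izs) for cell, izs in columns.items()}
    return sequences, voxel_of_point


def restore_point_labels(sequences, seq_predictions, voxel_of_point):
    """Map one prediction per sequence element back to the original points."""
    lookup = {}
    for cell, izs in sequences.items():
        for iz, label in zip(izs, seq_predictions[cell]):
            lookup[(cell, iz)] = label
    return [lookup[voxel] for voxel in voxel_of_point]


points = [(0.2, 0.3, 0.1), (0.4, 0.1, 2.6), (0.3, 0.2, 1.2), (1.7, 0.2, 0.4)]
sequences, voxel_of_point = build_sequences(points, resolution=0.5)

# Hypothetical network output: one label per element of each sequence.
seq_predictions = {
    (0, 0): ["ground", "vegetation", "powerline"],
    (3, 0): ["ground"],
}
point_labels = restore_point_labels(sequences, seq_predictions, voxel_of_point)
```

Here the three points over cell `(0, 0)` yield the sequence `[0, 2, 5]`, and each point recovers its label from the voxel it occupies. Only a grouping pass and a per-cell sort are needed, with no neighbour search.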

2.2.2. Generation Algorithm for Spatially Ordered Sequences

2.2.3. Differences Between Spatially Ordered Sequences and NLP Sequences

2.3. Spatial Information Processing

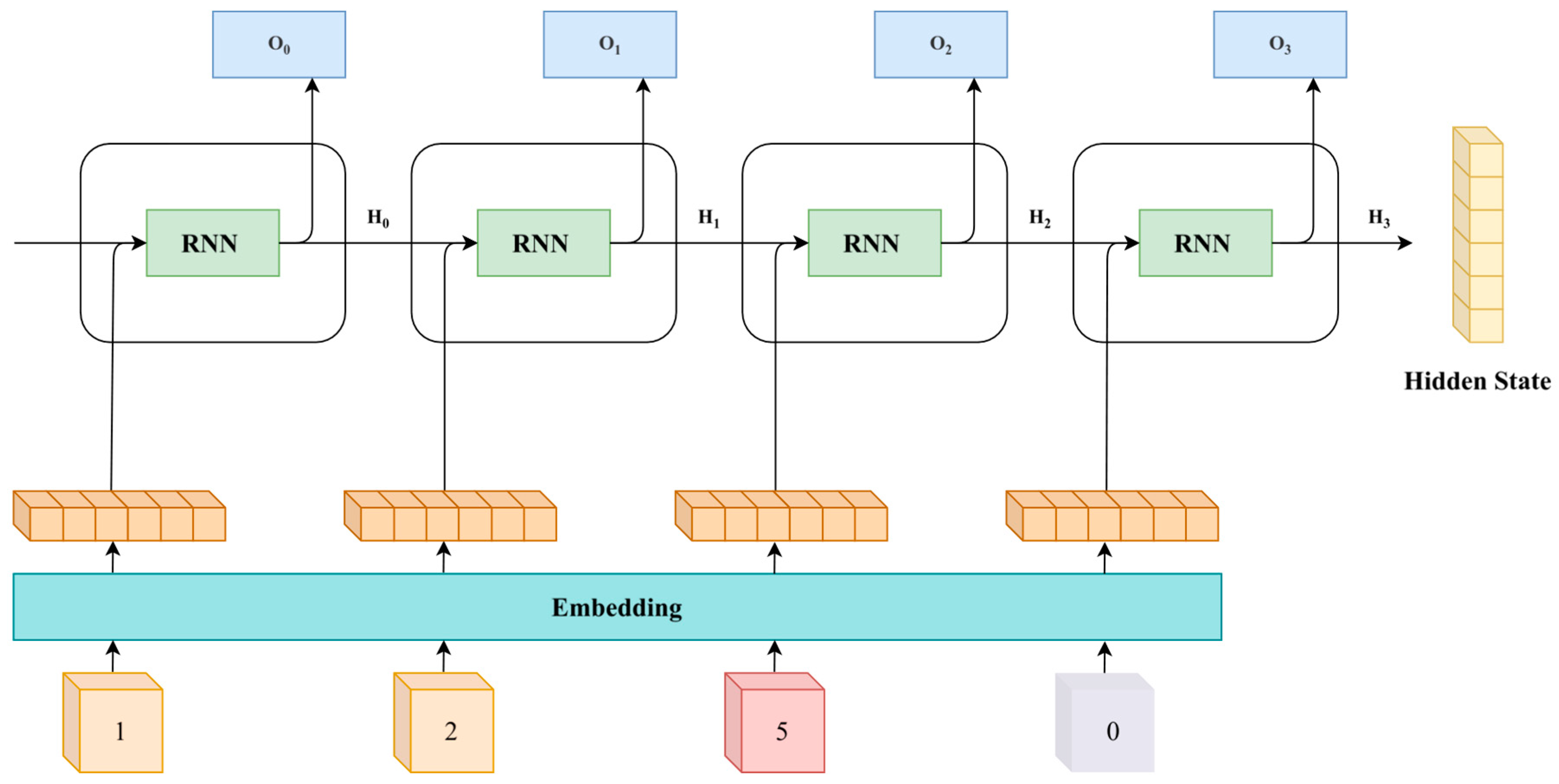

2.3.1. Elevation Information Extraction Using RNNs

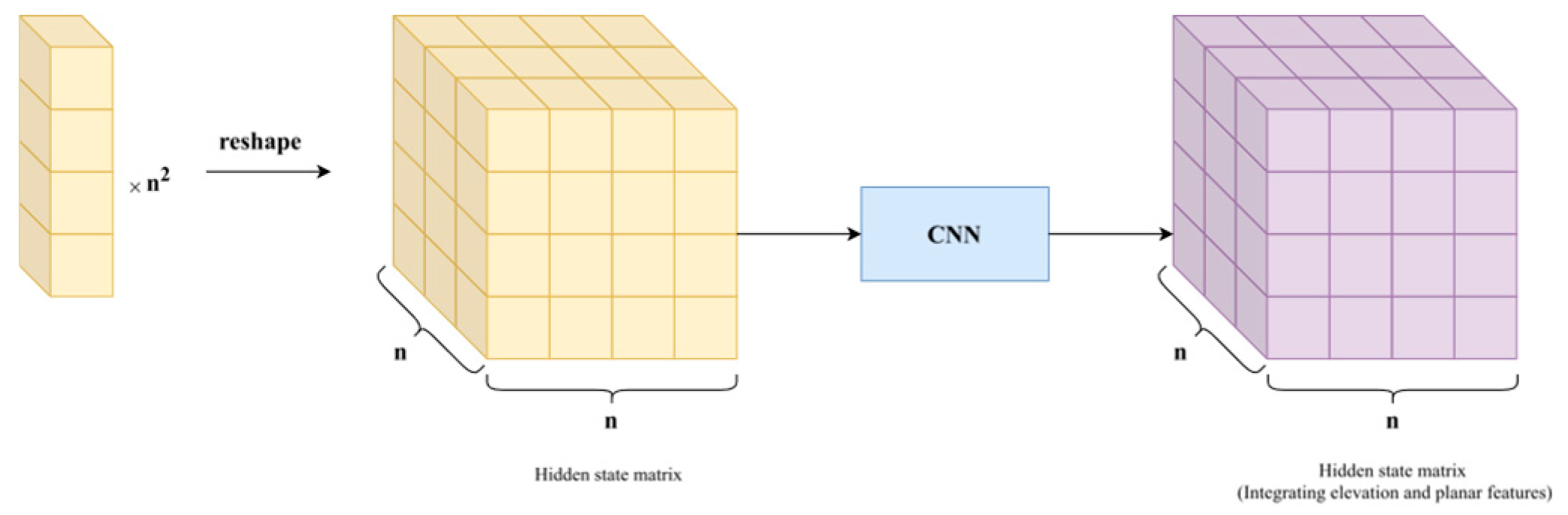

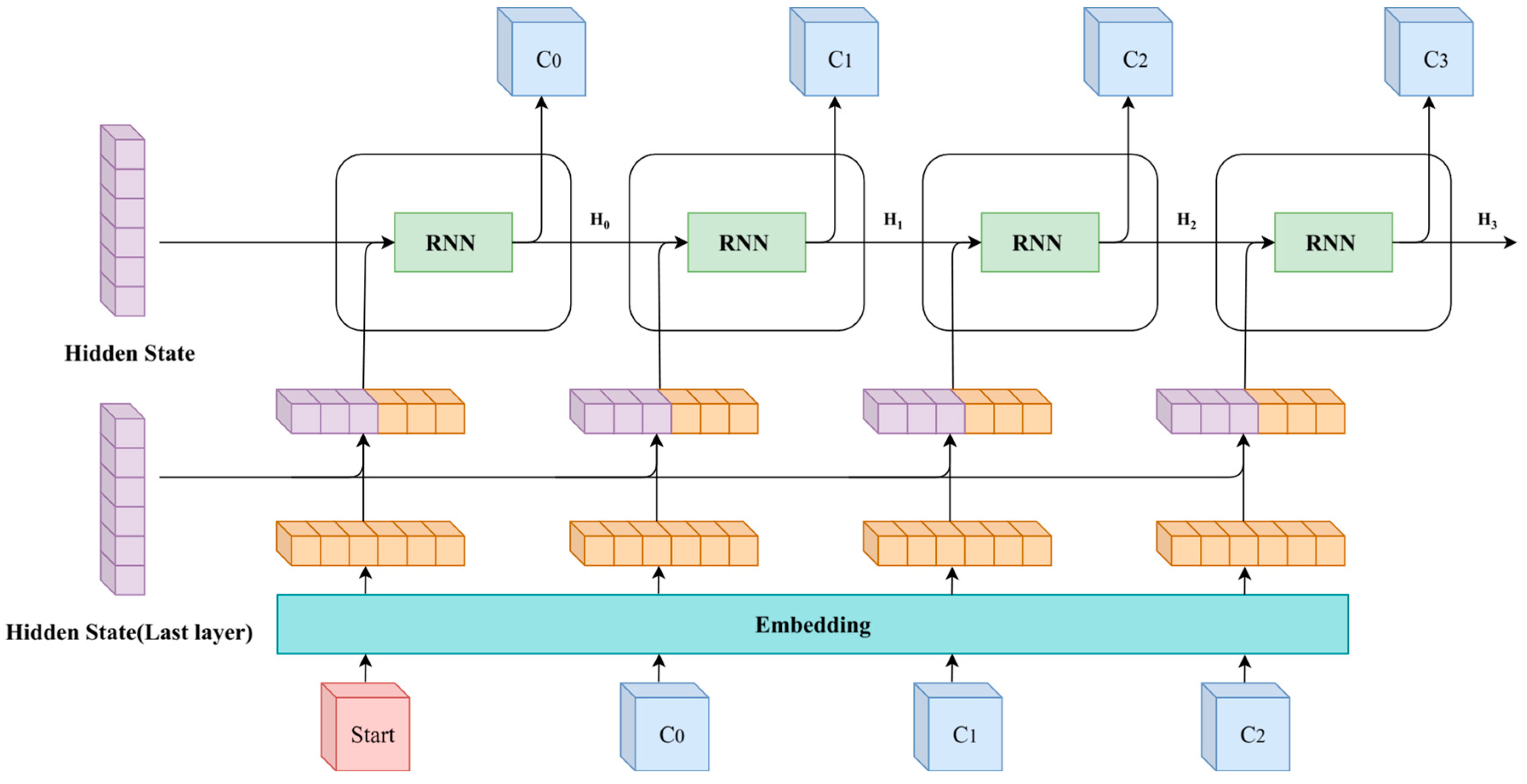

2.3.2. Planar Information Fusion and Extraction Using CNNs

2.3.3. Prediction

3. Experimental Results and Analysis

3.1. Data Preprocessing and Augmentation

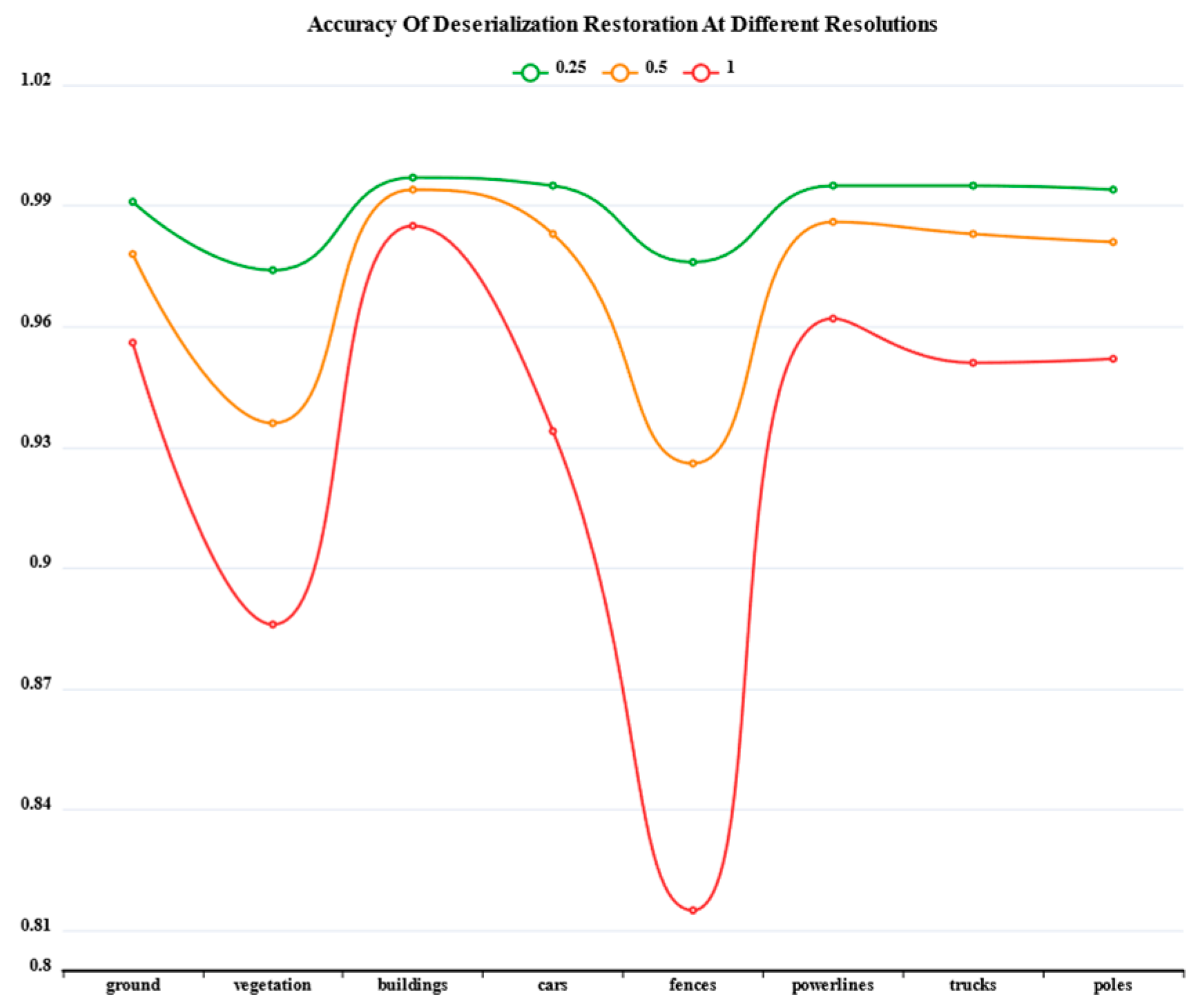

3.1.1. Sequence Loss

- (1). In terms of global accuracy, even at the coarsest resolution of one meter, the mIOU remains at 0.93.

- (2). The impact of serialization on object classification accuracy is within an acceptable range, and coarser resolutions (larger voxel sizes) lead to greater serialization loss.

- (3). The accuracy differences between object classes are mainly due to their inherent geometric features and spatial distribution characteristics, rather than to the serialization process itself.
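
One way to see where serialization loss comes from is a voxel round trip: assign each voxel a single label, map that label back to every point in the voxel, and compare with the original labels. The sketch below assumes a majority vote per voxel, which may differ from the authors' procedure. The function name and sample points are hypothetical.

```python
from collections import Counter, defaultdict
from math import floor


def voxel_round_trip(points, labels, resolution):
    """Give every point the majority label of the voxel it falls into."""
    votes = defaultdict(Counter)
    voxel_of_point = []
    for (x, y, z), label in zip(points, labels):
        voxel = (
            floor(x / resolution),
            floor(y / resolution),
            floor(z / resolution),
        )
        votes[voxel][label] += 1
        voxel_of_point.append(voxel)
    voxel_label = {v: c.most_common(1)[0][0] for v, c in votes.items()}
    return [voxel_label[v] for v in voxel_of_point]


# One ground point directly below two car points.
points = [(0.1, 0.1, 0.1), (0.1, 0.1, 0.6), (0.1, 0.1, 0.7)]
labels = ["ground", "car", "car"]

coarse = voxel_round_trip(points, labels, resolution=1.0)
fine = voxel_round_trip(points, labels, resolution=0.25)
```

At 1 m all three points share one voxel, so the ground point is relabelled as car. At 0.25 m the ground point has its own voxel and every label survives. This matches the trend that coarser voxels lose more label detail.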

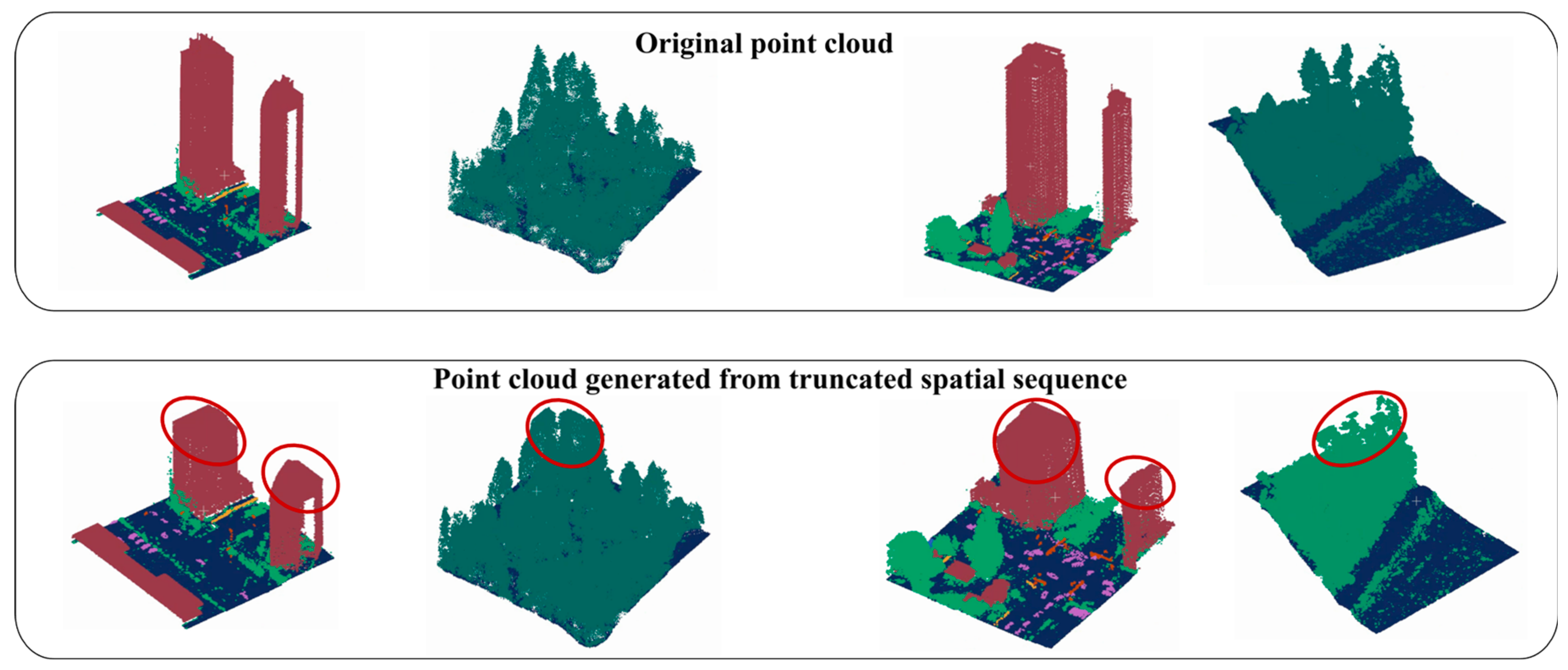

3.1.2. Elevation Truncation

3.2. Experiments

3.2.1. Network Structure

3.2.2. Elevation Embedding

3.2.3. Implementation Details and Evaluation Metrics

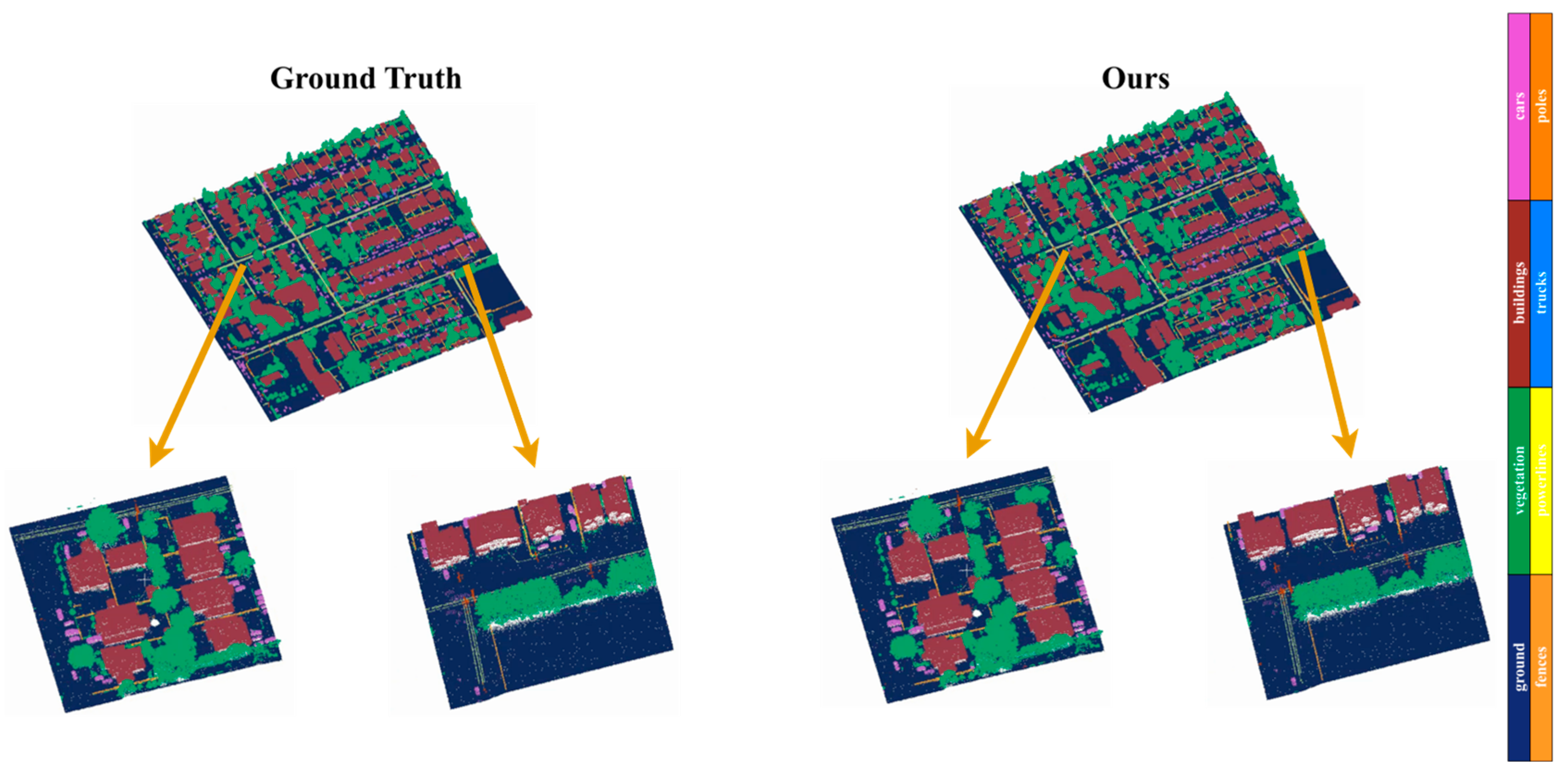

3.2.4. Results on the DALES Dataset

3.3. Ablation Studies

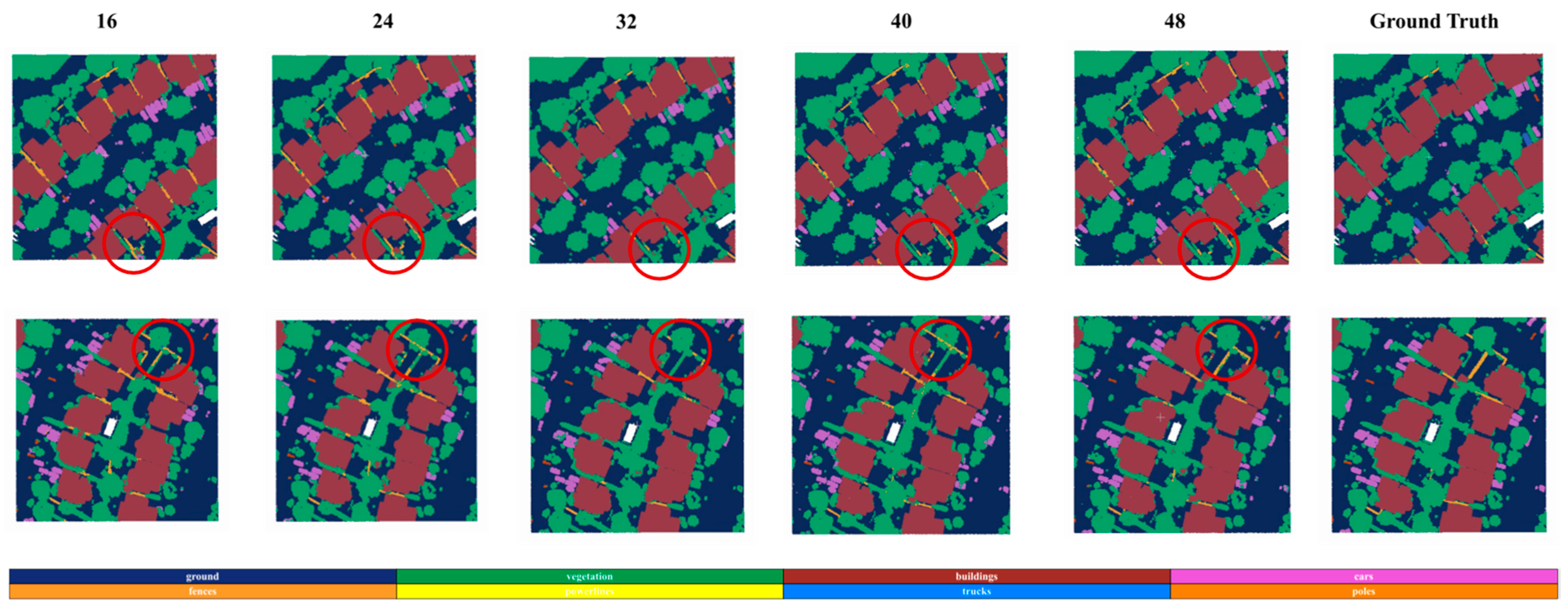

3.3.1. Hidden State Dimension

| Hidden state dimension | ground | vegetation | buildings | cars | trucks | poles | powerlines | fences | mIOU | speed |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 16 | 0.942 | 0.839 | 0.930 | 0.655 | 0.396 | 0.679 | 0.607 | 0.406 | 0.682 | 4.05s |
| 24 | 0.950 | 0.851 | 0.931 | 0.685 | 0.412 | 0.681 | 0.658 | 0.453 | 0.702 | 4.26s |
| 32 | 0.953 | 0.870 | 0.932 | 0.732 | 0.453 | 0.704 | 0.717 | 0.495 | 0.732 | 5.56s |
| 40 | 0.952 | 0.896 | 0.932 | 0.763 | 0.522 | 0.701 | 0.704 | 0.501 | 0.747 | 6.02s |
| 48 | 0.964 | 0.919 | 0.934 | 0.751 | 0.532 | 0.706 | 0.724 | 0.511 | 0.755 | 8.01s |
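
The mIOU column is the unweighted mean of the eight per-class IoU values. The sketch below uses the standard IoU definition, TP / (TP + FP + FN), and checks that each table row agrees with its reported mIOU to within rounding. The helper names and toy labels are hypothetical. The row values are copied from the table above.

```python
def per_class_iou(true_labels, pred_labels, classes):
    """IoU per class: TP / (TP + FP + FN), computed over paired label lists."""
    pairs = list(zip(true_labels, pred_labels))
    ious = {}
    for cls in classes:
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        fn = sum(1 for t, p in pairs if t == cls and p != cls)
        union = tp + fp + fn
        ious[cls] = tp / union if union else float("nan")
    return ious


def mean_iou(values):
    """Unweighted mean over classes."""
    values = list(values)
    return sum(values) / len(values)


# Toy example: one ground point is mistaken for a car.
toy = per_class_iou(
    ["ground", "ground", "car", "car"],
    ["ground", "car", "car", "car"],
    classes=["ground", "car"],
)

# Per-class IoU and reported mIOU for each hidden state dimension.
TABLE_ROWS = {
    16: ([0.942, 0.839, 0.930, 0.655, 0.396, 0.679, 0.607, 0.406], 0.682),
    24: ([0.950, 0.851, 0.931, 0.685, 0.412, 0.681, 0.658, 0.453], 0.702),
    32: ([0.953, 0.870, 0.932, 0.732, 0.453, 0.704, 0.717, 0.495], 0.732),
    40: ([0.952, 0.896, 0.932, 0.763, 0.522, 0.701, 0.704, 0.501], 0.747),
    48: ([0.964, 0.919, 0.934, 0.751, 0.532, 0.706, 0.724, 0.511], 0.755),
}
max_gap = max(abs(mean_iou(ious) - reported) for ious, reported in TABLE_ROWS.values())
```

The largest gap between a recomputed mean and the reported value stays below 0.001, which is consistent with the per-class figures having been rounded to three decimals.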

3.3.2. Spatial Sequence Resolution

| Resolution (m) | ground | vegetation | buildings | cars | trucks | poles | powerlines | fences | mIOU | speed |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 0.943 | 0.839 | 0.930 | 0.512 | 0.214 | 0.682 | 0.651 | 0.210 | 0.622 | 1.51s |
| 0.5 | 0.953 | 0.870 | 0.932 | 0.732 | 0.453 | 0.704 | 0.717 | 0.495 | 0.732 | 8.01s |
| 0.25 | 0.955 | 0.865 | 0.925 | 0.713 | 0.421 | 0.692 | 0.653 | 0.512 | 0.717 | 30.9s |

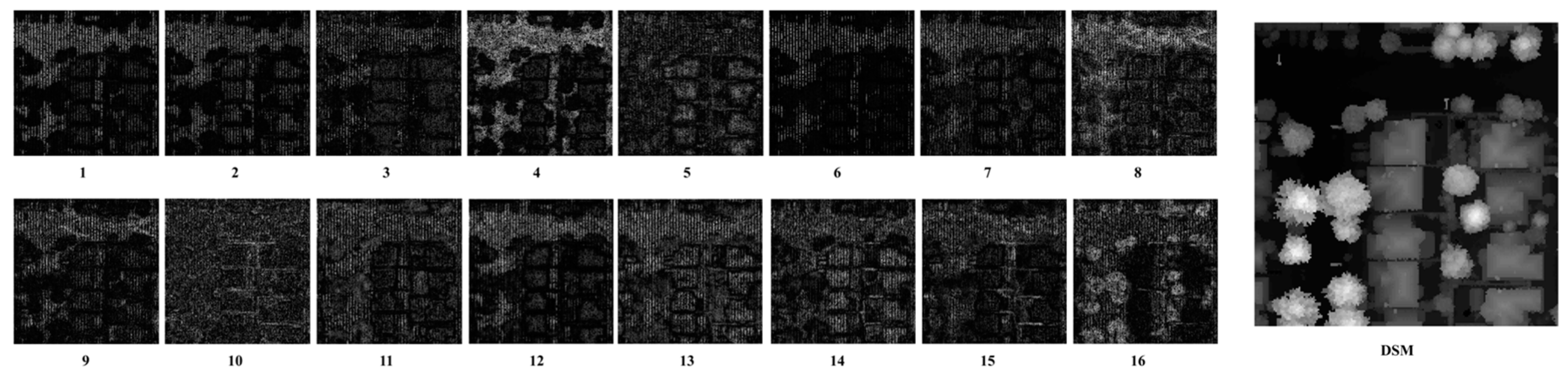

3.3.3. Exploring Hidden Variables

- (1). The focus of different channels on specific object features indicates that the model does not simply encode elevations. Through the CNN it learns to perceive spatial information, which in turn lets it learn how to separate features.

- (2). The complementary feature distribution across channels shows that the model achieves effective information decomposition and reorganization, not just simple encoding.

- (3). The correspondence between features and the DSM confirms that the hidden variable mapping process has practical physical significance.

4. Conclusions

Funding

References

- J. A. K. Suykens and J. Vandewalle, "Least squares support vector machine classifiers," Neural Processing Letters, vol. 9, pp. 293–300, 1999.

- V. Svetnik et al., "Random forest: A classification and regression tool for compound classification and QSAR modeling," J. Chem. Inf. Comput. Sci., vol. 43, no. 6, pp. 1947–1958, 2003.

- C. R. Qi, H. Su, K. Mo, and L. J. Guibas, "PointNet: Deep learning on point sets for 3D classification and segmentation," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2017, pp. 652–660.

- Y. Wang et al., "Dynamic graph CNN for learning on point clouds," ACM Trans. Graph., vol. 38, no. 5, pp. 1–12, 2019.

- H. Thomas et al., "KPConv: Flexible and deformable convolution for point clouds," in Proc. IEEE/CVF Int. Conf. Comput. Vis. (ICCV), 2019, pp. 6411–6420.

- D. Robert, H. Raguet, and L. Landrieu, "Efficient 3D semantic segmentation with superpoint transformer," in Proc. IEEE/CVF Int. Conf. Comput. Vis. (ICCV), 2023, pp. 17195–17204.

- M. Pauly, R. Keiser, and M. Gross, "Multi-scale feature extraction on point-sampled surfaces," in Comput. Graph. Forum, vol. 22, no. 3, pp. 281–289, Sep. 2003.

- T. Rabbani, F. Van Den Heuvel, and G. Vosselmann, "Segmentation of point clouds using smoothness constraint," in Int. Arch. Photogramm., Remote Sens. Spatial Inf. Sci., vol. 36, no. 5, pp. 248–253, 2006.

- A. Golovinskiy and T. Funkhouser, "Min-cut based segmentation of point clouds," in Proc. IEEE 12th Int. Conf. Comput. Vis. Workshops (ICCV Workshops), 2009, pp. 39–46.

- R. Schnabel, R. Wahl, and R. Klein, "Efficient RANSAC for point-cloud shape detection," in Comput. Graph. Forum, vol. 26, no. 2, pp. 214–226, Jun. 2007.

- J. Papon et al., "Voxel cloud connectivity segmentation-supervoxels for point clouds," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2013, pp. 2027–2034.

- R. B. Rusu et al., "Functional object mapping of kitchen environments," in Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst. (IROS), 2008, pp. 3525–3532.

- Y. Guo et al., "3D object recognition in cluttered scenes with local surface features: A survey," IEEE Trans. Pattern Anal. Mach. Intell., vol. 36, no. 11, pp. 2270–2287, Nov. 2014.

- K. Lai, L. Bo, X. Ren, and D. Fox, "A large-scale hierarchical multi-view RGB-D object dataset," in Proc. IEEE Int. Conf. Robot. Autom. (ICRA), 2011, pp. 1817–1824.

- C. R. Qi, L. Yi, H. Su, and L. J. Guibas, "PointNet++: Deep hierarchical feature learning on point sets in a metric space," in Adv. Neural Inf. Process. Syst., vol. 30, 2017.

- Q. Hu et al., "RandLA-Net: Efficient semantic segmentation of large-scale point clouds," in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), 2020, pp. 11108–11117.

- Y. Su et al., "DLA-Net: Learning dual local attention features for semantic segmentation of large-scale building facade point clouds," Pattern Recognit., vol. 123, p. 108372, 2022.

- W. Wu, Z. Qi, and L. Fuxin, "PointConv: Deep convolutional networks on 3D point clouds," in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), 2019, pp. 9621–9630.

- B. Wu et al., "SqueezeSeg: Convolutional neural nets with recurrent CRF for real-time road-object segmentation from 3D LiDAR point cloud," in Proc. IEEE Int. Conf. Robot. Autom. (ICRA), 2018, pp. 1887–1893.

- A. Milioto et al., "RangeNet++: Fast and accurate LiDAR semantic segmentation," in Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst. (IROS), 2019, pp. 4213–4220.

- H. Su, S. Maji, E. Kalogerakis, and E. Learned-Miller, "Multi-view convolutional neural networks for 3D shape recognition," in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), 2015, pp. 945–953.

- T. Le and Y. Duan, "PointGrid: A deep network for 3D shape understanding," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2018, pp. 9204–9214.

- H. Y. Meng et al., "VV-Net: Voxel VAE net with group convolutions for point cloud segmentation," in Proc. IEEE/CVF Int. Conf. Comput. Vis. (ICCV), 2019, pp. 8500–8508.

- B. Graham, M. Engelcke, and L. Van Der Maaten, "3D semantic segmentation with submanifold sparse convolutional networks," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2018, pp. 9224–9232.

- Z. Liu, H. Tang, Y. Lin, and S. Han, "Point-voxel CNN for efficient 3D deep learning," in Adv. Neural Inf. Process. Syst., vol. 32, 2019.

- F. Zhang et al., "Deep FusionNet for point cloud semantic segmentation," in Comput. Vis. – ECCV 2020, pp. 644–663, 2020.

- H. Zhao et al., "Point transformer," in Proc. IEEE/CVF Int. Conf. Comput. Vis. (ICCV), 2021, pp. 16259–16268.

- M. H. Guo et al., "PCT: Point cloud transformer," Comput. Vis. Media, vol. 7, pp. 187–199, 2021.

- G. Qian et al., "PointNeXt: Revisiting PointNet++ with improved training and scaling strategies," in Adv. Neural Inf. Process. Syst., vol. 35, pp. 23192–23204, 2022.

- J. L. Elman, "Finding structure in time," Cogn. Sci., vol. 14, no. 2, pp. 179–211, 1990.

- Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, "Gradient-based learning applied to document recognition," Proc. IEEE, vol. 86, no. 11, pp. 2278–2324, Nov. 1998.

- K. He, X. Zhang, S. Ren, and J. Sun, "Deep residual learning for image recognition," in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2016, pp. 770–778.

- I. Sutskever, O. Vinyals, and Q. V. Le, "Sequence to sequence learning with neural networks," in Adv. Neural Inf. Process. Syst., vol. 27, 2014.

- N. Varney, V. K. Asari, and Q. Graehling, "DALES: A large-scale aerial LiDAR data set for semantic segmentation," in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. Workshops (CVPRW), 2020, pp. 186–187.

- K. Cho et al., "Learning phrase representations using RNN encoder-decoder for statistical machine translation," arXiv preprint arXiv:1406.1078, 2014.

- S. Hochreiter and J. Schmidhuber, "Long short-term memory," Neural Comput., vol. 9, no. 8, pp. 1735–1780, 1997.

- O. Ronneberger, P. Fischer, and T. Brox, "U-Net: Convolutional networks for biomedical image segmentation," in Med. Image Comput. Comput.-Assist. Intervent. (MICCAI), 2015, pp. 234–241.

- T. Mikolov, K. Chen, G. Corrado, and J. Dean, "Efficient estimation of word representations in vector space," arXiv preprint arXiv:1301.3781, 2013.

- J. Pennington, R. Socher, and C. D. Manning, "GloVe: Global vectors for word representation," in Proc. Conf. Empir. Methods Nat. Lang. Process. (EMNLP), 2014, pp. 1532–1543.

- A. Vaswani et al., "Attention is all you need," in Adv. Neural Inf. Process. Syst., vol. 30, 2017.

- L. C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, "Encoder-decoder with atrous separable convolution for semantic image segmentation," in Proc. Eur. Conf. Comput. Vis. (ECCV), 2018, pp. 801–818.

- A. Dosovitskiy et al., "An image is worth 16x16 words: Transformers for image recognition at scale," arXiv preprint arXiv:2010.11929, 2020.

| Resolution (m) | ground | vegetation | buildings | cars | fences | powerlines | trucks | poles | mIOU |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0.25 | 0.991 | 0.974 | 0.997 | 0.995 | 0.976 | 0.995 | 0.995 | 0.994 | 0.990 |
| 0.5 | 0.978 | 0.936 | 0.994 | 0.983 | 0.926 | 0.986 | 0.983 | 0.981 | 0.971 |
| 1 | 0.956 | 0.886 | 0.985 | 0.934 | 0.815 | 0.962 | 0.951 | 0.952 | 0.930 |

| Truncation | ground | vegetation | cars | trucks | powerlines | fences | poles | buildings | mIOU |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| NO | 0.915 | 0.943 | 0.927 | 0.977 | 0.955 | 0.828 | 0.973 | 0.990 | 0.938 |
| YES | 0.915 | 0.943 | 0.927 | 0.977 | 0.955 | 0.828 | 0.973 | 0.990 | 0.938 |

| Method | Input points | mIOU | Speed |
| --- | --- | --- | --- |
| PointNet++ | 8192 | 0.683 | 726.6s |
| KPConv | 8192 | 0.726 | 186.9s |
| DGCNN | 8192 | 0.665 | 203.2s |
| PointCNN | 8192 | 0.584 | - |
| SPG | 8192 | 0.606 | - |
| ConvPoint | 8192 | 0.674 | - |
| PointTransformer | 8192 | 0.749 | 698.7s |
| PReFormer | 8192 | 0.709 | - |
| PointMamba | 8192 | 0.733 | 90.7s |
| PointCloudMamba | 8192 | 0.747 | 115.6s |
| Ours | - | 0.755 | 4.01s |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).