Submitted: 18 March 2025

Posted: 18 March 2025


Abstract

Keywords:

1. Introduction

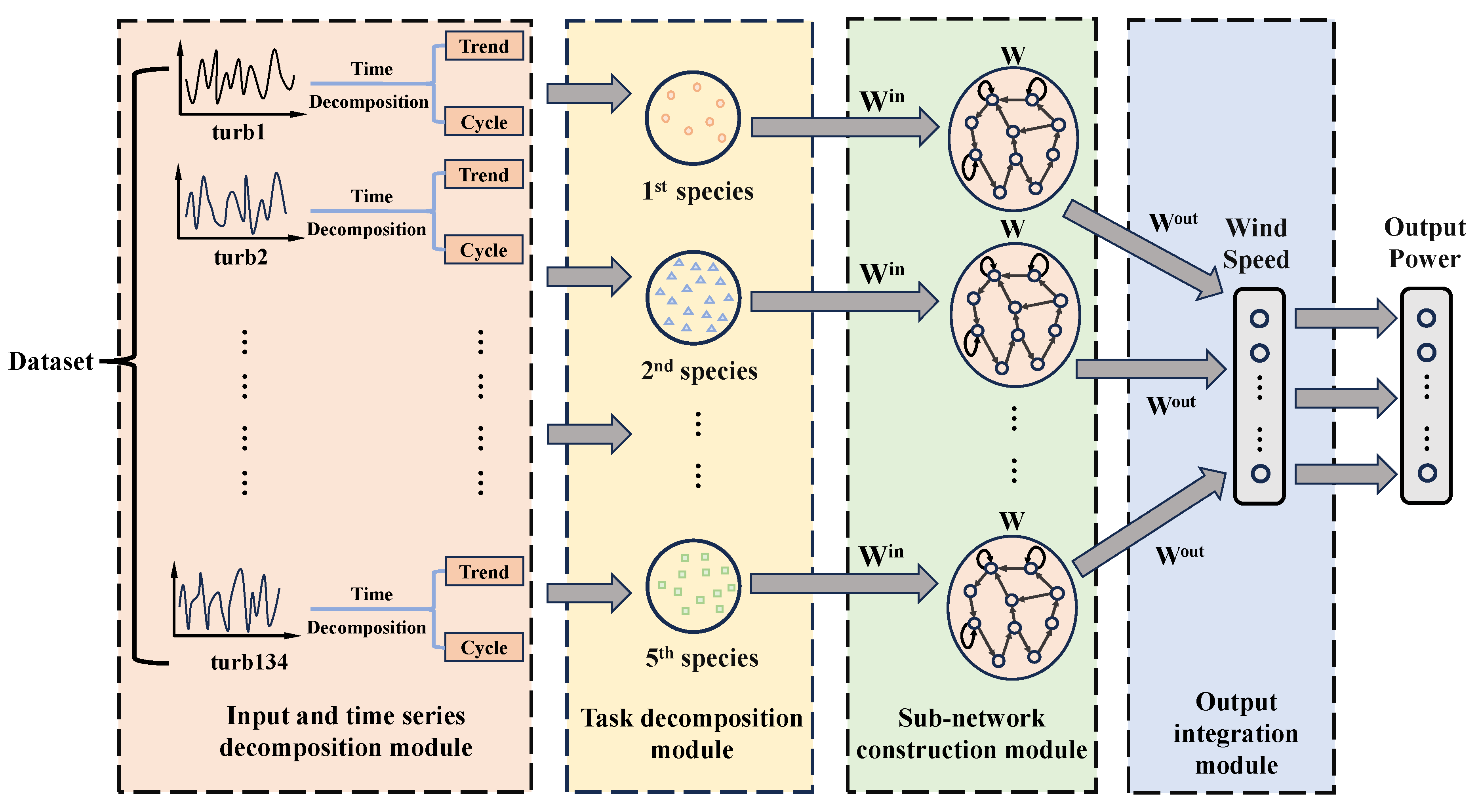

- A modular neural network model for wind energy prediction is proposed. It makes predictions based on different data features, which solves the problem of task allocation.

- In the output integration module, a novel integration algorithm is proposed to integrate the data assigned to different tasks.

- The proposed wind speed prediction model is applied to wind energy prediction. In-depth analysis and experiments show that the proposed method improves prediction accuracy.

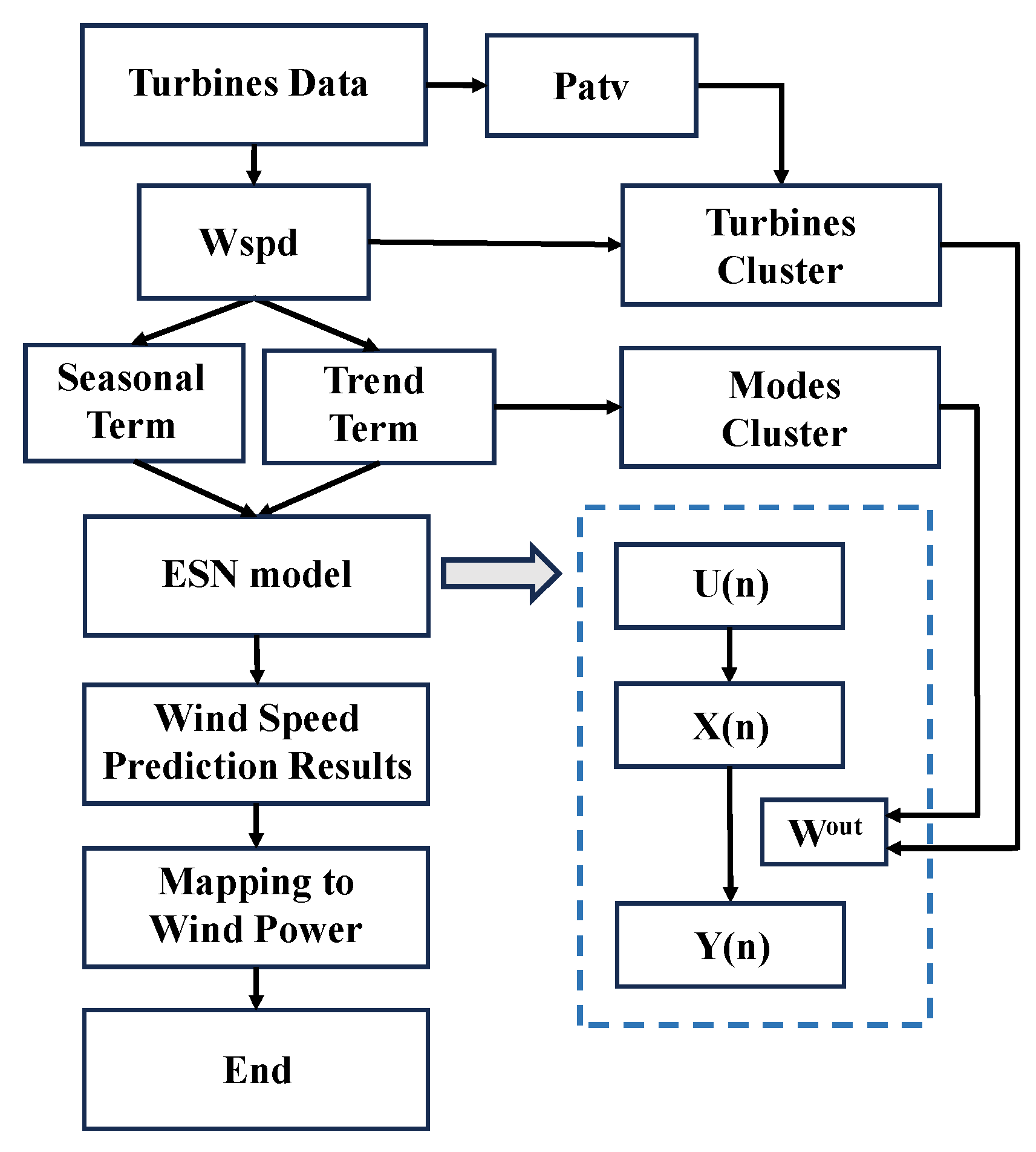

2. Construction of MESN

2.1. Input and Time Series Decomposition Module

2.2. Task Decomposition Module

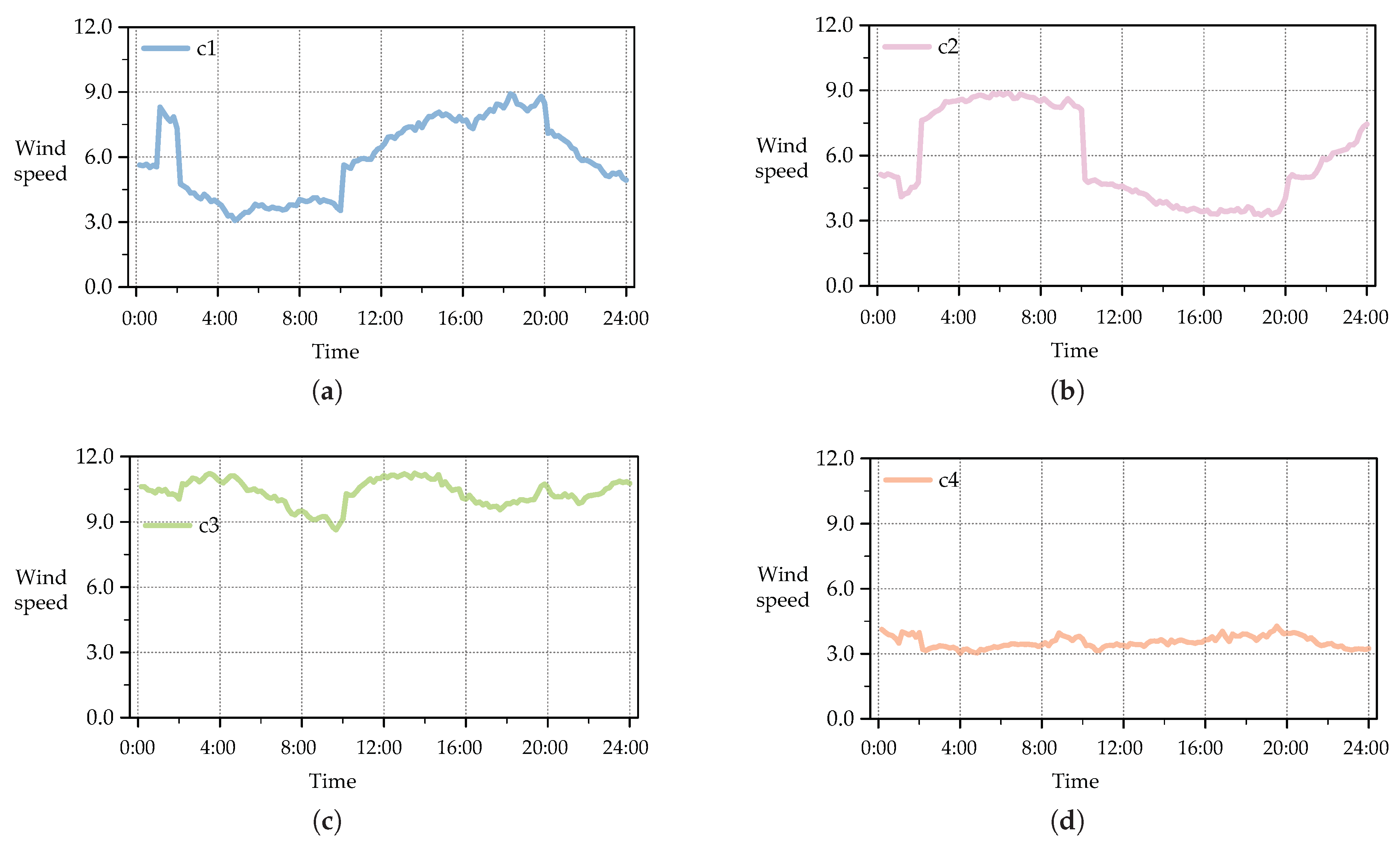

2.2.1. Modes-Cluster

2.2.2. Turbines-Cluster

2.3. Sub-Network Construction Module

2.4. Output Integration Module

Algorithm 1: Algorithm of MESN

3. Experiments

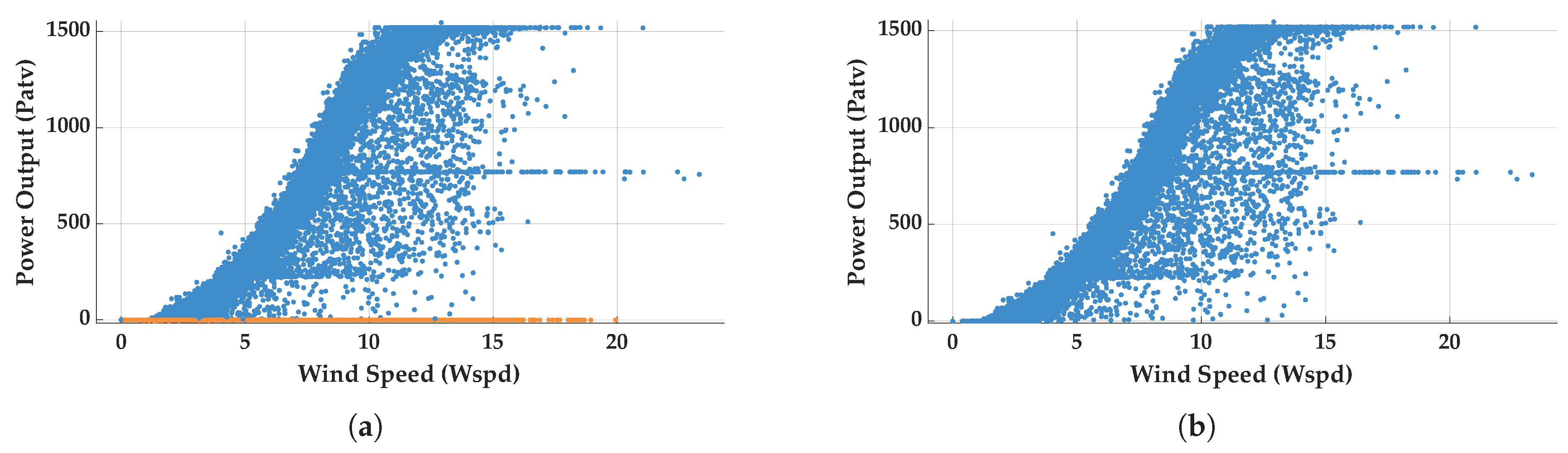

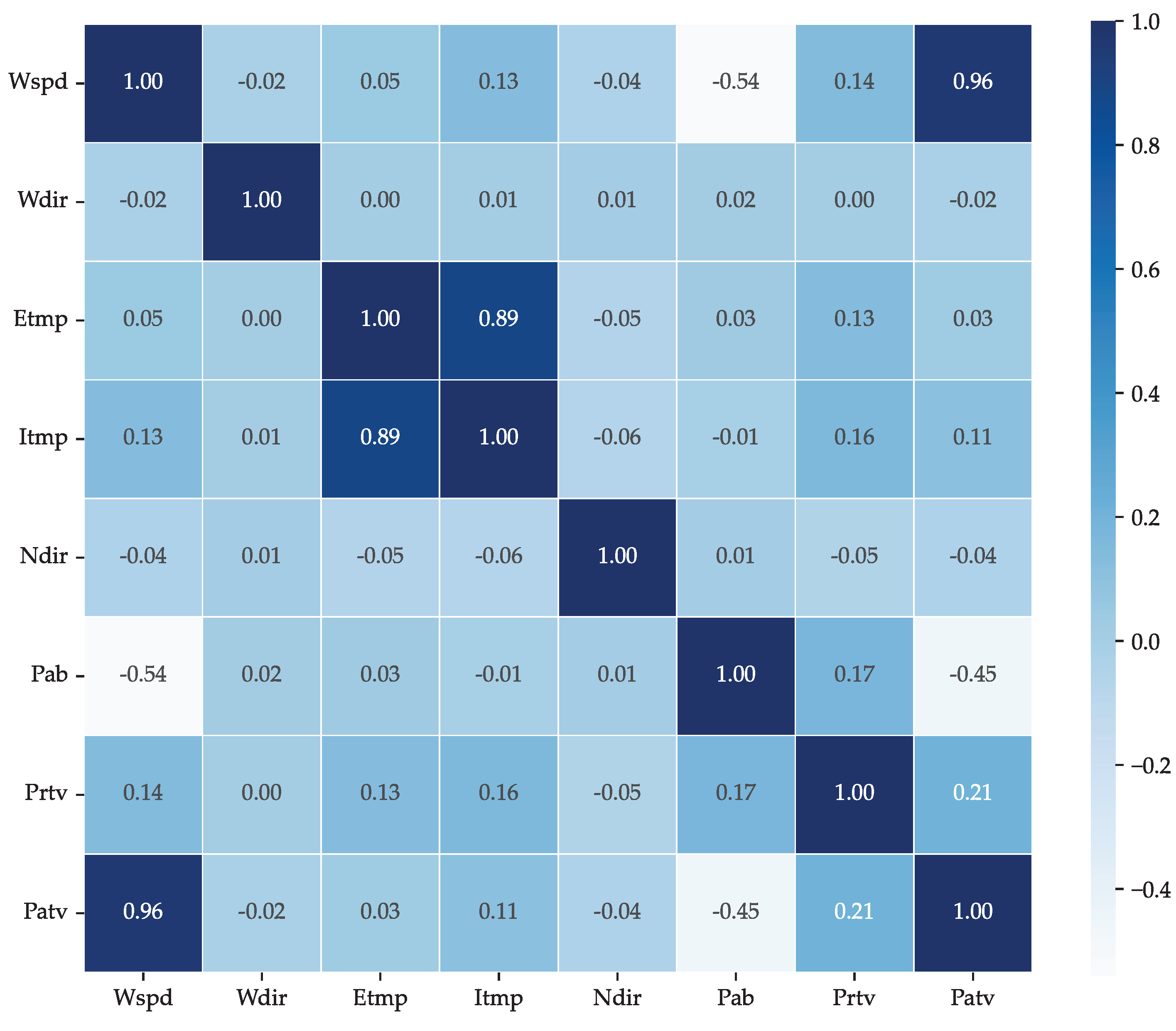

3.1. Data Pre-processing and Anomaly Detection

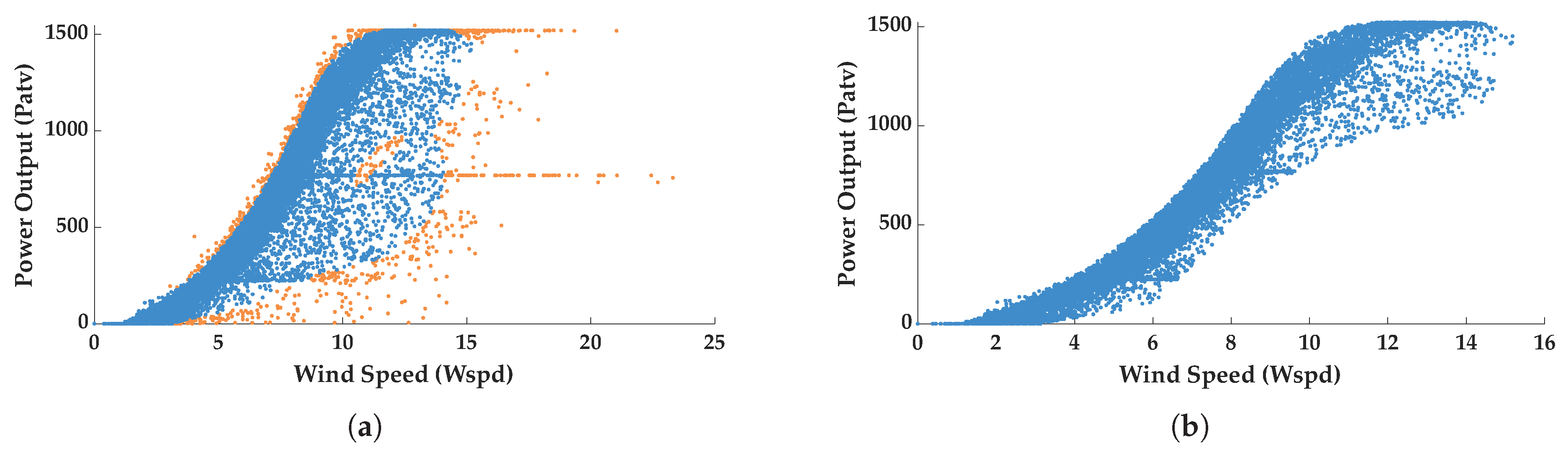

3.2. Cluster Analysis

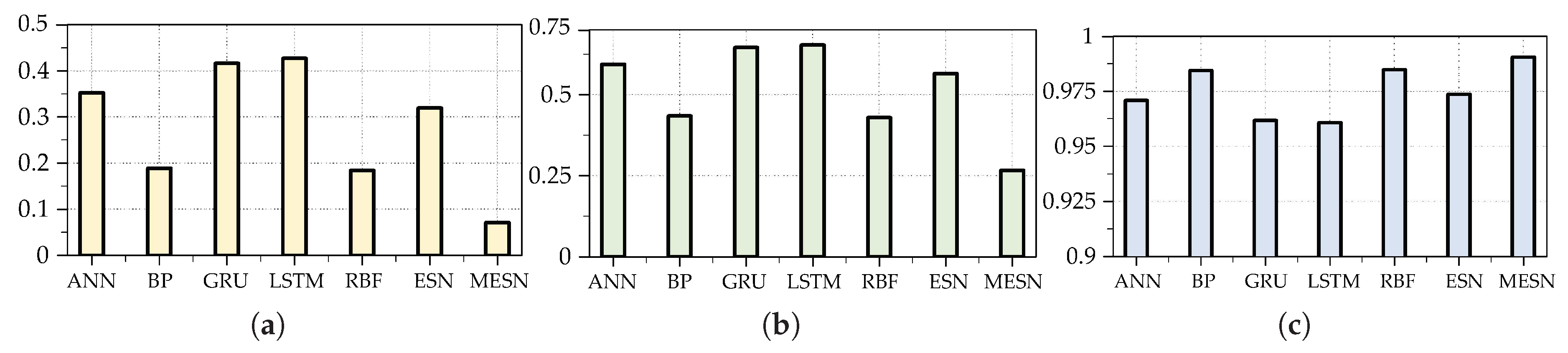

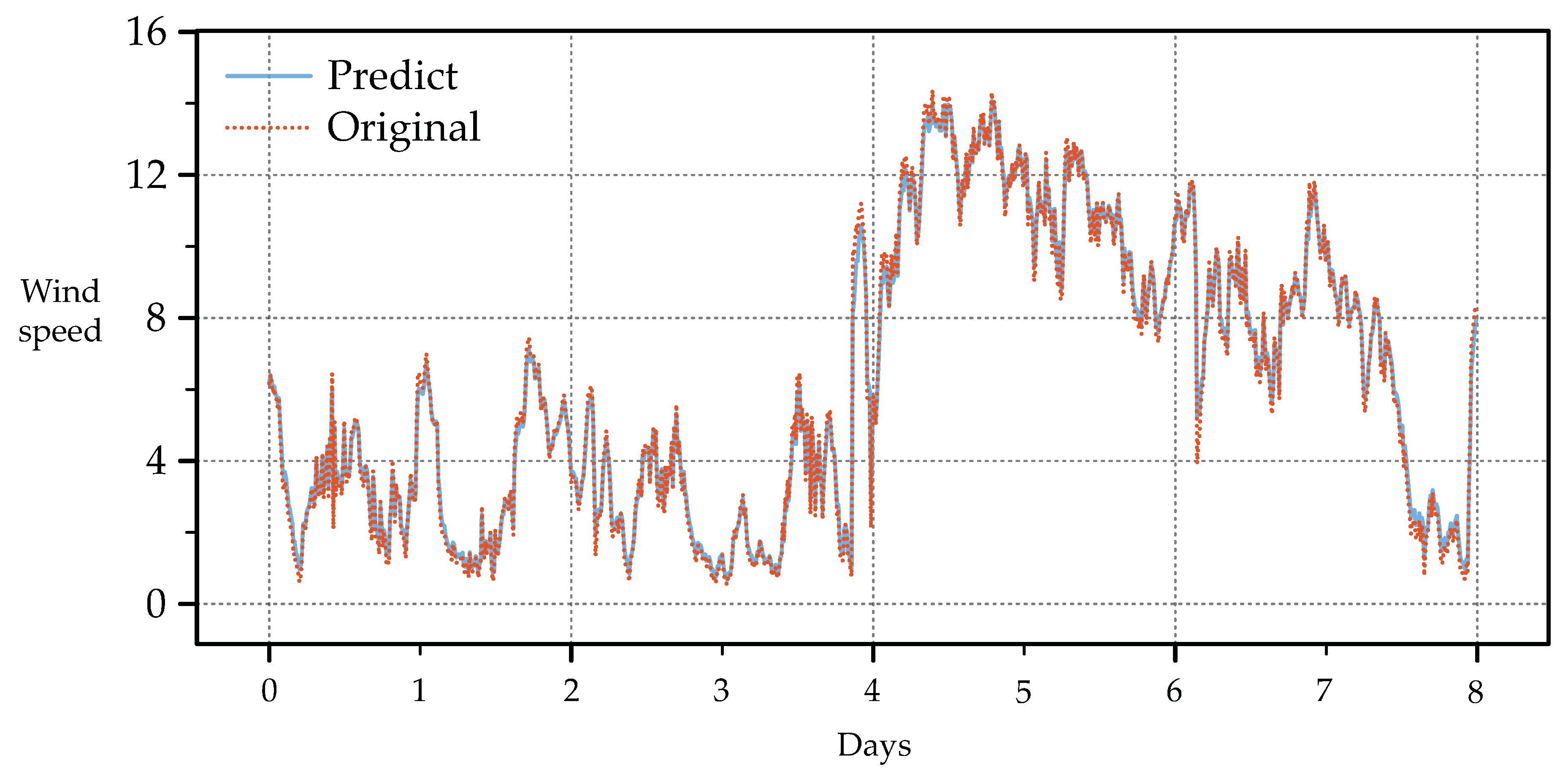

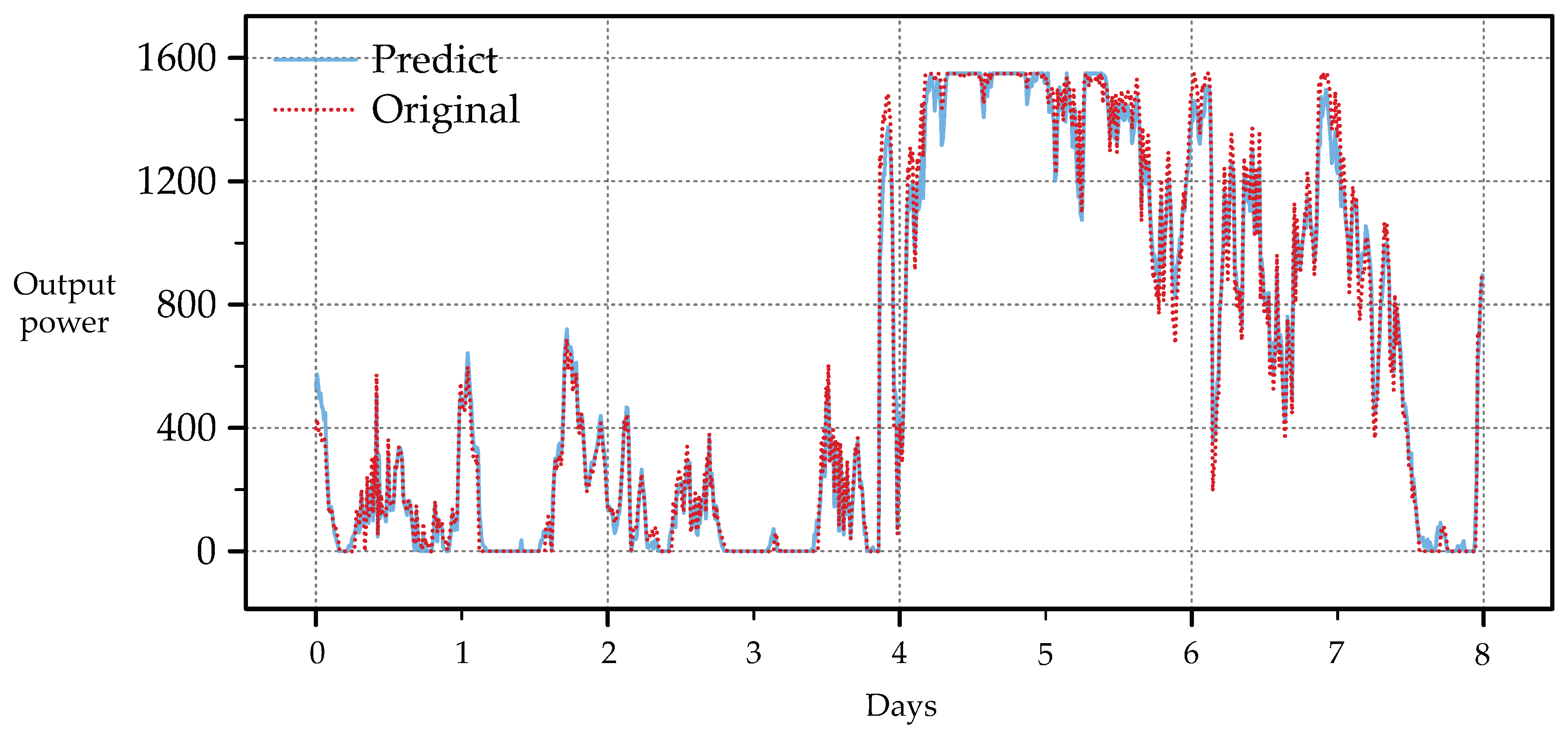

3.3. Experiment and Results

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ESN | Echo State Network |
| GWEC | Global Wind Energy Council |
| AR | Autoregressive model |
| ARMA | Autoregressive Moving Average model |
| ARIMA | Autoregressive Integrated Moving Average model |
| RNN | Recurrent Neural Network |
| LSTM | Long Short-Term Memory |
| GRU | Gated Recurrent Unit |
| CNN | Convolutional Neural Network |
| MESN | Modular Echo State Network |
| Wspd | Wind speed recorded by an anemometer |
| Patv | Active power (target variable) |
| Etmp | Ambient temperature |
| Itmp | Internal temperature of turbine generator compartment |
| BP | Backpropagation neural network |
| RBF | Radial basis function network |
| RMSE | Root Mean Squared Error |
| MSE | Mean Squared Error |
| R² | Coefficient of determination |


| Feature Classification | Feature Name | Feature Description |
|---|---|---|
| External features | Wspd | Wind speed recorded by an anemometer |
| External features | Wdir (°) | The angle between the wind direction and the position of the turbine generator compartment |
| External features | Etmp (°C) | Ambient temperature |
| Internal features | Itmp (°C) | Internal temperature of turbine generator compartment |
| Internal features | Ndir (°) | Cabin direction, i.e., the yaw angle of the cabin |
| Internal features | Pab (°) | Pitch angle of blade |
| Power characteristics | Prtv (kW) | Reactive power |
| Power characteristics | Patv (kW) | Active power (target variable) |

| Model | Number of layers | Number of iterations | Number of batches | Number of neuron nodes | Spectral radius | Regularization parameter |
|---|---|---|---|---|---|---|
| ANN | 3 | 4000 | 42 | 96 | / | / |
| BP | 3 | 4200 | 42 | 96 | / | / |
| GRU | 2 | 4200 | 42 | 192 | / | / |
| LSTM | 2 | 4200 | 42 | 192 | / | / |
| RBF | / | / | / | / | 1.00E-05 | |
| ESN | / | / | / | 200 | 0.7 | 1.00E-06 |
| MESN | / | / | / | 200 | 0.8 | 1.00E-08 |
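To illustrate where the number of neuron nodes, the spectral radius, and the regularization parameter enter an echo state network, the following is a minimal sketch of a generic textbook ESN. It is not the MESN of this paper. The values 200, 0.7, and 1e-6 are taken from the ESN row of the table above; the function names, the uniform weight initialization, the tanh activation, the washout length, and the synthetic sine series are all assumptions made for illustration.

```python
import numpy as np


def make_reservoir(n_inputs, n_nodes=200, spectral_radius=0.7, seed=0):
    """Draw random input and reservoir weights (hypothetical initialization)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_nodes, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_nodes, n_nodes))
    # Rescale so the largest absolute eigenvalue equals the spectral radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def run_reservoir(w_in, w, inputs):
    """Collect reservoir states x(t) = tanh(W_in u(t) + W x(t-1))."""
    states = np.zeros((len(inputs), w.shape[0]))
    x = np.zeros(w.shape[0])
    for t, u in enumerate(inputs):
        x = np.tanh(w_in @ u + w @ x)
        states[t] = x
    return states


def train_readout(states, targets, ridge=1e-6):
    """Ridge regression readout: solve (S^T S + ridge * I) W_out = S^T y."""
    gram = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(gram, states.T @ targets)


# Toy usage on synthetic data: one-step-ahead prediction of a sine wave.
series = np.sin(np.linspace(0, 20 * np.pi, 1000))
inputs = series[:-1, None]   # shape (999, 1)
targets = series[1:]         # shape (999,)

w_in, w = make_reservoir(n_inputs=1)
states = run_reservoir(w_in, w, inputs)

washout = 100                # discard the initial transient (assumed length)
w_out = train_readout(states[washout:], targets[washout:])
pred = states[washout:] @ w_out
train_mse = float(np.mean((pred - targets[washout:]) ** 2))
print(f"training MSE on the toy series: {train_mse:.2e}")
```

Only the readout weights are trained here. The reservoir is fixed after rescaling, which is why the spectral radius and the ridge term are the main tuning knobs in this kind of model.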

| Model | MSE | RMSE | R² |
|---|---|---|---|
| ANN | 0.3523 | 0.5936 (2.23) | 0.9709 |
| BP | 0.1889 | 0.4346 (1.63) | 0.9844 |
| GRU | 0.4166 | 0.6455 (2.42) | 0.9617 |
| LSTM | 0.4270 | 0.6534 (2.45) | 0.9607 |
| RBF | 0.1842 | 0.4292 (1.61) | 0.9848 |
| ESN | 0.3194 | 0.5651 (2.12) | 0.9736 |
| MESN | 0.0711 | 0.2667 | 0.9905 |
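As a quick consistency check on the table above, the short script below recomputes RMSE as the square root of MSE and compares the parenthesized values with the ratio of each model's RMSE to the MESN RMSE. Reading the parenthesized values as such ratios is an assumption, since the table does not define them, although the numbers agree with it to two decimal places. The dictionary and function names are hypothetical.

```python
import math

# (MSE, RMSE, parenthesized value) copied from the results table.
results = {
    "ANN":  (0.3523, 0.5936, 2.23),
    "BP":   (0.1889, 0.4346, 1.63),
    "GRU":  (0.4166, 0.6455, 2.42),
    "LSTM": (0.4270, 0.6534, 2.45),
    "RBF":  (0.1842, 0.4292, 1.61),
    "ESN":  (0.3194, 0.5651, 2.12),
    "MESN": (0.0711, 0.2667, None),
}


def rmse_from_mse(mse):
    """RMSE is the square root of MSE."""
    return math.sqrt(mse)


def ratio_to_reference(rmse, reference_rmse):
    """How many times larger a model's RMSE is than the reference RMSE."""
    return rmse / reference_rmse


mesn_rmse = results["MESN"][1]
for name, (mse, rmse, reported) in results.items():
    derived = rmse_from_mse(mse)
    ratio = ratio_to_reference(rmse, mesn_rmse)
    note = "" if reported is None else f", reported {reported}"
    print(f"{name:5s} sqrt(MSE)={derived:.4f} (table {rmse}) "
          f"ratio to MESN={ratio:.2f}{note}")
```

Small differences in the fourth decimal place are expected because the tabulated MSE values are themselves rounded.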

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).