1. Introduction

Breast cancer is a widespread health concern, and timely, accurate detection is essential for effective treatment and improved patient outcomes. In this work, we propose an integrated approach that combines deep learning and machine learning techniques to address the need for reliable and accessible tools for early breast cancer detection. Our solution aims to streamline the diagnostic process by automating the interpretation of medical ultrasound images, thus reducing the reliance on manual assessment and its potential subjectivity. Our motivation is to improve healthcare outcomes for individuals at risk of breast cancer, since timely diagnosis can significantly affect treatment options and prognosis.

We combine convolutional neural networks (CNNs), namely GoogLeNet, AlexNet, and ResNet18, for deep learning-based prediction with traditional machine learning classifiers, namely k-nearest neighbors (KNN) and support vector machine (SVM), to provide medical professionals with robust tools for early detection. To construct our solution, we used baseline breast ultrasound images collected in 2018 from 600 female patients aged 25 to 75 years 1. Preprocessing steps are integral to our approach, as they enhance the quality of the input data before it is fed into the models. Our final prediction models, which follow a hybrid deep learning and machine learning approach, are integrated into a MATLAB application that is deployed as a standalone executable for Windows, for ease of use by medical practitioners. The evaluation of our prediction models shows promising results, with high accuracy achieved by both the deep learning and the machine learning approaches. These findings underscore the potential of our solution to improve early detection rates and, ultimately, patient outcomes.

2. Related Work

In this section, we review previous research in breast cancer detection, focusing on the methodologies and techniques employed for early diagnosis and classification. For each study, we examine the preprocessing steps, the machine learning and deep learning algorithms, the computational approach, and the reported results.

In 2, the researchers used a hyperspectral imaging (HSI) dataset for detecting breast cancer. This dataset is structured as image cubes, where each pixel contains detailed spectral information across numerous narrow spectral bands. The images were captured with a hyperspectral camera that covers both the near-infrared and visible ranges. For preprocessing, the Lee filter was employed to address speckle noise in the dataset. This filter is a local adaptive filter that replaces each pixel value with a weighted average of neighboring pixels. The classification method used in the study is the fuzzy c-means clustering technique, a variation of conventional k-means clustering that allows soft assignment of data points to clusters: each data point has a degree of membership in each cluster rather than a binary assignment. This method was applied to segment cancerous regions in breast tissue samples based on their spectral characteristics. The authors reported a sensitivity of 98.23% and 95.42%, a specificity of 94.7% and 92.09%, and an accuracy of 97.03% and 94.29% on two different samples.

In 3, the authors observed that, in CNN-based deep learning, global features from the entire image can overshadow the features of the region of interest. To address this issue, they introduced BCHisto-Net, a method designed to capture both local and global features, with a focus on the region of interest (ROI). Their solution comprises two main steps. In the first step, the histopathological images undergo color normalization using the Reinhard method 4. This process aligns color distributions across images, ensuring a consistent representation for analysis by BCHisto-Net. The second step is BCHisto-Net itself, which uses two parallel branches: one for global features and another for local features 5. The global branch processes the color-normalized image, while the local branch first applies Otsu's thresholding to identify ROIs, followed by Gaussian smoothing for improved feature extraction 5. Each branch employs an inception module for feature extraction 6. The inception module applies convolution operations with varying receptive fields and concatenates their outputs along the channel axis to capture low-level features. Subsequent feature extraction involves five convolution blocks with dropout layers to prevent overfitting. The global and local features are then aggregated and passed through fully connected layers for classification. For parameter optimization, a binary cross-entropy (BCE) loss is applied to each branch independently, so that the two branches learn different weights for their distinct features. BCHisto-Net achieved accuracies of 95% and 89% on the KMC and BreakHis datasets, respectively, demonstrating its effectiveness in classifying breast histopathological images at 100× magnification.

The third related work 7 applies neural networks to computational breast cancer diagnosis. It develops a feed-forward backpropagation neural network (FNN) tailored for analyzing Hematoxylin and Eosin (H&E) stained histopathological images. The method uses digital image processing techniques to extract eight distinct features from a dataset of over 2600 breast biopsy images, with a primary focus on cell nuclei characteristics. The FNN has a single hidden layer with 20 neurons and four output neurons, which distinguish between benign and malignant tumors and further categorize malignant tumors into three types: Type1, Type2, and Type3. The study reports a precision of 96.07% in discriminating tumor types and an overall classification accuracy of 95.80% during testing. These findings underscore the potential of neural networks as adjunct tools for pathologists, improving diagnostic precision and pinpointing cases that need further investigation. The work is in line with the broader trend in medical image processing of using machine learning models to refine diagnostic workflows in clinical settings, and its distinct contribution is the multi-class classification of malignant tumors.

In the fourth related work 8, the researchers focus on the classification of breast cancer using deep convolutional neural networks (CNNs). Their study adapts the DenseNet model, which is recognized for its proficiency in complex image processing tasks, to breast cancer diagnosis. With this adaptation, they achieve a 96% accuracy rate in multi-class classification, surpassing human expert performance. This result underscores the potential of deep learning algorithms to augment diagnostic capabilities, particularly in areas such as cancer detection where accuracy is paramount. The findings also emphasize the importance of data availability, suggesting that further performance gains are attainable with access to larger datasets. Overall, the research reaffirms the utility of CNNs in medical image analysis for identifying and classifying complex medical conditions such as breast cancer.
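To make the soft assignment used by fuzzy c-means in the hyperspectral study 2 concrete, the following minimal sketch computes the standard membership degrees of one data point with respect to a set of cluster centers. The function name `fcm_memberships`, the fuzzifier value `m=2.0`, and the Euclidean distance are assumptions chosen for illustration; they are not taken from the cited study.

```python
import math


def fcm_memberships(point, centers, m=2.0):
    """Return the degree of membership of `point` in each cluster center.

    Standard fuzzy c-means rule: u_i = 1 / sum_k (d_i / d_k)^(2 / (m - 1)),
    where d_i is the distance from the point to center i.
    The fuzzifier m = 2.0 is an assumed, commonly used default.
    """
    dists = [math.dist(point, c) for c in centers]

    # A point that coincides with one or more centers belongs fully to them.
    if any(d == 0.0 for d in dists):
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        total = sum(hits)
        return [h / total for h in hits]

    exponent = 2.0 / (m - 1.0)
    return [1.0 / sum((d_i / d_k) ** exponent for d_k in dists) for d_i in dists]


# A point at distance 1 from the first center and 3 from the second.
memberships = fcm_memberships((1.0, 0.0), [(0.0, 0.0), (4.0, 0.0)])
```

Unlike k-means, which would assign this point entirely to the nearer center, the memberships are fractional and sum to one.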

3. Proposed Solution

A. Preprocessing

In the preprocessing pipeline, we begin by reading the dataset, which comprises 437 benign, 210 malignant, and 133 normal RGB images. We then apply data augmentation techniques, which expand our dataset from 647 to 17,480 images. Each image is then converted from unsigned 8-bit integers (uint8 format) to double format to simplify processing and reduce computational complexity. Next, we apply a series of image enhancement steps. First, a median filter is applied to each image to reduce noise. Second, a high-pass filter is applied to enhance image edges and fine details: a Gaussian-filtered version of the median-filtered image is subtracted from the original, resulting in a sharper image. After these enhancements, the images are resized to a standard size of 224x224 pixels. Finally, as a last step before classification, the images are converted back to unsigned 8-bit integers (uint8 format) to ensure compatibility with subsequent processing steps. Together, these preprocessing steps aim to improve image quality, enhance features, and prepare the dataset for classification.
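The order of operations described above can be sketched as follows. This is a minimal, dependency-free Python illustration, not the MATLAB implementation used in the application. It works on a single-channel image stored as a list of rows (an RGB image would be processed channel by channel). The 3x3 median window, the Gaussian `sigma` and radius, the scaling to [0, 1], and the nearest-neighbor resize are all assumptions, since the text does not state them. The text also does not say whether the high-pass result is added back to the image, so the sketch returns the difference itself.

```python
import math
from statistics import median


def _window(img, r, c, radius):
    """Collect the neighbourhood around (r, c), replicating border pixels."""
    h, w = len(img), len(img[0])
    return [img[min(max(r + dr, 0), h - 1)][min(max(c + dc, 0), w - 1)]
            for dr in range(-radius, radius + 1)
            for dc in range(-radius, radius + 1)]


def median_filter(img, radius=1):
    """Median filter with an assumed (2*radius+1)^2 window."""
    return [[median(_window(img, r, c, radius)) for c in range(len(img[0]))]
            for r in range(len(img))]


def gaussian_filter(img, sigma=1.0, radius=2):
    """Gaussian blur; sigma and radius are assumed values."""
    offsets = range(-radius, radius + 1)
    weights = [math.exp(-(dr * dr + dc * dc) / (2 * sigma * sigma))
               for dr in offsets for dc in offsets]
    total = sum(weights)
    return [[sum(w * v for w, v in zip(weights, _window(img, r, c, radius))) / total
             for c in range(len(img[0]))]
            for r in range(len(img))]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize (a simplification of a real image resize)."""
    h, w = len(img), len(img[0])
    return [[img[r * h // out_h][c * w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def preprocess(img_uint8, size=224):
    # uint8 -> floating point in [0, 1] (the scaling is an assumption)
    img = [[v / 255.0 for v in row] for row in img_uint8]
    # Gaussian-filtered version of the median-filtered image
    smooth = gaussian_filter(median_filter(img))
    # High-pass step: subtract the smoothed image from the original
    detail = [[o - s for o, s in zip(row_o, row_s)]
              for row_o, row_s in zip(img, smooth)]
    resized = resize_nearest(detail, size, size)
    # Back to uint8 range, clipping values outside [0, 255]
    return [[min(255, max(0, round(v * 255))) for v in row] for row in resized]
```

Calling `preprocess` on a small test image returns a 224x224 list of integers in the range 0 to 255; a perfectly flat image yields all zeros because it contains no high-frequency detail.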

B. Deep Learning Approach

Our solution to breast cancer detection integrates deep learning techniques with traditional machine learning methods to create a robust and accurate diagnostic tool. We employed three convolutional neural networks (CNNs): GoogLeNet 9, 10, ResNet18 11, and AlexNet 12. These CNNs were selected for their track record of accuracy and efficiency in handling complex image data.

GoogLeNet, with 144 layers and an input image size of 224x224x3, is characterized by its inception modules, which efficiently capture multi-scale features and make it well suited to deep networks under constrained computational resources. To adapt GoogLeNet to our task, we replaced its original fully connected layer and classification layer with new fully connected and classification layers tailored to our medical images. We also set the weight learn rate factor and the bias learn rate factor of the new layer to 10 so that it adapts quickly to our dataset. ResNet18, with 71 layers and the same input image size as GoogLeNet, is known for its deep structure and its use of residual connections, which mitigate the vanishing gradient problem. Following the same strategy as with GoogLeNet, we replaced its fully connected and classification layers with new ones, using the same weight learn rate factor and bias learn rate factor. AlexNet, with 25 layers and an input image size of 227x227x3, underwent a similar transformation. We replaced its last three layers (fully connected, SoftMax, and classification) with new ones, using a weight learn rate factor and bias learn rate factor of 20.

For training, we selected the optimization algorithm for each network individually. GoogLeNet and ResNet18 were trained with the Adam optimizer 18, while AlexNet was trained with RMSProp 19. The mini-batch size was set to 10 to balance computational efficiency and training stability. We capped the number of training epochs at 50 as a balance between training time and convergence. The training data was shuffled at the start of each epoch to avoid any bias from the ordering of the samples. The initial learning rate was set to 0.0001, with a learning rate drop factor of 0.5 applied every 10 epochs, for all networks. After training, we used the confusion matrix to assess model performance, computing accuracy, precision, recall, and F1 score percentages. This evaluation provided insight into each model's effectiveness and guided iterative improvements.
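The learning rate schedule described above (initial rate 0.0001, multiplied by 0.5 every 10 epochs, for at most 50 epochs) can be written out as a short sketch. The function name `learning_rate` and the 0-based epoch counter are assumptions made for illustration; the exact epoch at which the training framework applies each drop may differ by one.

```python
def learning_rate(epoch, initial=1e-4, drop_factor=0.5, drop_period=10):
    """Piecewise-constant schedule; `epoch` is 0-based (an assumption).

    The rate is multiplied by `drop_factor` once every `drop_period` epochs.
    """
    return initial * drop_factor ** (epoch // drop_period)


# Learning rate used in each of the 50 training epochs.
schedule = [learning_rate(epoch) for epoch in range(50)]
```

Under these assumptions the rate is halved four times over the 50 epochs, so the last ten epochs run at one sixteenth of the initial rate.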

C. Machine Learning Approach

In the machine learning approach, the activations function is used as a feature extractor. This function takes the dataset, a trained CNN model, and the layer from which features are to be extracted. In our case, we used the ResNet-18 CNN model and selected its 2D average pooling layer, referred to as 'pool5', for feature extraction. This layer was chosen because it provided the highest accuracy. The function's output consists of rows, where each row holds the extracted features of a single image. Each image is represented by 512 columns, corresponding to the number of features per image.

The first classifier we used is k-nearest neighbors (KNN), one of the most widely used classification techniques for medical images. KNN operates on the principle of similarity: it assigns a class label to a new data point based on the majority class label of its nearest neighbors in the training set 13. The distance between data points can be measured with various distance metrics, and the neighbors' votes can be weighted. One such weighting is the squared-inverse distance weight, where each neighbor's vote is weighted by the inverse of its squared distance, giving more importance to closer neighbors. This weighting is effective when nearby points are more relevant for classification 14. KNN can also use the cosine distance metric, which is based on the cosine of the angle between two vectors. This metric is particularly useful for text data or high-dimensional sparse data, where the magnitude of the vectors is less important than their orientation 15. The cosine distance metric is well suited to analyzing ultrasound images in breast cancer detection: the features are often sparse and high-dimensional, so the angle between feature vectors matters more than their magnitudes. By focusing on relative orientations, the metric helps to identify cancerous patterns based on feature alignments within the image 16. In summary, KNN with squared-inverse distance weighting and the cosine distance metric offers a flexible approach to breast cancer classification that can be matched to the characteristics of the data.

The second classifier is the linear SVM, a machine learning algorithm for classification tasks that is particularly effective on linearly separable datasets. It works by finding the optimal hyperplane that best separates data points of different classes in a high-dimensional space 17. This hyperplane maximizes the margin, which is the distance between the closest data points from different classes, leading to better generalization and robustness of the classifier. The linear SVM achieves this separation with a linear kernel function, which calculates the dot product of feature vectors in the input space. A data point is then assigned to a class according to the sign of the resulting decision value. One strength of the linear SVM is its efficiency on large-scale datasets with a linear separation boundary. It is computationally efficient and memory-friendly compared with non-linear SVM kernels, making it suitable for applications with a large number of features and data points.
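The KNN variant described above, with cosine distance and squared-inverse distance weighting, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the classifier used in the application: the function names, the small `eps` term that guards against division by zero, and the toy two-dimensional feature vectors are all hypothetical (the real features have 512 dimensions), and every feature vector is assumed to be non-zero.

```python
import math
from collections import defaultdict


def cosine_distance(a, b):
    """1 - cos(angle between a and b); assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def knn_predict(query, features, labels, k=1, eps=1e-12):
    """Classify `query` by a weighted vote of its k nearest neighbours."""
    # Sort training samples by cosine distance to the query and keep k of them.
    neighbours = sorted(
        (cosine_distance(query, f), label) for f, label in zip(features, labels)
    )[:k]

    # Squared-inverse weighting: closer neighbours get a larger vote.
    votes = defaultdict(float)
    for dist, label in neighbours:
        votes[label] += 1.0 / (dist * dist + eps)
    return max(votes, key=votes.get)


# Hypothetical toy features standing in for the 512-dimensional vectors.
train_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
train_labels = ["benign", "benign", "malignant", "malignant"]
prediction = knn_predict([1.0, 0.05], train_features, train_labels, k=3)
```

With `k=1` the weighting has no effect, because the single nearest neighbour decides the label on its own; the weights only matter for larger `k`.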

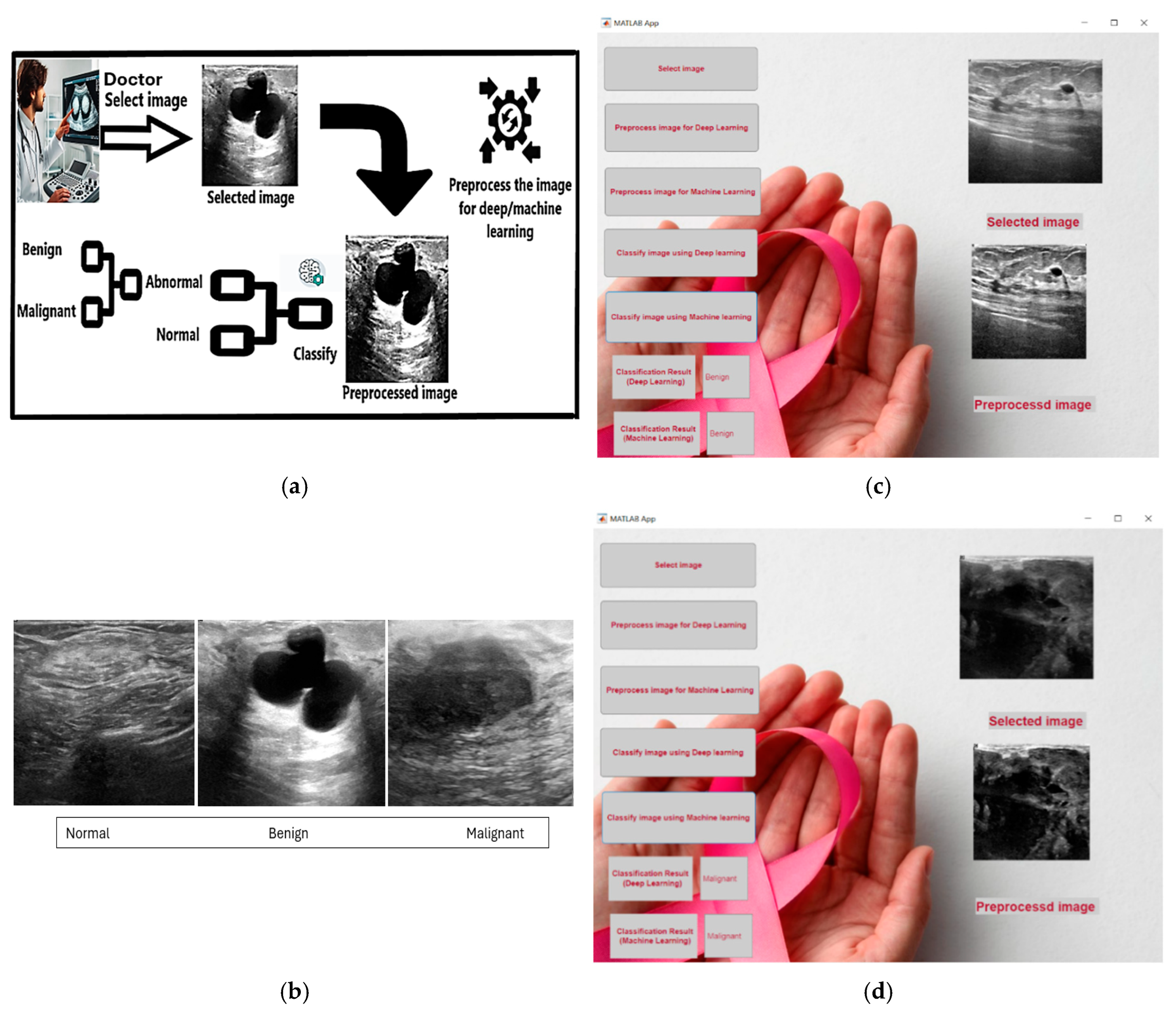

D. User Interface

We implemented a user-friendly interface for our project by developing an application in MATLAB, and we integrated our highest-accuracy models into it. For the deep learning approach, we chose the ResNet-18 CNN, while for the machine learning approach, we chose the KNN algorithm with 1 neighbor because of its superior accuracy. The application does not require MATLAB to run. Instead, we deployed it as an executable file (exe) that can run on any device with the Windows operating system. This deployment simplifies the application's use and removes the need for a MATLAB installation. Within the application, users can select any breast ultrasound image. Once chosen, the image is displayed in the user interface. The user can then preprocess the image for either the deep learning or the machine learning approach. Upon pressing the preprocessing button, the preprocessed image is displayed in the user interface. The final step is to press the button for classifying the image with either the deep learning or the machine learning approach. The classification result is then displayed in the corresponding text field, indicating whether the image is normal or abnormal; an abnormal image is further classified as benign or malignant. Through these steps, we have developed a reliable application that can be used by any health institution.

Figure 1. (a) High-level architecture of the system; (b) benign example from the user application; (c) malignant example from the user application; (d) image samples from the three classes: normal, benign, and malignant.


4. Simulation Results

For our breast cancer detection project, we used the "Breast Ultrasound Images Dataset," released in 2018 1. This dataset is designed for medical image analysis tasks such as semantic segmentation and classification and is an important resource for improving the accuracy of breast cancer diagnosis. The dataset consists of 780 ultrasound images, annotated at the pixel level to support semantic segmentation tasks. These annotations include 780 objects across three primary categories: Benign, Malignant, and Normal. We used all three annotated categories in our study. We noticed that the dataset contained some duplicated images, which would affect the models' accuracy, so all duplicated images were deleted. To ensure a rigorous evaluation of our models, we divided the dataset into training, validation, and testing sets. Approximately 60% of the images were allocated for training, 10% for validation, and the remaining 30% for testing. This distribution was designed to provide a substantial training set together with adequate data for validation and testing. The primary challenge of our project was to develop robust models capable of accurate classification. We trained the convolutional neural networks (CNNs) GoogLeNet, AlexNet, and ResNet18, which are able to identify the complex patterns indicative of benign and malignant conditions. The results of our study were highly promising, indicating that the combination of classification techniques considerably improves the accuracy of breast cancer detection.
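The duplicate removal and the 60/10/30 split described above can be sketched as follows. This is an illustration under stated assumptions rather than the procedure actually used: the function names are hypothetical, duplicates are detected only when files are byte-for-byte identical (via a SHA-256 hash), and the split is stratified per class, which the text does not specify.

```python
import hashlib
import random
from collections import defaultdict


def drop_duplicates(samples):
    """samples: list of (image_bytes, label). Keep the first copy of each image.

    Only byte-identical duplicates are detected (an assumption).
    """
    seen, unique = set(), []
    for data, label in samples:
        digest = hashlib.sha256(data).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append((data, label))
    return unique


def stratified_split(samples, fractions=(0.6, 0.1, 0.3), seed=0):
    """Split into train/validation/test sets, class by class.

    Stratifying by label is an assumption; the fractions follow the text.
    """
    by_label = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for group in by_label.values():
        rng.shuffle(group)
        n_train = round(fractions[0] * len(group))
        n_val = round(fractions[1] * len(group))
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```

Removing duplicates before splitting matters because a copy of a training image that lands in the test set would inflate the measured accuracy.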

Table 1. Highest models performance.

Models’ performance: Benign / Malignant

| Model | Accuracy | Precision | Recall | F1_Score |
| --- | --- | --- | --- | --- |
| KNN | 99.72% | 99.74% | 99.63% | 99.68% |
| SVM | 99.72% | 99.74% | 99.63% | 99.68% |
| ResNet-18 | 99.72% | 99.74% | 99.63% | 99.68% |

Models’ performance: Normal / Abnormal

| Model | Accuracy | Precision | Recall | F1_Score |
| --- | --- | --- | --- | --- |
| KNN | 98.63% | 96.60% | 98.78% | 97.65% |
| SVM | 97.65% | 95.21% | 96.74% | 95.95% |
| ResNet-18 | 99.66% | 99.27% | 99.53% | 99.40% |

These performance metrics underscore the effectiveness of our approach, combining detailed image analysis with powerful machine learning and deep learning algorithms. Additionally, the inclusion of these models in a MATLAB application provides a user-friendly tool for medical professionals, facilitating early diagnosis and improving the likelihood of successful patient outcomes.
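For reference, the four reported metrics follow from the entries of a binary confusion matrix. The sketch below shows the standard definitions; the function name and the example counts are hypothetical and are not the study's data. Whether the reported percentages are computed for one class or averaged over both classes is not stated in the text, so this sketch treats one class as the positive class.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts.

    tp/fp/fn/tn are true positives, false positives, false negatives,
    and true negatives for the class treated as positive.
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


# Hypothetical counts, for illustration only.
accuracy, precision, recall, f1 = binary_metrics(tp=90, fp=10, fn=5, tn=95)
```

Multiplying each value by 100 gives the percentage form used in the tables.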

5. Discussion

Our study investigated the effectiveness of both machine learning and deep learning techniques in detecting breast cancer from ultrasound images. We employed Support Vector Machine (SVM) and K-Nearest Neighbors (KNN) as representative machine learning methods, alongside the deep learning models GoogLeNet, AlexNet, and ResNet18. Our results indicate that both SVM and KNN achieved high accuracy on both classification tasks (benign versus malignant, and normal versus abnormal), with SVM scoring 99.72% accuracy for the benign and malignant classes and 97.65% for the normal and abnormal classes. These findings align with previous research showing SVM's strength in classification tasks, especially with a linear kernel, which we found to be the most effective for our dataset.
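The three SVM kernels compared in this study (linear, polynomial, and Gaussian) have standard textbook definitions, sketched below together with the sign-based decision rule of a linear SVM. The hyperparameter defaults (`degree`, `coef0`, `gamma`) and the function names are assumptions for illustration; the values used in our experiments are not stated here.

```python
import math


def linear_kernel(x, z):
    """Dot product of the two feature vectors."""
    return sum(a * b for a, b in zip(x, z))


def polynomial_kernel(x, z, degree=3, coef0=1.0):
    """(x . z + coef0)^degree; degree and coef0 are assumed defaults."""
    return (linear_kernel(x, z) + coef0) ** degree


def gaussian_kernel(x, z, gamma=1.0):
    """exp(-gamma * ||x - z||^2); gamma is an assumed default."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def linear_decision(w, b, x):
    """Linear SVM decision rule: the class is the sign of w . x + b."""
    return 1 if linear_kernel(w, x) + b >= 0 else -1
```

The linear kernel needs no hyperparameters beyond the regularization of the SVM itself, which is one reason it is a convenient baseline when the extracted features are already close to linearly separable.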

Table 2. SVM performance.

SVM performance for different kernels: Benign / Malignant

| Kernel | Accuracy | Precision | Recall | F1_Score |
| --- | --- | --- | --- | --- |
| Gaussian | 98.55% | 98.95% | 97.75% | 98.32% |
| Polynomial | 99.72% | 99.74% | 99.63% | 99.68% |
| Linear | 99.72% | 99.74% | 99.63% | 99.68% |

SVM performance for different kernels: Normal / Abnormal

| Kernel | Accuracy | Precision | Recall | F1_Score |
| --- | --- | --- | --- | --- |
| Gaussian | 97.31% | 95.13% | 95.48% | 95.30% |
| Polynomial | 66.00% | 64.37% | 75.12% | 61.15% |
| Linear | 97.65% | 95.21% | 96.74% | 95.95% |

Apart from the Support Vector Machine (SVM) model, another commonly used classification method is the k-nearest neighbors (KNN) algorithm. The choice of the parameter k, the number of neighbors considered, greatly influences KNN's performance, so selecting it carefully is crucial. In our research, we assessed how values of k from 1 to 5 affected the performance of the KNN algorithm. Typically, smaller k values make the model more sensitive to noise and outliers in the data, which can cause overfitting. In our proposed solution, however, k = 1 performed best, since the classes in our dataset split were clearly separable for the trained models. After extensive evaluation, we found that setting k to 1 resulted in the highest accuracy: 99.72% for the benign and malignant classes and 98.63% for the normal and abnormal classes. This suggests that considering only the closest neighbor yields the most accurate classification for our dataset. Precision and recall for k = 1 were also favorable, both exceeding 98% in nearly every case. Our results echo previous research that used machine learning algorithms for similar tasks, affirming the efficacy of KNN with a small k value for detecting breast cancer from ultrasound images. By refining parameter selection, we aim to improve the accuracy and reliability of our model, contributing to advances in early breast cancer detection.

In parallel with our exploration of machine learning techniques, we investigated the capabilities of deep learning models, evaluating GoogLeNet, ResNet18, and AlexNet for breast cancer detection from ultrasound images. ResNet-18 demonstrated the highest accuracy, with 99.72% for the benign and malignant classes and 99.66% for the normal and abnormal classes, indicating its proficiency in efficiently capturing multi-scale features. AlexNet achieved slightly lower accuracies, at 98.27% for the benign and malignant classes and 98.69% for the normal and abnormal classes, but its performance remained robust across precision, recall, and F1 scores.

Table 3. KNN performance.

KNN accuracy for different values of K: Benign / Malignant

| K | Accuracy |
| --- | --- |
| 1 | 99.72% |
| 2 | 99.72% |
| 3 | 99.72% |
| 4 | 99.72% |
| 5 | 99.72% |

KNN accuracy for different values of K: Normal / Abnormal

| K | Accuracy |
| --- | --- |
| 1 | 98.63% |
| 2 | 98.63% |
| 3 | 98.11% |
| 4 | 98.05% |
| 5 | 97.82% |

These results underscore the resilience of deep learning techniques in accurately classifying ultrasound images for breast cancer detection. By leveraging complex neural architectures and effectively learning intricate patterns from medical data, these models show promise for enhancing diagnostic capabilities in healthcare settings. However, further exploration and validation across diverse datasets are imperative to establish their generalizability and scalability in real-world clinical applications. Nonetheless, the demonstrated effectiveness of deep learning models in our study highlights their potential to significantly impact early breast cancer detection and improve patient outcomes.

Table 4. CNNs performance.

CNN deep learning models: Benign / Malignant

| CNN | Accuracy | Precision | Recall | F1_Score |
| --- | --- | --- | --- | --- |
| GoogLeNet | 99.65% | 99.58% | 99.63% | 99.60% |
| ResNet18 | 99.72% | 99.74% | 99.63% | 99.68% |
| AlexNet | 98.27% | 97.80% | 98.27% | 98.03% |

CNN deep learning models: Normal / Abnormal

| CNN | Accuracy | Precision | Recall | F1_Score |
| --- | --- | --- | --- | --- |
| GoogLeNet | 99.60% | 99.62% | 98.97% | 99.29% |
| ResNet18 | 99.66% | 99.27% | 99.53% | 99.40% |
| AlexNet | 98.69% | 98.27% | 98.75% | 98.50% |

Our study demonstrates strong performance of deep learning models in breast cancer detection from ultrasound images relative to existing research. Compared with the first related work, which used hyperspectral imaging 2 and reported accuracies of roughly 94% to 97%, our GoogLeNet and ResNet18 models consistently exceeded 99% accuracy. Similarly, compared with the second related work on histopathological images 3, our machine learning and deep learning models reached accuracies of up to 99.72%, surpassing the 95% and 89% achieved by BCHisto-Net 3. The third related work employed a feed-forward backpropagation neural network (FNN) for analyzing histopathological images 7, achieving 96.07% in distinguishing tumor types and an overall classification accuracy of 95.80%. In contrast, our machine learning approach achieved 99.72% for the benign and malignant classes and 98.63% for the normal and abnormal classes, while our deep learning approach achieved 99.72% for the benign and malignant classes and 99.66% for the normal and abnormal classes. Because these studies differ in methodology and imaging modality, the figures are not directly comparable; nevertheless, all of them underscore the efficacy of deep learning and machine learning techniques in improving breast cancer detection across varied image types.

6. Conclusions

In conclusion, this project demonstrates a substantial advancement in medical diagnostics, specifically in the early detection of breast cancer. By combining deep learning models such as GoogLeNet, AlexNet, and ResNet18 with traditional machine learning classifiers such as KNN and SVM, we have developed a robust diagnostic tool that improves the accuracy of breast cancer detection. Our preprocessing techniques ensure the quality of the input data, which is crucial for achieving high detection accuracy. The notable performance of ResNet-18 highlights the effectiveness of our chosen methods. Additionally, the user-friendly MATLAB application we developed simplifies the use of our models in clinical settings, enabling medical professionals to make timely and accurate diagnoses, which are essential for optimal patient care. The promising results obtained from the evaluation of our models reaffirm the potential of integrated deep learning and machine learning solutions to improve early detection rates, and thereby patient outcomes. This project not only contributes to technological advancements in healthcare but also underscores the importance of early cancer detection and the potential to save lives through innovation and accessibility.

References

1. Al-Dhabyani W, Gomaa M, Khaled H, Fahmy A. Dataset of breast ultrasound images. Data in Brief. 2020 Feb;28:104863.
2. Youssef, A., Moa, B., & El-Sharkawy, Y. H. (2024). A Novel Visible and Near-Infrared Hyperspectral Imaging Platform for Automated Breast-Cancer Detection. Photodiagnosis and Photodynamic Therapy, 104048. https://www.sciencedirect.com/science/article/pii/S1572100024000875.
3. R. Rashmi, K. Prasad, and C. B. K. Udupa, “BCHisto-Net: Breast histopathological image classification by global and local feature aggregation,” Artificial Intelligence in Medicine, Nov. 01, 2021. https://www.sciencedirect.com/science/article/pii/S0933365721001846#s0025.
4. E. Reinhard, M. Adhikhmin, B. Gooch and P. Shirley, "Color transfer between images," in IEEE Computer Graphics and Applications, vol. 21, no. 5, pp. 34-41, July-Aug. 2001.
5. N. Otsu, "A Threshold Selection Method from Gray-Level Histograms," in IEEE Transactions on Systems, Man, and Cybernetics, vol. 9, no. 1, pp. 62-66, Jan. 1979.
6. C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the Inception Architecture for Computer Vision,” 2016.
7. Singh, S., Gupta, P. R., & Sharma, M. K. (2010). Breast cancer detection and classification of histopathological images. International Journal of Engineering Science and Technology, 3(5), 4228.
8. Nawaz, M., & Hassan, T. (2018). Multi-Class Breast Cancer Classification using Deep Learning Convolutional Neural Network. International Journal of Advanced Computer Science and Applications, 9(6).
9. Szegedy, Christian, et al. "Going deeper with convolutions." Proceedings of the IEEE conference on computer vision and pattern recognition. 2015.
10. Szegedy, Christian, et al. "Rethinking the inception architecture for computer vision." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.
11. He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.
12. Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems, 25.
13. Cover, T., & Hart, P. (1967). Nearest neighbor pattern classification. IEEE Transactions on Information Theory, 13(1), 21-27.
14. Li, S., & Jain, A. K. (2007). Handbook of face recognition. Springer Science & Business Media.
15. Manning, C. D., Raghavan, P., & Schütze, H. (2008). Introduction to information retrieval. Cambridge University Press.
16. Kostadinov, D., & Singh, M. (2017). Image Similarity Analysis Using Cosine Distance. International Conference on Medical Imaging and Computer-Aided Diagnosis, pp. 25-30.
17. Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273-297.
18. Kingma, D. P., & Ba, J. (2014). "Adam: A Method for Stochastic Optimization." In Proceedings of the International Conference on Learning Representations (ICLR).
19. A. Graves, "Generating Sequences with Recurrent Neural Networks," arXiv preprint arXiv:1308.0850, 2013.
20. A. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks," in Advances in Neural Information Processing Systems, vol. 25, pp. 1097-1105, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).