The following theories and methods are adopted in this study to implement the "Smart Police" system.

2.1. Edge AI Camera and Deep Learning

Edge AI refers to the deployment of artificial intelligence algorithms directly on local devices, enabling real-time data processing without relying on cloud-based servers. This significantly reduces latency, making it well suited to applications that require immediate decisions, such as autonomous vehicles or smart surveillance systems. By processing data at the edge, Edge AI also minimizes bandwidth usage and enhances privacy, because sensitive information does not need to be transmitted to remote servers. As the adoption of IoT devices continues to grow, Edge AI is becoming a crucial component in building more efficient, responsive, and secure systems.

The mathematical foundations and ideas that govern the operation of deep neural networks, a subset of machine learning models, are commonly referred to as deep learning theory. Deep learning theory spans a wide range of concepts and strategies for training and optimizing deep neural networks for complicated pattern recognition tasks. Deep learning models consist of interconnected layers, including an input layer, hidden layers, and an output layer. These layers form deep neural networks, which process input data by propagating it through a series of interconnected nodes called neurons. The neurons in each layer are connected to neurons in subsequent layers, enabling the flow of information through the network.

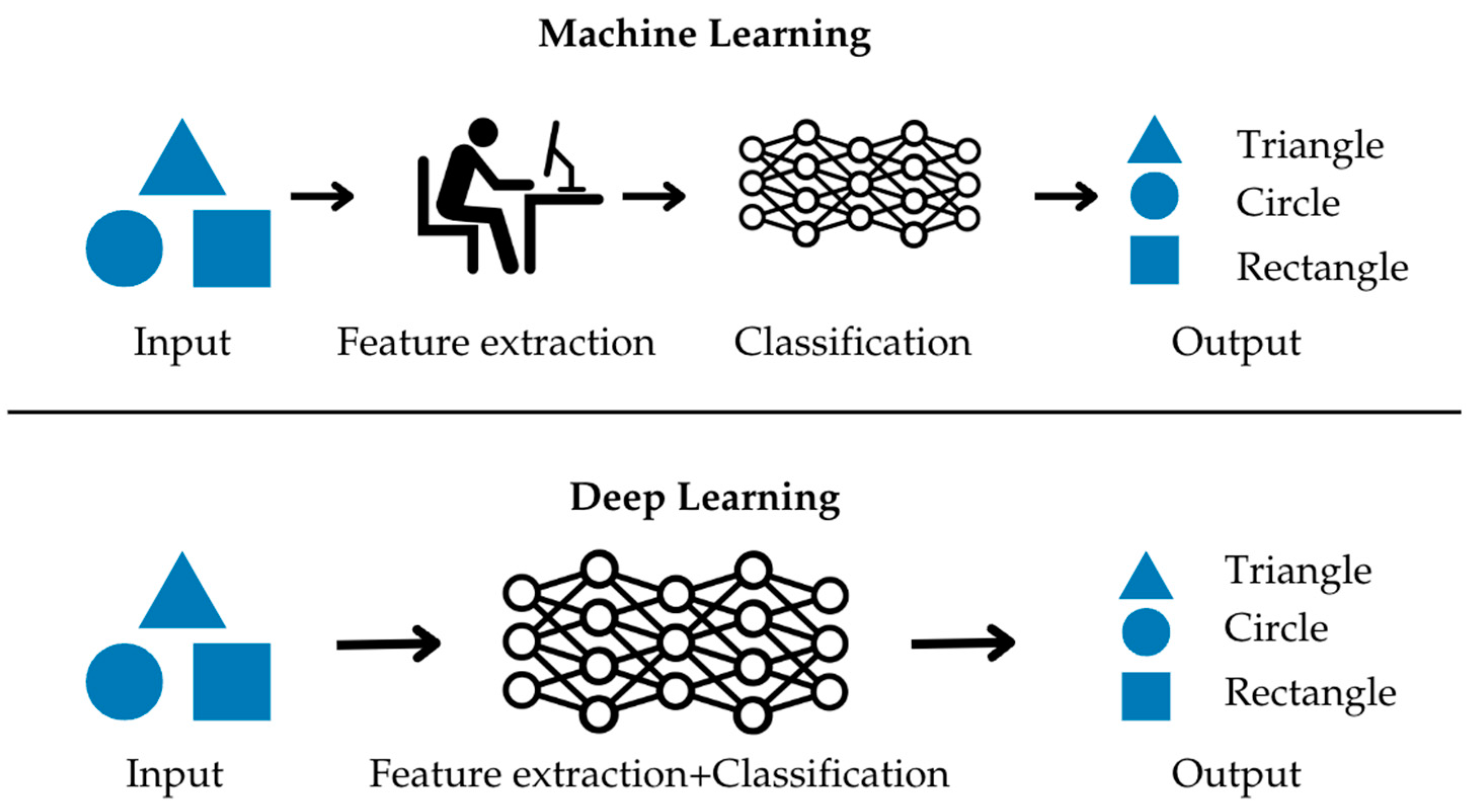

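Figure 1 gives an overview of machine learning and deep learning, which differ in how feature extraction is carried out: in conventional machine learning, features are typically designed by hand, whereas deep learning learns them directly from the data.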

Deep learning is a subset of machine learning that focuses on artificial neural networks with multiple layers. These networks can automatically learn hierarchical representations of data, making them well-suited for tasks such as image recognition, natural language processing, and more. Convolutional Neural Networks (CNNs) are a specialized form of deep learning architecture designed specifically for processing image data by capturing spatial hierarchies in features.

A. Neural Network Forward Propagation

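Each neuron in a layer computes a weighted sum of its inputs, followed by an activation function. For layer $l$, the weighted sum is:

$$z^{(l)} = W^{(l)} a^{(l-1)} + b^{(l)}$$

The activation of the neuron is given by:

$$a^{(l)} = \sigma\left(z^{(l)}\right)$$

where:

$W^{(l)}$ and $b^{(l)}$ are the weights and biases of layer $l$,

$z^{(l)}$ is the weighted sum (pre-activation) of layer $l$,

$a^{(l)}$ is the activation of layer $l$ (with $a^{(0)} = x$, the input),

$\sigma$ is the activation function.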

Common choices of activation function include the ReLU (Rectified Linear Unit):

$$\sigma(z) = \max(0, z)$$
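The forward-propagation step for one fully connected layer can be sketched in plain Python as follows. The weights, biases, and inputs are hypothetical values chosen by hand; real deployments would use an optimized tensor library rather than Python loops.

```python
from typing import List


def relu(z: float) -> float:
    """ReLU activation: max(0, z)."""
    return max(0.0, z)


def dense_forward(W: List[List[float]], b: List[float], a_prev: List[float]) -> List[float]:
    """Compute a^(l) = relu(W^(l) a^(l-1) + b^(l)) for one fully connected layer.

    W has one row per neuron in layer l and one column per activation in layer l-1.
    """
    if any(len(row) != len(a_prev) for row in W) or len(W) != len(b):
        raise ValueError("shape mismatch between W, b and a_prev")
    activations = []
    for row, bias in zip(W, b):
        # Weighted sum z_j = sum_k W_jk * a_k + b_j for neuron j
        z = sum(w * a for w, a in zip(row, a_prev)) + bias
        activations.append(relu(z))
    return activations


# Hypothetical layer: 2 inputs feeding 3 neurons
W = [[0.5, -1.0], [1.0, 1.0], [-2.0, 0.0]]
b = [0.0, -1.0, 0.5]
print(dense_forward(W, b, [2.0, 1.0]))  # [0.0, 2.0, 0.0]
```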

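The loss function $\mathcal{L}$, which the CNN minimizes during training, can be selected from:

Cross-Entropy Loss (for classification):

$$\mathcal{L}_{CE} = -\sum_{i=1}^{C} y_i \log\left(\hat{y}_i\right)$$

Mean Squared Error (MSE) (for regression):

$$\mathcal{L}_{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2$$

where $y_i$ is the target value, $\hat{y}_i$ is the model prediction, $C$ is the number of classes, and $N$ is the number of samples.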
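Both loss functions can be written directly in Python. This is a minimal sketch; the `eps` clamp that avoids `log(0)` is an implementation choice added here, not part of the formulas.

```python
import math
from typing import Sequence


def cross_entropy(y_true: Sequence[float], y_pred: Sequence[float], eps: float = 1e-12) -> float:
    """Cross-entropy -sum_i y_i * log(y_hat_i) for a single sample.

    y_true is a one-hot (or probability) vector; y_pred holds predicted class
    probabilities. eps guards against log(0).
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean squared error (1/N) * sum_i (y_i - y_hat_i)^2."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))  # -log(0.7), about 0.357
print(mse([1.0, 2.0, 3.0], [1.0, 2.5, 2.0]))      # (0 + 0.25 + 1) / 3, about 0.417
```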

B. Backpropagation and Gradient Descent

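Training is carried out on the training data using backpropagation, which computes the gradients of the loss with respect to every weight and bias, and gradient descent, which uses these gradients to update the parameters:

$$W^{(l)} \leftarrow W^{(l)} - \eta \frac{\partial \mathcal{L}}{\partial W^{(l)}}, \qquad b^{(l)} \leftarrow b^{(l)} - \eta \frac{\partial \mathcal{L}}{\partial b^{(l)}}$$

where:

$\eta$ is the learning rate,

$\frac{\partial \mathcal{L}}{\partial W^{(l)}}$ and $\frac{\partial \mathcal{L}}{\partial b^{(l)}}$ are the gradients of the loss function with respect to the weights and biases.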
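To illustrate the update rule, the sketch below fits a single linear neuron $\hat{y} = wx + b$ to toy data using the MSE loss $\mathcal{L}$. For a single neuron the chain rule gives the gradients in closed form, $\partial \mathcal{L}/\partial w = -\frac{2}{N}\sum_i (y_i - \hat{y}_i)x_i$ and $\partial \mathcal{L}/\partial b = -\frac{2}{N}\sum_i (y_i - \hat{y}_i)$, which is what backpropagation computes automatically in deeper networks. The learning rate, epoch count, and data are assumptions chosen for illustration.

```python
from typing import List, Tuple


def train_linear_neuron(xs: List[float], ys: List[float], eta: float = 0.05,
                        epochs: int = 2000) -> Tuple[float, float]:
    """Fit y_hat = w * x + b by full-batch gradient descent on the MSE loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: residuals (y - y_hat) for every sample
        residuals = [y - (w * x + b) for x, y in zip(xs, ys)]
        # Backward pass: analytic gradients of the MSE loss
        grad_w = -2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = -2.0 / n * sum(residuals)
        # Gradient descent update: theta <- theta - eta * dL/dtheta
        w -= eta * grad_w
        b -= eta * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 1 for x in xs]
w, b = train_linear_neuron(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```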

C. General Deep Learning Model Representation

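A deep learning model with multiple layers can be represented as a composition of layer functions:

$$\hat{y} = f^{(L)}\left(f^{(L-1)}\left(\cdots f^{(1)}(x)\right)\right), \qquad f^{(l)}(a) = \sigma\left(W^{(l)} a + b^{(l)}\right)$$

where $x$ is the input, $\hat{y}$ is the model output, and $L$ is the total number of layers.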
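Because the model is a composition of layer functions, the forward pass can be sketched as a fold over a list of layers. The layer sizes, weights, and the identity activation on the output layer are hypothetical choices for illustration.

```python
from functools import reduce
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]


def make_layer(W: Sequence[Sequence[float]], b: Sequence[float],
               activation: Callable[[float], float]) -> Layer:
    """Return the layer function f(a) = activation(W a + b) as a closure."""
    def f(a: Vector) -> Vector:
        return [activation(sum(w * x for w, x in zip(row, a)) + bias)
                for row, bias in zip(W, b)]
    return f


def model(layers: List[Layer], x: Vector) -> Vector:
    """Compute f^(L)(...f^(2)(f^(1)(x))) by applying the layers in order."""
    return reduce(lambda a, f: f(a), layers, x)


def relu(z: float) -> float:
    return max(0.0, z)


def identity(z: float) -> float:
    return z


# Hypothetical network with L = 2: 2 inputs -> 2 hidden units -> 1 output
layers = [
    make_layer([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    make_layer([[1.0, 2.0]], [-1.0], identity),
]
print(model(layers, [3.0, 1.0]))  # [5.0]
```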

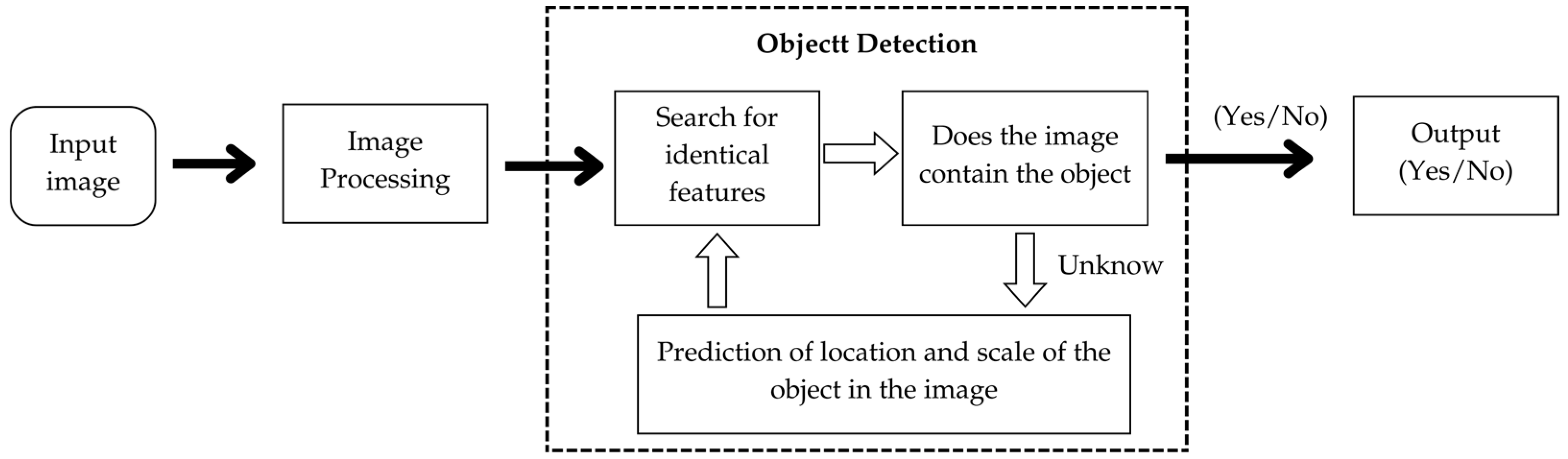

2.2. Object detection

Overall, deep learning leverages the structure of interconnected layers and the iterative adjustment of weights and biases to learn from data and make predictions. It has proven to be a powerful approach in many domains, enabling sophisticated models capable of handling complex tasks. When combined with object detection, a computer vision task that involves recognizing and localizing objects in an image or video, deep learning can be used to classify the objects found in a scene. The goal is not only to recognize that objects are present but also to provide precise bounding box coordinates that outline their positions. An object detection algorithm must therefore detect and classify multiple objects simultaneously and precisely locate them within images or video frames.

Figure 2. Object detection flow.


Human and weapon detection algorithms aim to accurately detect and localize human bodies or weapons in images or video frames. These algorithms typically leverage machine learning techniques, particularly deep learning, to learn discriminative features that separate humans from the background or other objects in the frame. The process of human detection involves training on labeled data in the form of images or video frames with bounding boxes drawn around the desired objects. During training, a machine learning model, such as a deep neural network, learns the discriminative features that differentiate humans from other objects. The model optimizes its parameters with respect to a defined objective function, aiming to minimize detection errors.

The following are examples of the key concepts in human detection.

A. Feature and Representation

Human detection algorithms rely on the extraction of relevant features or representations from the input data. These traits capture distinguishing human characteristics such as shape, texture, color, and motion. Haar-like features, Histogram of Oriented Gradients (HOG), Scale-Invariant Feature Transform (SIFT), and deep learning-based features produced from Convolutional Neural Networks (CNNs) are examples of commonly used features.
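To make the HOG idea concrete, the following simplified sketch computes image gradients with a centered difference and accumulates gradient magnitudes into per-cell orientation histograms. It deliberately omits parts of the full HOG descriptor (interpolation between bins and block normalization), so it illustrates the principle rather than serving as a drop-in feature extractor; the cell size and bin count are assumed defaults.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def cell_hog(img: Image, cell: int = 4, bins: int = 9) -> List[List[float]]:
    """Simplified HOG: one unsigned-orientation histogram (0-180 degrees) per cell.

    Gradients use the centered difference [-1, 0, 1]; border pixels are skipped.
    Full HOG also interpolates between bins and normalizes over blocks of cells;
    both steps are omitted here.
    """
    h, w = len(img), len(img[0])
    bin_width = 180.0 / bins
    histograms = []
    for cy in range(0, h - cell + 1, cell):
        for cx in range(0, w - cell + 1, cell):
            hist = [0.0] * bins
            for y in range(cy, cy + cell):
                for x in range(cx, cx + cell):
                    if not (0 < y < h - 1 and 0 < x < w - 1):
                        continue  # no centered difference available on the border
                    gx = img[y][x + 1] - img[y][x - 1]
                    gy = img[y + 1][x] - img[y - 1][x]
                    angle = math.degrees(math.atan2(gy, gx)) % 180.0
                    # Hard-assign the gradient magnitude to the bin containing its angle
                    hist[int(angle // bin_width) % bins] += math.hypot(gx, gy)
            histograms.append(hist)
    return histograms


# Hypothetical 8x8 image with a vertical edge: dark left half, bright right half
img = [[0.0] * 4 + [1.0] * 4 for _ in range(8)]
print([hist[0] for hist in cell_hog(img)])  # [3.0, 3.0, 3.0, 3.0]
```

For a vertical edge the gradient points horizontally (0 degrees), so all of the energy lands in the first bin.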

B. Machine learning

Machine learning plays a crucial role in human detection. To learn discriminative patterns that distinguish humans from non-human objects or backgrounds, supervised learning techniques such as Support Vector Machines (SVM), Random Forests, and Neural Networks are often used. These algorithms are trained on labeled datasets in which people are categorized as positive and non-humans as negative.
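As an illustration of training a classifier on positive (human) and negative (non-human) examples, the sketch below trains a linear SVM with a Pegasos-style stochastic sub-gradient method, one common way to minimize the regularized hinge loss; it is not presented as the method used in this study. The two-dimensional feature vectors are hypothetical stand-ins for real descriptors such as HOG features, and the regularization strength and epoch count are assumed values.

```python
import random
from typing import List, Sequence


def train_linear_svm(X: List[Sequence[float]], y: List[int], lam: float = 0.01,
                     epochs: int = 100, seed: int = 0) -> List[float]:
    """Pegasos-style stochastic sub-gradient descent on the regularized hinge loss.

    Labels use +1 for human and -1 for non-human. A constant 1.0 feature is
    appended to every sample so that the last weight acts as the bias term.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        for i in rng.sample(range(len(data)), len(data)):  # shuffled pass over the data
            t += 1
            eta = 1.0 / (lam * t)                           # decreasing step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]        # step for the L2 regularizer
            if margin < 1.0:                                 # hinge loss active for sample i
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w


def predict(w: Sequence[float], x: Sequence[float]) -> int:
    """Return +1 (human) or -1 (non-human) from the sign of the linear score."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical feature vectors: three "human" and three "non-human" samples
X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -1), (-1, -3)]
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])  # [1, 1, 1, -1, -1, -1]
```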

C. Classifier cascades

Classifier cascades are used to efficiently exclude non-human regions early in the detection process. A cascade consists of several stages, each with a classifier of increasing complexity that filters out non-human regions. This hierarchical strategy speeds up detection by reducing the number of locations that must be analyzed thoroughly.
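A cascade can be sketched as a loop that evaluates the stages in order and stops at the first stage whose score falls below its threshold. The two scoring functions here are hypothetical placeholders for trained stage classifiers, and the window format `(x, y, width, height)` is an assumption.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

Window = Tuple[int, int, int, int]               # (x, y, width, height): assumed window format
Stage = Tuple[Callable[[Window], float], float]  # (stage classifier score, rejection threshold)


def run_cascade(windows: Iterable[Window],
                stages: Sequence[Stage]) -> Tuple[List[Window], List[int]]:
    """Return the windows accepted by every stage and how many windows each stage evaluated."""
    evaluated = [0] * len(stages)
    accepted: List[Window] = []
    for win in windows:
        for k, (score, threshold) in enumerate(stages):
            evaluated[k] += 1
            if score(win) < threshold:
                break  # early rejection: later, costlier stages never see this window
        else:
            accepted.append(win)  # for-else: runs only if no stage rejected the window
    return accepted, evaluated


# Hypothetical stages: a cheap size check first, then a shape check
stages = [
    (lambda win: win[2], 20.0),          # reject windows narrower than 20 px
    (lambda win: win[3] / win[2], 1.5),  # keep tall windows (height / width >= 1.5)
]
windows = [(0, 0, 10, 10), (5, 5, 40, 80), (10, 10, 40, 40), (20, 0, 30, 70)]
accepted, evaluated = run_cascade(windows, stages)
print(accepted)   # [(5, 5, 40, 80), (20, 0, 30, 70)]
print(evaluated)  # [4, 3]
```

The second stage runs on only three of the four windows, which is the saving a cascade is designed to provide.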

D. Metrics of Evaluation

Evaluation metrics are used to measure the performance of human detection algorithms. Precision, recall, and the F1 score are common measures that assess the algorithm's capacity to recognize humans while reducing false positives and false negatives. To assess the accuracy of bounding box localization, additional metrics such as mean Average Precision and Intersection over Union are utilized.
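The sketch below computes precision, recall, and F1 from true-positive, false-positive, and false-negative counts, together with IoU for two axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`. In practice a detection is usually counted as a true positive when its IoU with a ground-truth box reaches a threshold such as 0.5; the counts and boxes below are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision = TP/(TP+FP), recall = TP/(TP+FN), F1 = their harmonic mean."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


print(precision_recall_f1(tp=8, fp=2, fn=4))  # precision 0.8, recall about 0.667, F1 about 0.727
print(iou((0, 0, 10, 10), (5, 5, 15, 15)))    # 25 / 175, about 0.143
```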

E. COCO dataset

The COCO (Common Objects in Context) dataset is a large-scale image recognition dataset used for various tasks, including object detection, instance segmentation, and image captioning, and it serves as a dependable resource for training and evaluating models. Each image in the dataset is annotated with bounding boxes that enclose instances of the object categories shown in the frame. In addition, the dataset includes segmentation masks for each object instance as well as descriptive captions that explain the image content. These comprehensive annotations make the COCO dataset invaluable for advancing computer vision research.
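COCO annotation files are JSON documents with `images`, `annotations`, and `categories` lists, and each annotation's `bbox` is stored as `[x, y, width, height]` in pixels, measured from the top-left corner of the image. The sketch below reads a tiny hand-written annotation (the file name and box values are made up) and converts each box to corner form `(x_min, y_min, x_max, y_max)`.

```python
import json
from collections import defaultdict
from typing import Dict, List, Tuple

Corners = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def boxes_by_image(coco_json: str) -> Dict[int, List[Tuple[str, Corners]]]:
    """Group COCO boxes per image id, converting [x, y, width, height] to corners."""
    data = json.loads(coco_json)
    names = {c["id"]: c["name"] for c in data["categories"]}
    out: Dict[int, List[Tuple[str, Corners]]] = defaultdict(list)
    for ann in data["annotations"]:
        x, y, w, h = ann["bbox"]  # COCO: top-left corner plus width and height
        out[ann["image_id"]].append((names[ann["category_id"]], (x, y, x + w, y + h)))
    return dict(out)


# Hypothetical single-image annotation in COCO layout
sample = json.dumps({
    "images": [{"id": 1, "file_name": "frame_0001.jpg", "width": 640, "height": 480}],
    "annotations": [{"id": 10, "image_id": 1, "category_id": 1,
                     "bbox": [100.0, 50.0, 40.0, 120.0], "area": 4800.0, "iscrowd": 0}],
    "categories": [{"id": 1, "name": "person", "supercategory": "person"}],
})
print(boxes_by_image(sample))  # {1: [('person', (100.0, 50.0, 140.0, 170.0))]}
```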

F. SSD MobileNet V2

SSD MobileNetV2 is a widely used object detection framework that combines the Single Shot MultiBox Detector (SSD) architecture with the MobileNetV2 convolutional neural network backbone, and it is designed to strike a balance between accuracy and computational efficiency. Its two primary components are the backbone network and the detection head. The backbone network acts as a feature extractor, extracting relevant features from the input image. The detection head predicts object bounding boxes and their class probabilities. It consists of a sequence of convolutional layers with diminishing spatial resolution, each of which feeds a set of prediction layers. Every location on these feature maps is associated with a set of default bounding boxes of varied aspect ratios and sizes, and the prediction layers output offsets relative to these boxes together with class scores. The advantages of this model lie in its detection accuracy and its compatibility with mobile and embedded devices. SSD MobileNetV2 has made significant inroads into real-time object detection applications such as autonomous driving, mobile robots, and intelligent surveillance systems. Its efficient architecture enables real-time object detection on devices with limited processing resources, making it well suited to scenarios requiring low latency and high accuracy.
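The default boxes can be sketched using the scale rule from the original SSD paper: for $m$ feature maps, $s_k = s_{min} + \frac{s_{max} - s_{min}}{m - 1}(k - 1)$ with $s_{min} = 0.2$ and $s_{max} = 0.9$, and each box has width $s_k\sqrt{a_r}$ and height $s_k/\sqrt{a_r}$ for aspect ratio $a_r$. The feature map sizes and aspect ratios below are hypothetical, the extra aspect-ratio-1 box that SSD adds at scale $\sqrt{s_k s_{k+1}}$ is omitted, and concrete SSD MobileNetV2 configurations may use different values.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (cx, cy, w, h), normalized to the [0, 1] image frame


def ssd_scales(m: int, s_min: float = 0.2, s_max: float = 0.9) -> List[float]:
    """Linearly spaced scales s_k = s_min + (s_max - s_min) * (k - 1) / (m - 1)."""
    if m < 2:
        raise ValueError("need at least two feature maps")
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]


def default_boxes(feature_sizes: Sequence[int],
                  aspect_ratios: Sequence[float] = (1.0, 2.0, 0.5)) -> List[Box]:
    """One box per aspect ratio at the center of every cell of every square feature map."""
    boxes: List[Box] = []
    for f, s in zip(feature_sizes, ssd_scales(len(feature_sizes))):
        for i in range(f):
            for j in range(f):
                cx, cy = (j + 0.5) / f, (i + 0.5) / f
                for ar in aspect_ratios:
                    # Area stays s^2 while width / height equals the aspect ratio
                    boxes.append((cx, cy, s * math.sqrt(ar), s / math.sqrt(ar)))
    return boxes


boxes = default_boxes([4, 2])  # hypothetical 4x4 and 2x2 feature maps
print(len(boxes))  # 4*4*3 + 2*2*3 = 60
print(boxes[0])    # (0.125, 0.125, 0.2, 0.2)
```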

2.3. People Centric Development

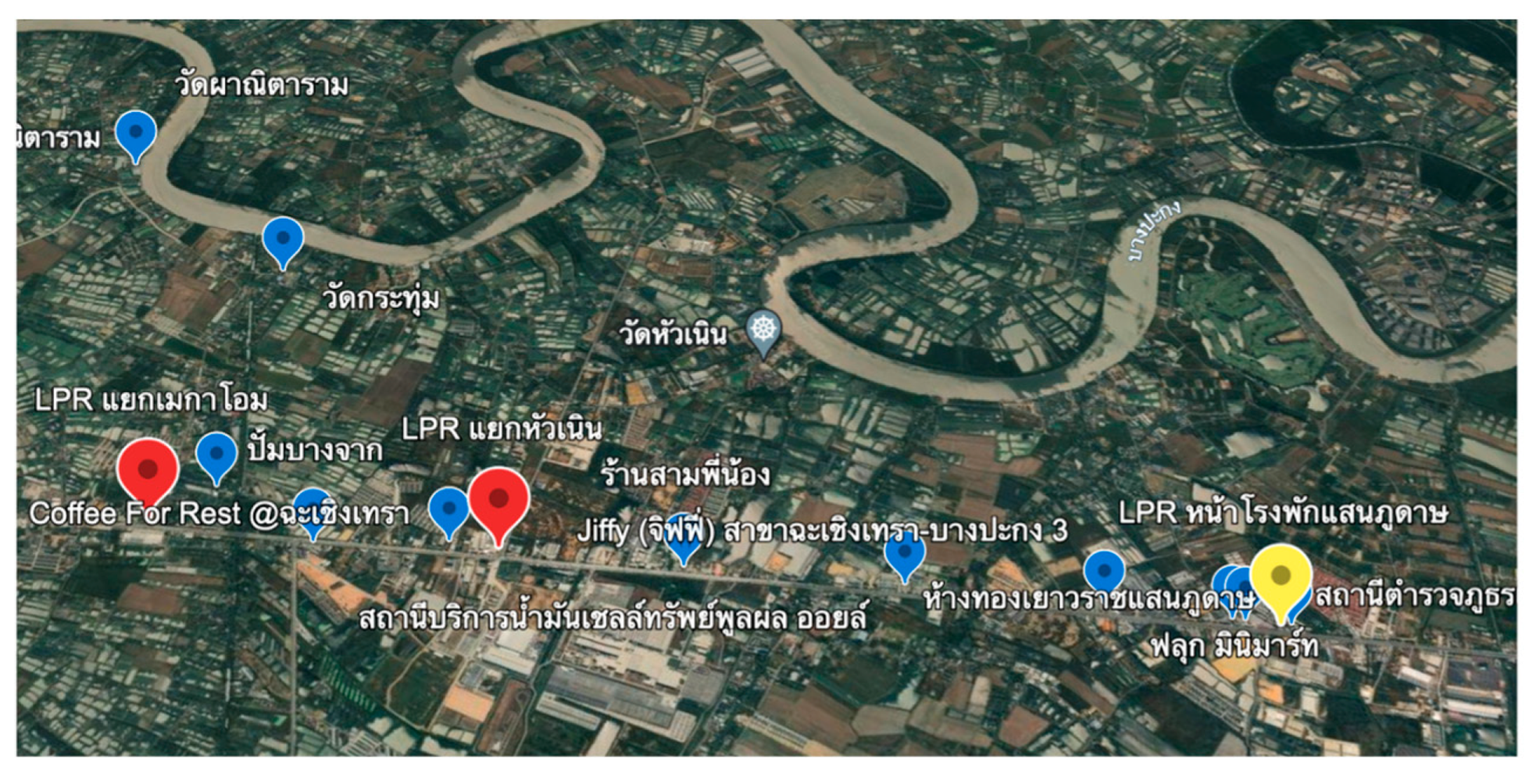

The development of an intelligent police system in Chachoengsao Province is based on a community-centered research approach that involves active participation from various stakeholders. The methodology comprises four primary phases:

A. Community Engagement and Data Collection

The community engagement process is designed to ensure that the system addresses local needs and concerns effectively.

Structured questionnaires, consisting of open-ended and closed-ended questions, were developed to gather comprehensive feedback. Open-ended questions allowed participants to express their opinions freely and provide detailed insights, while closed-ended questions facilitated the collection of quantifiable data.

Sampling and Participant Selection: Data collection targeted a diverse group of at least 200 participants from 19 subdistricts in Chachoengsao Province. The sampling process ensured representation of key stakeholders, including residents of high-crime areas, law enforcement officials, and local business owners. The sample size and confidence level (95-97%) ensured statistical reliability.
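The link between sample size, confidence level, and reliability can be made concrete with the standard margin-of-error formula for a proportion, $e = z\sqrt{p(1-p)/n}$. The sketch below assumes simple random sampling and the conservative value $p = 0.5$; how the study's sample size was actually determined is not stated, so these figures are illustrative only. Because at least 200 people were surveyed, $n = 200$ gives the largest margin.

```python
import math
from statistics import NormalDist


def margin_of_error(n: int, confidence: float, p: float = 0.5) -> float:
    """Half-width of a normal-approximation confidence interval for a proportion."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided critical value
    return z * math.sqrt(p * (1 - p) / n)


for conf in (0.95, 0.97):
    print(conf, round(margin_of_error(200, conf), 3))
# 0.95 0.069
# 0.97 0.077
```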

Multiple statistical methods were employed to analyze survey results:

▪ Descriptive statistics (e.g., percentage, frequency, mean, and standard deviation) provided an overview of public needs.

▪ Inferential statistics, such as t-tests and ANOVA, identified differences across demographic groups (e.g., gender, age, income, and education level).

▪ Chi-square tests were used to evaluate relationships between variables, while hypothesis testing methods validated findings.
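For the chi-square test of independence, the statistic is $\chi^2 = \sum_{i,j} (O_{ij} - E_{ij})^2 / E_{ij}$, where the expected count $E_{ij}$ is the row total times the column total divided by the grand total, with $(r-1)(c-1)$ degrees of freedom. The sketch below uses a hypothetical 2x2 table, not data from this study. With one degree of freedom the p-value follows from the standard normal distribution; larger tables need the chi-square distribution, for example via SciPy's `scipy.stats.chi2_contingency`, which by default applies Yates' continuity correction to 2x2 tables and therefore reports a slightly different value.

```python
import math
from statistics import NormalDist
from typing import List, Tuple


def chi_square_independence(table: List[List[float]]) -> Tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(r) for r in table]
    col_totals = [sum(c) for c in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Hypothetical counts: rows = two demographic groups, columns = "yes" / "no" answers
table = [[60, 40], [40, 60]]
chi2, dof = chi_square_independence(table)
# For dof = 1, chi2 is a squared standard normal, so p = 2 * (1 - Phi(sqrt(chi2)))
p_value = 2 * (1 - NormalDist().cdf(math.sqrt(chi2)))
print(chi2, dof, round(p_value, 4))  # 8.0 1 0.0047
```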

B. Development of Collaborative Networks

A network of stakeholders was established to facilitate data collection and system development. Collaborative efforts involved local government agencies, law enforcement, private organizations, and community groups. Stakeholders provided insights on:

▪ Identifying high-risk areas for theft and other crimes.

▪ Proposing system functionalities tailored to the specific needs of the community.

▪ Ensuring transparency and trust through regular consultations and information sharing.

C. System Development and Prototype Testing

The intelligent police system was developed based on the data collected and analyzed. Key steps included:

High-risk areas were identified based on crime statistics and community feedback. These areas were equipped with advanced technology such as intelligent CCTV systems and weapon detection sensors.

Artificial intelligence (AI) algorithms were incorporated to enable real-time analysis and alert generation. For example, the system could identify suspicious behaviors, vehicles, or individuals carrying weapons.

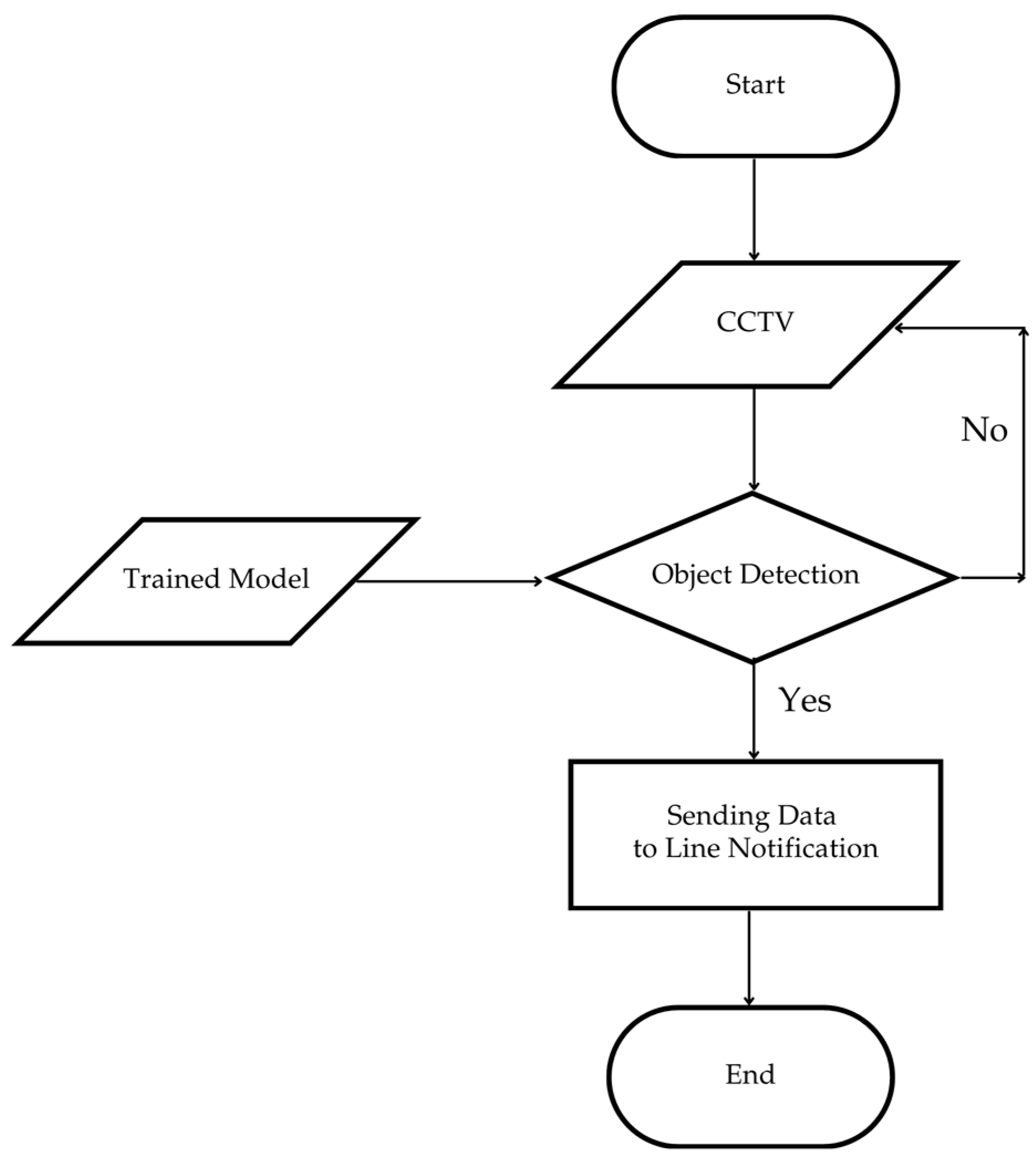

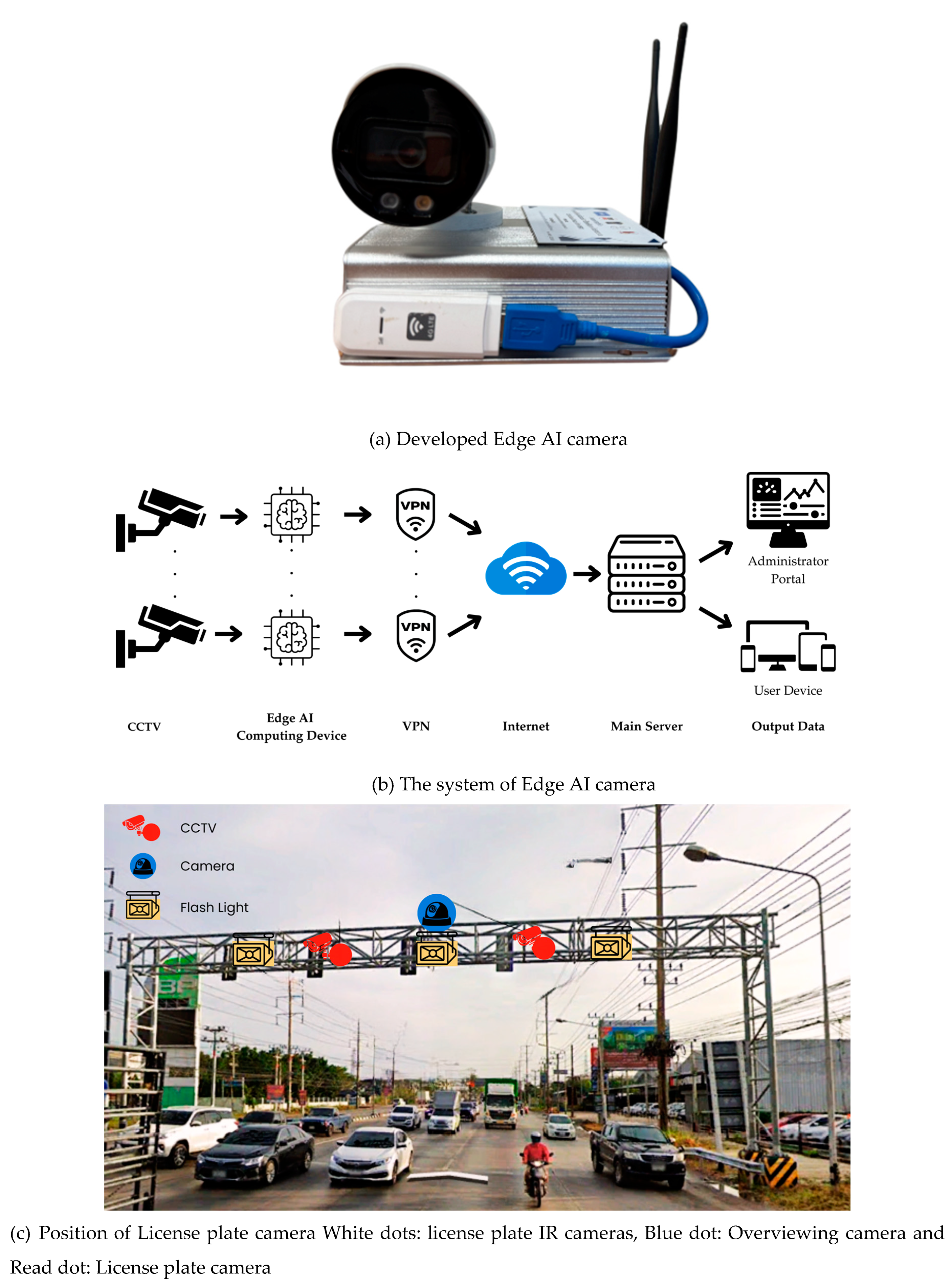

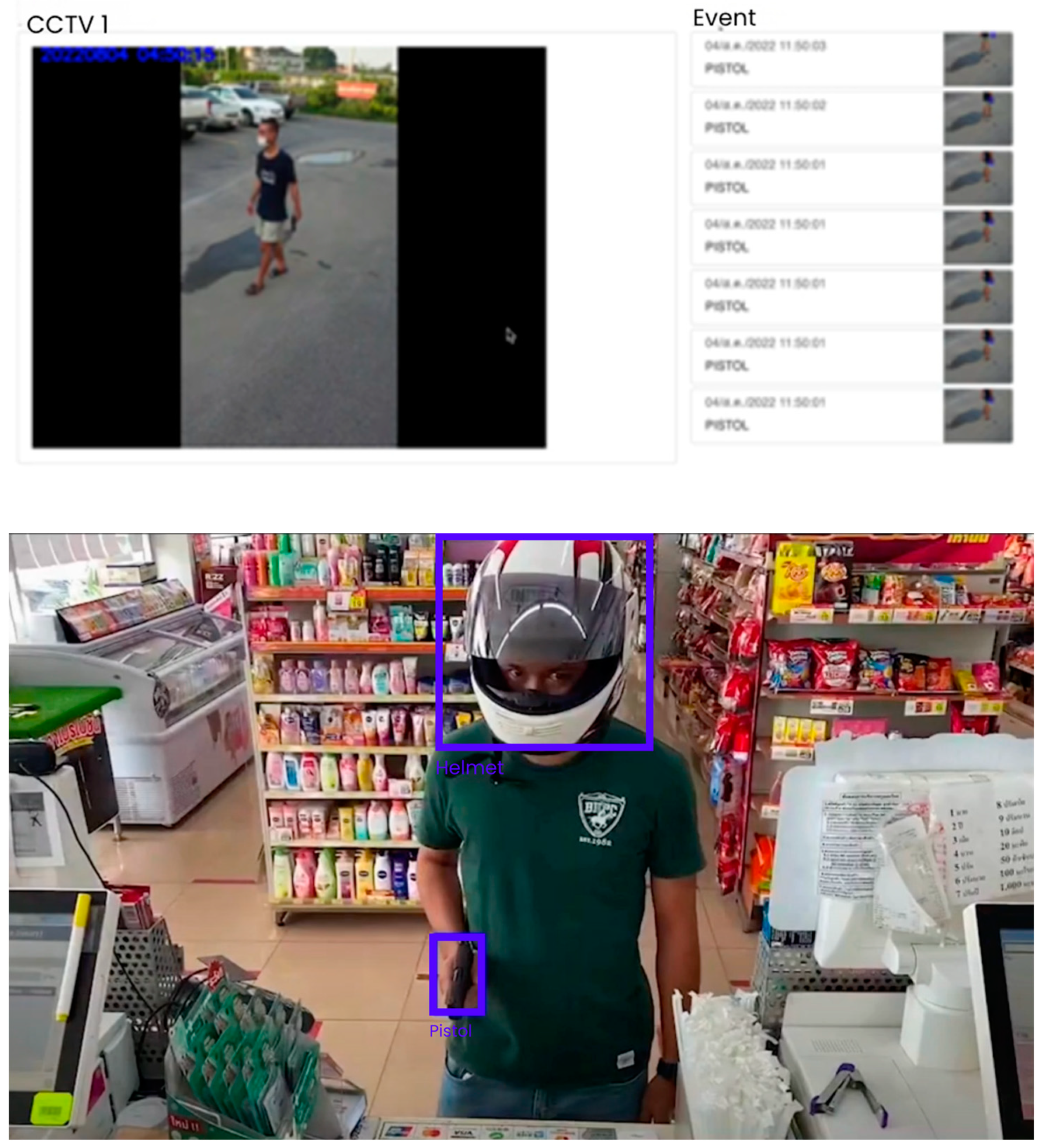

The prototype Edge AI cameras shown in Figure 3(a) were installed at 20 high-priority locations. During the pilot phase, alerts generated by the system were sent to law enforcement and local stakeholders via digital communication platforms (e.g., Line). Feedback from these trials was used to refine system performance and functionality. An intelligent modular system for crime detection in Chachoengsao City has been developed. It is designed to communicate with the cloud and to perform AI-based processing autonomously, so that it can send alerts to the relevant individuals independently. The module also records data on an integrated SD card. The system is capable of detecting weapons (e.g., knives and guns) and helmets. The AI technology in this project integrates embedded cameras with an Edge AI system that processes data directly on a specially developed board. The processed data is then transmitted to the cloud and subsequently to a server. This design distributes the AI analysis between the embedded system and the cloud system, significantly reducing the volume of transmitted data compared with traditional systems. This approach ensures timely notifications to users, reduces operational costs, and enhances the overall effectiveness of the intelligent policing system.

The system's working principle is illustrated in Figure 3(b). The system database is connected to the website of the Smart City Office, ensuring seamless integration and accessibility. Only the necessary data, such as captured event images, is transferred to the cloud to inform the police and other authorized members.

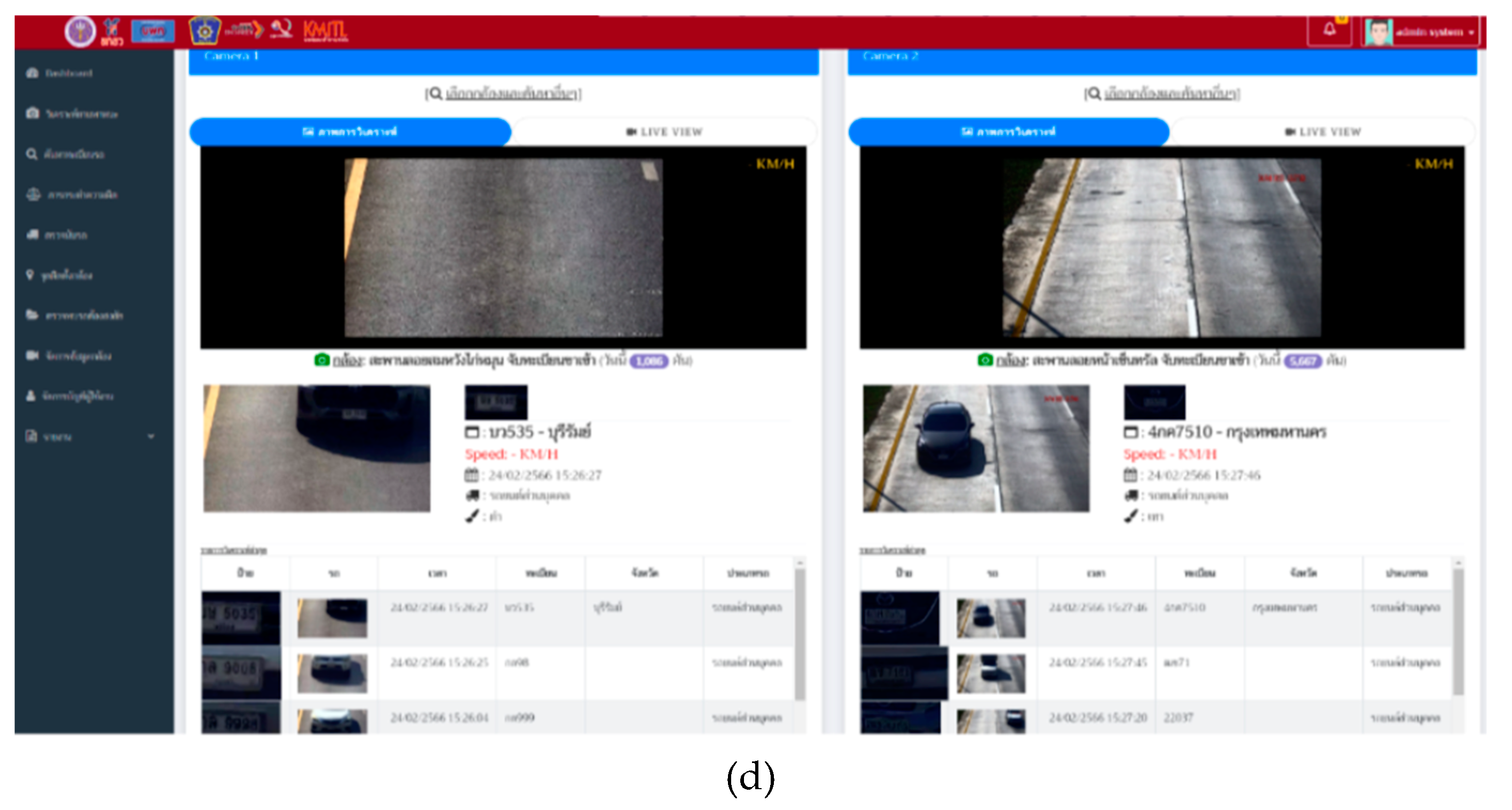

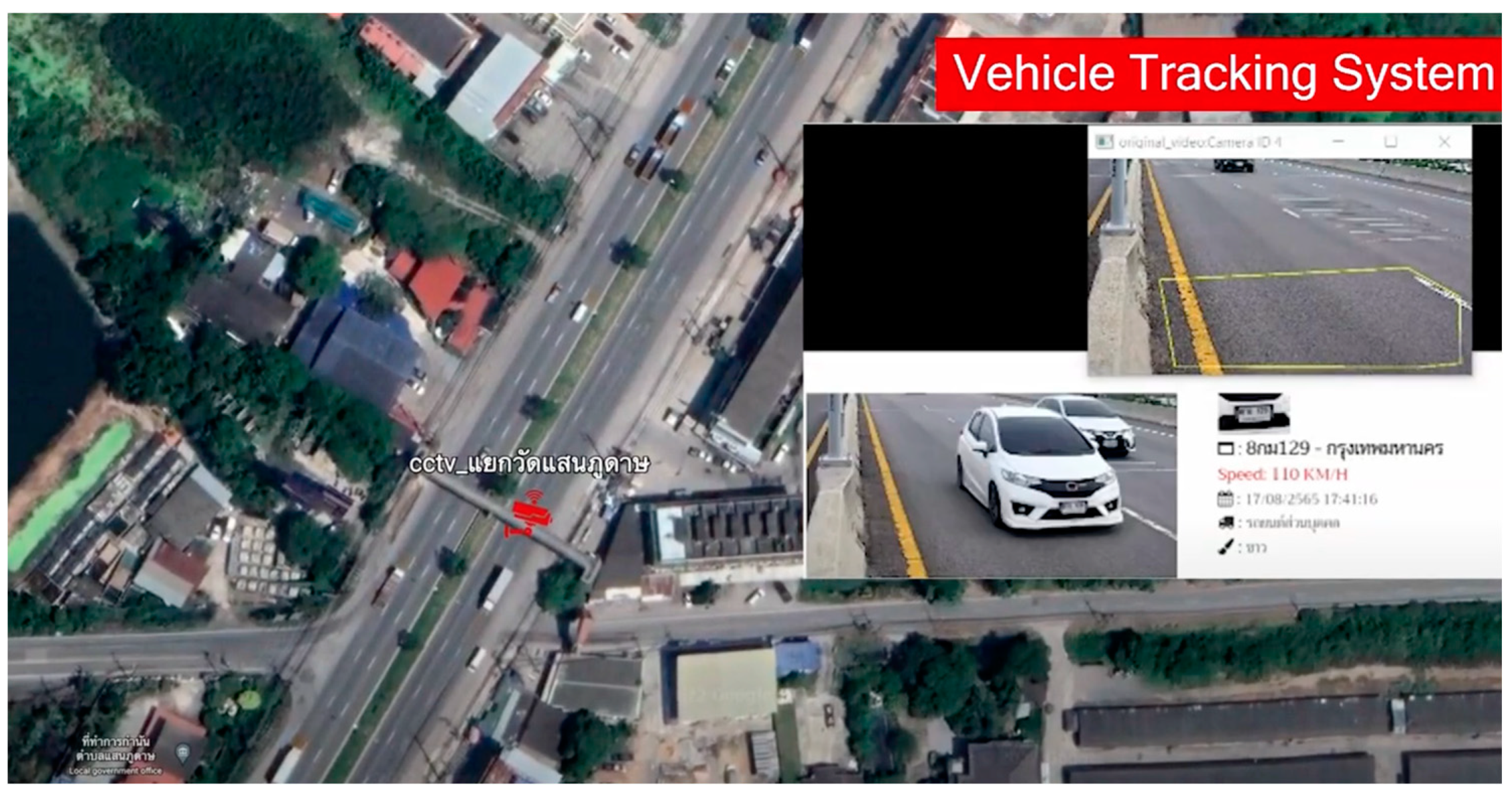

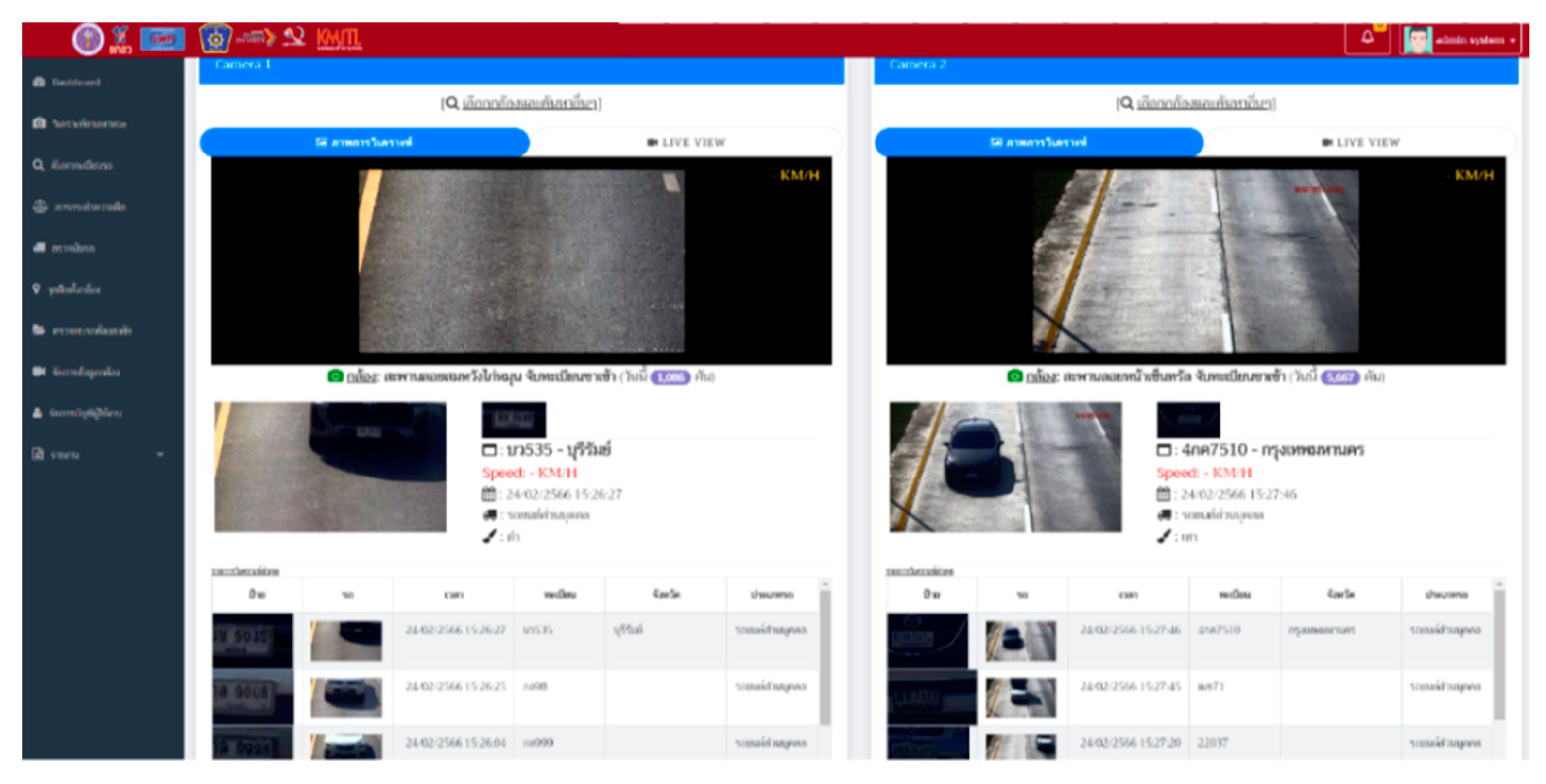

Figure 3(c) and (d) show the outdoor hardware of the license plate cameras and the software developed for license plate detection, respectively. In Figure 3(c), there are six cameras used for license plate detection, infrared (IR) license plate detection under low-light conditions, and overview monitoring.

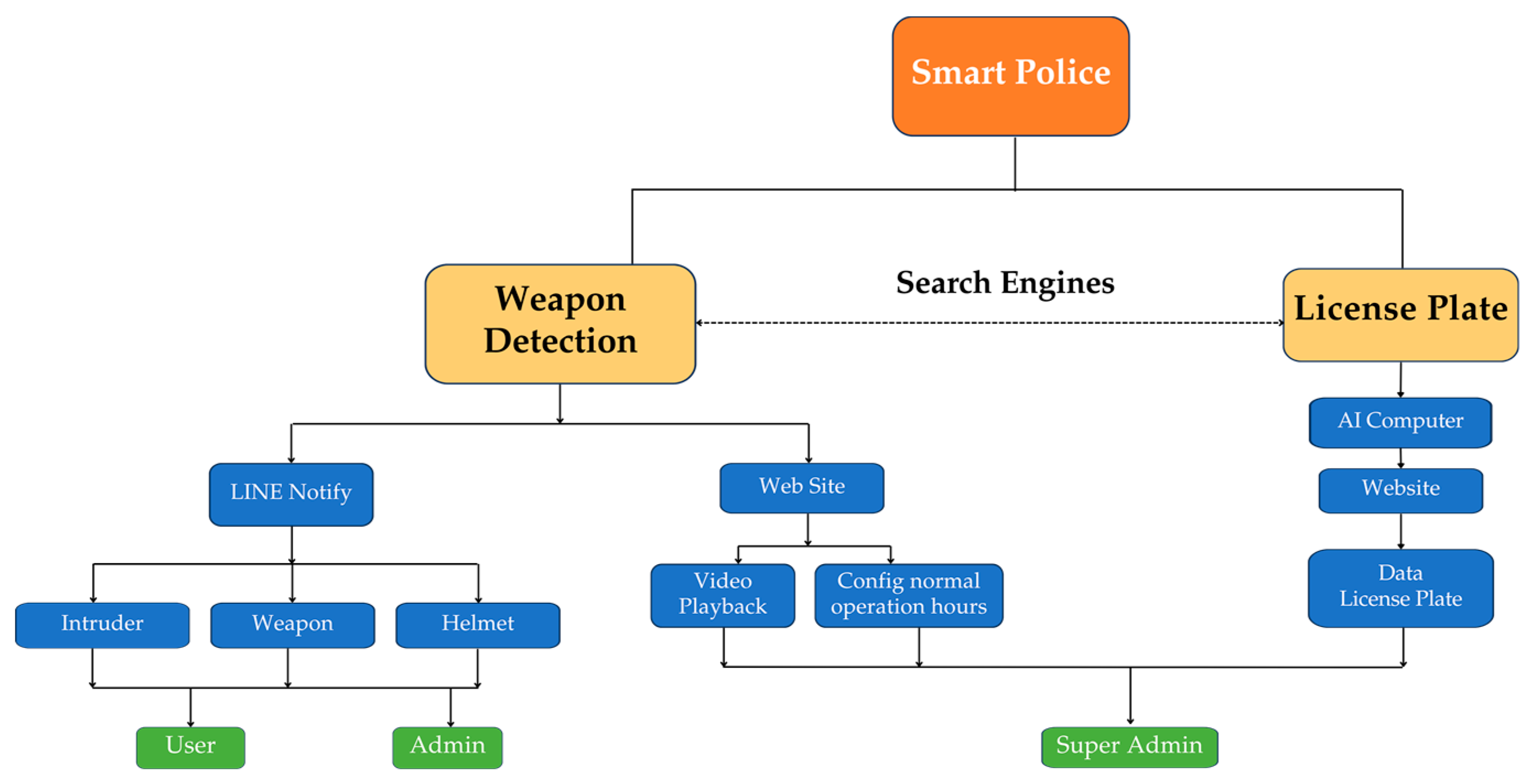

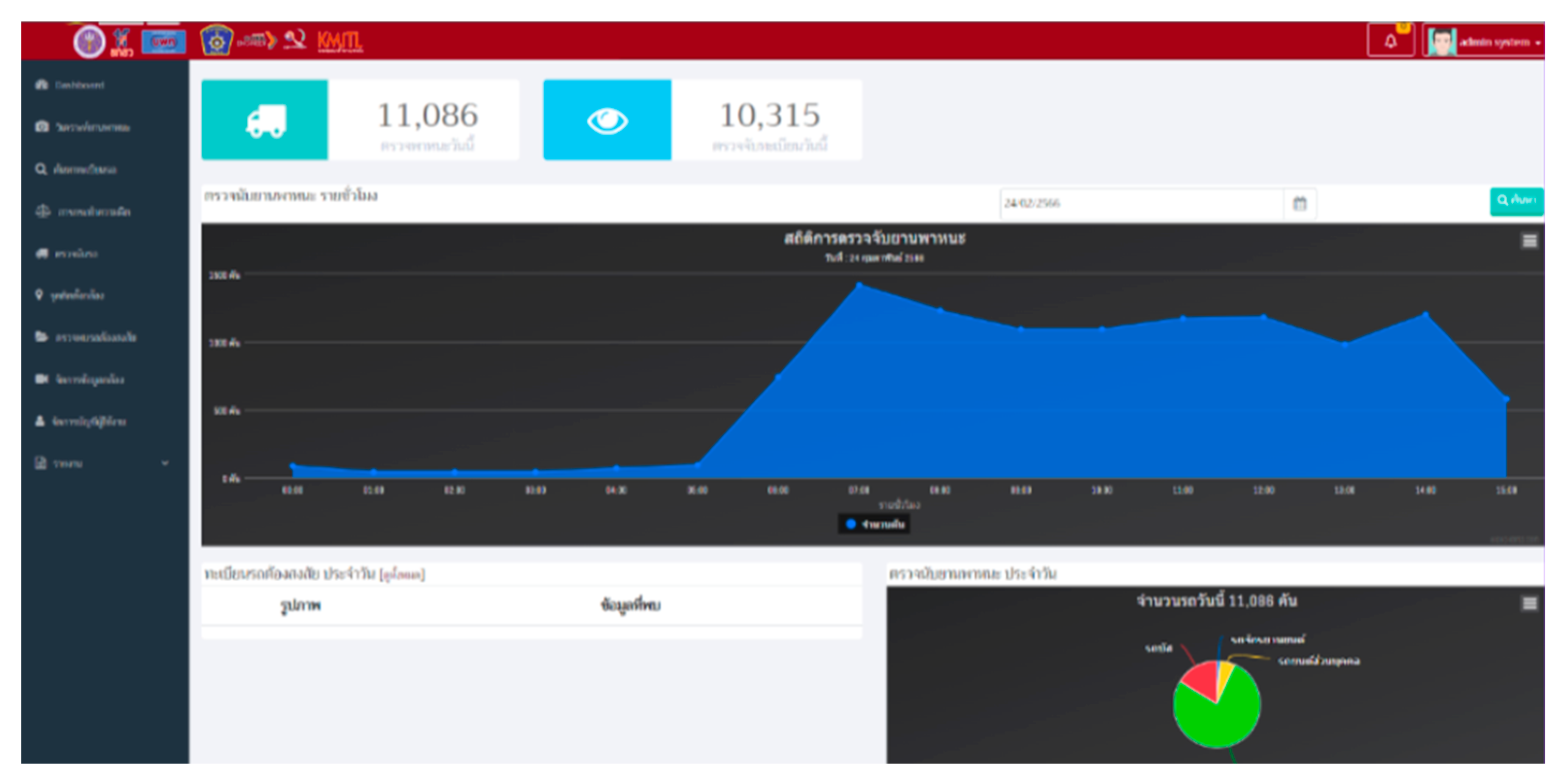

The Smart Police System consists of two main components: the Theft Alert System and the Vehicle Analysis System for road surveillance. These two systems are interconnected and accessible through dedicated websites for each system.

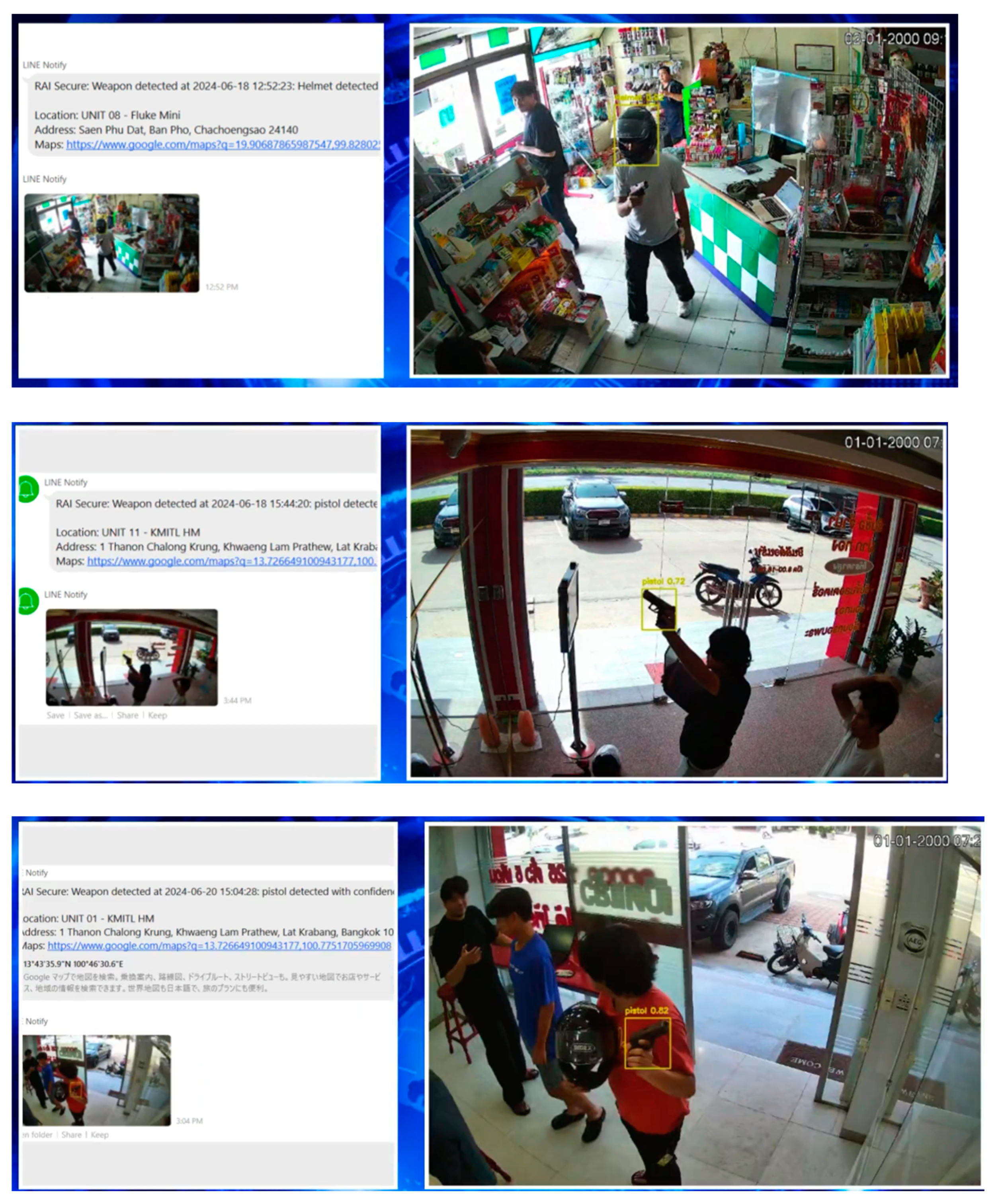

Theft Alert System (Weapon Detection)

The theft alert system is installed in locations prone to theft, such as convenience stores, gold shops, temples, schools, and hospitals. It is typically set up within the premises or at entrances to detect abnormal events, such as carrying weapons (e.g., knives or firearms), wearing helmets within restricted areas, or unauthorized access during off-hours (this feature can be enabled or disabled as needed). The system can also limit access based on different levels of authorization. Alerts are sent via Line Notify, accompanied by images of unusual activities.

Access to the system is divided into two levels:

Access to the database system is restricted exclusively to the Super Admin Level to ensure data security and integrity.

Vehicle Analysis System for Road Surveillance

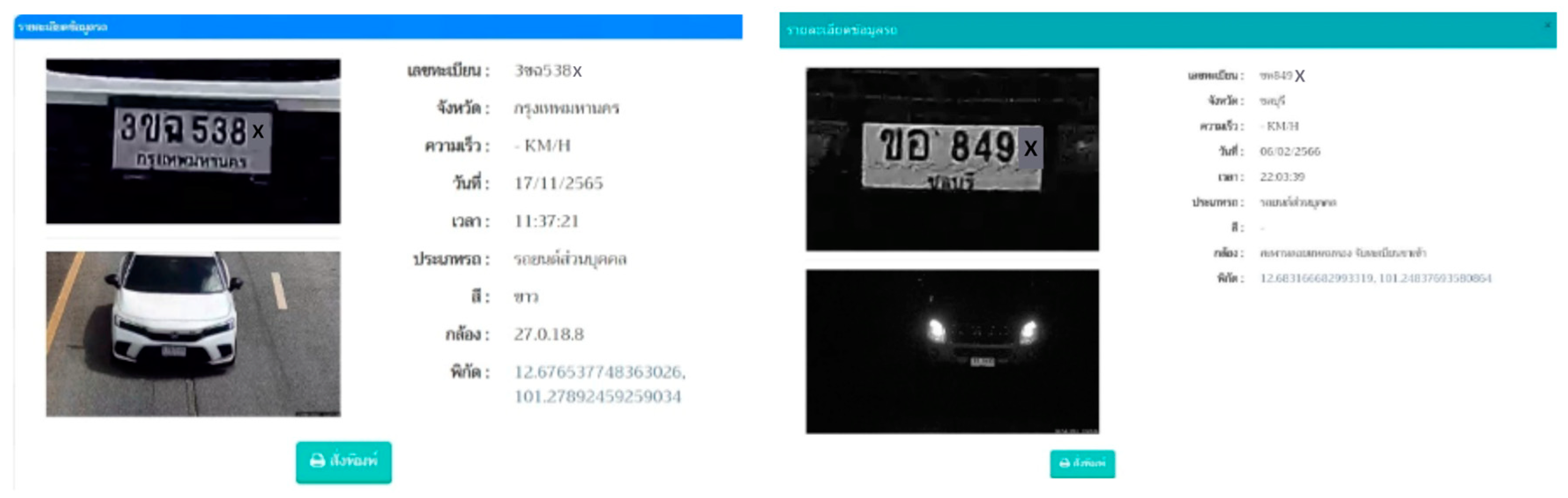

This system utilizes AI-powered computer processing to analyze vehicle data collected from license plate cameras installed in specific areas. It compiles information on passing vehicles and allows for data retrieval through a web-based platform. Users can search for license plate information related to stolen vehicles to track escape routes and apprehend suspects.
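The plate search can be sketched as filtering stored plate reads for one plate and ordering them by time, which yields the sequence of camera locations the vehicle passed. The `PlateRead` record, the camera identifiers, the plate strings, and the normalization rule (removing spaces and dashes) are all hypothetical, since the database schema is not described here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List


@dataclass(frozen=True)
class PlateRead:
    plate: str         # plate text as read by an LPR camera
    camera_id: str     # hypothetical camera/location identifier
    seen_at: datetime  # time of the read


def normalize(plate: str) -> str:
    """Assumed rule: ignore spaces and dashes and compare case-insensitively."""
    return "".join(ch for ch in plate if ch not in " -").upper()


def route_of(plate: str, reads: Iterable[PlateRead]) -> List[str]:
    """Cameras that saw the plate, in time order, with consecutive repeats collapsed."""
    target = normalize(plate)
    hits = sorted((r for r in reads if normalize(r.plate) == target), key=lambda r: r.seen_at)
    route: List[str] = []
    for r in hits:
        if not route or route[-1] != r.camera_id:
            route.append(r.camera_id)
    return route


# Hypothetical reads from three cameras
reads = [
    PlateRead("XX 1234", "cam-B", datetime(2024, 1, 1, 10, 5)),
    PlateRead("YY 5678", "cam-A", datetime(2024, 1, 1, 10, 0)),
    PlateRead("XX-1234", "cam-A", datetime(2024, 1, 1, 10, 0)),
    PlateRead("XX 1234", "cam-B", datetime(2024, 1, 1, 10, 6)),
    PlateRead("XX 1234", "cam-C", datetime(2024, 1, 1, 10, 20)),
]
print(route_of("xx1234", reads))  # ['cam-A', 'cam-B', 'cam-C']
```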

Access to this system is strictly limited to System Administrators to comply with the Personal Data Protection Act (PDPA).

The interconnection between these systems is illustrated in Figure 4.

The operational process of the Theft Alert System is divided into five main steps, as illustrated in Figure 5. The details of each step are as follows:

▪ Image acquisition: This step involves obtaining images or video footage from CCTV cameras.

▪ Preprocessing: This step prepares the images for subsequent processing, which may include noise removal, light balance adjustment, and image resizing.

▪ Detection: This step detects weapons, helmets, and intruders during off-hours or specified times. The intruder alert mode can be toggled on or off based on predefined schedules.

▪ Notification: This step sends alerts via the Line Notify system to users and administrators.

▪ Data storage: This step stores the recorded events in a database for further investigation. Access to this system is restricted to administrators only.

Figure 5. Operational Process of the Theft Alert System.
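The five steps above can be sketched as a small per-frame pipeline. The detector and the alert sender are injected as plain functions because the on-device model and the Line Notify integration are not shown here; the watched labels, confidence threshold, off-hours window, contrast-stretch preprocessing, and in-memory event list are all assumptions. A frame produces an alert and a stored event only when something abnormal is found, which mirrors the idea of sending only event data onward.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import Callable, List, Optional, Sequence, Tuple

Frame = List[List[int]]        # grayscale frame as rows of 0-255 pixel values (assumed)
Detection = Tuple[str, float]  # (label, confidence) returned by the detector


@dataclass
class TheftAlertPipeline:
    detect: Callable[[Frame], Sequence[Detection]]  # hypothetical stand-in for the edge model
    send_alert: Callable[[str], None]               # hypothetical stand-in for the alert channel
    watch_labels: Tuple[str, ...] = ("knife", "gun", "helmet")
    intruder_mode: bool = False                     # intruder alerts can be toggled on or off
    off_hours: Tuple[time, time] = (time(22, 0), time(6, 0))
    min_confidence: float = 0.5
    events: List[dict] = field(default_factory=list)  # in-memory stand-in for the database

    def preprocess(self, frame: Frame) -> Frame:
        """Stretch pixel values to 0-255 as a simple light-balance step."""
        lo = min(min(row) for row in frame)
        hi = max(max(row) for row in frame)
        if hi == lo:
            return frame
        return [[(p - lo) * 255 // (hi - lo) for p in row] for row in frame]

    def in_off_hours(self, t: time) -> bool:
        start, end = self.off_hours
        if start <= end:
            return start <= t < end
        return t >= start or t < end  # window wraps past midnight

    def process(self, frame: Frame, now: time) -> Optional[dict]:
        """Preprocess, detect, alert, and store one acquired frame; return the event if any."""
        hits = [(label, conf) for label, conf in self.detect(self.preprocess(frame))
                if conf >= self.min_confidence]
        reasons = [label for label, _ in hits if label in self.watch_labels]
        if self.intruder_mode and self.in_off_hours(now) and any(l == "person" for l, _ in hits):
            reasons.append("intruder")
        if not reasons:
            return None  # nothing abnormal: no alert and no stored event for this frame
        event = {"time": now.isoformat(), "reasons": reasons}
        self.send_alert(f"Alert at {event['time']}: {', '.join(reasons)}")
        self.events.append(event)
        return event


alerts: List[str] = []
pipeline = TheftAlertPipeline(detect=lambda f: [("person", 0.9), ("knife", 0.8)],
                              send_alert=alerts.append)
print(pipeline.process([[10, 20], [30, 40]], time(14, 0)))
pipeline.intruder_mode = True
print(pipeline.process([[10, 20], [30, 40]], time(23, 30)))
```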


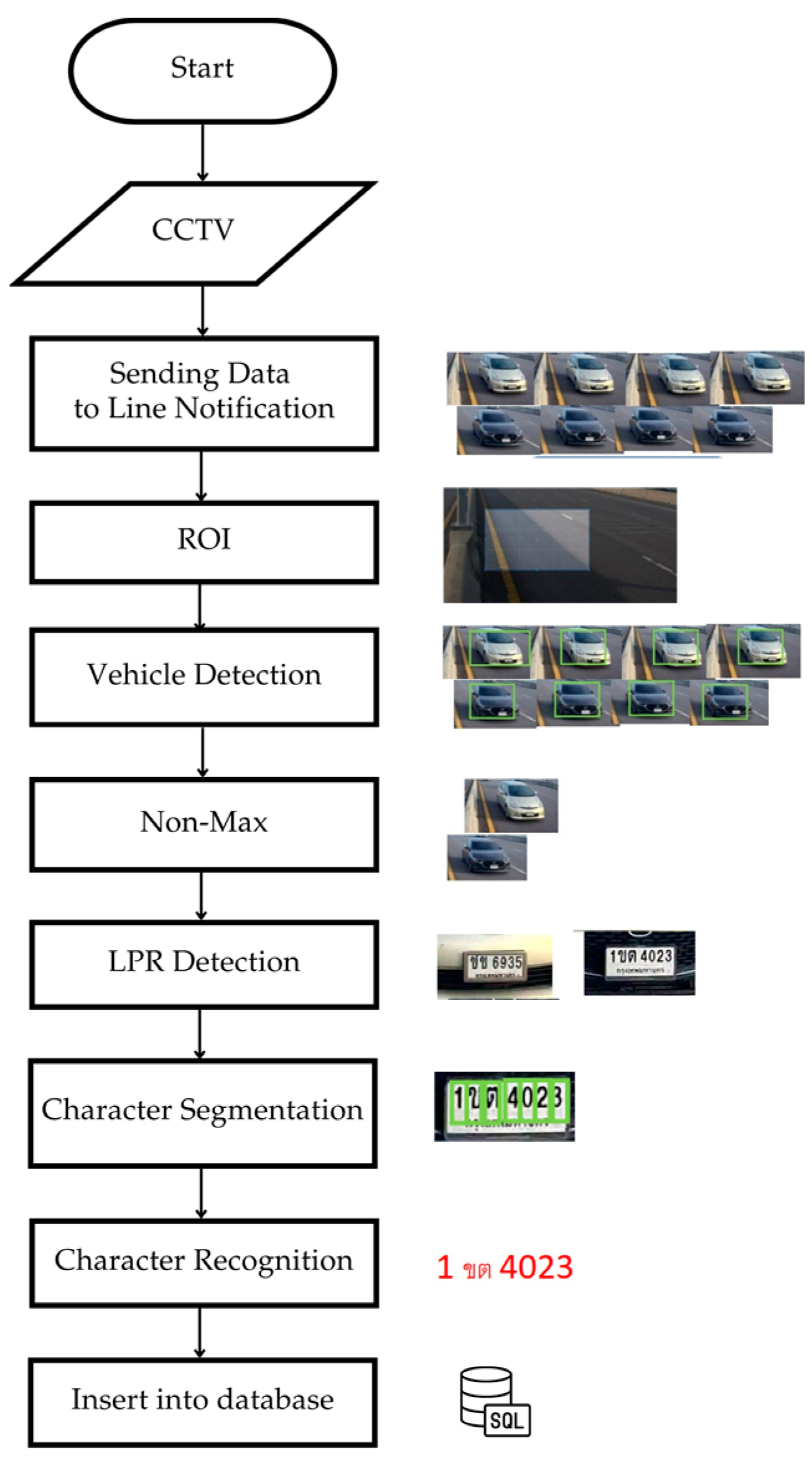

For the license plate recognition system, an AI-based processing system was developed in conjunction with License Plate Recognition (LPR) technology. This technology locates and reads vehicle license plates in images or video footage captured by CCTV cameras. The operational process of the developed system, which allows for rapid verification, is illustrated in Figure 6.

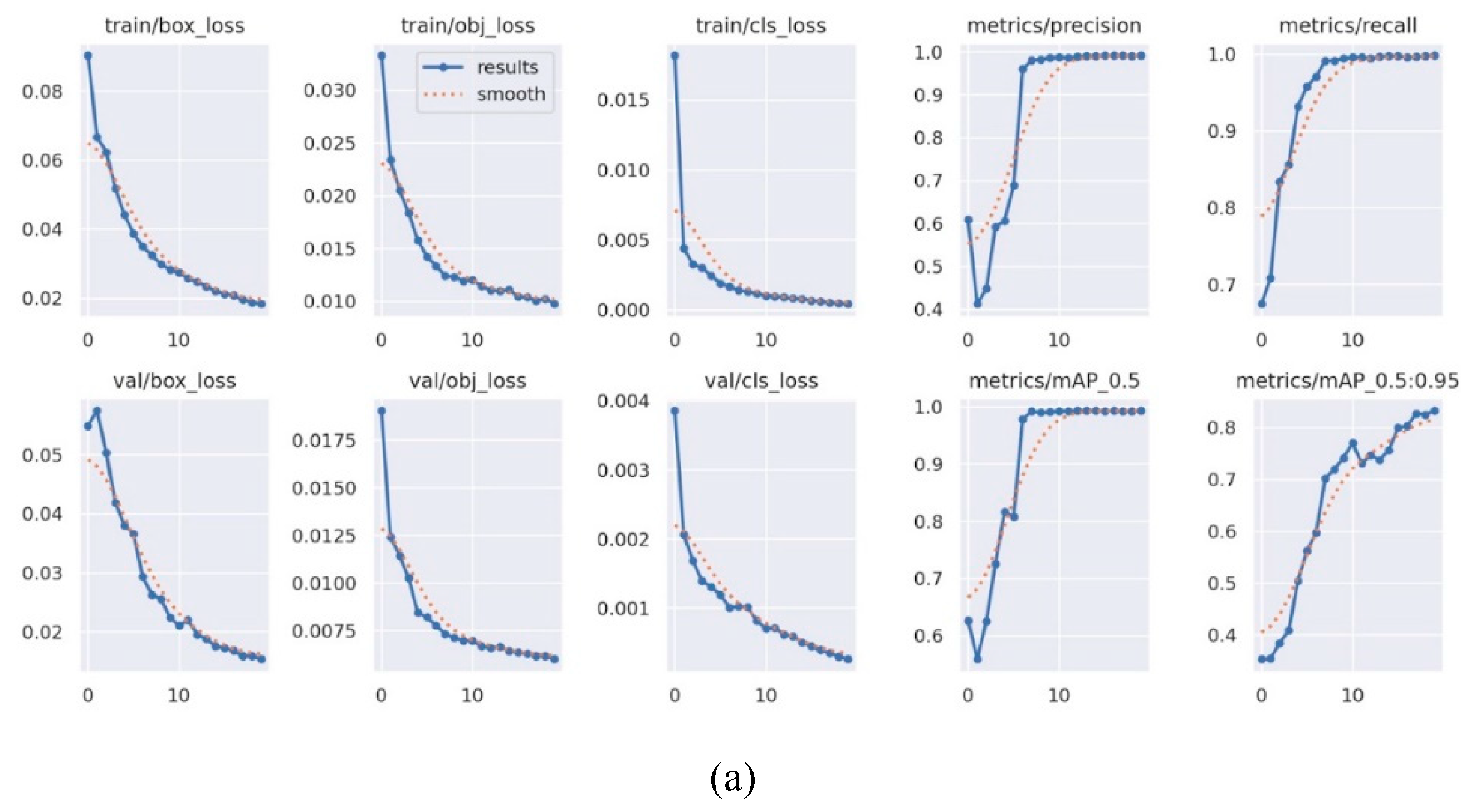

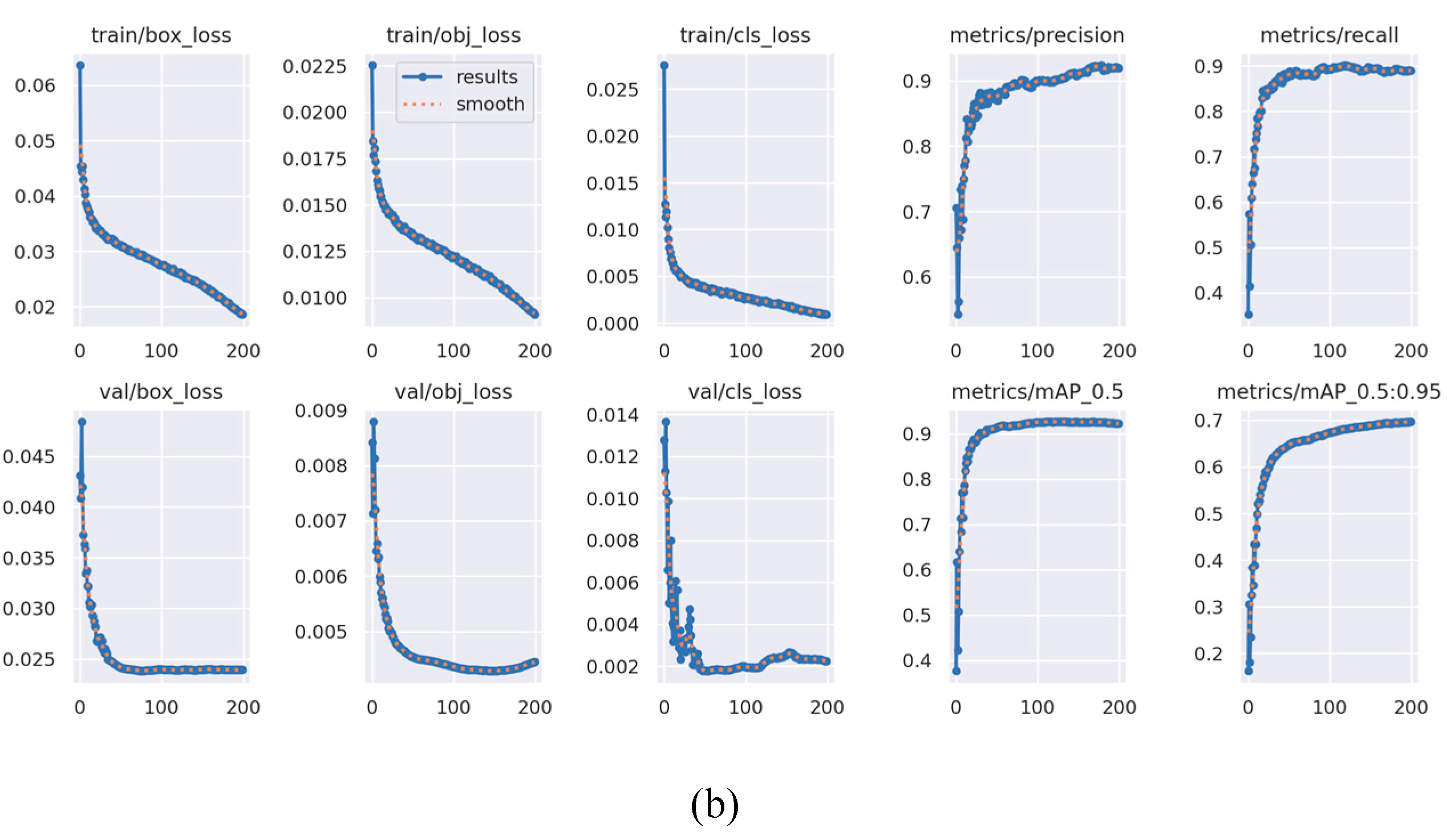

The training results for the vehicle license plate model are shown in Figure 7(a), and those for the Smart Police System are shown in Figure 7(b). The figures illustrate key metrics such as accuracy, recall, and mean average precision, together with three distinct types of loss: box, objectness, and classification. Box loss measures how well the algorithm locates the center of an object and how well the predicted bounding box covers the object. Objectness loss measures how well the model predicts whether an object is present, and classification loss measures how well it assigns the correct class. In addition, the box, objectness, and classification losses on the vehicle license plate validation data decrease significantly up to approximately epoch 5.

D. Knowledge Transfer and Capacity Building

The final phase focused on ensuring the sustainability of the system through knowledge transfer and capacity building:

▪ Training sessions were conducted for local users to familiarize them with system operations and basic troubleshooting.

▪ Law enforcement officials and IT staff received advanced training on maintaining and upgrading the system.

These efforts aimed to enable local stakeholders to independently manage and further develop the system over time.

By integrating community participation, advanced statistical analysis, collaborative networks, and innovative technology, this methodology ensures the development of a robust and sustainable intelligent police system tailored to the needs of Chachoengsao Province.

Figure 8 shows examples of the weapon and thief detection tests, in which notifications were successfully delivered via the LINE platform. The vehicle license plate detection system is illustrated in Figure 9. Additionally, a "Practical AI on Smart City" training program was conducted in collaboration with Provincial Police Region 2, as shown in the recruitment infographic for training applicants in Figure 10. The professional training aims to equip police officers with the skills to develop programs involving embedded AI and license plate recognition, enabling the community to adapt to and manage intelligent devices in the future and thereby contributing to the sustainability of the smart city. The developed field prototype has been tested for criminal tracking and has undergone continuous improvement. It has already been used to help capture some criminals.