Introduction

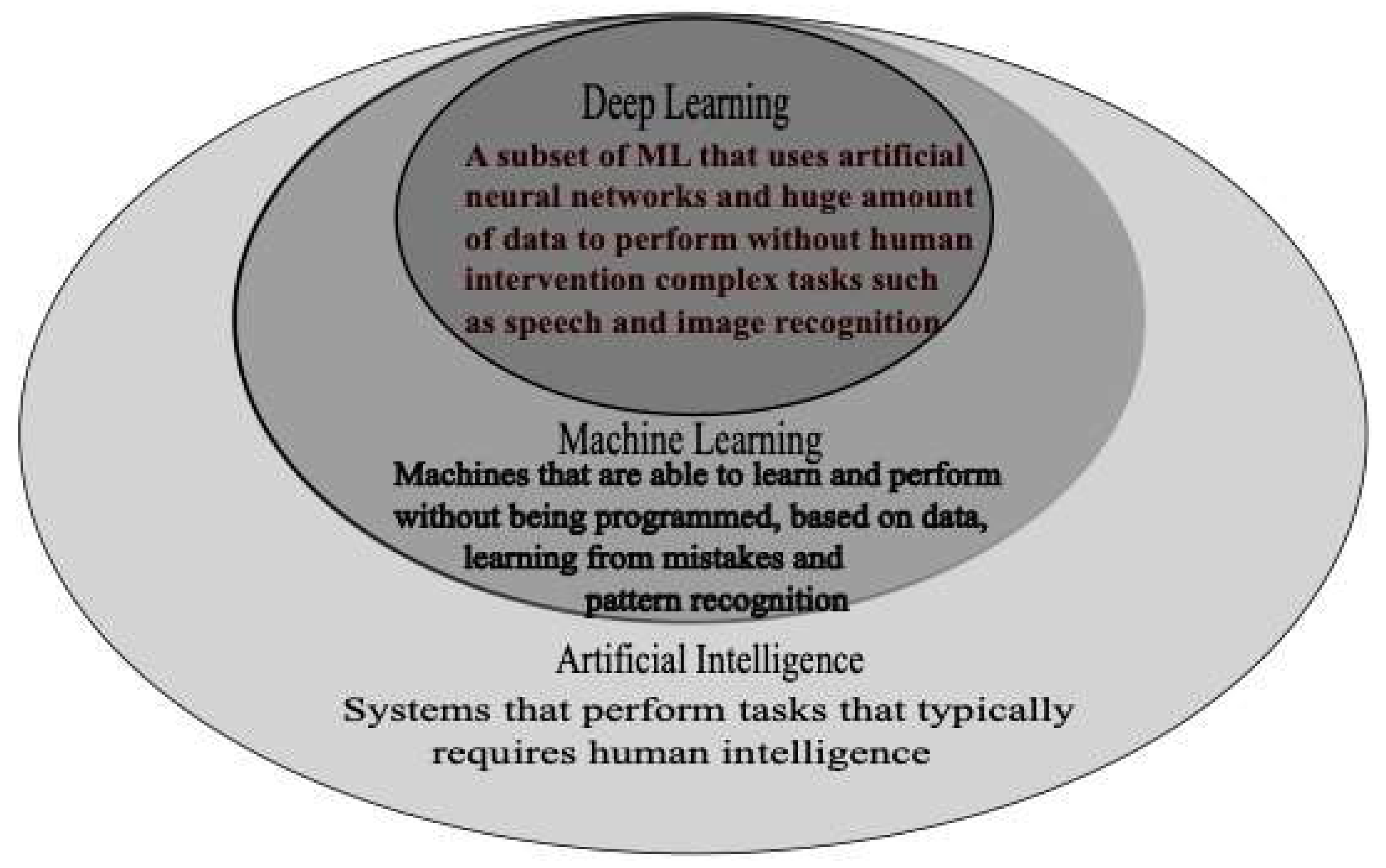

Artificial intelligence (AI) is a concept that refers to a variety of tools that use computer systems to simulate human intelligence. AI is becoming increasingly integrated into our daily lives through virtual assistants (Siri, Alexa, Google Assistant), personalized e-commerce recommendations, smart appliances, etc. As shown in

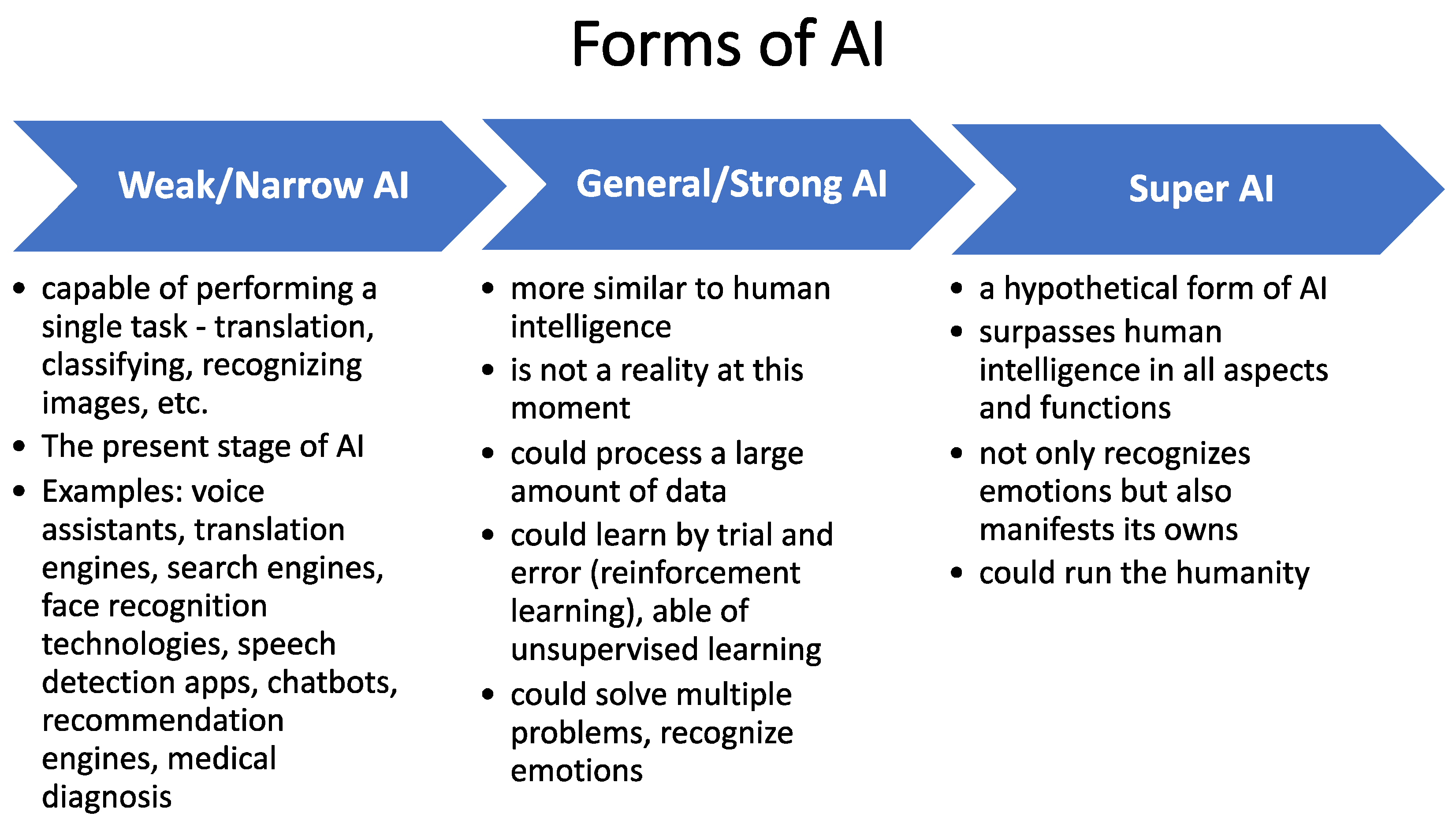

Figure 1, AI incorporates different technologies such as machine learning, deep learning, and natural language processing and it is expected to evolve from narrow AI to strong AI and possibly super AI (see

Figure 2). While narrow AI technologies are currently used in education and other fields, strong AI is still in the research stage and super AI is a prospect that raises more concerns than enthusiasm.

Besides offering promising prospects for the future, such as autonomous cars and AI-based health diagnostic tools, AI also presents fascinating opportunities for the educational sector. These include personalized education and intervention in the learning process, more efficient student management, time savings across instructors' entire workload, from planning to grading, support for online and distance education, and intelligent tutoring systems. However, these opportunities are accompanied by significant challenges. This paper explores the ethical issues raised by the use of artificial intelligence in education.

Learning Analytics, Algorithmic Transparency, and Data Privacy

One of the most important benefits of using AI in education is the possibility of offering personalized education, which involves tailoring resources and guidance to meet the specific needs of each student [1]. However, this requires gathering information about each student, including previous assignment results, background, and motivation. With the assistance of AI, this information can be used to create a kind of “diagnosis” that ideally leads to the best “treatment”, in other words, personalized education.

Learning analytics is the process of gathering and interpreting data about students involved in the learning process in order to improve the learning experience [2].

Learning analytics systems can be descriptive, predictive, or prescriptive. Descriptive analytics provide information about students and their past activities, including demographic information and previous studies. Predictive analytics offer insights into what is likely to happen to students, for example, whether they are at risk of dropping the class or failing an exam. Prescriptive analytics recommend actions the instructor can take to avoid negative outcomes and support the student's progress [3].
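The three types of learning analytics described above can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the `StudentRecord` fields, the hand-picked weights of the toy logistic risk model, the 0.5 threshold, and the suggested actions are illustrative assumptions, not features of any particular learning analytics system.

```python
from dataclasses import dataclass
from math import exp
from statistics import mean


@dataclass
class StudentRecord:
    # Hypothetical fields; real systems draw on platform logs, grades, enrollment data.
    name: str
    past_grades: list[float]   # earlier assignment results on a 0-100 scale
    logins_last_week: int      # activity on the learning platform
    missed_deadlines: int


def describe(s: StudentRecord) -> dict:
    """Descriptive analytics: summarize what has already happened."""
    return {
        "average_grade": round(mean(s.past_grades), 1),
        "logins_last_week": s.logins_last_week,
        "missed_deadlines": s.missed_deadlines,
    }


def predict_risk(s: StudentRecord) -> float:
    """Predictive analytics: estimated probability of failing the course.

    Toy logistic model with hand-picked (hypothetical) weights; a real system
    would learn its weights from historical student data.
    """
    z = 2.0 - 0.05 * mean(s.past_grades) - 0.2 * s.logins_last_week + 0.8 * s.missed_deadlines
    return 1.0 / (1.0 + exp(-z))


def prescribe(s: StudentRecord, threshold: float = 0.5) -> list[str]:
    """Prescriptive analytics: suggest instructor actions for at-risk students."""
    if predict_risk(s) < threshold:
        return []
    actions = ["schedule a one-to-one check-in"]
    if s.missed_deadlines > 0:
        actions.append("agree on a revised deadline plan")
    if s.logins_last_week < 2:
        actions.append("send a reminder about course materials")
    return actions


if __name__ == "__main__":
    ana = StudentRecord("Ana", [55, 48, 60], logins_last_week=1, missed_deadlines=2)
    print(describe(ana))                # {'average_grade': 54.3, 'logins_last_week': 1, 'missed_deadlines': 2}
    print(round(predict_risk(ana), 2))  # 0.66
    print(prescribe(ana))
```

Each layer builds on the previous one: the prescriptive step only acts on what the predictive model outputs, so any error in the risk estimate propagates directly into the instructor's recommendations.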

Any interaction between a student and an educational or administrative digital tool (an educational platform, a library catalog, an administrative management system, the campus video system, etc.) generates a digital footprint. Brooks et al. also mention room-access cards, internet access points, and wearable systems as possible data sources [4]. Through learning analytics, all these data can be used to improve learning and teaching. Before the COVID-19 pandemic, such data were collected mainly for online education, but the pandemic increased the use of digital tools and educational platforms across all forms of instruction, generating a vast amount of educational data.
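To make the notion of a digital footprint concrete, the following Python sketch shows how events from several of the sources mentioned above might be merged into a per-student profile. The log format, source names, and records are hypothetical. The sketch also hints at why these data are sensitive: once every source is keyed to the same student identifier, a single profile combines academic, library, and physical-access activity.

```python
from collections import Counter, defaultdict

# Hypothetical interaction log: (student_id, data_source, action).
# The sources mirror examples named in the text; the records are invented.
events = [
    ("s01", "learning_platform", "open_quiz"),
    ("s01", "learning_platform", "submit_quiz"),
    ("s01", "library_catalog", "search"),
    ("s01", "room_access_card", "enter_lab"),
    ("s02", "learning_platform", "open_quiz"),
    ("s02", "wifi_access_point", "connect"),
]


def build_footprints(log):
    """Aggregate raw events into a per-student count of interactions by source."""
    footprints = defaultdict(Counter)
    for student_id, source, _action in log:
        footprints[student_id][source] += 1
    return footprints


if __name__ == "__main__":
    for student, counts in sorted(build_footprints(events).items()):
        print(student, dict(counts))
```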

These data, which are essential for the personalization process, entail privacy and security risks. EU legislation, already protective of individual privacy, has been adapted to the challenges of AI. The AI Act, adopted by the European Parliament in March 2024, imposes restrictions on “biometric categorization” and “emotion recognition systems”, which may affect some of the data collected for learning analytics purposes [5]. In other parts of the world (for example, China), some schools and universities are testing technology that recognizes and analyzes students' and teachers' emotions in order to make the educational process more efficient [6]. These diverging approaches may create challenges when certain AI tools are used across different international environments. The use of AI is expected to accelerate the globalization of education, but differing legal provisions may hinder this process.

Learning analytics tools can be tailored to the specific requirements and goals of a university. Course Insights [7] is an example of a learning analytics tool, developed by Pennsylvania State University, that provides information about enrolled students (demographics, course activity, enrollment history, etc.) to help instructors design the class according to students' needs and assist students when they face difficulties. By analyzing each student's learning activity, the tool reports on engagement and on the areas where the student struggles, allowing the professor to offer personalized feedback. The potential of learning analytics tools to advance personalized education is very attractive, but issues concerning transparency and ethics remain to be clarified.

Another issue with learning analytics is the need to train professors to interpret the data they receive and make the best decisions. A university implementing Course Insights, the learning analytics tool mentioned above, involved instructors in its design and organized on-campus and online training workshops, yet only about 20% of its courses were using the tool [8]. It appears that most professors are not yet convinced of its benefits. Thus, despite their potential, analytics tools remain of limited use in higher education because of their costs, privacy issues, and instructors' lack of skills.

Predictive analytics involves training AI tools on large datasets to correlate input data accurately with students' future behavior. If the population used to train the system differs significantly from the population on which the system is used, the predictions may contain significant errors. The algorithms can also perpetuate errors or preconceptions present in the training data [9]. If the training data contain biases related to gender, race, socioeconomic status, etc., these biases will affect the population to which the algorithm is applied. “Algorithmic bias occurs when an algorithm encodes (typically unintentionally) the biases present in society, producing predictions or inferences that are clearly discriminatory towards specific groups” [10]. Since machine learning models are increasingly used in admissions, automated grading, and predicting student success and retention, the risk of algorithmic bias has raised concerns among researchers and practitioners.
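One common way to check a predictive model for the kind of bias described above is to compare its error rates across groups. The Python sketch below, with invented data and hypothetical group labels, computes for each group the share of students flagged as at risk and the false negative rate, i.e. the share of students who actually failed but were not flagged and therefore received no support. Requiring similar false negative rates across groups corresponds to the fairness criterion often called equal opportunity; it is one of several competing criteria and is chosen here only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    group: str             # hypothetical demographic group label
    flagged_at_risk: bool  # model prediction
    failed: bool           # observed result at the end of the term


def rates_by_group(outcomes):
    """Return the flag rate and false negative rate for each group."""
    stats = {}
    for group in sorted({o.group for o in outcomes}):
        rows = [o for o in outcomes if o.group == group]
        failed = [o for o in rows if o.failed]
        missed = sum(1 for o in failed if not o.flagged_at_risk)
        stats[group] = {
            "flag_rate": sum(o.flagged_at_risk for o in rows) / len(rows),
            # Share of failing students the model did not flag (no support offered).
            "false_negative_rate": missed / len(failed) if failed else 0.0,
        }
    return stats


# Invented sample: both groups have the same number of failing students,
# but the model misses one of them in group B.
sample = [
    Outcome("A", True, True), Outcome("A", True, True),
    Outcome("A", False, False), Outcome("A", False, False),
    Outcome("B", True, True), Outcome("B", False, True),
    Outcome("B", False, False), Outcome("B", False, False),
]

if __name__ == "__main__":
    for group, s in rates_by_group(sample).items():
        print(group, s)
```

In this invented example, a failing student in group B is less likely to be flagged than one in group A (false negative rate 0.5 versus 0.0), so the support that predictive analytics is meant to trigger would reach group B less reliably.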

Costs and Inequalities in Implementing AI in Education

The cost of implementing AI technology in education is another major concern. Developing or acquiring software solutions and the necessary hardware is expensive, leading to disparities among educational institutions: some will be able to afford these expenses, while others will not. The integration of AI technology in education, especially in higher education, could reduce the number of institutions and increase the size of those that remain. Universities with the financial resources to invest in AI tools will be able to accommodate more students, while those lacking the means may eventually be phased out.

The concept of the digital divide, which refers to inequalities in the use of digital technologies, is well known among both academics and international institutions [11]. The OECD expressed its concerns about this subject as early as 2001, highlighting the worldwide gap in internet access [12]. The digital divide, which originally concerned the availability of computers and internet access, has now been exacerbated in the context of AI use in education. Forbes reports that in 2024, approximately 66% of the world's population had internet access, totaling 5.35 billion people out of more than 8 billion [13]. While this number is impressive and continues to grow, it also means that about 34% of the world's population lacks access to the opportunities provided by AI in education simply because it has no internet access. In reality, the share is even higher, since internet access is only one of the factors constraining AI integration in education. Afzal et al. [14] recommend several measures to narrow the digital divide: enhancing internet infrastructure in rural or underserved areas, providing affordable devices to low-income students, offering digital literacy training, promoting public-private partnerships, and conducting research in this field.
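As a quick consistency check on the Forbes figures, assume a 2024 world population of about 8.1 billion (this value is our assumption; the source states only “over 8 billion”):

```latex
\[
\frac{5.35 \times 10^{9}}{8.1 \times 10^{9}} \approx 0.66,
\qquad
(8.1 - 5.35) \times 10^{9} = 2.75 \times 10^{9} \approx 0.34 \times 8.1 \times 10^{9}.
\]
```

Under this assumption, the roughly 34% of the population without internet access corresponds to about 2.75 billion people.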

It is important to understand that addressing the digital divide does not mean slowing down technological progress but finding ways to help disadvantaged regions improve their situation. However, it is unrealistic to expect these inequalities to disappear while general economic inequalities persist.

Reactions to the Ethical Challenges of Using AI in Education

The complex ethical implications of using AI have led governments, international organizations, and various institutions to attempt to regulate these aspects, including making recommendations for the educational sector.

In November 2021, UNESCO adopted the Recommendation on the Ethics of Artificial Intelligence. The document outlines the benefits and risks associated with AI and establishes a set of values and principles to guide its use across various domains, including education. These values and principles include respect for, and protection and promotion of, human dignity; environmental protection; inclusiveness; avoidance of safety and security risks; non-discrimination; privacy protection; ultimate human responsibility and accountability where AI is involved; and transparency and explainability of AI systems [15]. Although important, these are only general guidelines; the most difficult part is translating them into practice.

As already mentioned, the European Union adopted the AI Act, reflecting its concern about the ethical use of AI in education and beyond and seeking to regulate sensitive issues. The EU has also developed a Digital Education Action Plan (2021-2027), with two strategic priorities: to foster high-performing digital education ecosystems and to enhance digital skills and competencies for the digital age [16]. The actions listed under each priority address the critical issues discussed in this paper: the need for digital infrastructure, digital competencies, and AI tools that uphold privacy and ethics. Once again, the principles are clearly stated, but ways must be found to put them into practice.

The OECD Digital Education Outlook 2023 provides a detailed analysis of the content and impact of using AI in education and highlights the most important challenges [17]. It stresses the importance of equitable and affordable access to digital resources and of teachers' digital competencies, as well as the need for “a good balance between digital and non-digital activities” (p. 48), for human involvement in teaching and learning, and for transparency and data protection.

As AI use in education increases, universities are interested in developing ethical frameworks and guidelines for AI use that address issues such as bias, transparency, accountability, and privacy. For instance, Stanford University provides a guide on its website about the responsible use of AI, which includes information on safeguarding personal data, the characteristics of various generative AI tools, and the university's AI policy [18]. Other universities, such as Harvard and Oxford, have established centers to study AI ethics. Many universities are starting to incorporate discussions of AI ethics into their curricula and continue to research this topic.

Conclusions

The opportunities presented by the integration of AI in education are vast, but so are the challenges. The ethics of integrating AI into education is a crucial issue that must be carefully addressed. The OECD Digital Education Outlook 2023 captures the two risks involved in approaching this issue: “Over-regulating is a risk, especially for evolving and not well-known technology, but leaving decisions that could lead to serious harm to the ethics of individuals would be unreasonable” [19].

On the one hand, new things tend to scare us and create resistance to change; on the other hand, innovations in any field, and especially in education, must adhere to principles and values recognized by society.

Interest in and concern about this issue are evident from the numerous official documents and research papers produced by international institutions, organizations, and universities. The use of artificial intelligence raises problems in all fields, but especially in education, where children are involved, values are transmitted, ways of thinking are formed, and the beneficiaries are highly vulnerable.

Most of the available documents on the ethics of artificial intelligence, both in general and in education specifically, set out principles and guidelines that must be followed. Identifying concrete methods to avoid algorithmic bias, discrimination, the digital divide, lack of algorithmic transparency, and privacy violations remains challenging for educational institutions and teachers. For this reason, continuous dialogue among educators, policymakers, and technologists is essential to ensure that AI in education is used responsibly and ethically. We also emphasize the need for continuous research and development to enhance the transparency and equity of AI systems and to bridge the digital divide, which may worsen educational disparities.

References

- Aleven, V., Rowe, J., Huang, Y., Mitrovic, A., “Domain modeling for AIED systems with connections to modeling student knowledge: a review”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 127-169.

- Clow, D., “An overview of learning analytics”, Teaching in Higher Education, 18(6)/2013, pp. 683-695.

- Pozdniakov, S., Martinez-Maldonado, R., Singh, S., Khosravi, H. & Gašević, D., “Using learning analytics to support teachers”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 322-349.

- Brooks, C., Kovanovic, V. & Nguyen, Q., “Predictive modeling of student success”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 350-369.

- European Parliament, “Artificial Intelligence Act: MEPs adopt landmark law”, press release, 13.03.2024, available at https://www.europarl.europa.eu/news/en/press-room/20240308IPR19015/artificial-intelligence-act-meps-adopt-landmark-law (accessed April 2024).

- Yu, S., Lu, Y., An Introduction to Artificial Intelligence in Education, Springer, Singapore, 2021.

- Available online: https://tlt.psu.edu.

- Pozdniakov, S., Martinez-Maldonado, R., Singh, S., Khosravi, H. & Gašević, D., “Using learning analytics to support teachers”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 322-349.

- Kizilcec, R., Lee, H., “Algorithmic fairness in education”, in W. Holmes & K. Porayska-Pomsta (Eds.), The Ethics of Artificial Intelligence in Education, Routledge, 2022, pp. 174-202.

- OECD, OECD Digital Education Outlook 2023: Towards an Effective Digital Education Ecosystem, OECD Publishing, Paris, p. 243 (accessed June 2024).

- Afzal, A., Khan, S., Daud, S., Ahmad, Z. & Butt, A., “Addressing the Digital Divide: Access and Use of Technology in Education”, Journal of Social Sciences Review, 3(2)/2023, pp. 883-895.

- OECD, Understanding the Digital Divide, OECD Digital Economy Papers, No. 49, 2001 (accessed April 2024).

- Peichen, L., “Internet Usage Statistics 2024”, Forbes, 1 March 2024, available at https://www.forbes.com/home-improvement/internet/internet-statistics/ (accessed June 2024).

- Afzal, A., Khan, S., Daud, S., Ahmad, Z. & Butt, A., “Addressing the Digital Divide: Access and Use of Technology in Education”, Journal of Social Sciences Review, 3(2)/2023, pp. 883-895.

- UNESCO, Recommendation on the Ethics of Artificial Intelligence, available at https://unesdoc.unesco.org/ark:/48223/pf0000381137/PDF/381137eng.pdf.multi (accessed April 2024).

- European Commission, Ethical guidelines on the use of artificial intelligence (AI) and data in teaching and learning for educators, Publications Office of the European Union, 2022 (accessed May 2024).

- OECD, OECD Digital Education Outlook 2023: Towards an Effective Digital Education Ecosystem, OECD Publishing, Paris (accessed June 2024).

- Responsible AI at Stanford, available at https://uit.stanford.edu/security/responsibleai#section_3427 (accessed June 2024).

- OECD, OECD Digital Education Outlook 2023: Towards an Effective Digital Education Ecosystem, OECD Publishing, Paris, p. 29 (accessed June 2024).

- AFZAL, A., Khan, S., Daud, S., Ahmad, Z. & Butt, A., “Addressing the Digital Divide: Access and Use of Technology in Education”, Journal of Social Sciences Review, 3(2)/2023, pp. 883-895.

- ALEVEN, V., Rowe, J., Huang, Y., Mitrovic, A., “Domain modeling for AIED systems with connections to modeling student knowledge: a review”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 127-169.

- DU BOULAY, B., Mitrovic, A. & Yacef, K. (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023.

- BROOKS, C., Kovanovic, V. & Nguyen, Q., “Predictive modeling of student success”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 350-369.

- CASPARI-SADEGHI, S., “Learning assessment in the age of big data: Learning analytics in higher education”, Cogent Education, 10(1)/2023.

- CLOW, D., “An overview of learning analytics”, Teaching in Higher Education, 18(6)/2013, pp. 683-695.

- European Commission, Ethical guidelines on the use of artificial intelligence (AI) and data in teaching and learning for educators, Publications Office of the European Union, 2022 (accessed May 2024).

- European Parliament, “Artificial Intelligence Act: MEPs adopt landmark law”, press release, 13.03.2024, available at https://www.europarl.europa.eu/news/en/press-room/20240308IPR19015/artificial-intelligence-act-meps-adopt-landmark-law (accessed April 2024).

- KIZILCEC, R., Lee, H., “Algorithmic fairness in education”, in W. Holmes & K. Porayska-Pomsta (Eds.), The Ethics of Artificial Intelligence in Education, Routledge, 2022, pp. 174-202.

- OECD, Understanding the Digital Divide, OECD Digital Economy Papers, No. 49, 2001 (accessed April 2024).

- OECD, OECD Digital Education Outlook 2023: Towards an Effective Digital Education Ecosystem, OECD Publishing, Paris (accessed June 2024).

- PEICHEN, L., “Internet Usage Statistics 2024”, Forbes, 1 March 2024, available at https://www.forbes.com/home-improvement/internet/internet-statistics/ (accessed June 2024).

- POZDNIAKOV, S., Martinez-Maldonado, R., Singh, S., Khosravi, H. & Gašević, D., “Using learning analytics to support teachers”, in B. du Boulay, A. Mitrovic & K. Yacef (Eds.), Handbook of Artificial Intelligence in Education, Edward Elgar Publishing, 2023, pp. 322-349.

- UNESCO, Recommendation on the Ethics of Artificial Intelligence, available at https://unesdoc.unesco.org/ark:/48223/pf0000381137/PDF/381137eng.pdf.multi (accessed April 2024).

- YU, S., Lu, Y., An Introduction to Artificial Intelligence in Education, Springer, Singapore, 2021.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).