Submitted: 30 January 2025

Posted: 31 January 2025


Abstract

In this paper we explore ChatGPT's ability to produce a summary, a précis and/or an essay on the basis of excerpts from a novel, The Solid Mandala, by the Nobel Prize-winning Australian writer Patrick White. We use a number of prompts to test several functions related to narrative analysis with respect to the “sujet”, the “fabula”, and the style. In the paper, we extensively illustrate a number of recurrent hallucinations that can seriously impair the understanding of the novel's contents. We compiled a list of 12 different types of mistakes or hallucinations made by GPT. We then tested Gemini for the same 12 mistakes and found a marked improvement on all critical issues. Our conclusion for ChatGPT is mostly negative. As an underlying hypothesis for its worse performance, we propose the influence of vocabulary size, which in Gemma 2 is seven times larger than in GPT.

Keywords:

1. Introduction

1.1. State of the Art of Hallucinations

1.1.1. Hallucinations in Medicine

“... we aimed to assess whether ChatGPT could reliably produce accurate references to supplement the literature search process. We describe our March 2023 exchange with ChatGPT, which generated thirty-five citations, two of which were real. 12 citations were similar to actual manuscripts (e.g., near-matched title with incorrect author lists, journals, or publication years) and the remaining 21, while plausible, were in fact a pastiche of multiple existent manuscripts... Furthermore, we describe potential explanations for citation fabrication by GPTs, as well as measures being taken to remedy this issue, including reinforcement learning. Our results underscore that output from conversational LLMs should be verified.”

1.1.2. Hallucinations in Summarization

“A summary S of a document D contains a factual hallucination if it contains information not found in D that is factually correct. Factual hallucinations may be composed of intrinsic hallucinations or extrinsic hallucinations. By definition, abstractive summaries are written to preserve the salient information in the input document, but they are expressed in the words of the summary author as opposed to the input document author.”
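To make the distinctions in the quoted definition concrete, the toy sketch below represents document content and summary claims as (subject, relation, object) triples. The triple representation, the `Fact` type and the "differs in exactly one slot" test for intrinsic errors are our own simplifying assumptions for illustration only; they are not the annotation procedure used in the cited work, where such judgements are made by human annotators or trained models.

```python
from typing import NamedTuple, Set


class Fact(NamedTuple):
    """A hypothetical atomic claim: (subject, relation, object)."""
    subject: str
    relation: str
    obj: str


def differs_in_one_slot(a: Fact, b: Fact) -> bool:
    """True if the two facts disagree in exactly one of their three slots."""
    return sum(x != y for x, y in zip(a, b)) == 1


def classify_claim(claim: Fact, document: Set[Fact], world: Set[Fact]) -> str:
    """Label a summary claim relative to a source document D and world knowledge."""
    if claim in document:
        return "faithful"
    # Heuristic (assumption): altering one slot of a fact stated in D
    # misrepresents D (intrinsic); otherwise the claim adds new content (extrinsic).
    kind = "intrinsic" if any(differs_in_one_slot(claim, f) for f in document) else "extrinsic"
    # A hallucination counts as "factual" when it is nonetheless true in the world.
    truth = "factual" if claim in world else "non-factual"
    return f"{kind}/{truth}"


document = {Fact("Mrs. Poulter", "turned", "mauve")}
world = document | {Fact("Patrick White", "won", "Nobel Prize")}

print(classify_claim(Fact("Mrs. Poulter", "turned", "mauve"), document, world))   # faithful
print(classify_claim(Fact("Mrs. Dun", "turned", "mauve"), document, world))       # intrinsic/non-factual
print(classify_claim(Fact("Patrick White", "won", "Nobel Prize"), document, world))  # extrinsic/factual
```

The second call mimics a wrong coreference chain (Mrs Dun rather than Mrs Poulter), while the third is a "factual hallucination" in the sense of the definition: true information that is not found in D.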

1.1.3. Hallucinations in Law

1.1.4. Hallucinations: Vocabulary Size, Factuality and Faithfulness

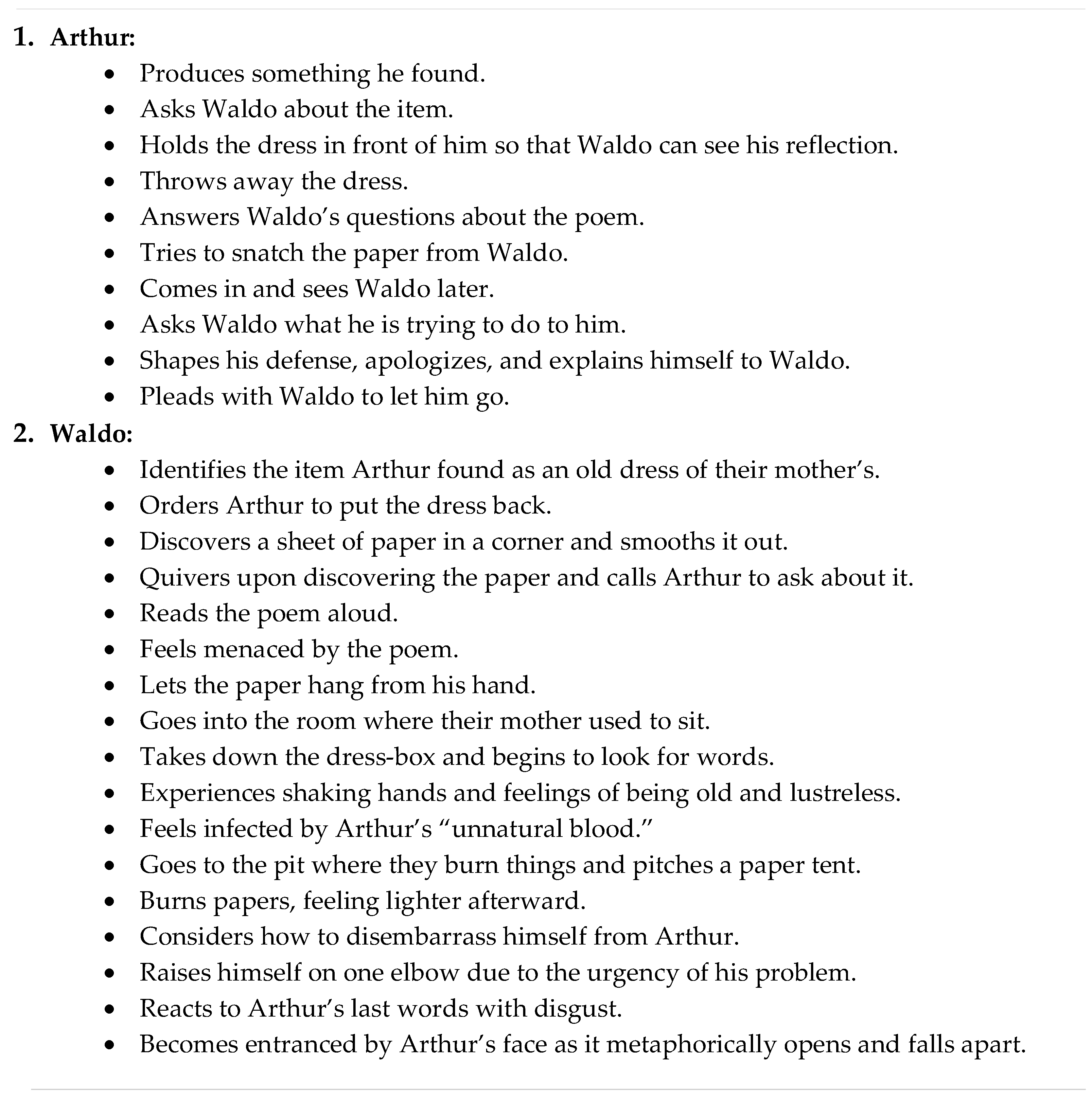

2. Materials, Methods and Results I

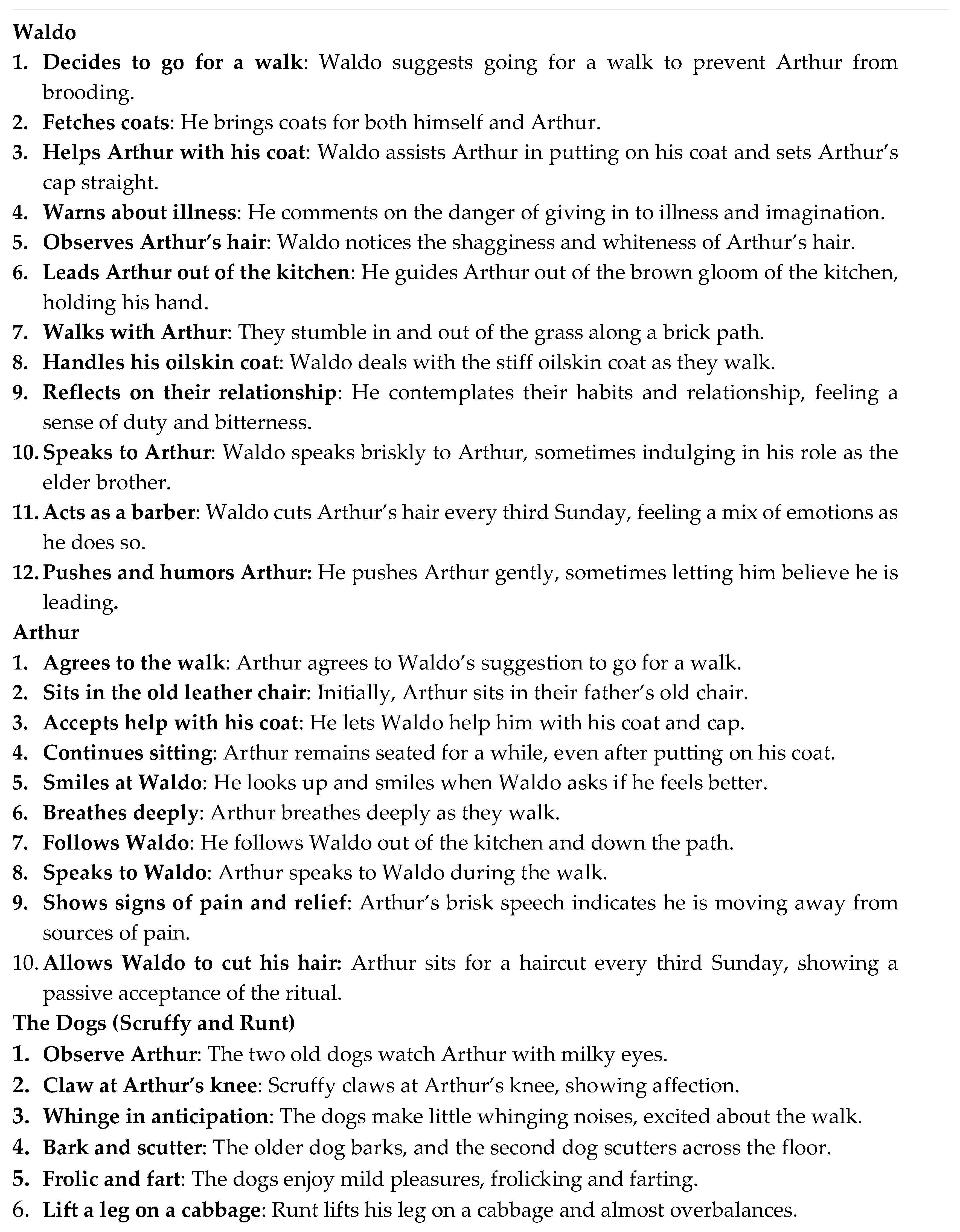

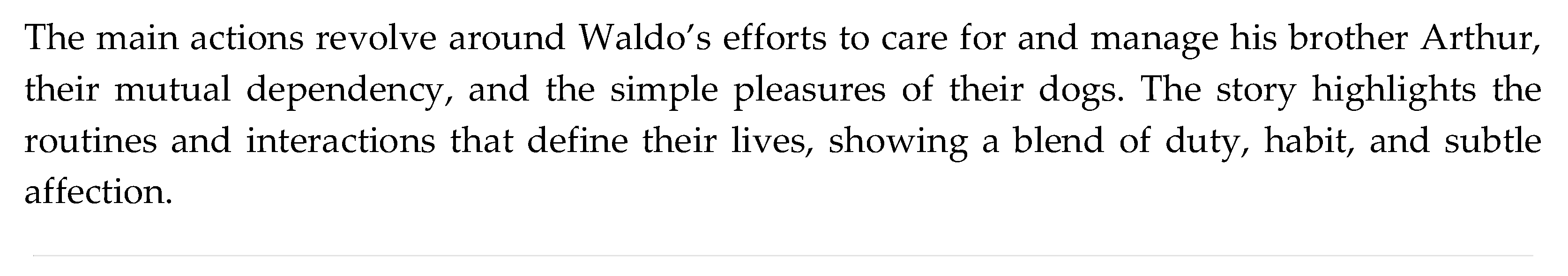

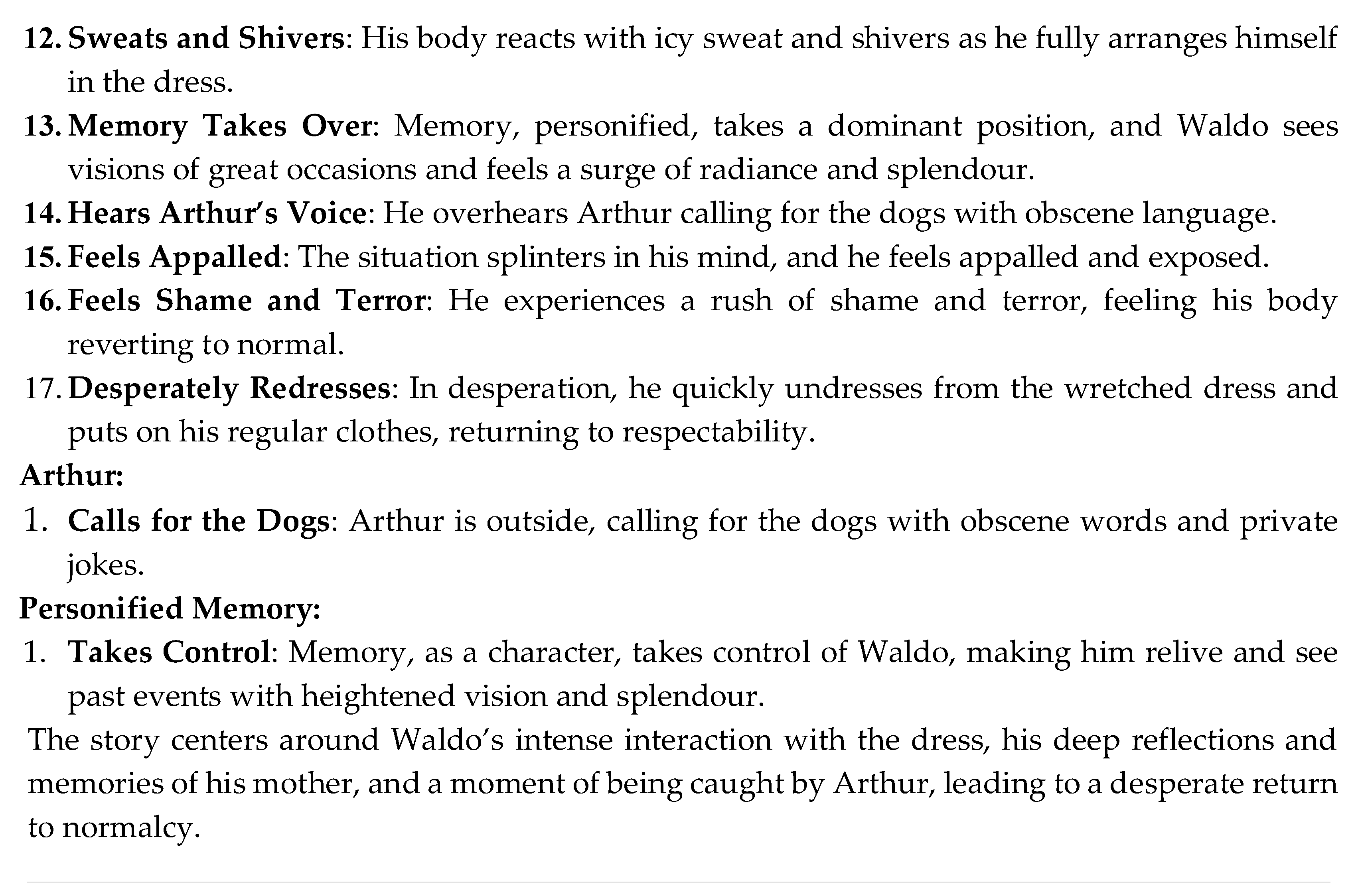

- Tell me all that happens in the story

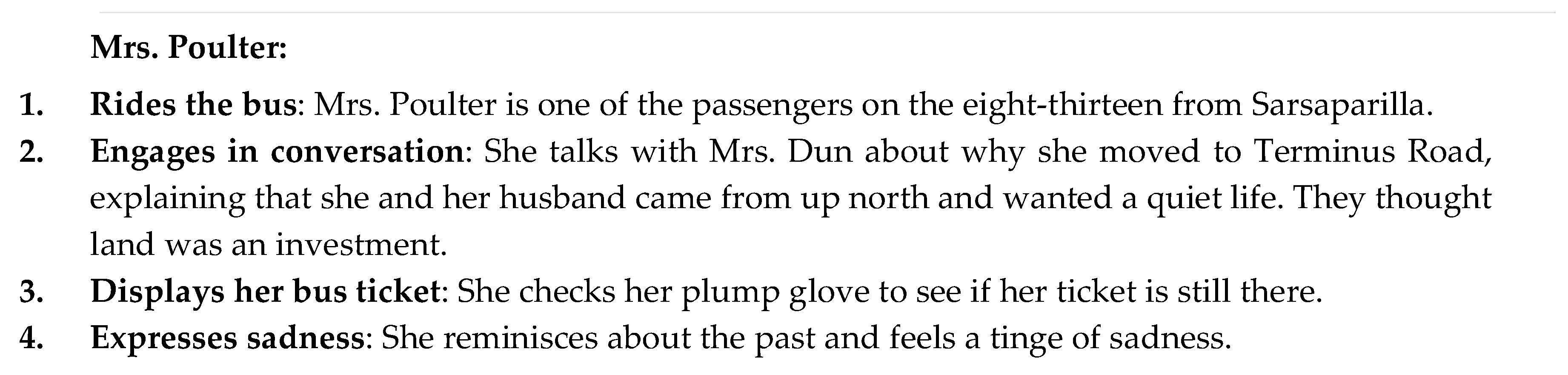

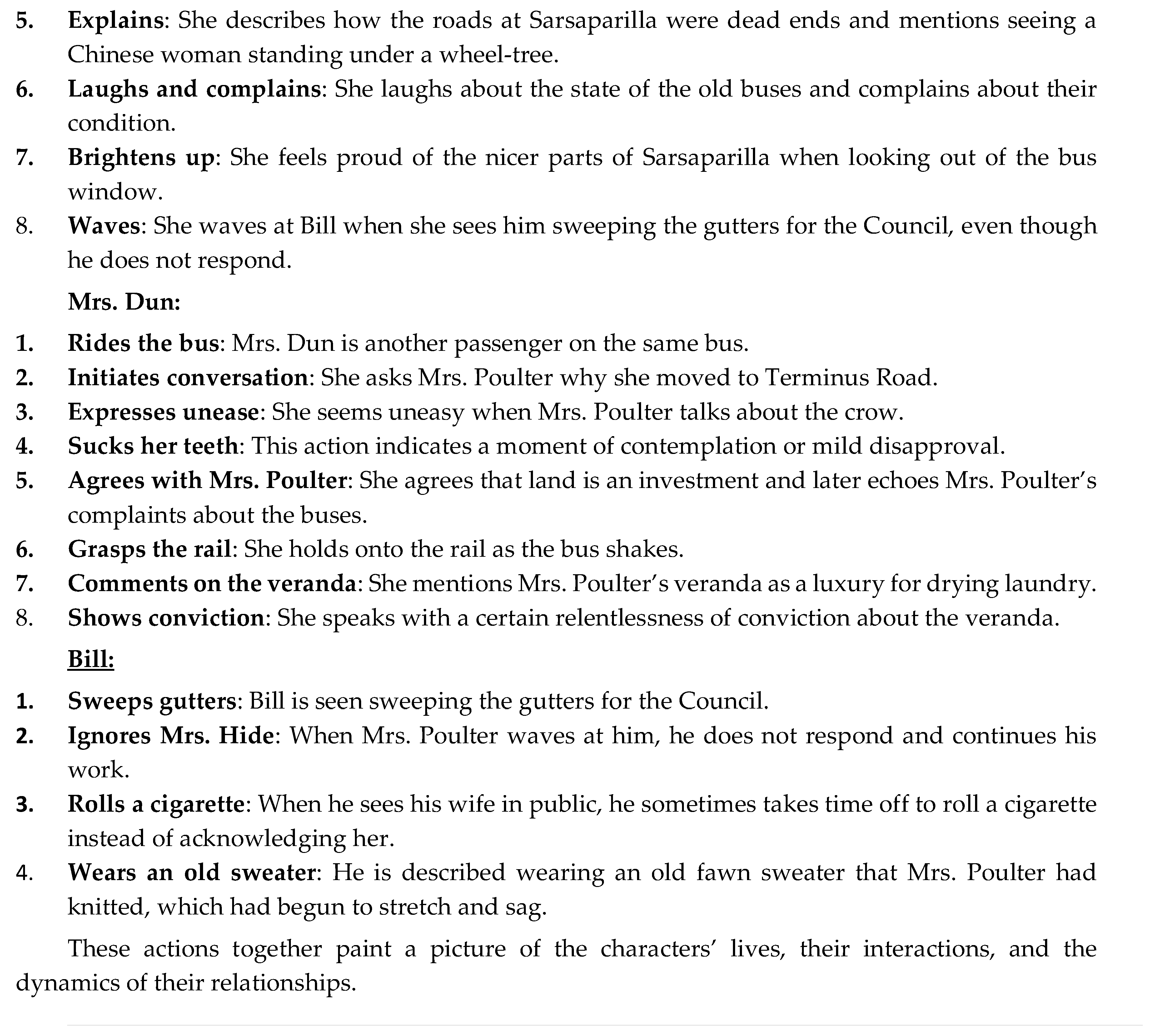

- Tell me all that the characters do in the story

- Tell me all that happens to the characters of the story

- What have all the characters in the story done?

- I will now copy paste the entire text of a novel if that will suit you: what narrative techniques and what linguistic devices did the author use to characterize the protagonists?

If you share the entire text of a novel, it might be challenging to analyze it in a single step due to its length. However, you can share key passages or chapters that you believe are crucial to understanding the characterization of the protagonists. This will allow for a focused analysis of the narrative techniques and linguistic devices used.

Alternatively, if you can specify which parts of the novel are particularly important for character development, I can analyze those sections. That way, we can break down how the author builds the characters and what literary tools are employed.

The excerpt from “Riders in the Chariot” by Patrick White showcases several linguistic devices and narrative techniques that characterize the protagonists, Mrs. Poulter and Mrs. Dun, as well as the setting of the scene. White uses these techniques to subtly reveal the characters’ personalities, social standing, and the nature of their relationship.

This excerpt from Patrick White’s The Solid Mandala employs a range of linguistic and rhetorical devices to characterize Mrs. Poulter and Mrs. Dun, two elderly women who engage in conversation while riding a bus. Here’s an analysis of some key devices that help shape the personalities and dynamics between these characters:

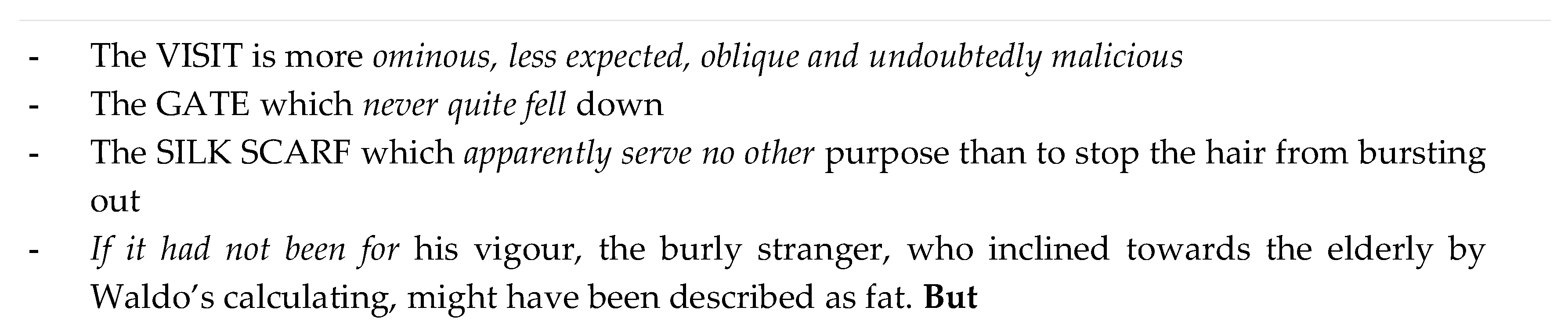

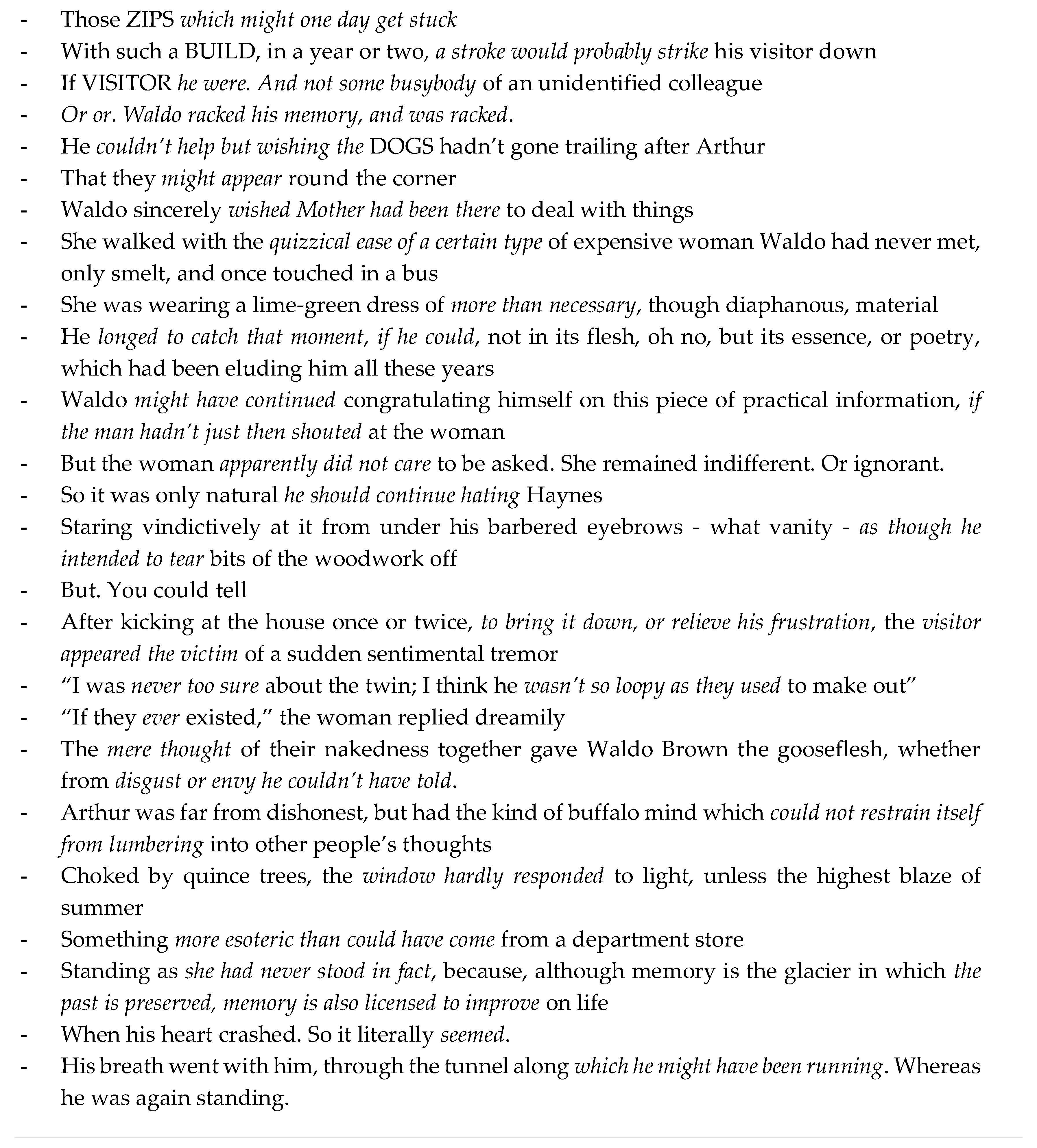

2.1. The Story or Fabula

- EXCERPT No. 1

- EXCERPT No. 2

- EXCERPT No. 3

- EXCERPT No. 4

3. Materials, Methods and Results II

3.1. Testing ChatGPT with the Plot, the “Sujet”

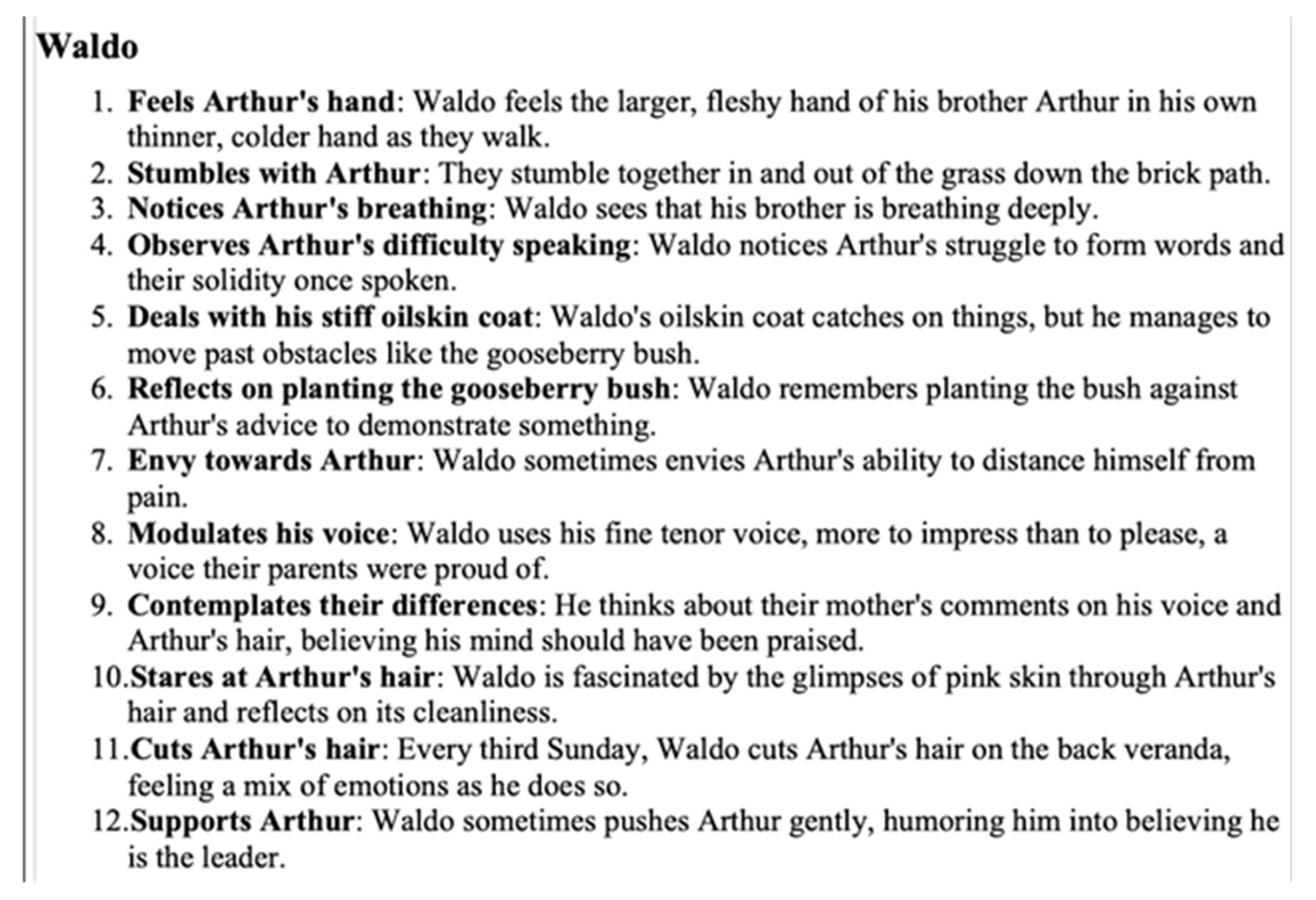

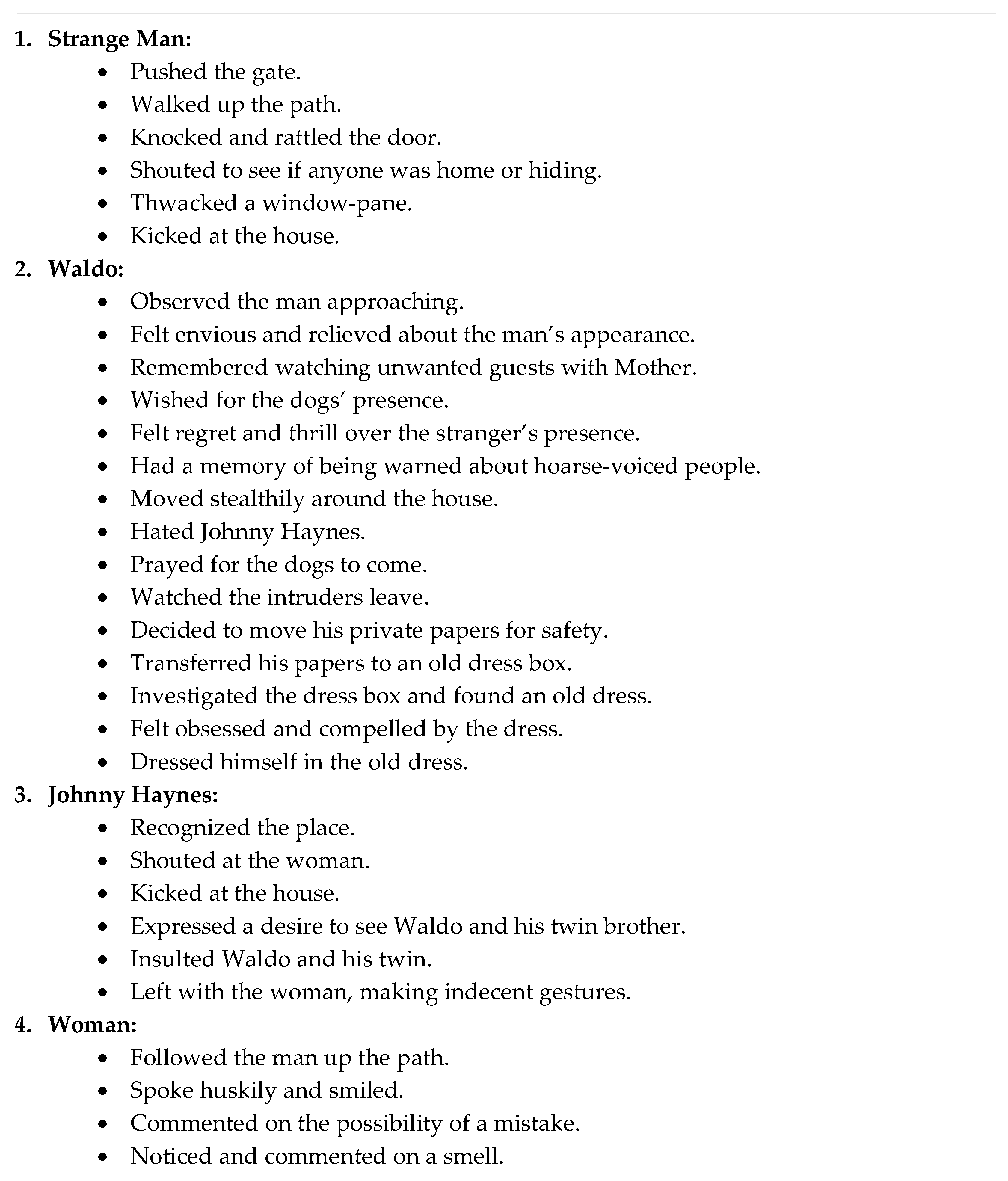

| Meta-Tags | Uncertainty | Subjectivity | Subjectivity | Judgement |
|---|---|---|---|---|
| Intermediate Tags | Non-Factuality | Psychology | Affectivity | Social_Evaluation |
| Tags | Seeming | Perception | Inclination_Positive | Esteem_Negative |
| | Gnomic | Precognition | Inclination_Negative | Esteem_Positive |
| | Concessive | Cognition | Security_Positive | Sanction_Negative |
| | Conditional | Performwill | Security_Negative | Sanction_Positive |
| | Defdesire | | Satisfaction_Positive | |
| | Will | | Satisfaction_Negative | |
| | Possibility | | | |
| | Ability | | | |
| | Obligation | | | |
| | Assumption | | | |
| | Negation | | | |
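The tag hierarchy in the table above can be encoded as a simple mapping so that counts for individual tags can be rolled up to the intermediate or meta level. The following is a minimal Python sketch under our own assumptions: the names `HIERARCHY`, `TAG_INDEX` and `roll_up` are hypothetical, and placing the Satisfaction tags under Affectivity is our reading of the flattened table.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Intermediate tag -> (meta-tag, leaf tags), transcribed from the table above.
HIERARCHY: Dict[str, Tuple[str, List[str]]] = {
    "Non-Factuality": ("Uncertainty", [
        "Seeming", "Gnomic", "Concessive", "Conditional", "Defdesire", "Will",
        "Possibility", "Ability", "Obligation", "Assumption", "Negation"]),
    "Psychology": ("Subjectivity", [
        "Perception", "Precognition", "Cognition", "Performwill"]),
    "Affectivity": ("Subjectivity", [
        "Inclination_Positive", "Inclination_Negative", "Security_Positive",
        "Security_Negative", "Satisfaction_Positive", "Satisfaction_Negative"]),
    "Social_Evaluation": ("Judgement", [
        "Esteem_Positive", "Esteem_Negative", "Sanction_Positive", "Sanction_Negative"]),
}

# Reverse index: leaf tag -> (meta-tag, intermediate tag).
TAG_INDEX = {tag: (meta, inter)
             for inter, (meta, tags) in HIERARCHY.items()
             for tag in tags}


def roll_up(counts: Dict[str, int], level: str = "meta") -> Dict[str, int]:
    """Sum leaf-tag counts at the 'meta' or the 'intermediate' level."""
    totals: Dict[str, int] = defaultdict(int)
    for tag, n in counts.items():
        meta, inter = TAG_INDEX[tag]
        totals[meta if level == "meta" else inter] += n
    return dict(totals)


# Waldo's Psychology counts from the frequency table in this section
# (abbreviated there as Percept, Precogn, Cognition, PerformW).
waldo = {"Perception": 674, "Precognition": 379, "Cognition": 953, "Performwill": 39}
print(roll_up(waldo, level="intermediate"))  # {'Psychology': 2045}
print(roll_up(waldo))                        # {'Subjectivity': 2045}
```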

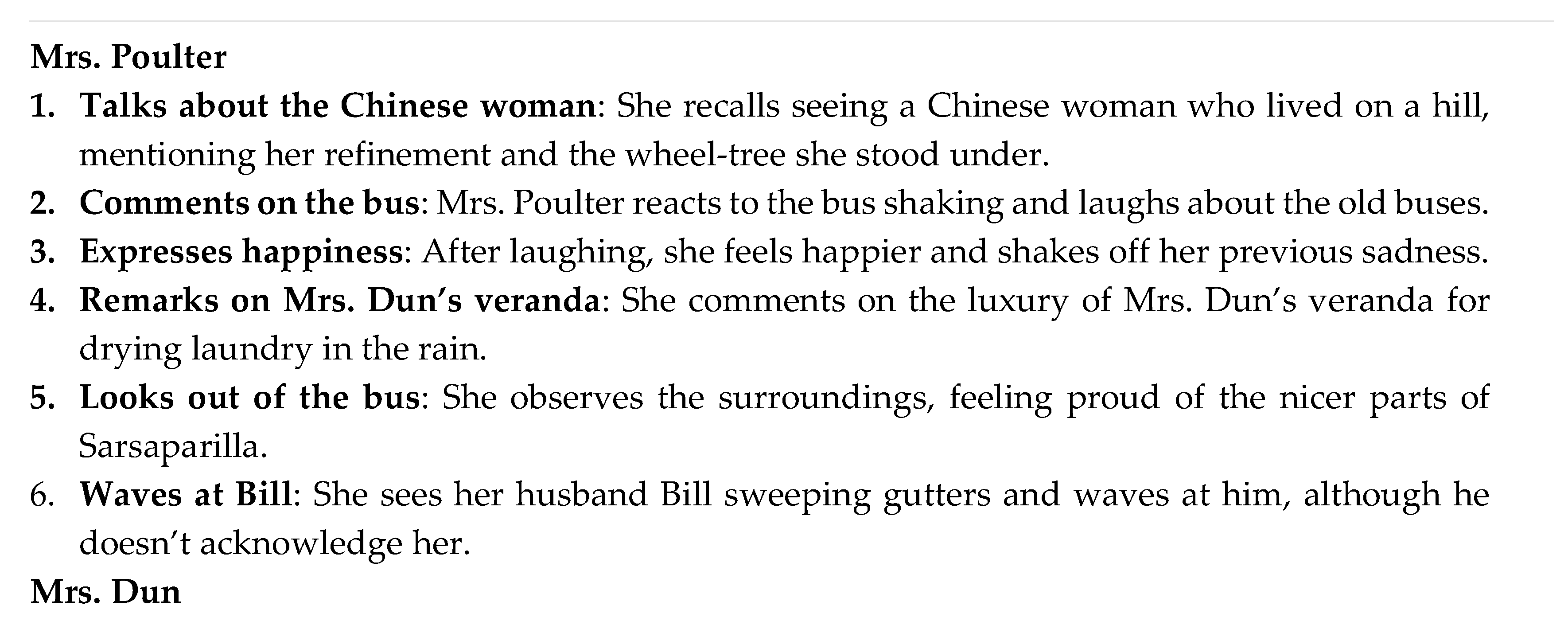

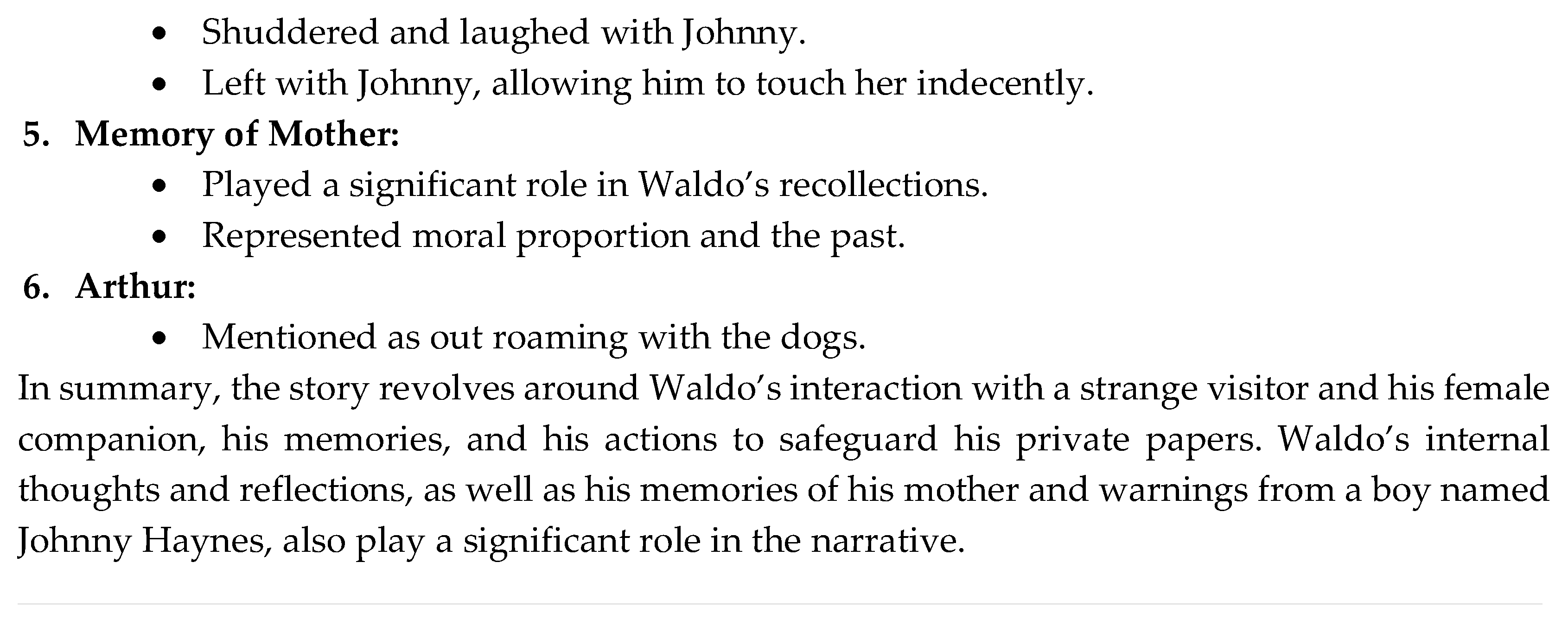

| Tag | Waldo | Arthur | Mrs. Poulter | Totals | RatioW (%) | RatioA (%) | RatioP (%) |
|---|---|---|---|---|---|---|---|
| Percept | 674 | 303 | 109 | 1086 | 62,063 | 27,9 | 10,037 |
| Precogn | 379 | 141 | 39 | 559 | 67,799 | 25,224 | 6,977 |
| Cognition | 953 | 460 | 140 | 1553 | 61,365 | 29,62 | 9,015 |
| PerformW | 39 | 3 | 0 | 42 | 92,857 | 7,143 | 0 |
| Seeming | 512 | 198 | 56 | 766 | 66,841 | 25,848 | 7,3107 |
| Will | 74 | 33 | 8 | 115 | 64,348 | 28,696 | 6,956 |
| Possibl | 224 | 54 | 42 | 320 | 70 | 16,875 | 13,125 |
| Gnomic | 32 | 20 | 9 | 61 | 52,459 | 32,787 | 14,754 |
| Ability | 347 | 195 | 51 | 593 | 58,516 | 32,884 | 8,6 |
| Obligation | 178 | 79 | 28 | 285 | 62,456 | 27,719 | 9,8246 |
| Concessv | 150 | 75 | 26 | 251 | 59,761 | 29,88 | 10,358 |
| Conditnl | 264 | 117 | 32 | 413 | 63,922 | 28,329 | 7,7482 |
| Defdesire | 49 | 35 | 2 | 86 | 56,977 | 40,698 | 2,325 |
| Assumpt | 73 | 36 | 9 | 118 | 61,864 | 30,508 | 7,627 |
| Emot_Pos | 400 | 183 | 43 | 626 | 63,898 | 29,233 | 6,869 |
| Emot_Neg | 247 | 107 | 30 | 384 | 64,323 | 27,864 | 7,812 |
| Inclin_Pos | 83 | 50 | 17 | 150 | 55,334 | 33,334 | 11,334 |
| Inclin_Neg | 75 | 26 | 12 | 113 | 66,372 | 23,009 | 10,619 |
| Secur_Pos | 133 | 65 | 19 | 217 | 61,29 | 29,954 | 8,756 |
| Secur_Neg | 297 | 116 | 40 | 453 | 65,563 | 25,607 | 8,83 |
| Satisf_Pos | 136 | 66 | 10 | 212 | 64,151 | 31,132 | 4,717 |
| Satisf_Neg | 215 | 112 | 25 | 352 | 61,079 | 31,818 | 7,102 |
| Estm_Pos | 223 | 71 | 47 | 341 | 65,396 | 20,821 | 13,783 |
| Estm_Neg | 346 | 129 | 39 | 514 | 67,315 | 25,097 | 7,587 |
| Sanct_Pos | 80 | 19 | 18 | 117 | 68,376 | 16,239 | 15,385 |
| Sanct_Neg | 127 | 47 | 16 | 190 | 66,842 | 24,737 | 8,421 |
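The Ratio columns appear to give each character's percentage share of the row total: for the Percept row, for example, 674/1086 ≈ 62.063% for Waldo. This reading is our inference from the figures rather than an explicit statement, and the table writes decimals with a comma. The minimal Python sketch below, with hypothetical helper names, reproduces the computation; small differences in the last digit of some cells (e.g. 27,9 against 27.901) appear to come from rounding or truncation in the table.

```python
from typing import List


def parse_decimal_comma(value: str) -> float:
    """Convert a table value written with a decimal comma (e.g. '62,063') to a float."""
    return float(value.replace(",", "."))


def shares(counts: List[int], digits: int = 3) -> List[float]:
    """Percentage share of each count in the row total, rounded to `digits` places."""
    total = sum(counts)
    return [round(100 * c / total, digits) for c in counts]


# Percept row: Waldo, Arthur, Mrs. Poulter.
print(shares([674, 303, 109]))        # [62.063, 27.901, 10.037]
# Possibl row: here the shares are exact.
print(shares([224, 54, 42]))          # [70.0, 16.875, 13.125]
print(parse_decimal_comma("62,063"))  # 62.063
```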

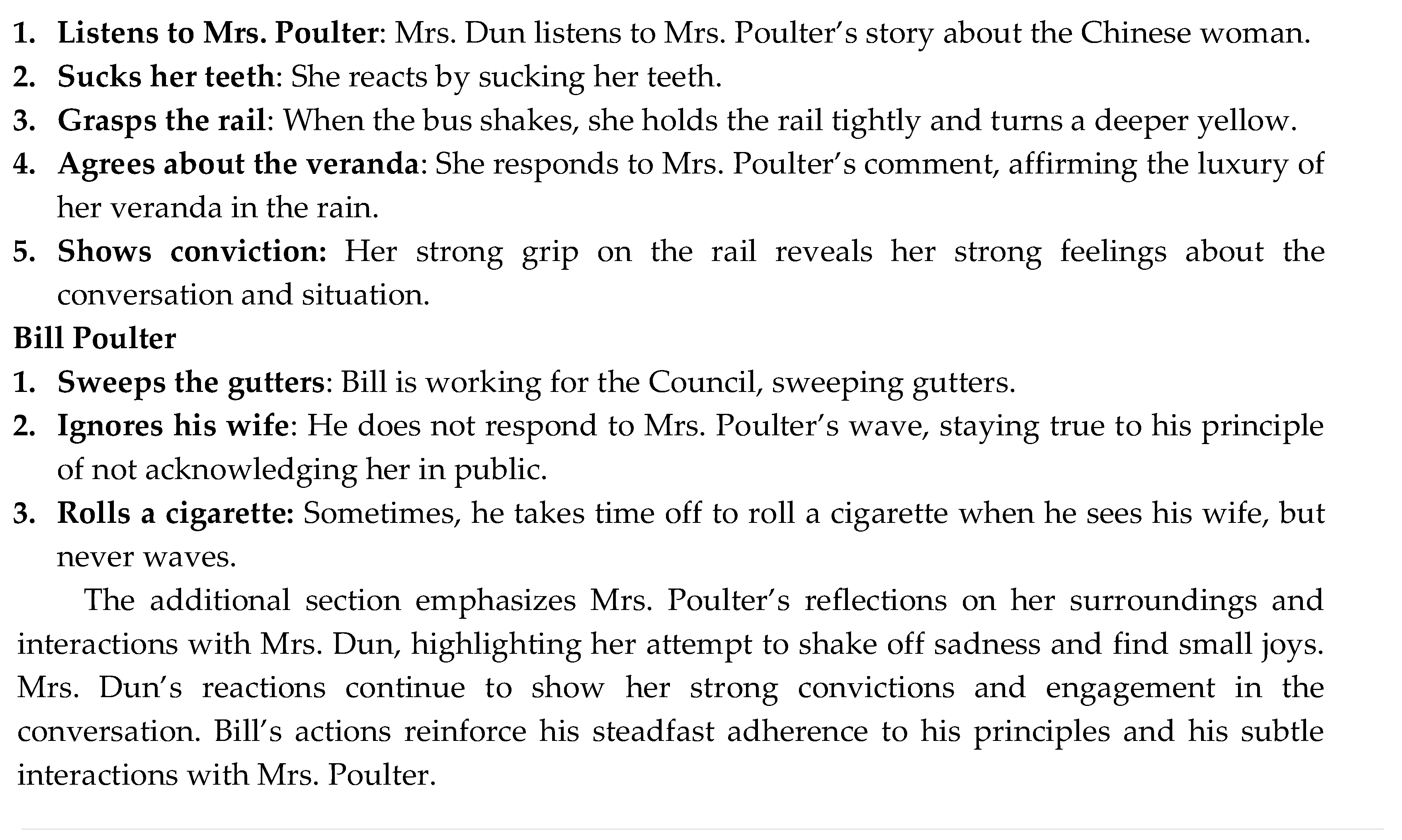

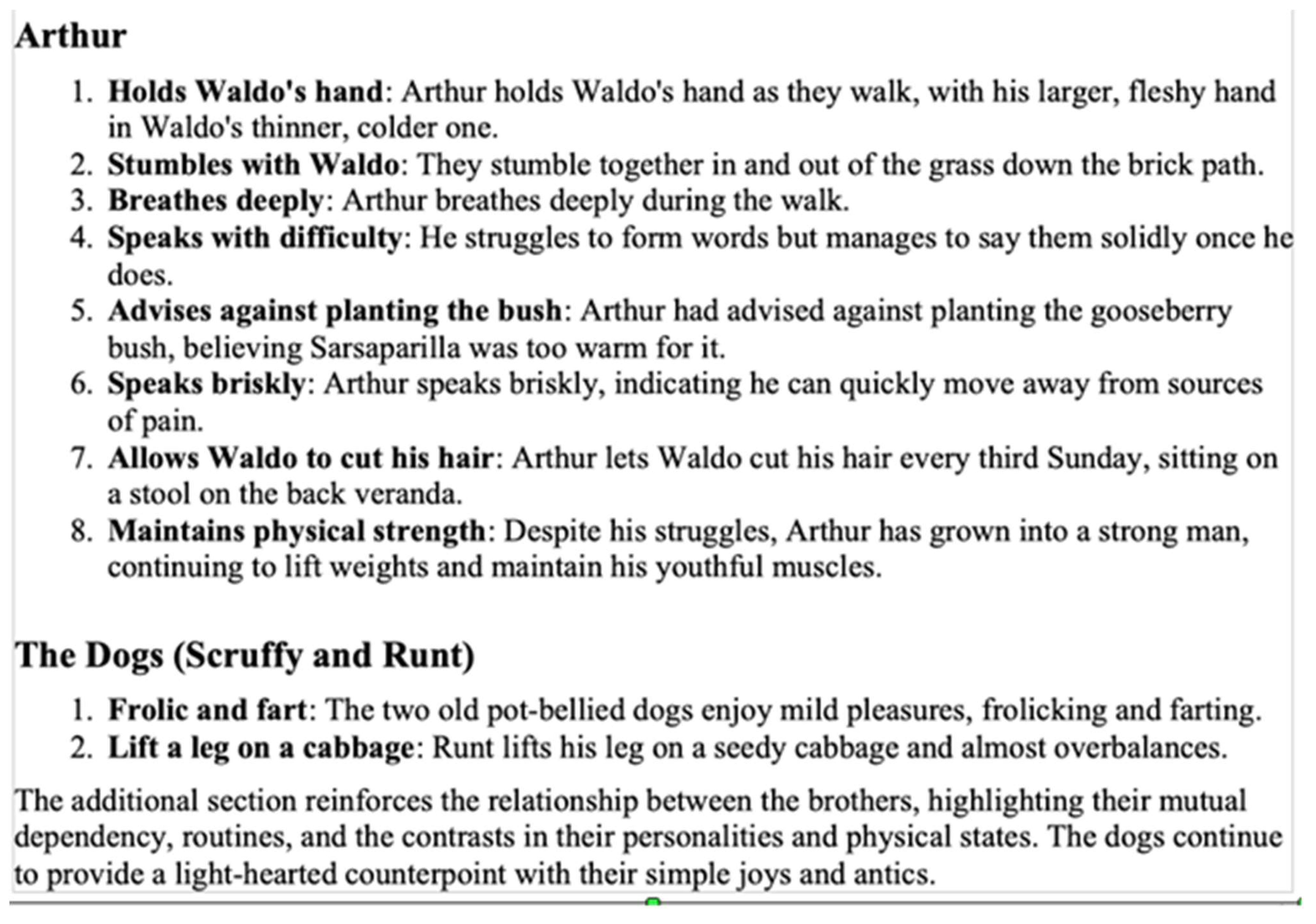

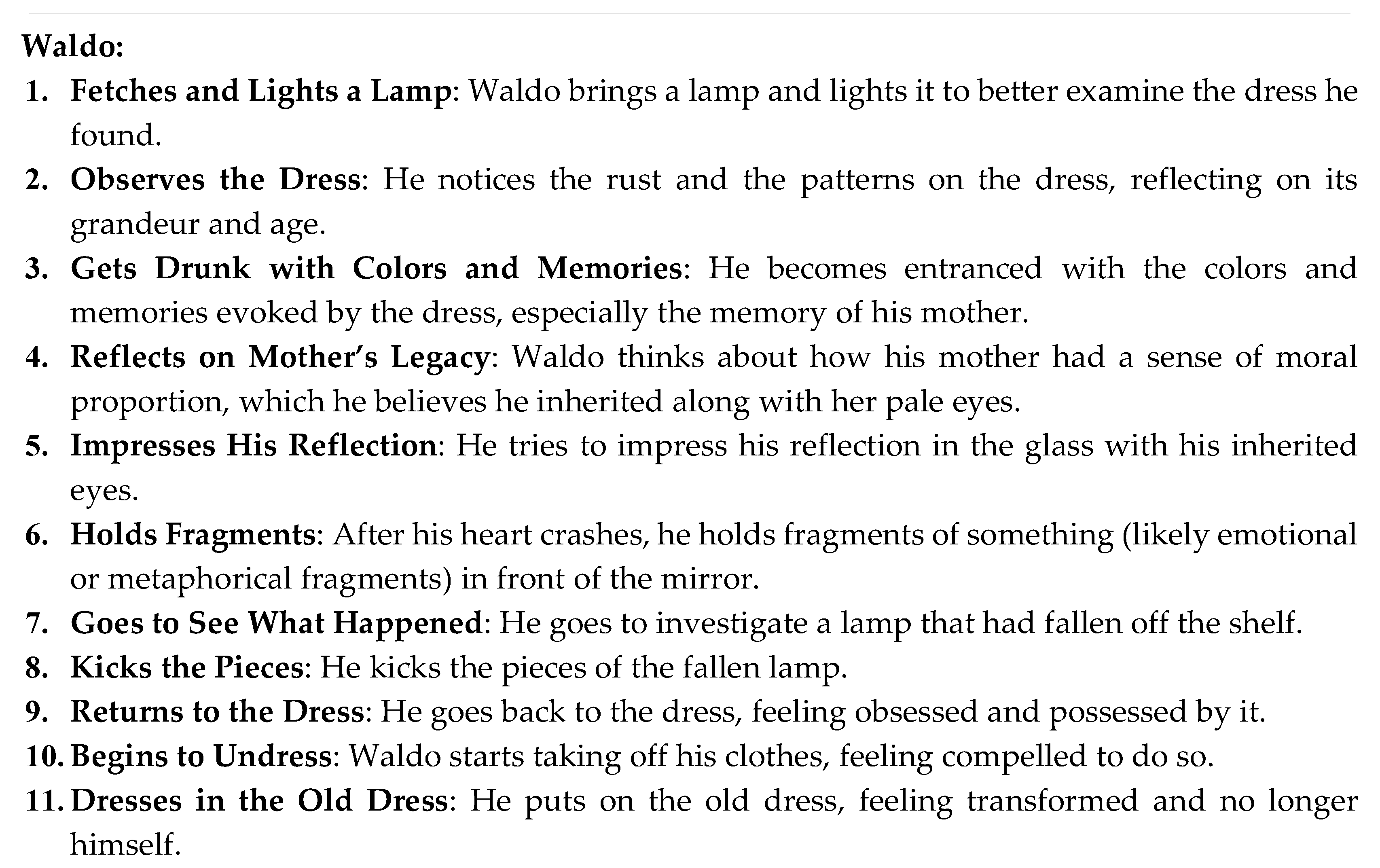

| Waldo’s Best 10 | Arthur’s Best 10 | Mrs. Poulter’s Best 10 |
|---|---|---|
| 1-PerformW | 1-DefDesire | 1-Sanct_Pos |
| 2-Possibility | 2-Inclin_Pos | 2-Estm_Pos |
| 3-Sanct_Pos | 3-Ability | 3-Inclin_Pos |
| 4-Precognt | 4-Gnomic | 4-Gnomic |
| 5-Estm_Neg | 5-Satis_Neg | 5-Possibility |
| 6-Sanct_Neg | 6-Satis_Pos | 6-Inclin_Neg |
| 7-Inclin_Neg | 7-Assumptn | 7-Concessiv |
| 8-Secur_Neg | 8-Secur_Pos | 8-Perceptn |
| 9-Estm_Pos | 9-Concessiv | 9-Obligation |
| 10-Will | 10-Cognition | 10-Cognition |

| Waldo’s Unique | Arthur’s Unique | Mrs. Poulter’s Unique |
|---|---|---|
| PerformW | DefDesire | |
| Ability | | |
| Precognt | | |
| Estm_Neg | Satis_Neg | |
| Sanct_Neg | | |
| Inclin_Neg | Assumptn | |
| Secur_Neg | Secur_Pos | Perceptn |
| Concessiv | Obligation | |
| Will | | |

3.2. Testing ChatGPT for Factuality and Temporal Ordering

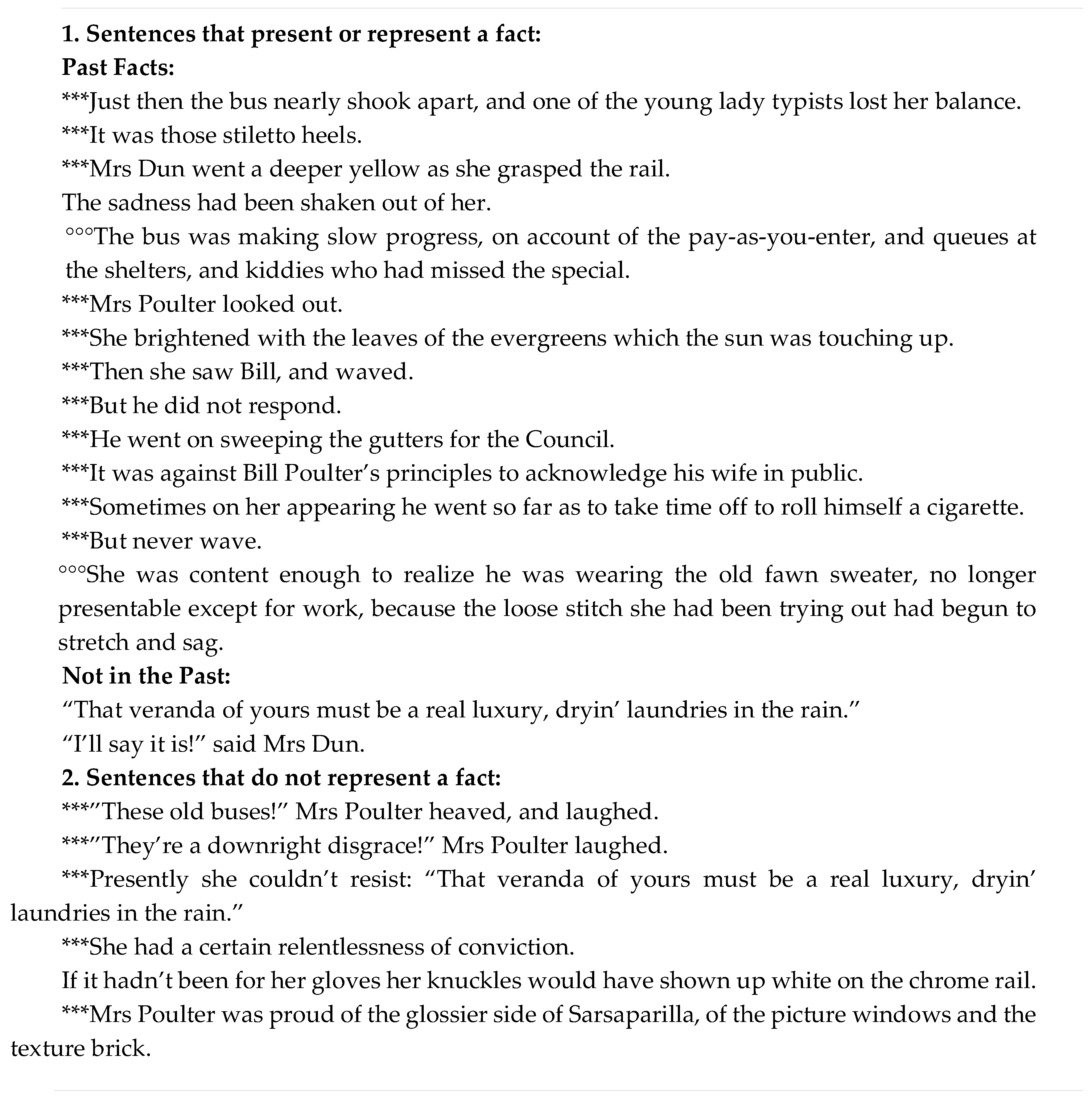

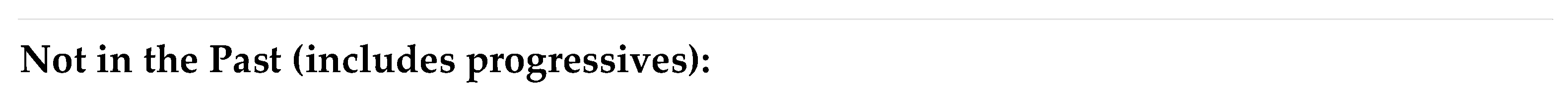

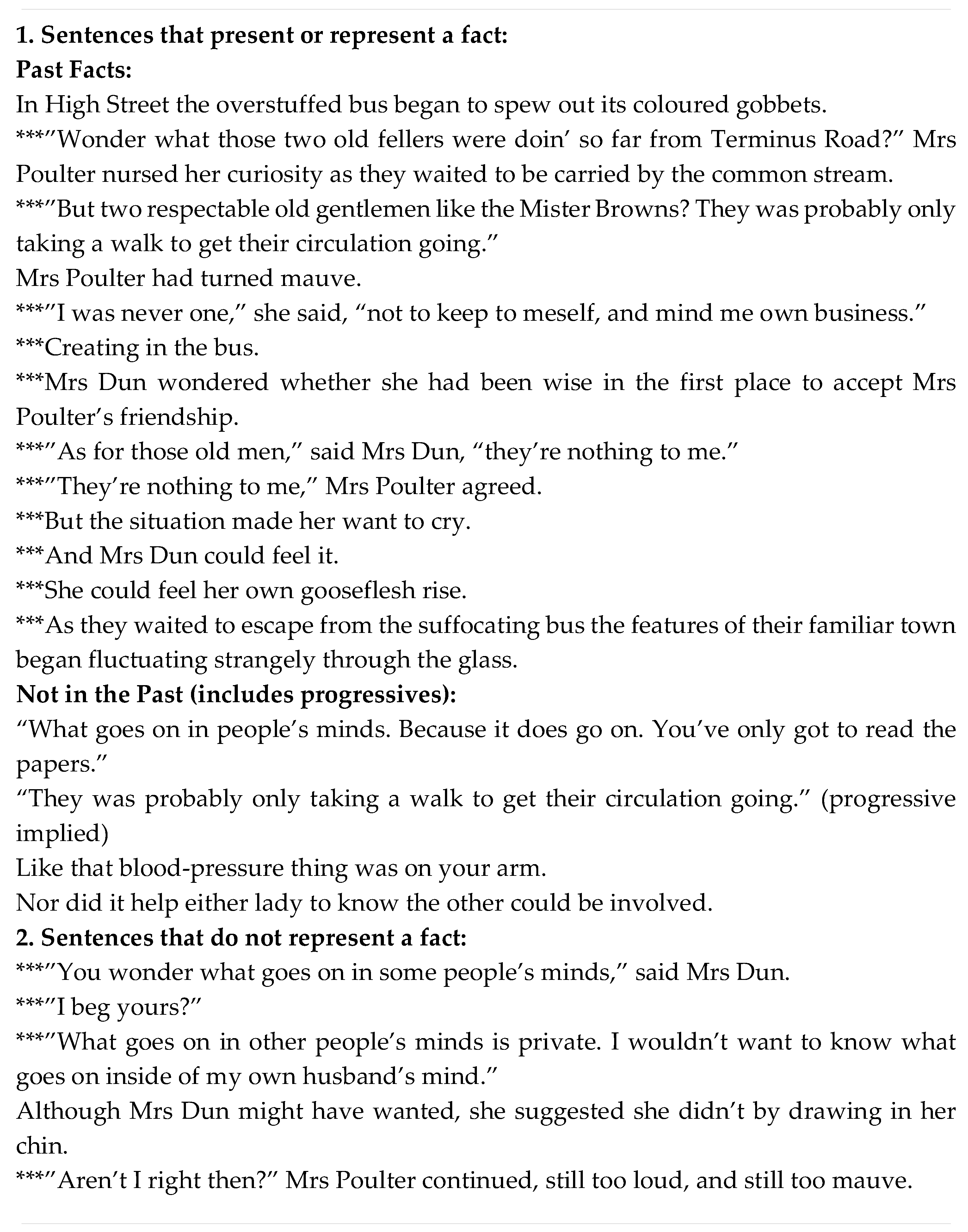

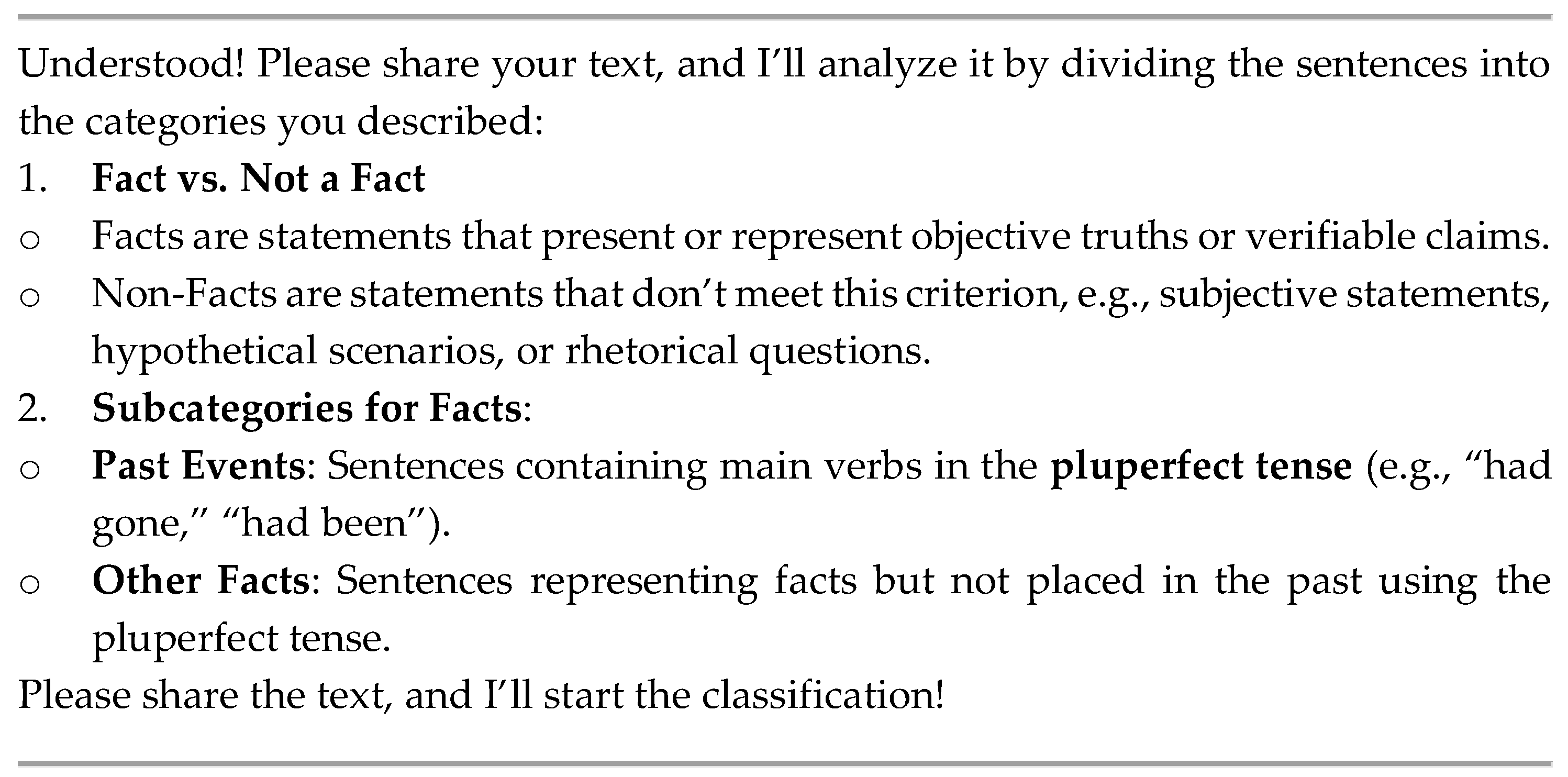

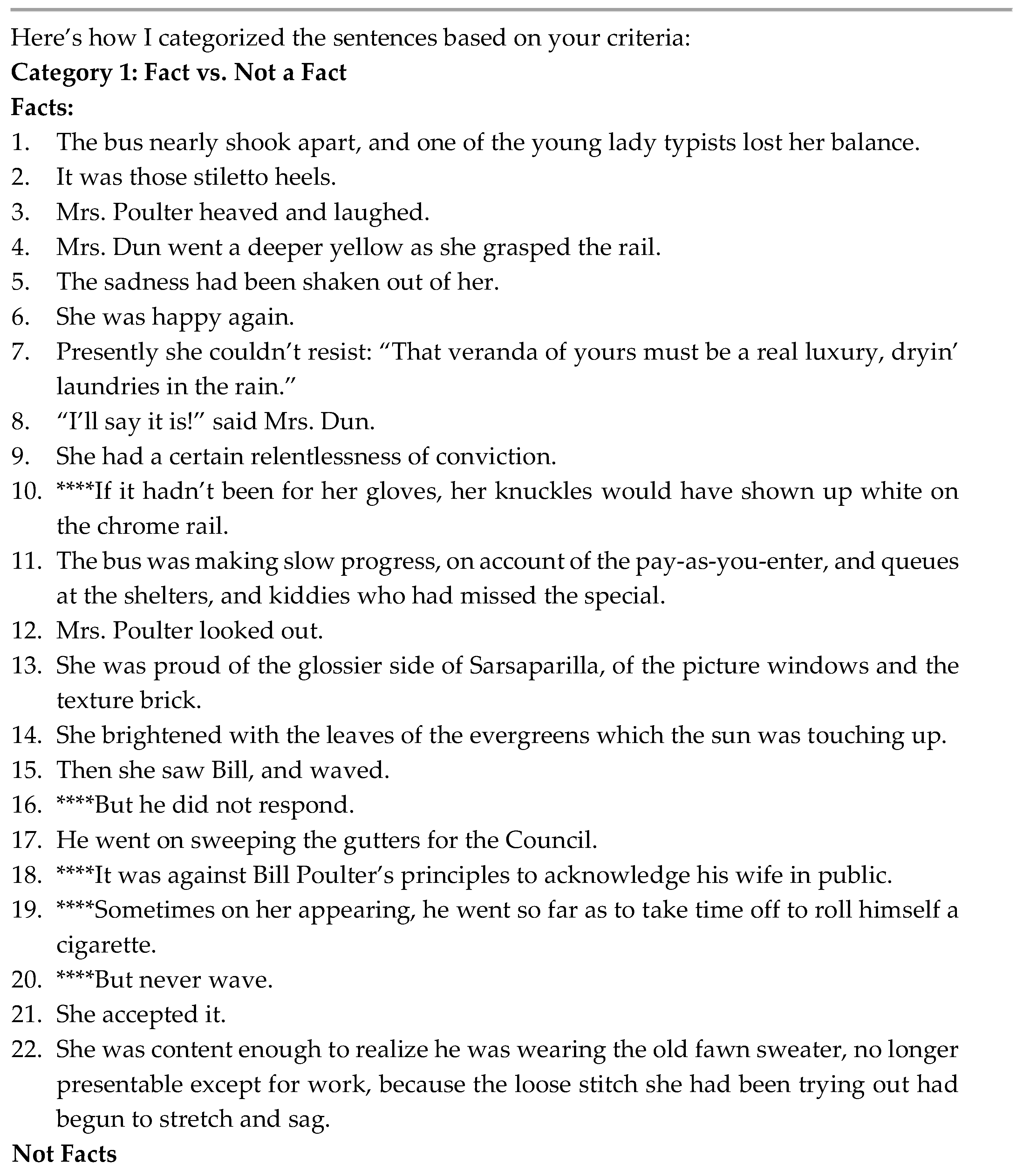

“I have a short text where you should divide up sentences at first into two categories: those that present or represent a fact and those that don’t. Then you should divide up those that represent a fact into two subcategories: those that are placed in the past and those that aren’t.”

“I have a short text where you should divide up sentences at first into two categories: those that present or represent a fact and those that don’t. Then you should divide up those that represent a fact into two subcategories: those that are placed in the past and those that aren’t, where past event clauses are those that have a main verb in the pluperfect tense.”

- Mrs. Poulter had turned mauve.

- Mrs. Dun wondered whether she had been wise in the first place to accept Mrs. Poulter’s friendship
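The two-step task set by the prompt can be approximated by a crude rule-based baseline, which may help to show what it involves: first flag non-factual sentences, then mark factual sentences whose main verb is in the pluperfect. The Python sketch below is hypothetical: the cue list, the subordinator list and the approximation of the main clause as "the text before the first subordinator" are our assumptions, not the method used in this paper, and a realistic system would need a syntactic parser.

```python
import re
from typing import Set

# Hypothetical cue words signalling non-factuality, loosely modelled on the
# Uncertainty tags (Seeming, Conditional, Possibility, Negation, ...).
# This is an illustrative list, not the authors' lexicon.
NON_FACTUAL_CUES: Set[str] = {
    "wondered", "wonder", "whether", "if", "perhaps", "might", "may",
    "could", "would", "seemed", "seems", "supposed", "not", "never",
}

# Words assumed to open a subordinate clause.
SUBORDINATORS: Set[str] = {"whether", "that", "if", "because", "when", "while", "although"}

# "had" followed by a participle: regular -ed/-en forms or a few irregular ones.
PLUPERFECT = re.compile(
    r"\bhad\s+(?:\w+(?:ed|en)|gone|done|made|come|become|got|said|felt|left|thought)\b",
    re.IGNORECASE,
)


def main_clause(sentence: str) -> str:
    """Crudely approximate the main clause as the text before the first subordinator."""
    words = sentence.split()
    for i, word in enumerate(words):
        if word.lower().strip(",;:.") in SUBORDINATORS:
            return " ".join(words[:i])
    return sentence


def classify(sentence: str) -> str:
    """Step 1: factual vs non-factual; step 2: pluperfect main verb or not."""
    tokens = set(re.findall(r"[a-z]+", sentence.lower()))
    if tokens & NON_FACTUAL_CUES:
        return "non-factual"
    if PLUPERFECT.search(main_clause(sentence)):
        return "factual/past"
    return "factual/not-past"


examples = [
    "Mrs. Poulter had turned mauve.",
    "Mrs. Dun wondered whether she had been wise in the first place "
    "to accept Mrs. Poulter's friendship",
]
print(classify(examples[0]))  # factual/past
print(classify(examples[1]))  # non-factual
```

Restricting the pluperfect test to the main clause matters: a sentence such as "She said that he had gone." contains a pluperfect only in its embedded clause, so this sketch labels it factual/not-past.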

4. Comparing GPT and Gemini

- oversimplification (dropping the restrictive modifier)

- wrong coreference chain (Mrs Dun rather than Mrs Poulter)

- wrong sense selection with ambiguous term (think)

- omitted cataphora with split referents (a strange man and Johnny Haynes)

- disjoined reference of MEMORY and Mother

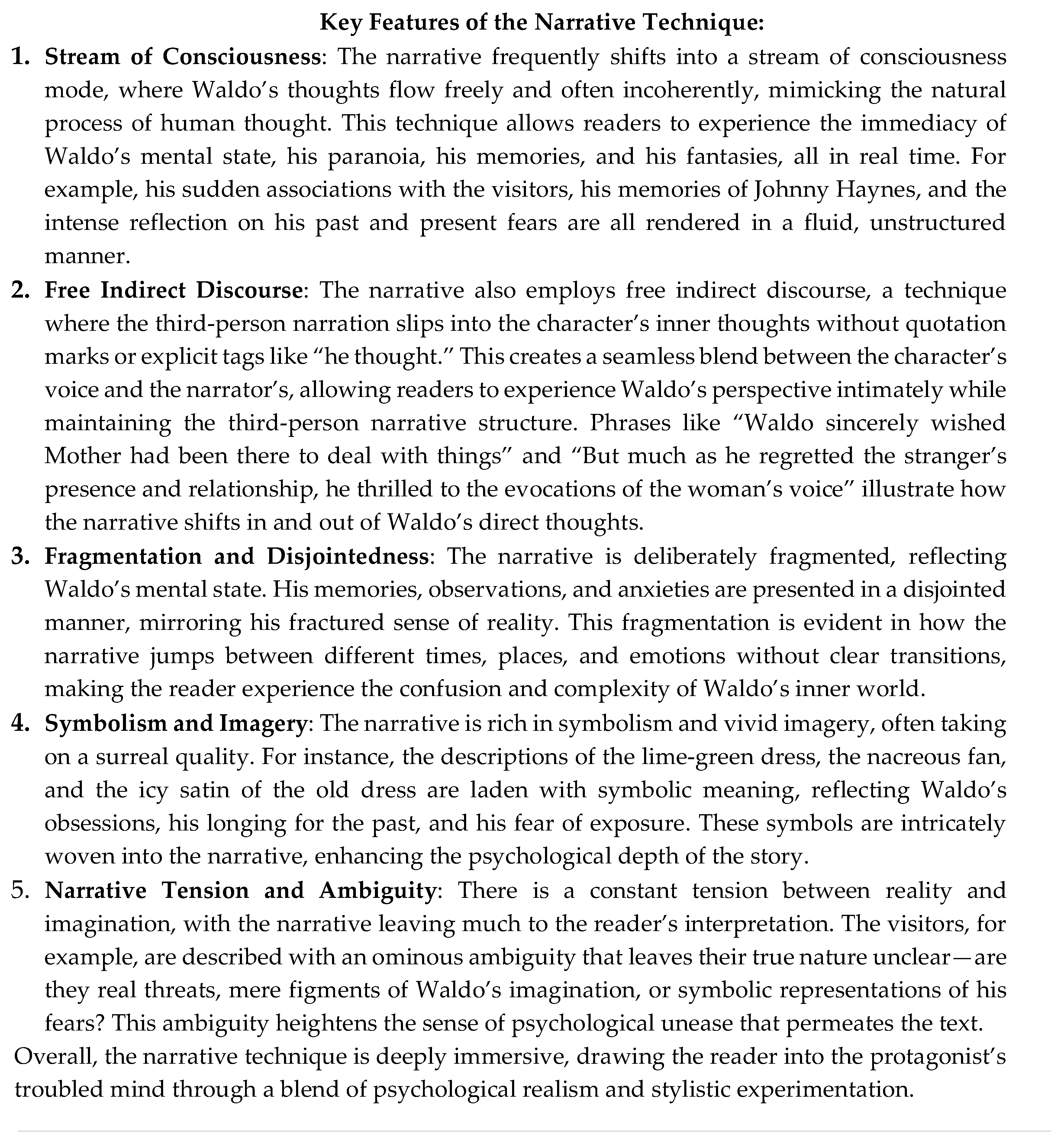

- use of stream of consciousness as narrative style

- mistakes in classifying factual vs non-factual sentences

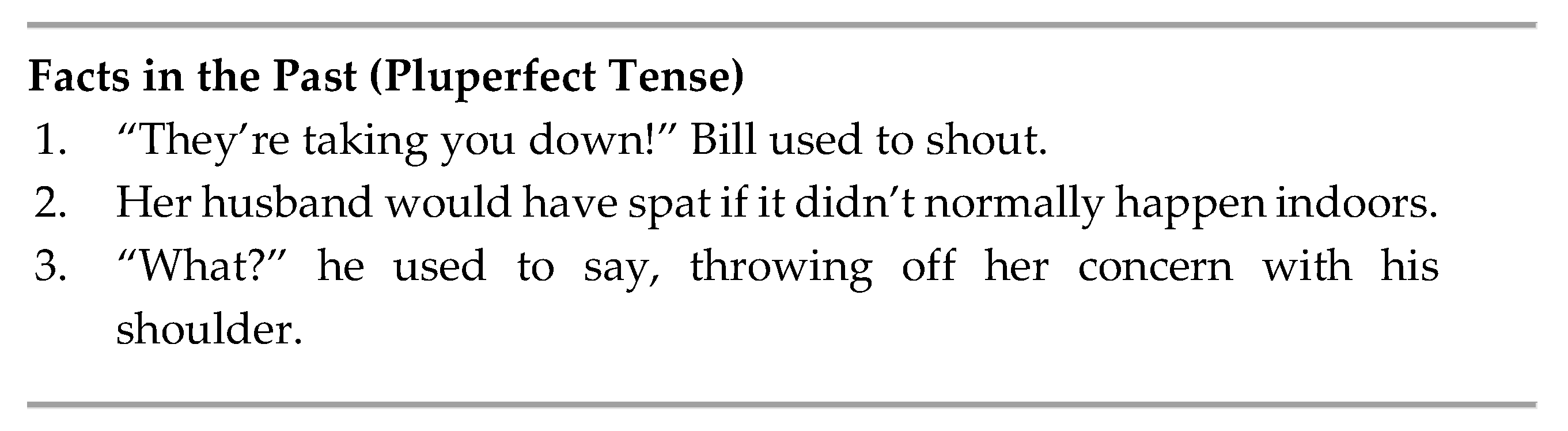

- mistakes in classifying factual sentences in the past with pluperfect

- inability to detect linguistic elements characterizing narrative style

- inability to characterize UNCERTAINTY in the verbal complex

- abstract key points substituted by direct extracted references

- Correct name of the author but wrong title of the novel

8. WRONG – Gemini classifies past vs. present sentences better than GPT, but still makes mistakes. The text actually contains one or two sentences in the pluperfect, yet Gemini wrongly declares that there are none and justifies this with the following observation:

9. RIGHT – Gemini provides specific key points dedicated to linguistic items, although these do not include what should have made the difference, namely verbal complexes.

10. RIGHT – UNCERTAINTY is described in great detail in two pages at the end of the Supplementary Materials. Below is the beginning of Gemini’s response to the question “And now I would like to know what is the role of the abstract notion of

11. RIGHT – The key themes listed as bullets in Gemini’s summaries are always abstractions. The following list from the Supplementary Materials shows that each excerpt has different themes, unlike GPT, whose themes are often repeated:

12. WRONG – As with GPT, Gemini gave the right name of the author but the wrong title of the novel. The response and the question are reported below:

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| OOVW | Out Of Vocabulary Word |
| NLP | Natural Language Processing |
| GPT | Generative Pre-Trained Transformer |
| LLM | Large Language Model |
| BERT | Bidirectional Encoder Representations from Transformers |
| DOI | Digital Object Identifier |
| RHT | Reasoning Hallucination Test |
| FCT | False Confidence Test |
| FQT | Fake Question Test |
| NOTA | None Of The Above |

Appendix A

Appendix B

References

- OpenAI. 2024b. Hello GPT-4o [Large language model]. https://chatgpt.com.

- Patrick White. 1966. The Solid Mandala. Eyre & Spottiswoode, London.

- Gordon Collier. 1992. The Rocks and Sticks of Words: Style, Discourse and Narrative Structure in the Fiction of Patrick White. Editions Rodopi B.V., Amsterdam and Atlanta.

- Rodolfo Delmonte and Giulia Marchesini. 2017. A Semantically-Based Computational Approach to Narrative Structure, in Harry Bunt (ed.), Proceedings of the 13th Joint ISO-ACL Workshop on Interoperable Semantic Annotation (isa-13), Workshop at the 12th International Conference on Computational Semantics (IWCS 2017), Montpellier, France, September 19, 2017, pp. 14-25. https://aclanthology.info/papers/W17-6906/w17-690 (accessed on January 17, 2025).

- Giulia Marchesini and Rodolfo Delmonte. 2017. A Semantically-Based Approach to the Annotation of Narrative Style, in Harry Bunt (ed.), Proceedings of the 13th Joint ISO-ACL Workshop on Interoperable Semantic Annotation (isa-13), Workshop at the 12th International Conference on Computational Semantics (IWCS 2017), Montpellier, France, September 19, 2017, pp. 14-25. http://aclweb.org/anthology/W/W17/#7402 (accessed on January 17, 2025).

- Gordon Collier. 1999. Apparent Feature-Anomalies in Subjectivized Third-Person Narration, in John Pier (ed.), Recent Trends in Narratological Research, Open Edition Books, PUFR, GRAAT, 21, pp. 129-151. https://books.openedition.org/pufr/3956 (accessed on January 17, 2025).

- J. Martin and P.R. White. 2005. The Language of Evaluation: Appraisal in English. Palgrave Macmillan, London and New York.

- J. Read and J. Carroll. 2012. Annotating Expressions of Appraisal in English. Language Resources and Evaluation, 46:421–447.

- Robin Emsley, 2023. ChatGPT: these are not hallucinations – they’re fabrications and falsifications, in Schizophrenia volume 9, Article number: 52 (2023).

- Ankit Pal, Logesh Kumar Umapathi, Malaikannan Sankarasubbu, 2023. Med-HALT: Medical Domain Hallucination Test for Large Language Models, in Proceedings of the 27th Conference on Computational Natural Language Learning (CoNLL), pages 314–334.

- McGowan A, Gui Y, Dobbs M, Shuster S, Cotter M, Selloni A, Goodman M, Srivastava A, Cecchi GA, Corcoran CM. 2023. ChatGPT and Bard exhibit spontaneous citation fabrication during psychiatry literature search. Psychiatry Research. https://pmc.ncbi.nlm.nih.gov/articles/PMC10424704/pdf/nihms-1918813.pdf (accessed on January 22, 2025).

- Joshua Maynez, Shashi Narayan, Bernd Bohnet, Ryan McDonald, 2020. On Faithfulness and Factuality in Abstractive Summarization, in Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 1906–1919.

- Kalev Hannes Leetaru, 2023. Hallucination In Summarization: When ChatGPT Hallucinated New Stories, https://blog.gdeltproject.org/hallucination-in-summarization-when-chatgpt-hallucinated-new-stories-in-an-evening-news-broadcast/ (accessed on January 21, 2025).

- Catarina G. Belem, Pouya Pezeshkpour, Hayate Iso, Seiji Maekawa, Nikita Bhutani, Estevam Hruschka, 2024. From Single to Multi: How LLMs Hallucinate in Multi-Document Summarization, Megagon Labs, University of California Irvine, arXiv:2410.13961v1.

- Philippe Laban, Alexander R. Fabbri, Caiming Xiong, Chien-Sheng Wu, 2024. Summary of a Haystack: A Challenge to Long-Context LLMs and RAG Systems, in Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, pages 9885–9903.

- Kung-Hsiang Huang, Philippe Laban, Alexander Fabbri, Prafulla Kumar Choubey, Shafiq Joty, Caiming Xiong, Chien-Sheng Wu, 2024. Embrace Divergence for Richer Insights: A Multi-document Summarization Benchmark and a Case Study on Summarizing Diverse Information from News Articles, in Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL), Volume 1: Long Papers, pages 570–593.

- Matthew Dahl, Varun Magesh, Mirac Suzgun, and Daniel E. Ho, 2024. Large Legal Fictions: Profiling Legal Hallucinations in Large Language Models, in Journal of Legal Analysis, Oxford University Press, 16, 64–93. https://doi.org/10.1093/jla/laae003 (accessed on January 21, 2025).

- Cem Uluoglakci and Tugba Taskaya Temizel, 2024. Terms Dataset for Benchmarking Hallucination Tendency of LLMs, in Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics: Student Research Workshop, pages 95–136.

- Sewon Min, Kalpesh Krishna, Xinxi Lyu, Mike Lewis, Wen-tau Yih, Pang Wei Koh, Mohit Iyyer, Luke Zettlemoyer, and Hannaneh Hajishirzi, 2023. FActScore: Fine-grained atomic evaluation of factual precision in long form text generation, in Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 12076–12100, Singapore. Association for Computational Linguistics.

- Prakamya Mishra, Zonghai Yao, Parth Vashisht, Feiyun Ouyang, Beining Wang, Vidhi Dhaval Mody, Hong Yu, 2024. SYNFAC-EDIT: Synthetic Imitation Edit Feedback for Factual Alignment in Clinical Summarization, in Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, pages 20061–20083.

- Adam Tauman Kalai, Santosh S. Vempala, 2024. Calibrated Language Models Must Hallucinate, arXiv:2311.14648v3 (accessed on January 21, 2025).

- Yekyung Kim, Yapei Chang, Marzena Karpinska, Aparna Garimella, Varun Manjunatha, Kyle Lo, Tanya Goyal, Mohit Iyyer, 2024. Evaluating faithfulness and content selection in book-length summarization, arXiv:2404.01261v2, also published as a conference paper at COLM 2024, https://github.com/mungg/FABLES (accessed on January 21, 2025).

- Chaofan Tao, Qian Liu, Longxu Dou, Niklas Muennighoff, Zhongwei Wan, Ping Luo, Min Lin, Ngai Wong, 2024. Scaling Laws with Vocabulary: Larger Models Deserve Larger Vocabularies, arXiv:2407.13623v3.

- Rodolfo Delmonte and Nicolò Busetto, 2022. Word Predictability is Based on Context - and/or Frequency, in David C. Wyld, Dhinaharan Nagamalai (eds), Proceedings of the 8th International Conference on Artificial Intelligence and Applications (AI 2022), October 29–30, 2022, Vienna, Austria, pp. 195-211.

- Rodolfo Delmonte and Nicolò Busetto, 2022. Stress Test for BERT and Deep Models: Predicting Words from Italian Poetry. International Journal on Natural Language Computing (IJNLC), Vol. 11, No. 6 (December 2022), pp. 15-37.

- Zellig S. Harris, 1954. Distributional structure. Word, 10:146–162.

- Stephen Clark, 2015. Vector space models of lexical meaning, in Shalom Lappin and Chris Fox (eds), The Handbook of Contemporary Semantic Theory, pp. 493–522. Wiley, New York.

- Katrin Erk, 2016. What do you know about an alligator when you know the company it keeps? Semantics and Pragmatics, 9:1–63.

- Roser Sauri and James Pustejovsky, 2009. FactBank: a corpus annotated with event factuality. Language Resources and Evaluation, 43(3):227–268.

- Roser Sauri and James Pustejovsky, 2012. Are you sure that this happened? Assessing the Factuality Degree of Events in Text. Computational Linguistics, 38(2):261–299.

- Google AI Studio, “Conversation with Gemini”, at https://aistudio.google.com/app/prompts/new_chat, (accessed on January 27, 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).