Submitted: 12 February 2025

Posted: 12 February 2025


Abstract

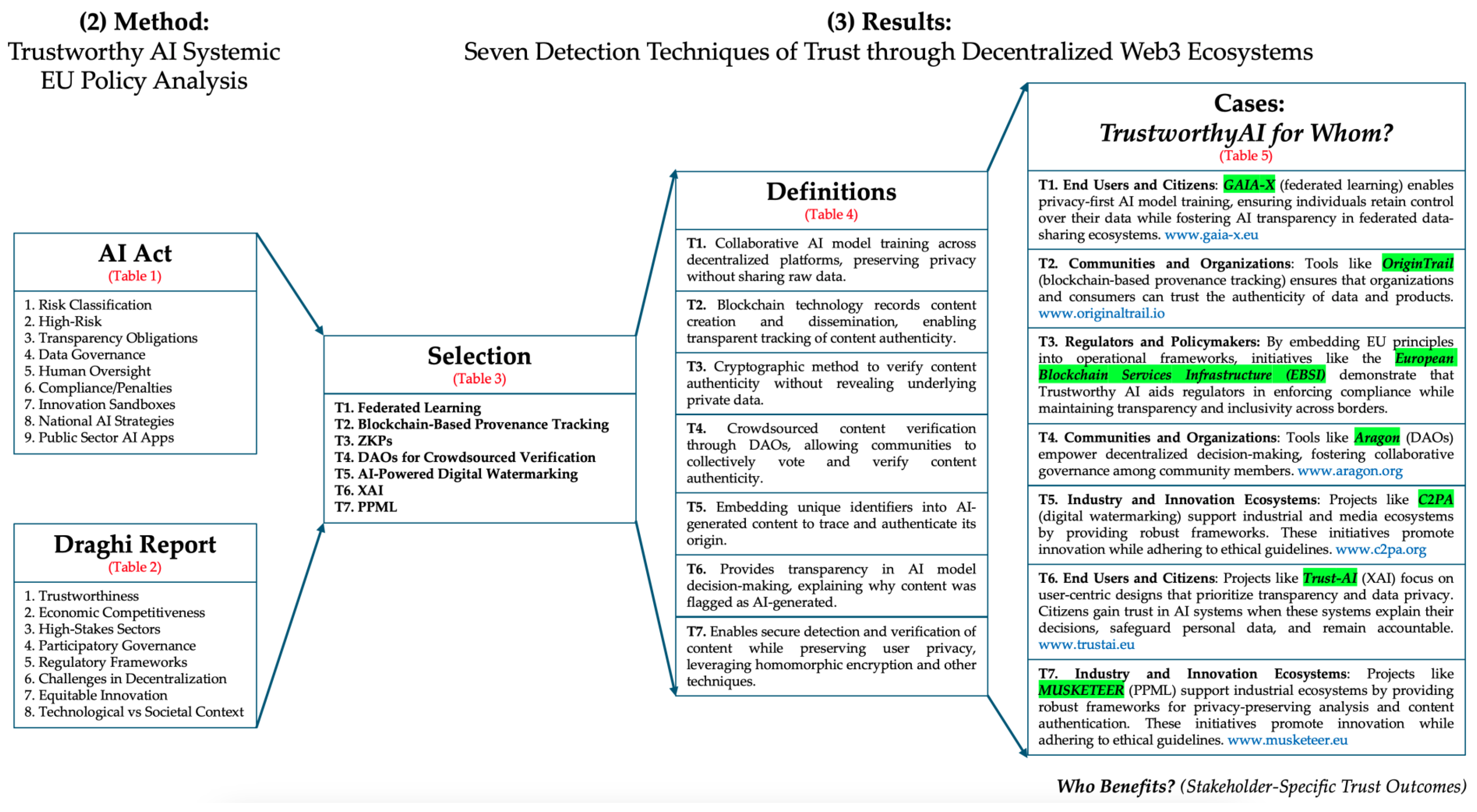

As generative AI (GenAI) technologies proliferate, ensuring trust and transparency in digital ecosystems becomes increasingly critical, particularly within democratic frameworks. This article examines decentralized Web3 mechanisms (blockchain, decentralized autonomous organizations (DAOs), and data cooperatives) as foundational tools for enhancing trust in GenAI. These mechanisms are analyzed within the framework of the EU’s AI Act and the Draghi Report, focusing on their potential to support content authenticity, community-driven verification, and data sovereignty. Based on a systematic policy analysis, this article proposes a multi-layered framework to mitigate the risks of AI-generated misinformation. Specifically, it identifies and evaluates seven detection techniques of trust stemming from the action research conducted in the Horizon Europe lighthouse project Enfield: (i) federated learning for decentralized AI detection, (ii) blockchain-based provenance tracking, (iii) Zero-Knowledge Proofs for content authentication, (iv) DAOs for crowdsourced verification, (v) AI-powered digital watermarking, (vi) explainable AI (XAI) for content detection, and (vii) Privacy-Preserving Machine Learning (PPML). By leveraging these approaches, the framework strengthens AI governance through peer-to-peer (P2P) structures while addressing the socio-political challenges of AI-driven misinformation. Ultimately, this research contributes to the development of resilient democratic systems in an era of increasing technopolitical polarization.

Keywords:

1. Introduction: Trustworthy AI for Whom?

- (i) Recent advances in digital watermarking present a scalable solution for distinguishing AI-generated content from human-authored material. SynthID-Text, a watermarking algorithm discussed by Dathathri et al. [142], provides an effective way to mark AI-generated text, ensuring that content remains identifiable without compromising its quality. This watermarking framework offers a pathway for managing AI’s outputs on a massive scale, potentially curbing the spread of misinformation. However, questions of accessibility and scalability remain, particularly in jurisdictions where trust infrastructures are underdeveloped. SynthID-Text’s deployment exemplifies how watermarking can help maintain trust in AI content, yet its application primarily serves contexts where technological infrastructure supports high computational demands, leaving out communities with limited resources.

- (ii) The concept of “personhood credentials” (PHCs) provides another lens for exploring trust. According to Adler et al. [143], PHCs allow users to authenticate as real individuals rather than AI agents, introducing a novel method for countering AI-powered deception. This system, based on zero-knowledge proofs, ensures privacy by verifying individuals’ authenticity without exposing personal details. While promising, PHCs may inadvertently centralize trust among issuing authorities, which could undermine local, decentralized trust systems. Additionally, the adoption of PHCs presents ethical challenges, particularly in regions where digital access is limited, raising further questions about inclusivity in digital spaces purportedly designed to be “trustworthy.”

- (iii) In the context of decentralized governance, Poblet et al. [133] highlight the role of blockchain-based oracles as tools for digital democracy, providing external information to support decision-making within blockchain networks. Oracles serve as intermediaries between real-world events and digital contracts, enabling secure, decentralized information transfer in applications like voting and community governance. Their use in digital democracy platforms has demonstrated potential for enhancing transparency and collective decision-making. Yet, this approach is not without challenges; the integration of oracles requires robust governance mechanisms to address biases and inaccuracies, especially when scaling across diverse socio-political landscapes. Thus, oracles provide valuable insights into building trustworthy systems, but their implementation remains context-dependent, raising critical questions about the universality of digital trust.
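To make the detection side of the text watermarking discussed in item (i) more concrete, the following is a minimal sketch of a keyed "green-list" detector. It is not the SynthID-Text algorithm described by Dathathri et al. [142]; it swaps in a simpler, widely discussed scheme in which a secret key pseudo-randomly marks roughly half of all tokens as "green" given the preceding token, and detection tests whether a text contains more green tokens than chance would predict. The key, the `GAMMA` value, the toy generator, and all function names are illustrative assumptions.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of tokens treated as "green" for any context


def is_green(prev_token: str, token: str, key: str) -> bool:
    """Keyed pseudo-random membership test for a (prev_token, token) pair."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode("utf-8")).digest()
    # Map the first 8 bytes of the digest to [0, 1) and compare with GAMMA.
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def green_z_score(tokens: list[str], key: str) -> float:
    """z-score of the green-token count under the 'no watermark' null hypothesis."""
    n = len(tokens) - 1  # number of scored (previous, current) pairs
    if n < 1:
        raise ValueError("need at least two tokens")
    hits = sum(is_green(p, t, key) for p, t in zip(tokens, tokens[1:]))
    return (hits - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


def embed_toy_watermark(seed_token: str, candidates: list[str],
                        length: int, key: str) -> list[str]:
    """Toy stand-in for a generator: always emit a green candidate if one exists.

    A real system would bias a language model's sampling instead.
    """
    out = [seed_token]
    for _ in range(length):
        green = [c for c in candidates if is_green(out[-1], c, key)]
        out.append(green[0] if green else candidates[0])
    return out


# Hypothetical usage: a holder of the key scores a marked token sequence.
vocab = [f"w{i}" for i in range(20)]
marked = embed_toy_watermark("start", vocab, 200, "secret")
score = green_z_score(marked, "secret")
```

A large positive `score` suggests the text was produced with the key. The z-test assumes the scored token pairs are roughly independent, so highly repetitive text can give misleading scores, and anyone without the key cannot run the test at all, which is one concrete form of the accessibility concern raised above.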

2. Method: Trustworthy AI Systematic EU Policy Analysis through AI Act and Draghi Report

2.1. AI Act at the Crossroads of Innovation and Responsibility

2.1.1. Risk Classification [43,58,59,71]: A Unified Framework with Tailored Enforcement

2.1.2. Human Oversight [160-179]: Enhancing Governance in Critical Sectors

2.1.3. Innovation Sandboxes: Bridging Compliance and Creativity

2.1.4. Sector-Specific Priorities: Aligning AI with Regional Significance

2.1.5. A Unified Vision with Localized Flexibility

2.1.6. Toward a Balanced Future?

| Aspect | EU-Wide Application Under AI Act | Country-Specific Focus [3,4] |
|---|---|---|
| 1. Risk Classification | AI systems are classified as unacceptable, high, limited, or minimal risk. | Individual states may prioritize specific sectors (e.g., healthcare in Germany, transportation in the Netherlands) where high-risk AI applications are more prevalent. |
| 2. High-Risk AI Requirements | Mandatory requirements for data quality, transparency, robustness, and oversight. | Enforcement and oversight approaches may vary, with some countries opting for stricter testing and certification processes. |
| 3. Transparency Obligations | Users must be informed when interacting with AI (e.g., chatbots, deepfakes). | Implementation might vary, with some countries adding requirements for specific sectors like finance (France) or public services (Sweden). |
| 4. Data Governance | Data used by AI systems must be free from bias and respect privacy. | States with stronger data protection laws, like Germany, may adopt stricter data governance and audit practices. |
| 5. Human Oversight | High-risk AI requires mechanisms for human intervention and control. | Emphasis may vary, with some states prioritizing human oversight in sectors like education (Spain) or labor (Italy). |
| 6. Compliance and Penalties | Non-compliance can result in fines of up to EUR 35 million or 7% of global annual turnover for the most serious infringements. | While fines are harmonized, enforcement strategies may differ based on each country's regulatory framework. |
| 7. Innovation Sandboxes | Creation of sandboxes to promote safe innovation in AI. | Some countries, like Denmark and Finland, have existing sandbox initiatives and may expand them to further support AI development. |
| 8. National AI Strategies | Member States align their AI strategies with the AI Act's principles. | Countries may adapt strategies to their economic strengths (e.g., robotics in Czechia, AI-driven fintech in Luxembourg). |
| 9. Public Sector AI Applications | Public services using AI must comply with the Act’s requirements. | Some countries prioritize transparency and ethics in government AI applications, with additional guidelines (e.g., Estonia and digital services). |

- (i) Federated Learning (Aligned with Data Governance and Privacy):

- · Enables distributed training on sensitive data without centralizing information, which supports privacy-preserving AI governance and compliance with the GDPR and the AI Act's high-risk AI requirements.

- · Example: Used in healthcare applications, allowing hospitals to collaboratively train AI models while preserving patient confidentiality.

- (ii) Blockchain-Based Provenance Tracking (Aligned with Transparency Obligations and Public Sector AI Applications):

- · Ensures an immutable provenance record for AI-generated content, enabling verifiable authenticity for AI-driven decisions, which is crucial in public services and media regulation.

- · Example: Applied in journalism and digital identity systems to authenticate content sources and prevent AI-generated misinformation.

- (iii) Zero-Knowledge Proofs (ZKPs) (Aligned with Data Governance and Compliance):

- · Allows verification of AI interactions without exposing sensitive data, reinforcing trust in decentralized AI systems while complying with strict data protection laws.

- · Example: Used in identity verification protocols, so that AI-driven authentication mechanisms can be verified without disclosing the underlying personal data.

- (iv) Decentralized Autonomous Organizations (DAOs) for Crowdsourced Verification (Aligned with Human Oversight and AI Governance):

- · Introduces community-driven AI auditing, ensuring democratic oversight in high-risk AI applications where centralized institutions may lack credibility or impartiality.

- · Example: Implemented in fact-checking initiatives, where DAOs enable collective content moderation and AI accountability mechanisms.

- (v) AI-Powered Digital Watermarking (Aligned with Transparency and Misinformation Regulation):

- · Embeds traceable markers into AI-generated content, ensuring that users are informed when interacting with AI-generated media, aligning with the AI Act’s transparency provisions.

- · Example: Used in deepfake detection and content verification systems, particularly in elections and media trust initiatives.

- (vi) Explainable AI (XAI) (Aligned with High-Risk AI Requirements and Human Oversight):

- · Enhances interpretability of AI decisions, ensuring accountability in high-stakes AI applications where explainability is legally mandated.

- · Example: Adopted in finance, legal, and medical AI models to provide clear justifications for algorithmic outcomes, addressing concerns over AI opacity.

- (vii) Privacy-Preserving Machine Learning (PPML) (Aligned with Compliance and Innovation Sandboxes):

- · Facilitates secure AI model training without compromising user privacy, enabling safe AI innovation in regulatory sandboxes while ensuring alignment with compliance standards.

- · Example: Used in cross-border AI collaborations, particularly in fintech and digital identity management, to protect personal data while enabling AI innovation.

- Operationalizing risk management and compliance measures within the AI Act’s framework.

- Providing real-world applications that ensure AI technologies align with democratic values such as transparency, accountability, and human oversight.

- Addressing the limitations of centralized AI governance by introducing decentralized, privacy-preserving, and community-driven trust mechanisms.
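As an illustration of how federated learning, item (i) above, keeps raw data with each participant, the following is a minimal sketch of federated averaging for a small linear model. It is a sketch under stated assumptions rather than a description of any deployment named in this article: the two "hospital" datasets, the learning rate, the number of rounds, and all function names are hypothetical, and a real system would add secure aggregation, authentication, and differential privacy.

```python
from typing import List, Tuple

Vector = List[float]
Dataset = List[Tuple[Vector, float]]  # (features, target) pairs held by one client


def local_update(weights: Vector, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Vector:
    """Run a few epochs of SGD for linear regression on one client's private data."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def federated_average(client_weights: List[Vector], client_sizes: List[int]) -> Vector:
    """Size-weighted average of client models; only weights are shared, never records."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def train(clients: List[Dataset], dim: int, rounds: int = 200) -> Vector:
    """Coordinator loop: broadcast the global model, collect local updates, average."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in clients]
        global_w = federated_average(updates, [len(d) for d in clients])
    return global_w


# Hypothetical data: two hospitals whose records follow y = 2*x + 1.
# Each feature vector is [1.0, x], so the learned weights are [bias, slope].
hospital_a = [([1.0, x], 2 * x + 1) for x in (0.0, 0.5, 1.0)]
hospital_b = [([1.0, x], 2 * x + 1) for x in (1.5, 2.0)]
model = train([hospital_a, hospital_b], dim=2)
```

In this toy setting `model` approaches the bias and slope of the underlying relationship even though neither dataset ever leaves its owner. Note that shared weights can still leak information about the training data, which is why federated learning is usually paired with the PPML techniques in item (vii).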

2.2. Draghi Report

2.2.1. Trustworthiness Beyond Technological Robustness

2.2.2. Economic Competitiveness vs. Ethical Equity

2.2.3. Trustworthiness in High-Stakes Sectors

2.2.4. Toward a Participatory and Inclusive Vision

- Balancing Economic Competitiveness and Ethical Integrity – AI-powered digital watermarking and blockchain-based provenance tracking ensure transparency in high-stakes sectors like journalism and finance, mitigating risks of AI-generated misinformation and algorithmic opacity without stifling innovation.

- Ensuring Trustworthiness in High-Stakes Sectors – Federated learning, PPML, and XAI provide privacy-preserving, interpretable AI governance models, essential for healthcare, law enforcement, and energy sectors, where bias mitigation and explainability are crucial.

- Advancing Participatory and Inclusive AI Governance – DAOs and ZKPs introduce decentralized verification models, shifting AI accountability from top-down regulatory enforcement to bottom-up community-driven governance, aligning with the Draghi Report’s call for inclusive AI ecosystems.
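The idea that a ZKP lets one party verify a claim without learning the underlying secret can be sketched with the interactive Schnorr identification protocol, a classic honest-verifier zero-knowledge proof of knowledge of a discrete logarithm. This is an illustrative assumption about what such a mechanism might look like, not the scheme used by any system discussed in this article. The group parameters below are toy values, far too small to be secure, and all function names are hypothetical.

```python
import secrets

# Toy group parameters (assumed for illustration only; insecure at this size):
# G = 2 generates a subgroup of prime order Q = 11 in the integers modulo P = 23.
P, Q, G = 23, 11, 2


def keygen():
    """Prover picks a secret x and publishes y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1  # secret in [1, Q-1]
    return x, pow(G, x, P)


def commit():
    """Prover picks a fresh random nonce r and sends the commitment t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def challenge():
    """Verifier replies with a random challenge c."""
    return secrets.randbelow(Q)


def respond(secret: int, nonce: int, c: int) -> int:
    """Prover answers with s = r + c*x mod Q; s alone reveals nothing about x."""
    return (nonce + c * secret) % Q


def verify(public: int, commitment: int, c: int, s: int) -> bool:
    """Verifier checks G^s == t * y^c (mod P) without ever seeing x."""
    return pow(G, s, P) == (commitment * pow(public, c, P)) % P


# Hypothetical usage: one round of the protocol.
x, y = keygen()
r, t = commit()
c = challenge()
accepted = verify(y, t, c, respond(x, r, c))
```

With a group this small a cheating prover can guess the challenge with probability 1/11 per round, so a practical deployment would use a cryptographically sized group or elliptic curve and typically a non-interactive variant.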

2.3. Trustworthy AI for Whom: Approaching from Decentralized Web3 Ecosystem Perspective

2.3.1. The Challenges of Detection Techniques for Trust through Decentralized Web3 Ecosystems

2.3.2. GenAI and Disinformation/Misinformation [11]: A Perfect Storm?

2.3.3. Ethical AI and Accountability in Decentralized Systems

2.3.4. The Role of Blockchain in AI Content Authentication

2.3.5. Transdisciplinary Approaches to AI Governance

2.3.6. Addressing the Elephant in the Room

2.4. Justification for the Relevance and Rigor of the Methodology

2.4.1. Bridging Policy and Practice for Technological Communities

2.4.2. The AI Act as a Framework for Risk Classification and Ethical Safeguards

2.4.3. The Draghi Report as a Vision for Strategic Resilience

2.4.4. Policy Relevance in Decentralized Web3 Ecosystems

2.4.5. Advancing Detection Techniques of Trust

2.4.6. A Transdisciplinary Perspective for a Complex Problem

3. Results: Seven Detection Techniques of Trust through Decentralized Web3 Ecosystems

- Regulatory Alignment – They directly address trust, transparency, and accountability challenges outlined in the AI Act and Draghi Report, ensuring compliance with risk classification, data sovereignty, and explainability mandates.

- Decentralized Suitability – Each technique is designed to function within decentralized Web3 environments, overcoming the limitations of centralized AI governance mechanisms.

- Operational Feasibility – These techniques have been successfully deployed in real-world use cases, as demonstrated by European initiatives such as GAIA-X, OriginTrail, C2PA, and EBSI, which integrate AI detection mechanisms into trustworthy governance frameworks.

Synergistic Effects: How These Techniques Complement Each Other

- 1. Enhancing Transparency & Provenance:

- ○ Blockchain-based provenance tracking (T2) and AI-powered watermarking (T5) create a dual-layer verification system: blockchain ensures immutability, while watermarking ensures content traceability at a granular level.

- ○ Example: In journalism and media trust, C2PA integrates blockchain and watermarking to validate the authenticity of AI-generated content.

- 2. Strengthening Privacy & Data Sovereignty:

- ○ Federated learning (T1) and Privacy-Preserving Machine Learning (T7) ensure that AI models can be trained and verified without compromising personal data, reinforcing compliance with GDPR and AI Act privacy mandates.

- ○ Example: The GAIA-X initiative integrates federated learning and PPML to enable secure AI data sharing across European industries.

- 3. Democratizing AI Governance:

- ○ DAOs (T4) and Explainable AI (T6) create transparent, participatory AI decision-making frameworks, ensuring AI accountability in decentralized ecosystems.

- ○ Example: The Aragon DAO model enables crowdsourced content verification while XAI ensures decisions remain interpretable and contestable.

- 4. Ensuring Robust AI Authentication:

- ○ ZKPs (T3) and Blockchain-Based Provenance Tracking (T2) create a dual-layer trust framework: ZKPs enable confidential verification, while blockchain ensures traceability.

- ○ Example: The European Blockchain Services Infrastructure (EBSI) integrates ZKPs and blockchain for secure and verifiable credential authentication.

- Addressing Specific Risks Identified in the AI Act & Draghi Report: They directly support risk classification, human oversight, transparency, and privacy protection.

- Ensuring AI Trustworthiness in Decentralized Governance: They prevent misinformation, verify AI-generated content authenticity, and democratize AI oversight, addressing trust deficits in decentralized AI ecosystems.

- Strengthening European Leadership in Trustworthy AI: They align with ongoing European AI initiatives (GAIA-X, EBSI, C2PA, MUSKETEER, Trust-AI), reinforcing Europe’s commitment to ethical AI innovation.
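The immutability property attributed to blockchain-based provenance tracking (T2) can be illustrated with a minimal hash-chained ledger, in which each record commits to the content's hash and to the previous record. This sketch shows tamper evidence only; it omits the consensus, replication, and digital signatures that an actual blockchain or content-credential system relies on, and it does not describe the internals of any initiative named above. The record fields, generator labels, and function names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, replace
from typing import List

GENESIS = "0" * 64  # assumed placeholder hash preceding the first record


@dataclass(frozen=True)
class ProvenanceRecord:
    content_hash: str  # SHA-256 of the media item
    generator: str     # hypothetical label, e.g. "model-x" or "human"
    prev_hash: str     # hash of the previous record, linking the chain
    record_hash: str   # hash over the three fields above


def _digest(content_hash: str, generator: str, prev_hash: str) -> str:
    payload = json.dumps([content_hash, generator, prev_hash]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(chain: List[ProvenanceRecord], content: bytes,
                  generator: str) -> List[ProvenanceRecord]:
    """Return a new chain with one more record linked to the current tip."""
    prev = chain[-1].record_hash if chain else GENESIS
    c_hash = hashlib.sha256(content).hexdigest()
    record = ProvenanceRecord(c_hash, generator, prev, _digest(c_hash, generator, prev))
    return chain + [record]


def verify_chain(chain: List[ProvenanceRecord]) -> bool:
    """Recompute every link; editing any earlier record breaks verification."""
    prev = GENESIS
    for rec in chain:
        expected = _digest(rec.content_hash, rec.generator, rec.prev_hash)
        if rec.prev_hash != prev or rec.record_hash != expected:
            return False
        prev = rec.record_hash
    return True


def is_registered(chain: List[ProvenanceRecord], content: bytes) -> bool:
    """Check whether this exact content was ever recorded."""
    h = hashlib.sha256(content).hexdigest()
    return any(rec.content_hash == h for rec in chain)


# Hypothetical usage: register two items, then relabel the first one.
chain = append_record([], b"article text", "human")
chain = append_record(chain, b"synthetic image bytes", "model-x")
tampered = [replace(chain[0], generator="model-x")] + chain[1:]
```

Here `verify_chain(chain)` succeeds while `verify_chain(tampered)` fails, because the relabeled record no longer matches its stored hash. Exact-hash lookup also shows why provenance alone is brittle: changing a single byte of the content makes it unregistered, which is the gap that watermarking (T5) is meant to cover.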

3.1. Federated Learning for Decentralized AI Detection (T1)

3.2. Blockchain-Based Provenance Tracking (T2)

3.3. Zero-Knowledge Proofs (ZKPs) for Content Authentication (T3)

3.4. DAOs for Crowdsourced Verification (T4)

3.5. AI-Powered Digital Watermarking (T5)

3.6. Explainable AI (XAI) for Content Detection (T6)

3.7. Privacy-Preserving Machine Learning (PPML) for Secure Content Verification (T7)

- Unlike abstract AI governance models, this article systematically identifies where and how these methods are implemented.

- Example: GAIA-X's federated learning directly translates into privacy-enhancing AI practices that ensure compliance with EU data sovereignty mandates.

- Second, it bridges policy and practice through empirical validation.

- The article does not rely on theoretical speculations; rather, it systematically aligns EU regulatory imperatives (AI Act, Draghi Report) with practical technological implementations.

- Example: EBSI’s integration of ZKPs addresses AI trust dilemmas by supporting privacy-preserving yet verifiable digital transactions, aligning directly with the EU’s cross-border regulatory frameworks.

- Unlike generic AI ethics proposals, this article makes crystal clear that Trustworthy AI must serve multiple actors, including citizens, regulators, industries, and communities.

- Example: DAOs empower communities by decentralizing AI governance, ensuring transparent, crowd-validated content oversight instead of opaque, corporate-controlled moderation.

4. Discussion and Conclusion

4.1. Discussions, Results, and Conclusions

4.2. Limitations

- (i) Technical and Operational Challenges: Many of the techniques discussed, such as federated learning and PPML, require advanced computational infrastructure and significant technical expertise. Their deployment in resource-constrained environments may therefore be limited, perpetuating global inequalities in digital access and trust frameworks.

- (ii) Ethical and Governance Gaps: While tools like DAOs and blockchain foster transparency and decentralization, they raise ethical concerns regarding power concentration among technologically savvy elites [28]. As recently noted by Calzada [28] and supported by Floridi's account of AI hype [248], decentralization does not inherently equate to democratization; instead, it risks replicating hierarchical structures in digital contexts.

- (iii) Regulatory Alignment and Enforcement: The AI Act and the Draghi Report provide robust policy frameworks, but their enforcement mechanisms remain uneven across EU member states. This regulatory fragmentation may hinder the uniform implementation of the detection techniques proposed.

- (iv) Public Awareness and Engagement: A significant barrier to adoption lies in the public’s limited understanding of decentralized technologies. As Medrado and Verdegem highlight [240], there is a need for more inclusive educational initiatives to bridge the knowledge gap and promote trust in AI governance systems.

- (v) Emergent Risks of AI: GenAI evolves rapidly, outpacing regulatory and technological safeguards. This dynamism introduces uncertainties about the long-term effectiveness of the proposed detection techniques.

4.3. Future Research Avenues

- (i) Context-Specific Adaptations: Further research is needed to tailor decentralized Web3 tools to diverse regional and cultural contexts. This involves integrating local governance norms and socio-political dynamics into the design and implementation of detection frameworks.

- (ii) Inclusive Governance Models: Building on the principles of participatory governance discussed by Mejias and Couldry [241], future studies should examine how multistakeholder frameworks can be institutionalized within decentralized ecosystems. Citizen assemblies, living labs, and co-design workshops offer promising methods for inclusive decision-making.

- (iii) User-Centric Design: Enhancing UX for detection tools such as digital watermarking and blockchain provenance tracking is crucial. Future research should focus on creating user-friendly interfaces that simplify complex functionalities, fostering greater public engagement and trust.

- (iv) Ethical and Legal Frameworks: Addressing the ethical and legal challenges posed by decentralized systems requires interdisciplinary collaboration. Scholars in law, ethics, and social sciences should work alongside technologists to develop governance models that balance innovation with accountability.

- (v) AI Literacy Initiatives: Expanding on Sieber et al. [Sieber], there is a need for targeted educational programs to improve public understanding of AI technologies. These initiatives could focus on empowering marginalized communities, ensuring equitable access to the benefits of AI.

- (vi) Monitoring and Evaluation Mechanisms: Future studies should investigate robust metrics for assessing the efficacy of detection techniques in real-world scenarios. This includes longitudinal studies to monitor their impact on trust, transparency, and accountability in decentralized systems.

- (vii) Emergent Technologies and Risks: Finally, research should anticipate the future trajectories of AI and Web3 ecosystems, exploring how emerging technologies such as quantum computing or advanced neural networks may impact trust frameworks.

- (viii) Learning from Urban AI: A potentially prominent field is emerging around the concept of Urban AI, which warrants further exploration. The question "Trustworthy AI for whom?" echoes the earlier query "Smart City for whom?", suggesting parallels between the challenges of integrating AI into urban environments and the broader quest for trustworthy AI [249-254]. Investigating the evolution of Urban AI as a distinct domain could provide valuable insights into the socio-technical dynamics of trust, governance, and inclusivity within AI-driven urban systems [255-257].

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alwaisi, S., Salah Al-Radhi, M. & Németh, G., (2023) Automated child voice generation: Methodology and implementation. 2023 International Conference on Speech Technology and Human-Computer Dialogue (SpeD), Bucharest, Romania, pp. 48-53. [CrossRef]

- Alwaisi, S. & Németh, G., (2024) Advancements in expressive speech synthesis: A review. Infocommunications Journal, 16(1), pp. 35-49. [CrossRef]

- European Commission. The Future of European Competitiveness: A Competitiveness Strategy for Europe. European Commission, September 2024. Available online: https://commission.europa.eu/topics/eu-competitiveness/draghi-report_en#paragraph_47059 (accessed on 18 November 2024).

- European Parliament and Council. Regulation (EU) 2024/1689 of 13 June 2024 Laying Down Harmonised Rules on Artificial Intelligence and Amending Regulations and Directives. Official Journal of the European Union. 2024, L 1689, 1–144. Available online: http://data.europa.eu/eli/reg/2024/1689/oj (accessed on 18 November 2024).

- Yang, F., Goldenfein, J., & Nickels, K. (2024). GenAI Concepts. Melbourne: ARC Centre of Excellence for Automated Decision-Making and Society RMIT University, and OVIC. [CrossRef]

- Insight & Foresight (2024). How Generative AI Will Transform Strategic Foresight.

- Amoore, L.; Campolo, A.; Jacobsen, B.; Rella, L. A world model: On the political logics of generative AI. Political Geography 2024, 113, 103134. [CrossRef]

- Chafetz, H., Saxena, S., & Verhulst, S.G., 2024. A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI. The GovLab. Available from: https://arxiv.org/abs/2405.04333 (Accessed 1 Sept 2024).

- Delacroix, S. (2024) Sustainable data rivers? Rebalancing the data ecosystem that underlies generative AI. Critical AI, 2(1), No Pagination Specified. [CrossRef]

- Gabriel, I. et al. (2024) The ethics of advanced AI assistants. arXiv preprint. https://arxiv.org/abs/2404.16244.

- Shin, D., Koerber, A., & Lim, J.S. (2024) Impact of misinformation from generative AI on user information processing: How people understand misinformation from generative AI. New Media & Society. [CrossRef]

- Tsai, L. L., Pentland, A., Braley, A., Chen, N., Enríquez, J. R., & Reuel, A. (2024) An MIT Exploration of Generative AI: From Novel Chemicals to Opera. MIT Governance Lab. [CrossRef]

- Weidinger, L., et al. (2023) Sociotechnical Safety Evaluation of Generative AI Systems. arXiv preprint. https://arxiv.org/abs/2310.11986.

- Allen, D. & Weyl, E.G. (2024) The Real Dangers of Generative AI. Journal of Democracy. 35(1): 147-162.

- Kitchin, R. The Data Revolution: Big Data, Open Data, Data Infrastructures and Their Consequences; Sage: London, UK, 2014.

- Cugurullo, F.; Caprotti, F.; Cook, M.; Karvonen, A.; McGuirk, P.; Marvin, S., Eds. Artificial Intelligence and the City: Urbanistic Perspectives on AI; Routledge: Abingdon, UK, 2024. [CrossRef]

- Farina, M., Yu, X. & Lavazza, A. (2023). Ethical considerations and policy interventions concerning the impact of generative AI tools in the economy and in society. AI and Ethics. [CrossRef]

- Calzada, I. (2021), Smart City Citizenship, Cambridge, Massachusetts: Elsevier Science Publishing Co Inc. [ISBN (Paperback): 978-0-12-815300-0]. [CrossRef]

- Aguerre, C., Campbell-Verduyn, M. & Scholte, J.A. (2024) Global Digital Data Governance: Polycentric Perspectives. Abingdon, UK: Routledge.

- Angelidou, M.; Sofianos, S. The Future of AI in Optimizing Urban Planning: An In-Depth Overview of Emerging Fields of Application. International Conference on Changing Cities VI: Spatial, Design, Landscape, Heritage & Socio-economic Dimensions. Rhodes Island, Greece, 24-28 June 2024.

- Polanyi, K. (1944) The Great Transformation: The Political and Economic Origins of Our Time. New York: Farrar & Rinehart.

- Solaiman, I., et al. (2019) Release Strategies and the Social Impacts of Language Models. arXiv preprint. https://arxiv.org/abs/1908.09203.

- Calzada, I. (2024), Artificial Intelligence for Social Innovation: Beyond the Noise of Algorithms and Datafication. Sustainability, 16(19), 8638. [CrossRef]

- Fang, R., et al. (2024). LLM Agents can Autonomously Hack Websites. arXiv preprint. https://arxiv.org/abs/2402.06664.

- Farina, M., Lavazza, A., Sartori, G. & Pedrycz, W. (2024). Machine learning in human creativity: status and perspectives. AI & Society. [CrossRef]

- Abdi, I.I. Digital Capital and the Territorialization of Virtual Communities: An Analysis of Web3 Governance and Network Sovereignty. 2024.

- Murray, A.; Kim, D.; Combs, J. The Promise of a Decentralized Internet: What is Web 3.0 and How Can Firms Prepare? Bus. Horiz. 2022, 65, 511–526. [Google Scholar] [CrossRef]

- Calzada, I. (2024) Decentralized Web3 Reshaping Internet Governance: Towards the Emergence of New Forms of Nation-Statehood? Future Internet. [CrossRef]

- Calzada, I. (2024) From data-opolies to decentralization? The AI disruption amid the Web3 Promiseland at stake in datafied democracies, in Visvizi, A., Corvello, V. and Troisi, O. (eds.) Research and Innovation Forum. Cham, Switzerland: Springer.

- Calzada, I. (2024) Democratic erosion of data-opolies: Decentralized Web3 technological paradigm shift amidst AI disruption. Big Data and Cognitive Computing. [CrossRef]

- Calzada, I. (2023) Disruptive technologies for e-diasporas: Blockchain, DAOs, data cooperatives, metaverse, and ChatGPT, Futures, 154(C), p. 103258. [CrossRef]

- Gebhardt, C.; Pique Huerta, J.M. Integrating Triple Helix and Sustainable Transition Research for Transformational Governance: Climate Change Adaptation and Climate Justice in Barcelona. Triple Helix 2024, 11, 107–130. [Google Scholar] [CrossRef]

- Allen, D., Frankel, E., Lim, W., Siddarth, D., Simons, J. & Weyl, E.G. (2023) Ethics of Decentralized Social Technologies: Lessons from Web3, the Fediverse, and Beyond, Harvard University Edmond & Lily Safra Center for Ethics. Available from: https://myaidrivecom/view/file-A5rvW7aJ8emgJMG8wKH3WDTz (Accessed 1 September 2024).

- De Filippi, P.; Cossar, S.; Mannan, M.; Nabben, K.; Merk, T.; Kamalova, J. Report on Blockchain Governance Dynamics. Project Liberty Institute and BlockchainGov, May 2024. Available online: https://www.projectliberty.io/institute (accessed on 20 November 2024).

- Daraghmi, E.; Hamoudi, A.; Abu Helou, M. Decentralizing Democracy: Secure and Transparent E-Voting Systems with Blockchain Technology in the Context of Palestine. Future Internet 2024, 16, 388. [Google Scholar] [CrossRef]

- Liu, X.; Xu, R.; Chen, Y. A Decentralized Digital Watermarking Framework for Secure and Auditable Video Data in Smart Vehicular Networks. Future Internet 2024, 16, 390. [Google Scholar] [CrossRef]

- Moroni, S. Revisiting subsidiarity: Not only administrative decentralization but also multidimensional polycentrism. Cities 2024, 155, 105463. [CrossRef]

- Van Kerckhoven, S. & Chohan, U.W. (2024) Decentralized Autonomous Organizations: Innovation and Vulnerability in the Digital Economy. Oxon, UK: Routledge.

- Singh, A., Lu, C., Gupta, G., Chopra, A., Blanc, J., Klinghoffer, T., Tiwary, K., & Raskar, R. (2024). A perspective on decentralizing AI. MIT Media Lab.

- Mathew, A.J. The myth of the decentralised internet. Internet Policy Review, 2016, 9 (3). https://policyreview.info/articles/analysis/myth-decentralised-internet.

- Zook, M. (2023) Platforms, blockchains and the challenges of decentralization. Cambridge Journal of Regions, Economy and Society, 16(2), pp. 367–372.

- Kneese, T. & Oduro, S. (2024) AI Governance Needs Sociotechnical Expertise: Why the Humanities and Social Sciences are Critical to Government Efforts. Data & Society Policy Brief. 1-10.

- OECD. Assessing Potential Future Artificial Intelligence Risks, Benefits and Policy Imperatives. OECD Artificial Intelligence Papers. No. 27, November 2024. Available online: https://oecd.ai/site/ai-futures (accessed on 20 November 2024).

- Nabben, K.; De Filippi, P. Accountability protocols? On-chain dynamics in blockchain governance. Internet Policy Review. [CrossRef]

- Nanni, R., Bizzaro, P. G., & Napolitano, M. (2024). The false promise of individual digital sovereignty in Europe: Comparing artificial intelligence and data regulations in China and the European Union. Policy & Internet. [CrossRef]

- Schroeder, R. (2024). Content moderation and the digital transformations of gatekeeping. Policy & Internet. [CrossRef]

- Gray, J.E., Hutchinson, J., Stilinovic, M. and Tjahja, N. (2024), The pursuit of ‘good’ Internet policy. Policy & Internet. [CrossRef]

- Pohle, J.; Santaniello, M. From multistakeholderism to digital sovereignty: Toward a new discursive order in internet governance. Policy & Internet. [CrossRef]

- Viano, C., Avanzo, S., Cerutti, M., Cordero, A., Schifanella, C. & Boella, G. (2022) Blockchain tools for socio-economic interactions in local communities. Policy and Society. [CrossRef]

- Karatzogianni, A., Tiidenberg, K., & Parsanoglou, D. (2022). The impact of technological transformations on the digital generation: Digital citizenship policy analysis (Estonia, Greece, and the UK). DigiGen Policy Brief, April 2022. [CrossRef]

- European Commission. Commission Guidelines on Prohibited Artificial Intelligence Practices Established by Regulation (EU) 2024/1689 (AI Act); European Commission: Brussels, Belgium, 2025. Available online: https://digital-strategy.ec.europa.eu/en/library/second-draft-general-purpose-ai-code-practice-published-written-independent-experts (accessed on 9 February 2025).

- Huang, J.; Bibri, S.E.; Keel, P. Generative Spatial Artificial Intelligence for Sustainable Smart Cities: A Pioneering Large Flow Model for Urban Digital Twin. Environ. Sci. Ecotechnol. 2025, 24, 100526. [Google Scholar] [CrossRef]

- European Commission. Commission Guidelines on Prohibited Artificial Intelligence Practices - ANNEX; European Commission: Brussels, Belgium, 2025; Available online: https://ec.europa.eu (accessed on 9 February 2025).

- European Commission. Regulation (EU) 2024/1689 on Harmonised Rules on Artificial Intelligence (AI Act); European Commission: Brussels, Belgium, 2025; Available online: https://ec.europa.eu (accessed on 9 February 2025).

- Petropoulos, A.; Pataki, B.; Juijn, D.; Janků, D.; Reddel, M. Building CERN for AI: An Institutional Blueprint; Centre for Future Generations: Brussels, Belgium, 2025; Available online: http://www.cfg.eu/building-cern-for-ai (accessed on 9 February 2025).

- National Technical Committee 260 on Cybersecurity of SAC. AI Safety Governance Framework; China, 2024. Available online: https://gov.cn (accessed on 9 February 2025).

- Creemers, R. China’s Emerging Data Protection Framework. J. Cybersecur. 2022, 8, 1–12. [Google Scholar] [CrossRef]

- Raman, D.; Madkour, N.; Murphy, E.R.; Jackson, K.; Newman, J. Intolerable Risk Threshold Recommendations for Artificial Intelligence; Center for Long-Term Cybersecurity, UC Berkeley: Berkeley, CA, USA, 2025; Available online: https://cltc.berkeley.edu (accessed on 9 February 2025).

- Wald, B. Artificial Intelligence and First Nations: Risks and Opportunities; Ministry of Health: Canada, 2025. Available online: https://firstnations.ai/report.pdf (accessed on 9 February 2025).

- Zeng, Y. Global Index for AI Safety: AGILE Index on Global AI Safety Readiness; International Research Center for AI Ethics and Governance, Chinese Academy of Sciences: Beijing, China, 2025. [Google Scholar]

- Iosad, A.; Railton, D.; Westgarth, T. Governing in the Age of AI: A New Model to Transform the State; Tony Blair Institute for Global Change: London, UK, 2024; Available online: https://tonyblairinstitute.org/ai-governance (accessed on 9 February 2025).

- UN-Habitat. World Smart Cities Outlook 2024; UN-Habitat: Nairobi, Kenya, 2024; Available online: https://unhabitat.org/smartcities2024 (accessed on 9 February 2025).

- Popelka, S.; Narvaez Zertuche, L.; Beroche, H. Urban AI Guide 2023; Urban AI: Paris, France, 2023; Available online: https://urbanai.org/guide2023 (accessed on 9 February 2025).

- World Economic Forum. The Global Public Impact of GovTech: A $9.8 Trillion Opportunity; WEF: Geneva, Switzerland, 2025; Available online: https://weforum.org/govtech2025 (accessed on 9 February 2025).

- Boonstra, M.; Bruneault, F.; Chakraborty, S.; Faber, T.; Gallucci, A.; et al. Lessons Learned in Performing a Trustworthy AI and Fundamental Rights Assessment. arXiv 2024. Available online: https://arxiv.org/abs/2401.12345 (accessed on 9 February 2025).

- UK Government. AI Opportunities Action Plan: Government Response; Department for Science, Innovation & Technology: London, UK, 2025; Available online: https://www.gov.uk/government/publications/ai-opportunities-action-plan (accessed on 9 February 2025).

- Ben Dhaou, S.; Isagah, T.; Distor, C.; Ruas, I.C. Global Assessment of Responsible Artificial Intelligence in Cities: Research and Recommendations to Leverage AI for People-Centred Smart Cities; United Nations Human Settlements Programme (UN-Habitat): Nairobi, Kenya, 2024; Available online: https://www.unhabitat.org (accessed on 9 February 2025).

- David, A.; Yigitcanlar, T.; Desouza, K.; Li, R.Y.M.; Cheong, P.H.; Mehmood, R.; Corchado, J. Understanding Local Government Responsible AI Strategy: An International Municipal Policy Document Analysis. Cities 2024, 155, 105502. [Google Scholar] [CrossRef]

- Bipartisan House AI Task Force. Leading AI Progress: Policy Insights and a U.S. Vision for AI Adoption, Responsible Innovation, and Governance; United States Congress: Washington, DC, USA, 2025; Available online: https://www.house.gov/ai-task-force (accessed on 9 February 2025).

- World Bank. Global Trends in AI Governance: Evolving Country Approaches; World Bank: Washington, DC, USA, 2024; Available online: https://www.worldbank.org/ai-governance (accessed on 9 February 2025).

- World Economic Forum. The Global Risks Report 2025; WEF: Geneva, Switzerland, 2025; Available online: https://www.weforum.org/publications/global-risks-report-2025 (accessed on 9 February 2025).

- World Economic Forum. Navigating the AI Frontier: A Primer on the Evolution and Impact of AI Agents; WEF: Geneva, Switzerland, 2024; Available online: https://www.weforum.org/ai-frontier (accessed on 9 February 2025).

- Claps, M.; Barker, L. Moving from “Why AI” to “How to AI” — A Playbook for Governments Procuring AI and GenAI; IDC Government Insights: Washington, DC, USA, 2024; Available online: https://idc.com/research/ai-procurement (accessed on 9 February 2025).

- Couture, S.; Toupin, S.; Mayoral Baños, A. Resisting and Claiming Digital Sovereignty: The Cases of Civil Society and Indigenous Groups. Policy Internet 2025, 1, 1–11. [Google Scholar] [CrossRef]

- Pohle, J.; Nanni, R.; Santaniello, M. Unthinking Digital Sovereignty: A Critical Reflection on Origins, Objectives, and Practices. Policy Internet 2025, 1, 1–6. [Google Scholar] [CrossRef]

- European Commission. The Potential of Generative AI for the Public Sector: Current Use, Key Questions, and Policy Considerations; Digital Public Governance, Joint Research Centre: Brussels, Belgium, 2025; Available online: https://ec.europa.eu/jrc-genai (accessed on 9 February 2025).

- Heeks, R.; Wall, P.J.; Graham, M. Pragmatist-Critical Realism as a Development Studies Research Paradigm. Dev. Stud. Res. 2025, 12, 2439407. [Google Scholar] [CrossRef]

- García, A.; Alarcón, Á.; Quijano, H.; Kruger, K.; Narváez, S.; Alimonti, V.; Flores, V.; Mendieta, X. Privacidad en Desplazamiento Migratorio; Coalición Latinoamericana #MigrarSinVigilancia: Mexico City, Mexico, 2024; Available online: https://migrarsinvigilancia.org (accessed on 9 February 2025).

- PwC Global. Agentic AI – The New Frontier in GenAI; PwC: London, UK, 2025; Available online: https://pwc.com/ai-strategy (accessed on 9 February 2025).

- De Filippi, P.; Cossar, S.; Mannan, M.; Nabben, K.; Merk, T.; Kamalova, J. Report on Blockchain Governance Dynamics; Project Liberty Institute & BlockchainGov: Paris, France, 2024; Available online: https://blockchaingov.eu (accessed on 9 February 2025).

- Sharkey, A. Could a Robot Feel Pain? AI & Soc. 2024. [CrossRef]

- Behuria, P. Is the Study of Development Humiliating or Emancipatory? The Case Against Universalising ‘Development’. Eur. J. Dev. Res. [CrossRef]

- World Economic Forum. The Future of Jobs Report 2025; WEF: Geneva, Switzerland, 2025; Available online: https://www.weforum.org/reports/the-future-of-jobs-report-2025 (accessed on 9 February 2025).

- Bengio, Y.; Mindermann, S.; Privitera, D.; Besiroglu, T.; Bommasani, R.; Casper, S.; et al. International AI Safety Report 2025; AI Safety Institute: London, UK, 2025; Available online: https://www.nationalarchives.gov.uk/doc/open-government-licence/version/3/ (accessed on 9 February 2025).

- European Commission Joint Research Centre. Data Sovereignty for Local Governments: Enablers and Considerations; JRC Report No. 138657, European Commission: Brussels, Belgium, 2025; Available online: https://ec.europa.eu/jrc (accessed on 9 February 2025).

- OECD. AI and Governance: Regulatory Approaches to AI and Their Global Implications; OECD Publishing: Paris, France, 2024; Available online: https://www.oecd.org/ai-regulation (accessed on 9 February 2025).

- OECD. Digital Public Infrastructure for Digital Governments; OECD Public Governance Policy Papers No. 68, OECD Publishing: Paris, France, 2024; Available online: https://www.oecd.org/digitalpublic-infrastructure (accessed on 9 February 2025).

- OpenAI. AI in America: OpenAI's Economic Blueprint; OpenAI: San Francisco, CA, USA, 2025; Available online: https://openai.com (accessed on 9 February 2025).

- Majcher, K. (Ed.). Charting the Digital and Technological Future of Europe: Priorities for the European Commission (2024-2029); European University Institute: Florence, Italy, 2024; Available online: https://www.eui.eu (accessed on 9 February 2025).

- Savova, V. Navigating Privacy in Crypto: Current Challenges and (Future) Solutions. Educ. Sci. Res. Innov. 71–85. [CrossRef]

- Nicole, S.; Mishra, V.; Bell, J.; Kastrop, C.; Rodriguez, M. Digital Infrastructure Solutions to Advance Data Agency in the Age of Artificial Intelligence; Project Liberty Institute & Global Solutions Initiative: Paris, France, 2024; Available online: https://projectliberty.io (accessed on 9 February 2025).

- Nicole, S.; Vance-Law, S.; Spelliscy, C.; Bell, J. Towards Data Cooperatives for a Sustainable Digital Economy; Project Liberty Institute & Decentralization Research Center: New York, USA, 2025; Available online: https://decentralizationresearch.org (accessed on 9 February 2025).

- Qlik. Maximizing Data Value in the Age of AI; Qlik: 2024. Available online: https://qlik.com (accessed on 9 February 2025).

- Lauer, R.; Merkel, S.; Bosompem, J.; Langer, H.; Naeve, P.; Herten, B.; Burmann, A.; Vollmar, H.C.; Otte, I. (Data-) Cooperatives in Health and Social Care: A Scoping Review. J. Public Health 2024. [CrossRef]

- Kaal, W.A. AI Governance via Web3 Reputation System. Stanford J. Blockchain Law Policy 2025. Available online: https://stanford-jblp.pubpub.org/pub/aigov-via-web3 (accessed on 9 February 2025).

- Roberts, T.; Oosterom, M. Digital Authoritarianism: A Systematic Literature Review. Inf. Technol. Dev. 2024. [CrossRef]

- Roberts, H.; Hine, E.; Floridi, L. Digital Sovereignty, Digital Expansionism, and the Prospects for Global AI Governance. SSRN Electron. J. 2024. Available online: https://ssrn.com/abstract=4483271 (accessed on 9 February 2025).

- European Committee of the Regions. AI and GenAI Adoption by Local and Regional Administrations; European Union: Brussels, Belgium, 2024; Available online: https://cor.europa.eu (accessed on 9 February 2025).

- French Artificial Intelligence Commission. AI: Our Ambition for France; French AI Commission: Paris, France, 2024; Available online: https://gouvernement.fr (accessed on 9 February 2025).

- UK Government. Copyright and Artificial Intelligence; Intellectual Property Office: London, UK, 2024; Available online: https://www.gov.uk/government/consultations/copyright-and-artificial-intelligence (accessed on 9 February 2025).

- Institute of Development Studies. Indigenous Knowledge and Artificial Intelligence; IDS Series: Navigating Data Landscapes: Brighton, UK, 2024; Available online: https://www.ids.ac.uk (accessed on 9 February 2025).

- Congressional Research Service. Indigenous Knowledge and Data: Overview and Issues for Congress; CRS Report No. R48317, CRS: Washington, DC, USA, 2024; Available online: https://crsreports.congress.gov (accessed on 9 February 2025).

- UK Government. AI Opportunities Action Plan; Department for Science, Innovation and Technology: London, UK, 2025; Available online: https://www.gov.uk/official-documents (accessed on 9 February 2025).

- Centre for Information Policy Leadership (CIPL). Applying Data Protection Principles to Generative AI: Practical Approaches for Organizations and Regulators; CIPL: Washington, DC, USA, 2024; Available online: https://www.informationpolicycentre.com (accessed on 9 February 2025).

- Holgersson, M.; Dahlander, L.; Chesbrough, H.; Bogers, M.L.A.M. Open Innovation in the Age of AI. Calif. Manag. Rev. 2024, 67, 5–20. [Google Scholar] [CrossRef]

- State of California. State of California Guidelines for Evaluating Impacts of Generative AI on Vulnerable and Marginalized Communities; Office of Data and Innovation: Sacramento, CA, USA, 2024; Available online: https://www.genai.ca.gov (accessed on 9 February 2025).

- Bogen, M.; Deshpande, C.; Joshi, R.; Radiya-Dixit, E.; Winecoff, A.; Bankston, K. Assessing AI: Surveying the Spectrum of Approaches to Understanding and Auditing AI Systems; Center for Democracy & Technology: Washington, DC, USA, 2025; Available online: https://cdt.org (accessed on 9 February 2025).

- Majcher, K. (Ed.). Charting the Digital and Technological Future of Europe: What Priorities for the European Commission in 2024–2029? European University Institute: Florence, Italy, 2024; Available online: https://www.eui.eu (accessed on 9 February 2025).

- Mannan, M.; Schneider, N.; Merk, T. Cooperative Online Communities. In The Routledge Handbook of Cooperative Economics and Management; Routledge: London, UK, 2024; pp. 411–432. [CrossRef]

- Durmus, M. Critical Thinking is Your Superpower: Cultivating Critical Thinking in an AI-Driven World; Mindful AI Press: 2024. Available online: https://mindful-ai.org (accessed on 9 February 2025).

- Lustenberger, M.; Spychiger, F.; Küng, L.; Cuadra, P. Mastering DAOs: A Practical Guidebook for Building and Managing Decentralized Autonomous Organizations; ZHAW Institute for Organizational Viability: Zurich, Switzerland, 2024; Available online: https://dao-handbook.org (accessed on 9 February 2025).

- Fritsch, R.; Müller, M.; Wattenhofer, R. Analyzing Voting Power in Decentralized Governance: Who Controls DAOs? arXiv 2024. Available online: https://arxiv.org/abs/2204.01176 (accessed on 9 February 2025).

- EuroHPC Joint Undertaking. Selection of the First Seven AI Factories to Drive Europe's Leadership in AI; EuroHPC JU: Luxembourg, 2024; Available online: https://eurohpc-ju.europa.eu/index_en (accessed on 9 February 2025).

- Marchal, N.; Xu, R.; Elasmar, R.; Gabriel, I.; Goldberg, B.; Isaac, W. Generative AI Misuse: A Taxonomy of Tactics and Insights from Real-World Data. arXiv 2024. Available online: https://arxiv.org/abs/2406.13843 (accessed on 9 February 2025).

- Davenport, T.H.; Gupta, S.; Wang, R. SuperTech Leaders and the Evolution of Technology and Data Leadership; ThoughtWorks: 2024. Available online: https://thoughtworks.com (accessed on 9 February 2025).

- European Securities and Markets Authority (ESMA). Final Report on the Guidelines on the Conditions and Criteria for the Qualification of Crypto-Assets as Financial Instruments; ESMA: Paris, France, 2024; Available online: https://www.esma.europa.eu (accessed on 9 February 2025).

- Shrishak, K. AI-Complex Algorithms and Effective Data Protection Supervision: Bias Evaluation; European Data Protection Board (EDPB): Brussels, Belgium, 2024; Available online: https://edpb.europa.eu (accessed on 9 February 2025).

- Ada Lovelace Institute. Buying AI: Is the Public Sector Equipped to Procure Technology in the Public Interest? Ada Lovelace Institute: London, UK, 2024; Available online: https://www.adalovelaceinstitute.org (accessed on 9 February 2025).

- European Court of Auditors (ECA). AI Auditors: Auditing AI-Based Projects, Systems, and Processes; ECA: Luxembourg, 2024; Available online: https://eca.europa.eu (accessed on 9 February 2025).

- OpenAI. AI in America: OpenAI's Economic Blueprint; OpenAI: San Francisco, CA, USA, 2025; Available online: https://openai.com (accessed on 9 February 2025).

- Papadimitropoulos, V.; Perperidis, G. On the Foundations of Open Cooperativism. In The Handbook of Peer Production; Bauwens, M., Kostakis, V., Pazaitis, A., Eds.; Wiley: Hoboken, NJ, USA, 2021; pp. 398–410. [Google Scholar] [CrossRef]

- Tarkowski, A. Data Governance in Open Source AI: Enabling Responsible and Systemic Access. Open Future, 2025. [Google Scholar]

- Gerlich, Michael. 2024. Societal Perceptions and Acceptance of Virtual Humans: Trust and Ethics across Different Contexts. Social Sciences 13: 516. [CrossRef]

- Waldner, D. & Lust, E. (2018) Unwelcome change: Coming to terms with democratic backsliding. Annual Review of Political Science. 21(1): 93-113.

- Roose, K. (2024) Available from: https://www.nytimes.com/2024/07/19/technology/ai-data-restrictions.html (Accessed on 1 Sept 2024).

- Kolt, N. (2024) ‘Governing AI Agents.’ Available at SSRN. [CrossRef]

- Calzada, I. (2024c) Data (un)sustainability: Navigating utopian resistance while tracing emancipatory datafication strategies in Certomá, C., Martelozzo, F. and Iapaolo, F. (eds.) Digital (Un)Sustainabilities: Promises, Contradictions, and Pitfalls of the Digitalization-Sustainability Nexus. Routledge: Oxon, UK. [CrossRef]

- Benson, J. (2024) Intelligent Democracy: Answering The New Democratic Scepticism. Oxford, UK: Oxford University Press.

- Coeckelbergh, M. (2024) Artificial intelligence, the common good, and the democratic deficit in AI governance. AI Ethics. [CrossRef]

- García-Marzá, D. & Calvo, P. (2024) Algorithmic Democracy: A Critical Perspective Based on Deliberative Democracy. Cham, Switzerland: Springer Nature.

- KT4Democracy. Available at: https://kt4democracy.eu/ (Accessed 1 January 2024).

- Levi, S. (2024) Digitalización Democrática: Soberanía Digital para las Personas. Barcelona, Spain: Rayo Verde.

- Poblet, M.; Allen, D. W. E.; Konashevych, O.; Lane, A. M.; Diaz Valdivia, C. A. From Athens to the Blockchain: Oracles for Digital Democracy. Front. Blockchain 2020, 3, 575662. [CrossRef]

- De Filippi, P., Reijers, W. & Morshed, M. (2024) Blockchain Governance. Boston, USA: MIT Press.

- Visvizi, A.; Malik, R.; Guazzo, G.M.; Çekani, V. The Industry 5.0 (I50) Paradigm, Blockchain-Based Applications and the Smart City. Eur. J. Innov. Manag. [CrossRef]

- Roio, D., Selvaggini, R., Bellini, G. & Dintino, A. (2024) SD-BLS: Privacy preserving selective disclosure of verifiable credentials with unlinkable threshold revocation. 2024 IEEE International Conference on Blockchain (Blockchain). [CrossRef]

- Viano, C., Avanzo, S., Boella, G., Schifanella, C. & Giorgino, V. (2023) Civic blockchain: Making blockchains accessible for social collaborative economies. Journal of Responsible Technology. [CrossRef]

- Ahmed, S., et al. (2024) Field-building and the epistemic culture of AI safety. First Monday.

- Tan, J., et al. (2024) Open Problems in DAOs. arXiv preprint. https://arxiv.org/abs/2310.19201v2.

- Petreski, Davor and Cheong, Marc, "Data Cooperatives: A Conceptual Review" (2024). ICIS 2024 Proceedings. 15. https://aisel.aisnet.org/icis2024/lit_review/lit_review/15.

- Stein, J., Fung, M.L., Weyenbergh, G.V. & Soccorso, A. (2023) Data cooperatives: A framework for collective data governance and digital justice. People-Centered Internet. Available from: https://myaidrivecom/view/file-ihq4z4zhVBYaytB0mS1k6uxy (Accessed 1 September 2024).

- Dathathri, S.; et al. Scalable watermarking for identifying large model outputs. Nature 2024, 634, 818–823. [Google Scholar] [CrossRef]

- Adler et al. (2024) Personhood credentials: Artificial intelligence and the value of privacy-preserving tools to distinguish who is real online. arXiv. Available from: https://arxiv.org/abs/2408.07892 (Accessed 1 September 2024).

- Fratini, Samuele and Hine, Emmie and Novelli, Claudio and Roberts, Huw and Floridi, Luciano, Digital Sovereignty: A Descriptive Analysis and a Critical Evaluation of Existing Models (April 21, 2024). Available at SSRN: https://ssrn.com/abstract=4816020. [CrossRef]

- Hui, Yuk. Machine and Sovereignty for a Planetary Thinking. University of Minnesota Press: Minneapolis and London.

- New America. From Digital Sovereignty to Digital Agency. New America Foundation, 2023. Available online: https://www.newamerica.org/planetary-politics/briefs/from-digital-sovereignty-to-digital-agency/ (accessed on 20 November 2024).

- Glasze, G.; et al. Contested Spatialities of Digital Sovereignty. Geopolitics. [CrossRef]

- The Conversation (2023) Elon Musk’s feud with Brazilian judge is much more than a personal spat – it’s about national sovereignty, freedom of speech, and the rule of law. Available from: https://theconversation.com/elon-musks-feud-with-brazilian-judge-is-much-more-than-a-personal-spat-its-about-national-sovereignty-freedom-of-speech-and-the-rule-of-law-238264 (Accessed 20 September 2024).

- The Conversation (2023) Albanese promises to legislate minimum age for kids’ access to social media. Available from: https://theconversation.com/albanese-promises-to-legislate-minimum-age-for-kids-access-to-social-media-238586 (Accessed 20 September 2024).

- Calzada, I. Data Co-operatives through Data Sovereignty. Smart Cities 2021, 4, 1158–1172. [Google Scholar] [CrossRef]

- Belanche, D., Belk, R.W., Casaló, L.V. & Flavián, C. (2024) The dark side of artificial intelligence in services. Service Industries Journal, 44, pp. 149–172.

- European Parliament. (2023). EU AI Act: First Regulation on Artificial Intelligence. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 23 November 2024).

- Yakowitz Bambauer, Jane R. and Zarsky, Tal, Fair-Enough AI (August 08, 2024). Forthcoming in the Yale Journal of Law & Technology, Available at SSRN: https://ssrn.com/abstract=4924588. [CrossRef]

- Dennis, C.; et al. (2024). What Should Be Internationalised in AI Governance? Oxford Martin AI Governance Initiative.

- Ghioni, R.; Taddeo, M.; Floridi, L. Open Source Intelligence and AI: A Systematic Review of the GELSI Literature. SSRN. Available online: https://ssrn.com/abstract=4272245 (accessed on 18 November 2024).

- Bullock, S.; Ajmeri, N.; Batty, M.; Black, M.; Cartlidge, J.; Challen, R.; Chen, C.; Chen, J.; Condell, J.; Danon, L.; Dennett, A.; et al. Artificial Intelligence for Collective Intelligence: A National-Scale Research Strategy. 2024. Available online: https://ai4ci.ac.uk (accessed on 20 November 2024).

- Alon, I.; Haidar, H.; Haidar, A.; Guimón, J. The future of artificial intelligence: Insights from recent Delphi studies. Futures 2024, 103514. [Google Scholar] [CrossRef]

- Ben Dhaou, S., Isagah, T., Distor, C., & Ruas, I.C. (2024). Global Assessment of Responsible Artificial Intelligence in Cities: Research and recommendations to leverage AI for people-centred smart cities. Nairobi, Kenya. United Nations Human Settlements Programme (UN-Habitat).

- Narayanan, A. (2023) Understanding Social Media Recommendation Algorithms. Knight First Amendment Institute, 1-49.

- Settle, J.E. (2018) Frenemies: How Social Media Polarizes America. Cambridge University Press.

- European Commission, Joint Research Centre, Lähteenoja, V., Himanen, J., Turpeinen, M., and Signorelli, S. The landscape of consent management tools - a data altruism perspective. 2024. [CrossRef]

- Fink, A. (2024). Data cooperative. Internet Policy Review, 13(2). [CrossRef]

- Nabben, K. (2024). AI as a Constituted System: Accountability Lessons from an LLM Experiment.

- Von Thun, M., Hanley, D.A. (2024) Stopping Big Tech from Becoming Big AI. Open Markets Institute and Mozilla.

- Rajamohan, R. (2024) Networked Cooperative Ecosystems. https://paragraph.

- Ananthaswamy, A. (2024) Why Machines Learn: The Elegant Math Behind Modern AI. London, UK: Penguin.

- Bengio, Y. (2023) AI and catastrophic risk. Journal of Democracy. 34(4): 111-121.

- European Parliament. Social approach to the transition to smart cities. European Parliament: Luxembourg, 2023.

- Magro, A. (2024) Emerging digital technologies in the public sector: The case of virtual worlds. Luxembourg: Publications Office of the European Union.

- Estévez Almenzar, M., Fernández Llorca, D., Gómez, E., & Martínez Plumed, F., 2022. Glossary of human-centric artificial intelligence. Publications Office of the European Union: Luxembourg. [CrossRef]

- Varon, J.; Costanza-Chock, S.; Tamari, M.; Taye, B.; Koetz, V. AI Commons: Nourishing Alternatives to Big Tech Monoculture; Coding Rights: Rio de Janeiro, Brazil, 2024; Available online: https://codingrights.org/docs/AICommons.pdf (accessed on 9 February 2025).

- Verhulst, S.G. Toward a Polycentric or Distributed Approach to Artificial Intelligence & Science. Front. Policy Labs 2024, 1, 1–10. [Google Scholar] [CrossRef]

- Mitchell, M., Palmarini, A.B. & Moskvichev, A. (2023) Comparing Humans, GPT-4, and GPT-4V on abstraction and reasoning tasks. arXiv preprint.

- Gasser, U. & Mayer-Schönberger, V. (2024) Guardrails: Guiding Human Decisions in the Age of AI. Princeton, USA: Princeton University Press.

- United Nations High-level Advisory Body on Artificial Intelligence (2024) Governing AI for Humanity: Final Report. United Nations, New York.

- Vallor, S. (2024) The AI Mirror: How to Reclaim Our Humanity in an Age of Machine Thinking. NYC, USA: OUP.

- Buolamwini, J. (2023) Unmasking AI: My Mission to Protect What is Human in a World of Machines. London, UK: Random House.

- McCourt, F.H. Our Biggest Fight: Reclaiming Liberty, Humanity, and Dignity in the Digital Age. Crown Publishing: London, 2024.

- Muldoon, J., Graham, M. & Cant, C. (2024) Feeding the Machine: The Hidden Human Labour Powering AI. Edinburgh, UK: Canongate.

- Burkhardt, S. & Rieder, B. (2024) Foundation models are platform models: Prompting and the political economy of AI. Big Data & Society, pp. 1–15. [CrossRef]

- Finnemore, M. & Sikkink, K. (1998) International Norm Dynamics and Political Change. International Organization. 52: 887 - 917.

- Lazar, S. (2024, forthcoming) Connected by Code: Algorithmic Intermediaries and Political Philosophy. Oxford: Oxford University Press.

- Hoeyer, K. (2023) Data Paradoxes: The Politics of Intensified Data Sourcing in Contemporary Healthcare. Cambridge, MA, USA: MIT Press.

- Hughes, T. (2024) The political theory of techno-colonialism. European Journal of Political Theory, pp. 1–24. [CrossRef]

- Srivastava, S. Algorithmic Governance and the International Politics of Big Tech. Cambridge University Press: Cambridge, USA, 2021.

- Utrata, A. (2024) Engineering territory: Space and colonies in Silicon Valley. American Political Science Review, 118(3), pp. 1097–1109. [CrossRef]

- Waldner, D. & Lust, E. (2018) Unwelcome change: Coming to terms with democratic backsliding. Annual Review of Political Science. 21(1): 93-113.

- Guersenzvaig, A. & Sánchez-Monedero, J. (2024). AI research assistants, intrinsic values, and the science we want. AI & Society. [CrossRef]

- Wachter-Boettcher, S. (2018) Technically Wrong: Sexist Apps, Biased Algorithms, and Other Threats of Toxic Tech. London, UK: WW Norton & Co.

- D’Amato, K. (2024). ChatGPT: towards AI subjectivity. AI & Society. [CrossRef]

- Shavit, Y., et al. (2023) Practices for governing agentic AI systems. OpenAI.

- Bibri, S.E.; Allam, Z. The Metaverse as a Virtual Form of Data-Driven Smart Urbanism: On Post-Pandemic Governance through the Prism of the Logic of Surveillance Capitalism. Smart Cities 2022, 5, 715–727. [Google Scholar] [CrossRef]

- Bibri, S.E.; Visvizi, A.; Troisi, O. Advancing Smart Cities: Sustainable Practices, Digital Transformation, and IoT Innovations; Springer: Cham, Switzerland, 2024. [CrossRef]

- Ayyoob Sharifi, Zaheer Allam, Simon Elias Bibri, Amir Reza Khavarian-Garmsir, Smart cities and sustainable development goals (SDGs): A systematic literature review of co-benefits and trade-offs, Cities, Volume 146, 2024, 104659, ISSN 0264-2751. [CrossRef]

- Singh, A. Advances in Smart Cities: Smarter People, Governance, and Solutions. Journal of Urban Technology, 2019, 1-4. [CrossRef]

- Reuel, A., et al. (2024) Open Problems in Technical AI Governance. arXiv preprint. https://arxiv.org/abs/2407.14981.

- Aho, B. Data communism: Constructing a national data ecosystem. Big Data & Society, 2024, pp. 1-14. [CrossRef]

- Valmeekam, K., et al. (2023). On the Planning Abilities of Large Language Models—A Critical Investigation. arXiv preprint. https://arxiv.org/abs/2305.15771.

- Yao, S., et al. (2022) ReAct: Synergizing reasoning and acting in language models. arXiv preprint. https://arxiv.org/abs/2210.03629.

- Krause, D. Web3 and the Decentralized Future: Exploring Data Ownership, Privacy, and Blockchain Infrastructure. Preprint 2024. online. [CrossRef]

- Lazar, S. & Pascal, A. (2024) AGI and Democracy. Allen Lab for Democracy Renovation.

- Ovadya, A. (2023) Reimagining Democracy for AI. Journal of Democracy. 34(4): 162-170.

- Ovadya, A.; Thorburn, L.; Redman, K.; Devine, F.; Milli, S.; Revel, M.; Konya, A.; Kasirzadeh, A. Toward Democracy Levels for AI. Pluralistic Alignment Workshop at NeurIPS 2024. Available online: https://arxiv.org/abs/2411.09222 (accessed on 14 November 2024).

- Alnabhan, M.Q.; Branco, P. BERTGuard: Two-Tiered Multi-Domain Fake News Detection with Class Imbalance Mitigation. Big Data Cogn. Comput. 2024, 8, 93. [Google Scholar] [CrossRef]

- Gourlet, P., Ricci, D. and Crépel, M. (2024) Reclaiming artificial intelligence accounts: A plea for a participatory turn in artificial intelligence inquiries. Big Data & Society, pp. 1–21. [CrossRef]

- Spathoulas, G., Katsika, A., & Kavallieratos, G. (2024) Privacy preserving and verifiable outsourcing of AI processing for cyber-physical systems. Norwegian University of Science and Technology, University of Thessaly.

- Abhishek, T. & Varda, M. (2024) Data hegemony: The invisible war for digital empires. Internet Policy Review. Available from: https://policyreview.info/articles/news/data-hegemony-digital-empires/1789 (Accessed 1 September 2024).

- Alaimo, C. & Kallinikos, J. (2024) Data Rules: Reinventing the Market Economy. Cambridge, MA, USA: MIT Press.

- OpenAI, GPT-4 Technical Report. 2023.

- Dobbe, R. (2022) System safety and artificial intelligence. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency.

- Bengio, Y., et al. (2024) International Scientific Report on the Safety of Advanced AI: Interim Report.

- World Digital Technology Academy (WDTA). 2024. Large Language Model Security Requirements for Supply Chain. WDTA AI-STR-03, World Digital Technology Academy.

- AI4GOV. Available at: https://ai4gov-project.eu/2023/11/14/ai4gov-d3-1/ (Accessed 1 January 2024).

- Cazzaniga, M., Jaumotte, F., Li, L., Melina, G., Panton, A.J., Pizzinelli, C., Rockall, E., & Tavares, M.M., 2024. Gen-AI: Artificial Intelligence and the Future of Work. IMF Staff Discussion Note SDN2024/001. International Monetary Fund, Washington, DC.

- ENFIELD (2024) Available from: https://www.enfield-project.eu/about (Accessed 1 September 2024). Call: oc1-2024-TES-01. SGA: oc1-2024-TES-01-01. Democracy in the Age of Algorithms: Enhancing Transparency and Trust in AI-Generated Content through Innovative Detection Techniques (PI: Prof Igor Calzada). Grant Agreement Number: 101120657. https://ec.europa.eu/info/funding-tenders/opportunities/portal/screen/opportunities/competitive-calls-cs/6083?isExactMatch=true&status=31094502&order=DESC&pageNumber=1&pageSize=50&sortBy=startDate.

- Palacios, S., et al. (2022). AGAPECert: An Auditable, Generalized, Automated, Privacy-Enabling Certification Framework with Oblivious Smart Contracts. Journal of Defendable and Secure Computing, X,Y.

- GPAI Algorithmic Transparency in the Public Sector (2024) A State-of-the-Art Report of Algorithmic Transparency Instruments. Global Partnership on Artificial Intelligence. Available from www.gpai.ai. (Accessed 1 September 2024).

- Lazar, S. & Nelson, A. (2023) AI safety on whose terms? Science, 2023. 381(6654): 138-138.

- HAI (2024) Artificial Intelligence Index Report 2024. Palo Alto, USA: HAI.

- Nagy, P. & Neff, G. (2024) Conjuring algorithms: Understanding the tech industry as stage magicians. New Media & Society, 26(9), pp. 4938–4954.

- Kim, E., Jang, G.Y. & Kim, S.H. (2022) How to apply artificial intelligence for social innovations. Applied Artificial Intelligence. [CrossRef]

- Calzada, I.; Cobo, C. Unplugging: Deconstructing the Smart City. Journal of Urban Technology, 2015, 22, 23–43. [Google Scholar] [CrossRef]

- Visvizi, A.; Godlewska-Majkowska, H. Not Only Technology: From Smart City 1.0 through Smart City 4.0 and Beyond (An Introduction). In Smart Cities: Lock-In, Path-dependence and Non-linearity of Digitalization and Smartification; Visvizi, A., Godlewska-Majkowska, H., Eds.; Routledge: London, UK, 2025; pp. 3–16. [Google Scholar]

- Troisi, O.; Visvizi, A.; Grimaldi, M. The Different Shades of Innovation Emergence in Smart Service Systems: The Case of Italian Cluster for Aerospace Technology. J. Bus. Ind. Mark. 2024, 39, 1105–1129. [Google Scholar] [CrossRef]

- Visvizi, A.; Troisi, O.; Corvello, V. Research and Innovation Forum 2023: Navigating Shocks and Crises in Uncertain Times—Technology, Business, Society; Springer Nature: Cham, Switzerland, 2024. [Google Scholar] [CrossRef]

- Federico Caprotti, Federico Cugurullo, Matthew Cook, Andrew Karvonen, Simon Marvin, Pauline McGuirk & Alan-Miguel Valdez (27 Mar 2024): Why does urban Artificial Intelligence (AI) matter for urban studies? Developing research directions in urban AI research, Urban Geography. [CrossRef]

- Federico Caprotti, Catalina Duarte, Simon Joss, The 15-minute city as paranoid urbanism: Ten critical reflections, Cities, Volume 155, 2024, 105497. [CrossRef]

- Cugurullo, F.; Caprotti, F.; Cook, M.; Karvonen, A.; McGuirk, P.; Marvin, S. The rise of AI urbanism in post-smart cities: A critical commentary on urban artificial intelligence. Urban Studies. [CrossRef]

- Sanchez, T.W.; Fu, X.; Yigitcanlar, T.; Ye, X. The Research Landscape of AI in Urban Planning: A Topic Analysis of the Literature with ChatGPT. Urban Sci. 2024, 8, 197. [Google Scholar] [CrossRef]

- Kuppler, A.; Fricke, C. Between innovative ambitions and erratic everyday practices: urban planners’ ambivalences towards digital transformation. [CrossRef]

- Eubanks, V. (2019) Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. London: Picador.

- Lorinc, J. Dream States: Smart Cities, Technology, and the Pursuit of Urban Utopias. Toronto: Coach House Books, 2022.

- Benjamin Leffel, Ben Derudder, Michele Acuto, Jeroen van der Heijden, Not so polycentric: The stratified structure & national drivers of transnational municipal networks, Cities, Volume 143, 2023, 104597, ISSN 0264-2751. [CrossRef]

- Luccioni, S., Jernite, Y. & Strubell, E. (2024) Power hungry processing: Watts driving the cost of AI deployment? In The 2024 ACM Conference on Fairness, Accountability, and Transparency.

- Gohdes, A.R. (2023) Repression in the Digital Age: Surveillance, Censorship, and the Dynamics of State Violence. Oxford, UK: Oxford University Press.

- Seger, E., et al. (2020) Tackling threats to informed decision-making in democratic societies: Promoting epistemic security in a technologically-advanced world.

- Burton, J.W., Lopez-Lopez, E., Hechtlinger, S. et al. (2024) How large language models can reshape collective intelligence. Nat Hum Behav 8, 1643–1655. [CrossRef]

- Lalka, R. (2024) The Venture Alchemists: How Big Tech Turned Profits into Power. New York, NY, USA: Columbia University Press.

- Li, F.-F. (2023) The Worlds I See: Curiosity, Exploration, and Discovery and the Dawn of AI. London, UK: Macmillan.

- Medrado, A. & Verdegem, P. (2024) Participatory action research in critical data studies: Interrogating AI from a South–North approach. Big Data & Society.

- Mejias, U.A.; Couldry, N. Data Grab: The New Colonialism of Big Tech (and How to Fight Back). WH Allen: London, 2024.

- Murgia, M. (2024) Code Dependent: Living in the Shadow of AI. London, UK: Henry Holt and Co.

- Johnson, S. & Acemoglu, D. (2023) Power and Progress: Our Thousand-Year Struggle Over Technology and Prosperity. London, UK: Basic Books.

- Ludovico Rella, Kristian Bondo Hansen, Nanna Bonde Thylstrup, Malcolm Campbell-Verduyn, Alex Preda, Daivi Rodima-Taylor, Ruowen Xu & Till Straube (22 Oct 2024): Hybrid materialities, power, and expertise in the era of general purpose technologies, Distinktion: Journal of Social Theory. [CrossRef]

- Merchant, B. (2023) Blood in the Machine: The Origins of the Rebellion Against Big Tech. London, UK: Little, Brown and Company.

- Sieber, R., Brandusescu, A., Adu-Daako, A. & Sangiambut, S. (2024) Who are the publics engaging in AI? Public Understanding of Science. [CrossRef]

- Tunç, A. (2024). Can AI determine its own future? AI & Society. [CrossRef]

- Floridi, Luciano, Why the AI Hype is Another Tech Bubble (September 18, 2024). Available at SSRN: https://ssrn.com/abstract=4960826.

- Batty, M. The New Science of Cities; MIT Press: Cambridge, MA, USA, 2013. [Google Scholar]

- Batty, M. Inventing Future Cities; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Batty, M. Urban Analytics Defined. Environment and Planning B: Urban Analytics and City Science 2019, 46, 403–405. [Google Scholar] [CrossRef]

- Marvin, S.; Luque-Ayala, A.; McFarlane, C. Smart Urbanism: Utopian Vision or False Dawn? Routledge: New York, NY, USA, 2016. [Google Scholar]

- Marvin, S.; Graham, S. Splintering Urbanism: Networked Infrastructures, Technological Mobilities, and the Urban Condition; Routledge: London, UK, 2001. [Google Scholar]

- Marvin, S.; Bulkeley, H.; Mai, L.; McCormick, K.; Palgan, Y.V. Urban Living Labs: Experimenting with City Futures. European Urban and Regional Studies 2018, 25, 317–333. [Google Scholar] [CrossRef]

- Kitchin, R. Code/Space: Software and Everyday Life; MIT Press: Cambridge, MA, USA, 2011. [Google Scholar]

- Kitchin, R.; Lauriault, T.P.; McArdle, G. Knowing and Governing Cities through Urban Indicators, City Benchmarking, and Real-Time Dashboards. Regional Studies, Regional Science 2015, 2, 6–28. [Google Scholar] [CrossRef]

- Calzada, I. (2020) Platform and data co-operatives amidst European pandemic citizenship. Sustainability, 8309. [Google Scholar] [CrossRef]

- Monsees, L. Crypto-Politics: Encryption and Democratic Practices in the Digital Era. Routledge: Oxon, UK, 2020.

- European Commission. Second Draft of the General Purpose AI Code of Practice; Digital Strategy, 2024. Available online: https://digital-strategy.ec.europa.eu/en/library/second-draft-general-purpose-ai-code-practice-published-written-independent-experts (accessed on 10 February 2025).

- Visvizi, A.; Kozlowski, K.; Calzada, I.; Troisi, O. Multidisciplinary Movements in AI and Generative AI: Society, Business, Education. 2025. [Google Scholar]

- Leslie, D.; Burr, C.; Aitken, M.; Cowls, J.; Katell, M.; Briggs, M. Artificial Intelligence, Human Rights, Democracy, and the Rule of Law: A Primer. Council of Europe, 2021. [Google Scholar]

- Palacios, S.; Ault, A.; Krogmeier, J.V.; Bhargava, B.; Brinton, C.G. AGAPECert: An Auditable, Generalized, Automated, Privacy-Enabling Certification Framework with Oblivious Smart Contracts. IEEE Trans. Dependable Secur. Comput. 2023, 20, 3269–3286. [Google Scholar] [CrossRef]

- Hossain, S.T.; Yigitcanlar, T. Local Governments Are Using AI without Clear Rules or Policies, and the Public Has No Idea. QUT Newsroom. Available online: https://www.qut.edu.au/news/realfocus/local-governments-are-using-ai-without-clear-rules-or-policies-and-the-public-has-no-idea (accessed on 9 January 2025).

- Gerlich, M. AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking. Societies 2025, 15, 6. [Google Scholar] [CrossRef]

- Bousetouane, F. Agentic Systems: A Guide to Transforming Industries with Vertical AI Agents. arXiv 2025, arXiv:2501.00881 [cs.MA]. Available online: https://arxiv.org/abs/2501.00881 (accessed on 9 January 2025).

- Fontana, S.; Errico, B.; Tedesco, S.; Bisogni, F.; Renwick, R.; Akagi, M.; Santiago, N. AI and GenAI Adoption by Local and Regional Administrations. European Union, Commission for Economic Policy, 2024. ISBN: 978-92-895-3679-0. [CrossRef]

- Hossain, S.T.; Yigitcanlar, T.; Nguyen, K.; Xu, Y. Cybersecurity in Local Governments: A Systematic Review and Framework of Key Challenges. Urban Governance. [CrossRef]

- Laksito, J.; Pratiwi, B.; Ariani, W. Harmonizing Data Privacy Frameworks in Artificial Intelligence: Comparative Insights from Asia and Europe. PERKARA – Jurnal Ilmu Hukum dan Politik. [CrossRef]

- Nature. Science for Policy: Why Scientists and Politicians Struggle to Collaborate. Nature, 2024. Available online: https://www.nature.com/articles/science4policy (accessed on 9 January 2025).

| Dimension | Key Insights | Implications |
|---|---|---|
| 1. Trustworthiness Definition | Encompasses transparency, accountability, ethical integrity. | Calls for participatory governance to ensure inclusivity and co-construction of trust. |
| 2. Economic Competitiveness | Tension between fostering innovation and maintaining ethical standards. | Uneven playing fields for SMEs and grassroots initiatives; innovation sandboxes as a potential equalizer. |
| 3. High-Stakes Sectors | Focus on healthcare, law enforcement, energy; risks of bias and misuse. | Continuous monitoring and inclusive frameworks to ensure systems empower rather than oppress vulnerable populations. |
| 4. Participatory Governance | Advocates for inclusion via citizen assemblies, living labs, and co-design workshops. | Encourages diverse stakeholder engagement to align technological advancements with democratic values. |
| 5. Regulatory Frameworks | Balances economic growth with societal equity. | Promotes innovation while safeguarding against tech concentration and ethical oversights. |
| 6. Challenges in Decentralization | Risks of bias, misinformation, and reduced accountability in decentralized ecosystems. | Emphasizes blockchain and other tech as solutions to enhance accountability without compromising user privacy. |
| 7. Equitable Innovation | Highlights disparities in economic benefits across industries and societal groups. | Need for policies that ensure AI benefits reach marginalized communities and foster equity. |
| 8. Technological vs. Societal Context | Debate over prioritizing technological robustness vs. societal inclusivity in trustworthiness. | Shift required towards frameworks addressing underrepresented groups. |

| Detection Technique | Why Chosen? | Key Challenge Addressed |
|---|---|---|
| Federated Learning (T1) | Aligns with privacy-first AI frameworks (GDPR, AI Act) and ensures secure, decentralized AI model training. | Privacy protection and AI trust in decentralized networks. |
| Blockchain-Based Provenance Tracking (T2) | Provides immutable verification of content origin, crucial for combating misinformation. | Ensuring AI-generated content authenticity. |
| Zero-Knowledge Proofs (ZKPs) (T3) | Balances verification and privacy, crucial in decentralized AI governance. | Trust verification without compromising data privacy. |
| DAOs for Crowdsourced Verification (T4) | Enables community-driven AI content validation, reducing centralized biases. | Democratic, transparent AI oversight. |
| AI-Powered Digital Watermarking (T5) | Ensures traceability of AI-generated content, preventing deepfake and AI-driven disinformation. | Tracking AI-generated media for accountability. |
| Explainable AI (XAI) (T6) | Improves trust in AI decision-making, aligning with human oversight principles in the AI Act. | Making AI decision processes understandable. |
| Privacy-Preserving Machine Learning (PPML) (T7) | Provides secure AI verification while maintaining user privacy. | Balancing AI transparency and personal data security. |
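
To make the "decentralized AI model training" idea behind T1 more concrete, the following is a minimal sketch of the aggregation step commonly used in federated learning (weighted averaging of client model weights). It is an illustration only, not the method used in the Enfield project: the function name `federated_average` and the toy weight vectors are hypothetical, and a real deployment would add secure aggregation, client sampling, and multiple training rounds.

```python
from typing import List, Sequence, Tuple


def federated_average(
    client_updates: Sequence[Tuple[List[float], int]]
) -> List[float]:
    """Aggregate client model weights by a sample-weighted average.

    Each update is (weights, num_local_samples). Only weights and counts
    are shared with the aggregator; raw training data stays on the client.
    All names here are hypothetical and chosen for illustration.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no client reported any training samples")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            # Clients with more local data contribute proportionally more.
            averaged[i] += w * n / total_samples
    return averaged


# Two hypothetical platforms train locally and share only their weights.
global_weights = federated_average([
    ([1.0, 2.0], 1),  # platform A: 1 local sample
    ([3.0, 4.0], 3),  # platform B: 3 local samples
])
```

In this toy run the aggregated model leans towards platform B, which reported three times as many local samples as platform A.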

| Techniques | Definition |
|---|---|
| T1. Federated Learning for Decentralized AI Detection | Collaborative AI model training across decentralized platforms, preserving privacy without sharing raw data. |
| T2. Blockchain-Based Provenance Tracking | Blockchain technology records content creation and dissemination, enabling transparent tracking of content authenticity. |
| T3. Zero-Knowledge Proofs for Content Authentication | Cryptographic method to verify content authenticity without revealing underlying private data. |
| T4. Decentralized Autonomous Organizations (DAOs) for Crowdsourced Verification | Crowdsourced content verification through DAOs, allowing communities to collectively vote and verify content authenticity. |
| T5. AI-Powered Digital Watermarking | Embedding unique identifiers into AI-generated content to trace and authenticate its origin. |
| T6. Explainable AI (XAI) for Content Detection | Provides transparency in AI model decision-making [236], explaining why content was flagged as AI-generated. |
| T7. Privacy-Preserving Machine Learning (PPML) for Secure Content Verification | Enables secure detection and verification of content while preserving user privacy, leveraging homomorphic encryption and other techniques. |
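
As an illustration of the provenance-tracking idea in T2, the sketch below builds a simple in-memory hash chain in which each record commits to the content hash and to the previous record. It is a simplification under stated assumptions: there is no consensus, no distribution across nodes, and no digital signature, all of which a real blockchain deployment would require. The names `append_record` and `verify_chain` and the record fields are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: Dict[str, str]) -> str:
    """Deterministic SHA-256 digest of a record body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_record(chain: List[Dict[str, str]], content: bytes, creator: str) -> Dict[str, str]:
    """Append a provenance record linking this content to the prior record."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS_HASH
    body = {
        "content_hash": hashlib.sha256(content).hexdigest(),
        "creator": creator,
        "prev_hash": prev_hash,
    }
    record = dict(body, record_hash=_digest(body))
    chain.append(record)
    return record


def verify_chain(chain: List[Dict[str, str]]) -> bool:
    """Return True only if every record is intact and correctly linked."""
    expected_prev = GENESIS_HASH
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["prev_hash"] != expected_prev:
            return False
        if record["record_hash"] != _digest(body):
            return False
        expected_prev = record["record_hash"]
    return True


# Hypothetical usage: two versions of an article are registered in order.
chain: List[Dict[str, str]] = []
append_record(chain, b"article v1", "newsroom")
append_record(chain, b"article v2", "editor")
chain_is_valid = verify_chain(chain)
```

Because each record hash covers the previous record hash, altering any earlier entry (for example, the creator of the first version) causes `verify_chain` to fail for the whole chain, which is the property that makes such ledgers useful for tracing content origin.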

| Technique | European Initiative | Response to the Research Question | Trustworthy AI for Whom? Who Benefits? (Stakeholder-Specific Trust Outcomes) |
|---|---|---|---|
| T1. Federated Learning for Decentralized AI Detection | GAIA-X initiative promoting secure and decentralized data ecosystems www.gaia-x.eu | Supports user-centric data sharing and privacy compliance across Europe | End Users and Citizens: GAIA-X (federated learning) enables privacy-first AI model training, ensuring individuals retain control over their data while fostering AI transparency in federated data-sharing ecosystems. |
| T2. Blockchain-Based Provenance Tracking | OriginTrail project ensuring data and product traceability www.origintrail.io | Enhances product authenticity and trust in supply chains for consumers and industries | Communities and Organizations: Tools like OriginTrail (blockchain-based provenance tracking) ensure that organizations and consumers can trust the authenticity of data and products. Verifiable content provenance fosters trust in digital ecosystems, particularly in journalism, supply chains, and digital identity verification. |

| T3. Zero-Knowledge Proofs (ZKPs) for Content Authentication |

European Blockchain Services Infrastructure (EBSI) for credential verification https://digital-strategy.ec.europa.eu/en/policies/european-blockchain-services-infrastructure |