Developing diagnostic methods for early detection in the medical field is crucial for providing better treatments and achieving effective outcomes. Among noticeable disruptions, chronic wounds are categorized as hard-to-heal and require early diagnosis and treatment as they affect at least 1.51 to 2.21 per 1000 population[

1,

2]. Chronic wounds can lead to various complications and increased healthcare costs. With an aging population, the ongoing threat of diabetes and obesity, and persistent infection problems, chronic wounds are expected to remain a significant clinical, social, and economic challenge [

3,

4,

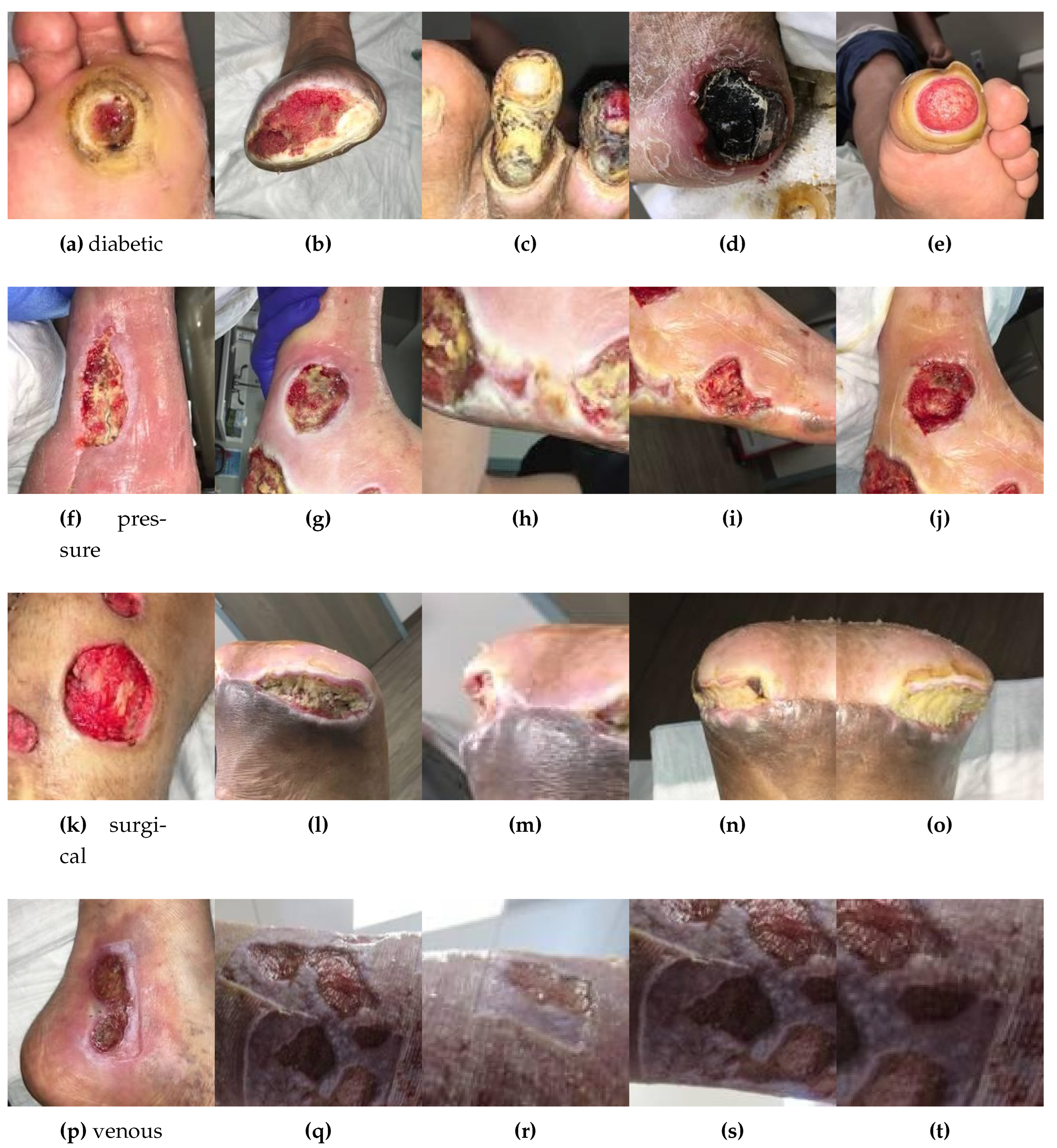

5]. Chronic wound healing is an intricate time-consuming process (healing time 12 weeks). An acute wound is a faster healing wound, whereas, a chronic wound is time-consuming and its healing process is naturally more complicated than an acute wound. The most common types of wounds and ulcers include diabetic foot ulcers (DFUs), venous leg ulcers (VLUs), pressure ulcers (PUs), and surgical wounds (SWs), each involving a significant portion of the population [

6,

7]. Explainable Artificial Intelligence (XAI) has promisingly been applied in medical research to deliver individualized and data-driven outcomes in wound care. Therefore, use of AI in chronic wound classification appears to be one of the significant keys to serving better treatments [

8,

9,

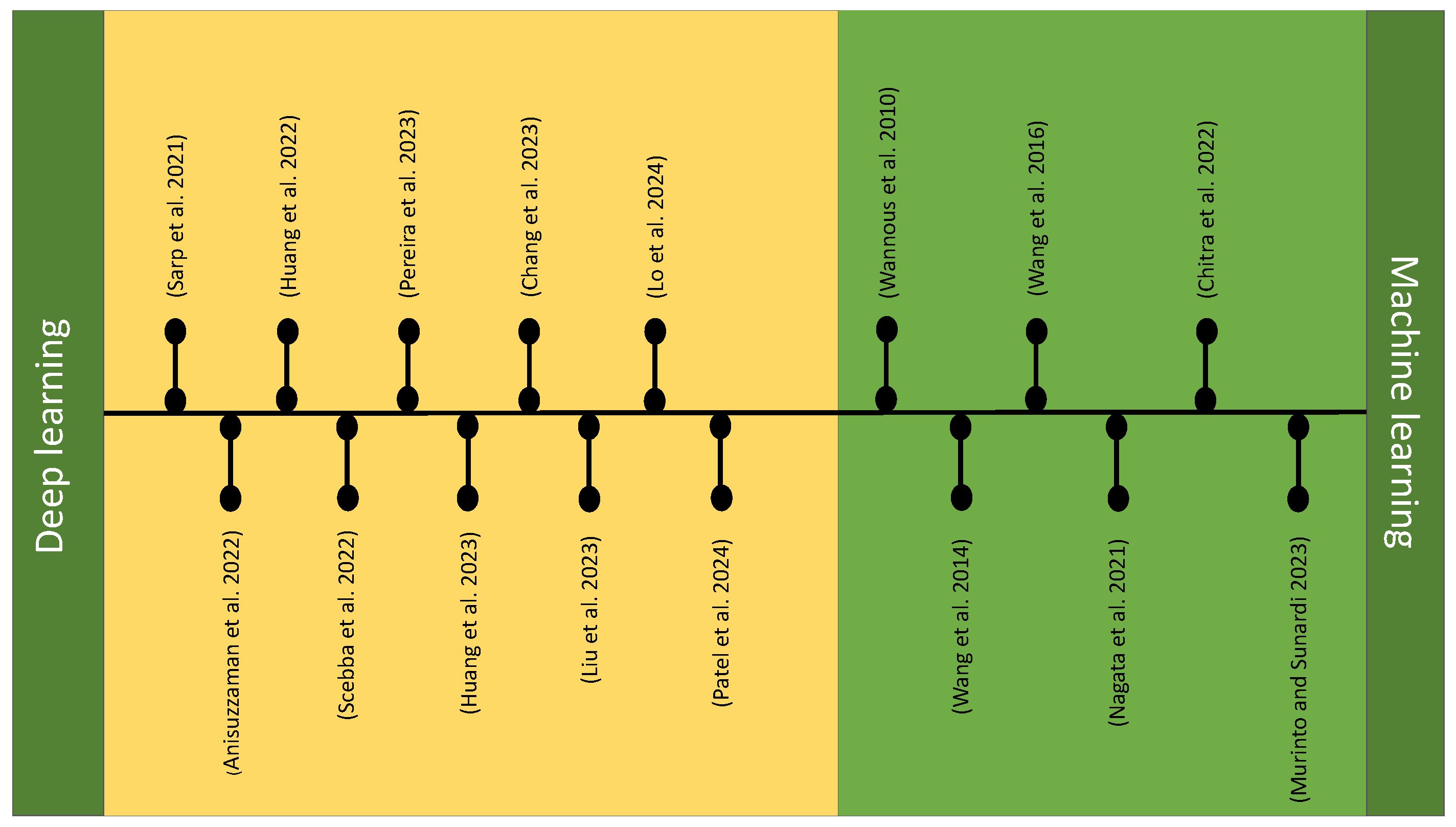

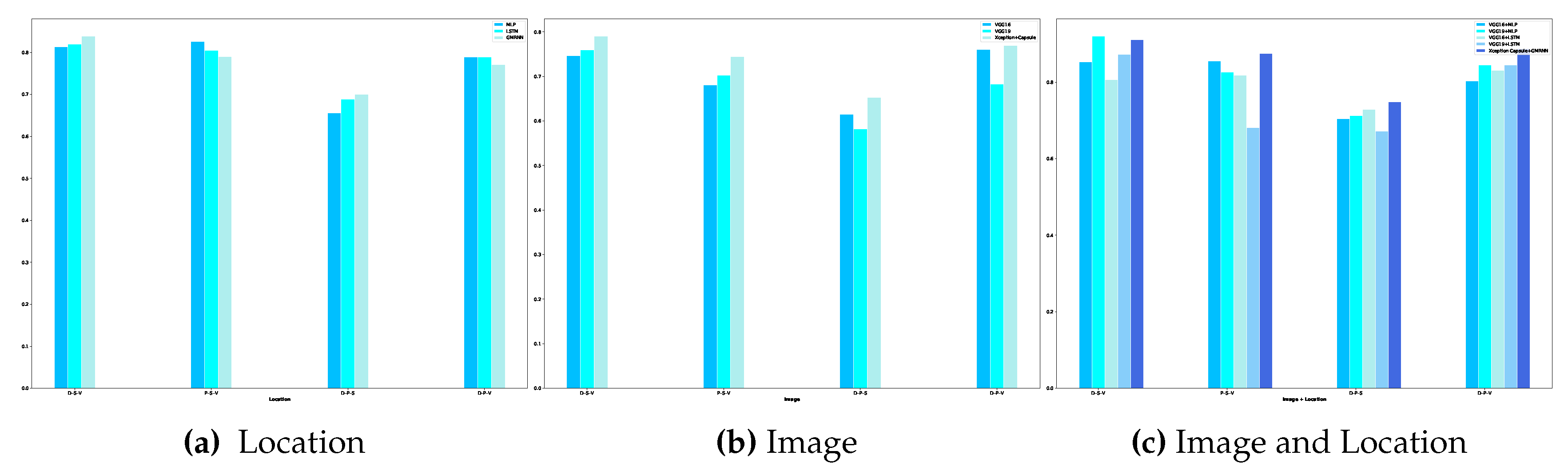

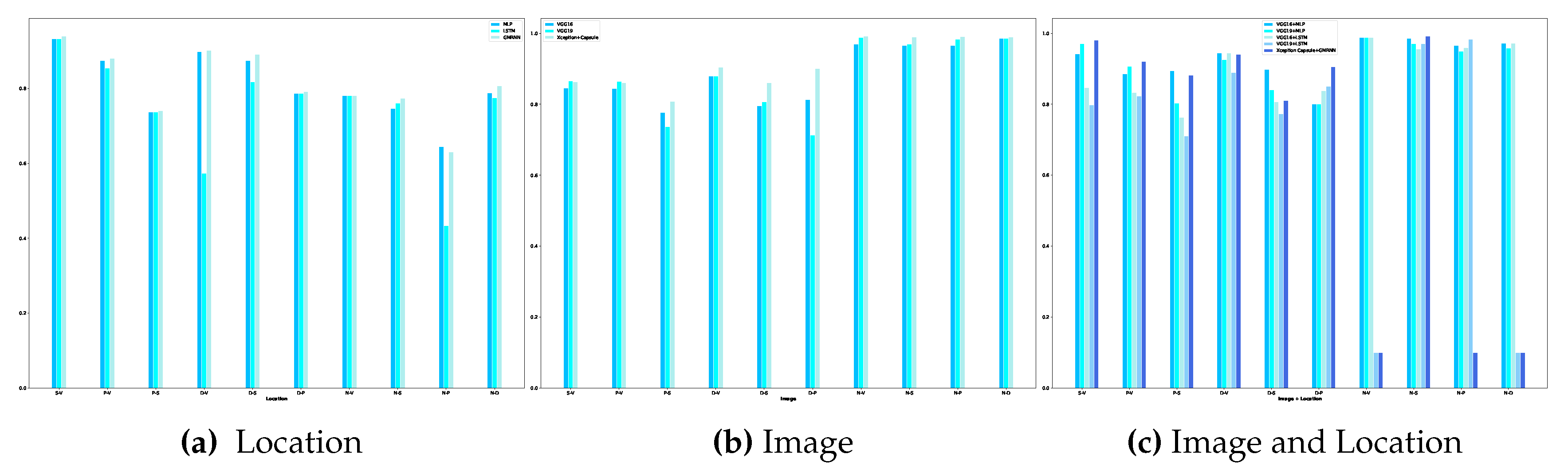

10]. The tremendous success of AI algorithms in medical image analysis in recent years intersects with a time of dramatically increased use of electronic medical records and diagnostic imaging. Wound diagnosis methods are categorized into machine learning (ML) and deep learning (DL) methods as shown in

Figure 1. Various methods based on machine learning and deep learning, have been developed for wound classification by integrating image and location analysis for wound classification. ML models are designed with explicit features extracted from the input image data. Deep learning models utilize neural networks composed of multiple layers, known as deep neural networks. These networks can learn hierarchical representations of data, enabling them to automatically extract features from raw inputs. This can be advantageous for complex data like medical images or text (e.g., patient records), where feature extraction can be challenging. Wannous et al. [

11] performed a tissue classification by combining color and texture descriptors as an input vector of an support vector machine (SVM) classifier. They developed a 3D color imaging method for measuring surface area and volume and classifying wound tissues (e.g., granulation, slough, necrosis) to present a single-view and a multi-view approach. Wang et al. [

12,

13] proposed an approach, using SVM to determine the wound boundaries on foot ulcer images captured with an image capture box. They utilized cascaded two-stage support vector classification to ascertain the DFU region, followed by a two-stage super-pixel classification technique for segmentation and feature extraction. A machine learning approach was developed by Nagata et al. [

14] to classify skin tears based on the Skin Tear Audit Research (STAR) classification system using digital images, introducing shape features for enhanced accuracy. It compares the performance of support vector machines and random forest algorithms in classifying wound segments and STAR categories. An automated method was proposed by Chitra et al. [

15] for chronic wound tissue classification using the Random Forest (RF) algorithm. They integrated 3-D modeling and unsupervised segmentation techniques to improve accuracy in identifying tissue types such as granulation, slough, and necrotic tissue, achieving a classification accuracy of 93.8%. Murinto and Sunardi [

16] also evaluated the effectiveness of the SVM algorithm for classifying external wound images. In this research, a feature extraction technique known as the Gray Level Co-occurrence Matrix (GLCM) was employed. GLCM is an image texture analysis method that characterizes the relationship between two adjacent pixels based on their intensity, distance, and grayscale angle. Sarp et al. [

17] proposed a model for classifying chronic wounds that utilize transfer learning and fully connected layers. Their goal was to improve the interpretability and transparency of AI models, helping clinicians better understand AI-driven diagnoses. The model effectively used transfer learning with VGG16 for feature extraction. Anisuzzaman et al. [

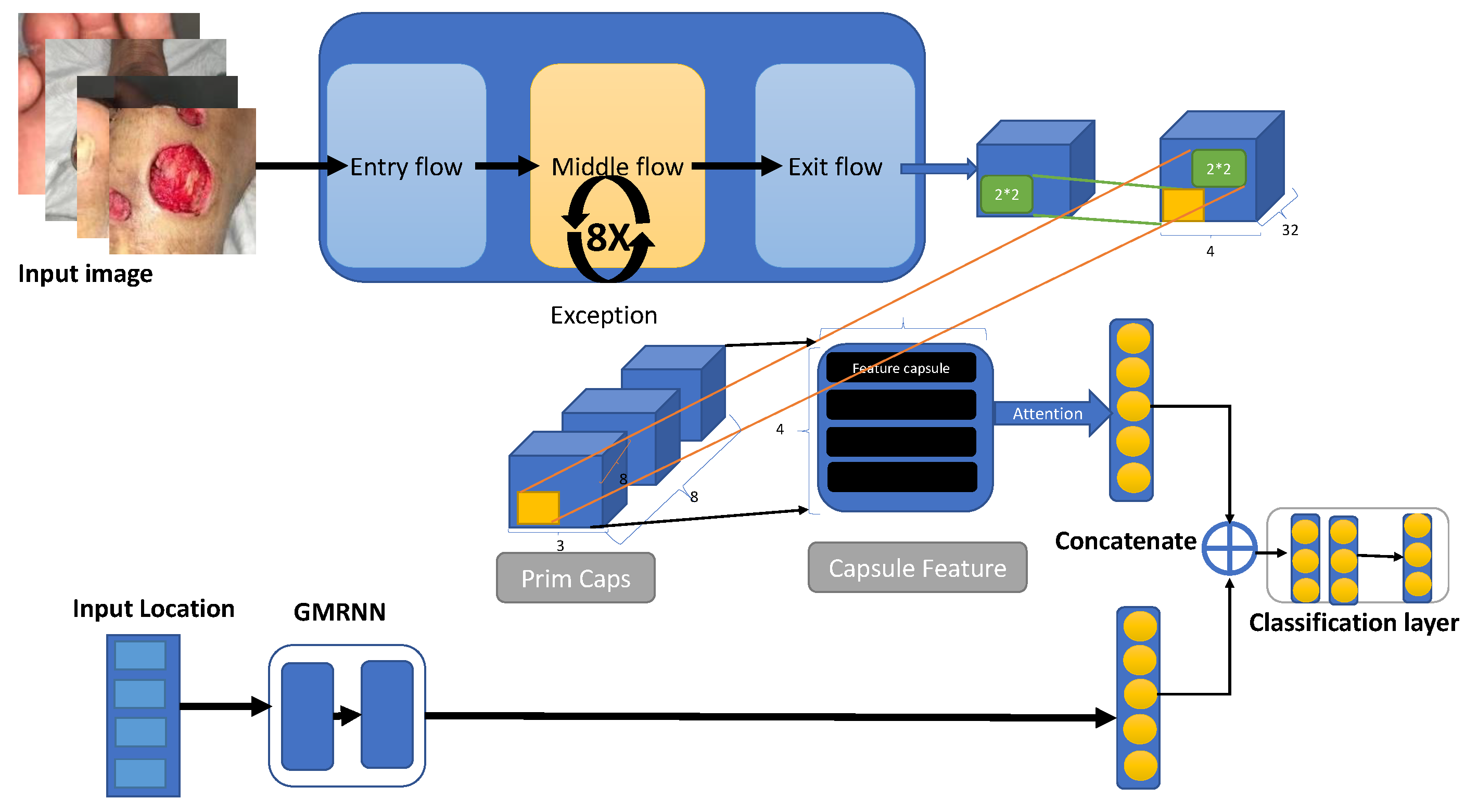

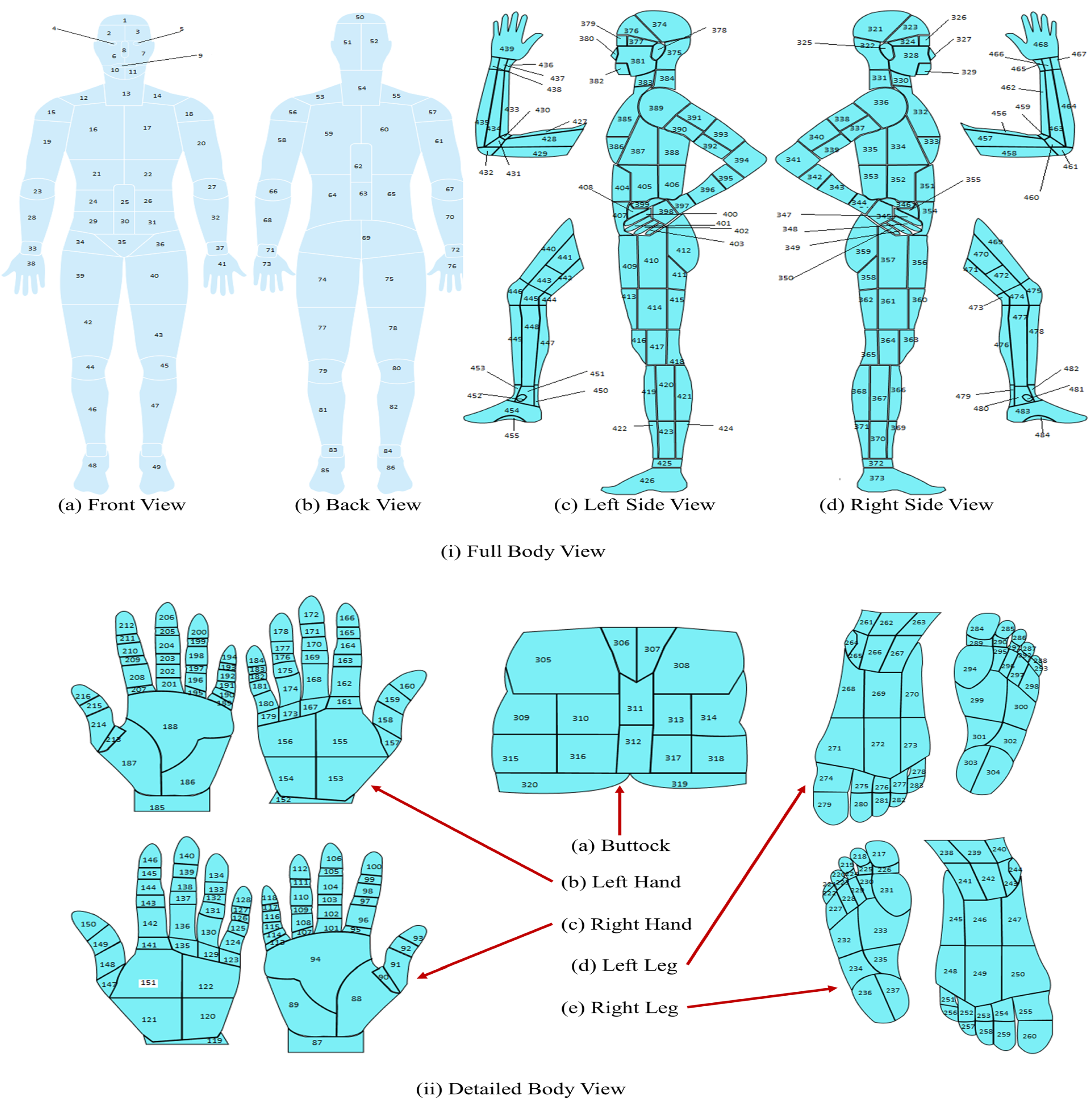

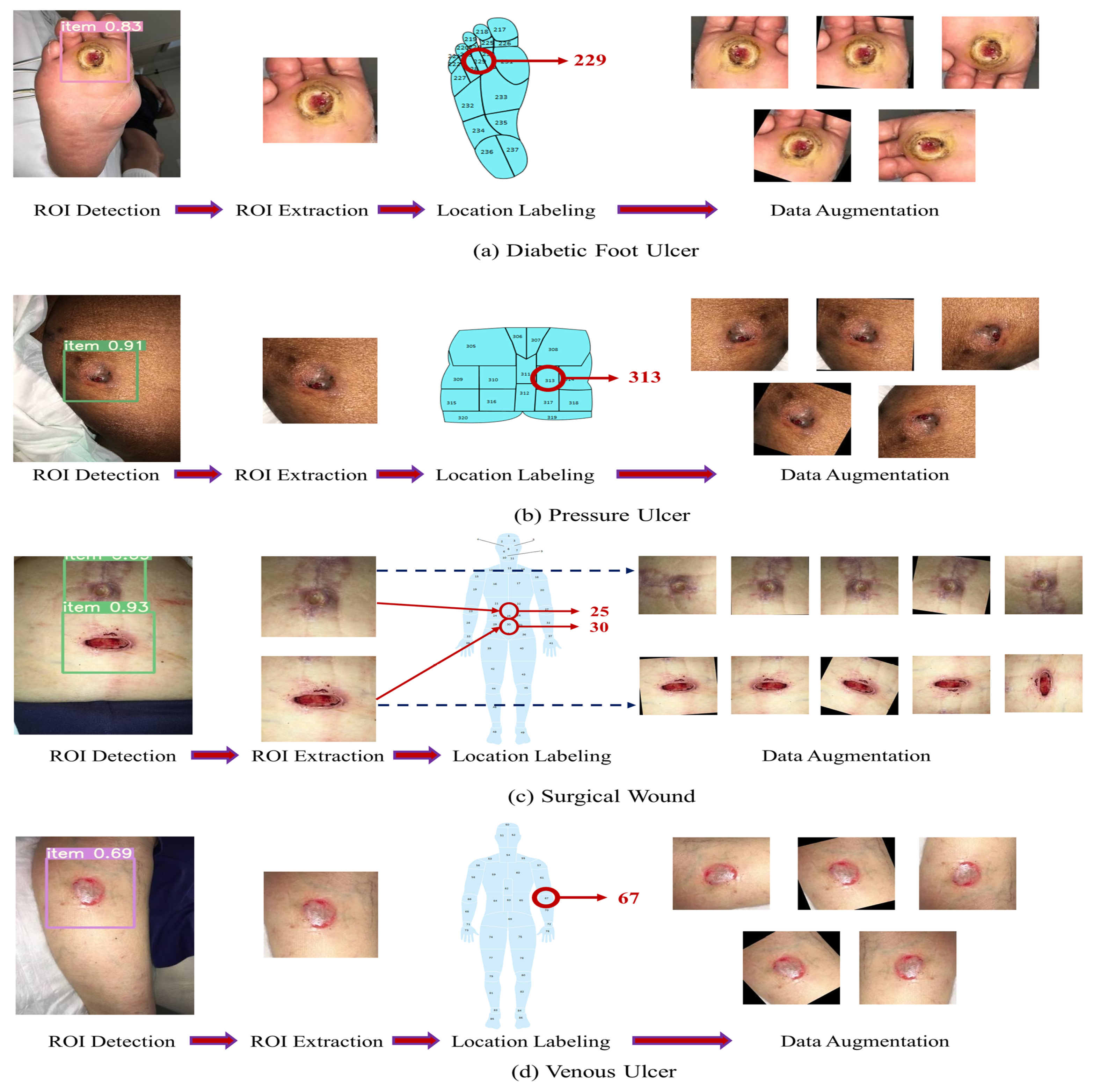

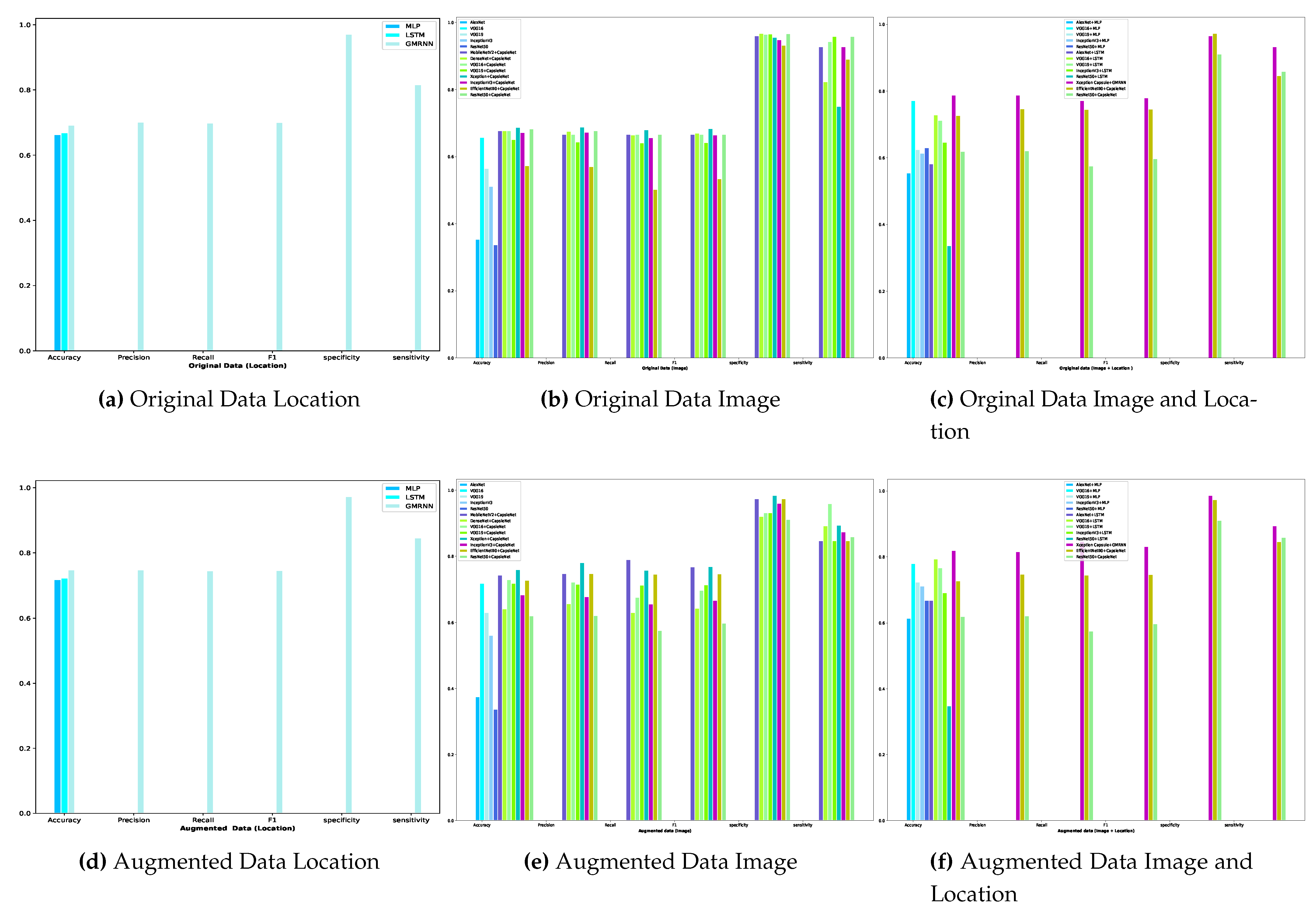

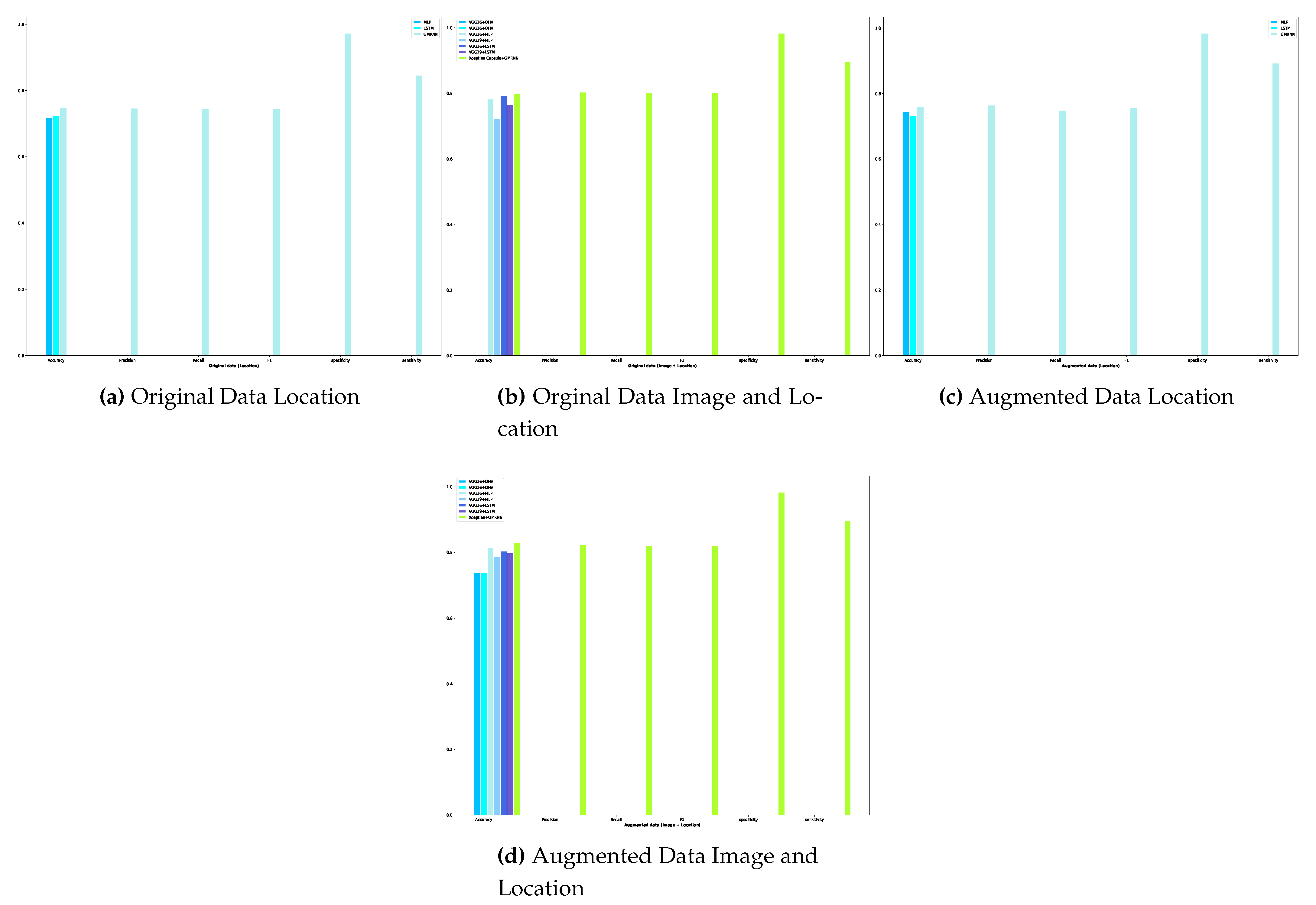

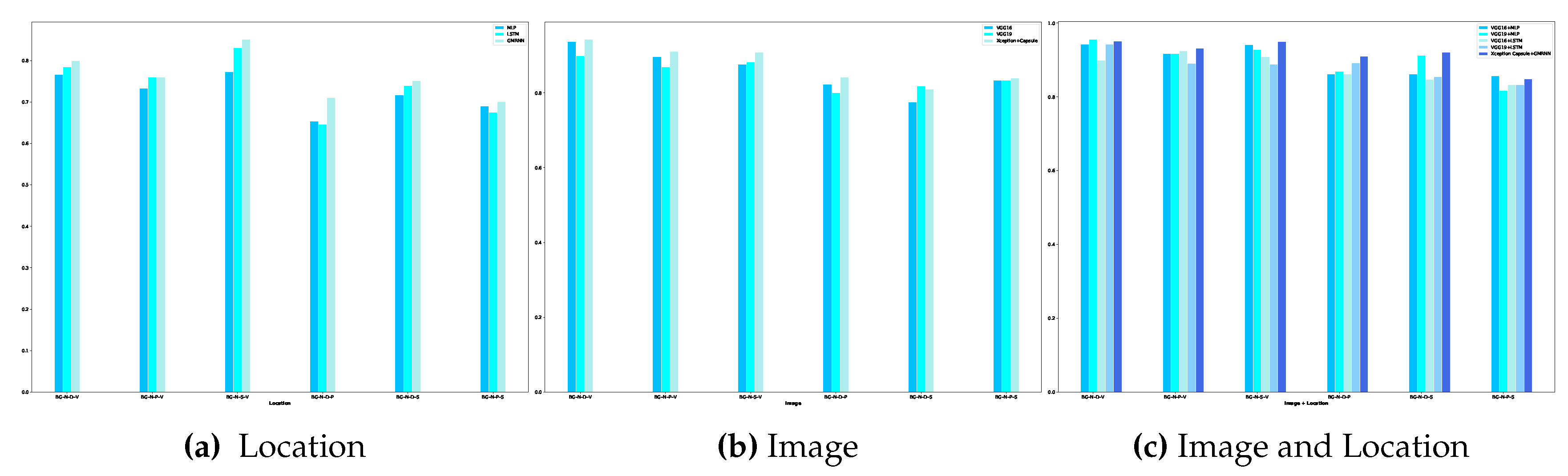

18] presented a multi-modal wound classifier (WMC) network that combines wound images and their corresponding locations to classify different types of wounds. Utilizing datasets like AZH and Medetec, the study employs a novel deep learning architecture with parallel squeeze-and-excitation blocks, adaptive gated MLP, axial attention mechanism, and convolutional layers. An AI-based system [

20] was developed based on Fast R-CNN and transfer learning techniques for classifying and evaluating diabetic foot ulcers. Fast R-CNN was used for object detection and segmentation. It identifies regions of interest (ROIs) within an image and classifies these regions, while also providing bounding box coordinates for object localization. The model leverages pre-trained convolutional neural networks (CNNs) to improve the learning process on a relatively smaller dataset of diabetic foot wound images. Scebba et al. [

20] introduced a deep-learning method for automating the segmentation of chronic wound images. This approach employs neural networks to identify and separate wound regions from background noise in the images. The method significantly enhances segmentation accuracy, generalizes effectively to various wound types, and minimizes the need for extensive training data. Another study [

21] combined segmentation with a CNN architecture and a binary classification with traditional ML algorithms to predict surgical site infections in cardiothoracic surgery patients. The system utilizes a MobileNet-Unet model for segmentation and different machine learning classifiers (random forest, support vector machine, and k-nearest neighbors) for classifying wound alterations based on wound type (chest, drain, and leg). Another model based on a convolutional neural network (CNN) [

22] was presented for five wound classification tasks. This model first carries out a phase of feature extraction from the original input image to extract features such as shapes and texture. All extracted features are considered higher-level features, providing semantic information used to classify the input image. Changa et al. [

23] released a system utilizing multiple deep learning models for automatic burn wound assessment, focusing on accurately estimating the percentage of total body surface area (%TBSA) burned and segmentation of deep burn regions. The study trained models like U-Net, PSPNet, DeeplabV3+, and Mask R-CNN using boundary-based and region-based labeling methods, achieving high precision and recall. A web-based server was developed to provide automatic burn wound diagnoses and calculate necessary clinical parameters. Another approach was presented by Liu et al. [

24] for automatic segmentation and measurement of pressure injuries using deep learning models and a LiDAR camera. The authors utilized U-Net and Mask R-CNN models to segment wounds from clinical photos and measured wound areas using LiDAR. U-Net outperformed Mask R-CNN in both segmentation and area measurement accuracy. The proposed system achieved acceptable accuracy, showing potential for clinical application in remote monitoring and treatment of pressure injuries. An XAI model [

25] has been developed to analyze vascular wound images from an Asian population. It leverages deep learning models for wound classification, measurement, and segmentation, achieving high accuracy and explainability. The model utilizes SHAP (Shapley Additive ExPlanations) for model interpretability, providing insights into the decision-making process of the AI, which is crucial for clinical acceptance. A multi-modal wound classification network by Patel et al. [

26] has explored integrating wound location data and image data in classifying pressure injuries using deep learning models. The study employs an Adaptive-gated MLP for separate wound location analysis. Performance metrics vary depending on the number and combination of classes and data splits.
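Several of the ML studies above ([11], [12,13], [14], [16], and [21]) rely on SVM classifiers. As a rough illustration only, the sketch below trains a linear SVM with Pegasos-style subgradient steps on the L2-regularized hinge loss. The two-dimensional inputs are hypothetical stand-ins for color or texture descriptors, and the solver, the linear kernel, and the hyperparameters (`lam`, `epochs`) are assumptions, not the settings of any cited work.

```python
def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Train a linear SVM with Pegasos-style subgradient steps.

    X: list of feature vectors; y: labels in {-1, +1}.
    A constant 1.0 is appended to every sample so the bias is learned
    together with the weights (and, for simplicity, is also regularized).
    """
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    t = 0
    for _ in range(epochs):
        # Fixed cyclic order keeps this sketch deterministic;
        # Pegasos normally samples training points at random.
        for xi, yi in zip(Xb, y):
            t += 1
            eta = 1.0 / (lam * t)  # step size decays as 1 / (lambda * t)
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrink: gradient of the L2 term
            if margin < 1.0:  # hinge loss is active: step toward the violating sample
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def predict(w, x):
    """Return +1 or -1 depending on the side of the learned hyperplane."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical 2-D descriptors (e.g., one color value and one texture value).
X = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -1), (-1, -3)]
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])
```

In practice a library SVM (often with an RBF kernel) would replace this hand-written solver; the sketch only shows what the classifier optimizes.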
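To make the GLCM description for Murinto and Sunardi [16] concrete, the following sketch computes a co-occurrence matrix for a single pixel offset and three common texture statistics derived from it. It is a minimal illustration under assumed settings (an already quantized gray-level image, offset `(dx, dy) = (1, 0)`, i.e. distance 1 at 0 degrees, and symmetric counting); the offsets, quantization, and features actually used in [16] are not specified in the text.

```python
def glcm(image, dx=1, dy=0, levels=None, symmetric=True, normalize=True):
    """Gray-level co-occurrence matrix for a single pixel offset (dx, dy).

    image: 2-D list of integer gray levels in [0, levels).
    P[i][j] counts pixel pairs where the reference pixel has level i and the
    pixel at (row + dy, col + dx) has level j. With symmetric=True each pair
    is also counted as (j, i); with normalize=True counts become probabilities.
    """
    if levels is None:
        levels = max(max(row) for row in image) + 1
    rows, cols = len(image), len(image[0])
    P = [[0.0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                P[i][j] += 1
                if symmetric:
                    P[j][i] += 1
    if normalize:
        total = sum(map(sum, P))
        if total:
            P = [[v / total for v in row] for row in P]
    return P


def contrast(P):
    """Weights co-occurrences by the squared gray-level difference."""
    n = len(P)
    return sum(P[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def homogeneity(P):
    """Large when co-occurring levels are similar (1 / (1 + (i - j)^2) weighting)."""
    n = len(P)
    return sum(P[i][j] / (1.0 + (i - j) ** 2) for i in range(n) for j in range(n))


def asm(P):
    """Angular second moment: sum of squared probabilities (a uniformity measure)."""
    return sum(v * v for row in P for v in row)


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
counts = glcm(image, dx=1, dy=0, normalize=False)  # distance 1, angle 0 degrees
P = glcm(image, dx=1, dy=0)
print(counts)
print([contrast(P), homogeneity(P), asm(P)])
```

For the toy image, the symmetric horizontal counts are `[[4, 2, 1, 0], [2, 4, 0, 0], [1, 0, 6, 1], [0, 0, 1, 2]]`. Feature vectors for a classifier are usually built by repeating this over several distances and angles.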
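The squeeze-and-excitation (SE) blocks mentioned for [18] reweight the channels of a feature map. The sketch below shows one standard SE block (global average pooling, a two-layer bottleneck with ReLU and sigmoid, then channel-wise scaling) in plain Python. The weights are hypothetical toy values, biases are omitted for brevity, and the parallel arrangement of SE blocks used in [18] is not reproduced.

```python
import math


def squeeze_excite(feature_maps, w1, w2):
    """Apply one squeeze-and-excitation step to C feature maps.

    feature_maps: list of C channels, each an H x W nested list.
    w1: (C // r) x C weight matrix of the reduction layer.
    w2: C x (C // r) weight matrix of the expansion layer.
    Biases are omitted to keep the sketch short.
    Returns (rescaled_maps, channel_gates).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: bottleneck FC -> ReLU -> FC -> sigmoid yields gates in (0, 1).
    hidden = [max(0.0, sum(w * zc for w, zc in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden)))) for row in w2]
    # Scale: multiply every activation in channel c by gate c.
    rescaled = [[[v * g for v in row] for row in ch] for ch, g in zip(feature_maps, gates)]
    return rescaled, gates


# Two hypothetical 2 x 2 channels and toy weights with reduction ratio r = 2.
maps = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[3.0, 3.0], [3.0, 3.0]],
]
w1 = [[1.0, 1.0]]      # 2 channels -> 1 hidden unit
w2 = [[0.0], [1.0]]    # 1 hidden unit -> 2 channel gates
out, gates = squeeze_excite(maps, w1, w2)
print(gates)
```

Because the gates lie in (0, 1), an SE block can only attenuate channels relative to one another; in a trained network the weights are learned so that informative channels receive gates close to 1.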
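Fast R-CNN, used in the diabetic foot ulcer system [20], outputs scored bounding boxes per class, and overlapping detections are pruned with greedy non-maximum suppression (NMS) based on intersection over union (IoU). A minimal sketch of both steps follows, assuming boxes given as `(x1, y1, x2, y2)` pixel corners and a hypothetical IoU threshold of 0.5; it illustrates the general technique, not the cited system's exact post-processing.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2), x2 > x1, y2 > y1."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression.

    Visits boxes from highest to lowest score and keeps a box only if it
    overlaps every already kept box by at most iou_threshold.
    Returns the indices of the kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(box_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Hypothetical ulcer detections for one class: two overlapping boxes and one separate box.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(box_iou(boxes[0], boxes[1]), nms(boxes, scores))
```

Here the second box overlaps the first with an IoU of 81/119 (about 0.68), so it is suppressed and only boxes 0 and 2 survive.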
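Segmentation results such as those in [20], [23], and [24] are commonly evaluated by comparing predicted and reference masks pixel by pixel. The sketch below computes the Dice coefficient, IoU, precision, and recall for binary masks stored as nested lists. The choice of metrics and the convention for empty masks are assumptions; the exact evaluation protocols of the cited studies are not described in the text.

```python
def mask_metrics(pred, truth):
    """Pixel-wise overlap metrics for two binary masks of equal shape (nested lists of 0/1)."""
    tp = fp = fn = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                tp += 1      # wound pixel correctly predicted
            elif p:
                fp += 1      # background predicted as wound
            elif t:
                fn += 1      # wound pixel missed
    denom = tp + fp + fn
    return {
        # Convention (assumption): two empty masks count as a perfect overlap.
        "dice": 2 * tp / (tp + denom) if denom else 1.0,   # 2TP / (2TP + FP + FN)
        "iou": tp / denom if denom else 1.0,               # TP / (TP + FP + FN)
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
m = mask_metrics(pred, truth)
print(m)
```

Dice and IoU carry the same ranking information, since Dice = 2 IoU / (1 + IoU); in the example, IoU = 0.5 gives Dice = 2/3.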
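Liu et al. [24] measured wound area with a LiDAR camera. One simple way to turn a segmentation mask into a physical area, assuming a depth map aligned pixel for pixel with the image and a pinhole camera with focal lengths `fx`, `fy` in pixels, is to give each wound pixel at depth z a footprint of (z / fx) by (z / fy). This hypothetical sketch ignores surface tilt and curvature and is not the measurement procedure used in [24].

```python
def wound_area_cm2(mask, depth_m, fx, fy):
    """Approximate physical wound area from a binary mask and an aligned depth map.

    mask:    nested list of 0/1 values (1 = wound pixel).
    depth_m: nested list of depths in metres, same shape as mask; 0 marks missing depth.
    fx, fy:  focal lengths in pixels (pinhole camera model).
    Each wound pixel at depth z is treated as a flat patch of size
    (z / fx) x (z / fy) metres, i.e. the surface is assumed to face the camera.
    """
    area_m2 = 0.0
    for mrow, drow in zip(mask, depth_m):
        for m, z in zip(mrow, drow):
            if m and z > 0:   # skip background and pixels without a depth reading
                area_m2 += (z / fx) * (z / fy)
    return area_m2 * 1e4      # 1 m^2 = 10,000 cm^2


# Hypothetical 3 x 3 crop: 4 wound pixels at 0.30 m, focal length 600 px.
mask = [[1, 1, 0], [1, 1, 0], [0, 0, 0]]
depth = [[0.30] * 3 for _ in range(3)]
print(wound_area_cm2(mask, depth, fx=600.0, fy=600.0))
```

At 0.30 m and 600 px focal length each pixel spans 0.5 mm, so the four wound pixels cover about 0.01 cm²; summing per-pixel footprints (rather than multiplying a pixel count by one fixed scale) lets the estimate follow depth variations across the wound.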
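SHAP, used in the XAI model [25], attributes a prediction to input features using Shapley values. For a handful of features these values can be computed exactly by enumerating all feature subsets, as sketched below. Here "absent" features are replaced by a single baseline vector, which is only one of several possible conventions, and the toy model `f` is hypothetical. Exact enumeration grows exponentially with the number of features, which is why practical SHAP explainers rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of model f at input x.

    A feature that is 'absent' from a coalition takes its value from baseline.
    Each feature requires 2**(n - 1) coalition pairs, so this only suits small n.
    """
    n = len(x)

    def value(coalition):
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [k for k in range(n) if k != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for s in combinations(others, size):
                s = set(s)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model: one additive feature and one pairwise interaction.
def f(z):
    return 2 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(f, x, baseline)
print(phi)
```

The interaction term is split equally between features 1 and 2, and the attributions add up to f(x) - f(baseline), the additivity property that SHAP explanations are built around.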