1. Introduction

Microsoft Windows dominates the global operating system (OS) market, with over 75% of desktop users worldwide relying on it for both personal and professional purposes [1]. This widespread adoption, however, makes Windows a prime target for malicious actors seeking to exploit vulnerabilities for data theft, system disruption, and other nefarious activities [2]. The increasing complexity, volume, and evolution of cyber threats have rendered traditional investigative methods insufficient for addressing large-scale cyber incidents [3,4].

The Windows Registry, a hierarchical database storing critical system configurations and user activity data, is pivotal in digital forensic investigations. It contains interrelated keys and values that record recently accessed files, user actions, and system settings [4]. However, the sheer volume of data within the Registry and the sophistication of modern cyberattacks pose significant challenges for the digital forensics and incident response (DFIR) community [6]. The introduction of Windows 10 and 11, designed for seamless operation across computers, smartphones, tablets, and embedded systems, has further expanded their adaptability and reach, complicating forensic efforts [5].

In cyber or cyber-enabled offenses, the efficiency of DFIR processes is critical for loss recovery and mitigation [3]. Effective DFIR can identify key elements such as the point of entry, the techniques used for unauthorised access, the perpetrators' identities, and attack motives [4]. Digital forensics, as an investigative technique, is essential for identifying and analysing evidence related to cybersecurity incidents or crimes [5]. The Windows Registry serves as a living repository of configuration settings, user activities, and system events, providing a foundation for reconstructing digital event sequences, detecting malicious behaviour, and delivering crucial evidence in investigations [9]. Cyber incident response involves both live monitoring and post-incident forensic analysis. Live monitoring tracks and records system data in real time, while post-incident analysis investigates events after they occur. Both methods are used to map Registry paths containing USB identifiers, such as make, model, and GUIDs (Globally Unique Identifiers), which distinguish individual USB devices. These identifiers are often located in both allocated and unallocated Registry space [7].
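As a concrete illustration of the USB identifiers mentioned above, device class keys under `HKLM\SYSTEM\CurrentControlSet\Enum\USBSTOR` are conventionally named `Disk&Ven_<vendor>&Prod_<product>&Rev_<revision>`, with one subkey per device instance whose name usually begins with the device serial number. The following is a minimal sketch assuming that naming convention; the function `parse_usbstor_key` is hypothetical and is not part of WinRegRL, and the "second character is `&`" test is a commonly cited heuristic rather than a guarantee.

```python
import re
from typing import Optional

# Class key names under HKLM\SYSTEM\CurrentControlSet\Enum\USBSTOR
# typically look like: Disk&Ven_<vendor>&Prod_<product>&Rev_<revision>
_CLASS_KEY = re.compile(
    r"^(?P<type>[^&]+)&Ven_(?P<vendor>[^&]*)&Prod_(?P<product>[^&]*)&Rev_(?P<revision>[^&]*)$"
)


def parse_usbstor_key(class_key: str, instance_key: str) -> Optional[dict]:
    """Split a USBSTOR class key and instance subkey into make/model/serial fields.

    Returns None if the class key does not follow the expected pattern.
    """
    match = _CLASS_KEY.match(class_key)
    if match is None:
        return None
    fields = match.groupdict()
    # The instance subkey usually starts with the serial number, followed by
    # an '&<n>' instance suffix (e.g. '...&0'), which is stripped here.
    serial = re.sub(r"&\d+$", "", instance_key)
    fields["serial"] = serial
    # Heuristic: a '&' as the second character suggests a Windows-generated
    # identifier rather than a serial number reported by the device.
    fields["serial_is_generated"] = len(serial) > 1 and serial[1] == "&"
    return fields


info = parse_usbstor_key(
    "Disk&Ven_SanDisk&Prod_Cruzer_Blade&Rev_1.00", "4C530001230101116523&0"
)
print(info["vendor"], info["product"], info["serial"])
```

Fields extracted this way (make, model, revision, serial) are the kind of identifiers an investigator would then correlate with timestamps elsewhere in the Registry.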

This research aims to enhance the efficiency and effectiveness of forensic investigations involving the Windows Registry. Modern Windows versions generate substantial logging data, which can hinder analytical processes [8]. To address this challenge, we propose a novel Reinforcement Learning (RL)-driven framework that combines RL techniques with a Rule-Based Artificial Intelligence (RB-AI) system. The framework incorporates a Markov Decision Process (MDP) model of the Windows Registry and Events Timeline environment, defining the states, actions, transitions, and rewards used for Registry analysis and event correlation.

1.1. Challenges and Motivation

Current Registry forensic analysis, whether manual or automated, faces several challenges:

Volume of Data: The Registry contains vast amounts of data reflecting system activities and user interactions.

Lack of Automation: Many traditional tools require manual, repetitive tasks, consuming significant time and resources.

Dynamic Nature: Registry entries frequently change during system operation or software installations.

Limited Contextual Information: Registry entries often lack explicit context, necessitating expert interpretation.

Data Fragmentation: Registry data may be fragmented across hives and keys.

Evolution of Windows Versions: Different Windows versions introduce variations in Registry structures.

Limited Advanced Analysis: Traditional tools often lack features like anomaly detection or pattern recognition.

Addressing these challenges requires advanced tools, investigative techniques, and AI integration to improve the efficiency and accuracy of Registry analysis. Our motivation for integrating reinforcement learning (RL) is to optimise the automation and extend the coverage of Windows Registry analysis in cyber incident response, driven by the growing complexity and volume of cyber threats targeting critical systems. The Windows Registry stores system information, configuration settings, and application data, which makes it an invaluable source of evidence in digital investigations and a key resource for tracking changes and uncovering traces of malicious activity. However, the amount of data it contains poses considerable challenges to digital forensic analysis, particularly when real-time analysis is necessary. Analysing the entire Registry manually is time-consuming and resource-intensive, making it difficult for forensic analysts to extract relevant information efficiently.

1.2. Research Questions

This study aims to contribute to the advancement of cyber incident response in the context of Windows Registry analysis and forensics by addressing the following research questions:

RQ1: How does the combination of RL with RB-AI, within the WinRegRL framework, help improve the effectiveness, accuracy, and scalability of digital forensic investigations on Windows operating systems?

RQ2: What are the primary performance metrics used to validate the effectiveness of the WinRegRL framework compared to traditional digital forensics methods, and how does the framework address the challenges posed by modern cybercrime targeting Windows Registry artefacts?

RQ3: To what extent does the WinRegRL framework allow for consistent, optimised analysis across many machines sharing identical configurations, and what are the implications for practical applications in Digital Forensics and Incident Response (DFIR)?

1.3. Novelty and Contribution

The novelty of this research lies in leveraging RL to improve the automation of cyber incident investigation on Windows machines in terms of accuracy, timing, and coverage. First, this work identified the gap by reviewing existing methodologies, tools, and techniques for analysing Registry artefacts and identifying their current challenges and limitations. Building on this, the contributions of this work to the body of knowledge and to DFIR practice are:

MDP Model and WinRegRL: A framework built on a novel MDP model that provides a comprehensive, structured representation of the Windows environment, covering Registry, volatile memory and file system data, and that enables efficient automation of the investigation process.

Expertise Extraction and Replay: By capturing and generalising optimal policies, we minimise investigation time. The generalised policies are fed directly to the out-of-the-box MDP solver, which shortens MDP solving time by reducing the time the RL agent needs to discover optimal decision policies for each state.

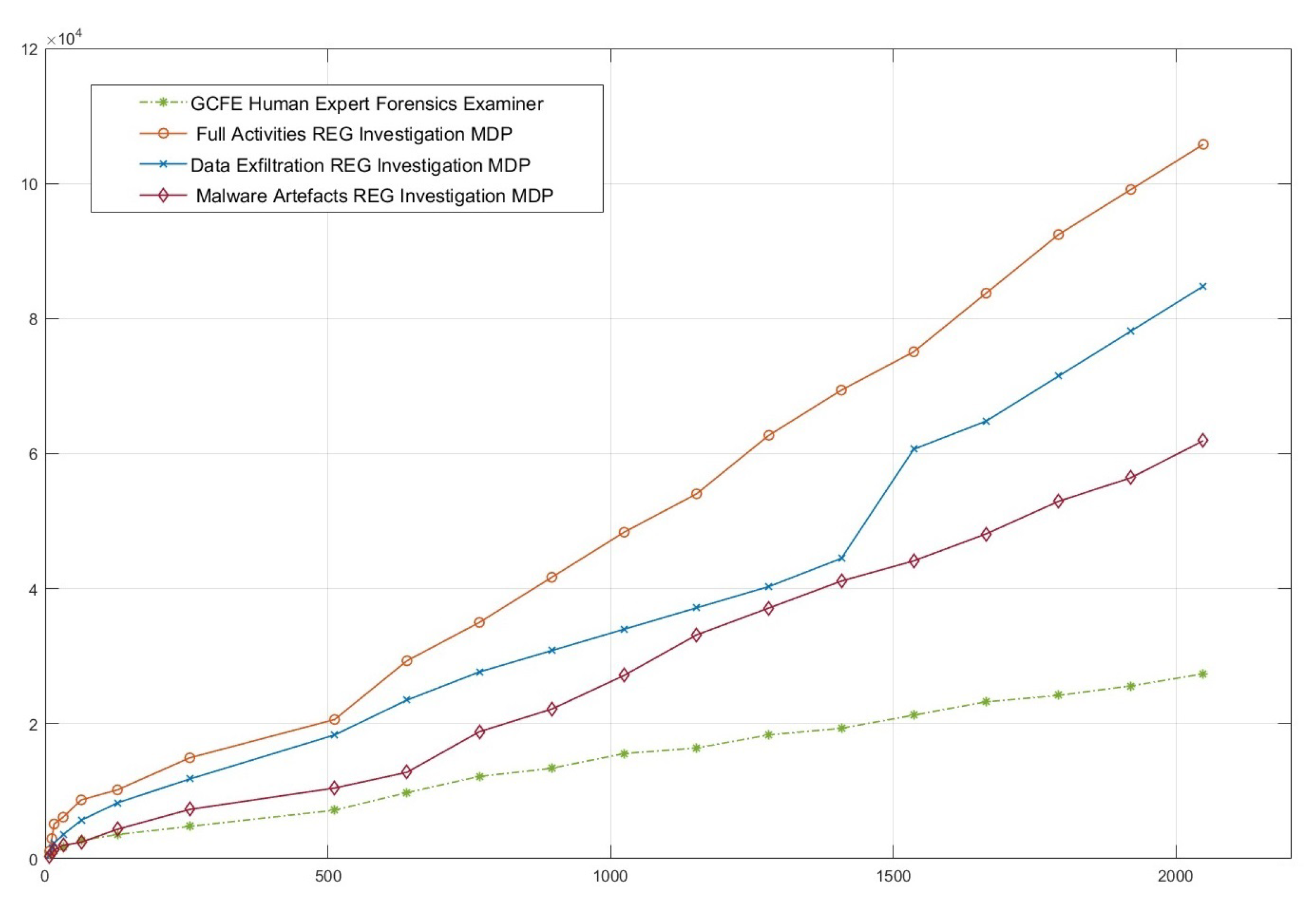

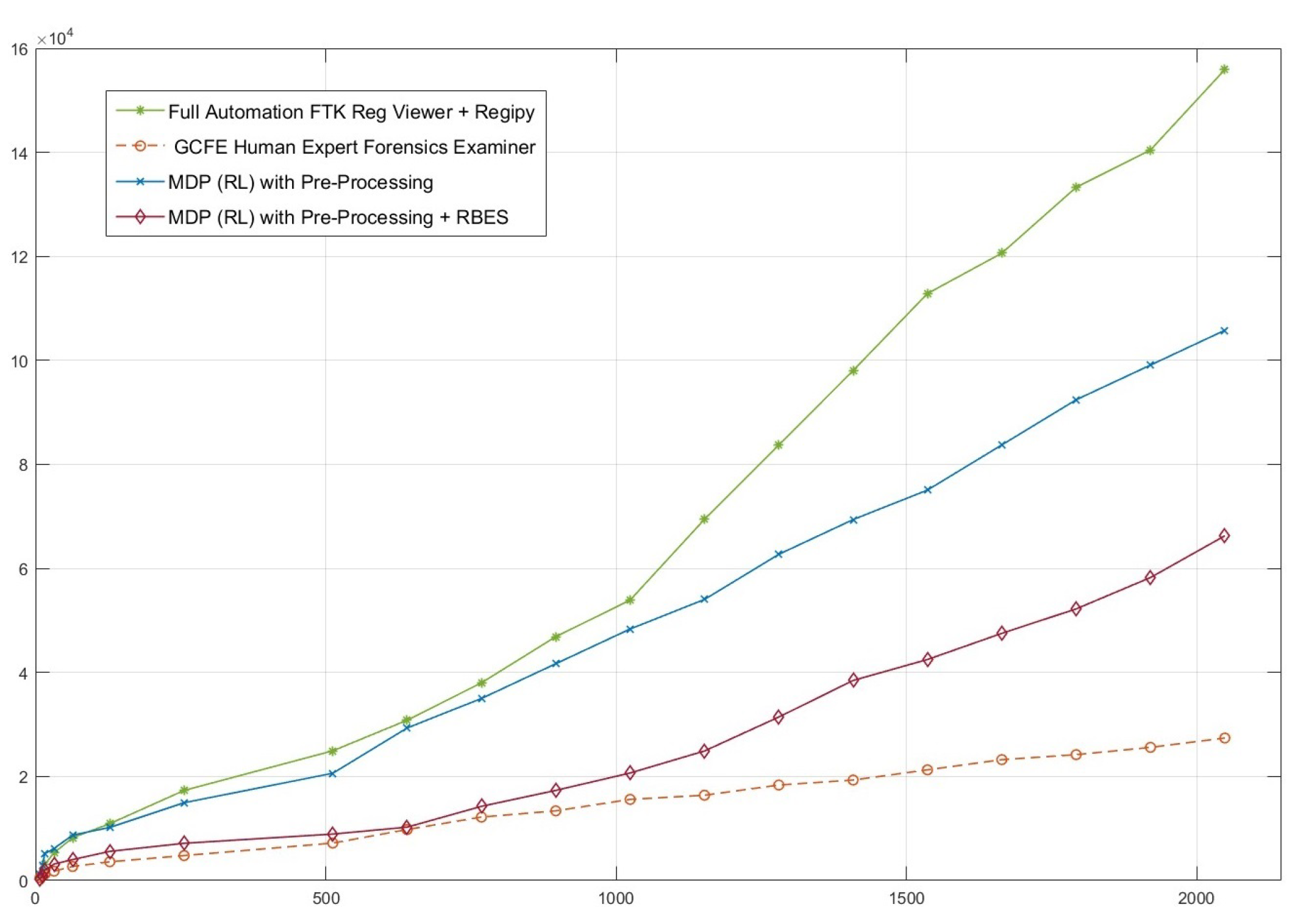

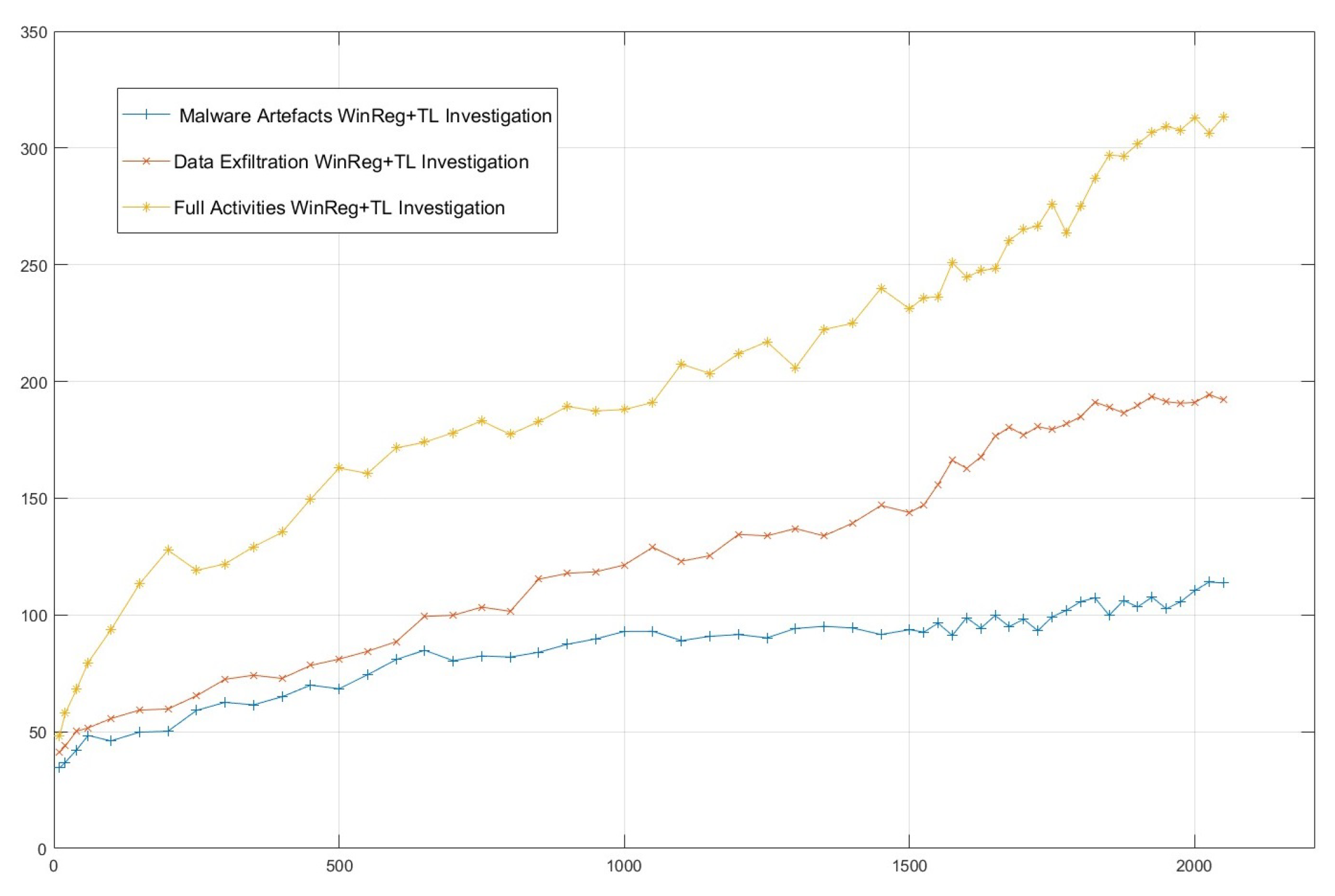

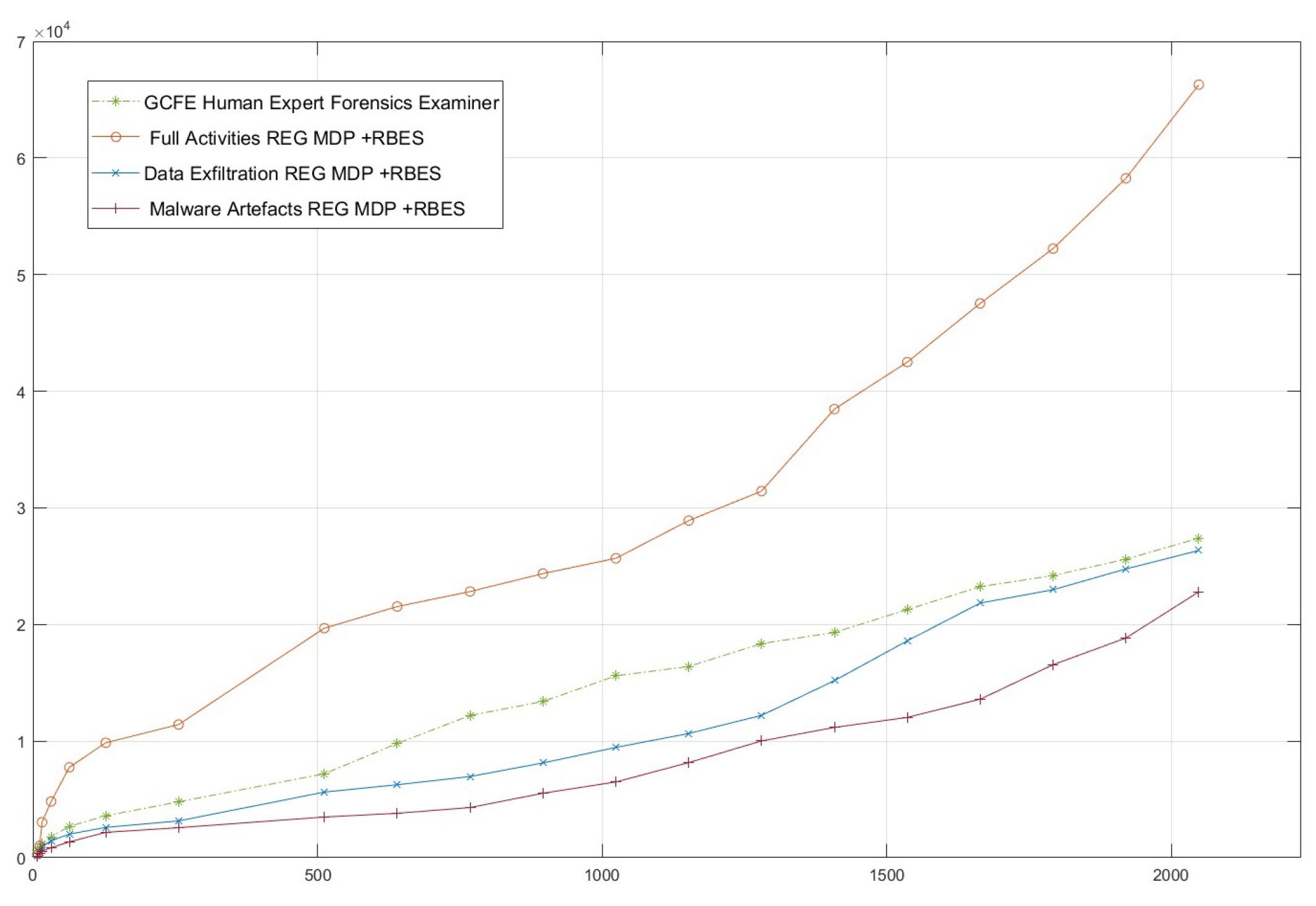

Performance Superiority: Our empirical results demonstrate that the proposed RL-based approach outperforms existing industry approaches in terms of consumed time, relevance, and coverage, when compared against both automated systems and human experts.

1.4. Article Organization

The remainder of this paper is organized as follows:

Section 2 provides a technical overview of Windows Registry and volatile memory forensic analysis, as well as the current state of the art in tools, frameworks, and investigation techniques.

Section 3 explains the Registry structure and its relevance in forensics. It also introduces the key elements of reinforcement learning, the RL approaches considered and how they were leveraged in this research, and the overall architecture and modules of the proposed RL-powered framework.

Section 4 covers the implementation and testing of the WinRegRL framework, the results obtained, and an in-depth discussion.

Section 5 presents reflections and conclusions, answers the research questions, and outlines future directions.

3. Research Methodology

This section outlines the research methodology followed in this work. The steps are summarised below so as to convey the Windows Registry forensics domains and components addressed by WinRegRL, and the interactions between the different entities and the human expert. The research methodology consists of five steps:

Review of Current Methods for Windows Registry Analysis Automation: studying the mechanisms, limitations, and challenges of existing methods in light of the efficiency and accuracy requirements of Windows Registry forensics.

Investigation of Techniques and Approaches Used in Windows Registry Forensics: we focused particularly on machine learning approaches and rule-based reasoning systems that can extend or replace human intervention in the sequential decision-making processes involved in Windows Registry forensics, and on identifying the best ways to integrate expert systems into the forensic process to obtain better investigative results.

WinRegRL Framework Development: as we progressed with the design and implementation of the different modules that make up the WinRegRL framework, we first focused on WinRegRL-Core, which introduces a new MDP model and reinforcement learning to optimise and enhance Windows Registry investigations. We also added a module to capture, process, generalise, and feed human expertise into the RL environment using a Rule-Based Expert System (RBES) built with the Pyke Python Knowledge Engine.

Testing and Validation of WinRegRL on the latest research and industry-standard datasets, designed to mimic real-world DFIR scenarios and covering evidence of different sizes, especially in large-scale Windows Registry forensics. The performance of the framework is evaluated against traditional methods and human experts in terms of efficiency, accuracy, time reduction, and artefact exploration, and the framework is refined iteratively based on test feedback for robust and adaptive performance in diverse investigative contexts.

Finalisation of the WinRegRL Framework by unifying reinforcement learning and rule-based reasoning to support efficient Windows Registry and volatile data investigations. The final testing will demonstrate how the framework reduces reliance on human expertise while significantly increasing efficiency, accuracy, and repeatability, especially in cases involving multiple machines with similar configurations.

3.1. Windows Registry and Volatile Data Markov Decision Process

A Markov Decision Process (MDP) is commonly used to formalise RL problems mathematically. The key components of an MDP are the state space, action space, transition probabilities, and reward function. This formalism provides a structured basis for implementing RL algorithms, allowing agents to learn robust policies for complex sequential decision-making tasks across diverse application domains [53]. MDPs are widely adopted to model sequential decision-making problems in order to automate and optimise these processes [56]. The components of an MDP environment are summarised in Equation (1):

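A Markov Decision Process (MDP) is a 5-tuple

$$\mathcal{M} = (S, A, P, R, \gamma) \qquad (1)$$

where:

$S$ is a finite set of states;

$A$ is a finite set of actions;

$P(s' \mid s, a) = \Pr\left[S_{t+1} = s' \mid S_t = s, A_t = a\right]$ is the state transition probability;

$R(s, a, s')$ is the expected immediate reward received after taking action $a$ in state $s$ and moving to state $s'$;

$\gamma \in [0, 1]$ is the discount factor.

Value Function: The value function describes how good it is to be in a specific state $s$ under a given policy $\pi$. For an MDP:

$$V^{\pi}(s) = \mathbb{E}_{\pi}\left[G_t \mid S_t = s\right] \qquad (2)$$

where $G_t$ is the discounted return defined in Equation (5). To obtain the optimal value function, the WinRegRL agent calculates the expected return when starting from $s$ and following $\pi$, accounting for the cumulative discounted reward, and retains the best value achievable by any policy:

$$V^{*}(s) = \max_{\pi} V^{\pi}(s) \qquad (3)$$

At each step $t$, the WinRegRL agent receives a representation of the environment's state, $S_t \in S$, and selects an action $A_t \in A$. Then, as a consequence of its action, the agent receives a reward $R_{t+1}$.

Policy: The WinRegRL policy is a mapping from states to actions, expressed as the probability of selecting action $a$ when in state $s$:

$$\pi(a \mid s) = \Pr\left[A_t = a \mid S_t = s\right] \qquad (4)$$

Reward: The total discounted reward (return) is expressed as:

$$G_t = \sum_{k=0}^{H} \gamma^{k} R_{t+k+1} \qquad (5)$$

where $\gamma$ is the discount factor and $H$ is the horizon, which can be infinite.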
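The discounted return defined above can be checked numerically. The sketch below computes it for a finite reward sequence by accumulating backwards, which applies one extra factor of $\gamma$ per step. The reward values in the example (a penalty of -1 per unproductive query and +10 for locating a key artefact) are illustrative assumptions, not values used in WinRegRL.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Compute G_t = sum_k gamma**k * R_{t+k+1} for a finite horizon.

    rewards[k] holds R_{t+k+1}, the reward received k steps after time t.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    g = 0.0
    # Backward accumulation uses the recursion G_t = R_{t+1} + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Two unproductive queries (-1 each), then a key artefact is found (+10).
print(discounted_return([-1.0, -1.0, 10.0], gamma=0.9))
```

With $\gamma = 0.9$ this evaluates $-1 - 0.9 + 0.81 \times 10 = 6.2$: the discount makes the agent prefer reaching the artefact in fewer steps.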

3.2. Overall Windows Registry and Timeline MDP Modelling

Markov decision processes (MDPs) provide a natural representation of a DFIR investigator's sequential decision making, including browsing, interacting with, and performing actions on Registry files, and waiting for the outcome to validate a finding or exclude a scenario. As with any sequential decision process, automation can be enhanced considerably using RL, which involves an agent that interacts synchronously with its environment or system; the sequence of system states is modelled as a stochastic process. The RL agent's goal is to maximise reward by choosing appropriate actions. The chosen action and the current environment state determine the probability distribution over possible next states (the Markov property). This MDP framework provides a structured approach for modelling and optimising forensic investigations, enabling analysts to make statistically informed decisions when facing complex Windows Registry and volatile memory analysis.

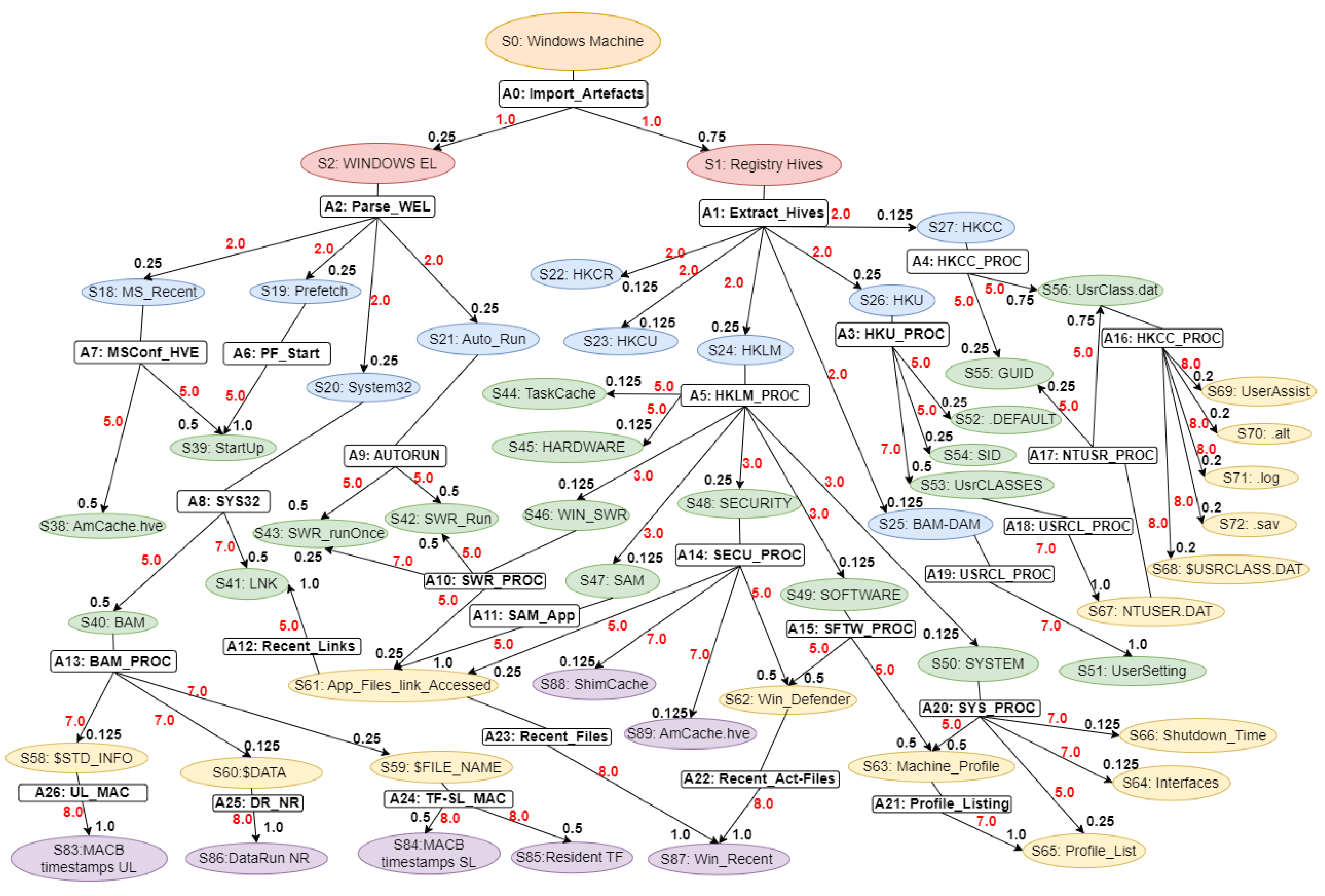

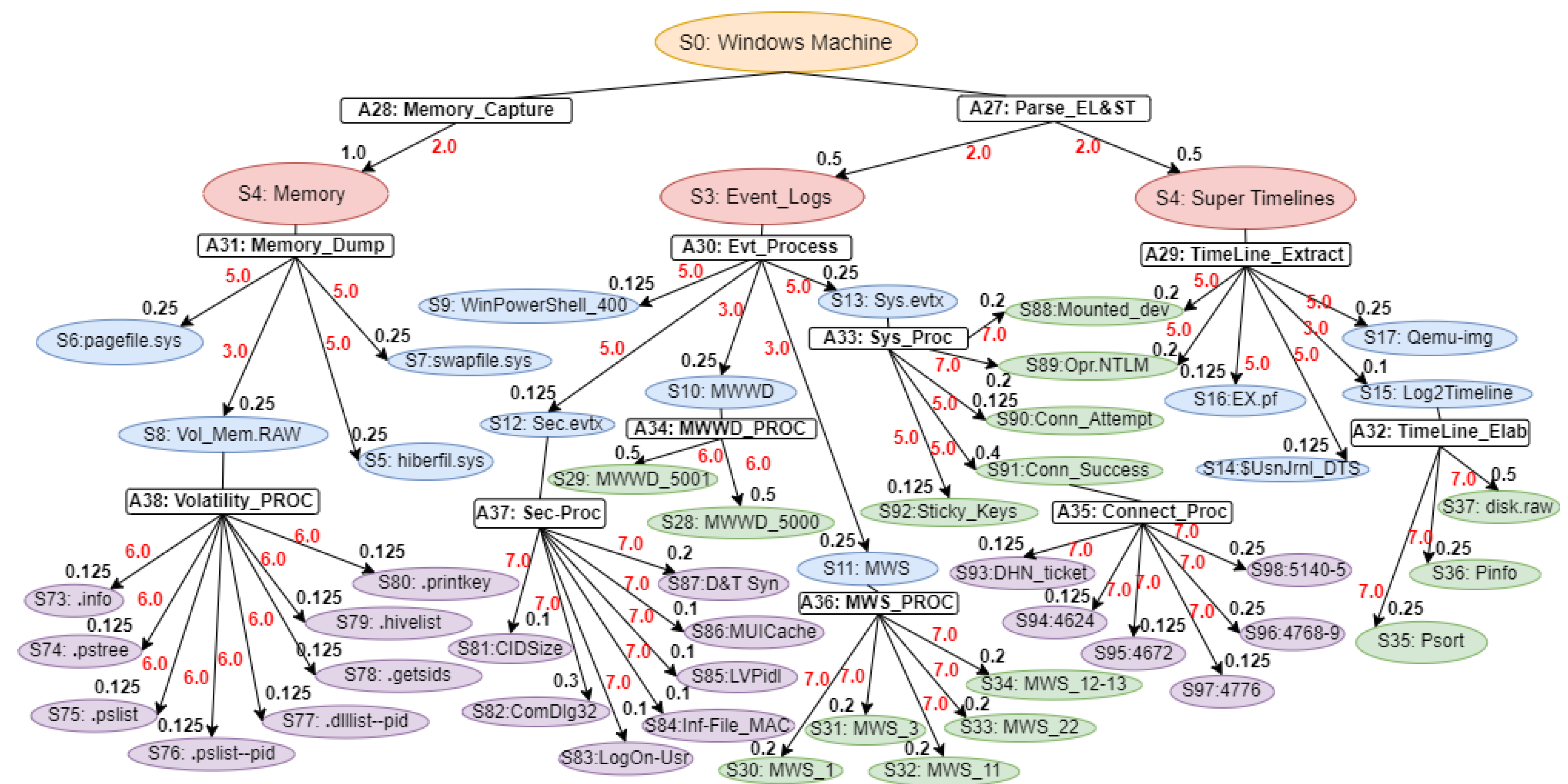

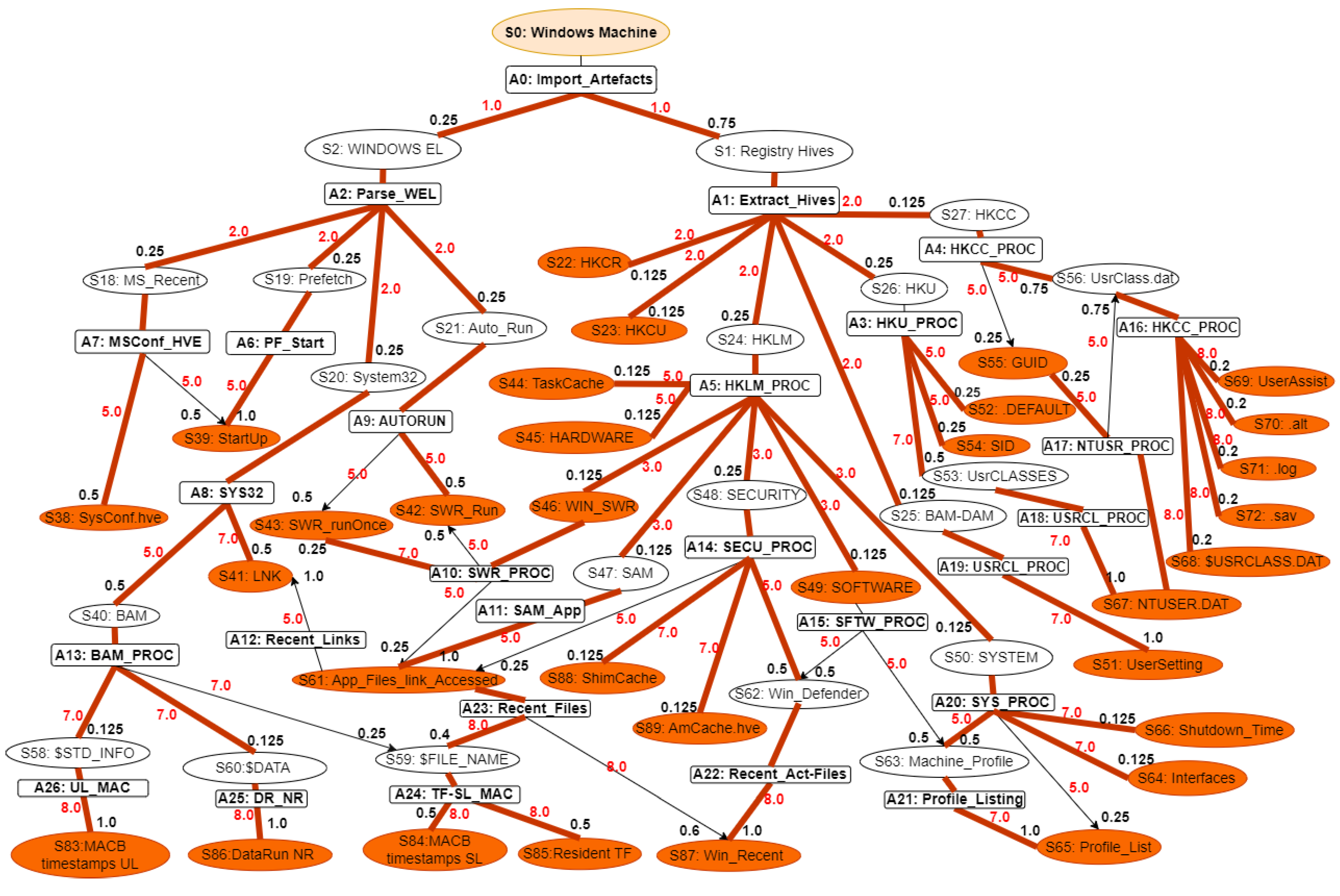

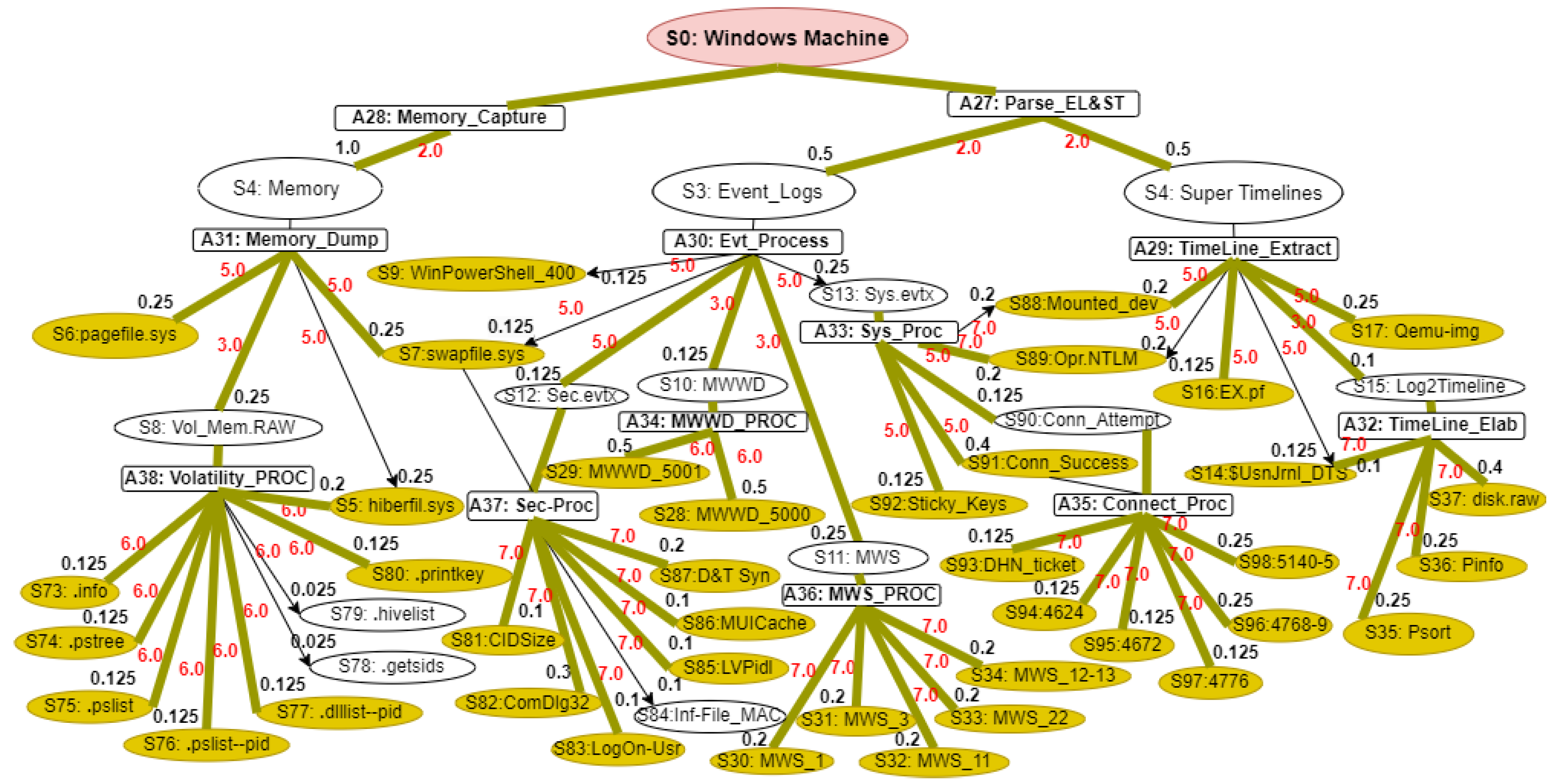

Our proposed WinRegRL MDP representation, illustrated in Figure 1 and Figure 2, is distinctive in that it fully captures Registry forensics and timeline features, allowing the RL agent to act even when it cannot identify the exact environment state. The RL environment in this context consists of a simulation of Windows Registry activity combined with real-world data logs, allowing the agent to interact with a variety of Registry states and receive feedback. The MDP model is elaborated following SANS, covering File Systems, Registry Forensics, Jump Lists, Timeline, Peripheral Profiling and Journaling, as summarised in Table 2.

State Space: In Windows Registry and volatile memory forensics, the state space describes the status of a system at given points in time. Such states include the structure and values of Registry keys, the presence of volatile artefacts, and other forensic evidence. The state space captures a finite, plausible set of such abstractions, allowing the investigative process to be modelled as an MDP. For example, states could be "Registry key exists with specific value" or "Volatile memory contains a given process artefact."

Action Space: Actions denote forensic steps that move the system from one state to another, such as Registry investigation, memory dump analysis, or timeline artefact correlation. Each action can lead probabilistically to one of several possible states, reflecting the inherent uncertainty of forensic methods: for example, querying a given Registry key may reveal a particular value with some probability, which then guides the investigator's subsequent actions.

Transition Function: The transition function models the probability of moving from one state to another after performing an action. For example, examining a process in memory might lead to identifying associated Registry keys or other volatile artefacts with a given likelihood. The transition probabilities capture the dynamics of the forensic investigation, reflecting how evidence unfolds as actions are performed.

Reward Function: The reward function assigns a numerical value to state-action pairs, representing the immediate benefit obtained by taking a specific action in a given state. In the forensics context, rewards can quantify the importance of discovering critical evidence or of reducing uncertainty in the investigation process. For example, finding a timestamp in the Registry that corresponds to a known event can be assigned a large reward because it contributes directly to solving the case.
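To make the four components concrete, the sketch below encodes a deliberately tiny forensic MDP as plain Python dictionaries, with `P[s][a]` listing `(next_state, probability, reward)` outcomes. The state names, actions, probabilities and rewards are hypothetical illustrations chosen for readability, not the WinRegRL state space; the only check performed is that every transition distribution $P(\cdot \mid s, a)$ is well formed and sums to one.

```python
# Hypothetical toy MDP: P[s][a] is a list of (next_state, probability, reward).
P = {
    "start": {
        "query_usbstor": [("usb_found", 0.6, 5.0), ("start", 0.4, -1.0)],
        "dump_memory": [("process_found", 0.5, 3.0), ("start", 0.5, -1.0)],
    },
    "usb_found": {
        "correlate_timeline": [("case_solved", 0.8, 20.0), ("usb_found", 0.2, -1.0)],
    },
    "process_found": {
        "correlate_timeline": [("case_solved", 0.3, 20.0), ("process_found", 0.7, -1.0)],
    },
    "case_solved": {},  # terminal state: no further actions
}


def validate_mdp(P: dict, tol: float = 1e-9) -> None:
    """Raise ValueError if any transition distribution is malformed."""
    for s, actions in P.items():
        for a, outcomes in actions.items():
            total = sum(prob for _, prob, _ in outcomes)
            if abs(total - 1.0) > tol:
                raise ValueError(f"P(.|{s},{a}) sums to {total}, not 1")
            for s_next, prob, _ in outcomes:
                if s_next not in P:
                    raise ValueError(f"unknown next state {s_next!r}")
                if prob < 0:
                    raise ValueError(f"negative probability in P(.|{s},{a})")


validate_mdp(P)
print(sorted(P))
```

The same `(next_state, probability, reward)` encoding is convenient for the value-based solvers discussed later, because the one-step Bellman backup is a simple sum over each list.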


The problem of Registry analysis can be framed as an agent-centred optimisation problem: the agent must determine the sequence of actions that maximises long-term reward, thereby obtaining the best possible investigation strategy in each system state. Reinforcement Learning (RL) provides a broad framework for addressing this challenge through flexible decision-making in complex sequential situations. Over the past decade, RL has revolutionised planning, problem-solving, and cognitive science, evolving from a repository of computational techniques into a versatile framework for modelling decision-making and learning processes in diverse fields [52]. Its adaptability has therefore extended beyond the traditional domains of gaming and robotics to real-world challenges ranging from cybersecurity to digital forensics.

In the proposed MDP model, an investigative process is modelled as a continuous interaction between an agent (a forensic tool or algorithm) and its environment (the Windows Registry structure). The environment is formally defined by the set of states representing the Registry's hierarchical keys, values, and metadata; the agent, in turn, selects actions in each state aimed at scanning, analysing, or extracting data. Every action transitions the environment to a new state and provides feedback in the form of rewards, which guide the agent's learning process.

The sequential decision-making inherent in Registry forensics involves navigating a series of states, from an initial Registry snapshot to actionable insights. Feedback in this context includes positive rewards for identifying anomalies, detecting malicious artefacts, or uncovering system misconfigurations, and negative rewards for redundant or ineffective actions. By interacting iteratively with the environment and refining its policy, the RL agent learns to optimise its analysis and thus to find important forensic artefacts systematically and efficiently. This automates and speeds up Registry analysis while making it adaptive to changing attack patterns, which is what makes RL a valuable tool for modern Windows Registry forensics.

RL enables an autonomous system to learn from experience using rewards and punishments derived from its actions. By exploring its environment through trial and error, the RL agent refines its decision-making process [50]. Because training RL agents requires a large number of samples from an environment to reach human-level performance [45], WinRegRL adopts a more efficient model-based RL approach instead of training the agent's policy network on actual environment samples. We use dataset samples only to train a separate model, supervised by a certified Windows Registry forensics examiner holding the GCFE or GCFA certification. This phase enabled us to predict the environment's behaviour and then use this transition dynamics model to generate samples for learning the agent's policy [15].

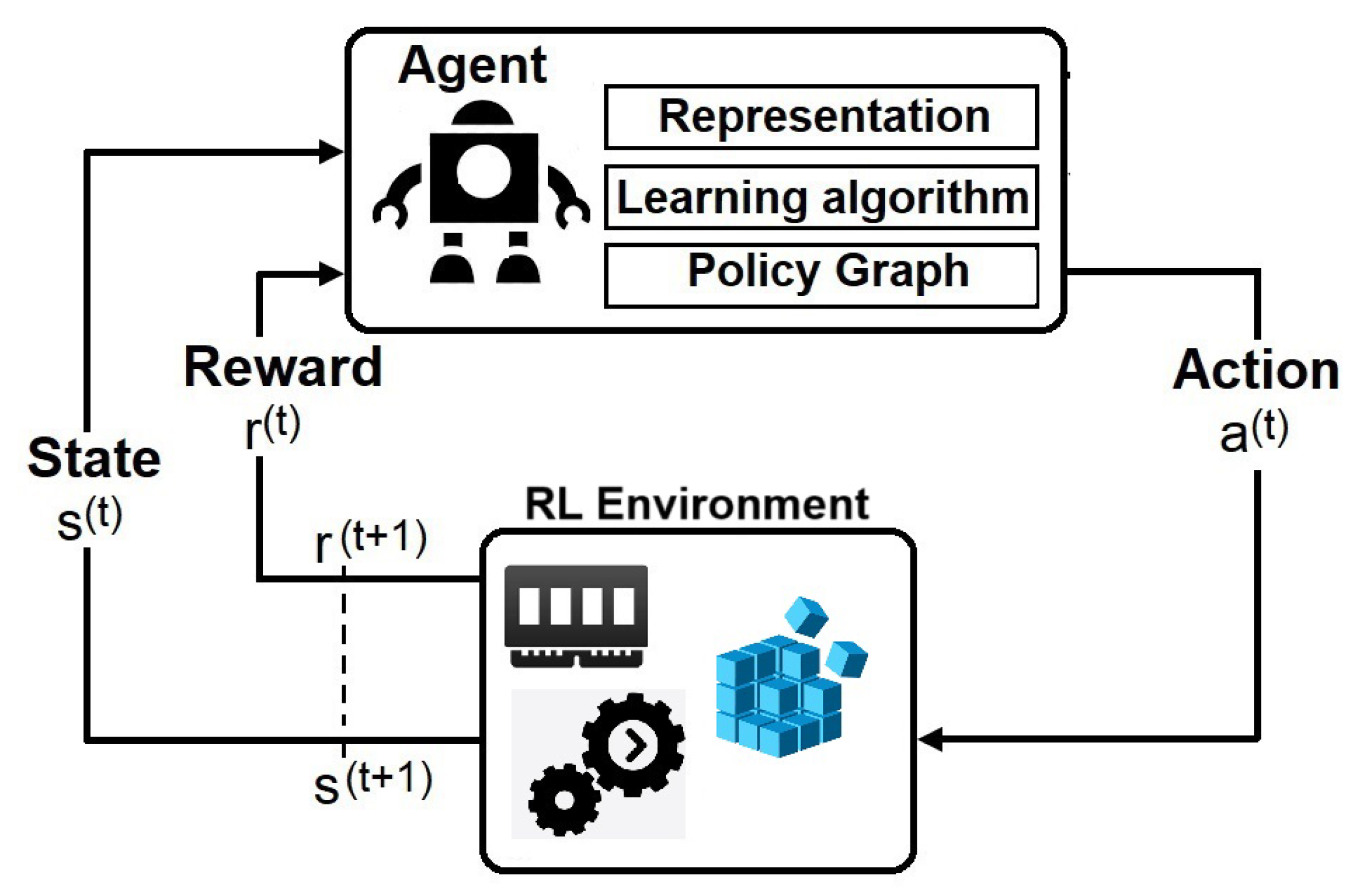

3.3. Reinforcement Learning

In this system, an RL agent interacts with its surroundings in a sequence of discrete time steps $t$: (1) the agent receives a representation of the environment (state); (2) it selects and executes an action; (3) it receives the reinforcement signal; (4) it updates its learning matrix; and (5) it observes the new state of the environment.

At each step $t$, the RL agent receives a representation of the environment's state, $S_t \in S$, and selects an action $A_t \in A$. As a consequence of its action, the agent then receives a reward $R_{t+1}$. RL is about learning a policy $\pi$ that maximises the cumulative reinforcement signal. A policy defines the agent's behaviour by mapping states to actions; the $\varepsilon$-greedy method is an example of an action selection policy adopted in RL. We seek an exact solution to our MDP problems and initially considered the following methods:

Value Iteration

Policy Iteration

Linear Programming
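The $\varepsilon$-greedy rule mentioned above can be sketched in a few lines. This is a generic illustration over a dictionary of action values, not the WinRegRL implementation; the action names in the example are hypothetical. With probability $\varepsilon$ the agent explores a uniformly random action; otherwise it exploits the action with the highest estimated value.

```python
import random
from typing import Dict, Optional


def epsilon_greedy(q_values: Dict[str, float], epsilon: float,
                   rng: Optional[random.Random] = None) -> str:
    """Pick a random action with probability epsilon, else a greedy one.

    Ties between equally valued greedy actions are broken uniformly at random.
    """
    if not q_values:
        raise ValueError("no actions available")
    rng = rng or random.Random()
    actions = list(q_values)
    if rng.random() < epsilon:
        return rng.choice(actions)          # explore
    best = max(q_values.values())
    greedy = [a for a in actions if q_values[a] == best]
    return rng.choice(greedy)               # exploit


q = {"query_usbstor": 4.2, "dump_memory": 1.7, "parse_jumplists": 0.3}
print(epsilon_greedy(q, epsilon=0.1, rng=random.Random(0)))
```

Passing a seeded `random.Random` instance keeps experiments reproducible, which matters when comparing runs across machines with identical configurations.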

3.3.1. Value Iteration and Action-Value (Q) Function

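We denote the expected return for state-action pairs by the action-value function in Equation (6):

$$Q^{\pi}(s,a) = \mathbb{E}_{\pi}\left[G_t \mid S_t = s, A_t = a\right] \qquad (6)$$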

3.3.2. Optimal Decision Policy

The WinRegRL optimal action-value function, from which the optimal decision policy is derived, is formulated as follows:

$$Q^{*}(s,a) = \max_{\pi} Q^{\pi}(s,a) \qquad (7)$$

We can then redefine $V^{*}$ (Equation (3)) using $Q^{*}$ (Equation (7)):

$$V^{*}(s) = \max_{a \in A} Q^{*}(s,a)$$

This equation conveys that the value of a state under the optimal policy $\pi^{*}$ must equal the expected return from the best action taken in that state. Rather than waiting for $V(s)$ to converge, we can perform policy improvement and a truncated policy evaluation step in a single operation [28].

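Algorithm 1 WinRegRL Value Iteration

Require: state space $S$, action space $A$, transition probability $P(s' \mid s,a)$, reward function $R(s,a,s')$, discount factor $\gamma$, small threshold $\theta > 0$

Ensure: optimal value function $V^{*}$ and deterministic policy $\pi^{*}$

- 1: Initialization:
- 2: $V(s) \leftarrow 0$ for all $s \in S$ ▹ Initialize value function
- 3: repeat
- 4: $\Delta \leftarrow 0$
- 5: for all $s \in S$ do
- 6: $v \leftarrow V(s)$ ▹ Store current value of $s$
- 7: $V(s) \leftarrow \max_{a \in A} \sum_{s'} P(s' \mid s,a)\left[R(s,a,s') + \gamma V(s')\right]$
- 8: $\Delta \leftarrow \max\left(\Delta, |v - V(s)|\right)$ ▹ Update the maximum change
- 9: end for
- 10: until $\Delta < \theta$ ▹ Check for convergence
- 11: Extract Policy:
- 12: for all $s \in S$ do
- 13: $\pi(s) \leftarrow \arg\max_{a \in A} \sum_{s'} P(s' \mid s,a)\left[R(s,a,s') + \gamma V(s')\right]$
- 14: end for
- 15: Output: deterministic policy $\pi \approx \pi^{*}$ and value function $V \approx V^{*}$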
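Algorithm 1 can be sketched in plain Python as follows. The transition encoding `P[s][a] = [(next_state, probability, reward), ...]` and the three-state example MDP are hypothetical choices made for this illustration; terminal states are represented by an empty action dictionary and keep a value of zero. As in the algorithm, values are updated in place during each sweep.

```python
from typing import Dict, List, Tuple

Transitions = Dict[str, Dict[str, List[Tuple[str, float, float]]]]


def value_iteration(P: Transitions, gamma: float = 0.9, theta: float = 1e-8):
    """Return (V, policy) following Algorithm 1; terminal states have no actions."""
    V = {s: 0.0 for s in P}                       # initialise value function

    def q(s: str, a: str) -> float:
        # One-step lookahead: sum_{s'} P(s'|s,a) [R(s,a,s') + gamma V(s')]
        return sum(p * (r + gamma * V[s2]) for s2, p, r in P[s][a])

    while True:
        delta = 0.0
        for s in P:
            if not P[s]:
                continue                          # terminal state keeps V = 0
            v = V[s]                              # store current value of s
            V[s] = max(q(s, a) for a in P[s])
            delta = max(delta, abs(v - V[s]))     # track the maximum change
        if delta < theta:                         # convergence check
            break
    # Extract the deterministic greedy policy from the converged values.
    policy = {s: max(P[s], key=lambda a: q(s, a)) for s in P if P[s]}
    return V, policy


P = {
    "start": {
        "query_registry": [("evidence", 0.7, 1.0), ("start", 0.3, -1.0)],
        "wait": [("start", 1.0, 0.0)],
    },
    "evidence": {"report": [("done", 1.0, 10.0)]},
    "done": {},
}
V, policy = value_iteration(P)
print(policy)
```

In this toy case, querying the Registry is preferred over waiting because its expected discounted return, $6.7 / 0.73 \approx 9.18$, exceeds the $0.9 \times 9.18$ obtained by postponing the query by one step.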

Monte Carlo (MC) is a model-free method, meaning it does not require complete knowledge of the environment. It relies on averaging sample returns for each state-action pair [28]. The fundamental MC algorithm is outlined in Figure 4.
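Because the algorithm in Figure 4 is not reproduced in the text, the following is a generic first-visit Monte Carlo prediction sketch rather than the authors' implementation. It assumes each episode is recorded as a list of `(state, reward)` pairs, where the reward is the one received after leaving that state; the episodes themselves are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Episode = List[Tuple[str, float]]  # (state S_t, reward R_{t+1}) pairs


def first_visit_mc(episodes: List[Episode], gamma: float = 1.0) -> Dict[str, float]:
    """Estimate V(s) by averaging returns from the first visit to s in each episode."""
    returns: Dict[str, List[float]] = defaultdict(list)
    for episode in episodes:
        # Compute G_t for every t by sweeping the episode backwards.
        g = 0.0
        g_at = [0.0] * len(episode)
        for t in range(len(episode) - 1, -1, -1):
            g = episode[t][1] + gamma * g
            g_at[t] = g
        seen = set()
        for t, (state, _) in enumerate(episode):
            if state not in seen:            # only the first visit counts
                seen.add(state)
                returns[state].append(g_at[t])
    return {s: sum(gs) / len(gs) for s, gs in returns.items()}


episodes = [
    [("start", -1.0), ("start", -1.0), ("usb_found", 10.0)],
    [("start", -1.0), ("usb_found", 10.0)],
]
print(first_visit_mc(episodes))
```

No transition probabilities are needed: the estimate comes entirely from sampled returns, which is what makes MC model-free.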

3.3.3. Bellman Equation

An important recursive property emerges for both the value function (Equation (2)) and the Q function (Equation (6)) if we expand them [51].

3.3.4. Value Function

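$$V^{\pi}(s) = \sum_{a \in A} \pi(a \mid s) \sum_{s'} P(s' \mid s, a)\left[R(s,a,s') + \gamma V^{\pi}(s')\right]$$

Similarly, we can do the same for the Q function:

$$Q^{\pi}(s,a) = \sum_{s'} P(s' \mid s, a)\left[R(s,a,s') + \gamma \sum_{a' \in A} \pi(a' \mid s') Q^{\pi}(s',a')\right]$$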
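For a fixed deterministic policy $\pi$, the Bellman expectation equation for $V^{\pi}$ is a linear system, $(I - \gamma P_\pi) V^{\pi} = R_\pi$, where $P_\pi[s][s'] = P(s' \mid s, \pi(s))$ and $R_\pi[s] = \sum_{s'} P(s' \mid s, \pi(s)) R(s, \pi(s), s')$. The sketch below solves that system exactly with Gauss-Jordan elimination in pure Python. It is a generic illustration with a hypothetical two-state chain, not part of the WinRegRL code.

```python
from typing import List


def evaluate_policy_exact(P_pi: List[List[float]], R_pi: List[float],
                          gamma: float) -> List[float]:
    """Solve (I - gamma * P_pi) V = R_pi by Gauss-Jordan elimination.

    P_pi[i][j]: probability of moving from state i to j under the policy.
    R_pi[i]:    expected immediate reward in state i under the policy.
    With gamma < 1 and a stochastic P_pi the system is non-singular.
    """
    n = len(R_pi)
    # Build the augmented matrix [I - gamma * P_pi | R_pi].
    M = [[(1.0 if i == j else 0.0) - gamma * P_pi[i][j] for j in range(n)] + [R_pi[i]]
         for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))  # partial pivoting
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        M[col], M[pivot] = M[pivot], M[col]
        scale = M[col][col]
        M[col] = [x / scale for x in M[col]]
        for r in range(n):
            if r != col:
                factor = M[r][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    return [M[i][n] for i in range(n)]


# Hypothetical chain: state 0 moves to 1 (reward 1); state 1 stays put (reward 2).
V = evaluate_policy_exact([[0.0, 1.0], [0.0, 1.0]], [1.0, 2.0], gamma=0.5)
print(V)
```

Here $V(1) = 2 + 0.5\,V(1)$ gives $V(1) = 4$, and $V(0) = 1 + 0.5 \times 4 = 3$, which is exactly what the recursive Bellman form predicts.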

3.3.5. Policy Iteration

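We can now find the optimal policy by acting greedily with respect to the optimal action-value function:

$$\pi^{*}(s) = \arg\max_{a \in A} Q^{*}(s,a)$$

Policy iteration reaches this policy by alternating policy evaluation and greedy policy improvement until the policy no longer changes, as detailed in Algorithm 2.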

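Algorithm 2 WinRegRL Policy Iteration

Require: state space $S$, action space $A$, transition probability $P(s' \mid s,a)$, reward function $R(s,a,s')$, discount factor $\gamma$, small threshold $\theta > 0$

Ensure: optimal value function $V^{*}$ and policy $\pi^{*}$

- 1: Initialization:
- 2: $V(s) \leftarrow 0$ for all $s \in S$ ▹ Initialize value function
- 3: $\pi(s) \leftarrow$ an arbitrary $a \in A$ for all $s \in S$ ▹ Initialize arbitrary policy
- 4: Policy Evaluation:
- 5: repeat
- 6: $\Delta \leftarrow 0$
- 7: for all $s \in S$ do
- 8: $v \leftarrow V(s)$
- 9: $V(s) \leftarrow \sum_{s'} P(s' \mid s,\pi(s))\left[R(s,\pi(s),s') + \gamma V(s')\right]$
- 10: $\Delta \leftarrow \max\left(\Delta, |v - V(s)|\right)$
- 11: end for
- 12: until $\Delta < \theta$ ▹ Repeat until value function converges
- 13: Policy Improvement:
- 14: $\textit{policy-stable} \leftarrow \text{true}$
- 15: for all $s \in S$ do
- 16: $a_{\text{old}} \leftarrow \pi(s)$
- 17: $\pi(s) \leftarrow \arg\max_{a \in A} \sum_{s'} P(s' \mid s,a)\left[R(s,a,s') + \gamma V(s')\right]$
- 18: if $a_{\text{old}} \neq \pi(s)$ then
- 19: $\textit{policy-stable} \leftarrow \text{false}$
- 20: end if
- 21: end for
- 22: if $\textit{policy-stable}$ then
- 23: return $V \approx V^{*}$ and $\pi \approx \pi^{*}$
- 24: else
- 25: go to Policy Evaluation
- 26: end if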
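Algorithm 2 translates into the following pure-Python sketch. It reuses the hypothetical encoding `P[s][a] = [(next_state, probability, reward), ...]` and a toy MDP whose first-listed action (`wait`) is deliberately suboptimal, so that the improvement step has work to do. One common safeguard is added: the policy only switches action on a strict improvement, which prevents endless oscillation between equally good actions.

```python
from typing import Dict, List, Tuple

Transitions = Dict[str, Dict[str, List[Tuple[str, float, float]]]]


def policy_iteration(P: Transitions, gamma: float = 0.9, theta: float = 1e-8):
    """Return (V, policy) following Algorithm 2; terminal states have no actions."""
    V = {s: 0.0 for s in P}
    policy = {s: next(iter(P[s])) for s in P if P[s]}   # arbitrary initial policy

    def q(s: str, a: str) -> float:
        return sum(p * (r + gamma * V[s2]) for s2, p, r in P[s][a])

    while True:
        # Policy evaluation: sweep until the value function stops changing.
        while True:
            delta = 0.0
            for s, a in policy.items():
                v = V[s]
                V[s] = q(s, a)
                delta = max(delta, abs(v - V[s]))
            if delta < theta:
                break
        # Policy improvement: act greedily with respect to the current V.
        stable = True
        for s in policy:
            old = policy[s]
            best = max(P[s], key=lambda a: q(s, a))
            if q(s, best) > q(s, old) + 1e-12:   # switch only on strict improvement
                policy[s] = best
                stable = False
        if stable:
            return V, policy


P = {
    "start": {
        "wait": [("start", 1.0, 0.0)],
        "query_registry": [("evidence", 0.7, 1.0), ("start", 0.3, -1.0)],
    },
    "evidence": {"report": [("done", 1.0, 10.0)]},
    "done": {},
}
V, policy = policy_iteration(P)
print(policy)
```

On this example, policy iteration converges to the same policy and values as value iteration, typically in very few improvement rounds, at the cost of a full evaluation per round.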

3.4. WinRegRL Framework Design and Implementation

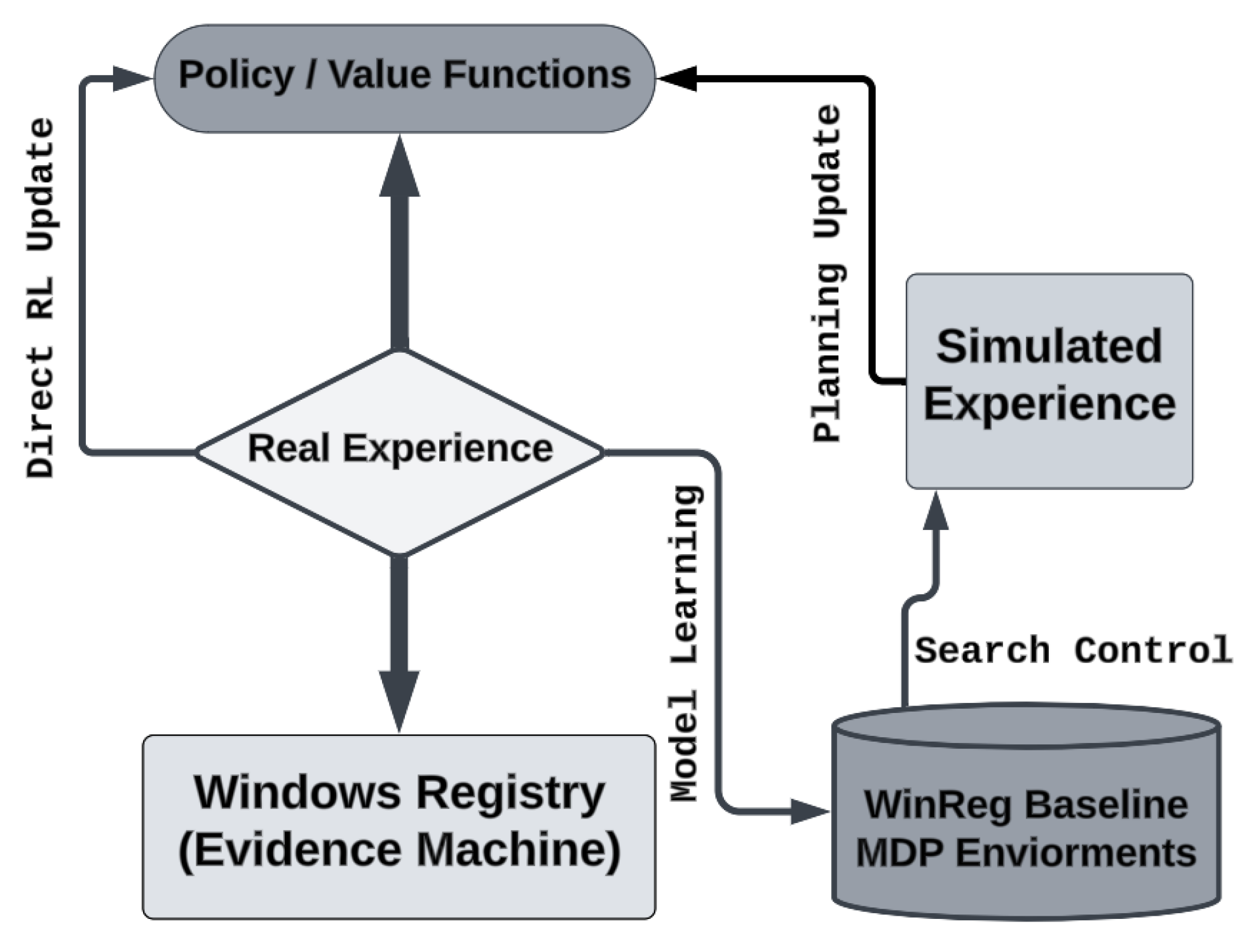

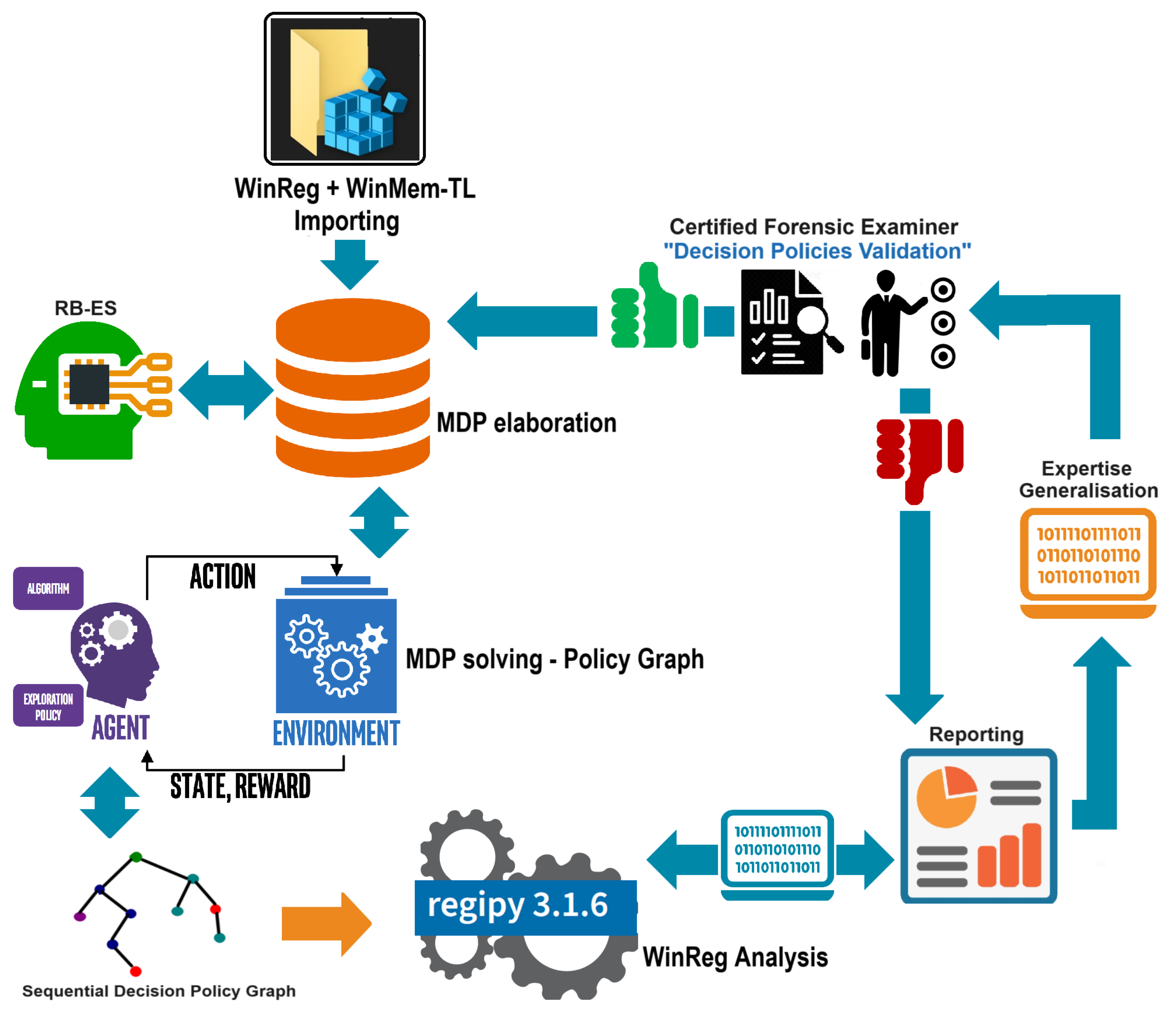

The proposed WinRegRL framework, illustrated in Figure 5, integrates data from the Windows Registry and volatile memory into a decision-making system that models these data as MDP environments. It also relies on a Rule-Based Expert System (RBES) and other machine learning algorithms during the elaboration of the MDP environments. It enables forensic examiners to validate decisions, identify patterns in fraudulent or malicious activities, and generate actionable reports.

Figure 5 provides a detailed diagram of how WinRegRL functions, covering Windows Registry hive and volatile data parsing, MDP environment elaboration, RL and RBES operation, and validation by the human expert (GCFE). The diagram considers only Windows Registry (WinReg) analysis and volatile memory timeline (WinMem-TL) data for elaborating MDP environments. The framework highlights an iterative decision-making process that combines expert knowledge with reinforcement learning and automated forensic tools to enhance the analysis and detection of fraudulent or anomalous activities in a Windows environment.

The modular breakdown of the WinRegRL framework is as follows:

WinReg + WinMem-TL Importing: The process begins by importing Windows Registry data and memory timelines (WinMem-TL).

MDP Elaboration: The imported data is transformed into a Markov Decision Process (MDP) environment following the structure defined in Equation (1).

MDP Solver: The out-of-the-box MATLAB MDP toolbox contains various algorithms for solving discrete-time Markov Decision Processes: backward induction, value iteration, policy iteration, and linear programming [19]. In the analysis of the Windows Registry, an MDP agent (shown in Figure 5 as a purple head icon labelled AGENT) interacts with the Registry environment, receiving state observations and reward signals to improve its decision policies [21]. The RL agent therefore searches systematically through Registry keys and values, identifying patterns and anomalies in a feedback-driven cycle of analysis. This interaction constructs a Sequential Decision Policy Graph that visualises the possible actions and decision paths specific to Registry analysis. An off-the-shelf MDP solver is then applied to find optimal policies, dynamically focusing on Registry artefacts according to their reward importance. This methodology can expose hidden malicious behaviours or misconfigurations in the Windows Registry, yielding insights that are often overlooked by traditional static analysis or manual investigation techniques. The algorithm is detailed in Section 3.7.
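Of the solver algorithms listed above, backward induction is the simplest to show in isolation: for a finite horizon it sweeps backwards in time, computing the best action at each step from the values of the following step. The sketch below is a generic stdlib implementation, not the MATLAB toolbox. The array layout (`P[a][s][s2]` for transitions, `R[s][a]` for expected immediate rewards) and the two-state example are assumptions chosen for illustration.

```python
from typing import List, Tuple


def backward_induction(P: List[List[List[float]]], R: List[List[float]],
                       horizon: int, gamma: float = 1.0
                       ) -> Tuple[List[List[float]], List[List[int]]]:
    """Finite-horizon backward induction.

    P[a][s][s2]: probability of s -> s2 under action a.
    R[s][a]:     expected immediate reward for taking a in s.
    Returns V[t][s] for t = 0..horizon and a time-dependent policy pi[t][s].
    """
    n_actions, n_states = len(P), len(R)
    V = [[0.0] * n_states for _ in range(horizon + 1)]   # V[horizon] = 0
    pi = [[0] * n_states for _ in range(horizon)]
    for t in range(horizon - 1, -1, -1):                  # sweep backwards in time
        for s in range(n_states):
            q = [R[s][a] + gamma * sum(P[a][s][s2] * V[t + 1][s2]
                                       for s2 in range(n_states))
                 for a in range(n_actions)]
            best = max(range(n_actions), key=q.__getitem__)
            pi[t][s] = best
            V[t][s] = q[best]
    return V, pi


# Hypothetical example: state 0 = artefact not yet located, state 1 = located.
# Action 0 = skip (stay put), action 1 = examine.
P = [
    [[1.0, 0.0], [0.0, 1.0]],   # skip: remain in the current state
    [[0.0, 1.0], [0.0, 1.0]],   # examine: always ends in state 1
]
R = [[0.0, -1.0],   # state 0: examining costs 1
     [0.0, 5.0]]    # state 1: examining the located artefact yields 5
V, pi = backward_induction(P, R, horizon=2)
print(V[0], pi)
```

The resulting policy is time-dependent: with one step left, paying to examine from state 0 is not worthwhile, whereas with two steps left it is, because the cost is recovered at the next step.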

3.5. Expertise Capturing and Generalisation

This module consists of a Rule-Based Expert System (RBES) with an online integrator that interacts with the RL MDP solver, applying expert knowledge or predefined rules to assist in elaborating decision policies based on the imported data [47].
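The RBES is implemented with the Pyke Python Knowledge Engine, whose rule syntax is not reproduced here. As a library-free stand-in, the following sketch shows the general idea of forward chaining: expert rules derive new facts from known facts about the evidence, including recommended next actions that could be passed to the MDP solver as policy hints. All rule, fact and action names are hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule fires when all its premises are known facts, adding its conclusion.
Rule = Tuple[str, FrozenSet[str], str]  # (name, premises, conclusion)

RULES: List[Rule] = [
    ("usb_seen", frozenset({"usbstor_key_present"}), "usb_device_connected"),
    ("usb_then_lnk", frozenset({"usb_device_connected", "lnk_points_to_removable"}),
     "possible_exfiltration"),
    ("recommend_timeline", frozenset({"possible_exfiltration"}),
     "action:correlate_timeline"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no new fact can be derived (a fixed point)."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for _name, premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"usbstor_key_present", "lnk_points_to_removable"}, RULES)
hints = sorted(f for f in derived if f.startswith("action:"))
print(hints)
```

Keeping expert knowledge as explicit rules means each recommended action can be traced back to the rules that produced it, which supports the human validation step.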

3.5.1. Expertise Generalisation

If the generated policies are inadequate, they undergo further Expertise Generalisation, where more complex or nuanced decision-making logic is applied to better handle fraud detection or anomaly identification.

3.5.2. Reporting and Analysis

The validated results are compiled into a report for decision-making or further investigation. The process feeds back into WinReg Analysis using Regipy 3.1.6, a tool for forensic analysis of Windows Registry hives, ensuring continual refinement and improvement of the decision-making model.

3.6. Human Expert Validation module

The generated decision policies are validated by a Certified Forensic Examiner holding at least GIAC GCFE or GCFA to ensure that the automatically generated decision policies are correct and appropriate. If the policies are valid, they receive a "thumbs up"; if not, further generalisation or adjustments are needed (denoted by the "thumbs down" icon).

3.7. WinRegRL-Core

WinRegRL-Core, shown in Algorithm 3, is the core reinforcement learning algorithm, in which the RL agent is trained on an MDP model created from the available Windows Registry data. The algorithm first initialises the policy, value function, and generator with random values, then updates the agent's policy at each iteration based on the computed state-action values, using either policy iteration or value iteration. Policy iteration directly updates the policy by choosing the actions with maximum Q-values, while value iteration iteratively refines a value function $V(s)$. Q-learning is used so that the RL agent can explore the environment and update a Q-table while following the $\varepsilon$-greedy approach to balance exploration and exploitation. WinRegRL-Core incorporates progressively improved iterations of the critic (value function) and the generator (policy), refining the policy towards selecting the actions that maximise cumulative reward. Convergence is verified by checking whether the difference between the predicted and target policies is below a specified threshold, which indicates that the optimal policy has been reached. The final trained generator and critic represent the optimised policy and value function, respectively, used for decision-making within the MDP environment.
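The Q-learning branch of Algorithm 3 can be illustrated with the tabular sketch below. It runs against a tiny hypothetical simulator (a three-state chain in which `ADVANCE` moves towards a key artefact and `SKIP` wastes a step) rather than real Registry data, and it omits the Critic/Generator training. The environment, rewards and hyperparameters are illustrative assumptions.

```python
import random
from typing import List, Tuple

N_STATES, TERMINAL = 3, 2
SKIP, ADVANCE = 0, 1


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Hypothetical deterministic simulator: ADVANCE moves towards the artefact."""
    if action == ADVANCE:
        nxt = state + 1
        reward = 10.0 if nxt == TERMINAL else 0.0
        return nxt, reward, nxt == TERMINAL
    return state, -1.0, False  # SKIP wastes a step


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, max_steps: int = 50,
               seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            # epsilon-greedy action selection with random tie-breaking
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(Q[s])
                a = rng.choice([i for i in range(2) if Q[s][i] == best])
            s2, r, done = step(s, a)
            # Off-policy TD target; no bootstrapping past the terminal state.
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])   # Q-learning update
            s = s2
            if done:
                break
    return Q


Q = q_learning()
greedy = [max(range(2), key=Q[s].__getitem__) for s in range(TERMINAL)]
print(greedy)
```

The learned values approach $Q(1, \text{ADVANCE}) = 10$ and $Q(0, \text{ADVANCE}) = 0.9 \times 10 = 9$, so the greedy policy advances in both non-terminal states.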

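For non-stationary problems, the Monte Carlo estimate of, for example, $V$ is updated with a constant step size:

$$V(S_t) \leftarrow V(S_t) + \alpha\left[G_t - V(S_t)\right]$$

where $\alpha$ is the learning rate, which controls how quickly past experiences are forgotten.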
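The constant-step-size update above is a one-liner; the sketch below applies it to a stream of observed returns to show how a larger $\alpha$ weights recent episodes more heavily. The return values are illustrative numbers for a state whose usefulness drops partway through the stream.

```python
from typing import Iterable


def constant_alpha_mc(returns: Iterable[float], alpha: float, v0: float = 0.0) -> float:
    """Apply V <- V + alpha * (G - V) for each observed return G, in order."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must lie in (0, 1]")
    v = v0
    for g in returns:
        v += alpha * (g - v)
    return v


# Returns for one state drop from 10 to 2 (e.g. an artefact that became less useful).
stream = [10.0, 10.0, 10.0, 2.0, 2.0]
print(constant_alpha_mc(stream, alpha=0.5), constant_alpha_mc(stream, alpha=0.1))
```

With $\alpha = 0.5$ the estimate ends at 3.6875, already close to the new level of 2, whereas $\alpha = 0.1$ only reaches about 2.58 after rising from zero more slowly; the choice of $\alpha$ trades responsiveness against noise.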

Algorithm 3 WinRegRL-Core

Require: MDP $\mathcal{M} = (S, A, P, R, \gamma)$, where $S$ is the set of states derived from Windows Registry data, $A$ is the set of actions, $P$ is the transition probability matrix, $R$ is the reward function, and $\gamma$ is the discount factor; local iterations $I$, learning rate for the Critic $\alpha_C$, learning rate for the Generator $\alpha_G$, gradient penalty $\lambda$

Ensure: trained Critic and Generator for the optimal policy

- 1: Initialize Generator (policy) with random weights
- 2: Initialize Critic (value function) $V(s)$ for each $s \in S$
- 3: Initialize policy $\pi(s)$ arbitrarily for each $s \in S$
- 4: for iteration $i = 1$ to $I$ do
- 5: while MDP not converged do
- 6: for each state $s \in S$ do
- 7: for each action $a \in A$ do
- 8: Compute Bellman update for expected reward: $Q(s,a) \leftarrow \sum_{s'} P(s' \mid s,a)\left[R(s,a,s') + \gamma V(s')\right]$
- 9: end for
- 10: Update policy with max Q-value: $\pi(s) \leftarrow \arg\max_{a \in A} Q(s,a)$
- 11: Update value: $V(s) \leftarrow \max_{a \in A} Q(s,a)$
- 12: end for
- 13: if using Policy Iteration then
- 14: Check for stability of $\pi$; if stable, break
- 15: else if using Value Iteration then
- 16: Check convergence in $V$; break if changes are below threshold
- 17: end if
- 18: end while
- 19: if Q-Learning is needed then
- 20: Initialize Q-table $Q(s,a)$ for all $(s,a) \in S \times A$
- 21: for each episode until convergence do
- 22: Observe initial state $s$
- 23: while episode not done do
- 24: Choose action $a$ using an $\varepsilon$-greedy policy based on $Q$
- 25: Execute action $a$, observe reward $r$ and next state $s'$
- 26: Update $Q(s,a) \leftarrow Q(s,a) + \alpha\left[r + \gamma \max_{a'} Q(s',a') - Q(s,a)\right]$
- 27: $s \leftarrow s'$
- 28: end while
- 29: end for
- 30: end if
- 31: Train Critic to update value function
- 32: Train Generator to optimize policy using updated critic values
- 33: if the target policy $\pi^{*}$ has converged then break
- 34: end for
- 35: return trained Generator and Critic for the optimized policy

3.8. PGGenExpert-Core

PGGenExpert, the proposed Expertise Extraction, Assessment and Generalisation algorithm (Algorithm 4), converts the WinRegRL policy graph into generalised policies that are independent of the specific case covered, for future use. It processes and corrects a state-action policy graph derived from reinforcement learning on Windows Registry data, taking as inputs the original dataset, a complementary volatile dataset, and an expert-corrected policy graph. The algorithm first creates a copy of the action space and iterates over each feature to ensure that actions remain within the valid range, correcting any out-of-bounds actions. Next, it addresses binary values by identifying incorrect actions that differ from the expected binary states and substituting them with expert-provided corrections. For each state-action pair, it then adjusts the action values so that only the highest-probability action remains non-zero, effectively refining the policy to select the most relevant action. Finally, the algorithm returns the corrected and generalised policy graph, providing an updated model of action transitions that aligns with expert knowledge and domain constraints.

Algorithm 4 GenExpert: Policy Graph Expertise Extraction, Assessment and Generalisation Algorithm

Require: original Windows Registry data $W$ with $n$ instances and $d$ features; complementary volatile data $Y$ with shape matching $W$; State-to-Action Policy Graph $Z$; expert-corrected general policy graph $C$

Ensure: updated transition probabilities in the MDP model based on corrected actions

- 1: procedure Correct_Action_State_PG_Mapping()
- 2: Copy of the action space: $Z' \leftarrow Z$
- 3: for each feature $j = 1, \dots, d$ do ▹ Handle missing and out-of-range actions
- 4: Bound the actions of feature $j$ in $Z'$ within the valid range ▹ Bound actions within valid range
- 5: end for
- 6: for each state-action vector in $Z'$ do ▹ Correct binary values
- 7: Identify actions that differ from the expected binary states ▹ Identify invalid actions
- 8: Replace them with the corresponding values from $C$ ▹ Replace with expert-corrected values
- 9: Set all but the highest value in the state-action vector to 0
- 10: end for
- 11: Extract the generalized policy graph based on the highest-value action
- 12: return the updated state-action policy graph $Z'$
- 13: end procedure
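The correction steps described for Algorithm 4 (bounding out-of-range actions, replacing invalid binary entries with the expert-corrected values, and keeping only the highest-valued action per state) can be sketched as follows. The representation is an assumption for illustration: the policy graph `Z` and the expert graph `C` are lists of per-state action-value vectors of the same shape, missing entries are `None` or NaN and are treated as the lower bound, and `binary_cols` (a hypothetical parameter) lists the action columns expected to hold only 0 or 1. This is not the authors' data structure.

```python
import math
from typing import List, Optional, Sequence, Set

Matrix = List[List[Optional[float]]]


def correct_policy_graph(Z: Matrix, C: Sequence[Sequence[float]],
                         binary_cols: Set[int], low: float = 0.0,
                         high: float = 1.0) -> List[List[float]]:
    """Hypothetical sketch of the GenExpert correction steps.

    Z: state-to-action policy graph, one row per state, one column per action.
    C: expert-corrected policy graph with the same shape as Z.
    binary_cols: indices of action columns expected to hold only 0 or 1.
    """
    corrected: List[List[float]] = []
    for z_row, c_row in zip(Z, C):
        row = []
        for j, value in enumerate(z_row):
            # Handle missing values (None/NaN) and bound actions within [low, high].
            if value is None or math.isnan(value):
                value = low
            value = min(max(value, low), high)
            # Replace invalid binary entries with the expert-corrected value.
            if j in binary_cols and value not in (0.0, 1.0):
                value = float(c_row[j])
            row.append(value)
        # Keep only the highest-valued action in this state (ties: first wins).
        best = max(range(len(row)), key=row.__getitem__)
        corrected.append([v if k == best else 0.0 for k, v in enumerate(row)])
    return corrected


Z = [[0.2, 1.4, None], [0.5, 0.1, 0.3]]
C = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
print(correct_policy_graph(Z, C, binary_cols={0}))
```

In the example, the out-of-range 1.4 is clipped to 1.0, the missing entry becomes 0, and the two non-binary values in column 0 are overwritten by the expert's 0.0 and 1.0 before each row is reduced to its single best action.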

5. Conclusion and Future Work

This paper introduces a novel RL-based approach to enhance the automation and data management of Windows Registry forensics and timeline analysis in volatile data investigation. We have shown that it is possible to streamline the analysis process, reducing both the time and effort required to identify relevant forensic artefacts while increasing accuracy and performance, which translates into more reliable and trusted output. Our proposed MDP model dynamically covers all Registry hives, volatile data and key values according to their relevance to the investigation, and has proven effective in a variety of simulated attack scenarios. The model's ability to adapt to different investigation contexts and learn from previous experiences represents a significant advancement in the field of cyber forensics. This research addresses gaps in existing methodologies by introducing a novel MDP model of the Windows environment, enabling efficient automation of the investigation process. It also reduces the waste of expert knowledge in practice, as optimal RL policies are captured and generalised, reducing investigation time by minimising MDP solving time. However, while the results are promising, several challenges remain. The effectiveness of the RL model relies heavily on the quality of the data and of the modelling, both of which vary considerably in real-world scenarios, where data can be sparse or unbalanced, notably for newer Windows versions. Ultimately, the application of RL to Windows Registry analysis holds significant promise for improving the speed and accuracy of cyber incident investigations, potentially leading to more timely and effective responses to cyber threats. As the cyber threat landscape continues to evolve, intelligent, automated tools such as the one proposed in this study will be crucial for staying ahead of adversaries and ensuring the integrity of digital investigations.

While RL offers robust solutions for cyber incident forensic analysis, challenges remain in implementing scalable models for Windows Registry analysis. Existing studies highlight the complexity of crafting accurate state-action representations and of addressing reward sparsity. Future work should refine the model to handle diverse and evolving threat landscapes more robustly [48]. This includes incorporating Large Language Models (LLMs) coupled with Retrieval-Augmented Generation (RAG) techniques to define the MDP environments from dynamic cyber incident response data, enhancing the model's generalisability, and ensuring its applicability across various versions of the Windows operating system.