1. Introduction

Image change detection is an important technology that compares remote sensing images of the same geographical area captured at different points in time. The changes in the surface or landscape identified from these differences can serve as a basis for real-time updates that provide better geographic information services. In recent years, this technology has been used in urban planning [1, 2], environmental monitoring [3], crop growth observation [4, 5], and disaster weather forecasting [6, 7]. In terms of the methods used, image change detection approaches fall mainly into three types: (i) supervised, (ii) unsupervised, and (iii) semi-supervised methods.

Supervised methods perform well when trained with a large amount of labeled data. Li et al. proposed a multi-scale feature fusion Siamese network [8]. By introducing a multi-scale fusion mechanism into the Siamese network, it effectively uses features from images at different scales to enhance detection. Additionally, Yu et al. proposed an image change detection method that greatly improves detection accuracy by using multi-level feature cross-fusion and three-dimensional (3D) convolutional neural networks [9]. These methods can identify areas of change in images. However, acquiring labeled data is costly, especially for high-resolution remote sensing images, where manual annotation is complex and time-consuming.

In contrast, unsupervised methods do not rely on labeled data for model training. Fang et al. proposed the Siamese nested fully convolutional network for change detection, which handles high-resolution remote sensing images through a densely connected Siamese network structure [10]. Subsequently, Jiang et al. developed an unsupervised remote sensing image processing framework by integrating the image generation capabilities of deep convolutional generative adversarial networks with the temporal analysis advantages of waveform neural networks [11]. Although these unsupervised methods reduce the dependence on labeled data, the lack of supervisory signals means that the models struggle to capture complex change patterns and are prone to noise interference. This leads to lower detection accuracy and makes it difficult to meet the needs of high-precision applications.

Semi-supervised methods combine the advantages of both: they enhance the model's detection capability by exploiting the information in unlabeled data when labeled data is insufficient. Shuai et al. constructed a superpixel-based multiscale Siamese graph attention network [12], which shows promising performance. Thereafter, Wang et al. proposed a method based on existing vector polygons that uses the latest imagery to improve detection accuracy [13]. In recent years, many semi-supervised methods based on consistency regularization have been proposed to facilitate learning from unlabeled data using teacher-student models or generative adversarial networks. For example, Jiang et al. proposed a sample expansion interpolation method based on consistency regularization to enhance the generalization ability of remote sensing change detection models by augmenting the influence of unlabeled data [14]. Although these methods alleviate the data scarcity problem in change detection to some extent and improve detection accuracy, blurred change edges and missed detection of very small targets remain among the main challenges in current research.

To address these issues, Yang et al. constructed a multi-scale concatenated graph attention network and demonstrated the potential of using different feature scales for change detection in a semi-supervised environment [15]. Lv et al., on the other hand, constructed a multi-scale attention network with feature bootstrapping and demonstrated the potential of using different feature scales for land-cover change detection in remote sensing images [16].

Because change regions vary in size, edges are blurred, and labeled data is scarce in remote sensing images, existing methods struggle to address these challenges simultaneously. This paper aims to improve change detection accuracy by combining semi-supervised methods with multi-scale feature fusion, alleviating the issues of blurred change edges and missed small targets when labeled data is limited. To this end, this paper proposes a semi-supervised remote sensing image change detection network based on consistency regularization for multi-scale feature fusion and reuse. The network mainly includes two parts: the supervised change detection module and the teacher-student consistency regularization module for semi-supervised learning. The main contributions of this paper are as follows.

(1) A semi-supervised image change detection network is proposed, which includes a supervised change detection module and a teacher-student consistency regularization module for semi-supervised learning. In the supervised part, the effective fusion and reuse of multi-scale features enhance the recognition of change areas, especially in the detection of small targets and edge details. Meanwhile, in the semi-supervised part, consistency regularization strengthens feature learning on unlabeled data, mainly by reducing the prediction differences between different perturbed versions of the same unlabeled sample to enhance the robustness of the model.

(2) In the supervised change detection module, a new multi-scale feature fusion global context module (GCM) and a feature fusion and reuse module (FFM) are added to alleviate the problems of unclear change edges and missed very small targets. The GCM enhances the detection capability for very small targets by integrating features from different levels, and the FFM improves the localization accuracy of edge change areas and reduces unclear change edges by fusing, refining, and reusing features from different levels.

(3) Extensive experiments were conducted on three typical datasets: the large-scale earth observation image dataset for building change detection (LEVIR-CD), the Wuhan University, China, building change detection dataset (WHU-CD), and the Google Earth imagery Guangzhou, China, change detection dataset (GoogleGZ-CD). We use three label ratios of 5%, 10%, and 20% to verify our model. The proposed model demonstrates significant performance improvements in both training and inference, highlighting the effectiveness and advantages of the method.

The rest of this paper is structured as follows. Section 2 elaborates on the principle of semi-supervised image change detection and the proposed detection network model. We introduce the multi-scale feature fusion and reuse network used in the supervised phase and discuss in depth the teacher-student consistency regularization method used for semi-supervised learning. Consistency regularization enhances the model's detection performance and generalization ability under limited labeled data, while the effective fusion and reuse of multi-scale features strengthen the recognition of changed areas, especially small targets and edge details. Section 3 verifies the effectiveness of the principles and technical solutions through extensive experiments. It includes a performance evaluation on the three public datasets LEVIR-CD, WHU-CD, and GoogleGZ-CD, a comparison with several advanced semi-supervised change detection models, and detailed ablation experiments that analyze the role of each module. Finally, the last section summarizes the research results and outlines prospects for future research.

2. Methodology

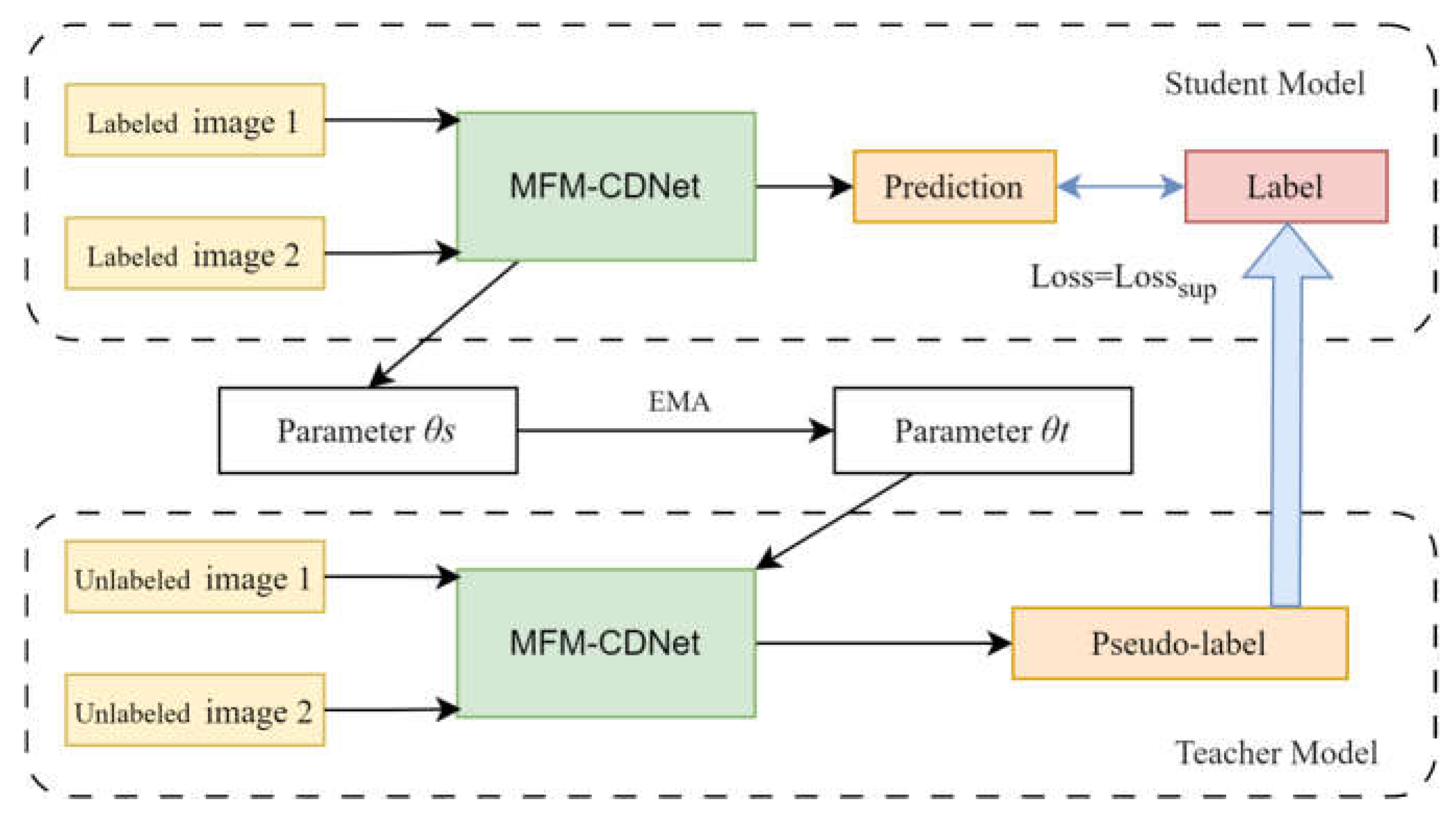

The semi-supervised remote sensing image change detection network proposed in this paper, based on consistency regularization for multi-scale feature fusion and reuse, consists of two main components: a change detection network for the supervised phase with multi-scale feature fusion and reuse, named MFM-CDNet, and a semi-supervised consistency regularization method based on the teacher-student model, which is used to update the parameters. The structure of MFM-CDNet is discussed in Section 2.1. The semi-supervised consistency regularization generates pseudo-labels for unlabeled data with the teacher model and adds a consistency loss and regularization terms when training the student model, so that the model's predictions for a sample and its perturbed versions remain consistent. This forces the model to learn a more general feature representation, and the model parameters are updated iteratively as performance improves. Initial parameters are first obtained by supervised learning on labeled data with MFM-CDNet. Then, for unlabeled data, the teacher-student model is trained iteratively for semi-supervised learning. The main idea is to use the teacher model to generate pseudo-labels for the student model, guiding the student model to learn the features of the unlabeled data and thus achieving semi-supervised learning. The quality of the pseudo-labels plays a key role in the performance of the final model, named SCMFM-CDNet. The overall architecture of the proposed model is shown in Figure 1.

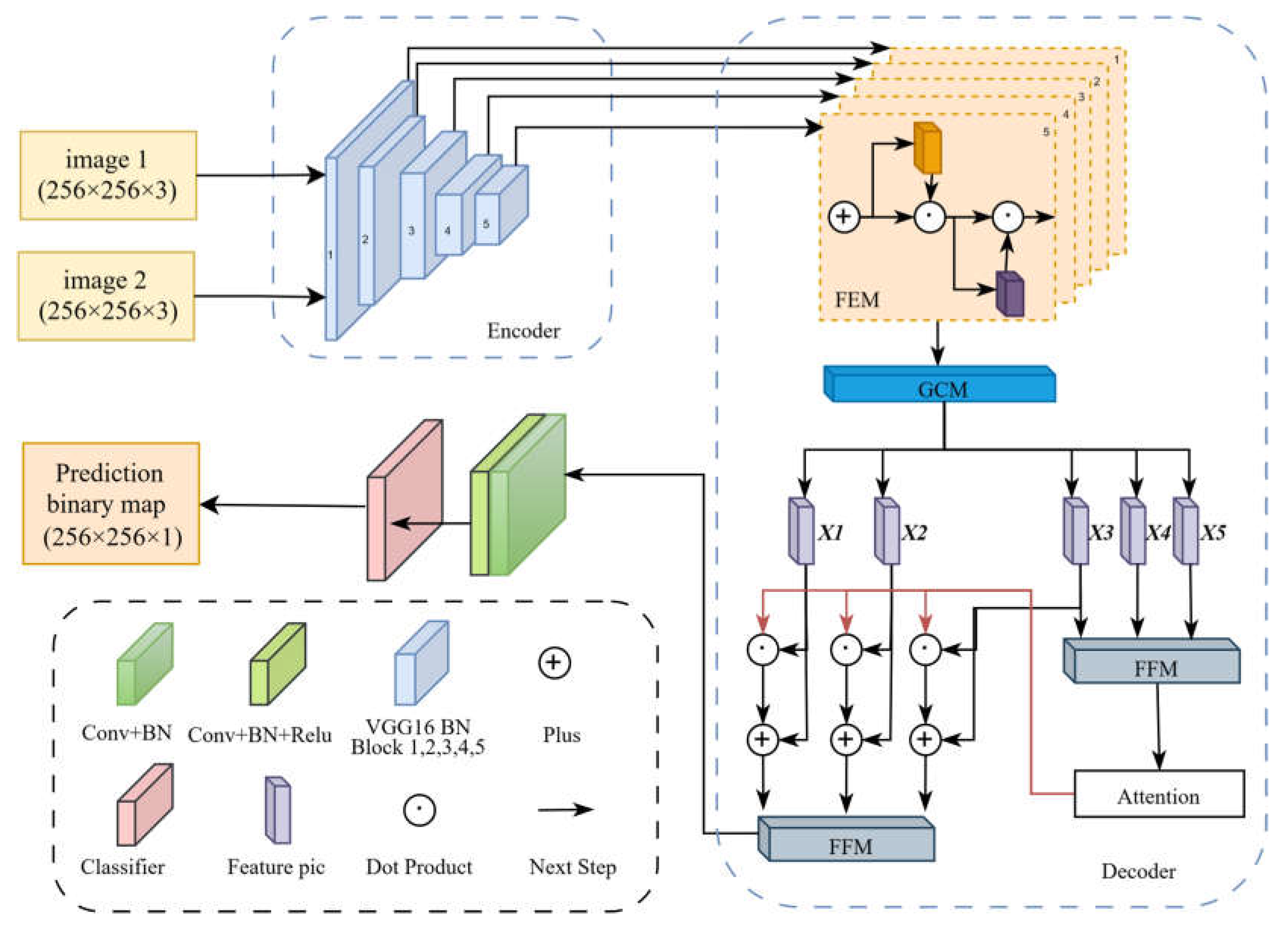

2.1. MFM-CDNet Structure

To analyze the proposed network, MFM-CDNet must be introduced first. As shown in Figure 2, it consists of an encoder and a decoder. The encoder uses the VGG-16 network as the backbone, employing five VGG-16 with batch normalization (VGG16_BN) blocks, which correspond to the operations of VGG16_BN at layers 0-5, 5-12, 12-22, 22-32, and 32-42. Features are extracted at different stages to achieve feature fusion from coarse to fine. In the decoder, the feature enhancement module (FEM), global context module (GCM), and feature fusion and reuse module (FFM) are used in combination.

(1) Feature Enhancement Module

This module primarily guides the attention by sequentially passing the feature maps through a channel attention module and a spatial attention module, enabling the model to accurately locate important areas in the image and significantly reduce computational complexity.

The channel attention module can highlight the most important feature channels in the image, enabling the model to obtain more refined and distinctive feature representations, and reducing the computational load on unimportant features. The channel attention mechanism mainly addresses the question of ‘what the network should focus on’. The spatial attention module allows the network to focus on key areas within the image, primarily addressing the question of ‘where the network should concentrate’.

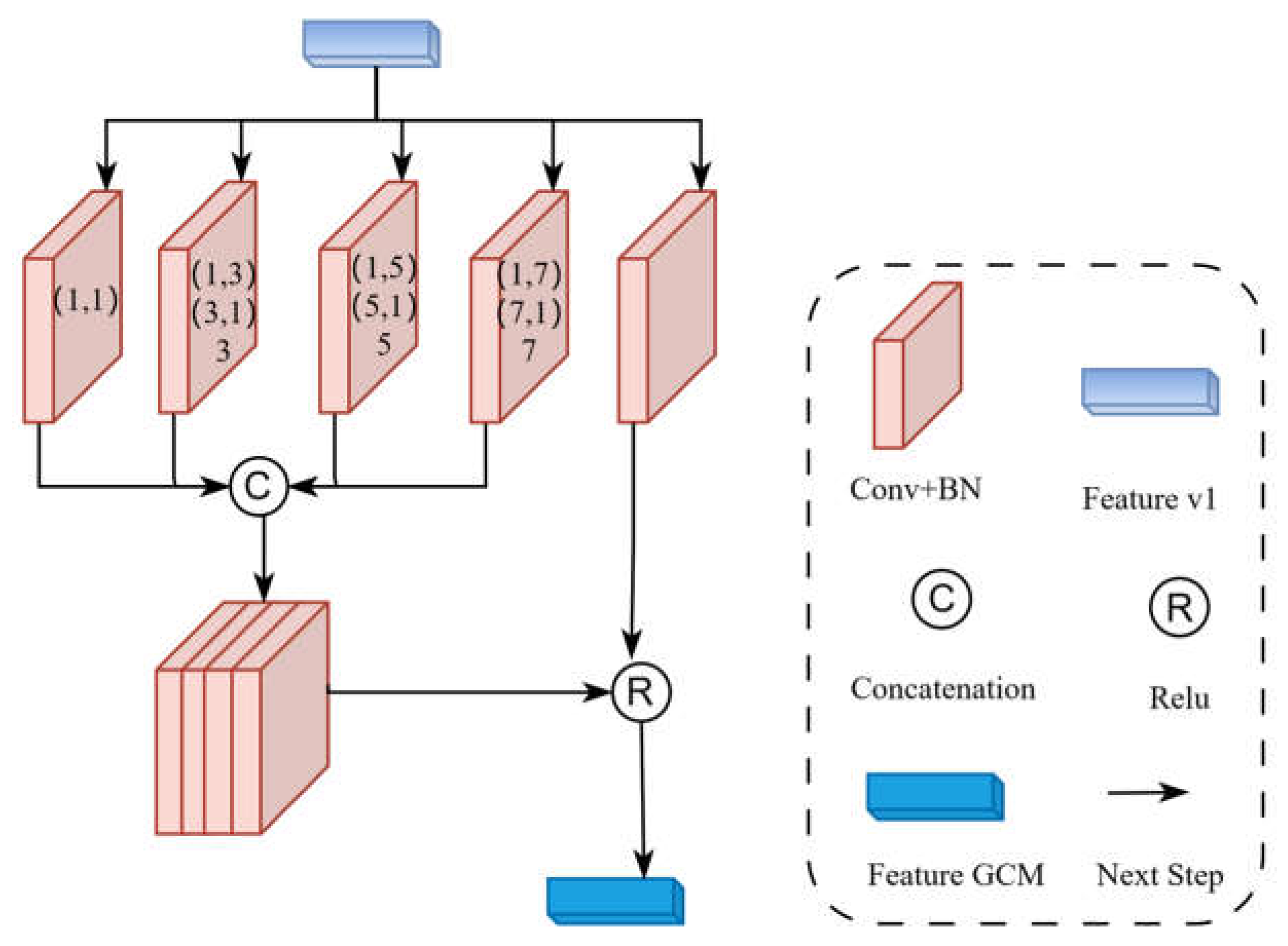

(2) Global Context Module

This module integrates multi-scale contextual information from the image to enhance the expressive power of the features. In this way, the model can not only capture local details but also integrate features from different levels, which improves the detection of extremely small targets.

It consists of four parts. The first part is a 2D convolutional unit, while the second to fourth parts each contain four 2D convolutional units. Each 2D convolutional unit is composed of a convolutional layer and a batch normalization layer, but the units have different kernel sizes and dilation factors. The outputs of the four parts are horizontally concatenated and then, together with the output of the feature map obtained directly through a 2D convolution operation, passed into the Rectified Linear Unit (ReLU) activation function, ultimately yielding a feature-enhanced map that contains multi-scale contextual information. The detailed architecture of the GCM is shown in Figure 3.

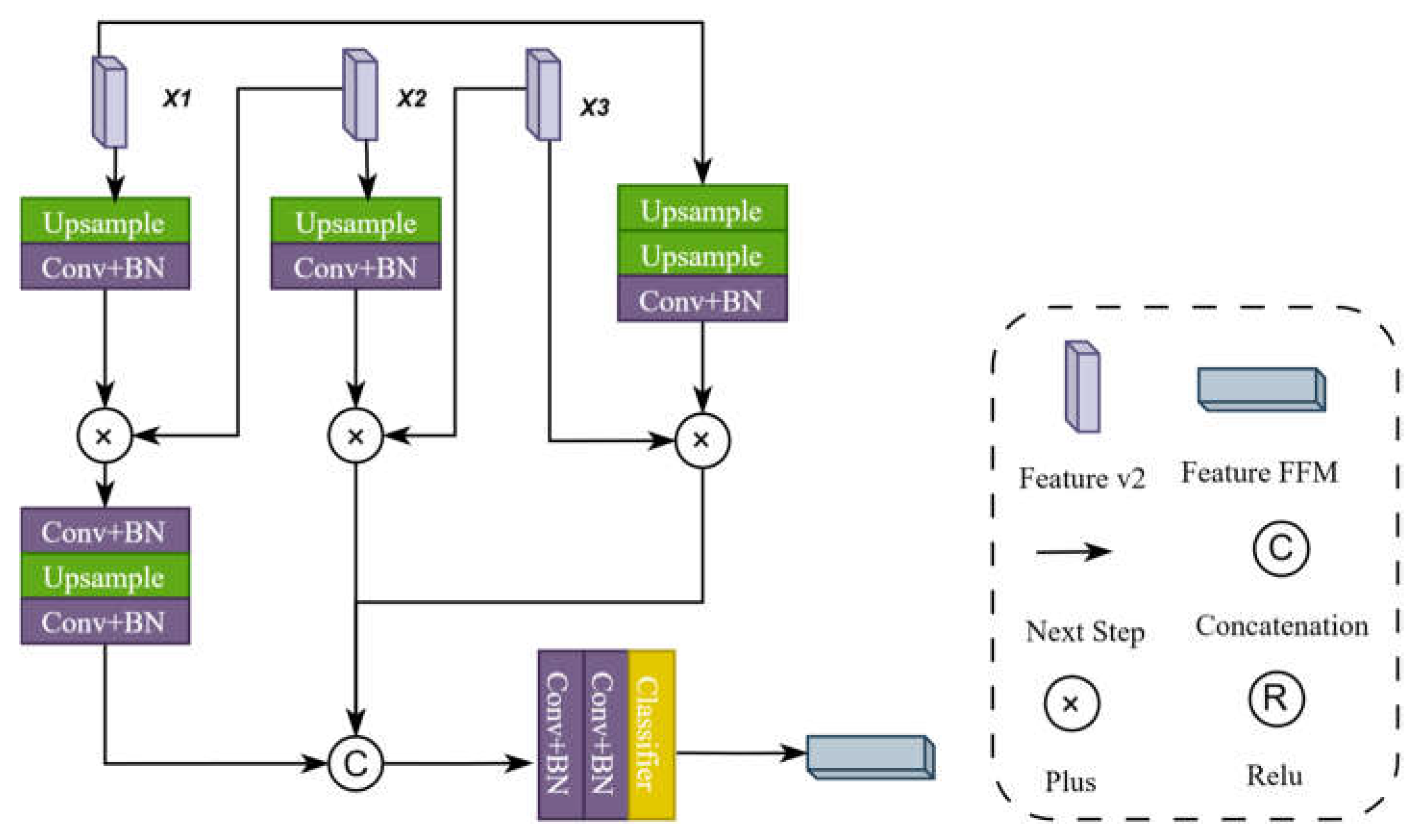

(3) Feature Fusion and Reuse Module

The module primarily fuses, refines, and reuses features. Its main function is to integrate and optimize feature information from different levels. Through a series of operations, it enhances the model's ability to recognize positive samples, improves the localization accuracy of edge change areas, and reduces the occurrence of missed detections. This module mainly correlates the features with the attention maps processed by the FFM, which primarily refines the decoding of features from three stages to enhance the accuracy of change information. The detailed operation is as follows: the input feature X1 is bilinearly upsampled and multiplied with the feature X2 of another layer; X2 is bilinearly upsampled and multiplied with the feature X3 of another layer; X1 is bilinearly upsampled twice and multiplied with X3; the results of the three operations are horizontally concatenated and then passed through two 3×3 two-dimensional convolutional modules to produce the final decoded output of the FFM. This results in an aggregated and refined feature map with higher spatial resolution and stronger feature expression capabilities. The detailed architecture of the FFM is shown in Figure 4.

2.2. Teacher-Student Module

In the semi-supervised learning task, this paper employs the mean teacher method to learn features from unlabeled data. The main idea is to switch between the roles of the student model and the teacher model. When the backbone network acts as the teacher model, it primarily generates pseudo-labels for the student model. When the backbone network acts as the student model, it learns from the pseudo-labels generated by the teacher model. The parameters of the teacher model are obtained by taking a weighted average of the parameters from previous training iterations of the student model. The target labels for the unlabeled data come from the predictions of the teacher model, i.e., the pseudo-labels. Since the pseudo-labels generated by the teacher model can serve as prior information in later training, they guide the student model in learning the features of a large amount of unlabeled data, thereby achieving the effect of semi-supervised learning.

The parameters of the teacher model are updated through an exponential moving average (EMA) of the parameters from the student model, according to the following rule:

$$\theta_t' = \alpha\,\theta_{t-1}' + (1 - \alpha)\,\theta_s$$

where $\theta_t'$ represents the parameters of the teacher model at the current step, $\theta_{t-1}'$ represents the parameters of the teacher model at the previous step, $\theta_s$ represents the parameters of the student model, and $\alpha$ is a smoothing factor set to 0.9, which controls the smoothness of the changes in the teacher model's parameters.
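As an illustration, the EMA update can be sketched in a few lines of plain Python. This is a minimal sketch rather than the authors' implementation: the function name `ema_update` and the dictionary-of-floats parameter format are hypothetical, and the sketch assumes that α weights the previous teacher parameters while 1 − α weights the student parameters.

```python
def ema_update(teacher, student, alpha=0.9):
    """Return new teacher parameters as an EMA of the student parameters.

    teacher, student: dicts mapping parameter names to floats
    (a hypothetical stand-in for real network weights).
    alpha: smoothing factor; assumed to weight the previous teacher.
    """
    if teacher.keys() != student.keys():
        raise ValueError("teacher and student must share parameter names")
    return {
        name: alpha * teacher[name] + (1.0 - alpha) * student[name]
        for name in teacher
    }


# Example: the teacher moves 10% of the way toward the student.
teacher = {"w": 1.0, "b": 0.0}
student = {"w": 0.0, "b": 1.0}
new_teacher = ema_update(teacher, student, alpha=0.9)
```

With α close to 1 the teacher changes slowly, which smooths out noise from individual student updates.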

2.3. Loss Function

Change detection is treated as a special type of binary classification problem. In this paper, the binary cross-entropy loss (BCELoss) is used in conjunction with the Sigmoid function. The supervised loss function is expressed by

$$L_{sup} = -\left[\, y \log \sigma(x) + (1 - y) \log\bigl(1 - \sigma(x)\bigr) \right]$$

where $\sigma(x)$ is the Sigmoid function that maps $x$ to the interval (0, 1) and $y$ is the ground-truth label. In the semi-supervised part, the input is the result of supervised training, and the semi-supervised loss function combines the two loss terms through a coefficient $\beta$, which sets the relative weight between the two loss functions. Here $\beta$ is set to 0.2, the value at which the impact of this coefficient on model accuracy is minimal.
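The supervised loss, Sigmoid followed by binary cross-entropy, can be illustrated with a small plain-Python sketch. The function name `bce_with_logits` is hypothetical, and the numerically stable rewriting used here (max(x, 0) − x·y + log(1 + e^(−|x|))) is a common formulation assumed for illustration; the paper does not state how the loss is computed internally. The β-weighted semi-supervised combination is not covered by this sketch.

```python
import math


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy of sigmoid(logits) against 0/1 targets.

    Uses the identity
        -[y*log(s(x)) + (1-y)*log(1-s(x))] = max(x, 0) - x*y + log(1 + exp(-|x|))
    which avoids overflow for large |x|.
    """
    if len(logits) != len(targets) or not logits:
        raise ValueError("logits and targets must be non-empty and equal length")
    total = 0.0
    for x, y in zip(logits, targets):
        total += max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))
    return total / len(logits)


# Example: per-pixel logits for a tiny flattened change map.
loss = bce_with_logits([2.0, -1.5, 0.3], [1.0, 0.0, 1.0])
```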

2.4. Training Process

The training process of the model is shown in Table 1.

The model first uses MFM-CDNet in the supervised phase to conduct supervised learning on labeled data and generate initial parameters. For unlabeled data, the predictions generated by the teacher model are used as pseudo-labels for training. The parameters of the student model are then averaged with those of the teacher model to obtain new teacher model parameters, using the EMA smoothing factor of 0.9. This process is iterated repeatedly to obtain the final model parameters.
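The flow described above (supervised initialization, pseudo-labeling by the teacher, a student update, then an EMA update of the teacher) can be sketched on a toy problem. Everything in this sketch is an illustrative assumption and not the procedure of Table 1: a one-dimensional logistic model stands in for MFM-CDNet, pseudo-labels are hard labels thresholded at 0.5, the student is trained on labeled and pseudo-labeled samples with equal weight, and all names and hyperparameters are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sgd_step(w, b, xs, ys, lr):
    """One gradient step on the mean BCE loss of a 1-D logistic model."""
    grad_w = grad_b = 0.0
    for x, y in zip(xs, ys):
        err = sigmoid(w * x + b) - y  # d(BCE)/d(logit)
        grad_w += err * x
        grad_b += err
    n = len(xs)
    return w - lr * grad_w / n, b - lr * grad_b / n


def train_semi_supervised(labeled, unlabeled, epochs=50, rounds=50,
                          lr=0.5, alpha=0.9):
    xs_l = [x for x, _ in labeled]
    ys_l = [y for _, y in labeled]

    # Step 1: supervised training on labeled data gives initial parameters.
    w, b = 0.0, 0.0
    for _ in range(epochs):
        w, b = sgd_step(w, b, xs_l, ys_l, lr)
    student = (w, b)
    teacher = (w, b)

    for _ in range(rounds):
        # Step 2: the teacher predicts pseudo-labels for unlabeled data.
        pseudo = [1.0 if sigmoid(teacher[0] * x + teacher[1]) >= 0.5 else 0.0
                  for x in unlabeled]
        # Step 3: the student learns from labeled and pseudo-labeled data.
        student = sgd_step(student[0], student[1],
                           xs_l + list(unlabeled), ys_l + pseudo, lr)
        # Step 4: the teacher becomes an EMA of itself and the student.
        teacher = tuple(alpha * t + (1.0 - alpha) * s
                        for t, s in zip(teacher, student))
    return student, teacher


labeled = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]
unlabeled = [-1.5, -0.5, 0.5, 1.5]
student, teacher = train_semi_supervised(labeled, unlabeled)
```

In a real setting the student would also see perturbed versions of the unlabeled inputs so that the consistency term has an effect; the toy omits this for brevity.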

3. Experiments

To validate the effectiveness of the proposed method, we conduct extensive experiments on three public datasets: LEVIR-CD, WHU-CD, and GoogleGZ-CD. This section covers the dataset introduction, experimental setup, comparison methods, experimental results and analysis, and ablation experiments.

3.1. Dataset Introduction

The LEVIR-CD dataset is provided by Beihang University, China, and is a large-scale, very-high-resolution building change detection dataset. It consists of 637 pairs of high-resolution image blocks, with a spatial resolution of 0.5 meters and an image size of 1024×1024 pixels. The dataset focuses on building-related changes, including various types of structures such as villas, high-rise apartments, small garages, and large warehouses.

The WHU-CD dataset is provided by Wuhan University, China, and is a high-resolution remote sensing image dataset for building change detection. The dataset includes a large image pair sized at 32507×15345 pixels with a spatial resolution of 0.2 meters. It reflects significant reconstruction activities in the area following an earthquake, with change information primarily focused on buildings. The images are typically cropped into non-overlapping blocks of 256×256 pixels.

The GoogleGZ-CD dataset is provided by Wuhan University and is a large-scale, high-resolution multispectral satellite image dataset specifically for change detection tasks. The dataset contains 20 pairs of seasonal high-resolution images covering the suburban areas of Guangzhou, China, with an acquisition time span from 2006 to 2019. The image resolution is 0.55 meters, and the size varies from 1006×1168 pixels to 4936×5224 pixels. It covers various types of changes, including water bodies, roads, farmland, bare land, forests, buildings, and ships, with buildings being the main changes. The image pairs are cropped into non-overlapping blocks of 256×256 pixels.
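The non-overlapping 256×256 cropping mentioned above can be sketched as a function that lists crop boxes for an image of a given size. The function name `tile_boxes` is hypothetical, and the sketch assumes that incomplete tiles at the right and bottom edges are discarded, which the text does not specify.

```python
def tile_boxes(width, height, tile=256):
    """List (left, top, right, bottom) boxes of non-overlapping square tiles.

    Assumption: partial tiles at the right/bottom borders are dropped.
    """
    if tile <= 0:
        raise ValueError("tile size must be positive")
    return [
        (left, top, left + tile, top + tile)
        for top in range(0, height - tile + 1, tile)
        for left in range(0, width - tile + 1, tile)
    ]


# Example: boxes for the large WHU-CD image pair (32507 x 15345 pixels).
boxes = tile_boxes(32507, 15345, tile=256)
```

The same boxes are applied to both images of a pair and to the label map, so that each cropped block stays spatially aligned.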

The detailed numbers of samples in the training, validation, and test sets of the three datasets are shown in Table 2.

3.2. Experimental Setup

The model is implemented on PyTorch, an open-source deep learning framework widely used for building and training neural networks. It is trained and tested on an NVIDIA RTX 4090 GPU. For optimization, we use the AdamW optimizer (Adaptive Moment Estimation with Weight Decay), which combines the benefits of Adam and weight decay to regularize the model and prevent overfitting. The optimizer minimizes the loss function with a weight decay coefficient of 0.0025 and a learning rate of 5e-4. Due to the limitations of GPU resources and through extensive experimental validation, this paper sets the batch size to 16 and the number of training epochs to 180 to ensure that the model can converge. In the semi-supervised experiment, the dataset is constructed through random sampling. Specifically, 5%, 10%, and 20% of the training data are randomly selected as labeled data, and the rest of the data is processed as unlabeled data.
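The random construction of labeled and unlabeled subsets can be sketched as follows. The function name `split_labeled_unlabeled`, the fixed seed, and the rounding rule are assumptions made for illustration; the paper only states that 5%, 10%, or 20% of the training data is sampled at random as labeled data.

```python
import random


def split_labeled_unlabeled(sample_ids, label_ratio, seed=0):
    """Randomly split training sample ids into labeled and unlabeled subsets.

    label_ratio: fraction of samples that keep their labels (e.g. 0.05).
    seed: fixed here only so the split is reproducible (an assumption).
    """
    if not 0.0 < label_ratio < 1.0:
        raise ValueError("label_ratio must be in (0, 1)")
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    n_labeled = max(1, round(len(ids) * label_ratio))
    return ids[:n_labeled], ids[n_labeled:]


# Example: the three label ratios used in the experiments.
train_ids = [f"pair_{i:04d}" for i in range(1000)]
splits = {r: split_labeled_unlabeled(train_ids, r) for r in (0.05, 0.10, 0.20)}
```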

The comparison methods are current advanced semi-supervised change detection models, including AdvNet, SemiCD, RCL, and TCNet.

To evaluate the performance of the proposed SCMFM-CDNet, this experiment uses three evaluation metrics: Precision (P), Recall (R), and F1 score. The formulas are as follows.

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times P \times R}{P + R}$$

In these metrics, TP stands for true positives, FP stands for false positives, and FN stands for false negatives.
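The three metrics can be computed from pixel counts as in the following sketch. The function name `precision_recall_f1` is hypothetical, and returning 0.0 when a denominator is zero is an assumption made so that the function is always defined.

```python
def precision_recall_f1(pred, target):
    """Compute Precision, Recall and F1 from flattened binary change maps.

    pred, target: sequences of 0/1 values (1 = changed pixel).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    tp = fp = fn = 0
    for p, t in zip(pred, target):
        if p and t:
            tp += 1      # change correctly detected
        elif p and not t:
            fp += 1      # false alarm
        elif t and not p:
            fn += 1      # missed detection
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


p, r, f1 = precision_recall_f1([1, 1, 0, 0, 1], [1, 0, 1, 0, 1])
```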

3.3. Experimental Results and Analysis

For the proposed model, 5%, 10%, and 20% of the training data were randomly selected as labeled data, with the remaining data treated as unlabeled. Comparative experiments on the detection performance of different methods were conducted on the LEVIR-CD, WHU-CD, and GoogleGZ-CD datasets.

The quantitative accuracy results of different methods at different annotation ratios on the LEVIR-CD dataset are shown in Table 3.

The proposed network, SCMFM-CDNet, shows significant superiority on the LEVIR-CD dataset. At all three label ratios, our network ranks first in recall and F1 score, indicating its superior comprehensive performance. Although the precision of the proposed network is slightly lower than that of the UniMatch-DeepLabv3+ method, its recall R at all three label ratios is much higher than that of UniMatch-DeepLabv3+ and the other methods. This means that the proposed network detects a far higher proportion of the actual change areas than the other methods. Overall, our method shows significant superiority in improving the accuracy and efficiency of change detection through its semi-supervised strategy.

The quantitative accuracy results of different methods at different annotation ratios on the WHU-CD dataset are shown in Table 4.

The results on the WHU-CD dataset indicate that the proposed SCMFM-CDNet ranks first in recall and F1 score at label ratios of 5% and 10%. Additionally, at a 10% label ratio, the precision of SCMFM-CDNet also ranks first, demonstrating the network's superior comprehensive performance. At a 20% label ratio, the recall of SCMFM-CDNet ranks second, lower than that of the UniMatch-DeepLabv3+ method but still better than the other methods. The main reason is that the WHU-CD dataset has relatively fewer positive samples (changed areas) and more negative samples (unchanged areas), with the changed areas being more distinct and less complex. The high performance of UniMatch-DeepLabv3+ in semantic segmentation allows it to detect changed areas with high accuracy. However, on the more complex LEVIR-CD and GoogleGZ-CD datasets, the performance of our method is significantly higher than that of UniMatch-DeepLabv3+. Particularly on the complex GoogleGZ-CD dataset, UniMatch-DeepLabv3+ is inferior to the proposed SCMFM-CDNet in terms of generalization ability.

The quantitative accuracy results of different methods at different annotation ratios on the GoogleGZ-CD dataset are shown in Table 5. Compared with some other datasets, the GoogleGZ-CD dataset is smaller in scale. From a semi-supervised perspective, with only 5% labeled data, SCMFM-CDNet achieved an F1 score of 81.44%, which is higher than that of the other semi-supervised change detection methods. This demonstrates its strong performance under conditions of limited labeled data, in line with the original intention of semi-supervised learning to train with a small set of labeled data.

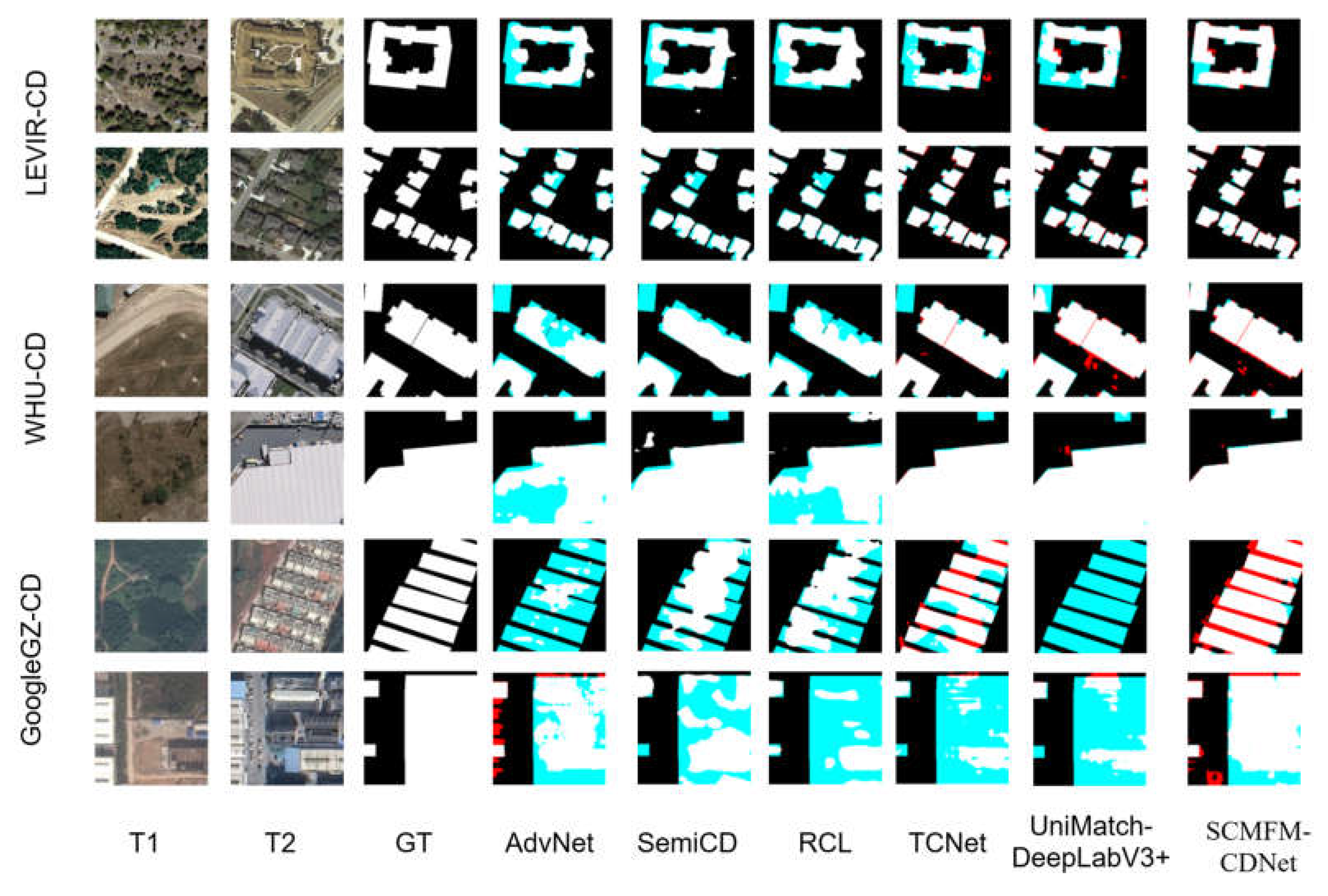

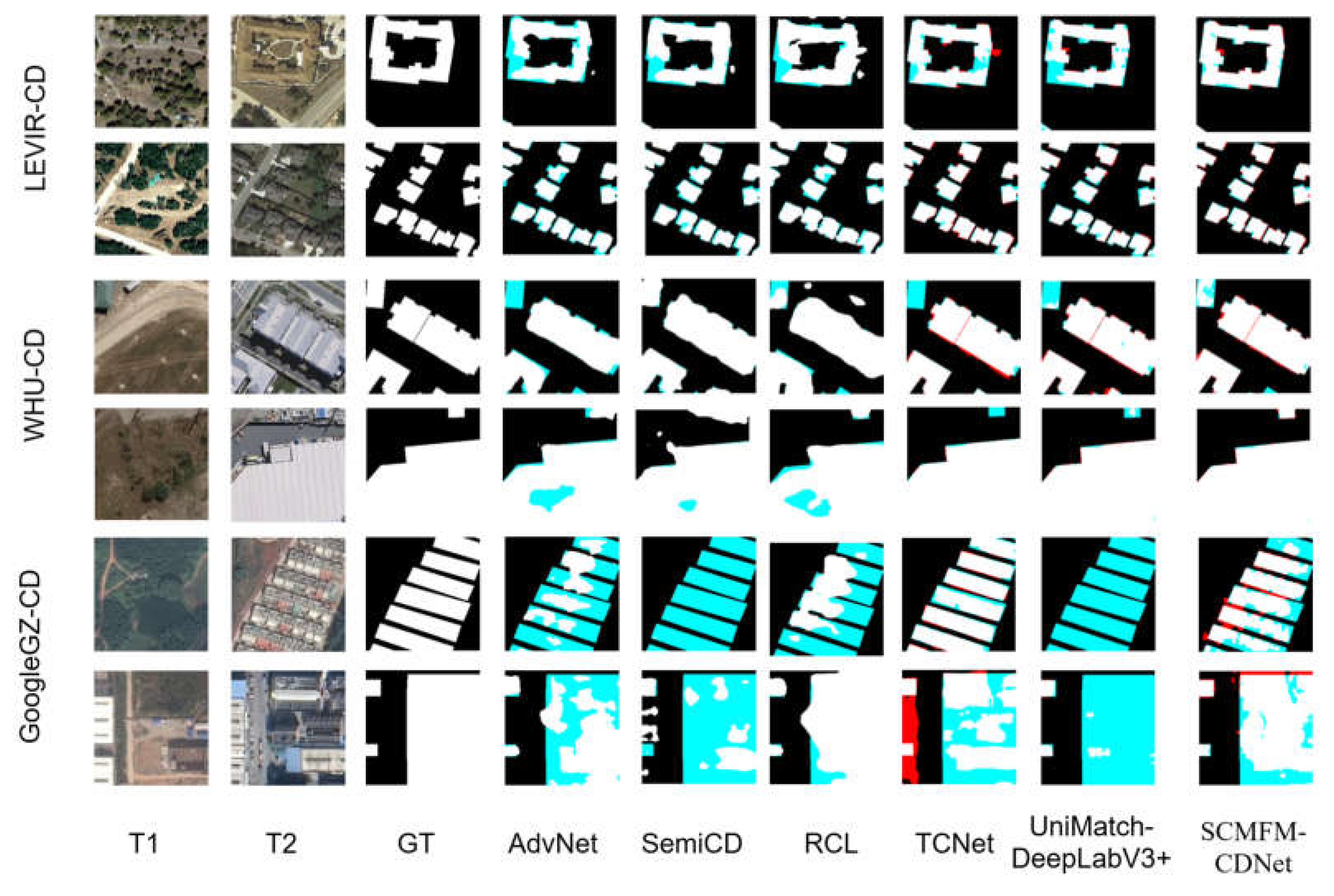

Figure 5 and Figure 6 show the qualitative evaluation results of the different comparison methods on the three datasets at labeling rates of 5% and 10%, respectively. To make the visualization more intuitive, white denotes true positives (TP, correctly detected changed areas), black denotes true negatives (TN, correctly identified unchanged areas), red denotes false positives (FP, unchanged areas that the model incorrectly identifies as changed, i.e., false alarms), and light blue denotes false negatives (FN, actual changes that the model fails to detect, i.e., missed detections). The visualization leads to the following conclusions. Our proposed model stands out in reducing the number of false negatives: its light blue areas are significantly smaller, indicating that it detects change areas more completely. Although a certain number of false positives remain, the overall performance of the model is clearly higher. In general, SCMFM-CDNet significantly improves detection capability through the design of improved modules and semi-supervised learning strategies. To save space, the results at the 20% labeling rate are omitted.
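The color coding used in these qualitative figures can be reproduced with a short sketch that maps each pixel of a prediction and its ground truth to a color. The function name `error_map` and the exact RGB triple chosen for light blue are assumptions; the figures themselves may use slightly different shades.

```python
# RGB colors for the four outcomes; the light-blue value is an assumed shade.
COLORS = {
    "TP": (255, 255, 255),  # white: change correctly detected
    "TN": (0, 0, 0),        # black: unchanged correctly identified
    "FP": (255, 0, 0),      # red: false alarm
    "FN": (173, 216, 230),  # light blue: missed detection
}


def error_map(pred, target):
    """Build an RGB error map from two binary 2-D maps (lists of rows)."""
    image = []
    for pred_row, target_row in zip(pred, target):
        row = []
        for p, t in zip(pred_row, target_row):
            if p and t:
                key = "TP"
            elif p:
                key = "FP"
            elif t:
                key = "FN"
            else:
                key = "TN"
            row.append(COLORS[key])
        image.append(row)
    return image


rgb = error_map([[1, 1], [0, 0]], [[1, 0], [1, 0]])
```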

3.4. Ablation Experiments

The ablation experiments mainly validate the impact of modules and strategies such as the global context module, the feature fusion and reuse module, the teacher model, and the exponential moving average update on the overall performance of the model. This paper selects the 5% and 10% label ratios on the GoogleGZ-CD dataset for validation. The quantitative accuracy results of the ablation experiments at different annotation ratios on the GoogleGZ-CD dataset are shown in Table 6.

From the table, it can be observed that the performance improves significantly when both the Global Context Module (GCM) and the Feature Fusion and Reuse Module (FFM) are included. Additionally, the teacher model and Exponential Moving Average (EMA) update further enhance the performance, demonstrating their importance in improving the model's overall accuracy and robustness.