1. Introduction

Large Language Models (LLMs) have recently become a cornerstone in artificial intelligence (AI) research and application, thanks to their remarkable ability to understand and generate human-like text [

10]. LLMs such as GPT [

106], BERT [

30], and others have achieved widespread adoption in a variety of fields, including Natural Language Processing (NLP), machine translation, and conversational AI, due to their capacity to generalize across diverse tasks [

133]. However, this surge in popularity has also exposed LLMs to a myriad of vulnerabilities, becoming an attractive target for various security threats [

1,

50,

79].

One of the most significant threats to LLMs is Adversarial Attacks (AAs) [

101,

119,

164], which are typically designed to fool Machine Learning (ML) models by modifying input data or introducing carefully-crafted inputs that cause the model to behave inappropriately [

50,

130]. These attacks often remain indistinguishable to humans but significantly impact the model’s decision-making process, posing a significant threat to LLMs, as they can compromise the integrity, reliability, and security of applications that rely on these models [

14]. One significant example is the Crescendo attack [

111]. This sophisticated method manipulates LLMs by gradually escalating a conversation with benign prompts that evolve into more harmful requests, effectively bypassing safety mechanisms. Therefore, protecting LLMs has become a critical concern for researchers and practitioners alike [

66,

167].

To effectively secure LLMs against AAs, it is crucial to assess and rank these threats based on their severity and potential impact on the model. For instance, some attacks, like Prompt Injection [

85], are easy to execute and widely applicable, making them higher-priority threats. Others, like Backdoor attacks [

76], may require greater sophistication but can cause significant long-term damage [

51]. This prioritization allows security teams to focus on the most dangerous attacks first for mitigation efforts. Existing vulnerability metrics, such as the Common Vulnerability Scoring System (CVSS) [

114] and OWASP Risk Rating [

140], are commonly used to evaluate the danger level of attacks on traditional systems, taking into account factors such as attack vector, attack complexity, and impact. However, their applicability to LLMs remains questionable.

Most existing vulnerability metrics are tailored for assessing

technical vulnerabilities in software or network systems. In contrast, AAs on LLMs often target the model’s

decision-making capabilities and may not result in traditional technical-impacts, such as data breaches or service outages [

161]. For example, attacks like Jailbreaks [

24], which manipulate the model’s outputs to bypass ethical or safety constraints, cannot easily be classified as technical vulnerabilities. These attacks focus on manipulating the model’s behavior rather than exploiting system-level weaknesses. In other terms, the context-specific factors used in existing metrics, such as CVSS, do not adequately account for the unique characteristics of LLMs or the nature of AAs. Consequently, they may be ill-suited for assessing the risk posed by these attacks on LLMs.

In this study, we aim to evaluate the suitability of known vulnerability metrics in assessing Adversarial Attacks against LLMs. We hypothesize that: ‘the factors used by traditional metrics may not be fully applicable to attacks on LLMs’, because many of these factors are not designed to capture the nuances of AAs.

To test this hypothesis, we evaluated 56 different AAs across four widely used vulnerability metrics. Each attack was assessed using three distinct LLMs, and the scores were averaged to provide a final assessment. This multi-faceted approach aims to provide a nuanced understanding of how well current metrics can distinguish between varying levels of threat posed by different adversarial strategies, as relying solely on human judgment for security assessments would require domain experts, and human evaluation could introduce biases.

Our findings indicate that average scores across diverse attacks exhibit low variability, suggesting that many of the existing metric factors may not offer fair distinctions among all types of adversarial threats on LLMs. Furthermore, we observe that metrics incorporating more generalized factors tend to yield better differentiation among adversarial attacks, indicating a potential pathway for refining vulnerability assessments tailored for LLMs.

The contributions of this paper are fourfold.

We provide a taxonomy of the various classification criteria of Adversarial Attacks existing in the literature, showing the logic followed in classifying AAs into multiple types.

We present a list of 56 AAs specifically targeting LLMs, which serve as our test scenarios.

We provide a comprehensive evaluation of some vulnerability metrics, in the context of AAs targeting LLMs, using differential statistics to analyse the variations of metric scores across different attacks.

We suggest that future work should focus on developing more general and LLM-specific vulnerability metrics that can effectively capture the unique characteristics of AAs targeting these models.

This paper is structured in seven parts. We start in

Section 2 by detailing the procedures we employed in this study, especially concerning the data collection, vulnerability assessments through LLMs, and mathematical analysis of the results. After that, we present in

Section 3 an overview of AAs and their existing classifications. In

Section 4, we present a detailed list of AAs on LLMs, and propose a classification based on the danger level in

Section 5.

Section 6,

Section 7, and

Section 8 encompasses respectively, the evaluation of the vulnerability metrics on LLMs, the discussion of the results, and the perspectives for future enhancements.

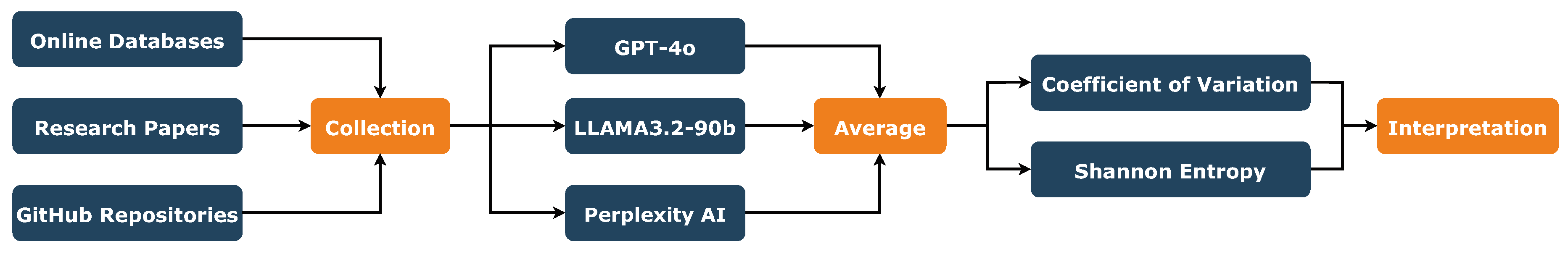

2. Methods

In this section, we outline the methodology adopted to evaluate vulnerabilities in attacks targeting Large Language Models using established metrics such as DREAD [

92], CVSS [

114], OWASP Risk Rating [

140], and Stakeholder-Specific Vulnerability Categorization (SSVC) [

128].

Our approach involves three key steps depicted below in

Figure 1 : data collection, assessment, and statistical interpretation.

2.1. Data collection

The first step in our methodology was to gather a comprehensive dataset of AAs targeting LLMs. To ensure a thorough and systematic approach, we began by reviewing the literature on these attacks, exploring existing types and classifications. This step provided a broad understanding of the main categories of attacks commonly observed in the context of ML and NLP systems.

Following this foundational review, we focused on identifying recent AAs specifically targeting LLMs. These attacks were grouped into

seven primary types: Jailbreaks (White-box and Black-box) [

150], Prompt Injections [

84], Evasion attacks [

137], Model-Inference (Membership Inference) attacks [

57], Model-Extraction attacks [

49], and Poisoning/Trojan/Backdoor attacks [

76,

88,

131]. For each type, we selected

eight representative attacks, prioritizing those published in recent research or demonstrated in practical scenarios. This effort resulted in a list of 56 attacks, covering a diverse range of threat vectors and methodologies.

To enable a systematic ranking of these attacks based on their potential danger, we decided to assess each attack using vulnerability metrics. By applying multiple metrics, we aimed to provide a multi-faceted evaluation of each attack’s severity and to ensure that the dataset would serve as a robust basis for further analysis and interpretation.

2.2. Score assessments

To evaluate the severity and danger level of the 56 gathered attacks, we began by identifying widely recognized vulnerability assessment metrics to ensure a comprehensive analysis. After careful consideration, we selected four metrics: DREAD [

92], CVSS [

114], OWASP Risk Rating [

140], and SSVC [

128]. These metrics were chosen for their broad adoption and their focus on different factors, enabling a more nuanced understanding of the vulnerabilities. Since the Adversarial Attacks we collected are recent and not yet assessed in the literature, calculating their scores became essential to address this gap.

Manually assessing 56 attacks across four metrics is a daunting task, requiring extensive expertise from security analysts, system administrators, and other domain experts. The process involves interpreting complex scenarios, considering varying factors for each metric, and ensuring consistency between all evaluations. Completing such an effort manually could take months or even years, which is impractical given the fast-evolving nature of adversarial threats.

To overcome this challenge and accelerate the process, we leveraged the capabilities of LLMs to perform

semi-automated scoring. Specifically, we utilized three state-of-the-art models: GPT-4o [

97], LLAMA3.2-90b [

38], and Perplexity AI [

62]. Each model operated independently, assessing the attacks and vulnerabilities according to the factors defined by the selected metrics. For each scoring factor, we calculated the average score provided by the three LLMs, rounded to the closest unit.

This approach offers several advantages. First, it enables rapid assessments. Second, using multiple LLMs increases the robustness of the results by minimizing biases or errors from any single model. Furthermore, the models’ advanced text-processing capabilities allow them to analyze the contextual details of each attack and provide scores that align with the logic of the vulnerability metrics.

A recent work of [

23] proves that LLMs are able to identify and analyze software vulnerabilities; but that they can lead to misinterpretations or oversights in understanding complex vulnerabilities. To address such potential inconsistencies in the assessments, we incorporated a

Human-in-the-Loop (HitL) verification process. We reviewed the logic and reasoning behind each LLM-provided score to ensure its accuracy and reliability. This step was essential to mitigate any errors or misinterpretations that might arise from the LLMs, especially when handling complex scenarios.

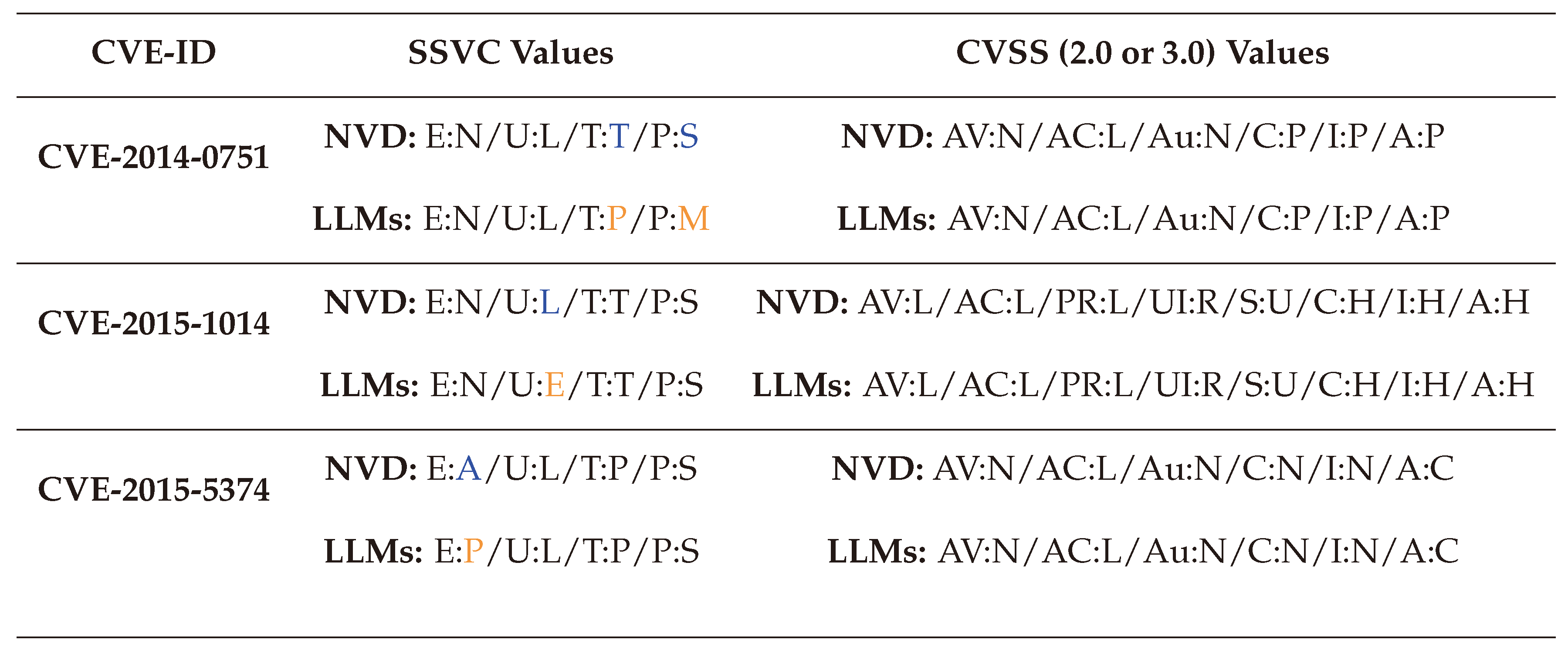

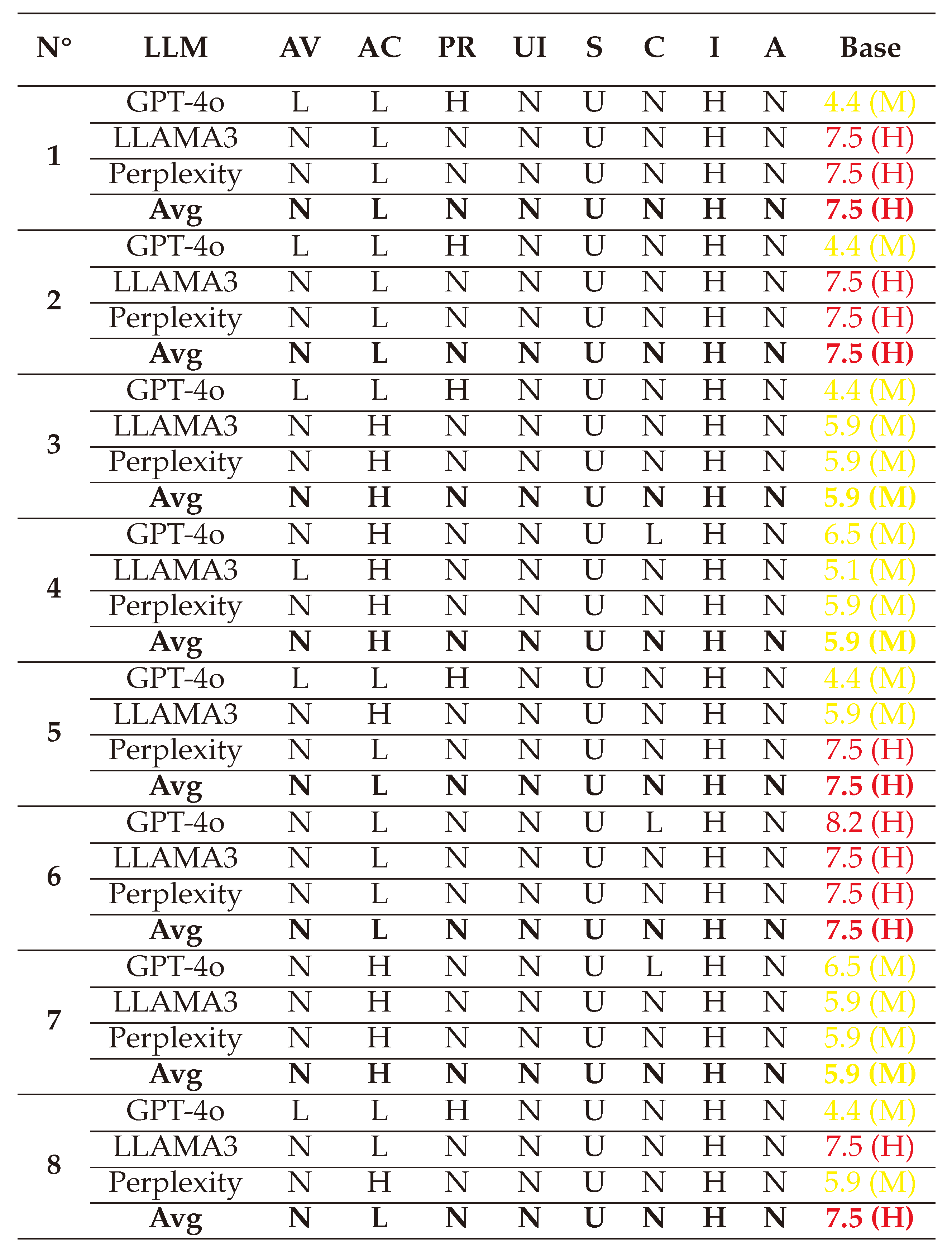

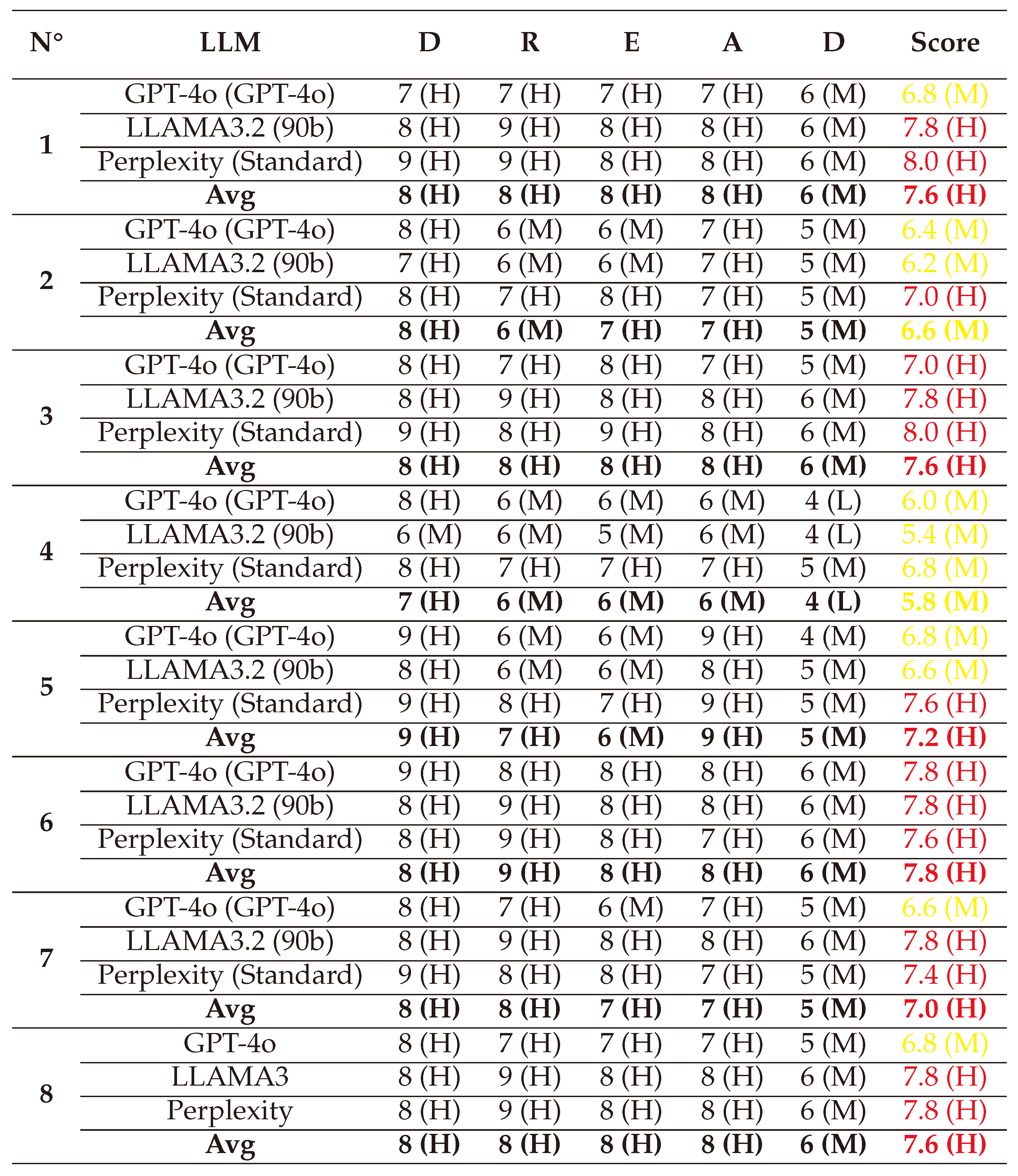

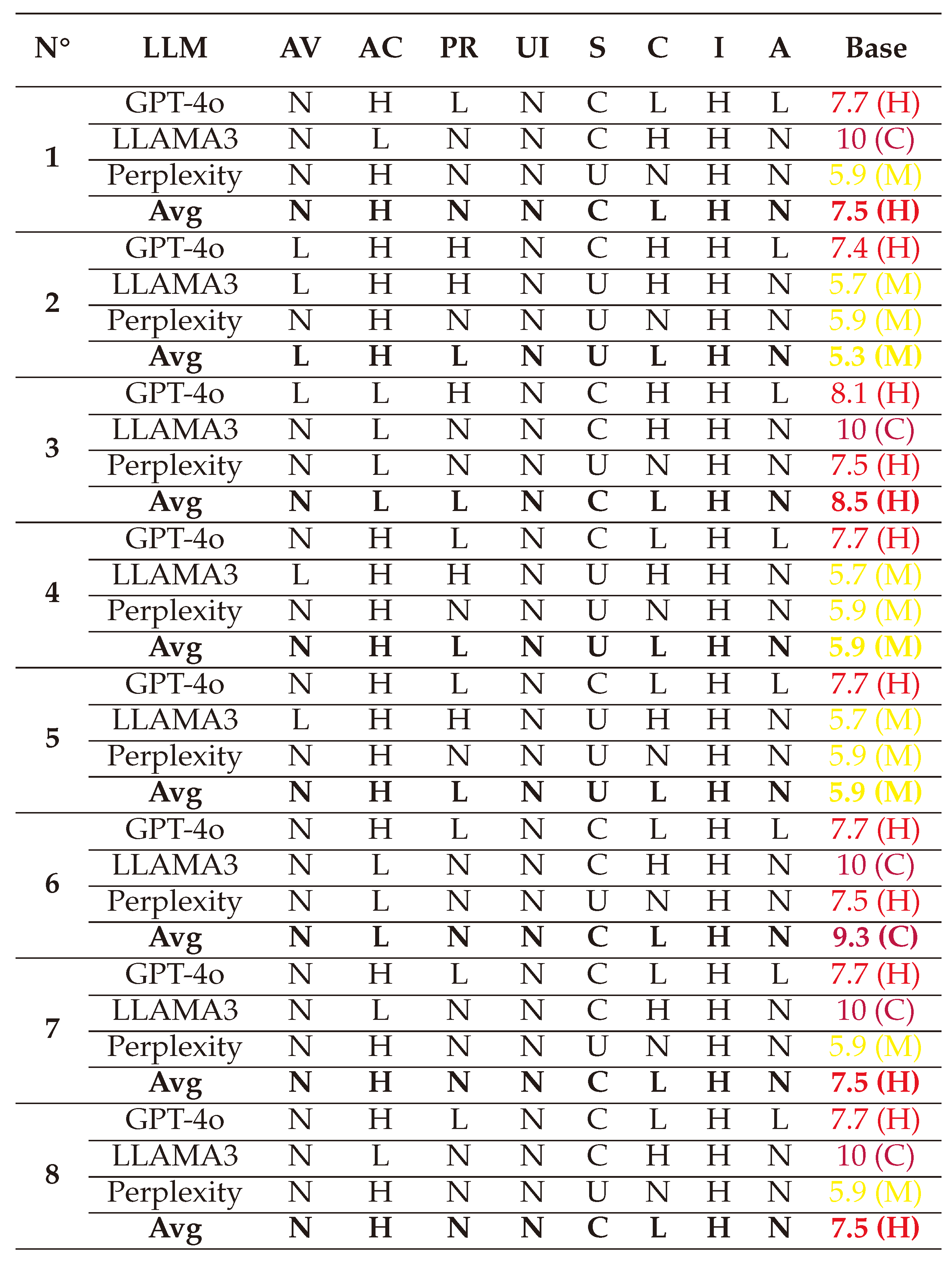

To validate this methodology, we tested it on a set of Common Vulnerabilities and Exposures (CVEs) that already have human validated scores with both CVSS and SSVC [

128] to measure the gap, as shown in

Table 1. The details of each factor are further explained in

Section 5.2.

The results demonstrate that our approach of aggregating the assessments of three LLMS yields scores closely aligned with existing assessments, with few differences related mainly to the advancements of technologies from the first assessment of those vulnerabilities to today. For instance, vulnerabilities such as

‘CVE-2015-5374’ and

‘CVE-2019-9042’ became less active than before, making their exploitation value with SSVC change from

Active to

Proof-of-Concept (refer to

Section 5.2.4 for more details).

This experiment also shows that combining the computational efficiency of LLMs with human oversight represents a practical solution for scoring new and unassessed attacks in the absence of readily available experts. This innovative approach not only saves time but also ensures a balanced and consistent evaluation process, enabling a deeper understanding of vulnerabilities and their potential impact.

Table 1.

Comparison between some existing and LLM-generated CVSS and SSVC values

Table 1.

Comparison between some existing and LLM-generated CVSS and SSVC values

2.3. Results interpretations

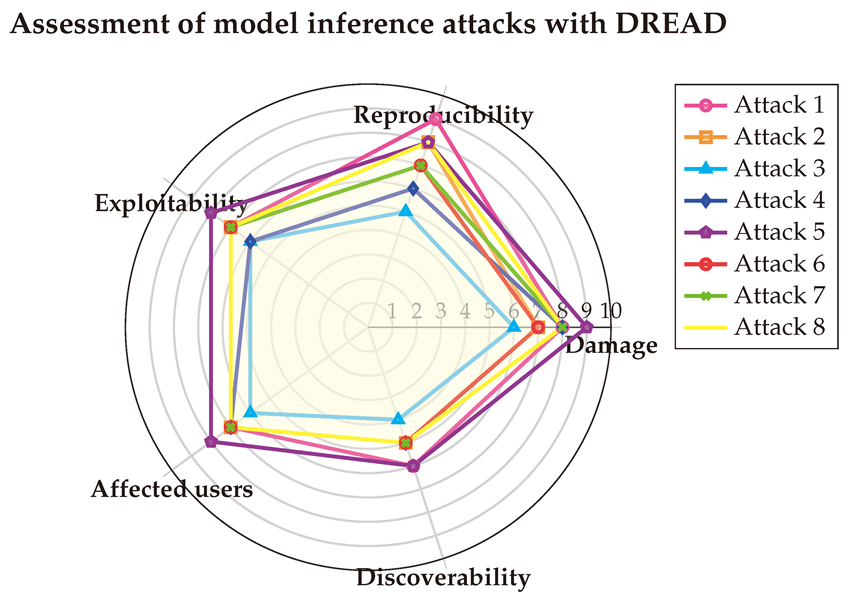

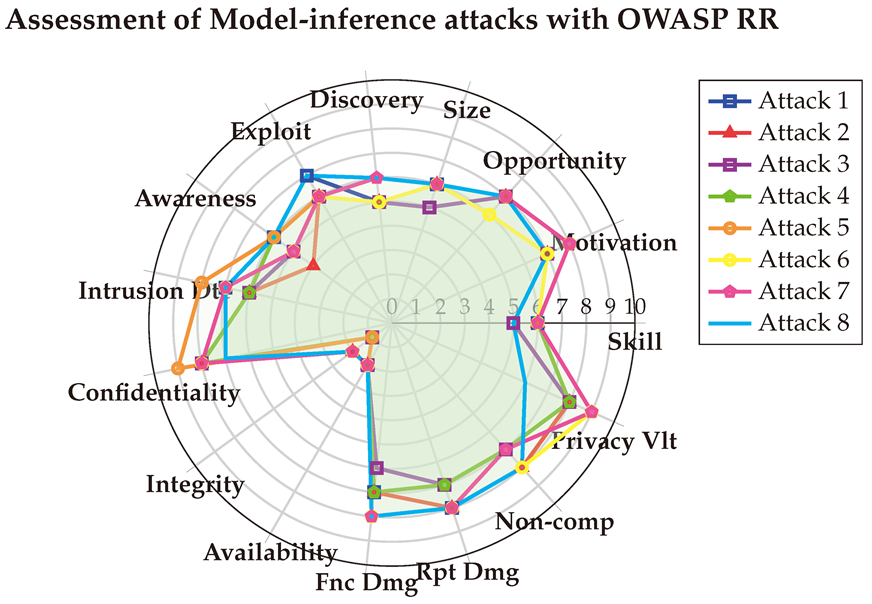

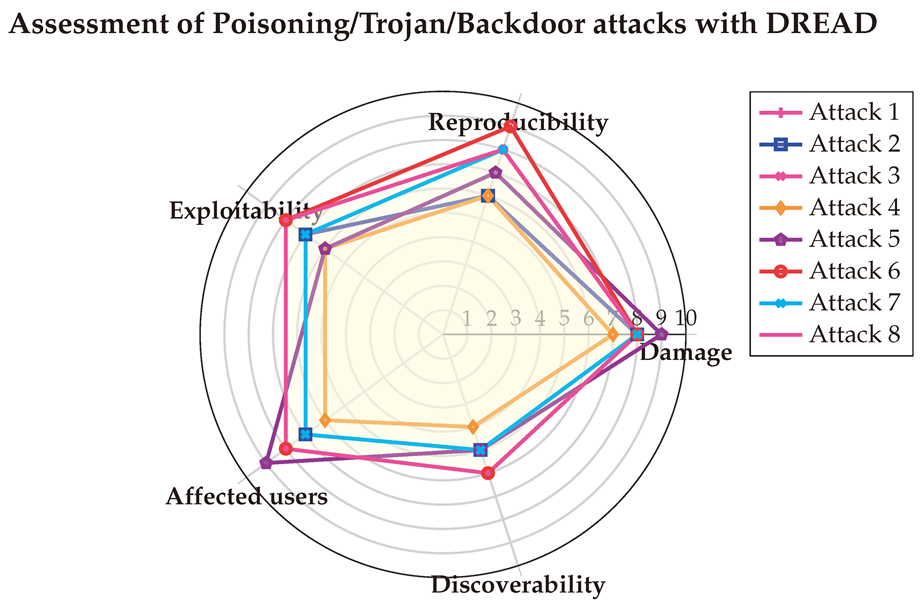

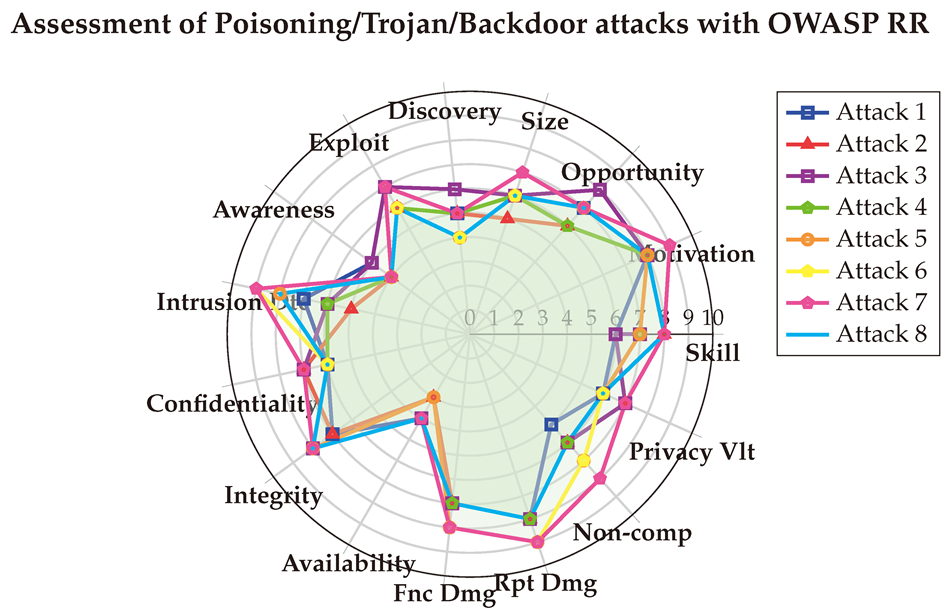

Our approach provided a multi-dimensional analysis of Adversarial Attacks against LLMs by leveraging four distinct vulnerability assessment metrics: DREAD, CVSS, OWASP Risk Rating, and SSVC. This comprehensive evaluation allowed us to gain a broad perspective on how these metrics reflect the severity and impact of attacks, as well as their usefulness in ranking and understanding vulnerabilities in the LLM context.

To assess the utility and added value of each factor within the metrics, we analyzed their

variability across the 56 attacks, grouped by attack type. For the

quantitative metrics (DREAD and OWASP Risk Rating), we calculated the

coefficient of variation (CV) for each factor to measure the relative dispersion of scores. For the

qualitative metrics (CVSS and SSVC), we used

entropy [

118] to quantify the diversity or uniformity of categorical values.

3. Adversarial Attacks

The rise of AAs in the field of Machine Learning has posed significant security challenges, especially for Large Language Models. These attacks exploit the vulnerabilities inherent in AI models by manipulating inputs to achieve unintended or harmful outputs. This section provides a detailed exploration of AAs, beginning with their formal definition and an analysis of why they are considered particularly dangerous to LLMs. Then it introduces various types and classifications of AAs, offering insight into the range of attack strategies used to compromise LLMs. Understanding these elements is crucial for designing more robust defenses and enhancing the security of AI-driven systems.

3.1. Definition

Adversarial Attacks are

intentional manipulations of input data designed to exploit vulnerabilities in ML models [

43]. The concept of adversarial examples was first introduced in the domain of Image Recognition by [

130], and it has since been widely explored across different ML tasks, including NLP [

33,

105,

161]. In the context of LLMs, adversarial inputs are carefully crafted to cause the model to produce incorrect, biased, or harmful

outputs [

68]. Unlike traditional errors, AAs are not random; but are

strategically designed to exploit the decision boundaries of models by altering inputs in ways imperceptible to humans and effective against ML models [

13]. These attacks can involve minimal changes, such as swapping words, inserting seemingly harmless phrases, or restructuring sentences, that lead to dramatically different responses from the model, often having severe real-world consequences [

61,

67], particularly in safety-critical applications such as autonomous driving, healthcare diagnostics, and security systems [

100]. For example, an Adversarial Attack could lead an autonomous vehicle to misinterpret road signs, resulting in catastrophic accidents [

41,

165].

On top of that, AAs can come in various forms, each exploiting different aspects of LLMs. These attacks can be broadly categorized based on the attacker’s knowledge, the nature of the perturbations, and the model’s vulnerability. The following section will explore the different types and classifications of AAs, showing that each type has distinct strategies and potential impacts on LLMs.

3.2. Classifications of AAs

Adversarial Attacks have been classified in various ways in the literature, offering different perspectives on how AAs operate and their potential impact on Machine Learning models. In this section, we have gathered the most common classifications of AAs, based on criterias such as their purpose, target, the attacker’s knowledge and strategy, life-cycle stages, CIA

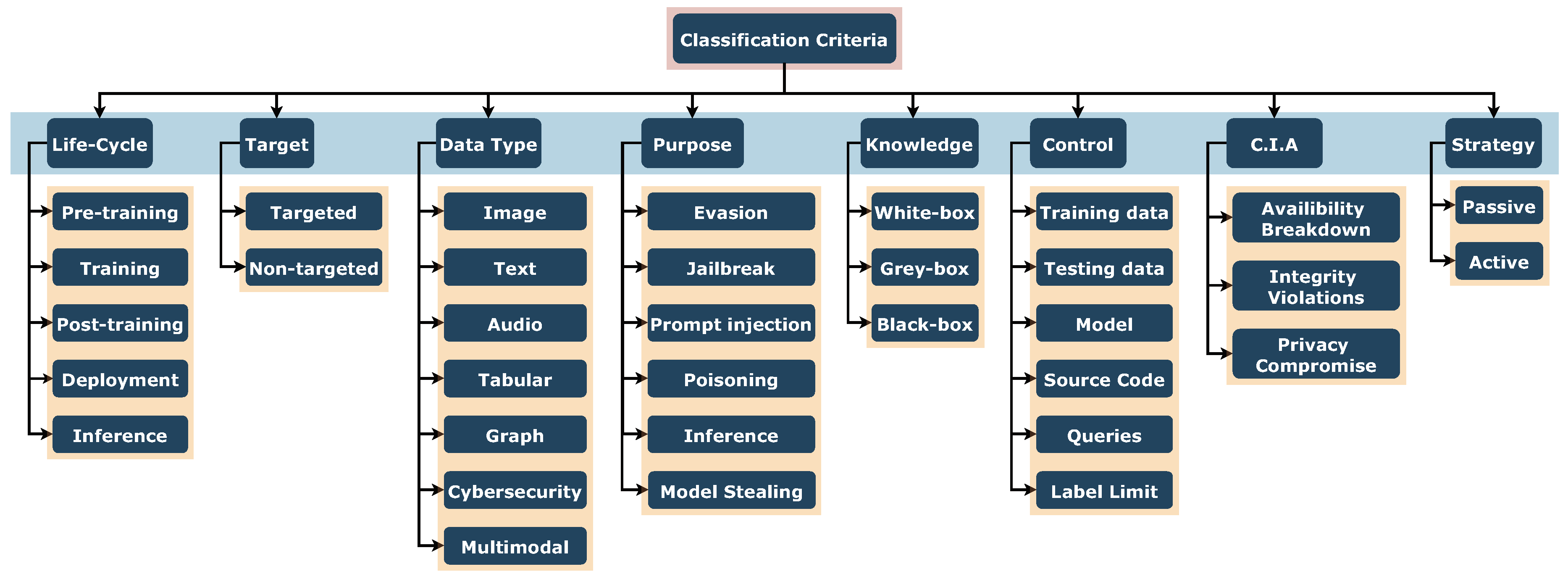

1 triad, and the type of data and control involved. We depict these classifications in

Figure 2, and each one will be discussed in detail in the following subsections.

3.2.1. Based on the Purpose

One of the most widely used ways to classify AAs is by analyzing their

intended purpose [

9,

27,

142]. Attacks can be designed either to evade detection by a model or to cause intentional misclassifications, thereby compromising the system’s integrity or exploiting its weaknesses. Based on these overarching objectives, several distinct types of AAs have emerged, including: Evasion attacks [

76,

88,

137], Jailbreak attacks [

150], Prompt Injections [

84], Model Inference attacks [

57], Model Extraction (Stealing) attacks [

49], and Poisoning/Trojan/Backdoor attacks [

76,

88,

131]. Each type targets different aspects of a ML system, posing unique challenges to the robustness and security of the models.

Evasion attacks:

In evasion attacks, adversaries craft inputs that evade detection or mislead the model into making incorrect classifications. For instance, small changes in an image may lead a computer vision model to misclassify it, while adversarial inputs in LLMs can bypass content filters [

3,

4,

21].

Model jailbreaking:

In jailbreak attacks, the attacker manipulates the model to bypass restrictions or constraints set by the system such as ethical filters. For example, bypassing content filters in a chatbot by providing a carefully crafted prompt that tricks the model into generating restricted outputs [

28,

29].

Prompt injections (PI):

In prompt injections, the attacker provides maliciously designed prompts that cause the model to follow unintended instructions or generate harmful outputs. Unlike jailbreaking, PI typically involves inserting harmful instructions within regular inputs rather than overriding system-level restrictions [

141]. An example is injecting hidden instructions within user input to manipulate a language model’s behavior in ways not intended by the developers [

70,

84,

87].

Model inference:

In Model (or Membership) Inference attacks, adversaries aim to determine whether specific data was part of the training set. By analyzing model outputs, they can infer sensitive or proprietary information from the training data, posing significant privacy risks [

107,

127].

Model Extraction:

Model Extraction (or Stealing) involves probing a black-box model to reconstruct its functionality or recover sensitive data. For example, an attacker could steal a proprietary financial model by systematically querying it and analyzing its responses [

121,

157].

Poisoning/Trojan/Backdoors:

These attacks aims at the integrity of the model during the training phase. The attacker injects malicious data (poisoning) or patterns (trojan, backdoor) into the training set to influence the model’s behavior at inference time. For instance, a Poisoning scenario would be introducing mislabeled data to reduce model accuracy [

155], and a Trojan scenario would be embedding a hidden trigger in the training data to activate malicious behavior later, such as a trigger that could cause a traffic-light recognition model to classify a red light as a green light in autonomous driving cars [

32].

3.2.2. Based on the Target

Adversarial attacks can also be classified based on their target, which refers to whether the attack is aimed at causing a specific or arbitrary misclassification [

27].

Targeted:

In targeted attacks, the attacker aims to manipulate the model into misclassifying an input into a specific, incorrect class [

15]. For example, an attacker might craft an input to make a stop sign consistently classified as a yield sign.

Non-targeted:

In non-targeted attacks, the goal is to cause the model to misclassify the input, but the specific incorrect class is irrelevant to the attacker [

143]. For instance, an adversarial input could cause a stop sign to be classified as any incorrect traffic sign.

3.2.3. Based on the Attacker’s Knowledge

Another existing classification is categorizing AAs by the amount of knowledge the attacker has about the target model [

98,

142]. These categories typically include white-box, black-box, and sometimes grey-box attacks, although grey-box is not always explicitly classified.

White-box:

In white-box attacks, the attacker has full access to the model’s architecture, parameters, and training data, allowing them to exploit the model’s gradients for highly effective adversarial examples. For instance, using gradient-based methods, an adversary can precisely manipulate inputs to deceive the model [

54,

81].

Black-box:

In black-box attacks, the attacker has no direct access to the model’s internals and can only interact with it by sending queries and observing outputs. Despite this limitation, attackers can use techniques like transfer learning, where adversarial examples generated on a surrogate model are used to attack the target model [

60,

83].

Grey-box:

In grey-box attacks, the attacker has partial knowledge of the model, such as knowing the architecture but lacking access to the exact parameters or training data. These attacks may combine both white-box and black-box techniques to exploit vulnerabilities effectively [

90,

148].

3.2.4. Based on the Life-Cycle

Adversarial attacks can be categorised also by when they occur in the machine learning pipeline, with some references focusing on the training and deployment phases only [

98], and others adding phases such as pre-training, post-training, and inference phase [

144].

Pre-training:

Pre-training attacks are conducted before the model training begins, often during the data collection phase. For example, poisoned-data injection into a dataset to compromise the model’s integrity once training commences [

73,

80].

Training:

Training-phase attacks occur during the actual model training process. A notable example is backdoor injection, where adversaries embed specific triggers in the training data to manipulate the model’s behavior later [

35,

147].

Post-training:

Post-training attacks take place immediately after the training process concludes, before the model is deployed. These attacks might involve modifying the model parameters in a way that alters its predictions without detection [

104,

163].

Deployment:

Deployment-phase attacks are executed after the model has been deployed on a hardware device, such as a server or mobile device. An example includes modifying model parameters in memory through techniques like bit-flipping, which can lead to unexpected behaviors [

6,

20].

Inference:

Inference attacks are performed by querying the model with test samples. A specific instance is backdoor activation, where an adversary triggers the model’s malicious behaviors by providing inputs that match the previously embedded backdoor conditions [

34,

69].

3.2.5. Based on the CIA Violation

A fifth classification of AAs is made according to the targeted aspect of the CIA triad, which encompasses confidentiality, integrity, and availability violations [

98,

112].

Availability breakdown:

In availability breakdown attacks, the attacker aims to degrade the model’s performance during testing or deployment. This can involve energy-latency attacks that manipulate queries to exhaust system resources, leading to denial of service or reduced responsiveness [

8,

124].

Integrity violations:

Integrity violation attacks target the accuracy and reliability of the model’s outputs, resulting in incorrect predictions. For instance, poisoning attacks during training can introduce malicious data, causing the model to produce erroneous results when deployed [

47,

53].

Privacy compromise:

Privacy compromise attacks focus on extracting sensitive information about the model or its training data. Model-extraction attacks exemplify this by allowing an adversary to reconstruct the model’s functionalities or retrieve confidential data used during training [

18,

63].

3.2.6. Based on the Type of Control

Adversarial attacks are classified in other sources based on the type of control the attacker exerts over various elements of the ML model, with some highlighting the control of training and testing data [

112], and others [

98] proposing more aspects of control, such as the control of the model, source code, and queries, as well as a limited control on the data labels.

Training data:

In training data attacks, the attacker manipulates the training dataset by inserting or modifying samples. An example is data poisoning attacks, where malicious inputs are added to influence the model’s learning process [

138].

Testing data:

Testing data attacks involve altering the input samples during the model’s deployment phase. Backdoor poisoning attacks serve as an example, where specific triggers are embedded in the testing data to manipulate the model’s predictions under certain conditions [

113].

Model:

Model attacks occur when the attacker gains control over the model’s parameters, often by altering the updates applied during training. This can happen in Federated Learning (FL) environments, where malicious model updates are sent to compromise the integrity of the aggregated model [

132].

Source code:

Source code attacks involve modifying the underlying code of the model, which can include changes to third-party libraries, especially those that are open source. This allows attackers to introduce vulnerabilities directly into the model’s functionality [

159].

Queries:

Query-based attacks allow the attacker to gather information about the model by submitting various inputs and analyzing the outputs. Black-box evasion attacks exemplify this, as adversaries attempt to craft inputs that evade detection while learning about the model’s behavior through its responses [

42].

Label limit:

In label limit attacks, the attacker does not have control over the labels associated with the training data. An example is clean-label poisoning attacks, where the adversary influences the model without altering the labels themselves, making detection more difficult [

116].

3.2.7. Based on the Type of Data

An seventh classification of Adversarial attacks is based on the type of data they target, highlighting the diverse methodologies employed across different modalities. Some underline attacks targeting data types as images, text, tabulars, cybersecurity, and even multimodal [

98], while other works mention attacks on audio data [

16], and graph-based data [

25].

Image:

In image-based attacks, the attacker crafts adversarial images designed to cause misclassification. An example includes perturbing images to deceive object detectors or image classifiers, leading to incorrect identification [

129].

Text:

Text attacks involve modifying text inputs to mislead NLP models. For instance, an adversary might introduce typos or antonyms to trick sentiment analysis tools or text classifiers into generating false outputs [

46].

Tabular:

Tabular data attacks target models that operate on structured data, often seen in applications like finance or healthcare. A common example is poisoning attacks, where malicious entries are inserted into tabular datasets to manipulate model behavior [

17].

Audio:

Audio-based attacks involve crafting adversarial noise or altering audio inputs to cause misclassification in systems like voice recognition. For example, specific sound patterns can be designed to mislead voice-activated systems, resulting in incorrect command interpretations [

78].

Graphs:

Graph-based attacks manipulate graph structures and attributes to deceive Graph Neural Networks (GNNs). An attacker might alter edges or node features to induce misclassification or misleading outputs from graph-based models [

93].

Cybersecurity:

In the cybersecurity domain, AAs target systems like malware detection or intrusion detection systems. An example is poisoning a spam email classifier, where attackers introduce deceptive emails to degrade the model’s performance [

135].

Multimodal:

Multimodal attacks involve exploiting systems that integrate multiple data types. In these cases, attackers might gain insights by submitting queries that encompass different modalities, such as text and image combinations [

145].

3.2.8. Based on the Strategy

Last but not least, Adversarial attacks can also be categorized based on the strategy employed by the attacker, distinguishing between passive and active approaches [

112].

Passive:

In passive attacks, the attacker seeks to gather information about the application or its users without actively interfering with the system’s operation. An example is reverse engineering, where an adversary analyzes a black-box classifier to extract its functionalities and gain insights into its behavior [

22].

Active:

Active attacks are designed to disrupt the normal functioning of an application. The attacker may implement poisoning attacks that introduce malicious inputs, aiming to trigger misclassifications or degrade the model’s performance during operation [

59].

4. Adversarial Attacks on LLMs

In recent years, LLMs have been increasingly targeted by AAs [

68,

119,

154], posing various threats to their reliability, safety, and security. These attacks can take multiple forms and serve distinct purposes, each exploiting different vulnerabilities within the model or its deployment. In this section, we present a comprehensive taxonomy of 56 recent AAs targeting LLMs, following the purpose-based classification of AA (refer to

Section 3). We consider 7 types of AAs: White-box Jailbreak attacks, Black-box Jailbreak attack, Prompt Injection, Evasion Attacks, Model Extraction, Model Inference, and Poisoning/Trojan/Backdoor. Each attack type includes 8 prominent examples, which are detailed in the following subsections.

4.1. Jailbreak Attacks

The type of AAs that we begin with are model Jailbreaking attacks, which are designed to bypass safety measures. We consider two approaches in jailbreak attacks according to the targeted model: White-box, and Black-box model jailbreaking.

4.1.1. White-box attacks

The first type are White-box Jailbreak attacks, where the attacker has complete access to the model’s architecture, parameters, and training data. This level of knowledge allows the attacker to design specific inputs that exploit vulnerabilities in the model, often related to the model gradients, in order to bypass its restrictions or safety measures.

4.1.2. Black-box attacks

The second type of attacks are Black-box Jailbreak attack, in which, in contrast to white-box attacks, the attacker has no access to the model’s internal workings or training data. Instead, the attacker can only interact with the model by providing inputs and observing the outputs, often relying on trial and error to discover effective prompts able to bypass the model’s safeguards.

We present in

Table 2 a list of recent white-box and black-box jailbreak attacks existing in the literature [

125], and if they are Open Source (OS) or not, as each has a different strategy and implementation.

4.2. Prompt Injection

The third type of attacks that we illustrate are Prompt injections, where the adversary manipulates the input prompts and queries to deceive the model into producing unintended or harmful outputs. This technique is ranked among the most dangerous attacks against LLMs by [

99]. To illustrate the diverse strategies attackers employ to exploit LLMs with PIs, we have gathered eight different attacks, utilizing both direct injections, where the attacker append a malicious input to a prompt, and indirect injection methods, where the attacker append malicious prompts through file or external inputs. These attacks are presented in

Table 3

4.3. Evasion Attacks

The forth type of attacks we illustrate are Evasion attacks, in which attackers aim to deceive language models by crafting inputs designed to bypass detection or classification. These attacks often target sentiment analysis and text classification models, seeking to manipulate their outputs through subtle modifications. In

Table 4, we have gathered eight different examples and techniques of evasion attacks presented in the literature, some of which employ text perturbations to alter the original input, while others leverage LLMs to generate sophisticated evasion samples against their counterparts.

4.4. Model Extraction

Model extraction attacks are the fifth type we illustrate in this section. These attacks aim to recreate or steal a language model’s functionality by querying it and using the responses to reconstruct the model, this poses a significant threat as they allow adversaries to duplicate proprietary models without access to their internal details. We present below in

Table 5, eight examples of Model Extraction attacks, showcasing different methods adversaries use to probe black-box LLMs and either extract training data of the model, or precise personal information of users.

4.5. Model Inference

Model inference (or Membership Inference) are the sixth type of attacks we focus on in this study. These attacks determine whether specific data samples, especially sensitive information, were part of the training set of an LLM. These attacks can compromise the privacy of users or organizations by revealing training data patterns. We gathered in

Table 6 eight examples of model inference attacks, which demonstrate how attackers exploit LLMs to infer confidential training data and gain insights into the model’s behavior.

4.6. Poisoning/Trojan/Backdoors

The last attacks on LLM we show are Poisoning, Trojan, and Backdoor attacks, which involve injecting malicious data or hidden triggers during the training phase of an LLM. This can lead to incorrect or dangerous behavior at deployment, allowing attackers to manipulate the model’s responses. We have compiled in

Table 7 eight examples of these attacks, where adversaries either corrupt the training process with poisoned data, or plant triggers to exploit models during inference, demonstrating the serious risks these methods pose to LLMs.

5. Classification of Adversarial attacks on LLMs based on their danger level

After presenting the existing classifications of AAs and some of the most-recent attacks against LLMs, we propose in this section a new criterion for classifying AAs on LLMs. We present the idea and methodology in the following subsections.

5.1. Principle

Seeing the list of AAs on LLMs presented in

Section 4 and how frequent they are, one question that comes across the mind is what attacks should be

mitigated first to secure LLMs? In order to answer this question, we need to rank the available attacks based on their

danger level against LLMs in order to know what attacks is a model most-vulnerable to. This can be done by calculating the vulnerability score those of attacks using Vulnerability-assessment metrics [

117].

5.2. Vulnerability-assessment Metrics

Vulnerability assessment metrics are critical tools for evaluating and ranking potential security threats based on their severity and likelihood of exploitation. Various methodologies, such as DREAD [

92], CVSS [

114], OWASP Risk Rating [

140], and SSVC [

128], provide frameworks for assessing vulnerabilities by considering different factors, including technical attributes, potential impacts, and contextual elements. By systematically analyzing attacks based on their danger, these assessment tools facilitate informed decision-making in an ever-evolving threat landscape, allowing organizations to strengthen their security posture and better protect their assets.

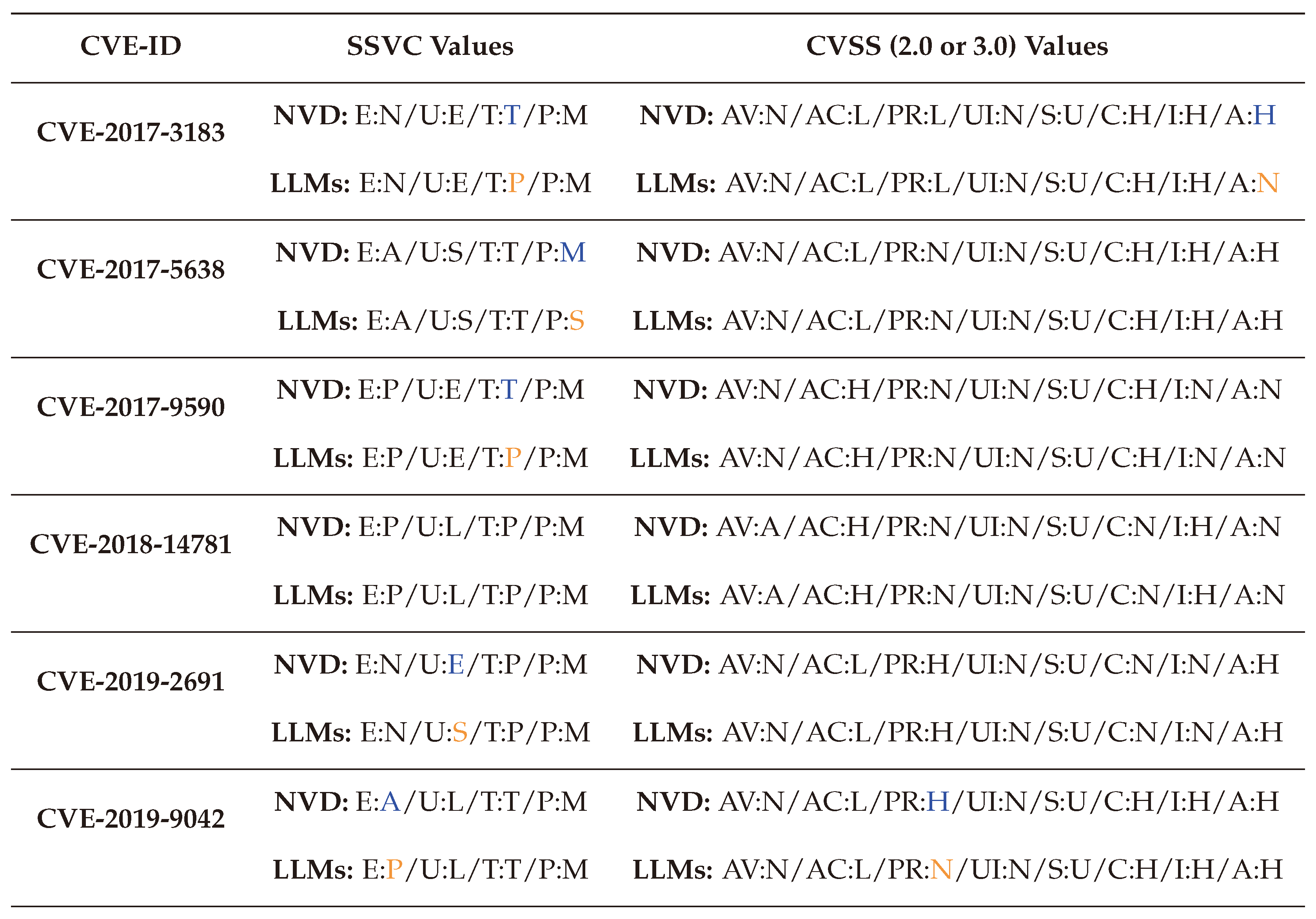

Figure 3.

Examples of known vulnerability assessment metrics

Figure 3.

Examples of known vulnerability assessment metrics

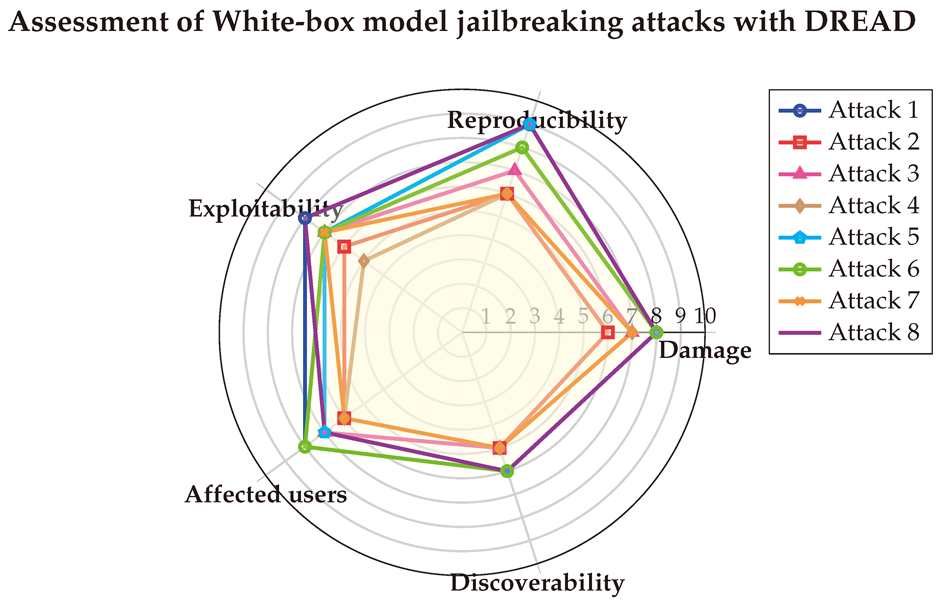

5.2.1. DREAD [92]

Originally developed by Microsoft, DREAD is a qualitative risk assessment model that ranks, prioritizes, and evaluates the severity of vulnerabilities and potential threats based on five factors: Damage potential (D), Reproducibility (R), Exploitability (E), Affected users (A), and Discoverability (D) of the attack.

Calculations

The vulnerability score is calculated with DREAD as an average score of the five factors, each assessed with a value out of 10. The details of each factor and their values are shown in

Table 8 below.

A value in the range

is labeled as

‘Low’ in the level of criticality, a value in the range

labeled as

‘Medium’ in criticality, and values over 7 are labeled as

‘High’ in criticality. The final score is calculated following this equation:

Limitations

The DREAD model, previously popular for qualitative risk assessment, has several limitations that have reduced its use in favor of more structured frameworks. First of all, its five categories (Damage, Reproducibility, Exploitability, Affected Users, and Discoverability) are highly subjective, leading to inconsistent scoring and prioritization across different assessors and organizations. Moreover, DREAD overlooks contextual factors like the specific environment and business impact, limiting its adaptability for complex needs. Finally, it also fails to account for dynamic threats or mitigation measures, making it less effective for ongoing risk management.

5.2.2. CVSS (Common Vulnerability Scoring System) [114]

Created by the FIRST

2 (Forum of Incident Response and Security Teams), the CVSS is an industry-standard scoring system for rating the severity of software vulnerabilities out of 10. It is encompasses three main metrics:

Base Metrics: Represent the vulnerabilities that are constant over time. It contains factor related to the exploitability of an attack (how easy it is to exploit the vulnerability) like the Attack Vector (AV), Attack Complexity (AC), Privileges Required (PR), User Interaction (UI), and the Scope (S) of the attack. And factors related to the impact of an attack on the CIA triad, such as Confidentiality Impact (C), Integrity Impact (I), and Availability Impact (A).

Temporal Metrics (Optional): Represent the vulnerabilities that might change over time in order to update the base score, it encompasses three factors, Exploit Code Maturity (E), Remediation Level (RL), and Report Confidence (RC).

Environmental Metrics (Optional): Vulnerabilities that are unique to a user environment, such as the Confidentiality Requirements (CR), Integrity Requirement (IR), Availability Requirement (AR), and the modified Base Metrics.

Calculations

The values in CVSS factors are not explicitly numerical; but selected from a specific range of choices, with each qualitative value having a corresponding coefficient. The details of each factor and of the Base Metric and their values according to CVSS version 3.1

3 are presented below in

Table 9, and their equivalent decimal values are detailed in

Table 10.

After assessing a value for each metric, the Base Score of the CVSS is calculated using two different equations depending on the Scope (S), which is either Changed (C) or Unchanged (U). Below are the full details of the equations for both cases:

The final Base Score ranges from 0 to 10, with the same criticality assignment as in DREAD, adding to it that a base score of 9 or more is considered a ‘Critical’ vulnerability.

Limitations

CVSS is a widely used standard for scoring vulnerabilities but has several limitations that affect its real-world effectiveness. Firstly, it tends to oversimplify calculations by focusing on technical aspects like attack complexity and impacts on confidentiality, integrity, and availability, while neglecting business impact and regulatory considerations. Additionally, the Temporal score of CVSS, intended to reflect changing conditions, relies on manual updates rather than real-time adjustments, making it less responsive to evolving threats. Finally, CVSS can be inconsistent, as different organizations may interpret scoring criteria differently, leading to varying assessments for the same vulnerability.

5.2.3. OWASP Risk Rating [140]

Developed by the Open Web Application Security Project (OWASP)

4, it is a risk assessment methodology that evaluates vulnerabilities and categorizes security risks in web applications by assessing likelihood (based on threat agent and vulnerability characteristics) and impact (considering technical and business factors) to produce an overall risk score.

-

Likelihood: Calculates the probability of the attack to be exploited based on two components:

- −

Threat Agent (TA): Quantifies the skill level, motivation, opportunity, and size of the threat-agent population

- −

Vulnerability (V): Quantifies the ease of discovery, ease of exploit, awareness, awareness of the system administrators, and the intrusion detection level.

-

Impact: Calculates the impact or loss produced by the attacks, it encompasses two types of impact:

- −

Technical Impact (TI): Quantifies the impact on Confidentiality, Integrity, and Availability.

- −

Business Impact (BI): Quantifies the financial damage, reputation damage, non-compliance, and privacy violation

Calculations

The vulnerability score is calculated based on the average score of each component. The values and definitions of each factor of OWASP Risk Rating is presented in

Table 11.

In this metric, a value in the range

is considered an attack of

‘Low’ criticality. The

‘Medium’ criticality range is

, and the values starting from 6 are labeled as

‘High’ in criticality. After assessing all the values, the final score is a multiplication between the score of Likelihood and the score of Impact as shown below:

The score of Likelihood is calculated as the mean of the Threat Agent and the Vulnerability scores:

And the score of Impact is calculated as the mean of the Technical and Business impact scores

Where the score of each component (TA, V, TI, BI) are respectively the average score of their factors:

The rank of the final OWASP severity-score (Low, Medium, High, Critical) is defined based on the combinations shown in the

Table 12. For example, if the

(Medium criticality) and the

(High criticality), the final score according the matrix is

High.

Limitations

The OWASP Risk Rating methodology, though widely used for web application security assessment, has notable limitations. Its reliance on subjective evaluations of factors like threat agent skill and impact severity can result in inconsistent ratings across different assessors and lead to biased outcomes. Additionally, OWASP Risk Rating lacks specificity for environments like cloud or mobile and does not adapt to rapidly changing threat landscapes, making it less responsive in dynamic security contexts. Finally, having many factors increases the complexity of this metric and its reliance on experts knowledge to assess each factor precisely.

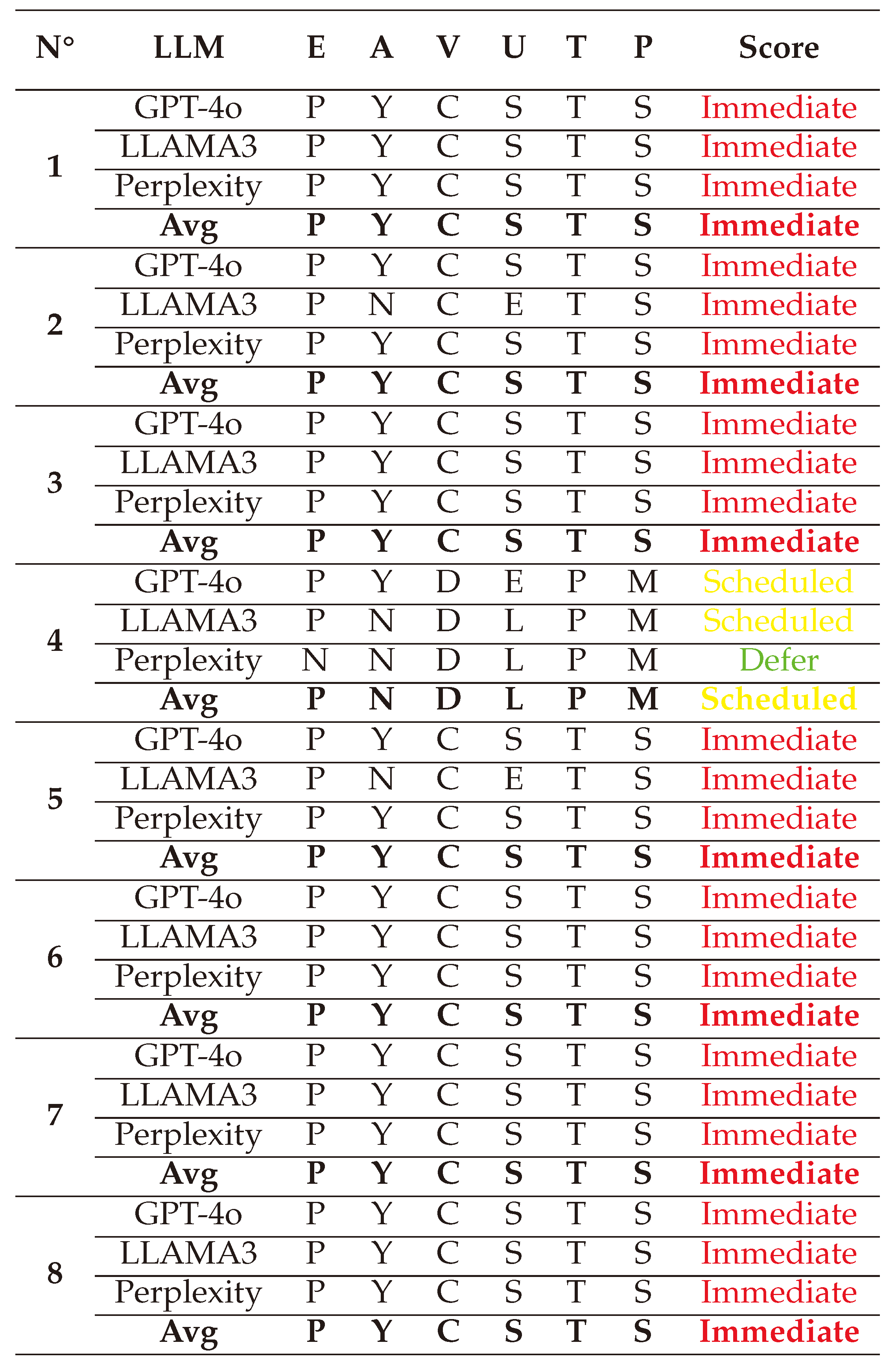

5.2.4. SSVC (Stakeholder-Specific Vulnerability Categorization) [128]

The SSVC is a framework that prioritizes vulnerabilities based on qualitative decision trees tailored to specific stakeholder roles, instead of numerical severity scores. The main two stakeholders represented are:

Suppliers: They decide how urgent it is to develop and release patches for their systems based on reports about potential vulnerabilities. Their decision tree is based on factors such as Exploitation, Technical Impact, Utility, and Safety Impact.

Deployers: They decide when and how to deploy the patches developed by the suppliers. Their decision tree is based on similar factors such as Exploitation, System Exposure, Automation, and Human Impact.

Calculations

In our case, we consider LLMselves as Suppliers trying to assess the potential vulnerabilities impacting their LLM.

Table 13 below show the different factors used in evaluating vulnerabilities using SSVC as a supplier.

The value of Utility (U) is calculated based in the values if Automatable (A) and Value Density (V) as follows:

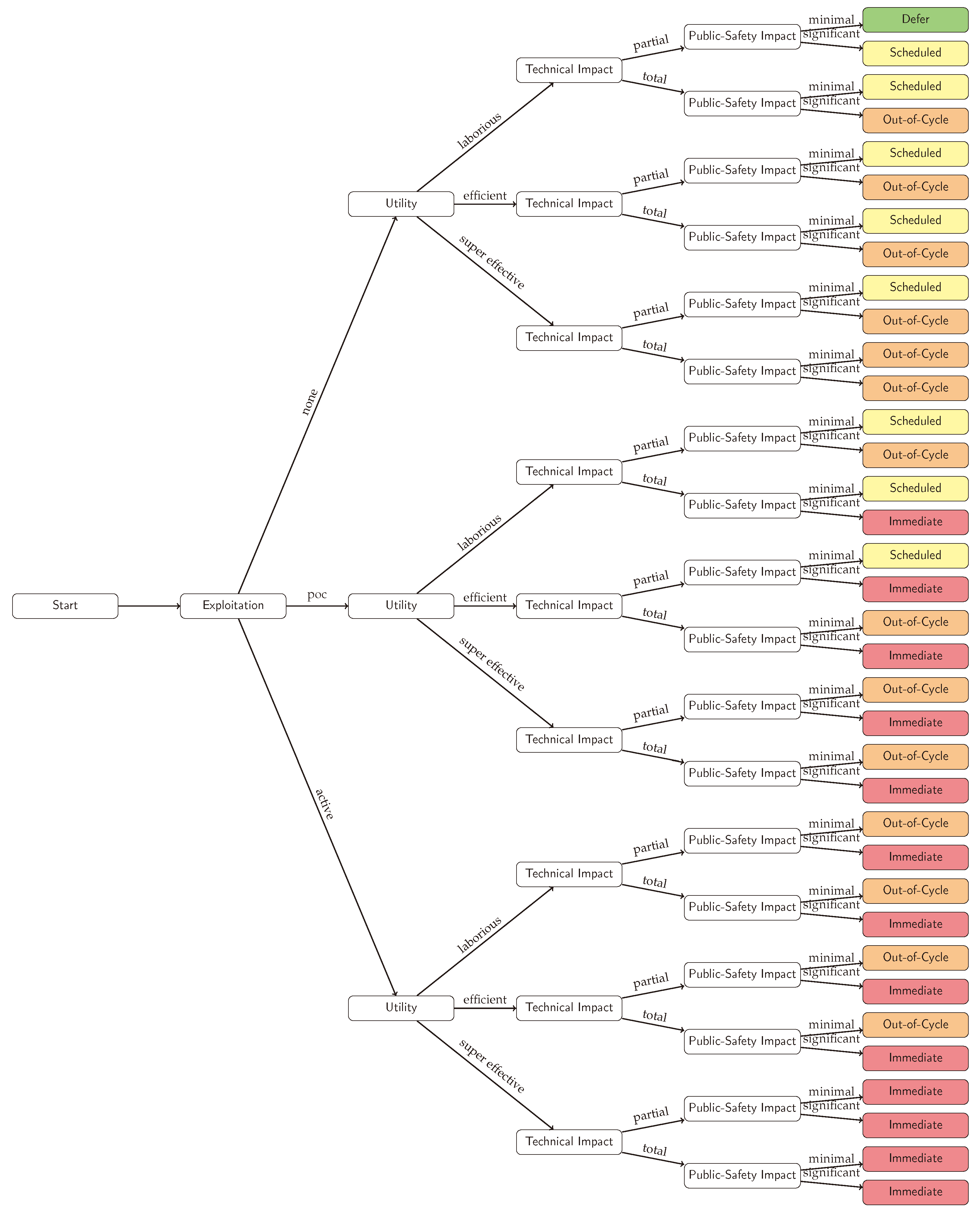

The final decision is taken by following the logic described in

Figure 4. There four main possible outcomes ranked from the lowest priority to the highest one are: Defer, Scheduled, Out-of-cycle, and Immediate. Each one of them represents the emergency level for developing corresponding patches.

Limitations

The SSVC metric has several limitations. It relies heavily on qualitative decision points, which may lead to subjective interpretations and inconsistencies across stakeholders. Additionally, the absence of numerical scoring might limit its integration with existing risk management systems that rely on quantitative data, potentially requiring significant adjustments to current workflows. Lastly, SSVC is tailored for specific stakeholder roles, which may make it be less effective in hybrid roles or complex environments where stakeholders overlap.

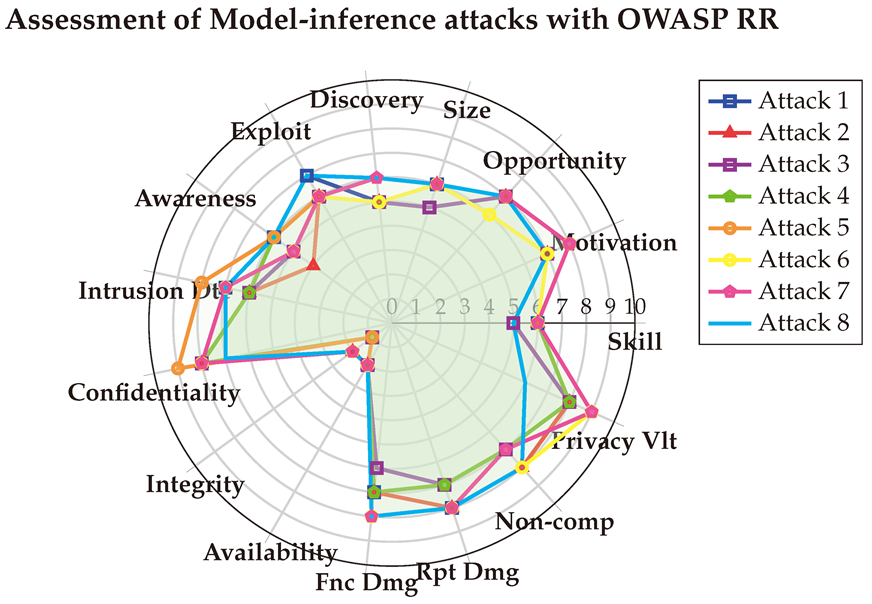

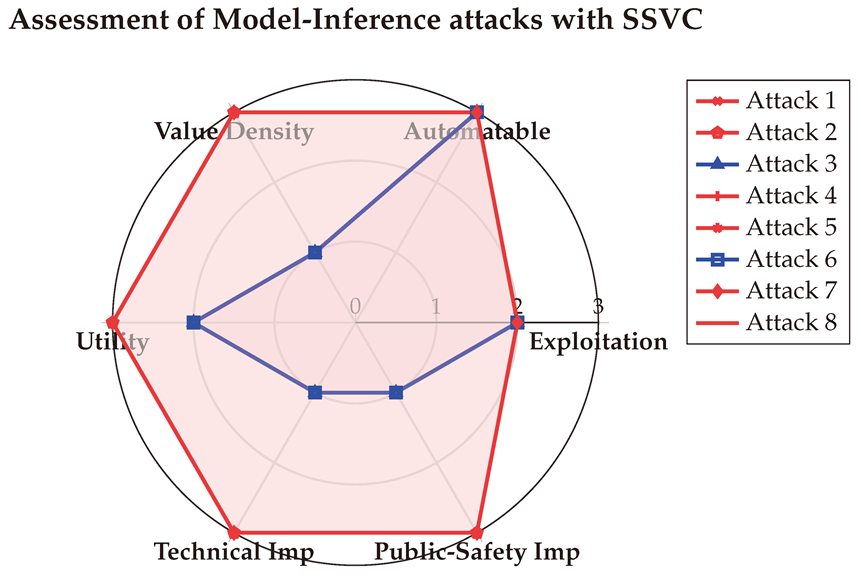

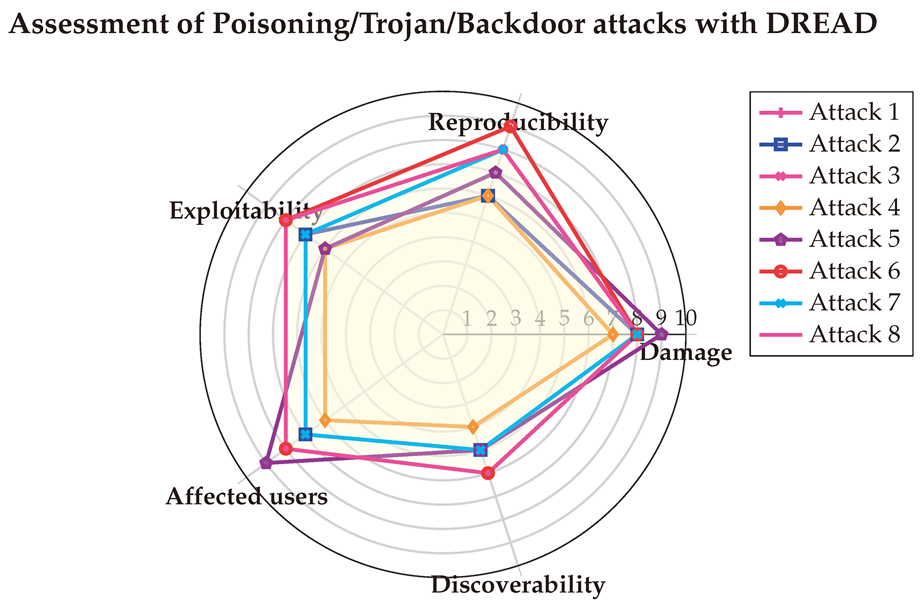

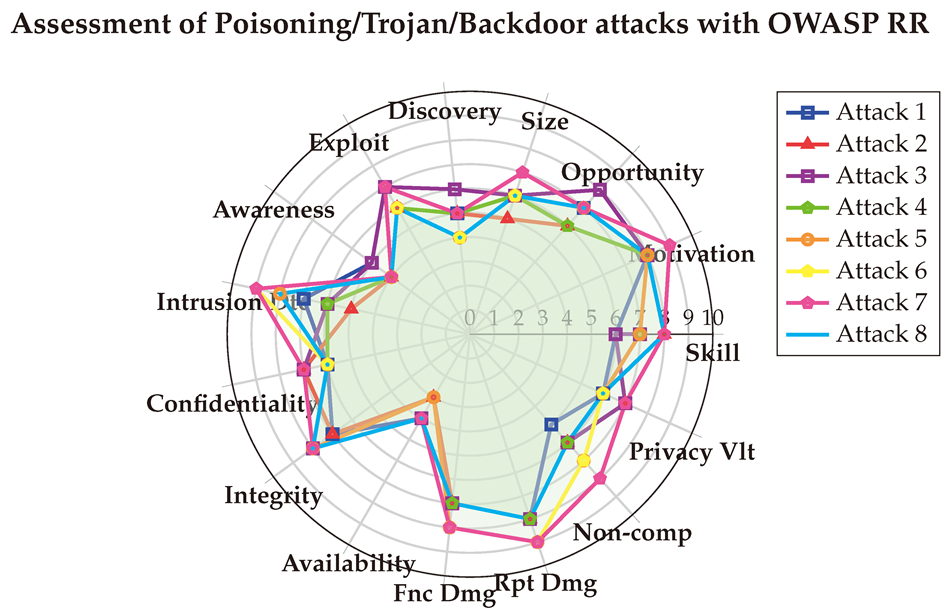

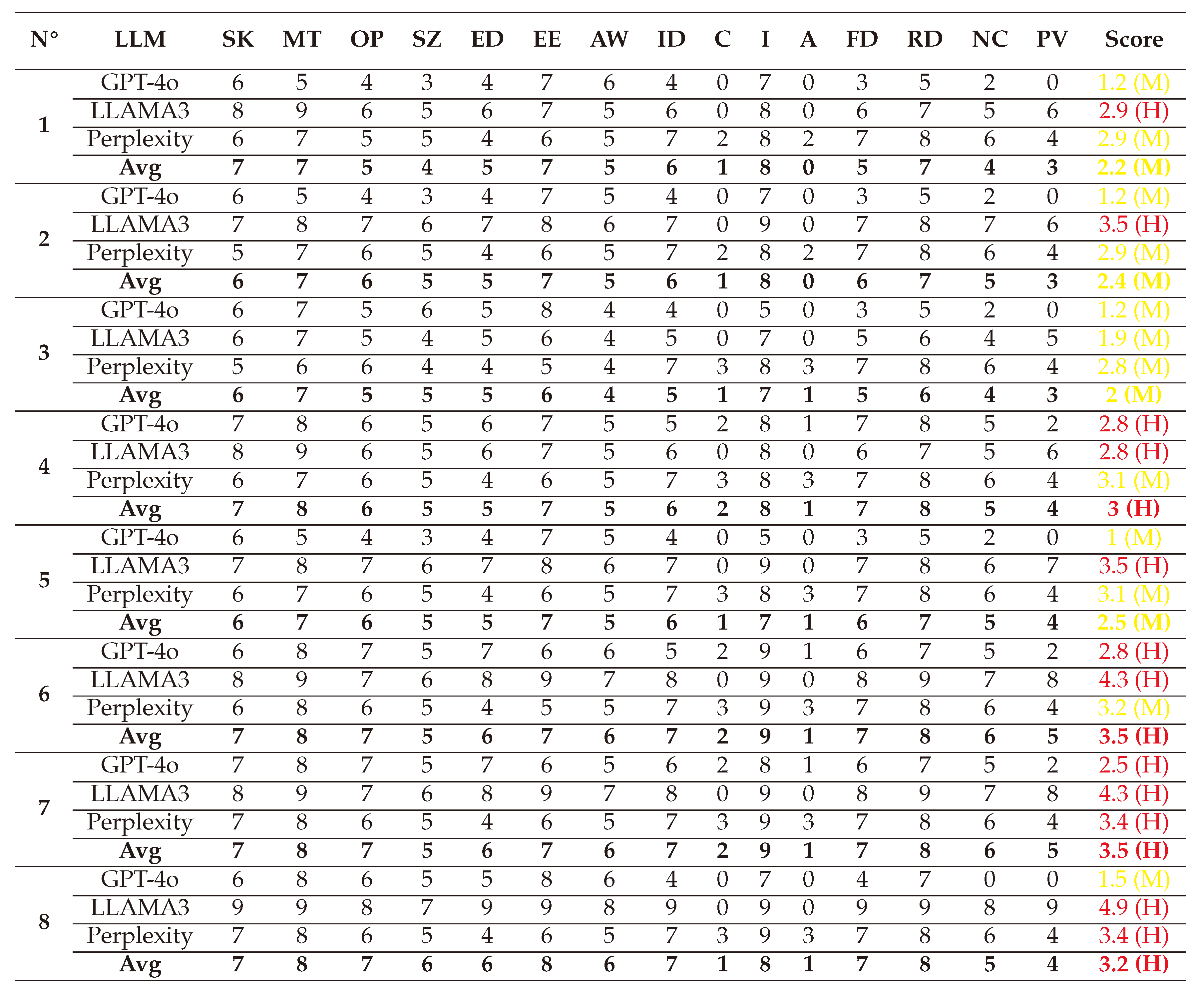

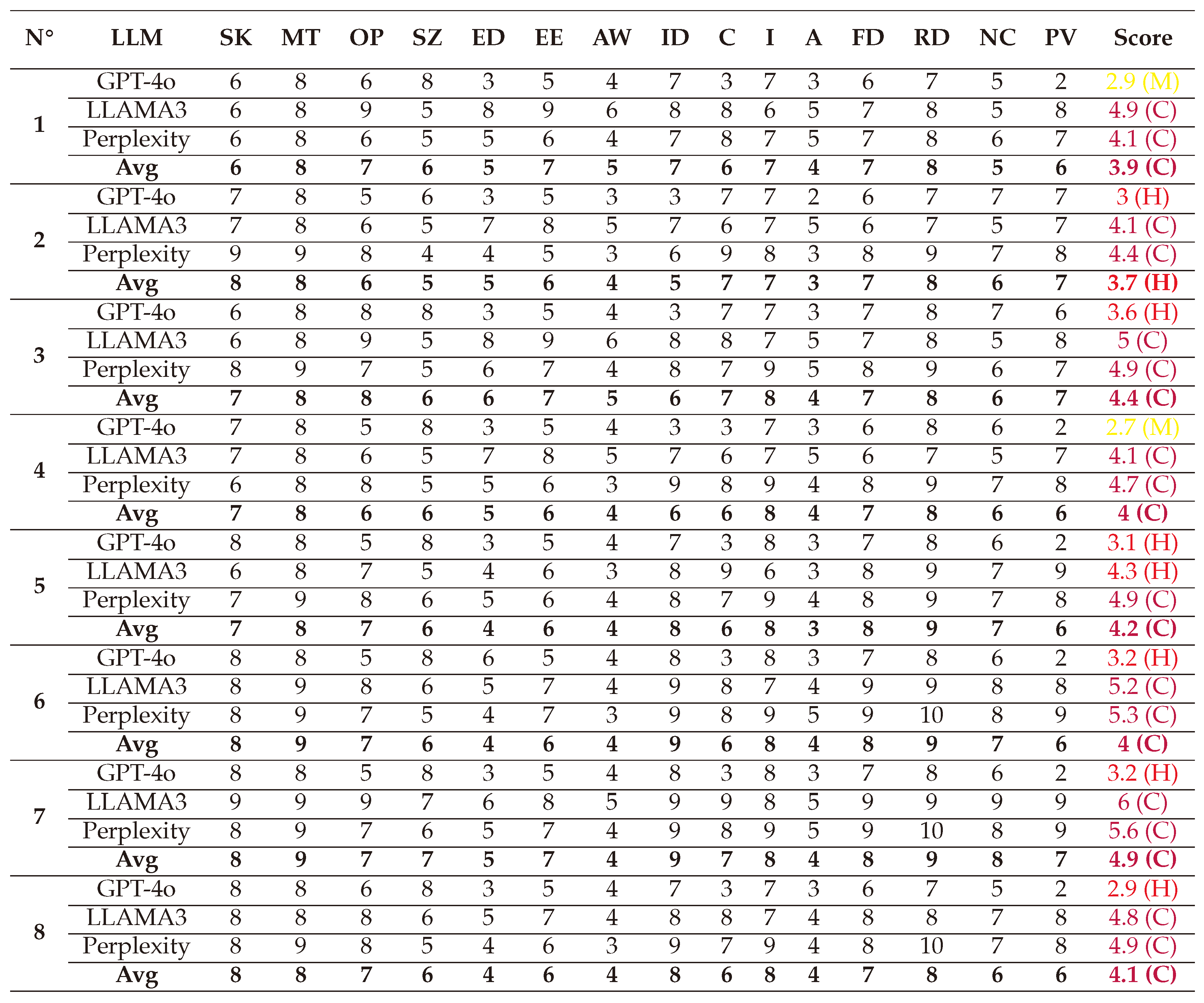

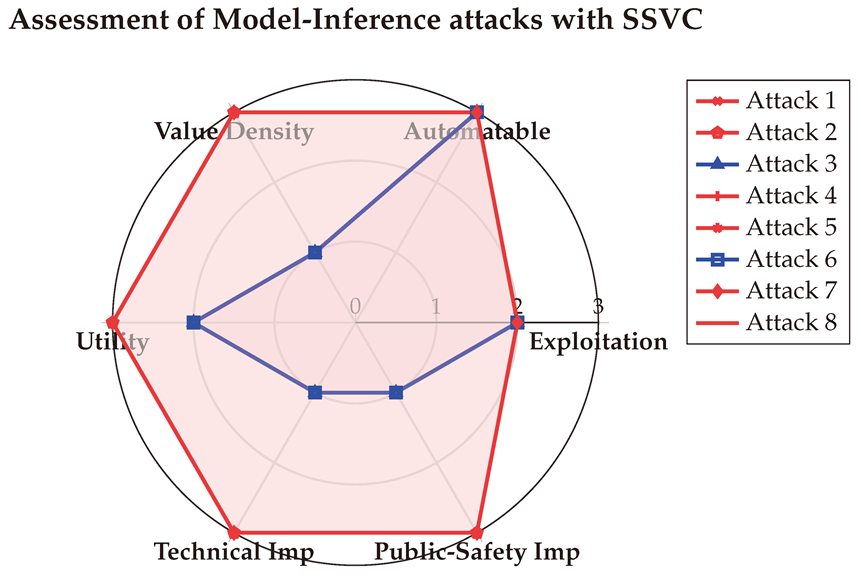

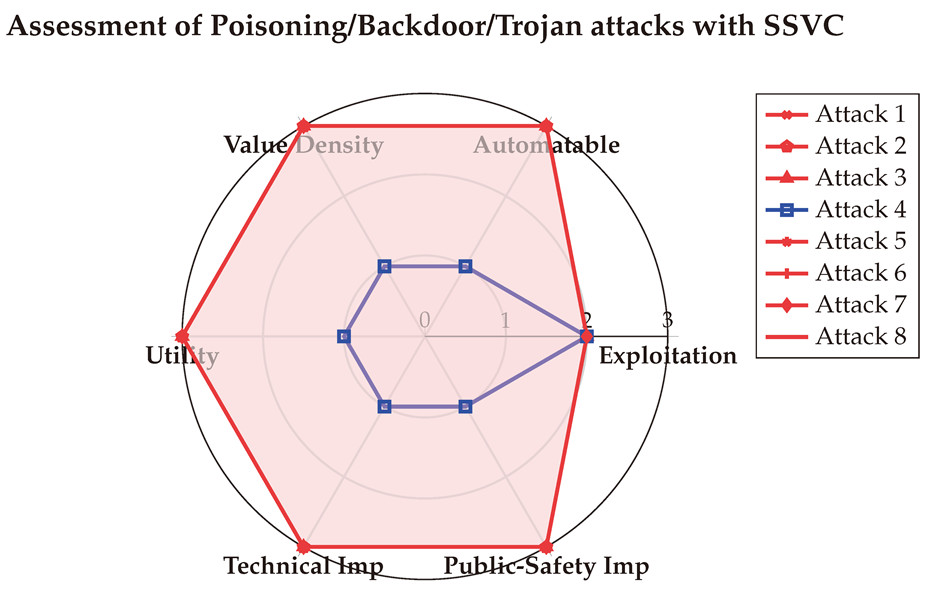

6. Assessment of AAs on LLMs with Vulnerability Metrics

In this section, we present and interpret the results of assessing the criticality of AAs against LLMs, grouped in seven types: White-box Jailbreak, Black-box Jailbreak, Prompt Injection, Evasion attacks, Model Extraction, Model Inference, and Poisoning/Trojan/Backdoor attacks. The detailed scores of these attacks given by the 3 LLMs (GPT-4o, LLAMA, and Perplexity) and their average are presented in

Appendix A. We represent the results in score-vectors and in spider-graph formats for more interpretability.

Note that for the qualitative factors of CVSS and SSVC, we represent their values numerically in the spider graph following this logic:

-

For CVSS Factors:

- −

If they have four values (eg. AV), they are represented with values from 1 to 4.

- −

If have three values (eg. PR, C, I, A), they are represented with values from 1 to 3.

- −

If they have two values (eg. AC, UI, S), they are represented with the values 2 and 4.

-

For SSVC Factors:

- −

If they have three values (eg. E, U), they are represented with values from 1 to 3.

- −

If they have two values (eg. A, V, T, P), they are represented with the values 1 and 3.

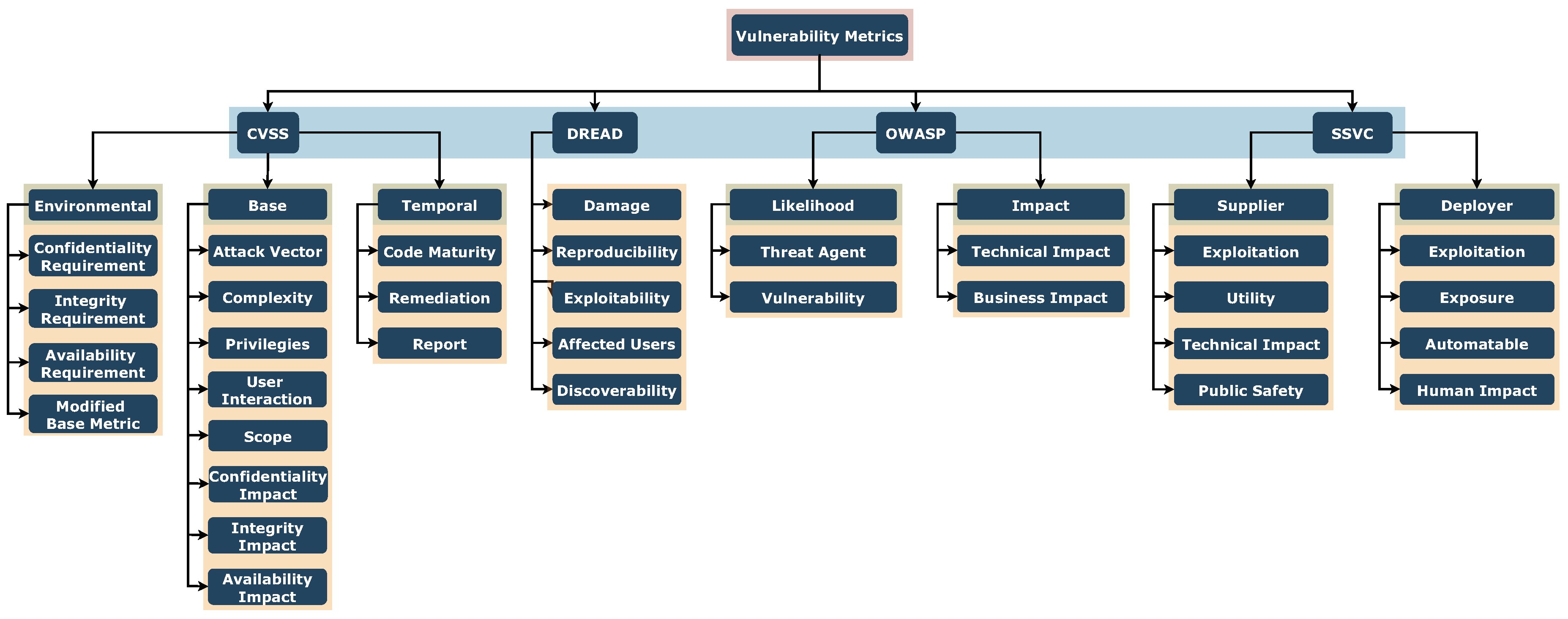

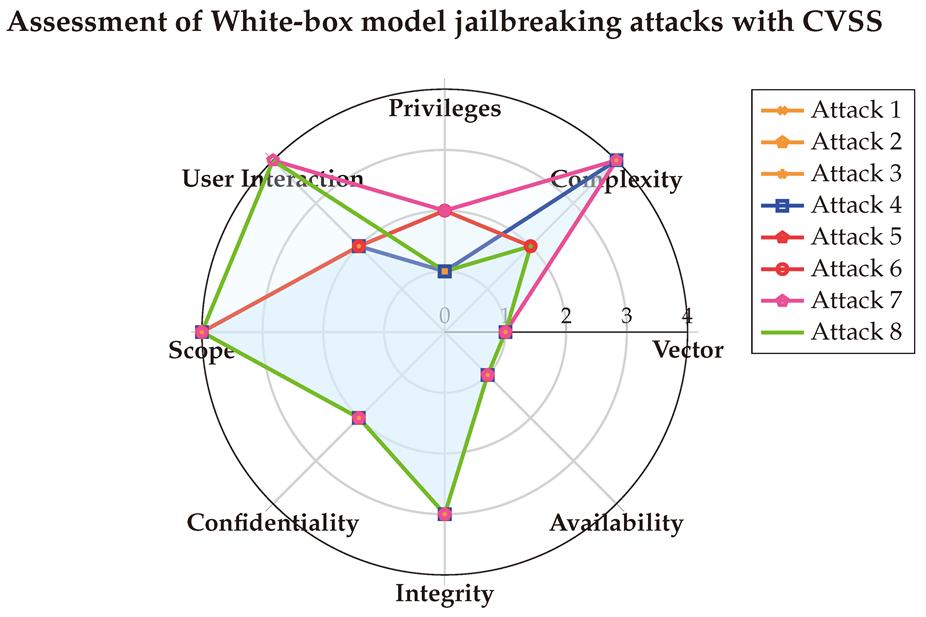

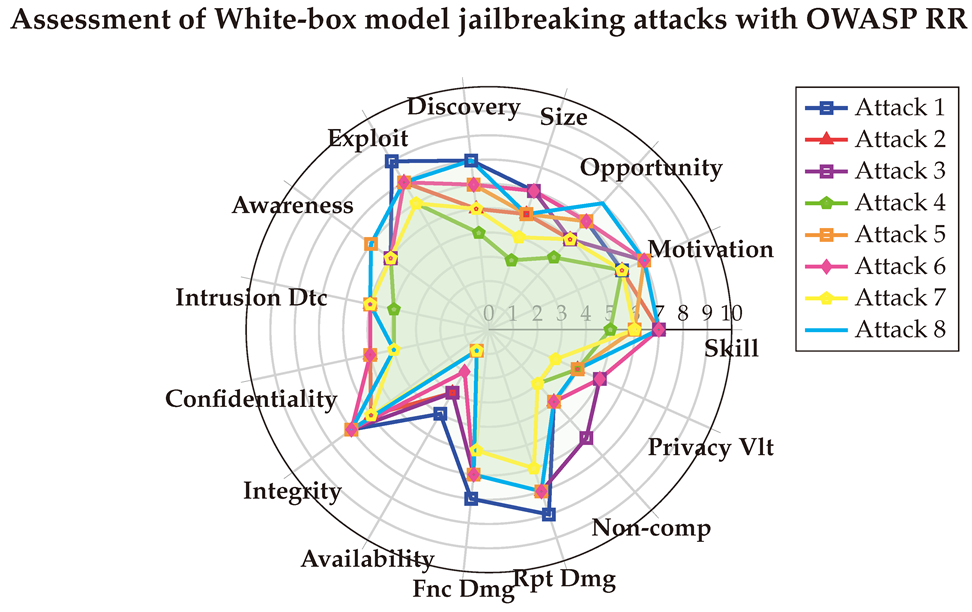

6.1. Assessment of White-box Jailbreak attacks

We start by evaluating White-box jailbreak attacks, the chosen attacks are the same presented in

Section 4.1.1 earlier: (1) GCG [

166], (2) Visual Modality [

96], (3) PGD [

48], (4) SCAV [

149], (5) Soft Prompt Threats [

115], (6) DrAttack [

74], (7) RADIAL [

36], (8) ReNeLLM [

31].

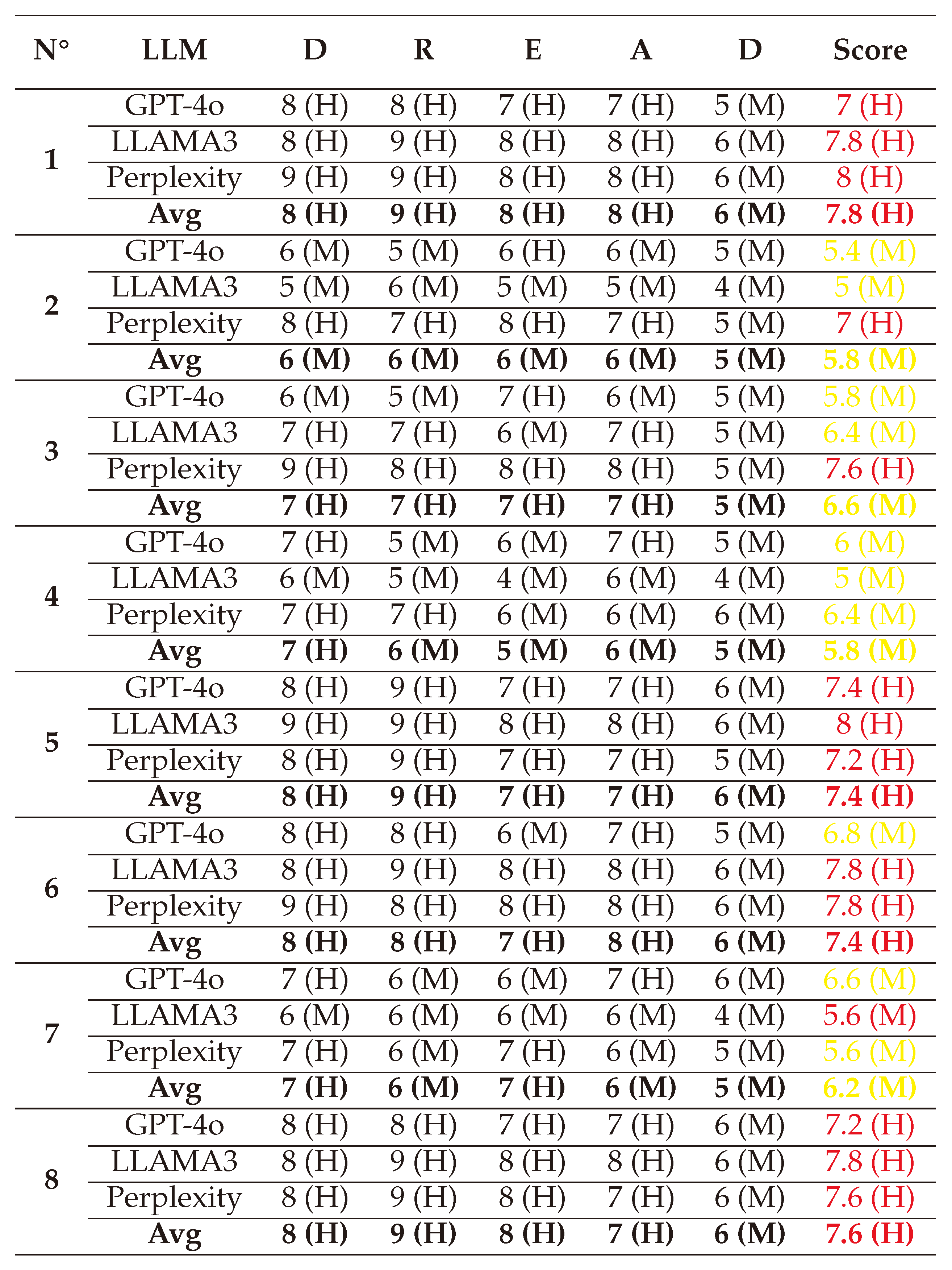

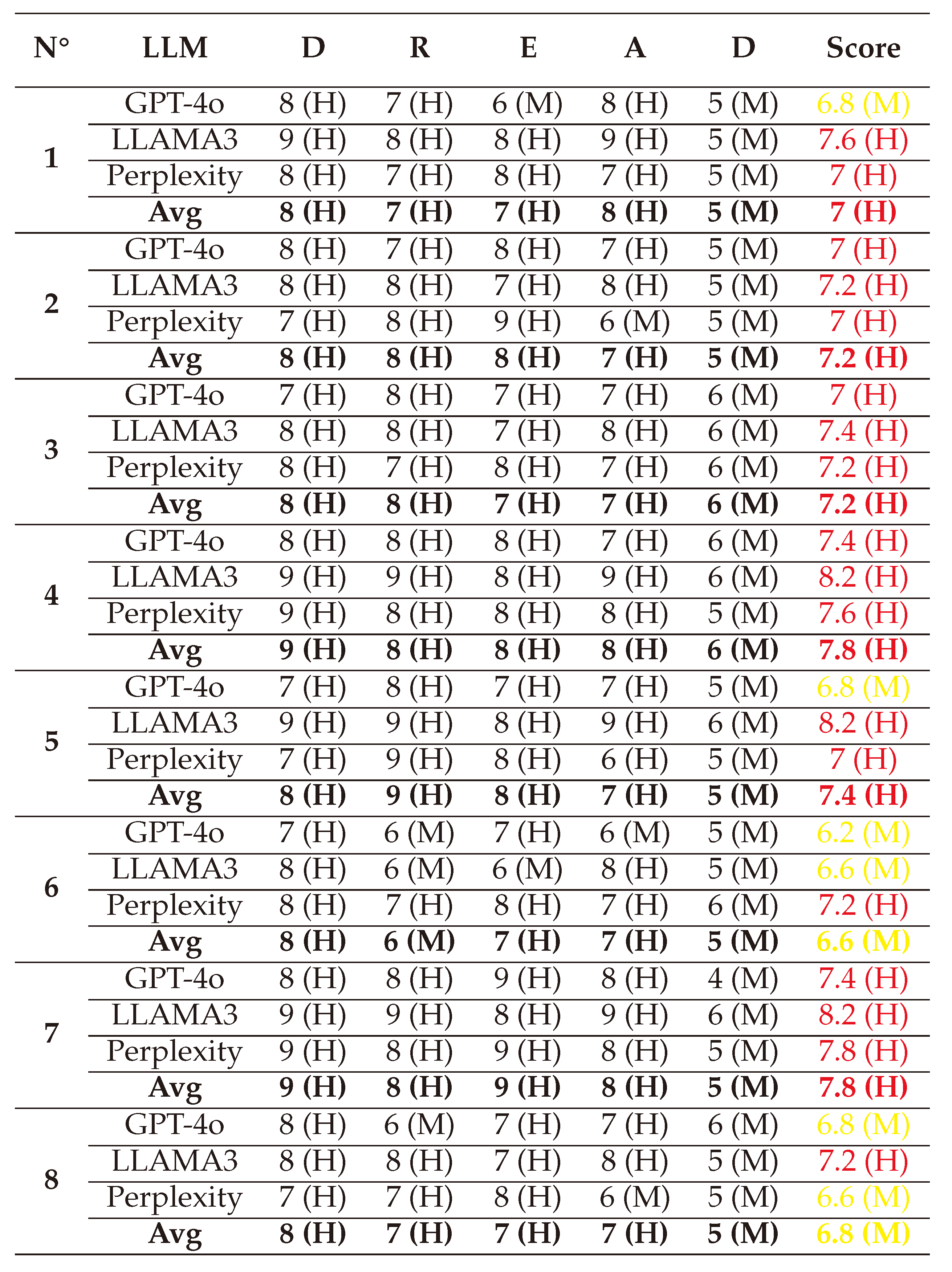

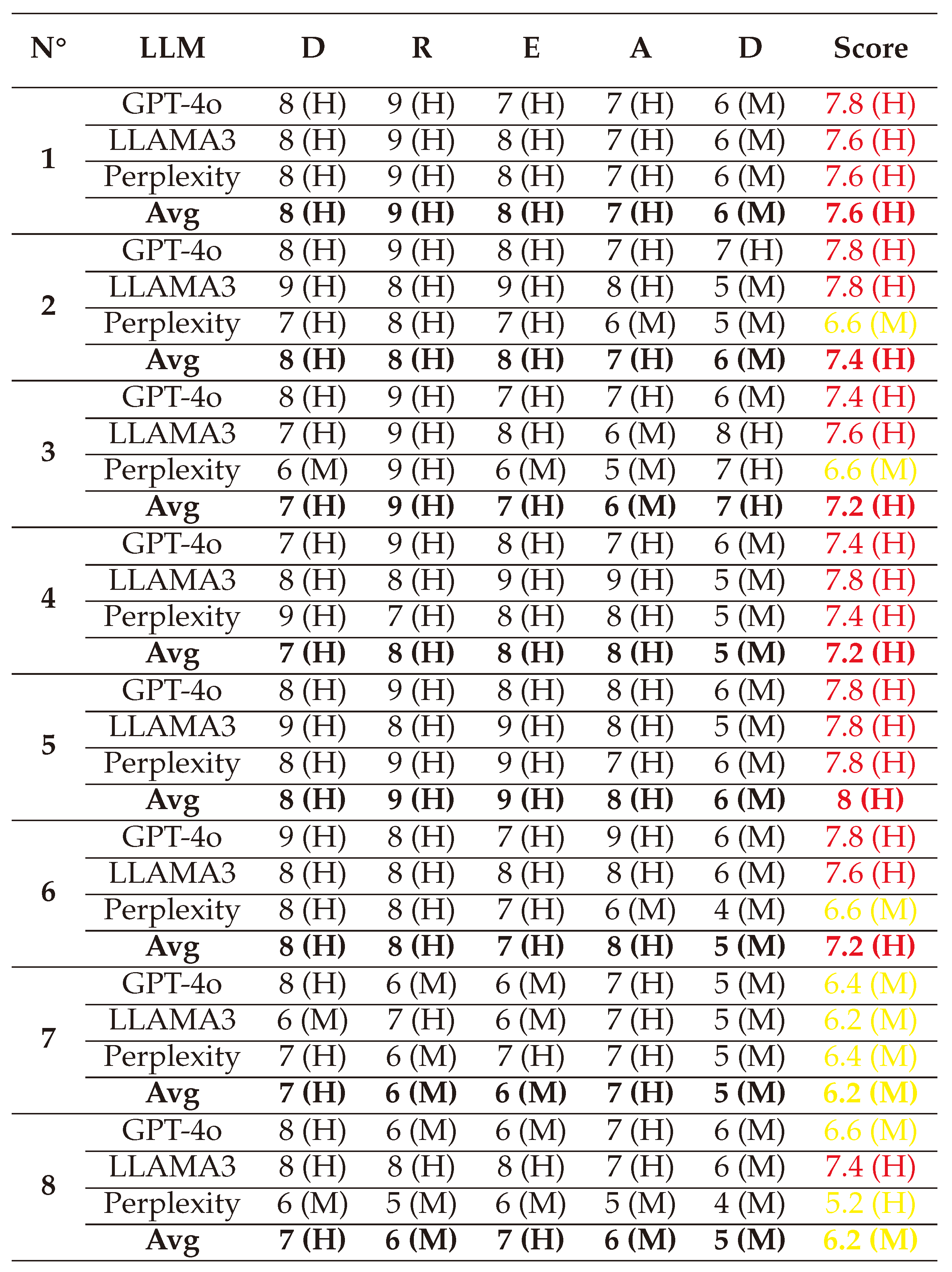

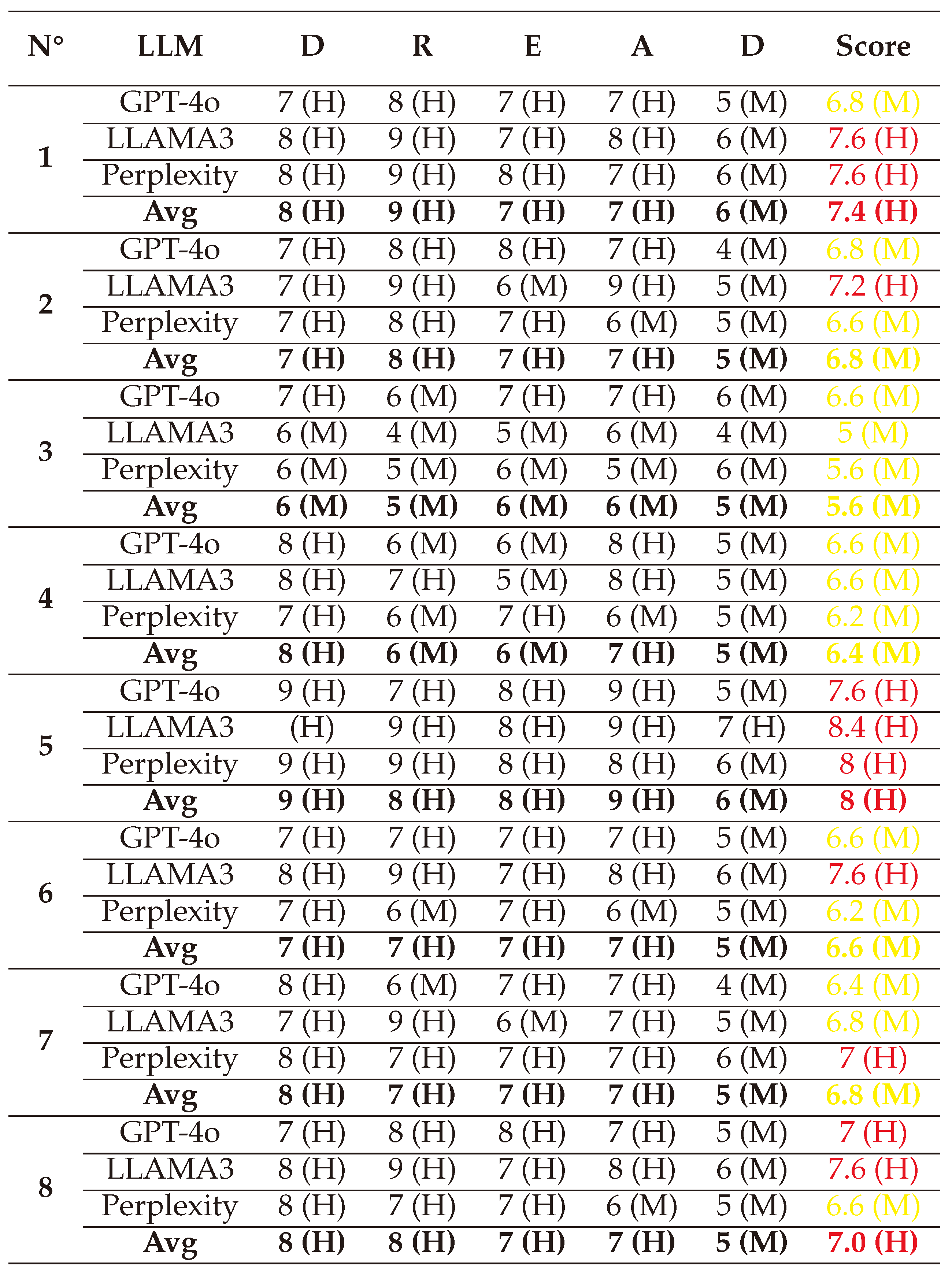

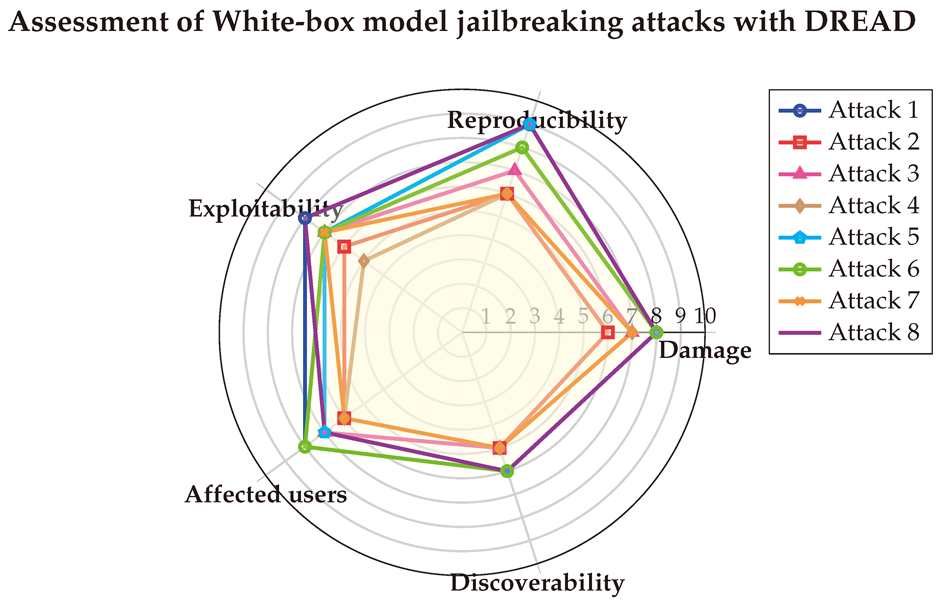

6.1.1. With DREAD

We start by evaluating the eight White-box jailbreaks attacks using DREAD [

92]. Here are below the attack vectors of each attack:

(1) → (D:8/R:9/E:8/A:8/D:6) = 7.8 (High)

(2) → (D:6/R:6/E:6/A:6/D:5) = 5.8 (Medium)

(3) → (D:7/R:7/E:7/A:7/D:5) = 6.6 (Medium)

(4) → (D:7/R:6/E:5/A:6/D:5) = 5.8 (Medium)

(5) → (D:8/R:9/E:7/A:7/D:6) = 7.4 (High)

(6) → (D:8/R:8/E:7/A:8/D:6) = 7.4 (High)

(7) → (D:7/R:6/E:7/A:6/D:5) = 6.2 (Medium)

(8) → (D:8/R:9/E:8/A:7/D:6) = 7.6 (High)

The detailed calculations for these attacks are presented in

Table A1, with assessments supervised by a

Human-in-the-Loop (HitL) to minimize

misconceptions. For instance, GPT-4o initially scored 8/10 for the Discoverability factor in DREAD for the first attack [

166], while LLAMA-3 and Perplexity AI both assigned a score of 6/10. GPT-4o’s higher score stemmed from a

misunderstanding of the factor’s meaning, interpreting Discoverability as the level of researcher awareness about the threat rather than the ease with which it can be discovered. After

clarifying this distinction, GPT-4o revised its score to 5/10, aligning more closely with the intended definition of the metric.

A different issue arose when evaluating the

impact of attacks. For example, the Damage factor of the second attack [

96] was rated 6/10 by GPT-4o and 5/10 by LLAMA-3, reflecting moderate damage due to situational input requirements, such as specific visual input use cases. However, Perplexity AI assigned a higher score of 8/10, citing potential scenarios where the attack could have a significant impact on the targeted system. In this case, the discrepancy was due to differing

interpretations rather than misunderstandings, making it difficult to standardize the scores. To address this,

averaging the three scores provided a balanced result, aligning closely with the consensus of GPT-4o and LLAMA-3.

Using this approach, we reduced inconsistencies in the scoring process. The final DREAD scores are illustrated in a spider graph, highlighting that White-box Jailbreak attacks can inflict considerable damage on systems while being relatively easy to reproduce. However, discovering these threats remains a significant challenge.

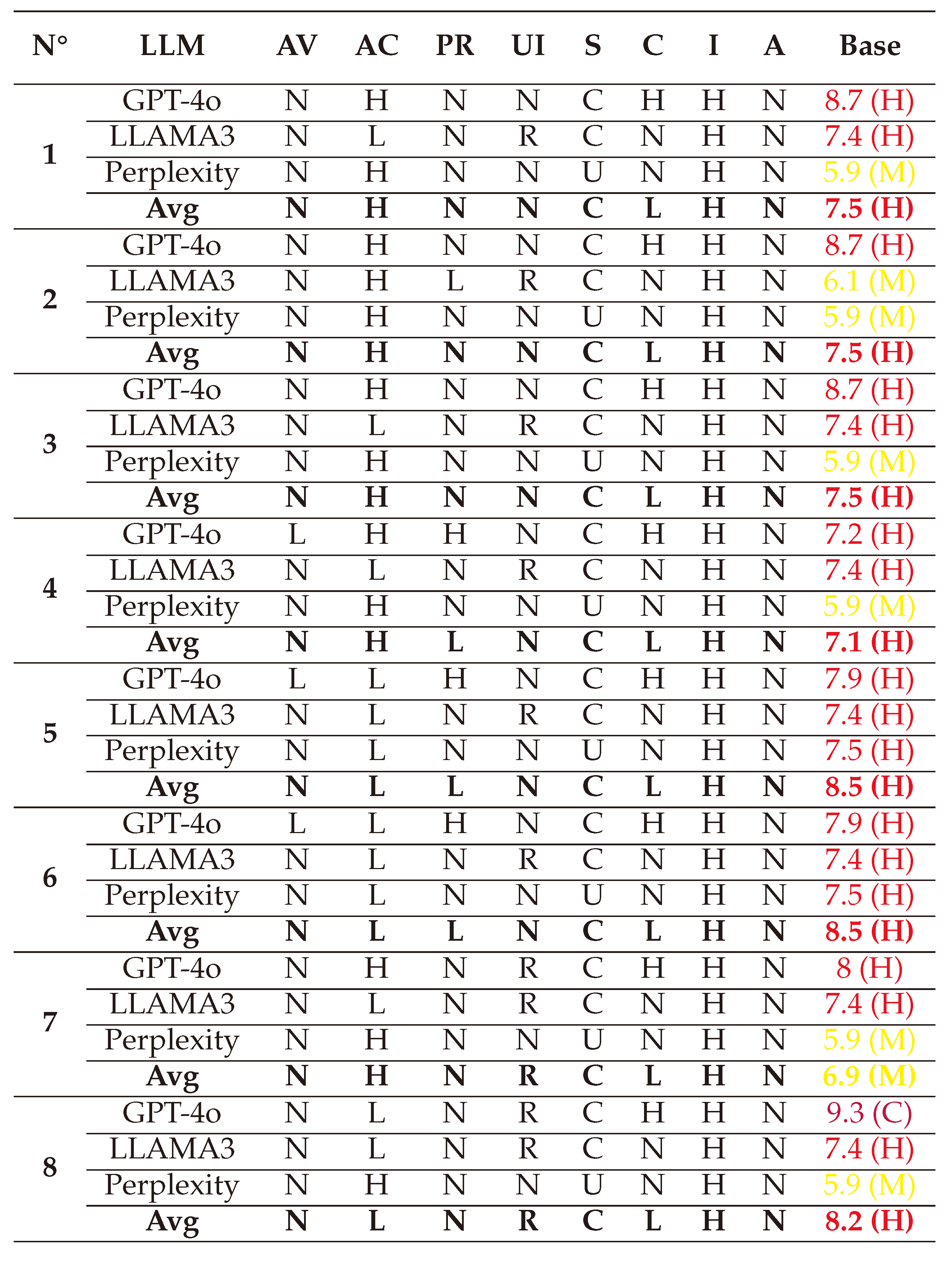

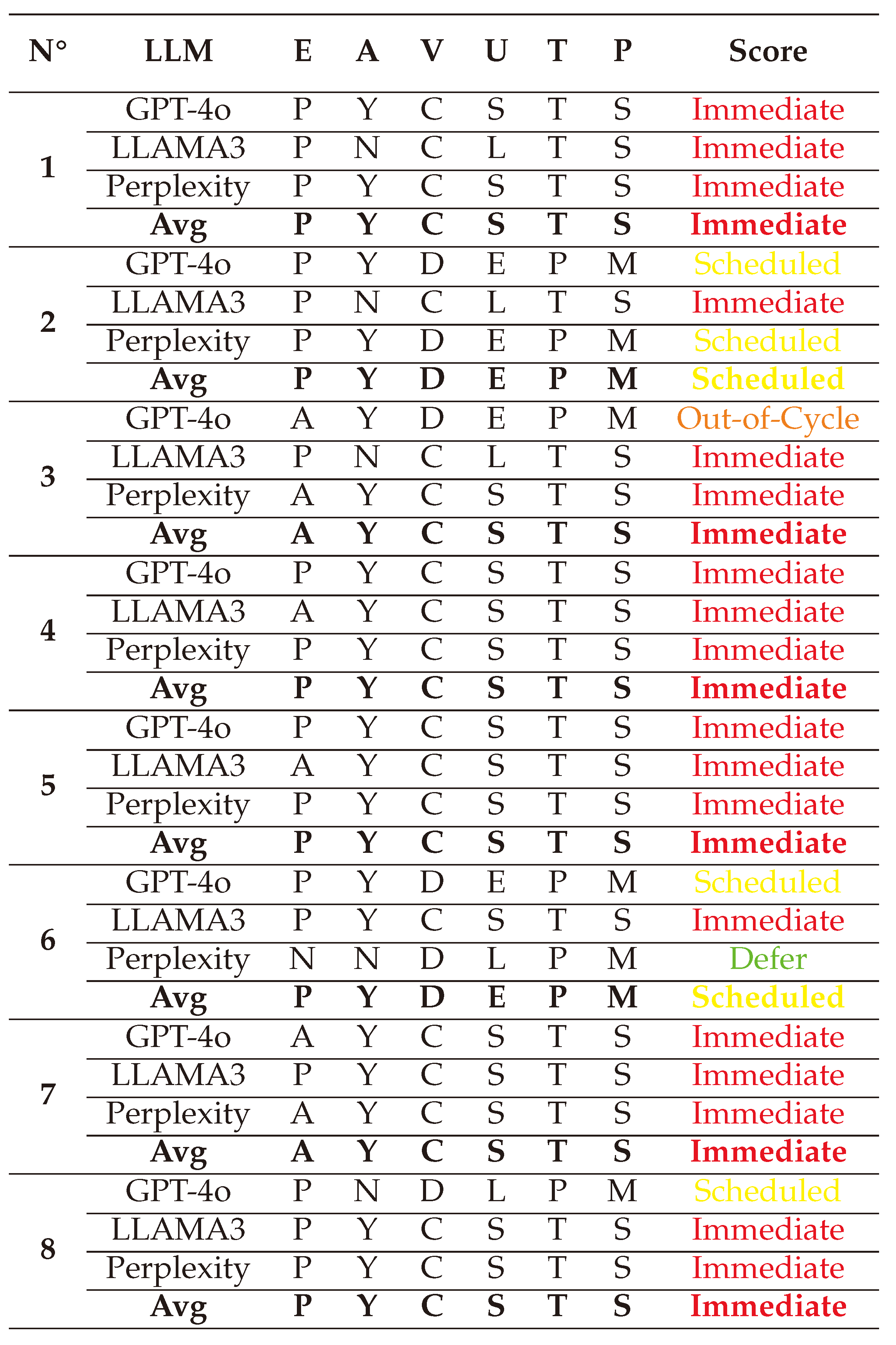

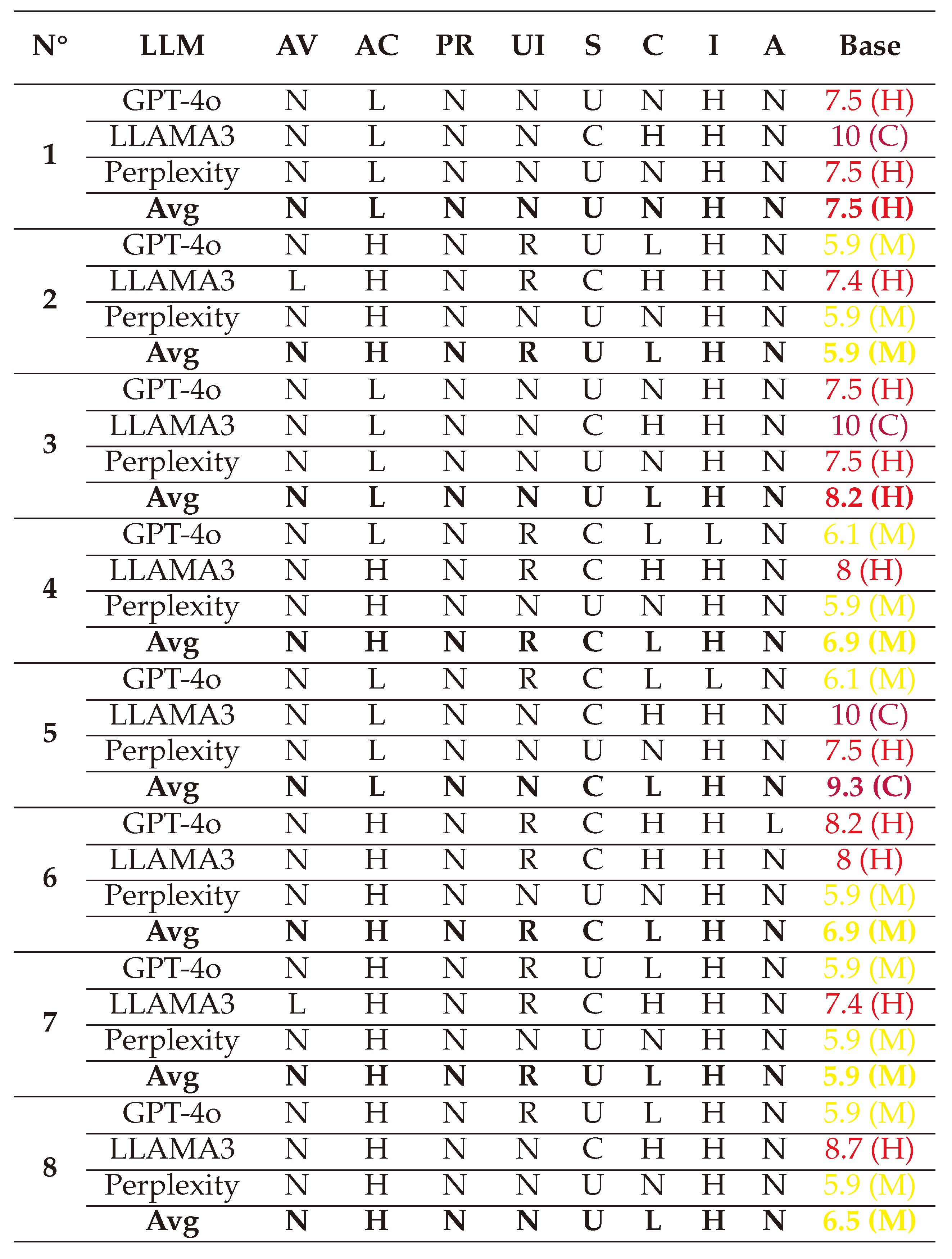

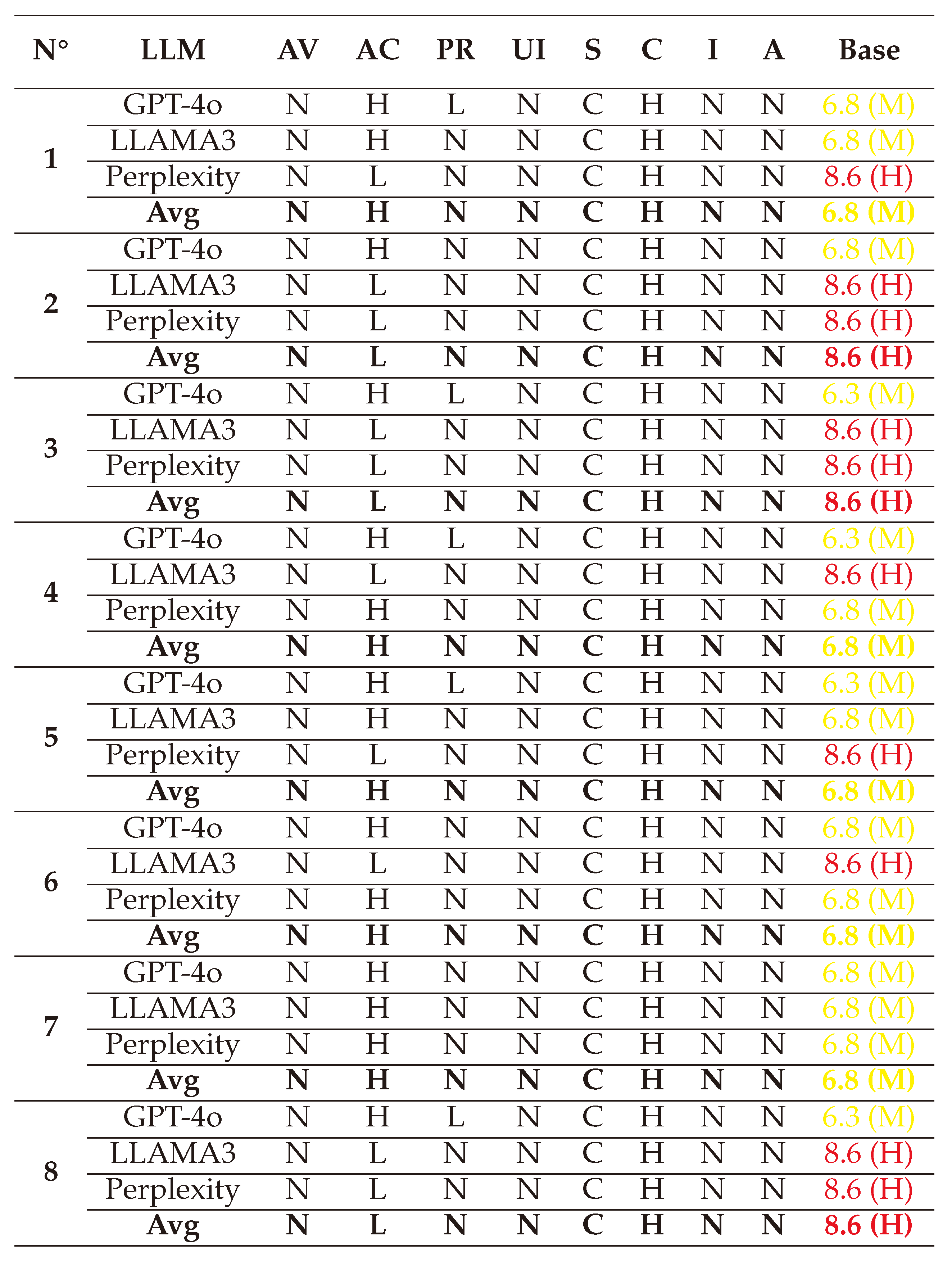

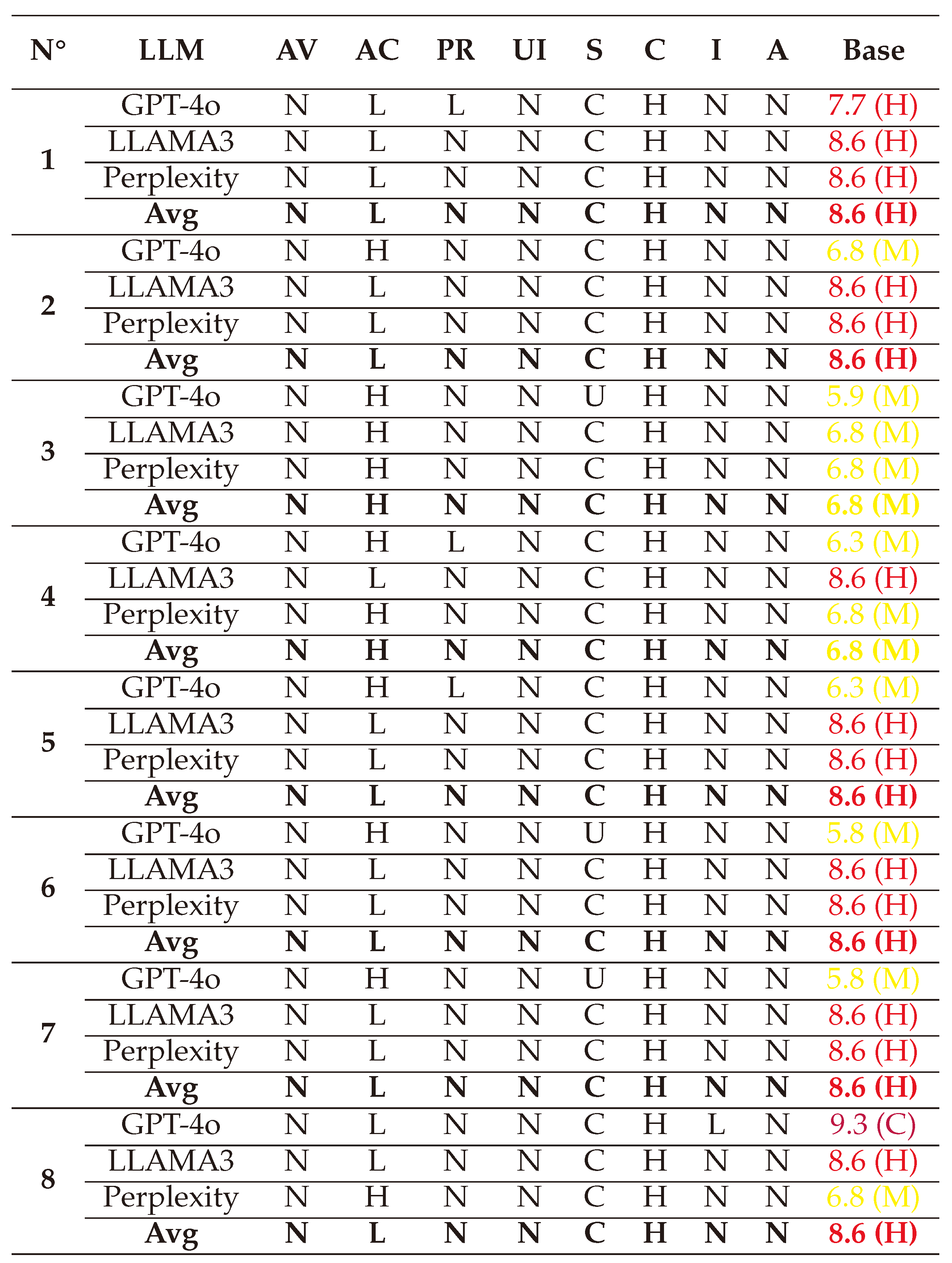

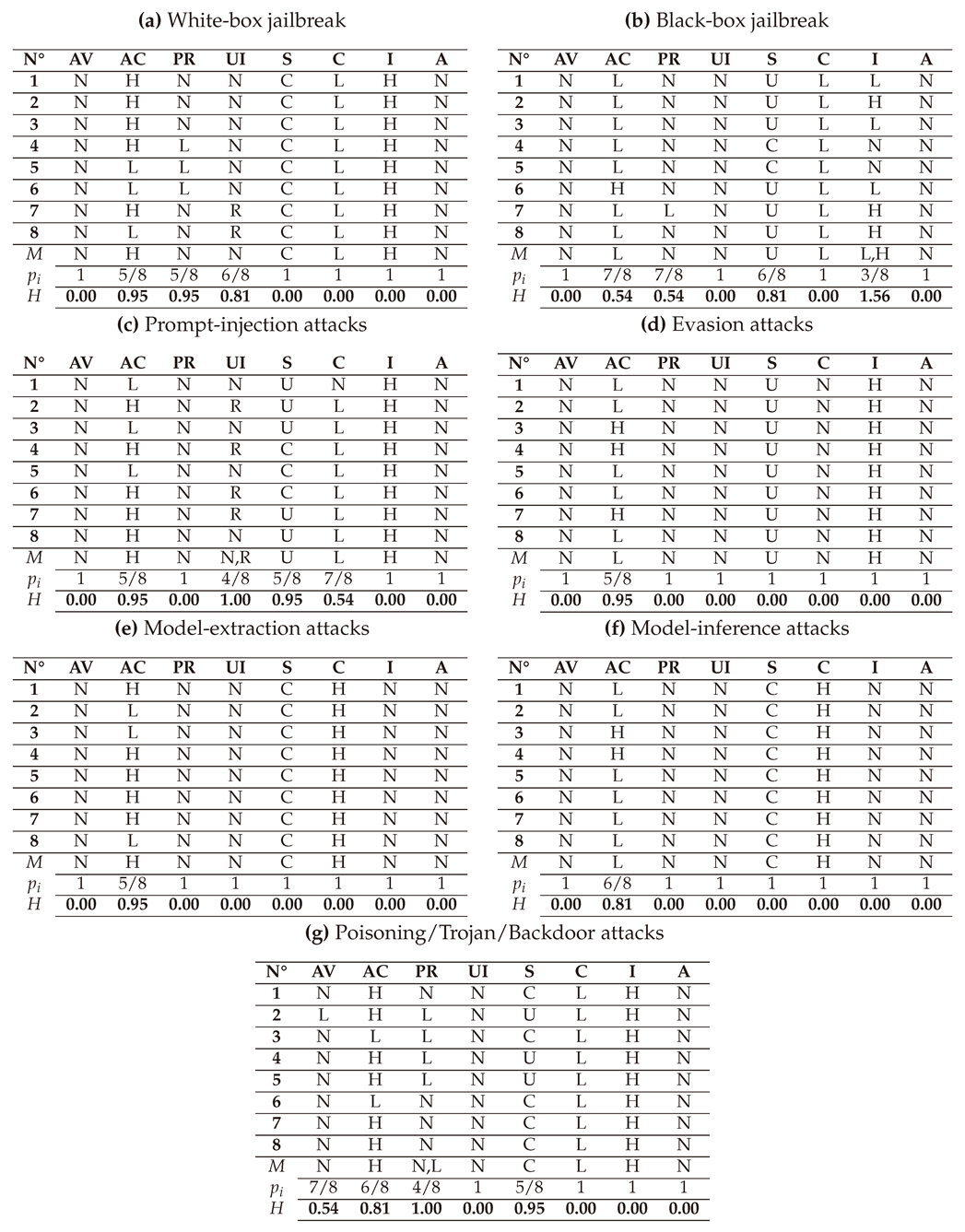

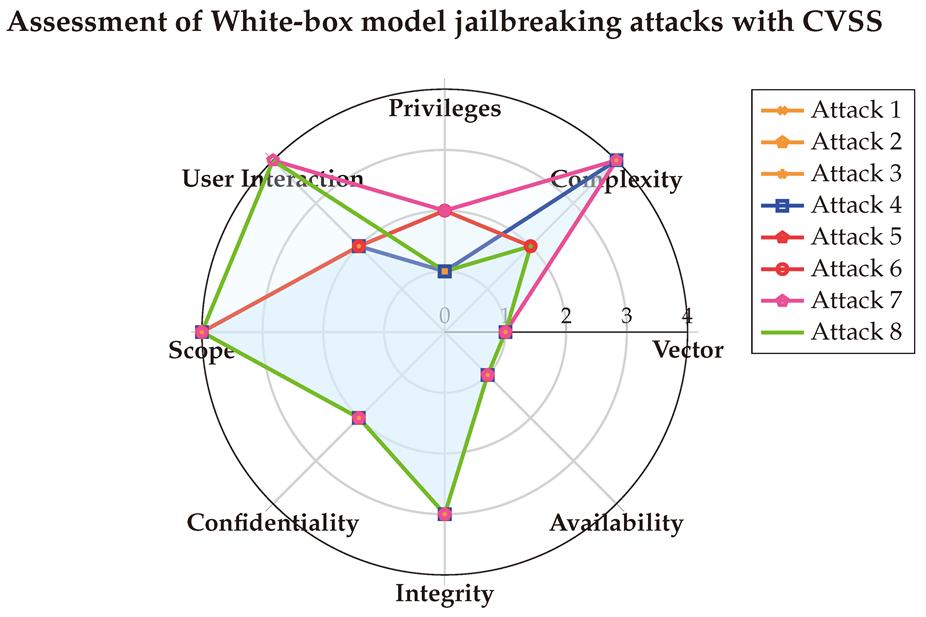

6.1.2. With CVSS

Then, we evaluate the assessment of these attacks using CVSS [

114]. The corresponding CVSS Vectors are shown below:

(1) → (AV:N/AC:H/PR:N/UI:N/S:C/C:L/I:H/A:N) = 7.5 (High)

(2) → (AV:N/AC:H/PR:N/UI:N/S:C/C:L/I:H/A:N) = 7.5 (High)

(3) → (AV:N/AC:H/PR:N/UI:N/S:C/C:L/I:H/A:N) = 7.5 (High)

(4) → (AV:N/AC:H/PR:N/UI:N/S:C/C:L/I:H/A:N) = 7.1 (High)

(5) → (AV:N/AC:L/PR:L/UI:N/S:C/C:L/I:H/A:N) = 8.5 (High)

(6) → (AV:N/AC:L/PR:L/UI:N/S:C/C:L/I:H/A:N) = 8.5 (High)

(7) → (AV:N/AC:H/PR:N/UI:R/S:C/C:L/I:H/A:N) = 6.9 (Medium)

(8) → (AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:H/A:N) = 8.2 (High)

The detailed scores are presented in

Table A2. During the analysis, some LLMs encountered challenges in correctly interpreting the characteristics of each attack. For instance, GPT-4o initially concluded that White-box jailbreak attacks only impact the Confidentiality of data, with no effect on Integrity—a conclusion that was refuted by the other two LLMs. It was crucial to identify such

misunderstandings and

guide the models to recognize their errors. Rather than providing direct corrections, we prompted GPT-4o with

questions such as:

Do these attacks target Integrity given that they involve manipulation of gradients and embeddings? This approach enabled the model to identify and rectify its own mistake while fostering greater

caution in subsequent assessments.

This process highlights another key advantage of using multiple LLMs: they provide diverse perspectives and explanations, which help identify and address unconventional or erroneous analyses. Moreover, there was also other slight divergence in scoring factors such as the Scope and User Interaction; but using an averaging method helps align the final scores to the majority.

After averaging the final values, we visualized the scores using a spider chart for clarity. The CVSS scores reveal that White-box attacks are typically executed through the network, requiring low-to-medium privileges and primarily targeting the Integrity of systems.

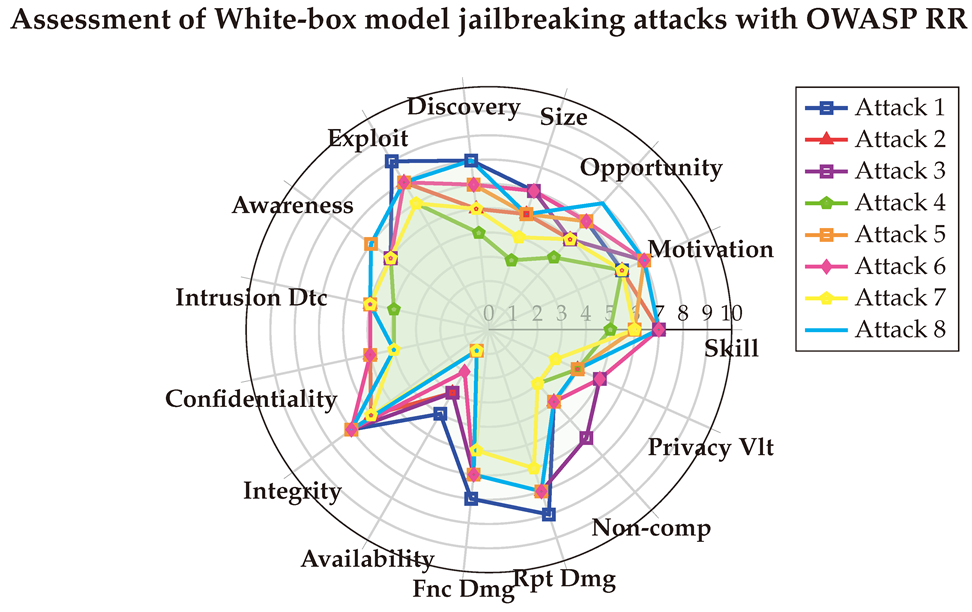

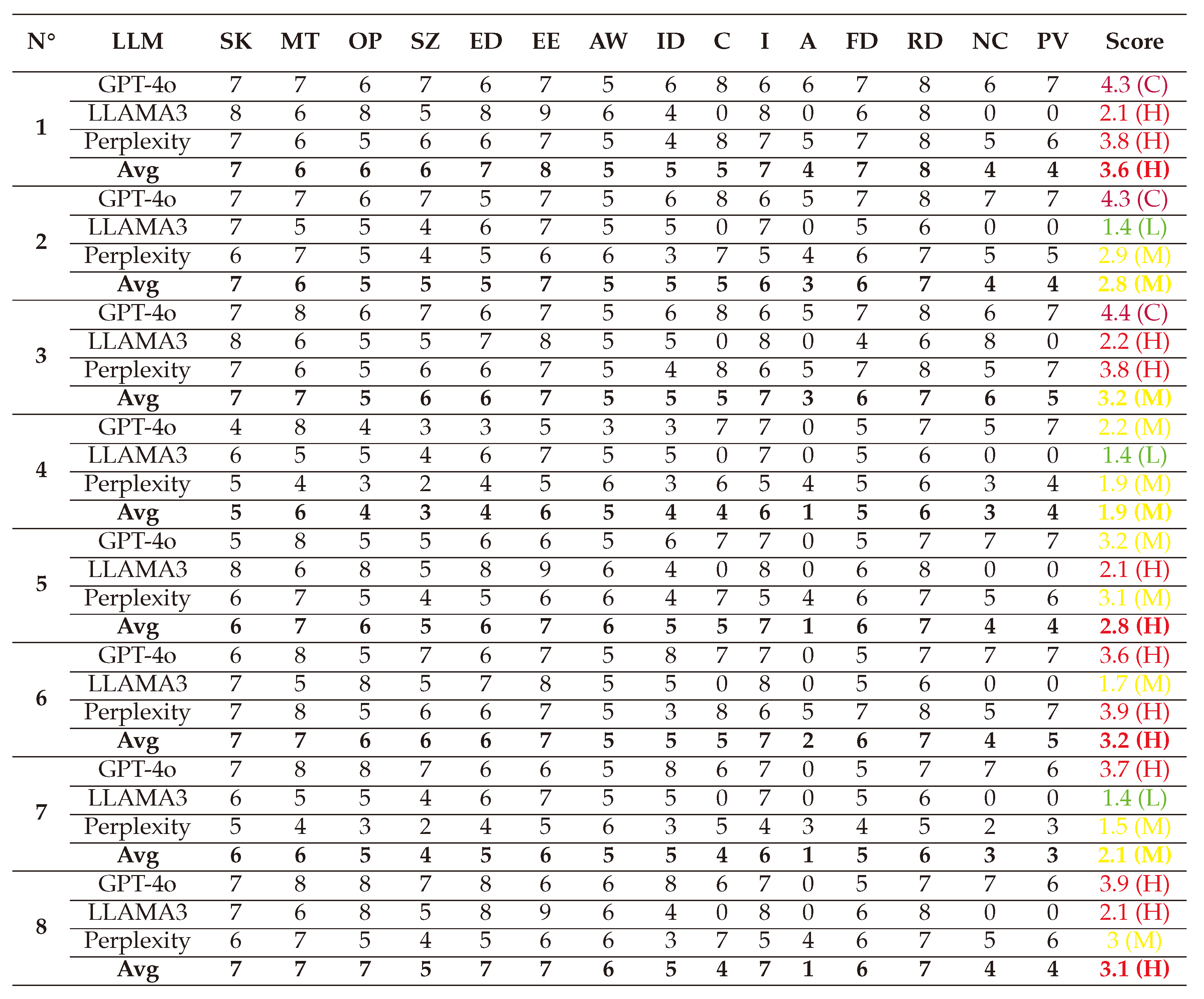

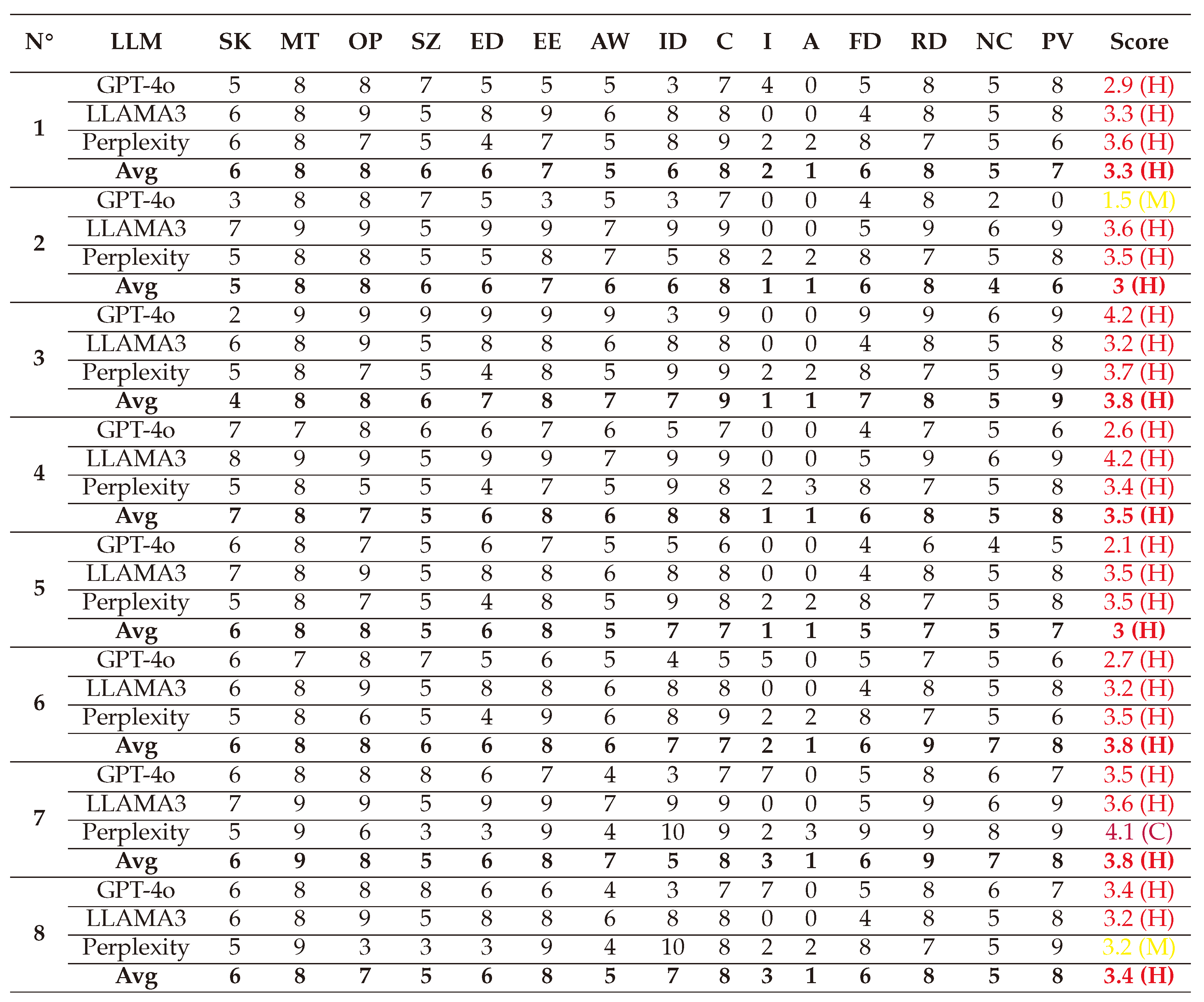

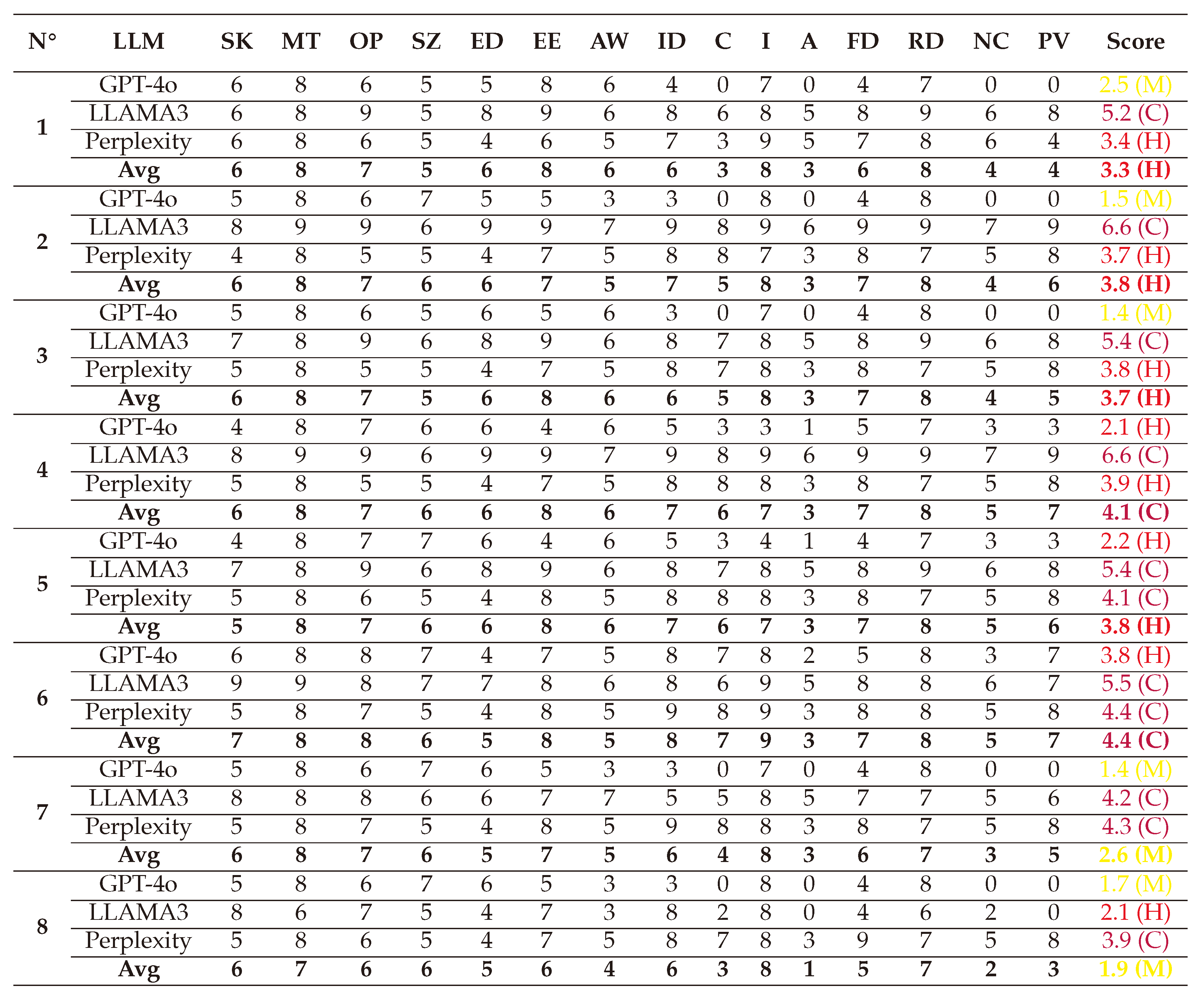

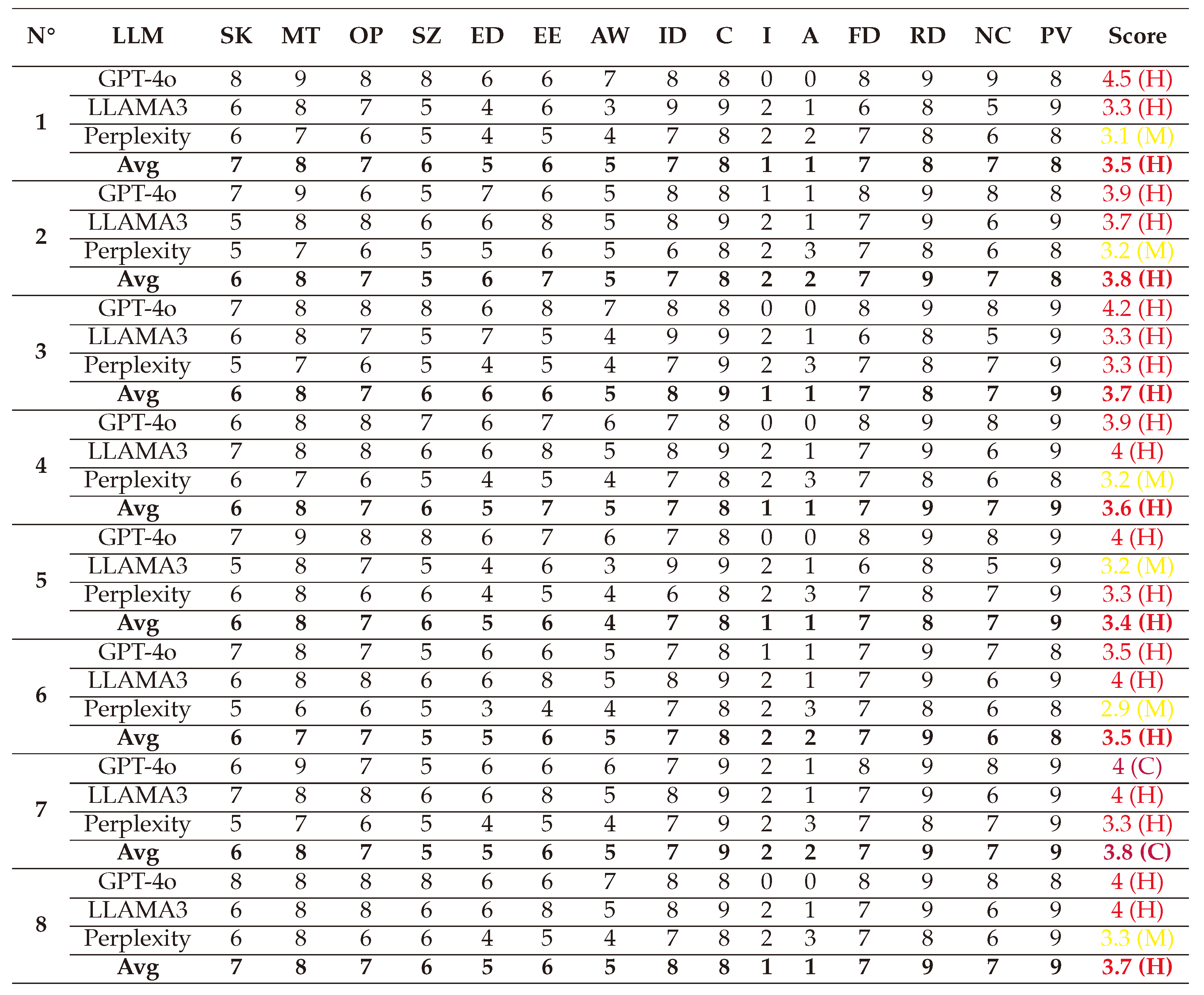

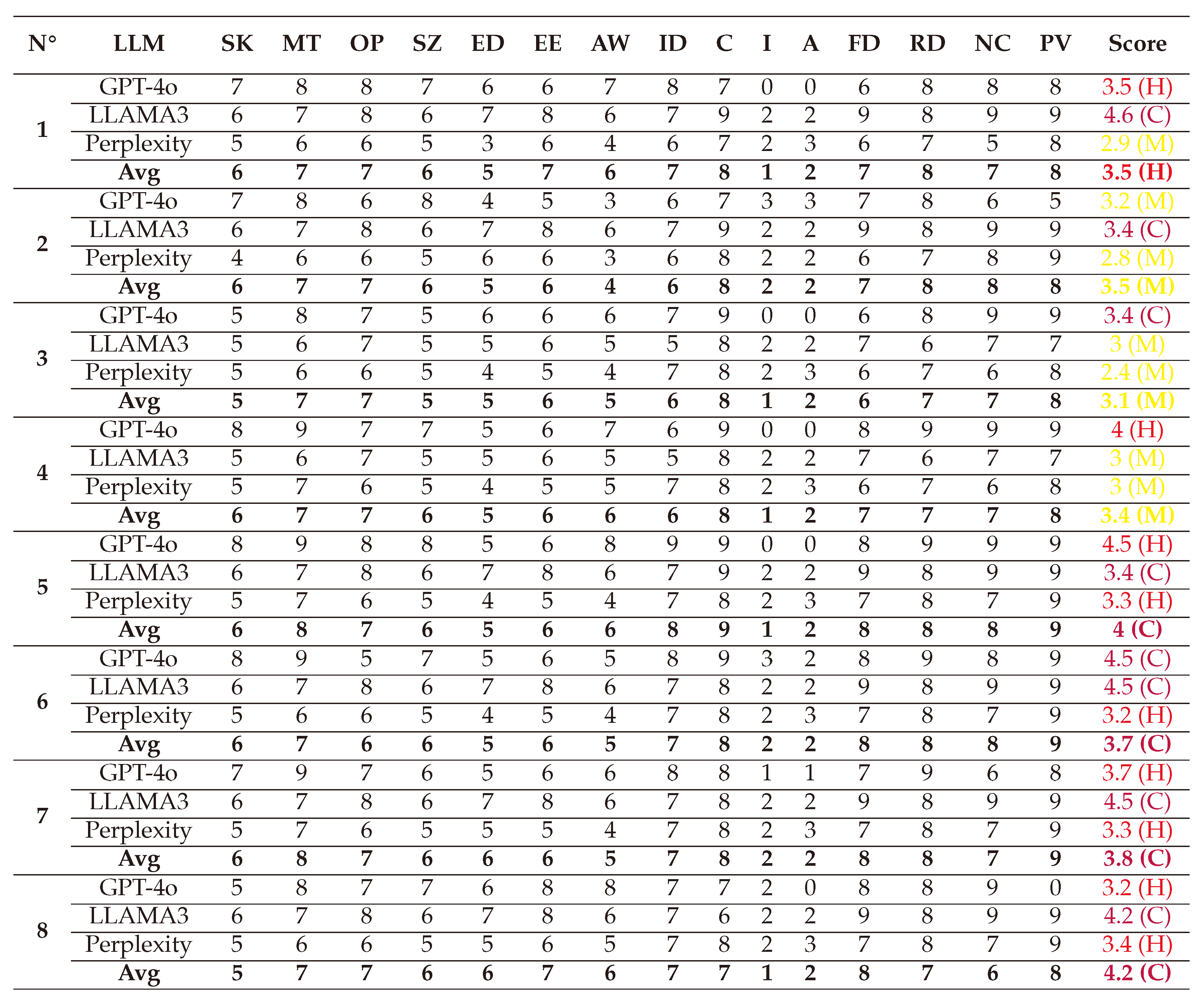

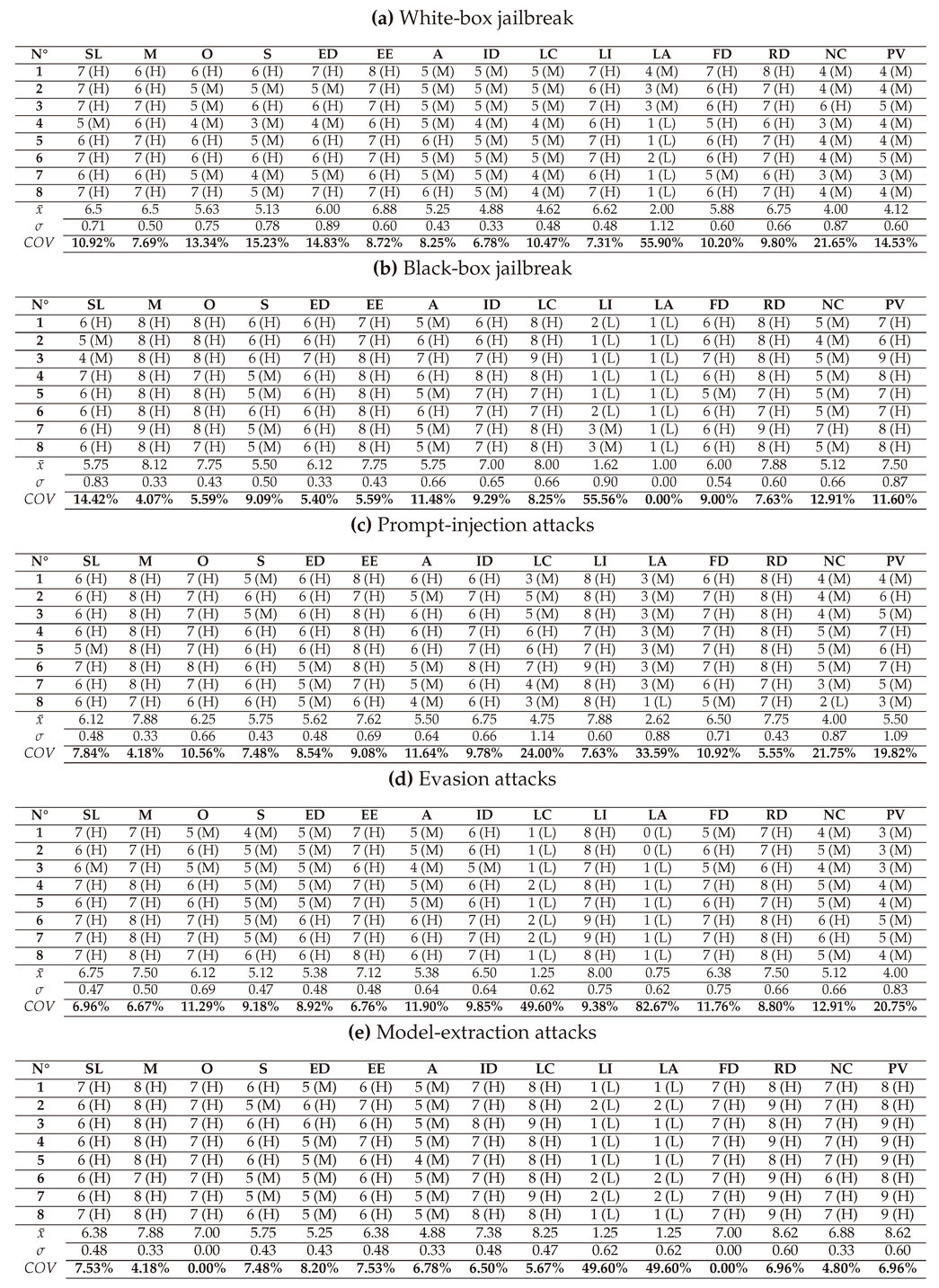

6.1.3. With OWASP Risk Rating

A third evaluation of white-box jailbreak attacks is done using OWASP RR [

140]. The corresponding vulnerability vectors of each attack is:

(1) → (SL:7/M:6/O:6/S:6/ED:7/EE:8/A:5/ID:5/LC:5/LI:7/LA:4/FD:7/RD:8/NC:4/PV:4) = 3.6 (High)

(2) → (SL:7/M:6/O:5/S:5/ED:5/EE:7/A:5/ID:5/LC:5/LI:6/LA:3/FD:6/RD:7/NC:4/PV:4) = 2.8 (Medium)

(3) → (SL:7/M:7/O:5/S:6/ED:6/EE:7/A:5/ID:5/LC:5/LI:7/LA:3/FD:6/RD:7/NC:6/PV:5) = 3.2 (Medium)

(4) → (SL:5/M:6/O:4/S:3/ED:4/EE:6/A:5/ID:4/LC:4/LI:6/LA:1/FD:5/RD:6/NC:3/PV:4) = 1.9 (Medium)

(5) → (SL:6/M:7/O:6/S:5/ED:6/EE:7/A:6/ID:5/LC:5/LI:7/LA:1/FD:6/RD:7/NC:4/PV:4) = 2.8 (High)

(6) → (SL:7/M:7/O:6/S:6/ED:6/EE:7/A:5/ID:5/LC:5/LI:7/LA:2/FD:6/RD:7/NC:4/PV:5) = 3.2 (High)

(7) → (SL:6/M:6/O:5/S:4/ED:5/EE:6/A:5/ID:5/LC:4/LI:6/LA:1/FD:5/RD:6/NC:3/PV:3) = 2.1 (Medium)

(8) → (SL:7/M:7/O:7/S:5/ED:7/EE:7/A:6/ID:5/LC:4/LI:7/LA:1/FD:6/RD:7/NC:4/PV:4) = 3.1 (High)

The scores assigned by each LLM are detailed in

Table A3. With its multiple factors, the OWASP Risk Rating provided a more comprehensive analysis of each attack. However, we encountered some

interpretation discrepancies, particularly with Perplexity AI. This model argued that these attacks have a Medium-to-High impact on Confidentiality—an assessment that differed from its CVSS evaluation of the same attacks. This highlights the inherent

subjectivity in scoring, as analyzing identical attacks in separate conversations can yield inconsistent results. In contrast, the other two LLMs provided scores consistent with the CVSS evaluation for Confidentiality and Integrity, along with a Low-to-None impact on Availability. Averaging the scores

mitigated such discrepancies while preserving the unique perspectives offered by each LLM, especially in factors like Non-Compliance and Privacy Violation. Notably, LLAMA-3 failed to detect any impact in these areas, whereas GPT-4o and Perplexity AI highlighted their significance.

Another challenge we observed was the tendency of LLMs to rely on

memorized values when evaluating attacks across multiple factors. For example, GPT-4o initially assigned identical scores to the first two attacks [

96,

166]. Upon prompting it to provide objective and distinct evaluations, GPT-4o revised its scores, adjusting the ED value from 6/10 to 5/10, the Availability impact from 6/10 to 5/10, and the NC value from 6/10 to 7/10. It justified these changes by acknowledging

similarities between the attacks while ensuring the scores reflected nuanced differences.

The averaged scores are visualized below for clarity. These results align with the CVSS evaluation in terms of technical impact and ease of exploitation, while also shedding light on the reputational damage that could arise if such attacks are exploited. Moreover, they emphasize that White-box attacks have a medium impact on Non-Compliance and Privacy Violation.

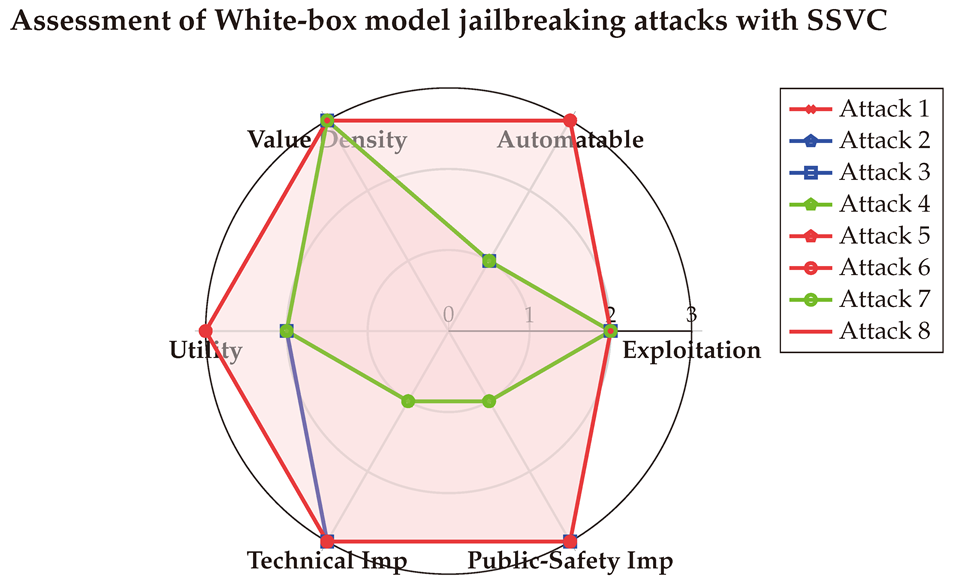

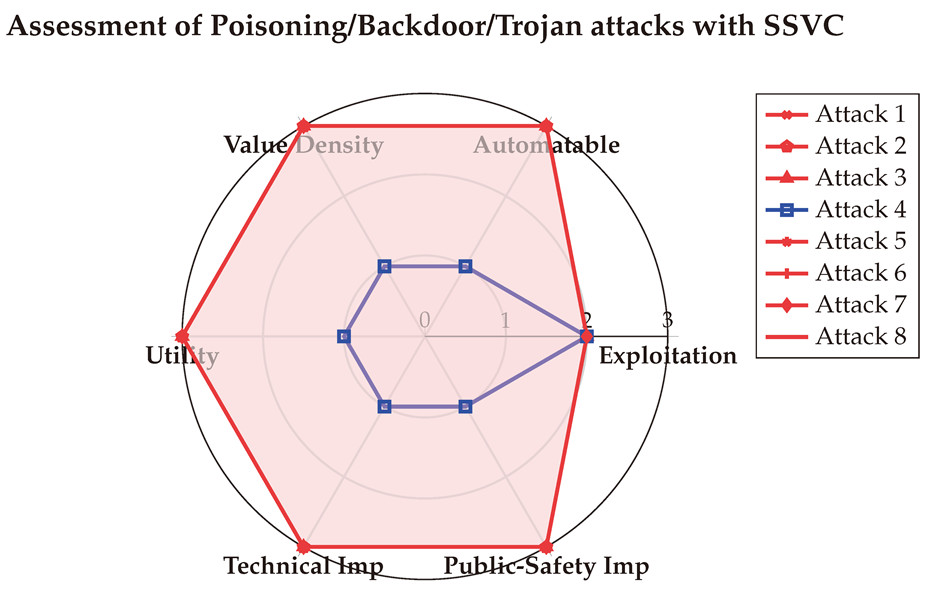

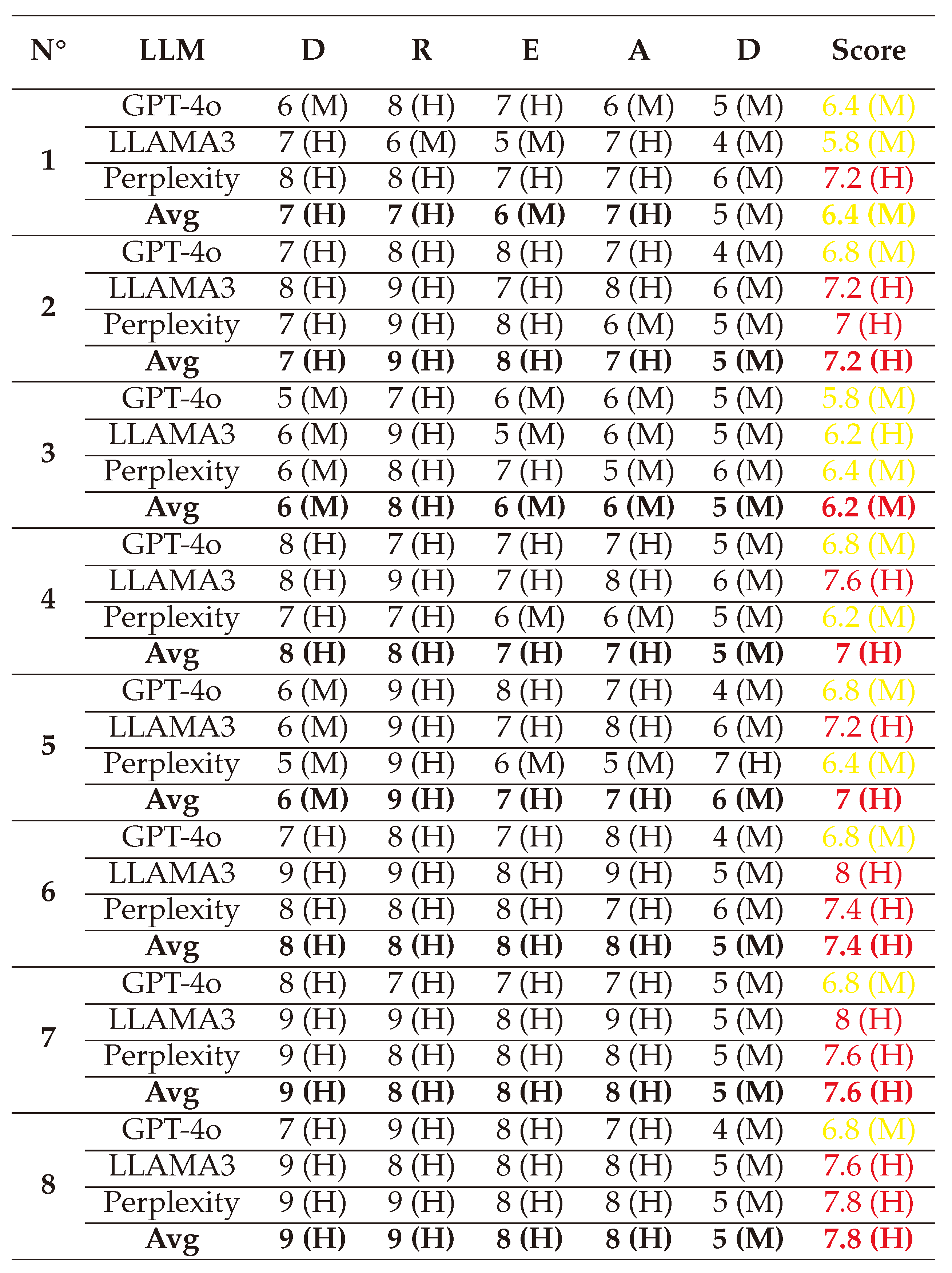

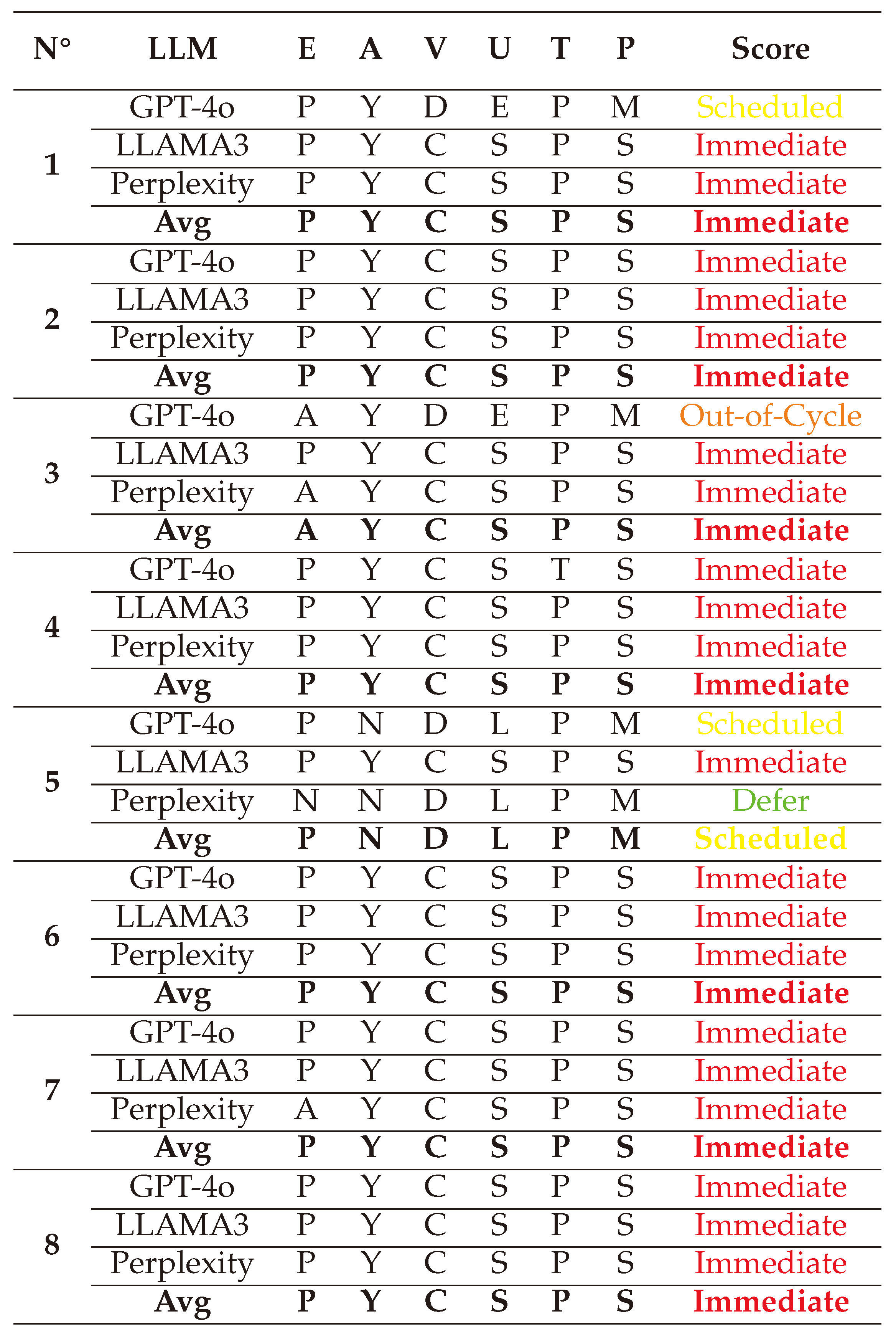

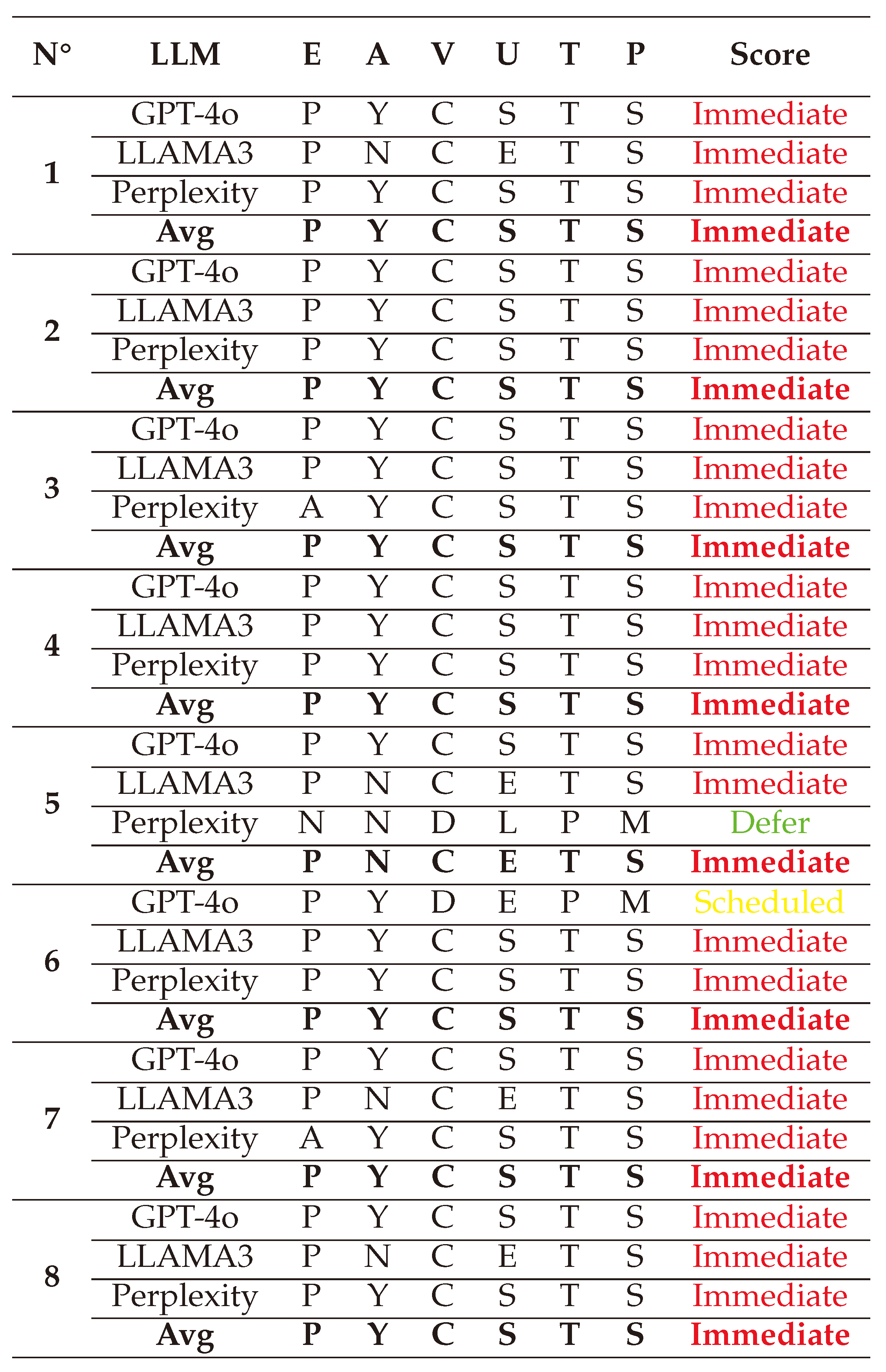

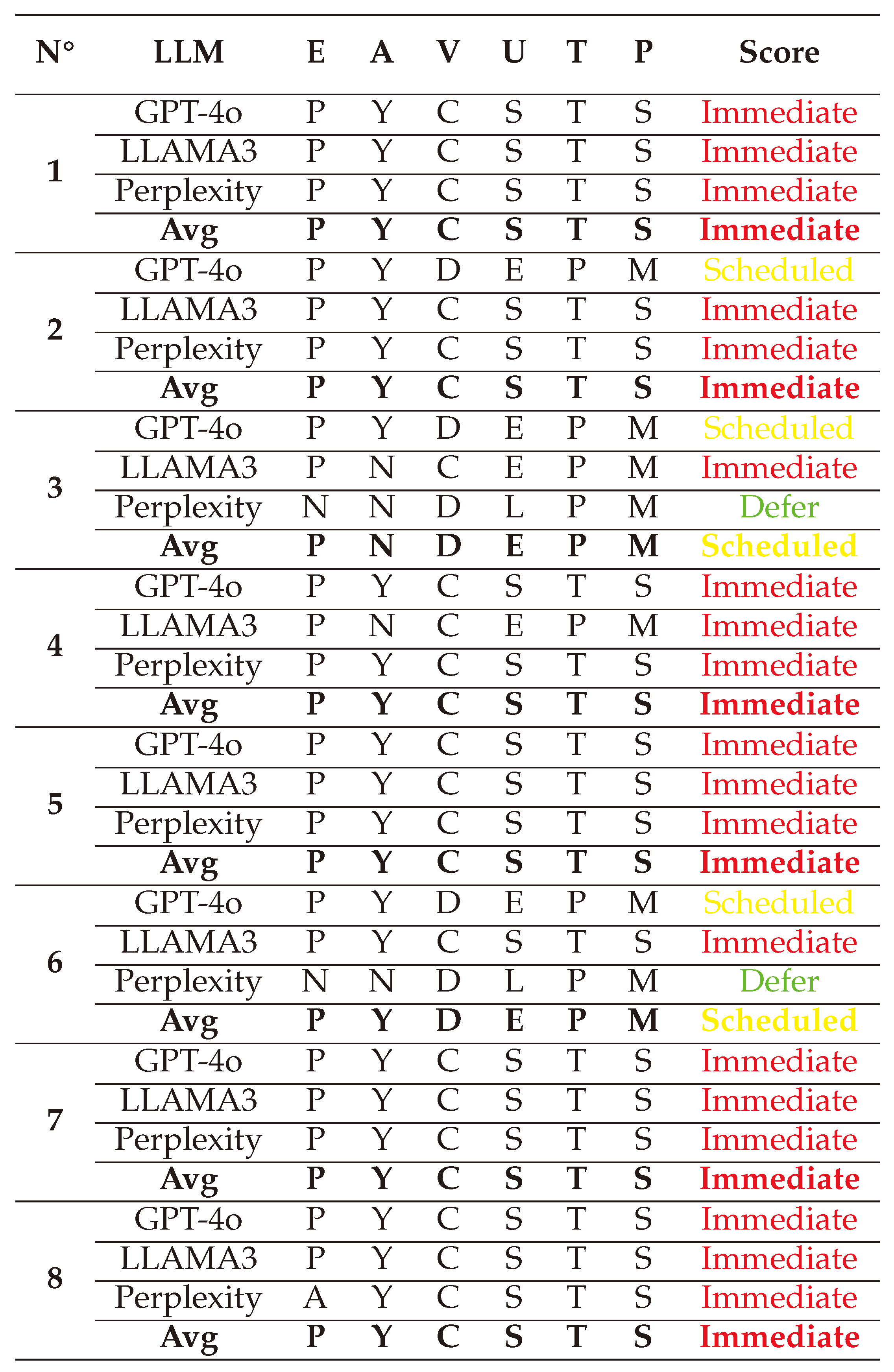

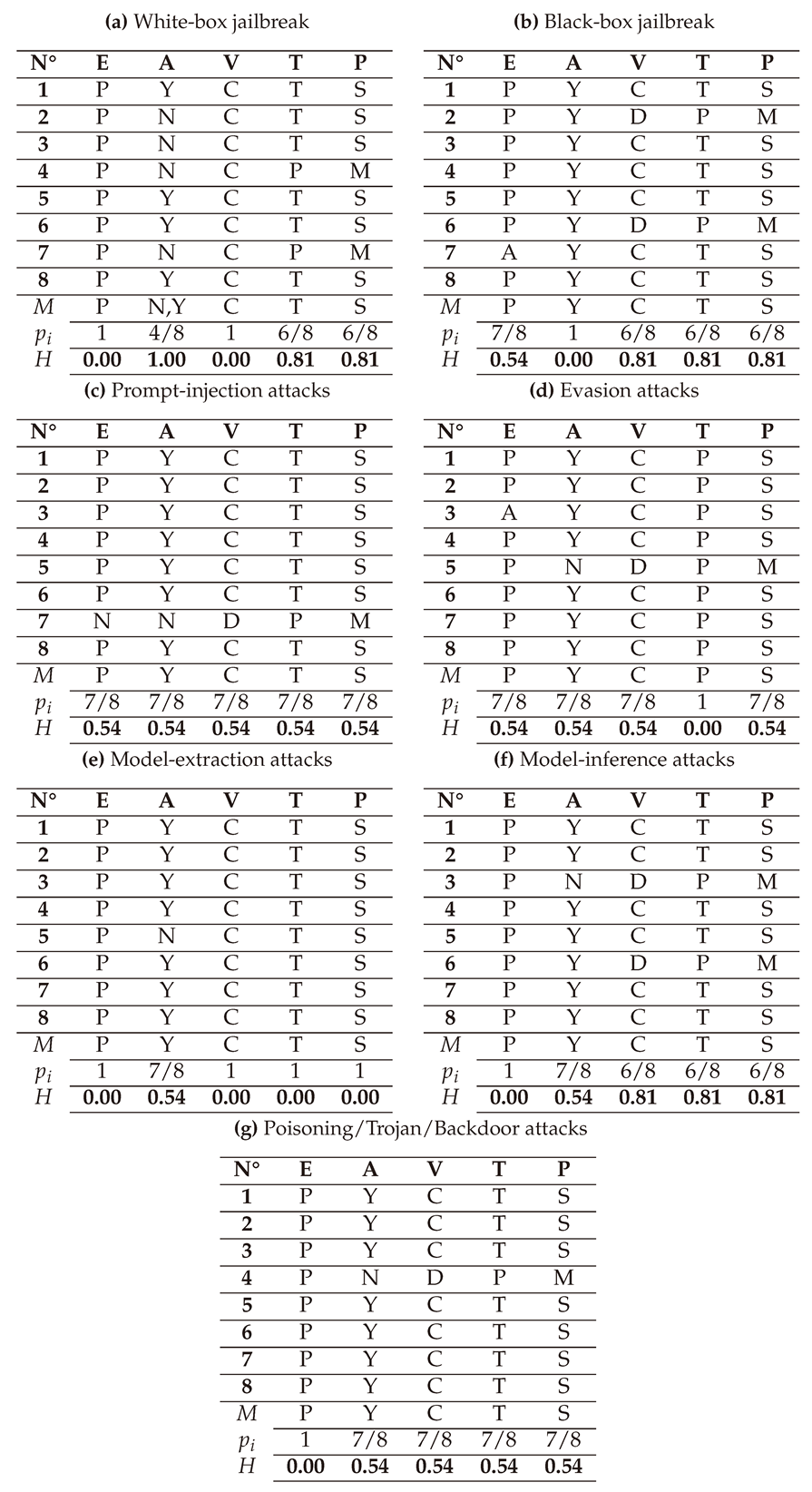

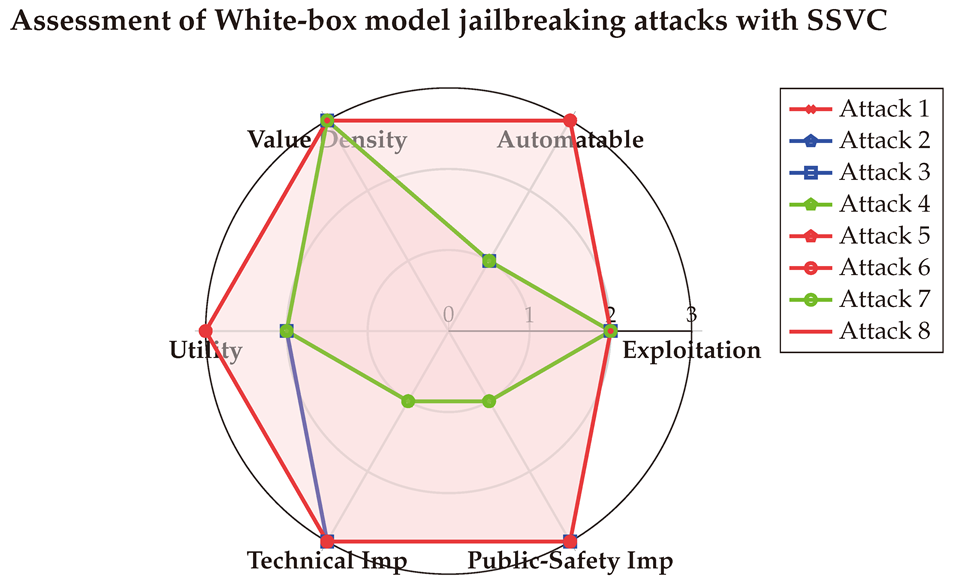

6.1.4. With SSVC

Finally, we evaluate these attacks using SSVC [

128]. The corresponding vectors, as a supplier, are shown below:

(1) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(2) → (E:P/A:N/V:C/U:E/T:T/P:S) = Immediate (Very High)

(3) → (E:P/A:N/V:C/U:E/T:T/P:S) = Immediate (Very High)

(4) → (E:P/A:N/V:C/U:E/T:P/P:M) = Scheduled (Medium)

(5) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(6) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(7) → (E:P/A:N/V:C/U:E/T:P/P:M) = Scheduled (Medium)

(8) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

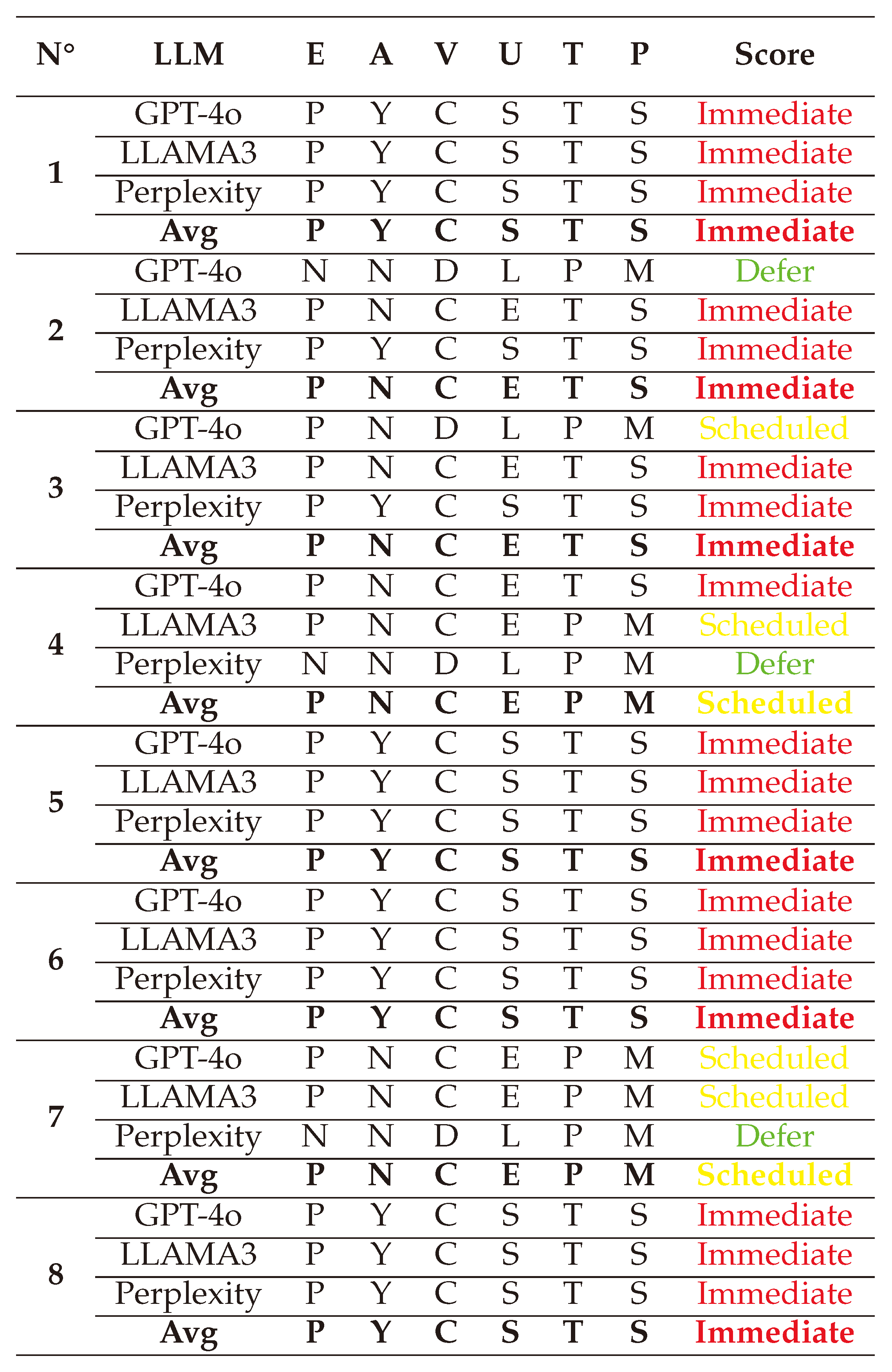

Table A4 presents the detailed SSVC assessment scores provided by each LLM. As SSVC is relatively

straightforward to apply, the LLMs performed the evaluations without significant issues. The primary role of the HitL in this context was to

interpret the rationale behind the values assigned by the LLMs, particularly for the Exploitation factor. For instance, when evaluating the second White-box jailbreak attack [

96], GPT-4o determined there was no PoC for the attack, as its implementation was not publicly available, and accordingly assigned it a "None" value. In contrast, LLAMA-3 and Perplexity AI offered a different perspective. Both argued that the paper provided sufficient detail about the attack, making it possible to reproduce with some effort. Consequently, they concluded that a PoC exists. With the majority of models agreeing, the average score reflected their viewpoint, recognizing the presence of a PoC.

The final SSVC scores are visualized below in a spider chart. These results indicate that White-box jailbreak attacks can be automated and highly rewarding, underscoring their significant risks to both technical systems and public safety.

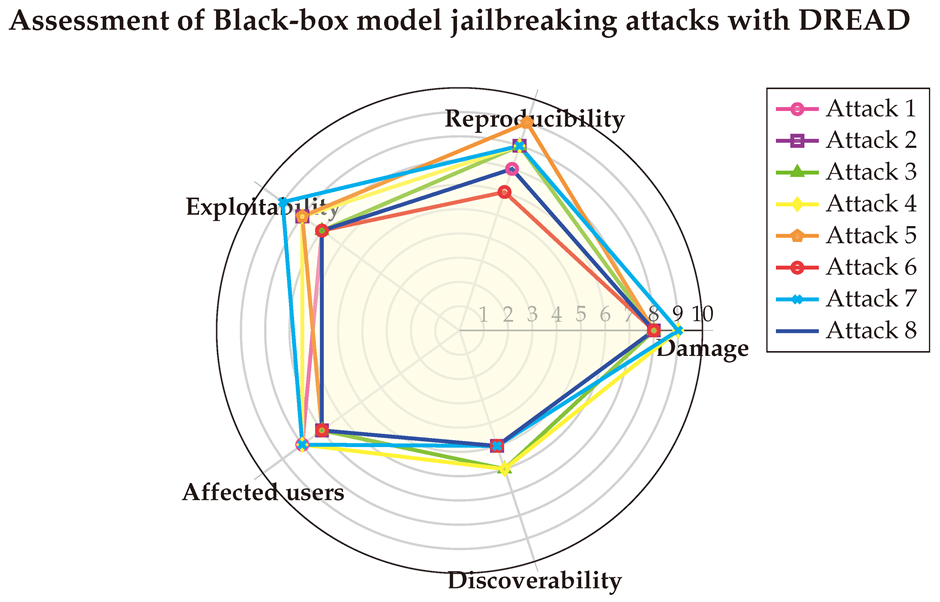

6.2. Assessment of Black-box Jailbreak attacks

We evaluate now Black-box jailbreak attacks, the eight attacks are the same presented in

Section 4.1.2 earlier: (1) Privacy attack on GPT-4o [

71], (2) PAIR [

19], (3) DAN [

120], (4) Simple Adaptive Attack [

2], (5) PAL [

126], (6) GCQ [

56], (7) IRIS [

108], (8) Tastle [

146].

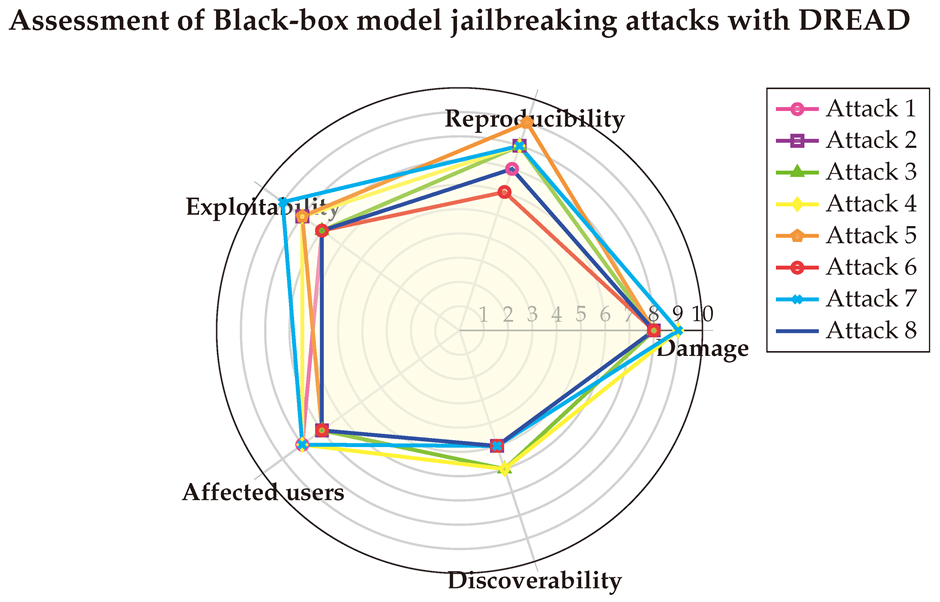

6.2.1. With DREAD

We start with the evaluation using DREAD [

92]. Below are the DREAD vectors of each of the eight Black-box Jailbreak attacks:

(1) → (D:8/R:7/E:7/A:8/D:5) = 7 (High)

(2) → (D:8/R:8/E:8/A:7/D:5) = 7.2 (High)

(3) → (D:8/R:8/E:7/A:7/D:6) = 7.2 (High)

(4) → (D:9/R:8/E:8/A:8/D:6) = 7.8 (High)

(5) → (D:8/R:9/E:8/A:7/D:5) = 7.4 (High)

(6) → (D:8/R:6/E:7/A:7/D:5) = 6.6 (Medium)

(7) → (D:9/R:8/E:9/A:8/D:5) = 7.8 (High)

(8) → (D:8/R:7/E:7/A:7/D:5) = 6.8 (Medium)

The details are presented in

Table A5. This time, no misunderstandings occurred, as the corrections made during the DREAD assessment of White-box attacks were already in place. However, some divergences in attack analysis still arose. For instance, the Exploitability factor of the first attack [

71] was rated 6/10 by GPT-4o, which noted that the attack requires specific query patterns but is still manageable to execute. In contrast, LLAMA-3 and Perplexity AI assigned a score of 8/10, arguing that the implementation details provided in the paper make the attack easily exploitable.

Another challenge was the potential

memorization of values. For example, LLAMA-3 gave identical scores for the fourth, fifth, and seventh attacks [

2,

108,

126], justifying this by highlighting the

similar characteristics of these attacks. While this explanation is plausible, as the scores were consistent with those of the other LLMs, averaging the scores across all models helped mitigate these analytical inconsistencies by favoring the majority consensus.

After averaging the scores, we visualized the results in a spider chart for clarity. The DREAD scores indicate that Black-box Jailbreak attacks, like their White-box counterparts, can inflict significant damage while being highly reproducible and exploitable, yet challenging to detect.

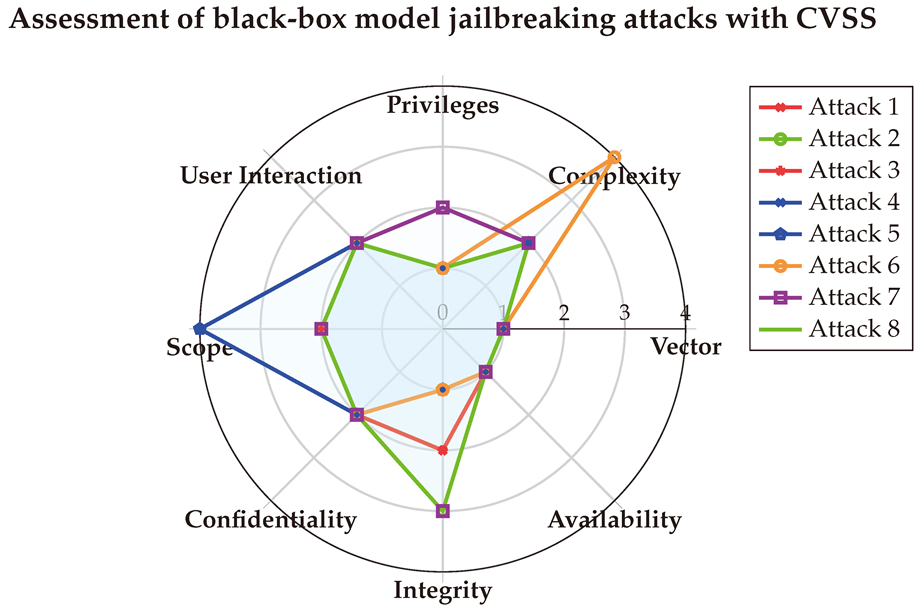

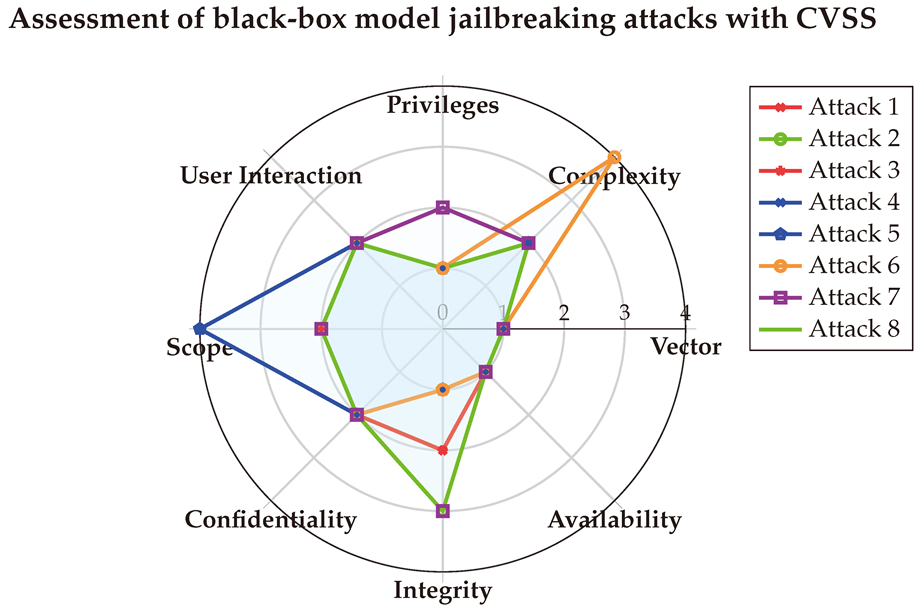

6.2.2. With CVSS

In this second assessment of Black-box Jailbreak, we evaluate the attacks using CVSS [

114]. The corresponding CVSS Vectors are shown below:

(1) → (AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:L/A:N) = 6.5 (Medium)

(2) → (AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:H/A:N) = 8.2 (High)

(3) → (AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:L/A:N) = 6.5 (Medium)

(4) → (AV:N/AC:L/PR:N/UI:N/S:C/C:L/I:N/A:N) = 7.2 (High)

(5) → (AV:N/AC:L/PR:N/UI:N/S:C/C:L/I:N/A:N) = 7.2 (High)

(6) → (AV:N/AC:H/PR:N/UI:N/S:U/C:L/I:N/A:N) = 5.4 (Medium)

(7) → (AV:N/AC:L/PR:L/UI:N/S:U/C:L/I:H/A:N) = 7.1 (High)

(8) → (AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:H/A:N) = 8.2 (High)

Table A6 outlines the detailed CVSS scores for the Black-box Jailbreak attacks. As observed with previous assessments, the three LLMs displayed some divergence in evaluating the technical impact of each attack. However, averaging the scores allowed us to establish a balanced consensus that moderated the variations in their evaluations.

One notable issue arose with LLAMA-3 in interpreting the User Interaction factor, which assesses whether a user other than the attacker must interact with the system for the attack to succeed. In the case of Black-box jailbreaks, where most attacks are executed remotely, no additional user interaction is required—a point accurately identified by GPT-4o and Perplexity AI. However, LLAMA-3 initially marked the UI factor as "Required," justifying this based on the attacker’s interaction with the system. The HitL clarified through prompts that the UI factor refers specifically to interactions by users other than the attacker. Following this explanation, LLAMA-3 adjusted its evaluation, aligning with the "None" rating given by the other LLMs.

After averaging the scores, the final results are visualized below in a spider chart. The CVSS scores highlight that Black-box jailbreak attacks are easier to reproduce compared to White-box jailbreaks, require no privileges, and have a low-to-moderate impact on both integrity and confidentiality.

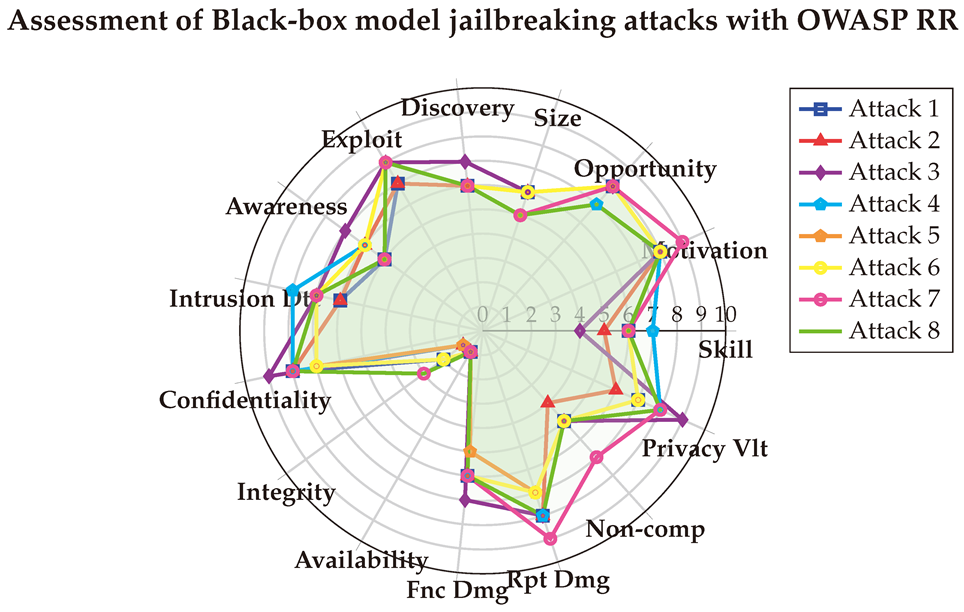

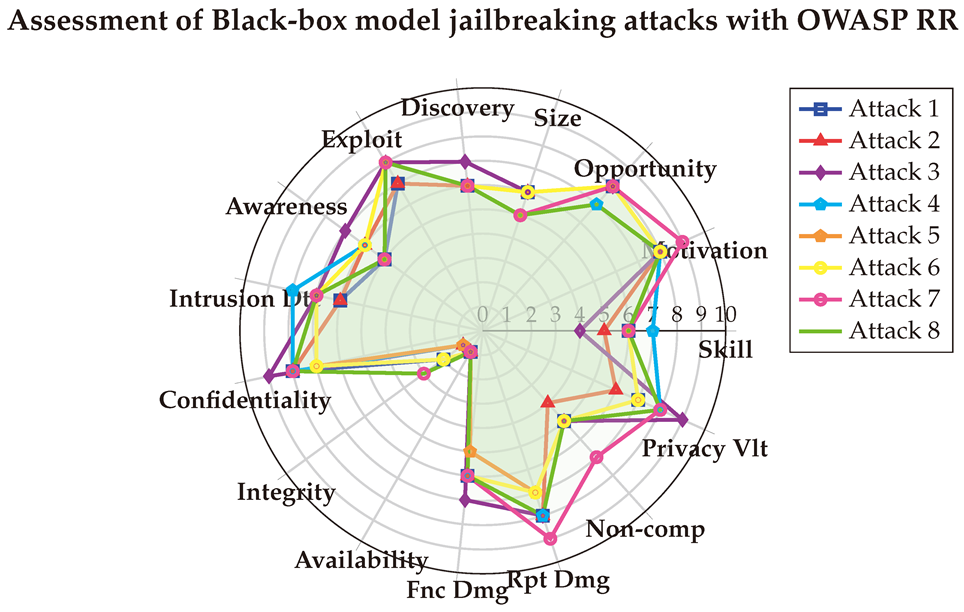

6.2.3. With OWASP Risk Rating

A third evaluation of is done with OWASP Risk Rating [

140]. The corresponding vulnerability vectors of each attack is:

(1) → (SL:6/M:8/O:8/S:6/ED:6/EE:7/A:5/ID:6/LC:8/LI:2/LA:1/FD:6/RD:8/NC:5/PV:7) = 3.3 (High)

(2) → (SL:5/M:8/O:8/S:6/ED:6/EE:7/A:6/ID:6/LC:8/LI:1/LA:1/FD:6/RD:8/NC:4/PV:6) = 3 (High)

(3) → (SL:4/M:8/O:8/S:6/ED:7/EE:8/A:7/ID:7/LC:9/LI:1/LA:1/FD:7/RD:8/NC:5/PV:9) = 3.8 (High)

(4) → (SL:7/M:8/O:7/S:5/ED:6/EE:8/A:6/ID:8/LC:8/LI:1/LA:1/FD:6/RD:8/NC:5/PV:8) = 3.5 (High)

(5) → (SL:6/M:8/O:8/S:5/ED:6/EE:8/A:5/ID:7/LC:7/LI:1/LA:1/FD:5/RD:7/NC:5/PV:7) = 3 (High)

(6) → (SL:6/M:8/O:8/S:6/ED:6/EE:8/A:6/ID:7/LC:7/LI:2/LA:1/FD:6/RD:7/NC:5/PV:7) = 3 (High)

(7) → (SL:6/M:9/O:8/S:5/ED:6/EE:8/A:5/ID:7/LC:8/LI:3/LA:1/FD:6/RD:9/NC:7/PV:8) = 3.8 (High)

(8) → (SL:6/M:8/O:7/S:5/ED:6/EE:8/A:5/ID:7/LC:8/LI:3/LA:1/FD:6/RD:8/NC:5/PV:8) = 3.4 (High)

Table A7 presents the detailed OWASP RR assessments conducted using three LLMs. Unlike previous evaluations, no significant errors were observed in the scoring provided by the models. However, some divergence was noted in specific factors. For example, when assessing the Opportunity factor for the sixth attack [

56], GPT-4o and LLAMA-3 scored it 8/10 and 9/10, respectively, arguing that these attacks target online LLMs, thereby increasing the availability of opportunities for exploitation. In contrast, Perplexity AI assigned a score of 6/10, reasoning that the attacks are not immediately apparent or straightforward to execute, resulting in a medium-to-high Opportunity rating. To maintain

neutrality and

objectivity, we chose not to modify or influence these values, allowing the models’ perspectives to remain intact. Averaging the scores enabled a

balanced consideration of all three points of view.

The final scores are visualized below in the spider chart. The results indicate that Black-box jailbreak attacks have a significant impact on the confidentiality of data, as they can extract sensitive information from the models. In contrast, their impact on integrity is minimal, and they have no impact on availability. The OWASP RR metric further highlights the severe implications these attacks have on privacy violations and the reputation of the targeted organization.

6.2.4. With SSVC

The forth evaluation is performed using SSVC [

128] in a supplier role. The corresponding vulnerability vectors are detailed below:

(1) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(2) → (E:P/A:Y/V:D/U:E/T:P/P:M) = Scheduled (Medium)

(3) → (E:A/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(4) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(5) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(6) → (E:P/A:Y/V:D/U:E/T:P/P:M) = Scheduled (Medium)

(7) → (E:A/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(8) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

Table A8 presents the SSVC scores assigned by the three LLMs. The primary challenge encountered during this assessment was the ability of the LLMs to remain

up-to-date. Specifically, some attacks might have been actively exploited in the past but are now less prevalent. For example, in the case of the third and fourth attacks [

2,

120], some LLMs classified these as "Active," while others evaluated them at the "Proof-of-Concept" stage. Determining which LLM is correct in such scenarios is challenging. To address this, we prompted the LLMs to confirm their assessments by asking clarifying questions such as:

"Are there proofs of recent active exploitations of these attacks?" This approach led to adjustments in certain scores. For instance, LLAMA-3 revised its assessment for the third attack from "Active" to "Proof-of-Concept," explaining that while the attack was previously active, there is no current evidence of active exploitation.

The final scores are visualized below in the spider chart. The results indicate that the SSVC scores align closely with those of DREAD, demonstrating that these Black-box jailbreak attacks are highly dangerous and straightforward to exploit, regardless of whether they target the CIA triad or financial aspects.

For the subsequent assessments, we will present only the results, as the justifications follow the same reasoning outlined for the White-box and Black-box Jailbreak attacks.

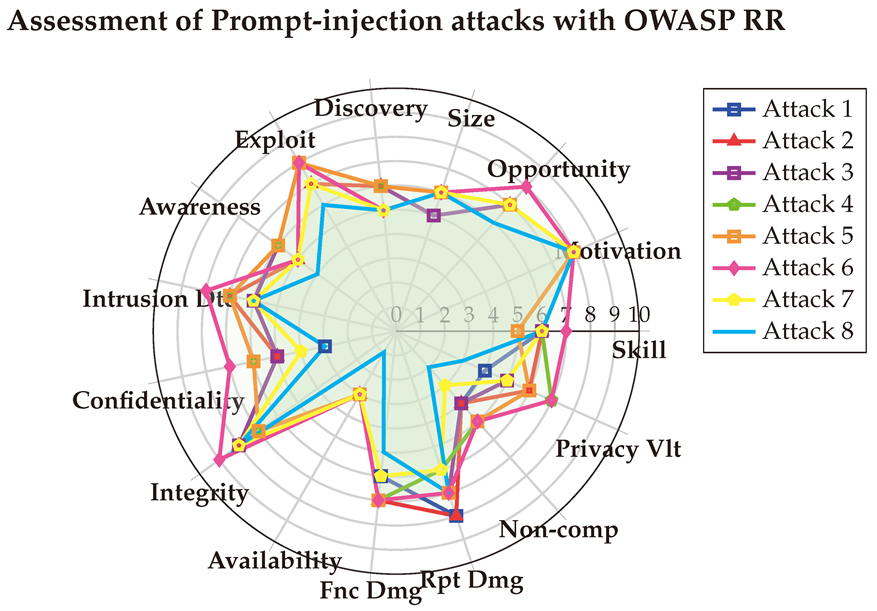

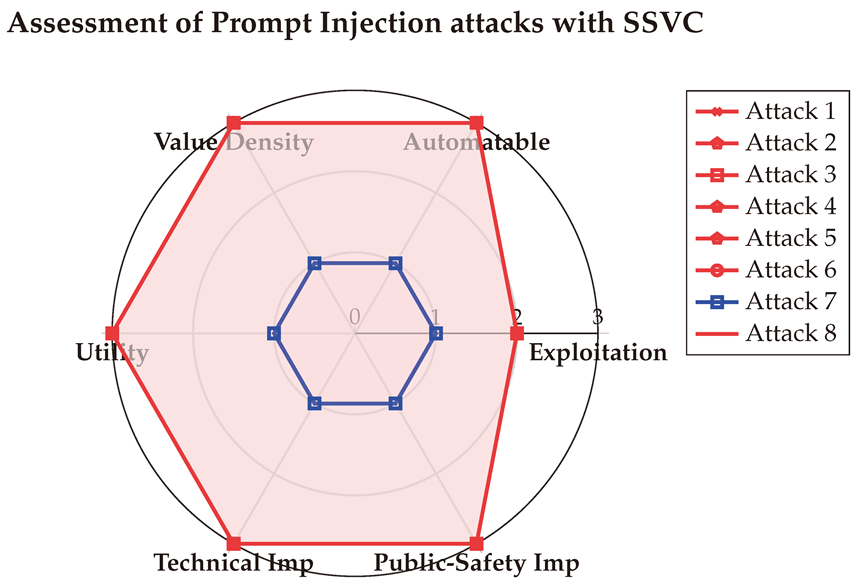

6.3. Assessment of Prompt Injection attacks

The third assessment is that of PI attacks, we evaluate the attacks described earlier in

Section 4.2: (1) Ignore Previous Prompt [

102], (2) Indirect Instruction Injection [

52] (3) Formalised Prompt Injection [

86], (4) Injection through file input [

5], (5) Universal Prompt Injection [

82], (6) Virtual Prompt Injection [

152], (7) Chat History Tampering [

139], (8) JudgeDeceiverAttack [

122].

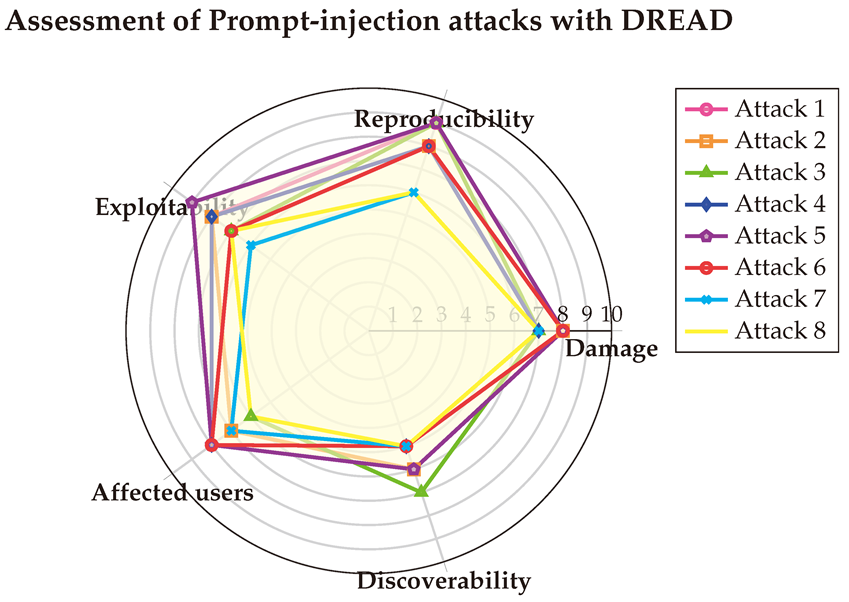

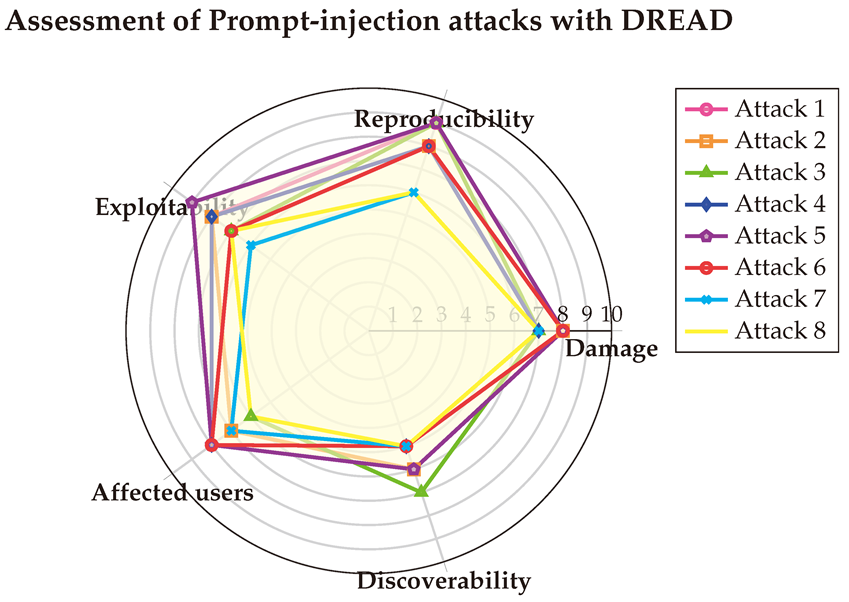

6.3.1. With DREAD

As done before, we start by evaluating the eight prompt injection attacks using DREAD [

92], and we find the corresponding vulnerability vectors as follows:

(1) → (D:8/R:9/E:8/A:7/D:6) = 7.6 (High)

(2) → (D:8/R:8/E:8/A:7/D:6) = 7.4 (High)

(3) → (D:7/R:9/E:7/A:6/D:7) = 7.2 (High)

(4) → (D:7/R:8/E:8/A:8/D:5) = 7.2 (High)

(5) → (D:8/R:9/E:9/A:8/D:6) = 8 (High)

(6) → (D:8/R:8/E:7/A:8/D:5) = 7.2 (High)

(7) → (D:7/R:6/E:6/A:7/D:5) = 6.2 (Medium)

(8) → (D:7/R:6/E:7/A:6/D:5) = 6.2 (Medium)

The detailed scores are shown in

Table A9, with the final results visualized in the Spider-chart below. The DREAD analysis reveals that Prompt-Injection attacks cause significant damage to systems and impact a wide range of users, but they are comparatively harder to exploit and reproduce than Jailbreak attacks.

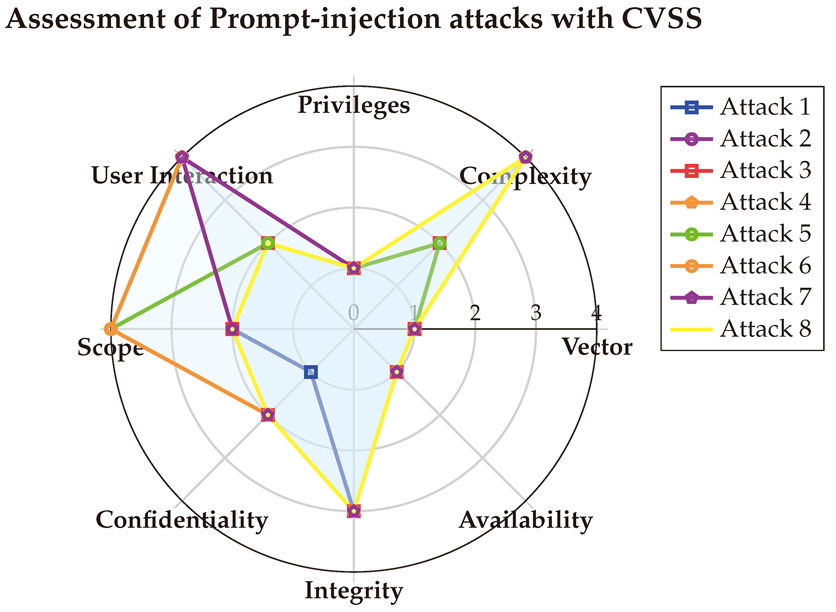

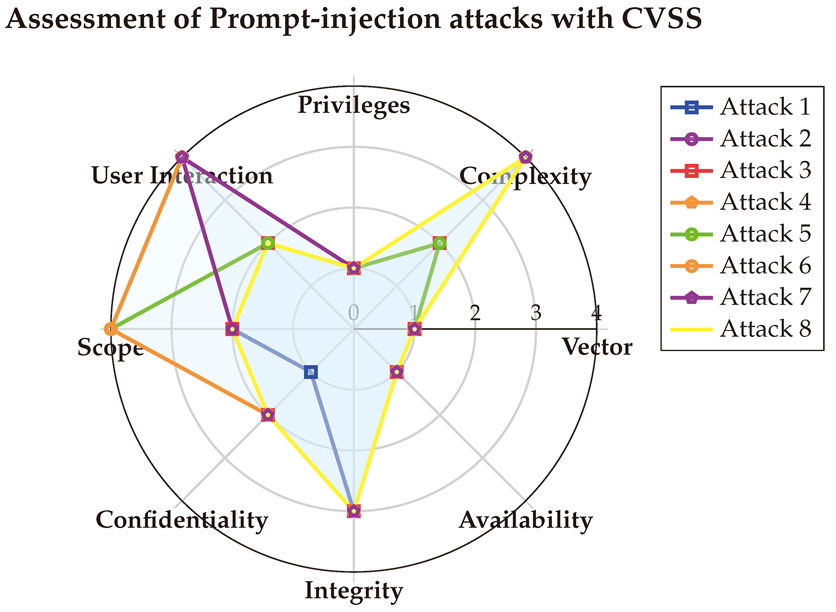

6.3.2. With CVSS

The second assessment of PI attacks is done with CVSS [

114]. The corresponding CVSS Vectors are shown below:

(1) → (AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:H/A:N) = 7.5 (High)

(2) → (AV:N/AC:H/PR:N/UI:R/S:U/C:L/I:H/A:N) = 5.9 (Medium)

(3) → (AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:H/A:N) = 8.2 (High)

(4) → (AV:N/AC:H/PR:N/UI:R/S:C/C:L/I:H/A:N) = 6.9 (Medium)

(5) → (AV:N/AC:L/PR:N/UI:N/S:C/C:L/I:H/A:N) = 9.3 (Critical)

(6) → (AV:N/AC:H/PR:N/UI:R/S:C/C:L/I:H/A:N) = 6.9 (Medium)

(7) → (AV:N/AC:H/PR:N/UI:R/S:U/C:L/I:H/A:N) = 5.9 (Medium)

(8) → (AV:N/AC:H/PR:N/UI:N/S:U/C:L/I:H/A:N) = 6.5 (Medium)

The detailed CVSS results are presented in

Table A10, with the final scores visualized in the Spider-chart below. The analysis indicates that Prompt-Injection attacks share similarities with Jailbreak attacks, as they are primarily executed remotely through the network. However, they are slightly more complex to perform than Jailbreak attacks. These attacks predominantly target system integrity, have a lesser impact on confidentiality, and do not affect availability.

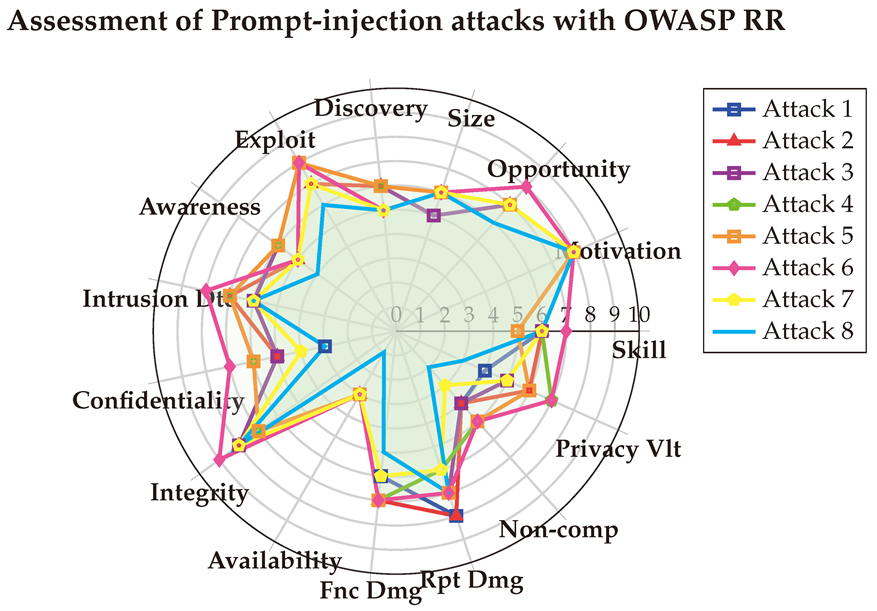

6.3.3. With OWASP Risk Rating

Another evaluation of Prompt Injection attacks is done with OWASP Risk Rating [

140]. The corresponding vulnerability vectors of each attack is:

(1) → (SL:6/M:8/O:7/S:5/ED:6/EE:8/A:6/ID:6/LC:3/LI:8/LA:3/FD:6/RD:8/NC:4/PV:4) = 3.3 (High)

(2) → (SL:6/M:8/O:7/S:6/ED:6/EE:7/A:5/ID:7/LC:5/LI:8/LA:3/FD:7/RD:8/NC:4/PV:6) = 3.8 (High)

(3) → (SL:6/M:8/O:7/S:5/ED:6/EE:8/A:6/ID:6/LC:5/LI:8/LA:3/FD:7/RD:8/NC:4/PV:5) = 3.7 (High)

(4) → (SL:6/M:8/O:7/S:6/ED:6/EE:8/A:6/ID:7/LC:6/LI:7/LA:3/FD:7/RD:8/NC:5/PV:7) = 4.1 (Critical)

(5) → (SL:5/M:8/O:7/S:6/ED:6/EE:8/A:6/ID:7/LC:6/LI:7/LA:3/FD:7/RD:8/NC:5/PV:6) = 3.8 (High)

(6) → (SL:7/M:8/O:8/S:6/ED:5/EE:8/A:5/ID:8/LC:7/LI:9/LA:3/FD:7/RD:8/NC:5/PV:7) = 4.4 (Critical)

(7) → (SL:6/M:8/O:7/S:6/ED:5/EE:7/A:5/ID:6/LC:4/LI:8/LA:3/FD:6/RD:7/NC:3/PV:5) = 2.6 (Medium)

(8) → (SL:6/M:7/O:6/S:6/ED:5/EE:6/A:4/ID:6/LC:3/LI:8/LA:1/FD:5/RD:7/NC:2/PV:3) = 1.9 (Medium)

The assessments conducted using three LLMs are detailed in

Table A11, and the final scores are depicted in the Spider-chart below for enhanced visualization. The OWASP RR results corroborate that Prompt-Injection attacks exert a greater impact on integrity than on confidentiality and availability. Additionally, they highlight the significant influence these attacks have on privacy violations and reputation damage, which are critical factors beyond the technical scope. Notably, the assessments also reveal a general lack of awareness among public users regarding these specific threats.

6.3.4. With SSVC

A last evaluation is performed using SSVC [

128] as done before, the results of the assessments are presented below:

(1) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(2) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(3) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(4) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(5) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(6) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

(7) → (E:N/A:N/V:D/U:L/T:P/P:M) = Defer (Low)

(8) → (E:P/A:Y/V:C/U:S/T:T/P:S) = Immediate (Very High)

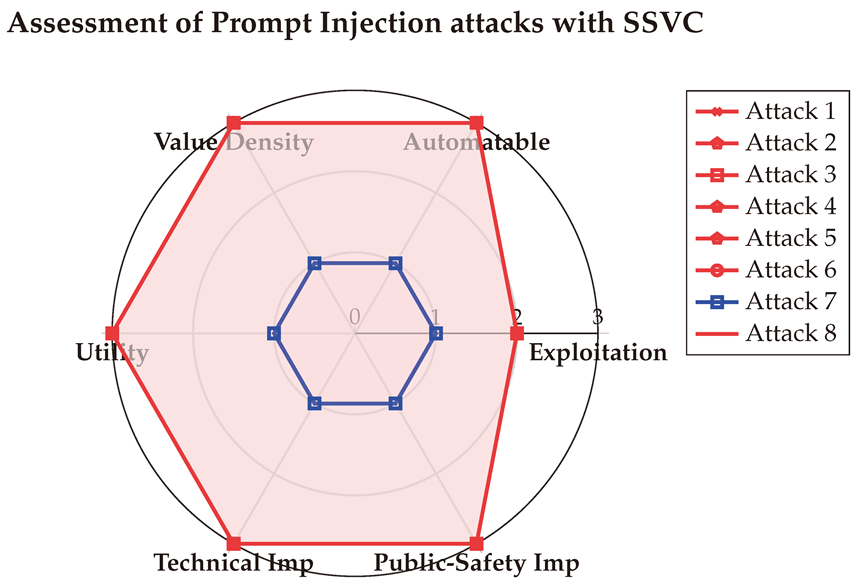

Table A12 provides the detailed SSVC assessments conducted with the three LLMs. These scores offer additional insights beyond those captured by other metrics, emphasizing that Prompt Injection attacks are highly automatable, posing significant risks to both technical systems and public safety. The averaged results are visualized in the Spider-chart below for enhanced clarity.

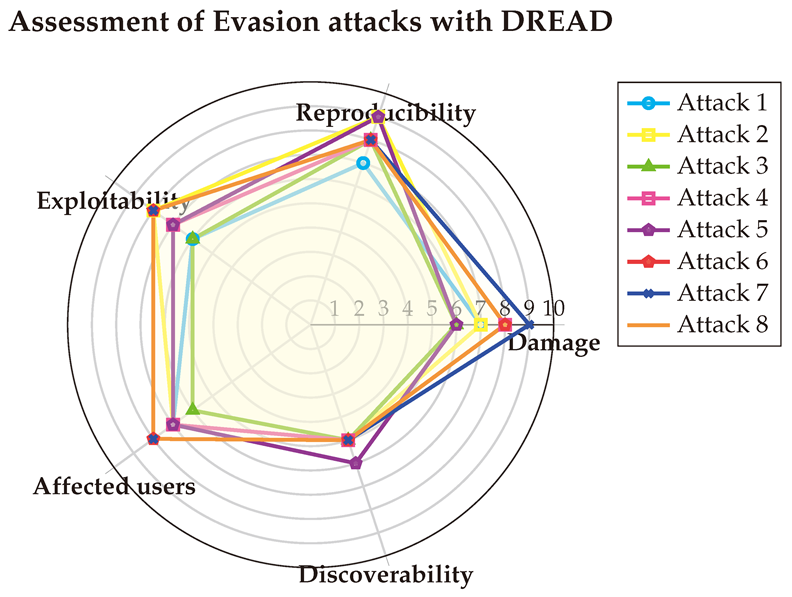

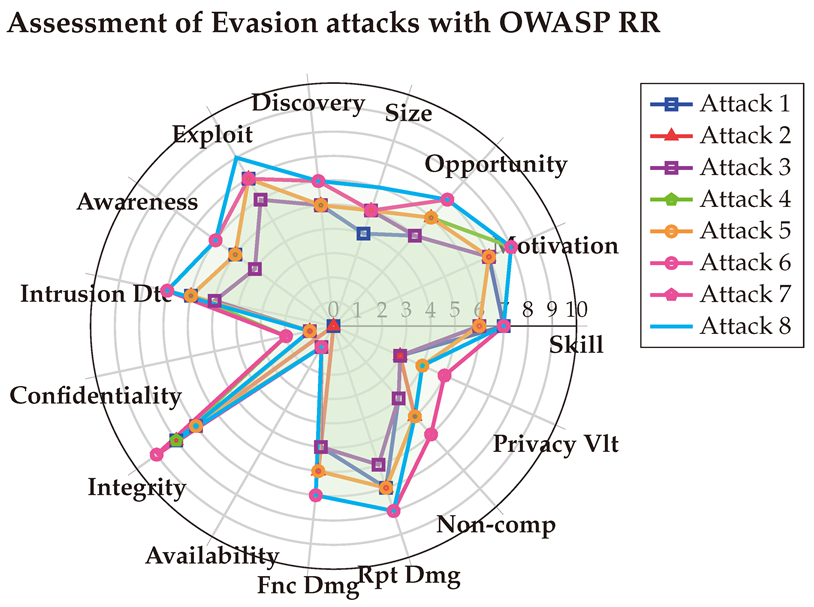

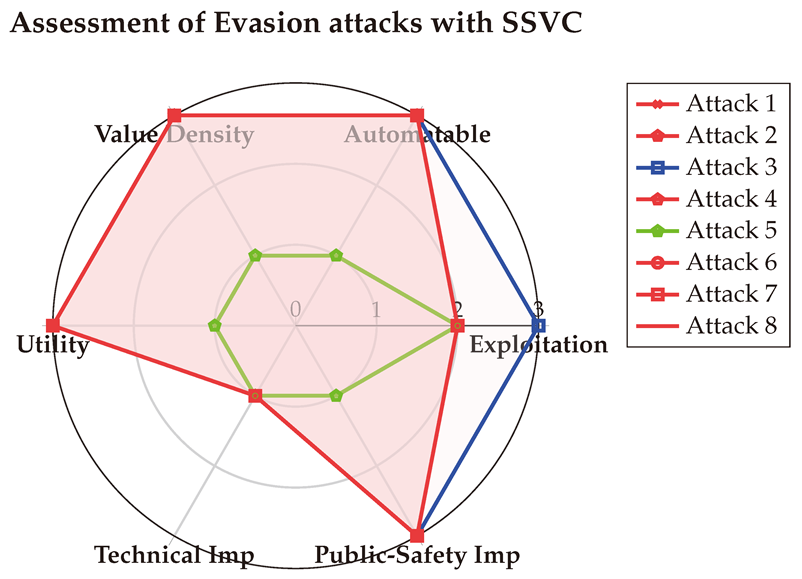

6.4. Assessment of Evasion attacks

The forth experiment is evaluating eight Evasion attacks described in

Section 4.3: (1) Hot Flip [

39], (2) PWWS [

109], (3) TypoAttack [

103], (4) VIPER [

40], (5) CheckList [

110], (6) BertAttack [

72], (7) GBDA [

55], (8) TF-Attack [

77].

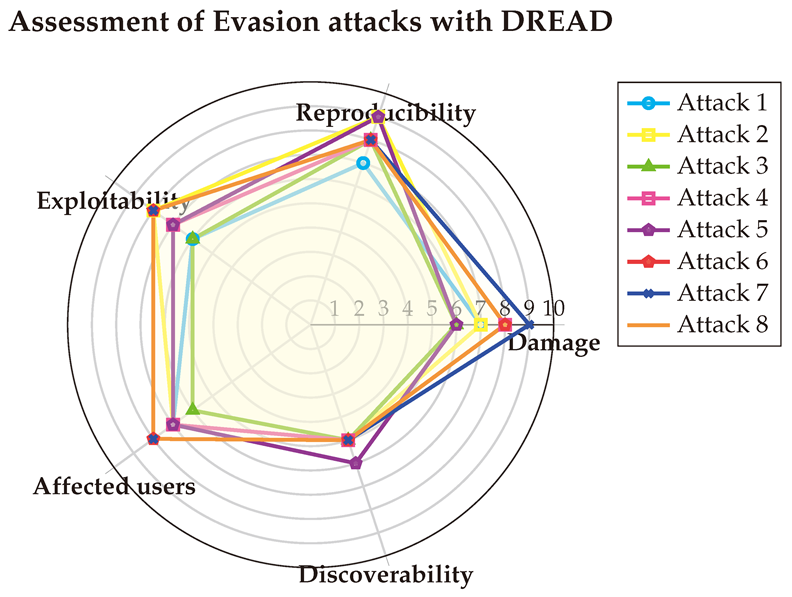

6.4.1. With DREAD

The first evaluation is done using DREAD [

92]. The corresponding vulnerability vectors as follows:

(1) → (D:7/R:7/E:6/A:7/D:5) = 6.4 (Medium)

(2) → (D:7/R:9/E:8/A:7/D:5) = 7.2 (High)

(3) → (D:6/R:8/E:6/A:6/D:5) = 6.2 (Medium)

(4) → (D:8/R:8/E:7/A:7/D:5) = 7 (High)

(5) → (D:6/R:9/E:7/A:7/D:6) = 7 (High)

(6) → (D:8/R:8/E:8/A:8/D:5) = 7.4 (High)

(7) → (D:9/R:8/E:8/A:8/D:5) = 7.6 (High)

(8) → (D:8/R:8/E:8/A:8/D:5) = 7.4 (High)

Table A13 presents the detailed assessments conducted with the three LLMs, with the final scores visualized in the Spider-chart below. The DREAD evaluation reveals that evasion attacks generally cause medium-to-high damage and are highly reproducible, easily exploitable, and difficult to detect, while having the potential to impact a wide range of users. This underscores the critical need to mitigate such attacks.

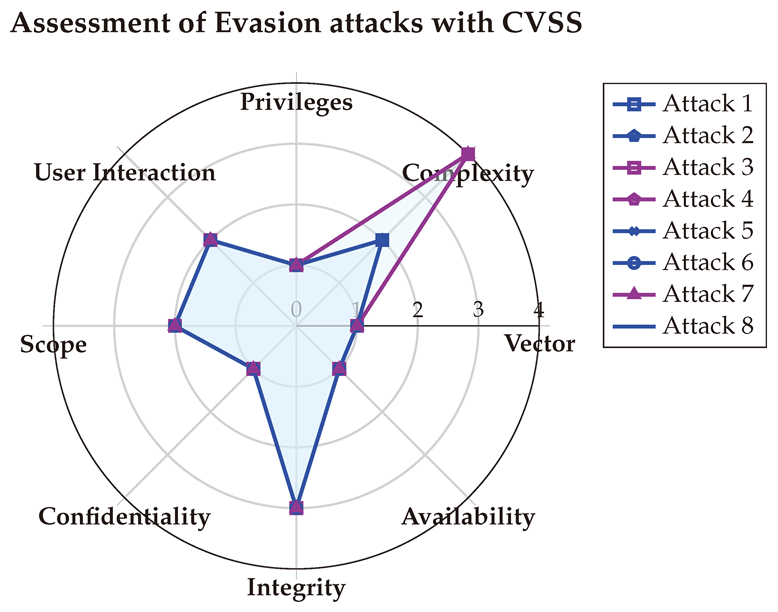

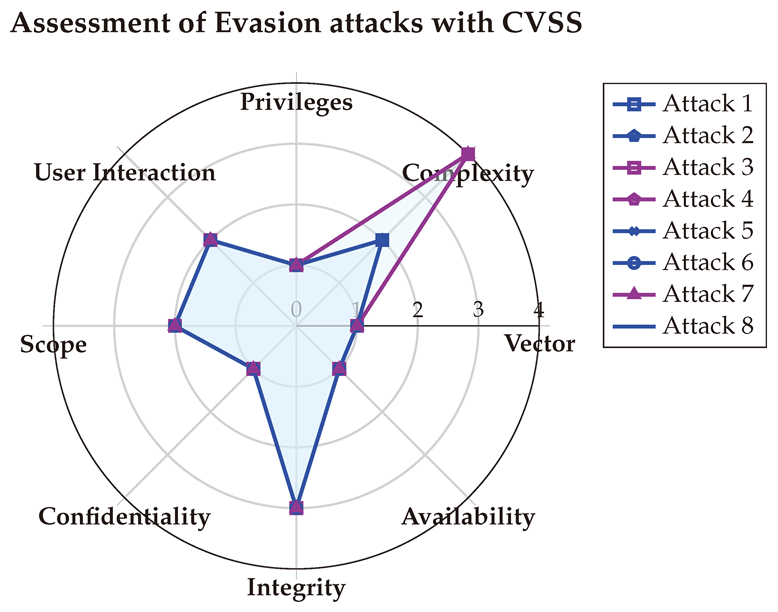

6.4.2. With CVSS

The second assessment of Evasion attacks is done with CVSS [

114]. The corresponding CVSS Vectors are shown below:

(1) → (AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:H/A:N) = 7.5 (High)

(2) → (AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:H/A:N) = 7.5 (High)

(3) → (AV:N/AC:H/PR:N/UI:N/S:U/C:N/I:H/A:N) = 5.9 (Medium)

(4) → (AV:N/AC:H/PR:N/UI:N/S:U/C:N/I:H/A:N) = 5.9 (Medium)

(5) → (AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:H/A:N) = 7.5 (High)

(6) → (AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:H/A:N) = 7.5 (High)

(7) → (AV:N/AC:H/PR:N/UI:N/S:U/C:N/I:H/A:N) = 5.9 (Medium)

(8) → (AV:N/AC:L/PR:N/UI:N/S:U/C:N/I:H/A:N) = 7.5 (High)

Table A14 displays the scores provided by the three LLMs along with their average. For enhanced clarity and ease of interpretation, the score vectors are visualized in the Spider-chart below.

The CVSS evaluations reveal a consistent scoring pattern for evasion attacks, emphasizing their typical characteristics. These attacks are often performed over a network, require minimal complexity, and do not necessitate privileges or user interaction. While they have no impact on data Confidentiality or Availability, they can significantly affect Integrity.

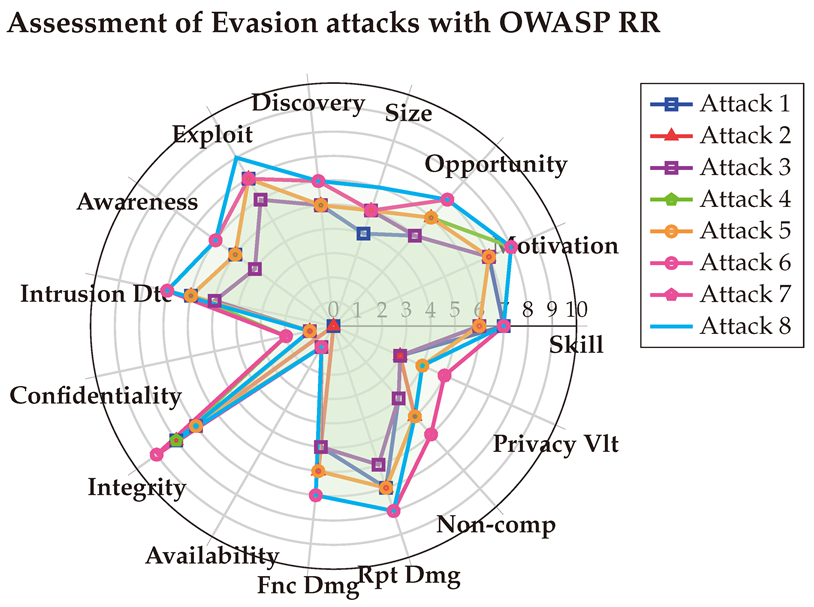

6.4.3. With OWASP Risk Rating

Another evaluation of Evasion attacks is done with OWASP Risk Rating [

140]. The corresponding vulnerability vectors of each attack is:

(1) → (SL:7/M:7/O:5/S:4/ED:5/EE:7/A:5/ID:6/LC:1/LI:8/LA:0/FD:5/RD:7/NC:4/PV:3) = 2.2 (Medium)

(2) → (SL:6/M:7/O:6/S:5/ED:5/EE:7/A:5/ID:6/LC:1/LI:8/LA:0/FD:6/RD:7/NC:5/PV:3) = 2.4 (Medium)

(3) → (SL:6/M:7/O:5/S:5/ED:5/EE:6/A:4/ID:5/LC:1/LI:7/LA:1/FD:5/RD:6/NC:4/PV:3) = 2 (Medium)

(4) → (SL:7/M:8/O:6/S:5/ED:5/EE:7/A:5/ID:6/LC:2/LI:8/LA:1/FD:7/RD:8/NC:5/PV:4) = 3 (High)

(5) → (SL:6/M:7/O:6/S:5/ED:5/EE:7/A:5/ID:6/LC:1/LI:7/LA:1/FD:6/RD:7/NC:5/PV:4) = 2.5 (Medium)

(6) → (SL:7/M:8/O:7/S:5/ED:6/EE:7/A:6/ID:7/LC:2/LI:9/LA:1/FD:7/RD:8/NC:6/PV:5) = 3.5 (High)

(7) → (SL:7/M:8/O:7/S:5/ED:6/EE:7/A:6/ID:7/LC:2/LI:9/LA:1/FD:7/RD:8/NC:6/PV:5) = 3.5 (High)

(8) → (SL:7/M:8/O:7/S:6/ED:6/EE:8/A:6/ID:7/LC:1/LI:8/LA:1/FD:7/RD:8/NC:5/PV:4) = 3.2 (High)

The detailed OWASP RR scoring is outlined in

Table A15, offering insights consistent with those from the CVSS assessments. It highlights that evasion attacks demand only a moderate level of skill and motivation to be executed, are easily exploitable, and are relatively unknown to defenders, making them challenging to detect and mitigate. These attacks pose a significant threat to data integrity while remaining harmless to Confidentiality and Availability. Additionally, OWASP RR sheds light on the substantial financial and reputational impact these attacks can impose on targeted organizations.

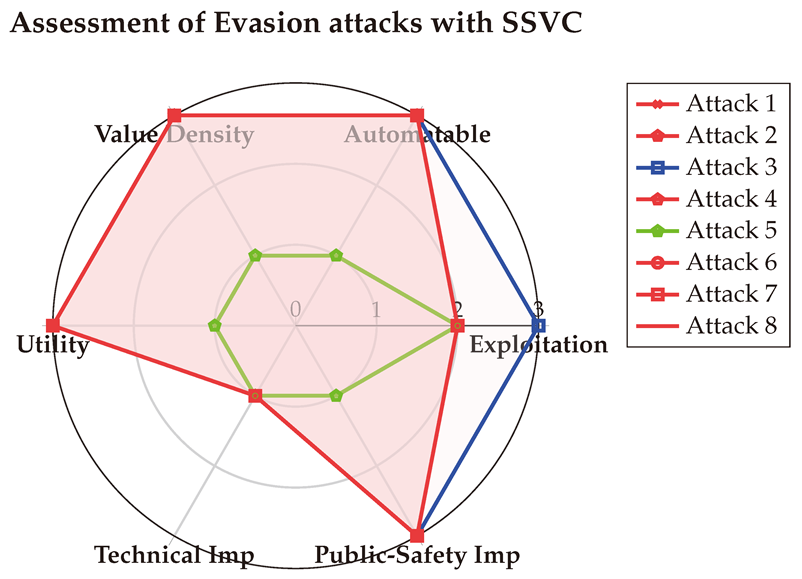

6.4.4. With SSVC

We continue the evaluation of Evasion attacks with SSVC [

128] as a last metric, the results of the assessments are shown and detailed below:

(1) → (E:P/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

(2) → (E:P/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

(3) → (E:A/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

(4) → (E:P/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

(5) → (E:P/A:N/V:D/U:L/T:P/P:M) = Scheduled (Medium)

(6) → (E:P/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

(7) → (E:P/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

(8) → (E:P/A:Y/V:C/U:S/T:P/P:S) = Immediate (Very High)

The scores are visualized below in a Spider-chart, with the detailed assessments provided in

Table A16. Notably, the SSVC results align with those of DREAD and OWASP RR, emphasizing that evasion attacks are frequently exploited by attackers. Additionally, SSVC highlights that these attacks are highly automatable and rewarding, making them particularly valuable to adversaries. However, it suggests that while evasion attacks pose minimal technical threats to organizations, their primary danger lies in their significant potential to compromise public safety, especially in scenarios involving object detection and classification.

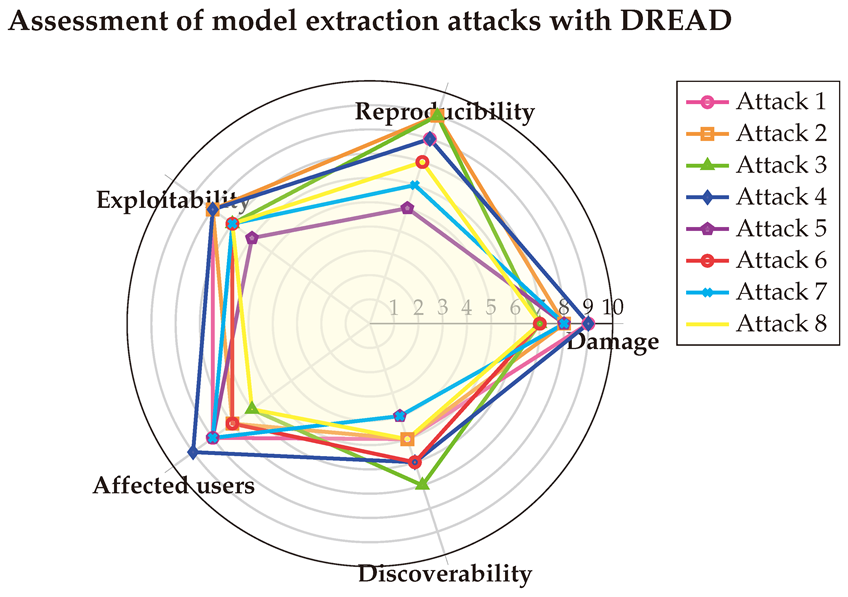

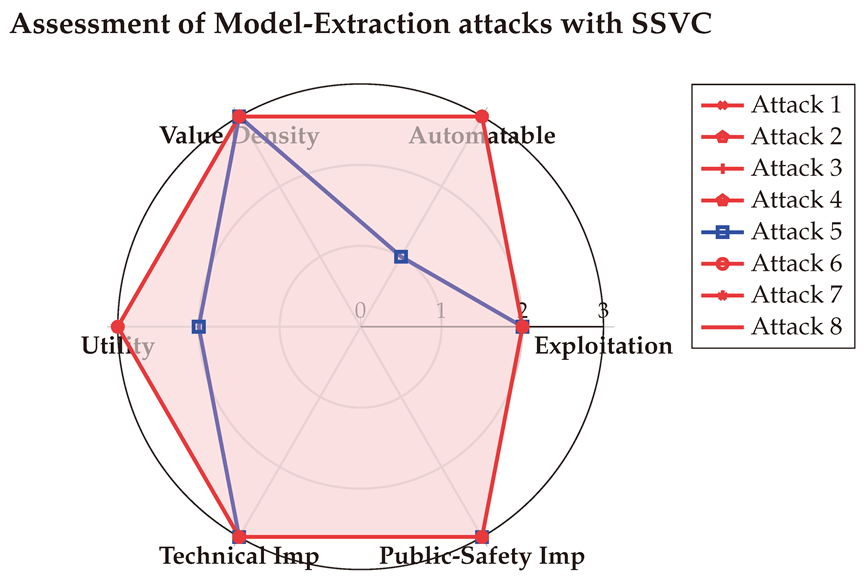

6.5. Assessment of Model Extraction attacks

Model Extraction are the fifth attacks we evaluate with the five vulnerability metrics. The attacks were presented earlier in

Section 4.4 and are respectively: (1) User Data Extraction [

12], (2) LLM Tricks [

156], (3) Analysing PII Leakage [

89], (4) ETHICIST [

162], (5) Scalable Extraction [

95], (6) Output2Prompt [

158], (7) PII-Compass [

94], (8) Alpaca VS Vicuna [

64].

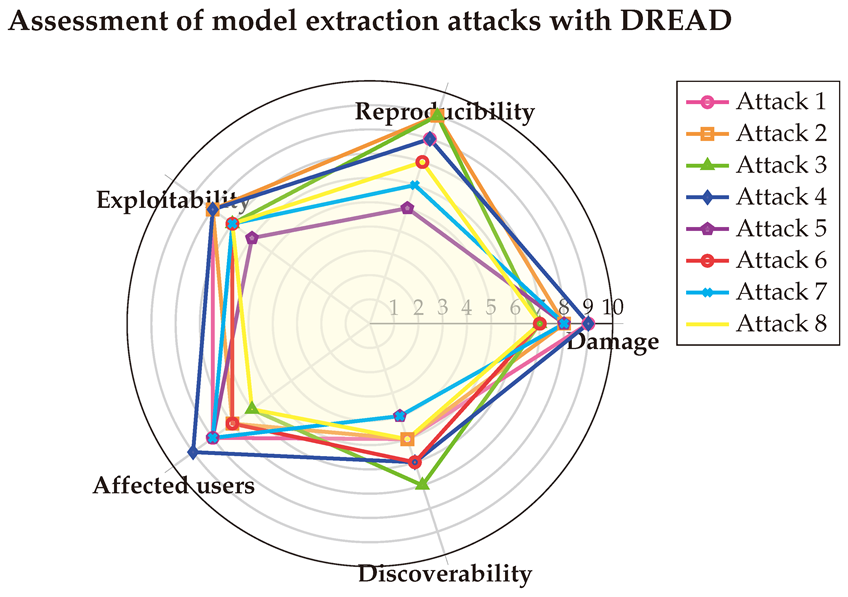

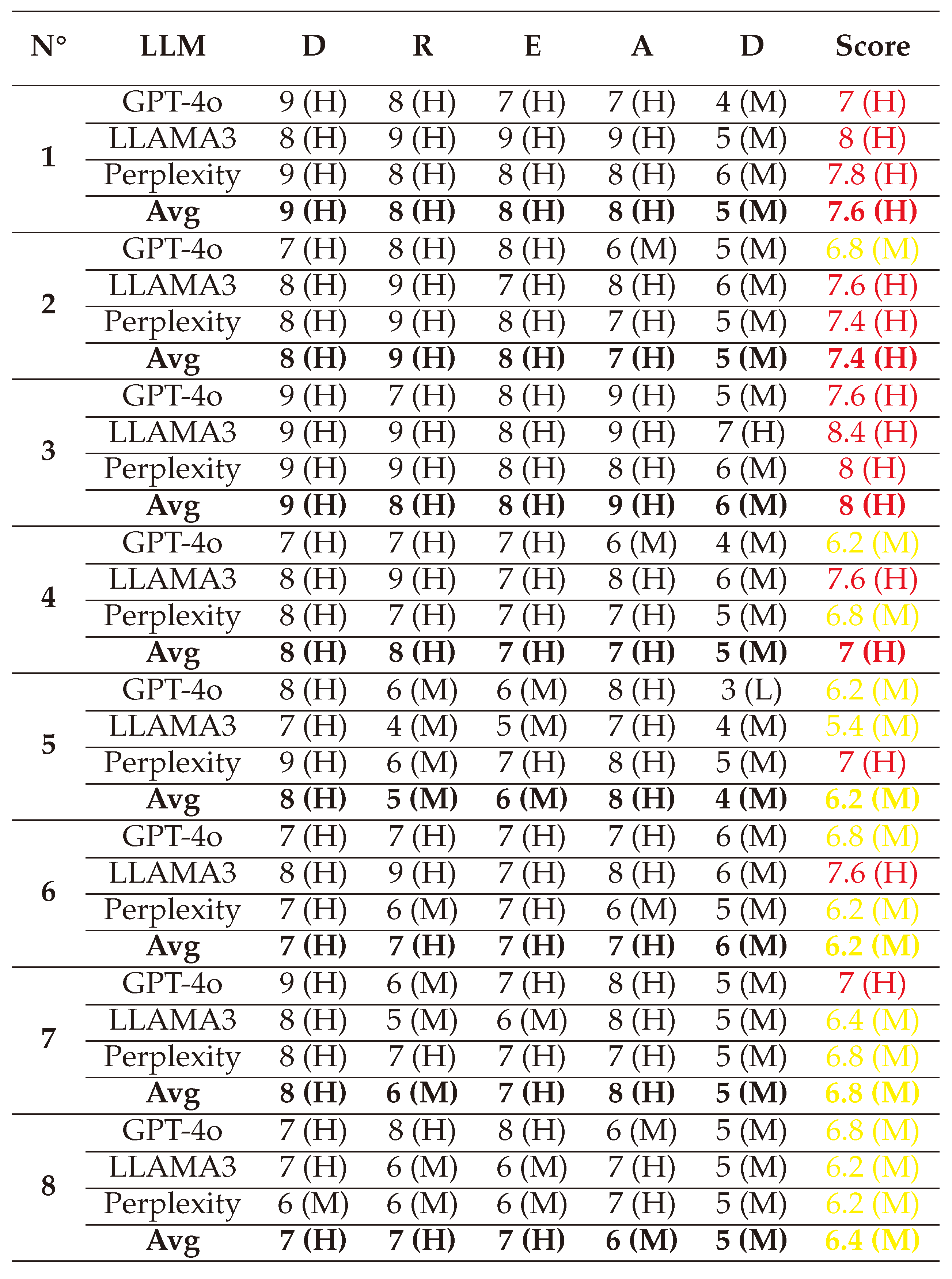

6.5.1. With DREAD

We start evaluating Extraction attacks using DREAD [

92]. The corresponding vulnerability vectors as follows:

(1) → (D:9/R:8/E:8/A:8/D:5) = 7.6 (High)

(2) → (D:8/R:9/E:8/A:7/D:5) = 7.4 (High)

(3) → (D:9/R:8/E:8/A:9/D:6) = 8 (High)

(4) → (D:8/R:8/E:7/A:7/D:5) = 7 (High)

(5) → (D:8/R:5/E:6/A:8/D:4) = 6.2 (Medium)

(6) → (D:7/R:7/E:7/A:7/D:6) = 6.8 (Medium)

(7) → (D:8/R:6/E:7/A:8/D:4) = 6.8 (Medium)

(8) → (D:7/R:7/E:7/A:6/D:5) = 6.4 (Medium)

The detailed scores for this fifth type of attack are shown in

Table A17, with the score vectors visualized in a Spider-chart below for clarity. The DREAD assessment reveals that the exploitability and discoverability of model extraction attacks vary depending on the specific implementation. However, all these attacks share a high level of danger to systems due to their potential to cause significant damage. Additionally, the analysis highlights that such attacks can directly or indirectly affect multiple users, while remaining relatively challenging to detect.

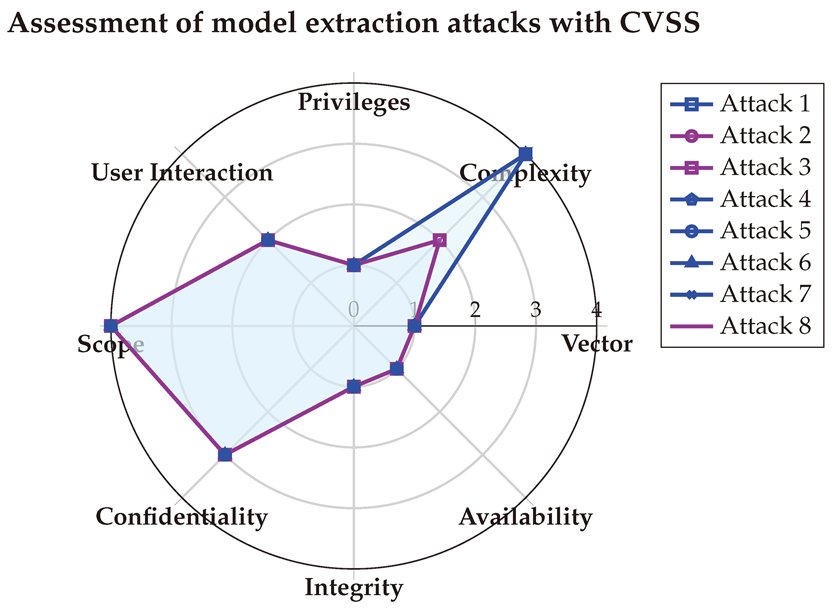

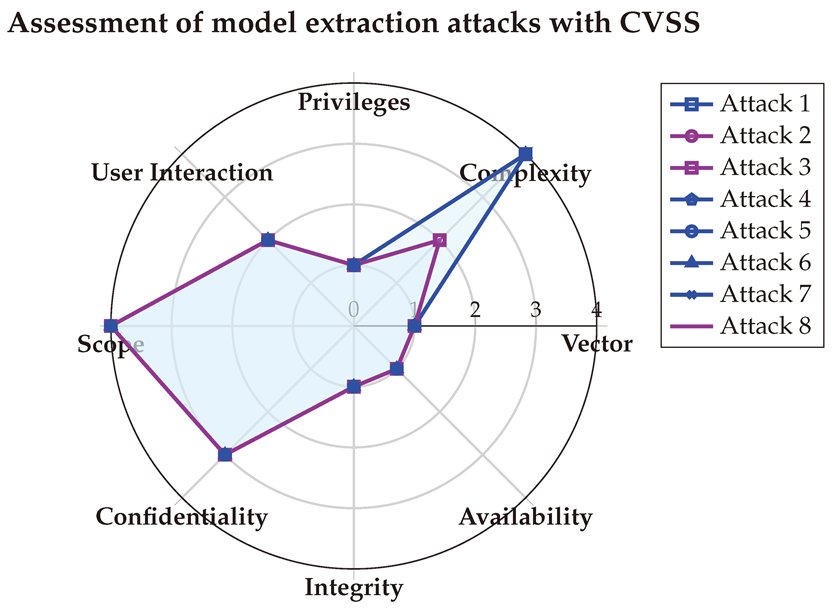

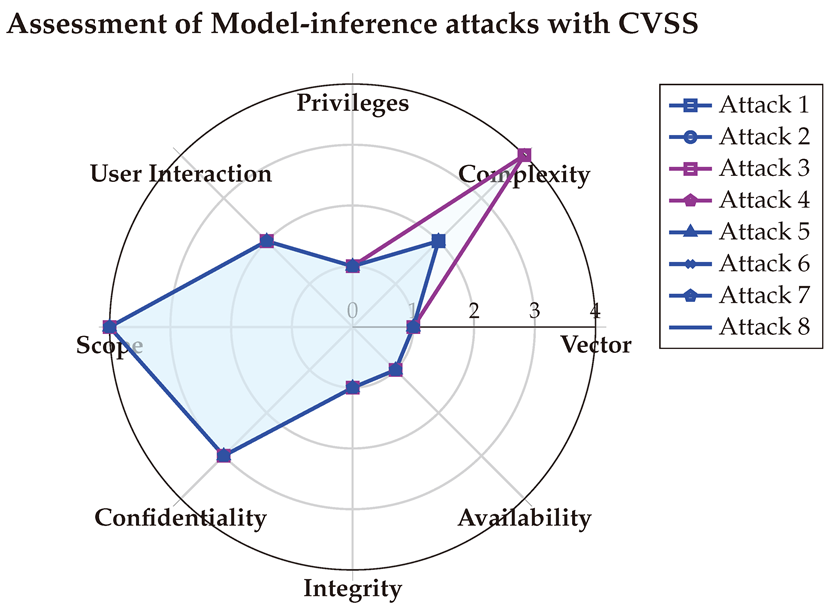

6.5.2. With CVSS

The second assessment of Model Extraction attacks is done with CVSS [

114]. The corresponding Vectors are:

(1) → (AV:N/AC:H/PR:N/UI:N/S:C/C:H/I:N/A:N) = 6.8 (Medium)

(2) → (AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:N/A:N) = 8.6 (High)

(3) → (AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:N/A:N) = 8.6 (High)

(4) → (AV:N/AC:H/PR:N/UI:N/S:C/C:H/I:N/A:N) = 6.8 (Medium)

(5) → (AV:N/AC:H/PR:N/UI:N/S:C/C:H/I:N/A:N) = 6.8 (Medium)

(6) → (AV:N/AC:H/PR:N/UI:N/S:C/C:H/I:N/A:N) = 6.8 (Medium)

(7) → (AV:N/AC:H/PR:N/UI:N/S:C/C:H/I:N/A:N) = 6.8 (Medium)

(8) → (AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:N/A:N) = 8.6 (High)

The CVSS scores assigned by each LLM are detailed in

Table A18, with the final vectors visualized in the Spider-chart below. Compared to DREAD, CVSS provides more granular insights into the nature of the damage caused by these attacks, particularly their impact on confidentiality. Additionally, the assessment highlights that these attacks typically do not require specific privileges or user interaction for execution. However, their scope can vary depending on the type of data extracted, making them broader in target range than previous attack types.

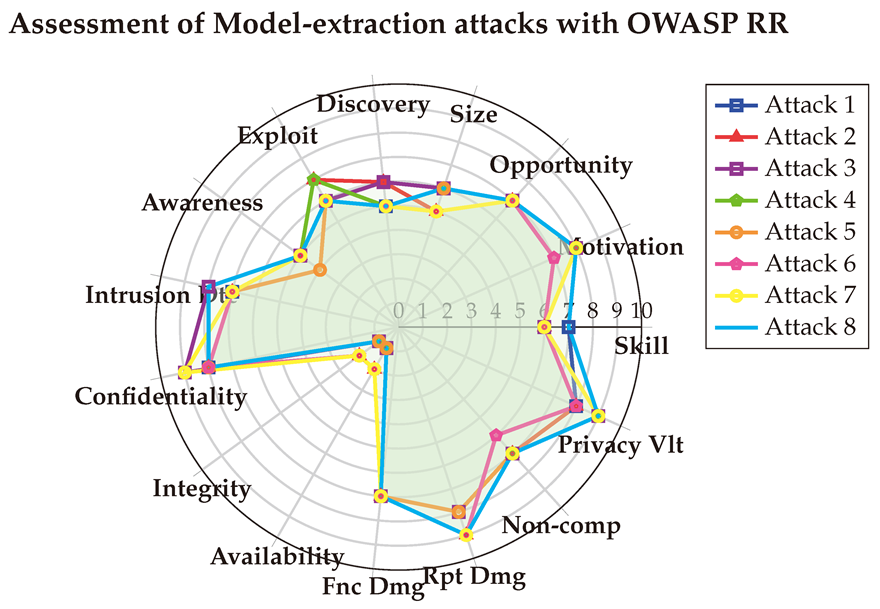

6.5.3. With OWASP Risk Rating

A third evaluation of Model Extraction attacks is done using OWASP RR [

140]. The corresponding vulnerability vectors of each attack is:

(1) → (SL:7/M:8/O:7/S:6/ED:5/EE:6/A:5/ID:7/LC:8/LI:1/LA:1/FD:7/RD:8/NC:7/PV:8) = 3.5 (High)

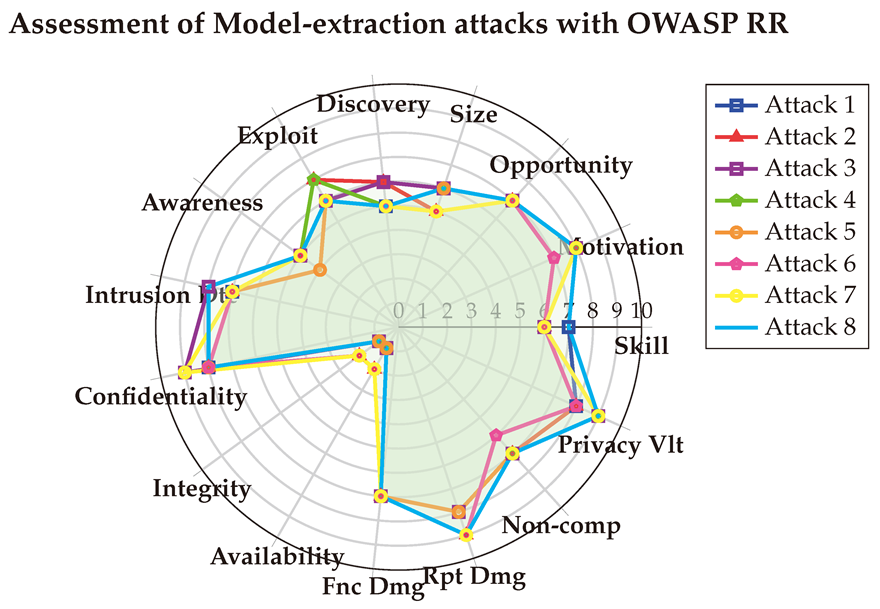

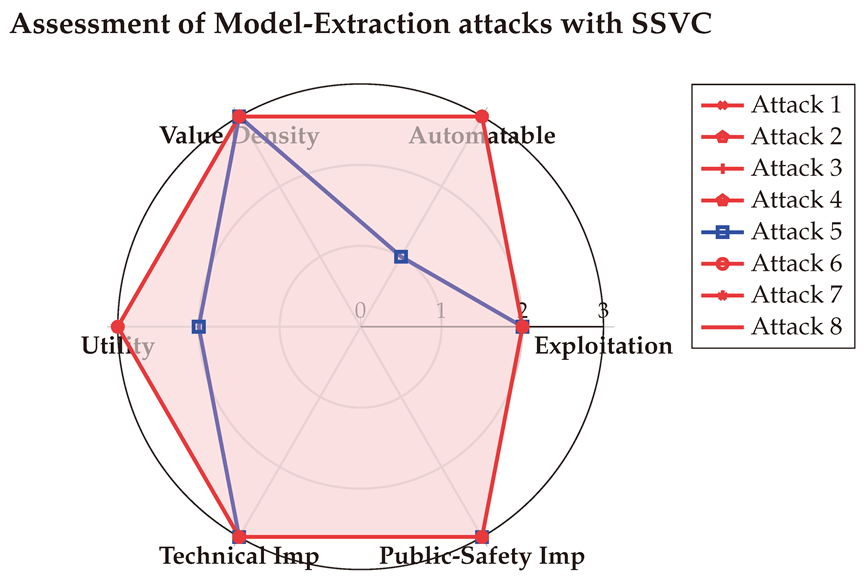

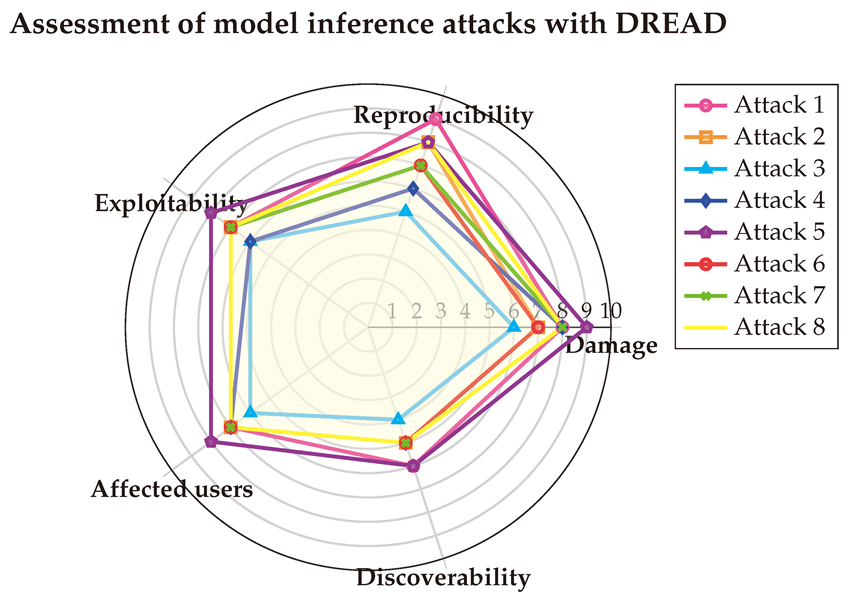

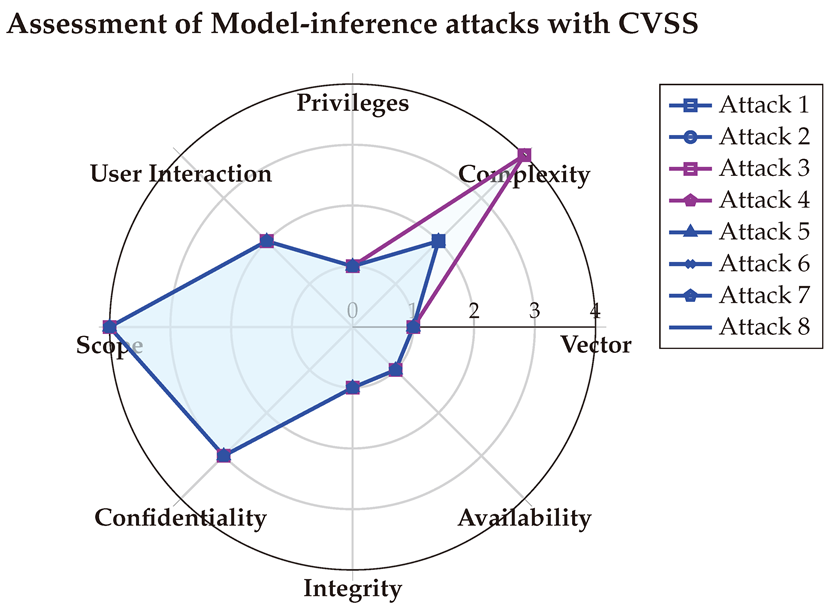

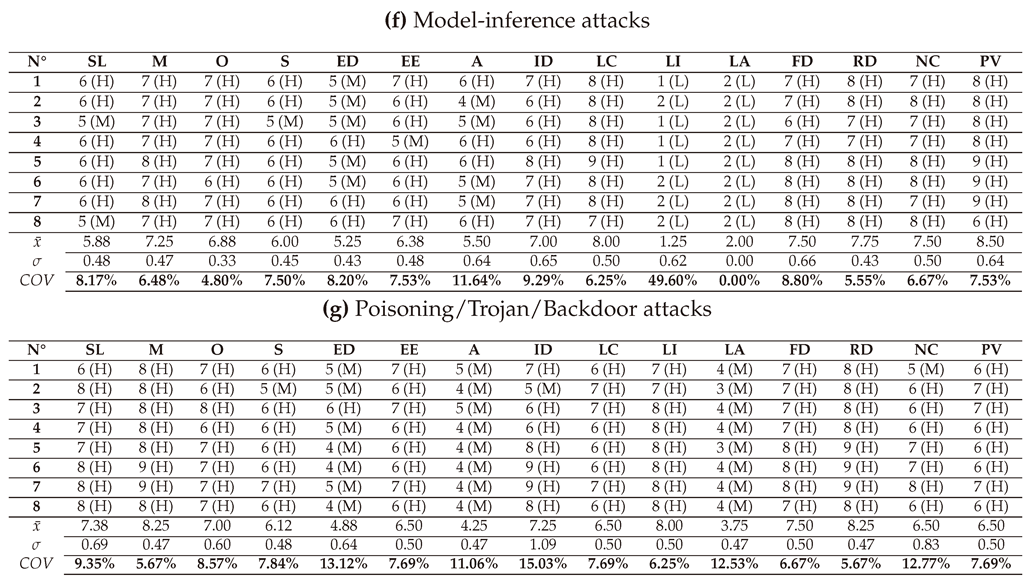

(2) → (SL:6/M:8/O:7/S:5/ED:6/EE:7/A:5/ID:7/LC:8/LI:2/LA:2/FD:7/RD:9/NC:7/PV:8) = 3.8 (High)