Submitted: 25 November 2024

Posted: 26 November 2024

Abstract

Keywords:

1. Introduction

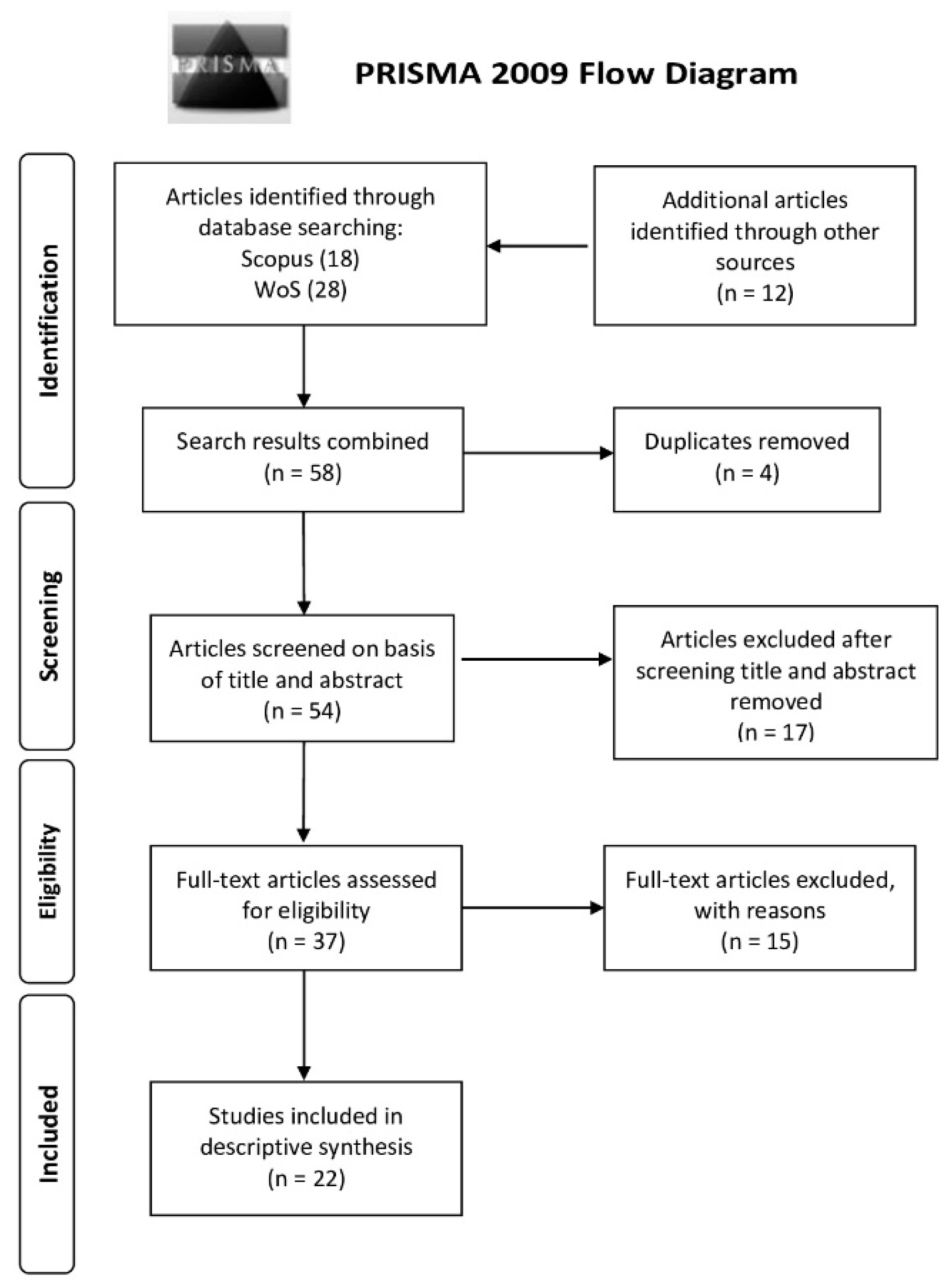

2. Materials and Methods

2.1. Scope and Research Questions

2.2. Eligibility Criteria

2.3. Sources and Search Strategy

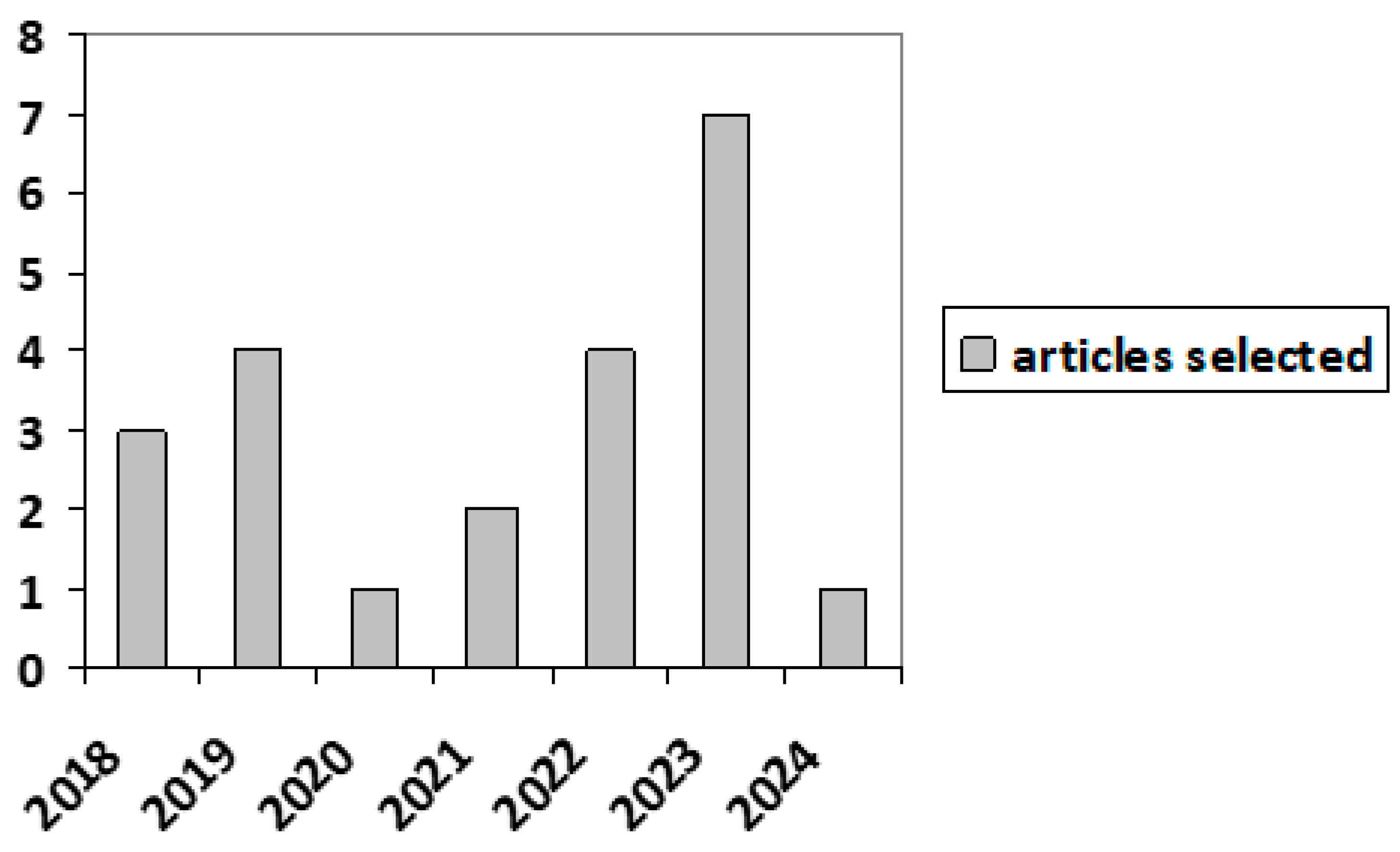

2.4. Selection of Studies

3. Results

3.1. Parts of a Real-Time ER

3.2. Experiences of Real-Time ER

3.3. Ethical and Privacy Issues

4. Discussion

5. Conclusions

6. Patents

Author Contributions

Funding

Conflicts of Interest

| ID | Research question |
|---|---|
| RQ 1 | Which patterns of ER are used? |
| RQ 2 | Which technology is used for real-time ER in classrooms? |
| RQ 3 | How is the technology designed to deliver information to teachers? |

| Inclusion criteria | Exclusion criteria |
|---|---|
| Real-time ER | Non-real-time ER |
| Educational purposes | Non-educational purposes |
| Face-to-face lessons | E-learning / virtual lessons |
| Empirical studies | Systematic reviews |
| Published after 2018 | Published before 2018 |
| Published articles in full-text form | Unpublished articles |
| English articles | Non-English articles |

| Parts of an ER system | Definition |
|---|---|
| Face detection | Algorithm that accurately detects the presence and position of faces in video or image sequences in real time |
| Pre-processing | Normalizes images, eliminates noise and enhances contrast to increase the accuracy of feature extraction in real time |
| Data processing | Handles the video or image stream and processes the extracted features and the classification results in real time |
| Feature extraction | Algorithm that extracts relevant facial features in real time (shape or position of facial landmarks, facial textures, facial muscle movement) |
| Training data | Large and varied dataset of annotated facial expressions used to train the classification model |
| Classification model | ML model, such as a deep neural network or a support vector machine, that learns from the extracted features to categorize facial expressions in real time |
| Output and feedback | Real-time component that displays the results and provides the user with information |
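To make the relationship between the parts listed in the table above more concrete, the following minimal sketch chains them into a single per-frame loop. It is an illustration under stated assumptions, not the design of any reviewed system: every function name is hypothetical, the face detector simply returns the whole frame, the features are two toy intensity means, the classifier is a nearest-centroid rule rather than a deep neural network or support vector machine, and the labels `class_a` and `class_b` are placeholders rather than emotion categories.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Frame = List[List[float]]        # grayscale image: rows of pixel intensities
Box = Tuple[int, int, int, int]  # x, y, width, height


def detect_face(frame: Frame) -> Box:
    """Face detection (toy stand-in): treat the whole frame as the face."""
    return 0, 0, len(frame[0]), len(frame)


def preprocess(frame: Frame, box: Box) -> Frame:
    """Pre-processing: crop to the box and min-max normalise to [0, 1]."""
    x, y, w, h = box
    crop = [row[x:x + w] for row in frame[y:y + h]]
    lo = min(min(row) for row in crop)
    hi = max(max(row) for row in crop)
    span = (hi - lo) or 1.0  # avoid division by zero on flat images
    return [[(p - lo) / span for p in row] for row in crop]


def _mean(rows: Frame) -> float:
    values = [p for row in rows for p in row]
    return sum(values) / len(values)


def extract_features(face: Frame) -> List[float]:
    """Feature extraction (toy): mean intensity of upper and lower halves."""
    half = len(face) // 2
    return [_mean(face[:half]), _mean(face[half:])]


def train_centroids(
    samples: Iterable[Tuple[List[float], str]]
) -> Dict[str, List[float]]:
    """Training data -> model: average the feature vectors of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for feats, label in samples:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, value in enumerate(feats):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {lab: [v / counts[lab] for v in acc] for lab, acc in sums.items()}


def classify(feats: List[float], centroids: Dict[str, List[float]]) -> str:
    """Classification model (toy): label of the nearest centroid."""
    def sq_dist(a: List[float], b: List[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda lab: sq_dist(feats, centroids[lab]))


def process_stream(
    frames: Iterable[Frame], centroids: Dict[str, List[float]]
) -> Iterator[str]:
    """Data processing and output: yield one label per incoming frame."""
    for frame in frames:
        box = detect_face(frame)
        face = preprocess(frame, box)
        yield classify(extract_features(face), centroids)


if __name__ == "__main__":
    # Hypothetical training set: two labelled feature vectors.
    model = train_centroids([([1.0, 0.0], "class_a"), ([0.0, 1.0], "class_b")])
    stream = [[[90, 80], [20, 30]], [[5, 5], [50, 60]]]
    for label in process_stream(stream, model):
        print(label)
```

Writing the last stage as a generator mirrors the real-time requirement in the table: each frame is detected, pre-processed, described and classified before the next one is read, so feedback can be shown to the user as the stream arrives.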

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).