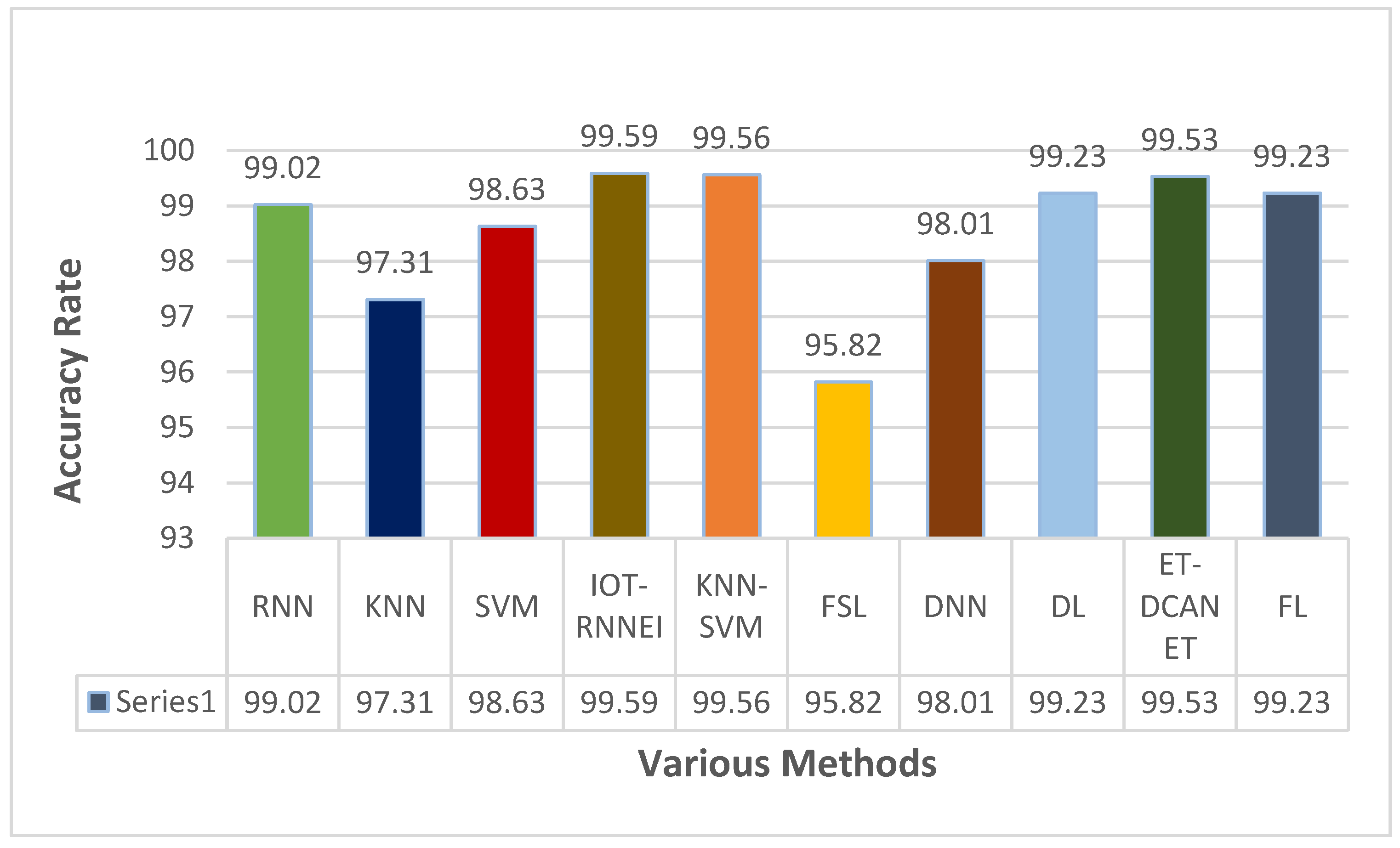

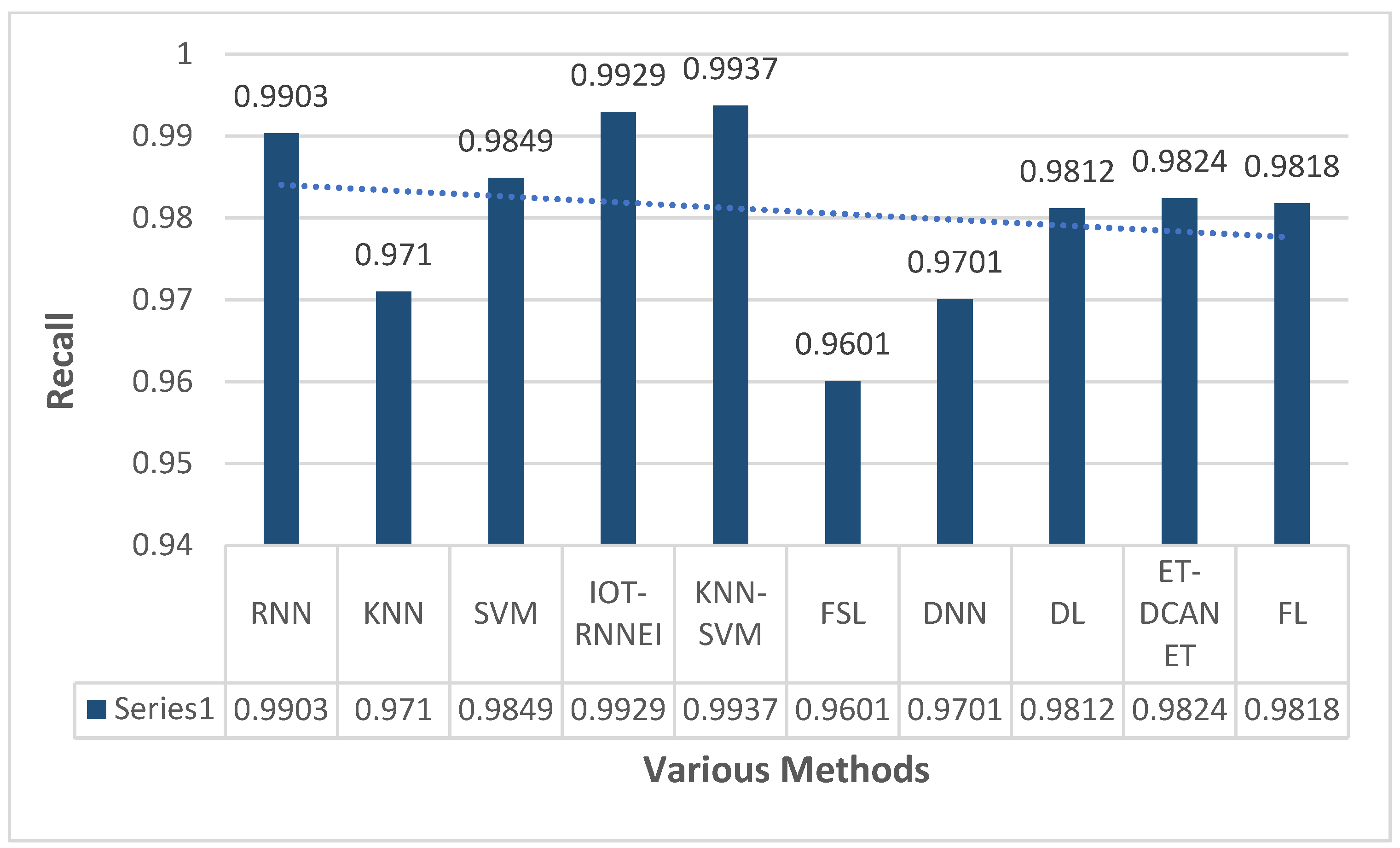

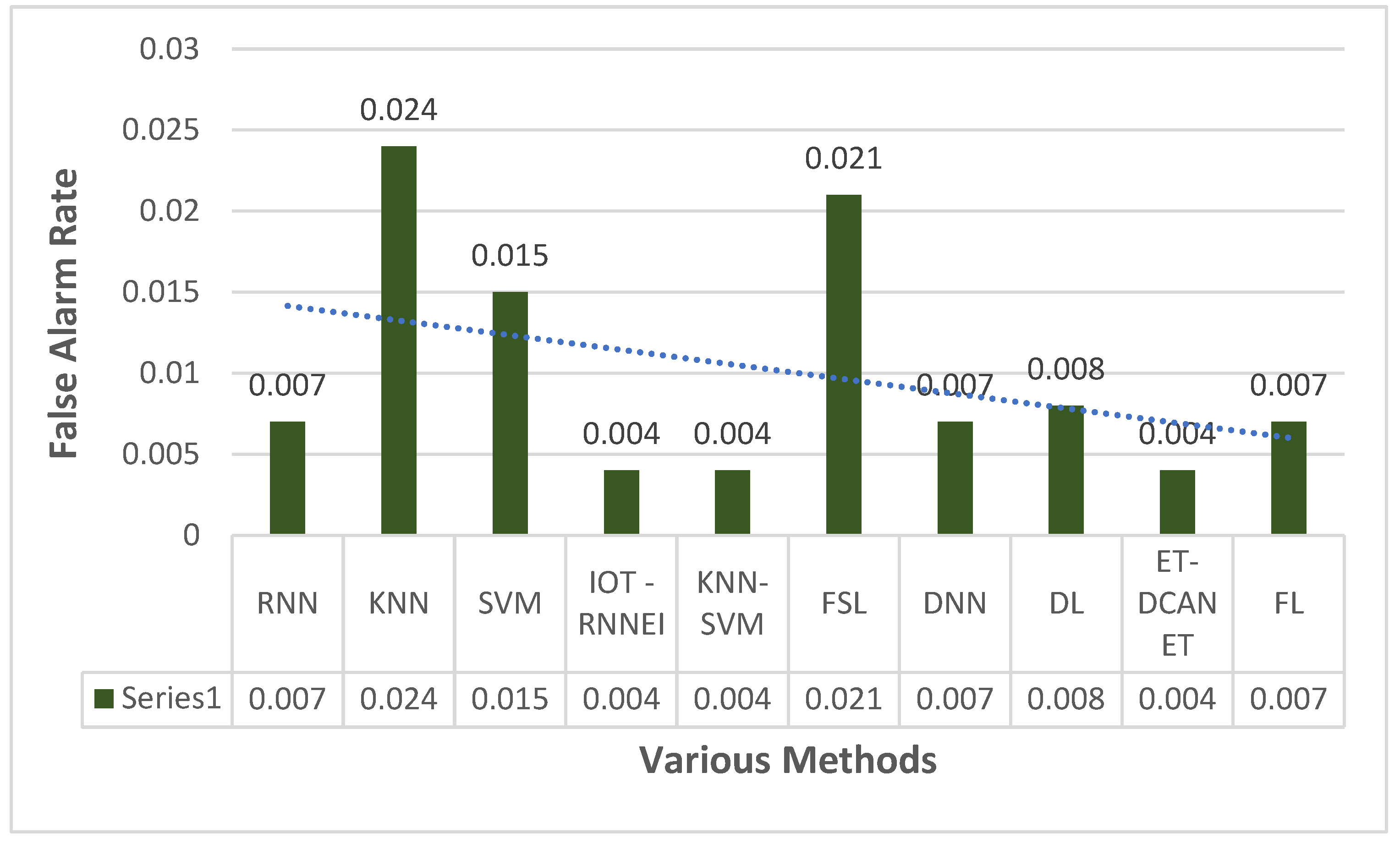

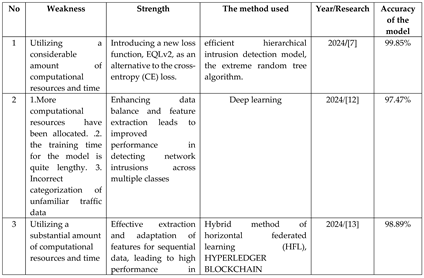

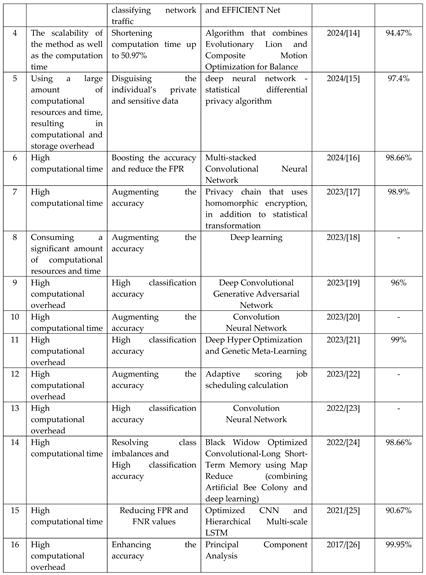

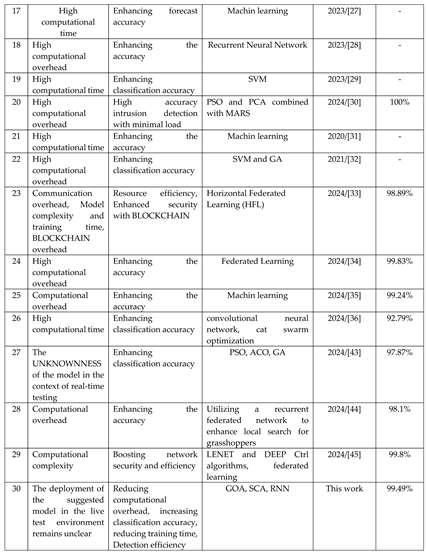

This section reviews research on attack detection systems for IoT applications, covering various machine learning methods applied to different datasets in this field.

Xin Yang et al. [7] introduced ET-DCANet, an efficient hierarchical intrusion detection model. The model uses the extremely randomized trees (extra trees) algorithm to select an optimal feature subset, then applies dilated convolution with a dual attention mechanism (channel attention and spatial attention). It moves from global to fine-grained learning by progressively decreasing the dilation rate of the dilated (atrous) convolutions. The model achieved an accuracy of 99.85%.

Wang et al. [12] introduced a deep learning (DL) based network intrusion detection model that aims to improve detection accuracy by extracting features from both the spatial and temporal aspects of network traffic data. The model also augments minority-class samples to handle data imbalance, and it achieved an accuracy of 97.47%.

Arindam Sakar et al. [13] proposed an intrusion detection system within a blockchain framework that leverages federated learning (FL) and an artificial neural network (ANN) based key exchange mechanism.

A. Subramaniam et al. [14] proposed an intrusion detection system for securing IoT networks (IDS-IoT Hybrid ELOA-BCMOA-Ensemble-DT-LSVM-RF-XGBoost) that performs feature selection with a hybrid Evolutionary Lion and Balancing Composite Motion Optimization Algorithm (ELOA-BCMOA) and classifies traffic with an ensemble classifier. This model achieved an accuracy of 94.47%.

G. Sathish Kumar et al. [15] introduced a statistical differential privacy deep neural network (DNN-SDP) algorithm to protect sensitive personal data. The input layer of the network received both numerical and categorical human-specific data. In the hidden layer, the statistical weight of evidence and information value methods were applied together with a random weight to obtain the initially perturbed data. A Laplace-based differential privacy mechanism then took this initially perturbed data as input and produced the final perturbed data. The DNN-SDP algorithm achieved 97.4% accuracy.

In [16]
the main goal was to develop suitable models and algorithms for data augmentation, feature extraction, and classification. The proposed TB-MFCC multi-fuse feature combined data augmentation and feature extraction. In the proposed signal augmentation, noise injection, stretching, shifting, and pitch shifting were applied separately to each audio signal, which increased the number of instances in the dataset and reduced overfitting in the network. The proposed Pooled Multi-Fuse Feature Augmentation (PMFA) with MCNN and A-BiLSTM raised the accuracy to 98.66%.

Sathish Kumar et al. [17] outlined a homomorphic encryption scheme, STHE, that uses a privacy chain together with the weight of evidence and information value of the statistical transformation method to safeguard users' private and sensitive information. The STHE algorithm ensures that perturbing numerical and categorical values from multiple datasets does not reduce data utility.

Elsewhere, [18] proposed a new lightweight forecasting model using time-series data from Kaggle. The dataset was processed with deep learning techniques, and the Long Short-Term Memory (LSTM) network produced higher accuracy than the other deep learning methods compared.

In [19]
the class imbalance problem was addressed using Generative Adversarial Networks (GANs), with an equal number of training and test images used to improve accuracy; prediction accuracy was further enhanced by a multi-piled Deep Convolutional Generative Adversarial Network (DCGAN).

In [20], an automatic license plate recognition (ALPR) system was used to extract a vehicle's license plate (LP). Although ALPR is often regarded as a solved problem in computer vision, the proposed methodology applied a Convolutional Neural Network (CNN).

In [21], a quadratic static method was used to find the correlation between features, improving dimensionality reduction of the dataset, and a deep over-optimization and genetic hyper-learning model (DHO-GML) was applied to perform classification efficiently by selecting the optimized model. The proposed model achieved an accuracy above 99%.

In another study, [22] designed an architecture and algorithms that support fine-tuned static allocation scheduling for distributed computing environments with dynamic allocation of virtual machines. The design integrated soft real-time task scheduling algorithms, particularly for master-node and virtual-machine-node scheduling.

In [23]
a model was used to increase cognitive efficiency in Artificial General Intelligence (AGI), improving the agent's image classification and object localization. The system, which uses RNNs and CNNs, is intended to let any user carry out creative work: it takes a sample input and produces output based on that input.

P. R. Kanna and P. Santhi [24] presented an efficient hybrid IDS model built on a MapReduce-based optimized Black Widow convolutional long short-term memory (BWO-CONV-LSTM) network for intrusion detection in online systems. In the first stage, features are selected with the Artificial Bee Colony (ABC) algorithm; in the second, the hybrid BWO-CONV-LSTM deep learning classifier, running on a MapReduce framework, detects intrusions in system traffic data.

P. Rajesh Kanna and P. Santhi [25] proposed a high-accuracy intrusion detection model that integrates an optimized CNN (OCNN) with a hierarchical multiscale LSTM (HMLSTM) to effectively extract and learn spatio-temporal features. The model performs pre-processing, feature extraction through network training and testing, and final classification.

In [26]
a new DoS attack detection system was introduced that combines the previously developed multivariate correlation analysis (MCA) technique with EMD-L1. MCA extracts the correlations between each pair of distinct features within every network traffic record, giving a more accurate characterization of network traffic behavior, while EMD-L1 enables the system to distinguish both known and unknown DoS attacks from legitimate network traffic.

In [27]
the authors proposed a system that recommends suitable crops for a region, and suitable fertilizers for those crops, to farmers based on soil measurements and past years' yield history. This crop yield forecasting and fertilizer recommendation system uses machine learning; the random forest and K-means clustering algorithms proved successful in predicting crop suitability and recommending fertilizers. The dataset for the Salem region contains a number of factors used for this purpose.

The approach in [28] used deep learning, specifically a Recurrent Neural Network (RNN), to detect leaf diseases. The dataset consists of about 53,000 images of infected and healthy leaves from fruits and vegetables such as apple, orange, and strawberry, and the network was trained to improve prediction accuracy.

Elsewhere, [29] studied a water quality monitoring system that predicts water quality with machine learning. The aim was to create a water quality prediction model using an Artificial Neural Network (ANN) and time-series analysis, with the choice of prediction model depending on the specific data and investigation goals.

In [30], researchers studied feature reduction, feature selection, and machine learning models for detecting attack traffic in industrial IoT networks, combining particle swarm optimization (PSO) and principal component analysis (PCA) with multivariate adaptive regression splines (MARS) or generalized additive models (GAM).

In another study, [31] proposed a framework for a precision sprayer system. The developed device aimed to reduce pesticide use by spraying individual targets precisely and setting the spray spacing according to the target. A smart robotic system for spraying pesticides in cultivated fields, controlled remotely rather than by manual spraying of crops, would reduce direct human exposure to pesticides and pesticide harm to people while improving production efficiency.

In [32]
the authors diagnosed heart disease using a Support Vector Machine (SVM) and a Genetic Algorithm (GA) as the data analysis approach, applying AI methods to raw data to provide novel insights into coronary artery disease.

Anittha Govindaram and Jegatheesan A. [33] presented FLBC-IDS, a technique that uses HFL, Hyperledger blockchain, and EfficientNet for effective intrusion detection in IoT environments. HFL enables the FLBC-IDS model to perform secure, privacy-preserving training across a wide range of IoT devices, providing decentralized data privacy and optimized resource utilization. The model achieved an accuracy of 98.89%, a recall of 98.044%, an F1-score of 98.29%, and a precision of 98.44%.

The authors of [34] created a model that combines federated learning with distributed computing resources and the decentralized features of blockchain in an intrusion detection framework called IoV-BCFL, which supports distributed intrusion detection and reliable logging with privacy protection.

Elsewhere, [35] introduced MP-GUARD, a novel framework that uses software-defined networking (SDN), machine learning (ML), and a multi-controller architecture to address intrusion detection challenges. MP-GUARD tackles multi-pronged intrusion attacks in IoT networks by offering real-time intrusion detection, collaborative traffic monitoring, and multi-layered attack mitigation. It achieved 99.32% accuracy, a 99.24% F1-score, and a 0.49% false positive rate.

In another study, [36] presented a new intrusion detection system designed around big data management. Intrusion detection was performed by a hybrid model that fuses long short-term memory (LSTM) with an optimized convolutional neural network (CNN), and an optimization-assisted training algorithm, elephant adapted cat swarm optimization (EA-CSO), was proposed to tune the CNN weights and improve detection performance.

In [43]
a new lightweight intrusion detection system was designed in two phases using swarm intelligence. In the first phase, the essential features were selected with the particle swarm optimization (PSO) algorithm, taking the imbalanced dataset into account; in the second, the ant colony optimization (ACO) algorithm extracted informative, non-redundant features. A genetic algorithm was also used to fine-tune each detection model. The model achieved an accuracy of 97.87%.

In [44]
, an advanced local search locus algorithm was proposed based on recurrent federated network (RFN-ELG). Datasets such as UNSW-NB15 and MQTT-IoT-IDS2020 dataset were used to determine IIOT performance through two different phases, i.e. data preprocessing phase as well as attack detection phase. In a different study, [

45] proposed a client selection method using clustering to identify malicious clients by analyzing their run time. This was paired with a trigger-set-based encryption system for client authentication, achieving a model accuracy of 99.8%. A comparison of the various approaches for analyzing the research gap is presented in

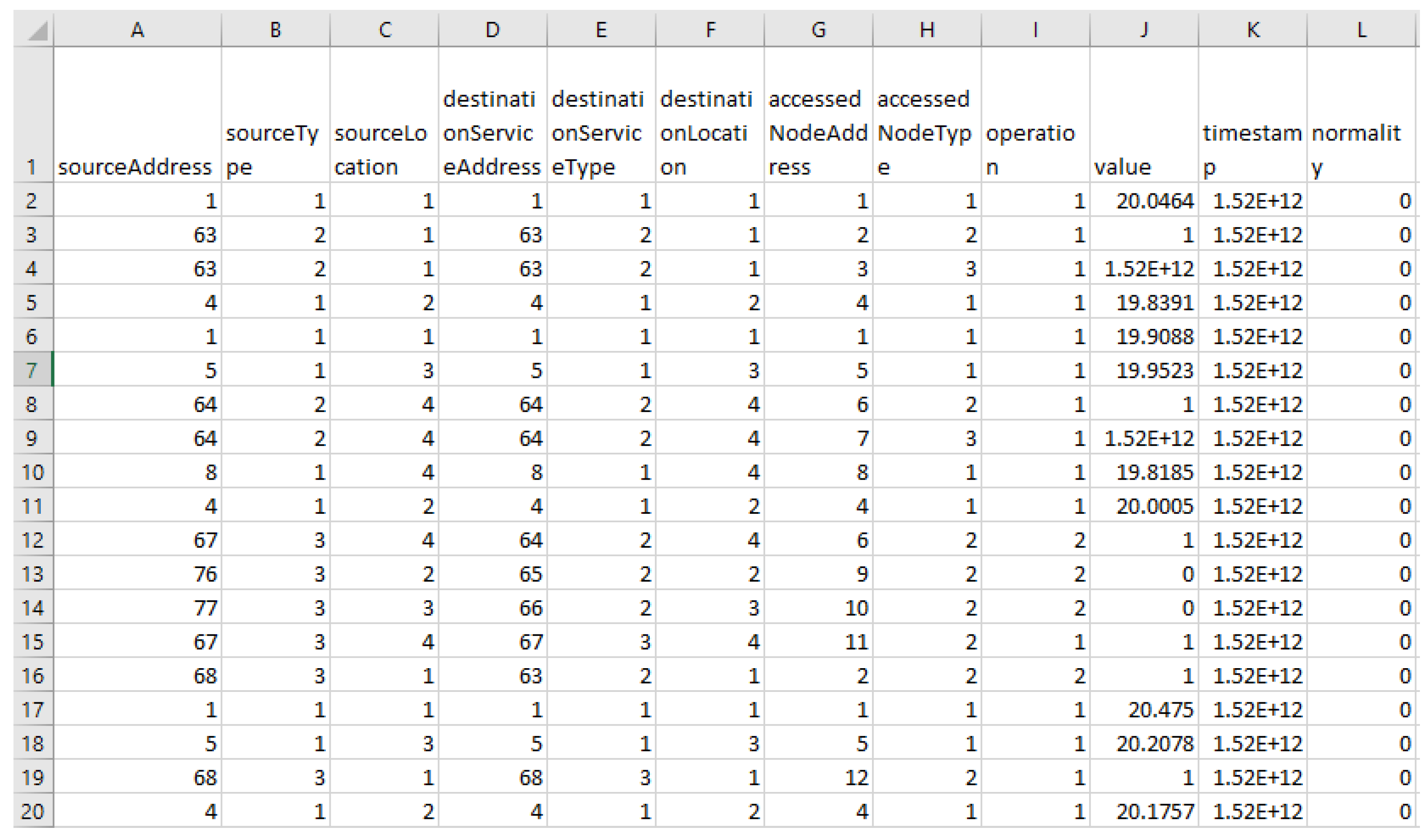

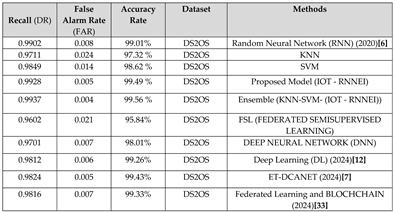

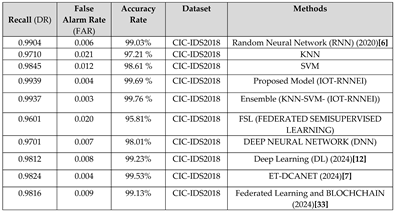

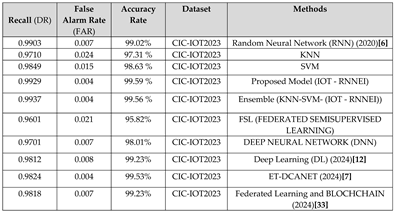

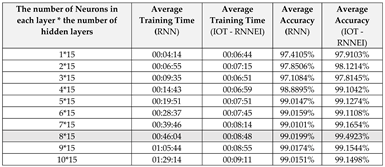

Table 1.
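Two of the privacy-preserving schemes reviewed above, DNN-SDP [15] and STHE [17], build on standard statistical primitives: weight of evidence (WoE) and information value (IV) for encoding categorical features, and the Laplace mechanism of differential privacy. The Python sketch below illustrates only these generic primitives under their textbook definitions; it is not the implementation from [15] or [17], which additionally apply a random weight in a hidden layer. The function names `woe_iv` and `laplace_mechanism`, the smoothing constant `eps`, and the toy data are illustrative assumptions.

```python
import math
import random
from collections import Counter


def woe_iv(categories, labels, eps=0.5):
    """Return (WoE per category, total IV) for one categorical feature.

    Illustrative sketch only, not the method of [15]/[17].
    Here WoE(c) = ln(P(c | label=1) / P(c | label=0)) and
    IV = sum_c (P(c | 1) - P(c | 0)) * WoE(c).
    `eps` is an assumed additive smoothing term that avoids log(0)
    for categories observed with only one label.
    """
    pos = Counter(c for c, y in zip(categories, labels) if y == 1)
    neg = Counter(c for c, y in zip(categories, labels) if y == 0)
    cats = sorted(set(categories))
    total_pos = sum(pos.values()) + eps * len(cats)
    total_neg = sum(neg.values()) + eps * len(cats)
    woe, iv = {}, 0.0
    for c in cats:
        p = (pos[c] + eps) / total_pos  # smoothed share of positives in c
        n = (neg[c] + eps) / total_neg  # smoothed share of negatives in c
        woe[c] = math.log(p / n)
        iv += (p - n) * woe[c]
    return woe, iv


def laplace_mechanism(value, sensitivity, epsilon, rng=random):
    """Return value + Laplace(0, sensitivity / epsilon) noise.

    For a query with the given L1 sensitivity this is epsilon-differentially
    private. The difference of two i.i.d. exponential draws with mean `scale`
    is Laplace(0, scale)-distributed.
    """
    if epsilon <= 0 or sensitivity <= 0:
        raise ValueError("epsilon and sensitivity must be positive")
    scale = sensitivity / epsilon
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return value + noise


if __name__ == "__main__":
    # Hypothetical toy data: protocol field vs. attack label (1 = attack).
    proto = ["tcp", "tcp", "udp", "udp", "icmp", "icmp", "tcp", "udp"]
    label = [0, 1, 0, 0, 1, 1, 0, 0]
    woe, iv = woe_iv(proto, label)
    print({c: round(w, 3) for c, w in woe.items()}, round(iv, 3))

    # Perturb a count query (sensitivity 1) with privacy budget epsilon = 0.5.
    rng = random.Random(0)
    print(laplace_mechanism(42, sensitivity=1.0, epsilon=0.5, rng=rng))
```

A smaller `epsilon` yields a larger noise scale (`sensitivity / epsilon`) and therefore stronger privacy at the cost of accuracy, which is why schemes such as STHE [17] emphasize preserving data utility after perturbation.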