1. Introduction

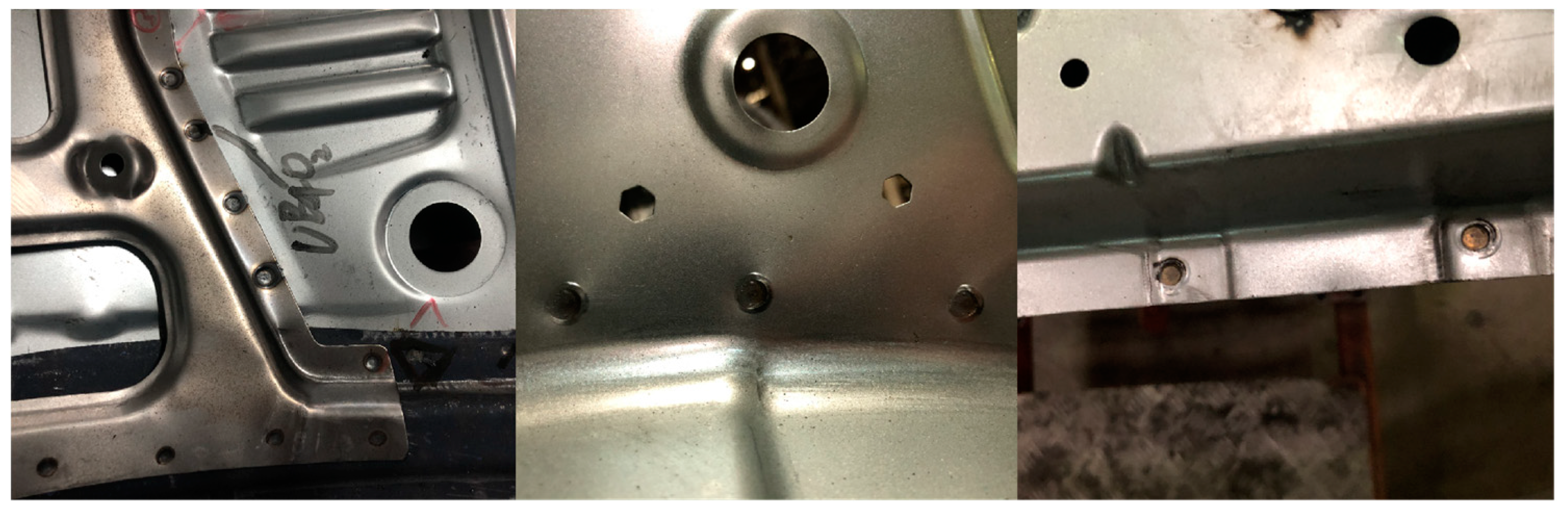

Weld spot quality is a crucial factor in the reliability of auto-body manufacturing. In the body-in-white (BIW) production phase, resistance spot welding (RSW) is widely used. Many traditional image processing techniques were adopted in previous work [1]. However, these methods do not work well under environmental factors such as vibration, dust, and lighting variation, all of which commonly occur during inspection [2]. Therefore, manual visual inspection is still used for surface defect inspection in many workshops.

At present, quality inspection of BIW welds mainly falls into two types of methods: non-visual methods and visual inspection methods [3]. These methods can also be divided into non-destructive and destructive testing. Non-visual methods mainly include ultrasonic testing, X-ray inspection, dynamic resistance monitoring, tensile testing, and electromagnetic testing [4].

Ultrasonic non-destructive testing (NDT) is widely applied in the evaluation of resistance spot welds. By emitting high-frequency sound waves and capturing their reflections, it can reveal internal defects such as cracks or porosity within welds. Mirmahdi et al. [5] provided a comprehensive review of ultrasonic testing for resistance spot welding, highlighting its effectiveness in determining the integrity of weld spots. Similarly, Yang et al. [6] explored the use of ultrasonic methods to assess weld quality, demonstrating their accuracy in identifying internal inconsistencies. Amiri et al. [7] used a neural network to study the relationship between ultrasonic testing results and the tensile strength and fatigue life of spot-welded joints.

X-ray imaging is another well-established NDT method used to examine the internal structure of spot welds. This technique creates radiographic images that allow for the identification of defects such as voids or incomplete fusion. Juengert et al. [8] employed X-ray technology to inspect automotive spot welds, showcasing its ability to reveal internal defects that may compromise weld strength. Similarly, Maeda et al. [9] investigated X-ray inspection of aluminum alloy welds, confirming its capability in the non-invasive evaluation of weld quality.

Dynamic resistance monitoring involves analyzing changes in welding current, voltage, and resistance during the welding process to infer weld quality. Butsykin et al. [10] explored the application of dynamic resistance in real-time quality evaluation of resistance spot welds, suggesting that fluctuations in resistance provide key indicators of weld formation. Wang [11] further developed this approach, demonstrating its effectiveness in detecting weld faults through real-time monitoring of resistance signals.

Visual inspection, combined with the tapping method, is a simple yet widely used approach for surface quality checks. By examining the weld for surface defects such as burns or inconsistencies, it provides initial insights into weld integrity. Dahmene [12] integrated visual inspection with acoustic emission to enhance defect detection in spot welding, illustrating the method's potential when combined with other techniques. Similarly, Hopkinson et al. applied acoustic testing to assess weld integrity, revealing a correlation between sound emissions and weld defects.

Tensile testing, a destructive evaluation method, is employed to measure the mechanical strength of spot welds by applying stress until failure occurs. Safari [13] investigated the tensile-shear performance of spot welds, providing insights into the relationship between mechanical properties and weld quality. D.J. et al. [14] examined failure modes in tensile testing, offering a detailed analysis of the impact of weld conditions on shear strength and failure behavior.

Electromagnetic testing is a non-contact method that evaluates the electromagnetic properties of welds to detect imperfections. Tsukada et al. [15] utilized magnetic flux leakage testing to detect imperfections in automotive parts, affirming its role in efficient weld inspection. They also demonstrated the use of electromagnetic NDT for quality assessment in resistance spot welding, showing how variations in magnetic signals can indicate weld defects.

Since an automobile workshop can generate batches of standard data very quickly, an automatic spot welding vision inspection system based on deep learning is a suitable choice. Computer vision systems leverage high-resolution imaging and advanced image processing algorithms to inspect the external characteristics of welds, such as size, shape, and surface defects. Ye et al. [16] applied such a system to the quality inspection of RSW, showcasing the potential of automated optical systems for large-scale manufacturing. Yang et al. [17] developed an automated visual inspection system that combines image processing with artificial intelligence to enhance spot weld assessment.

Owing to the powerful perception, recognition, and classification capabilities of deep learning, deep-learning-based target detection is now an active research field. Its algorithms can be divided into one-stage and two-stage methods. Typical target detection algorithms are shown in Table 1.

Transformer models are becoming increasingly popular in computer vision, particularly in tasks such as surface defect detection. The attention mechanisms in Transformers allow them to capture global context and long-range dependencies across an image, which is critical for identifying subtle or dispersed surface defects. Recent studies have proposed using Vision Transformers (ViTs) to detect surface irregularities, benefiting from their ability to model both local and global features without the need for convolutions, which is particularly effective for detecting complex surface patterns in materials. Vision Transformers have been shown to outperform convolutional models on various visual recognition tasks, including defect detection on manufacturing surfaces, by using self-attention to capture relationships between distant parts of the image.

YOLO is a real-time object detection model known for its speed and accuracy. In surface defect detection, YOLO's single-shot approach is particularly advantageous because it processes the entire image at once, making it highly efficient for real-time industrial applications. YOLO models have been adapted to detect surface defects in manufacturing industries, especially in steel and fabric inspection. The model treats defects as "objects" and uses bounding boxes to localize anomalies in a single pass. Dai et al. [4] proposed an improved YOLOv3 method that uses MobileNetV3.

The SSD model is another real-time object detection algorithm that excels in speed and accuracy, making it well suited for surface defect detection in industrial settings. Like YOLO, SSD processes images in a single pass, but it uses multiple feature maps of different sizes to detect objects (or defects) at various scales. This makes it particularly effective for detecting defects that vary in size, such as small scratches or large dents on a surface. Li et al. [27] proposed an SSD-based model to detect surface defects in ceramic materials, showing that the multi-scale feature extraction capability of SSD is beneficial for identifying both small and large defects in real-time production environments.

The R-CNN family of models is widely applied for object detection and has been adapted for surface defect detection due to its robust performance in handling region proposals and localization. R-CNN models generate region proposals that likely contain defects, which are then classified and localized. Although slower than YOLO and SSD, R-CNNs are highly accurate and are often used in applications where detection precision is paramount. Wang et al. [28] applied Mask R-CNN to detect rail surface defects, demonstrating that despite the slower inference time, the model's high precision is advantageous for identifying critical defects that require exact localization.

SPPNet improves upon traditional CNNs by introducing spatial pyramid pooling, which allows the model to handle images of varying sizes without the need for resizing. This feature makes SPPNet particularly useful in surface defect detection tasks, where defects might appear at different scales across different products. The model's ability to pool spatial information at multiple scales enhances its ability to detect both small and large surface defects. A number of studies have employed SPPNet to detect defects on the surface of metallic materials, finding that the multi-scale pooling allowed the model to capture details of both microscopic surface roughness and larger structural defects.

Faster R-CNN extends R-CNN by integrating a Region Proposal Network (RPN) that significantly accelerates the generation of region proposals. Faster R-CNN combines the precision of R-CNN with greater computational efficiency, making it suitable for detecting defects in industrial applications where accuracy and speed are both critical. This model has been widely utilized in detecting surface cracks, scratches, and other anomalies in BIW. Luo et al. [29] developed an FPC surface defect detection method based on the Faster R-CNN object detection model, achieving high precision and recall while maintaining a reasonable inference speed, which makes it well suited to quality control in high-precision manufacturing.

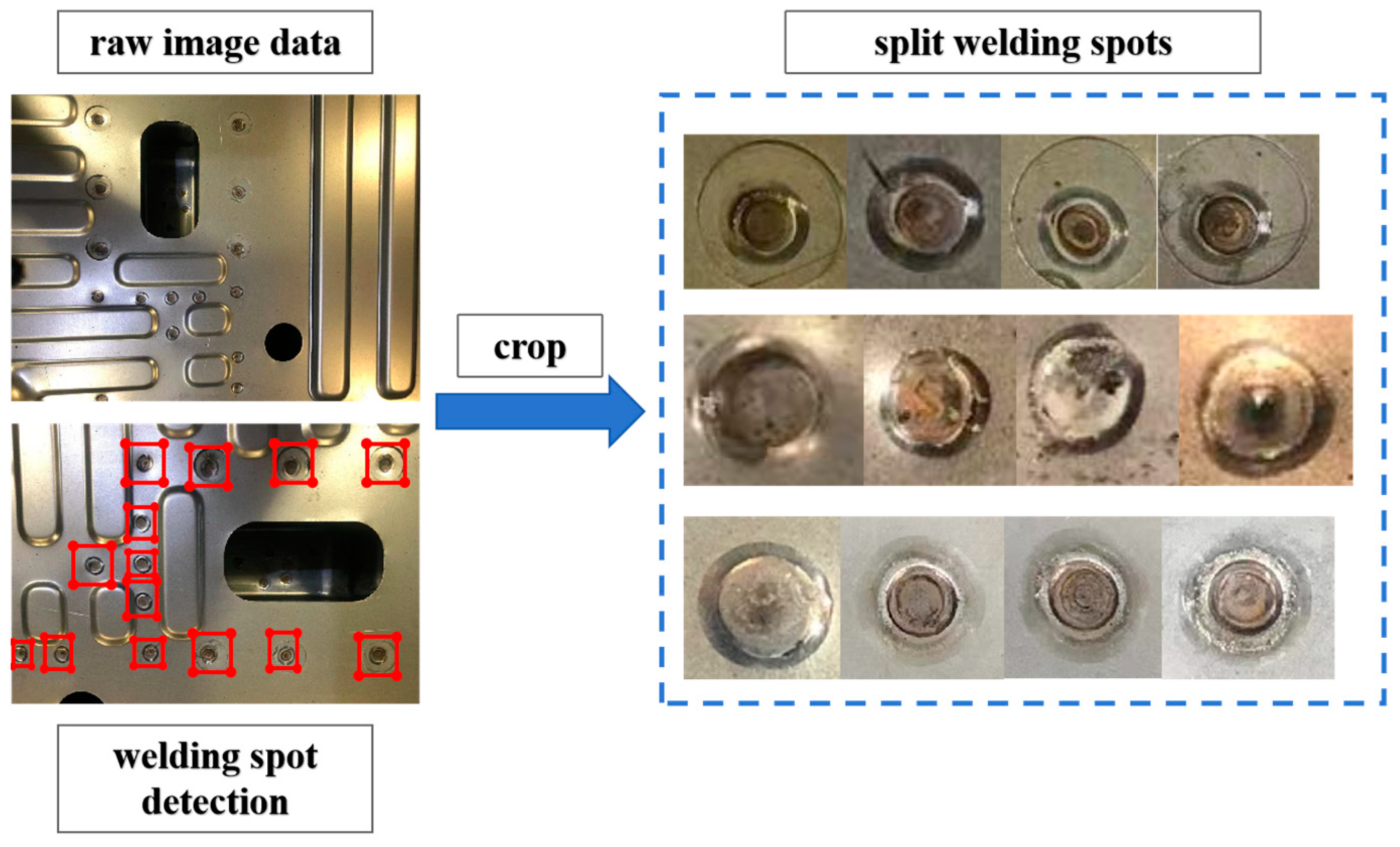

In this paper, a lightweight deep learning detection model for small objects (RSW spots) is proposed to detect the position and quality of welding spots. To speed up computation, the Faster R-CNN algorithm uses a shared feature extraction layer structure, which greatly reduces the number of parameters and simplifies calculation. The Faster R-CNN detection algorithm based on the VGG-16 [30] model has achieved 86.2% and 90.4% accuracy (mAP) on the PASCAL VOC 2007 and PASCAL VOC 2012 datasets, respectively, at a detection speed of 5 fps, which is a milestone in target detection. The algorithm is now widely used in various target detection scenarios.

2. Proposed Approaches

2.1. Principle of Defect Detection Algorithm Based on Improved Faster R-CNN

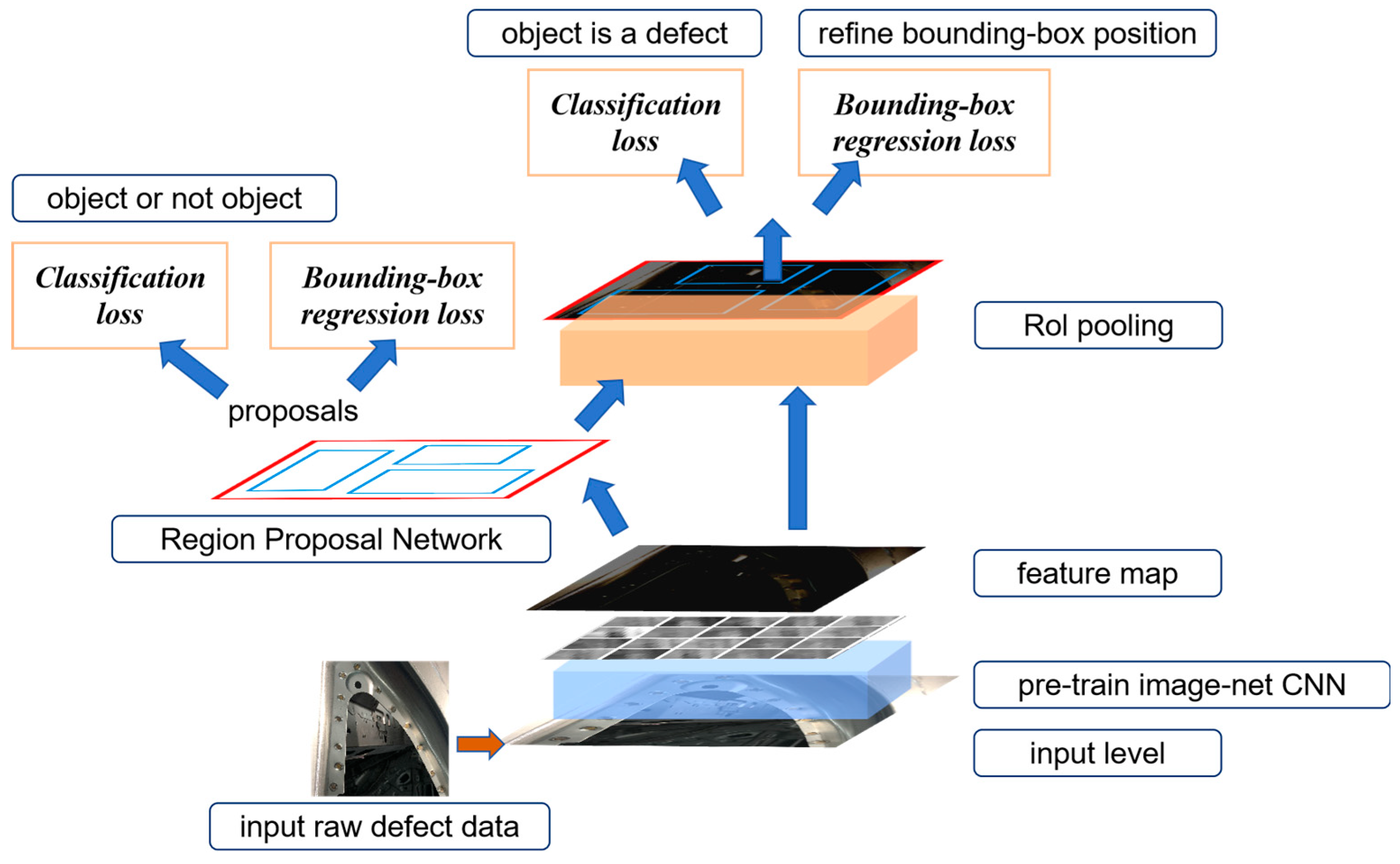

The Faster R-CNN [25] detection algorithm is a two-stage, high-efficiency target detection algorithm proposed by Ren et al. in 2015, which has the advantages of fast speed and high accuracy. It introduced the region proposal network (RPN), which uses a neural network to extract candidate detection regions; based on these candidate regions, it applies the detection principle of the Fast R-CNN [24] algorithm for target classification and localization.

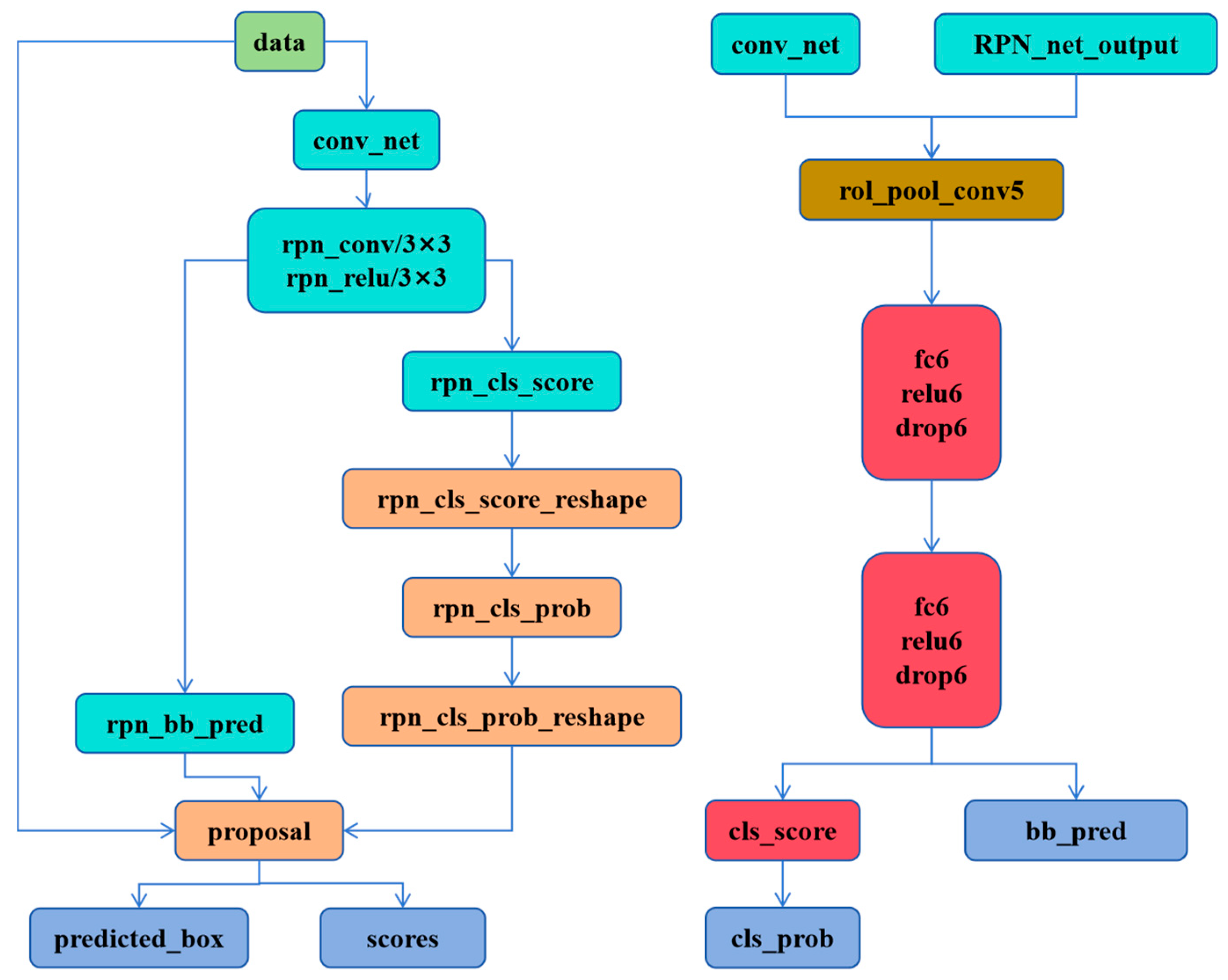

The Faster R-CNN detection algorithm can be divided into three main parts: convolutional feature sharing, RPN candidate region decision-making, and target classification and regression. Its overall structure is shown in Figure 1.

Shared feature extraction layer: The feature extraction layer architecture of the Faster R-CNN algorithm is similar to that of other convolutional neural networks: a combination of convolutional layers, pooling layers, and ReLU activation layers. In the original algorithm, the authors perform feature extraction based on two structures, VGG-16 and ZF. The comparison between the two is shown in Table 2.

From the comparison in the table above, VGG-16 has more layers and a deeper network, so it is better at extracting subtle features. However, its large number of parameters slows computation. With ZF, detection speed is greatly improved while a relatively high accuracy rate (62.1%) is maintained. Therefore, the feature extraction layer based on the ZF structure is used in this article to satisfy the real-time requirements of welding spot defect detection.

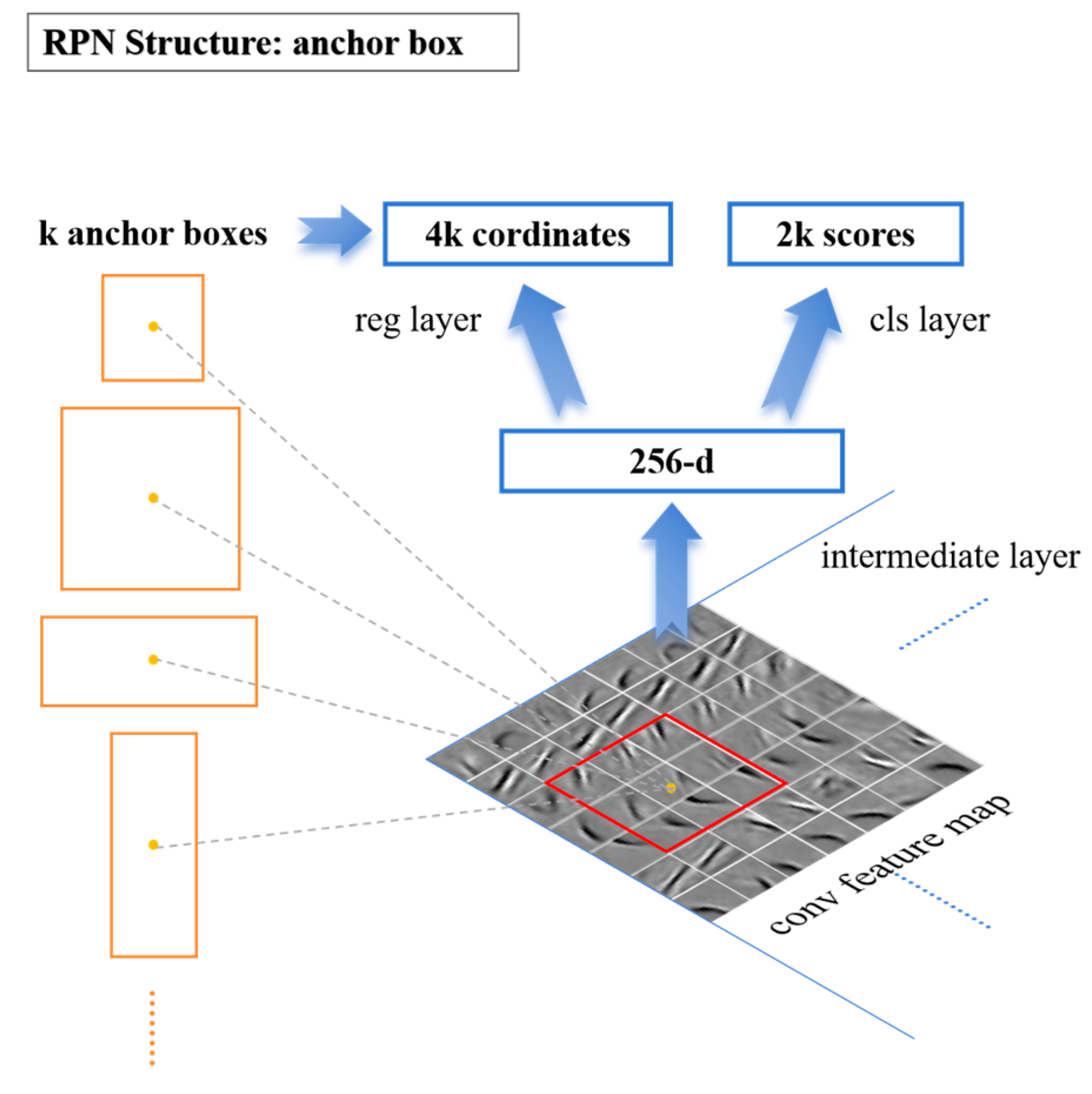

2.2. RPN Candidate Region Decision-Making

The feature map generated after extraction by the convolutional network ais input to the RPN network to generate a series of rectangular region candidate frames and scores. Different from the manual sliding window target area selection method based on the SS algorithm, RPN uses neural network to further extract the feature map features and generate anchor boxes of different scales (the number is k) at each point of the feature map, and map them to the original image, Carry out coordinate regression calculation and target judgment through neural network. At each feature point, RPN predicts 2k scores (probability of being/not the target), and 4k values (target coordinates). The detailed RPN structure is shown in

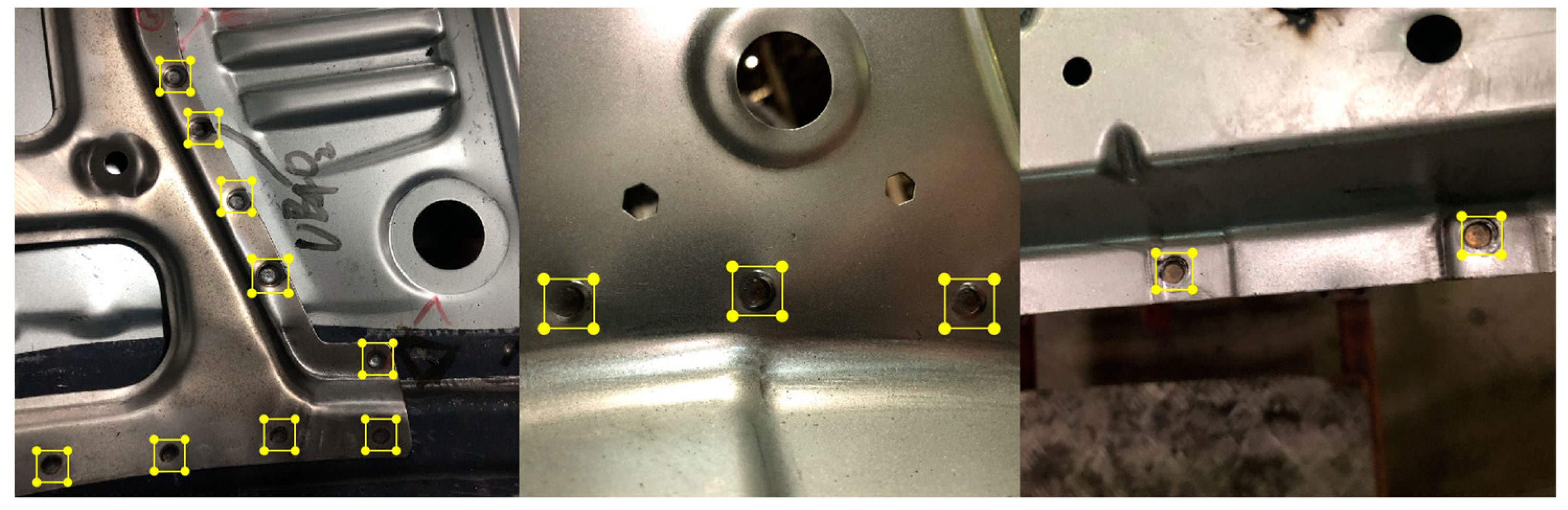

Figure 2.

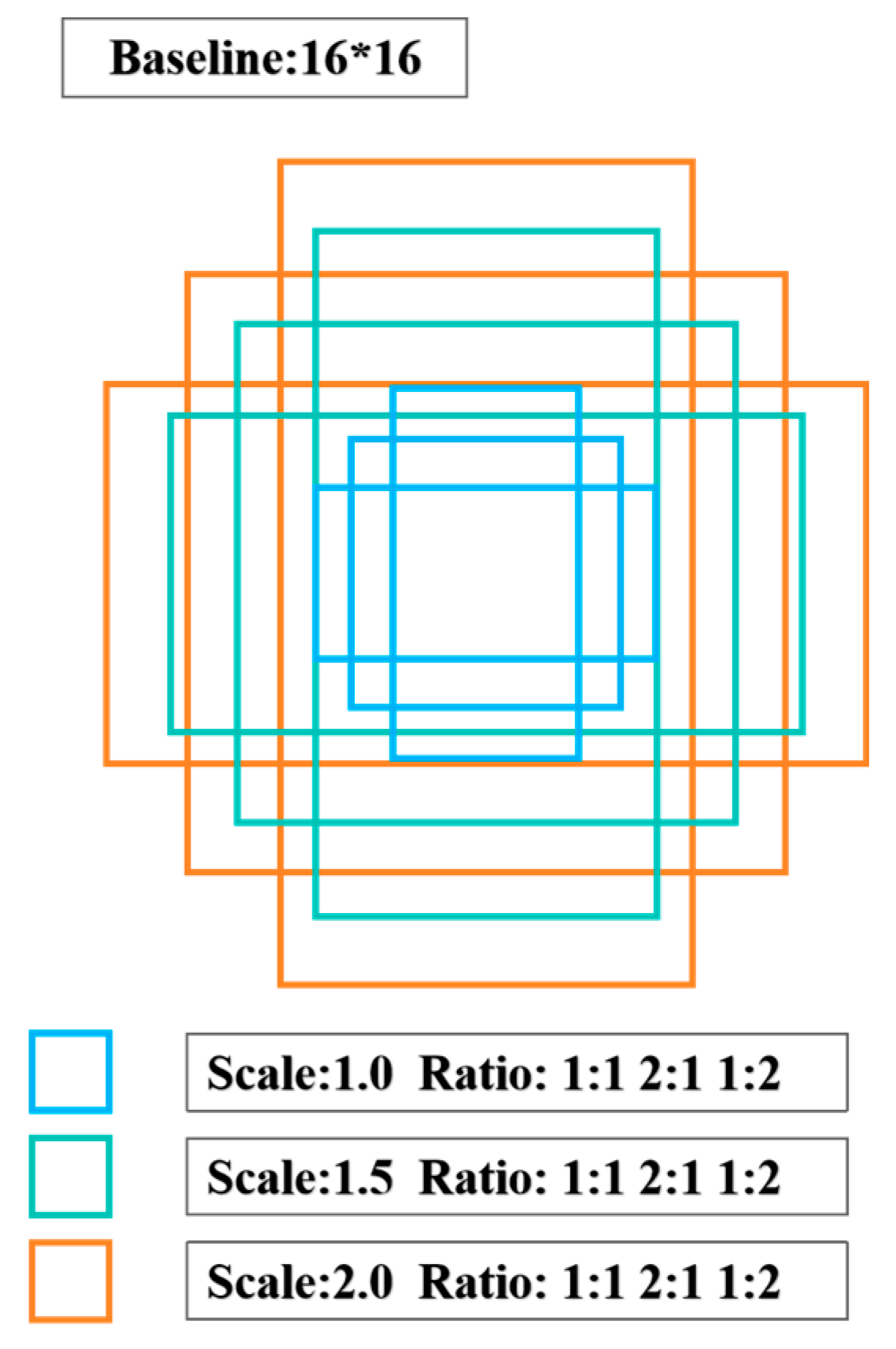

To adapt to different detection sizes, the anchor size can be changed according to the actual detection situation by adjusting its width-to-height ratio and scale. The receptive field size of the feature map is the base size for the anchor setting. In the ZF model, after down-sampling through multiple convolutional layers, the receptive field of the feature map is 16×16, so anchors are obtained by stretching or scaling a 16×16 base box. The defect images detected in this article are 640×480. After feature extraction by the convolutional layers, the feature map size is 50×37, so a total of 50×37×k anchor boxes are generated, where k is the number of anchor box types. The anchor box setting diagram is shown in Figure 3.
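The anchor construction described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `make_base_anchors` and `tile_anchors`, the scale multipliers `(2, 4, 8)`, and the choice of placing anchor centres at the middle of each 16-pixel cell are assumptions for the example, not values taken from this paper.

```python
from itertools import product


def make_base_anchors(base_size=16, scales=(2, 4, 8), ratios=(0.5, 1.0, 2.0)):
    """Return k = len(scales) * len(ratios) anchor shapes as (width, height).

    `scales` are hypothetical multipliers of the 16x16 base box;
    `ratios` are width-to-height ratios.
    """
    anchors = []
    for scale, ratio in product(scales, ratios):
        area = (base_size * scale) ** 2
        # Keep the area fixed for a given scale and stretch it to the ratio.
        width = (area * ratio) ** 0.5
        height = (area / ratio) ** 0.5
        anchors.append((width, height))
    return anchors


def tile_anchors(feat_w, feat_h, stride, base_anchors):
    """Place every base anchor on every feature-map cell (image coordinates)."""
    boxes = []
    for row in range(feat_h):
        for col in range(feat_w):
            cx = (col + 0.5) * stride  # assumed: centre of the cell
            cy = (row + 0.5) * stride
            for w, h in base_anchors:
                boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes


base = make_base_anchors()
all_boxes = tile_anchors(feat_w=50, feat_h=37, stride=16, base_anchors=base)
print(len(base), len(all_boxes))  # k and 50 * 37 * k
```

With these assumed settings, k is 9, so the 50×37 feature map yields 50×37×9 candidate boxes before any screening.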

During training, each anchor box may lie close to a ground-truth box (a target marked in the training sample). Whether an anchor contains a target is judged from its relationship to the adjacent ground-truth box, using the intersection-over-union ratio (IoU) shown in Equation (1). IoU measures the closeness between the anchor box and the ground-truth box as the ratio of their overlapping area to the total area they cover.
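As a concrete illustration of the IoU measure, the following minimal sketch computes it for two axis-aligned boxes. The corner format `(x1, y1, x2, y2)` and the function name `iou` are assumptions made for this example.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # half-overlapping boxes
```

Two identical boxes give an IoU of 1, disjoint boxes give 0, and the half-overlapping pair in the example shares 50 of 150 covered pixels.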

Each anchor is labeled according to its IoU value, which determines whether it participates in training. The labeling rule is expressed in Equation (2).

According to this rule, for a given ground-truth box, an anchor box is a positive sample if its IoU is the maximum among all anchors or is greater than 0.7, and a negative sample if its IoU is less than 0.3; the remaining anchors do not take part in training. This preliminary labeling and screening greatly reduces the number of anchor boxes used in training, so that only samples that affect the result are trained. During training, mini-batches are randomly sampled from the labeled anchor boxes, and each batch keeps the ratio of positive to negative samples at 1:1.
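The labeling and 1:1 sampling rule can be sketched as follows. The sketch assumes the IoU values have already been computed into a matrix `iou_matrix[i][j]` (anchor i, ground-truth box j); the function names, the label encoding (1 positive, 0 negative, -1 ignored), and the batch size of 256 are illustrative assumptions rather than details from this paper.

```python
import random


def label_anchors(iou_matrix, pos_thr=0.7, neg_thr=0.3):
    """Label each anchor as 1 (positive), 0 (negative), or -1 (ignored)."""
    n_anchors = len(iou_matrix)
    labels = [-1] * n_anchors
    for i, row in enumerate(iou_matrix):
        best = max(row) if row else 0.0
        if best > pos_thr:
            labels[i] = 1
        elif best < neg_thr:
            labels[i] = 0

    # The anchor with the highest IoU for each ground-truth box is also
    # positive, even if that IoU does not exceed the threshold.
    n_gt = len(iou_matrix[0]) if n_anchors else 0
    for j in range(n_gt):
        best_i = max(range(n_anchors), key=lambda i: iou_matrix[i][j])
        if iou_matrix[best_i][j] > 0:
            labels[best_i] = 1
    return labels


def sample_batch(labels, batch_size=256, seed=0):
    """Randomly draw equal numbers of positive and negative anchor indices."""
    rng = random.Random(seed)
    pos = [i for i, lab in enumerate(labels) if lab == 1]
    neg = [i for i, lab in enumerate(labels) if lab == 0]
    n = min(batch_size // 2, len(pos), len(neg))
    return rng.sample(pos, n), rng.sample(neg, n)


ious = [[0.8, 0.0], [0.5, 0.1], [0.1, 0.2], [0.0, 0.6], [0.0, 0.0]]
labels = label_anchors(ious)
print(labels)  # [1, -1, 0, 1, 0]
print(sample_batch(labels))
```

In the example, the fourth anchor has an IoU of only 0.6 but is still positive because it is the best match for the second ground-truth box, while the second anchor (IoU 0.5) is left out of training.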

For each prediction box, a category output and a coordinate regression output are required. The category output uses the Softmax function to calculate the probabilities of belonging to the positive and negative classes. The coordinate regression output uses a feed-forward pass to obtain the coordinates of the prediction box. The offset between the prediction box and the anchor box and the offset between the ground-truth box and the anchor box are then computed, and training minimizes the difference between these two offsets. The offsets can be expressed as Equation (3), in which the anchor box and the ground-truth box are each described by their center coordinates, width, and height.
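For illustration, the sketch below uses the offset parameterization commonly associated with Faster R-CNN: centre shifts normalized by the anchor size, and log-ratios for width and height. It is assumed here that the paper's offsets follow this standard form; the function names `encode_offsets` and `decode_offsets` are hypothetical.

```python
import math


def encode_offsets(box, anchor):
    """Offsets of `box` relative to `anchor`; both are (cx, cy, w, h)."""
    x, y, w, h = box
    xa, ya, wa, ha = anchor
    return (
        (x - xa) / wa,      # horizontal centre shift, in anchor widths
        (y - ya) / ha,      # vertical centre shift, in anchor heights
        math.log(w / wa),   # log width ratio
        math.log(h / ha),   # log height ratio
    )


def decode_offsets(offsets, anchor):
    """Inverse of encode_offsets: recover (cx, cy, w, h) from offsets."""
    tx, ty, tw, th = offsets
    xa, ya, wa, ha = anchor
    return (xa + tx * wa, ya + ty * ha, wa * math.exp(tw), ha * math.exp(th))


anchor = (100.0, 100.0, 32.0, 64.0)
ground_truth = (108.0, 92.0, 40.0, 48.0)
target = encode_offsets(ground_truth, anchor)
print(target)
print(decode_offsets(target, anchor))  # recovers the ground-truth box
```

The same encoding is applied to the prediction box and to the ground-truth box, so the regression target is the ground-truth offset and the network output is compared against it.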

During training, joint multi-task optimization is adopted, and the loss function is composed of a classification loss and a regression loss. In this article, labeling anchor boxes as positive or negative is treated as a classification problem with a Softmax output unit, so a negative log-likelihood loss is used with it; the coordinate prediction of the anchor box is a regression problem, and the improved SmoothL1 loss function is used to measure the distance between the predicted coordinates and the true values. The loss function is expressed in Equation (4).
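A minimal sketch of such a two-term loss is given below. It uses the standard SmoothL1 function, not the improved variant referred to in the text, whose exact form is not reproduced here. The normalization (classification averaged over sampled anchors, regression averaged over positive anchors) and the weight `lam` are assumptions for the example.

```python
import math


def smooth_l1(x, beta=1.0):
    """Standard SmoothL1: quadratic near zero, linear for large errors."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def softmax_nll(logits, target):
    """Negative log-likelihood of class `target` under a Softmax over `logits`."""
    m = max(logits)  # subtract the maximum for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def rpn_loss(cls_logits, labels, pred_offsets, target_offsets, lam=1.0):
    """Classification loss over all sampled anchors plus a regression loss
    over positive anchors only (label 1)."""
    cls_terms = [softmax_nll(z, y) for z, y in zip(cls_logits, labels)]
    cls_loss = sum(cls_terms) / len(cls_terms)

    reg_terms = [
        sum(smooth_l1(p - t) for p, t in zip(pred, tgt))
        for pred, tgt, y in zip(pred_offsets, target_offsets, labels)
        if y == 1
    ]
    reg_loss = sum(reg_terms) / max(1, len(reg_terms))
    return cls_loss + lam * reg_loss


loss = rpn_loss(
    cls_logits=[[0.0, 0.0], [0.0, 0.0]],
    labels=[1, 0],
    pred_offsets=[(0.0, 0.0, 0.0, 0.0), (9.0, 9.0, 9.0, 9.0)],
    target_offsets=[(0.5, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0)],
)
print(loss)
```

Note that the second anchor is negative, so its large coordinate error does not contribute to the regression term; only the classification term sees it.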

Fast R-CNN target detection calculation: First, each selected candidate box is mapped onto the feature map extracted by the convolutional layers, and the corresponding region is pooled to a fixed size so that the feature dimension entering the fully connected layer is always the same. At the end of the network, a Softmax output unit is again used to determine the specific defect type, and the SmoothL1 loss function is used to refine the defect position and output more accurate coordinates.
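The fixed-size pooling step can be illustrated with a simplified region-of-interest max pooling on a single-channel feature map. The sketch assumes integer feature-map coordinates with half-open ranges and a tiny 2×2 output; the function name `roi_max_pool` is hypothetical, and real implementations operate on multi-channel tensors.

```python
def roi_max_pool(feature, roi, out_h=2, out_w=2):
    """Max-pool the region `roi` of a 2-D feature map to out_h x out_w.

    `feature` is a list of rows; `roi` is (x1, y1, x2, y2) in feature-map
    cells, covering columns x1..x2-1 and rows y1..y2-1.
    """
    x1, y1, x2, y2 = roi
    roi_w, roi_h = x2 - x1, y2 - y1
    pooled = []
    for r in range(out_h):
        # Bin boundaries: floor for the start, ceiling for the end, so that
        # every bin contains at least one cell.
        ys = y1 + (r * roi_h) // out_h
        ye = y1 - (-((r + 1) * roi_h) // out_h)
        row_out = []
        for c in range(out_w):
            xs = x1 + (c * roi_w) // out_w
            xe = x1 - (-((c + 1) * roi_w) // out_w)
            row_out.append(max(feature[y][x]
                               for y in range(ys, ye)
                               for x in range(xs, xe)))
        pooled.append(row_out)
    return pooled


feature_map = [[r * 4 + c for c in range(4)] for r in range(4)]
print(roi_max_pool(feature_map, (0, 0, 4, 4)))  # [[5, 7], [13, 15]]
print(roi_max_pool(feature_map, (1, 1, 4, 3)))  # [[6, 7], [10, 11]]
```

Both regions, although of different sizes, produce a 2×2 output, which is what lets the fully connected layer receive a constant input dimension.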

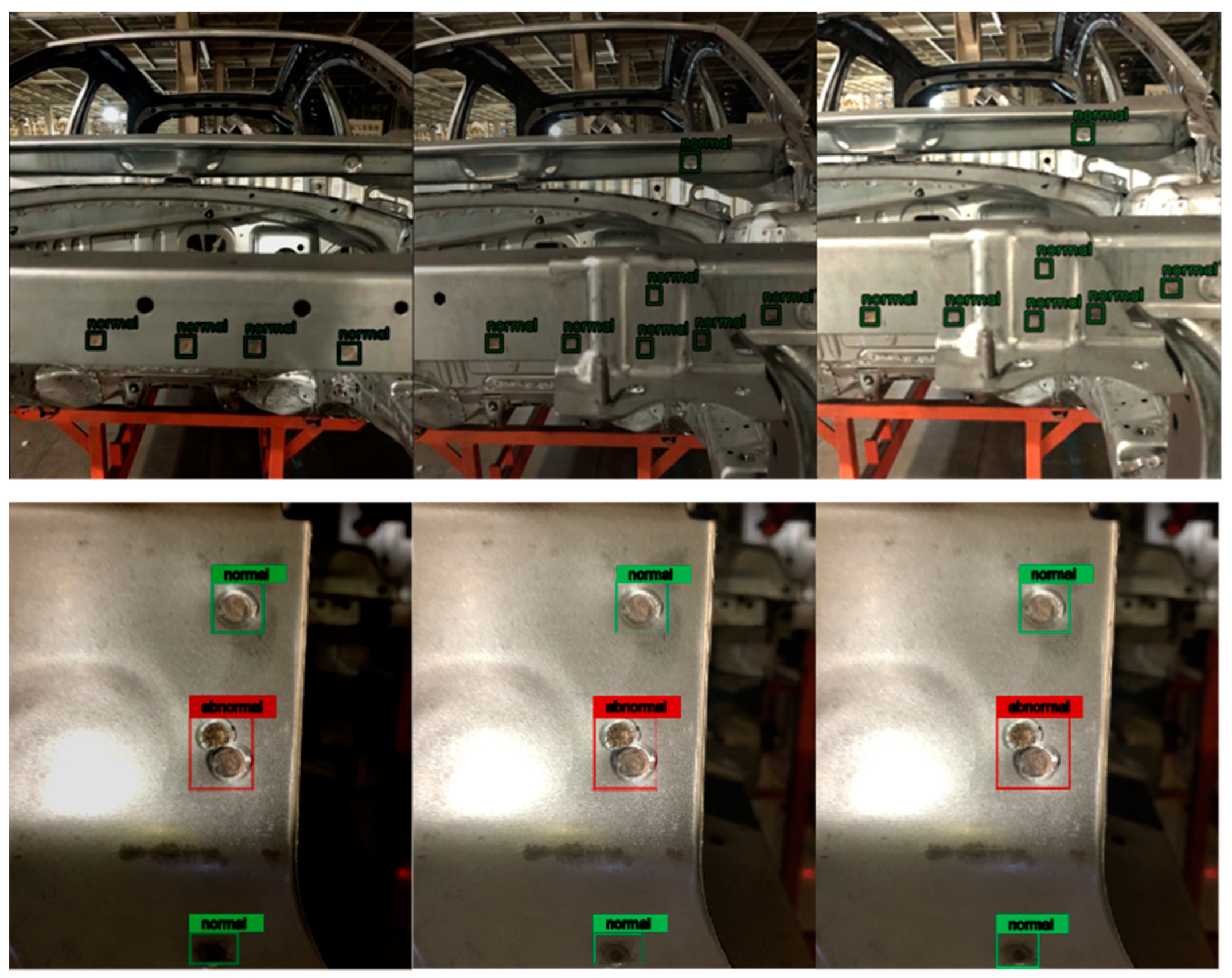

This article focuses on the common defects in actual production. Statistics of the existing defect samples show that the largest defect is about 240×240 pixels and the smallest is about 18×18 pixels. Therefore, 12 kinds of anchor box are set initially, with width-to-height ratios of [0.5, 1, 2], to cover the shapes and sizes of actual defects as much as possible. With k=12, the output dimensions of the RPN classification and regression convolution kernels are modified accordingly to cls: 2k=24 and reg: 4k=48.
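The bookkeeping behind k=12 can be checked with a short sketch. The three aspect ratios come from the text; the four anchor side lengths in `ANCHOR_SIDES` are hypothetical values chosen only so that four scales times three ratios gives 12, since the text does not list the scales used.

```python
# Hypothetical side lengths (pixels) spanning the 18 to 240 pixel defect range.
ANCHOR_SIDES = (16, 48, 128, 256)
# Width-to-height ratios stated in the text.
ASPECT_RATIOS = (0.5, 1.0, 2.0)


def rpn_head_channels(sides, ratios):
    """Number of anchor types k and the RPN output channel counts."""
    k = len(sides) * len(ratios)
    return {"k": k, "cls": 2 * k, "reg": 4 * k}


def total_anchors(feat_w, feat_h, k):
    """Anchors generated over the whole feature map."""
    return feat_w * feat_h * k


channels = rpn_head_channels(ANCHOR_SIDES, ASPECT_RATIOS)
print(channels)                              # {'k': 12, 'cls': 24, 'reg': 48}
print(total_anchors(50, 37, channels["k"]))  # 22200
```

On the 50×37 feature map mentioned earlier, 12 anchor types correspond to 22,200 candidate boxes per image before labeling and screening.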

The network is split into two sub-networks, responsible for detecting and confirming defects, respectively. The RPN detects whether defects are present, and the Fast R-CNN network confirms the detailed information of the defects. The test network structure of the two sub-models is shown in Figure 4.

4. Conclusions

1) Novelty

This paper aims to predict welding spot defects during body-in-white production by constructing an inspection system based on an improved Faster R-CNN model. This deep learning network model shows good performance in surface quality inspection for small object detection.

The improved algorithm uses the anchor box with higher confidence output by the RPN network to locate defects. When a defect is detected and the detection system is in a suspended state, the Fast R-CNN network is used to confirm the defect category and details. During training, the end-to-end training method is used to train the RPN network and Fast R-CNN network as a whole to ensure the stability of the network.

The IoU threshold is increased in the proposed model to improve convergence speed and regression accuracy. The structural parameters of the network are set to better fit the characteristics of welding spot surface defects, which improves the accuracy of defect localization. Results on our dataset demonstrate that the proposed model performs better than several typical surface defect detection algorithms.

2) Limitation

Owing to the constraints of the actual production line, we were unable to collect a sufficient number of data samples. The dataset is small and unbalanced, so the trained model may overfit, which may lead to missed detections and recognition errors in real tests.

3) Future work

The abundance of data plays a vital role in the performance of deep learning algorithms. In future work, it is necessary to improve the data management system, increase the number of samples, and improve sample quality. We also hope to make progress on novel data augmentation methods.

Faster R-CNN, while accurate, is still slower than models like YOLO or SSD when used in real-time production environments. Future developments could focus on optimizing the Region Proposal Network (RPN) or incorporating lightweight architectures like MobileNet or EfficientNet into the Faster R-CNN pipeline to reduce inference time while maintaining accuracy.

Surface defects in weld spots often vary in size, from small cracks to large spatters. Faster R-CNN already handles multi-scale detection to some degree, but enhancing this capability, for example through multi-scale feature extraction techniques such as Feature Pyramid Networks (FPN), could lead to even better detection of tiny or barely visible defects.