Submitted:

01 October 2024

Posted:

02 October 2024


Abstract

Keywords:

1. Introduction

- What common practices, in terms of patterns and essential elements, characterize empirical evaluations of AI explanations?

- Which pitfalls occur in empirical evaluations of AI explanations, and which best practices, standards, and benchmarks should be established for them?

2. Explainable Artificial Intelligence (XAI): Evaluation Theory

- Functionally-grounded evaluations require no human subjects. Instead, explanation quality is assessed objectively using algorithmic metrics and formal definitions of interpretability.

- Application-grounded evaluations measure the quality of explanations by conducting experiments with end users within an actual application.

- Human-grounded evaluations involve human subjects with less domain experience and measure general constructs related to explanations, such as understandability, trust, and usability, on simplified tasks.
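To illustrate what an algorithmic metric in the first, human-free category can look like, the following Python sketch implements a deletion-style fidelity check: features are removed in the order an explanation ranks them, and a faithful explanation should make the model output fall quickly. The linear toy model, the zero baseline, and all function names are hypothetical assumptions for illustration; this is one of several perturbation-based fidelity tests in the literature, not a metric prescribed by the studies surveyed here.

```python
from typing import Callable, List, Sequence


def deletion_curve(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    attributions: Sequence[float],
    baseline: float = 0.0,
) -> List[float]:
    """Remove features in order of decreasing attribution and record the model output."""
    order = sorted(range(len(x)), key=lambda i: attributions[i], reverse=True)
    current: List[float] = list(x)
    outputs = [predict(current)]
    for i in order:
        current[i] = baseline  # "delete" a feature by replacing it with the baseline value
        outputs.append(predict(current))
    return outputs


def area_under_curve(values: Sequence[float]) -> float:
    """Trapezoidal area with unit spacing; a lower area means a steeper drop."""
    return sum((a + b) / 2 for a, b in zip(values, values[1:]))


# Hypothetical toy model: a linear scorer with known weights.
WEIGHTS = [3.0, 1.0, 0.5]


def predict(features: Sequence[float]) -> float:
    return sum(w * f for w, f in zip(WEIGHTS, features))


x = [1.0, 1.0, 1.0]
faithful = [3.0, 1.0, 0.5]    # ranks features the way the model actually weighs them
unfaithful = [0.5, 1.0, 3.0]  # ranks them in reverse

print(area_under_curve(deletion_curve(predict, x, faithful)))    # → 4.25
print(area_under_curve(deletion_curve(predict, x, unfaithful)))  # → 9.25
```

The faithful explanation yields the smaller area because its top-ranked features really drive the prediction. With nonlinear models, the result also depends on the assumed baseline value.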

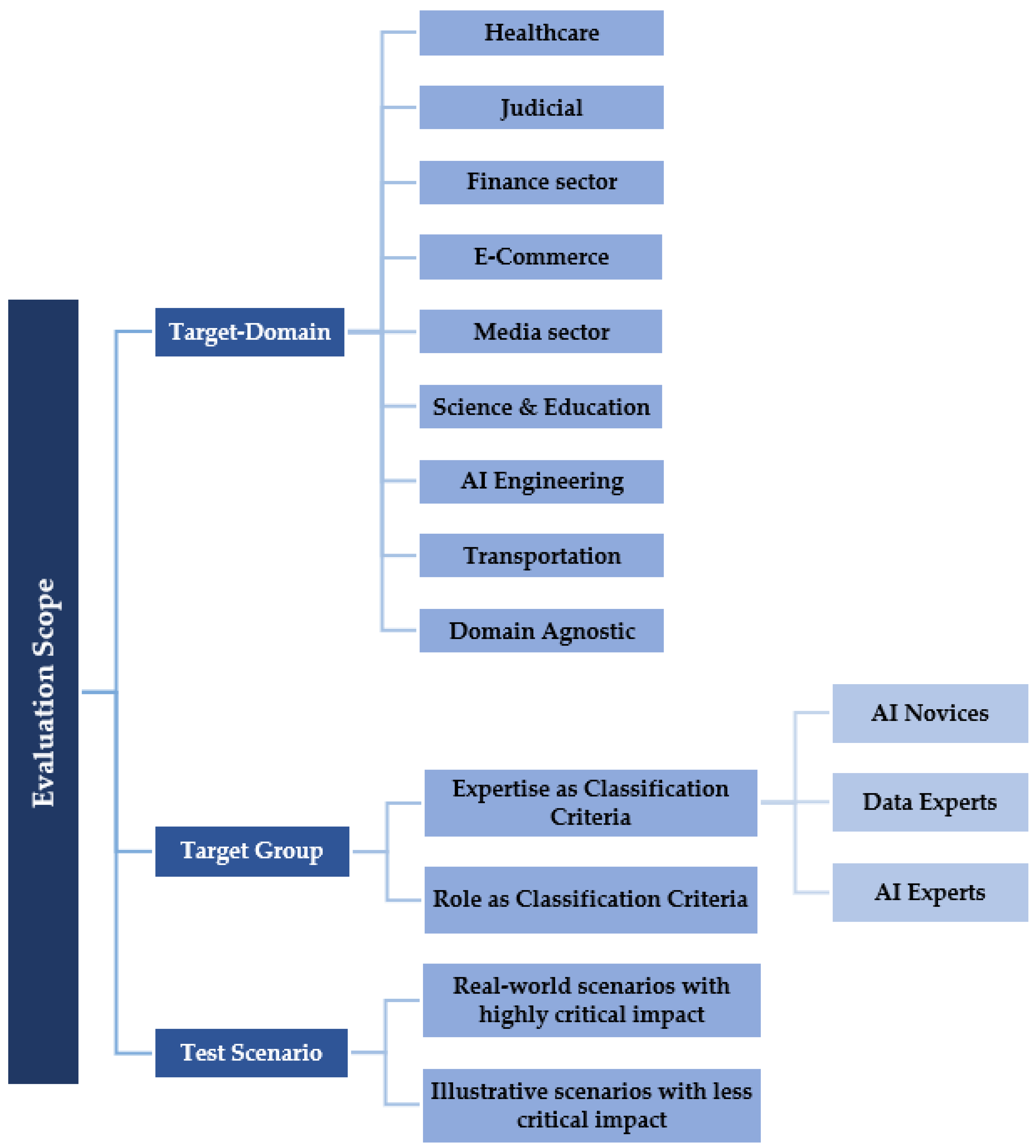

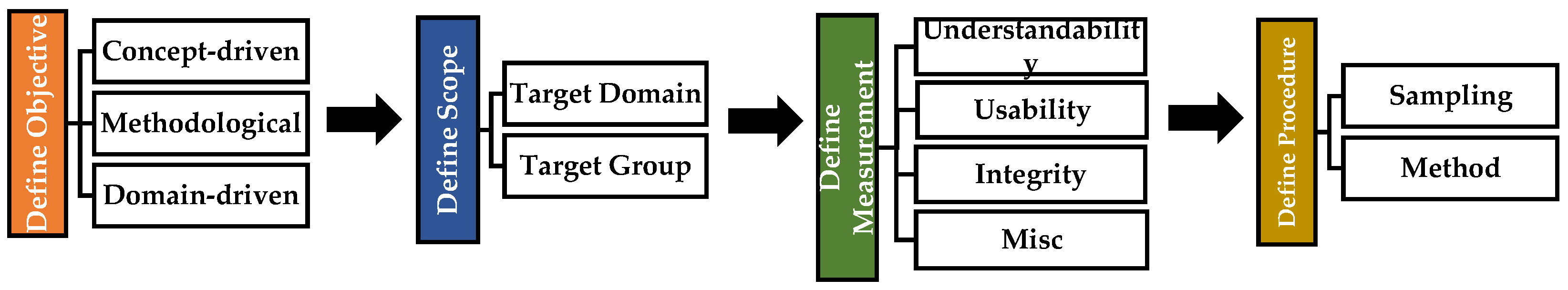

- Evaluation Objective and Scope. Evaluation studies can have different scopes and different objectives, such as understanding a general concept or improving a specific application. Hence, the first step in planning an evaluation study should be to define the objective and scope, including a specification of the intended application domain and target group. Such a specification is also essential for assessing instrument validity, i.e., the extent to which an evaluation method measures the intended constructs accurately, reliably, and consistently. The scope of validity indicates where the instrument has been validated and calibrated, and hence where it can be expected to measure effectively.

- Measurement Constructs and Metrics. Furthermore, it is important to specify what the measurement constructs of the study are and how they should be evaluated. In principle, measurement constructs could be any object, phenomenon, or property of interest that we seek to quantify. In user studies, they are typically theoretical constructs such as user satisfaction, user trust, or system intelligibility. Some constructs, such as task performance, can be directly measured. However, most constructs need to be operationalized through a set of measurable items. Operationalization includes selecting validated metrics and defining the measurement method. The method should describe the process of assigning a quantitative or qualitative value to a particular entity in a systematic way.

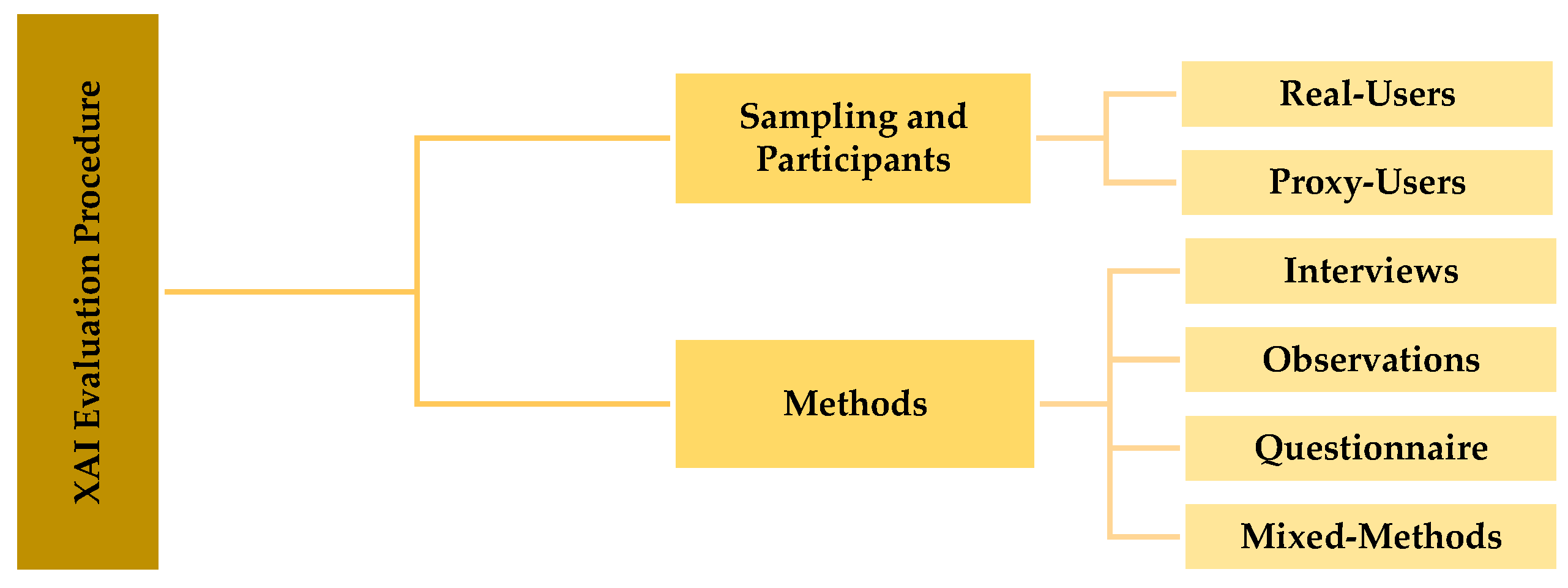

- Implementation and Procedure. Finally, the implementation of the study must be planned. This includes decisions about the study participants (e.g., members of the target group or proxy users) and recruitment methods (such as using a convenience sample, an online panel, or a sample representative of the target group/application domain). Additionally, one must consider whether a working system or a prototype should be evaluated and under which conditions (e.g., laboratory conditions or real-world settings). Furthermore, the data collection method should be specified. Generally, data collection methods can be categorized into observation, interviews, and surveys. Each method has its strengths, and the choice of method should align with the research objectives, the scope, and the nature of the constructs being measured.

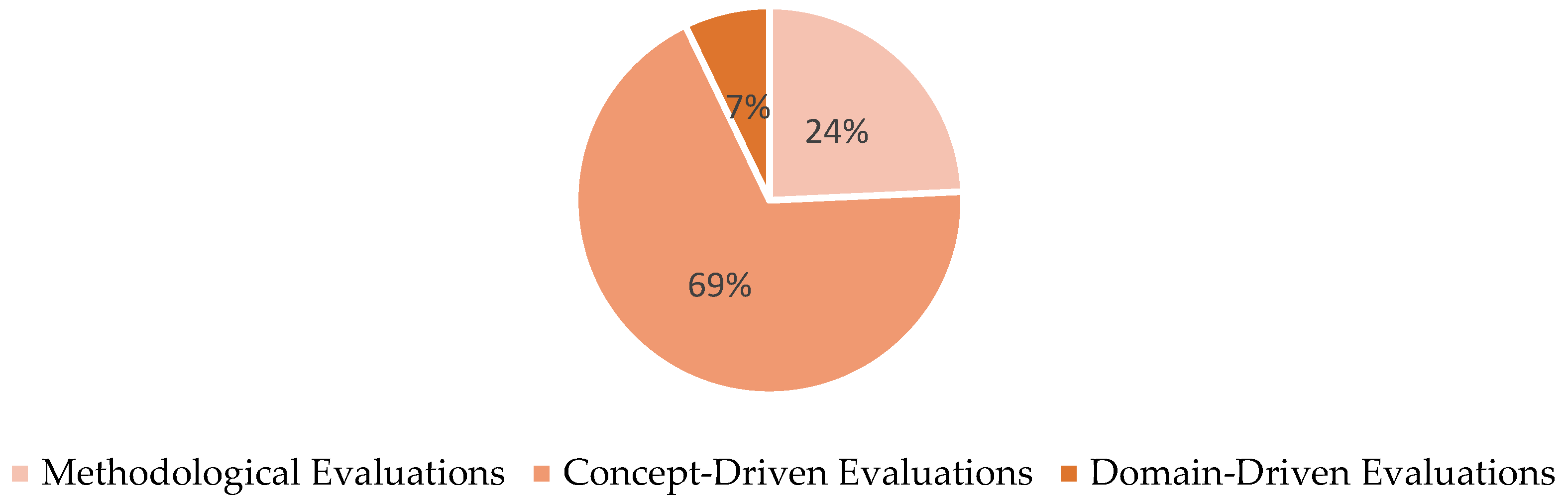
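When a construct such as perceived understandability or trust is operationalized through several questionnaire items, as described under Measurement Constructs and Metrics, internal consistency is one commonly reported psychometric check. The Python sketch below computes Cronbach's alpha, α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), from a participants × items matrix of Likert ratings, where σ²ᵢ is the variance of item i and σ²ₜ the variance of the summed scores. The responses are invented for illustration, and a high alpha alone is not evidence of validity.

```python
from statistics import pvariance
from typing import List, Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a participants x items matrix of scores.

    Population variances are used for items and totals alike; using sample
    variances consistently would give the same ratio.
    """
    if len(responses) < 2:
        raise ValueError("need at least two participants")
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    items = [[row[j] for row in responses] for j in range(k)]
    totals = [sum(row) for row in responses]
    item_var_sum = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_var_sum / pvariance(totals))


# Hypothetical 5-point ratings: 4 participants x 3 items of one construct.
responses: List[List[int]] = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [1, 2, 1],
]
print(round(cronbach_alpha(responses), 3))  # → 0.946
```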

3. Evaluation Objectives

3.1. Studies About Evaluation Methodologies

3.2. Concept-Driven Evaluation Studies

3.3. Domain-Driven Evaluation Studies

4. Evaluation Scope

4.1. Target Domain

4.1.1. Healthcare

4.1.2. Judiciary

4.1.3. Finance Sector

4.1.4. E-Commerce

4.1.5. Science and Education

4.1.6. AI Engineering

4.1.7. Media Sector

4.1.8. Transportation Sector

4.1.9. Domain-Agnostic

4.2. Target Group

4.2.1. Expertise

4.2.2. Role

4.2.3. Lay Persons

4.2.4. Professionals

4.3. Test Scenarios

- The evaluation scope also depends on the test scenarios used, their relevance, and their ecological validity. In this respect, our sample includes two types of test scenarios: those with significant real-world impact and toy scenarios that serve an illustrative purpose [8].

4.3.1. Real-World Scenarios with Highly Critical Impact

4.3.2. Illustrative Scenarios with Less Critical Impact

5. Evaluation Measures

5.1. Understandability

5.1.1. Mental Model

5.1.2. Perceived Understandability

5.1.3. Goodness/Soundness of the Understanding

- Qualitative methods involve uncovering users' mental models through introspection, such as interviews, think-aloud protocols, or drawings made by the users.

- Subjective-quantitative methods assess perceived understandability through self-report measures.

- Objective-quantitative methods evaluate how accurately users can predict and explain system behavior based on their mental models.
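For the objective-quantitative route, one common operationalization is a prediction (forward-simulation) task: participants see an input, predict what the AI system will output, and their predictions are scored against the system's actual outputs. The Python sketch below assumes a simple trial record with hypothetical field names (`participant`, `predicted`, `actual`) and computes per-participant and overall prediction accuracy; an actual study would typically also compare against a chance-level baseline and apply inferential statistics.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Trial:
    participant: str  # hypothetical participant identifier
    predicted: str    # output the participant expected from the AI system
    actual: str       # output the AI system actually produced


def simulation_accuracy(trials: Iterable[Trial]) -> Tuple[Dict[str, float], float]:
    """Per-participant prediction accuracy and its mean across participants.

    Averaging per participant first gives every participant equal weight,
    even if they completed different numbers of trials.
    """
    hits: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for t in trials:
        counts[t.participant] += 1
        hits[t.participant] += int(t.predicted == t.actual)
    if not counts:
        raise ValueError("no trials given")
    per_participant = {p: hits[p] / counts[p] for p in counts}
    overall = sum(per_participant.values()) / len(per_participant)
    return per_participant, overall


trials = [
    Trial("P1", "approve", "approve"),
    Trial("P1", "reject", "approve"),
    Trial("P1", "reject", "reject"),
    Trial("P1", "approve", "approve"),
    Trial("P2", "approve", "reject"),
    Trial("P2", "reject", "reject"),
]
per_participant, overall = simulation_accuracy(trials)
print(per_participant)  # {'P1': 0.75, 'P2': 0.5}
print(overall)          # 0.625
```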

5.1.4. Perceived Explanation Qualities

5.2. Usability

5.2.1. Satisfaction

5.2.2. Utility and Suitability

5.2.3. Task Performance and Cognitive Workload

5.2.4. User Control and Scrutability

5.3. Integrity Measures

5.3.1. Trust

5.3.2. Transparency

5.3.3. Fairness

5.4. Miscellaneous

5.4.1. Diversity, Novelty, and Curiosity

5.4.2. Persuasiveness, Plausibility, and Intention to Use

5.4.3. Intention to Use or Purchase

5.4.4. Explanation Preferences

5.4.5. Debugging Support

5.4.6. Situational Awareness

5.4.7. Learning and Education

6. Evaluation Procedure

6.1. Sampling and Participants

6.1.1. Real-User Studies

6.1.2. Proxy-User Studies

6.2. Evaluation Methods

- Measurement methods can be classified by various criteria, such as qualitative vs. quantitative or objective vs. subjective measures [14]. In HCI, a widespread categorization distinguishes between interviews, observations, questionnaires, and mixed methods [13]. In the following, we briefly summarize how these methods have been adopted in XAI evaluation research.

6.2.1. Interviews

6.2.2. Observations

6.2.3. Questionnaires

6.2.4. Mixed-Methods Approach

7. Discussion: Pitfalls and Guidelines for Planning and Conducting XAI Evaluations

Guidelines: Researchers should carefully consider the tension between rigor and relevance from the very beginning of planning an evaluation study, as it influences both the evaluation scope and the methods used.


Pitfalls: A common pitfall in many evaluation studies is the failure to define the target domain, target group, and use context explicitly. This lack of explication negatively affects both the planning of the study and the broader scientific community. During the planning phase, it complicates the formulation of test scenarios, the recruitment strategy, and predictions about the impact of pragmatic research decisions (e.g., using proxy users instead of real users, evaluating a click-dummy instead of a fully functional system, or using toy examples instead of real-world scenarios). During the publication phase, the missing explication makes it difficult to assess the study's scope of validity and its reproducibility. Without clearly articulating the limitations imposed by early decisions, such as the choice of participants, test conditions, or simplified test scenarios, the results may be seen as less robust or generalizable.

Guidelines: The systematic planning of an evaluation study should include a clear and explicit definition of the application domain, target group, and use context of the explanation system. This definition should be as precise as possible. However, an overly narrow scope may restrict the generalizability of the research findings, while a broader scope could dilute the focus of the study, impede its systematic implementation, and reduce the relevance of the findings [161]. Striking the right balance is essential to ensure both meaningful insights and the potential applicability of the results across different contexts.


Pitfalls: A common pitfall in many evaluation studies is the use of ad hoc questionnaires instead of standardized ones. This practice has a negative impact on study planning as well as on the scientific community. During the planning phase, creating ad hoc questionnaires adds to the cost, particularly when theoretical constructs are rigorously operationalized, which includes pre-testing the questionnaires and validating them psychometrically. During the publication phase, using non-standardized questionnaires complicates reproducibility, comparability, and the assessment of the study's validity.

Guidelines: Regarding the definition of measurement constructs:


Guidelines: Essentially, there are three types of methods:


Pitfalls: Real-user and proxy-user sampling each come with their own advantages and disadvantages. A real-user approach is particularly challenging in niche domains beyond the mass market, especially where AI systems address sensitive topics or affect marginalized or hard-to-reach populations. Key sectors in this regard include healthcare, justice, and finance, where real-user studies typically have smaller sample sizes due to the specific conditions of the domain and the unique characteristics of the target group. Conversely, the availability of crowd workers and online panel platforms simplifies recruitment for proxy-user studies, enabling larger sample sizes. While recruiting proxy users can help achieve a substantial sample size and is sometimes essential for gathering valuable insights, researchers must be mindful of the limitations and potential biases this approach introduces. It is crucial to carefully assess how accurately proxy users represent the target audience and to interpret the findings in light of these constraints. Relying on proxy users rather than real users from the target group can be viewed as a compromise, often driven by practical considerations. However, the decision to use proxy users is often made for purely pragmatic reasons, without considering the implications for the study design and for the applicability of the research findings to real-world scenarios.

Guidelines: The sampling method has a serious impact on the study results. Sometimes, a small sample of real users can lead to more valid results than a large sample of proxy users. Therefore, the sampling method should be chosen deliberately, balancing the statistically required sample size, contextual relevance, and ecological validity against the practicalities of conducting the study in a time- and cost-efficient manner. In addition, researchers should articulate the rationale behind the sampling decision, as well as its implications for the study design and the limitations of the findings.
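The "statistically required sample size" in the sampling guideline can be estimated with an a priori power analysis. The Python sketch below uses the standard normal-approximation formula for comparing two independent groups (for example, with vs. without explanations) on a continuous outcome, n per group = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the expected standardized effect size (Cohen's d). The effect sizes are illustrative assumptions. Because the approximation ignores the t-distribution, it comes out slightly below what an exact t-test power analysis gives, so dedicated power-analysis software is preferable for final planning.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 / d^2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size ** 2)


# Small, medium, and large effects by Cohen's conventions.
for d in (0.2, 0.5, 0.8):
    print(d, n_per_group(d))  # prints 0.2 393, then 0.5 63, then 0.8 25
```

Under these assumptions, a medium effect already requires about 63 participants per group, which illustrates why small real-user samples in niche domains may only be adequately powered to detect large effects.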

8. Conclusions

| 1 | |

| 2 | Plausibility measures how convincing an AI explanation is to humans. It is typically measured with quantitative metrics such as feature localization or feature correlation. |

| 3 | As outlined in the previous section, this approach is also used as a metric to measure understandability indirectly. |

| 4 |

References

- A. Abdul, J. Vermeulen, D. Wang, B. Y. Lim, and M. Kankanhalli, “Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, in CHI ’18. New York, NY, USA: Association for Computing Machinery, 2018, pp. 1–18. [CrossRef]

- B. Shneiderman, “Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy,” Int J Hum Comput Interact, vol. 36, no. 6, pp. 495–504, 2020. [CrossRef]

- F. Doshi-Velez and B. Kim, “Towards A Rigorous Science of Interpretable Machine Learning,” arXiv: Machine Learning, 2017, [Online]. Available: https://api.semanticscholar.org/CorpusID:11319376.

- T. Herrmann and S. Pfeiffer, “Keeping the organization in the loop: a socio-technical extension of human-centered artificial intelligence,” AI Soc, vol. 38, no. 4, pp. 1523–1542, 2023. [CrossRef]

- G. Vilone and L. Longo, “Notions of explainability and evaluation approaches for explainable artificial intelligence,” Information Fusion, vol. 76, pp. 89–106, 2021. [CrossRef]

- A. Barredo Arrieta et al., “Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI,” Information Fusion, vol. 58, pp. 82–115, 2020. [CrossRef]

- D. Gunning and D. Aha, “DARPA’s Explainable Artificial Intelligence (XAI) Program,” AI Mag, vol. 40, no. 2, pp. 44–58, Jun. 2019. [CrossRef]

- I. Nunes and D. Jannach, “A systematic review and taxonomy of explanations in decision support and recommender systems,” User Model User-adapt Interact, vol. 27, no. 3, pp. 393–444, 2017. [CrossRef]

- L. H. Gilpin, D. Bau, B. Z. Yuan, A. Bajwa, M. Specter, and L. Kagal, “Explaining Explanations: An Overview of Interpretability of Machine Learning,” in 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), 2018, pp. 80–89. [CrossRef]

- J. Zhou, A. H. Gandomi, F. Chen, and A. Holzinger, “Evaluating the Quality of Machine Learning Explanations: A Survey on Methods and Metrics,” Electronics (Basel), vol. 10, no. 5, 2021. [CrossRef]

- A. Nguyen and M. R. Martínez, “MonoNet: Towards Interpretable Models by Learning Monotonic Features,” Sep. 2019, [Online]. Available: http://arxiv.org/abs/1909.13611.

- A. Rosenfeld, “Better Metrics for Evaluating Explainable Artificial Intelligence,” in Adaptive Agents and Multi-Agent Systems, 2021. [Online]. Available: https://api.semanticscholar.org/CorpusID:233453690.

- H. Sharp, J. Preece, and Y. Rogers, Interaction Design: Beyond Human-Computer Interaction. John Wiley & Sons, Inc., 2002.

- M. Chromik and M. Schuessler, “A Taxonomy for Human Subject Evaluation of Black-Box Explanations in XAI,” in ExSS-ATEC@IUI, 2020. [Online]. Available: https://api.semanticscholar.org/CorpusID:214730454.

- S. Mohseni, J. E. Block, and E. Ragan, “Quantitative Evaluation of Machine Learning Explanations: A Human-Grounded Benchmark,” in 26th International Conference on Intelligent User Interfaces, in IUI ’21. New York, NY, USA: Association for Computing Machinery, 2021, pp. 22–31. [CrossRef]

- F. Doshi-Velez and B. Kim, “Towards a rigorous science of interpretable machine learning,” arXiv preprint arXiv:1702.08608, 2017.

- A. Adadi and M. Berrada, “Peeking Inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI),” IEEE Access, vol. 6, pp. 52138–52160, 2018. [CrossRef]

- S. Anjomshoae, A. Najjar, D. Calvaresi, and K. Främling, “Explainable Agents and Robots: Results from a Systematic Literature Review,” in Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, in AAMAS ’19. International Foundation for Autonomous Agents and MultiAgent Systems, 2019, pp. 1078–1088. [Online]. Available: http://www.ifaamas.org/Proceedings/aamas2019/pdfs/p1078.pdf.

- M. Nauta et al., “From Anecdotal Evidence to Quantitative Evaluation Methods: A Systematic Review on Evaluating Explainable AI,” ACM Comput. Surv., vol. 55, no. 13s, Jul. 2023. [CrossRef]

- A. Barredo Arrieta et al., “Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI,” Information Fusion, vol. 58, pp. 82–115, May 2020. [CrossRef]

- T. Miller, “Explanation in artificial intelligence: Insights from the social sciences,” Artif Intell, vol. 267, pp. 1–38, 2019. [CrossRef]

- F. Doshi-Velez and B. Kim, “Towards A Rigorous Science of Interpretable Machine Learning,” in arXiv preprint arXiv:1702.08608, 2017.

- M. Nauta et al., “From Anecdotal Evidence to Quantitative Evaluation Methods: A Systematic Review on Evaluating Explainable AI,” ACM Comput Surv, vol. 55, no. 13s, pp. 1–42, 2023.

- M. S. Litwin, How to Measure Survey Reliability and Validity, vol. 7. Sage, 1995.

- R. F. DeVellis and C. T. Thorpe, Scale Development: Theory and Applications. Sage, 2003.

- T. Raykov and G. A. Marcoulides, Introduction to Psychometric Theory, 1st ed. Routledge, 2010.

- F. Alizadeh, G. Stevens, and M. Esau, “An Empirical Study of Folk Concepts and People’s Expectations of Current and Future Artificial Intelligence,” i-com, vol. 20, no. 1, pp. 3–17, 2021. [CrossRef]

- H. Kaur, H. Nori, S. Jenkins, R. Caruana, H. Wallach, and J. Wortman Vaughan, “Interpreting Interpretability: Understanding Data Scientists’ Use of Interpretability Tools for Machine Learning,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, in CHI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 1–14. [CrossRef]

- V. Lai, H. Liu, and C. Tan, “‘Why is “Chicago” deceptive?’ Towards Building Model-Driven Tutorials for Humans,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, in CHI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 1–13. [CrossRef]

- T. Ngo, J. Kunkel, and J. Ziegler, “Exploring Mental Models for Transparent and Controllable Recommender Systems: A Qualitative Study,” in Proceedings of the 28th ACM Conference on User Modeling, Adaptation and Personalization, in UMAP ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 183–191. [CrossRef]

- T. Kulesza, S. Stumpf, M. Burnett, S. Yang, I. Kwan, and W.-K. Wong, “Too much, too little, or just right? Ways explanations impact end users’ mental models,” in 2013 IEEE Symposium on Visual Languages and Human Centric Computing, 2013, pp. 3–10. [CrossRef]

- R. Sukkerd, “Improving Transparency and Intelligibility of Multi-Objective Probabilistic Planning,” May 2022. [CrossRef]

- R. R. Hoffman, S. T. Mueller, G. Klein, and J. Litman, “Metrics for Explainable AI: Challenges and Prospects,” 2019.

- A. I. Anik and A. Bunt, “Data-Centric Explanations: Explaining Training Data of Machine Learning Systems to Promote Transparency,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- H. Deters, “Criteria and Metrics for the Explainability of Software,” Gottfried Wilhelm Leibniz Universität Hannover, 2022.

- L. Guo, E. M. Daly, O. Alkan, M. Mattetti, O. Cornec, and B. Knijnenburg, “Building Trust in Interactive Machine Learning via User Contributed Interpretable Rules,” in Proceedings of the 27th International Conference on Intelligent User Interfaces, in IUI ’22. New York, NY, USA: Association for Computing Machinery, 2022, pp. 537–548. [CrossRef]

- V. Dominguez, P. Messina, I. Donoso-Guzmán, and D. Parra, “The effect of explanations and algorithmic accuracy on visual recommender systems of artistic images,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, in IUI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 408–416. [CrossRef]

- J. Dieber and S. Kirrane, “A novel model usability evaluation framework (MUsE) for explainable artificial intelligence,” Information Fusion, vol. 81, pp. 143–153, 2022. [CrossRef]

- M. Millecamp, N. N. Htun, C. Conati, and K. Verbert, “To explain or not to explain: the effects of personal characteristics when explaining music recommendations,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, in IUI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 397–407. [CrossRef]

- Z. Buçinca, P. Lin, K. Z. Gajos, and E. L. Glassman, “Proxy tasks and subjective measures can be misleading in evaluating explainable AI systems,” in Proceedings of the 25th International Conference on Intelligent User Interfaces, in IUI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 454–464. [CrossRef]

- H.-F. Cheng et al., “Explaining Decision-Making Algorithms through UI: Strategies to Help Non-Expert Stakeholders,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, in CHI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 1–12. [CrossRef]

- A. Holzinger, A. Carrington, and H. Müller, “Measuring the Quality of Explanations: The System Causability Scale (SCS),” KI - Künstliche Intelligenz, vol. 34, no. 2, pp. 193–198, 2020. [CrossRef]

- W. Jin and G. Hamarneh, “The XAI alignment problem: Rethinking how should we evaluate human-centered AI explainability techniques,” arXiv preprint, 2023.

- A. Papenmeier, G. Englebienne, and C. Seifert, “How model accuracy and explanation fidelity influence user trust,” 2019.

- M. Liao and S. S. Sundar, “How Should AI Systems Talk to Users when Collecting their Personal Information? Effects of Role Framing and Self-Referencing on Human-AI Interaction,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- C. J. Cai, J. Jongejan, and J. Holbrook, “The effects of example-based explanations in a machine learning interface,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, in IUI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 258–262. [CrossRef]

- J. van der Waa, E. Nieuwburg, A. Cremers, and M. Neerincx, “Evaluating XAI: A comparison of rule-based and example-based explanations,” Artif Intell, vol. 291, p. 103404, 2021. [CrossRef]

- F. Poursabzi-Sangdeh, D. G. Goldstein, J. M. Hofman, J. Wortman Vaughan, and H. Wallach, “Manipulating and Measuring Model Interpretability,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- M. Narayanan, E. Chen, J. He, B. Kim, S. Gershman, and F. Doshi-Velez, “How do humans understand explanations from machine learning systems? An evaluation of the human-interpretability of explanation,” 2018.

- H. Liu, V. Lai, and C. Tan, “Understanding the Effect of Out-of-distribution Examples and Interactive Explanations on Human-AI Decision Making,” Proc. ACM Hum.-Comput. Interact., vol. 5, no. CSCW2, Oct. 2021. [CrossRef]

- P. Schmidt and F. Biessmann, “Quantifying Interpretability and Trust in Machine Learning Systems,” arXiv preprint arXiv:1901.08558, 2019.

- S. S. Y. Kim, N. Meister, V. V. Ramaswamy, R. Fong, and O. Russakovsky, “HIVE: Evaluating the Human Interpretability of Visual Explanations,” in Computer Vision – ECCV 2022, S. Avidan, G. Brostow, M. Cissé, G. M. Farinella, and T. Hassner, Eds., Cham: Springer Nature Switzerland, 2022, pp. 280–298.

- E. Rader, K. Cotter, and J. Cho, “Explanations as Mechanisms for Supporting Algorithmic Transparency,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, in CHI ’18. New York, NY, USA: Association for Computing Machinery, 2018, pp. 1–13. [CrossRef]

- J. Ooge, S. Kato, and K. Verbert, “Explaining Recommendations in E-Learning: Effects on Adolescents’ Trust,” in Proceedings of the 27th International Conference on Intelligent User Interfaces, in IUI ’22. New York, NY, USA: Association for Computing Machinery, 2022, pp. 93–105. [CrossRef]

- S. Naveed, D. R. Kern, and G. Stevens, “Explainable Robo-Advisors: Empirical Investigations to Specify and Evaluate a User-Centric Taxonomy of Explanations in the Financial Domain,” in IntRS@RecSys, 2022, pp. 85–103.

- M. Millecamp, S. Naveed, K. Verbert, and J. Ziegler, “To Explain or not to Explain: the Effects of Personal Characteristics when Explaining Feature-based Recommendations in Different Domains,” in IntRS@RecSys, 2019. [Online]. Available: https://api.semanticscholar.org/CorpusID:203415984.

- S. Naveed, B. Loepp, and J. Ziegler, “On the Use of Feature-based Collaborative Explanations: An Empirical Comparison of Explanation Styles,” in Adjunct Publication of the 28th ACM Conference on User Modeling, Adaptation and Personalization, in UMAP ’20 Adjunct. New York, NY, USA: Association for Computing Machinery, 2020, pp. 226–232. [CrossRef]

- C.-H. Tsai, Y. You, X. Gui, Y. Kou, and J. M. Carroll, “Exploring and Promoting Diagnostic Transparency and Explainability in Online Symptom Checkers,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- M. Guesmi et al., “Explaining User Models with Different Levels of Detail for Transparent Recommendation: A User Study,” in Adjunct Proceedings of the 30th ACM Conference on User Modeling, Adaptation and Personalization, in UMAP ’22 Adjunct. New York, NY, USA: Association for Computing Machinery, 2022, pp. 175–183. [CrossRef]

- S. Naveed, T. Donkers, and J. Ziegler, “Argumentation-Based Explanations in Recommender Systems: Conceptual Framework and Empirical Results,” in Adjunct Publication of the 26th Conference on User Modeling, Adaptation and Personalization, in UMAP ’18. New York, NY, USA: Association for Computing Machinery, 2018, pp. 293–298. [CrossRef]

- C. Ford and M. T. Keane, “Explaining Classifications to Non-experts: An XAI User Study of Post-Hoc Explanations for a Classifier When People Lack Expertise,” in Pattern Recognition, Computer Vision, and Image Processing. ICPR 2022 International Workshops and Challenges, J.-J. Rousseau and B. Kapralos, Eds., Cham: Springer Nature Switzerland, 2023, pp. 246–260.

- G. Bansal et al., “Does the Whole Exceed its Parts? The Effect of AI Explanations on Complementary Team Performance,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- D. H. Kim, E. Hoque, and M. Agrawala, “Answering Questions about Charts and Generating Visual Explanations,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, in CHI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 1–13. [CrossRef]

- J. Dodge, S. Penney, A. Anderson, and M. M. Burnett, “What Should Be in an XAI Explanation? What IFT Reveals,” in Joint Proceedings of the ACM IUI 2018 Workshops co-located with the 23rd ACM Conference on Intelligent User Interfaces (ACM IUI 2018), Tokyo, Japan, March 11, 2018, A. Said and T. Komatsu, Eds., in CEUR Workshop Proceedings, vol. 2068. CEUR-WS.org, 2018. [Online]. Available: https://ceur-ws.org/Vol-2068/exss9.pdf.

- T. A. J. Schoonderwoerd, W. Jorritsma, M. A. Neerincx, and K. van den Bosch, “Human-centered XAI: Developing design patterns for explanations of clinical decision support systems,” Int J Hum Comput Stud, vol. 154, p. 102684, 2021. [CrossRef]

- R. Paleja, M. Ghuy, N. Ranawaka Arachchige, R. Jensen, and M. Gombolay, “The Utility of Explainable AI in Ad Hoc Human-Machine Teaming,” in Advances in Neural Information Processing Systems, M. Ranzato, A. Beygelzimer, Y. Dauphin, P. S. Liang, and J. W. Vaughan, Eds., Curran Associates, Inc., 2021, pp. 610–623. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/2021/file/05d74c48b5b30514d8e9bd60320fc8f6-Paper.pdf.

- Y. Alufaisan, L. R. Marusich, J. Z. Bakdash, Y. Zhou, and M. Kantarcioglu, “Does Explainable Artificial Intelligence Improve Human Decision-Making?,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 8, pp. 6618–6626, May 2021. [CrossRef]

- J. Schaffer, J. O’Donovan, J. Michaelis, A. Raglin, and T. Höllerer, “I can do better than your AI: expertise and explanations,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, in IUI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 240–251. [CrossRef]

- M. Colley, B. Eder, J. O. Rixen, and E. Rukzio, “Effects of Semantic Segmentation Visualization on Trust, Situation Awareness, and Cognitive Load in Highly Automated Vehicles,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- Y. Zhang, Q. V. Liao, and R. K. E. Bellamy, “Effect of confidence and explanation on accuracy and trust calibration in AI-assisted decision making,” in Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, in FAT* ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 295–305. [CrossRef]

- S. Carton, Q. Mei, and P. Resnick, “Feature-Based Explanations Don’t Help People Detect Misclassifications of Online Toxicity,” Proceedings of the International AAAI Conference on Web and Social Media, vol. 14, no. 1, pp. 95–106, May 2020. [CrossRef]

- J. Schoeffer, N. Kuehl, and Y. Machowski, “‘There Is Not Enough Information’: On the Effects of Explanations on Perceptions of Informational Fairness and Trustworthiness in Automated Decision-Making,” in Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, in FAccT ’22. New York, NY, USA: Association for Computing Machinery, 2022, pp. 1616–1628. [CrossRef]

- J. Kunkel, T. Donkers, L. Michael, C.-M. Barbu, and J. Ziegler, “Let Me Explain: Impact of Personal and Impersonal Explanations on Trust in Recommender Systems,” in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, in CHI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 1–12. [CrossRef]

- J. V. Jeyakumar, J. Noor, Y.-H. Cheng, L. Garcia, and M. Srivastava, “How Can I Explain This to You? An Empirical Study of Deep Neural Network Explanation Methods,” in Advances in Neural Information Processing Systems, H. Larochelle, M. Ranzato, R. Hadsell, M. F. Balcan, and H. Lin, Eds., Curran Associates, Inc., 2020, pp. 4211–4222. [Online]. Available: https://proceedings.neurips.cc/paper_files/paper/2020/file/2c29d89cc56cdb191c60db2f0bae796b-Paper.pdf.

- G. Harrison, J. Hanson, C. Jacinto, J. Ramirez, and B. Ur, “An Empirical Study on the Perceived Fairness of Realistic, Imperfect Machine Learning Models,” in Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, in FAT* ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 392–402. [CrossRef]

- K. Weitz, A. Zellner, and E. André, “What do end-users really want? Investigation of human-centered XAI for mobile health apps,” arXiv preprint, 2022.

- A. Fügener, J. Grahl, A. Gupta, and W. Ketter, “Will Humans-in-The-Loop Become Borgs? Merits and Pitfalls of Working with AI,” Management Information Systems Quarterly (MISQ), vol. 45, 2021.

- W. Jin, M. Fatehi, R. Guo, and G. Hamarneh, “Evaluating the clinical utility of artificial intelligence assistance and its explanation on the glioma grading task,” Artif Intell Med, vol. 148, p. 102751, 2024. [CrossRef]

- C. Panigutti et al., “The role of explainable AI in the context of the AI Act,” in Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, in FAccT ’23. New York, NY, USA: Association for Computing Machinery, 2023, pp. 1139–1150. [CrossRef]

- P. Lopes, E. Silva, C. Braga, T. Oliveira, and L. Rosado, “XAI Systems Evaluation: A Review of Human and Computer-Centred Methods,” Applied Sciences, vol. 12, no. 19, 2022. [CrossRef]

- X. Kong, S. Liu, and L. Zhu, “Toward Human-centered XAI in Practice: A survey,” Machine Intelligence Research, 2024. [CrossRef]

- T. Kulesza, S. Stumpf, M. Burnett, and I. Kwan, “Tell Me More? The Effects of Mental Model Soundness on Personalizing an Intelligent Agent,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, in CHI ’12. New York, NY, USA: Association for Computing Machinery, 2012, pp. 1–10. [CrossRef]

- S. Naveed, B. Loepp, and J. Ziegler, “On the Use of Feature-based Collaborative Explanations: An Empirical Comparison of Explanation Styles,” in Adjunct Publication of the 28th ACM Conference on User Modeling, Adaptation and Personalization, in UMAP ’20 Adjunct. New York, NY, USA: Association for Computing Machinery, 2020, pp. 226–232. [CrossRef]

- G. Bansal, B. Nushi, E. Kamar, W. S. Lasecki, D. S. Weld, and E. Horvitz, “Beyond Accuracy: The Role of Mental Models in Human-AI Team Performance,” Proc AAAI Conf Hum Comput Crowdsourc, vol. 7, no. 1, pp. 2–11, Oct. 2019. [CrossRef]

- L. Guo, E. M. Daly, O. Alkan, M. Mattetti, O. Cornec, and B. Knijnenburg, “Building Trust in Interactive Machine Learning via User Contributed Interpretable Rules,” in 27th International Conference on Intelligent User Interfaces, in IUI ’22. New York, NY, USA: Association for Computing Machinery, 2022, pp. 537–548. [CrossRef]

- T. H. Davenport and M. L. Markus, “Rigor vs. Relevance Revisited: Response to Benbasat and Zmud,” MIS Quarterly, vol. 23, no. 1, pp. 19–23, 1999. [CrossRef]

- M. R. Islam, M. U. Ahmed, S. Barua, and S. Begum, “A Systematic Review of Explainable Artificial Intelligence in Terms of Different Application Domains and Tasks,” Applied Sciences, vol. 12, no. 3, 2022. [CrossRef]

- F. Cabitza, A. Campagner, L. Famiglini, E. Gallazzi, and G. A. La Maida, “Color Shadows (Part I): Exploratory Usability Evaluation of Activation Maps in Radiological Machine Learning,” in Machine Learning and Knowledge Extraction, A. Holzinger, P. Kieseberg, A. M. Tjoa, and E. Weippl, Eds., Cham: Springer International Publishing, 2022, pp. 31–50.

- N. Grgic-Hlaca, M. B. Zafar, K. P. Gummadi, and A. Weller, “Beyond Distributive Fairness in Algorithmic Decision Making: Feature Selection for Procedurally Fair Learning,” in AAAI Conference on Artificial Intelligence, 2018. [Online]. Available: https://api.semanticscholar.org/CorpusID:19196469.

- J. Dodge, Q. V. Liao, Y. Zhang, R. K. E. Bellamy, and C. Dugan, “Explaining Models: An Empirical Study of How Explanations Impact Fairness Judgment,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, in IUI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 275–285. [CrossRef]

- D.-R. Kern et al., “Peeking Inside the Schufa Blackbox: Explaining the German Housing Scoring System,” ArXiv, 2023.

- S. Naveed and J. Ziegler, “Featuristic: An interactive hybrid system for generating explainable recommendations - beyond system accuracy,” in IntRS@RecSys, 2020. [Online]. Available: https://api.semanticscholar.org/CorpusID:225063158.

- S. Naveed and J. Ziegler, “Feature-Driven Interactive Recommendations and Explanations with Collaborative Filtering Approach,” in ComplexRec@RecSys, 2019, p. 1015.

- J. L. Herlocker, J. A. Konstan, and J. Riedl, “Explaining Collaborative Filtering Recommendations,” in Proceedings of the 2000 ACM Conference on Computer Supported Cooperative Work, in CSCW ’00. New York, NY, USA: Association for Computing Machinery, 2000, pp. 241–250. [CrossRef]

- N. Tintarev and J. Masthoff, “Explaining Recommendations: Design and Evaluation,” in Recommender Systems Handbook, 2015. [Online]. Available: https://api.semanticscholar.org/CorpusID:8407569.

- N. Tintarev and J. Masthoff, “Designing and Evaluating Explanations for Recommender Systems,” in Recommender Systems Handbook, F. Ricci, L. Rokach, B. Shapira, and P. B. Kantor, Eds., Boston, MA: Springer US, 2011, pp. 479–510. [CrossRef]

- I. Nunes and D. Jannach, “A systematic review and taxonomy of explanations in decision support and recommender systems,” May 2020.

- P. Kouki, J. Schaffer, J. Pujara, J. O’Donovan, and L. Getoor, “Personalized explanations for hybrid recommender systems,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, in IUI ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 379–390. [CrossRef]

- N. L. Le, M.-H. Abel, and P. Gouspillou, “Combining Embedding-Based and Semantic-Based Models for Post-Hoc Explanations in Recommender Systems,” in 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), 2023, pp. 4619–4624. [CrossRef]

- S. Raza and C. Ding, “News recommender system: a review of recent progress, challenges, and opportunities,” Artif Intell Rev, vol. 55, no. 1, pp. 749–800, 2022. [CrossRef]

- X. Wang, D. Wang, C. Xu, X. He, Y. Cao, and T.-S. Chua, “Explainable Reasoning over Knowledge Graphs for Recommendation,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, no. 01, pp. 5329–5336, Jul. 2019. [CrossRef]

- U. Ehsan, Q. V. Liao, M. Muller, M. O. Riedl, and J. D. Weisz, “Expanding Explainability: Towards Social Transparency in AI systems,” in Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, in CHI ’21. New York, NY, USA: Association for Computing Machinery, 2021. [CrossRef]

- A. Hudon, T. Demazure, A. J. Karran, P.-M. Léger, and S. Sénécal, “Explainable Artificial Intelligence (XAI): How the Visualization of AI Predictions Affects User Cognitive Load and Confidence,” Information Systems and Neuroscience, 2021. [Online]. Available: https://api.semanticscholar.org/CorpusID:240199397.

- H. Cramer et al., “The effects of transparency on trust in and acceptance of a content-based art recommender,” User Model User-adapt Interact, vol. 18, no. 5, pp. 455–496, 2008. [CrossRef]

- U. Ehsan, P. Tambwekar, L. Chan, B. Harrison, and M. O. Riedl, “Automated rationale generation: a technique for explainable AI and its effects on human perceptions,” in Proceedings of the 24th International Conference on Intelligent User Interfaces, 2019. [Online]. Available: https://api.semanticscholar.org/CorpusID:58004583.

- R. R. Hoffman, S. T. Mueller, G. Klein, and J. Litman, “Metrics for Explainable AI: Challenges and Prospects,” arXiv preprint arXiv:1812.04608, 2018.

- C. Meske, E. Bunde, J. Schneider, and M. Gersch, “Explainable Artificial Intelligence: Objectives, Stakeholders, and Future Research Opportunities,” Information Systems Management, vol. 39, no. 1, pp. 53–63, 2022. [CrossRef]

- S. Mohseni, N. Zarei, and E. D. Ragan, “A Multidisciplinary Survey and Framework for Design and Evaluation of Explainable AI Systems,” ACM Trans. Interact. Intell. Syst., vol. 11, no. 3–4, Sep. 2021. [CrossRef]

- P. J. Phillips et al., “Four principles of explainable artificial intelligence,” 2021.

- B. Goodman and S. Flaxman, “European Union Regulations on Algorithmic Decision-Making and a ‘Right to Explanation,’” AI Mag, vol. 38, no. 3, pp. 50–57, Oct. 2017. [CrossRef]

- A. B. Lund, “A Stakeholder Approach to Media Governance,” in Managing Media Firms and Industries: What’s So Special About Media Management?, G. F. Lowe and C. Brown, Eds., Cham: Springer International Publishing, 2016, pp. 103–120. [CrossRef]

- Y. Rong et al., “Towards Human-centered Explainable AI: User Studies for Model Explanations,” May 2022.

- D. Gunning and D. Aha, “DARPA’s Explainable Artificial Intelligence (XAI) Program,” AI Mag, vol. 40, no. 2, pp. 44–58, Jun. 2019. [CrossRef]

- Y. Rong et al., “Towards Human-Centered Explainable AI: A Survey of User Studies for Model Explanations,” IEEE Trans Pattern Anal Mach Intell, pp. 1–20, 2023. [CrossRef]

- A. Páez, “The Pragmatic Turn in Explainable Artificial Intelligence (XAI),” Minds Mach (Dordr), vol. 29, no. 3, pp. 441–459, 2019. [CrossRef]

- B. Y. Lim and A. K. Dey, “Assessing demand for intelligibility in context-aware applications,” in Proceedings of the 11th International Conference on Ubiquitous Computing, in UbiComp ’09. New York, NY, USA: Association for Computing Machinery, 2009, pp. 195–204. [CrossRef]

- D. S. Weld and G. Bansal, “The challenge of crafting intelligible intelligence,” Commun. ACM, vol. 62, no. 6, pp. 70–79, May 2019. [CrossRef]

- B. P. Knijnenburg, M. C. Willemsen, and A. Kobsa, “A pragmatic procedure to support the user-centric evaluation of recommender systems,” in Proceedings of the Fifth ACM Conference on Recommender Systems, in RecSys ’11. New York, NY, USA: Association for Computing Machinery, 2011, pp. 321–324. [CrossRef]

- J. Dai, S. Upadhyay, U. Aivodji, S. H. Bach, and H. Lakkaraju, “Fairness via Explanation Quality: Evaluating Disparities in the Quality of Post hoc Explanations,” in Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society, in AIES ’22. New York, NY, USA: Association for Computing Machinery, 2022, pp. 203–214. [CrossRef]

- P. Pu, L. Chen, and R. Hu, “A user-centric evaluation framework for recommender systems,” in Proceedings of the Fifth ACM Conference on Recommender Systems, in RecSys ’11. New York, NY, USA: Association for Computing Machinery, 2011, pp. 157–164. [CrossRef]

- L. Martínez Caro and J. A. Martínez García, “Cognitive–affective model of consumer satisfaction. An exploratory study within the framework of a sporting event,” J Bus Res, vol. 60, no. 2, pp. 108–114, 2007. [CrossRef]

- D. G. Myers and C. N. DeWall, Psychology, 11th ed. Worth Publishers, 2021.

- F. Gedikli, D. Jannach, and M. Ge, “How should I explain? A comparison of different explanation types for recommender systems,” Int J Hum Comput Stud, vol. 72, no. 4, pp. 367–382, 2014. [CrossRef]

- M. Kahng, P. Y. Andrews, A. Kalro, and D. H. Chau, “ActiVis: Visual Exploration of Industry-Scale Deep Neural Network Models,” IEEE Trans Vis Comput Graph, vol. 24, no. 1, pp. 88–97, 2018. [CrossRef]

- R. Buettner, “Cognitive Workload of Humans Using Artificial Intelligence Systems: Towards Objective Measurement Applying Eye-Tracking Technology,” in KI 2013: Advances in Artificial Intelligence, I. J. Timm and M. Thimm, Eds., Berlin, Heidelberg: Springer Berlin Heidelberg, 2013, pp. 37–48.

- Y. Wu, Y. Liu, Y. R. Tsai, and S. Yau, “Investigating the role of eye movements and physiological signals in search satisfaction prediction using geometric analysis,” Journal of the Association for Information Science & Technology, vol. 70, no. 9, pp. 981–999, 2019, [Online]. Available: https://EconPapers.repec.org/RePEc:bla:jinfst:v:70:y:2019:i:9:p:981-999.

- M. Hassenzahl, R. Kekez, and M. Burmester, “The importance of a software’s pragmatic quality depends on usage modes,” in Proceedings of the 6th International Conference on Work With Display Units (WWDU 2002), ERGONOMIC Institut für Arbeits- und Sozialforschung, 2002, pp. 275–276.

- A. Nemeth and A. Bekmukhambetova, “Achieving Usability: Looking for Connections between User-Centred Design Practices and Resultant Usability Metrics in Agile Software Development,” Periodica Polytechnica Social and Management Sciences, vol. 31, no. 2, pp. 135–143, 2023. [CrossRef]

- W. Zhang and B. Y. Lim, “Towards Relatable Explainable AI with the Perceptual Process,” in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, in CHI ’22. New York, NY, USA: Association for Computing Machinery, 2022. [CrossRef]

- M. Nourani, S. Kabir, S. Mohseni, and E. D. Ragan, “The Effects of Meaningful and Meaningless Explanations on Trust and Perceived System Accuracy in Intelligent Systems,” Proc AAAI Conf Hum Comput Crowdsourc, vol. 7, no. 1, pp. 97–105, Oct. 2019. [CrossRef]

- A. Abdul, C. von der Weth, M. Kankanhalli, and B. Y. Lim, “COGAM: Measuring and Moderating Cognitive Load in Machine Learning Model Explanations,” in Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, in CHI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 1–14. [CrossRef]

- B. Y. Lim, A. K. Dey, and D. Avrahami, “Why and why not explanations improve the intelligibility of context-aware intelligent systems,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, in CHI ’09. New York, NY, USA: Association for Computing Machinery, 2009, pp. 2119–2128. [CrossRef]

- R. R. Hoffman, S. T. Mueller, G. Klein, and J. Litman, “Measures for explainable AI: Explanation goodness, user satisfaction, mental models, curiosity, trust, and human-AI performance,” Front Comput Sci, vol. 5, 2023. [CrossRef]

- D. Das and S. Chernova, “Leveraging rationales to improve human task performance,” in Proceedings of the 25th International Conference on Intelligent User Interfaces, in IUI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 510–518. [CrossRef]

- F. de Andreis, “A Theoretical Approach to the Effective Decision-Making Process,” Open Journal of Applied Sciences, vol. 10, no. 6, pp. 287–304, Jun. 2020.

- R. Parasuraman, T. B. Sheridan, and C. D. Wickens, “Situation Awareness, Mental Workload, and Trust in Automation: Viable, Empirically Supported Cognitive Engineering Constructs,” J Cogn Eng Decis Mak, vol. 2, no. 2, pp. 140–160, 2008. [CrossRef]

- M. Pomplun and S. Sunkara, “Pupil Dilation as an Indicator of Cognitive Workload in Human-Computer Interaction,” 2003. [Online]. Available: https://api.semanticscholar.org/CorpusID:1052200.

- J. Cegarra and A. Chevalier, “The use of Tholos software for combining measures of mental workload: Toward theoretical and methodological improvements,” Behav Res Methods, vol. 40, no. 4, pp. 988–1000, 2008. [CrossRef]

- S. G. Hart and L. E. Staveland, “Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research,” in Human Mental Workload, P. A. Hancock and N. Meshkati, Eds., Advances in Psychology, vol. 52. North-Holland, 1988, pp. 139–183. [CrossRef]

- R. C. Mayer, J. H. Davis, and F. D. Schoorman, “An Integrative Model of Organizational Trust,” The Academy of Management Review, vol. 20, no. 3, pp. 709–734, 1995. [CrossRef]

- M. Madsen and S. Gregor, “Measuring Human-Computer Trust,” in 11th Australasian Conference on Information Systems, vol. 53, 2000, pp. 6–8.

- D. Gefen, “Reflections on the dimensions of trust and trustworthiness among online consumers,” SIGMIS Database, vol. 33, no. 3, pp. 38–53, Aug. 2002. [CrossRef]

- M. Madsen and S. D. Gregor, “Measuring Human-Computer Trust,” 2000. [Online]. Available: https://api.semanticscholar.org/CorpusID:18821611.

- G. Stevens and P. Bossauer, “Who do you trust: Peers or Technology? A conjoint analysis about computational reputation mechanisms,” vol. 4, no. 1, 2020. [CrossRef]

- W. Wang and I. Benbasat, “Recommendation Agents for Electronic Commerce: Effects of Explanation Facilities on Trusting Beliefs,” Journal of Management Information Systems, vol. 23, no. 4, pp. 217–246, 2007. [CrossRef]

- M. Wahlström, B. Tammentie, T.-T. Salonen, and A. Karvonen, “AI and the transformation of industrial work: Hybrid intelligence vs double-black box effect,” Appl Ergon, vol. 118, p. 104271, 2024. [CrossRef]

- E. Rader and R. Gray, “Understanding User Beliefs About Algorithmic Curation in the Facebook News Feed,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, in CHI ’15. New York, NY, USA: Association for Computing Machinery, 2015, pp. 173–182. [CrossRef]

- M. R. Endsley, “Situation awareness global assessment technique (SAGAT),” in Proceedings of the IEEE 1988 National Aerospace and Electronics Conference, 1988, vol. 3, pp. 789–795. [CrossRef]

- Z. Buçinca, P. Lin, K. Z. Gajos, and E. L. Glassman, “Proxy tasks and subjective measures can be misleading in evaluating explainable AI systems,” in Proceedings of the 25th International Conference on Intelligent User Interfaces, in IUI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 454–464. [CrossRef]

- U. Flick, Doing Interview Research: The Essential How To Guide. Sage Publications, 2021.

- A. Blandford, D. Furniss, and S. Makri, “Qualitative HCI Research: Going Behind the Scenes,” in Synthesis Lectures on Human-Centered Informatics, 2016. [Online]. Available: https://api.semanticscholar.org/CorpusID:38190394.

- U. Kelle, “‘Mixed Methods’ in der Evaluationsforschung - mit den Möglichkeiten und Beschränkungen quantitativer und qualitativer Methoden arbeiten” [‘Mixed methods’ in evaluation research: working with the possibilities and limitations of quantitative and qualitative methods], Zeitschrift für Evaluation, vol. 17, no. 1, pp. 25–52, Apr. 2018. [Online]. Available: https://www.proquest.com/scholarly-journals/mixed-methods-der-evaluationsforschung-mit-den/docview/2037015610/se-2?accountid=14644.

- S. Connor Gorber and M. S. Tremblay, “Self-Report and Direct Measures of Health: Bias and Implications,” in The Objective Monitoring of Physical Activity: Contributions of Accelerometry to Epidemiology, Exercise Science and Rehabilitation, R. J. Shephard and C. Tudor-Locke, Eds., Cham: Springer International Publishing, 2016, pp. 369–376. [CrossRef]

- L. M. Sikes and S. M. Dunn, “Subjective Experiences,” in Encyclopedia of Personality and Individual Differences, V. Zeigler-Hill and T. K. Shackelford, Eds., Cham: Springer International Publishing, 2020, pp. 5273–5275. [CrossRef]

- W. Mellouk and W. Handouzi, “Facial emotion recognition using deep learning: review and insights,” Procedia Comput Sci, vol. 175, pp. 689–694, 2020. [CrossRef]

- T. R. Hinkin, “A review of scale development practices in the study of organizations,” J Manage, vol. 21, no. 5, pp. 967–988, 1995. [CrossRef]

- “Organizational_Trust”.

- J. W. Creswell and V. L. Plano Clark, “Revisiting mixed methods research designs twenty years later,” in Handbook of Mixed Methods Research Designs, 2023, pp. 21–36.

- T. Miller, “Explanation in artificial intelligence: Insights from the social sciences,” May 2019, Elsevier B.V. [CrossRef]

- R. Binns, “Fairness in Machine Learning: Lessons from Political Philosophy,” in Proceedings of the 1st Conference on Fairness, Accountability and Transparency, S. A. Friedler and C. Wilson, Eds., in Proceedings of Machine Learning Research, vol. 81. PMLR, Sep. 2018, pp. 149–159. [Online]. Available: https://proceedings.mlr.press/v81/binns18a.html.

- M. Eiband, D. Buschek, A. Kremer, and H. Hussmann, “The Impact of Placebic Explanations on Trust in Intelligent Systems,” in Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, in CHI EA ’19. New York, NY, USA: Association for Computing Machinery, 2019, pp. 1–6. [CrossRef]

- R. Binns, M. Van Kleek, M. Veale, U. Lyngs, J. Zhao, and N. Shadbolt, “‘It’s Reducing a Human Being to a Percentage’: Perceptions of Justice in Algorithmic Decisions,” in Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, in CHI ’18. New York, NY, USA: Association for Computing Machinery, 2018, pp. 1–14. [CrossRef]

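| Literature Source | Objective: Methodology-driven Evaluation | Objective: Concept-driven Evaluation | Objective: Domain-driven Evaluation | Scope (Domain): Domain-Specific | Scope (Domain): Domain Agnostic / Not Stated | Scope (Target Group): Agnostic / Not Stated | Scope (Target Group): Developers / Engineers | Scope (Target Group): Managers / Regulators | Scope (Target Group): End Users | Procedure (Sampling and Participants): Not Stated | Procedure (Sampling and Participants): Proxy Users | Procedure (Sampling and Participants): Real Users | Procedure (Method): Interviews / Think Aloud | Procedure (Method): Observations | Procedure (Method): Questionnaires | Procedure (Method): Mixed-Method |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|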

| Chromik et al. [14] | O | O | O | NA | NA | |||||||||||

| Alizadeh et al. [27] | O | O | O | O | O | O | ||||||||||

| Mohseni et al. [15] | O | O | O | O | O | O | ||||||||||

| Kaur et al. [28] | O | O | O | O | O | O | ||||||||||

| Lai et al. [29] | O | O | O | O | O | |||||||||||

| Ngo et al. [30] | O | O | O | O | O | |||||||||||

| Kulesza et al. [31] | O | O | O | O | O | |||||||||||

| Sukkerd [32] | O | O | O | O | O | O | ||||||||||

| Hoffman et al. [33] | O | O | O | O | O | |||||||||||

| Anik et al. [34] | O | O | O | O | O | O | ||||||||||

| Deters [35] | O | O | O | O | O | |||||||||||

| Guo et al. [36] | O | O | O | O | O | O | ||||||||||

| Dominguez et al. [37] | O | O | O | O | O | O | O | |||||||||

| Dieber et al. [38] | O | O | O | O | O | O | ||||||||||

| Millecamp et al. [39] | O | O | O | O | O | O | ||||||||||

| Buçinca et al. [40] | O | O | O | O | O | O | ||||||||||

| Cheng et al. [41] | O | O | O | O | O | O | ||||||||||

| Holzinger et al. [42] | O | O | O | O | O | O | ||||||||||

| Jin [43] | O | O | O | O | O | |||||||||||

| Papenmeier et al. [44] | O | O | O | O | O | O | ||||||||||

| Liao et al. [45] | O | O | O | O | O | |||||||||||

| Cai et al. [46] | O | O | O | O | O | O | O | |||||||||

| Van der Waa et al. [47] | O | O | O | O | O | O | ||||||||||

| Poursabzi et al. [48] | O | O | O | O | O | O | ||||||||||

| Narayanan et al. [49] | O | O | O | O | O | O | O | |||||||||

| Liu et al. [50] | O | O | O | O | O | O | ||||||||||

| Schmidt et al. [51] | O | O | O | O | O | |||||||||||

| Kim et al. [52] | O | O | O | O | O | O | O | |||||||||

| Rader et al. [53] | O | O | O | O | O | |||||||||||

| Ooge et al. [54] | O | O | O | O | O | O | ||||||||||

| Naveed et al. [55] | O | O | O | O | O | O | ||||||||||

| Naveed et al. [56] | O | O | O | O | O | O | ||||||||||

| Naveed et al. [57] | O | O | O | O | O | |||||||||||

| Tsai et al. [58] | O | O | O | O | ||||||||||||

| Guesmi et al. [59] | O | O | O | O | O | |||||||||||

| Naveed et al. [60] | O | O | O | O | O | |||||||||||

| Ford et al. [61] | O | O | O | O | O | |||||||||||

| Bansal et al. [62] | O | O | O | O | O | O | ||||||||||

| Kim et al. [63] | O | O | O | O | O | O | ||||||||||

| Dodge et al. [64] | O | O | O | O | O | O | ||||||||||

| Schoonderwoerd et al. [65] | O | O | O | O | O | O | O | O | ||||||||

| Paleja et al. [66] | O | O | O | O | O | O | O | |||||||||

| Alufaisan et al. [67] | O | O | O | O | O | |||||||||||

| Schaffer et al. [68] | O | O | O | O | O | O | O | |||||||||

| Colley et al. [69] | O | O | O | O | O | |||||||||||

| Zhang et al. [70] | O | O | O | O | O | |||||||||||

| Carton et al. [71] | O | O | O | O | O | |||||||||||

| Schoeffer et al. [72] | O | O | O | O | O | |||||||||||

| Kunkel et al. [73] | O | O | O | O | O | |||||||||||

| Jeyakumar et al. [74] | O | O | O | O | O | |||||||||||

| Harrison et al. [75] | O | O | O | O | O | |||||||||||

| Weitz et al. [76] | O | O | O | O | O | O | ||||||||||

| Fügener et al. [77] | O | O | O | O | O | |||||||||||

| Literature Source | Understandability | Usability | Integrity | Misc | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Mental Models | Perceived Understandability | Understanding Goodness / Soundness | Perceived Explanation Qualities | Satisfaction | Utility / Suitability | Performance / Workload | Controllability / Scrutability | Trust / Confidence | Perceived Fairness | Transparency | Other |

| Chromik et al. [14] | O | O | O | O | O | O | Persuasiveness, Education, Debugging | ||||||

| Alizadeh et al. [27] | O | ||||||||||||

| Mohseni et al. [15] | O | O | |||||||||||

| Kaur et al. [28] | O | O | O | O | Intention to use/purchase | ||||||||

| Lai et al. [29] | O | O | O | ||||||||||

| Ngo et al. [30] | O | O | O | O | Diversity | ||||||||

| Kulesza et al. [31] | O | O | O | O | O | Debugging | |||||||

| Sukkerd [32] | O | O | O | O | |||||||||

| Hoffman et al. [33] | O | O | O | O | O | O | O | O | Curiosity | ||||

| Anik et al. [34] | O | O | O | O | O | O | |||||||

| Deters [35] | O | O | O | O | O | O | Persuasiveness, Debugging, Situation Awareness, Learning/Education | ||||||

| Guo et al. [36] | O | O | O | O | O | ||||||||

| Dominguez et al. [37] | O | O | O | O | Diversity | ||||||||

| Dieber et al. [38] | O | O | O | ||||||||||

| Millecamp et al. [39] | O | O | O | O | Novelty, Intention to use/purchase | ||||||||

| Buçinca et al. [40] | O | O | O | O | O | ||||||||

| Cheng et al. [41] | O | O | O | O | |||||||||

| Holzinger et al. [42] | O | O | |||||||||||

| Jin [43] | O | O | O | O | Plausibility² | ||||||||

| Papenmeier et al. [44] | O | O | Persuasiveness | ||||||||||

| Liao et al. [45] | O | O | O | Intention to use/purchase | |||||||||

| Cai et al. [46] | O | O | O | ||||||||||

| Van der Waa et al. [47] | O | O | O | O | Persuasiveness | ||||||||

| Poursabzi et al. [48] | O | O | |||||||||||

| Narayanan et al. [49] | O | O | O | ||||||||||

| Liu et al. [50] | O | O | O | ||||||||||

| Schmidt et al. [51] | O | O | O | ||||||||||

| Kim et al. [52] | O | O | |||||||||||

| Rader et al. [53] | O | O | O | Diversity, Situation Awareness | |||||||||

| Ooge et al. [54] | O | O | O | O | Intention to use/purchase | ||||||||

| Naveed et al. [55] | O | O | |||||||||||

| Naveed et al. [56] | O | O | |||||||||||

| Naveed et al. [57] | O | O | O | O | O | ||||||||

| Tsai et al. [58] | O | O | O | O | O | O | Situation Awareness, Learning/Education | ||||||

| Guesmi et al. [59] | O | O | O | O | O | O | Persuasiveness | ||||||

| Naveed et al. [60] | O | O | O | O | Diversity, Use Intentions | ||||||||

| Ford et al. [61] | O | O | O | O | O | ||||||||

| Bansal et al. [62] | O | O | O | ||||||||||

| Kim et al. [63] | O | O | O | ||||||||||

| Dodge et al. [64] | O | ||||||||||||

| Schoonderwoerd et al. [65] | O | O | O | O | Preferences | ||||||||

| Paleja et al. [66] | O | O | Situation Awareness | ||||||||||

| Alufaisan et al. [67] | O | O | O | ||||||||||

| Schaffer et al. [68] | O | O | Situation Awareness | ||||||||||

| Colley et al. [69] | O | O | Situation Awareness | ||||||||||

| Zhang et al. [70] | O | O | Persuasiveness | ||||||||||

| Carton et al. [71] | O | O | |||||||||||

| Schoeffer et al. [72] | O | O | O | ||||||||||

| Kunkel et al. [73] | O | Intention to use/purchase | |||||||||||

| Jeyakumar et al. [74] | Preferences | ||||||||||||

| Harrison et al. [75] | O | Preferences | |||||||||||

| Weitz et al. [76] | Preferences | ||||||||||||

| Fügener et al. [77] | O | O | Persuasiveness | ||||||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).