Submitted: 10 September 2024

Posted: 13 September 2024

Abstract

Keywords:

1. Introduction

2. Related Work

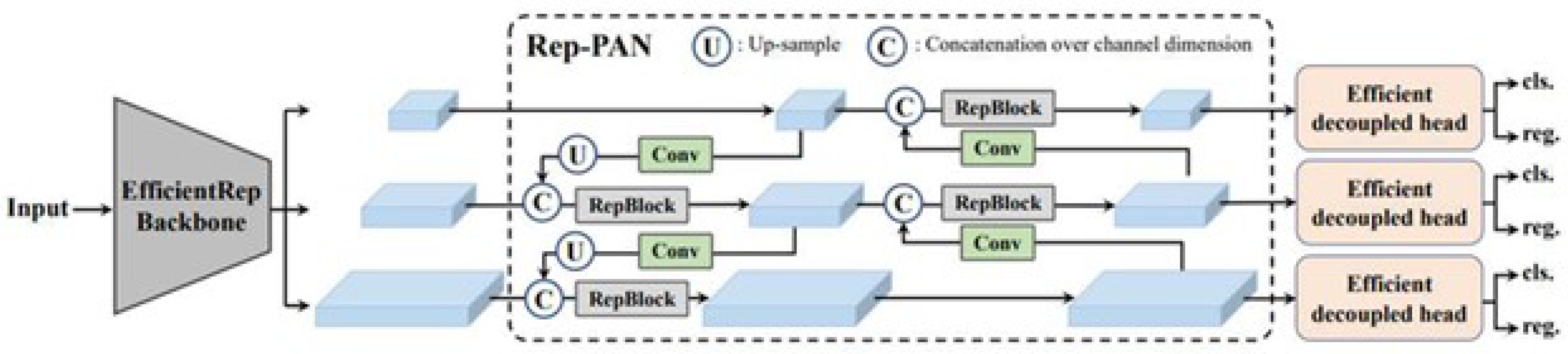

2.1. Real-Time Object Detection Algorithms

2.2. Feature Fusion Structure

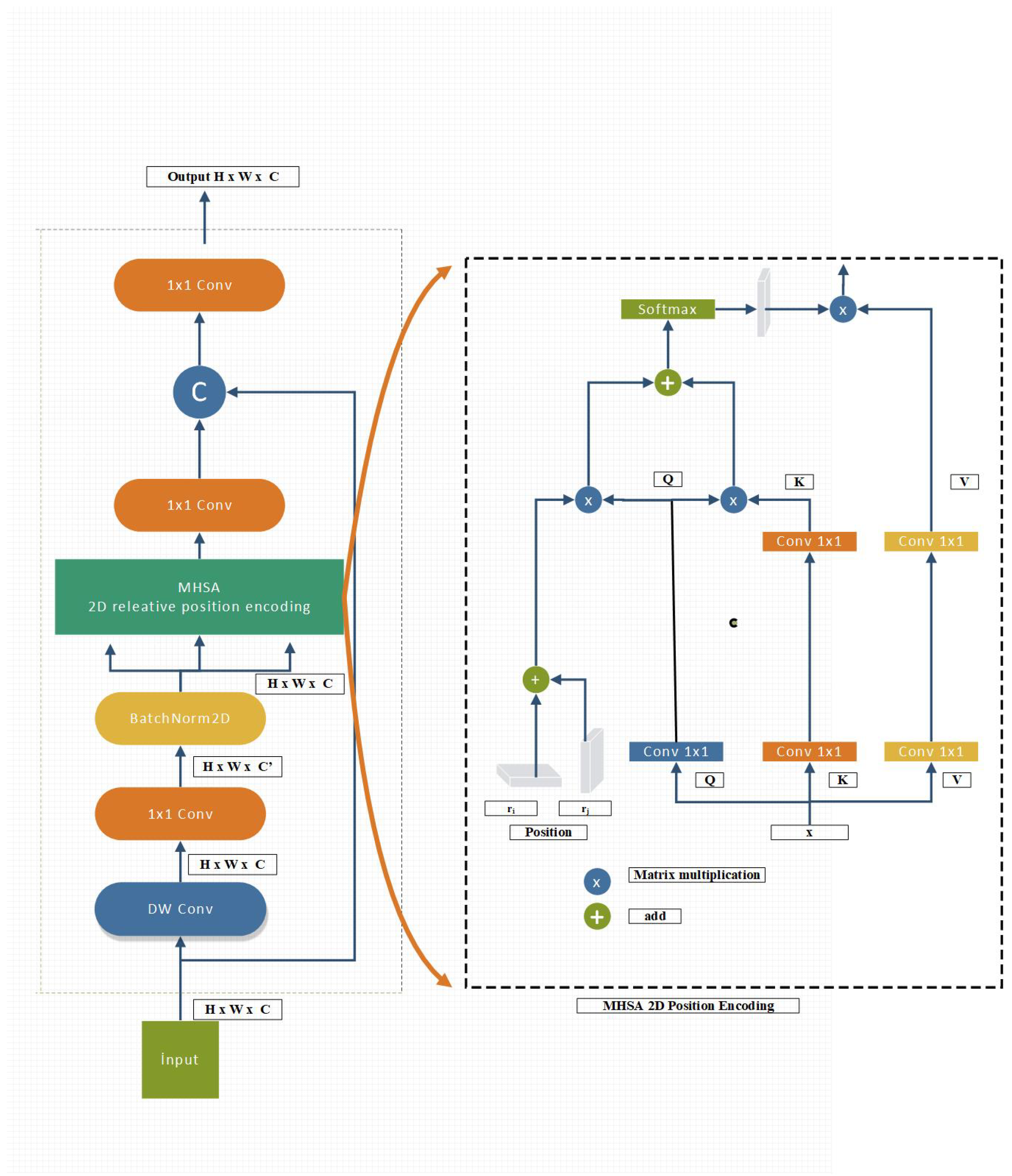

2.3. Vision Transformer (ViT) and Attention Mechanism Module

2.4. Spatial Pyramid Pooling Structure

2.5. Small Object Detection

3. AYOLO Model (Attention YOLO)

- Dilated convolutions: Dilated convolutions space out the taps of the convolution filter so that it captures non-neighbouring, more distant information.

- Pyramid architecture: A pyramid structure integrates features at different scales more effectively and captures semantic information.
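The effect of dilation described in the first item can be illustrated with a minimal, dependency-free sketch. It is not the AYOLO implementation: the function names `dilated_conv1d` and `receptive_field`, the 1-D setting, and the toy signal are assumptions chosen only to show how spacing the filter taps lets a 3-tap kernel reach more distant samples.

```python
def receptive_field(kernel_size, dilation):
    """Number of input positions spanned by one dilated kernel application."""
    return dilation * (kernel_size - 1) + 1


def dilated_conv1d(signal, kernel, dilation=1):
    """Valid (unpadded) 1-D cross-correlation with dilated kernel taps.

    Illustrative helper only; real detectors use 2-D convolutions from a
    deep-learning framework.
    """
    span = receptive_field(len(kernel), dilation)
    out = []
    for start in range(len(signal) - span + 1):
        acc = 0
        for j, weight in enumerate(kernel):
            # Tap j reads the sample that lies j * dilation steps from start.
            acc += weight * signal[start + j * dilation]
        out.append(acc)
    return out


signal = list(range(10))   # toy input: 0, 1, ..., 9
kernel = [1, 1, 1]         # 3-tap summing filter

dense = dilated_conv1d(signal, kernel, dilation=1)   # adjacent samples
sparse = dilated_conv1d(signal, kernel, dilation=3)  # samples 3 apart

print(receptive_field(3, 1), dense)
print(receptive_field(3, 3), sparse)
```

With the same three weights, the dilated filter spans seven input positions instead of three, so it gathers more distant context without adding parameters; the trade-off is that, without padding, fewer output positions remain.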

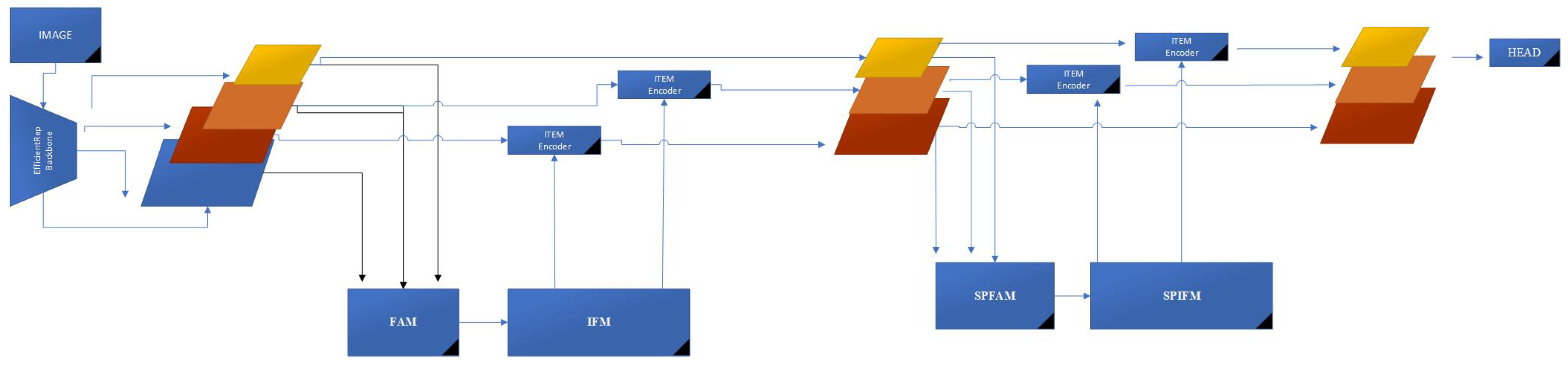

3.1. AYOLO Architecture

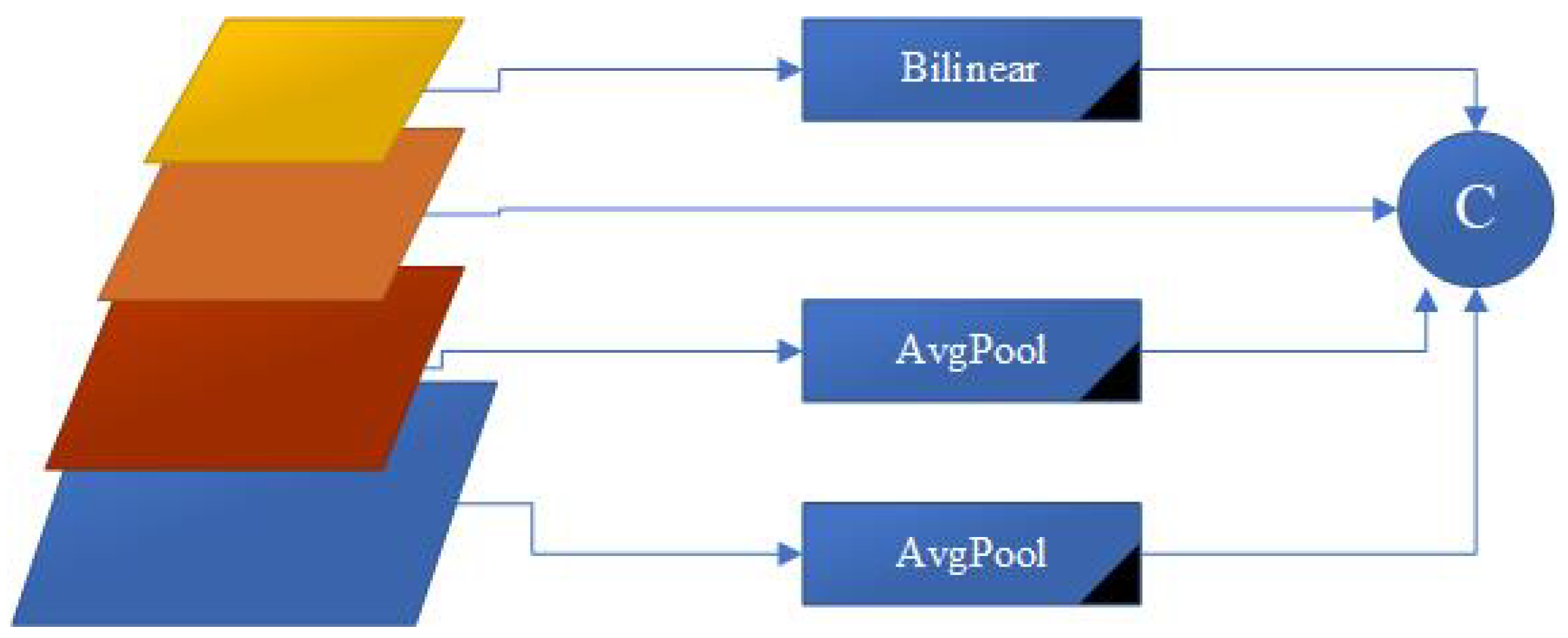

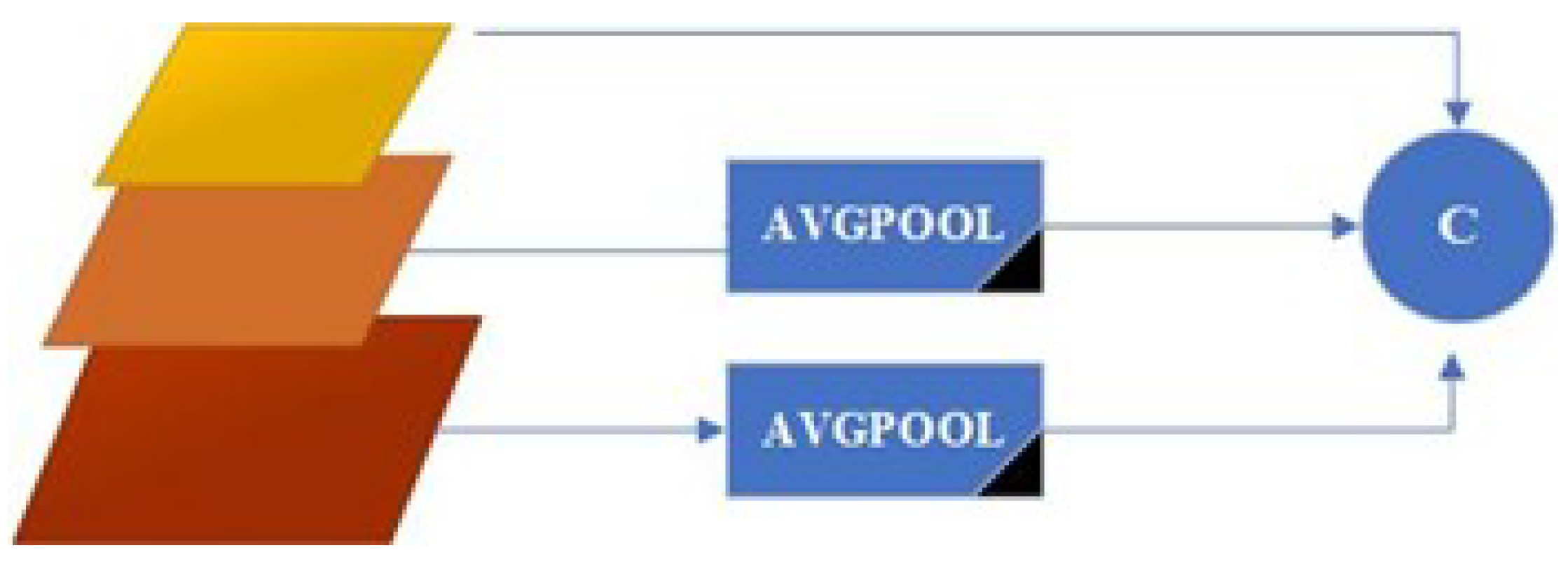

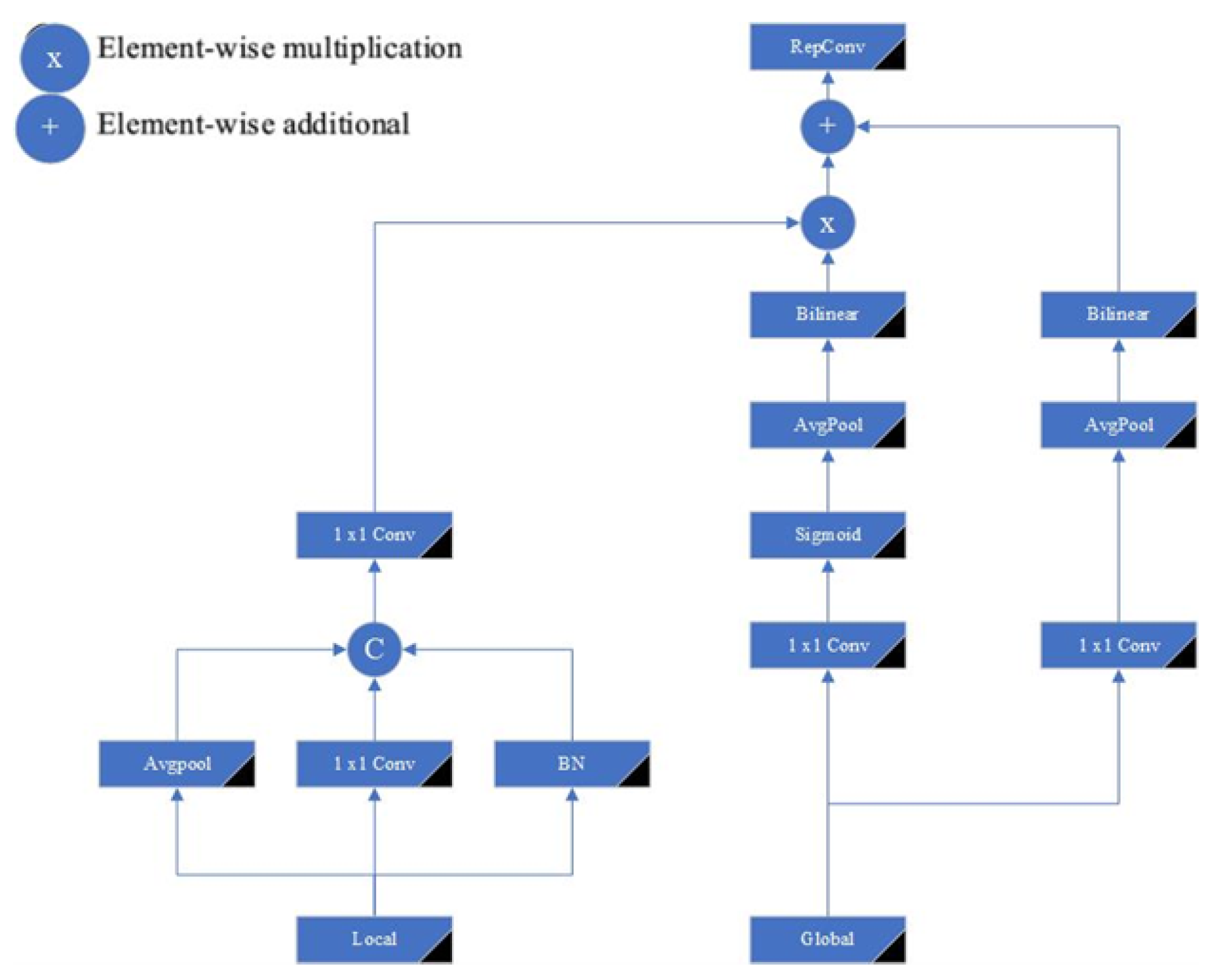

3.2. Feature Alignment Module (FAM)

3.3. Information Fusion Module (IFM)

3.4. Feature Aggregation and Alignment Module Second Pyramid

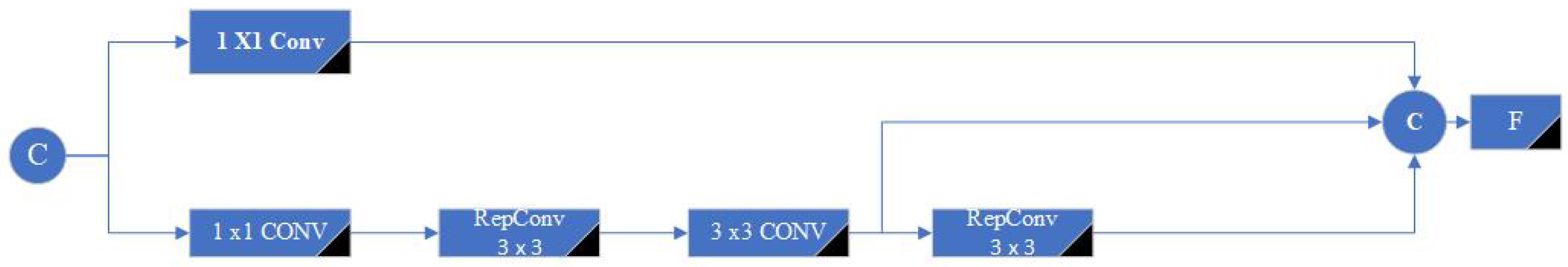

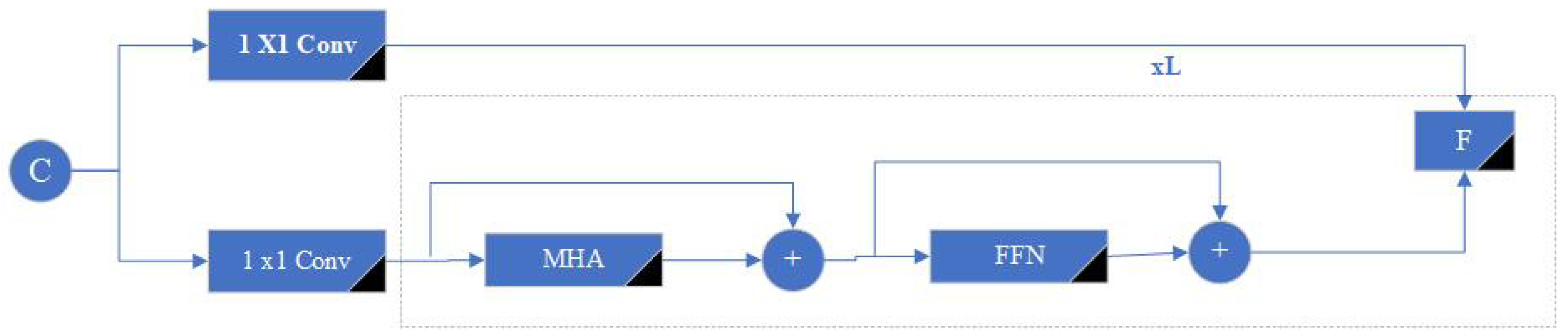

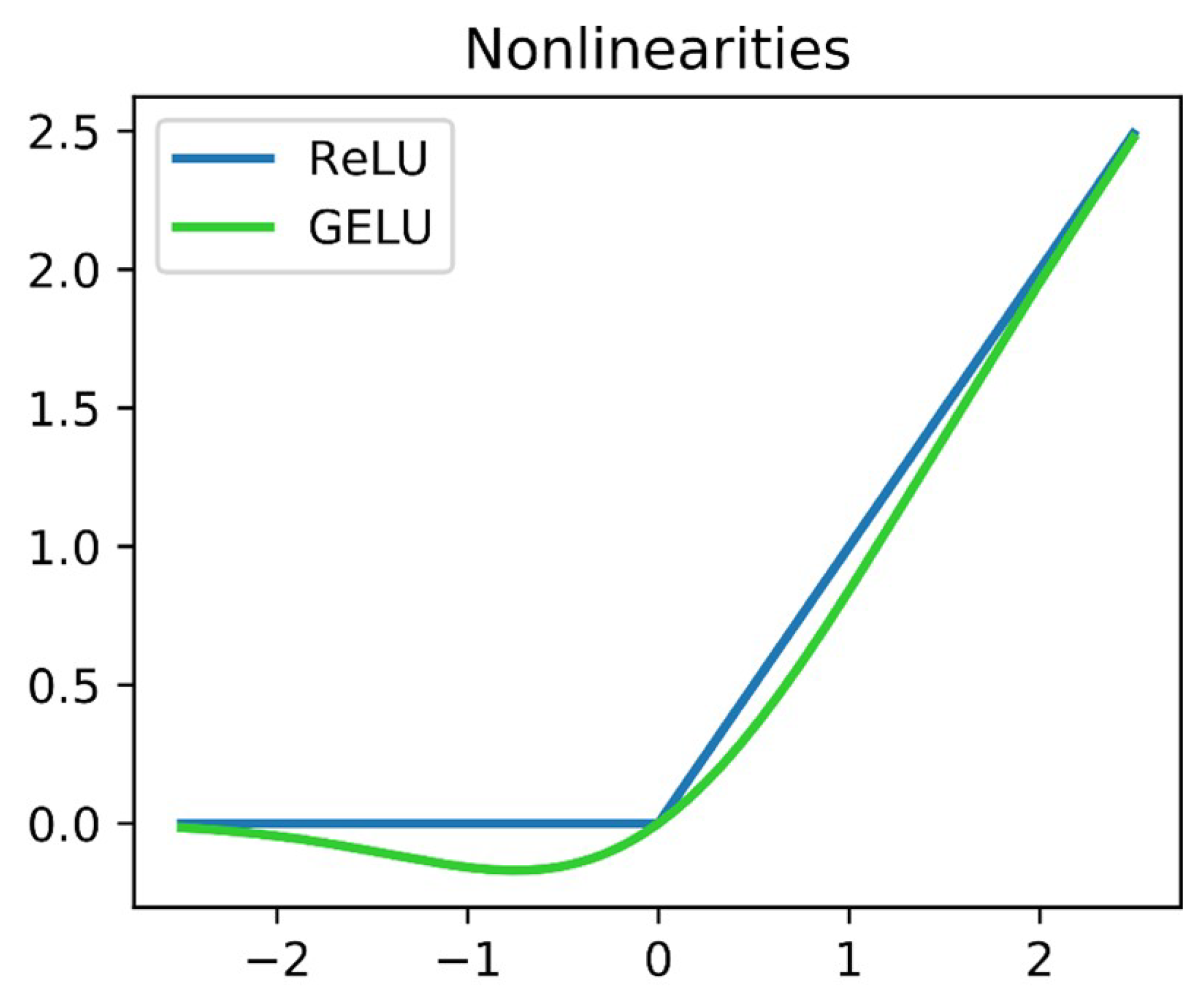

3.5. Information Transformer Encoder Module (ITEM)

4. Experiments

4.1. Technology Comparison

5. Discussion and Conclusion

| Methods | AP50 (%)↑ | AP95 (%)↑ | APs (%)↑ | APm (%)↑ | APl (%)↑ | Params (M)↓ | FLOPs (G)↓ |
|---|---|---|---|---|---|---|---|
| DETR | 79.9 | 4.60 | 14.7 | 34.4 | 61.8 | 39.37 | 86 |
| YOLOv5s | 79.6 | 46.3 | 17.6 | 37.2 | 58.3 | 6.72 | 15.9 |
| YOLOv5m | 80.9 | 47.3 | 17.4 | 38.2 | 62.0 | 19.94 | 48 |
| DAMO-YOLOs | 81.6 | 48.7 | 19.0 | 40.8 | 57.5 | 16.3 | 37.8 |
| DAMO-YOLOm | 81.8 | 50.1 | 18.7 | 40.3 | 61.8 | 28.2 | 61.8 |
| YOLOv6m | 81.0 | 49.3 | 20.3 | 39.7 | 62.7 | 34.86 | 103.4 |
| YOLOv6x | 83.0 | 49.2 | 21.6 | 39.3 | 62.6 | 67.62 | 188.3 |
| YOLOv8n | 80.3 | 47.7 | 15.8 | 37.8 | 62.7 | 3.01 | 8.1 |
| YOLOv8s | 81.8 | 49.2 | 18.7 | 38.7 | 62.5 | 11.13 | 28.5 |
| YOLOv8m | 82.2 | 49.9 | 18.3 | 39.9 | 63.0 | 25.85 | 78.7 |
| AYOLO | 83.2 | 49.4 | 21.7 | 39.8 | 63.1 | 32.8 | 89.6 |

| Method | Input Size | FPS | Latency |
|---|---|---|---|
| YOLOv5-M | 640 | 235 | 4.9 ms |
| YOLOX-M | 640 | 204 | 5.3 ms |
| PPYOLOE-M | 640 | 210 | 6.1 ms |
| YOLOv7 | 640 | 135 | 7.6 ms |
| YOLOv6-3.0-M | 640 | 238 | 5.6 ms |
| YOLOv8 | 640 | 236 | 7 ms |
| AYOLO-M | 640 | 239 | 6.1 ms |

| Architecture | Input Size | FPS | Latency | Average Precision |
|---|---|---|---|---|
| AYOLO-S | 416 x 416 | 300 | 4.9 ms | 0.85 |
| AYOLO-M | 640 x 640 | 239 | 6.1 ms | 0.88 |
| AYOLO-L | 800 x 800 | 177 | 7.3 ms | 0.90 |
| AYOLO-XL | 1024 x 1024 | 118 | 9.6 ms | 0.92 |

| Normalization Technique | Accuracy (%) | mAP (%) | Inference Time (ms) | Parameters (Million) | FLOPs (Billion) |
|---|---|---|---|---|---|
| BatchNorm | 92.3 | 94.1 | 25 | 8.5 | 45 |
| LayerNorm | 91.8 | 93.7 | 28 | 8.5 | 45 |
| RMSNorm | 92.0 | 93.9 | 27 | 8.5 | 45 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).