Submitted: 18 June 2024

Posted: 19 June 2024


Abstract

Keywords:

1. Introduction

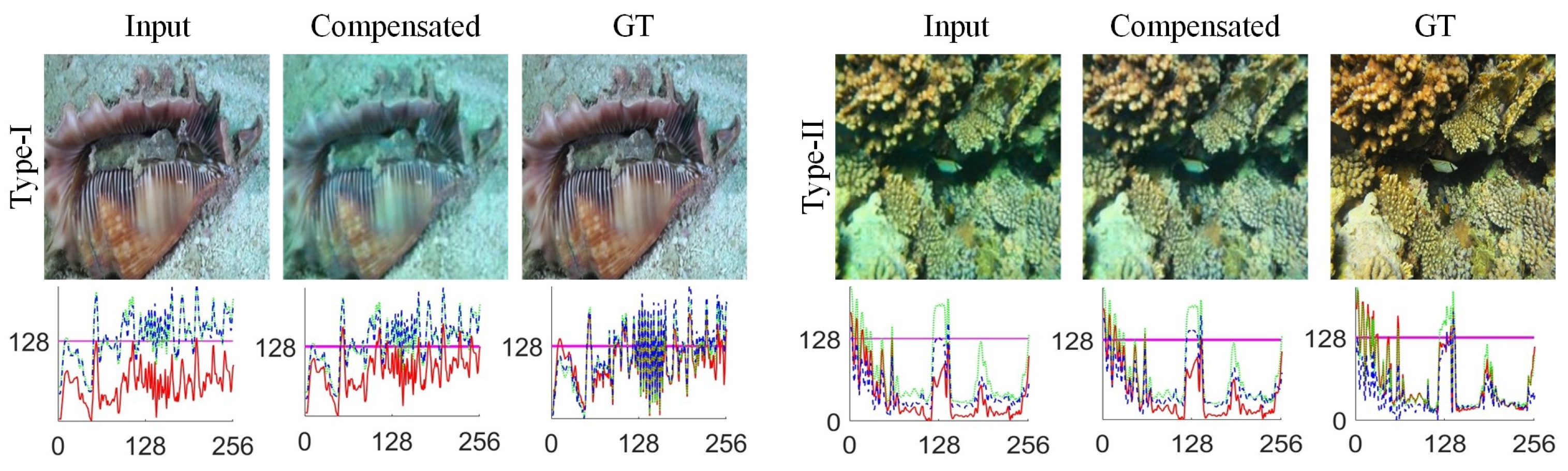

2. Motivation

3. Proposed Method

3.1. Image Classifier

3.2. Deep Line Model

3.3. Deep Curve Model

4. Results and Discussion

4.1. Implementation Details

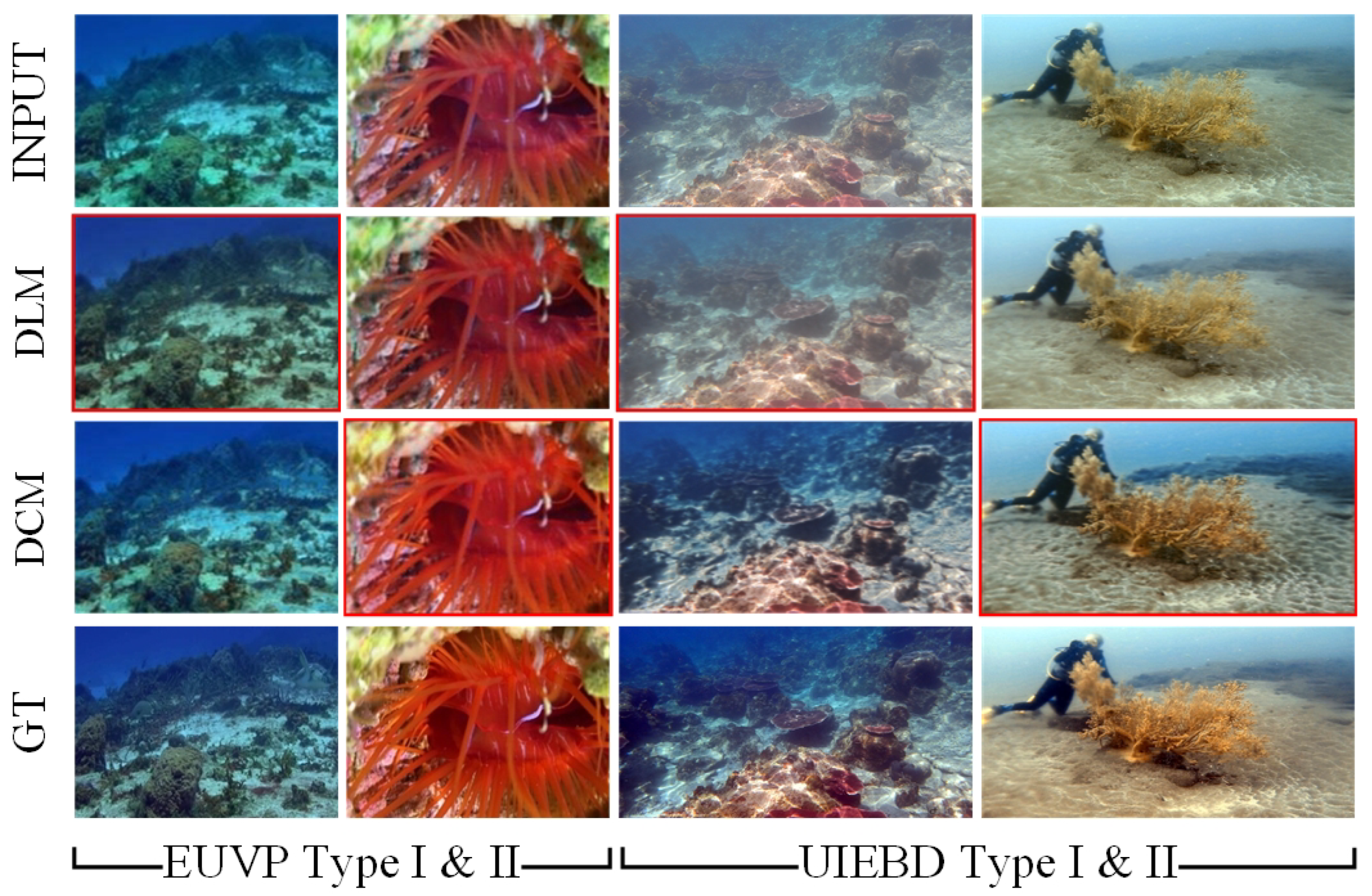

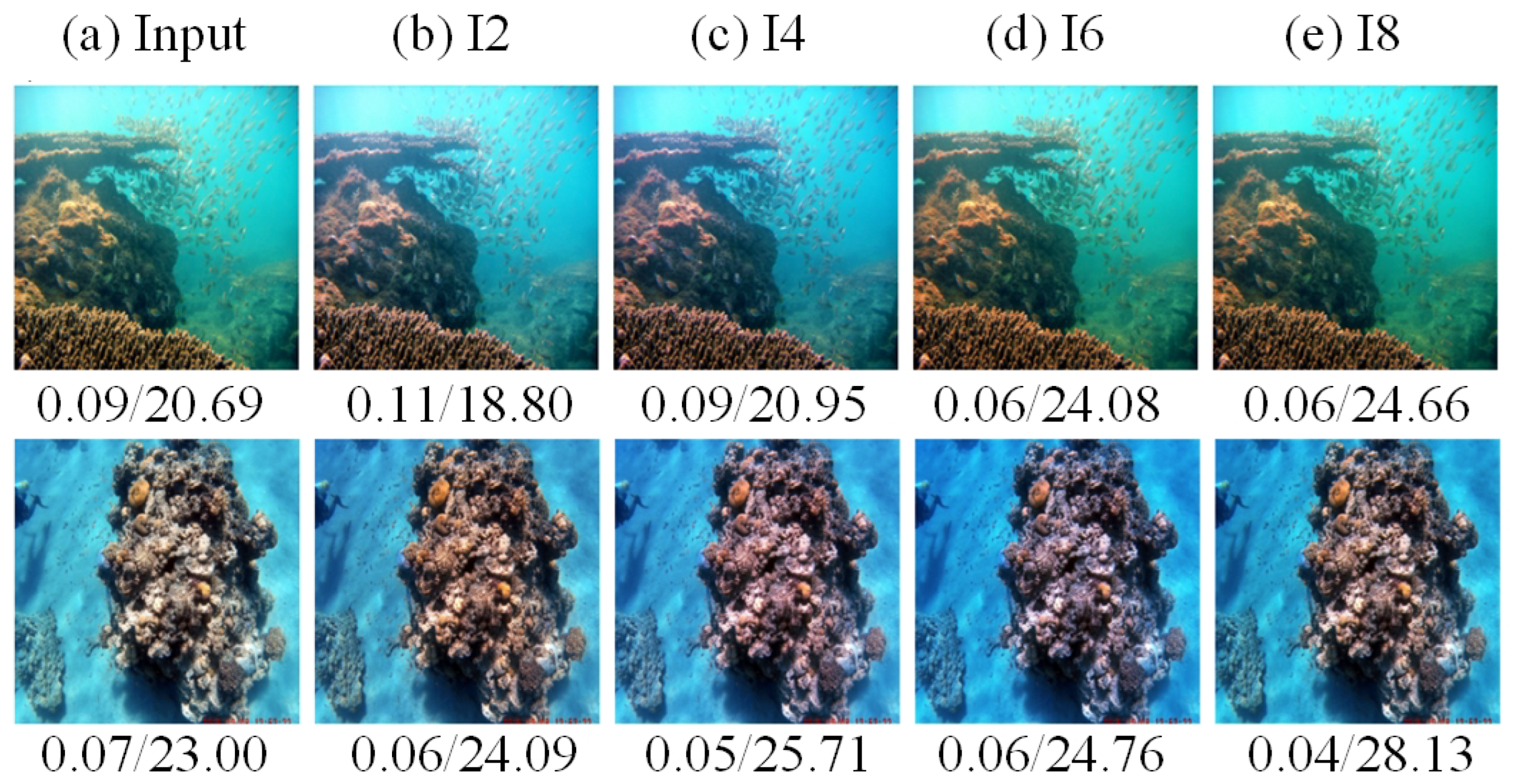

4.2. Analysis

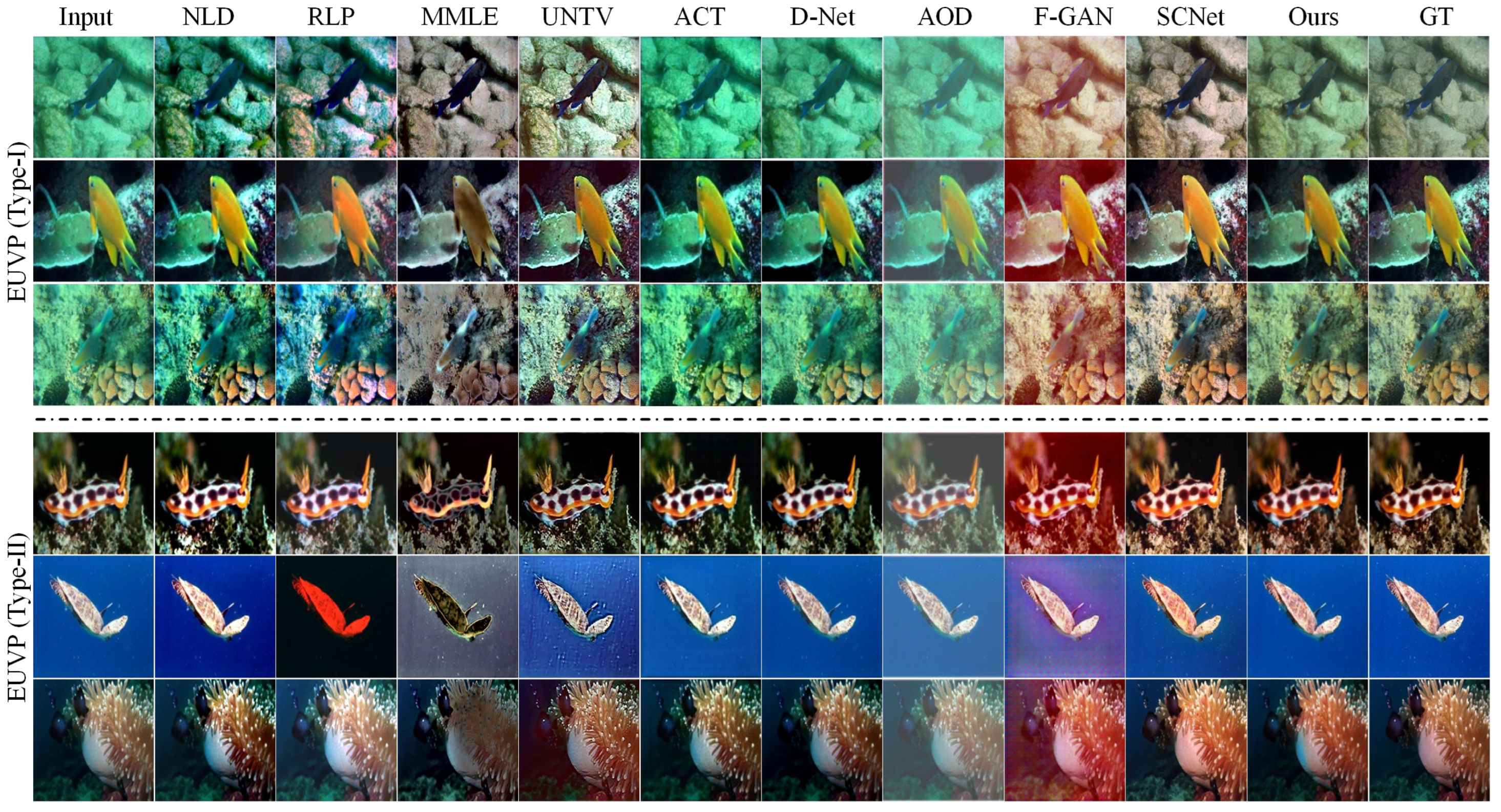

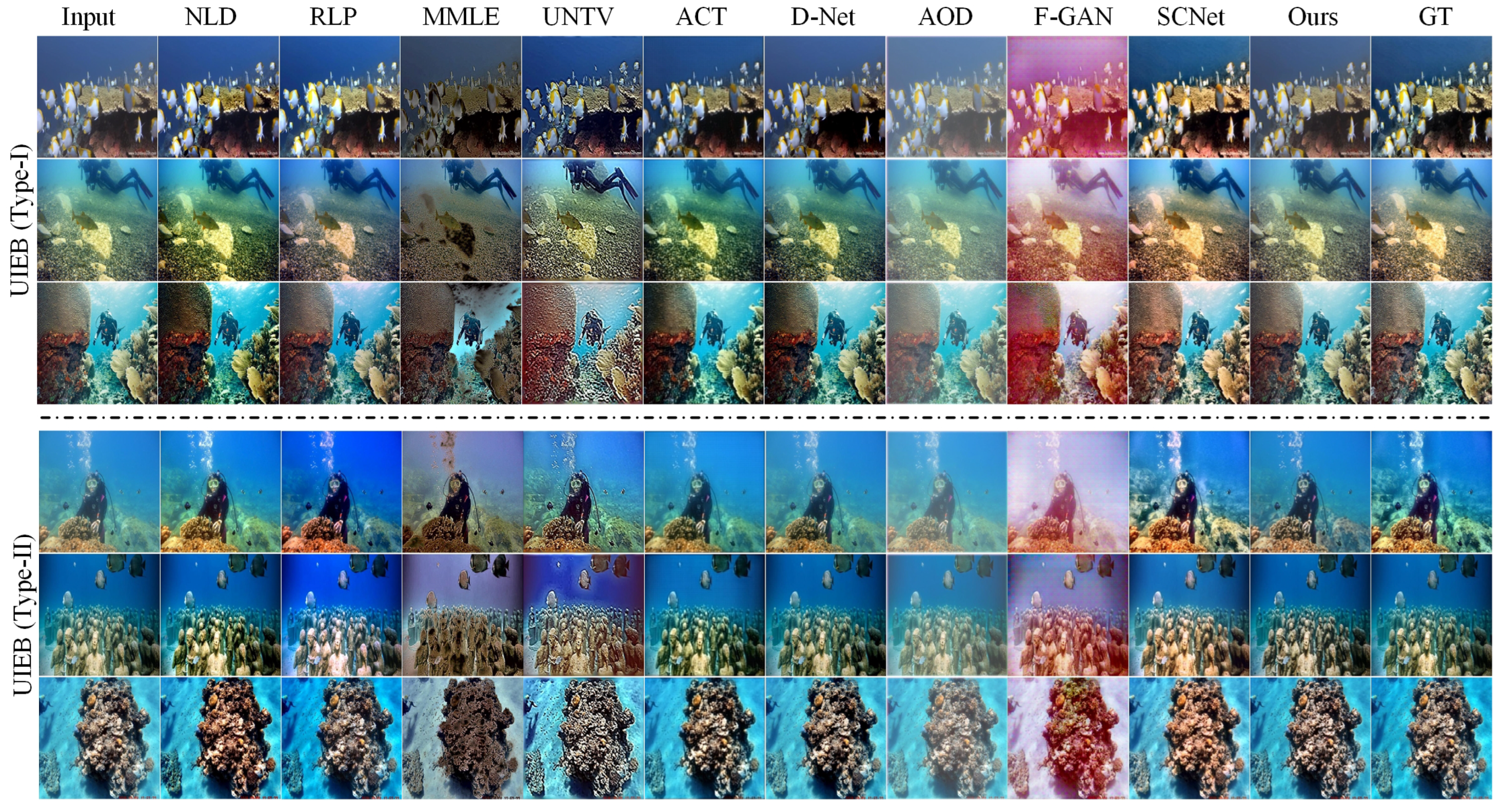

4.3. Comparative Analysis

Non-learning-based methods: NLD, RLP, MMLE, UNTV. Learning-based methods: ACT, D-Net, AOD, F-GAN, SCNet.

| Dataset | Type | Input | NLD | RLP | MMLE | UNTV | ACT | D-Net | AOD | F-GAN | SCNet | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EUVP | Type-I | 0.11/20.00 | 0.15/17.00 | 0.15/17.30 | 0.18/15.30 | 0.14/17.40 | 0.84/17.50 | 0.12/19.10 | 0.78/15.10 | 0.73/13.70 | 0.10/20.00 | 0.08/22.30 |
| EUVP | Type-II | 0.08/22.00 | 0.12/18.40 | 0.15/16.90 | 0.17/15.60 | 0.11/19.20 | 0.93/20.60 | 0.10/20.10 | 0.87/13.30 | 0.84/15.50 | 0.11/19.70 | 0.06/25.00 |
| UIEBD | Type-I | 0.15/17.60 | 0.18/15.30 | 0.14/17.50 | 0.21/14.30 | 0.16/16.20 | 0.81/15.50 | 0.17/15.90 | 0.75/15.10 | 0.70/12.70 | 0.07/23.60 | 0.11/20.10 |
| UIEBD | Type-II | 0.14/18.60 | 0.12/18.70 | 0.11/19.20 | 0.24/12.60 | 0.17/15.60 | 0.92/19.70 | 0.14/17.80 | 0.86/13.10 | 0.53/9.99 | 0.10/20.70 | 0.08/22.20 |

Non-learning-based methods: NLD, RLP, MMLE, UNTV. Learning-based methods: ACT, D-Net, AOD, F-GAN, SCNet.

| Dataset | Type | Image | Input | NLD | RLP | MMLE | UNTV | ACT | D-Net | AOD | F-GAN | SCNet | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| EUVP | Type-I | test_p84_ | 0.11/19.30 | 0.16/15.70 | 0.20/15.10 | 0.14/17.33 | 0.13/17.52 | 0.14/17.00 | 0.11/18.90 | 0.18/15.16 | 0.17/15.50 | 0.17/15.62 | 0.05/26.70 |
| EUVP | Type-I | test_p404_ | 0.14/17.20 | 0.18/15.00 | 0.20/16.00 | 0.11/18.79 | 0.16/15.96 | 0.18/14.99 | 0.14/17.10 | 0.17/15.24 | 0.19/14.60 | 0.20/13.96 | 0.04/28.30 |
| EUVP | Type-I | test_p510_ | 0.07/22.70 | 0.09/21.10 | 0.10/22.20 | 0.13/17.48 | 0.09/20.77 | 0.10/20.11 | 0.10/20.40 | 0.21/13.73 | 0.21/13.60 | 0.13/17.55 | 0.06/24.50 |
| EUVP | Type-II | test_p171_ | 0.07/23.70 | 0.09/20.70 | 0.10/19.60 | 0.17/15.61 | 0.10/20.38 | 0.08/22.04 | 0.10/19.90 | 0.25/12.13 | 0.17/15.20 | 0.10/20.22 | 0.04/27.70 |
| EUVP | Type-II | test_p255_ | 0.05/26.50 | 0.11/18.90 | 0.40/8.45 | 0.30/10.45 | 0.12/18.61 | 0.06/24.88 | 0.05/26.40 | 0.16/15.73 | 0.21/13.50 | 0.06/24.27 | 0.03/29.90 |
| EUVP | Type-II | test_p327_ | 0.07/23.70 | 0.10/20.20 | 0.20/16.20 | 0.16/15.68 | 0.10/20.24 | 0.09/21.18 | 0.09/20.50 | 0.21/13.44 | 0.18/15.00 | 0.11/18.99 | 0.05/26.10 |
| UIEBD | Type-I | 375_img_ | 0.09/20.80 | 0.11/19.50 | 0.14/16.80 | 0.15/16.54 | 0.10/20.10 | 0.11/19.40 | 0.11/19.30 | 0.18/15.01 | 0.17/15.40 | 0.17/15.57 | 0.07/22.90 |
| UIEBD | Type-I | 495_img_ | 0.14/18.10 | 0.20/15.40 | 0.20/14.50 | 0.18/15.11 | 0.14/17.51 | 0.19/14.62 | 0.14/17.20 | 0.20/13.94 | 0.20/14.00 | 0.20/13.80 | 0.04/27.00 |
| UIEBD | Type-I | 619_img_ | 0.07/22.70 | 0.09/21.10 | 0.10/22.20 | 0.13/17.49 | 0.09/20.78 | 0.10/20.12 | 0.10/20.41 | 0.21/13.74 | 0.21/13.61 | 0.13/17.56 | 0.06/24.51 |
| UIEBD | Type-II | 746_img_ | 0.07/23.70 | 0.09/20.70 | 0.10/19.60 | 0.17/15.62 | 0.10/20.39 | 0.08/22.05 | 0.10/19.91 | 0.25/12.14 | 0.17/15.21 | 0.10/20.23 | 0.04/27.71 |
| UIEBD | Type-II | 845_img_ | 0.05/26.50 | 0.11/18.90 | 0.40/8.46 | 0.30/10.46 | 0.12/18.62 | 0.06/24.89 | 0.05/26.41 | 0.16/15.74 | 0.21/13.51 | 0.06/24.28 | 0.03/29.91 |
| UIEBD | Type-II | 967_img_ | 0.07/23.70 | 0.10/20.20 | 0.20/16.21 | 0.16/15.69 | 0.10/20.25 | 0.09/21.19 | 0.09/20.51 | 0.21/13.45 | 0.18/15.01 | 0.11/19.00 | 0.05/26.11 |

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| DCP | Dark Channel Prior |
| DCM | Deep Curve Model |
| DLM | Deep Line Model |
| IFM | Image Formation Model |
| MCP | Medium Channel Prior |
| PSNR | Peak Signal-to-Noise Ratio |
| RCP | Red Channel Prior |
| RMSE | Root Mean Square Error |
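The abbreviations above include RMSE and PSNR, two standard full-reference image-quality metrics. A minimal sketch of both follows, assuming images stored as floating-point arrays scaled to [0, 1], so the peak pixel value is 1. The function names `rmse` and `psnr` and the synthetic test images are illustrative assumptions, not the authors' evaluation code; this section does not state the exact evaluation protocol (colour space, averaging over images).

```python
import numpy as np


def rmse(reference: np.ndarray, estimate: np.ndarray) -> float:
    """Root mean square error over all pixels and channels."""
    ref = reference.astype(np.float64)
    est = estimate.astype(np.float64)
    return float(np.sqrt(np.mean((ref - est) ** 2)))


def psnr(reference: np.ndarray, estimate: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; max_value is the largest possible pixel value
    (assumed 1.0 for images scaled to [0, 1], 255.0 for 8-bit images)."""
    err = rmse(reference, estimate)
    if err == 0.0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return float(20.0 * np.log10(max_value / err))


if __name__ == "__main__":
    # Synthetic example: a random "clean" image and a noisy copy clipped back to [0, 1].
    rng = np.random.default_rng(0)
    clean = rng.random((64, 64, 3))
    noisy = np.clip(clean + 0.1 * rng.standard_normal(clean.shape), 0.0, 1.0)
    print(f"RMSE = {rmse(clean, noisy):.3f}, PSNR = {psnr(clean, noisy):.2f} dB")
```

With a peak value of 1, PSNR reduces to −20 log10(RMSE), so for the same image pair a lower RMSE always corresponds to a higher PSNR; for 8-bit images, pass `max_value=255.0`.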

References

- Akkaynak, D.; Treibitz, T.; Shlesinger, T.; Loya, Y.; Tamir, R.; Iluz, D. What is the space of attenuation coefficients in underwater computer vision? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2017; pp. 4931–4940.

- Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Transactions on Image Processing 2017, 26, 1579–1594.

- Raveendran, S.; Patil, M.D.; Birajdar, G.K. Underwater image enhancement: a comprehensive review, recent trends, challenges and applications. Artificial Intelligence Review 2021, 54, 5413–5467.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Transactions on Pattern Analysis and Machine Intelligence 2010, 33, 2341–2353.

- Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic Red-Channel underwater image restoration. Journal of Visual Communication and Image Representation 2015, 26, 132–145.

- Gibson, K.B.; Vo, D.T.; Nguyen, T.Q. An investigation of dehazing effects on image and video coding. IEEE Transactions on Image Processing 2011, 21, 662–673.

- Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Transactions on Pattern Analysis and Machine Intelligence 2020, 43, 2822–2837.

- Xie, J.; Hou, G.; Wang, G.; Pan, Z. A Variational Framework for Underwater Image Dehazing and Deblurring. IEEE Transactions on Circuits and Systems for Video Technology 2022, 32, 3514–3526.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Bekaert, P. Color Balance and Fusion for Underwater Image Enhancement. IEEE Transactions on Image Processing 2018, 27, 379–393.

- Zhang, W.; Zhuang, P.; Sun, H.H.; Li, G.; Kwong, S.; Li, C. Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Transactions on Image Processing 2022, 31, 3997–4010.

- Zhang, W.; Dong, L.; Xu, W. Retinex-inspired color correction and detail preserved fusion for underwater image enhancement. Computers and Electronics in Agriculture 2022, 192, 106585.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An End-to-End System for Single Image Haze Removal. IEEE Transactions on Image Processing 2016, 25, 5187–5198.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part II; Springer, 2016; pp. 154–169.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-One Dehazing Network. In Proceedings of the IEEE International Conference on Computer Vision; 2017.

- Zhang, H.; Patel, V.M. Densely connected pyramid dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2018; pp. 3194–3203.

- Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.H. Gated fusion network for single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2018; pp. 3253–3261.

- Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated context aggregation network for image dehazing and deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV); IEEE, 2019; pp. 1375–1383.

- Engin, D.; Genç, A.; Kemal Ekenel, H. Cycle-Dehaze: Enhanced CycleGAN for single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops; 2018; pp. 825–833.

- Singh, A.; Bhave, A.; Prasad, D.K. Single image dehazing for a variety of haze scenarios using back projected pyramid network. In Computer Vision–ECCV 2020 Workshops: Glasgow, UK, August 23–28, 2020, Proceedings, Part IV; Springer, 2020; pp. 166–181.

- Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-based multi-scale network for image dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision; 2019; pp. 7314–7323.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence; 2020; Vol. 34, pp. 11908–11915.

- Wu, H.; Liu, J.; Xie, Y.; Qu, Y.; Ma, L. Knowledge transfer dehazing network for nonhomogeneous dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops; 2020; pp. 478–479.

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Transactions on Image Processing 2023, 32, 1927–1941.

- Ancuti, C.O.; Ancuti, C.; De Vleeschouwer, C.; Sbert, M. Color Channel Transfer for Image Dehazing. IEEE Signal Processing Letters 2019, 26, 1413–1417.

- Buchsbaum, G. A spatial processor model for object colour perception. Journal of the Franklin Institute 1980, 310, 1–26.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; 2017; pp. 4700–4708.

- Ebner, M. Color Constancy; Vol. 7; John Wiley & Sons, 2007.

- Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition; 2020; pp. 1780–1789.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robotics and Automation Letters 2020, 5, 3227–3234.

- Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Transactions on Image Processing 2020, 29, 4376–4389.

- Ju, M.; Ding, C.; Guo, C.A.; Ren, W.; Tao, D. IDRLP: Image dehazing using region line prior. IEEE Transactions on Image Processing 2021, 30, 9043–9057.

- Yang, H.H.; Fu, Y. Wavelet U-Net and the Chromatic Adaptation Transform for Single Image Dehazing. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP); 2019.

- Islam, M.J.; Xia, Y.; Sattar, J. Fast underwater image enhancement for improved visual perception. IEEE Robotics and Automation Letters 2020, 5, 3227–3234.

- Fu, Z.; Lin, X.; Wang, W.; Huang, Y.; Ding, X. Underwater image enhancement via learning water type desensitized representations. In Proceedings of the 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP); IEEE, 2022; pp. 2764–2768.

| Dataset | Group | Input | DLM | DCM |
|---|---|---|---|---|
| EUVP | Type-I | 0.11/20.00 | 0.93/22.35 | 0.89/20.63 |
| EUVP | Type-II | 0.08/22.00 | 0.97/24.18 | 0.97/25.03 |
| UIEBD | Type-I | 0.15/17.60 | 0.93/20.08 | 0.85/16.37 |
| UIEBD | Type-II | 0.14/18.60 | 0.94/17.81 | 0.95/22.20 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).