Introduction

Computational neuroscience, a field dedicated to understanding the brain through mathematical models and computer simulations, has experienced a remarkable evolution since its inception. Early pioneers, such as Hodgkin and Huxley (1952), laid the groundwork with their groundbreaking model of neural electrical activity. This model, rooted in differential equations, provided a fundamental framework for understanding how neurons generate and transmit electrical signals. Subsequent decades saw the development of increasingly sophisticated models that captured the intricacies of neural networks and their role in various cognitive functions (Hopfield, 1982; Rumelhart & McClelland, 1986).

Unlike economics, where models often rely on idealized assumptions about rational actors and perfect markets, computational neuroscience has always been grounded in the biological reality of the brain. While early models may have been limited by the available data and computational resources, they were nonetheless constrained by the known principles of neurophysiology and anatomy. In contrast, many economic models have been criticized for their failure to accurately predict or explain real-world phenomena, such as financial crises and market crashes. This has led some to question the validity and usefulness of economic models in general. The 2008 financial crisis, for example, exposed the limitations of models that assumed rational behavior and efficient markets.

Despite the early limits on data and computing resources, computational neuroscience has continued to evolve and mature. The advent of modern neuroimaging techniques, such as fMRI (Ogawa et al., 1990) and EEG (Niedermeyer & Lopes da Silva, 2005), coupled with advancements in electrophysiology (Buzsáki et al., 2012) and molecular biology, has ushered in a new era of data-driven computational neuroscience.

The abundance of neural data, ranging from the activity of individual neurons to the dynamics of large-scale brain networks, has presented both challenges and opportunities for the field. The challenge lies in developing computational models that can effectively capture the complexity and variability of neural data. The opportunity lies in the potential to develop more accurate, biologically plausible, and predictive models that can unlock the mysteries of the brain and pave the way for new treatments for neurological disorders.

This article explores the evolving landscape of computational neuroscience, highlighting the shift towards data-driven and integrative approaches. We delve into the history of the field, tracing its origins from early theoretical models to the present day, where data-driven approaches are increasingly prevalent. We discuss the key challenges and opportunities presented by the abundance of neural data and examine how computational neuroscientists are leveraging machine learning (Glaser et al., 2019), statistics, and other data science techniques to develop more sophisticated models. Finally, we explore the implications of this shift for future research and clinical applications, emphasizing the potential for data-driven computational neuroscience to revolutionize our understanding of the brain and its disorders.

Methodology: The Modern Toolbox of Computational Neuroscience

The methodological landscape of computational neuroscience has evolved significantly in recent years, driven by the influx of neural data and advancements in data science and machine learning. Today, computational neuroscientists employ a diverse array of tools and techniques to analyze, interpret, and model neural data.

Data Acquisition and Preprocessing

Neuroimaging: Techniques such as fMRI, EEG, and MEG provide rich datasets of brain activity at various spatial and temporal scales. Preprocessing techniques, such as artifact removal, normalization, and feature extraction, are essential for preparing these datasets for further analysis.
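The three preprocessing steps named above can be illustrated with a minimal pure-Python sketch. It assumes each epoch is a list of voltage samples from a single EEG channel, uses a simple peak-to-peak amplitude threshold as a stand-in for artifact removal, takes the variance of each epoch (its power around the mean) as a toy feature, and z-scores those features across epochs as the normalization step. The function names and the threshold are illustrative assumptions, not a standard pipeline; dedicated toolboxes such as MNE-Python implement far more complete versions.

```python
from statistics import mean, pstdev, pvariance


def reject_artifacts(epochs, max_peak_to_peak):
    """Keep epochs whose peak-to-peak amplitude stays below a threshold.

    A crude amplitude criterion used here only for illustration.
    """
    return [ep for ep in epochs if max(ep) - min(ep) < max_peak_to_peak]


def zscore(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu, sd = mean(values), pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sd for v in values]


def preprocess(epochs, max_peak_to_peak):
    """Artifact rejection, then feature extraction, then normalization."""
    clean = reject_artifacts(epochs, max_peak_to_peak)
    features = [pvariance(ep) for ep in clean]  # signal power around each epoch's mean
    if not features:
        return []
    return zscore(features)


# Hypothetical epochs (microvolts); the third contains a large artifact.
epochs = [[0, 1, 0, -1], [0, 2, 0, -2], [0, 50, 0, -50]]
print(preprocess(epochs, max_peak_to_peak=10))
```

The artifact-laden epoch is discarded before features are computed, so it cannot distort the normalization of the remaining epochs.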

Electrophysiology: Single-unit recordings, local field potentials (LFPs), and multielectrode arrays capture electrical activity from individual neurons and populations of neurons. Spike sorting and signal processing techniques are used to extract meaningful information from these recordings.
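Spike detection, the first stage of spike sorting, is often done by thresholding a band-pass-filtered extracellular trace. The sketch below assumes the trace is already filtered and that spikes are negative-going. It uses the robust noise estimate median(|x|)/0.6745, a common choice in spike-sorting software; the multiplier `k` and the `dead_time` (in samples) are illustrative values. Sorting the detected waveforms into putative neurons, usually by clustering their features, is not shown.

```python
from statistics import median


def detect_spikes(trace, k=4.0, dead_time=3):
    """Return sample indices where a filtered trace crosses a negative threshold.

    Noise is estimated as median(|x|) / 0.6745, a robust estimate of the
    standard deviation that is less inflated by the spikes themselves.
    Crossings within dead_time samples of the previous spike are ignored.
    """
    sigma = median(abs(x) for x in trace) / 0.6745
    threshold = -k * sigma
    spikes = []
    last_spike = -dead_time - 1
    for i in range(1, len(trace)):
        crossed = trace[i] < threshold <= trace[i - 1]  # downward crossing only
        if crossed and i - last_spike > dead_time:
            spikes.append(i)
            last_spike = i
    return spikes


# Hypothetical filtered trace with two large negative deflections.
trace = [0.1, -0.1, 0.2, -0.2, 0.1, -10.0, -8.0, 0.1, -0.1, 0.2, -12.0, 0.1, -0.1, 0.1]
print(detect_spikes(trace))
```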

Molecular and Cellular Data: Gene expression profiles, protein interactions, and cellular morphology data provide insights into the molecular and cellular underpinnings of neural function. Bioinformatics and computational biology tools are used to analyze and integrate these datasets.

Data Analysis and Modeling

Statistical Modeling: Classical statistical techniques, such as regression, ANOVA, and time series analysis, are used to identify correlations between neural variables and, combined with appropriate experimental designs, to test causal hypotheses about them.
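As a minimal illustration of the regression case, the sketch below fits an ordinary least-squares line relating a stimulus variable to a neuron's firing rate and reports Pearson's correlation coefficient. The variable names and numbers are hypothetical. A fitted slope or correlation quantifies association; on its own it does not show that one variable causes the other.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return my - slope * mx, slope


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical data: stimulus contrast (%) versus firing rate (spikes/s).
contrast = [0, 25, 50, 75, 100]
rate = [2.0, 6.5, 11.0, 14.5, 19.0]
print(fit_line(contrast, rate), pearson_r(contrast, rate))
```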

Machine Learning: Supervised and unsupervised learning algorithms, such as deep neural networks, support vector machines, and clustering methods, are increasingly employed for pattern recognition, classification, and dimensionality reduction in neural data.

Network Analysis: Graph theory and network analysis tools are used to characterize the structure and dynamics of neural networks at various scales, from local circuits to whole-brain connectomes.
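Two of the most basic graph measures, node degree and the local clustering coefficient, can be computed directly from an edge list. In the sketch below the nodes carry visual-area names purely for illustration; the connections are hypothetical and not taken from any connectome. Libraries such as NetworkX provide tested implementations of these and many other network measures.

```python
from itertools import combinations


def build_graph(edges):
    """Undirected graph stored as {node: set of neighbors}."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph


def degree(graph):
    """Number of connections of each node."""
    return {node: len(neighbors) for node, neighbors in graph.items()}


def clustering_coefficient(graph):
    """Fraction of each node's neighbor pairs that are themselves connected."""
    result = {}
    for node, neighbors in graph.items():
        k = len(neighbors)
        if k < 2:
            result[node] = 0.0
            continue
        linked = sum(1 for a, b in combinations(neighbors, 2) if b in graph[a])
        result[node] = linked / (k * (k - 1) / 2)
    return result


# Hypothetical connections between four regions.
g = build_graph([("V1", "V2"), ("V2", "MT"), ("V1", "MT"), ("MT", "LIP")])
print(degree(g))
print(clustering_coefficient(g))
```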

Dynamical Systems Modeling: Differential equations and other mathematical tools are used to model the dynamic behavior of neural systems and predict their responses to various inputs.
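A classic small example is the leaky integrate-and-fire (LIF) neuron, a much-simplified relative of the Hodgkin-Huxley model, integrated with the forward Euler method. The membrane potential obeys tau dV/dt = -(V - V_rest) + R I, and the voltage is reset whenever it reaches threshold. The parameter values below are illustrative, order-of-magnitude choices rather than values fitted to any particular cell.

```python
def simulate_lif(current, t_max=0.5, dt=1e-4, tau=0.02, v_rest=-0.070,
                 v_reset=-0.070, v_threshold=-0.050, resistance=1e7):
    """Forward-Euler simulation of a leaky integrate-and-fire neuron.

    Integrates tau * dV/dt = -(V - v_rest) + resistance * current for a constant
    input current and returns spike times in seconds.
    Units: seconds, volts, ohms, amperes.
    """
    v = v_rest
    spike_times = []
    for step in range(int(round(t_max / dt))):
        dv_dt = (-(v - v_rest) + resistance * current) / tau
        v += dt * dv_dt
        if v >= v_threshold:  # threshold reached: record a spike and reset
            spike_times.append((step + 1) * dt)
            v = v_reset
    return spike_times


# Spike counts in 0.5 s for a weak and a stronger constant input.
print(len(simulate_lif(1e-9)), len(simulate_lif(3e-9)))
```

With these defaults, a constant 1 nA input drives the membrane toward -60 mV, below the -50 mV threshold, so the model neuron stays silent, whereas 3 nA pushes the steady-state voltage above threshold and produces regular firing.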

Model Validation and Simulation

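Cross-Validation: Models are validated by testing their predictions on data not used to fit them, for example by repeatedly splitting a dataset into training and held-out test folds, or by comparing predictions with independent datasets or experimental results.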
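A minimal k-fold cross-validation loop might look like the sketch below. The `fit` and `predict` callables are hypothetical placeholders for whatever model is being evaluated; here they are filled in with a deliberately trivial model that always predicts the training mean. Contiguous folds are used as an assumption suited to temporally correlated recordings; shuffled folds are a common alternative when trials are independent.

```python
def kfold_indices(n_samples, k):
    """Yield (train, test) index lists; every sample lands in exactly one test fold.

    Folds are contiguous blocks, which reduces leakage between neighboring,
    temporally correlated samples.
    """
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def cross_validated_mse(x, y, fit, predict, k=5):
    """Average squared prediction error on held-out folds."""
    errors = []
    for train, test in kfold_indices(len(x), k):
        model = fit([x[i] for i in train], [y[i] for i in train])
        errors.extend((predict(model, x[i]) - y[i]) ** 2 for i in test)
    return sum(errors) / len(errors)


# Hypothetical baseline model: always predict the mean of the training targets.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def predict_mean(model, x_value):
    return model


print(cross_validated_mse([0, 1, 2, 3, 4, 5], [1.0, 2.0, 1.0, 2.0, 1.0, 2.0],
                          fit_mean, predict_mean, k=3))
```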

Simulation: Computational models are simulated to test their behavior under various conditions and explore the potential consequences of different parameter settings.
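A parameter sweep can be sketched with a single-population firing-rate model, tau dr/dt = -r + f(w r + I), where f is a sigmoid and w is the strength of recurrent excitation. This simplified Wilson-Cowan-style model is an assumption chosen for brevity, and all parameter values are hypothetical. The sketch simulates the model once per value of w, starting from rest, and reports the rate it reaches; in this toy model, stronger recurrence raises that rate and large enough values of w push the population into a high-activity state.

```python
import math


def sigmoid(x, threshold=2.0):
    """Saturating transfer function mapping input drive to a normalized rate in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-(x - threshold)))


def steady_state_rate(w, external_input=0.0, tau=0.01, dt=1e-4, t_max=1.0):
    """Integrate tau * dr/dt = -r + f(w * r + I) from r = 0 and return the final rate."""
    r = 0.0
    for _ in range(int(round(t_max / dt))):
        r += (dt / tau) * (-r + sigmoid(w * r + external_input))
    return r


def sweep_recurrent_weight(weights, **kwargs):
    """Run one simulation per recurrent weight and collect the resulting rates."""
    return {w: steady_state_rate(w, **kwargs) for w in weights}


for w, rate in sweep_recurrent_weight([0.0, 2.0, 4.0, 6.0]).items():
    print(f"w = {w:.1f}: rate = {rate:.3f}")
```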

Parameter Optimization: Optimization methods such as gradient descent and genetic algorithms are used to tune model parameters so that the model best fits the available data.
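The gradient-descent idea can be shown on a deliberately simple problem: fitting a hypothetical linear input-output relation, rate ≈ gain × input + bias, by repeatedly stepping both parameters against the gradient of the mean squared error. This particular model also has a closed-form least-squares solution; gradient descent is shown because the same loop carries over to models that have none. The learning rate and iteration count are illustrative, and a learning rate that is too large makes the updates diverge.

```python
def fit_gain_bias(x, y, learning_rate=0.05, n_iter=2000):
    """Fit y ≈ gain * x + bias by gradient descent on the mean squared error."""
    gain, bias = 0.0, 0.0
    n = len(x)
    for _ in range(n_iter):
        residuals = [gain * xi + bias - yi for xi, yi in zip(x, y)]
        # Partial derivatives of mean((gain * x + bias - y) ** 2)
        grad_gain = 2.0 / n * sum(r * xi for r, xi in zip(residuals, x))
        grad_bias = 2.0 / n * sum(residuals)
        gain -= learning_rate * grad_gain
        bias -= learning_rate * grad_bias
    return gain, bias


# Hypothetical input currents (arbitrary units) and measured rates.
print(fit_gain_bias([0, 1, 2, 3], [2.0, 2.5, 3.0, 3.5]))
```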

Integrative Approaches

Multimodal Data Integration: Combining data from multiple modalities, such as neuroimaging and electrophysiology, can provide a more comprehensive view of neural function.

Data-Driven Model Development: Using machine learning and statistical techniques to inform and constrain model development can lead to more accurate and biologically plausible models.

Hybrid Modeling: Combining data-driven and theoretical approaches can leverage the strengths of both methods to gain deeper insights into neural function.

This diverse toolbox of computational neuroscience is constantly evolving, with new techniques and approaches emerging as technology advances and our understanding of the brain deepens. The integration of data science, machine learning, and theoretical modeling is paving the way for a new era of computational neuroscience, one that promises to unlock the mysteries of the brain and transform our understanding of neural function.

A Deeper Dive into Modern Computational Neuroscience Methods

The evolving landscape of computational neuroscience is characterized by a growing emphasis on data-driven approaches, leveraging a powerful arsenal of tools from machine learning, statistics, and network science. The following subsections take a closer look at some of the key methods shaping the field today.

Machine Learning in Neuroscience

Machine learning, a subfield of artificial intelligence, focuses on developing algorithms that can learn from and make predictions on data. In neuroscience, machine learning techniques are being increasingly used to analyze large-scale neural datasets, extract meaningful patterns, and build predictive models of neural activity.

Supervised Learning: This approach involves training a model on labeled data, where the input is a set of neural features and the output is a known label or category. For example, a supervised learning algorithm can be trained to classify EEG patterns associated with different cognitive states (e.g., attention, memory).
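A deliberately simple supervised classifier, a nearest-centroid rule, is enough to show the train-then-predict pattern. It is swapped in here for illustration only; published EEG studies typically use stronger classifiers. The two-dimensional feature vectors (imagined as normalized power in two frequency bands) and their labels are hypothetical.

```python
from math import dist  # requires Python 3.8+


def fit_centroids(features, labels):
    """Training step: average the feature vectors belonging to each class."""
    sums, counts = {}, {}
    for vec, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, vec):
    """Prediction step: return the label whose centroid is closest (Euclidean)."""
    return min(centroids, key=lambda label: dist(centroids[label], vec))


# Hypothetical labeled EEG feature vectors.
train_x = [[1.0, 0.2], [0.9, 0.1], [0.2, 1.0], [0.1, 0.8]]
train_y = ["attention", "attention", "memory", "memory"]
centroids = fit_centroids(train_x, train_y)
print(classify(centroids, [0.8, 0.3]))
```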

Unsupervised Learning: In this approach, the model is not provided with labeled data and must discover patterns and relationships in the data on its own. Unsupervised learning techniques, such as clustering and dimensionality reduction, can be used to identify distinct neural populations or uncover hidden structures in neural data.
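Clustering is the most common unsupervised example. The sketch below implements Lloyd's k-means algorithm, alternating between assigning each point to its nearest centroid and moving each centroid to the mean of its points. The two-dimensional "waveform features" are hypothetical, and initializing from the first k points is an assumption made for reproducibility; k-means++ seeding or several random restarts are more robust in practice.

```python
from math import dist  # requires Python 3.8+


def kmeans(points, k, n_iter=100):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid updates."""
    centroids = [list(p) for p in points[:k]]
    assignments = None
    for _ in range(n_iter):
        new_assignments = [
            min(range(k), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        if new_assignments == assignments:  # converged: no point changed cluster
            break
        assignments = new_assignments
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # leave an empty cluster's centroid where it is
                centroids[c] = [sum(coord) / len(members) for coord in zip(*members)]
    return assignments, centroids


# Hypothetical 2-D features (e.g., spike amplitude and width) from two putative units.
features = [[0.0, 0.0], [10.0, 10.0], [0.0, 1.0], [1.0, 0.0], [10.0, 11.0], [11.0, 10.0]]
labels, centers = kmeans(features, k=2)
print(labels)
```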

Reinforcement Learning: This approach involves training a model to make decisions in an environment to maximize a reward signal. In neuroscience, reinforcement learning models have been used to simulate decision-making processes in the brain and explore the neural mechanisms underlying learning and behavior.
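A minimal reinforcement-learning model of this kind is an agent choosing between the arms of a "bandit" task and updating each arm's value with a reward prediction error, the quantity often compared with phasic dopamine activity in studies of reward learning. The sketch assumes epsilon-greedy choice and a fixed learning rate `alpha`; the reward probabilities and parameter values are hypothetical.

```python
import random


def run_bandit(reward_probs, n_trials=1000, alpha=0.1, epsilon=0.1, seed=0):
    """Epsilon-greedy agent with a delta-rule value update on a multi-armed bandit.

    Each arm pays a reward of 1 with its listed probability, otherwise 0.
    """
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)
    choices = []
    for _ in range(n_trials):
        if rng.random() < epsilon:
            arm = rng.randrange(len(values))  # explore: random arm
        else:
            arm = max(range(len(values)), key=lambda a: values[a])  # exploit: best arm
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        prediction_error = reward - values[arm]  # reward prediction error
        values[arm] += alpha * prediction_error
        choices.append(arm)
    return values, choices


values, choices = run_bandit([0.8, 0.2])
print(values, choices.count(0) / len(choices))
```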

Neural Networks and Deep Learning

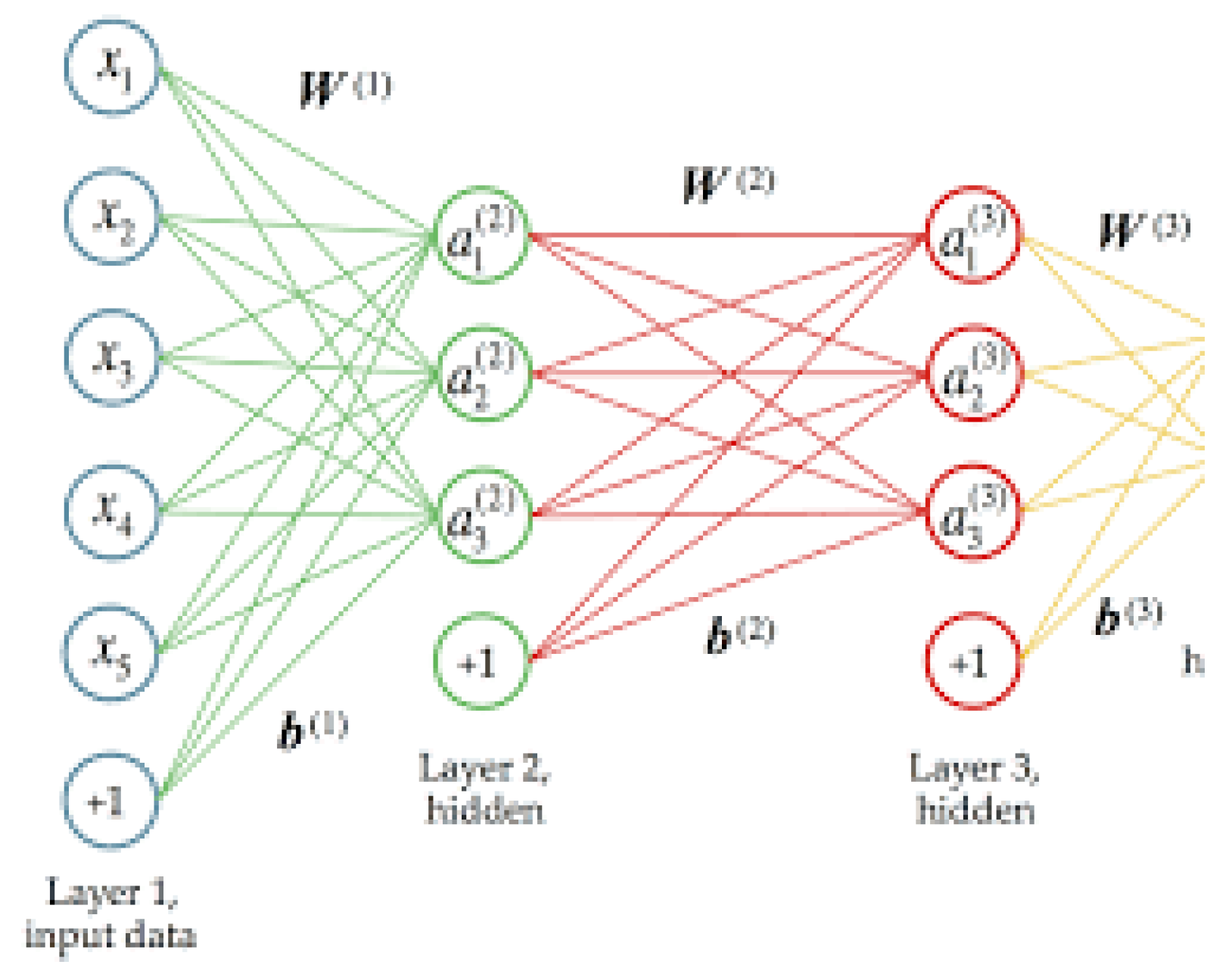

Neural networks, a class of machine learning algorithms inspired by the structure and function of biological neural networks, have revolutionized many fields, including neuroscience. Deep neural networks, which consist of multiple layers of interconnected nodes, have been particularly successful in tasks such as image recognition and natural language processing.

In neuroscience, deep neural networks are being used to model complex neural computations, such as object recognition and decision-making. They can also be used to decode neural activity, allowing researchers to infer the stimuli or cognitive states that gave rise to a particular pattern of brain activity.
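Decoding with a neural network amounts to a forward pass from recorded activity to a probability over candidate stimuli. The sketch below shows that pass for a tiny network with one ReLU hidden layer and a softmax output. The weights are set by hand purely for illustration; in a real decoder they would be learned from data, typically with backpropagation, and the layer sizes are hypothetical.

```python
import math


def dense(weights, biases, inputs):
    """Affine layer: one output per row of the weight matrix."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def decode(rates, w1, b1, w2, b2):
    """Map a population firing-rate vector to probabilities over candidate stimuli."""
    hidden = relu(dense(w1, b1, rates))
    return softmax(dense(w2, b2, hidden))


# Hypothetical hand-set weights: 3 recorded neurons -> 2 hidden units -> 2 stimuli.
w1 = [[1.0, 0.5, 0.0], [0.0, 0.5, 1.0]]
b1 = [0.0, 0.0]
w2 = [[1.0, -1.0], [-1.0, 1.0]]
b2 = [0.0, 0.0]
print(decode([8.0, 4.0, 0.0], w1, b1, w2, b2))
```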

Figure 1.

Typical Neural Network from Wikimedia.

Figure 2.

Art made for this article.

Backpropagation and Convolutional Neural Networks

Backpropagation is a key algorithm used to train neural networks. It applies the chain rule to compute the gradient of the error function with respect to each of the network's weights, working backward from the output layer; an optimization method such as gradient descent then adjusts the weights in the direction that reduces the error (Figure 3). This process is repeated iteratively until the network's performance reaches a satisfactory level.
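The gradient computation described above can be made concrete for a tiny network with one tanh hidden layer and a single linear output, trained on one example with squared error. The sketch computes the gradients by hand, applies plain gradient-descent updates, and can be checked against finite-difference estimates, a standard way to verify a backpropagation implementation. The network size, initial weights, and learning rate are arbitrary illustrative choices; in practice, frameworks such as PyTorch or JAX compute these gradients automatically.

```python
import math


def forward(params, x):
    """One tanh hidden layer followed by a single linear output unit."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sum(w * h for w, h in zip(w2, hidden)) + b2
    return hidden, y


def loss(params, x, target):
    """Squared error, halved so its derivative is simply (y - target)."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradient of the loss with respect to every parameter, via the chain rule."""
    w1, b1, w2, b2 = params
    hidden, y = forward(params, x)
    d_y = y - target                      # dL/dy
    d_w2 = [d_y * h for h in hidden]      # dL/dw2_j = dL/dy * h_j
    d_b2 = d_y
    # Propagate the error back through w2 and tanh (tanh' = 1 - tanh^2).
    d_z = [d_y * w * (1.0 - h * h) for w, h in zip(w2, hidden)]
    d_w1 = [[dz * xi for xi in x] for dz in d_z]
    d_b1 = d_z
    return d_w1, d_b1, d_w2, d_b2


def sgd_step(params, grads, lr=0.1):
    """Move every parameter a small step against its gradient."""
    (w1, b1, w2, b2), (g_w1, g_b1, g_w2, g_b2) = params, grads
    return (
        [[w - lr * g for w, g in zip(row, g_row)] for row, g_row in zip(w1, g_w1)],
        [b - lr * g for b, g in zip(b1, g_b1)],
        [w - lr * g for w, g in zip(w2, g_w2)],
        b2 - lr * g_b2,
    )


params = ([[0.1, -0.2], [0.3, 0.4]], [0.0, 0.1], [0.5, -0.6], 0.05)
x, target = [1.0, 2.0], 1.0
for _ in range(100):
    params = sgd_step(params, backprop(params, x, target))
print(loss(params, x, target))
```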

Convolutional neural networks (CNNs) are a type of deep neural network specifically designed for processing grid-like data, such as images. CNNs have been highly successful in image recognition tasks and are now being applied to neuroscience research, for example, to analyze brain imaging data and decode neural activity.
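The core operations of a convolutional layer can be sketched in a few lines: a small kernel slides over a 2-D grid, a rectifying nonlinearity is applied, and max pooling downsamples the result. The sketch below uses "valid" boundaries (no padding) and non-overlapping pooling windows as simplifying assumptions; the toy image and the edge-detecting kernel are hypothetical. In a trained CNN the kernel values are learned rather than set by hand.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers conventionally call convolution.

    The kernel visits every position where it fits entirely inside the image;
    each output value is the weighted sum of the pixels under the kernel.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu2d(feature_map):
    """Elementwise rectification applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


# Hypothetical 4x4 "image" with a vertical edge and a horizontal-difference kernel.
image = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 1, 1]]
edge_kernel = [[-1, 1]]
print(max_pool2d(relu2d(conv2d_valid(image, edge_kernel))))
```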

Figure 3.

Art made for this article, illustrating backpropagation in a convolutional network.

Discussion: Challenges and Future Directions

While machine learning and neural networks offer great promise for advancing computational neuroscience, there are also challenges that need to be addressed. One key challenge is the interpretability of complex models. Deep neural networks, for example, can be highly accurate but difficult to interpret, making it challenging to understand the underlying neural mechanisms they capture (Kriegeskorte, 2015).

Another challenge is the need for large and diverse datasets to train effective models. While the amount of neural data is growing rapidly, there is still a need for more standardized data collection and sharing practices to ensure that models can be generalized across different populations and experimental conditions.

Despite these challenges, the future of computational neuroscience is bright. The integration of data-driven approaches, machine learning, and theoretical modeling is paving the way for a new era of discovery in neuroscience. By leveraging the power of these tools, we can gain deeper insights into the complex workings of the brain and develop more effective treatments for neurological disorders.

Conclusion: The Dawn of a New Era in Computational Neuroscience

The burgeoning field of computational neuroscience is undergoing a profound transformation, transitioning from a predominantly theoretical discipline to one that is increasingly data-driven and integrative. The deluge of neural data, coupled with advancements in machine learning and data science, is ushering in a new era of model development that promises to revolutionize our understanding of the brain and its disorders.

While traditional theoretical models have laid the foundation for the field, the limitations of relying solely on idealized assumptions and limited data have become increasingly apparent. In contrast, data-driven approaches, grounded in the biological reality of the brain, offer the potential for greater accuracy, biological plausibility, and predictive power.

This move toward data-driven computational neuroscience represents a paradigm shift in the field. The integration of machine learning, statistical modeling, and network analysis with theoretical insights is enabling the development of more sophisticated models that can capture the complexity and variability of neural data. These models are not only providing deeper insights into the mechanisms of neural function but also paving the way for new diagnostic tools and therapeutic interventions for neurological disorders.

However, this new era also presents challenges, such as the need for large and diverse datasets, the interpretability of complex models, and the ethical considerations surrounding the use of artificial intelligence in neuroscience research. As the field continues to evolve, it will be crucial to address these challenges and ensure that the benefits of data-driven computational neuroscience are realized in a responsible and ethical manner.

In conclusion, the future of computational neuroscience is bright. By embracing data-driven approaches and integrating them with theoretical models, we can unlock the full potential of this exciting field and pave the way for a deeper understanding of the brain and its disorders. This new era of computational neuroscience promises to transform our understanding of neural function and usher in a new wave of innovation in neuroscience research and clinical practice.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Buzsáki, G., Anastassiou, C. A., & Koch, C. (2012). The origin of extracellular fields and currents—EEG, ECoG, LFP and spikes. Nature Reviews Neuroscience, 13(6), 407-420.

- Glaser, J. I., Chowdhury, R. H., Perich, M. G., Miller, L. E., & Körding, K. P. (2019). Machine learning for neural decoding. eNeuro, 6(2).

- Hodgkin, A. L., & Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. The Journal of Physiology, 117(4), 500-544.

- Hopfield, J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences, 79(8), 2554-2558.

- Kriegeskorte, N. (2015). Deep neural networks: a new framework for modeling biological vision and brain information processing. Annual Review of Vision Science, 1, 417-446.

- Niedermeyer, E., & Lopes da Silva, F. H. (2005). Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. Lippincott Williams & Wilkins.

- Ogawa, S., Lee, T. M., Kay, A. R., & Tank, D. W. (1990). Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proceedings of the National Academy of Sciences, 87(24), 9868-9872.

- Richards, B. A., Lillicrap, T. P., Beaudoin, P., Bengio, Y., Bogacz, R., Christensen, A., ... & Kording, K. (2019). A deep learning framework for neuroscience. Nature Neuroscience, 22(11), 1761-1770.

- Rumelhart, D. E., & McClelland, J. L. (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition. MIT Press.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).