Submitted: 08 April 2024

Posted: 08 April 2024


Abstract

Keywords:

1. Introduction

2. Related Works

3. Materials and Methods

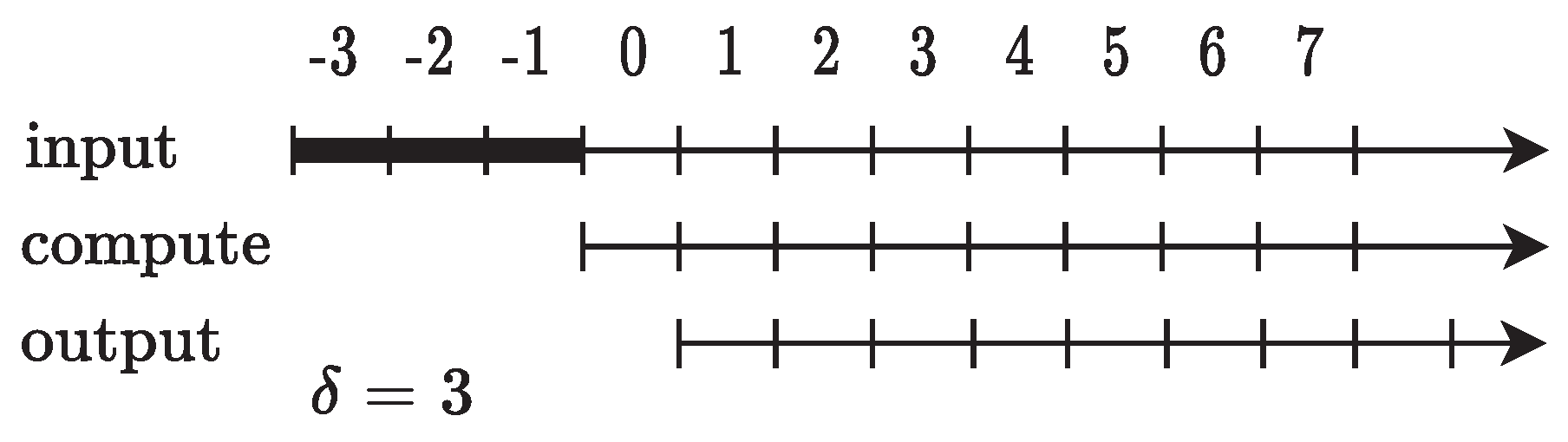

3.1. Online Arithmetic

3.1.1. Online Multiplier (OLM)

Algorithm 1. Serial-Parallel Online Multiplication

3.1.2. Online Adder (OLA)

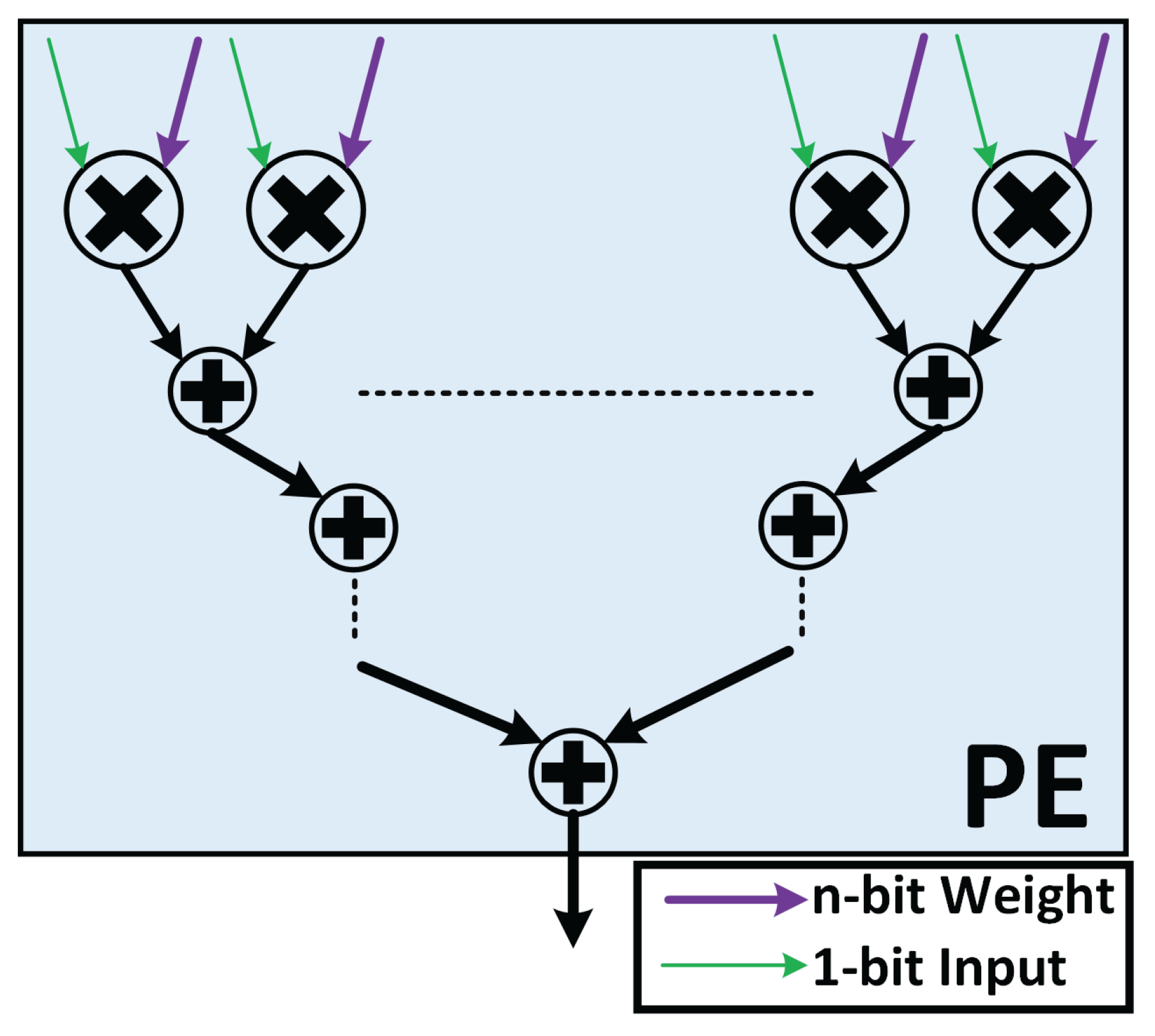

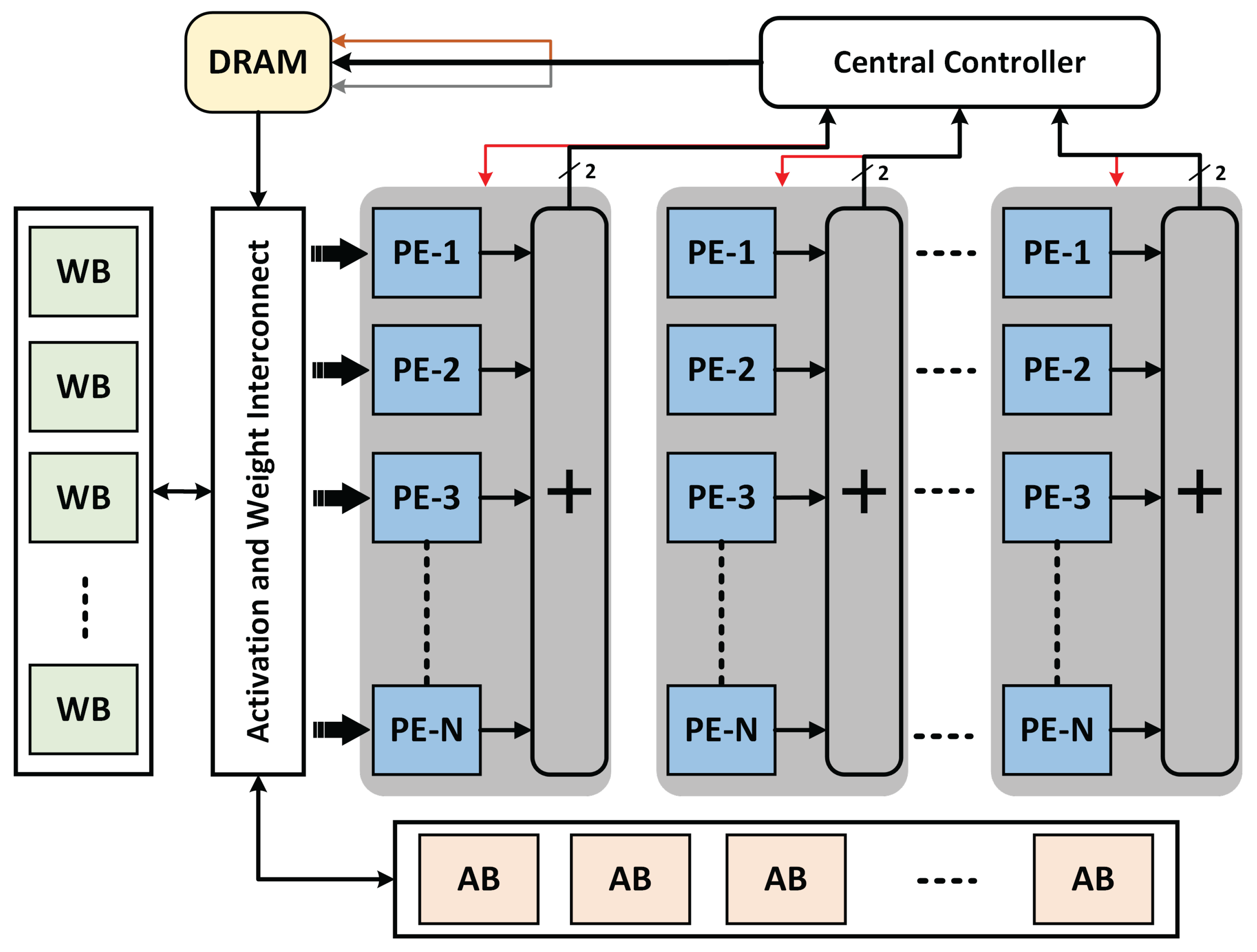

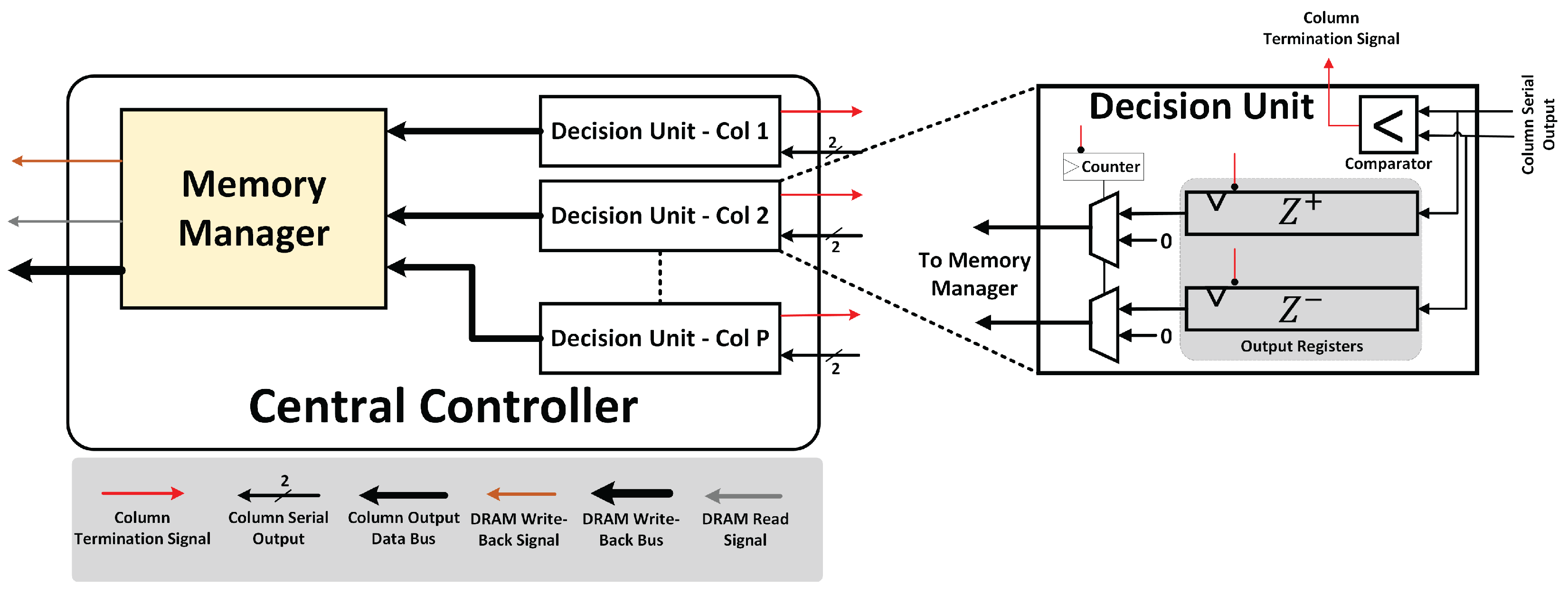

3.2. Proposed Design

3.2.1. Processing Engine Architecture

3.2.2. Early Detection and Termination of Negative Computations

Algorithm 2. Early detection and termination of negative activations in ECHO
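The general idea behind early negative detection can be illustrated with a minimal sketch. This is not the authors' Algorithm 2. It assumes the sum-of-products arrives most-significant-digit first in radix-2 signed-digit form, with digits in {-1, 0, 1} and fractional weights 2^-1, 2^-2, and so on. Under that assumption the sign of the result is fixed by the first nonzero digit, so a ReLU unit can stop as soon as that digit is negative. The function name and return convention are hypothetical.

```python
from typing import Iterable, Tuple


def relu_with_early_termination(digits: Iterable[int]) -> Tuple[float, int]:
    """Consume MSD-first signed digits; return (ReLU output, digits consumed).

    Assumption (not from the source): radix-2 signed-digit fraction,
    digit i (1-based) carries weight 2**-i.
    """
    value = 0.0
    weight = 0.5          # weight of the first fractional digit
    consumed = 0
    sign_known = False    # becomes True once a nonzero digit has been seen

    for d in digits:
        if d not in (-1, 0, 1):
            raise ValueError(f"invalid signed digit: {d}")
        consumed += 1
        if not sign_known and d != 0:
            if d < 0:
                # First nonzero digit is negative: the remaining digits can
                # add strictly less than this digit's weight, so the final
                # value is negative and ReLU outputs zero.
                return 0.0, consumed
            sign_known = True
        value += d * weight
        weight /= 2

    return max(value, 0.0), consumed


# Negative result detected after two digits; the last two are never needed.
print(relu_with_early_termination([0, -1, 1, 1]))   # (0.0, 2)
# Positive result: every digit is consumed.
print(relu_with_early_termination([1, -1, 0, 1]))   # (0.3125, 4)
```

The saving in this sketch comes only from skipping the remaining digits of a negative result; how the hardware propagates the termination signal is not modelled here.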

3.2.3. Accelerator Design

4. Experimental Results

5. Conclusion

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Gao, G.; Xu, Z.; Li, J.; Yang, J.; Zeng, T.; Qi, G.J. CTCNet: A CNN-transformer cooperation network for face image super-resolution. IEEE Transactions on Image Processing 2023, 32, 1978–1991.

- Bai, X.; Wang, X.; Liu, X.; Liu, Q.; Song, J.; Sebe, N.; Kim, B. Explainable deep learning for efficient and robust pattern recognition: A survey of recent developments. Pattern Recognition 2021, 120, 108102.

- Usman, M.; Khan, S.; Park, S.; Lee, J.A. AoP-LSE: Antioxidant Proteins Classification Using Deep Latent Space Encoding of Sequence Features. Current Issues in Molecular Biology 2021, 43, 1489–1501.

- Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

- Kwon, H.; Chatarasi, P.; Pellauer, M.; Parashar, A.; Sarkar, V.; Krishna, T. Understanding reuse, performance, and hardware cost of dnn dataflow: A data-centric approach. Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture, 2019, pp. 754–768.

- Gupta, U.; Jiang, D.; Balandat, M.; Wu, C.J. Towards green, accurate, and efficient AI models through multi-objective optimization. Workshop paper at Tackling Climate Change with Machine Learning, ICLR 2023.

- Narayanan, D.; Harlap, A.; Phanishayee, A.; Seshadri, V.; Devanur, N.R.; Ganger, G.R.; Gibbons, P.B.; Zaharia, M. PipeDream: Generalized pipeline parallelism for DNN training. Proceedings of the 27th ACM Symposium on Operating Systems Principles, 2019, pp. 1–15.

- Deng, C.; Liao, S.; Xie, Y.; Parhi, K.K.; Qian, X.; Yuan, B. PermDNN: Efficient compressed DNN architecture with permuted diagonal matrices. 2018 51st Annual IEEE/ACM international symposium on microarchitecture (MICRO). IEEE, 2018, pp. 189–202.

- Jain, S.; Venkataramani, S.; Srinivasan, V.; Choi, J.; Chuang, P.; Chang, L. Compensated-DNN: Energy efficient low-precision deep neural networks by compensating quantization errors. Proceedings of the 55th Annual Design Automation Conference, 2018, pp. 1–6.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. 2009 IEEE conference on computer vision and pattern recognition. IEEE, 2009, pp. 248–255.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- Hanif, M.A.; Javed, M.U.; Hafiz, R.; Rehman, S.; Shafique, M. Hardware–Software Approximations for Deep Neural Networks. Approximate Circuits: Methodologies and CAD 2019, pp. 269–288.

- Zhang, S.; Mao, W.; Wang, Z. An efficient accelerator based on lightweight deformable 3D-CNN for video super-resolution. IEEE Transactions on Circuits and Systems I: Regular Papers 2023.

- Lo, C.Y.; Sham, C.W. Energy efficient fixed-point inference system of convolutional neural network. 2020 IEEE 63rd International Midwest Symposium on Circuits and Systems (MWSCAS). IEEE, 2020, pp. 403–406.

- Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. Xnor-net: Imagenet classification using binary convolutional neural networks. European conference on computer vision. Springer, 2016, pp. 525–542.

- Agrawal, A.; Choi, J.; Gopalakrishnan, K.; Gupta, S.; Nair, R.; Oh, J.; Prener, D.A.; Shukla, S.; Srinivasan, V.; Sura, Z. Approximate computing: Challenges and opportunities. 2016 IEEE International Conference on Rebooting Computing (ICRC). IEEE, 2016, pp. 1–8.

- Liu, B.; Wang, Z.; Guo, S.; Yu, H.; Gong, Y.; Yang, J.; Shi, L. An energy-efficient voice activity detector using deep neural networks and approximate computing. Microelectronics Journal 2019, 87, 12–21.

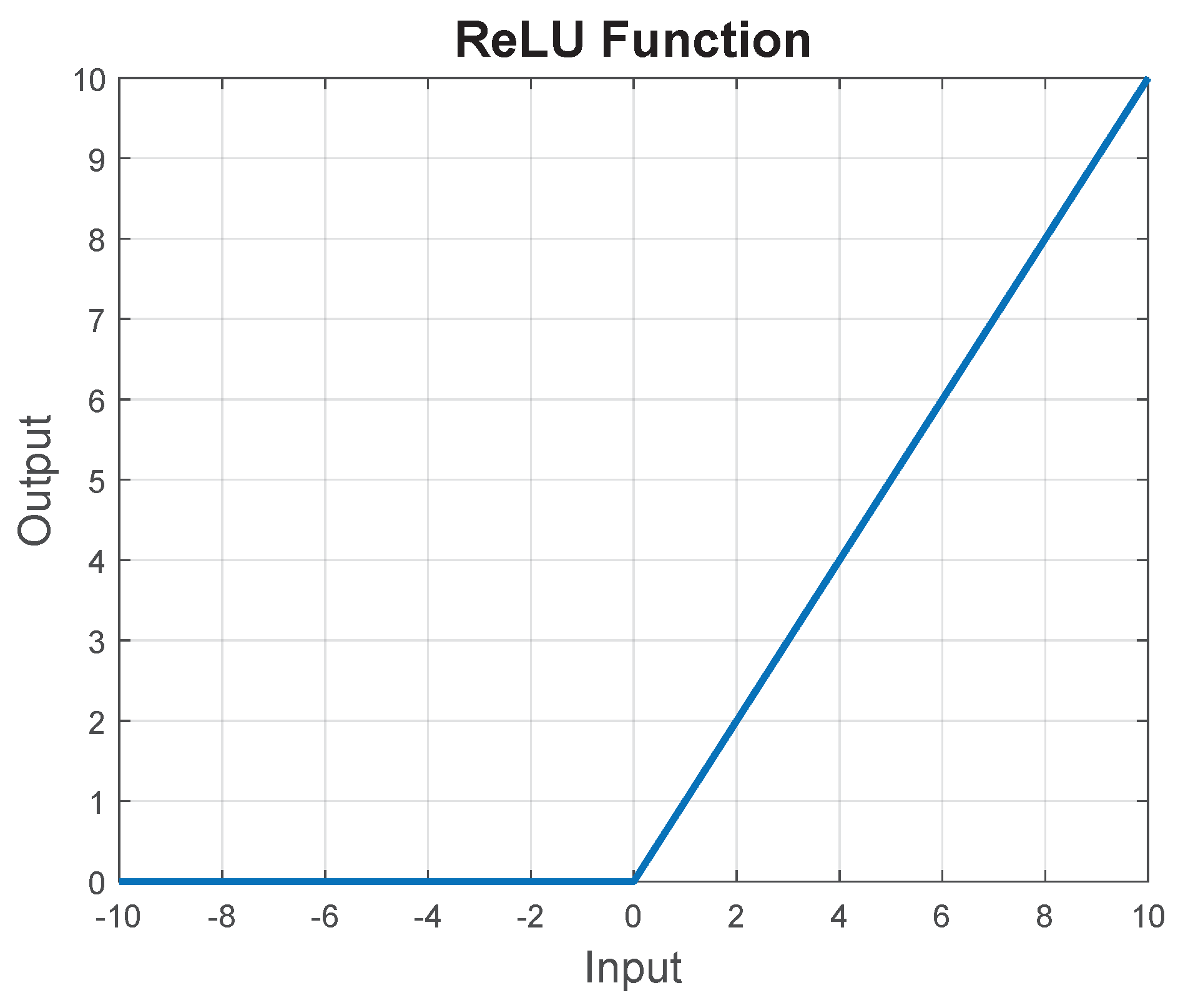

- Szandała, T. Review and comparison of commonly used activation functions for deep neural networks. Bio-inspired neurocomputing 2021, pp. 203–224.

- Dubey, S.R.; Singh, S.K.; Chaudhuri, B.B. Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing 2022.

- Cao, J.; Pang, Y.; Li, X.; Liang, J. Randomly translational activation inspired by the input distributions of ReLU. Neurocomputing 2018, 275, 859–868.

- Shi, S.; Chu, X. Speeding up convolutional neural networks by exploiting the sparsity of rectifier units. arXiv preprint 2017, arXiv:1704.07724.

- Luo, T.; Liu, S.; Li, L.; Wang, Y.; Zhang, S.; Chen, T.; Xu, Z.; Temam, O.; Chen, Y. DaDianNao: A neural network supercomputer. IEEE Transactions on Computers 2016, 66, 73–88.

- Judd, P.; Albericio, J.; Hetherington, T.; Aamodt, T.M.; Moshovos, A. Stripes: Bit-serial deep neural network computing. 2016 49th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO). IEEE, 2016, pp. 1–12.

- Albericio, J.; Delmás, A.; Judd, P.; Sharify, S.; O’Leary, G.; Genov, R.; Moshovos, A. Bit-Pragmatic Deep Neural Network Computing. 2017 50th Annual IEEE/ACM International Symposium on Microarchitecture (MICRO), 2017, pp. 382–394.

- Albericio, J.; Judd, P.; Hetherington, T.; Aamodt, T.; Jerger, N.E.; Moshovos, A. Cnvlutin: Ineffectual-neuron-free deep neural network computing. ACM SIGARCH Computer Architecture News 2016, 44, 1–13.

- Gao, M.; Pu, J.; Yang, X.; Horowitz, M.; Kozyrakis, C. Tetris: Scalable and efficient neural network acceleration with 3d memory. Proceedings of the Twenty-Second International Conference on Architectural Support for Programming Languages and Operating Systems, 2017, pp. 751–764.

- Judd, P.; Albericio, J.; Hetherington, T.; Aamodt, T.; Jerger, N.E.; Urtasun, R.; Moshovos, A. Proteus: Exploiting precision variability in deep neural networks. Parallel Computing 2018, 73, 40–51.

- Shin, S.; Boo, Y.; Sung, W. Fixed-point optimization of deep neural networks with adaptive step size retraining. 2017 IEEE International conference on acoustics, speech and signal processing (ICASSP). IEEE, 2017, pp. 1203–1207.

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D. A domain-specific architecture for deep neural networks. Communications of the ACM 2018, 61, 50–59.

- Juracy, L.R.; Garibotti, R.; Moraes, F.G.; others. From CNN to DNN Hardware Accelerators: A Survey on Design, Exploration, Simulation, and Frameworks. Foundations and Trends® in Electronic Design Automation 2023, 13, 270–344.

- Shomron, G.; Weiser, U. Spatial correlation and value prediction in convolutional neural networks. IEEE Computer Architecture Letters 2018, 18, 10–13.

- Zhang, Q.; Wang, T.; Tian, Y.; Yuan, F.; Xu, Q. ApproxANN: An approximate computing framework for artificial neural network. 2015 Design, Automation & Test in Europe Conference & Exhibition (DATE). IEEE, 2015, pp. 701–706.

- Lee, J.; Kim, C.; Kang, S.; Shin, D.; Kim, S.; Yoo, H.J. UNPU: An energy-efficient deep neural network accelerator with fully variable weight bit precision. IEEE Journal of Solid-State Circuits 2018, 54, 173–185.

- Hsu, L.C.; Chiu, C.T.; Lin, K.T.; Chou, H.H.; Pu, Y.Y. ESSA: An energy-Aware bit-Serial streaming deep convolutional neural network accelerator. Journal of Systems Architecture 2020, 111, 101831.

- Isobe, S.; Tomioka, Y. Low-bit Quantized CNN Acceleration based on Bit-serial Dot Product Unit with Zero-bit Skip. 2020 Eighth International Symposium on Computing and Networking (CANDAR). IEEE, 2020, pp. 141–145.

- Li, A.; Mo, H.; Zhu, W.; Li, Q.; Yin, S.; Wei, S.; Liu, L. BitCluster: Fine-Grained Weight Quantization for Load-Balanced Bit-Serial Neural Network Accelerators. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 2022, 41, 4747–4757.

- Akhlaghi, V.; Yazdanbakhsh, A.; Samadi, K.; Gupta, R.K.; Esmaeilzadeh, H. Snapea: Predictive early activation for reducing computation in deep convolutional neural networks. 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA). IEEE, 2018, pp. 662–673.

- Lee, D.; Kang, S.; Choi, K. ComPEND: Computation Pruning through Early Negative Detection for ReLU in a deep neural network accelerator. Proceedings of the 2018 International Conference on Supercomputing, 2018, pp. 139–148.

- Kim, N.; Park, H.; Lee, D.; Kang, S.; Lee, J.; Choi, K. ComPreEND: Computation Pruning through Predictive Early Negative Detection for ReLU in a Deep Neural Network Accelerator. IEEE Transactions on Computers 2021.

- Shuvo, M.K.; Thompson, D.E.; Wang, H. MSB-First Distributed Arithmetic Circuit for Convolution Neural Network Computation. 2020 IEEE 63rd International Midwest Symposium on Circuits and Systems (MWSCAS). IEEE, 2020, pp. 399–402.

- Karadeniz, M.B.; Altun, M. TALIPOT: Energy-Efficient DNN Booster Employing Hybrid Bit Parallel-Serial Processing in MSB-First Fashion. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 2021, 41, 2714–2727.

- Song, M.; Zhao, J.; Hu, Y.; Zhang, J.; Li, T. Prediction based execution on deep neural networks. 2018 ACM/IEEE 45th Annual International Symposium on Computer Architecture (ISCA). IEEE, 2018, pp. 752–763.

- Lin, Y.; Sakr, C.; Kim, Y.; Shanbhag, N. PredictiveNet: An energy-efficient convolutional neural network via zero prediction. 2017 IEEE international symposium on circuits and systems (ISCAS). IEEE, 2017, pp. 1–4.

- Asadikouhanjani, M.; Ko, S.B. A novel architecture for early detection of negative output features in deep neural network accelerators. IEEE Transactions on Circuits and Systems II: Express Briefs 2020, 67, 3332–3336.

- Suresh, B.; Pillai, K.; Kalsi, G.S.; Abuhatzera, A.; Subramoney, S. Early Prediction of DNN Activation Using Hierarchical Computations. Mathematics 2021, 9, 3130.

- Pan, Y.; Yu, J.; Lukefahr, A.; Das, R.; Mahlke, S. BitSET: Bit-Serial Early Termination for Computation Reduction in Convolutional Neural Networks. ACM Transactions on Embedded Computing Systems 2023, 22, 1–24.

- Ercegovac, M.D. On-Line Arithmetic: An Overview. Real-Time Signal Processing VII; Bromley, K., Ed. International Society for Optics and Photonics, SPIE, 1984, Vol. 0495, pp. 86–93.

- Usman, M.; Lee, J.A.; Ercegovac, M.D. Multiplier with reduced activities and minimized interconnect for inner product arrays. 2021 55th Asilomar Conference on Signals, Systems, and Computers. IEEE, 2021, pp. 1–5.

- Ibrahim, M.S.; Usman, M.; Nisar, M.Z.; Lee, J.A. DSLOT-NN: Digit-Serial Left-to-Right Neural Network Accelerator. 2023 26th Euromicro Conference on Digital System Design (DSD). IEEE, 2023, pp. 686–692.

- Usman, M.; Ercegovac, M.D.; Lee, J.A. Low-Latency Online Multiplier with Reduced Activities and Minimized Interconnect for Inner Product Arrays. Journal of Signal Processing Systems 2023, 1–20.

- Ercegovac, M.D.; Lang, T. Digital arithmetic; Elsevier, 2004.

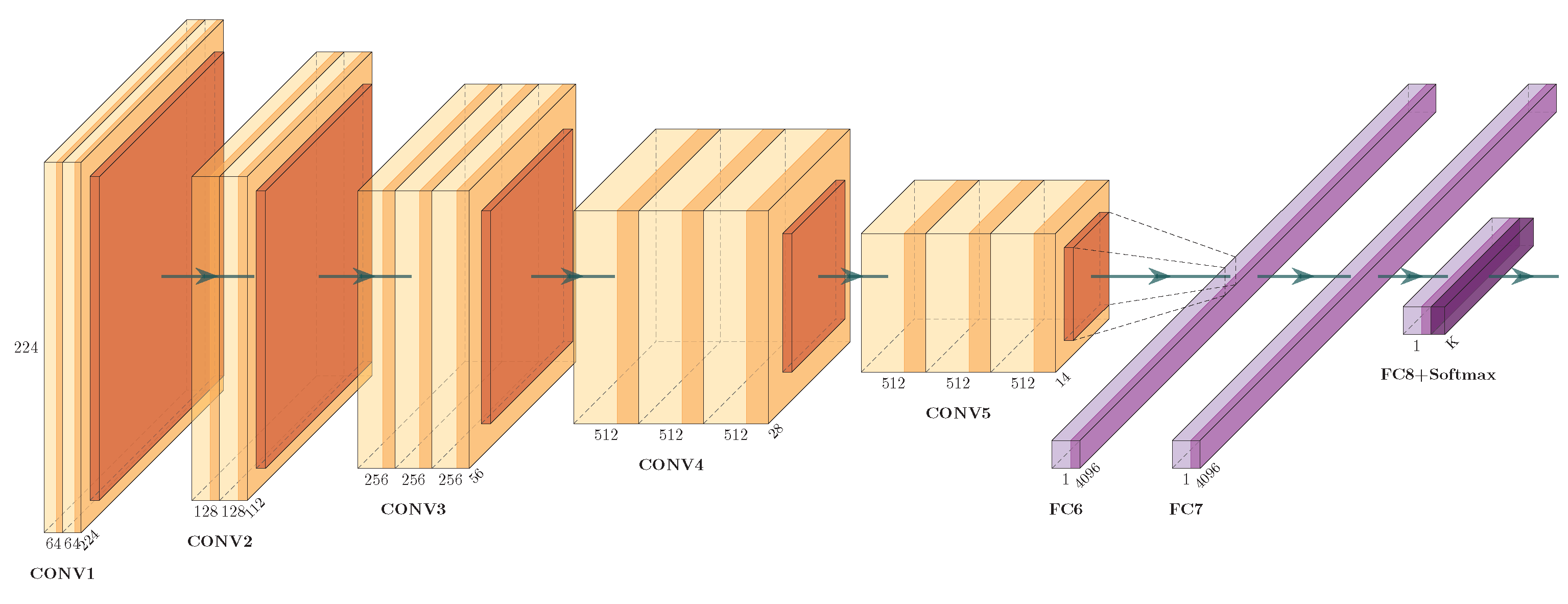

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint 2014, arXiv:1409.1556.

- Marcel, S.; Rodriguez, Y. Torchvision the machine-vision package of torch. Proceedings of the 18th ACM international conference on Multimedia, 2010, pp. 1485–1488.

- Meloni, P.; Capotondi, A.; Deriu, G.; Brian, M.; Conti, F.; Rossi, D.; Raffo, L.; Benini, L. NEURAghe: Exploiting CPU-FPGA synergies for efficient and flexible CNN inference acceleration on Zynq SoCs. ACM Transactions on Reconfigurable Technology and Systems (TRETS) 2018, 11, 1–24.

- Li, G.; Liu, Z.; Li, F.; Cheng, J. Block convolution: toward memory-efficient inference of large-scale CNNs on FPGA. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 2021, 41, 1436–1447.

- Yu, Y.; Wu, C.; Zhao, T.; Wang, K.; He, L. OPU: An FPGA-based overlay processor for convolutional neural networks. IEEE Transactions on Very Large Scale Integration (VLSI) Systems 2019, 28, 35–47.

- Zhang, C.; Sun, G.; Fang, Z.; Zhou, P.; Pan, P.; Cong, J. Caffeine: Toward Uniformed Representation and Acceleration for Deep Convolutional Neural Networks. IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems 2019, 38, 2072–2085.

- Xie, X.; Lin, J.; Wang, Z.; Wei, J. An efficient and flexible accelerator design for sparse convolutional neural networks. IEEE Transactions on Circuits and Systems I: Regular Papers 2021, 68, 2936–2949.

| Network | Layer | Kernel Size | M |
|---|---|---|---|
| VGG-16 | C1-C2 | | 64 |
| | C3-C4 | | 128 |
| | C5-C7 | | 256 |
| | C8-C10 | | 512 |
| | C11-C13 | | 512 |
| ResNet-18 | C1 | | 64 |
| | C2-C5 | | 64 |
| | C6-C9 | | 128 |
| | C10-C13 | | 256 |
| | C14-C17 | | 512 |
| ResNet-50 | C1 | | 64 |
| | C2-x | | 64, 256 |
| | C3-x | | 128, 512 |
| | C4-x | | 256, 1024 |
| | C5-x | | 512, 2048 |

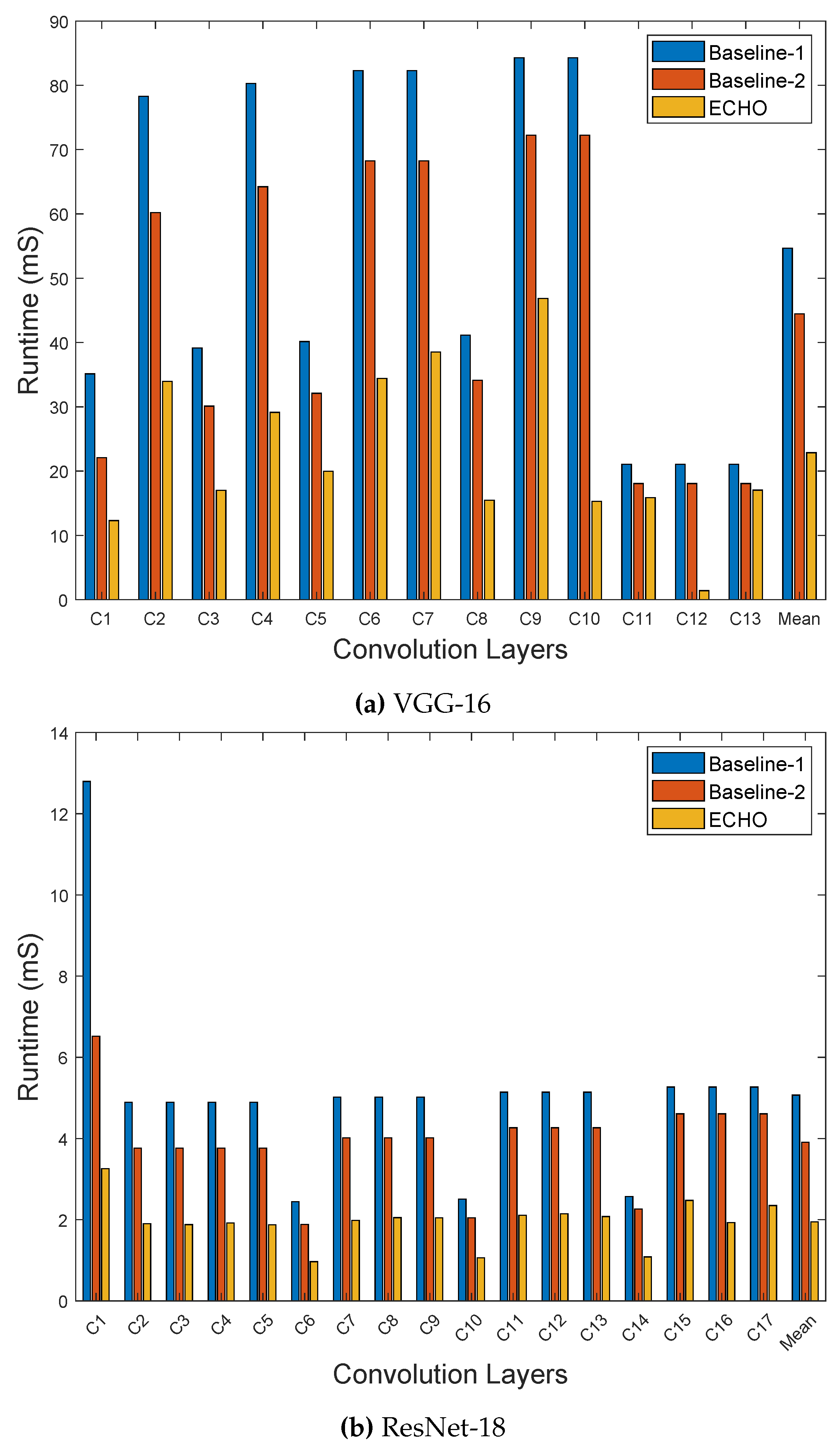

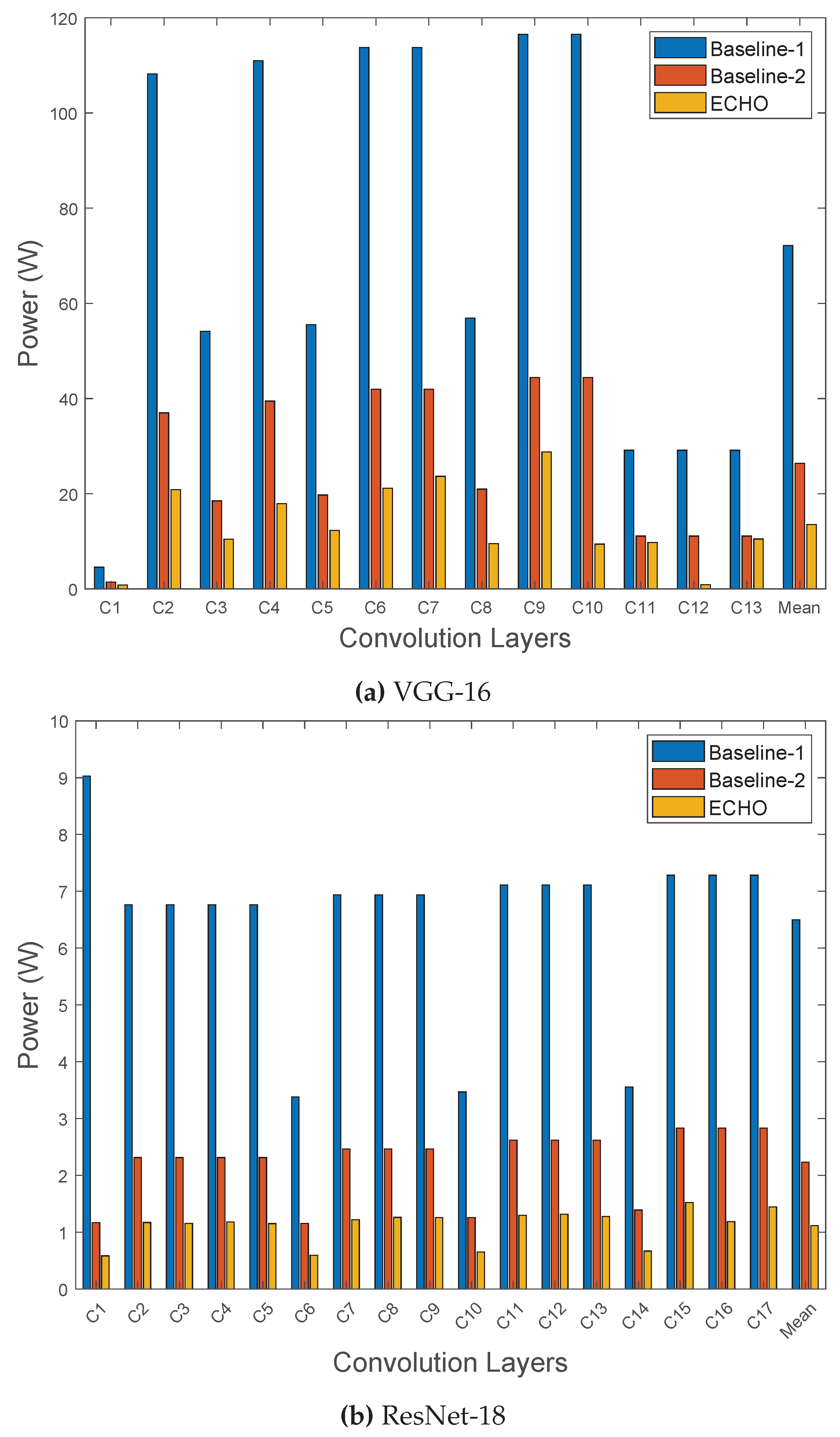

| Layer | Inference Time (ms): Baseline-1 | Inference Time (ms): Baseline-2 | Inference Time (ms): ECHO | Power (W): Baseline-1 | Power (W): Baseline-2 | Power (W): ECHO | Speedup (×): Baseline-1 | Speedup (×): Baseline-2 | Speedup (×): ECHO |
|---|---|---|---|---|---|---|---|---|---|
| C1 | 35.12 | 22.8 | 12.29 | 4.55 | 1.44 | 0.81 | 1 | 1.54 | 2.86 |
| C2 | 78.27 | 60.21 | 33.95 | 108.2 | 36.99 | 20.86 | 1 | 1.3 | 2.31 |
| C3 | 39.13 | 30.10 | 16.99 | 54.10 | 18.5 | 10.44 | 1 | 1.3 | 2.3 |
| C4 | 80.28 | 64.22 | 29.14 | 110.98 | 39.46 | 17.90 | 1 | 1.25 | 2.75 |
| C5 | 40.14 | 32.11 | 19.98 | 55.49 | 19.73 | 12.27 | 1 | 1.25 | 2.01 |
| C6 | 82.28 | 68.24 | 34.42 | 113.75 | 41.92 | 21.14 | 1 | 1.21 | 2.39 |
| C7 | 82.28 | 68.24 | 38.51 | 113.75 | 41.92 | 23.66 | 1 | 1.21 | 2.14 |
| C8 | 41.14 | 34.12 | 15.49 | 56.87 | 20.96 | 9.51 | 1 | 1.21 | 2.67 |
| C9 | 84.29 | 72.25 | 46.86 | 116.53 | 44.39 | 28.79 | 1 | 1.16 | 1.79 |
| C10 | 84.29 | 72.25 | 15.30 | 116.53 | 44.39 | 9.40 | 1 | 1.16 | 5.51 |
| C11 | 21.07 | 18.06 | 15.86 | 29.13 | 11.09 | 9.74 | 1 | 1.16 | 1.33 |
| C12 | 21.07 | 18.06 | 1.41 | 29.13 | 11.09 | 0.86 | 1 | 1.16 | 14.92 |
| C13 | 21.07 | 18.06 | 17.04 | 29.13 | 11.09 | 10.47 | 1 | 1.16 | 1.24 |
| Mean | 54.65 | 44.46 | 22.87 | 72.16 | 26.38 | 13.53 | 1 | 1.23 | 2.39 |
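The Speedup columns in the table above appear to be the Baseline-1 inference time divided by each design's inference time, and the Mean row appears to be the ratio of the mean times rather than the mean of the per-layer ratios. The following minimal sketch checks that reading against the C1 and Mean rows; the helper name `speedup` is hypothetical, and the interpretation is an assumption inferred from the tabulated numbers, not stated in the source.

```python
def speedup(baseline_ms: float, design_ms: float) -> float:
    """Speedup of a design relative to the baseline (assumed definition)."""
    return baseline_ms / design_ms


# Inference times (ms) copied from the C1 and Mean rows of the table above.
c1_ms = {"Baseline-1": 35.12, "Baseline-2": 22.8, "ECHO": 12.29}
mean_ms = {"Baseline-1": 54.65, "Baseline-2": 44.46, "ECHO": 22.87}

c1_speedup = {
    name: round(speedup(c1_ms["Baseline-1"], t), 2) for name, t in c1_ms.items()
}
mean_speedup = {
    name: round(speedup(mean_ms["Baseline-1"], t), 2) for name, t in mean_ms.items()
}

print(c1_speedup)    # {'Baseline-1': 1.0, 'Baseline-2': 1.54, 'ECHO': 2.86}
print(mean_speedup)  # {'Baseline-1': 1.0, 'Baseline-2': 1.23, 'ECHO': 2.39}
```

Averaging the thirteen per-layer ECHO speedups instead would give roughly 3.4, because layers such as C12 (14.92×) dominate a mean of ratios. The reported 2.39× therefore reflects total time saved across the layers.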

| Layer | Inference Time (ms): Baseline-1 | Inference Time (ms): Baseline-2 | Inference Time (ms): ECHO | Power (W): Baseline-1 | Power (W): Baseline-2 | Power (W): ECHO | Speedup (×): Baseline-1 | Speedup (×): Baseline-2 | Speedup (×): ECHO |
|---|---|---|---|---|---|---|---|---|---|
| C1 | 12.79 | 6.52 | 3.25 | 9.02 | 1.16 | 0.58 | 1 | 1.96 | 3.93 |
| C2 | 4.89 | 3.76 | 1.9 | 6.76 | 2.31 | 1.16 | 1 | 1.3 | 2.57 |
| C3 | 4.89 | 3.76 | 1.88 | 6.76 | 2.31 | 1.15 | 1 | 1.3 | 2.6 |
| C4 | 4.89 | 3.76 | 1.91 | 6.76 | 2.31 | 1.17 | 1 | 1.3 | 2.56 |
| C5 | 4.89 | 3.76 | 1.87 | 6.76 | 2.31 | 1.15 | 1 | 1.3 | 2.61 |
| C6 | 2.44 | 1.88 | 0.96 | 3.38 | 1.15 | 0.59 | 1 | 1.29 | 2.54 |
| C7 | 5.01 | 4.01 | 1.98 | 6.93 | 2.46 | 1.21 | 1 | 1.24 | 2.53 |
| C8 | 5.01 | 4.01 | 2.05 | 6.93 | 2.46 | 1.26 | 1 | 1.24 | 2.44 |
| C9 | 5.01 | 4.01 | 2.04 | 6.93 | 2.46 | 1.25 | 1 | 1.24 | 2.45 |
| C10 | 2.5 | 2.04 | 1.06 | 3.46 | 1.25 | 0.65 | 1 | 1.22 | 2.35 |
| C11 | 5.14 | 4.26 | 2.1 | 7.1 | 2.62 | 1.29 | 1 | 1.2 | 2.44 |
| C12 | 5.14 | 4.26 | 2.14 | 7.1 | 2.62 | 1.31 | 1 | 1.2 | 2.4 |
| C13 | 5.14 | 4.26 | 2.07 | 7.1 | 2.62 | 1.27 | 1 | 1.2 | 2.48 |
| C14 | 2.57 | 2.26 | 1.08 | 3.55 | 1.39 | 0.66 | 1 | 1.13 | 2.37 |
| C15 | 5.26 | 4.6 | 2.47 | 7.28 | 2.83 | 1.52 | 1 | 1.14 | 2.12 |
| C16 | 5.26 | 4.6 | 1.92 | 7.28 | 2.83 | 1.18 | 1 | 1.14 | 2.73 |
| C17 | 5.26 | 4.6 | 2.35 | 7.28 | 2.83 | 1.44 | 1 | 1.14 | 2.23 |
| Mean | 5.06 | 3.9 | 1.94 | 6.49 | 2.23 | 1.11 | 1 | 1.29 | 2.6 |

| Metric | VGG-16: Baseline-1 | VGG-16: Baseline-2 | VGG-16: ECHO | ResNet-18: Baseline-1 | ResNet-18: Baseline-2 | ResNet-18: ECHO | ResNet-50: Baseline-1 | ResNet-50: Baseline-2 | ResNet-50: ECHO |
|---|---|---|---|---|---|---|---|---|---|
| Frequency (MHz) | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |
| Logic Utilization | 238K (27.6%) | 238K (27.6%) | 315K (36.54%) | 238K (27.6%) | 238K (27.6%) | 315K (36.54%) | 238K (27.6%) | 238K (27.6%) | 315K (36.54%) |
| BRAM Utilization | 83 (11.4%) | 83 (11.4%) | 84 (11.54%) | 83 (11.4%) | 83 (11.4%) | 84 (11.54%) | 83 (11.4%) | 83 (11.4%) | 84 (11.54%) |
| Mean Power (W) | 72.16 | 26.38 | 13.53 | 6.49 | 2.23 | 1.11 | 9.63 | 11.5 | 5.72 |
| Peak Throughput (GOPS) | 47.3 | 61.4 | 655 | 47.3 | 61.4 | 123 | 39.01 | 61.4 | 128.7 |
| Latency per Image (ms) | 710.5 | 578.03 | 297.3 | 86.2 | 66.4 | 33.1 | 366.7 | 464.01 | 231.03 |
| Average Speedup (×) | 1 | 1.23 | 2.39 | 1 | 1.29 | 2.6 | 1 | 1.21 | 2.42 |

| Metric | VGG-16: NEURAghe [54] | VGG-16: [55] | VGG-16: OPU [56] | VGG-16: Caffeine [57] | VGG-16: ECHO | ResNet-18: NEURAghe [54] | ResNet-18: [58] | ResNet-18: ECHO | ResNet-50: [58] | ResNet-50: ECHO |
|---|---|---|---|---|---|---|---|---|---|---|
| Device | Zynq Z7045 | Zynq ZC706 | Zynq XC7Z100 | VX690t | VU3P | Zynq Z7045 | Arria10 SX660 | VU3P | Arria10 SX660 | VU3P |
| Frequency (MHz) | 140 | 150 | 200 | 150 | 100 | 140 | 170 | 100 | 170 | 100 |
| Logic Utilization | 100K | - | - | - | 315K | 100K | 102.6K | 315K | 102.6K | 315K |
| BRAM Utilization | 320 | 1090 | 1510 | 2940 | 84 | 320 | 465 | 84 | 465 | 84 |
| Peak Throughput (GOPS) | 169 | 374.98 | 397 | 636 | 655 | 58 | 89.29 | 123 | 90.19 | 128.7 |
| Energy Efficiency (GOPS/W) | 16.9 | - | 24.06 | - | 48.41 | 5.8 | 19.41 | 21.58 | 19.61 | 22.51 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).