1. Introduction

The method presented in the current paper addresses medical applications where the strategic goal is to provide better medical care through real-time detection, warning, prevention, diagnosis, and treatment. The specific task is to detect seizures in people who have epilepsy.

Epilepsy is a neurological disease whose symptoms are sudden transitions from normal to pathological behavioral states called epileptic seizures, often accompanied by rhythmic movements of body parts. Some of these seizures may lead to life-threatening conditions and ultimately cause Sudden Unexpected Death in Epilepsy (SUDEP).

Therefore, medical treatment involves continuous observation of individuals for long periods to obtain sufficient data for an adequate diagnosis and to plan therapeutic strategies. Some people, especially those with untreatable epileptic conditions, may need long-term care in specialist units to allow early intervention that prevents complications.

We previously developed and implemented a method that detects motor seizures in real time using remote sensing by video camera. Video surveillance of people is used successfully for monitoring patients, but it carries societal burdens and costs and raises ethical issues related to privacy. People with epilepsy are often stigmatized and sensitive to privacy. Therefore, it is necessary to use only remote sensing devices (i.e., devices that require no contact with the subject), such as video cameras, and to record vector fields rather than video footage.

The method was published [1] and is currently used in medical facilities with validated detection success. It uses an original, efficient algorithm to reconstruct global optical flow group velocities (GLORIA). These quantities also provide input for detecting falls and non-obstructive apnea [2,3]. However, the technique only works in a static situation, for example, during night observation when the patient lies in bed. When the patient changes position, for example, during daytime observation, the operator has to adjust either the camera field of view using PTZ (pan-tilt-zoom) control or the Region of Interest (ROI). Here, we address the challenge of developing and embedding patient-tracking functionality in our system. We approach this task using the same group velocities provided by the GLORIA algorithm. This way, we avoid introducing extra computational complexity that may prevent the system from being used in real time. To the best of our knowledge, this is a novel video-tracking approach and constitutes the main contribution of this work. Below, we acknowledge other works related to video tracking, but none of them can directly utilize the group velocities that also provide input for our convulsive seizure detection algorithm.

Real-time automated tracking of moving objects is a technique that has a wide range of applications in various research and commercial fields [4]. Examples are self-driving cars [5,6], automated surveillance for incident detection and criminal activity [7], traffic flow control [8,9], interface for human-computer interactions and inputs [10,11,12], and patient care, including intensive care unit monitoring and epilepsy seizure alerting [1,13].

Generally, there are different requirements and limitations when one attempts to track a moving object, such as noise in the video data, complex object movement, occlusions, illumination changes between frames, and processing complexity. Many object-tracking methods involve particular tracking strategies [14,15,16,17], including frame difference methods [18], background subtraction [19], image segmentation, static and deep learning algorithms [20,21], and optic flow methods. All the methods have advantages and disadvantages, and the choice depends on the specific task.

In the current work, we propose a method based on direct global motion parameter reconstruction from optical camera frame sequences. As noted above, the main reason for focusing on such a method is that it uses quantities already calculated as part of the convulsive seizure detection system. The method avoids intensive pixel-level optic flow calculation and thus provides computationally efficient and content-independent tracking capabilities. Tracking methods that use the standard pixel-based optic flow reconstruction [22,23] suffer from limitations caused by relatively high computational costs and ambiguities due to insufficient variation of the luminance in the frames. We propose a solution to these limitations using the multi-spectral direct group parameter reconstruction algorithm GLORIA, developed by our group and introduced in [24]. The GLORIA algorithm offers the following advantages compared to other optic flow-based tracking techniques: A) It directly calculates the rates of group transformations (such as, but not limited to, translations, rotation, dilatation, and shear) of the whole scene. It thereby avoids calculating the velocity vector field at each image point, which lowers computational requirements. B) Unlike the standard intensity-based algorithms, it is a multi-channel method that directly uses co-registered data from various sources, such as multi-spectral and thermal cameras. This early fusion of sensory data features increases accuracy and reduces possible ambiguities of the optic flow inverse problem.

Our objective for the particular application here is to track a single object that moves within the camera’s field of view. We introduce a rectangular Region of Interest (ROI) with a specific size around the object we plan to track. The GLORIA method then estimates how the object moves within this region. Subsequently, the novel algorithm proposed here adjusts the ROI accordingly. Using an ROI also helps reduce the size of the images for optical flow estimation and limits the adverse effects of any areas with high levels of brightness change outside the ROI. It also automatically disregards movements of no interest to us outside the specified area. In short, our approach reduces the tracking problem to a dynamic ROI steering algorithm.

The present work is part of a more extensive study on autonomous video surveillance of epilepsy patients. Tracking how a patient moves can further improve results related to seizure detection from video data [1]. The proposed method, however, is not limited to health care and can be applied to other scenarios related to automated remote tracking.

The rest of the paper is organized as follows. The next section introduces the proposed original tracking method. Then, we present our results from both simulated and real-world image sequences. We also apply the novel method to a sequence from a publicly available dataset (LaSOT). The outcome from all the examples provides quantitative validation of the algorithm’s effectiveness as well as qualitative illustrations. Finally, in the Discussion, we comment on the features, possible extensions, and limitations of the proposed approach to tracking.

2. Methods

Optic Flow Reconstruction Problem

The algorithm presented in the current work uses motion information reconstructed from the optic flow in video sequences. Optical flow reconstruction is a general technique that enables determining the spatial velocities of a vector field from changes in luminance spectral intensities between sequential observed scenes (frames). Here, we briefly introduce optic flow methods, leaving the details to the dedicated literature [25,26,27,28,29,30,31,32,33].

We denote the pixel content in a multi-spectral image frame as $L_c(x, y, t)$, where $x, y, t$ are the spatial coordinates and the time, and $c$ is the spectral index, most commonly labeling the R, G, and B channels. Assuming that all changes of the image content in time are due to scene deformation and defining the local velocity (rate of deformation) vector field as $\vec{v}(x, y, t)$, the corresponding image transformation is:

$$\frac{\partial L_c}{\partial t} = -\vec{v} \cdot \nabla L_c \equiv -\hat{V} L_c \qquad (1)$$

In Eq. (1), $\hat{V}$ is the vector field operator, $x, y$ are the two-dimensional spatial coordinates in each frame, and $t$ is the time or frame number. The velocity field can determine a large variety of object motion properties such as translations, rotations, dilatations (expansions and contractions), etc. In the current work, however, we do not need to calculate the velocity vector field for each point, as we can directly reconstruct global features of the optic flow, considering only specific aggregated values associated with it. In particular, we are interested in the global two-dimensional linear non-homogeneous transformations consisting of translations, rotations, dilatations, and shear transformations. Therefore, we use the Global Optical-flow Reconstruction Iterative Algorithm “GLORIA,” which was developed previously by our group [3]. The vector field operator introduced in Eq. (1) takes the following form:

$$\hat{V} = \vec{v} \cdot \nabla = v_x \frac{\partial}{\partial x} + v_y \frac{\partial}{\partial y} \qquad (2)$$

The Eq. (2) representation can be helpful when decomposing the transformation field $\vec{v}$ as a superposition of known transformations. If we denote the vector fields corresponding to each transformation generator within a group as $\vec{v}^{(u)}$, and the corresponding parameters as $A_u$, then:

$$\vec{v} = \sum_u A_u \vec{v}^{(u)} \qquad (3)$$

With Eq. (3) one may define a set of differential operators for the group of transformations that form a Lie algebra:

$$\hat{G}_u = \vec{v}^{(u)} \cdot \nabla, \qquad \hat{V} = \sum_u A_u \hat{G}_u \qquad (4)$$

As a particular case, we apply Eq. (4) to the group of three general linear non-homogeneous transformations in two-dimensional images that preserve the orientation of the axes and the ratio between their lengths, namely the translations along $x$ and $y$ and the dilatation:

$$\hat{G}_1 = \frac{\partial}{\partial x}, \qquad \hat{G}_2 = \frac{\partial}{\partial y}, \qquad \hat{G}_3 = x \frac{\partial}{\partial x} + y \frac{\partial}{\partial y} \qquad (5)$$
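To illustrate how group rates can be obtained without computing a per-pixel velocity field, the following minimal Python sketch fits two translation rates and one dilatation rate to a pair of frames by linear least squares over all pixels and channels. It is a single-step linearized fit written for illustration only, not the iterative GLORIA implementation. The function name `estimate_group_rates`, the use of NumPy, the gradient taken on the midpoint frame, and the choice of the frame center as the dilatation origin are all assumptions.

```python
import numpy as np


def estimate_group_rates(frame0, frame1):
    """Least-squares estimate of (x-translation, y-translation, dilatation).

    Frames are arrays of shape (rows, cols) or (rows, cols, channels).
    Illustrative linearized fit; NOT the authors' GLORIA code.
    """
    f0 = np.atleast_3d(np.asarray(frame0, dtype=float))
    f1 = np.atleast_3d(np.asarray(frame1, dtype=float))

    # Temporal difference and spatial gradients of the midpoint frame.
    d_t = f1 - f0
    d_y, d_x = np.gradient(0.5 * (f0 + f1), axis=(0, 1))

    # Pixel coordinates measured from the frame center (assumed origin).
    rows, cols = f0.shape[:2]
    y, x = np.mgrid[0:rows, 0:cols].astype(float)
    x = (x - (cols - 1) / 2.0)[:, :, None]
    y = (y - (rows - 1) / 2.0)[:, :, None]

    # One column per generator: d/dx, d/dy, and x d/dx + y d/dy.
    design = np.stack(
        [d_x.ravel(), d_y.ravel(), (x * d_x + y * d_y).ravel()], axis=1
    )

    # Solve dL/dt = -(sum_u A_u G_u L) for the rates A_u, all channels at once.
    rates, *_ = np.linalg.lstsq(design, -d_t.ravel(), rcond=None)
    return rates
```

Because all channels enter one common system of equations, a channel with little luminance variation does not by itself make the fit ill-posed, which is one way to picture the early fusion described above. For displacements of more than a few pixels the linearization breaks down, so an iterative or multi-scale scheme would be needed.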

Evaluation of the ROI Tracking Performance

We introduce several quantities to assess the proposed method’s accuracy and working boundaries. The first one reflects a combination of the absolute differences between the true center coordinates $(x_c, y_c)$ of the moving object and the calculated center coordinates $(\hat{x}_c, \hat{y}_c)$ of the ROI, as well as the absolute differences between the true $(w, h)$ and calculated $(\hat{w}, \hat{h})$ values of the dimensions of the ROI:

$$\Delta = |x_c - \hat{x}_c| + |y_c - \hat{y}_c| + |w - \hat{w}| + |h - \hat{h}| \qquad (8)$$

We use these values to determine the maximum velocities of moving objects that can be registered with the method. They only apply when the ROI’s ground truth coordinates and sizes are known, for example, when dealing with synthetic test data. To assess the average deviation between the true position of the moving object and the detected one for a given tracking sequence, we define the following quantity:

$$\bar{\Delta} = \frac{1}{N} \left( \sum_{k=1}^{N} \Delta_k \right) \qquad (9)$$

In Eq. (9), $N$ is the total number of frames, and the value in brackets is the sum of the values of Eq. (8) over the corresponding frames. Eq. (9) thus represents the average value of Eq. (8) over $N$ frames. We apply the measure in Eq. (9) to explore the influence of the background image contrast on the accuracy of our tracking algorithm. Image contrast is defined as in [34]: the root-mean-square deviation of the pixel intensity from the mean pixel intensity for the whole frame, divided by the mean pixel intensity for the entire frame. Each color channel has a specific background contrast value. It can affect the optical flow reconstruction quality and, accordingly, the quality of the ROI tracking.
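The ground-truth error measures and the contrast definition above can be written compactly in Python. This is a sketch under stated assumptions: the per-frame error is taken as a plain sum of absolute differences in center coordinates and dimensions, which is one reading of the "combination" in Eq. (8). The box layout `(cx, cy, w, h)` and all function names are hypothetical.

```python
import numpy as np


def tracking_error(true_box, roi_box):
    """Per-frame error: sum of absolute differences of (cx, cy, w, h).

    Assumed form of Eq. (8); boxes are (center_x, center_y, width, height).
    """
    diff = np.asarray(true_box, dtype=float) - np.asarray(roi_box, dtype=float)
    return float(np.sum(np.abs(diff)))


def mean_tracking_error(true_boxes, roi_boxes):
    """Average of the per-frame error over a sequence, as in Eq. (9)."""
    errors = [tracking_error(t, r) for t, r in zip(true_boxes, roi_boxes)]
    return float(np.mean(errors))


def rms_contrast(frame):
    """RMS contrast per channel: standard deviation divided by the mean.

    Accepts (rows, cols) or (rows, cols, channels); the channel mean is
    assumed to be non-zero.
    """
    f = np.atleast_3d(np.asarray(frame, dtype=float))
    return f.std(axis=(0, 1)) / f.mean(axis=(0, 1))
```

For an RGB frame, `rms_contrast` returns three values, one per channel, matching the remark that each color channel has its own background contrast.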

Initial ROI placement also affects the accuracy of the method. To examine what the optimal size of the initial ROI is, we define in Eq. (10) the ratio $K$ between the ROI area $A_{ROI}$ and the object area $A_{obj}$.

If one wants to verify that the ROI tracks the object accurately but does not have access to the true ROI center position and dimensions (as required in Eq. (8)), one can use the relative mismatch $RM_k$ introduced in Eq. (11). In Eq. (11), $I_k$ is the image in the ROI in the $k$-th frame, resampled to the pixel size of the initial ROI, $I_1$ is the image from the initial ROI in the first frame, $k$ is the index of the current frame, and $i$ is a summation index over all the pixels of $I_k$ and $I_1$. In our tests, we will show that the quantity of Eq. (8) and the relative mismatch $RM$ are highly correlated using two correlation measures: the Pearson correlation coefficient and the nonlinear association index $h^2$, developed in [35]. That would mean one might use the relative mismatch $RM$ to give a qualitative measure of the accuracy of the method in real-world data where the true positions and sizes of moving objects are unknown.
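A ground-truth-free mismatch of this kind can be sketched as follows. The normalization used here (sum of absolute pixel differences divided by the summed absolute intensity of the initial ROI image) is an assumption and may differ from the exact form of Eq. (11). The nearest-neighbour resampling and both function names are likewise hypothetical choices made to keep the example self-contained.

```python
import numpy as np


def resample_nearest(patch, shape):
    """Nearest-neighbour resampling of a 2-D or 3-D patch to (rows, cols)."""
    patch = np.asarray(patch, dtype=float)
    rows = np.arange(shape[0]) * patch.shape[0] // shape[0]
    cols = np.arange(shape[1]) * patch.shape[1] // shape[1]
    return patch[rows][:, cols]


def relative_mismatch(initial_patch, current_patch):
    """Mismatch between the current ROI image and the initial ROI image.

    Assumed normalization: sum |I_k - I_1| / sum |I_1| over all pixels.
    """
    ref = np.asarray(initial_patch, dtype=float)
    cur = resample_nearest(current_patch, ref.shape[:2])
    denom = np.sum(np.abs(ref))
    if denom == 0:
        raise ValueError("initial ROI image has zero total intensity")
    return float(np.sum(np.abs(cur - ref)) / denom)
```

A value near zero means the content of the current ROI still resembles the initial ROI after rescaling, while growing values suggest that the ROI has drifted away from the object or that its size no longer follows the object.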

The precision value is a measure used in the literature for tracking performance evaluation. It is defined as the ratio between the number of frames $N_{d<\tau_d}$ in which the center location error $d$ is below some threshold $\tau_d$ and the total number of frames $N$ in the sequence:

$$P(\tau_d) = \frac{N_{d<\tau_d}}{N} \qquad (12)$$

Another measure is the success rate, which also considers the ROI box’s size and compares it to ground truth. It is defined as the relative number of frames in which the area of intersection between the tracked ROI and the ground truth bounding box, divided by the area of their union, is larger than some threshold $\tau_o$:

$$S(\tau_o) = \frac{1}{N} \sum_{k=1}^{N} \mathbf{1}\left(IoU_k > \tau_o\right) \qquad (13)$$

Here $IoU_k$ is the intersection-over-union between the tracking region of interest of the $k$-th frame and the ground truth region of interest. The function $\mathbf{1}(\cdot)$ is an indicator which returns a value of “1” if $IoU_k$ is above the current threshold $\tau_o$, and “0” otherwise.
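The two benchmark measures can be computed as in the short Python sketch below. The box layout `(x_min, y_min, width, height)`, the Euclidean center distance, and the function names are assumptions made for illustration. Benchmark toolkits may differ in details such as whether the threshold comparison is strict.

```python
import numpy as np


def iou(box_a, box_b):
    """Intersection-over-union of two (x_min, y_min, width, height) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def box_center(box):
    """Center point of an (x_min, y_min, width, height) box."""
    x, y, w, h = box
    return np.array([x + w / 2.0, y + h / 2.0])


def precision(tracked, truth, dist_threshold):
    """Fraction of frames whose center error is below the threshold."""
    dists = np.array(
        [np.linalg.norm(box_center(t) - box_center(g)) for t, g in zip(tracked, truth)]
    )
    return float(np.mean(dists < dist_threshold))


def success_rate(tracked, truth, iou_threshold):
    """Fraction of frames whose IoU with the ground truth exceeds the threshold."""
    ious = np.array([iou(t, g) for t, g in zip(tracked, truth)])
    return float(np.mean(ious > iou_threshold))
```

Sweeping `dist_threshold` and `iou_threshold` over a range of values and plotting the results gives precision and success curves of the kind commonly reported for tracking benchmarks.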

We note that the last two quantifiers of tracking quality, just like those defined by Eqs. (8) and (9), depend on the existence of an "unequivocal" ground truth. For a general assessment of tracking quality, the quantity introduced by Eq. (11) applies to complex objects and scenes.

3. Results

Tracking Capabilities

To show the feasibility of our method, we started by creating simple test cases with only a single moving object. Initially, we made tests of movements comprising only one of the primary generators in Eq. (5). The test methodology is as follows:

- Generate an initial image, in our case, a Gaussian spot with a starting size and coordinates on a homogeneous background

- Specify the coordinates and size of the first region of interest, R1

- Transform the initial image with any number of basic movement generators, as described in Figure 1, to arrive at an image sequence

- Using the GLORIA algorithm, calculate the transformation parameters

- Update the ROI according to Eq. (7)

- Compare the properties of the regions of interest: coordinates and size
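The data-generation and ROI-update steps of this procedure can be sketched in Python as follows. The update rule shown is an assumed first-order form (center shifted by the translation rates, width and height scaled by one plus the dilatation rate) and stands in for Eq. (7) only as an illustration. The function names, the `(cx, cy, w, h)` ROI layout, and the geometric growth of the spot size are hypothetical.

```python
import numpy as np


def gaussian_spot(shape, center, sigma):
    """Gaussian spot on a homogeneous (zero) background; center is (x, y)."""
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    cx, cy = center
    return np.exp(-((cols - cx) ** 2 + (rows - cy) ** 2) / (2.0 * sigma ** 2))


def synthetic_sequence(shape, start, sigma, velocity, growth, n_frames):
    """Frames of a spot translating by `velocity` (pixels/frame) and
    growing by the relative rate `growth` per frame, plus ground truth."""
    frames, truth = [], []
    for k in range(n_frames):
        center = (start[0] + k * velocity[0], start[1] + k * velocity[1])
        size = sigma * (1.0 + growth) ** k
        frames.append(gaussian_spot(shape, center, size))
        truth.append((center[0], center[1], size))
    return frames, truth


def update_roi(roi, rates):
    """Assumed first-order ROI update from (tx, ty, dilatation) rates.

    roi is (center_x, center_y, width, height); the dilatation is applied
    about the ROI center, preserving the aspect ratio.
    """
    cx, cy, w, h = roi
    tx, ty, dil = rates
    return (cx + tx, cy + ty, w * (1.0 + dil), h * (1.0 + dil))
```

In a test loop, the rates estimated between consecutive frames inside the current ROI are passed to `update_roi`, and the resulting ROI is compared with the stored ground truth for that frame.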

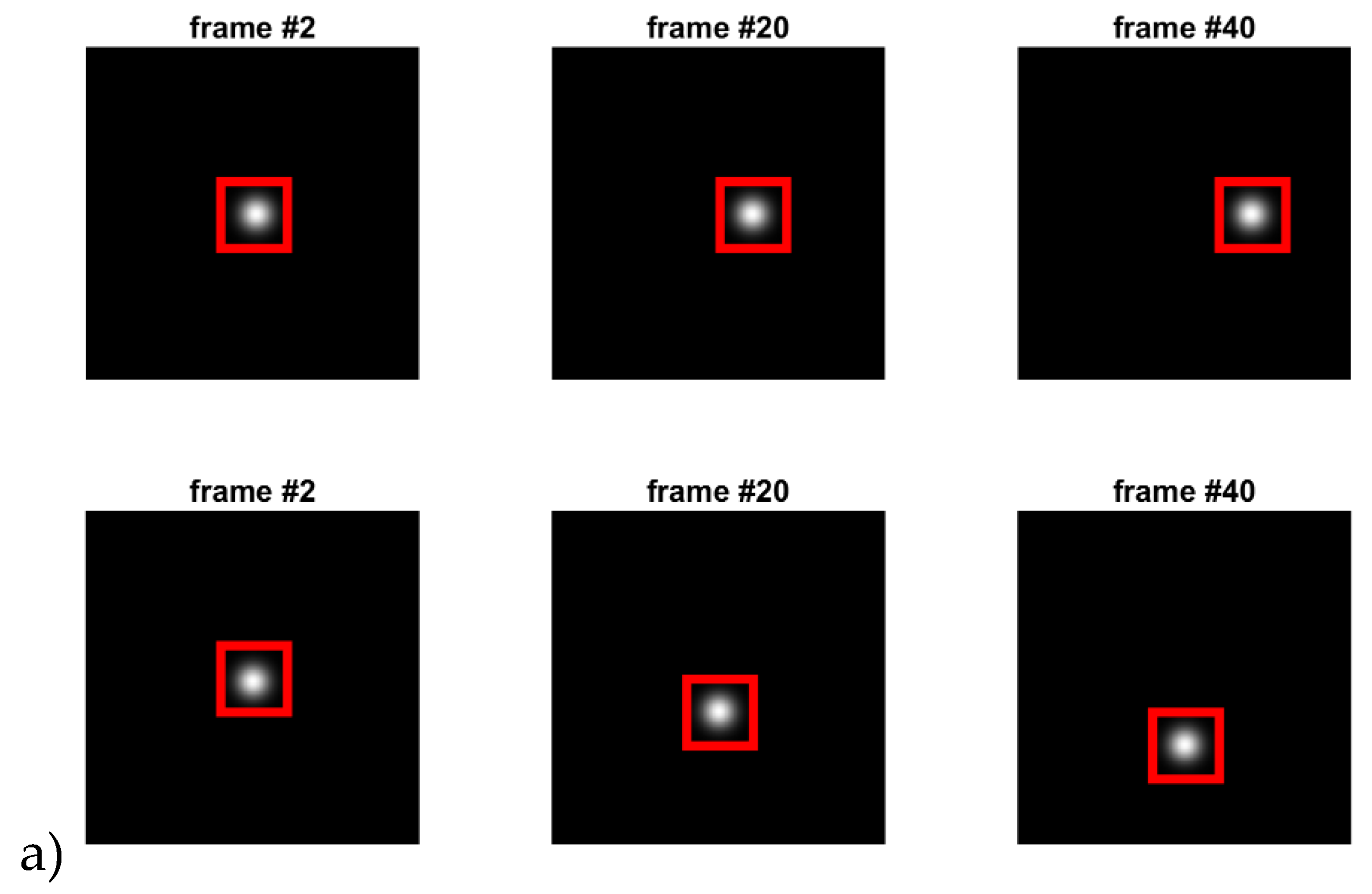

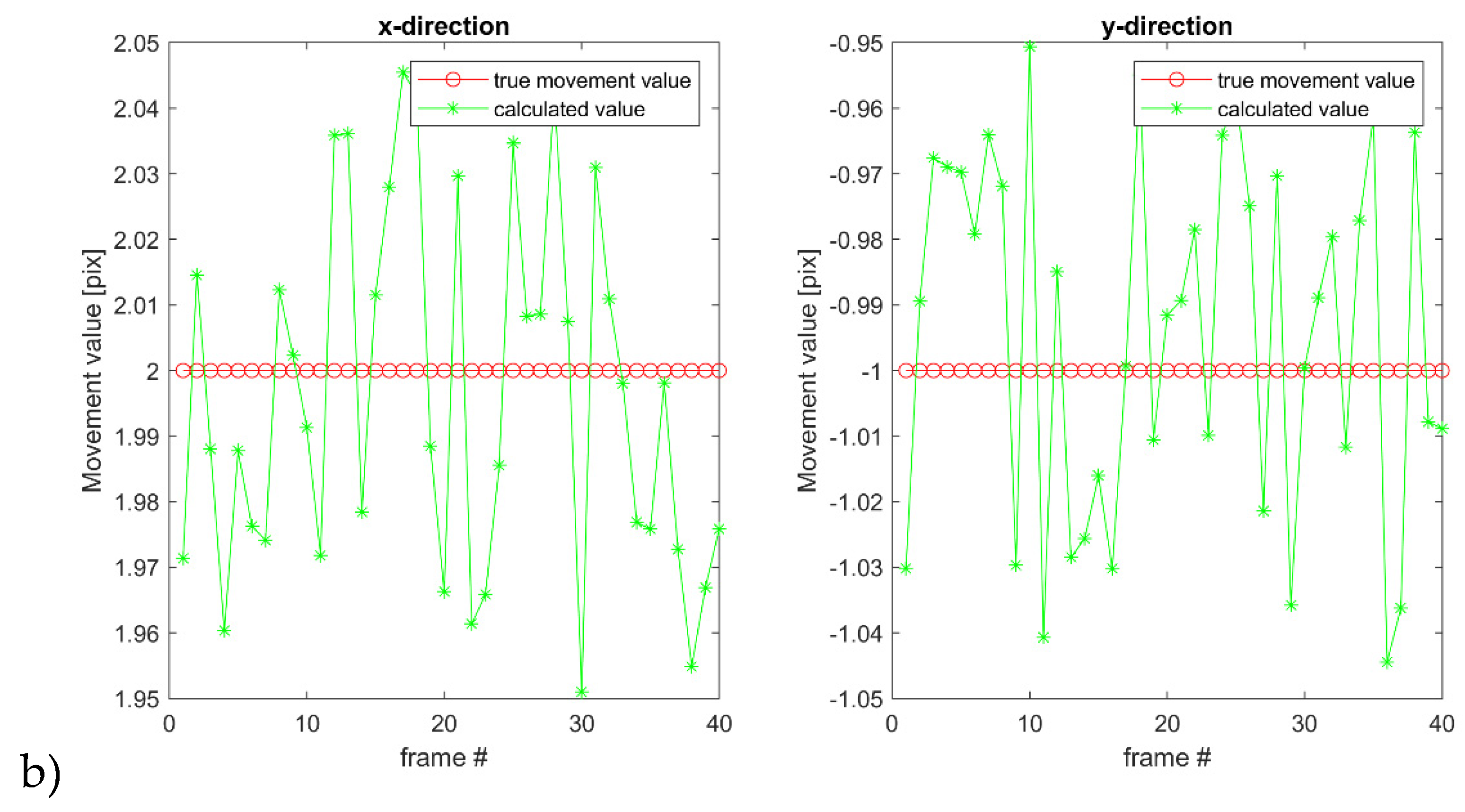

For translations in only the $x$ or $y$ direction, the method proved to be very effective.

Figure 4 a) shows the Gaussian “blob” moving with a speed of 2 pixels per frame to the right, while in the second row, the object’s velocity is 1 pixel per frame.

Figure 4 b) shows how much the calculated values deviated from the original. Although there is some spread, the final region of interest selection uses integer values for pixel coordinates, meaning that the computed values are rounded. After rounding the values calculated by GLORIA and applying Eq. (8) and Eq. (11), the two resulting measures show a positive linear correlation with a Pearson coefficient value of 1. Therefore, the complete positive linear correlation between the two measures of tracking precision shows that the relative mismatch of Eq. (11) can be used instead of the measure of Eq. (8) for translational motion tracking assessment.

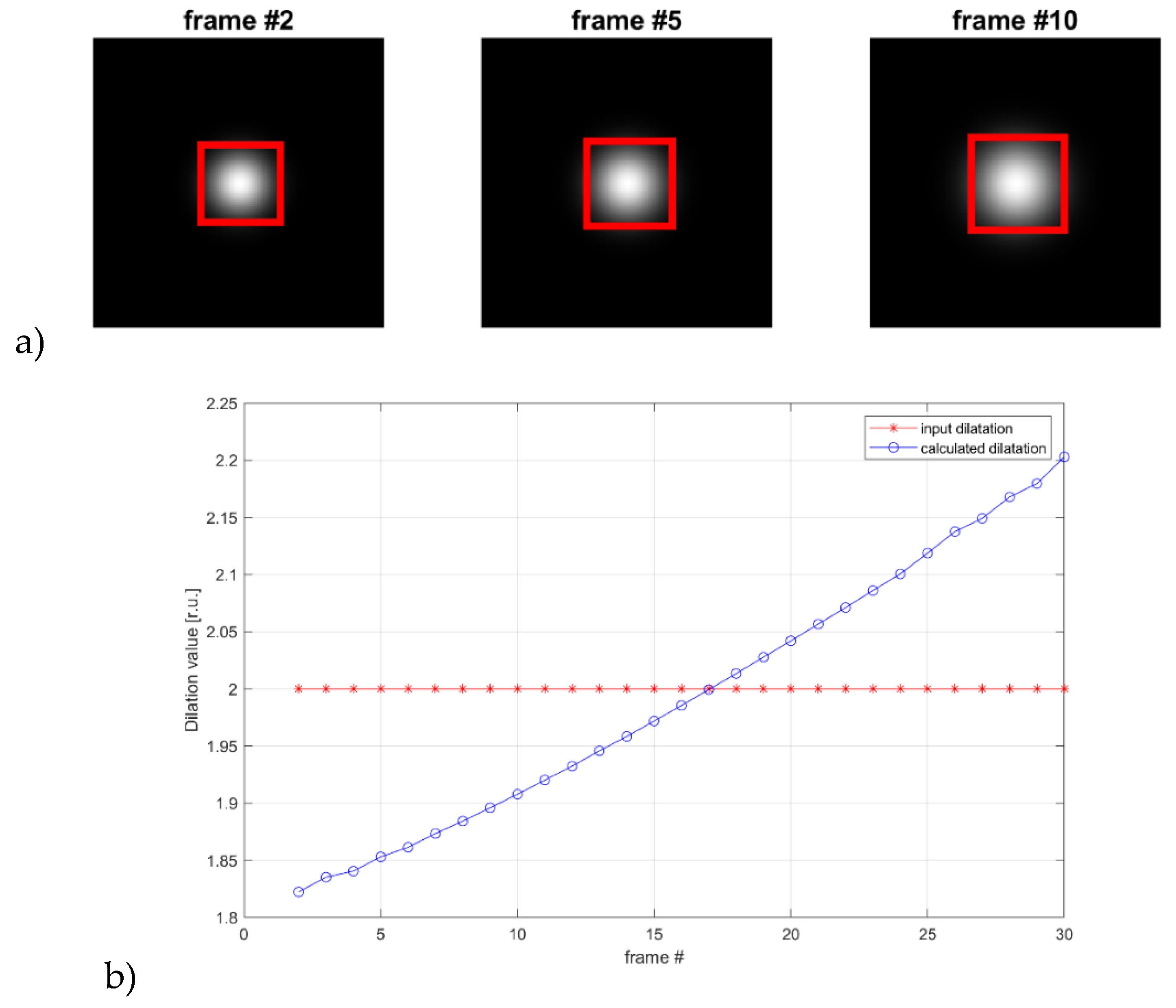

Further, we demonstrate the usefulness of the GLORIA algorithm when estimating the dilatational transformation rate (Figure 5). In this test, the size of the observed object is increased by a fixed amount with each frame. The algorithm successfully detected the scaling of the object:

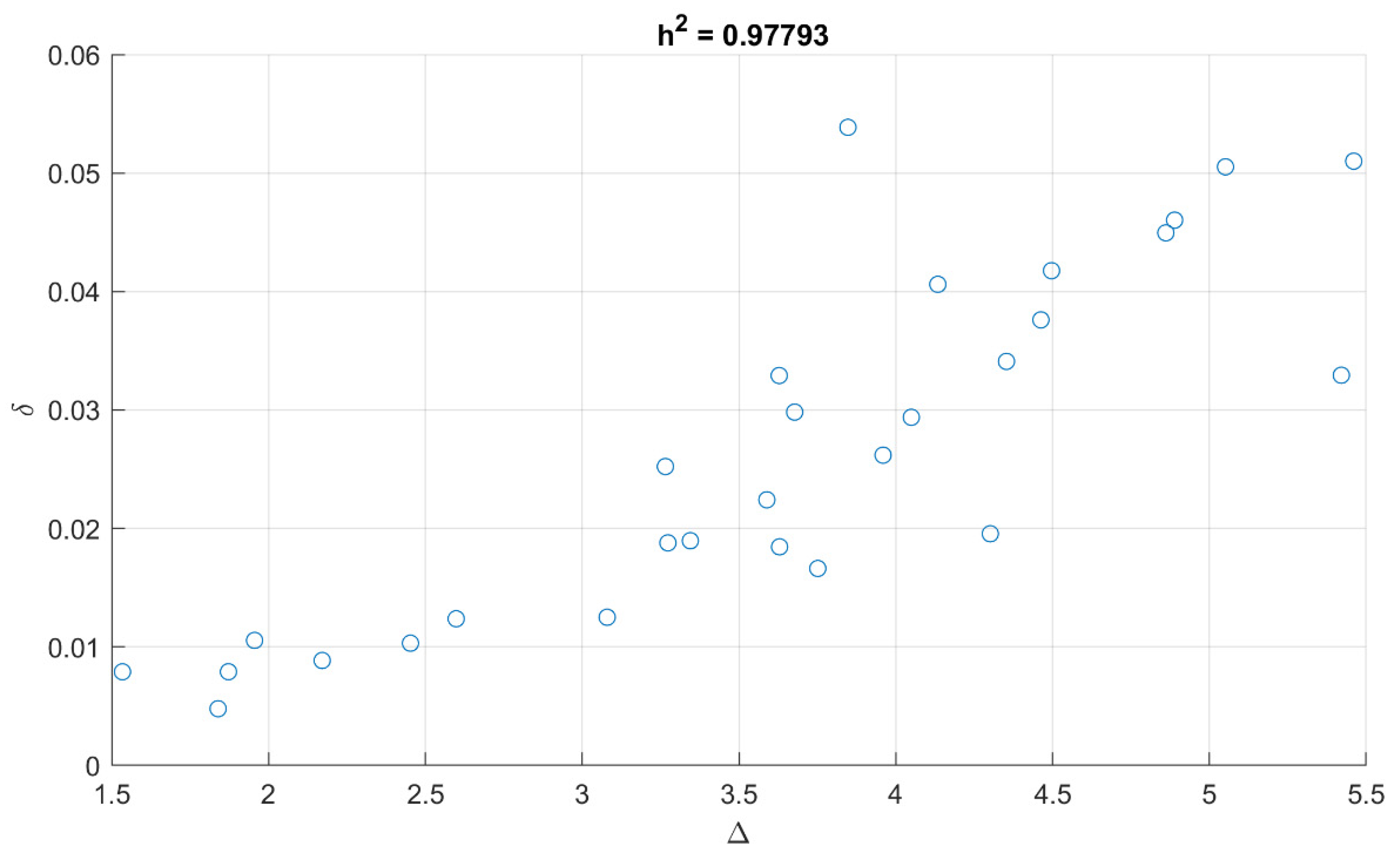

The mismatch values of Eq. (8) and Eq. (11) are calculated again. However, this time, they only exhibit a partial linear correlation. We provide their scatter plot in Figure 6.

The value of the nonlinear association index $h^2$ shows that the two measures have a high nonlinear correlation. The variance of values obtained by Eq. (8) can be explained by the variance of values obtained by Eq. (11) for dilatational movements.
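The difference between the two correlation measures can be illustrated with a short Python sketch. The `nonlinear_association` function below is a simplified, bin-mean variant (the fraction of the variance of one variable explained by a piecewise-constant function of the other) and is only an approximation of the index developed in [35]. The function names and the number of bins are assumptions.

```python
import numpy as np


def pearson(x, y):
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(np.asarray(x, float), np.asarray(y, float))[0, 1])


def nonlinear_association(x, y, n_bins=10):
    """Fraction of the variance of y explained by bin means of y given x.

    Simplified stand-in for the h^2 index: x is split into equal-width
    bins and y is predicted by its mean within each bin.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    edges = np.linspace(x.min(), x.max(), n_bins + 1)
    idx = np.digitize(x, edges[1:-1])  # bin index in 0 .. n_bins - 1

    fitted = np.empty_like(y)
    for b in range(n_bins):
        mask = idx == b
        if mask.any():
            fitted[mask] = y[mask].mean()

    total = np.sum((y - y.mean()) ** 2)
    if total == 0:
        return 0.0
    return float(1.0 - np.sum((y - fitted) ** 2) / total)
```

For a symmetric quadratic relation such as `y = x**2` on `[-1, 1]`, the Pearson coefficient is close to zero while this index stays high, which is the situation where a linear measure alone would understate how closely two mismatch measures follow each other.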

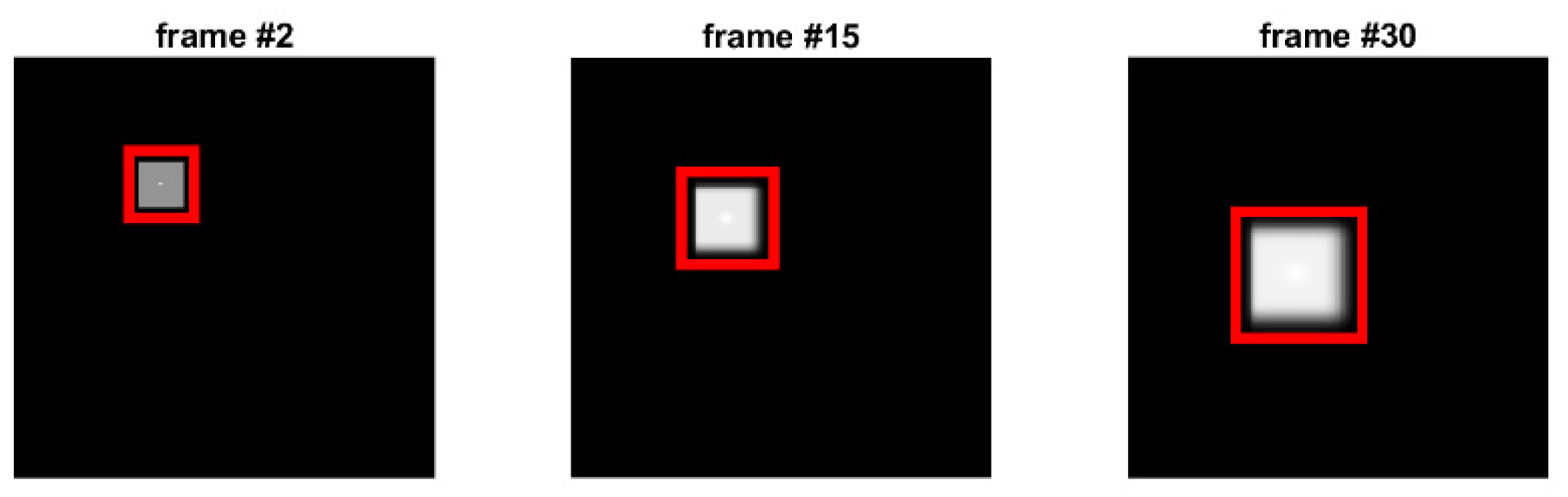

The next step is to show the tracking capabilities of our method when multiple types of movement are involved, as illustrated in Figure 7. We have prepared a test where both translations and dilatation are present.

We applied both Eq. (8) and Eq. (11) to this test to show that both measures are highly correlated and that the relative mismatch of Eq. (11) can be used for cases where no ground truth is available. The linear correlation between the two measures is much lower than the nonlinear association index $h^2$, which accounts for arbitrary functional relations. The measured $h^2$ between the two mismatch measures is 0.8103. In other words, the variance of the values given by Eq. (8) can be explained by the variance of the values given by Eq. (11), and this fact, alongside the results from Figure 4 and Figure 6, allows using the relative mismatch for real-world data.

In Figure 8, we combined different types of movement and changed the scene’s background. We tested both low-contrast and high-contrast backgrounds. Our method works both with grayscale and RGB data.

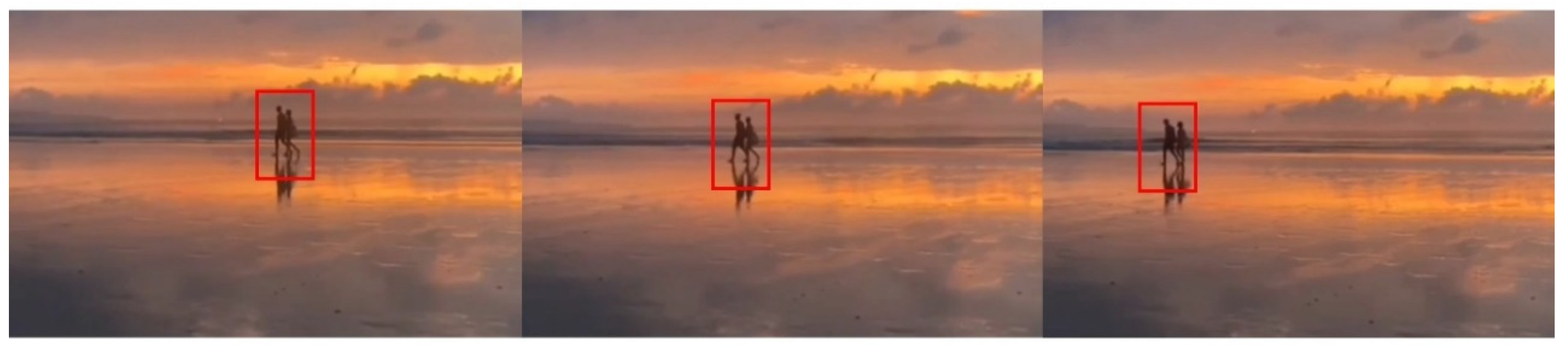

After the initial tests, we applied the method to various real-world tracking scenarios, which showed accurate tracking results as well. We started with a video sequence that contained only one moving subject to track against a relatively static background (Figure 9). The method successfully estimated a proper region of interest around the moving objects:

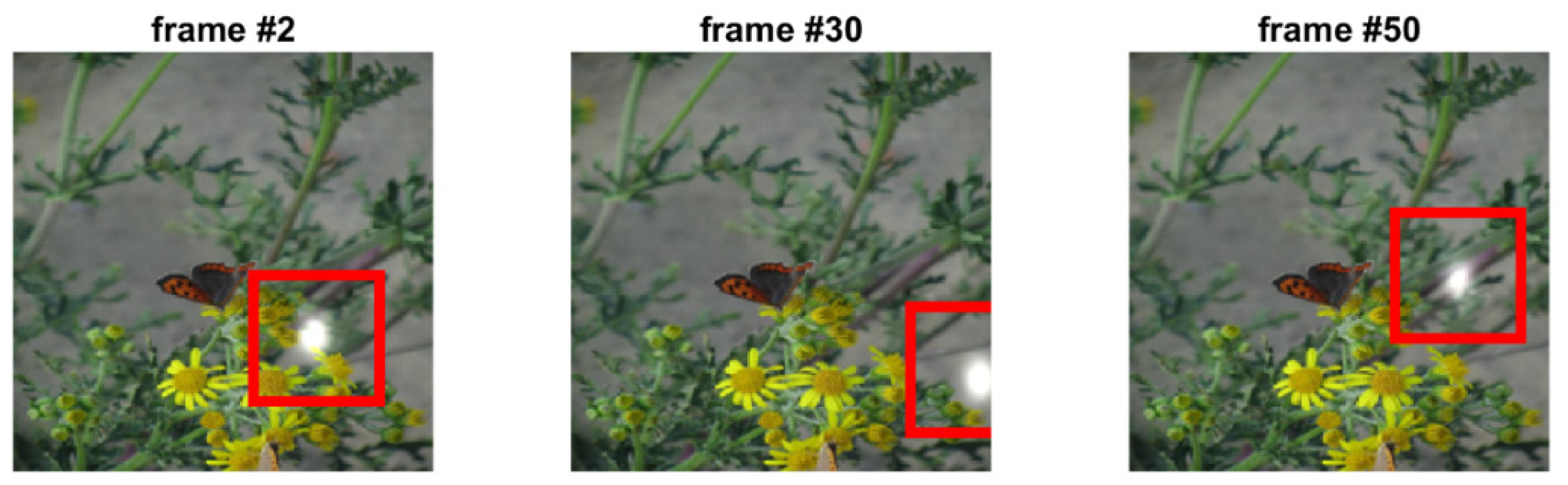

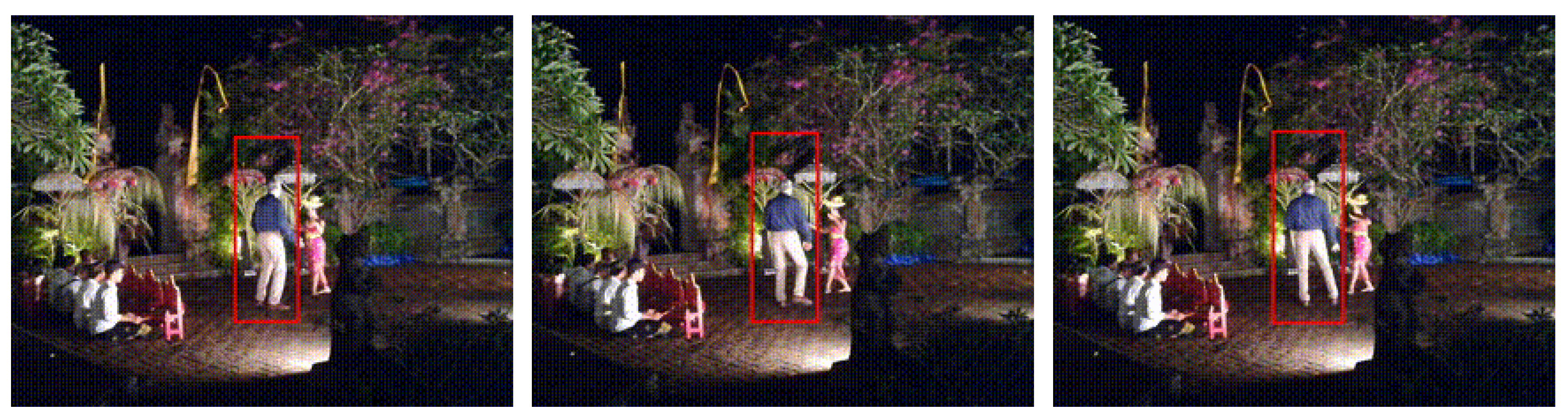

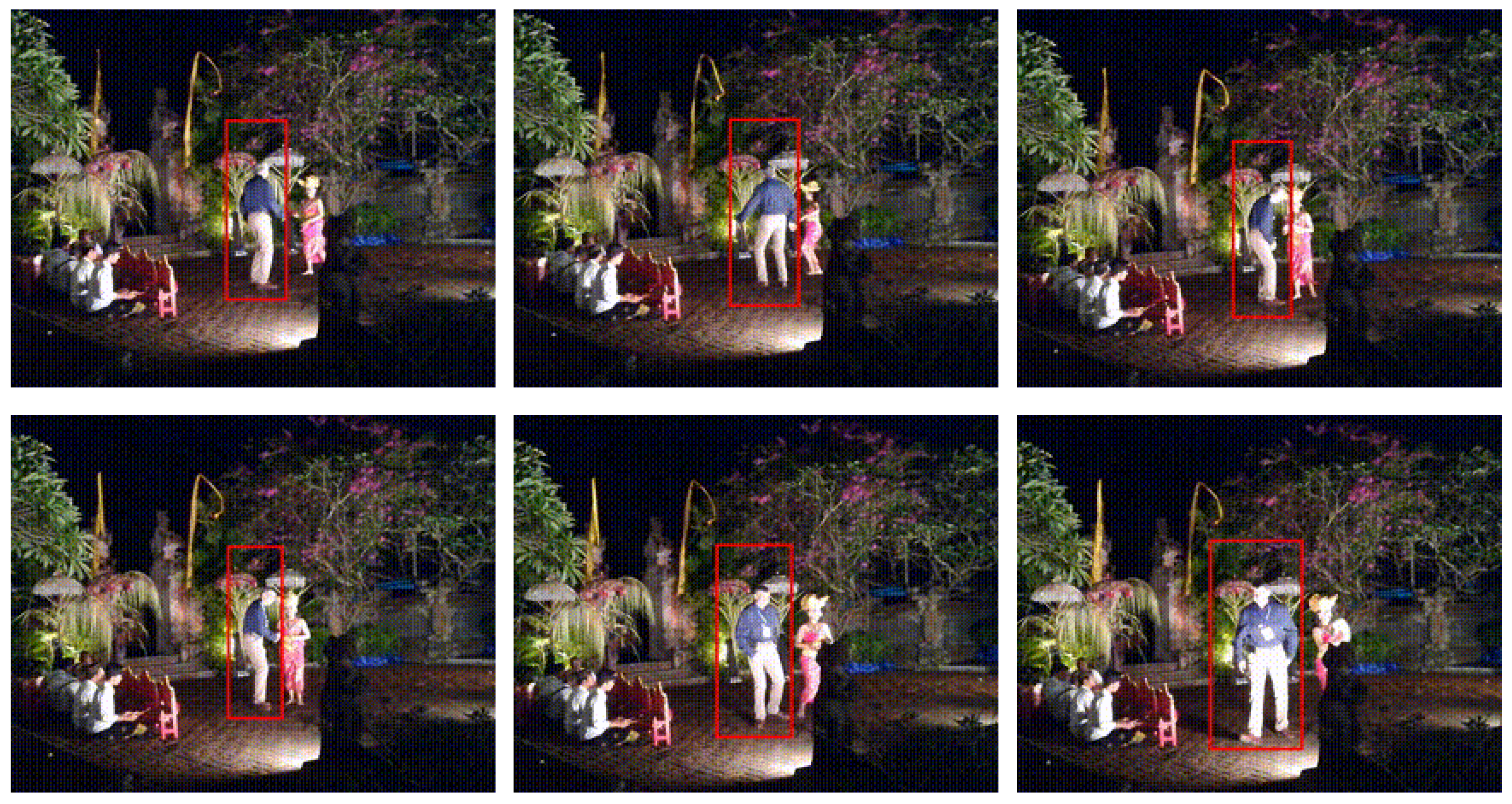

Finally, we tested a dynamic scene (Figure 10) with multiple moving objects and a high-contrast background.

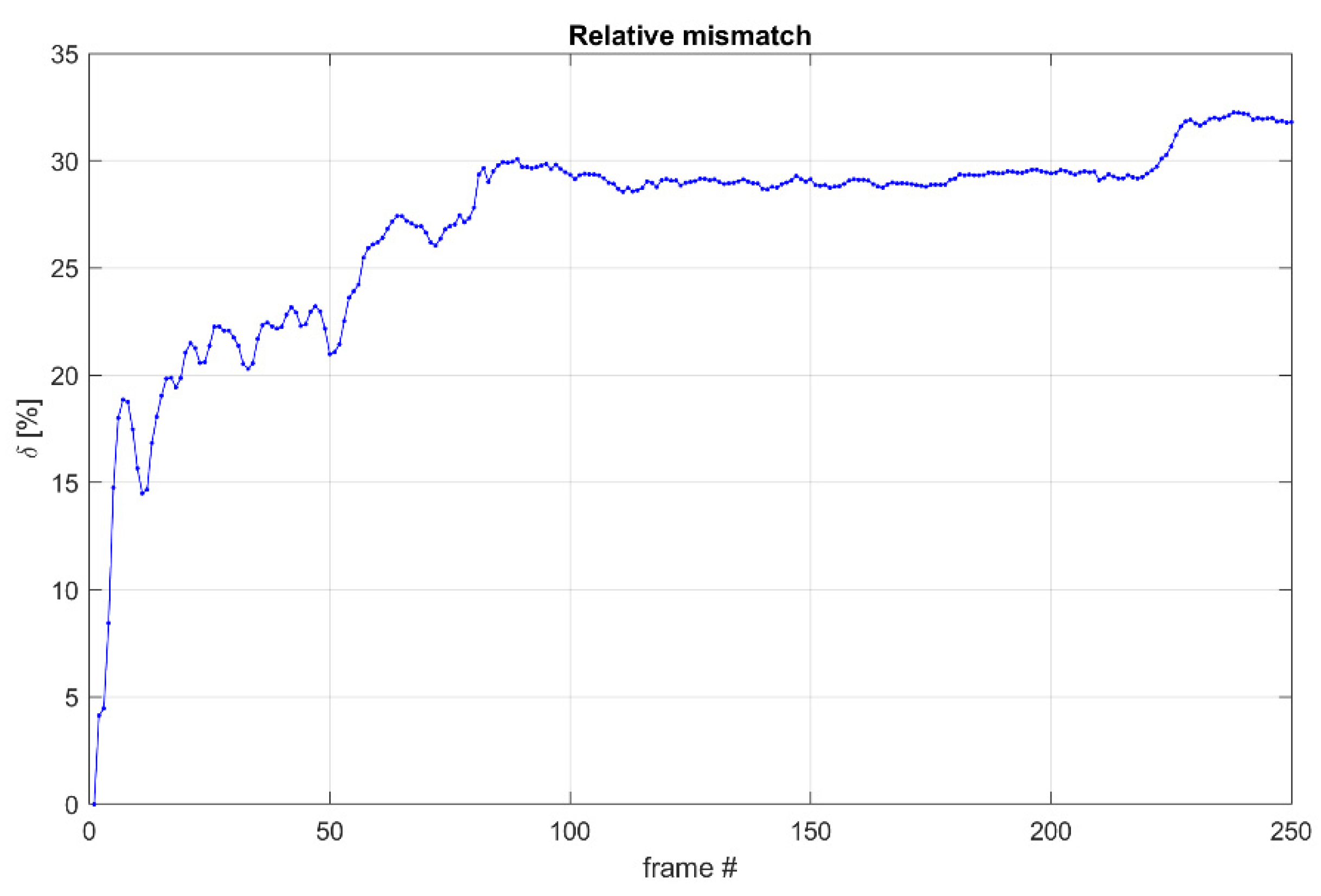

The results in Figure 10 show the benefits of the proposed method. It can easily track the person in the ROI throughout the frames. Although the background is complex and there are other moving objects, the ROI stays centered around the man and changes size according to the distance to the camera (as can be observed in the last presented frame). The relative mismatch of Eq. (11) for the video sequence in Figure 10 is shown in Figure 11.

To test the performance of our method with test data provided for the evaluation of tracking methods [20], we have applied it to a sample of image sequences from the LaSOT dataset [36]. We provide the precision (Eq. (12)) and success (Eq. (13)) plots in Figure 12. The initial ROI for our method is the same as the ROI from the first image in the specific LaSOT image sequence.

Our method shows higher precision and success values in this example than the dataset-averaged performance of any of the other tested methods [20]. This is certainly not a conclusive comparison, but it still indicates that the proposed technique provides promising tracking abilities.

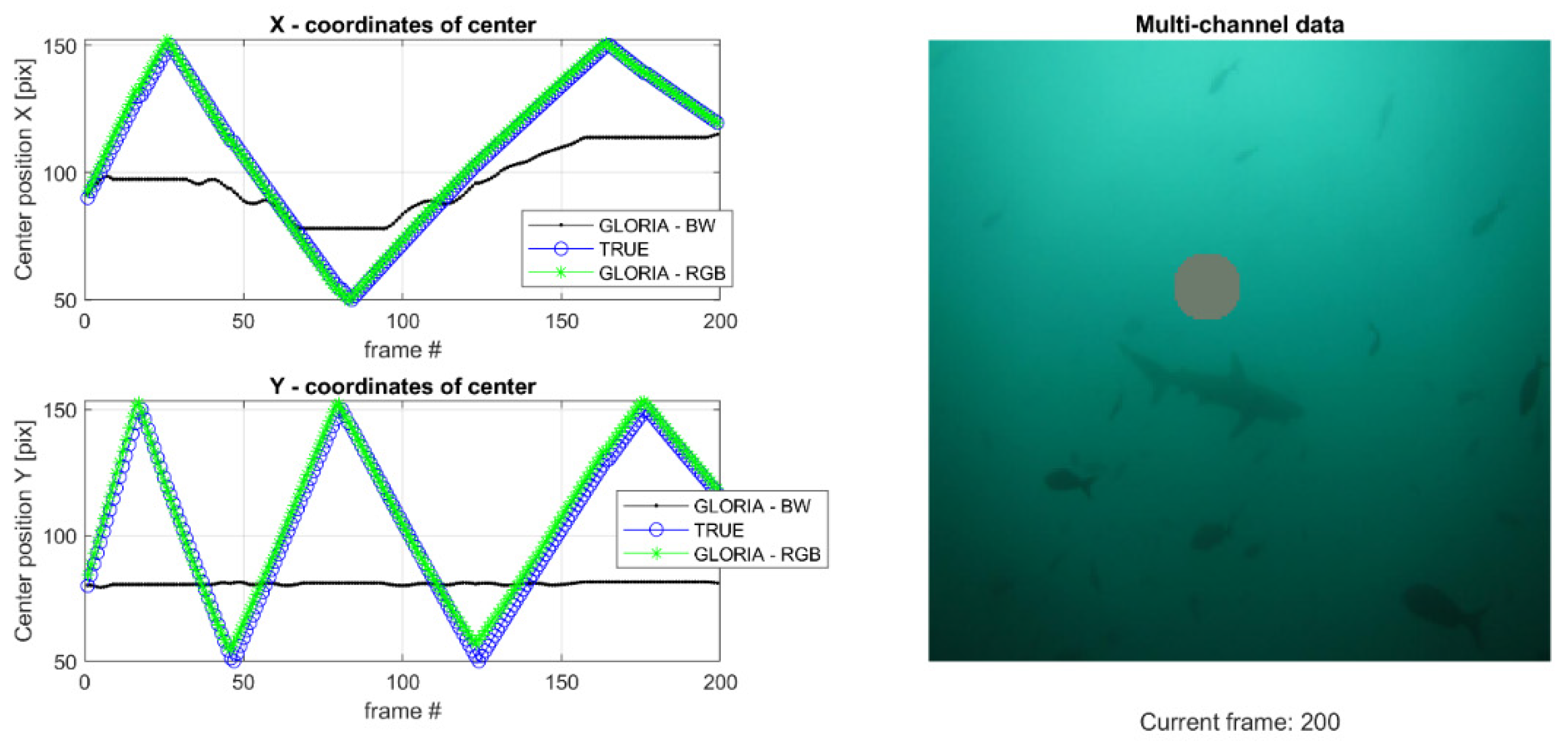

Our method works significantly better when multi-spectral data is used. This is a consequence of the GLORIA algorithm, which provides an early fusion of all spectral components and reduces any contrast-related ambiguities for the group parameter reconstruction. We have prepared an example demonstrating the importance of multi-channel data (in our case, the use of colored image sequences). The test is presented in Figure 13. We have prepared a moving object (circle) on a low-contrast background image. The moving object is not trackable in grayscale but is successfully tracked when the video has all three color channels.

Tracking Limitations

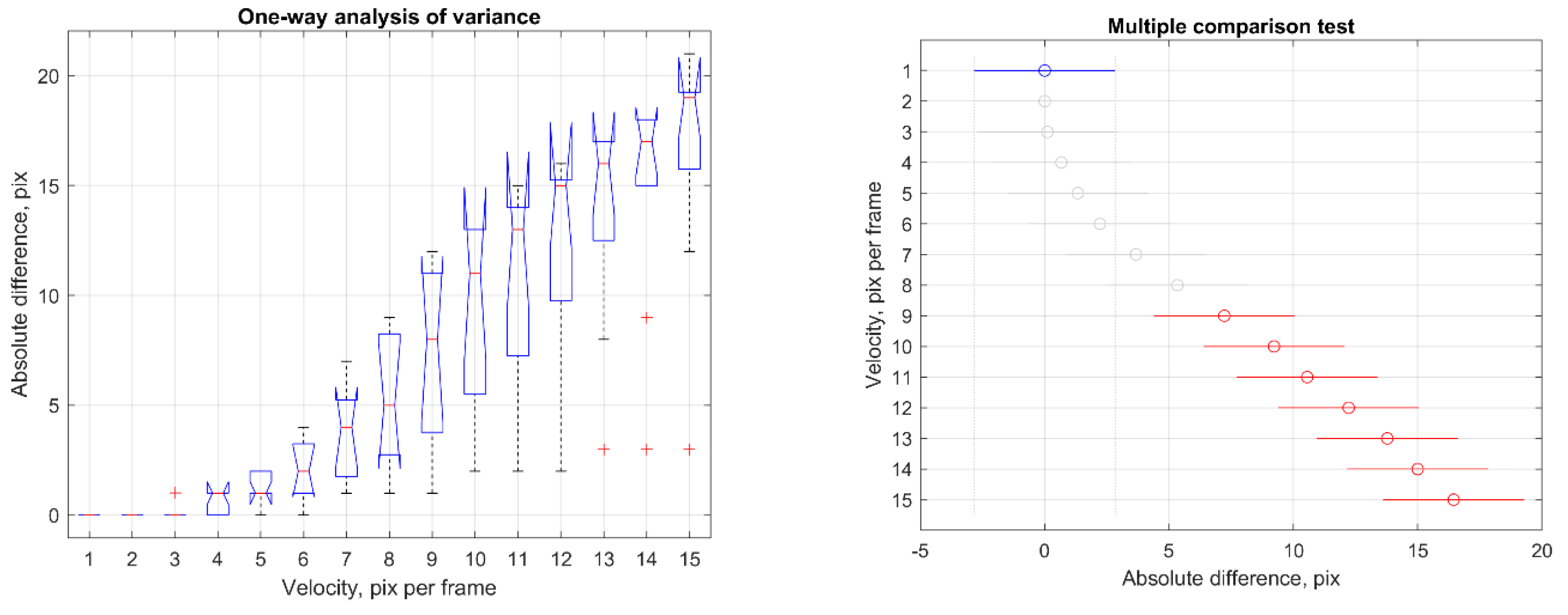

Several limitations apply when using the method presented in the current work. One is the maximum speed with which an object can move and still be tracked by our method. To find the extent of this limitation, we made numerous simulations of a circular spot moving with varying speeds on a homogeneous background. We use Eq. (8) to compare the method’s accuracy for varying object velocities. The means of the quantities of Eq. (8) were analyzed for movement spread over twenty consecutive positions (frames), and the results are summarized by a one-way analysis of variance test (Figure 14).

The tests show that the tracking method becomes less reliable for velocities over seven pixels per frame, and some inaccuracies become apparent.

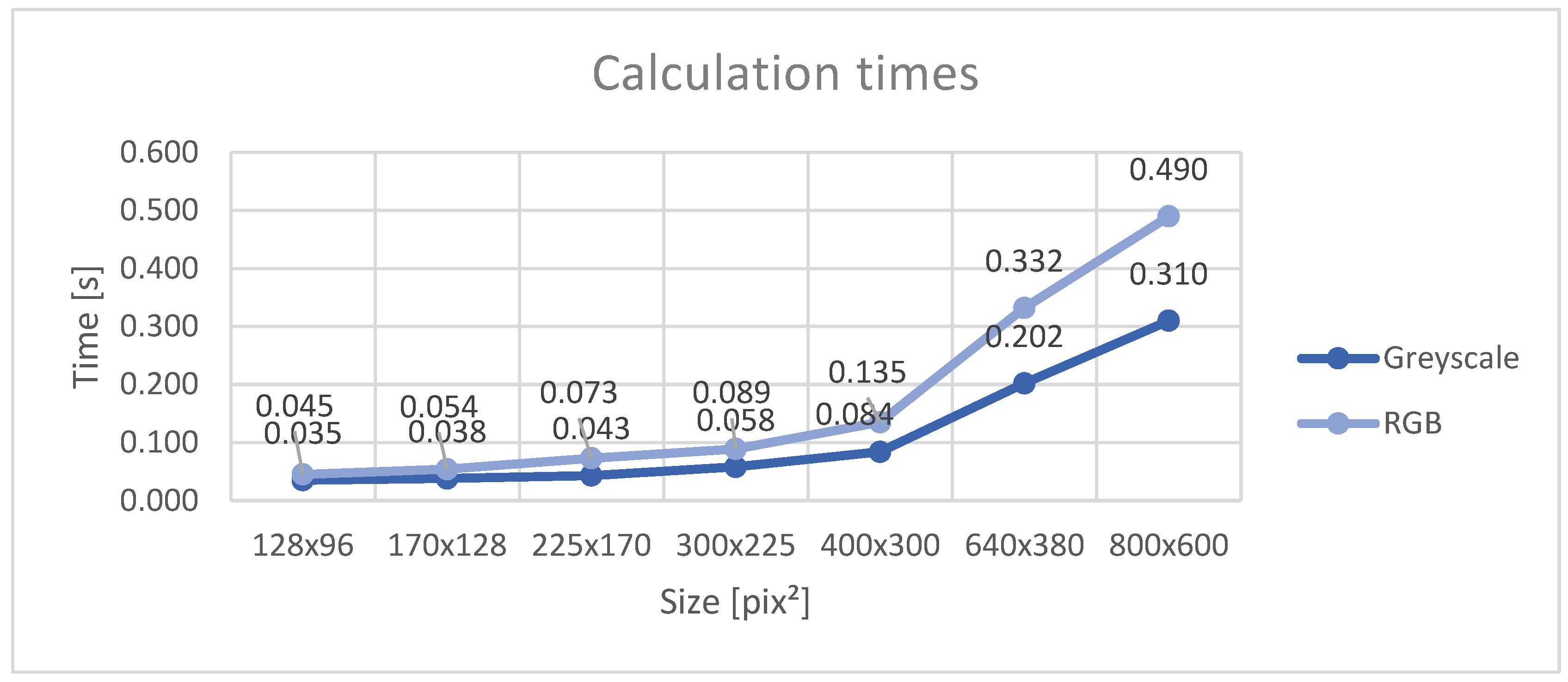

Another critical detail of our method is its applicability to real-time image sequences. It is limited by the processing time needed to update the ROI between frames. We investigated how fast our algorithm is on the following personal computer: Lenovo™ IdeaPad 130-15IKB laptop with an Intel® Core™ i5-8250 CPU and 20 GB of DDR4-2133 MHz SODIMM random access memory. Results for processing time depending on ROI size are presented in Figure 15.

The graph shows that real-time calculations between consecutive frames can be done for smaller ROIs. However, our initial tests with a PTZ camera have shown that updating the ROI between every two frames is not always necessary, leaving even more room for real-time applications. Newer systems would likely also deliver significantly faster results.
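A timing experiment of this kind can be set up as in the Python sketch below, which measures the best-of-several wall-clock time of an ROI update for a range of square ROI sizes and compares it with the frame period. The `gradient_workload` function is only a stand-in computation with a similar per-pixel cost structure and is not the GLORIA algorithm. All names, the random test data, and the frame rate used in the comparison are assumptions.

```python
import time

import numpy as np


def time_roi_update(update_fn, roi_sizes, channels=3, repeats=5, seed=0):
    """Best-of-`repeats` time (seconds) of update_fn for square ROIs."""
    rng = np.random.default_rng(seed)
    timings = {}
    for size in roi_sizes:
        frame0 = rng.random((size, size, channels))
        frame1 = rng.random((size, size, channels))
        best = float("inf")
        for _ in range(repeats):
            start = time.perf_counter()
            update_fn(frame0, frame1)
            best = min(best, time.perf_counter() - start)
        timings[size] = best
    return timings


def gradient_workload(frame0, frame1):
    """Stand-in workload (spatial and temporal differences), NOT GLORIA."""
    d_t = frame1 - frame0
    d_y, d_x = np.gradient(0.5 * (frame0 + frame1), axis=(0, 1))
    return float(np.sum(d_x * d_t)), float(np.sum(d_y * d_t))


def real_time_capable(timings, fps):
    """True for every ROI size whose update fits within one frame period."""
    return {size: t < 1.0 / fps for size, t in timings.items()}
```

Calling `real_time_capable(time_roi_update(gradient_workload, [64, 128, 256]), fps=25)` indicates which ROI sizes fit into a 40 ms frame budget on a given machine. If the ROI is updated only every second frame, the available budget doubles.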

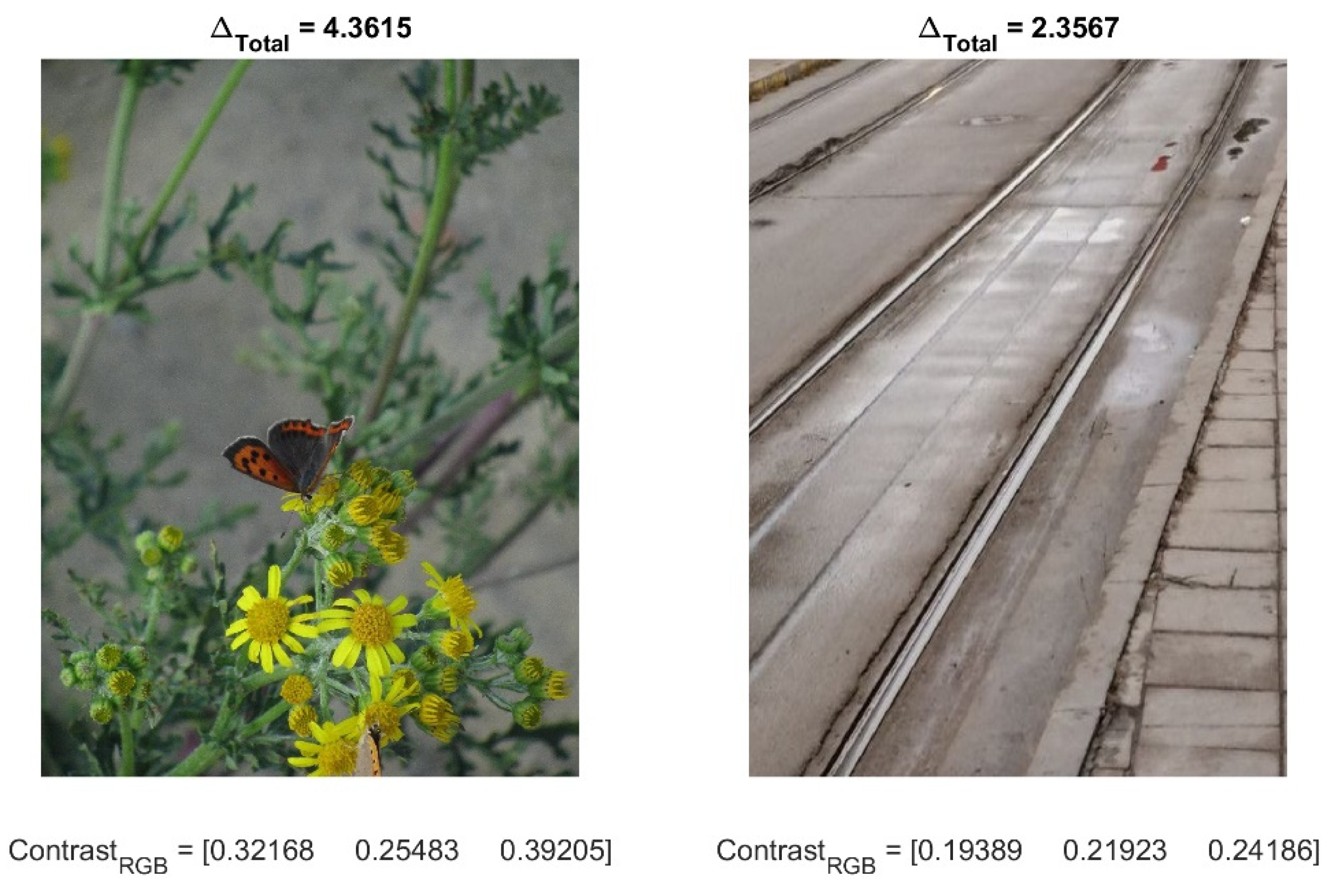

The contrast of an image can also affect the accuracy of the presented method. We tested our method using a moving Gaussian spot on backgrounds with different contrasts (Figure 16). The total error, as defined in Eq. (9), is given in the title of each background picture.

These results show that we can expect less reliable behavior when the background scene’s contrast is higher. The reason is that higher background contrast within the ROI may interfere with the changes caused by the moving object and obscure the tracking. Specific actions, such as selecting a smaller initial region of interest, can reduce the deviation caused by higher contrast values.

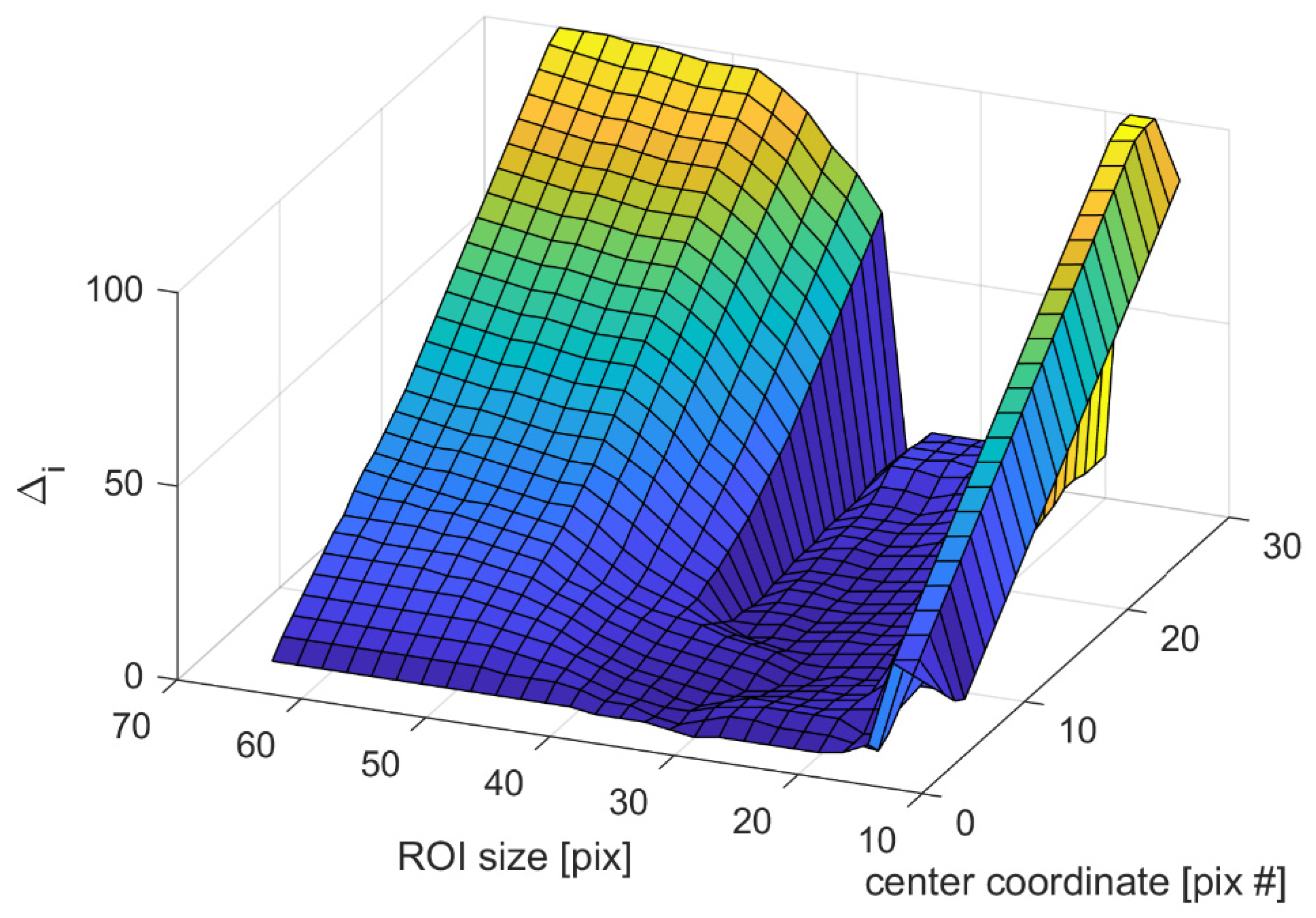

To analyze the effect that the initial ROI size has on the performance of the tracking algorithm, we devised three different sets of tests. The first test varied the length and width of the rectangular region for a moving circular spot on a homogeneous background. In the second test, various backgrounds were used, and the third analyzed real-world tracking scenarios. An example is presented in Figure 17.

We further explore the difference between the actual coordinates of the ROI and the measured ones. For the case of non-simulated videos, we examined whether or not the object of interest remained within the tracking area. An example test that examines the lower and upper boundary for the area ratio K is given in Figure 17. After selecting the ROI size, we track the object’s movement for a set number of frames. We plot the values from Eq. (8) along the z-axis as a function of the initial ROI size and the current frame position.

Our tests found that the mean lower boundary for the area ratio defined in Eq. (10) is 69%, while the mean upper boundary is 34%. For the proposed tracking algorithm’s future development, additional analysis on the effect of background contrast and autonomous initial ROI selection is underway.

4. Summary and Discussion

We propose a novel method for object tracking. It addresses the challenge of real-time object tracking with optic flow techniques. The method was applied successfully to numerous synthetic tests and real-world data, and various examples show its effectiveness. An essential feature of our approach is the reconstruction of global transformation parameters, which mitigates the computational complexity associated with most pixel-based optical flow algorithms. The method can be helpful both for virtual tracking, by dynamically adjusting a region of interest in a static wide-angle video stream, and for tracking with a mechanically steerable PTZ camera.

Remote sensing and detection of adverse and potentially dangerous events is an ever-growing necessity. In certain situations, attached sensors are not the optimal solution, or not even a possible one. Video observation provides remote functionality, but in its commonly used operator-based form, it requires constant alertness of trained personnel. For this reason, we have established a broad program dedicated to automated remote sensing algorithms. One of the currently operational systems is dedicated to real-time detection of convulsive epileptic seizures. The results presented in this work are intended to support the development of modules that deliver tracking capabilities and operate in conjunction with existing detection and alerting facilities.

One of the limitations of our approach is that we have used only group transformations that preserve the aspect ratio between the ROI axes, namely the translations and dilatations of the video image. As explained in the Methods, one argument for this is the potential application of PTZ cameras. A second argument related to operator-controlled settings is that standard, fixed aspect-ratio monitors render the video images. We will explore an extended version of our ROI adaptive control paradigm in a forthcoming study.

The comparison between the performance of the proposed ROI tracking method and that of other existing techniques for only one available data set is for reference only. We recall that our goal is to investigate the potential use of reconstructed optical flow group velocities for autonomous ROI tracking. To the best of our knowledge, no other published methodology provides such functionality. Even if other algorithms produce better tracking results in particular applications or according to some specific performance criteria, implementing them in our integrated system would require additional computational resources. In our modular approach, the optical flow reconstruction is already done for detecting epileptic motor fits, and applying it to other modules, such as ROI or PTZ tracking, involves minimal added complexity. That said, the illustrative comparison suggests that the proposed technique may be generally competitive with other tracking methods, especially if the required computational resources are considered.

Further limitations and restrictions of the method related to the velocity of the tracked object and the initial size of the region of interest are examined and listed earlier in this work. An open question that remains here is how to proceed if the algorithm "loses" the object of observation. One immediate solution is to detect the situation and alert an operator to intervene. Such an approach will, of course, undermine the autonomous operation of the system. Another possibility we are currently investigating is to introduce a dual-ROI concept where the algorithm keeps a broader observation margin that would allow for mitigating some of the limitations.

Our technique can also utilize adaptive features that reinforce performance on the fly while operating in real time. The adaptive extension is currently being studied on synthetic and real-life sequences and will be published elsewhere. Here, we note that it does not need large sets of pre-recorded training samples, unlike conventional learning techniques [14,20,21,37].

We would also point out that because the method proposed in our work is ROI-based, it can be replicated in parallel to track multiple separated objects simultaneously if the computational resources permit. A typical application of such a technique would be using a wide-angle high-resolution static camera to observe multiple targets. If, however, the objects cross paths in the camera’s field of view, rules of disambiguation should apply. This extension of the methodology goes beyond the scope of this report and is a subject of our further investigations.

Finally, we note that the method introduced in this work was primarily dedicated to the already running application for detecting adverse motion events in patients. This allowed us to restrict the optical flow reconstruction task to those transformations relevant to the PTZ camera control. The technique is, however, applicable to a broader set of situations, including applications where the camera is moving, as considered, for example, in [38]. In the clinical practice of patient observation, cameras moving on rails and/or poles are available. It is, however, the added complexity of manual control that limits their use. To achieve automated control in real time, a larger set of group transformations, including rotations and shear, may be used to track and control the camera position and orientation.

Funding

The GATE project has received funding from the European Union’s Horizon 2020 WIDESPREAD-2018-2020 TEAMING Phase 2 programme under Grant Agreement No. 857155 and Operational Programme Science and Education for Smart Growth under Grant Agreement No. BG05M2OP001-1.003-0002-C01. Stiliyan Kalitzin is partially funded by “De Christelijke Vereniging voor de Verpleging van Lijders aan Epilepsie”, Program 35401, Remote Detection of Motor Paroxysms (REDEMP).

Acknowledgments

The result presented in this paper is part of the GATE project.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kalitzin, S., et al. Automatic segmentation of episodes containing epileptic clonic seizures in video sequences. IEEE transactions on biomedical engineering 2012, 59, 3379–3385. [Google Scholar] [CrossRef]

- Geertsema, E.E., et al. Automated non-contact detection of central apneas using video. Biomedical Signal Processing and Control 2020, 55, 101658. [Google Scholar] [CrossRef]

- Geertsema, E.E., et al. Automated remote fall detection using impact features from video and audio. Journal of biomechanics 2019, 88, 25–32. [Google Scholar] [CrossRef] [PubMed]

- Choi, H., B. Kang, and D. Kim. Moving object tracking based on sparse optical flow with moving window and target estimator. Sensors 2022, 22, 2878. [Google Scholar] [CrossRef] [PubMed]

- Farag, W. and Z. Saleh. An advanced vehicle detection and tracking scheme for self-driving cars. in 2nd Smart Cities Symposium (SCS 2019). 2019. IET.

- Gupta, A., et al. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057. [Google Scholar] [CrossRef]

- Lipton, A.J., et al. Automated video protection, monitoring & detection. IEEE Aerospace and Electronic Systems Magazine 2003, 18, 3–18. [Google Scholar]

-

Wang, W., et al. Real time multi-vehicle tracking and counting at intersections from a fisheye camera. in 2015 IEEE Winter Conference on Applications of Computer Vision. 2015. IEEE.

- Kim, H. Multiple vehicle tracking and classification system with a convolutional neural network. Journal of Ambient Intelligence and Humanized Computing 2022, 13, 1603–1614. [Google Scholar] [CrossRef]

- Yeo, H.-S., B.-G. Lee, and H. Lim. Hand tracking and gesture recognition system for human-computer interaction using low-cost hardware. Multimedia Tools and Applications 2015, 74, 2687–2715. [Google Scholar] [CrossRef]

- Fagiani, C., M. Betke, and J. Gips. Evaluation of Tracking Methods for Human-Computer Interaction. in WACV. 2002.

- Hunke, M. and A. Waibel. Face locating and tracking for human-computer interaction. in Proceedings of 1994 28th Asilomar Conference on Signals, Systems and Computers. 1994. IEEE.

- Salinsky, M. A practical analysis of computer based seizure detection during continuous video-EEG monitoring. Electroencephalography and clinical Neurophysiology 1997, 103, 445–449. [Google Scholar] [CrossRef]

- Yilmaz, A., O. Javed, and M. Shah, Object tracking: A survey. Acm computing surveys (CSUR), 2006, 38, 13-es.

- Deori, B. and D.M. Thounaojam, A survey on moving object tracking in video. International Journal on Information Theory (IJIT), 2014, 3, 31–46.

- Mangawati, A., M. Leesan, and H.R. Aradhya. Object Tracking Algorithms for video surveillance applications. in 2018 international conference on communication and signal processing (ICCSP). 2018. IEEE.

- Li, X., et al. A survey of appearance models in visual object tracking. ACM transactions on Intelligent Systems and Technology (TIST) 2013, 4, 1–48. [Google Scholar]

- Piccardi, M. Background subtraction techniques: a review. in 2004 IEEE international conference on systems, man and cybernetics (IEEE Cat. No. 04CH37583). 2004. IEEE.

- Benezeth, Y., et al. Comparative study of background subtraction algorithms. Journal of Electronic Imaging 2010, 19, 033003-1–033003-12. [Google Scholar]

- Chen, F., et al. Visual object tracking: A survey. Computer Vision and Image Understanding 2022, 222, 103508.

- Ondrašovič, M. and P. Tarábek. Siamese visual object tracking: A survey. IEEE Access 2021, 9, 110149–110172.

- Doyle, D.D., A.L. Jennings, and J.T. Black. Optical flow background estimation for real-time pan/tilt camera object tracking. Measurement 2014, 48, 195–207.

- Husseini, S., A survey of optical flow techniques for object tracking. 2017.

- Kalitzin, S., E.E. Geertsema, and G. Petkov. Optical Flow Group-Parameter Reconstruction from Multi-Channel Image Sequences. in APPIS. 2018.

- Horn, B.K. and B.G. Schunck, Determining optical flow. 1980.

- Lucas, B.D. and T. Kanade. An iterative image registration technique with an application to stereo vision. in IJCAI’81: 7th international joint conference on Artificial intelligence. 1981.

- Koenderink, J.J. Optic flow. Vision research 1986, 26, 161–179.

- Beauchemin, S.S. and J.L. Barron. The computation of optical flow. ACM computing surveys (CSUR) 1995, 27, 433–466.

- Florack, L., W. Niessen, and M. Nielsen. The intrinsic structure of optic flow incorporating measurement duality. International Journal of Computer Vision 1998, 27, 263–286.

- Niessen, W. and R. Maas, Multiscale optic flow and stereo. Gaussian Scale-Space Theory, Computational Imaging and Vision. Dordrecht: Kluwer Academic Publishers, 1996.

- Maas, R., B.M. ter Haar Romeny, and M.A. Viergever. A Multiscale Taylor Series Approaches to Optic Flow and Stereo: A Generalization of Optic Flow under the Aperture. in Scale-Space Theories in Computer Vision: Second International Conference, Scale-Space’99 Corfu, Greece, September 26–27, 1999 Proceedings 2. 1999. Springer.

- Aires, K.R., A.M. Santana, and A.A. Medeiros. Optical flow using color information: preliminary results. in Proceedings of the 2008 ACM symposium on Applied computing. 2008.

- Niessen, W., et al. Spatiotemporal operators and optic flow. in Proceedings of the Workshop on Physics-Based Modeling in Computer Vision. 1995. IEEE Computer Society.

- Pavel, M., et al. Limits of visual communication: the effect of signal-to-noise ratio on the intelligibility of American Sign Language. JOSA A 1987, 4, 2355–2365.

- Kalitzin, S.N., et al. Quantification of unidirectional nonlinear associations between multidimensional signals. IEEE transactions on biomedical engineering 2007, 54, 454–461.

- Fan, H., et al. Lasot: A high-quality large-scale single object tracking benchmark. International Journal of Computer Vision 2021, 129, 439–461.

- Yang, F., X. Zhang, and B. Liu. Video object tracking based on YOLOv7 and DeepSORT. arXiv 2022, arXiv:2207.12202.

- Jana, D. and S. Nagarajaiah. Computer vision-based real-time cable tension estimation in Dubrovnik cable-stayed bridge using moving handheld video camera. Structural Control and Health Monitoring 2021, 28, e2713.

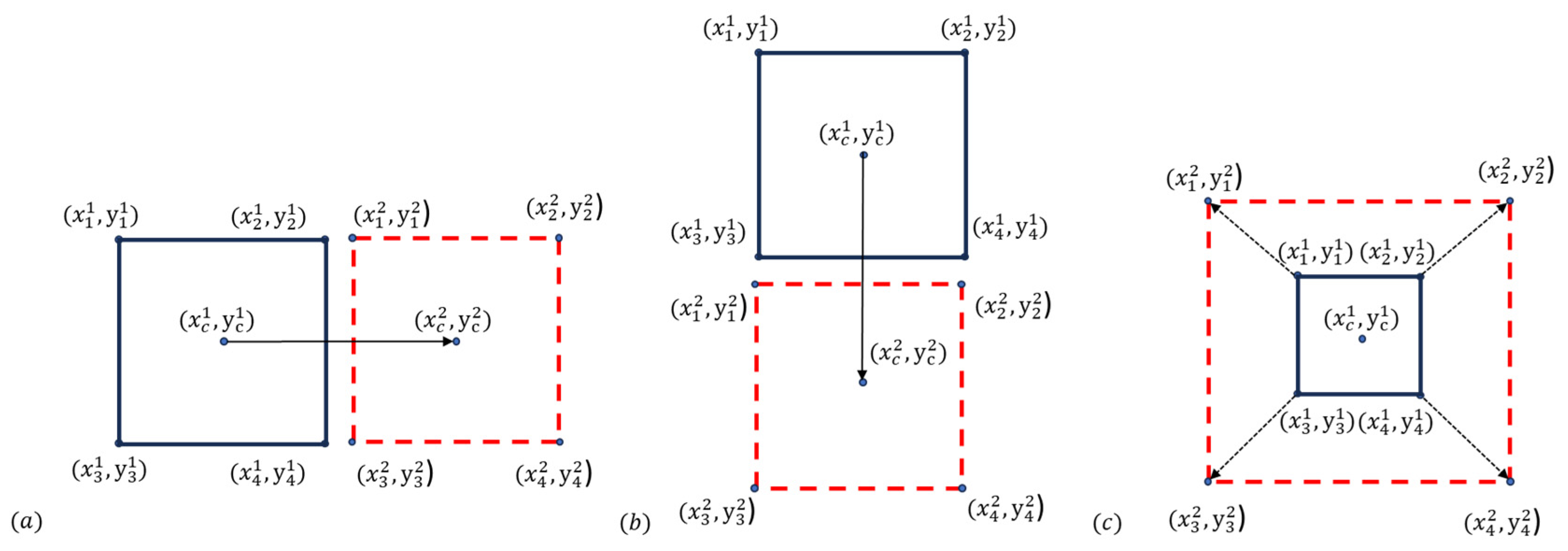

Figure 1.

ROI evolution, based on the values of the global transformation parameters. The figure shows the three elementary motions of the points of the ROI – corner points (xi,yi) for i = 1…4; center coordinates of the ROI (xc, yc). a) Translation along the X-axis. b) Translation along the Y-axis. c) Dilatation or “scaling” of the ROI.
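The three elementary motions in Figure 1 can be illustrated with a minimal sketch. It assumes a first-order, per-frame update in which the center is shifted by the translation parameters and the width and height are multiplied by one plus the dilatation rate. The names `ROI`, `update_roi`, `vx`, `vy`, and `dilatation` are hypothetical and are not taken from the GLORIA implementation; the exact update equations used by the method are given in the main text, not here.

```python
from dataclasses import dataclass


@dataclass
class ROI:
    """Axis-aligned rectangular region of interest (hypothetical helper)."""
    xc: float      # x-coordinate of the center
    yc: float      # y-coordinate of the center
    width: float
    height: float

    def corners(self):
        """Return the four corner points (xi, yi), i = 1..4."""
        hw, hh = self.width / 2.0, self.height / 2.0
        return [
            (self.xc - hw, self.yc - hh),
            (self.xc + hw, self.yc - hh),
            (self.xc + hw, self.yc + hh),
            (self.xc - hw, self.yc + hh),
        ]


def update_roi(roi, vx, vy, dilatation):
    """Apply one frame step to the ROI.

    Assumption: vx and vy are translations in pixels per frame, and
    dilatation is the relative size change per frame.
    """
    scale = 1.0 + dilatation
    return ROI(
        xc=roi.xc + vx,              # translation along the X-axis
        yc=roi.yc + vy,              # translation along the Y-axis
        width=roi.width * scale,     # dilatation about the center
        height=roi.height * scale,
    )
```

For example, `update_roi(ROI(100, 50, 40, 20), vx=2, vy=-1, dilatation=0.1)` moves the center to (102, 49) and grows the rectangle by 10% about its center.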

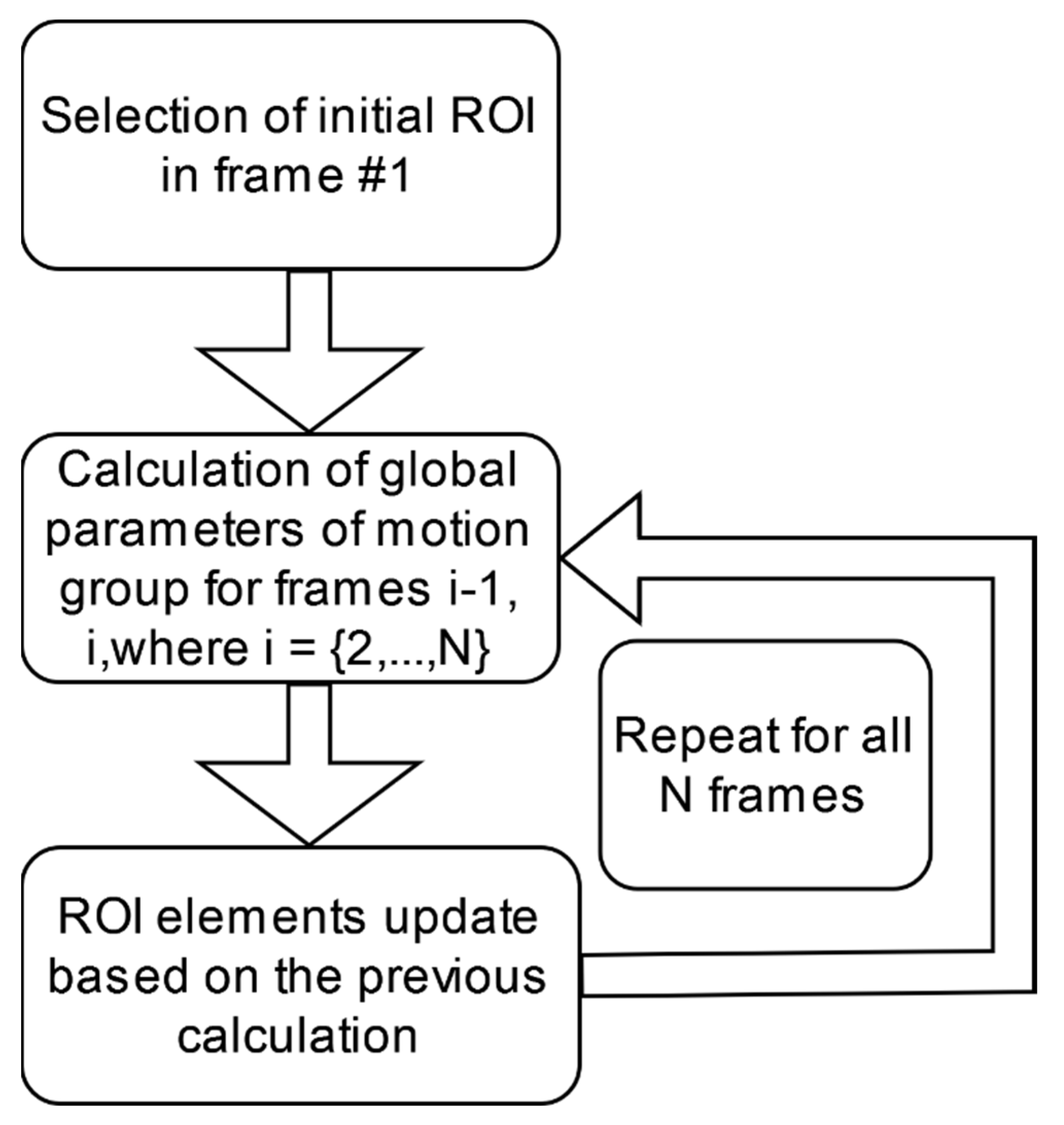

Figure 2.

Diagram of the tracking process. The region of interest is specified in the first frame. Following the selection of center coordinates and size, global motion parameters are calculated for each two consecutive frames, and the region of interest is updated based on the calculation results.

Figure 3.

Coordinates and size of the rectangular ROI. The center point of the ROI has coordinates (xc, yc), while the corner points are marked as (xi, yi) for i = 1…4.

Figure 4.

a) Demonstration of ROI tracking in the case of translational movement. The top row displays an example of translational movement in the x-direction. The moving object is a Gaussian “blob” shown at frames #2, #20, and #40. Similarly, the bottom row shows the translational movement of a Gaussian blob in the y-direction, again at frames #2, #20, and #40. The rectangular region of interest successfully follows the moving object in both tests. b) Comparison between the calculated and actual movement values of the object as a function of the frame number. Red circular markers show the actual movement values, while green star markers show the calculated values. The maximum deviations are ±0.05 pixels per frame change, corresponding to less than 5% for the y-direction and 2.5% for the x-direction.

Figure 5.

Scaling of an object and its detection. a) The object, a Gaussian “blob”, increases in size, and the corresponding ROI tracks the change. The object is displayed at frames #2, #5, and #10. b) Comparison between the calculated and actual values of the object as a function of the frame number. Differences of about 10% of the reconstructed parameter can be observed.

Figure 6.

Scatter plot of values

and for the tests with tracking scaling (dilatation). The h2 nonlinear association index value is provided in the title.

Figure 7.

A test combining translational and dilatational movements. Here, the moving object is a rectangle, shown at frames #2, #15, and #30. The object moves simultaneously to the left and downwards while increasing in size. The tracking ROI is shown in red.

Figure 8.

Tracking a moving object on a complex RGB background. The object is a Gaussian “blob” shown in different moments in time – frames #2, #30, and #50. The ROI that tracks the object is displayed in red.

Figure 9.

Tracking in a real-world scenario. A video of a couple walking on a beach. The background is static. The ROI tracking the moving people is shown in red.

Figure 10.

Tracking a dancing man. Nine frames from the video are provided, with frame order from top to bottom and from left to right. The background is dynamic, with other moving objects in the frame. The ROI is shown in red.

Figure 11.

Relative mismatch over the frames of the video sequence. The mean relative mismatch is 27% over all 250 frames. The y-axis shows the relative mismatch, while the x-axis shows the frame number.

Figure 12.

The precision graph (left) and success graph (right) on the coin-2 image sequence from the LaSOT dataset. The PRE value displayed in the legend in the left frame is the precision at a threshold of 20 pixels. The SUC value in the plot legend to the right is the area under the success curve. Both PRE = 1 and SUC = 0.935 values are very high compared to other methods tested on the LaSOT database. Again, we note that the values in the figure are for the specific coin-2 example.
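The two scores named in this caption can be sketched as follows. This is a minimal illustration that assumes the common one-pass evaluation convention: precision is the fraction of frames whose center location error is within a pixel threshold, and success is the mean of the success rate over overlap thresholds from 0 to 1. The box layout `(x, y, w, h)` with a top-left origin, the 21 evenly spaced thresholds, and the function names are assumptions, and the authors' exact evaluation script may differ.

```python
import numpy as np


def center_error(pred, gt):
    """Euclidean distance between box centers; boxes are rows of (x, y, w, h)."""
    pred_c = pred[:, :2] + pred[:, 2:] / 2.0
    gt_c = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pred_c - gt_c, axis=1)


def iou(pred, gt):
    """Intersection over union for each pair of boxes (positive areas assumed)."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / union


def precision_at(pred, gt, threshold=20.0):
    """Fraction of frames with center error within `threshold` pixels (PRE)."""
    return float(np.mean(center_error(pred, gt) <= threshold))


def success_auc(pred, gt, n_thresholds=21):
    """Mean success rate over overlap thresholds in [0, 1] (SUC)."""
    thresholds = np.linspace(0.0, 1.0, n_thresholds)
    overlaps = iou(pred, gt)
    curve = np.array([np.mean(overlaps > t) for t in thresholds])
    return float(np.mean(curve))
```

With the strict `>` comparison assumed here, even a perfect tracker scores slightly below 1 on `success_auc`, because no overlap exceeds the final threshold of 1.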

Figure 13.

On the left side: True (blue circle markers) and measured (green star markers for RGB data, black dot markers for greyscale data) positions of the center of the moving object. The current frame is displayed on the x-axis. The top graph displays the X-coordinate of the object’s center, while the bottom graph displays the Y-coordinate of the object’s center. The measured positions acquired from colored videos overlap almost entirely with the actual positions of the object. On the right side is a snapshot from the video of the moving circle; the background is low in contrast.

Figure 14.

Analysis of variance (left) and multiple comparison tests (right) for maximum velocity estimation. The velocity in pixels per frame is given on the x-axis of the ANOVA graph (left), while the medians and variances of the absolute differences are provided on the y-axis. Outliers are marked with a plus sign, while the dotted lines, or whiskers, indicate the most extreme data points that are not outliers. The red central mark on each box indicates the median, while the edges are the 25th and 75th percentiles. On the multiple comparison test to the right, the blue bar represents the first velocity’s comparison interval, and the circle marker indicates the mean value.

Figure 15.

Analysis of processing times. The y-axis shows processing times, while the x-axis shows the area of the tracked ROI in square pixels. Both RGB and B&W tests are shown.

Figure 16.

Effect of contrast on method accuracy. The moving pattern is the same as in Fig. 8, but the backgrounds differ. The figure presents two backgrounds; the image on the right has a lower contrast in all three color channels than the one on the left. The contrast values are calculated for the whole scene. The quantity defined in Eq. (9) is calculated for each background and is given in the title of the corresponding background picture.

Figure 17.

Optimal region of interest size analysis. The x-axis shows the coordinate of the center of the ROI, the y-axis shows the ROI size, and the z-axis shows the values obtained from Eq. (8). The plateau indicates which ROI sizes are optimal for the present case. The moving pattern is again a moving Gaussian “blob”, as in Fig. 8. Tracking is repeated with different initial ROI sizes, which are the values on the y-axis.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).