Submitted: 10 January 2024

Posted: 12 January 2024


Abstract

Keywords:

1. Introduction

2. Related Work

| Approach | Key Features | Advantages | Limitations | Dataset Used |
|---|---|---|---|---|
| DeepIDS [6] | Stacked autoencoders, SVM classifier | Improved detection accuracy | High computational complexity | NSL-KDD |
| CNN-GRU [7] | Hybrid CNN and GRU architecture | High detection rates, efficient | Long training time | CICIDS2017 |
| DNN-AD [8] | RBMs for unsupervised feature learning | Effective anomaly detection | Sensitivity to hyperparameters | UNSW-NB15 |
| GANIDS [9] | Generative adversarial networks (GANs) | Detects both known and unknown attacks | Difficult GAN training | CICIDS2017 |
| DBSCAN-DNN [10] | DBSCAN clustering, DNN classification | High detection rates, handles variability | Hard to choose DBSCAN's eps parameter | UNSW-NB15 |
| LSTM-CNN [11] | Hybrid LSTM and CNN architecture | Improved accuracy and efficiency | Difficulty capturing long-range dependencies | NSL-KDD |
| Autoencoder-Based [12] | Autoencoder reconstruction error for anomaly detection | Effective detection of unknown attacks | Sensitivity to the reconstruction-error threshold | UNSW-NB15 |

3. Publicly Available Datasets

| Dataset | Description | Size | Number of Features | Attack Types | Year |
|---|---|---|---|---|---|
| NSL-KDD | Network traffic data | 1.8 GB | 41 | Multiple | 2009 |
| CICIDS2017 | Network traffic data | 256 GB | 79 | Multiple | 2017 |
| UNSW-NB15 | Network traffic data | 1.9 GB | 49 | Multiple | 2015 |
| KDD Cup 1999 | Network traffic data | 743 MB | 41 | Multiple | 1999 |
| DARPA1999 | Network traffic data | 2.8 GB | 41 | Multiple | 1999 |
| ISCXIDS2012 | Network traffic data | 3.8 GB | 79 | Multiple | 2012 |
| NSL-KDD+ | Network traffic data | 2.3 GB | 41 | Multiple | 2009 |
| CIDDS-001 | Network traffic data | 3.7 GB | 48 | Multiple | 2018 |
| ADFA-LD | Linux system call traces | 155 MB | N/A | Normal and anomalous | 2015 |
| SADL | Sensor anomaly detection logs | N/A | N/A | Anomalous | 2014 |

4. CICIDS2017 Dataset

| Attribute | Value |
|---|---|
| Name | CICIDS2017 |
| Source | University of New Brunswick (UNB) |
| Purpose | Intrusion Detection System (IDS) research |
| Data Size | 256 GB |
| Features | 79 |
| Attack Types | Multiple |
| Year | 2017 |

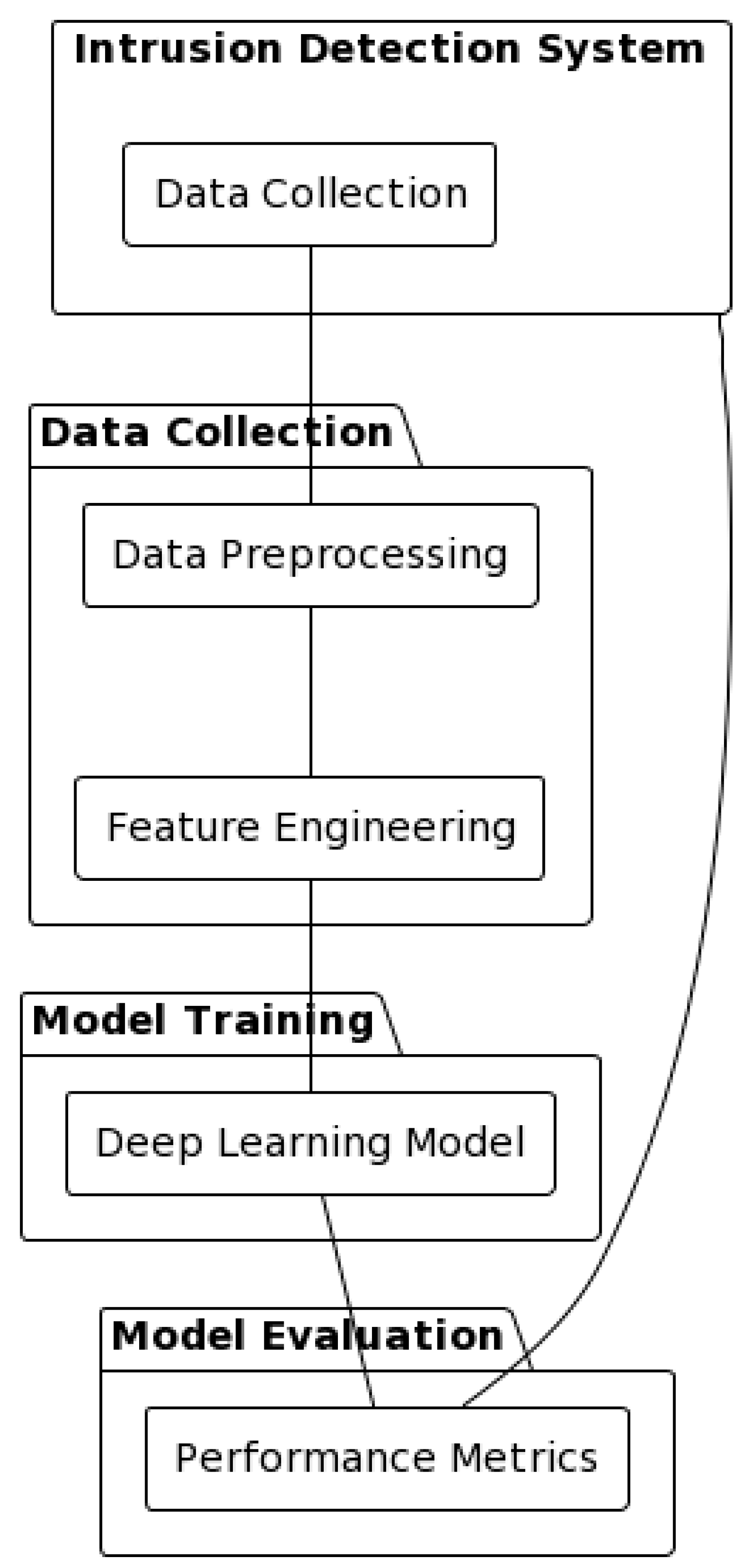

5. Proposed IDS Systems

a. CNN-based Intrusion Detection System (IDS)

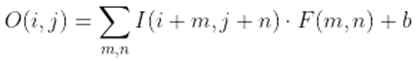

- Convolution Operation: A convolutional layer convolves the input tensor with a set of learnable filters. Each filter slides over the input and, at every position, computes a weighted sum over a local receptive field, producing one feature map per filter. The convolution operation can be expressed mathematically as follows:

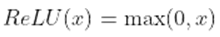
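As a concrete illustration, the following minimal Python sketch applies one 1-D filter to a single feature vector with stride 1 and no padding. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped). Treating a flow record as a 1-D signal, as well as the feature values, the kernel, and the function name `conv1d`, are illustrative assumptions rather than the authors' configuration.

```python
from typing import List


def conv1d(x: List[float], kernel: List[float], bias: float = 0.0,
           stride: int = 1) -> List[float]:
    """'Valid' 1-D convolution (cross-correlation, as used in CNN layers).

    Each output value is the weighted sum of one receptive field
    x[start : start + len(kernel)] plus a bias term.
    """
    k = len(kernel)
    if k == 0 or k > len(x):
        raise ValueError("kernel must be non-empty and no longer than the input")
    out = []
    for start in range(0, len(x) - k + 1, stride):
        field = x[start:start + k]  # local receptive field
        out.append(sum(w * v for w, v in zip(kernel, field)) + bias)
    return out


# Hypothetical normalized features of one flow record.
features = [0.25, 0.5, 0.0, 1.0, 0.75]
# Hypothetical 3-tap filter: responds to x[i+2] - x[i], i.e. a rise in values.
kernel = [-1.0, 0.0, 1.0]
print(conv1d(features, kernel))  # [-0.25, 0.5, 0.75]
```

With several filters, the layer would return one such feature map per filter.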

- Activation Function: After the convolution operation, an activation function is applied element-wise to introduce non-linearity into the network. Common activation functions used in CNNs include the Rectified Linear Unit (ReLU), which can be mathematically represented as follows:

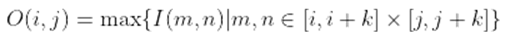

- Pooling Operation: The pooling operation is applied to reduce the spatial dimensions of the feature maps while preserving important features. Max pooling is a commonly used pooling operation in CNNs. Mathematically, max pooling can be expressed as follows:

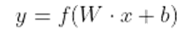
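The sketch below applies ReLU element-wise to a hypothetical feature map and then performs max pooling with a window of 2 and a stride of 2, which halves the length of the map while keeping the strongest response in each window. The helper names `relu` and `max_pool1d` and the input values are illustrative assumptions.

```python
from typing import List


def relu(xs: List[float]) -> List[float]:
    """Element-wise ReLU: max(0, x)."""
    return [max(0.0, v) for v in xs]


def max_pool1d(xs: List[float], size: int = 2, stride: int = 2) -> List[float]:
    """Keep the largest value in each pooling window.

    Windows that would run past the end of the input are dropped
    ('valid' pooling).
    """
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    return [max(xs[i:i + size]) for i in range(0, len(xs) - size + 1, stride)]


# Hypothetical output of one convolution filter.
feature_map = [-0.5, 1.5, 0.25, -2.0, 3.0, 0.75]
activated = relu(feature_map)   # [0.0, 1.5, 0.25, 0.0, 3.0, 0.75]
pooled = max_pool1d(activated)  # [1.5, 0.25, 3.0]
print(pooled)
```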

- Fully Connected Layers: The fully connected layers in a CNN connect every neuron from the previous layer to every neuron in the subsequent layer. The mathematical computations performed in the fully connected layers involve matrix multiplications and application of activation functions.
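A fully connected layer computes $y = f(Wx + b)$, where $W$ has one row per output neuron. The minimal sketch below implements this with plain Python lists and chains two such layers: a ReLU hidden layer and a single sigmoid output neuron. The layer sizes, the weights, and the function name `dense` are hypothetical.

```python
import math
from typing import Callable, List


def dense(x: List[float], weights: List[List[float]], bias: List[float],
          activation: Callable[[float], float] = lambda z: max(0.0, z)) -> List[float]:
    """Fully connected layer: y_j = f(sum_i W[j][i] * x[i] + b[j]).

    `weights` has one row per output neuron; the default activation is ReLU.
    """
    if len(weights) != len(bias):
        raise ValueError("need one bias per output neuron")
    out = []
    for row, b in zip(weights, bias):
        if len(row) != len(x):
            raise ValueError("weight row length must match input length")
        z = sum(w * v for w, v in zip(row, x)) + b  # pre-activation
        out.append(activation(z))
    return out


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical flattened output of the pooling stage.
pooled = [1.5, 0.25, 3.0]
# Hidden layer: 2 ReLU neurons (illustrative weights).
hidden = dense(pooled, [[0.5, -1.0, 0.25], [-0.5, 1.0, 0.25]], [0.0, 0.25])
# Output layer: 1 sigmoid neuron giving a hypothetical attack score.
score = dense(hidden, [[1.0, -1.0]], [0.0], activation=sigmoid)[0]
print(hidden, round(score, 4))
```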

b. LSTM-based Intrusion Detection System (IDS)

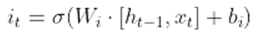

- Input Gate: The input gate controls how much new information is written to the cell state. It is computed using a sigmoid activation function.

- Forget Gate: The forget gate controls how much information is discarded from the cell state. It is also computed using a sigmoid activation function.

- Output Gate: The output gate controls how much of the cell state is exposed as the hidden state, which is passed to the next time step and to the following layer. It is likewise computed using a sigmoid activation function. The equations for the three gates are shown below:

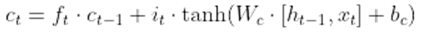
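The sketch below computes the three gates for one time step, assuming the standard formulation $\sigma(W x_t + U h_{t-1} + b)$ with a separate weight set per gate; the symbols in the equations above may differ. All sizes and parameter values, and the helper names `matvec` and `gate`, are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Matrix-vector product m @ v using plain lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gate(W: Matrix, U: Matrix, b: Sequence[float],
         x_t: Sequence[float], h_prev: Sequence[float]) -> List[float]:
    """sigmoid(W x_t + U h_{t-1} + b), element-wise; each entry lies in (0, 1)."""
    wx, uh = matvec(W, x_t), matvec(U, h_prev)
    return [sigmoid(p + q + c) for p, q, c in zip(wx, uh, b)]


# Toy sizes: 2 input features, hidden size 2. All parameters are hypothetical.
x_t = [0.5, -1.0]     # current input, e.g. one normalized flow record
h_prev = [0.0, 0.25]  # hidden state from the previous time step

# Each gate has its own (W, U, b). The positive forget-gate bias is a common
# initialization choice that retains more of the old memory early in training.
params: Dict[str, Tuple[Matrix, Matrix, List[float]]] = {
    "input": ([[0.5, 0.25], [-0.5, 1.0]], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
    "forget": ([[0.25, 0.0], [0.0, 0.25]], [[0.5, 0.0], [0.0, 0.5]], [1.0, 1.0]),
    "output": ([[1.0, -1.0], [0.5, 0.5]], [[0.0, 1.0], [1.0, 0.0]], [0.0, 0.0]),
}
gates = {name: gate(W, U, b, x_t, h_prev) for name, (W, U, b) in params.items()}
for name, values in gates.items():
    print(name, [round(v, 3) for v in values])
```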

- Cell State: The input gate, forget gate, and prior cell state are used to update the cell state, which serves as the LSTM's memory. The following equations are involved:

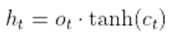
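Assuming the standard update $c_t = f_t \odot c_{t-1} + i_t \odot g_t$, where $g_t$ is a tanh-activated candidate vector computed from $x_t$ and $h_{t-1}$, the cell-state step reduces to element-wise arithmetic. The gate and candidate values below are hypothetical, chosen to show keeping, halving, and erasing the old memory; `update_cell_state` is an illustrative name.

```python
import math
from typing import List, Sequence


def update_cell_state(f_t: Sequence[float], i_t: Sequence[float],
                      g_t: Sequence[float], c_prev: Sequence[float]) -> List[float]:
    """c_t = f_t * c_{t-1} + i_t * g_t (all products element-wise).

    f_t decides how much old memory to keep; i_t decides how much of the
    candidate g_t (a tanh output in (-1, 1)) to write.
    """
    if not (len(f_t) == len(i_t) == len(g_t) == len(c_prev)):
        raise ValueError("all vectors must have the same length")
    return [f * c + i * g for f, i, g, c in zip(f_t, i_t, g_t, c_prev)]


# Hypothetical values for a hidden size of 3.
c_prev = [0.5, -1.0, 2.0]
f_t = [1.0, 0.5, 0.0]  # keep, halve, and erase the old memory
i_t = [0.0, 0.5, 1.0]  # ignore, half-write, and fully write the candidate
g_t = [math.tanh(0.0), math.tanh(1.0), math.tanh(-1.0)]  # candidate values
c_t = update_cell_state(f_t, i_t, g_t, c_prev)
print([round(v, 4) for v in c_t])
```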

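- Hidden State: The hidden state represents the output of the LSTM cell. It is obtained by applying tanh to the updated cell state and scaling the result element-wise by the output gate, $h_t = o_t \odot \tanh(c_t)$, where $\odot$ denotes element-wise multiplication.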

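- LSTM Layer: In an LSTM-based IDS, an LSTM layer applies the same cell, with shared weights, at every time step of the input sequence (for example, consecutive traffic records); the hidden and cell states produced at one step are fed into the cell at the next step. The operations described above are therefore applied sequentially across the time steps. Several LSTM layers can also be stacked, with the hidden-state sequence of one layer serving as the input sequence of the next.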

- Fully Connected Layers: Fully connected layers can follow the LSTM layer to further process its output and perform classification or detection. Their computations are the same as those of the fully connected layers in the CNN-based IDS described above.

- Output Layer: The output layer of an LSTM-based IDS produces the final classification or detection result. The number of neurons in this layer depends on the task and on the number of traffic classes the IDS distinguishes. For binary classification (e.g., normal vs. attack), a single neuron with a sigmoid activation is sufficient. For multi-class classification, the output layer uses a softmax activation and has one neuron per class.

- Loss Function and Optimization: During training, a loss function measures the difference between the predicted output and the ground-truth labels. Binary cross-entropy is used for binary classification and categorical cross-entropy for multi-class classification. The weights and biases are optimized with stochastic gradient descent (SGD) or one of its variants, such as Adam. Backpropagation through time (BPTT) unrolls the network over the time steps and computes the gradients of the loss with respect to the parameters, which the optimizer then uses to update the parameters and minimize the loss.
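The output-layer activations and losses for the LSTM-based IDS can be sketched in a few lines of Python. The example assumes a single sigmoid neuron for the binary case and one softmax neuron per class for the multi-class case; the logits, the class names, and the clipping constant `EPS` (which guards against `log(0)`) are hypothetical.

```python
import math
from typing import List, Sequence

EPS = 1e-12  # clip probabilities so log(0) never occurs


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def binary_cross_entropy(y: int, p: float) -> float:
    """-[y log p + (1 - y) log(1 - p)] for one sample, with y in {0, 1}."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def categorical_cross_entropy(label: int, probs: Sequence[float]) -> float:
    """-log p_label for one sample with an integer class label."""
    return -math.log(max(probs[label], EPS))


# Binary IDS: one sigmoid neuron, label 1 = attack (hypothetical logit).
p_attack = sigmoid(2.0)
print(round(binary_cross_entropy(1, p_attack), 4))

# Multi-class IDS: one softmax neuron per class, e.g. hypothetical classes
# [benign, DoS, port scan], with DoS (index 1) as the true class.
probs = softmax([0.5, 2.0, -1.0])
print([round(p, 3) for p in probs], round(categorical_cross_entropy(1, probs), 4))
```

During training, these per-sample losses would typically be averaged over a mini-batch before the gradients are computed.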

c. GAN-based Intrusion Detection System (IDS)

- Discriminator Network: A GAN-based IDS uses a discriminator network to distinguish real network-traffic samples from synthetic ones. Its input is either a genuine sample from the dataset or an artificial sample produced by the generator network. Mathematically, the discriminator is modeled as a sequence of fully connected or convolutional layers, each typically followed by an activation function (such as ReLU) and possibly a normalization layer. In the standard formulation, a final sigmoid unit outputs the estimated probability that the input is real.
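A minimal sketch of a discriminator's forward pass and the standard discriminator loss, $-[\log D(x) + \log(1 - D(G(z)))]$ for a real sample $x$ and a generated sample $G(z)$, is shown below. It assumes one hidden fully connected layer with ReLU, omits normalization, and ends in a sigmoid output; the weights, the samples, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def discriminator(x: Sequence[float], W1: List[List[float]], b1: List[float],
                  w2: List[float], b2: float) -> float:
    """D(x) = sigmoid(w2 . ReLU(W1 x + b1) + b2): probability that x is real.

    One hidden fully connected layer; normalization layers are omitted.
    """
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    return sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)


def discriminator_loss(d_real: float, d_fake: float, eps: float = 1e-12) -> float:
    """Standard GAN discriminator loss: -[log D(x_real) + log(1 - D(x_fake))]."""
    return -(math.log(max(d_real, eps)) + math.log(max(1.0 - d_fake, eps)))


# Hypothetical parameters for 3 input features and 2 hidden units.
W1 = [[1.0, -0.5, 0.25], [-1.0, 0.5, 1.0]]
b1 = [0.0, 0.0]
w2 = [1.5, -1.0]
b2 = 0.0

x_real = [0.75, 0.25, 0.5]  # a sample drawn from the training data
x_fake = [0.25, 1.0, 0.75]  # a sample produced by the generator
d_real = discriminator(x_real, W1, b1, w2, b2)
d_fake = discriminator(x_fake, W1, b1, w2, b2)
print(round(d_real, 3), round(d_fake, 3), round(discriminator_loss(d_real, d_fake), 3))
```

A well-trained discriminator pushes $D(x)$ toward 1 for real traffic and toward 0 for generated traffic, which lowers this loss.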

6. Conclusion

References

- Diro, A. A., & Chilamkurti, N. (2018). Distributed attack detection scheme using deep learning approach for Internet of Things. Future Generation Computer Systems, 82, 761-768. [CrossRef]

- Kukkar, A., Gupta, D., Beram, S. M., Soni, M., Singh, N. K., Sharma, A., Neware, R., Shabaz, M., & Rizwan, A. (2022). Optimizing Deep Learning Model Parameters Using Socially Implemented IoMT Systems for Diabetic Retinopathy Classification Problem. IEEE Transactions on Computational Social Systems, 10(4). [CrossRef]

- Muna, A. H., Moustafa, N., & Sitnikova, E. (2018). Identification of malicious activities in industrial Internet of things based on deep learning models. Journal of Information Security and Applications, 41, 1-11. [CrossRef]

- Mehbodniya, A., Alam, I., Pande, S., Neware, R., Rane, K. P., Shabaz, M., & Madhavan, M. V. (2021). Financial fraud detection in healthcare using machine learning and deep learning techniques. Security and Communication Networks, 2021, 1-8. [CrossRef]

- Vinayakumar, R., Alazab, M., Srinivasan, S., Pham, Q. V., Padannayil, S. K., & Simran, K. (2020). A visualized botnet detection system based deep learning for the internet of things networks of smart cities. IEEE Transactions on Industry Applications, 56(4), 4436-4456. [CrossRef]

- Parra, G. D. L. T., Rad, P., Choo, K. K. R., & Beebe, N. (2020). Detecting Internet of Things attacks using distributed deep learning. Journal of Network and Computer Applications, 163, 102662. [CrossRef]

- Ajani, S., & Wanjari, M. (2013, September). An efficient approach for clustering uncertain data mining based on hash indexing and voronoi clustering. In 2013 5th International Conference on Computational Intelligence and Communication Networks (pp. 486-490). IEEE. [CrossRef]

- HaddadPajouh, H., Dehghantanha, A., Khayami, R., & Choo, K. K. R. (2018). A deep recurrent neural network-based approach for Internet of things malware threat hunting. Future Generation Computer Systems, 85, 88-96. [CrossRef]

- Popoola, S. I., Adebisi, B., Hammoudeh, M., Gui, G., & Gacanin, H. (2020). Hybrid deep learning for botnet attack detection in the internet-of-things networks. IEEE Internet of Things Journal, 8(6), 4944-4956. [CrossRef]

- Ajani, S. N., & Amdani, S. Y. (2020, February). Probabilistic path planning using current obstacle position in static environment. In 2nd International Conference on Data, Engineering and Applications (IDEA) (pp. 1-6). IEEE. [CrossRef]

- Manimurugan, S., Al-Mutairi, S., Aborokbah, M. M., Chilamkurti, N., Ganesan, S., & Patan, R. (2020). Effective attack detection in internet of medical things smart environment using a deep belief neural network. IEEE Access, 8, 77396-77404. [CrossRef]

- NG, B. A., & Selvakumar, S. (2020). Anomaly detection framework for Internet of things traffic using vector convolutional deep learning approach in fog environment. Future Generation Computer Systems, 113, 255-265. [CrossRef]

- Sharafaldin, I., Lashkari, A. H., & Ghorbani, A. A. (2018). Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP, 1, 108-116. [CrossRef]

- Ferrag, M. A., Friha, O., Hamouda, D., Maglaras, L., & Janicke, H. (2022). Edge-IIoTset: A new comprehensive realistic cyber security dataset of IoT and IIoT applications for centralized and federated learning. IEEE Access, 10, 40281-40306. [CrossRef]

- Chawla, N. V., Bowyer, K. W., Hall, L. O., & Kegelmeyer, W. P. (2002). SMOTE: synthetic minority over-sampling technique. Journal of Artificial Intelligence Research, 16, 321-357. [CrossRef]

- Samek, W., Binder, A., Montavon, G., Lapuschkin, S., & Müller, K. R. (2016). Evaluating the visualization of what a deep neural network has learned. IEEE Transactions on Neural Networks and Learning Systems, 28(11), 2660-2673. [CrossRef]

- Liu, W., Wang, Z., Liu, X., Zeng, N., Liu, Y., & Alsaadi, F. E. (2017). A survey of deep neural network architectures and their applications. Neurocomputing, 234, 11-26. [CrossRef]

- Deng, L., Hinton, G., & Kingsbury, B. (2013, May). New types of deep neural network learning for speech recognition and related applications: An overview. In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (pp. 8599-8603). IEEE. [CrossRef]

- Albawi, S., Mohammed, T. A., & Al-Zawi, S. (2017, August). Understanding of a convolutional neural network. In 2017 International Conference on Engineering and Technology (ICET) (pp. 1-6). IEEE. [CrossRef]

- Gu, J., Wang, Z., Kuen, J., Ma, L., Shahroudy, A., Shuai, B., ... & Chen, T. (2018). Recent advances in convolutional neural networks. Pattern Recognition, 77, 354-377. [CrossRef]

- Yu, Y., Si, X., Hu, C., & Zhang, J. (2019). A review of recurrent neural networks: LSTM cells and network architectures. Neural Computation, 31(7), 1235-1270. [CrossRef]

- Sherstinsky, A. (2020). Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D: Nonlinear Phenomena, 404, 132306. [CrossRef]

- Tschannen, M., Bachem, O., & Lucic, M. (2018). Recent advances in autoencoder-based representation learning. In Third Workshop on Bayesian Deep Learning. arXiv preprint arXiv:1812.05069.

- Meng, Q., Catchpoole, D., Skillicorn, D., & Kennedy, P. J. (2017, May). Relational autoencoder for feature extraction. In 2017 International Joint Conference on Neural Networks (IJCNN) (pp. 364-371). IEEE. [CrossRef]

- Chen, Z., Yeo, C. K., Lee, B. S., & Lau, C. T. (2018, April). Autoencoder-based network anomaly detection. In 2018 Wireless Telecommunications Symposium (WTS) (pp. 1-5). IEEE. https://doi.org/10.1109/WTS.2018.8363930

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).