Submitted: 22 December 2023

Posted: 26 December 2023


Abstract

Keywords:

1. Introduction

2. Materials and Methods

2.1. Image Dataset

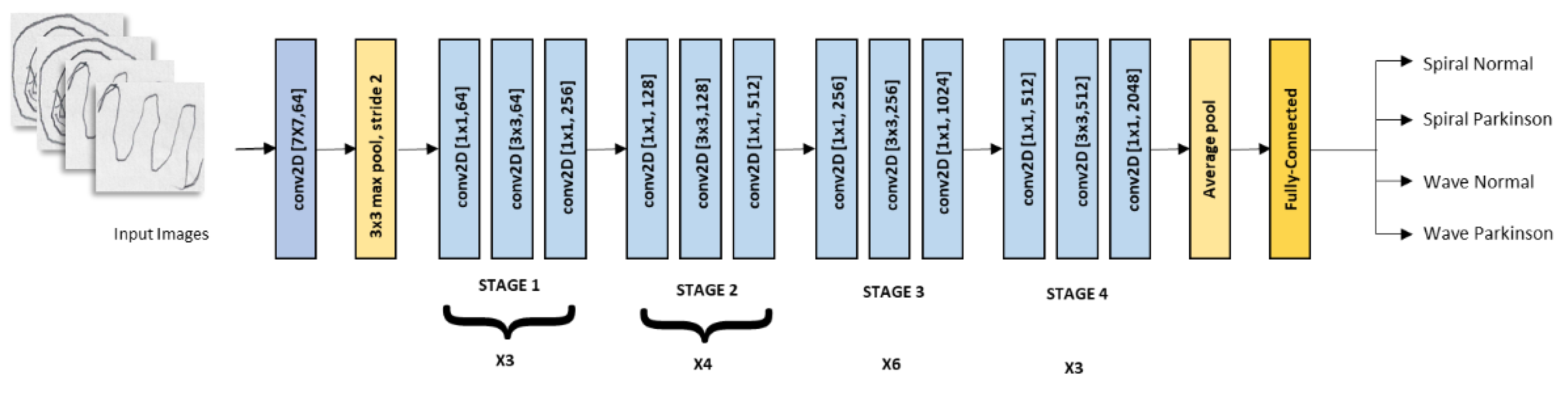

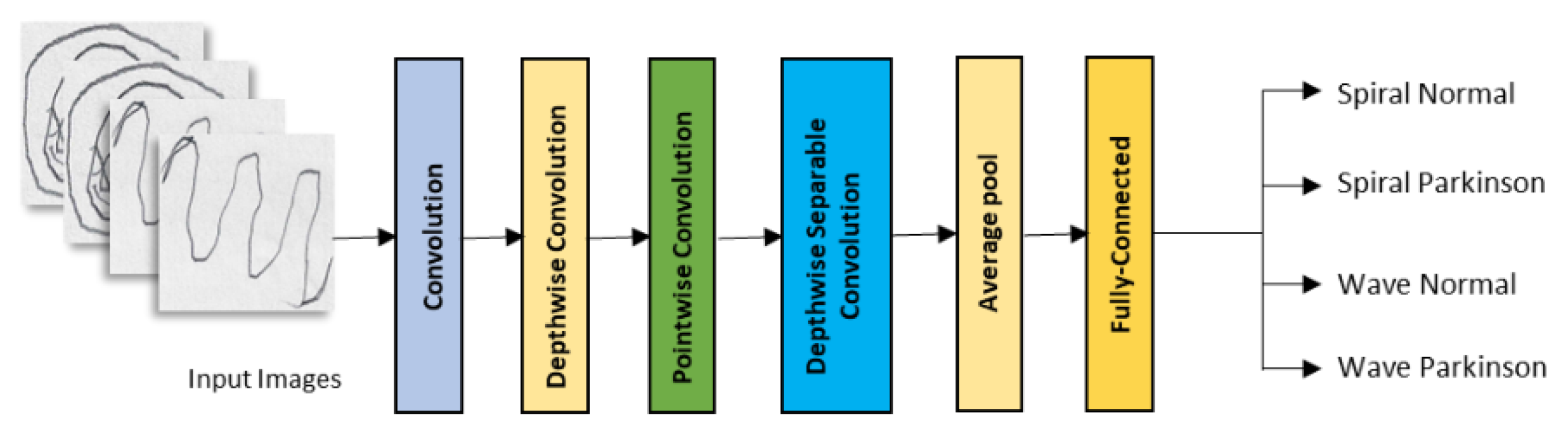

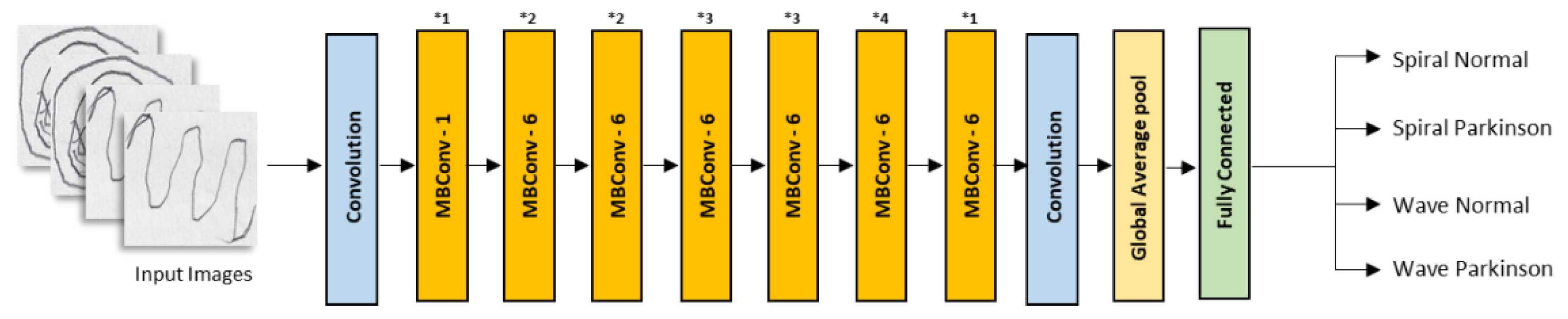

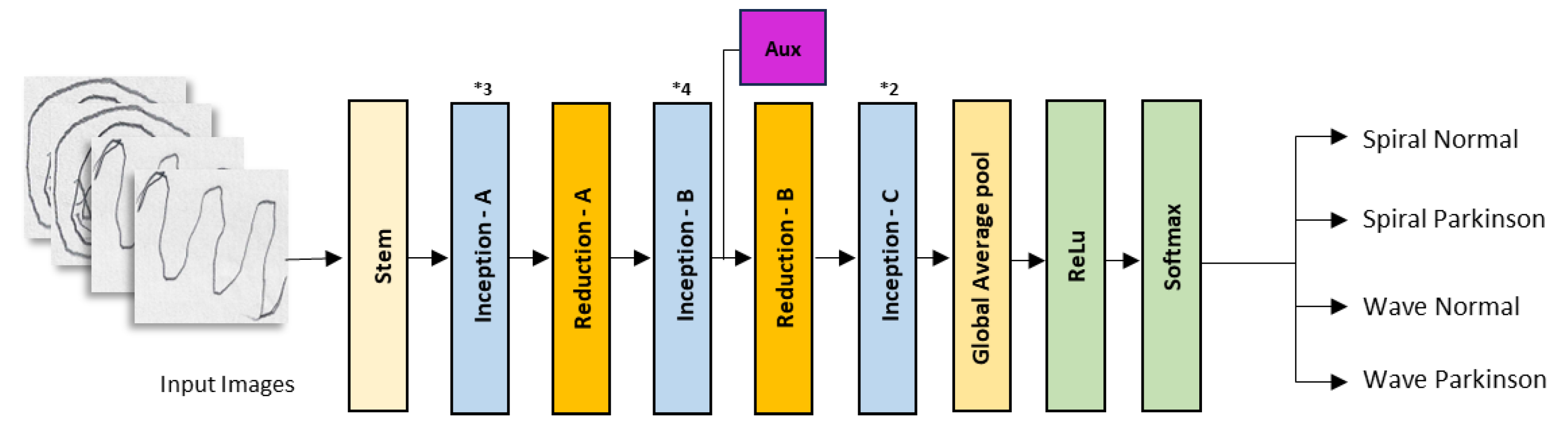

2.2. Convolutional Neural Networks

3. Results

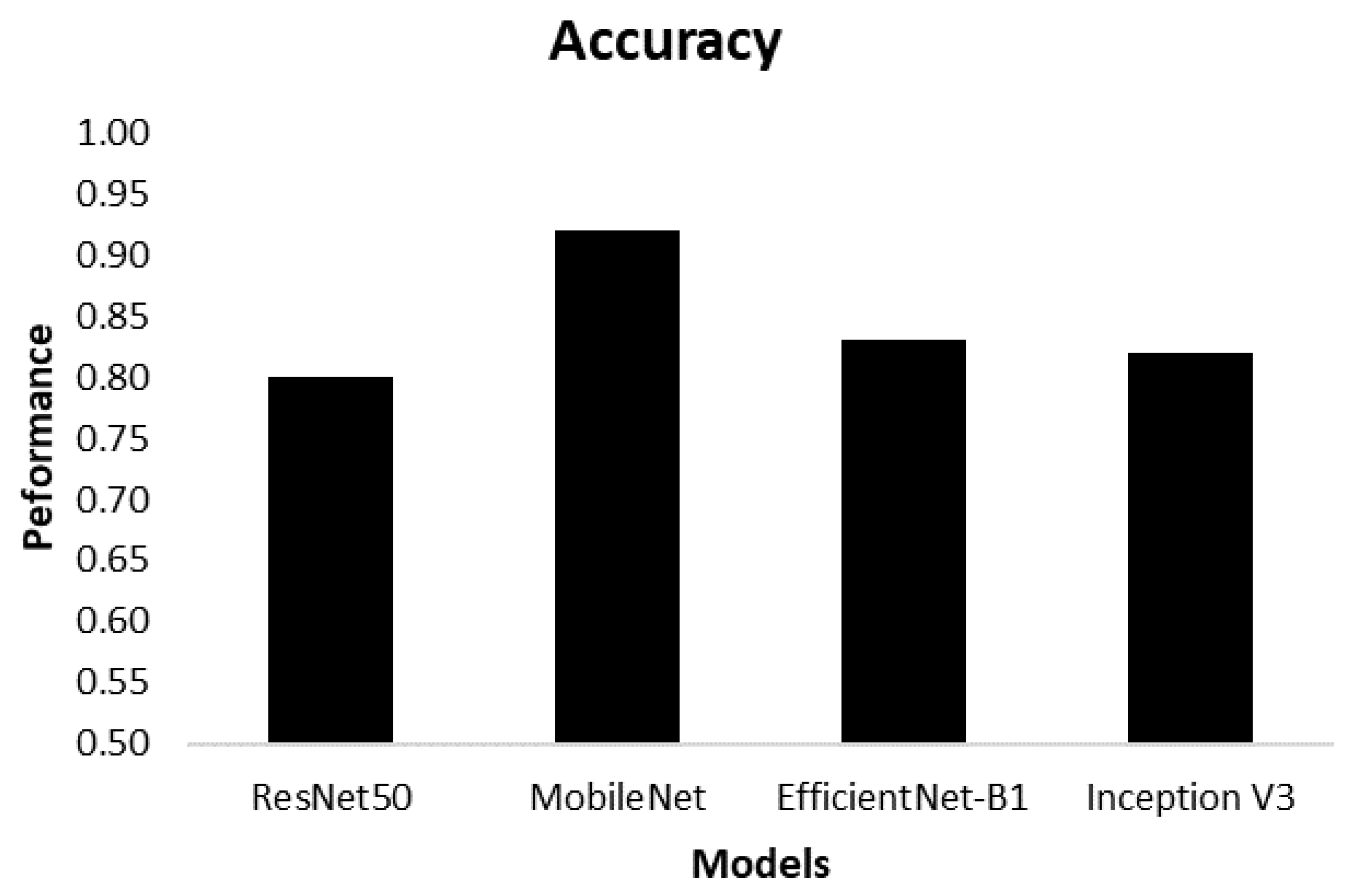

3.1. Performances of Deep Learning

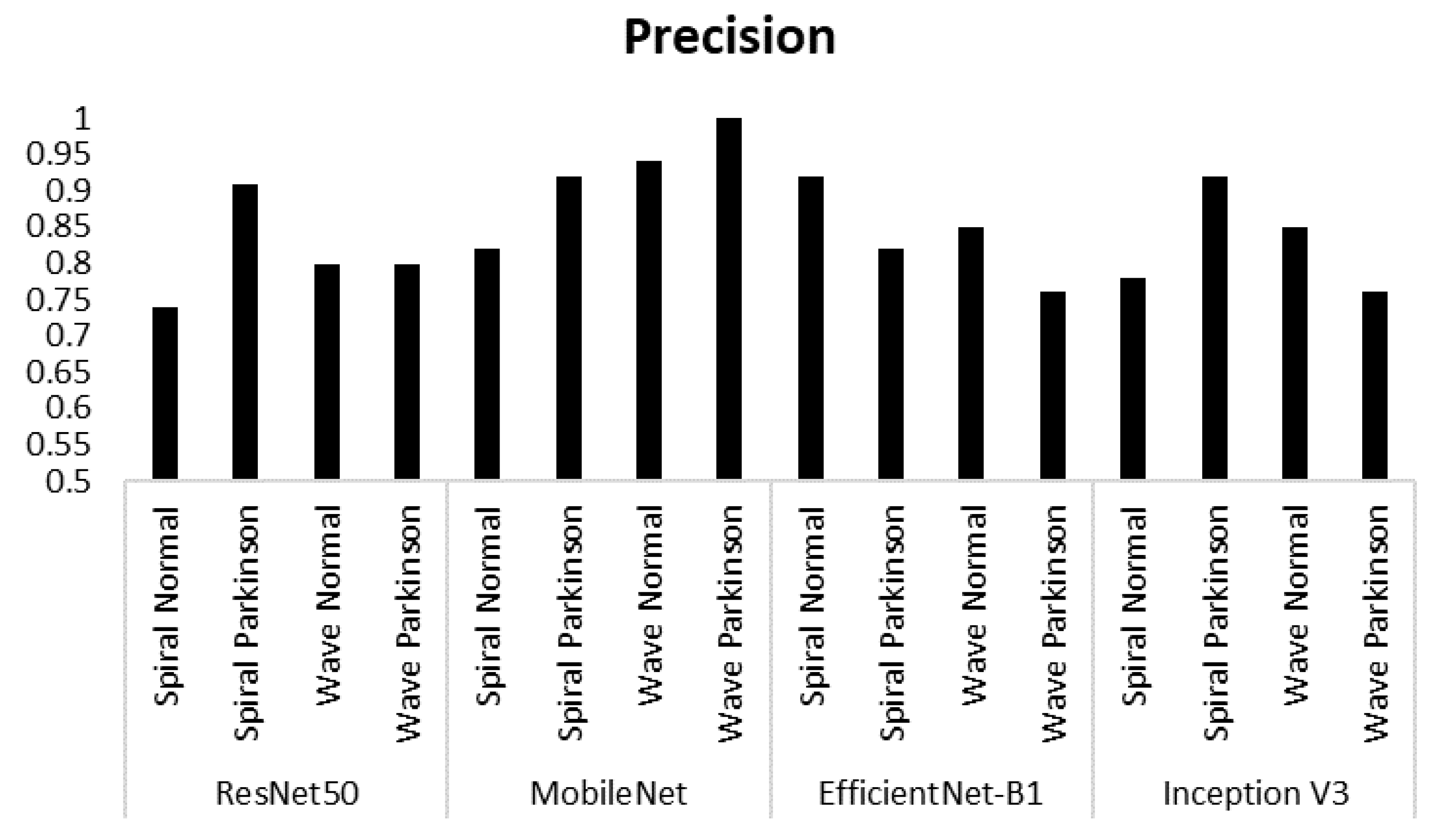

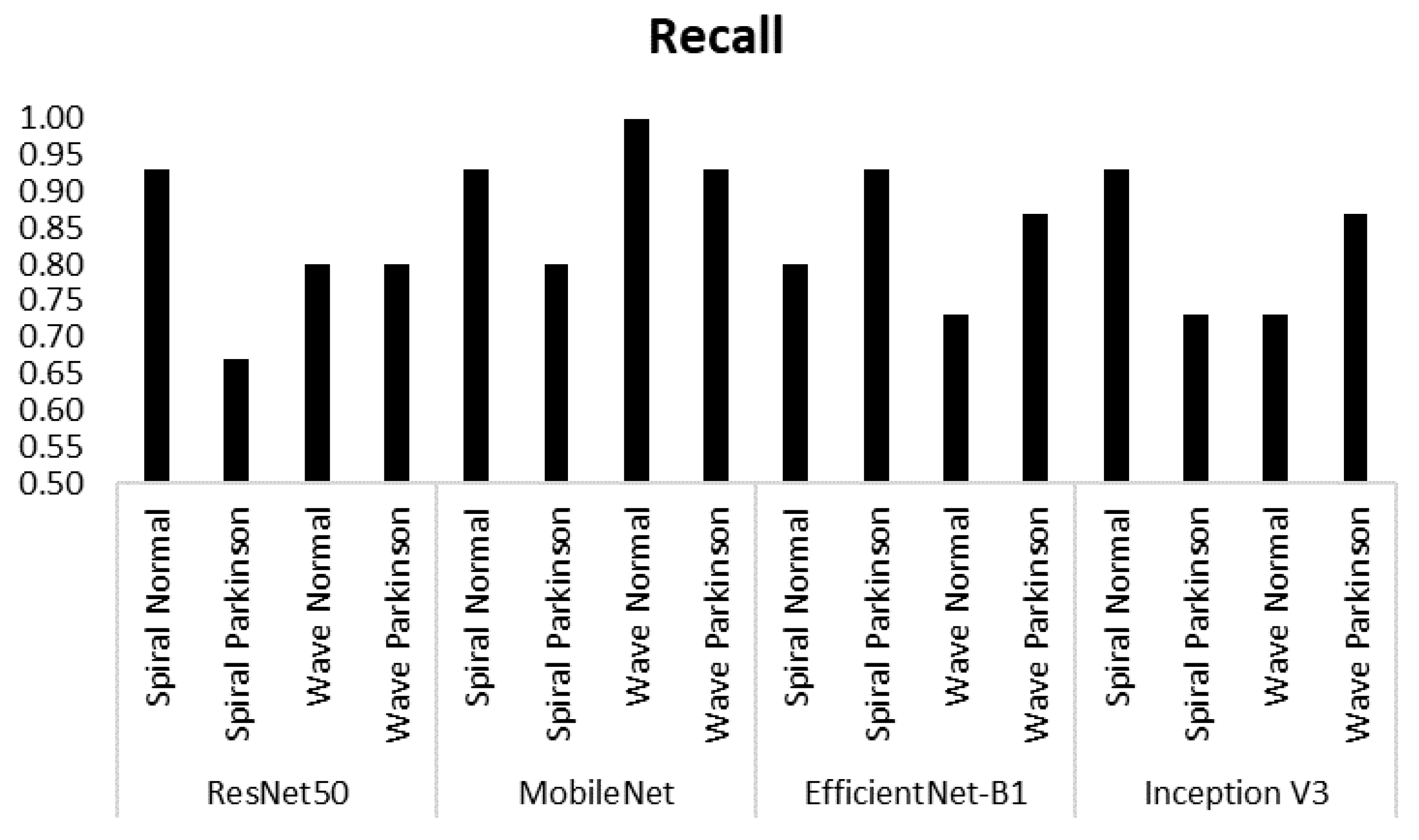

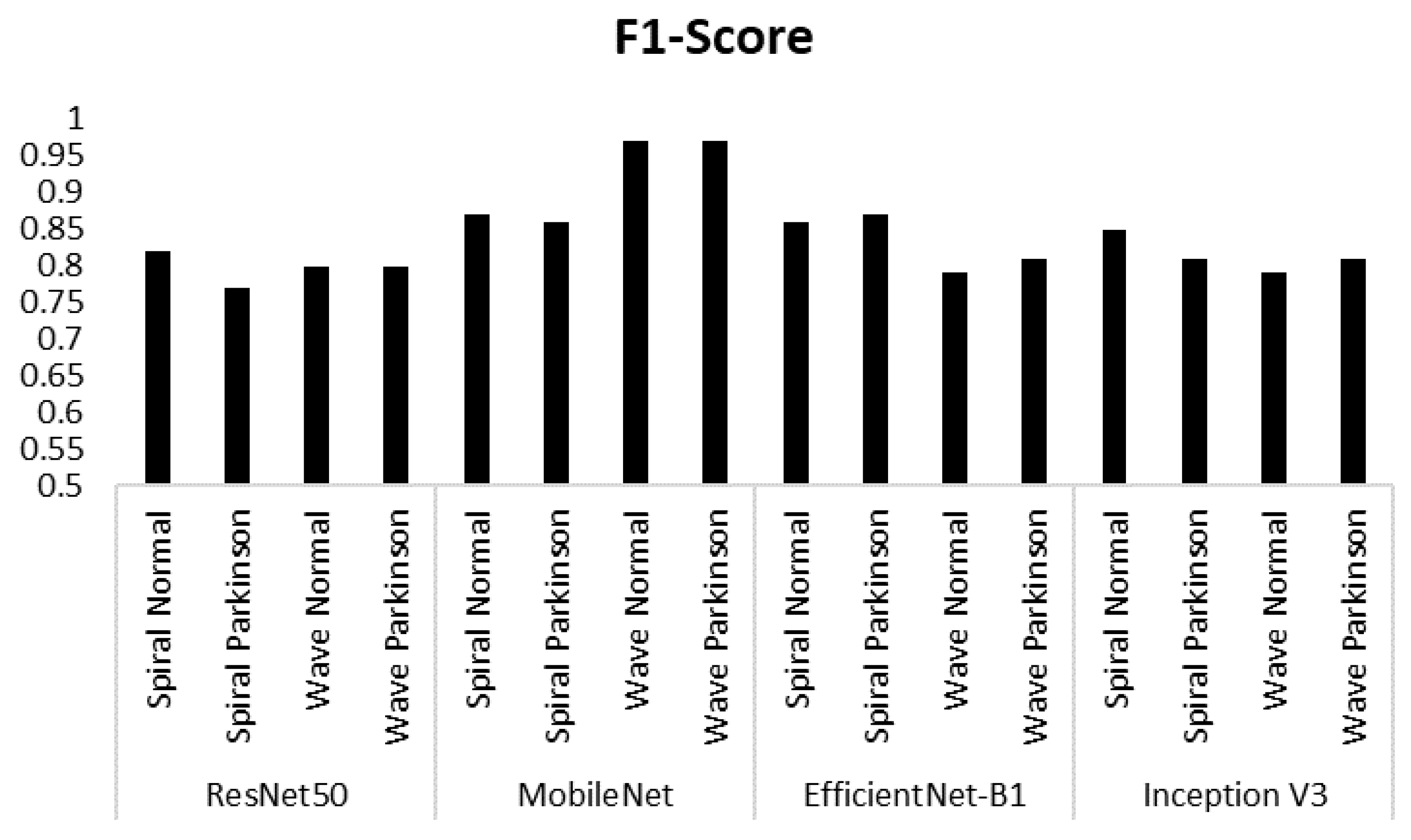

3.2. Precision, Recall and F1-Score

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Classes | Accuracy |
|---|---|---|
| ResNet50 | Spiral Normal, Spiral Parkinson, Wave Normal, Wave Parkinson | 0.80 |
| MobileNet | Spiral Normal, Spiral Parkinson, Wave Normal, Wave Parkinson | 0.92 |
| EfficientNet-B1 | Spiral Normal, Spiral Parkinson, Wave Normal, Wave Parkinson | 0.83 |
| Inception V3 | Spiral Normal, Spiral Parkinson, Wave Normal, Wave Parkinson | 0.82 |
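
As a rough consistency check on the accuracy figures above: when every class contributes the same number of test images, overall accuracy equals the unweighted mean of the per-class recall values. The sketch below assumes balanced classes (the class sizes are not stated in this excerpt) and uses the per-class recall reported in the precision/recall table. The names `mean_recall` and `accuracy_gaps` are illustrative and not part of the original study.

```python
# Per-class recall in the order: Spiral Normal, Spiral Parkinson,
# Wave Normal, Wave Parkinson. Values copied from the reported tables.
RECALL = {
    "ResNet50": [0.93, 0.67, 0.80, 0.80],
    "MobileNet": [0.93, 0.80, 1.00, 0.93],
    "EfficientNet-B1": [0.80, 0.93, 0.73, 0.87],
    "Inception V3": [0.93, 0.73, 0.73, 0.87],
}

# Overall accuracy per model, copied from the accuracy table.
ACCURACY = {
    "ResNet50": 0.80,
    "MobileNet": 0.92,
    "EfficientNet-B1": 0.83,
    "Inception V3": 0.82,
}


def mean_recall(recalls):
    """Unweighted mean of per-class recall (macro-averaged recall)."""
    return sum(recalls) / len(recalls)


def accuracy_gaps(recall_table, accuracy_table):
    """Absolute gap between macro recall and reported accuracy, per model.

    ASSUMPTION: classes are balanced, so macro recall should match accuracy
    up to the rounding of the two-decimal table entries.
    """
    return {
        model: abs(mean_recall(recalls) - accuracy_table[model])
        for model, recalls in recall_table.items()
    }


if __name__ == "__main__":
    for model, gap in accuracy_gaps(RECALL, ACCURACY).items():
        print(
            f"{model}: mean recall {mean_recall(RECALL[model]):.3f}, "
            f"reported accuracy {ACCURACY[model]:.2f}, gap {gap:.3f}"
        )
```

Under the balanced-class assumption, every gap stays below 0.01, which is within the rounding of the two-decimal table entries.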

| Model | Class | Precision | Recall | F1-Score |
|---|---|---|---|---|
| ResNet50 | Spiral Normal | 0.74 | 0.93 | 0.82 |
| ResNet50 | Spiral Parkinson | 0.91 | 0.67 | 0.77 |
| ResNet50 | Wave Normal | 0.80 | 0.80 | 0.80 |
| ResNet50 | Wave Parkinson | 0.80 | 0.80 | 0.80 |
| MobileNet | Spiral Normal | 0.82 | 0.93 | 0.87 |
| MobileNet | Spiral Parkinson | 0.92 | 0.80 | 0.86 |
| MobileNet | Wave Normal | 0.94 | 1.00 | 0.97 |
| MobileNet | Wave Parkinson | 1.00 | 0.93 | 0.97 |
| EfficientNet-B1 | Spiral Normal | 0.92 | 0.80 | 0.86 |
| EfficientNet-B1 | Spiral Parkinson | 0.82 | 0.93 | 0.87 |
| EfficientNet-B1 | Wave Normal | 0.85 | 0.73 | 0.79 |
| EfficientNet-B1 | Wave Parkinson | 0.76 | 0.87 | 0.81 |
| Inception V3 | Spiral Normal | 0.78 | 0.93 | 0.85 |
| Inception V3 | Spiral Parkinson | 0.92 | 0.73 | 0.81 |
| Inception V3 | Wave Normal | 0.85 | 0.73 | 0.79 |
| Inception V3 | Wave Parkinson | 0.76 | 0.87 | 0.81 |
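
The F1-score is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The following is a minimal sketch of that formula, applied to the precision and recall values in the table above to see how closely they reproduce the reported F1-scores. The helper names `f1_score` and `max_f1_deviation` are hypothetical. Small mismatches are expected because the table values are already rounded to two decimals.

```python
# (precision, recall, reported F1) per model and class, copied from the table.
REPORTED = {
    "ResNet50": {
        "Spiral Normal": (0.74, 0.93, 0.82),
        "Spiral Parkinson": (0.91, 0.67, 0.77),
        "Wave Normal": (0.80, 0.80, 0.80),
        "Wave Parkinson": (0.80, 0.80, 0.80),
    },
    "MobileNet": {
        "Spiral Normal": (0.82, 0.93, 0.87),
        "Spiral Parkinson": (0.92, 0.80, 0.86),
        "Wave Normal": (0.94, 1.00, 0.97),
        "Wave Parkinson": (1.00, 0.93, 0.97),
    },
    "EfficientNet-B1": {
        "Spiral Normal": (0.92, 0.80, 0.86),
        "Spiral Parkinson": (0.82, 0.93, 0.87),
        "Wave Normal": (0.85, 0.73, 0.79),
        "Wave Parkinson": (0.76, 0.87, 0.81),
    },
    "Inception V3": {
        "Spiral Normal": (0.78, 0.93, 0.85),
        "Spiral Parkinson": (0.92, 0.73, 0.81),
        "Wave Normal": (0.85, 0.73, 0.79),
        "Wave Parkinson": (0.76, 0.87, 0.81),
    },
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def max_f1_deviation(table):
    """Largest absolute gap between recomputed and reported F1 in the table."""
    return max(
        abs(f1_score(p, r) - reported)
        for classes in table.values()
        for (p, r, reported) in classes.values()
    )


if __name__ == "__main__":
    for model, classes in REPORTED.items():
        for label, (p, r, reported) in classes.items():
            print(f"{model:16s} {label:17s} "
                  f"recomputed {f1_score(p, r):.3f}  reported {reported:.2f}")
    print(f"max deviation: {max_f1_deviation(REPORTED):.4f}")
```

Every recomputed value lands within 0.01 of the reported F1-score, which is consistent with the rounding of the inputs.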

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).