Submitted: 21 December 2023

Posted: 22 December 2023

Abstract

Keywords:

1. Introduction

1.1. Linked Open Data

1.2. SPARQL

1.3. WebGIS

1.4. Functions

1.5. Geocoding

1.6. Critical issues

2. Related works

2.1. Cammini d’Italia (Atlas of Paths)

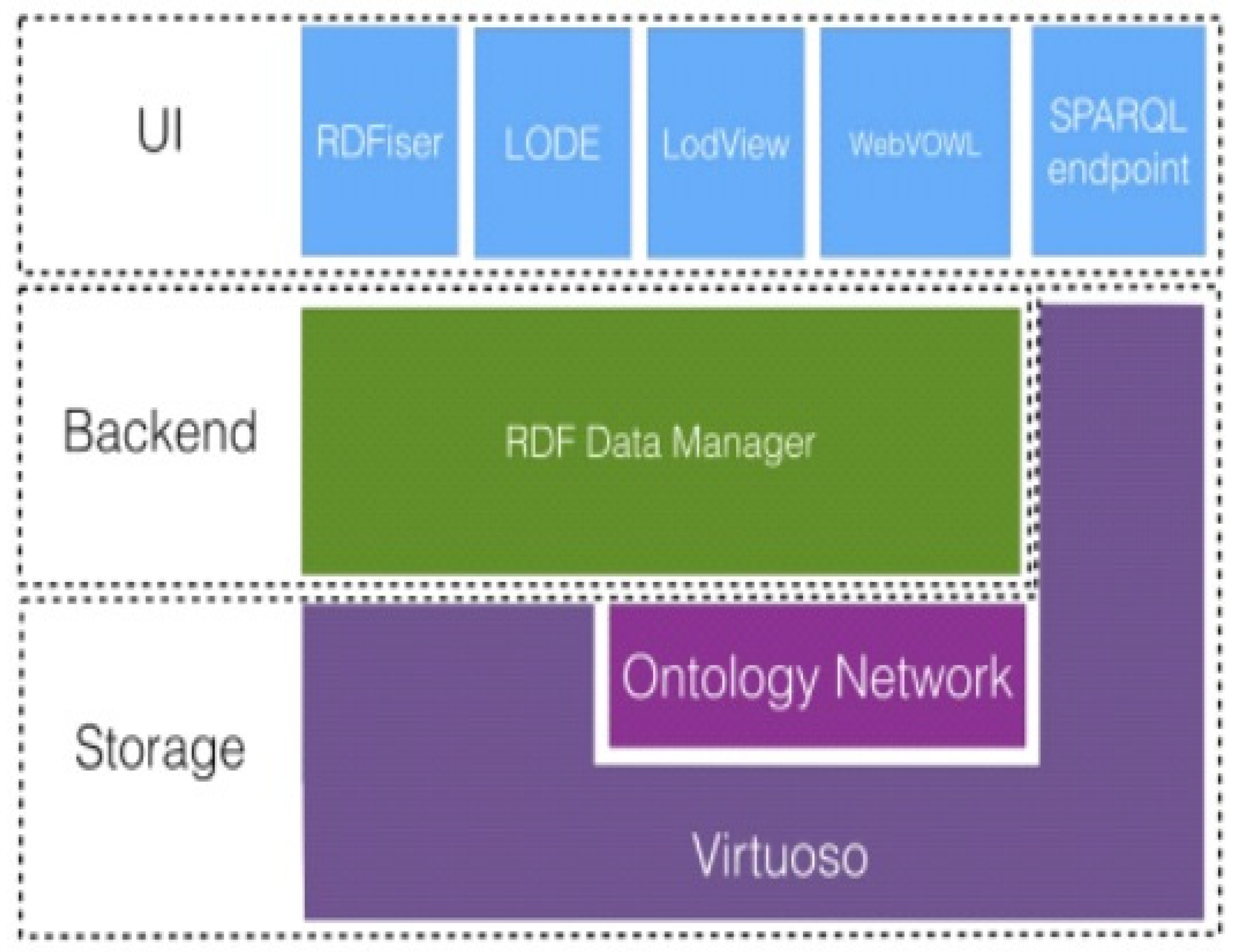

2.1.1. Architecture

2.1.2. Ontologies

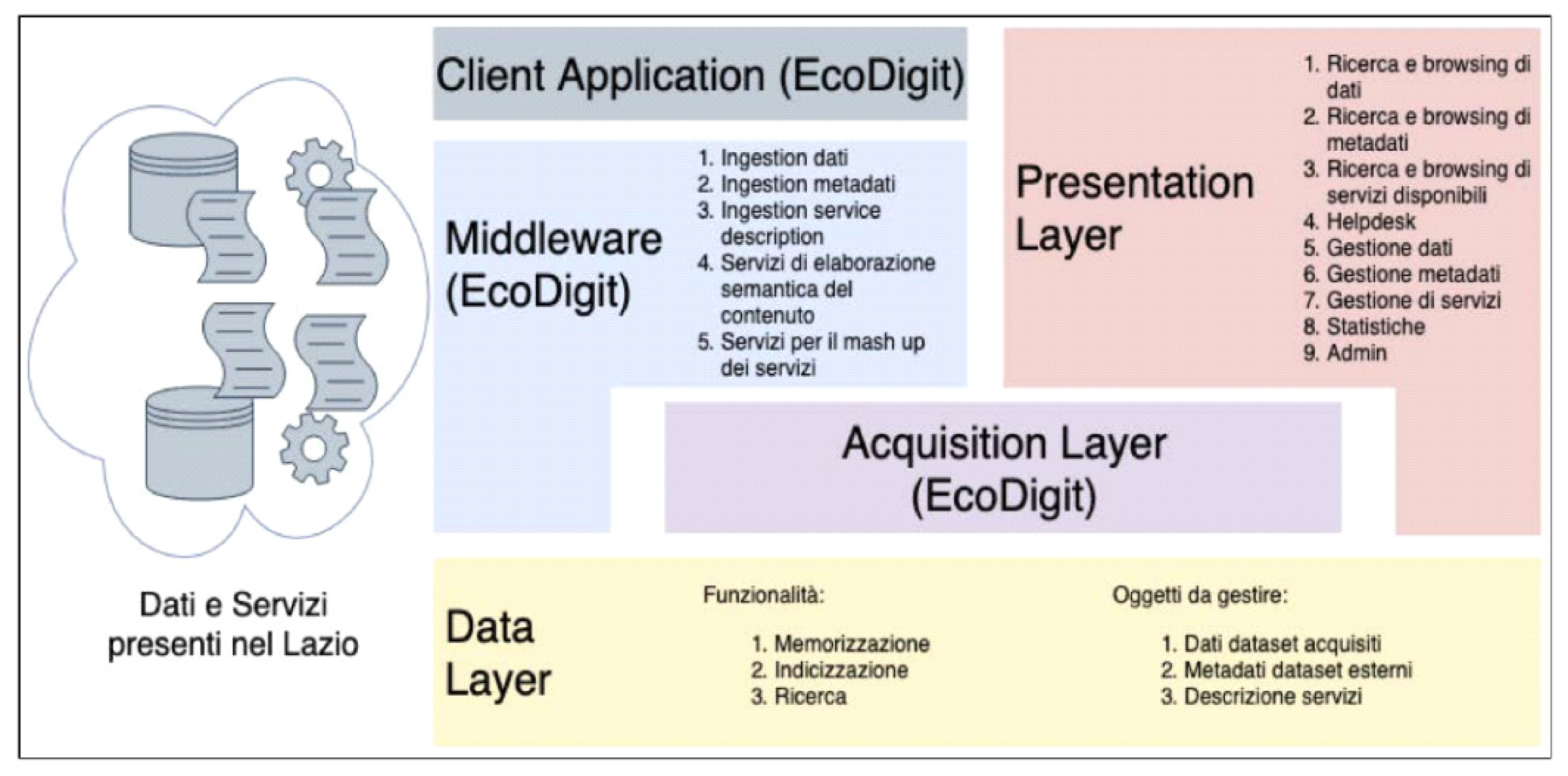

2.2. EcoDigit

2.2.1. Architecture

2.2.2. Ontologies

2.2.3. Prototypes

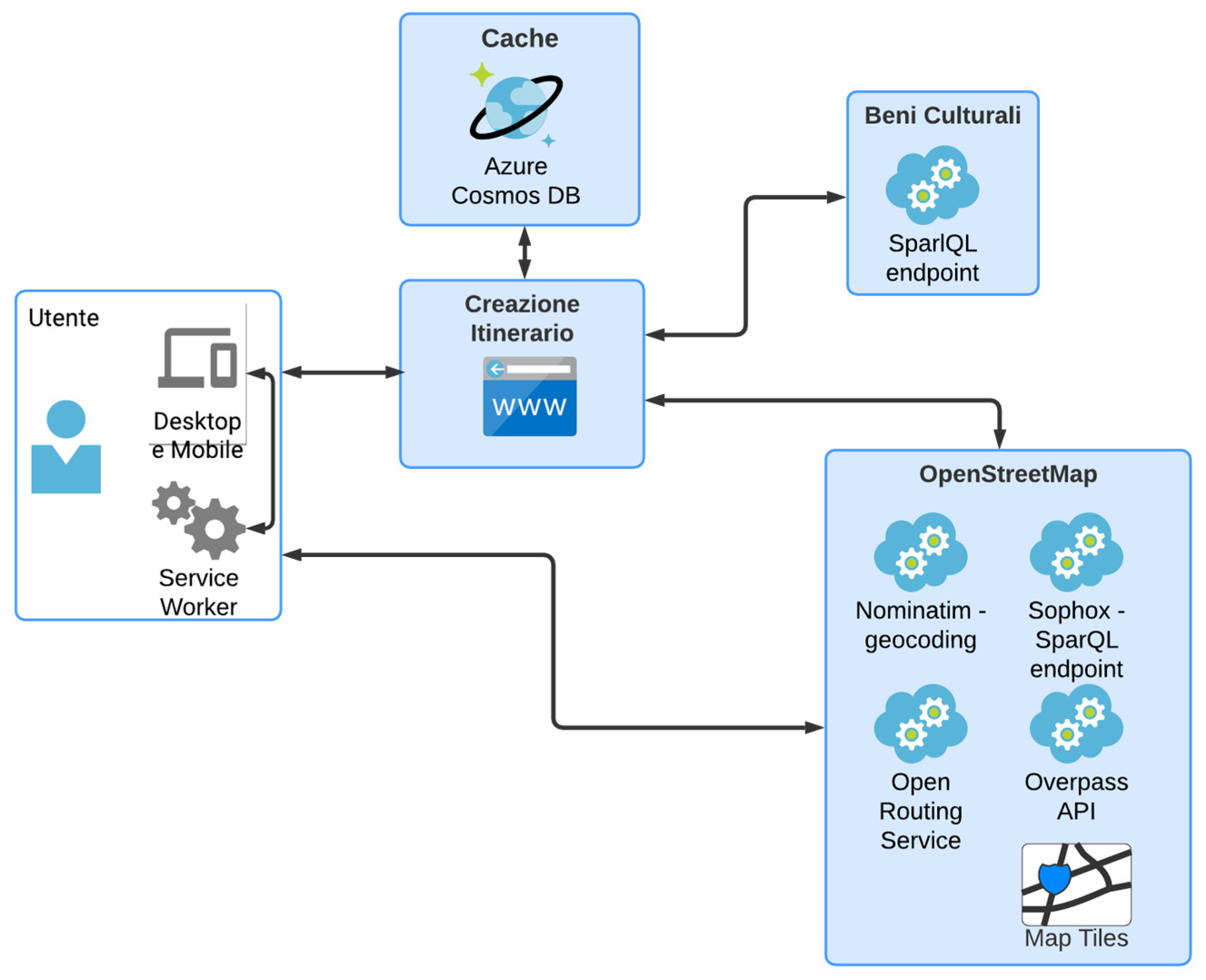

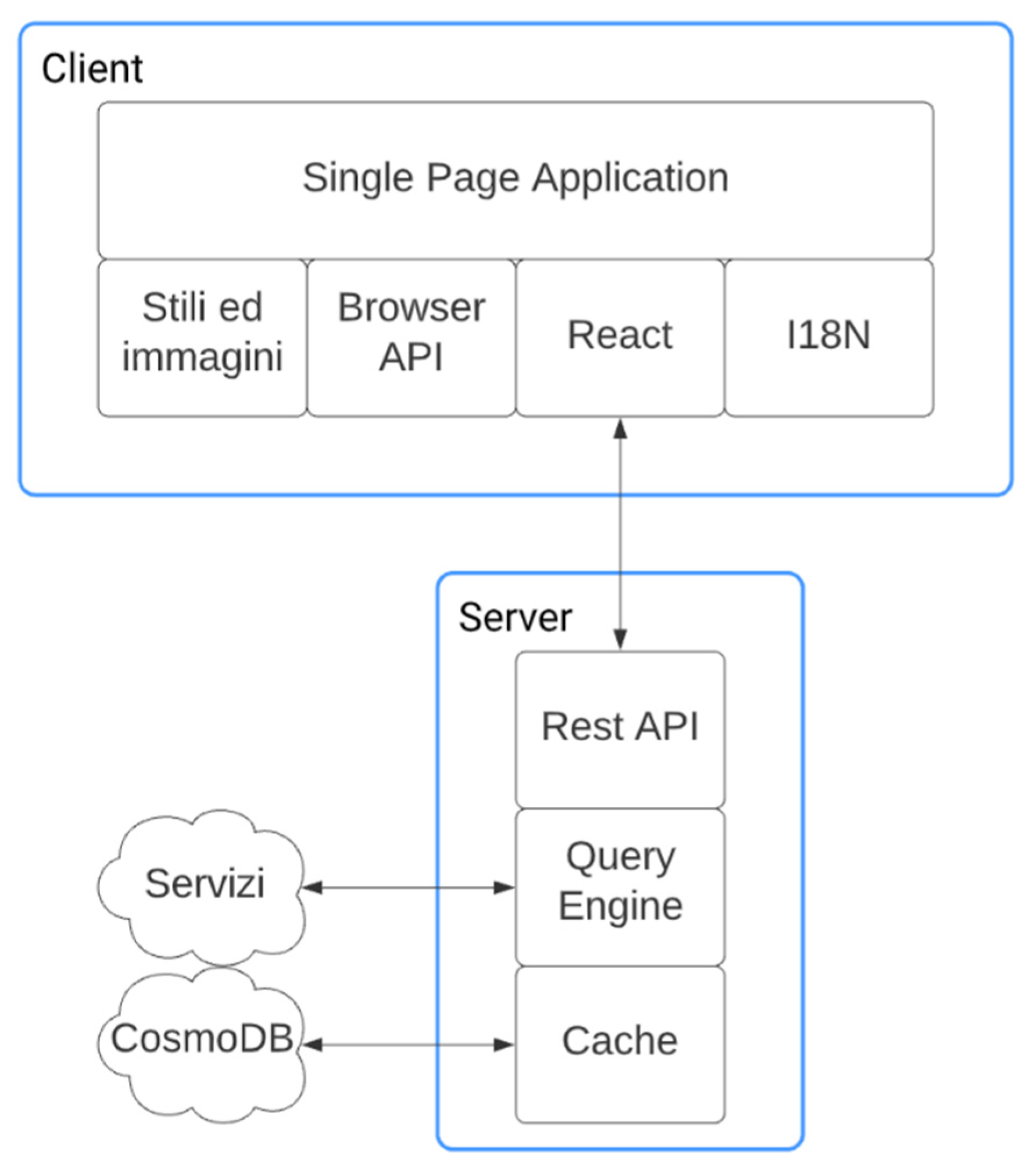

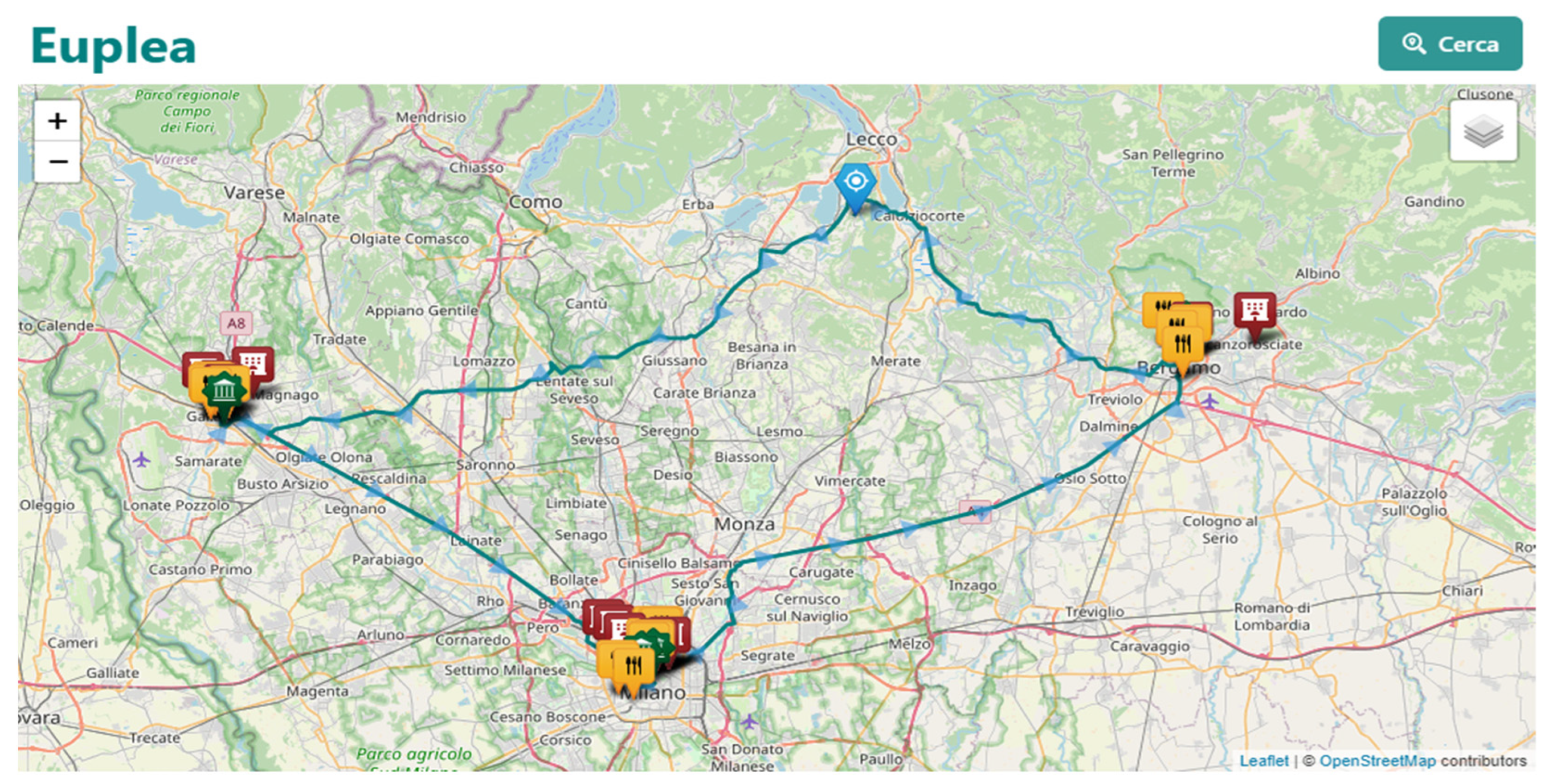

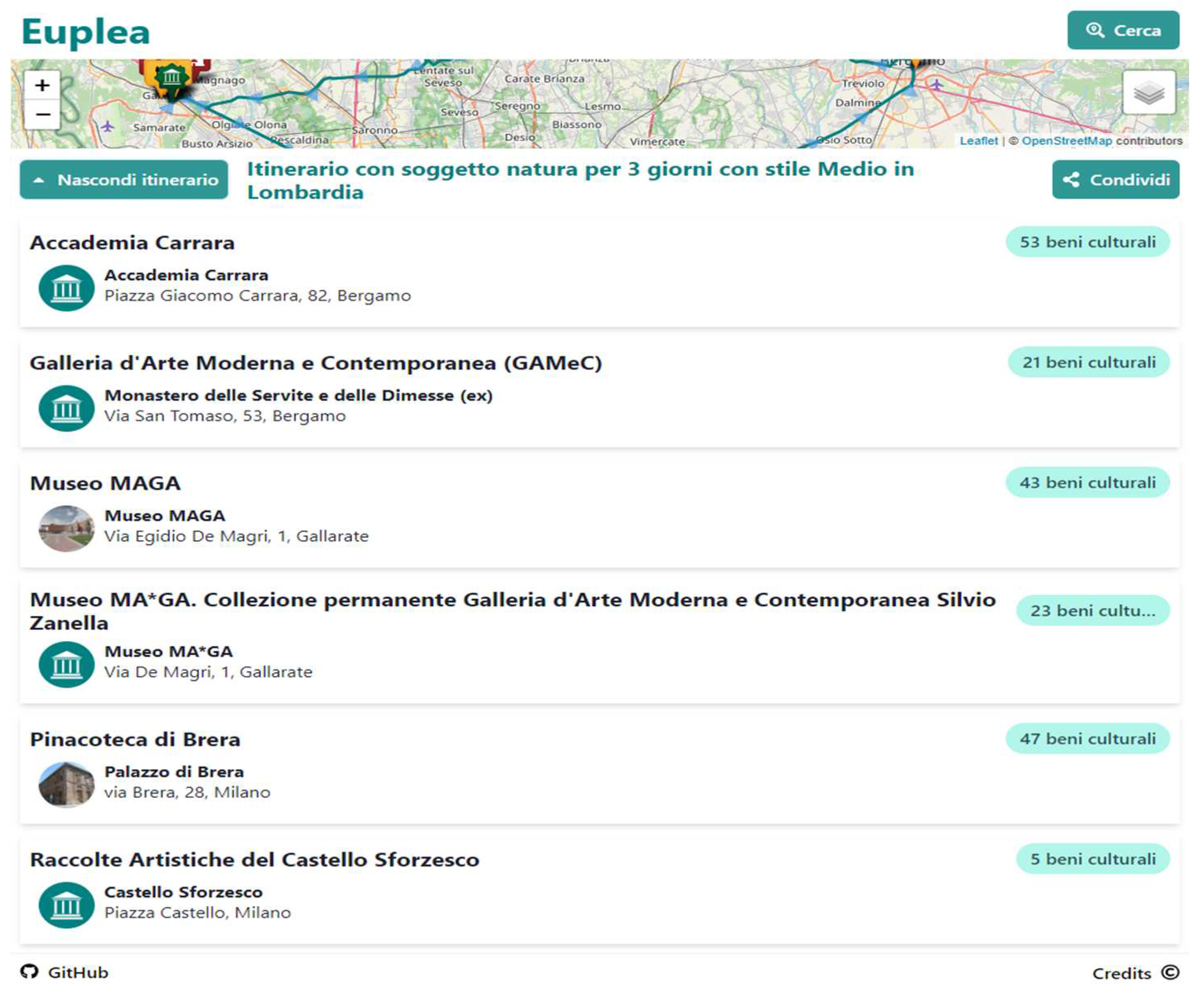

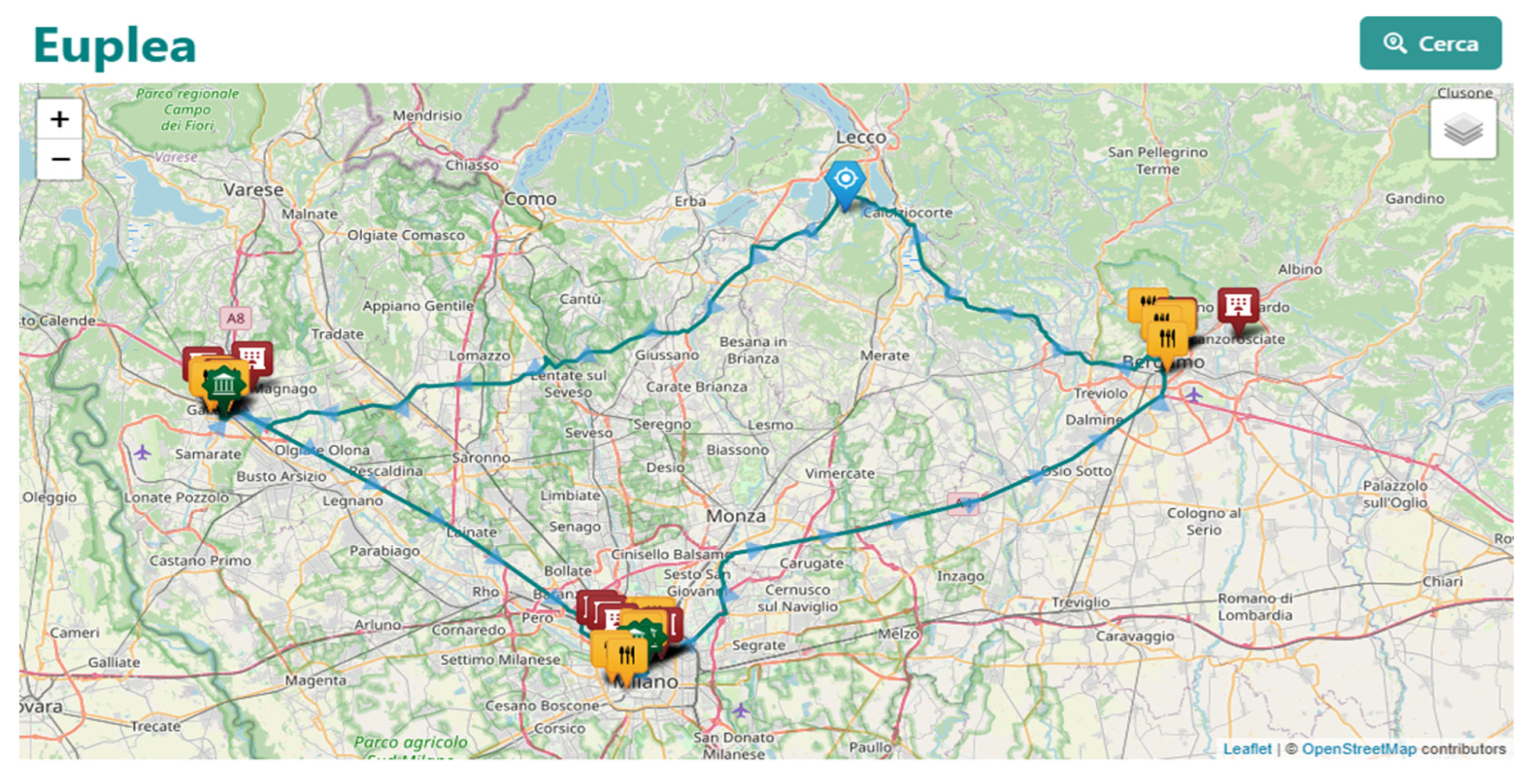

3. Case Study: Euplea framework

3.1. Innovation

3.2. Methodology

3.3. Selection of places of interest

3.3.1. Search for culture points

3.3.2. Identification of the Italian regions

3.3.3. Non-homogeneous data

3.3.4. Geocoding

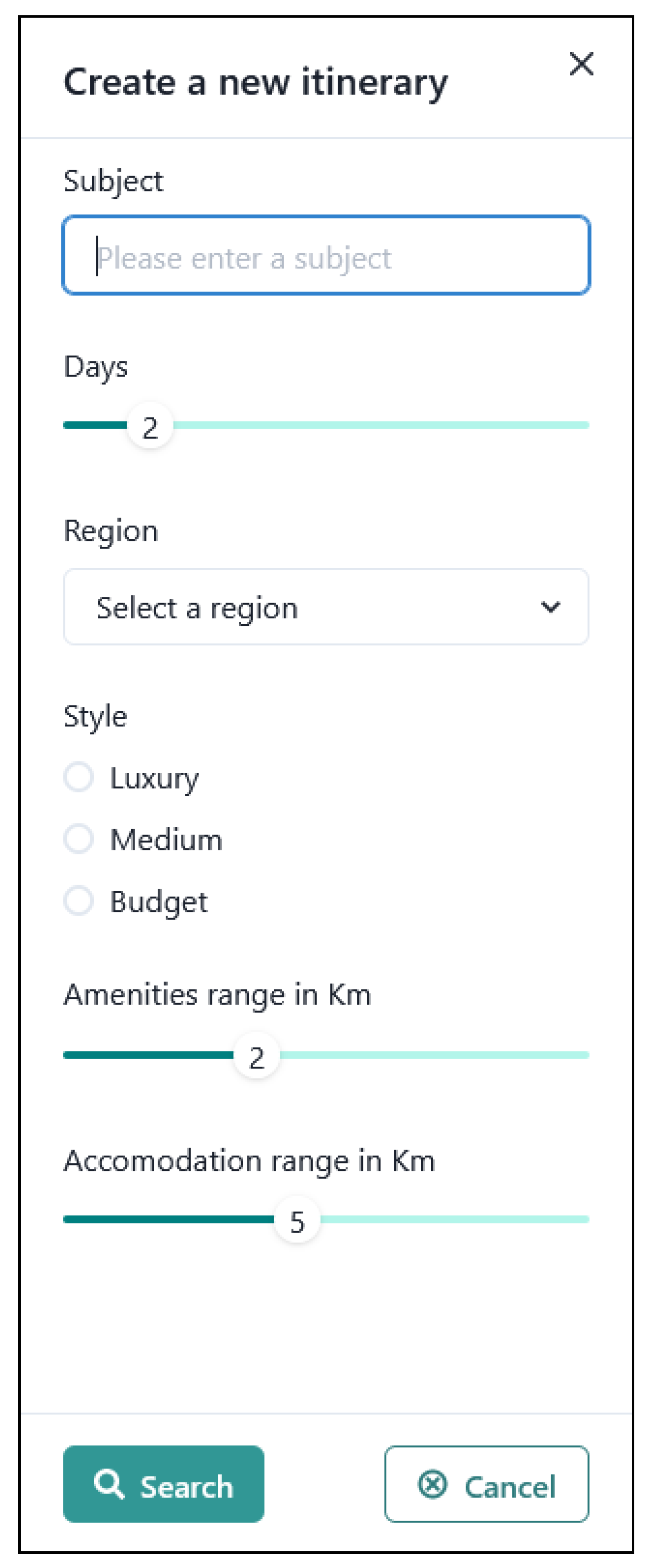

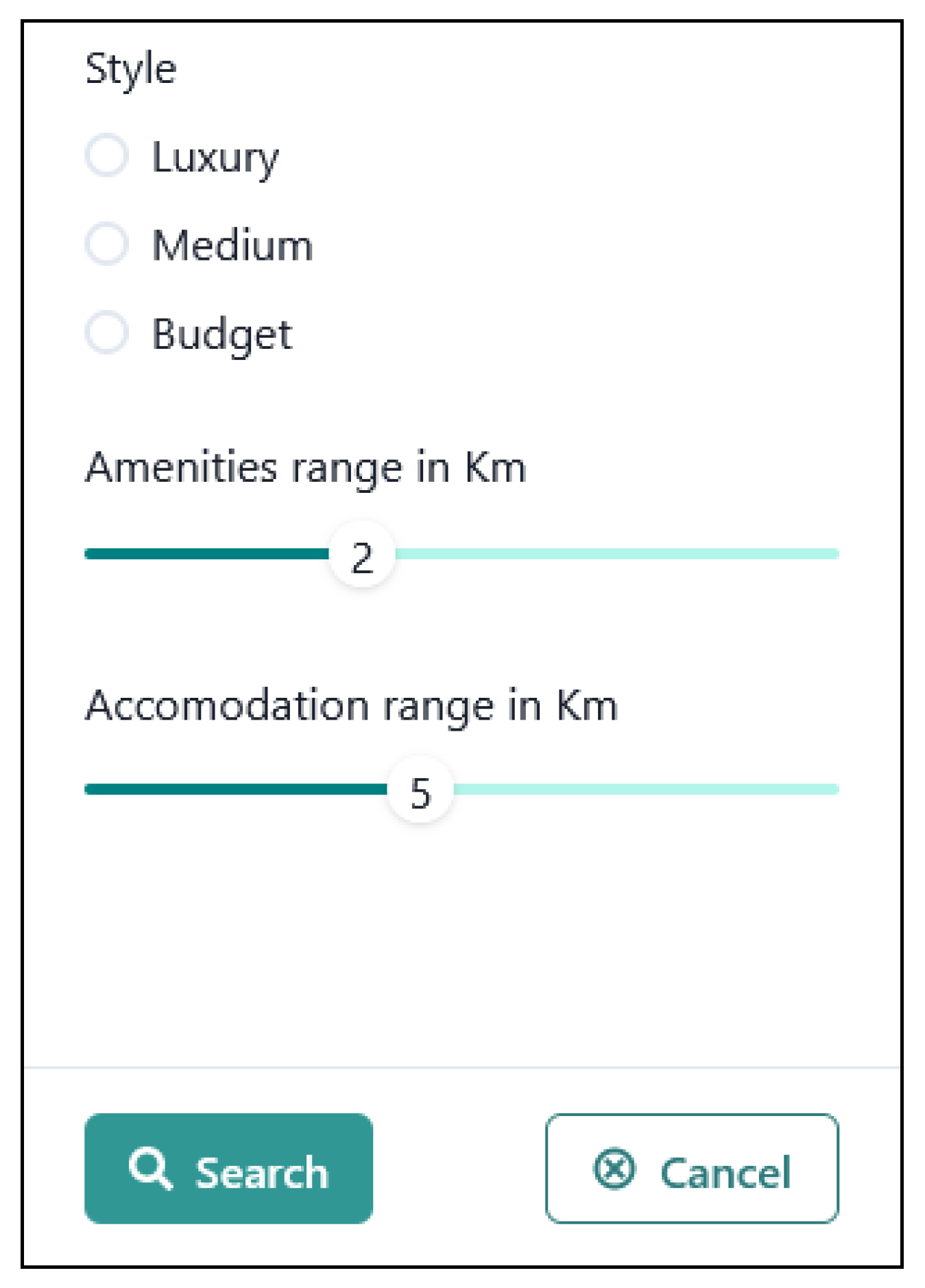

3.3.5. Itinerary creation

3.3.6. Selection of services and accommodation

3.3.7. Cache

3.3.8. WebGIS presentation

3.3.9. Layers

3.3.10. Routing

4. Conclusions and Future Work

4.1. Conclusions

4.2. Future developments

- Use additional Linked Open Data sources

- Introduction of other selection criteria

- Display itineraries created as Linked Open Data

- Analysis of the itineraries created and user feedback

- Integration with virtual assistants

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Rating | Description |
|---|---|
| ★ | Available on the web (in any format) under an open license, to qualify as Open Data |
| ★★ | All the above, plus available as machine-readable structured data (e.g., an Excel spreadsheet instead of an image scan of a table) |
| ★★★ | All the above, plus a non-proprietary format (e.g., CSV instead of Excel) |
| ★★★★ | All the above, plus use of open W3C standards (RDF and SPARQL) to identify things, so that people can point at your data |
| ★★★★★ | All the above, plus links from your data to other people’s data to provide context |
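The scheme is cumulative: each star is awarded only if every lower level is also satisfied. A minimal Python sketch can illustrate this. The `Dataset` fields and the `star_rating` function are hypothetical names introduced for illustration only. They are not part of the Euplea framework or of any W3C tool, and reducing each criterion to a single boolean is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    """Hypothetical description of a published dataset (illustrative fields only)."""
    on_web_with_open_license: bool   # 1 star: on the web under an open license
    machine_readable: bool           # 2 stars: structured, machine-readable data
    non_proprietary_format: bool     # 3 stars: e.g. CSV rather than Excel
    uses_w3c_standards: bool         # 4 stars: RDF URIs, queryable with SPARQL
    linked_to_other_data: bool       # 5 stars: links to other people's data


def star_rating(ds: Dataset) -> int:
    """Return the 5-star Linked Open Data rating (0-5).

    A level counts only if all lower levels are met, mirroring the
    cumulative "All the above, plus ..." wording of the scheme.
    """
    criteria = [
        ds.on_web_with_open_license,
        ds.machine_readable,
        ds.non_proprietary_format,
        ds.uses_w3c_standards,
        ds.linked_to_other_data,
    ]
    stars = 0
    for met in criteria:
        if not met:
            break  # a missing level caps the rating, whatever comes after it
        stars += 1
    return stars


# Usage: a CSV file published under an open license, not yet converted to RDF
csv_dataset = Dataset(True, True, True, False, False)
print(star_rating(csv_dataset))  # 3
```

Stopping at the first unmet criterion is what makes the rating cumulative. For example, a dataset that links to external resources but is only available as a scanned image still rates a single star.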

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).