Submitted: 07 December 2023

Posted: 07 December 2023

Abstract

Keywords:

1. Introduction

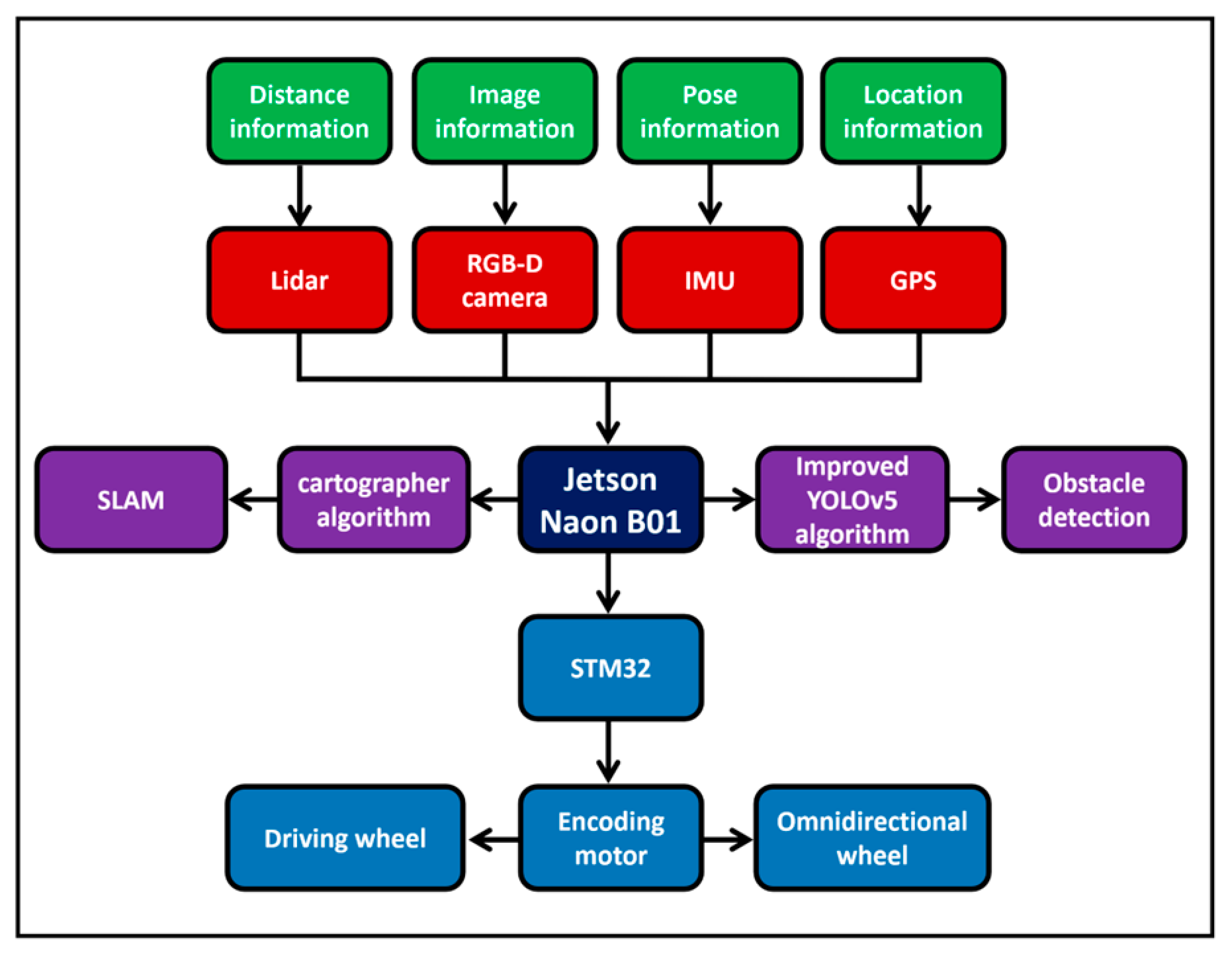

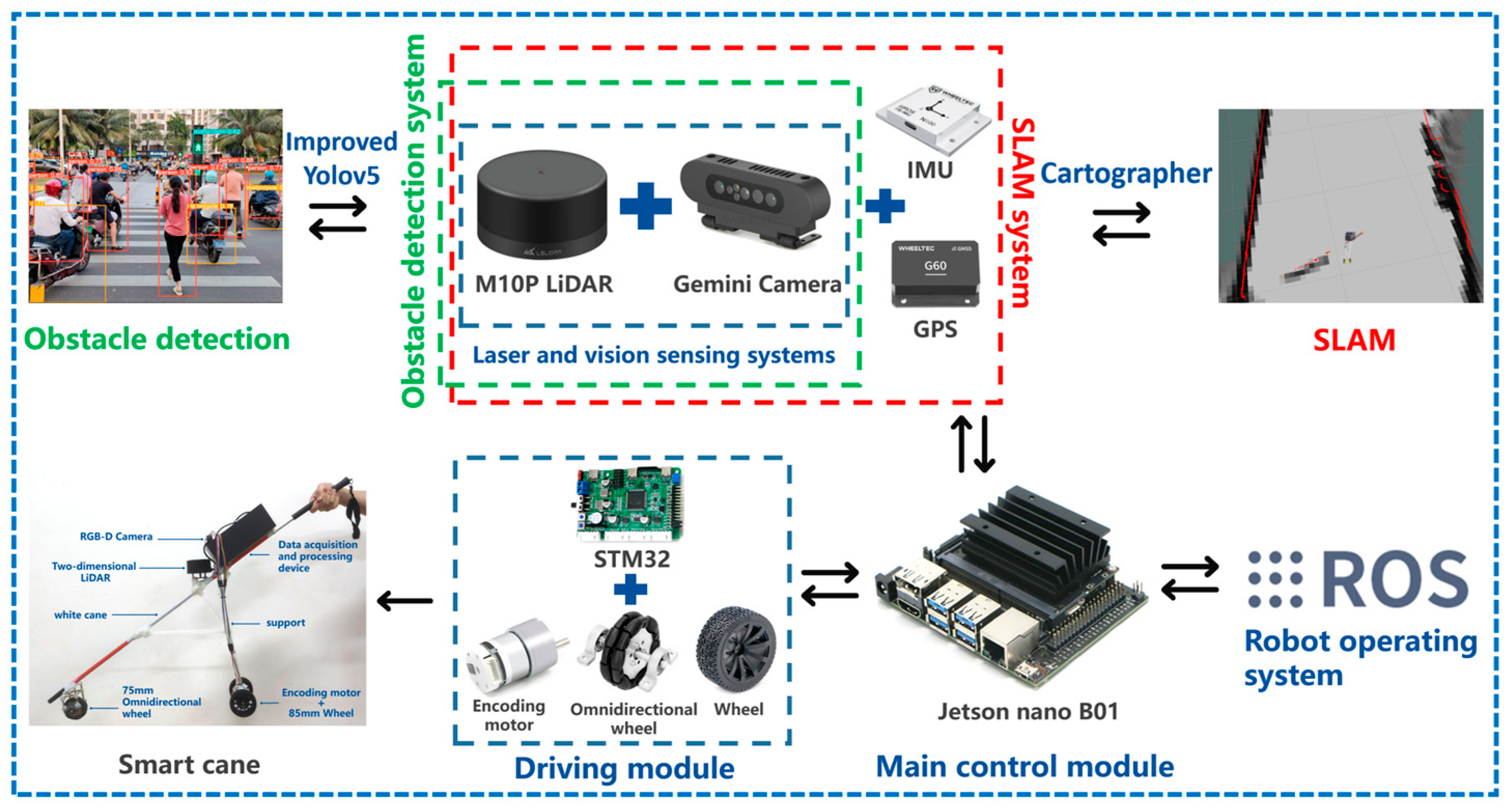

2. System design

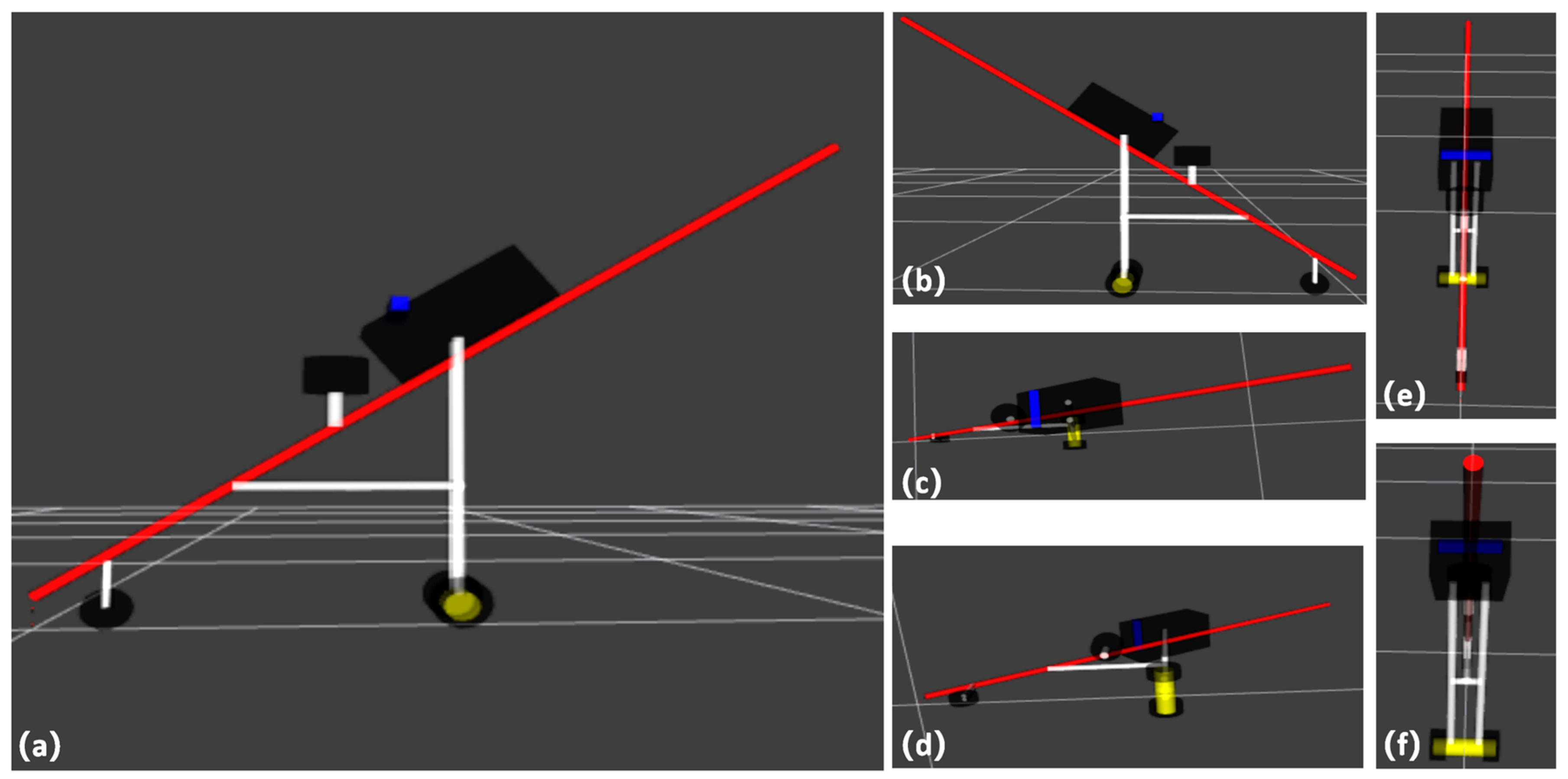

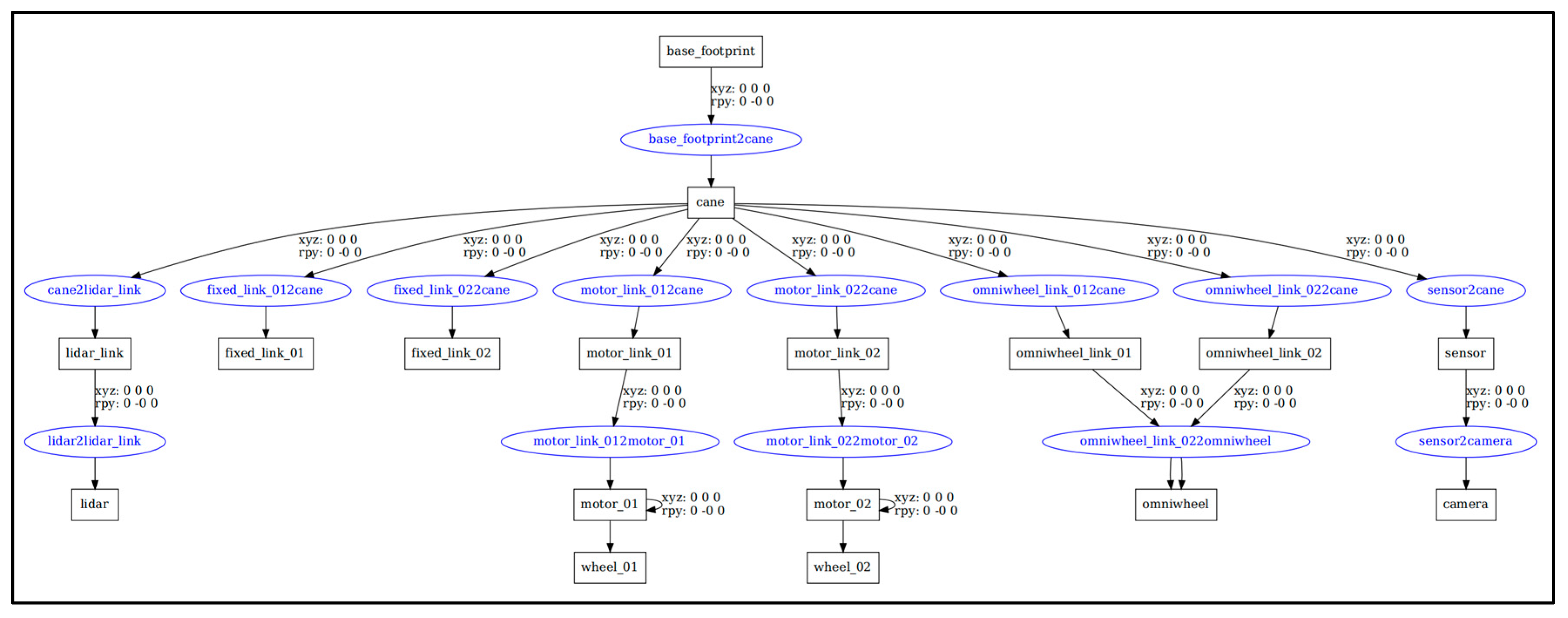

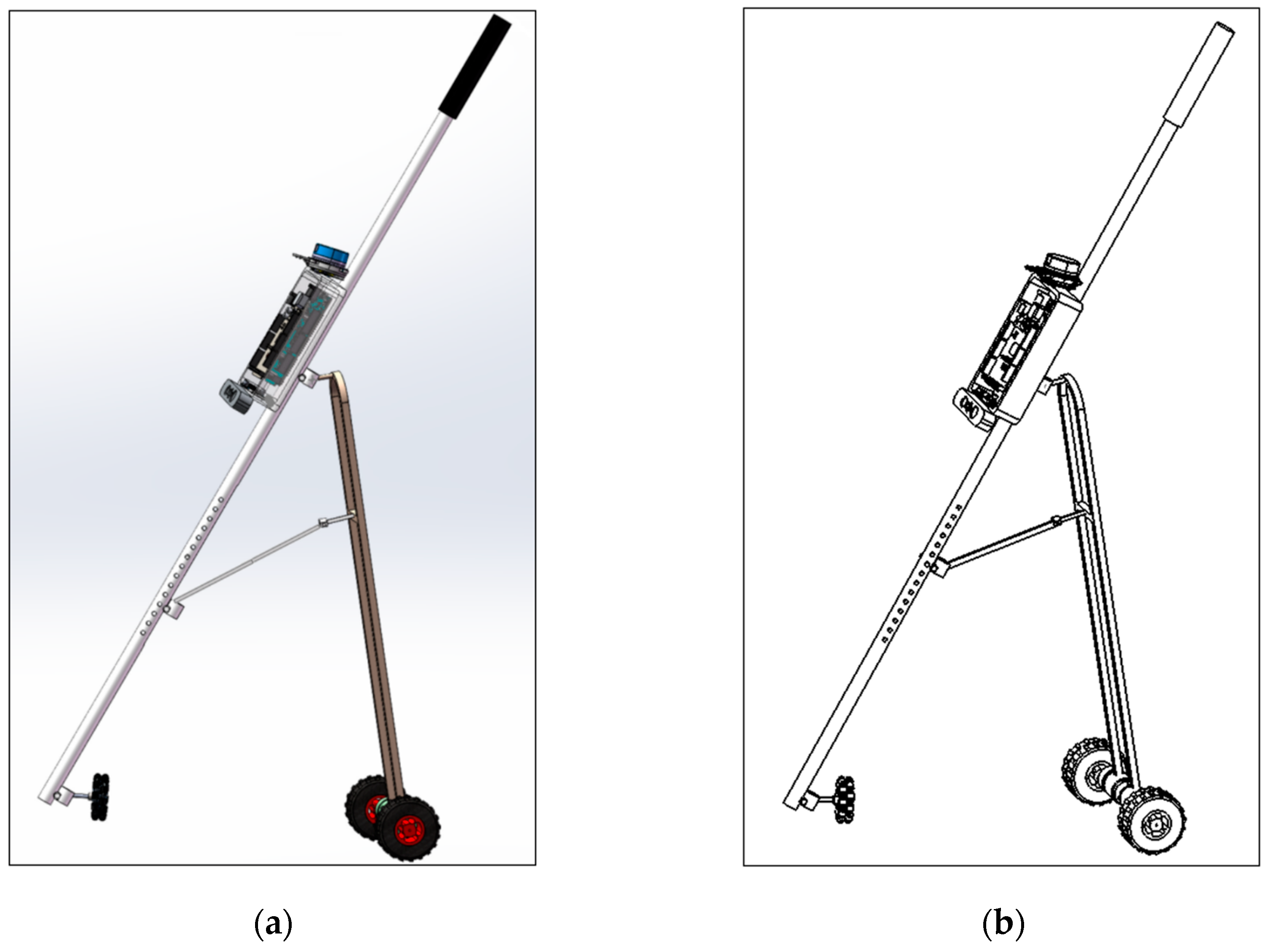

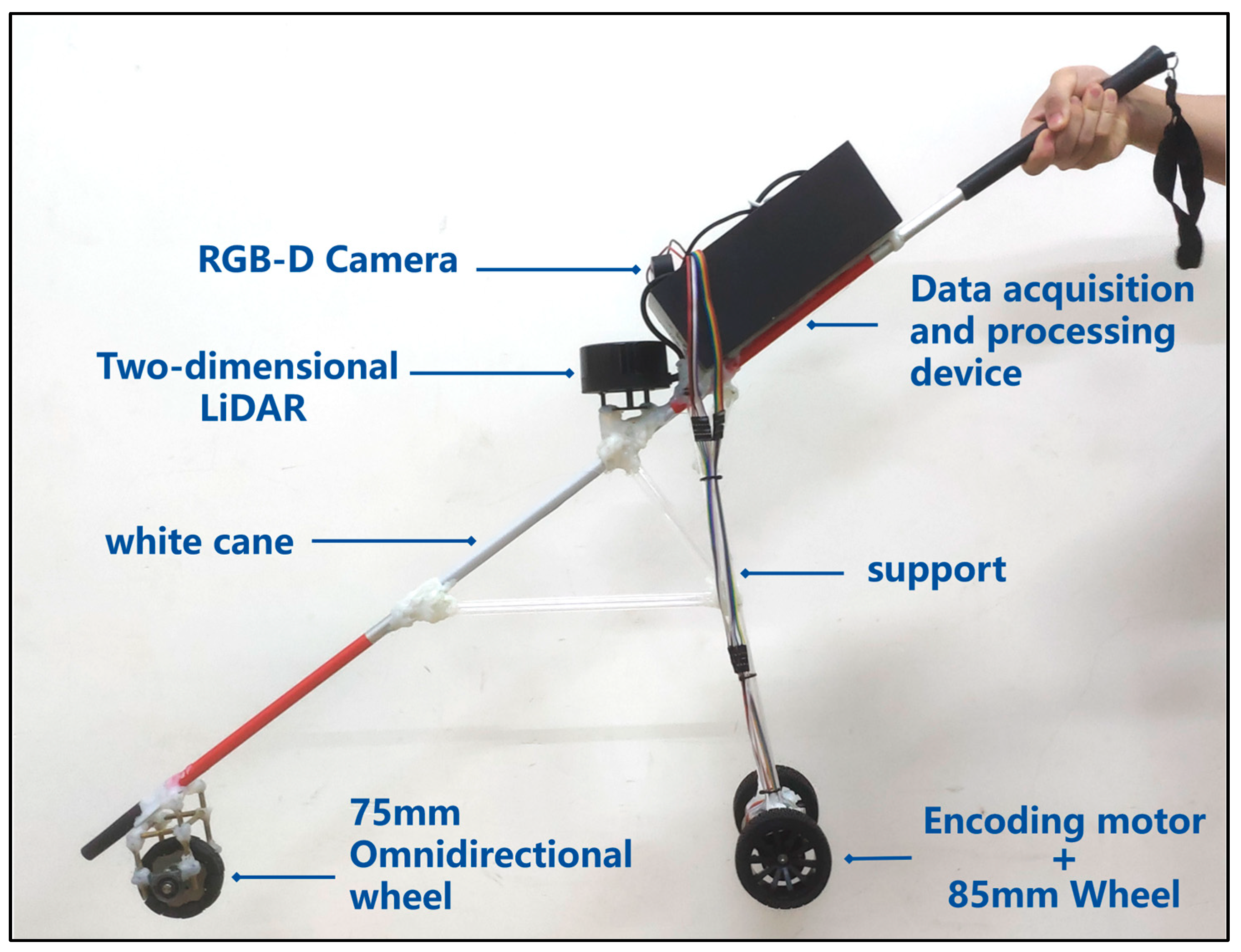

2.1. Smart cane structure design

2.2. Smart cane system hardware

| Hardware | Hardware type | Parameters and dimensions |
| --- | --- | --- |
| Main control module | Jetson Nano B01 (4 GB) | CPU: ARM Cortex-A57; GPU: 128-core Maxwell |
| 2D LiDAR | Leishen Intelligent System M10P TOF | Detection distance radius: 0–25 m; measurement accuracy: ±3 cm; detection angle: 360°; scanning frequency: 10 Hz |
| RGB-D camera | ORBBEC Gemini Pro | Detection accuracy: ±5 mm at 1 m; detection field of view: H 71.0° × V 56.7° |
| IMU | WHEELTECN 100N | Static accuracy: 0.05° RMS; dynamic accuracy: 0.1° RMS |
| GPS | WHEELTEC G60 | Positioning accuracy: 2.5 m |
| Microcontroller | STM32 | STM32F407VET6 |
| Encoding motor | WHEELTECN GMR | 500-line, AB-phase GMR |
| Omnidirectional wheel | WHEELTEC omni wheel | Diameter: 75 mm; width: 25 mm |
| Wheel | WHEELTEC 85mm | Diameter: 85 mm; width: 33.4 mm; coupling aperture: 6 mm |
| Battery | 12 V, 9800 mAh | Size: 98.6 × 64 × 29 mm³ |
| White cane | j&x White cane | Length: 116 cm; diameter: 1.5 cm |

2.3. Working process of the intelligent guide system

3. Materials and Methods

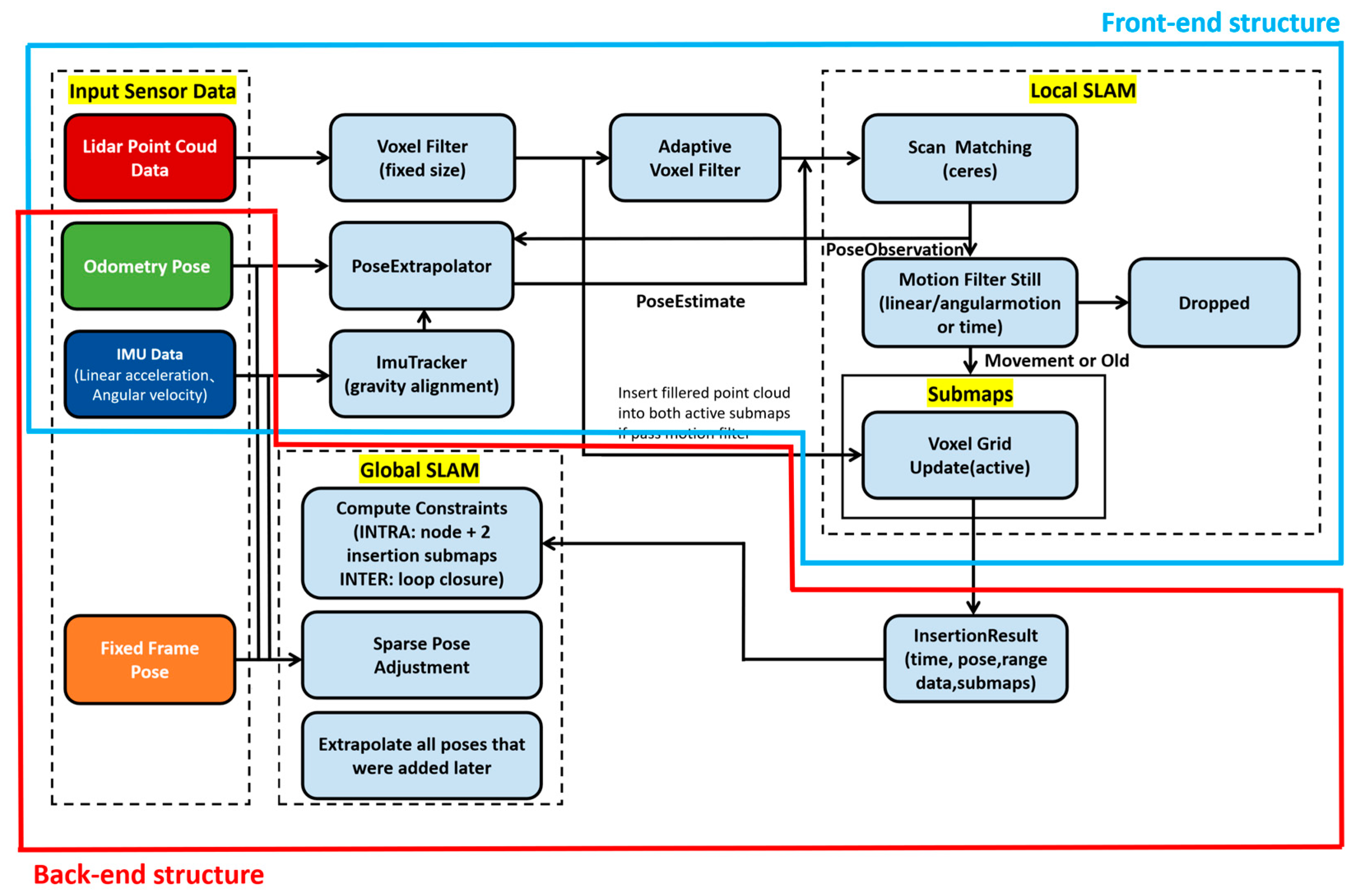

3.1. Cartographer algorithm

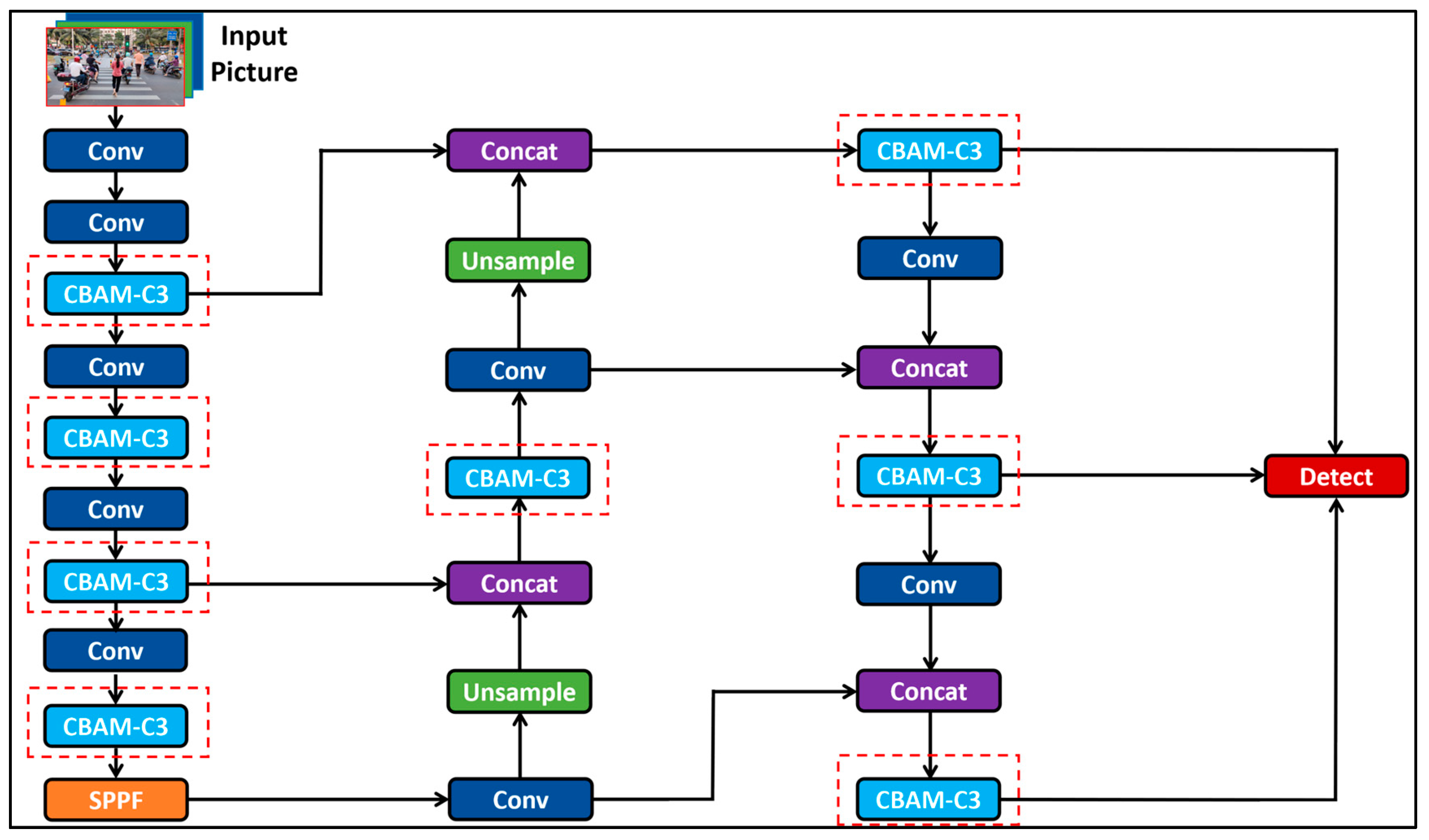

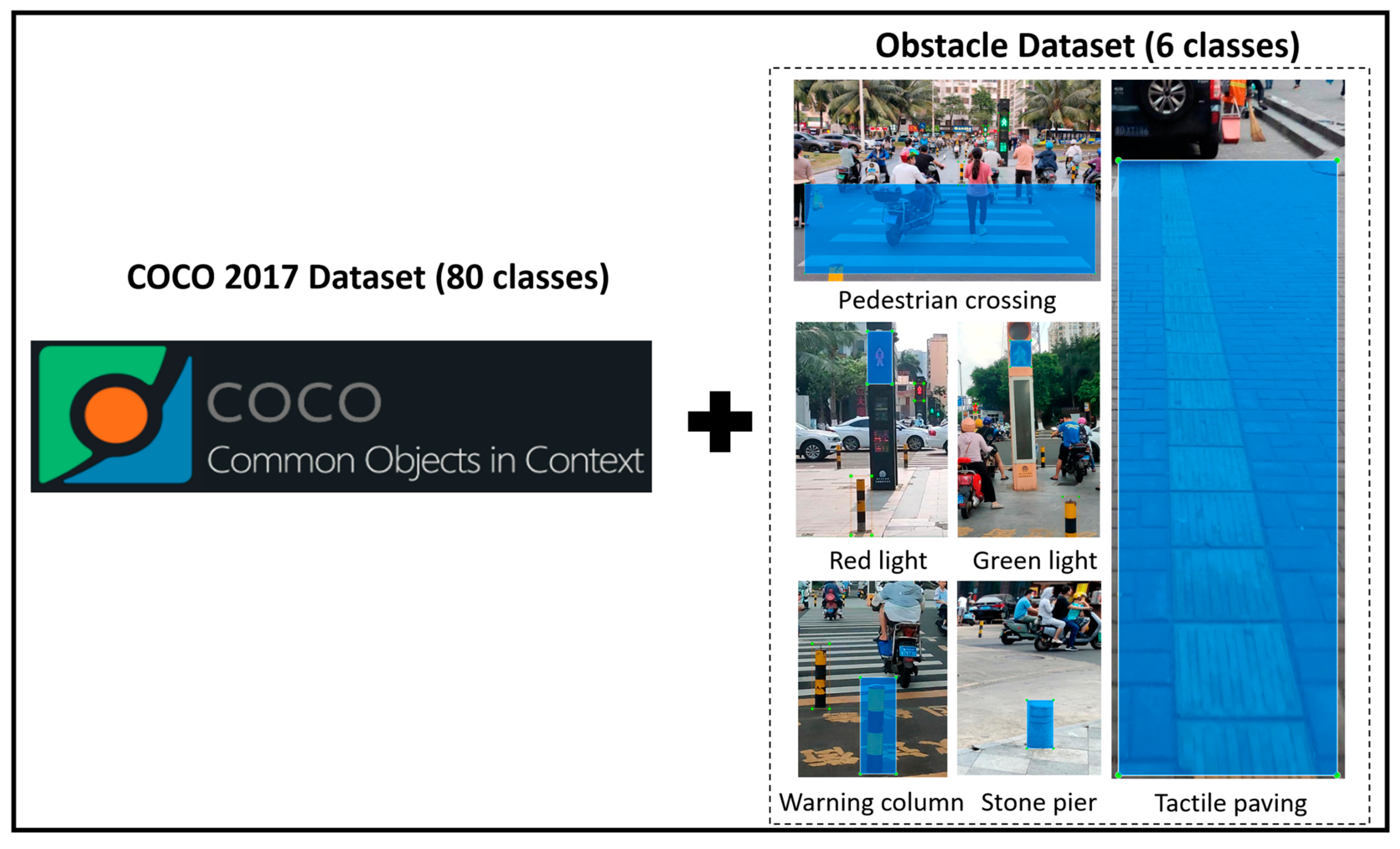

3.2. Improved YOLOv5 algorithm

4. Experiment and Results

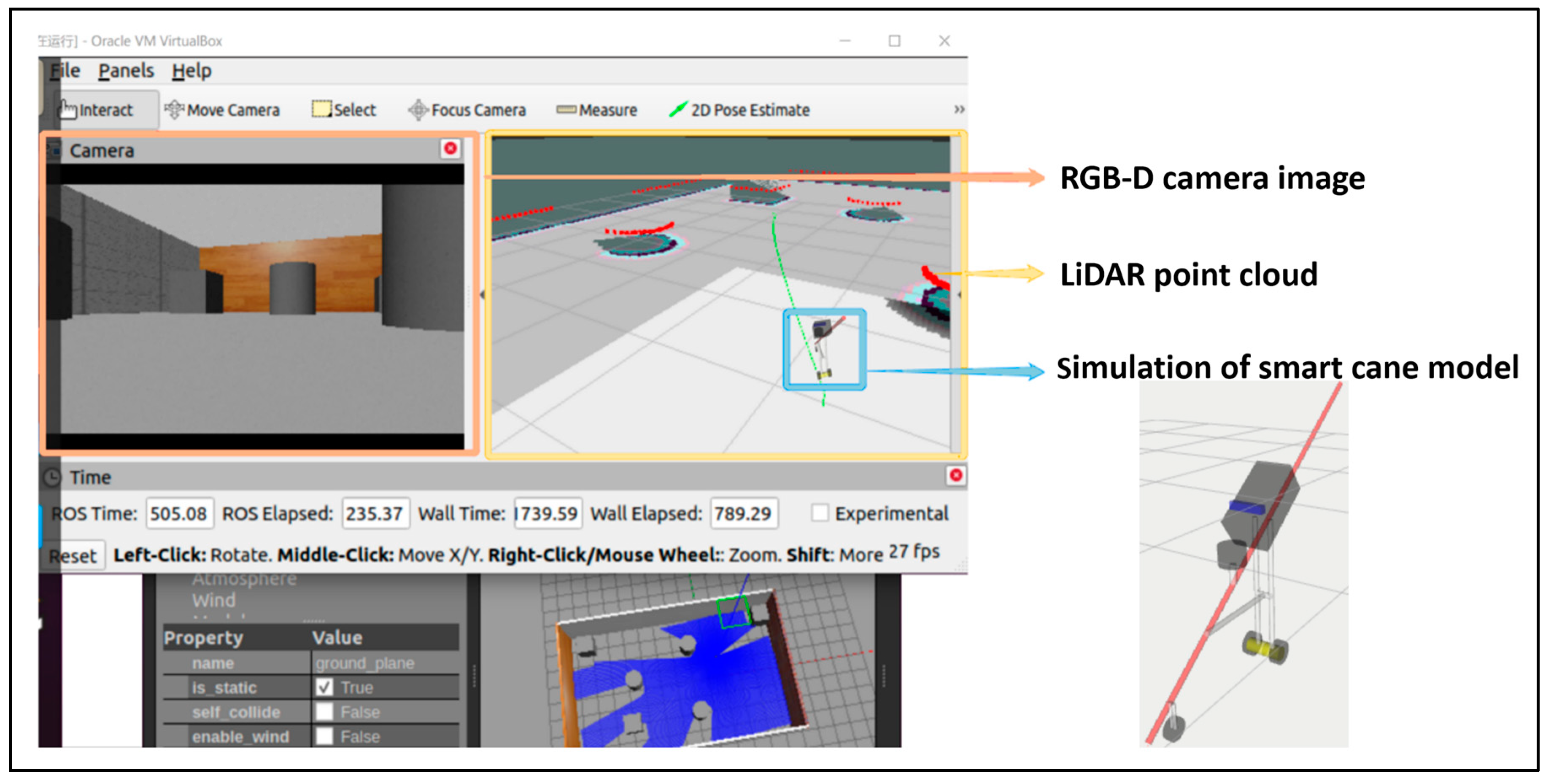

4.1. Simulation experiment

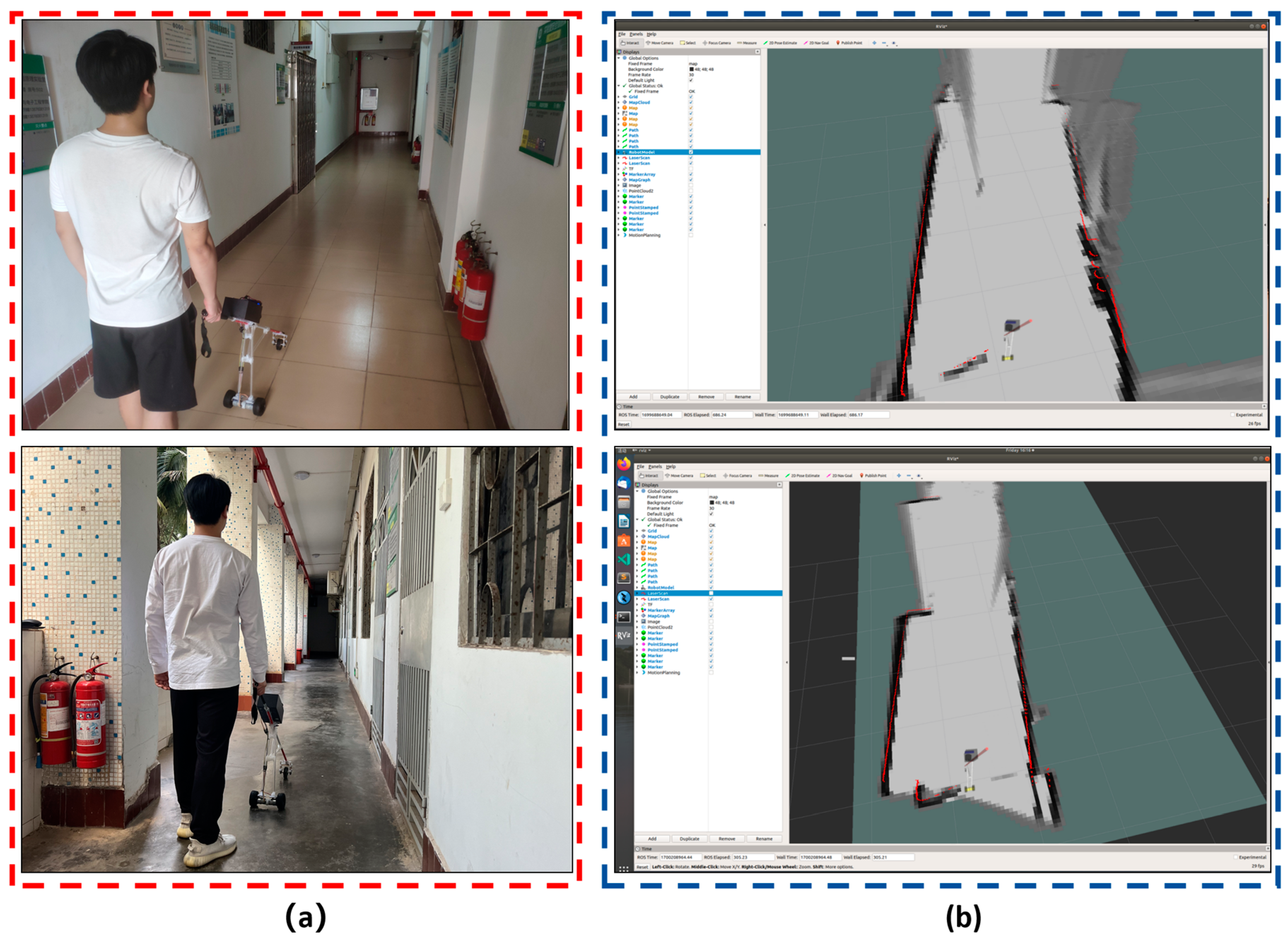

4.2. Laser SLAM experiment

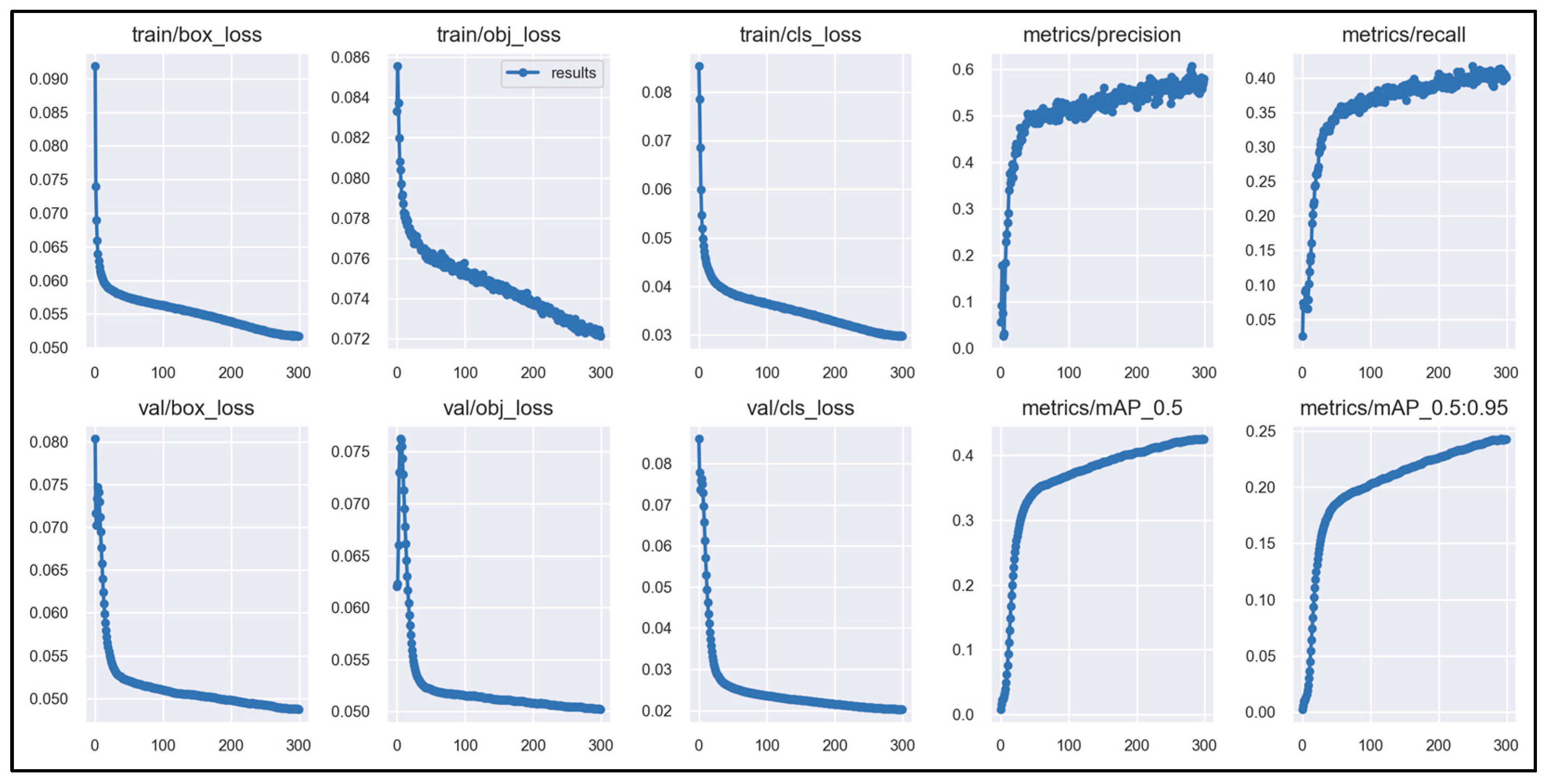

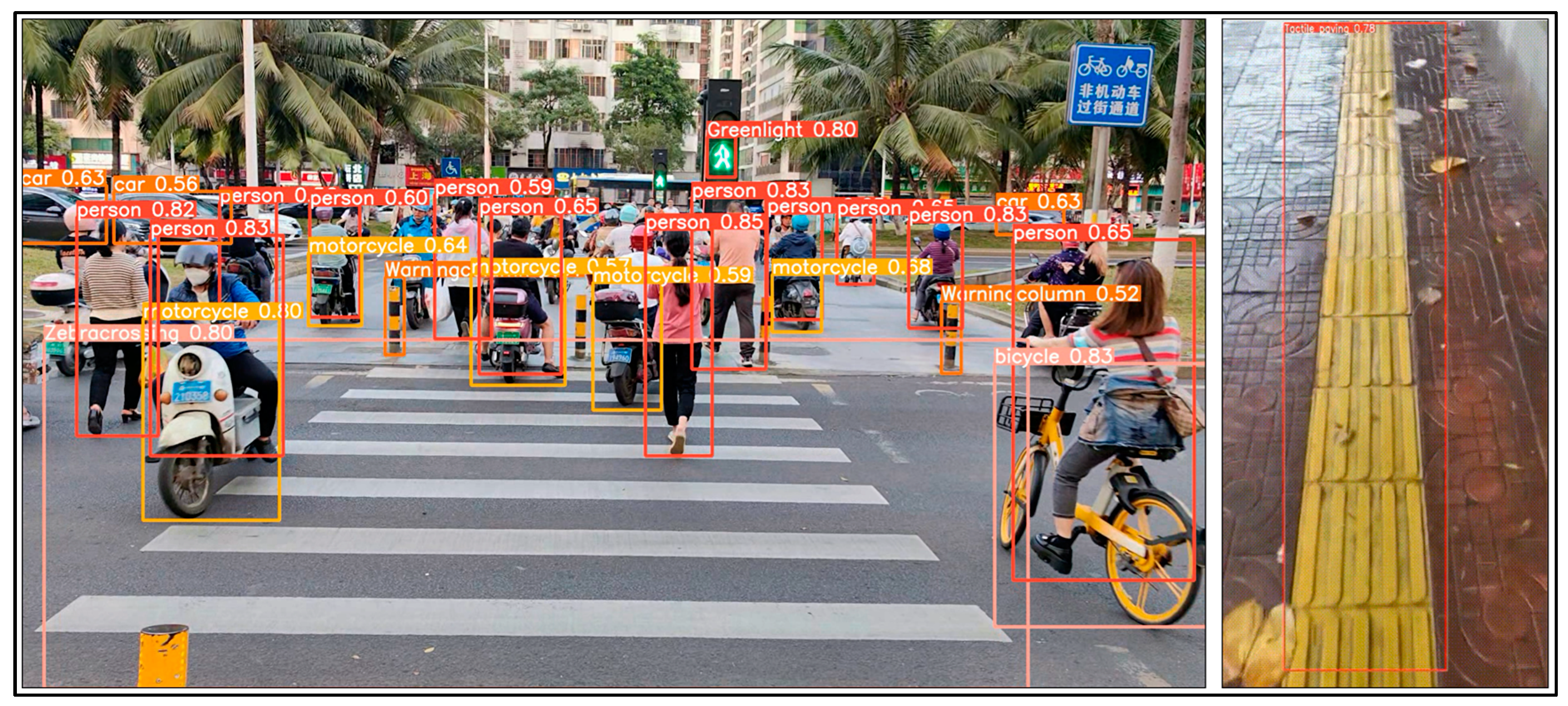

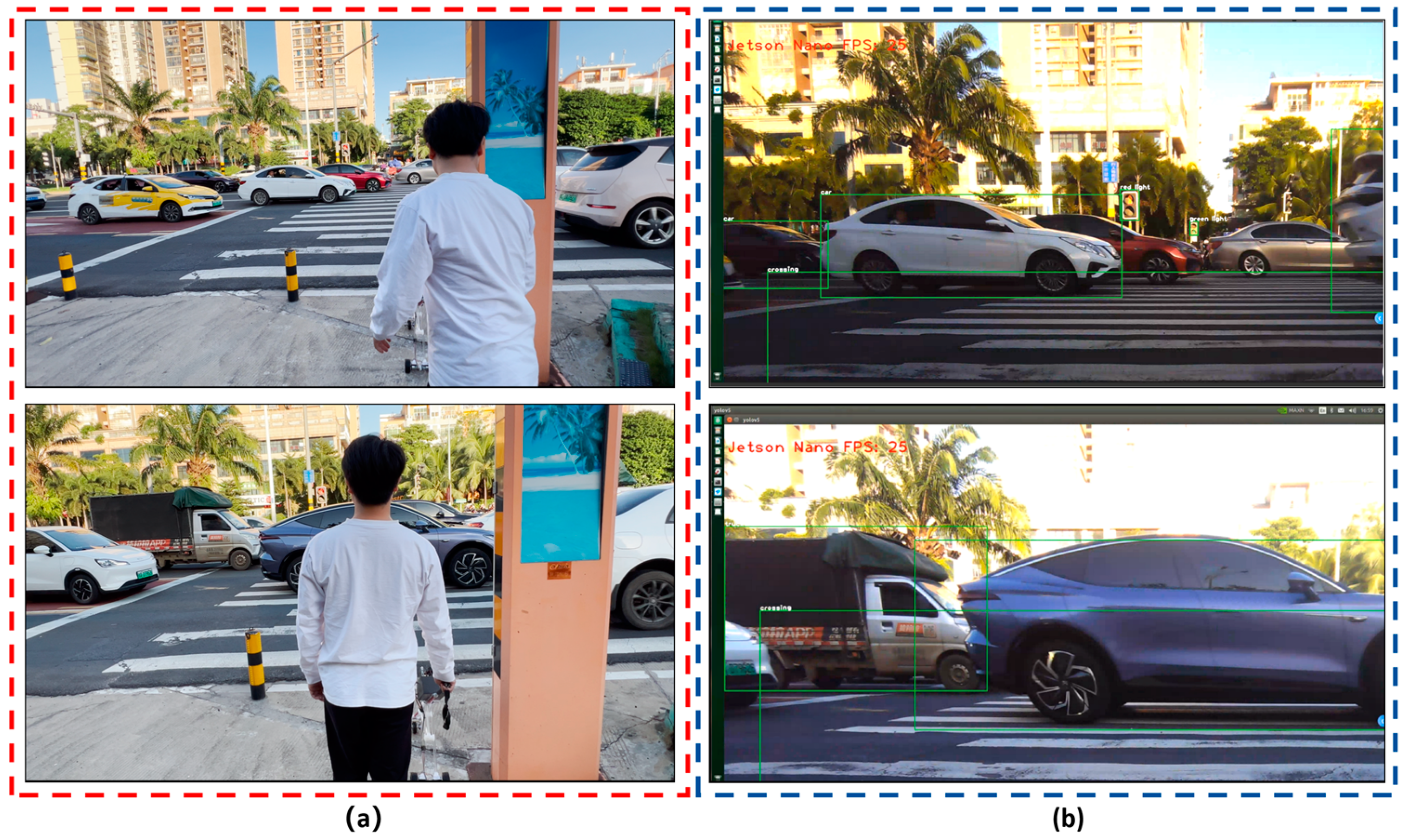

4.3. Obstacle detection with the improved YOLOv5 algorithm

| Class | Precision | Recall | mAP50 | mAP50-95 |
| --- | --- | --- | --- | --- |
| person | 0.667 | 0.634 | 0.67 | 0.388 |
| car | 0.718 | 0.685 | 0.738 | 0.259 |
| motorcycle | 0.61 | 0.447 | 0.505 | 0.473 |
| bus | 0.72 | 0.625 | 0.666 | 0.488 |
| truck | 0.798 | 0.666 | 0.75 | 0.481 |
| bicycle | 0.543 | 0.389 | 0.392 | 0.183 |
| traffic light | 0.583 | 0.361 | 0.374 | 0.176 |
| Greenlight | 0.608 | 0.619 | 0.572 | 0.164 |
| Redlight | 0.616 | 0.516 | 0.572 | 0.354 |
| Crossing | 0.846 | 0.644 | 0.827 | 0.357 |
| Warningcolumn | 0.783 | 0.856 | 0.724 | 0.51 |
| Stonepier | 0.692 | 0.633 | 0.669 | 0.42 |
| Tactilepaving | 0.74 | 0.807 | 0.839 | 0.533 |
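The per-class figures above can be summarized with two derived quantities that the table itself does not report: a per-class F1 score (the harmonic mean of precision and recall) and unweighted macro averages over the 13 classes. The following is a minimal sketch under those assumptions; the names `ClassResult`, `f1`, and `macro_average` are illustrative and not part of the original work, and the macro averages may differ from any overall figure reported elsewhere, because detection frameworks often weight by instance count.

```python
from typing import NamedTuple


class ClassResult(NamedTuple):
    """One row of the per-class detection results table (hypothetical container)."""
    name: str
    precision: float
    recall: float
    map50: float
    map50_95: float


# Values copied verbatim from the results table.
RESULTS = [
    ClassResult("person", 0.667, 0.634, 0.67, 0.388),
    ClassResult("car", 0.718, 0.685, 0.738, 0.259),
    ClassResult("motorcycle", 0.61, 0.447, 0.505, 0.473),
    ClassResult("bus", 0.72, 0.625, 0.666, 0.488),
    ClassResult("truck", 0.798, 0.666, 0.75, 0.481),
    ClassResult("bicycle", 0.543, 0.389, 0.392, 0.183),
    ClassResult("traffic light", 0.583, 0.361, 0.374, 0.176),
    ClassResult("Greenlight", 0.608, 0.619, 0.572, 0.164),
    ClassResult("Redlight", 0.616, 0.516, 0.572, 0.354),
    ClassResult("Crossing", 0.846, 0.644, 0.827, 0.357),
    ClassResult("Warningcolumn", 0.783, 0.856, 0.724, 0.51),
    ClassResult("Stonepier", 0.692, 0.633, 0.669, 0.42),
    ClassResult("Tactilepaving", 0.74, 0.807, 0.839, 0.533),
]


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    total = precision + recall
    return 0.0 if total == 0 else 2 * precision * recall / total


def macro_average(results: list, field: str) -> float:
    """Unweighted mean of one metric column across all classes."""
    return sum(getattr(r, field) for r in results) / len(results)


if __name__ == "__main__":
    # Rank classes from highest to lowest derived F1.
    ranked = sorted(RESULTS, key=lambda r: f1(r.precision, r.recall), reverse=True)
    for r in ranked:
        print(f"{r.name:<14} F1={f1(r.precision, r.recall):.3f}")
    # Macro averages of the four reported columns.
    for field in ("precision", "recall", "map50", "map50_95"):
        print(f"macro {field}: {macro_average(RESULTS, field):.3f}")
```

Ranking by F1 makes it easier to see which classes combine low precision with low recall (for example the small, distant targets such as traffic lights and bicycles) than reading the two columns separately.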

5. Discussion

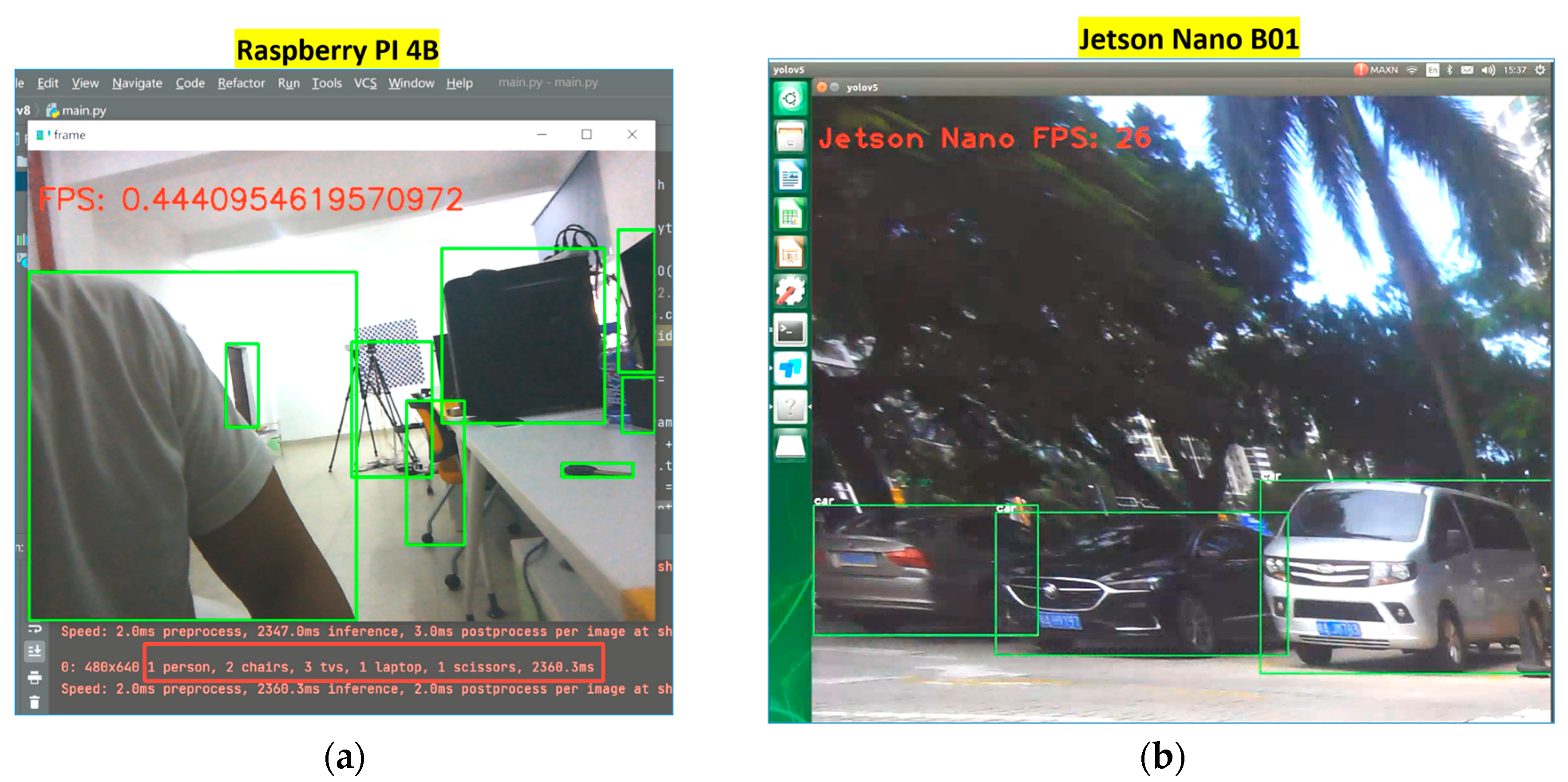

5.1. Choice of the main control module for the smart cane

5.2. Limitations of this work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Environment | Version |
| --- | --- |
| Ubuntu | 18.04 |
| Python | 3.6.9 |
| PyTorch | 1.10.0 |
| CUDA | 10.2.300 |
| cuDNN | 8.2.1.8 |
| OpenCV | 4.1.1 |
| TensorRT | 8.2.1 |
| JetPack | 4.6.1 |
| Machine | aarch64 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).