2.1. ARIMA Models

In contemporary financial analytics, Autoregressive Integrated Moving Average (ARIMA) models have been extensively harnessed to extrapolate potential trajectories for stock prices, anchored primarily on their historical performance, or to project a company’s future earnings by scrutinizing previous fiscal intervals. These analytical structures are anchored in the broader spectrum of regression studies, proficiently elucidating the relative potency of a chosen dependent variable in contrast to other evolving determinants. Fundamentally, the ARIMA methodology aspires to forecast impending shifts in securities or broader financial market trajectories. Intriguingly, this is accomplished not by direct examination of absolute values but rather by analyzing the variances between consecutive data points in a series.

The construction of ARIMA models pivots around three parameters, colloquially denoted as

p,

d, and

q [

5]. The autoregressive component, symbolized by ‘

p’, encapsulates the influence exerted by data from ‘

p’ antecedent intervals within the analytical framework. Conversely, the integrated component, represented by ‘

d’, captures the overarching trend manifest in the dataset. The moving average segment, denoted by ‘

q’, delineates the number of sequential data points that are leveraged to temper minor oscillations using a moving average methodology.

To encapsulate the theoretical construct, an ARIMA model with the specified parameters

p,

d, and

q, as cited in references [

5,

6], can be mathematically represented as per equation (1):

Here

are the parameters sought,

is a randomly distributed variable with mathematical expectation of zero and dispersion

. If we do not have any information about the distribution of this variable, then by default it is assumed that the distribution is normal [

6]. ∆ is the difference operator, which is defined as (2):

In this study, we have experimented with diverse permutations of the parameters (p, d, q) with the intent of meticulously characterizing the underlying time series dynamics. The graphical representations of auto-correlation functions (ACFs) and partial auto-correlation functions (PACFs) for each unique parameter combination are reviewed. These functions are predicated upon a predetermined number of lags and are systematically computed for every temporal juncture ‘t’, barring certain terminal instances where their derivation proves infeasible.

Critical to our assessment is the scrutiny of discontinuities or ‘jumps’ manifested within both the ACF and PACF. These fluctuations serve as invaluable markers, guiding the optimal selection of parameters for each model. It’s noteworthy that if for a given Yt both ACF and PACF portray either an absence of jumps or a singular, marginal deviation beyond the confines of the 95% confidence intervals, such a model is adjudged to be adequately robust and congruent with the research objectives delineated in this study.

The formula for the autocorrelation function ACF in the current moment ‘

t’ for a ‘

k’ lag can be articulated as per equation (3):

Here n is the number of observations in the time series; k is the delay, calculated as number of lags; is the mean value of the time series. The denominator represents the dispersion of the time series. The standard autocorrelation error is based on the square of the autocorrelation of all previous autocorrelations.

In the given context, n denotes the total count of observations present within the time series, while k signifies the lag, quantified in terms of the number of time delays. stands as the arithmetic mean of the entire time series. The denominator encapsulates the variance inherent to the time series. The canonical error associated with autocorrelation is derived from the squared magnitude of the autocorrelation spanning all preceding autocorrelations.

The mathematical representations for ascertaining partial correlations are intrinsically intricate, necessitating the application of recursive methodologies [

7].

2.2. Modified ODE Models

In those cases, where extensive datasets are utilized, the application of more intricate forecasting techniques based on numerical solutions for ordinary (ODE), partial (PDE), and stochastic (SDE) differential equations becomes imperative [

8,

9,

10].

This article investigates a modified ODE methodology for simulating the price fluctuations of a specific asset over a defined period. The benefits of this adaptation are illustrated through numerical examinations. Market data encompassing the daily closing prices of the Alterco instrument (A4L), ranging from June 1, 2020, to October 28, 2020, has been employed for this purpose. Numerical models derived for price forecasting are based on the numerical resolution of the Cauchy initial problem for the first-order ODE. The computational attributes of the modified ODE are analyzed using Matlab [

11]. The computational tests involve an array of data fitting models to determine the optimal predictive values, computed as the weighted average of all projections for the respective instruments. The weights are inversely correlated to the "final errors."

Consider the chronologically organized moments in ascending order, along with the observed values for an asset, represented as a time series, as indicated in (4):

As demonstrated in prior studies [

8,

9], it is feasible to calibrate an ordinary differential equation to this particular time series.

describing the discrete time points of the time series values provided. The function g(t, y) can be significantly diverse.

In the scenario where

, equation (5) can be addressed either through numerical integration or by acquiring an analytic solution, provided that the value of

is known. It is also plausible to calibrate the variable

at various time intervals. One method for resolving equation (5), with

, is delineated by Lascsáková in [

9]. Another approach, presented by Xue and Lai [

8], introduces several alternatives in the structure of the first derivative in (5). They suggest the subsequent form for

Here, and are representable by elementary functions such as polynomial functions, exponential functions, logarithmic functions, and the like. They can be expanded to include series of degrees subject to specific conditions. Furthermore, within the dataset (4), discernible periodic trends can be identified. Consequently, the function in the structure of (6) could potentially comprise a polynomial aspect along with a trigonometric component.

Let us consider the scenario where the first derivative is in the following format:

The parameters under consideration are the coefficients:

The number of these coefficients is

. If the condition

(which will be assumed to always hold) is satisfied, they can be determined by resolving an inverse problem using a numerical method involving a one-step explicit or implicit approach (or a combination of the two):

Here

, while

denotes the right-hand side of (5), and

signifies a specific one-step numerical method, such as explicit or implicit Euler, Runge-Kutta, among others. As demonstrated in [

8], one can utilize the last

values of the time series (4). Employing (10) leads to the establishment of a system of nonlinear equations concerning the coefficients (9). Once this system is solved, the said coefficients (9) can be determined. These coefficients are then employed to define the forthcoming unknown value,

, at time instant

. In equation (5), all coefficients are already known, enabling the computation of the next

value through the numerical method

.

One limitation of this methodology is its failure to utilize the complete information embedded within the time series (4). As per this approach, only as many values are extracted from series (4) as necessary to close the system of nonlinear equations, determined by the specific selection of and . However, this shortcoming is effectively circumvented in this research, wherein any number of values can be selected (when ) from line (4). Subsequently, the over-determined system of nonlinear algebraic equations is solved through the application of the Least Squares Method (LSM). The LSM can also be applied in a weighted format, allowing for the incorporation of a weight function. It stands to reason that the weight function should assign higher weights to the more recent values in the time series (4), thus demonstrating an increasing trend or, at the very least, remaining constant.

Within the context of this paper, the weight of the errors in the Least Squares Method (LSM) is determined by the ascending function:

Various experiments have been conducted at diverse weight values of

(at

the function (11) is convex, at

– concave). To be precise, the weights (12) have been employed in lieu of the weight function (11).

The utilization of (12) is motivated by the assignment of a weight to each error within the range of [0,1]. A weight of 0 is assigned to the data point furthest in time, while the closest (most recent) data point is assigned a weight of 1. This selection process is primarily subjective, allowing for the potential use of varying weights, provided they follow an increasing pattern.

The predicament arises when employing LSM (9) to define the coefficients, resulting in the resolution of a nonlinear system of algebraic equations. It is widely acknowledged that nonlinear systems can yield multiple solutions, and different selections among these solutions can lead to diverse predictions [

12].

Due to the inherent nonlinearity of the system, it is not feasible to ascertain beforehand the exact number of solutions it may offer. When employing a specific numerical method to solve the nonlinear system concerning the coefficients (9), initial approximations for these coefficients are provided. Different choices of initial approximations typically result in varied solutions. In this study, the approach involves the selection of

different initial approximations:

The generated solutions are determined pseudo-randomly, with each value assigned to a pre-selected interval. These distinct solutions are isolated. To select a specific solution from this set, the last values from the time series are extracted and segregated to create an error, which then validates a chosen solution of (9). These designated values are referred to as "test values." The subsequent value is predicted and contrasted with the actual value, subsequently incorporating the real value back into the time series and recording the corresponding error. This process is carried out sequentially for these values of the time series. The "final error" is computed as the weighted average of the errors obtained, whereby an increasing function may once again be employed as the weighting function. The solution of (10) yielding the smallest "final error" is selected to define (10) for a specific choice of and . Subsequently, the future value at time is predicted.

The solution for the over-determined system of nonlinear equations can also be achieved through the minimax method, ensuring minimal maximum error between the approximated and actual values. Additionally, the weighted minimax method can also be applied in this case.

To summarize the approach for predicting the subsequent value in the time series at given intervals and given time series values (4):

1. Fit an ordinary differential equation with initial condition (5) - a Cauchy problem to the time series.

2. Choose the form (7) for the function by fixing the parameters and .

3. Select a specific numerical method - such as explicit, implicit, or a combination of both, including one-step methods like Euler, Milne, Runge-Kutta, etc. - for the numerical solution of (5).

4. Select the last values of the time series (4) (where ).

5. Solve an inverse problem with respect to the unknown coefficients (9), which reduces to solving an over-determined system of nonlinear equations.

Choose a method to minimize the given error for solving the over-determined system - whether it’s LSM, the minimax method, or a similar approach.

Select a weight function to implement this method, ensuring the weight function is non-decreasing (in this specific case, of type (12)).

Apply the chosen method with the selected weight function to reduce the over-determined system to a nonlinear system of equations.

Due to the nonlinearity of the obtained system, generate different pseudo-random initial approximations (13) for the coefficients (9) and obtain different solutions for (9).

6. Select the last values of (4) and segregate them to construct an error and validate the chosen solution of (9). Compute the "final error" as a weighted average of the errors obtained, where the weights are increasing.

7. Choose the solution of (9) with the smallest "final error."

8. Substitute the chosen solution of (9) into (10) and predict the future value at time for .

9. Repeat steps 1-8 for different choices of: the numerical method used to solve the initial Cauchy problem, the parameters , , , , , the numerical method for solving the over-determined system of nonlinear equations, the weight function for solving the over-determined system of nonlinear equations, and the weight function for estimating the "final error."

10. After obtaining different forecasts and their "final errors," compute their weighted average value, with weights being inversely proportional to their "final errors," and consider it as the final forecast.

2.3. Risk Portfolio Optimization

The authors utilize a previously established Matlab programming code [

13] to generate an efficient risk portfolio comprising a set of

volatile securities. The primary objective is to maximize the Capital Allocation Line (CAL) for every eligible risk portfolio labeled as

. The code effectively resolves the optimization problem (14) while adhering to the specified condition (15):

where:

- - weight of the i-th asset,

-

- the anticipated return rate of the risk portfolio refers to the average value of the expected return rates of the volatile assets, weighted by their respective proportions within the risk portfolio. This value is computed in accordance with the demonstration provided in (16).

-

– the standard deviation, calculated as shown in (17).

- – the correlation coefficient between the i-th and the j-th asset rates of return.

The resolution of the optimization problem relies on a modified version of the Markowitz model [

14] predicated on the following assumptions: the presence of risk-free assets, the ability to borrow at a risk-free rate, and the opportunity for short sales of volatile assets.

The input data provided for the developed programming code encompasses projected return estimates, their respective deviations from the mean, the corresponding correlation matrix, and the return rate for risk-free assets. The resulting output from the program comprises an optimized risk portfolio for assets over a single period, along with an estimation of the expected return for the designated risk portfolio.

The programming code itself is listed below.

function [S,W_p,Er_p, StD_p]=fopt_portfolio(ERoR,StD,CM,RoRf)

% %% INPUT DATA %%%

% ERoR – Expected Rate of Return (RoR) – n-dimensional vector

% StD - Standard Deviation of RoR – n-dimensional vector

% CM - Correlation Matrix – nxn matrix

% RoRf – RoR of the risk-free asset

n=length(ERoR);

ERoR=ERoR(:);

StD=StD (:);

% CovM - Covariance Matrix

CovM=( StD*StD').*CM;

% F = -S – optimization function

F=@(w)-(w(:)'*ERoR-RoRf)./sqrt(w(:)'*CovM*w(:));

% w - vector of weights

% Search that w, where min(F)

[W_p,S]=fmincon(F,ones(n,1)/2,[],[],ones(1,n),1,zeros(n,1),ones(n,1));

%%% OUTPUT DATA %%%

Er_p=W_p'*ERoR;S=-S;

% W_p - weights in the optimal risk portfolio

% Er_p - Estimated RoR of the optimal risk portfolio

StD_p=sqrt(W_p'* CovM*W_p);

% StD_p - Standard Deviation of RoR of the optimal risk portfolio

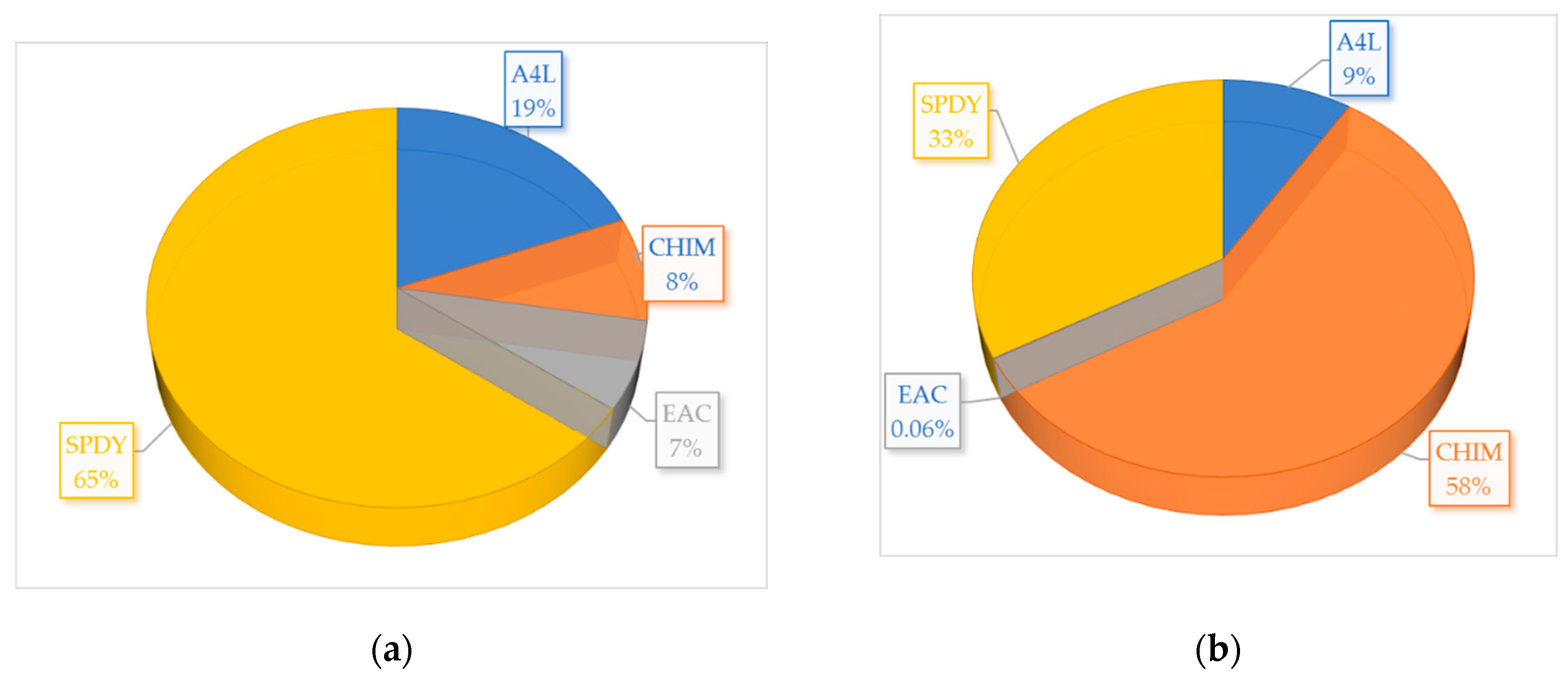

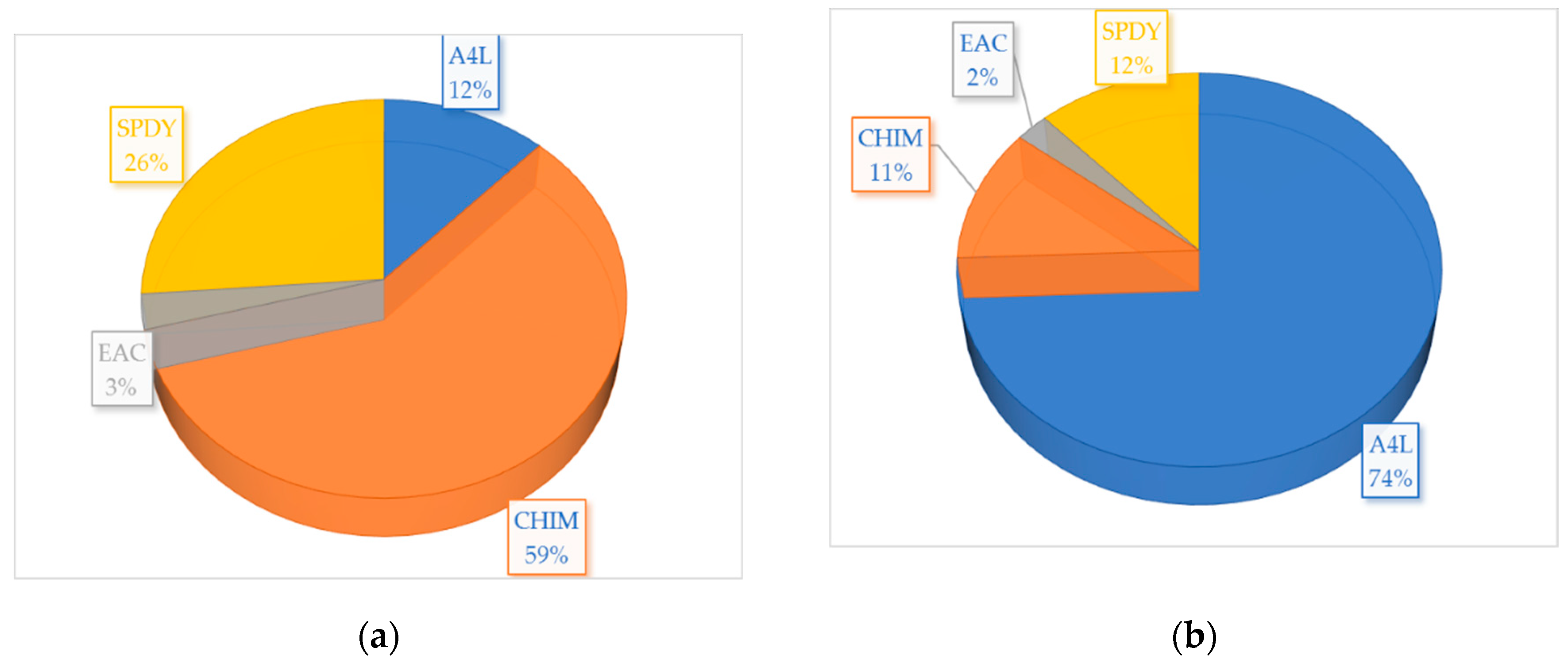

In the present work, the problem of creating a portfolio of equities of the four companies and treasury bills is solved. The horizon of the portfolio plan is one period ahead. All estimates refer to the rates of return for one period of ownership.

The assets’prices from 29.10.2020 (respectively, 28.10.2022) are taken as the prices for a moment of time 0. The set of expected rates of return

is calculated as shown in (18):

where

– the prices at time 0 ,

- the estimated prices from the obtained models at time 1 (30.10.2020, and respectively 31.10.2022).

In the context of the implemented program, the calculations disregard the signs preceding the return rates. However, during the interpretation of the outcomes, this sign is employed to determine whether to engage in a short (negative) or a long (positive) position regarding that specific asset.